1. Introduction

Object detection, one of the cornerstones of computer vision, has progressed markedly over the past decade thanks to rapid advances in computing technology. Small-object detection, an especially challenging yet indispensable sub-task, underpins critical applications such as aerial surveillance, autonomous driving, and medical image analysis.

Current small-object detection approaches largely follow mainstream generic object-detection frameworks, which fall into two broad categories. The first comprises two-stage detectors, represented by [1,2,3], which decompose detection into two steps: region proposals are first generated, and these proposals are then classified and regressed. Although two-stage detectors achieve superior accuracy, their architectural complexity incurs higher computational latency. One-stage detectors, represented by [4,5,6,7,8,9,10,11], instead regress object categories and bounding boxes directly from the convolutional features of the input image. This strategy offers a clear advantage in detection speed, although it typically yields lower accuracy than two-stage detectors. Historically, the vast majority of detectors have relied on convolutional neural networks (CNNs). After Transformers made a major impact in natural language processing (NLP) [12], researchers sought to adapt them to computer vision, with remarkable results [13,14]. DETR [15] combined a CNN with a Transformer to build an end-to-end object-detection framework and was instrumental in bringing Transformers into object detection. A series of strong Transformer-based detectors and backbones followed, such as Deformable DETR [16], Swin Transformer [17], YOLOS [18], DL-YOLOX [19], and Relation DETR [20]. Building on these methods, researchers have proposed numerous small-object detection techniques, including MARE [21], DotD [22], CZ Det [23], and YOLOM [24]. Yet small objects remain difficult to detect because of their low resolution, frequent occlusion, and weak discriminative cues.

To alleviate these challenges, small-object detection techniques improve feature extraction and edge information by incorporating two types of cues: multiscale representations [25,26,27] and contextual information [28,29,30]. In mainstream small-object detectors, the backbone generates feature maps at different scales. Shallow feature maps preserve fine-grained spatial details that facilitate object localization, while deep feature maps carry richer semantic information that aids classification [31]. The feature pyramid network (FPN) [32] is a pioneering approach to multiscale representation that integrates detailed information from shallow features with semantic information from deep features. FPN pairs the bottom-up backbone pathway with a top-down pathway in which deep features are up-sampled and added element-wise to shallow features through lateral connections [33]. This fusion enriches shallow features with deep semantics and thereby improves small-object detection accuracy. However, excessive feature fusion can erase fine details and degrade overall detection performance.
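The top-down step described above can be sketched on single-channel toy maps. This is a minimal illustration, assuming nearest-neighbour 2× up-sampling; it omits the 1 × 1 lateral convolution and the 3 × 3 smoothing convolution that FPN also applies, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]  # single-channel H x W feature map

def upsample_nearest_2x(x: Grid) -> Grid:
    """Nearest-neighbour 2x up-sampling: every value becomes a 2 x 2 block."""
    out: Grid = []
    for row in x:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out

def top_down_merge(deep: Grid, shallow: Grid) -> Grid:
    """One FPN-style top-down step: up-sample the deep map, then add element-wise."""
    up = upsample_nearest_2x(deep)
    if len(up) != len(shallow) or len(up[0]) != len(shallow[0]):
        raise ValueError("deep map must have half the spatial size of the shallow map")
    return [[a + b for a, b in zip(ur, sr)] for ur, sr in zip(up, shallow)]

deep = [[1.0, 2.0],
        [3.0, 4.0]]                        # coarse, semantically strong map
shallow = [[10.0] * 4 for _ in range(4)]  # fine, detail-rich map
merged = top_down_merge(deep, shallow)
print(merged[0])  # [11.0, 11.0, 12.0, 12.0]
print(merged[3])  # [13.0, 13.0, 14.0, 14.0]
```

Element-wise addition requires the two maps to share a shape, which is why the deep map is up-sampled by exactly the stride ratio between adjacent levels.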

Another critical aspect is leveraging contextual information to exploit the relationships between small objects and their surroundings. By considering the context around an object, these methods can draw on additional cues from other objects or the background to confirm its position and category. Small objects carry few features and often lack distinctive appearance cues that separate them from the background, so contextual information is indispensable for detecting them reliably. By incorporating the surrounding context, some works have improved feature representations and overall accuracy [34]. For example, FA-SSD [35] pioneered the combined use of contextual cues and attention mechanisms to improve small-object detection accuracy. Container [36] exploits long-range interactions similar to those of Transformers while retaining the inductive bias of local convolutions, and thus inherits the faster convergence typically observed in CNNs. Integrating attention into shallow layers further guides the network to focus on small objects while capturing contextual cues at that resolution. MA-FPN [37] introduces a pixel-wise attention module: multiscale convolutions first produce a feature map that matches the input size, and channel attention then weights this map to yield fine-grained pixel-level attention. This captures appearance features, such as texture, shape, and color, that are crucial for accurately locating small objects. In summary, contextual cues deepen the semantic understanding of small objects and help the model separate them from cluttered backgrounds, improving both localization and classification accuracy. However, incorporating too much contextual information may impair the model’s generalization, reduce its robustness to background noise, and ultimately lower detection accuracy.

Although these methods have made significant progress in small-object detection, several challenges remain unresolved. Specifically, small-object detectors still suffer from sparse discriminative features, imbalanced object distributions in benchmark datasets, and information loss caused by repeated downsampling, all of which hinder further progress. Several specialized small-object detectors have also been designed for autonomous-driving scenarios.

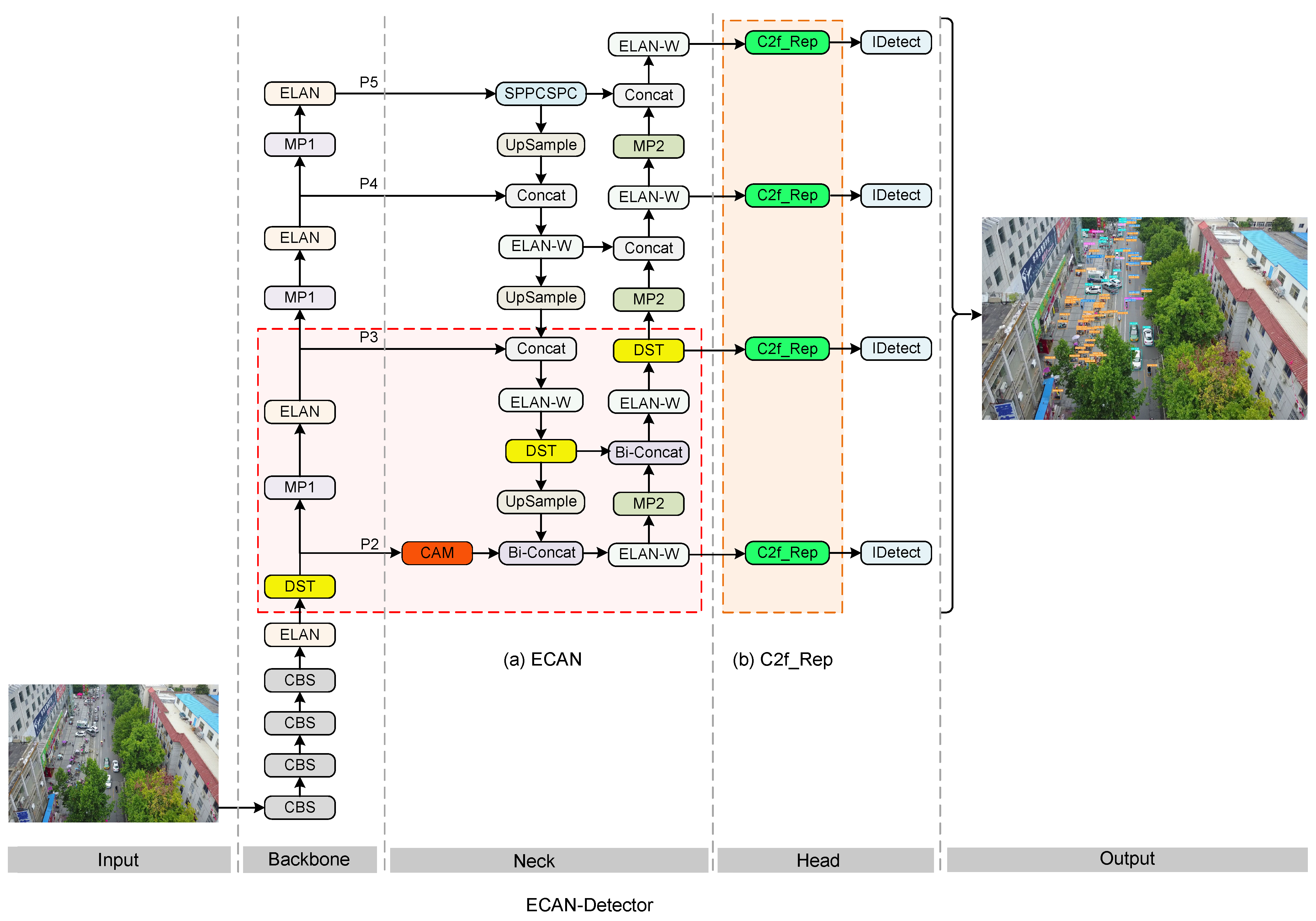

Inspired by seminal works [38,39,40], we propose ECAN-Detector, an efficient context-aggregation method for small-object detection built on YOLOv7 [7]. First, to enhance the expressive power of shallow features, we add an extra shallow detection layer (P2) to the backbone, which makes the network better suited to small-object detection and reduces the loss of features relevant to small objects. Second, leveraging the strength of Transformers in semantic feature extraction and long-range dependency modeling, we incorporate a dynamic scaled transformer (DST) into both the backbone and the neck, enabling the model to attend to salient regions globally and enhancing its spatial perception. Third, to further fuse shallow detailed information with deep semantic information, a context-augmentation module (CAM; here CAM denotes the context-augmentation module rather than a Class Activation Map) is combined with the extra detection layer to integrate features from multiple receptive fields, balancing global and local information and effectively combining features at multiple scales. Finally, to reduce the number of parameters while introducing richer gradient flow, a faster implementation of two reparameterized convolutions (C2f_Rep) replaces the original convolution in the head. This redesign lowers the parameter budget, improves small-object accuracy, and reduces missed and false detections in long-range autonomous-driving scenes. The overall structure of ECAN-Detector is shown in Figure 1.

Our contributions can be summarized as follows:

We devise a novel, efficient context-aggregation network that improves detection precision on small objects.

We introduce an additional small-object detection layer to improve the extraction of detailed features from small objects. In addition, the context-augmenting DST and CAM modules further enhance performance.

To reduce the number of parameters and introduce richer gradient flow, we replace RepConv in the head with C2f_Rep, keeping the structure lightweight.

Extensive experiments demonstrate that our method surpasses both the baseline and existing state-of-the-art (SOTA) detectors, underscoring its effectiveness for small-object detection.

The rest of the paper is organized as follows: Section 2 reviews related work; Section 3 describes our proposed detector, ECAN-Detector, and its modules; Section 4 presents comparative experiments on VisDrone2021-DET [41] and validates the method’s effectiveness on DOTA [42] and COCO [43]; finally, Section 5 summarizes the paper and discusses its limitations.

3. Our Methods

This section presents a detailed overview of the Efficient Context-Aggregation Network (ECAN). First, we introduce the baseline and the specific implementation details of our proposed method. Then, we outline ECAN-Detector and elaborate on its three core modules. Next, we introduce C2f_Rep in the head to further enhance the model’s detection capability in autonomous-driving scenarios. Finally, we propose an improved loss function that more effectively supervises small objects.

3.1. Preliminaries

YOLOv7 is an anchor-based one-stage detector composed of three main components: the backbone, the neck, and the head. The backbone primarily integrates ELAN [56] and a redesigned max-pooling layer, while the neck adopts PAFPN [57] enhanced with ELAN-W for multiscale feature extraction. In the head, RepConv [58] is applied to the multiscale feature maps, followed by IDetect, which predicts objects of different sizes separately. The YOLOv7 loss consists of three components: coordinate regression loss, object confidence loss, and classification loss. The object confidence and classification losses both use binary cross-entropy on logits (BCEWithLogitsLoss), while the coordinate loss uses CIoU. In our setting, the YOLOv7 baseline achieves only 24.8% AP on VisDrone2021-DET, leaving considerable room for improvement.
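For reference, BCEWithLogitsLoss fuses the sigmoid and the binary cross-entropy into one numerically stable expression. The sketch below reproduces that standard formula with mean reduction in plain Python; it is an illustration of the formula, not PyTorch’s implementation, and it ignores the optional `weight` and `pos_weight` arguments.

```python
import math
from typing import Sequence

def bce_with_logits(logits: Sequence[float], targets: Sequence[float]) -> float:
    """Mean binary cross-entropy computed directly on logits.

    Uses max(x, 0) - x * y + log(1 + exp(-|x|)), which equals
    -[y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]
    but never exponentiates a large positive number.
    """
    if len(logits) != len(targets) or not logits:
        raise ValueError("logits and targets must be non-empty and equal length")
    total = 0.0
    for x, y in zip(logits, targets):
        total += max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
    return total / len(logits)

print(round(bce_with_logits([0.0], [1.0]), 4))  # 0.6931 (= ln 2)
print(bce_with_logits([1000.0], [1.0]))         # 0.0, no overflow
```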

To tackle the aforementioned challenges, we design ECAN-Detector, a YOLOv7-based architecture depicted in Figure 1. In our detector, a shallow feature layer first enriches fine-grained features for small objects. Simultaneously, a Transformer-based DST module further improves the network’s capacity for shallow feature extraction. A context-augmentation module (CAM) is inserted into the neck to exploit high-resolution features and capture detailed context. Moreover, in the head, the output features from the neck are processed by C2f_Rep, which replaces the original heavily parameterized convolution to extract more expressive features. This not only improves small-object detection accuracy but also reduces the number of parameters and the computational complexity.

Through these optimizations, our proposed ECAN-Detector effectively addresses the key challenges of small-object detection, significantly enhancing detection accuracy and recall.

3.2. Our ECAN-Detector

In this subsection, we provide a detailed explanation of the implementation of the three key components of the ECAN-Detector. Specifically, P2 is employed for shallow feature extraction, while DST and CAM are designed to enhance contextual information and fuse multiscale features. These improvements make the network more effective in detecting small objects.

3.2.1. Shallow Detection Layer

In YOLOv7, the backbone generates feature layers P3, P4, and P5 at strides 8, 16, and 32 (80 × 80, 40 × 40, and 20 × 20 for a 640 × 640 input), which detect small, medium, and large objects, respectively. However, in long-distance autonomous-driving scenarios, small objects are often occluded or lost because of downsampling and the limitations of anchor-based designs, which reduces detection accuracy. To address this issue, we introduce an additional shallow feature layer, P2, at stride 4 (160 × 160 for a 640 × 640 input). Instead of modifying existing layers such as P3, we generate P2 explicitly from earlier backbone features to preserve fine-grained spatial details; altering deeper layers would either compromise high-level semantic information or require excessive up-sampling, both of which are detrimental to small-object localization. The P2 layer captures the higher-resolution information that is critical for detecting objects smaller than 32 × 32 pixels, which are otherwise poorly represented at coarser scales. We further equip P2 with DST, CAM, and ELAN-W to increase feature richness and robustness in complex traffic scenes.
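Because level Pk sits at stride 2^k in YOLO-style pyramids, the grid size of each level follows directly from the input size. The helper below (a hypothetical name) computes these sizes for a 640 × 640 input and shows why P2 gives a small object more grid cells.

```python
from typing import Dict, Tuple

def pyramid_grid_sizes(img_h: int, img_w: int,
                       strides: Tuple[int, ...] = (4, 8, 16, 32)) -> Dict[str, Tuple[int, int]]:
    """Spatial size of each detection level for a given input size.

    Assumes level Pk sits at stride 2**k (P2 -> 4, P3 -> 8, ...), the usual
    YOLO convention, and that the input is divisible by every stride.
    """
    sizes: Dict[str, Tuple[int, int]] = {}
    for s in strides:
        if img_h % s or img_w % s:
            raise ValueError(f"input {img_h}x{img_w} not divisible by stride {s}")
        level = s.bit_length() - 1  # log2 of a power-of-two stride: 4 -> 2, 8 -> 3
        sizes[f"P{level}"] = (img_h // s, img_w // s)
    return sizes

grids = pyramid_grid_sizes(640, 640)
print(grids)  # {'P2': (160, 160), 'P3': (80, 80), 'P4': (40, 40), 'P5': (20, 20)}

# A 16 x 16-pixel object spans 4 x 4 cells on P2 but only 2 x 2 cells on P3.
print(16 // 4, 16 // 8)  # 4 2
```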

3.2.2. Dynamic Scaled Transformer

YOLOv7’s backbone consists of a series of conventional convolutions whose receptive field is limited in the shallow feature maps, which restricts its ability to capture global features. To capture richer context, we insert a dynamic scaled transformer (DST), based on [39], into the shallow feature-extraction stage. Integrating DST lets the network harness the Transformer’s strong global modeling capability.

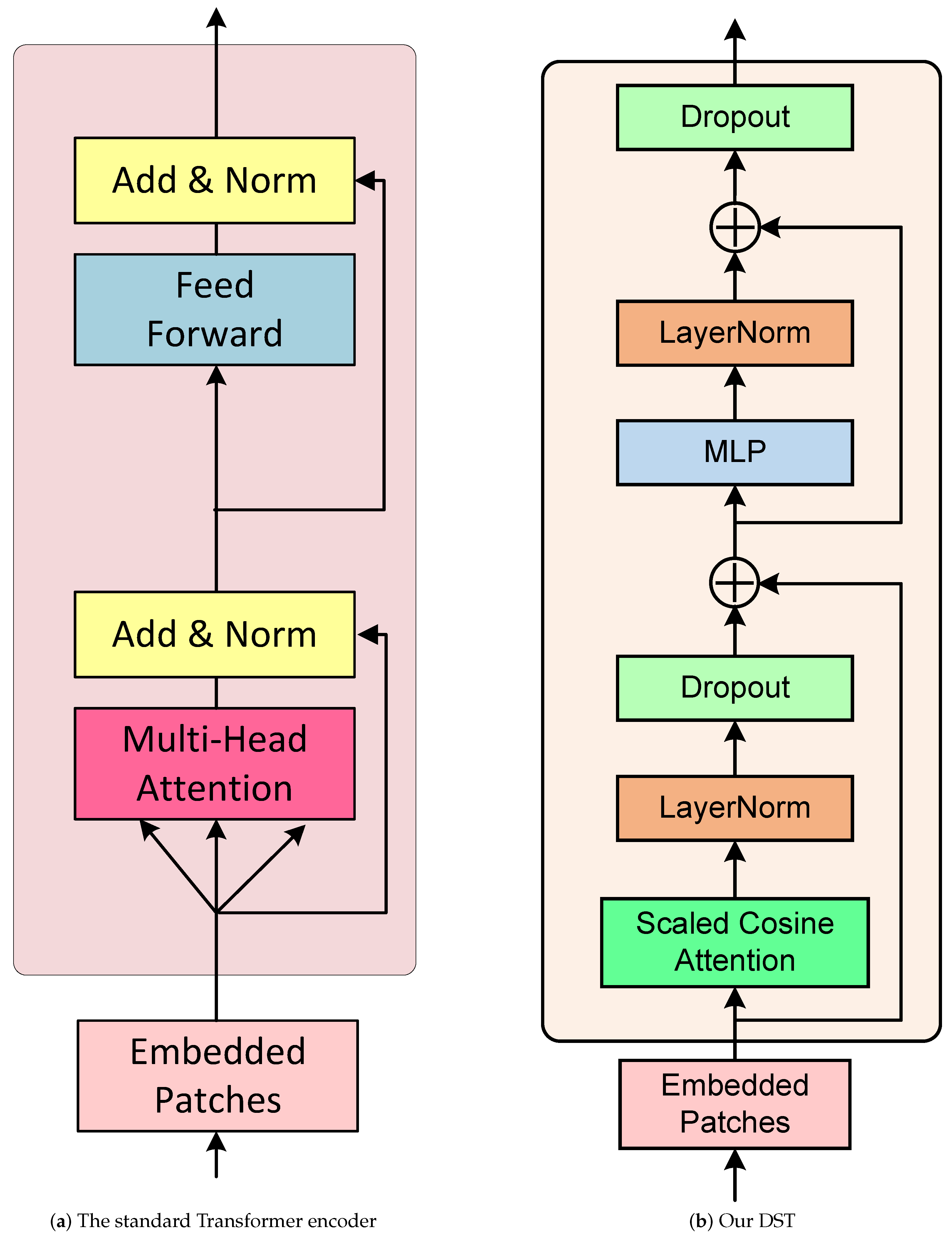

The Transformer architecture [12] relies on self-attention for parallel computation and is a non-recurrent network composed primarily of an encoder and a decoder. The encoder, illustrated in Figure 2a, processes the embedded input with multi-head attention to capture feature relationships, followed by a feedforward network for further feature extraction. Residual connections and normalization layers ensure stable and efficient training.

DST is an encoder based on the Transformer architecture; its implementation details are illustrated in Figure 2b. It consists of two main components, scaled-cosine attention and a feedforward network (FFN), while Layer Normalization and Dropout further stabilize training and reduce overfitting.

Standard multi-head attention computes the similarity score as the dot product of the query and the key, and uses the resulting weights to aggregate the values. However, as the vector dimension increases, the magnitude of these dot products grows, which can saturate the softmax and lead to unstable (exploding or vanishing) gradients. To address this, we adopt scaled-cosine attention, which introduces a learnable scaling factor to stabilize the computation of attention weights. This produces more balanced attention weights, better captures the relative relationships between feature vectors, and more effectively extracts contextual information around the target in complex backgrounds.

Additionally, cosine similarity depends only on the direction of the vectors and is unaffected by their magnitudes. This property improves the model’s robustness when handling features at different scales.

The input features $X$ are first passed through an embedding layer, which flattens them into a sequence tensor that is fed into the encoder. The encoder uses scaled-cosine attention to compute the cosine similarity between pixel pairs, as formulated in Equation (1):

$$\mathrm{Sim}(q_i, k_j) = \frac{\cos(q_i, k_j)}{\tau} + B_{ij} \tag{1}$$

where $q_i$ denotes the query feature of input pixel $i$ and $k_j$ denotes the key feature of input pixel $j$; both are derived from the pixels at the corresponding positions in the input feature map $X$. The learnable temperature parameter $\tau$ is constrained to be larger than 0.01. Through this process, the input features $X$ are transformed into a new feature representation $\tilde{X}$, which contains more contextual information and helps to improve model performance. $B_{ij}$ denotes the relative position bias, a learnable bias usually generated by Equation (2), where $f$ is a function of the relative position between query pixel $i$ and key pixel $j$:

$$B_{ij} = f(i, j) \tag{2}$$
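To make Equations (1) and (2) concrete, here is a minimal single-head sketch in plain Python. It assumes the queries, keys, and values have already been projected, models the bias function f as an arbitrary callable `bias(i, j)` (a hypothetical stand-in for a learned relative-position-bias network), and enforces the 0.01 lower bound on τ by clamping. It illustrates scaled-cosine attention in general, not the authors’ DST code.

```python
import math
from typing import Callable, List, Sequence

Vec = Sequence[float]

def cosine(u: Vec, v: Vec, eps: float = 1e-12) -> float:
    """Cosine similarity; eps guards against zero-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / max(nu * nv, eps)

def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(r - m) for r in row]
    s = sum(exps)
    return [e / s for e in exps]

def scaled_cosine_attention(q: List[Vec], k: List[Vec], v: List[Vec], tau: float,
                            bias: Callable[[int, int], float]) -> List[List[float]]:
    """Single-head attention with Sim(q_i, k_j) = cos(q_i, k_j) / tau + B_ij."""
    tau = max(tau, 0.01)  # temperature is kept above 0.01
    out = []
    for i, qi in enumerate(q):
        sims = [cosine(qi, kj) / tau + bias(i, j) for j, kj in enumerate(k)]
        w = softmax(sims)  # attention weights of query i over all keys
        out.append([sum(w[j] * v[j][c] for j in range(len(v)))
                    for c in range(len(v[0]))])
    return out

q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0]]
no_bias = lambda i, j: 0.0
a = scaled_cosine_attention(q, k, v, tau=0.1, bias=no_bias)
k_scaled = [[100.0 * x for x in kj] for kj in k]  # same directions, 100x magnitude
b = scaled_cosine_attention(q, k_scaled, v, tau=0.1, bias=no_bias)
print(a == b)  # True: key magnitudes do not change the attention output
```

The final comparison shows the magnitude invariance of cosine similarity discussed above; with plain dot-product attention, scaling the keys by 100 would sharpen the softmax instead.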

In DST, the position-wise feedforward network consists of two fully connected layers that enhance the feature representation through nonlinear transformations, applied independently at each position of the sequence. However, as the network deepens, the activation amplitudes accumulated along the residual path grow layer by layer. To address this, instead of the pre-norm design used in ViT [59], we employ post-norm, in which normalization is applied to the outputs of the attention and FFN branches. This keeps activation amplitudes under control as the network deepens, alleviates the vanishing-gradient problem, and improves the model’s generalization ability.

Dropout further regularizes DST, limiting effective model complexity and preventing overfitting to improve generalization. In addition, residual connections around both modules strengthen the network’s ability to learn selectively across layers; they enrich feature representations and facilitate gradient propagation between deep and shallow layers, which improves the training of the deeper layers. The process is given by Equation (3):

$$\begin{aligned} X' &= X + \mathrm{Dropout}\big(\mathrm{LN}(\tilde{X})\big),\\ Y &= X' + \mathrm{Dropout}\Big(\mathrm{LN}\big(W_2\,\sigma(W_1 X' + b_1) + b_2\big)\Big), \end{aligned} \tag{3}$$

where $\tilde{X}$ is the output of scaled-cosine attention, $\mathrm{LN}$ denotes Layer Normalization, and $\sigma(\cdot)$ is the nonlinear activation between the two fully connected layers. Here, $W_1$ and $W_2$ represent the weight matrices of the fully connected layers, and $b_1$ and $b_2$ denote their bias terms. Through the residual connections, the features that have incorporated scaled-cosine attention are added back, yielding the output features $Y$. The overall DST procedure is shown in Algorithm 1.

Algorithm 1 Dynamic scaled transformer (DST)

Input: $X$: the input feature map; $L$: the number of feature pyramid levels; $\tau$: the learnable scaling factor for cosine similarity; $W_1, W_2$: the weight matrices of the fully connected layers; $b_1, b_2$: the bias terms of the fully connected layers; $f$: the function that computes the relative position bias.

Output: $Y$: the final feature representation after DST processing.

1: procedure DST($X$, $L$, $\tau$, $W_1$, $W_2$, $b_1$, $b_2$, $f$)

2: ▹ Initialize the transformed feature map

3: Flatten($X$) ▹ Flatten the input feature map into a sequence tensor

4: ▹ Compute queries

5: ▹ Compute keys

6: ▹ Compute values

7: for all pixels $i$ in the feature map do

8: for all pixels $j$ in the feature map do

9:

10:

11:

12: end for

13: end for

14:

15:

16:

17:

18:

19:

20: end procedure
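The residual and normalization structure around the two DST branches can be sketched for a single token as follows. This is a hedged illustration under our own assumptions: each branch output is normalized before the residual addition (res-post-norm), the FFN activation is GELU, LayerNorm has no learnable affine parameters, dropout follows the normalization, and the attention output is supplied precomputed. The function names are hypothetical.

```python
import math
import random
from typing import List, Optional

Vec = List[float]
Mat = List[Vec]  # shape (out_dim, in_dim)

def layer_norm(x: Vec, eps: float = 1e-5) -> Vec:
    """Layer Normalization without learnable affine parameters."""
    mu = sum(x) / len(x)
    var = sum((a - mu) ** 2 for a in x) / len(x)
    return [(a - mu) / math.sqrt(var + eps) for a in x]

def linear(x: Vec, w: Mat, b: Vec) -> Vec:
    """Fully connected layer: w @ x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

def gelu(a: float) -> float:
    """Exact GELU; the choice of activation is an assumption."""
    return 0.5 * a * (1.0 + math.erf(a / math.sqrt(2.0)))

def dropout(x: Vec, p: float, training: bool, rng: random.Random) -> Vec:
    """Inverted dropout; the identity at inference time."""
    if not training or p == 0.0:
        return list(x)
    return [0.0 if rng.random() < p else a / (1.0 - p) for a in x]

def dst_post_norm_layer(x: Vec, attn_out: Vec, w1: Mat, b1: Vec, w2: Mat, b2: Vec,
                        p: float = 0.1, training: bool = False,
                        rng: Optional[random.Random] = None) -> Vec:
    """Residual + post-norm wrapping of the attention and FFN branches for one token."""
    rng = rng or random.Random(0)
    # attention branch: normalize the branch output, then add it to the input
    h = [a + b for a, b in zip(x, dropout(layer_norm(attn_out), p, training, rng))]
    # FFN branch: W2 * gelu(W1 h + b1) + b2, normalized before the residual add
    f = linear([gelu(a) for a in linear(h, w1, b1)], w2, b2)
    return [a + b for a, b in zip(h, dropout(layer_norm(f), p, training, rng))]

x = [1.0, 2.0, 3.0]
w1 = [[0.1, 0.2, 0.3] for _ in range(4)]  # hidden width 4 (hypothetical)
b1 = [0.0] * 4
w2 = [[0.0] * 4 for _ in range(3)]        # zero FFN output
b2 = [0.0] * 3
print(dst_post_norm_layer(x, [0.0, 0.0, 0.0], w1, b1, w2, b2))  # [1.0, 2.0, 3.0]
```

With a zero attention output and a zero FFN, both residual branches vanish and the token passes through unchanged, which is the behavior residual connections are meant to guarantee.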

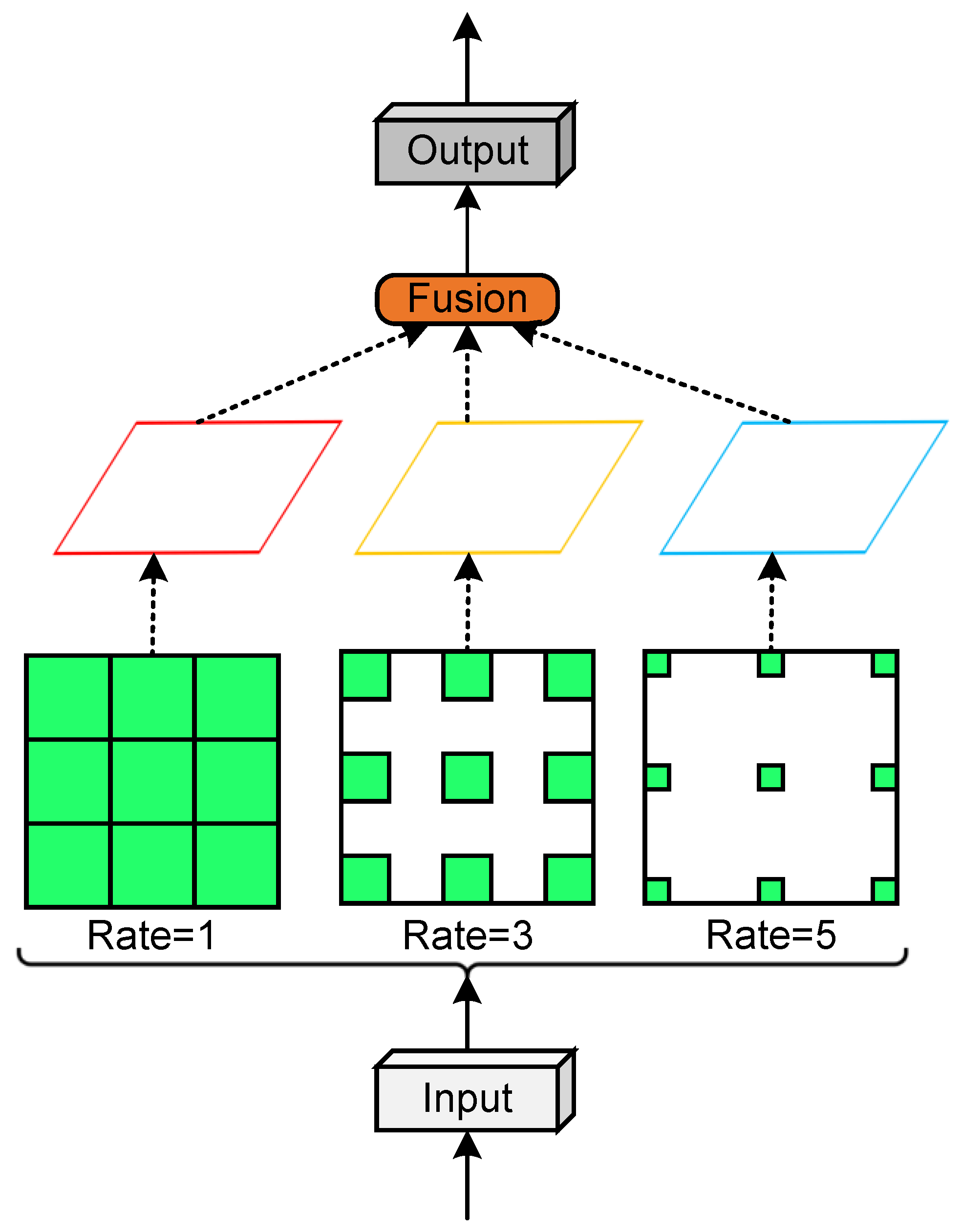

3.2.3. Context-Augmentation Module

As discussed in the previous section, object detectors rely on shallow feature maps, which contain rich information about small objects. After multiple downsampling operations, however, little of this information survives in the deeper layers of a CNN. Therefore, incorporating the contextual information around small objects into shallow feature maps improves both their feature representation and their detection.

To improve the identification of dense small objects, we introduce a context-augmentation module (CAM) in the neck, which provides more detailed contextual information. CAM is combined with a fast normalized fusion operation that merges deep semantic information with shallow feature representations without significantly increasing the parameter count. The overall process is formulated in Equation (4).

As shown in Figure 1, CAM [60] extracts contextual information around small objects in the P2 feature layer. CAM employs three dilated convolutions with different dilation rates to capture contextual information from different receptive fields. The three convolutions share the same kernel size and use dilation rates of 1, 3, and 5, respectively; their outputs are then combined by a fusion operation.
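The receptive-field effect of the three CAM branches can be illustrated with a 1-D dilated convolution. Since the kernel size is not restated here, the sketch assumes a hypothetical 3-tap kernel; a k-tap kernel with dilation d spans k + (k - 1)(d - 1) positions, so rates 1, 3, and 5 widen the span without adding weights. The function names are hypothetical.

```python
from typing import List

def effective_kernel(k: int, d: int) -> int:
    """Span covered by a k-tap kernel with dilation d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)

def dilated_conv1d(x: List[float], w: List[float], d: int) -> List[float]:
    """'Same'-size 1-D dilated cross-correlation with zero padding (odd-length w)."""
    k = len(w)
    out = []
    for i in range(len(x)):
        acc = 0.0
        for t in range(k):
            j = i + (t - k // 2) * d  # for k = 3 the taps sit at i - d, i, i + d
            if 0 <= j < len(x):
                acc += w[t] * x[j]
        out.append(acc)
    return out

K = 3  # hypothetical kernel size, for illustration only
print([effective_kernel(K, d) for d in (1, 3, 5)])  # [3, 7, 11]

impulse = [0.0] * 11
impulse[5] = 1.0
branches = {d: dilated_conv1d(impulse, [1.0, 1.0, 1.0], d) for d in (1, 3, 5)}
print([i for i, v in enumerate(branches[3]) if v])  # [2, 5, 8]
```

Feeding an impulse through each branch shows which positions a single output can "see": the same three weights reach one, three, or five positions away, which is how CAM gathers context at several scales for the same cost.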

In practice, fusion operations typically fall into three categories: weighted fusion, adaptive fusion, and concatenation fusion. Experimental results indicate that traditional feature fusion methods may not effectively handle multiscale features, particularly when detecting small objects in complex backgrounds. Therefore, CAM introduces a specialized multiscale feature fusion operation that adaptively adjusts the fusion weights according to scene-specific characteristics, improving the accuracy of the feature representation. The detailed implementation is illustrated in Figure 3.

In the main network, we replace the original concatenation after CAM with fast normalized fusion to better integrate multiscale contextual information, as formulated in Equation (5):

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j}\, I_i \tag{5}$$

The contribution of each input feature map $I_i$ is normalized by the sum of the weights, which accelerates model convergence; the resulting weighted average fuses adjacent deep and shallow feature maps and thereby strengthens the propagation of multiscale features, improving the ability of the shallow layers to detect small objects. Here, $w_i$ and $w_j$ are learnable weights obtained by training, representing the weights of the input feature maps of layer $i$ and layer $j$, respectively; $I_i$ denotes the input features of layer $i$; $\epsilon$ is a small positive constant that keeps the denominator non-zero and thus ensures numerical stability; and $O$ denotes the output features.
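Equation (5) is the fast normalized fusion introduced in EfficientDet. A minimal sketch follows; as in EfficientDet, it assumes each learnable weight is passed through a ReLU so that it stays non-negative and uses ε = 1e-4 by default. It works on flat lists rather than tensors, and the function name is hypothetical.

```python
from typing import List, Sequence

def fast_normalized_fusion(features: Sequence[Sequence[float]],
                           weights: Sequence[float], eps: float = 1e-4) -> List[float]:
    """O = sum_i w_i / (eps + sum_j w_j) * I_i, with each w_i clipped at 0."""
    if len(features) != len(weights):
        raise ValueError("one weight per input feature map")
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps the weights non-negative
    denom = eps + sum(w)
    return [sum(wi * f[c] for wi, f in zip(w, features)) / denom
            for c in range(len(features[0]))]

shallow = [1.0, 1.0]
deep_upsampled = [3.0, 3.0]
print(fast_normalized_fusion([shallow, deep_upsampled], [1.0, 1.0], eps=0.0))   # [2.0, 2.0]
print(fast_normalized_fusion([shallow, deep_upsampled], [1.0, -5.0], eps=0.0))  # [1.0, 1.0]
```

Compared with a softmax over the weights, this normalization avoids exponentials while still producing a convex combination, which is why it is cheap enough to place after every CAM output.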

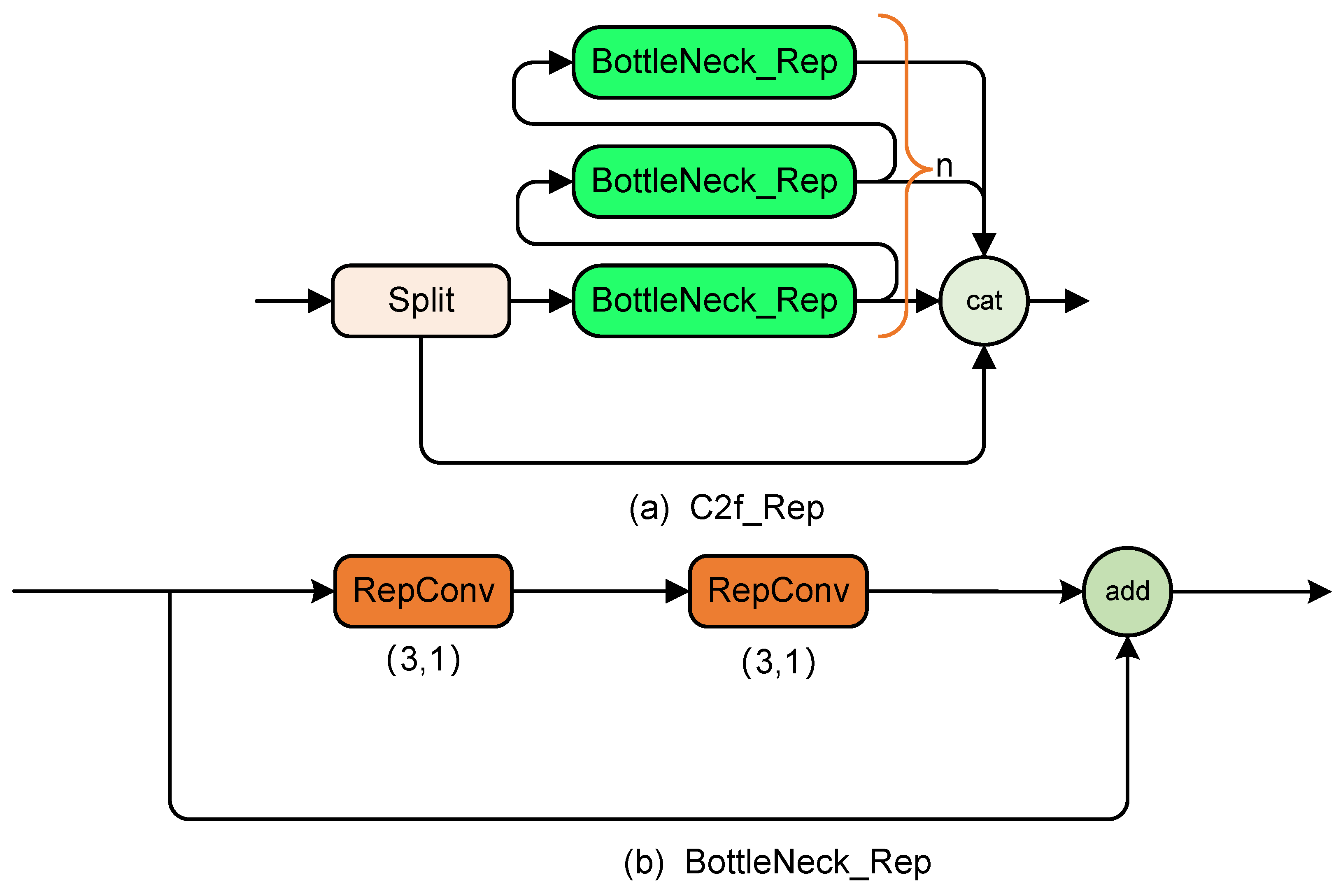

3.3. C2f_Rep

In YOLOv7, RepConv improves accuracy without adding inference cost. During training, it adds a parallel 1 × 1 convolution branch and an identity branch to each 3 × 3 convolution, which increases the model’s representational flexibility; at inference time, these branches are merged into a single 3 × 3 convolution, so the FLOPs and parameter count of the deployed model are unchanged. Inspired by [8], we propose C2f_Rep, which consists mainly of CBS (Conv–BN–SiLU) blocks and BottleNeck_Rep. CBS performs the initial feature fusion, while BottleNeck_Rep further processes the features using depthwise separable and pointwise convolutions for efficient feature extraction. The architecture of C2f_Rep is shown in Figure 4a. Compared with the original RepConv, the multibranch structure and enhanced gradient flow of C2f_Rep improve small-object detection performance while keeping the model lightweight, since the parameter count does not increase substantially.
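The training-time branches of RepConv can be merged because convolution is linear: a 1 × 1 kernel and the identity mapping are both equivalent to 3 × 3 kernels with a single non-zero centre weight. The sketch below checks this on one channel. It assumes stride 1 and equal input and output channels (required for the identity branch), and it omits the BatchNorm folding that a real RepConv also performs; the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]

def conv3x3_same(x: Grid, k: Grid) -> Grid:
    """Single-channel 3x3 cross-correlation with zero padding (output size = input size)."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(3):
                for dj in range(3):
                    ii, jj = i + di - 1, j + dj - 1
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += k[di][dj] * x[ii][jj]
            out[i][j] = acc
    return out

def fuse_rep_branches(k3: Grid, k1: float) -> Grid:
    """Merge 3x3, 1x1 and identity branches into one 3x3 kernel.

    The 1x1 weight and the identity (weight 1) both sit at the kernel centre
    once zero-padded to 3x3, so the three kernels can simply be summed.
    """
    fused = [row[:] for row in k3]
    fused[1][1] += k1 + 1.0  # 1x1 branch + identity branch
    return fused

x = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 0.0, 1.0]]
k3 = [[0.0, 1.0, 0.0], [1.0, -4.0, 1.0], [0.0, 1.0, 0.0]]
k1 = 0.5

# training-time view: three parallel branches summed
three_branch = [[c + k1 * xv + xv for c, xv in zip(rc, rx)]
                for rc, rx in zip(conv3x3_same(x, k3), x)]
# inference-time view: one fused 3x3 convolution
single_branch = conv3x3_same(x, fuse_rep_branches(k3, k1))
print(all(abs(a - b) < 1e-9 for ra, rb in zip(three_branch, single_branch)
          for a, b in zip(ra, rb)))  # True
```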

In our design, the output of YOLOv7’s Conv–BN–SiLU (CBS) block is split into n BottleNeck_Rep branches, whose outputs are then concatenated with the original features. A convolution is subsequently applied to adjust the channel count and integrate the information. Unlike the original structure, which uses a single convolution, C2f_Rep introduces parallel BottleNeck_Rep branches to enhance feature diversity, as shown in Figure 4a. Each BottleNeck_Rep, depicted in Figure 4b, stacks two reparameterized convolutions with a residual connection, improving accuracy with only a slight increase in FLOPs and parameters.
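The channel bookkeeping of a C2f-style block can be sketched at a single pixel. This assumes C2f_Rep follows the Ultralytics C2f layout (split the CBS output into two halves, run n bottlenecks in sequence, concatenate all intermediate outputs), which the description above suggests but does not fully specify. The bottleneck is a hypothetical placeholder, and the final fusion convolution is left out.

```python
from typing import Callable, List

Feature = List[float]  # channel values at one spatial position

def c2f_style_forward(x: Feature, n: int,
                      bottleneck: Callable[[Feature], Feature]) -> Feature:
    """Channel flow of a C2f-style block, before its final fusion convolution.

    Assumed layout: split into two halves, apply n bottlenecks in sequence to
    the second half, keep every intermediate output, and concatenate them all.
    """
    if len(x) % 2:
        raise ValueError("expected an even number of channels")
    c = len(x) // 2
    parts: List[Feature] = [x[:c], x[c:]]
    for _ in range(n):
        parts.append(bottleneck(parts[-1]))
    return [v for part in parts for v in part]  # (2 + n) * c channels

def toy_bottleneck_rep(f: Feature) -> Feature:
    """Hypothetical stand-in for two stacked reparameterized convs plus a residual."""
    transformed = [0.5 * v for v in f]  # placeholder for conv(conv(f))
    return [a + b for a, b in zip(f, transformed)]

out = c2f_style_forward([1.0, 2.0, 3.0, 4.0], n=2, bottleneck=toy_bottleneck_rep)
print(out)  # [1.0, 2.0, 3.0, 4.0, 4.5, 6.0, 6.75, 9.0]
```

Keeping every intermediate output in the concatenation is what gives C2f-style blocks their many short gradient paths; the closing convolution then maps the (2 + n) · c channels back to the desired width.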

3.4. Loss Function

To address the challenges of assigning positive anchors in small-object detection, we adopt an enhanced variant of the Optimal Transport Assignment (OTA) strategy [61]. Unlike traditional methods based on fixed IoU thresholds, OTA dynamically selects foreground anchors based on a unified cost matrix that incorporates both classification confidence and localization distance. This is particularly effective when target objects are sparsely distributed, as is common in small-object scenarios, where conventional assignment strategies often lead to suboptimal matches and imbalance. By formulating label assignment as an optimal transport problem, OTA provides more robust and adaptive assignments, improving convergence stability and overall detection accuracy.
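OTA casts label assignment as optimal transport: each ground truth supplies k units of positive label, the background supplies the remainder, and every anchor demands one unit. The sketch below solves the entropy-regularized problem with Sinkhorn-Knopp iterations. It is illustrative only: the cost values are hypothetical, k is fixed to 1 instead of OTA’s dynamic estimate, and it is not the enhanced variant used in our detector.

```python
import math
from typing import List, Sequence

def sinkhorn(cost: Sequence[Sequence[float]], supply: Sequence[float],
             demand: Sequence[float], eps: float = 0.5, iters: int = 200) -> List[List[float]]:
    """Entropy-regularized optimal transport plan via Sinkhorn-Knopp iterations."""
    if abs(sum(supply) - sum(demand)) > 1e-9:
        raise ValueError("total supply must equal total demand")
    m, n = len(supply), len(demand)
    k = [[math.exp(-cost[i][j] / eps) for j in range(n)] for i in range(m)]
    u, v = [1.0] * m, [1.0] * n
    for _ in range(iters):
        # alternately rescale rows and columns to match the marginals
        u = [supply[i] / sum(k[i][j] * v[j] for j in range(n)) for i in range(m)]
        v = [demand[j] / sum(k[i][j] * u[i] for i in range(m)) for j in range(n)]
    return [[u[i] * k[i][j] * v[j] for j in range(n)] for i in range(m)]

def assign(plan: List[List[float]]) -> List[int]:
    """Each anchor (column) goes to the supplier (row) that sends it the most mass."""
    return [max(range(len(plan)), key=lambda i: plan[i][j]) for j in range(len(plan[0]))]

# Row 0: one ground-truth box supplying k = 1 unit; row 1: background supplying the rest.
cost = [[0.1, 1.0, 2.0],  # GT-to-anchor cost, e.g. cls + box loss (hypothetical values)
        [1.0, 1.0, 1.0]]  # background-to-anchor cost (hypothetical constant)
plan = sinkhorn(cost, supply=[1.0, 2.0], demand=[1.0, 1.0, 1.0])
print(assign(plan))  # [0, 1, 1]: anchor 0 is positive for the GT, anchors 1 and 2 are background
```

Because the assignment is solved jointly, an anchor that is cheap for several ground truths is given to the one where it lowers the total cost most, rather than to whichever box happens to clear a fixed IoU threshold.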

The overall loss comprises three terms: CIoU-based box regression, BCE objectness, and BCE classification. Compared to YOLOv7’s default configuration, we introduce two key enhancements specifically tailored for dense small-object detection: first, we replace GIoU with Complete IoU (CIoU), which better penalizes localization errors in both aspect ratio and center alignment, especially beneficial for tightly clustered small targets; and second, we integrate OTA for dynamic label assignment based on spatial constraints and feature similarity.
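The CIoU term adds a normalized centre-distance penalty and an aspect-ratio consistency penalty to IoU. A minimal sketch of the loss 1 - CIoU for a single pair of boxes follows; the corner format (x1, y1, x2, y2) and the ε value are assumptions, and the function name is hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """1 - CIoU, where CIoU = IoU - rho^2 / c^2 - alpha * v."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)
    # squared distance between the two box centres
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0
    # squared diagonal of the smallest box enclosing both
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    # aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1.0 - (iou - rho2 / c2 - alpha * v)

print(round(ciou_loss((0, 0, 2, 2), (1, 0, 3, 2)), 4))  # 0.7436
print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)) < 1e-6)     # True
```

In the shifted example the boxes share a shape, so v = 0 and the loss reduces to 1 - IoU plus the centre-distance penalty (1/3 overlap lost, 1/13 distance term), which is exactly the signal that keeps pulling tightly clustered small boxes toward their targets.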

Furthermore, we incorporate anchor scale-aware loss weighting and apply gain-adjusted anchor matching to normalize loss contributions across object sizes. This prevents large objects from dominating the optimization process and ensures balanced gradient updates throughout training. These combined modifications result in a more stable and effective loss design for detecting small and densely distributed targets.

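$$\mathcal{L} = \frac{\lambda_{box}}{N}\sum_{i=1}^{N}\mathcal{L}_{CIoU}(\hat{b}_i, b_i) + \lambda_{obj}\,\mathcal{L}_{BCE}(\hat{o}, o) + \frac{\lambda_{cls}}{N}\sum_{i=1}^{N}\mathcal{L}_{BCE}(\hat{p}_i, p_i)$$

In the above formulation, $N$ denotes the number of positive samples, dynamically assigned by the OTA matcher. $\hat{b}_i$ and $b_i$ represent the predicted and ground-truth bounding boxes, respectively. $\hat{o}$ and $o$ are the predicted objectness confidence and its binary ground-truth label (1 for foreground, 0 for background). Similarly, $\hat{p}_i$ and $p_i$ refer to the predicted class probability distribution and the one-hot ground-truth class vector. The weights $\lambda_{box}$, $\lambda_{obj}$, and $\lambda_{cls}$ control the relative contribution of each term; following YOLOv7, we set them to 0.05, 1.0, and 0.5, respectively, unless otherwise specified.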

4. Experiments

4.1. Datasets

This study evaluates our approach on two prominent aerial-image datasets and a widely recognized general object-detection benchmark: VisDrone2021-DET [41] (http://aiskyeye.com) (accessed on 19 May 2025), DOTA-v1.0 [40] (https://captain-whu.github.io/DOTA/dataset.html) (accessed on 19 May 2025), and MS COCO 2017 [43] (https://cocodataset.org) (accessed on 19 May 2025). The VisDrone2021-DET dataset [41] covers diverse real-world scenarios captured in 14 cities in China under various weather and lighting conditions, spanning both urban and rural landscapes. It consists of 6471 images for training, 548 for validation, and 3190 for testing.

The DOTA dataset [40] is a large-scale benchmark widely adopted in computer vision and remote sensing, focusing on object detection in aerial images. It contains aerial photographs captured by various sensors and platforms, with image sizes ranging from about 800 × 800 to 4000 × 4000 pixels. We use DOTA-v1.0, which comprises 2806 images of scenes such as cities, villages, and highways. It covers 15 categories of common remote-sensing objects, including airplanes, ships, and vehicles, with 188,282 annotated instances in total. The dataset is partitioned into training, validation, and test sets in proportions of 1/2, 1/6, and 1/3, respectively. To accommodate the high-resolution images, all original images are resized to a fixed resolution.

To further assess the generalization capability of our method, we conduct experiments on the MS COCO 2017 dataset [43], a widely recognized object-detection benchmark. The dataset comprises 118,287 training images, 5000 validation images, and 40,670 test images (test2017). It features 80 object categories spanning a wide range of everyday scenes, including people, animals, vehicles, and household objects. COCO images are challenging because of their high diversity in object scale, occlusion, and context: each image contains an average of 7.7 object instances, often with overlapping objects and cluttered backgrounds. The dataset provides detailed instance-level annotations, including bounding boxes, object categories, and segmentation masks. During training and evaluation, all images are resized to 640 × 640 pixels for consistency. COCO thus serves as a robust benchmark for evaluating object-detection performance under real-world conditions.

4.2. Evaluation Metrics

To assess the effectiveness of our method, we follow the MS COCO evaluation protocol, which measures accuracy using three metrics: AP (averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05), $AP_{50}$, and $AP_{75}$. Additionally, to demonstrate the practicality of our approach for small objects, we report $AP_s$, $AP_m$, and $AP_l$. Specifically, $AP_s$ is the average precision for small objects (area up to $32^2$ pixels), while $AP_m$ and $AP_l$ are the average precision for medium ($32^2$ to $96^2$ pixels) and large objects (larger than $96^2$ pixels), respectively.
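The size split behind small, medium, and large AP reduces to two area thresholds. The helper below (a hypothetical name) is a simplified illustration: pycocotools applies these ranges to each annotation’s `area` field (the segmentation area for COCO instances), and its range boundaries are inclusive, so objects lying exactly on a threshold are handled slightly differently there.

```python
def coco_size_bucket(area: float) -> str:
    """COCO object-size split used for small/medium/large AP (area in pixels^2)."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"

print(coco_size_bucket(16 * 16))    # small
print(coco_size_bucket(50 * 50))    # medium
print(coco_size_bucket(100 * 100))  # large
```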

4.3. Implementation Details

All models are trained on a single NVIDIA RTX 3090 GPU (24 GB) using PyTorch 1.12.0 with CUDA 11.6 and Python 3.8. Our training and inference pipelines are implemented in PyTorch, and we leverage libraries such as pycocotools for evaluation, albumentations for data augmentation, and OpenCV for image preprocessing.

We adopt the AdamW optimizer with hyperparameters and . The initial learning rate is set to 1.0 and decays to 1.0 following a cosine annealing schedule. We apply a weight decay of 5.0 and use an exponential moving average (EMA) with a momentum of 0.9999. All models are trained for 100 epochs on the VisDrone, COCO, and DOTA datasets, using a batch size of 8 images per GPU with an input resolution of 640 × 640. Additionally, mixed-precision training is enabled through AMP to accelerate computation and reduce memory usage.
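The learning-rate schedule and weight EMA described above can be sketched as follows. The values of `LR_MAX` and `LR_MIN` are hypothetical placeholders rather than the paper’s settings, the schedule is evaluated per epoch without warm-up, and the EMA omits the decay ramp-up that YOLO codebases commonly apply; the function names are hypothetical.

```python
import math
from typing import List

def cosine_lr(epoch: int, total_epochs: int, lr_max: float, lr_min: float) -> float:
    """Cosine annealing from lr_max (epoch 0) down to lr_min (epoch total_epochs)."""
    t = min(max(epoch, 0), total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t))

def ema_update(ema: List[float], params: List[float], decay: float = 0.9999) -> List[float]:
    """Exponential moving average of weights: ema <- decay * ema + (1 - decay) * params."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema, params)]

# Hypothetical values for illustration only; they are not the paper's settings.
LR_MAX, LR_MIN, EPOCHS = 1e-3, 1e-5, 100
schedule = [cosine_lr(e, EPOCHS, LR_MAX, LR_MIN) for e in range(EPOCHS + 1)]
ema = ema_update([1.0, 2.0], [0.0, 0.0])  # weights drift slowly toward the new values
```

A decay of 0.9999 averages over roughly the last 10,000 updates, so the EMA weights used for evaluation change far more smoothly than the raw optimizer weights.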

We train the ECAN-Detector from scratch without using ImageNet pretrained weights to validate its architectural effectiveness. Our early tests showed that pretraining slightly reduced accuracy in small-object detection scenarios.

With these settings in place, we now analyze the contribution of each component.

4.4. Ablation Experiments

The experimental evaluation in this paper consists of several key components. First, we conduct a comprehensive ablation study on the VisDrone2021-DET dataset to assess the performance contribution of each module within the ECAN-Detector architecture. We then provide an in-depth analysis of the experimental results, including the overall detection performance and category-wise comparisons against baseline models. In addition, we investigate how fusion strategies and dilation rates in CAM affect detection accuracy. Subsequently, we evaluate the robustness and generalization capability of the proposed method on the DOTA dataset. To further emphasize the architectural novelty of ECAN-Detector, we conduct a comparative study on the COCO dataset against recent YOLO variants and Transformer-based detectors. Finally, we assess the practical deployability of the ECAN-Detector through real-world inference benchmarking on edge devices.

4.4.1. Performance Comparison of Each Sub-Module

To verify the effectiveness of our method, we conduct ablation experiments on each sub-module of ECAN-Detector and analyze their impact on overall performance, as shown in Table 1. First, adding the P2 layer notably improves detection performance, with gains of 1.8% in overall AP and 2.0% in $AP_s$ over the baseline. These results show that high-resolution early-stage features significantly enhance the model’s ability to detect small and densely packed objects in aerial scenes. Next, we evaluate the effect of DST. Built on self-attention, DST excels at capturing contextual information around objects, enabling better adaptation to objects of different sizes. Compared to C2f_Rep, DST further boosts overall accuracy, improving

by 3.1%. We also assess CAM, which adds more detailed contextual information and performs multiscale feature fusion; it has a notable effect on

and

, enhancing them by 0.3% and 0.8%, respectively. We then evaluate integrating P2 and DST simultaneously: compared with adding P2 alone, AP improves by a further 0.6%. Finally, compared with the baseline, ECAN-Detector achieves improvements of 3.1% in AP and 3.5% in

.

We note that applying CAM in isolation slightly reduces AP (24.8% → 24.7%) in Table 1. We attribute this to CAM aggregating context over large receptive fields without concurrent spatial refinement: multi-dilation fusion can over-smooth clustered small-object regions and weaken boundary localization. Moreover, CAM was designed to enhance early high-resolution features, particularly in conjunction with the P2 shallow layer. When P2 is absent from the fusion process, CAM lacks the high-frequency structural cues it needs to recover fine-grained object details, which further impairs detection, especially for small, low-contrast targets.

4.4.2. Efficiency Analysis of ECAN-Detector

In Table 2, we systematically evaluate the individual and combined effects of P2, C2f_Rep, and CAM on model performance. First, we analyze the contribution of the P2 layer. Compared with the baseline, adding P2 keeps FLOPs and parameter counts nearly unchanged while delivering a noticeable improvement in

. Next, we assess C2f_Rep, an improved variant of C2f, which slightly increases FLOPs and parameter count but significantly enhances detection performance for large objects. We then evaluate CAM. By aggregating shallow contextual information, CAM substantially improves overall performance but considerably increases FLOPs. Finally, we examine combining CAM with P2: in this configuration, AP and

each improve by 2.4% over the baseline, with only a modest rise in FLOPs and parameters. Overall, integrating all three components (P2, C2f_Rep, and CAM) yields further gains of 3.1% in AP and 3.5% in APs compared with the baseline.

4.4.3. Performance Comparison of C2f_Rep and C2f

In Table 3, we evaluate the individual and combined effects of the C2f, C2f_Rep, and ECAN modules on model performance. First, we examine the impact of C2f. Compared with the baseline, C2f achieves a modest improvement in

while keeping FLOPs and parameter counts nearly unchanged. Next, we analyze C2f_Rep, an enhanced version of C2f. Although it slightly increases FLOPs and parameters, it improves detection performance for large objects.

Subsequently, we assess the contribution of the ECAN module. By incorporating shallow contextual information, ECAN substantially boosts overall performance, albeit with a noticeable increase in computational cost. We then examine integrating both C2f and ECAN, which improves AP by 3.0% and APs by 3.4% over the baseline with only a marginal increase in FLOPs and parameters.

Furthermore, replacing C2f with C2f_Rep yields a 0.1% gain in both AP and APs with the same FLOPs and parameter count. Overall, integrating C2f_Rep and ECAN achieves a 3.1% increase in AP and a 3.5% increase in APs compared with the baseline model.

4.4.4. Transformer Encoder vs. DST Comparison

Although DST inherits the core concept of self-attention from standard Transformer encoders, it is architecturally distinct and optimized for dense small-object detection. First, DST replaces standard dot-product attention with a scaled-cosine attention (SCA) mechanism, which enhances stability and angular sensitivity in low-resolution feature maps. Second, unlike fixed-size token windows or global attention, DST adaptively scales the receptive field across spatial hierarchies, allowing more flexible multiscale aggregation. To empirically justify this design, we compare DST with a standard Transformer encoder under identical backbone and training conditions (Table 4). DST achieves higher AP (25.4% vs. 25.1%) and APs (16.8% vs. 16.3%) at the cost of a moderate increase in parameters (+6.2M) and FLOPs (+17.1G). These results indicate that DST is both structurally and functionally distinct from typical Transformer encoders and is more effective for dense small-object scenes such as VisDrone.
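The difference between dot-product and scaled-cosine attention can be sketched in plain Python. This is a minimal illustration under stated assumptions. It follows the cosine-similarity-over-temperature form introduced in Swin Transformer V2, uses a fixed temperature `tau` (a hypothetical value; learnable per-head temperatures are also common), and omits DST's dynamic receptive-field scaling, which this section does not specify in enough detail to reproduce.

```python
import math


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to unit length (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / max(norm, eps) for x in vec]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_weights(query, keys):
    """Standard attention weights: softmax(q . k / sqrt(d))."""
    d = len(query)
    return softmax([sum(a * b for a, b in zip(query, k)) / math.sqrt(d) for k in keys])


def scaled_cosine_weights(query, keys, tau=0.1):
    """Scaled-cosine attention weights: softmax(cos(q, k) / tau).

    Logits are bounded to [-1/tau, 1/tau], so they cannot grow with feature magnitude.
    """
    q = l2_normalize(query)
    return softmax([sum(a * b for a, b in zip(q, l2_normalize(k))) / tau for k in keys])


def attend(weights, values):
    """Weighted sum of value vectors."""
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
small_q, large_q = [1.0, 2.0], [10.0, 20.0]  # same direction, 10x magnitude

print("dot-product:", dot_product_weights(small_q, keys), dot_product_weights(large_q, keys))
print("cosine     :", scaled_cosine_weights(small_q, keys), scaled_cosine_weights(large_q, keys))
print("output     :", attend(scaled_cosine_weights(small_q, keys), values))
```

Scaling the query by 10 pushes the dot-product weights toward a one-hot distribution, while the cosine weights stay unchanged. This magnitude invariance is one plausible source of the stability the text attributes to SCA.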

4.4.5. Effects of Individual Classes

Single-class experiments allow fine-grained analysis of category-specific performance, revealing both strengths and weaknesses. They support capability assessment, issue diagnosis, targeted optimization, and better interpretability. Table 5 reports per-class performance on VisDrone2021-DET for the baseline and after adding each module independently. The results show notable per-class gains. ECAN yields the largest overall improvement and performs well on classes that appear small in the original images, improving pedestrian, car, truck, and tricycle by 4%, 4%, 1.7%, and 1.6%, respectively. After integrating all modules, performance improves markedly across every class relative to the baseline. Notably, the ECAN module boosts the Vehicle class by 10.6 percentage points. Moreover, our method achieves the best detection performance in all six categories.

4.4.6. Different Fusion Methods

To evaluate fusion strategies within CAM, we compare weighted, adaptive, and concatenation fusion in Table 6. The results show that weighted fusion improves all evaluation metrics. Adaptive fusion enhances accuracy for regular-sized objects but yields minimal improvement in small-object detection. Concatenation fusion boosts the accuracy of large objects but provides only limited enhancement for small objects. Since our method is primarily designed for small-object detection in complex traffic scenes, where small objects account for a large share of targets, weighted fusion is the most suitable choice.
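This section does not give CAM's exact fusion formula. As a hedged sketch, the snippet below assumes a BiFPN-style fast normalized weighted fusion, out = Σ_i w_i·F_i / (ε + Σ_j w_j) with w_i = ReLU(raw_i), and contrasts it with channel concatenation. Feature maps are reduced to flat lists of equal length (already resized to a common shape) to keep the example dependency-free, and the weight values are illustrative.

```python
def weighted_fusion(features, raw_weights, eps=1e-4):
    """Fast normalized fusion of same-shaped feature maps (BiFPN-style, assumed here).

    out = sum_i w_i * F_i / (eps + sum_j w_j), with w_i = max(0, raw_i).
    """
    if len(features) != len(raw_weights):
        raise ValueError("need exactly one weight per input feature map")
    if len({len(f) for f in features}) != 1:
        raise ValueError("feature maps must share a shape before weighted fusion")
    w = [max(0.0, r) for r in raw_weights]  # ReLU keeps every weight non-negative
    denom = eps + sum(w)
    norm_w = [wi / denom for wi in w]       # weights now sum to just under 1
    fused = [sum(nw * f[i] for nw, f in zip(norm_w, features)) for i in range(len(features[0]))]
    return fused, norm_w


def concat_fusion(features):
    """Channel concatenation: keeps every branch and leaves mixing to a later 1x1 conv."""
    return [x for f in features for x in f]


# Three hypothetical branch outputs (e.g., from dilation rates 1, 3, 5), 4 values each.
branches = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0], [4.0, 4.0, 4.0, 4.0]]
fused, norm_w = weighted_fusion(branches, raw_weights=[1.0, 1.0, -0.5])
print("normalized weights:", [round(w, 4) for w in norm_w])
print("weighted fusion   :", [round(x, 4) for x in fused], "length", len(fused))
print("concat length     :", len(concat_fusion(branches)))
```

Weighted fusion keeps the channel count fixed and adds only one scalar per branch, whereas concatenation triples the channel count here and defers the mixing to additional convolution parameters.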

4.4.7. CAM Dilation Rates

We conducted ablation experiments to investigate the impact of different dilation-rate configurations in the CAM module, comparing four settings: (2,4,6), (2,2,3), (5,2,1), and (1,3,5). As summarized in Table 7, the (1,3,5) configuration achieves the best performance, yielding the highest AP of 27.9% and APs of 19.9%. These results empirically support our selection of (1,3,5) as the dilation setting for CAM in the final ECAN-Detector model.
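One way to compare the four settings is by the span each dilated kernel covers. The sketch below assumes 3×3 kernels in parallel branches (the kernel size is not stated in this section) and reports, per axis, the effective kernel size `k + (k - 1)(d - 1)` and whether any branch samples the immediately adjacent pixel (offset ±1). This is descriptive geometry only and does not by itself explain the accuracy differences in Table 7.

```python
def effective_kernel_size(k, d):
    """Span (in pixels, per axis) of a k-tap kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def tap_offsets(k, d):
    """Per-axis sampling offsets of a centered k-tap kernel with dilation d."""
    center = (k - 1) // 2
    return {(j - center) * d for j in range(k)}


KERNEL = 3  # assumption: 3x3 dilated convolutions in every CAM branch
CONFIGS = [(2, 4, 6), (2, 2, 3), (5, 2, 1), (1, 3, 5)]

for rates in CONFIGS:
    spans = [effective_kernel_size(KERNEL, d) for d in rates]
    offsets = set().union(*(tap_offsets(KERNEL, d) for d in rates))
    print(f"{rates}: spans={spans}, distinct spans={len(set(spans))}, samples +/-1: {1 in offsets}")
```

Under this assumption, (1,3,5) yields three distinct spans (3, 7, 11) and keeps a dense d = 1 branch, whereas (2,4,6) and (2,2,3) never sample adjacent pixels; (5,2,1) differs from (1,3,5) only in its middle span (5 instead of 7).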

4.4.8. Robustness on DOTA

To further validate the robustness of ECAN-Detector, we evaluated it on the DOTA dataset [42]. As shown in Table 8, adding our modules, starting with ECAN, improves overall accuracy compared with the baseline. The improvements in AP and

are the most notable, with increases of 0.8% and 0.6%, respectively, further supporting the robustness of our approach. The results also show a distinct improvement for medium and large objects.

4.4.9. Comparison with YOLO Variants and Transformers

ECAN-Detector distinguishes itself from existing YOLO variants (e.g., YOLOv8 [8], YOLOv9 [9]) and Transformer-based detectors (e.g., DETR [15], Swin Transformer [17]) through both architectural innovations and its focus on small-object detection. While YOLOv8 and YOLOv9 mainly enhance backbone and head components via compound scaling and decoupled design, ECAN-Detector introduces two dedicated modules: the dynamic scaled transformer (DST) and the context-augmentation module (CAM).

DST employs a lightweight scaled-cosine attention mechanism to dynamically adjust receptive fields across spatial hierarchies, effectively capturing dispersed and context-dependent small targets. CAM supplements this by aggregating fine-grained context through multibranch dilated convolutions and normalized attention, helping the network recover localized details lost in early downsampling. Together, DST and CAM form a synergistic enhancement tailored to small-object challenges such as underdetection, low resolution, and occlusion.

In contrast, Transformer-based models such as DETR and Swin Transformer face key limitations in small-object detection, including high computational complexity and inadequate multiscale representation. For example, DETR needs long training schedules (up to 500 epochs) and lacks explicit multiscale fusion, while Swin’s fixed-window attention restricts contextual adaptability. ECAN-Detector retains YOLO-style efficiency (a one-stage pipeline trained for 100 epochs) while integrating attention-based context modeling.

As shown in Table 9, ECAN-Detector achieves an APs of 37.8 on the COCO2017 validation set, surpassing YOLOv9-C and YOLOv8 by 1.6 and 1.1 points, respectively, while maintaining a favorable trade-off between accuracy and computational cost. Compared with Swin-S (838 GFLOPs) and DETR (28 FPS), ECAN runs at 86.2 FPS with only 42.9 GFLOPs, demonstrating its suitability for real-time small-object detection.
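The throughput and compute figures quoted above can be put on a common footing with simple arithmetic: per-frame latency is 1000 / FPS milliseconds. The snippet uses only the numbers stated in this paragraph and assumes all FPS figures were measured under comparable conditions.

```python
def latency_ms(fps):
    """Per-frame latency in milliseconds for a given throughput."""
    return 1000.0 / fps


reported = {
    "ECAN-Detector": {"fps": 86.2, "gflops": 42.9},
    "DETR": {"fps": 28.0},
    "Swin-S": {"gflops": 838.0},
}

ecan = reported["ECAN-Detector"]
print(f"ECAN latency : {latency_ms(ecan['fps']):.1f} ms/frame")
print(f"DETR latency : {latency_ms(reported['DETR']['fps']):.1f} ms/frame")
print(f"ECAN vs DETR throughput : {ecan['fps'] / reported['DETR']['fps']:.2f}x")
print(f"Swin-S vs ECAN compute  : {reported['Swin-S']['gflops'] / ecan['gflops']:.1f}x")
```

At 86.2 FPS the per-frame budget is roughly 11.6 ms versus about 35.7 ms for DETR, i.e., about three times the throughput, while Swin-S requires roughly 19.5 times the GFLOPs.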

Table 9 compares ECAN-Detector against YOLOv8, YOLOv9, DETR, and Swin-S on the COCO2017 val set. ECAN achieves an APs of 38.4, outperforming YOLOv8n and YOLOv9s by and points, respectively, while maintaining lower or comparable FLOPs.

4.4.10. Edge Deployment Insights

We evaluate ECAN-Detector’s practical feasibility by benchmarking it under INT8 quantization with TensorRT on three representative devices from NVIDIA Corporation (Santa Clara, CA, USA): RTX 3090, Jetson AGX Orin, and Jetson Xavier NX.

As shown in Table 10, the model runs at over 25 FPS on Orin (INT8), meeting real-time constraints for many embedded vision systems.
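For readers unfamiliar with INT8 deployment, the sketch below shows the core idea of symmetric per-tensor INT8 quantization: real values are mapped to integers in [-127, 127] with a single scale and then dequantized. It uses simple max-abs calibration as an assumed stand-in; TensorRT selects scales through its own calibration procedure, and this is not the authors' deployment pipeline. The last line converts the 25 FPS figure into a per-frame latency budget.

```python
def symmetric_int8_quantize(values):
    """Per-tensor symmetric INT8 quantization with max-abs calibration (illustrative)."""
    amax = max(abs(v) for v in values)
    scale = amax / 127.0 if amax > 0 else 1.0
    # Python's round() rounds halves to even; clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]


activations = [0.02, -0.5, 1.27, 0.333]  # hypothetical activation values
q, scale = symmetric_int8_quantize(activations)
recon = dequantize(q, scale)
max_err = max(abs(a - r) for a, r in zip(activations, recon))
print("int8 codes:", q, "scale:", scale)
print("max reconstruction error:", max_err, "bound (scale / 2):", scale / 2)

print("frame budget at 25 FPS:", 1000.0 / 25, "ms")
```

The rounding error per value is at most half the scale, which is why the choice of calibration range largely determines INT8 accuracy.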

4.5. Visualization of Results

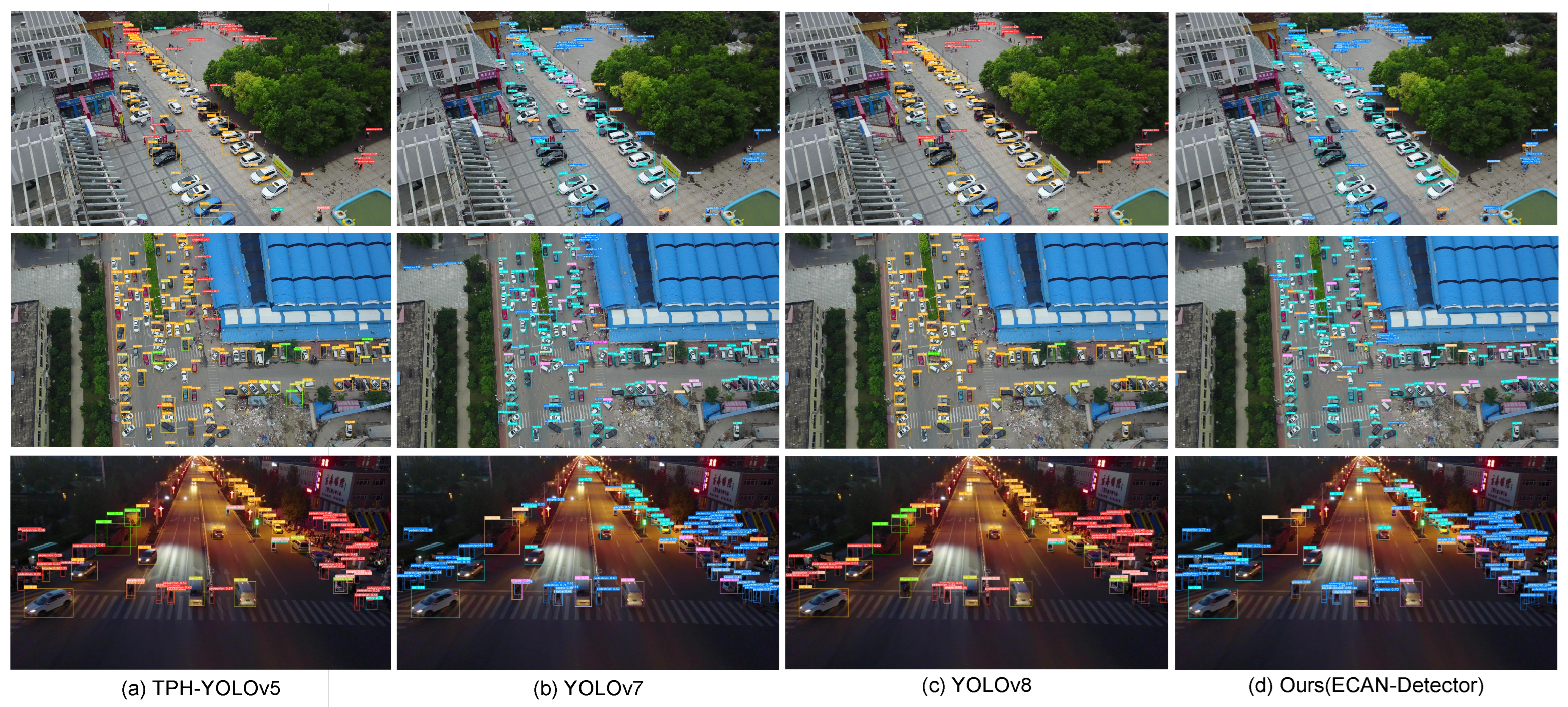

To clearly illustrate the advantages of our proposed method, we compare its detection results with those of TPH-YOLOv5, YOLOv7, and YOLOv8 in Figure 5. In the street scene in the first row, YOLOv7 performs worst: it misclassifies the traffic light on the left as a pedestrian and fails to detect the car on the right. TPH-YOLOv5 and YOLOv8 also miss the tricycle in the middle. Our method achieves the most accurate detection in these regions; the only exception is a pedestrian on the left that is missed because it is occluded by a tree.

In the second-row street scene near the mall, the other three models produce multiple false positives on the left side, misclassifying barriers and traffic lights as pedestrians. Our method outperforms them in the left area but still misses some pedestrians and vehicles in the densely packed area on the right.

In the night scene of the final row, YOLOv7 yields the poorest results, missing the tricycle on the zebra crossing and performing inadequately in the densely populated right region. In contrast, ECAN-Detector achieves the best detection performance among all detectors. Although most objects are correctly detected, several pedestrians and vehicles remain unrecognized due to low illumination in the nighttime scene.

Overall, our proposed method surpasses the other detectors in densely populated small-object scenarios and maintains robust performance under low-light conditions, significantly improving the baseline’s detection accuracy.

4.6. Discussion

Previous sections have demonstrated the effectiveness of our method in both design and empirical results. In this section, we explore the underlying reasons for its success, focusing on the additional modules and key factors that contribute to its performance. ECAN is specifically designed to strengthen the shallow network’s capability to detect and represent small objects. As shown in Table 1, the P2 layer provides high-resolution feature representations, improving the capture of fine details of small objects. Meanwhile, DST and CAM further strengthen shallow contextual information: DST incorporates a scaling mechanism based on cosine attention to stabilize weight calculations, while CAM uses a scaling factor with three dilated convolutions to capture contextual information from different receptive fields.

Furthermore, the feature fusion operation effectively integrates deep and shallow features, enhancing the network’s capacity to represent multiscale features. As a result, our method detects small objects well in crowded environments and under challenging lighting conditions, improving on the baseline by 0.8% in AP and 0.6% in

, as demonstrated in Table 6. Among the fusion methods compared in Table 6, weighted feature fusion outperforms the other approaches, achieving the best results.

To further enhance the performance of small-object detection, we introduce C2f_Rep in the head, as shown in

Table 2. C2f_Rep effectively incorporates gradient flow information while remaining lightweight, without significantly increasing the number of parameters or computations. Additionally, in

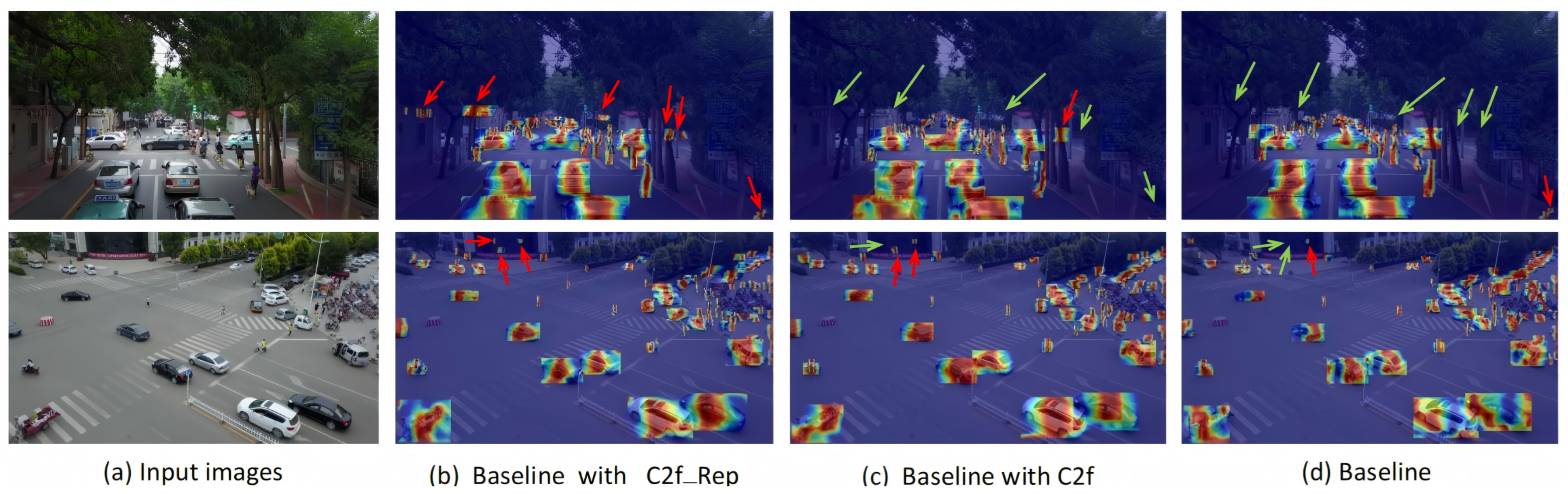

Figure 6, we compare the heatmap visualizations of the baseline model, the C2f addition, and our proposed C2f_Rep. In the zebra crossing scene (second row), the heatmap with C2f_Rep shows fewer false detections of objects reflected in the glass compared to the baseline and C2f heatmaps. It also performs better in detecting the densely populated area in the upper right corner. Overall, the heatmap generated by C2f_Rep outperforms the others, effectively reducing false positives and false negatives.

4.7. Comparison with SOTA Detectors

To further validate the performance of our method in dense small-object detection scenarios, we compare it with other state-of-the-art (SOTA) detectors on the VisDrone2021-DET dataset. As shown in Table 11 and Table 12, our method achieves a significant improvement in detection precision, with increases of 3.1% in AP and 3.5% in APs over the baseline. It outperforms Faster R-CNN [1] and Cascade R-CNN [3], achieving a 40.1% higher FPS and a 5.5% higher AP while maintaining similar computational complexity. Compared with DL-YOLOX [62], our method achieves higher AP (+1.3%), AP50 (+4.5%), and APs (+11.3%), although it incurs a significantly higher computational cost and parameter count. Furthermore, our method surpasses recent YOLO variants, including YOLOv5 [5], YOLOv6 [6], YOLOv8 [8], and YOLOv9 [9], in accuracy while maintaining comparable speed. In small-object detection, our method outperforms TPH-YOLOv5 [38] on all metrics, demonstrating its effectiveness. However, computational efficiency remains an area for future improvement. Specifically, relative to the YOLOv7 baseline, ECAN-Detector raises GFLOPs about 2.3-fold (from 105.3 to 246.3) and parameters by 15.3% (from 37.2 M to 42.9 M), which lowers throughput from 128 FPS to 54 FPS. These overheads stem mainly from the additional P2 branch, DST blocks, and CAM fusion. Nonetheless, the accuracy gains (+3.1 AP, +3.5 APs) yield a markedly better accuracy-to-cost ratio than heavyweight two-stage models (e.g., Cascade R-CNN, 239.4 GFLOPs/69.1 FPS) while still running above 50 FPS on an RTX 3090, making the model viable for near real-time perception on edge GPUs (e.g., Jetson AGX Orin) after TensorRT sparsity pruning.
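The overhead figures in the preceding paragraph can be checked directly from the reported baseline and ECAN-Detector numbers; the snippet simply recomputes the ratios and the corresponding per-frame latencies.

```python
baseline = {"gflops": 105.3, "params_m": 37.2, "fps": 128.0}  # YOLOv7 baseline, as reported
ecan = {"gflops": 246.3, "params_m": 42.9, "fps": 54.0}       # ECAN-Detector, as reported

flops_ratio = ecan["gflops"] / baseline["gflops"]
param_increase_pct = 100.0 * (ecan["params_m"] / baseline["params_m"] - 1.0)
latency_before = 1000.0 / baseline["fps"]  # ms per frame
latency_after = 1000.0 / ecan["fps"]

print(f"GFLOPs ratio      : {flops_ratio:.2f}x")
print(f"Parameter increase: {param_increase_pct:.1f}%")
print(f"Latency           : {latency_before:.1f} ms -> {latency_after:.1f} ms per frame")
```

This confirms the roughly 2.3-fold compute increase and the 15.3% parameter growth, and shows that the FPS drop corresponds to per-frame latency rising from about 7.8 ms to about 18.5 ms.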

The gap between overall AP (27.9%) and small-object APs (19.9%) on VisDrone2021-DET stems from the challenging nature of the dataset. Over 72% of annotated targets are smaller than pixels, and the images often feature dense object distributions, severe occlusions, motion blur, and complex aerial perspectives. These factors make small objects especially difficult to detect and localize precisely.
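In the COCO-style evaluation that APs refers to, objects are grouped by area: small (below 32²), medium (32² up to 96²), and large (above that). The hedged sketch below buckets boxes by width × height using those thresholds and computes the small-object fraction of a set of annotations. Using box area as the size measure is a simplification (COCO's evaluation uses each annotation's `area` field), and the sample boxes are hypothetical, not VisDrone statistics.

```python
SMALL_MAX = 32 * 32   # COCO: small objects have area below 32^2
MEDIUM_MAX = 96 * 96  # COCO: medium objects have area below 96^2; larger ones are "large"


def size_bucket(width, height):
    """Classify a box as small / medium / large by its pixel area."""
    area = width * height
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def small_fraction(boxes):
    """Fraction of (width, height) boxes that fall in the small bucket."""
    if not boxes:
        return 0.0
    return sum(size_bucket(w, h) == "small" for w, h in boxes) / len(boxes)


sample_boxes = [(10, 12), (30, 30), (40, 50), (100, 120)]  # hypothetical (w, h) in pixels
print([size_bucket(w, h) for w, h in sample_boxes])
print("small fraction:", small_fraction(sample_boxes))
```

Applied to a full annotation file, the same bucketing yields the kind of small-object share quoted above, which directly bounds how much APs can pull down overall AP.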

While our CAM and DST modules improve detection under such constraints, the extremely low resolution of small objects, often just a few pixels, limits the effectiveness of standard convolution and attention layers. CAM enhances contextual aggregation, improving recall for partially visible targets, while DST provides cross-scale feature interaction that helps recover structure. However, in cases where small objects are highly crowded or ambiguous, the fused context can also lead to over-smoothing. We mitigate this by jointly optimizing the loss weights and incorporating both modules, leading to a +3.5 APs gain over the YOLOv7 baseline.

5. Conclusions

In intelligent transportation scenarios, detecting small objects remains a major challenge due to their low resolution and limited feature representation. To tackle this, we present ECAN-Detector, an efficient context-aggregation network tailored for small-object detection in complex traffic scenes. Our design incorporates a high-resolution shallow detection layer, a dynamic scaled transformer (DST) for enhanced spatial awareness, and a context-augmentation module (CAM) to enrich contextual semantics. Additionally, we adopt a lightweight C2f_Rep module in place of RepConv in the detection head, achieving higher accuracy for small objects with minimal computational overhead. Experimental results on VisDrone2021-DET show a 3.1% AP gain over the baseline, with consistent improvements on the DOTA and COCO datasets. Although the reported AP increase may appear incremental, it could translate into practical benefits for autonomous driving, especially for reliably detecting distant or occluded objects such as pedestrians and road hazards under adverse conditions, thereby enhancing perception robustness and system safety.

Limitations and Future Work: Despite its promising results, ECAN-Detector introduces a higher computational cost compared to the baseline, leading to slower inference. Enhancing real-time performance remains an open challenge. In future work, we plan to: (1) design loss functions specialized for dense small-object scenarios; (2) refine the backbone to better capture fine-grained details; and (3) improve anchor box allocation to reduce missed detections. We also aim to incorporate neural architecture search and channel sparsification to further optimize the model for edge deployment. These improvements will facilitate more efficient and deployable small-object detection systems.