Abstract

The topic of efficiently finding the global minimum of multidimensional functions is widely applicable to numerous problems in the modern world. Many algorithms have been proposed to address these problems, among which genetic algorithms and their variants are particularly notable. Their popularity is due to their exceptional performance in solving optimization problems and their adaptability to various types of problems. However, genetic algorithms require significant computational resources and time, prompting the need for parallel techniques. Moving in this research direction, a new global optimization method is presented here that exploits the use of parallel computing techniques in genetic algorithms. This innovative method employs autonomous parallel computing units that periodically share the optimal solutions they discover. Increasing the number of computational threads, coupled with solution exchange techniques, can significantly reduce the number of calls to the objective function, thus saving computational power. Also, a stopping rule is proposed that takes advantage of the parallel computational environment. The proposed method was tested on a broad array of benchmark functions from the relevant literature and compared with other global optimization techniques regarding its efficiency.

MSC: 49K10

1. Introduction

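Typically, the task of locating the global minimum [1] of a function $f:S\subset\mathbb{R}^n\rightarrow\mathbb{R}$ is defined as follows:

$$x^{*}=\arg\min_{x\in S}f(x)\tag{1}$$

where the set $S$ is as follows:

$$S=\left[a_1,b_1\right]\times\left[a_2,b_2\right]\times\cdots\times\left[a_n,b_n\right]$$

The values $a_i$ and $b_i$ are the left and right bounds, respectively, for the point $x_i$. A review of stochastic optimization can be found in the work of Fouskakis and Draper [2].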

The previously defined problem has been tackled using a variety of methods, which have been successfully applied to a wide range of problems in various fields, such as medicine [3,4], chemistry [5,6], physics [7,8,9], economics [10,11], etc. Global optimization methods are divided into two main categories: deterministic and stochastic methods [12]. The first category includes interval methods [13,14], where the set $S$ is iteratively divided into subregions, and subregions that cannot contain the global solution are discarded based on predefined criteria. Many related works have been published in the area of deterministic methods, including the work of Maranas and Floudas, who proposed a deterministic method for chemical problems [15], the TRUST method [16], the method suggested by Evtushenko and Posypkin [17], etc. In the second category, the search for the global minimum is based on randomness. Stochastic optimization methods are commonly used because they are easier to program and do not depend on any prior information about the objective problem. Stochastic optimization methods used by researchers include ant colony optimization [18,19], controlled random search [20,21,22], particle swarm optimization [23,24,25], simulated annealing [26,27,28], differential evolution [29,30], and genetic algorithms [31,32,33]. Finally, there is a plethora of research on metaheuristic algorithms [34,35,36], offering new perspectives and solutions to problems in various fields.

The current work proposes a series of modifications to effectively parallelize the widely adopted method of genetic algorithms for solving Equation (1). Genetic algorithms, initially proposed by John Holland, constitute a fundamental technique in the field of stochastic methods [37]. Inspired by biology, these algorithms simulate the principles of evolution, including genetic mutation, natural selection, and the exchange of genetic material [38]. The integration of genetic algorithms with machine learning has proven effective in addressing complex problems and validating models. This interaction is highlighted in applications such as the design and optimization of 5G networks, where it contributes to path loss estimation and improves performance in indoor environments [39]. Genetic algorithms have also been applied to optimizing the movement of digital robots [40] and to conserving energy in dual-arm industrial robots [41]. Additionally, genetic algorithms have been employed to find optimal operating conditions for motors [42], to optimize the placement of electric vehicle charging stations [43], and to manage energy [44], and they have applications in other fields such as medicine [45,46] and agriculture [47].

Although genetic algorithms have proven to be effective, the optimization process requires significant computational resources and time. This emphasizes the necessity of implementing parallel techniques, as the execution of algorithms is significantly accelerated by the combined use of multiple computational resources. Modern parallel programming techniques include the message-passing interface (MPI) [48] and the OpenMP library [49]. Parallel programming techniques have also been incorporated in various cases into global optimization, such as the combination of simulated annealing and parallel techniques [50], the use of parallel methods in particle swarm optimization [51], the incorporation of radial basis functions in parallel stochastic optimization [52], etc. One of the main advantages of genetic algorithms over other global optimization techniques is that they can be easily parallelized and exploit modern computing units as well as the previously mentioned parallel programming techniques.

In the relevant literature, two major categories of parallel genetic algorithms appear, namely, island genetic algorithms and cellular genetic algorithms [53]. The island model is a parallel genetic algorithm (PGA) that manages several subpopulations on separate islands and executes the genetic algorithm on each island simultaneously for a different set of solutions. Island models have been utilized in various cases, such as molecular sequence alignment [54], the quadratic assignment problem [55], the placement of sensors/actuators in large structures [56], etc. Also, Tsoulos et al. recently proposed an implementation of an island PGA [57]. In the cellular model of parallel genetic algorithms, solutions are organized into a grid. Genetic operators, such as crossover and mutation, are applied within neighborhoods of the grid: for each solution, an offspring is created within its neighborhood and replaces it at its position in the grid. The model is flexible regarding the structure of the grid, the neighborhood strategies, and other settings. Implementations may involve multiple processors or graphics processing units, with information exchanged through physical communication networks. The theory of parallel genetic algorithms has been thoroughly presented by a number of researchers [58,59]. Parallel genetic algorithms have also been applied to combinatorial optimization [60].

The proposed method is based on the island technique and suggests a number of improvements to the general scheme of parallel genetic algorithms. Among these improvements are a series of techniques for propagating optimal solutions among islands that aim to speed up the convergence of the overall algorithm. In addition, the individual islands of the genetic algorithm periodically apply a local minimization technique with two goals: to locate the local minima of the objective function accurately and to speed up the convergence of the overall algorithm without wasting computing power on function values that have already been discovered. Furthermore, an efficient termination rule based on asymptotic considerations, which has been validated across a series of global optimization methods, is incorporated into the current algorithm. The proposed method was applied to a series of problems from the relevant literature. The experimental results indicate that the new method effectively finds the global minimum of the functions in a large percentage of cases and that the above modifications significantly accelerate the discovery of the global minimum as the number of islands increases.

The remainder of the article is structured as follows: In Section 2, the genetic algorithm is analyzed, and the parallelization, the propagation techniques (PT, or migration methodologies), and the termination criteria are discussed. Subsequently, in Section 3, the test functions used are presented in detail, along with the experimental results. Finally, in Section 4, some conclusions are outlined, and directions for future research are formulated.

2. Method Description

This section begins with a detailed description of the base genetic algorithm and continues with the details of the suggested modifications.

2.1. The Genetic Algorithm

Genetic algorithms are inspired by natural selection and the process of evolution. In their basic form, they start with an initial population of chromosomes representing possible solutions to a specific problem. Each chromosome consists of genes, and its length is equal to the dimension of the problem. The algorithm processes these solutions through iterative steps, replicating and evolving the population of solutions. In each generation, the selected solutions undergo crossover and mutation to improve their fitness. As generations progress, the population converges toward solutions with better fitness. Important factors affecting genetic algorithm performance include population size, selection rate, crossover and mutation probabilities, and the replacement strategy. The choice of these parameters affects the ability of the algorithm to explore the solution space and converge to the optimal result. Following [61,62], the steps of a typical genetic algorithm, which replicate and advance the population of solutions, are shown in Algorithm 1.
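Since the body of Algorithm 1 is not reproduced in this text, the following Python sketch illustrates the generic loop described above under stated assumptions: tournament selection, an arithmetic crossover whose weight is drawn from [−0.5, 1.5] (a common real-coded variant, assumed here), uniform mutation within the bounds of S, and elitism controlled by a selection rate. The function names (`run_ga`, `tournament`, `crossover`, `mutate`), the default parameter values, and the fixed generation budget (used instead of the termination rule of Section 2.3) are hypothetical choices for illustration, not the OPTIMUS implementation.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


def tournament(pop: List[List[float]], fit: List[float], rng: random.Random, k: int = 4) -> List[float]:
    # Draw k random chromosomes and return the one with the lowest objective value.
    idx = min(rng.sample(range(len(pop)), k), key=lambda i: fit[i])
    return pop[idx]


def crossover(p1, p2, bounds: Bounds, rng: random.Random):
    # Arithmetic crossover with a per-gene weight in [-0.5, 1.5] (mild extrapolation),
    # clipped back into [a_i, b_i].
    c1, c2 = [], []
    for g1, g2, (lo, hi) in zip(p1, p2, bounds):
        a = rng.uniform(-0.5, 1.5)
        c1.append(min(max(a * g1 + (1 - a) * g2, lo), hi))
        c2.append(min(max(a * g2 + (1 - a) * g1, lo), hi))
    return c1, c2


def mutate(chrom, bounds: Bounds, rate: float, rng: random.Random) -> List[float]:
    # Each gene is replaced by a fresh uniform sample in its bounds with probability `rate`.
    return [rng.uniform(lo, hi) if rng.random() < rate else g for g, (lo, hi) in zip(chrom, bounds)]


def run_ga(f: Callable[[List[float]], float], bounds: Bounds, pop_size: int = 100,
           generations: int = 200, selection_rate: float = 0.1,
           mutation_rate: float = 0.05, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    # Initialization: random chromosomes inside S, one gene per dimension.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(c) for c in pop]
    n_elite = max(1, int(selection_rate * pop_size))
    for _ in range(generations):
        # Sort by objective value; the n_elite best chromosomes survive unchanged.
        order = sorted(range(pop_size), key=lambda i: fit[i])
        pop, fit = [pop[i] for i in order], [fit[i] for i in order]
        # The rest of the population is replaced by mutated offspring of tournament winners.
        children: List[List[float]] = []
        while len(children) < pop_size - n_elite:
            c1, c2 = crossover(tournament(pop, fit, rng), tournament(pop, fit, rng), bounds, rng)
            children += [mutate(c1, bounds, mutation_rate, rng), mutate(c2, bounds, mutation_rate, rng)]
        children = children[: pop_size - n_elite]
        pop = pop[:n_elite] + children
        fit = fit[:n_elite] + [f(c) for c in children]
    best = min(range(pop_size), key=lambda i: fit[i])
    return pop[best], fit[best]
```

For example, `run_ga(lambda v: sum(t * t for t in v), [(-5.0, 5.0)] * 3)` should return a point close to the origin of the three-dimensional sphere function.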

2.2. Parallelization of Genetic Algorithm and Propagation Techniques

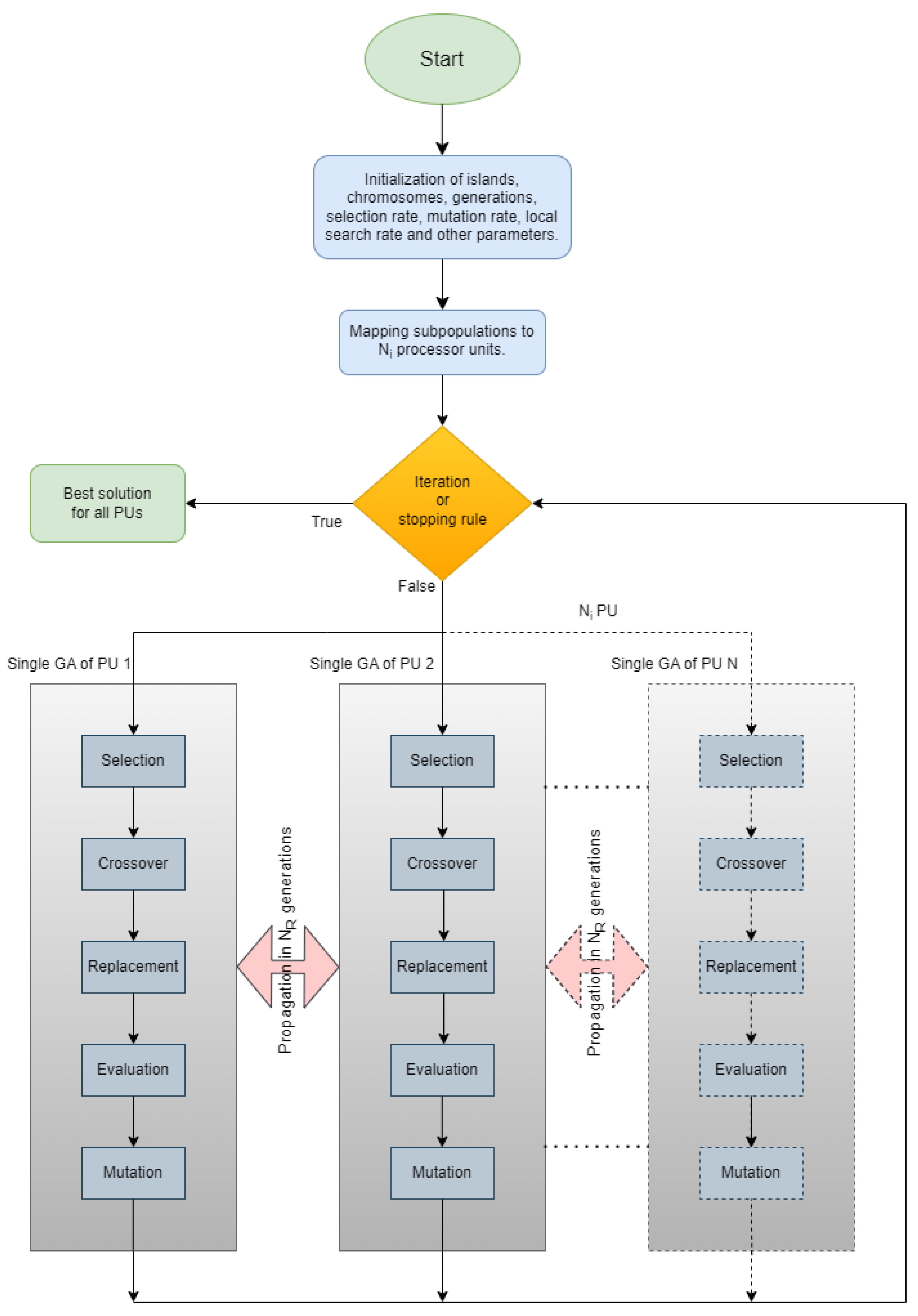

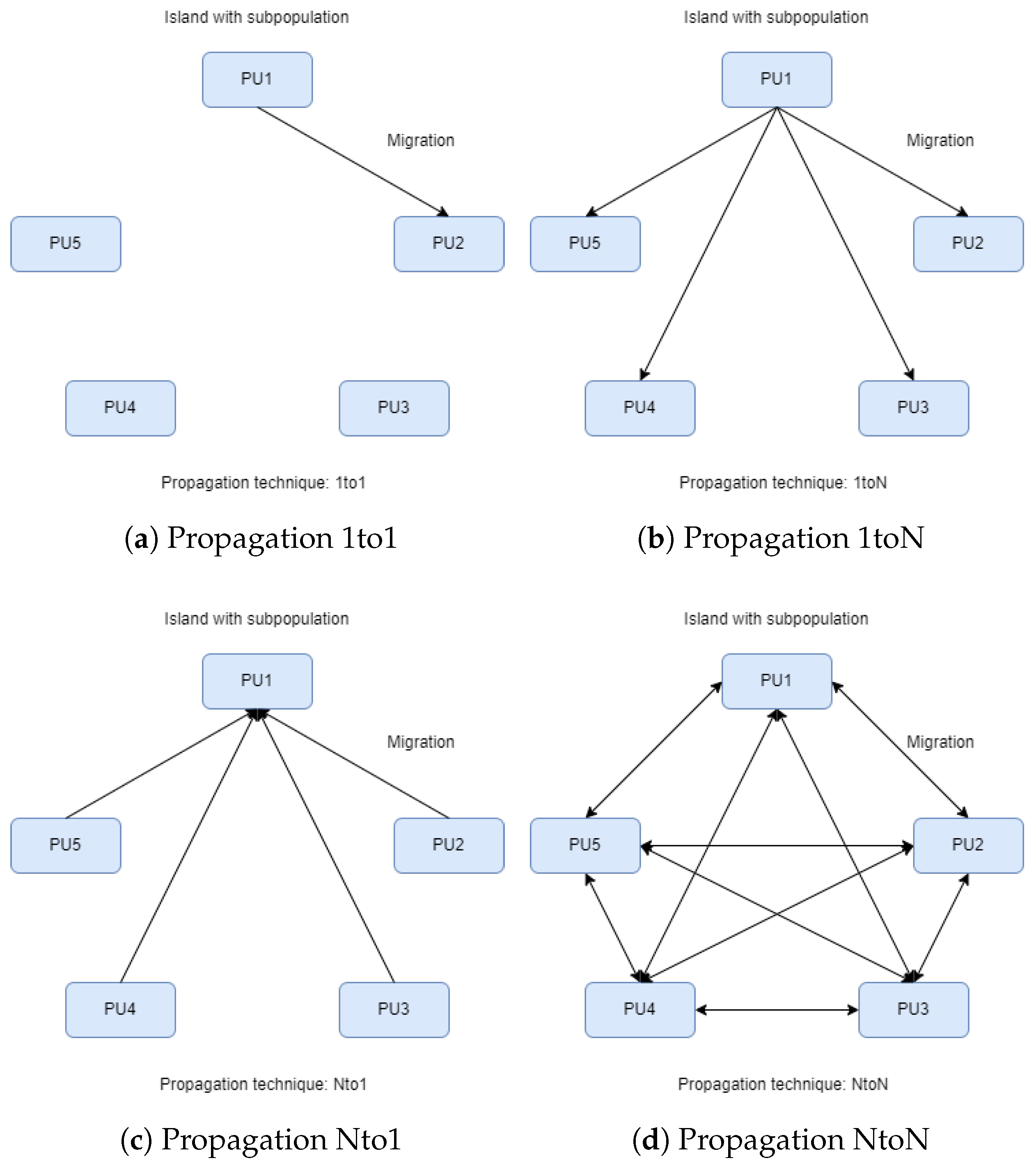

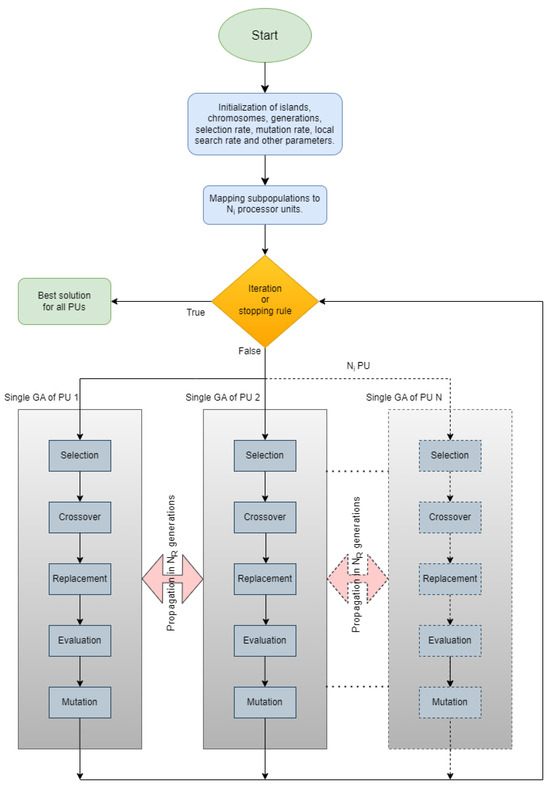

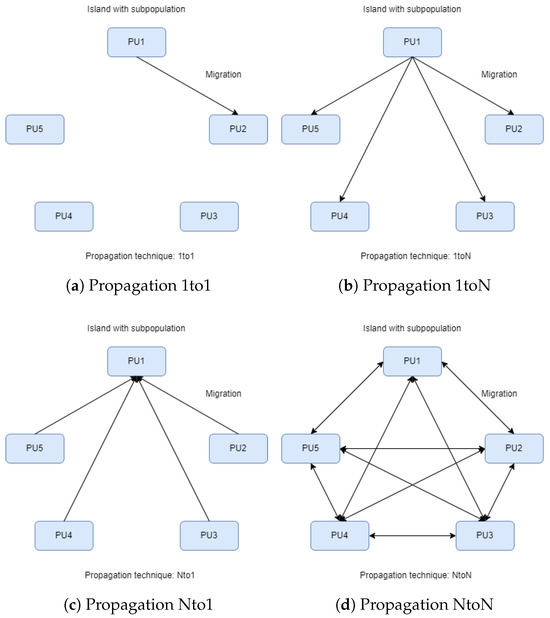

In the parallel island model of Figure 1, an evolving population is divided into various “islands”, each working concurrently to optimize its own set of solutions. In this figure, each island implements a separate genetic algorithm as described in Section 2.1. The steps of the overall algorithm are presented in Algorithm 2. In contrast to classical parallelization, which handles a central population, the island model features decentralized populations evolving independently. Islands exchange information at specific points of the evolution through migration, in which solutions move from one island to another, influencing the overall convergence toward the optimal solution. Migration settings determine how often migrations occur and which solutions are selected for exchange. Each island can follow a similar search strategy, but different approaches can be employed for more variety or faster convergence; this flexibility helps explore the solution space efficiently. To implement this parallel model, each island is assigned to a computational resource. For instance, as depicted in Figure 2, the execution of the parallel island model involves five islands, each managing a distinct set of solutions on one of five processing units (PUs). During the migration process, information about solutions is exchanged among the PUs. Figure 2 also depicts the four different techniques for spreading the chromosomes with the best function values. In Figure 2a, the best chromosomes migrate from a randomly chosen island to another randomly chosen island. In Figure 2b, migration occurs from a randomly chosen island to all others. In Figure 2c, it occurs from all islands to a randomly chosen one, and finally, in Figure 2d, migration occurs from each island to all others.

Algorithm 1.

The steps of the genetic algorithm.

Figure 1.

Parallelization of GA.

Figure 2.

Islands and propagation.

Algorithm 2.

The overall algorithm.
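A minimal sequential sketch of the island scheme may help fix ideas. Here each island is a small GA population evolved by a compact generation step (tournament selection, extrapolating arithmetic crossover, uniform mutation, elitism); the islands are evolved one after another in a single process instead of on separate OpenMP threads; migration uses the ‘1toN’ scheme every `migration_period` generations with `n_p` migrants; and a fixed number of generations replaces the termination rule. All names and defaults are hypothetical, and this is not the authors' Algorithm 2.

```python
import random
from typing import List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]
Island = List[Tuple[float, List[float]]]  # (objective value, chromosome), sorted best-first


def new_island(f, bounds: Bounds, size: int, rng: random.Random) -> Island:
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(size)]
    return sorted(((f(c), c) for c in pop), key=lambda t: t[0])


def evolve(island: Island, f, bounds: Bounds, rng: random.Random,
           elite: int = 2, mutation_rate: float = 0.05) -> Island:
    # One GA generation on a single island: keep the elite, breed the rest.
    def pick() -> List[float]:
        return min(rng.sample(island, 3), key=lambda t: t[0])[1]  # tournament of size 3

    children: Island = []
    while len(children) < len(island) - elite:
        p1, p2 = pick(), pick()
        child = []
        for g1, g2, (lo, hi) in zip(p1, p2, bounds):
            a = rng.uniform(-0.5, 1.5)
            g = a * g1 + (1 - a) * g2
            if rng.random() < mutation_rate:
                g = rng.uniform(lo, hi)
            child.append(min(max(g, lo), hi))
        children.append((f(child), child))
    return sorted(island[:elite] + children, key=lambda t: t[0])


def migrate_1toN(islands: List[Island], n_p: int, rng: random.Random) -> None:
    # A random island sends its n_p best chromosomes to every other island,
    # where they replace that island's n_p worst chromosomes.
    src = rng.randrange(len(islands))
    emigrants = islands[src][:n_p]
    for j, isl in enumerate(islands):
        if j != src:
            isl[-n_p:] = [(fx, list(x)) for fx, x in emigrants]
            isl.sort(key=lambda t: t[0])


def island_ga(f, bounds: Bounds, n_islands: int = 5, island_size: int = 20,
              generations: int = 100, migration_period: int = 1, n_p: int = 2, seed: int = 0):
    rng = random.Random(seed)
    islands = [new_island(f, bounds, island_size, rng) for _ in range(n_islands)]
    for gen in range(1, generations + 1):
        # In the paper each island runs on its own processing unit; here they are evolved in turn.
        islands = [evolve(isl, f, bounds, rng) for isl in islands]
        if n_islands > 1 and gen % migration_period == 0:
            migrate_1toN(islands, n_p, rng)
    return min((isl[0] for isl in islands), key=lambda t: t[0])  # (best value, best chromosome)
```

The total population here is `n_islands * island_size`, mirroring the fixed-total configurations used in the experiments.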

The migration or propagation techniques described in this study are performed periodically and synchronously on each processing unit. The following migration techniques are defined:

- 1to1: Optimal solutions migrate from a random island to another random one, replacing the worst solutions (see Figure 2a).

- 1toN: Optimal solutions migrate from a random island to all others, replacing the worst solutions (see Figure 2b).

- Nto1: All islands send their optimal solutions to a random island, replacing the worst solutions (see Figure 2c).

- NtoN: All islands send their optimal solutions to all other islands, replacing the worst solutions (see Figure 2d).

When the “1toN” migration method is executed, a randomly chosen island transfers chromosomes to all other islands but not to itself. We nevertheless keep the label “N” instead of “N−1”, because the migrating chromosomes already exist on the sending island. The number of solutions participating in the migration and replacement process is fully configurable and is examined in the experiments below.
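The four propagation schemes differ only in which (sender, receiver) pairs take part in a migration step. The sketch below, with hypothetical names, makes this explicit and applies the replacement rule stated above: the receiver's worst chromosomes are overwritten by the senders' best. Two details are assumptions not fixed by the text: every island's best chromosomes are snapshotted before any replacement, so that a synchronous step never forwards chromosomes received in the same step, and in ‘Nto1’ the receiver replaces as many of its worst chromosomes as it receives (capped at its population size).

```python
import random
from typing import List, Tuple

Island = List[Tuple[float, List[float]]]  # (objective value, chromosome), sorted best-first


def migration_pairs(scheme: str, n_islands: int, rng: random.Random) -> List[Tuple[int, int]]:
    """Return the (sender, receiver) island pairs of one migration step."""
    ids = range(n_islands)
    if scheme == "1to1":
        src, dst = rng.sample(ids, 2)  # two distinct random islands
        return [(src, dst)]
    if scheme == "1toN":
        src = rng.randrange(n_islands)
        return [(src, j) for j in ids if j != src]
    if scheme == "Nto1":
        dst = rng.randrange(n_islands)
        return [(i, dst) for i in ids if i != dst]
    if scheme == "NtoN":
        return [(i, j) for i in ids for j in ids if i != j]
    raise ValueError(f"unknown migration scheme: {scheme}")


def migrate(islands: List[Island], scheme: str, n_p: int, rng: random.Random) -> None:
    """Apply one synchronous migration step in place."""
    # Snapshot each island's n_p best chromosomes before anything is replaced.
    best = [[(fx, list(x)) for fx, x in isl[:n_p]] for isl in islands]
    incoming: List[Island] = [[] for _ in islands]
    for src, dst in migration_pairs(scheme, len(islands), rng):
        incoming[dst].extend(best[src])
    for isl, new in zip(islands, incoming):
        if not new:
            continue
        k = min(len(new), len(isl))
        # Overwrite the k worst chromosomes (end of the sorted list), then restore the ordering.
        isl[len(isl) - k:] = sorted(new, key=lambda t: t[0])[:k]
        isl.sort(key=lambda t: t[0])
```

With five islands, ‘1to1’ involves one transfer per step, ‘1toN’ and ‘Nto1’ four each, and ‘NtoN’ twenty.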

2.3. Termination Rule

The termination criterion employed in this study was originally introduced by Tsoulos [64] and is formulated as follows:

- In each generation $k$, the chromosome $x^*$ with the best function value $f(x^*)$ is retrieved from the population. If this value does not change for a number of generations, the algorithm should probably terminate.

- Let $\sigma^{(k)}$ be the associated variance of the quantity $f(x^*)$ at generation $k$. The algorithm terminates when

  $$\sigma^{(k)}\le\frac{\sigma^{(k_{\text{last}})}}{2},$$

  where $k_{\text{last}}$ is the last generation in which a lower value of $f(x^*)$ was discovered.
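The stopping rule can be expressed as a small stateful check called once per generation with the current best value $f(x^*)$: it stops when the variance $\sigma^{(k)}$ drops to at most half of its value at $k_{\text{last}}$, the last generation with an improvement. In this sketch, $\sigma^{(k)}$ is taken to be the variance of the best values recorded in generations $1,\ldots,k$ (one interpretation of "associated variance"), and two hypothetical parameters are added: a tolerance for deciding that a lower value was discovered, and a minimum number of generations, without which the rule would stop at $k=1$, where both variances are zero. The class name `DoubleBoxRule` is illustrative.

```python
class DoubleBoxRule:
    """Sketch of the variance-based stopping rule; assumptions are marked in comments."""

    def __init__(self, min_generations: int = 20, tol: float = 1e-12):
        self.min_generations = min_generations  # hypothetical guard, not part of the stated rule
        self.tol = tol  # hypothetical tolerance for "a lower value was discovered"
        self.k = 0
        self.total = 0.0
        self.total_sq = 0.0
        self.best = float("inf")
        self.threshold = 0.0  # sigma^(k_last) / 2

    def should_stop(self, best_value: float) -> bool:
        self.k += 1
        # Running variance of the best values seen in generations 1..k (assumed meaning of sigma^(k)).
        self.total += best_value
        self.total_sq += best_value * best_value
        mean = self.total / self.k
        variance = max(self.total_sq / self.k - mean * mean, 0.0)
        if best_value < self.best - self.tol:
            # A lower value was found, so generation k becomes k_last.
            self.best = best_value
            self.threshold = variance / 2.0
        return self.k >= self.min_generations and variance <= self.threshold
```

For the sequence of best values 10, 5, 2, 1, 1, 1, … with `min_generations=5`, the variance at $k_{\text{last}}=4$ is 12.25, so the rule waits until the variance falls to 6.125 or below, which first happens at generation 14 (variance 6.0).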

3. Experiments

A series of benchmark functions from the relevant literature is introduced here, along with the conducted experiments and a discussion of the experimental results.

3.1. Test Functions

To assess the effectiveness of the proposed method in locating the global minimum of functions, a set of well-known test functions from the relevant literature [66,67] was employed. The functions used here are as follows:

- The Bent cigar function is defined as follows:

  $$f(x)=x_1^2+10^6\sum_{i=2}^{n}x_i^2,$$

  with the global minimum $f(x^*)=0$. For the conducted experiments, the value $n=10$ was used.

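- The Bf1 function (Bohachevsky 1) is defined as follows:

  $$f(x)=x_1^2+2x_2^2-\frac{3}{10}\cos\left(3\pi x_1\right)-\frac{4}{10}\cos\left(4\pi x_2\right)+\frac{7}{10},$$

  with $x\in[-100,100]^2$.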

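- The Bf2 function (Bohachevsky 2) is defined as follows:

  $$f(x)=x_1^2+2x_2^2-\frac{3}{10}\cos\left(3\pi x_1\right)\cos\left(4\pi x_2\right)+\frac{3}{10},$$

  with $x\in[-50,50]^2$.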

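- The Branin function is given by

  $$f(x)=\left(x_2-\frac{5.1}{4\pi^2}x_1^2+\frac{5}{\pi}x_1-6\right)^2+10\left(1-\frac{1}{8\pi}\right)\cos x_1+10,$$

  with $-5\le x_1\le 10$, $0\le x_2\le 15$, and with global minimum $f(x^*)=0.397887$.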

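- The CM function. The cosine mixture function is given by the following:

  $$f(x)=\sum_{i=1}^{n}x_i^2-\frac{1}{10}\sum_{i=1}^{n}\cos\left(5\pi x_i\right),$$

  with $x\in[-1,1]^n$. The value $n=4$ was used in the conducted experiments.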

- Discus function. The function is defined as follows:

  $$f(x)=10^6x_1^2+\sum_{i=2}^{n}x_i^2,$$

  with global minimum $f(x^*)=0$. For the conducted experiments, the value $n=10$ was used.

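- The Easom function. The function is given by the following equation:

  $$f(x)=-\cos\left(x_1\right)\cos\left(x_2\right)\exp\left(-\left(x_2-\pi\right)^2-\left(x_1-\pi\right)^2\right),$$

  with $x\in[-100,100]^2$ and global minimum $f(x^*)=-1$.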

- The exponential function. The function is given by the following:

  $$f(x)=-\exp\left(-0.5\sum_{i=1}^{n}x_i^2\right),\quad -1\le x_i\le 1.$$

  The global minimum is situated at $x^*=(0,0,\ldots,0)$, with a value of $-1$. In our experiments, we applied this function for $n=4,16,64,100$ and referred to the respective instances as EXP4, EXP16, EXP64, and EXP100.

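- Griewank2 function. The function is given by the following:

  $$f(x)=1+\frac{1}{200}\sum_{i=1}^{2}x_i^2-\prod_{i=1}^{2}\cos\left(\frac{x_i}{\sqrt{i}}\right),\quad x\in[-100,100]^2.$$

  The global minimum is located at $x^*=(0,0)$ with a value of 0.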

- Gkls function. $f(x)=\mathrm{Gkls}(x,n,w)$ is a function with $w$ local minima, described in [68], with $x\in[-1,1]^n$, and $n$ is a positive integer between 2 and 100. The value of the global minimum is −1, and in our experiments, we used $n=2,3$ and $w=50$.

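- Hansen function. $f(x)=\sum_{i=1}^{5}i\cos\left[(i-1)x_1+i\right]\sum_{j=1}^{5}j\cos\left[(j+1)x_2+j\right]$, $x\in[-10,10]^2$. The global minimum of the function is −176.541793.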

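- Hartman 3 function. The function is given by the following:

  $$f(x)=-\sum_{i=1}^{4}c_i\exp\left(-\sum_{j=1}^{3}a_{ij}\left(x_j-p_{ij}\right)^2\right),$$

  with $x\in[0,1]^3$ and

  $$a=\begin{pmatrix}3&10&30\\0.1&10&35\\3&10&30\\0.1&10&35\end{pmatrix},\quad c=\begin{pmatrix}1\\1.2\\3\\3.2\end{pmatrix},\quad p=\begin{pmatrix}0.3689&0.117&0.2673\\0.4699&0.4387&0.747\\0.1091&0.8732&0.5547\\0.03815&0.5743&0.8828\end{pmatrix}.$$

  The value of the global minimum is −3.862782.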

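- Hartman 6 function.

  $$f(x)=-\sum_{i=1}^{4}c_i\exp\left(-\sum_{j=1}^{6}a_{ij}\left(x_j-p_{ij}\right)^2\right),$$

  with $x\in[0,1]^6$ and

  $$a=\begin{pmatrix}10&3&17&3.5&1.7&8\\0.05&10&17&0.1&8&14\\3&3.5&1.7&10&17&8\\17&8&0.05&10&0.1&14\end{pmatrix},\quad c=\begin{pmatrix}1\\1.2\\3\\3.2\end{pmatrix},$$

  $$p=\begin{pmatrix}0.1312&0.1696&0.5569&0.0124&0.8283&0.5886\\0.2329&0.4135&0.8307&0.3736&0.1004&0.9991\\0.2348&0.1451&0.3522&0.2883&0.3047&0.6650\\0.4047&0.8828&0.8732&0.5743&0.1091&0.0381\end{pmatrix};$$

  the value of the global minimum is −3.322368.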

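- The high-conditioned elliptic function is defined as follows:

  $$f(x)=\sum_{i=1}^{n}\left(10^6\right)^{\frac{i-1}{n-1}}x_i^2,$$

  featuring a global minimum $f(x^*)=0$ at $x^*=(0,\ldots,0)$; the experiments were conducted using the value $n=10$.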

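- Potential function. As a test case, the molecular conformation corresponding to the global minimum of the energy of $N$ atoms interacting via the Lennard–Jones potential [69] is utilized. The function to be minimized is the total energy $\sum_{i<j}V_{LJ}(r_{ij})$, where $r_{ij}$ is the distance between atoms $i$ and $j$ and

  $$V_{LJ}(r)=4\epsilon\left[\left(\frac{\sigma}{r}\right)^{12}-\left(\frac{\sigma}{r}\right)^{6}\right].$$

  In the current experiments, two different cases were studied: $N=3,5$.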

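- Rastrigin function. This function is given by the following:

  $$f(x)=x_1^2+x_2^2-\cos\left(18x_1\right)-\cos\left(18x_2\right),\quad x\in[-1,1]^2.$$

  The global minimum is located at $x^*=(0,0)$ with a value of −2.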

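- Shekel 7 function.

  $$f(x)=-\sum_{i=1}^{7}\frac{1}{\left(x-a_i\right)\left(x-a_i\right)^T+c_i},$$

  with $x\in[0,10]^4$ and

  $$a=\begin{pmatrix}4&4&4&4\\1&1&1&1\\8&8&8&8\\6&6&6&6\\3&7&3&7\\2&9&2&9\\5&3&5&3\end{pmatrix},\quad c=\begin{pmatrix}0.1\\0.2\\0.2\\0.4\\0.4\\0.6\\0.3\end{pmatrix}.$$

- Shekel 5 function.

  $$f(x)=-\sum_{i=1}^{5}\frac{1}{\left(x-a_i\right)\left(x-a_i\right)^T+c_i},$$

  with $x\in[0,10]^4$, where $a_i$ and $c_i$ are the first five rows of $a$ and the first five entries of $c$ given for Shekel 7.

- Shekel 10 function.

  $$f(x)=-\sum_{i=1}^{10}\frac{1}{\left(x-a_i\right)\left(x-a_i\right)^T+c_i},$$

  with $x\in[0,10]^4$ and

  $$a=\begin{pmatrix}4&4&4&4\\1&1&1&1\\8&8&8&8\\6&6&6&6\\3&7&3&7\\2&9&2&9\\5&3&5&3\\8&1&8&1\\6&2&6&2\\7&3.6&7&3.6\end{pmatrix},\quad c=\begin{pmatrix}0.1\\0.2\\0.2\\0.4\\0.4\\0.6\\0.3\\0.7\\0.5\\0.5\end{pmatrix}.$$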

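- Sinusoidal function. The function is given by the following:

  $$f(x)=-\left(2.5\prod_{i=1}^{n}\sin\left(x_i-z\right)+\prod_{i=1}^{n}\sin\left(5\left(x_i-z\right)\right)\right),\quad 0\le x_i\le\pi.$$

  The global minimum is situated at $x^*=(2.09435,2.09435,\ldots,2.09435)$ with a value of $f(x^*)=-3.5$. In the performed experiments, we examined scenarios with $n=4,8,16$ and $z=\pi/6$. The parameter $z$ is employed to offset the position of the global minimum [70].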

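- Test2N function. This function is given by the following equation:

  $$f(x)=\frac{1}{2}\sum_{i=1}^{n}\left(x_i^4-16x_i^2+5x_i\right),\quad x_i\in[-5,5].$$

  The function has $2^n$ local minima in the specified range; in our experiments, we used $n=4,5,6,7$.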

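- Test30N function. This function is given by the following:

  $$f(x)=\frac{1}{10}\sin^2\left(3\pi x_1\right)\sum_{i=2}^{n-1}\left(\left(x_i-1\right)^2\left(1+\sin^2\left(3\pi x_{i+1}\right)\right)\right)+\left(x_n-1\right)^2\left(1+\sin^2\left(2\pi x_n\right)\right),$$

  with $x\in[-10,10]^n$. This function has $30^n$ local minima in the specified range, and we used $n=3,4$ in the conducted experiments.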

3.2. Experimental Results

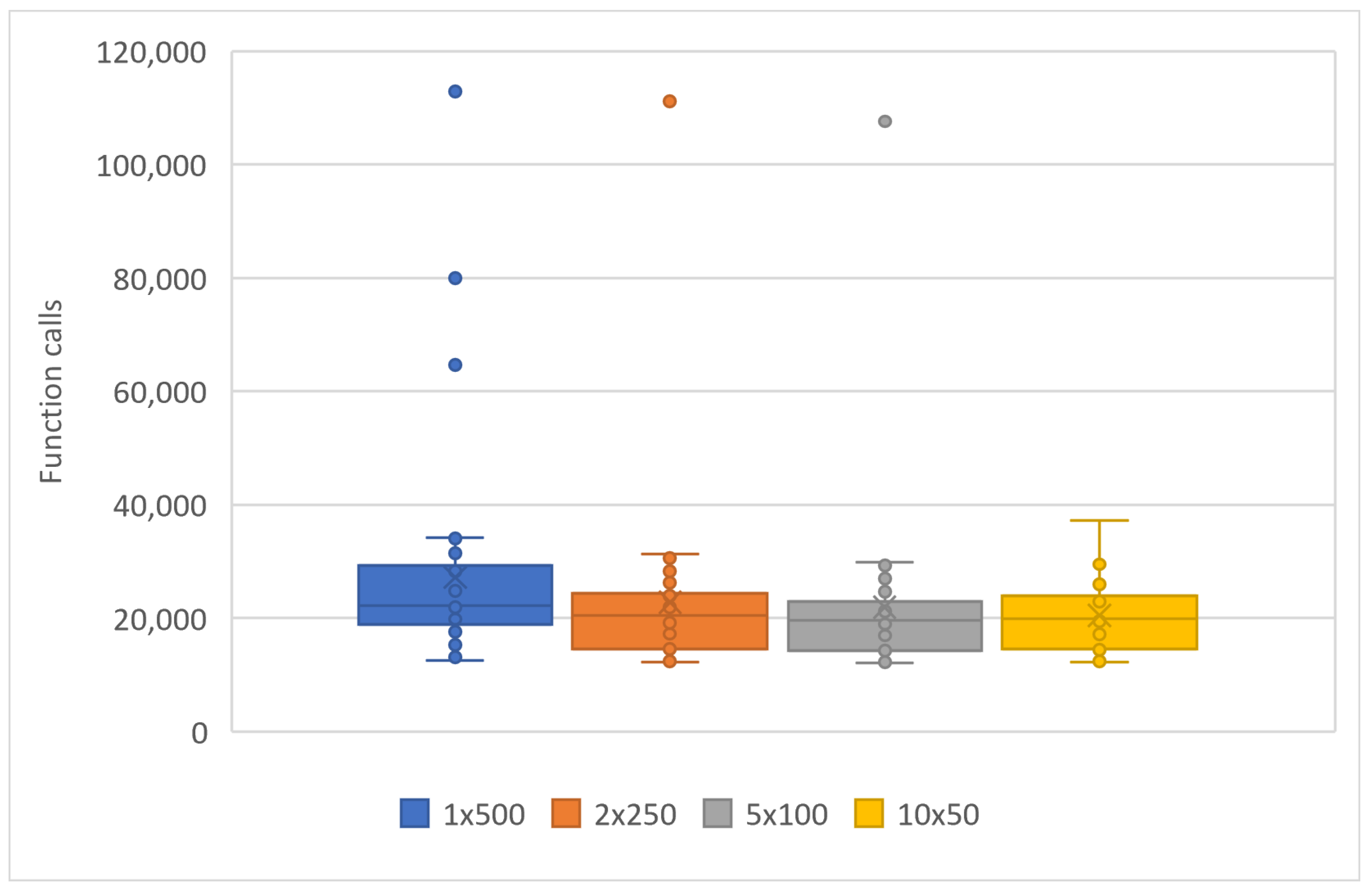

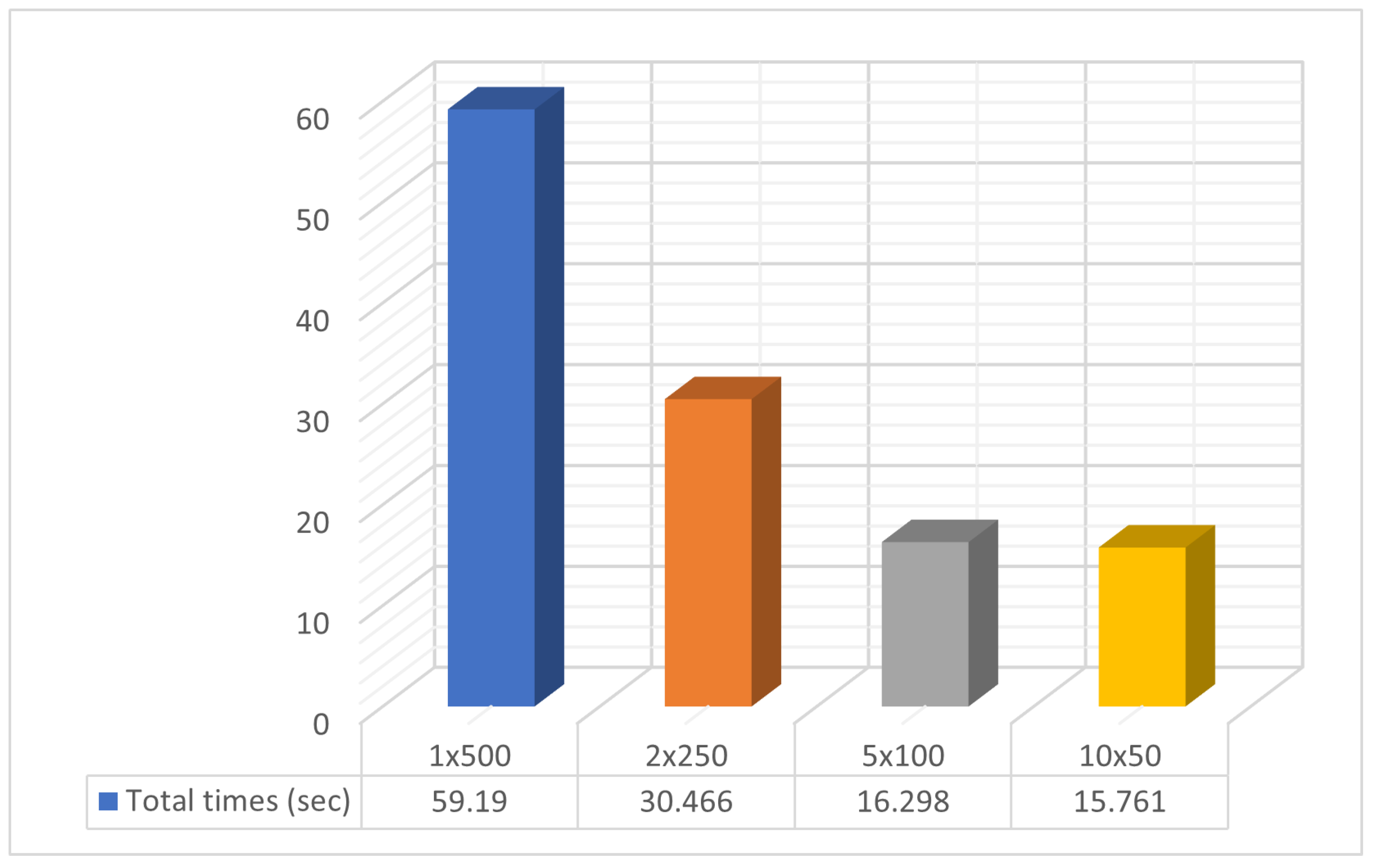

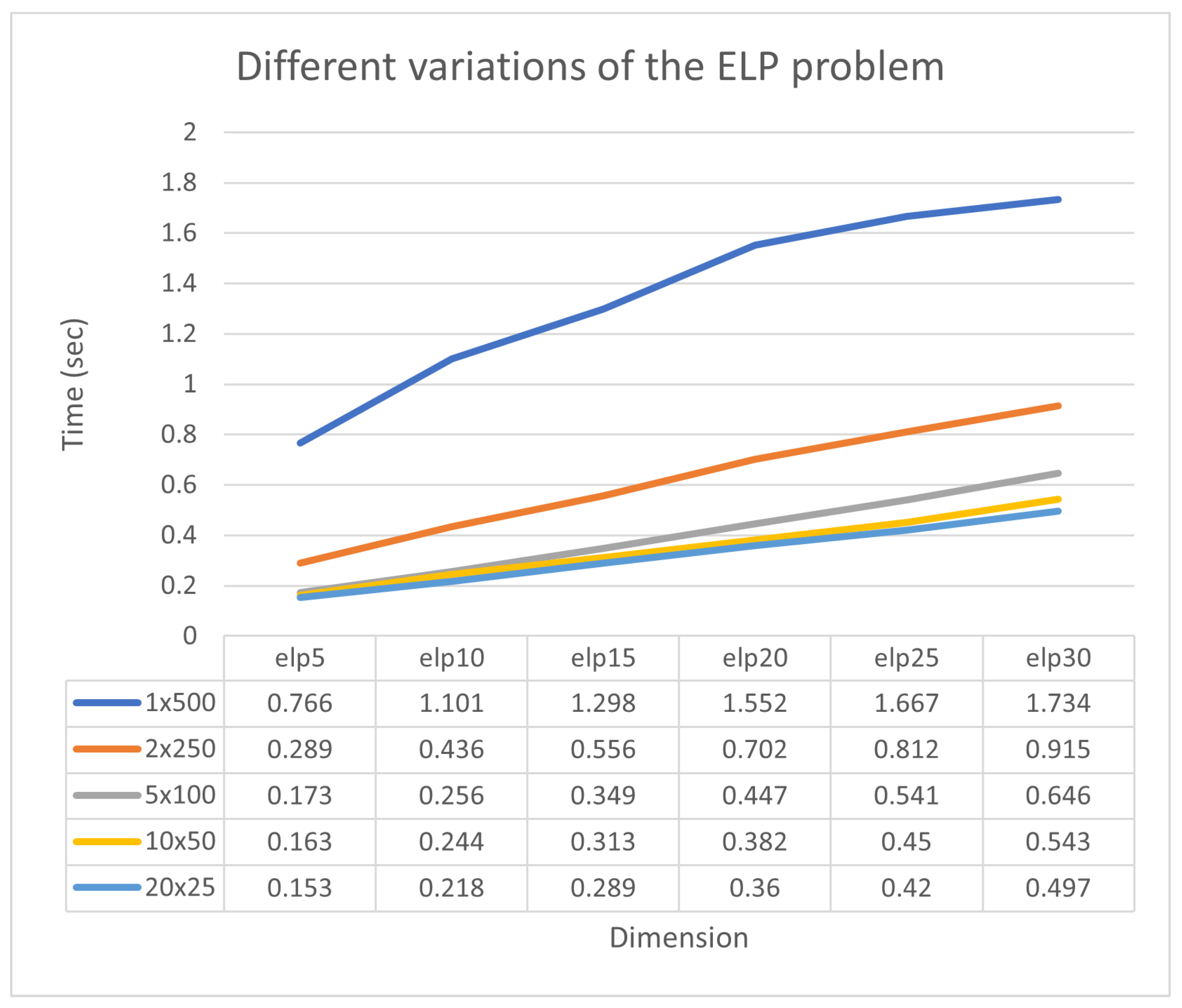

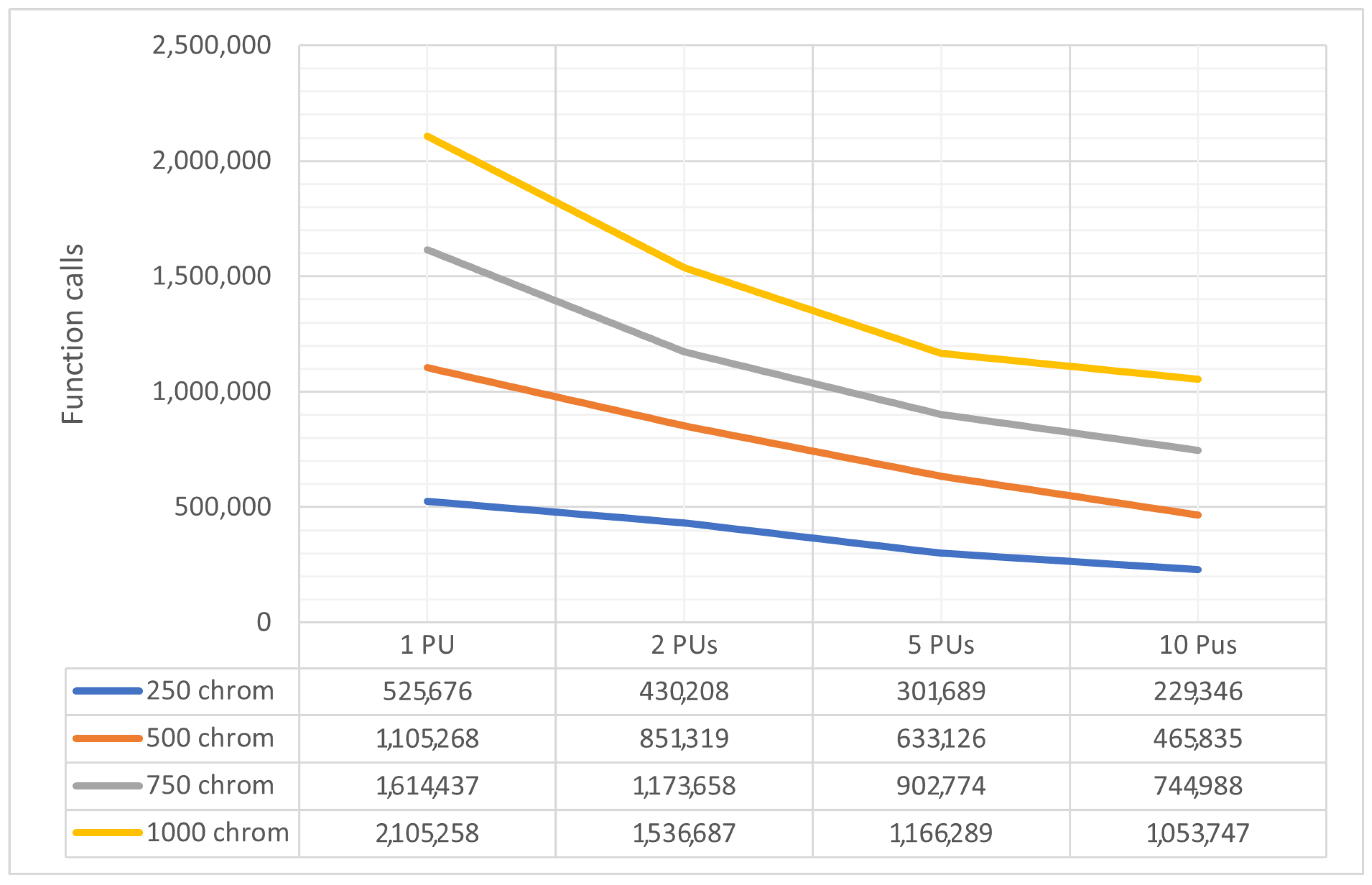

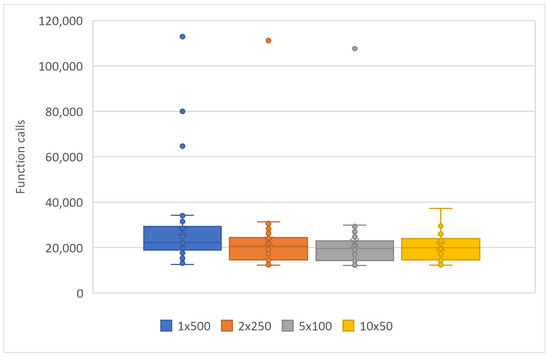

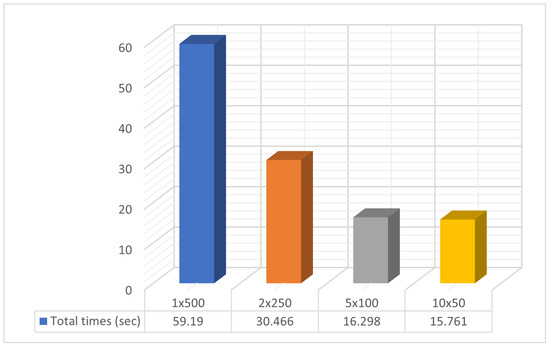

To evaluate the performance of the parallel genetic algorithm, a series of experiments was carried out in which the number of parallel computing units varied from 1 to 10. The parallelization was achieved using the freely available OpenMP library [49], and the method was implemented in ANSI C++ within the OPTIMUS optimization package, accessible at https://github.com/itsoulos/OPTIMUS (accessed on 7 June 2024). All experiments were conducted on a system equipped with an AMD Ryzen 5950X processor and 128 GB of RAM, running the Debian Linux operating system. The experimental settings are shown in Table 1. To ensure the reliability and validity of the research, each experiment reported in Table 2, Table 3 and Table 4 was repeated 30 times. Table 2 lists, for each problem, the number of objective function invocations and the solving time for various combinations of processing units (PUs) and chromosomes. In the columns listing objective function invocations, values in parentheses represent the percentage of executions in which the global optimum was successfully identified; the absence of this value indicates a 100% success rate, meaning that the global minimum was found in every run. The total number of chromosomes remains constant in each case (e.g., 1PU × 500chrom, 2PU × 250chrom, etc.). Generally, across all problems, the number of objective function invocations and the execution time decrease as the number of parallel computing units increases. This is a positive result, indicating that parallelization improves the performance of the genetic algorithm. Figure 3 and Figure 4 are derived from Table 2; the statistical comparison of objective function invocations and execution times likewise shows performance improvements and reduced computation times as the number of computing units increases.
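The configurations above keep the total population fixed while changing the number of processing units, so each island receives the total divided by the number of PUs (500/2 = 250, 500/10 = 50, and so on). A hypothetical helper makes this arithmetic explicit; how a non-divisible total would be split is an assumption, since the configurations named in the text divide evenly.

```python
from typing import List


def split_population(total: int, units: int) -> List[int]:
    """Split a fixed total number of chromosomes among processing units (one island per unit)."""
    if units < 1 or total < units:
        raise ValueError("each processing unit needs at least one chromosome")
    base, extra = divmod(total, units)
    # Assumption: when the split is not exact, the first `extra` islands get one extra chromosome.
    return [base + 1 if i < extra else base for i in range(units)]


for pu in (1, 2, 5, 10):
    print(f"{pu}PU x {split_population(500, pu)[0]}chrom")
```

The loop prints `1PU x 500chrom`, `2PU x 250chrom`, `5PU x 100chrom` and `10PU x 50chrom`.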

Table 1.

The following settings were initially used to conduct the experiments.

Table 2.

Statistical analysis comparing execution times (seconds) and function calls across varying numbers of processor units.

Table 3.

Evaluating function calls and times (seconds) using various propagation techniques for comparison.

Table 4.

Comparison of function calls using different stochastic optimization methods.

Figure 3.

Statistical comparison of function calls with different numbers of processor units.

Figure 4.

Statistical comparison of times with different numbers of processor units.

Specifically, Figure 3 shows that with only two computational units the number of objective function invocations is halved compared with a single unit. This reduction continues significantly as the number of computational units increases. Figure 4 shows similar behavior in the algorithm termination times: with ten (10) computational units, the times are significantly shorter than with a single computational unit. The comparisons above therefore show both a reduction in the required computational power (Figure 3) and a decrease in the time required to find solutions (Figure 4). Table 2 presents additional details regarding objective function invocations and computational times, such as the minimum, maximum, mean, and standard deviation. In conclusion, performance improves as the workload is distributed among an increasing number of computational units, which supports the overall methodology.

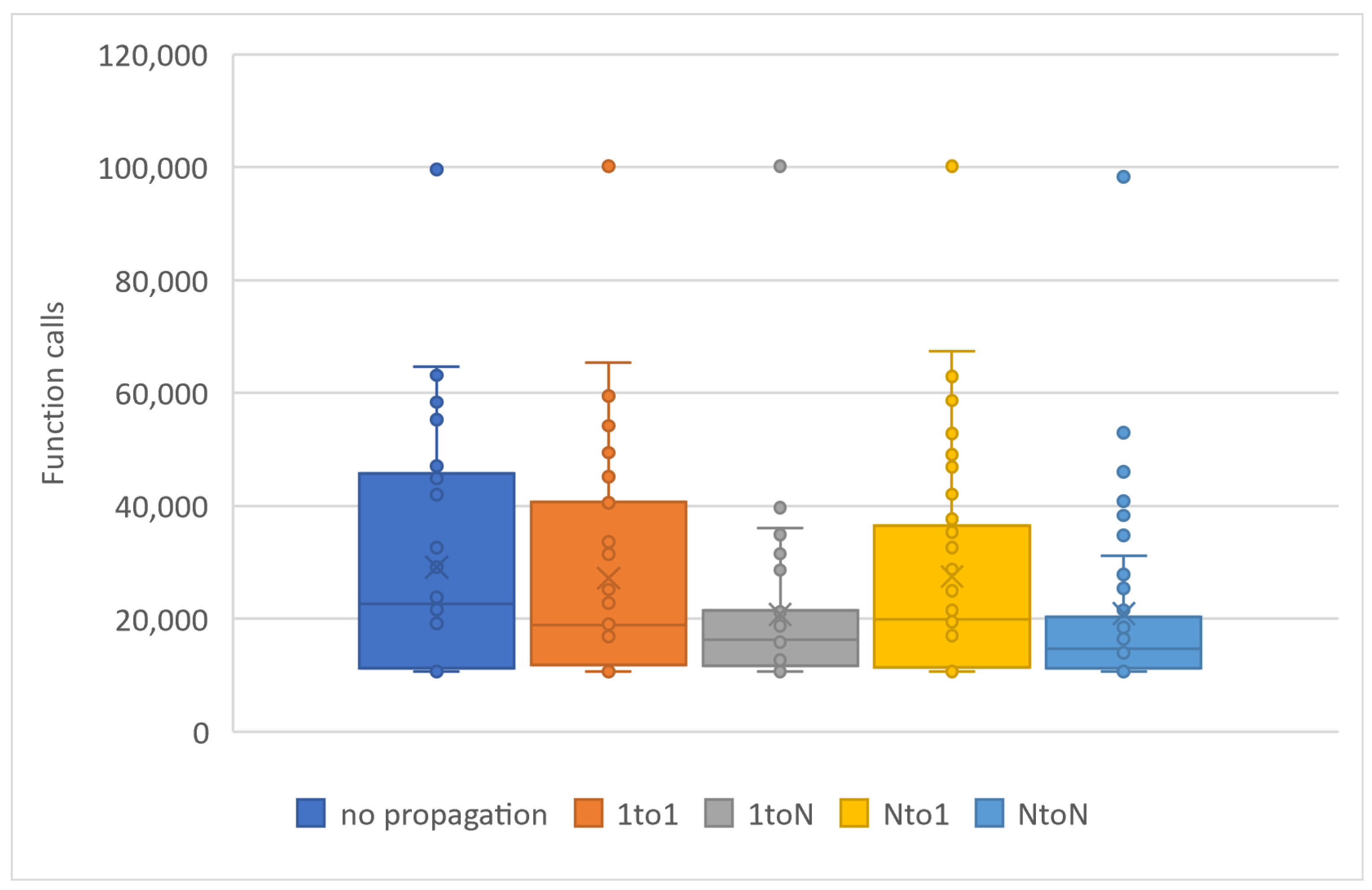

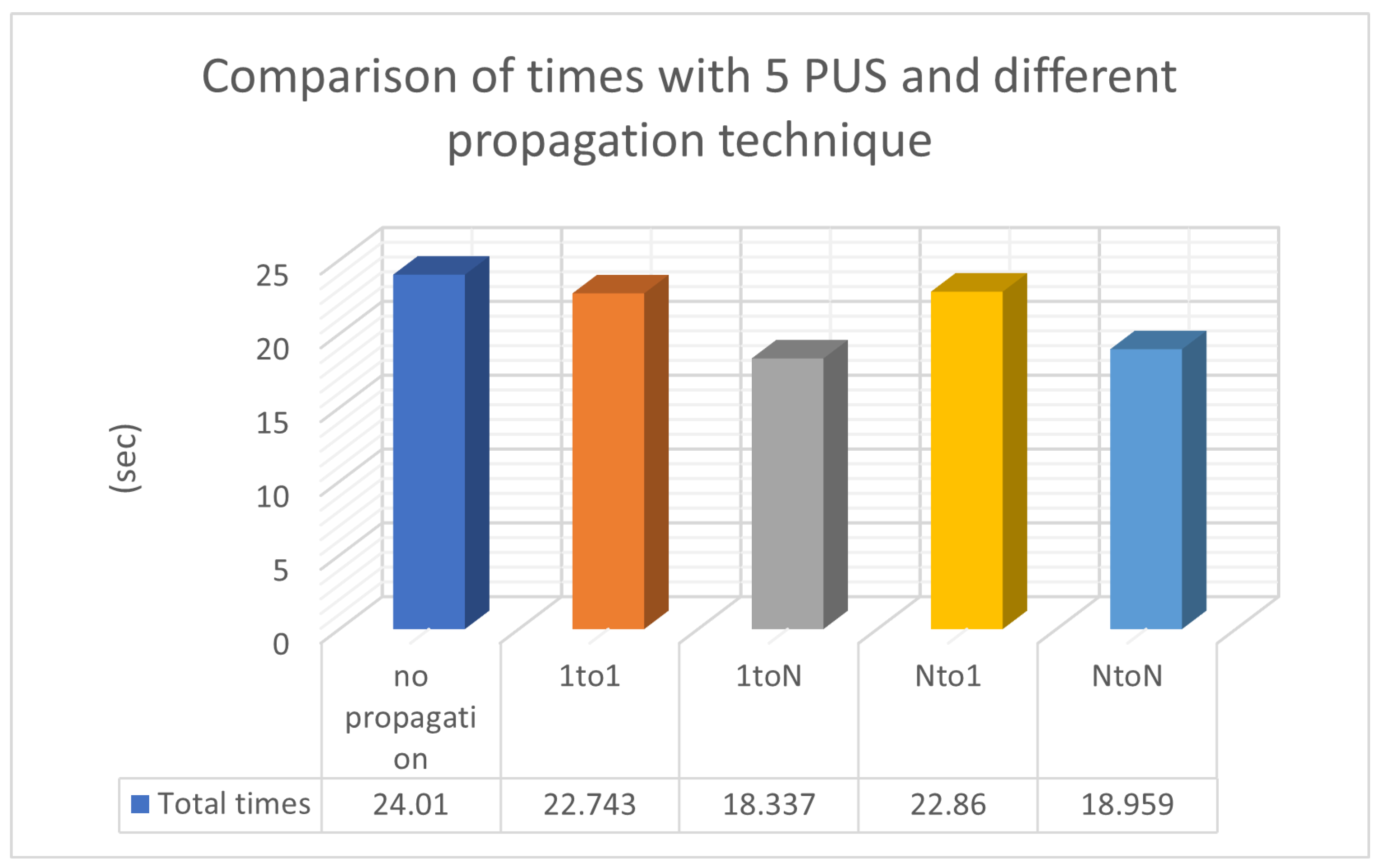

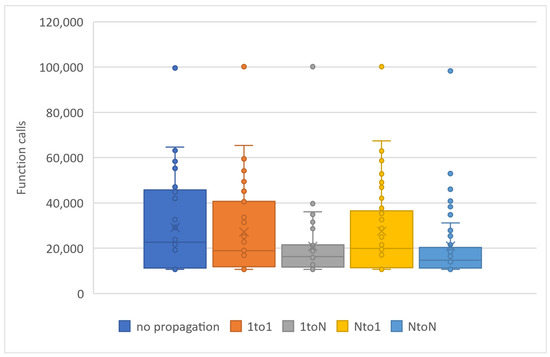

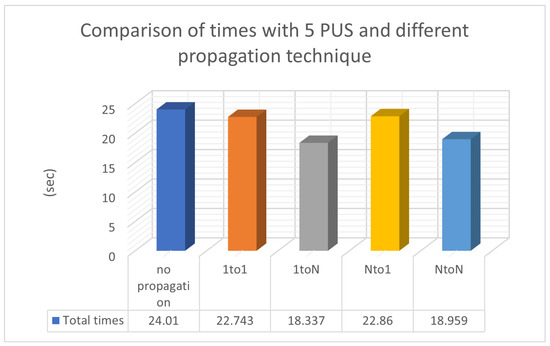

In Table 3, the chromosomes with the best function values migrate in every generation, with $N_P=10$ chromosomes participating in the propagation process. To enhance the effect of the propagation techniques, the local search rate (LSR) was increased from 0.1% (as used in Table 2) to 0.5% in Table 3. However, the LSR was carefully controlled, because an excessive increase could lead to a higher number of calls to the objective function, whereas reducing the LSR might lower the success rate in identifying optimal chromosomes. The statistical representation of Figure 5 shows the superiority of the ‘1toN’ propagation, i.e., the transfer of ten chromosomes from a random island to all others. The ‘NtoN’ propagation appears to be almost equally effective. Ranked by performance, the migration methods are ordered as follows: ‘1toN’ (Figure 2b), ‘NtoN’ (Figure 2d), ‘1to1’ (Figure 2a), and ‘Nto1’ (Figure 2c). The first two strategies, where migration reaches all islands, perform better than the other two, where migration affects only one island. The success of ‘1toN’ and ‘NtoN’, which differ only slightly, appears to be due to the migration of the best chromosomes to all islands, which improves the convergence of the algorithm towards better candidate solutions in a shorter time. The actual times are shown in Figure 6. A common feature of these two techniques is that the optimal solutions are distributed to all computing units, thereby improving the performance of each individual unit and consequently the overall performance of the algorithm.
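The trade-off described above, where a higher local search rate refines more chromosomes but costs extra objective calls, can be made concrete with a call-counting wrapper. In this sketch, `lsr` is treated as the per-chromosome probability of applying a local optimizer in a generation; the compass (pattern) search is a simple stand-in chosen for illustration, not the local optimizer used in the paper, and all names (`CountingObjective`, `compass_search`, `apply_local_search`) are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


class CountingObjective:
    """Wraps an objective function and counts every evaluation (the 'function calls' metric)."""

    def __init__(self, f: Callable[[List[float]], float]):
        self.f = f
        self.calls = 0

    def __call__(self, x: List[float]) -> float:
        self.calls += 1
        return self.f(x)


def compass_search(f, x: List[float], fx: float, bounds: Bounds,
                   step: float = 0.5, min_step: float = 1e-4) -> Tuple[List[float], float]:
    """Stand-in local optimizer: axis-wise pattern search that halves the step when stuck."""
    x = list(x)
    while step > min_step:
        improved = False
        for i, (lo, hi) in enumerate(bounds):
            for d in (step, -step):
                y = list(x)
                y[i] = min(max(y[i] + d, lo), hi)
                fy = f(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
                    break
        if not improved:
            step /= 2.0
    return x, fx


def apply_local_search(pop, fit, f, bounds: Bounds, lsr: float, rng: random.Random) -> int:
    """Locally optimize each chromosome with probability lsr; return how many were optimized."""
    count = 0
    for i in range(len(pop)):
        if rng.random() < lsr:
            pop[i], fit[i] = compass_search(f, pop[i], fit[i], bounds)
            count += 1
    return count
```

Comparing `CountingObjective.calls` before and after `apply_local_search` for different `lsr` values shows directly how much of the call budget the local optimizer consumes.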

Figure 5.

Statistical comparison of function calls with 5 PUs and different propagation techniques.

Figure 6.

Comparison of times with 5 PUs and different propagation techniques.

For the comparison among stochastic global optimization methods, namely particle swarm optimization (PSO), improved PSO (IPSO) [71], differential evolution with random selection (DE), differential evolution with tournament selection (TDE) [72], the genetic algorithm (GA), and the parallel genetic algorithm (PGA), certain parameters were kept constant. The parallel implementation of the GAlib library [73] was also included in the comparative experiments. The population size for all methods is 500 particles, agents, or chromosomes. In PGA, the population consists of 20PU × 25chrom, while all other parameters remain the same as those described in Table 2. Any method employing a local search rate (LSR) uses the same value for this parameter, and all methods use the same termination rule (double box).

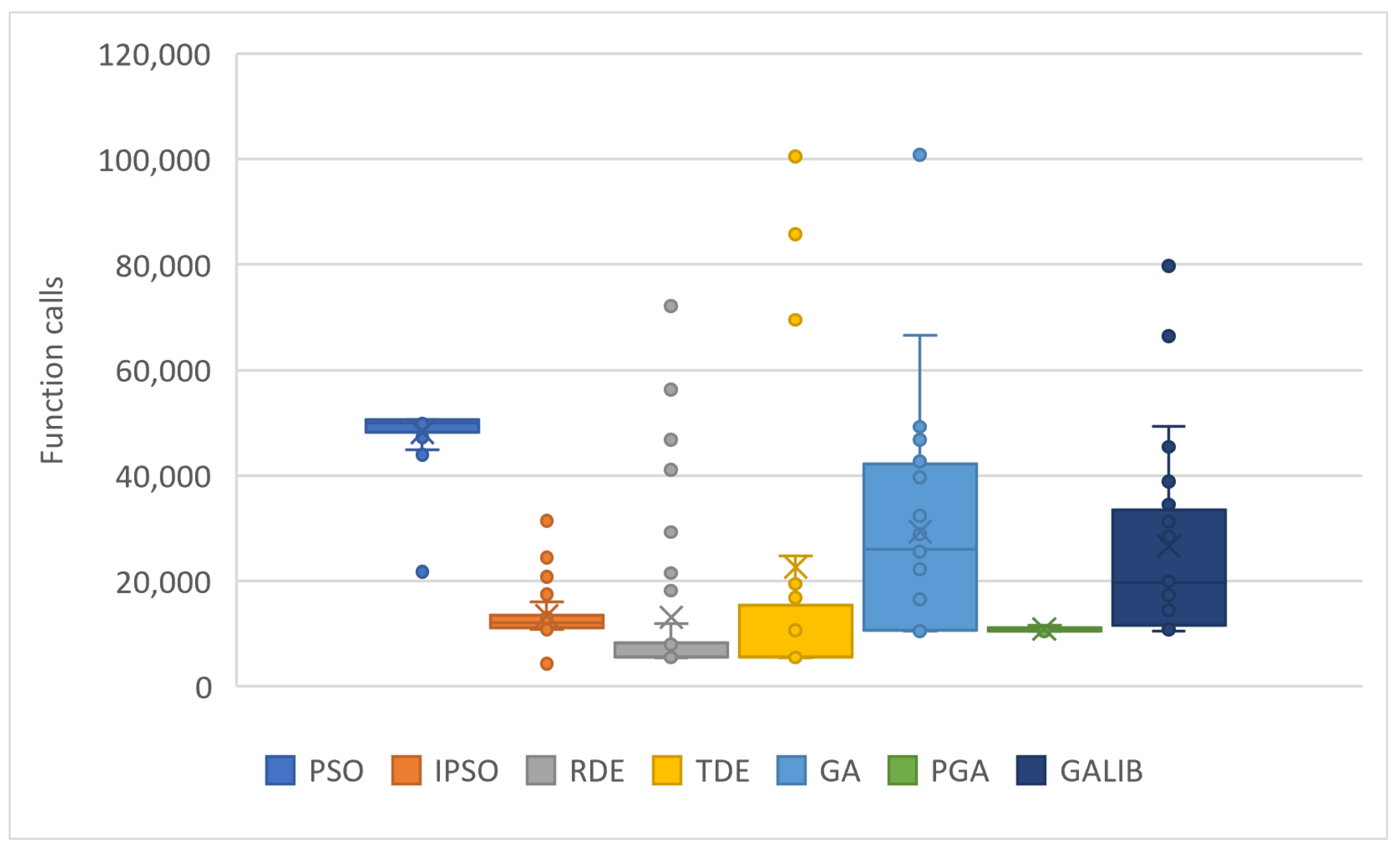

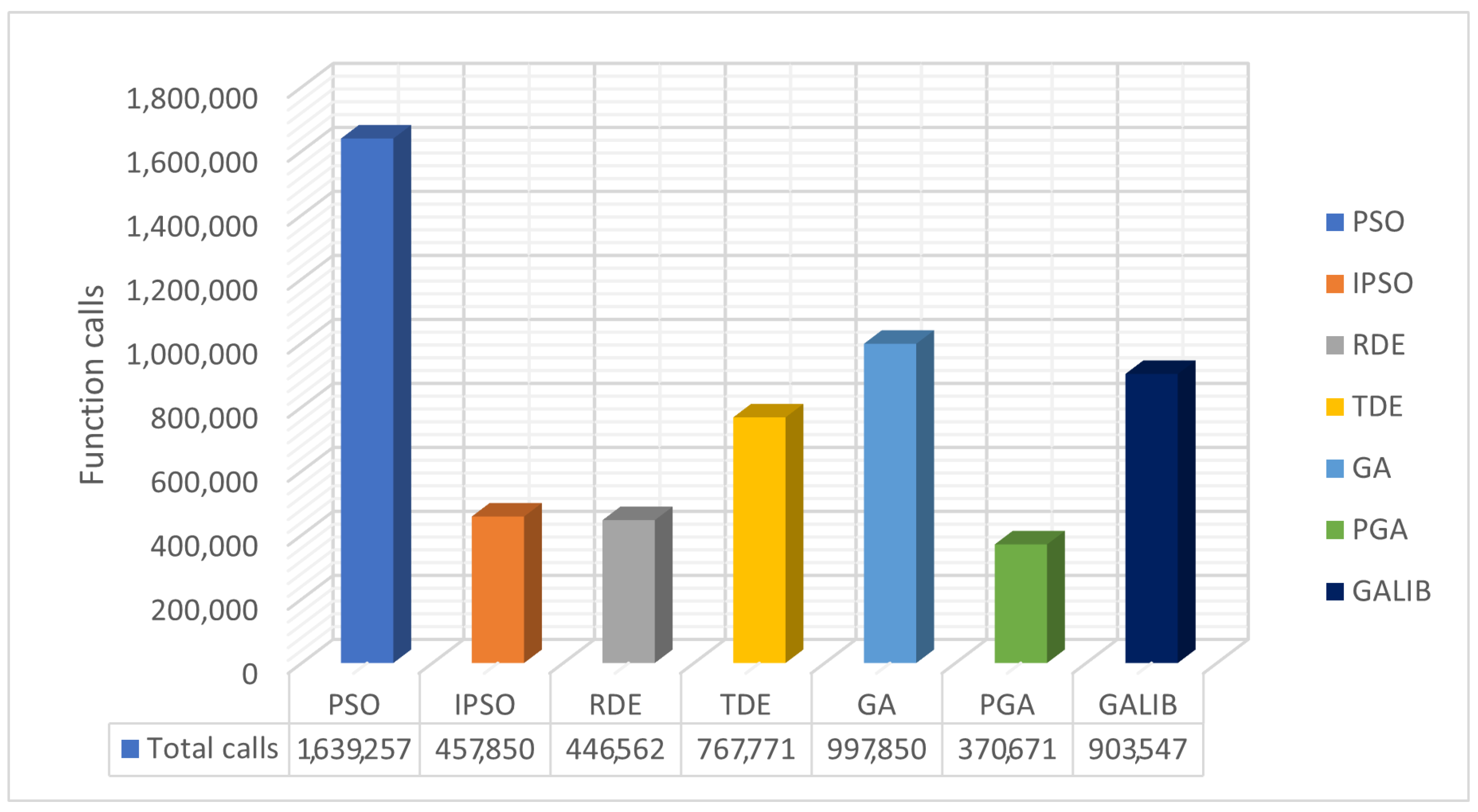

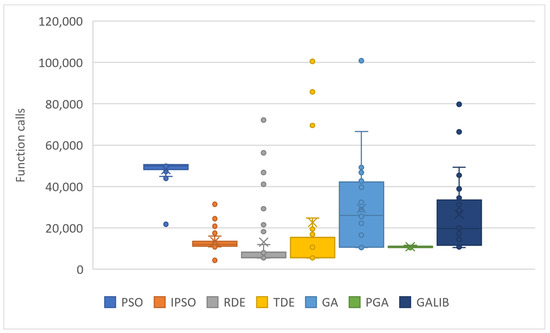

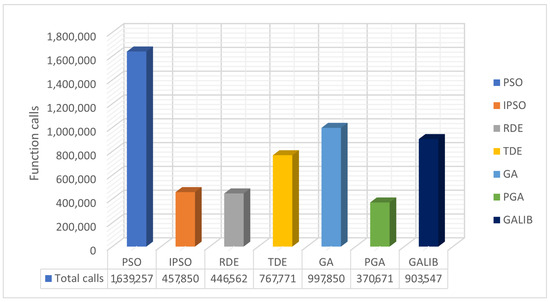

The values resulting from experiments in Table 4 are depicted in Figure 7 and Figure 8. The box plots of Figure 7 reveal the superiority of PGA, as the number of objective function calls remains at approximately 10,000 across all problems. Conversely, IPSO, DE, and TDE (especially DE) show a low number of calls in some problems, while in others, they experience significant increases. Each method has a specific lower limit of calls during initialization and optimization, which varies from method to method. PGA consistently meets this threshold with very small deviations, as illustrated in the same figure. Figure 8 presents the total call values for each method. This work was also compared against the parallel version of GAlib, found in recent literature. Although GAlib achieves a similar success rate in discovering the global minimum of the benchmark functions, it requires significantly more function calls than the proposed method for the same setup parameters.

Figure 7.

Statistical comparison of function calls using different stochastic optimization methods.

Figure 8.

Comparison of total function calls using different stochastic optimization methods.

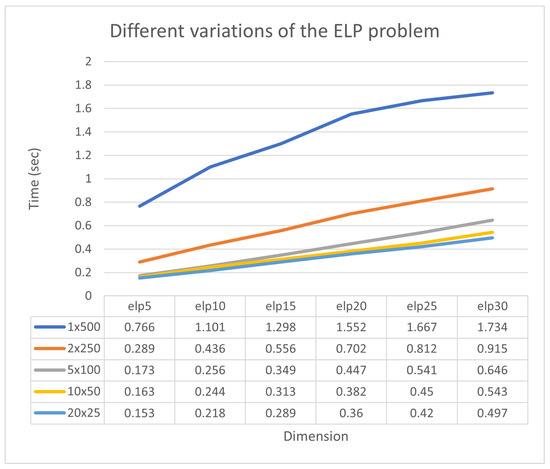

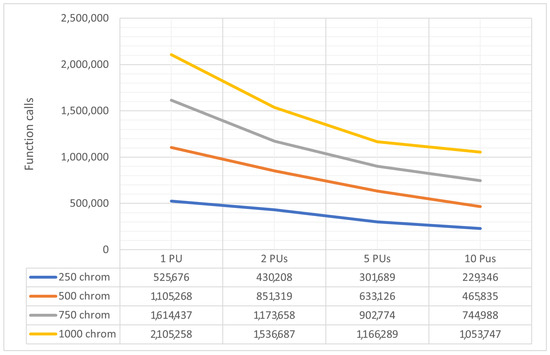

Figure 9 shows that the cooperation of the parallel computing units significantly accelerates the process of finding minima. Additionally, a new experiment was conducted in which the number of chromosomes varied from 250 to 1000 and the number of processing units varied from 1 to 10. The total number of function calls for each case is shown in Figure 10. The method exhibits the same behavior for every number of chromosomes tested: the number of required calls is significantly reduced by adding parallel processing units. As expected, the total number of calls increases with the number of chromosomes.

Figure 9.

Different variations of the ELP problem.

Figure 10.

Comparison of function calls with different numbers of chromosomes.

4. Conclusions

According to the relevant literature, despite the high success rate of genetic algorithms in finding good function values, they require significant computational power, leading to longer processing times. This manuscript introduces a parallel technique for global optimization that employs a genetic algorithm. Specifically, the initial population of chromosomes is divided into various subpopulations that run on different computational units. During the optimization process, the islands operate independently but periodically exchange chromosomes with good function values. The number of chromosomes participating in migration is determined by the crossover and mutation rates. Additionally, periodic local optimization is performed on each computational unit, which should not require excessive computational power (function calls).

Experimental results revealed that even parallelization with just two computational units significantly reduces both the number of function calls and the processing time, and that the approach remains effective as more computational units are added. Furthermore, the most effective information exchange technique was ‘1toN’, in which a randomly selected subpopulation sends information to all other subpopulations. The ‘NtoN’ technique, in which every subpopulation sends information to all other subpopulations, performed almost equally well, with only a slight difference.

Similar dissemination techniques have been applied to other stochastic methods, such as the differential evolution (DE) method by Charilogis and Tsoulos [74] and the particle swarm optimization (PSO) method by Charilogis and Tsoulos [75]. In the case of differential evolution, the proposed dissemination technique is ‘1to1’ in Figure 2a and not ‘1toN’ in Figure 2b as suggested in this study. However, in the case of PSO and GA, the recommended dissemination technique is the same.

The parallelization of various methodologies of genetic algorithms or even different stochastic techniques for global optimization can be explored to enhance the methodology. However, in such heterogeneous environments, more efficient termination criteria are required, or even their combined use may be necessary.

Author Contributions

I.G.T. conceptualized the idea and methodology, supervised the technical aspects related to the software, and contributed to manuscript preparation. V.C. conducted the experiments using various datasets, performed statistical analysis, and collaborated with all authors in manuscript preparation. All authors have reviewed and endorsed the conclusive version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

This research was financed by the European Union: Next Generation EU through the Program Greece 2.0 National Recovery and Resilience Plan, under the call RESEARCH—CREATE—INNOVATE, project name “iCREW: Intelligent small craft simulator for advanced crew training using Virtual Reality techniques” (project code: TAEDK-06195).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Törn, A.; Žilinskas, A. Global Optimization; Springer: Berlin/Heidelberg, Germany, 1989; Volume 350, pp. 1–255.

- Fouskakis, D.; Draper, D. Stochastic optimization: A review. Int. Stat. Rev. 2002, 70, 315–349.

- Cherruault, Y. Global optimization in biology and medicine. Math. Comput. Model. 1994, 20, 119–132.

- Lee, E.K. Large-Scale Optimization-Based Classification Models in Medicine and Biology. Ann. Biomed. Eng. 2007, 35, 1095–1109.

- Liwo, A.; Lee, J.; Ripoll, D.R.; Pillardy, J.; Scheraga, H.A. Protein structure prediction by global optimization of a potential energy function. Proc. Natl. Acad. Sci. USA 1999, 96, 5482–5485.

- Shin, W.H.; Kim, J.K.; Kim, D.S.; Seok, C. GalaxyDock2: Protein-ligand docking using beta-complex and global optimization. J. Comput. Chem. 2013, 34, 2647–2656.

- Duan, Q.; Sorooshian, S.; Gupta, V. Effective and efficient global optimization for conceptual rainfall-runoff models. Water Resour. Res. 1992, 28, 1015–1031.

- Yang, L.; Robin, D.; Sannibale, F.; Steier, C.; Wan, W. Global optimization of an accelerator lattice using multiobjective genetic algorithms. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2009, 609, 50–57.

- Iuliano, E. Global optimization of benchmark aerodynamic cases using physics-based surrogate models. Aerosp. Sci. Technol. 2017, 67, 273–286. [Google Scholar] [CrossRef]

- Maranas, C.D.; Androulakis, I.P.; Floudas, C.A.; Berger, A.J.; Mulvey, J.M. Solving long-term financial planning problems via global optimization. J. Econ. Dyn. Control 1997, 21, 1405–1425. [Google Scholar] [CrossRef]

- Gaing, Z. Particle swarm optimization to solving the economic dispatch considering the generator constraints. IEEE Trans. Power Syst. 2003, 18, 1187–1195. [Google Scholar] [CrossRef]

- Liberti, L.; Kucherenko, S. Comparison of deterministic and stochastic approaches to global optimization. Int. Trans. Oper. Res. 2005, 12, 263–285. [Google Scholar] [CrossRef]

- Wolfe, M.A. Interval methods for global optimization. Appl. Math. Comput. 1996, 75, 179–206. [Google Scholar] [CrossRef]

- Csendes, T.; Ratz, D. Subdivision Direction Selection in Interval Methods for Global Optimization. SIAM J. Numer. Anal. 1997, 34, 922–938. [Google Scholar] [CrossRef]

- Maranas, C.D.; Floudas, C.A. A deterministic global optimization approach for molecular structure determination. J. Chem. Phys. 1994, 100, 1247. [Google Scholar] [CrossRef]

- Barhen, J.; Protopopescu, V.; Reister, D. TRUST: A Deterministic Algorithm for Global Optimization. Science 1997, 276, 1094–1097. [Google Scholar] [CrossRef]

- Evtushenko, Y.; Posypkin, M.A. Deterministic approach to global box-constrained optimization. Optim. Lett. 2013, 7, 819–829. [Google Scholar] [CrossRef]

- Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Socha, K.; Dorigo, M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008, 185, 1155–1173.

- Price, W.L. Global optimization by controlled random search. J. Optim. Theory Appl. 1983, 40, 333–348.

- Křivý, I.; Tvrdík, J. The controlled random search algorithm in optimizing regression models. Comput. Stat. Data Anal. 1995, 20, 229–234.

- Ali, M.M.; Törn, A.; Viitanen, S. A Numerical Comparison of Some Modified Controlled Random Search Algorithms. J. Glob. Optim. 1997, 11, 377–385.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Trelea, I.C. The particle swarm optimization algorithm: Convergence analysis and parameter selection. Inf. Process. Lett. 2003, 85, 317–325.

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 2007, 1, 33–57.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Ingber, L. Very fast simulated re-annealing. Math. Comput. Model. 1989, 12, 967–973.

- Eglese, R.W. Simulated annealing: A tool for operational research. Eur. J. Oper. Res. 1990, 46, 271–281.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Liu, J.; Lampinen, J. A Fuzzy Adaptive Differential Evolution Algorithm. Soft Comput. 2005, 9, 448–462.

- Goldberg, D. Genetic Algorithms in Search, Optimization and Machine Learning; Addison-Wesley Publishing Company: Reading, MA, USA, 1989.

- Michalewicz, Z. Genetic Algorithms + Data Structures = Evolution Programs; Springer: Berlin/Heidelberg, Germany, 1996.

- Grady, S.A.; Hussaini, M.Y.; Abdullah, M.M. Placement of wind turbines using genetic algorithms. Renew. Energy 2005, 30, 259–270.

- Boussaïd, I.; Lepagnot, J.; Siarry, P. A survey on optimization metaheuristics. Inf. Sci. 2013, 237, 82–117.

- Dokeroglu, T.; Sevinc, E.; Kucukyilmaz, T.; Cosar, A. A survey on new generation metaheuristic algorithms. Comput. Ind. Eng. 2019, 137, 106040.

- Hussain, K.; Salleh, M.N.M.; Cheng, S.; Shi, Y. Metaheuristic research: A comprehensive survey. Artif. Intell. Rev. 2019, 52, 2191–2233.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Stender, J. Parallel Genetic Algorithms: Theory & Applications; IOS Press: Amsterdam, The Netherlands, 1993.

- Santana, Y.H.; Alonso, R.M.; Nieto, G.G.; Martens, L.; Joseph, W.; Plets, D. Indoor genetic algorithm-based 5G network planning using a machine learning model for path loss estimation. Appl. Sci. 2022, 12, 3923.

- Liu, X.; Jiang, D.; Tao, B.; Jiang, G.; Sun, Y.; Kong, J.; Chen, B. Genetic algorithm-based trajectory optimization for digital twin robots. Front. Bioeng. Biotechnol. 2022, 9, 793782.

- Nonoyama, K.; Liu, Z.; Fujiwara, T.; Alam, M.M.; Nishi, T. Energy-efficient robot configuration and motion planning using genetic algorithm and particle swarm optimization. Energies 2022, 15, 2074.

- Liu, K.; Deng, B.; Shen, Q.; Yang, J.; Li, Y. Optimization based on genetic algorithms on energy conservation potential of a high speed SI engine fueled with butanol–gasoline blends. Energy Rep. 2022, 8, 69–80.

- Zhou, G.; Zhu, Z.; Luo, S. Location optimization of electric vehicle charging stations: Based on cost model and genetic algorithm. Energy 2022, 247, 123437.

- Min, D.; Song, Z.; Chen, H.; Wang, T.; Zhang, T. Genetic algorithm optimized neural network based fuel cell hybrid electric vehicle energy management strategy under start-stop condition. Appl. Energy 2022, 306, 118036.

- Doewes, R.I.; Nair, R.; Sharma, T. Diagnosis of COVID-19 through blood sample using ensemble genetic algorithms and machine learning classifier. World J. Eng. 2022, 19, 175–182.

- Choudhury, S.; Rana, M.; Chakraborty, A.; Majumder, S.; Roy, S.; RoyChowdhury, A.; Datta, S. Design of patient specific basal dental implant using Finite Element method and Artificial Neural Network technique. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2022, 236, 1375–1387.

- Chen, Q.; Hu, X. Design of intelligent control system for agricultural greenhouses based on adaptive improved genetic algorithm for multi-energy supply system. Energy Rep. 2022, 8, 12126–12138.

- Graham, R.L.; Woodall, T.S.; Squyres, J.M. Open MPI: A flexible high performance MPI. In Proceedings of the Parallel Processing and Applied Mathematics: 6th International Conference (PPAM 2005), Poznań, Poland, 11–14 September 2005; Revised Selected Papers 6. Springer: Berlin/Heidelberg, Germany, 2006; pp. 228–239.

- Ayguadé, E.; Copty, N.; Duran, A.; Hoeflinger, J.; Lin, Y.; Massaioli, F.; Zhang, G. The design of OpenMP tasks. IEEE Trans. Parallel Distrib. Syst. 2008, 20, 404–418.

- Onbaşoğlu, E.; Özdamar, L. Parallel simulated annealing algorithms in global optimization. J. Glob. Optim. 2001, 19, 27–50.

- Schutte, J.F.; Reinbolt, J.A.; Fregly, B.J.; Haftka, R.T.; George, A.D. Parallel global optimization with the particle swarm algorithm. Int. J. Numer. Methods Eng. 2004, 61, 2296–2315.

- Regis, R.G.; Shoemaker, C.A. Parallel stochastic global optimization using radial basis functions. INFORMS J. Comput. 2009, 21, 411–426.

- Harada, T.; Alba, E. Parallel Genetic Algorithms: A Useful Survey. ACM Comput. Surv. 2020, 53, 86.

- Anbarasu, L.A.; Narayanasamy, P.; Sundararajan, V. Multiple molecular sequence alignment by island parallel genetic algorithm. Curr. Sci. 2000, 78, 858–863.

- Tosun, U.; Dokeroglu, T.; Cosar, A. A robust island parallel genetic algorithm for the quadratic assignment problem. Int. J. Prod. Res. 2013, 51, 4117–4133.

- Nandy, A.; Chakraborty, D.; Shah, M.S. Optimal sensors/actuators placement in smart structure using island model parallel genetic algorithm. Int. J. Comput. Methods 2019, 16, 1840018.

- Tsoulos, I.G.; Tzallas, A.; Tsalikakis, D. PDoublePop: An implementation of parallel genetic algorithm for function optimization. Comput. Phys. Commun. 2016, 209, 183–189.

- Shonkwiler, R. Parallel genetic algorithms. In ICGA; Morgan Kaufmann Publishers Inc: San Francisco, CA, USA, 1993; pp. 199–205.

- Cantú-Paz, E. A survey of parallel genetic algorithms. Calc. Paralleles Reseaux Syst. Repartis 1998, 10, 141–171.

- Mühlenbein, H. Parallel genetic algorithms in combinatorial optimization. In Computer Science and Operations Research; Elsevier: Amsterdam, The Netherlands, 1992; pp. 441–453.

- Davis, L. Handbook of Genetic Algorithms; Thomson Publishing Group: London, UK, 1991.

- Yu, X.; Gen, M. Introduction to Evolutionary Algorithms; Springer: Berlin/Heidelberg, Germany, 2010; ISBN 978-1-84996-128-8. e-ISBN 978-1-84996-129-5.

- Kaelo, P.; Ali, M.M. Integrated crossover rules in real coded genetic algorithms. Eur. J. Oper. Res. 2007, 176, 60–76.

- Tsoulos, I.G. Modifications of real code genetic algorithm for global optimization. Appl. Math. Comput. 2008, 203, 598–607.

- Powell, M.J.D. A Tolerant Algorithm for Linearly Constrained Optimization Calculations. Math. Program. 1989, 45, 547–566.

- Floudas, C.A.; Pardalos, P.M.; Adjiman, C.; Esposito, W.; Gümüş, Z.; Harding, S.; Klepeis, J.; Meyer, C.; Schweiger, C. Handbook of Test Problems in Local and Global Optimization; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999.

- Ali, M.M.; Khompatraporn, C.; Zabinsky, Z.B. A Numerical Evaluation of Several Stochastic Algorithms on Selected Continuous Global Optimization Test Problems. J. Glob. Optim. 2005, 31, 635–672.

- Gaviano, M.; Kvasov, D.E.; Lera, D.; Sergeyev, Y.D. Software for generation of classes of test functions with known local and global minima for global optimization. ACM Trans. Math. Softw. 2003, 29, 469–480.

- Lennard-Jones, J.E. On the Determination of Molecular Fields. Proc. R. Soc. Lond. A 1924, 106, 463–477.

- Zabinsky, Z.B.; Graesser, D.L.; Tuttle, M.E.; Kim, G.I. Global optimization of composite laminates using improving hit and run. In Recent Advances in Global Optimization; Princeton University Press: Princeton, NJ, USA, 1992; pp. 343–368.

- Charilogis, V.; Tsoulos, I. Toward an Ideal Particle Swarm Optimizer for Multidimensional Functions. Information 2022, 13, 217.

- Charilogis, V.; Tsoulos, I.; Tzallas, A.; Karvounis, E. Modifications for the Differential Evolution Algorithm. Symmetry 2022, 14, 447.

- Wall, M. GAlib: A C++ Library of Genetic Algorithm Components; Mechanical Engineering Department, Massachusetts Institute of Technology: Cambridge, MA, USA, 1996; p. 54.

- Charilogis, V.; Tsoulos, I.G. A Parallel Implementation of the Differential Evolution Method. Analytics 2023, 2, 17–30.

- Charilogis, V.; Tsoulos, I.G.; Tzallas, A. An Improved Parallel Particle Swarm Optimization. SN Comput. Sci. 2023, 4, 766.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).