Fisher Information in Helmholtz–Boltzmann Thermodynamics of Mechanical Systems

Abstract

1. Introduction

2. Helmholtz–Boltzmann Thermodynamics of Mechanical Systems

2.1. The Multidimensional Probability Density

2.2. Multidimensional HB Thermodynamics

2.3. Relation with Microcanonical Entropy

3. Statistical Models and Fisher Matrix

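- (1) (injectivity) the map $\xi \mapsto p(\cdot\,;\xi)$, $\xi \in \Xi$, is one to one and

- (2) (regularity) the $d$ functions $\partial p(x;\xi)/\partial \xi^i$, $i = 1, \dots, d$, defined on $X$ are linearly independent as functions on $X$ for every $\xi \in \Xi$.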
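The regularity condition can be read off the Fisher matrix. For a family $p(x;\xi)$, $\xi = (\xi^1, \dots, \xi^d)$, the entries $g_{ij}(\xi) = \mathbb{E}_\xi[\partial_i \log p \, \partial_j \log p]$ form the Gram matrix of the $d$ score functions in $L^2(p)$. Since $p > 0$, independence of the derivatives $\partial p/\partial \xi^i$ is equivalent to independence of the scores $\partial \log p/\partial \xi^i$, so regularity holds exactly when $g(\xi)$ is nonsingular (hence positive definite). The following is a minimal sketch, not the article's own computation: the two Gaussian families, the function names (`fisher_matrix`, `score_regular`, `score_redundant`) and the quadrature settings are illustrative assumptions. It compares the regular parametrization $(\mu, \sigma)$ of $N(\mu, \sigma^2)$ with the redundant parametrization $N(a + b, 1)$, which violates both injectivity and regularity.

```python
import math


def gauss_pdf(x, mean, sigma):
    """Density of the normal law N(mean, sigma^2) at x."""
    z = (x - mean) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def fisher_matrix(score, pdf, lo, hi, n=20001):
    """Approximate g_ij = int s_i(x) s_j(x) p(x) dx over [lo, hi] with the
    composite trapezoidal rule, where score(x) returns the list of the d
    partial derivatives of log p(x; xi) with respect to xi^1, ..., xi^d."""
    h = (hi - lo) / (n - 1)
    d = len(score(lo))
    g = [[0.0] * d for _ in range(d)]
    for k in range(n):
        x = lo + k * h
        w = h * (0.5 if k in (0, n - 1) else 1.0)  # trapezoidal weights
        s, p = score(x), pdf(x)
        for i in range(d):
            for j in range(d):
                g[i][j] += w * s[i] * s[j] * p
    return g


def det2(g):
    """Determinant of a 2 x 2 matrix given as nested lists."""
    return g[0][0] * g[1][1] - g[0][1] * g[1][0]


# Regular model (hypothetical example): xi = (mu, sigma), p(x; xi) = N(mu, sigma^2).
MU, SIGMA = 0.5, 2.0


def score_regular(x):
    u = x - MU
    # d/dmu log p and d/dsigma log p
    return [u / SIGMA**2, -1.0 / SIGMA + u * u / SIGMA**3]


g_reg = fisher_matrix(score_regular, lambda x: gauss_pdf(x, MU, SIGMA),
                      MU - 12 * SIGMA, MU + 12 * SIGMA)

# Redundant model (hypothetical example): xi = (a, b), p(x; xi) = N(a + b, 1).
# The map xi -> p is not injective and the two scores coincide, so they are
# linearly dependent and the Fisher matrix is singular.
A, B = 0.3, 0.2


def score_redundant(x):
    u = x - (A + B)
    return [u, u]


g_red = fisher_matrix(score_redundant, lambda x: gauss_pdf(x, A + B, 1.0),
                      A + B - 12, A + B + 12)

print("regular  :", g_reg, "det =", det2(g_reg))    # close to diag(1/4, 1/2), det close to 1/8
print("redundant:", g_red, "det =", det2(g_red))    # all entries close to 1, det exactly 0
```

Quadrature is used instead of the closed forms $g = \mathrm{diag}(1/\sigma^2, 2/\sigma^2)$ and $g = \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}$ so that the same routine applies to any one-dimensional family whose score can be written down; in the redundant case every entry is accumulated from identical products, so the determinant vanishes exactly.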

Statistical Models in HB Thermodynamics

4. Fisher Matrix for Canonical Ensemble

5. Fisher Matrix in HB Thermodynamics. Examples

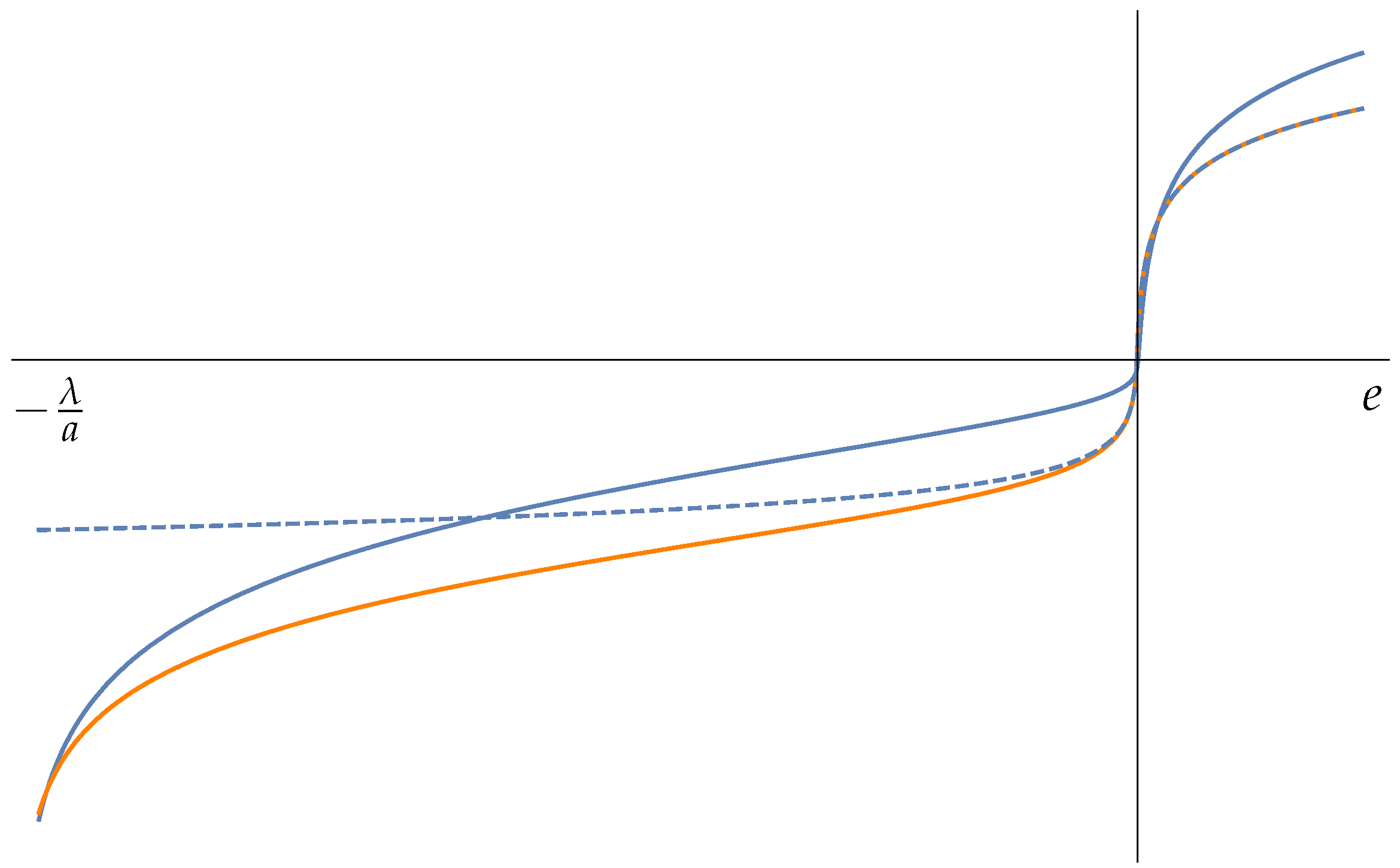

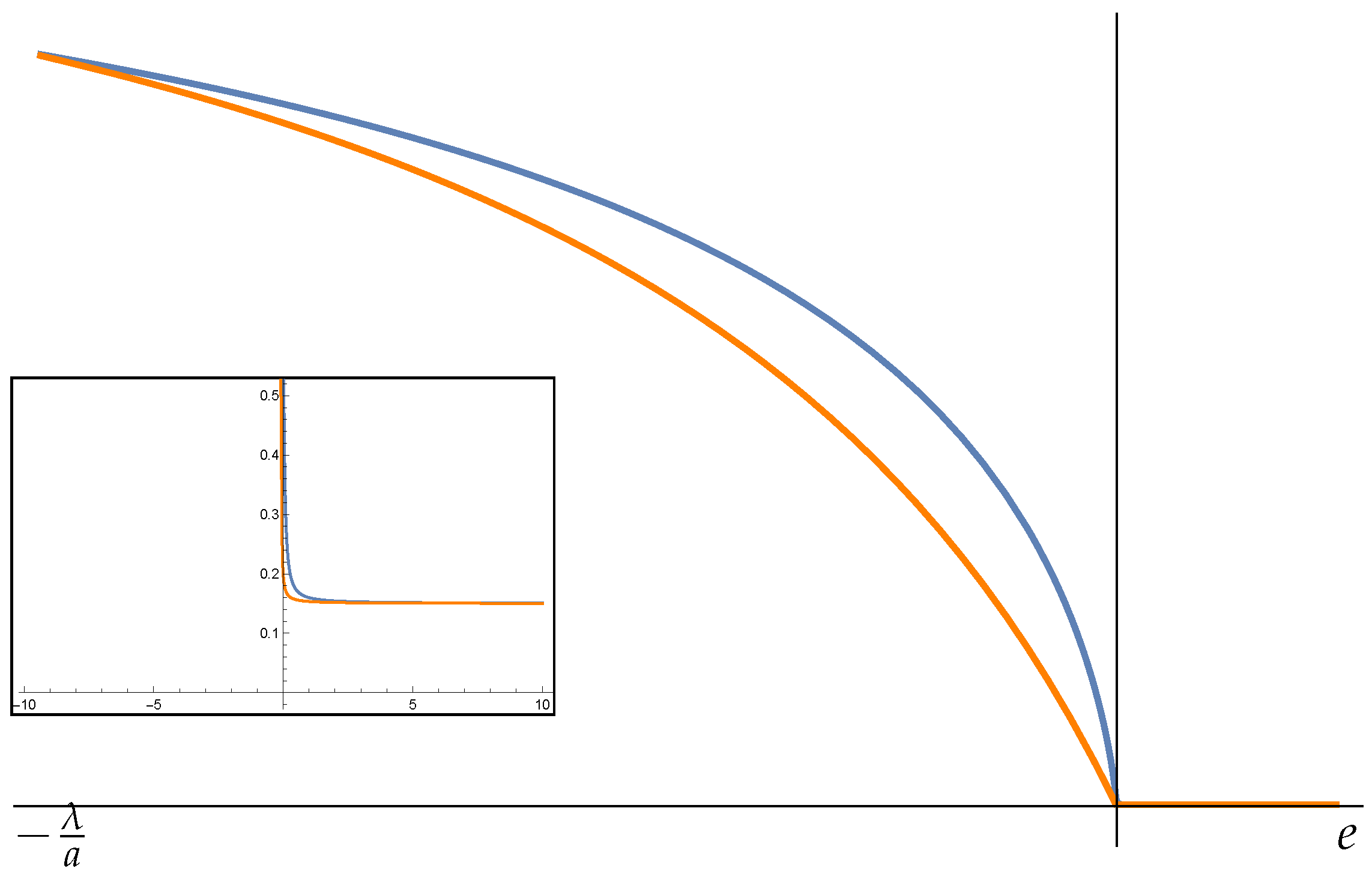

5.1. Harmonic Potential Energy

5.2. The Elastic Chain

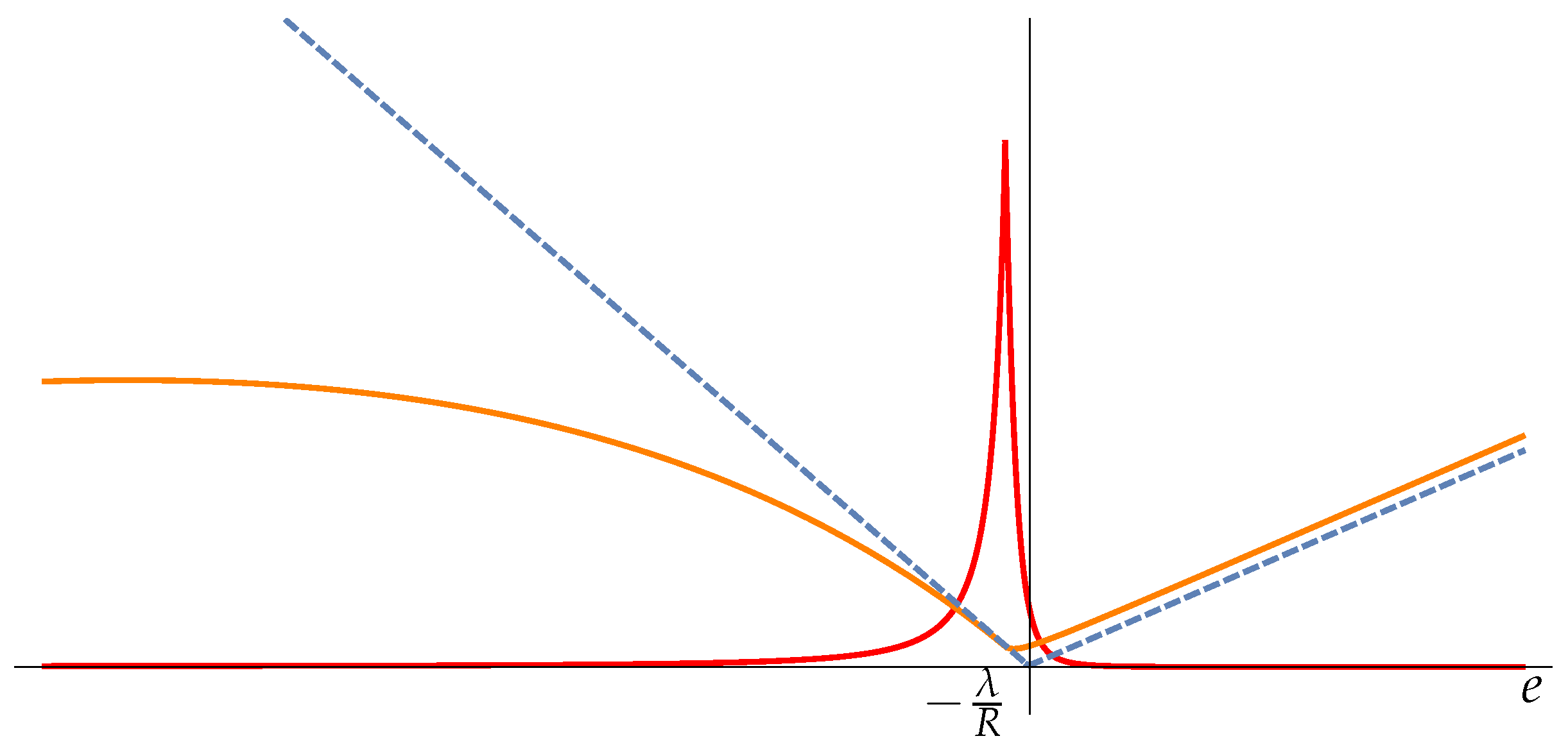

5.3. Two-Body System

6. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Favretti, M. Fisher Information in Helmholtz–Boltzmann Thermodynamics of Mechanical Systems. Foundations 2025, 5, 24. https://doi.org/10.3390/foundations5030024
