Abstract

This study evaluates four external Human–Machine Interface (eHMI) concepts developed for automated shuttles, focusing on improving communication with other road users, mainly pedestrians and cyclists. Without a human driver to signal intentions, eHMI systems can play a crucial role in conveying the shuttle’s movements and future path, fostering safety and trust. The four eHMI systems (purple light projections, emotional eyes, auditory alerts, and informative text) were tested in a virtual reality (VR) environment. Participant evaluations were collected using an approach inspired by Kansei engineering and Likert scales. Results show that the auditory alerts and the informative text eHMI were most appreciated, with participants finding them relatively clear and easy to understand. In contrast, purple light projections were hard to see in daylight, and emotional eyes were often misinterpreted. Principal Component Analysis (PCA) identified three key factors for eHMI success: predictability, endangerment, and practicality. The findings underscore the need for intuitive, simple, and predictable designs, particularly in the absence of a driver. This study highlights how eHMI systems can support the integration of automated shuttles into public transport. It offers insights into design features that improve road safety and user experience, recommending further research on long-term effectiveness in real-world traffic conditions.

1. Introduction

As automated vehicles (AVs) have become an integral part of modern traffic systems, their interaction with other road users has emerged as a pivotal area of research and development. Unlike human drivers, AVs cannot rely on implicit interactional cues (e.g., gaze and facial expression) nor on explicit, conventionalized non-verbal signals such as hand gestures, to make their intentions recognizable. This absence of non-verbal cues presents significant challenges in fostering trust and predictability during interactions with pedestrians, cyclists, and other drivers [1,2].

To address these challenges, extensive research has been conducted on external human–machine interfaces (eHMIs) for AVs [3,4]. The most common type of eHMI is visual, encompassing a wide range of designs and modalities. These may include intent signals that indicate whether the vehicle intends to stop or proceed [5], often displayed through symbols or icons on dedicated screens. Other approaches involve anthropomorphic features such as eyes [6,7] or gaze-like indicators that convey recognition of vulnerable road users (VRUs) [8,9,10], LED strips [11,12,13], as well as ground projections [14,15,16], textual messages [4,17,18], or symbolic cues communicating the vehicle’s next action or advising pedestrians on how to behave [19,20]. The visual elements of these interfaces are typically positioned on the sides of the vehicle, on the windshield [5], or on the hood or grille area [4]. Ref. [12] compared these positions but did not find any position superior for decision making.

In addition to visual modalities, auditory eHMIs have also been explored. These systems can provide spatial cues to indicate the vehicle’s position or direction of movement [21], as well as verbal or non-verbal alerts. Auditory cues have also been found to enhance interactions, with engine sounds increasing VRU awareness and beeping sounds serving as alerts to other road users of approaching shuttles [22]. Since 2021, electric vehicles have been required to emit artificial sounds that signal their presence, position, and speed to enhance pedestrian safety [23]. Auditory cues have therefore become more common.

Several empirical studies have examined how road users perceive and interpret eHMI communication. Ref. [24] explored the feasibility of using eye-tracking technology to assess the effectiveness of external human–machine interfaces (eHMI) on AVs in a field study. Their study also examines how pedestrians visually perceive and interpret communication cues from a vehicle. One of their conclusions was that many individuals seek eye contact with a driver. Ref. [2] investigated how eHMI can facilitate interactions between AVs and pedestrians by conveying vehicle intent. They highlight the critical role of communication in ensuring mutual understanding of each other’s intent. Ref. [25] explored VRUs’ experiences of sharing the road with slow automated shuttles, and through a survey found that participants wished to receive clearer information about the vehicle’s actions—such as whether it was turning or stopping—as well as confirmation that they had been detected. Ref. [26] analyzed interface designs across three long-term automated shuttle projects and found that while eHMIs can improve subjective perceptions of safety, their objective impact is limited by the reduced ecological validity of both simulated and field studies, with simple light-based indicators proving most robust across contexts.

The most frequently studied scenario concerns facilitating interactions between AVs and VRUs—particularly pedestrians—who need to decide whether it is safe to cross in front of a vehicle whose intentions may otherwise be ambiguous [8,11,27,28].

Despite extensive research, there is currently no consensus on which eHMI design is most effective [16,29]. One limitation in the field is that most experiments rely on artificial setups or participants with little or no real-world experience of AVs. As highlighted by [30], investigating eHMIs on vehicles already in public operation, with participants familiar with the traffic, provides valuable insights into how users interact with systems in realistic and familiar environments, thereby enhancing the relevance and reliability of the research.

Opportunity for such research exists in Linköping, Sweden, where automated shuttles have been operating on the campus of Linköping University for the past five years. These shuttles have become a regular feature of the campus traffic environment, allowing for naturalistic observations of how pedestrians and cyclists interact with them. Since their deployment in 2019, a substantial body of research has emerged focusing on these vehicles—both globally and locally.

Video-based studies have examined how cyclists and pedestrians interpret the intents of shuttles [31] as well as long-term interactions between cyclists and automated shuttles [32]. For instance, ref. [33] analyzed cyclists’ behaviors when automated shuttles operated in Linköping campus bike lanes, revealing that cyclists often adjusted their trajectories and opted to use sidewalks in the presence of shuttles. Similarly, ref. [31] conducted analyses of video recordings to examine how cyclists interact with the automated shuttles in everyday traffic. The study revealed that while cyclists expected the shuttles to adjust dynamically, for instance by slowing down to allow overtaking or by deviating from their path, the vehicles instead responded with abrupt emergency braking. This mismatch exposed specific gaps in coordination, namely the shuttles’ limited capacity for continuous adjustment and their inability to communicate constraints on their movements.

Together, these findings underscore the importance of studying external communication systems in realistic, contextually rich environments where automated shuttles are already integrated into everyday traffic.

The present exploratory study therefore aims to examine how four divergent eHMI design concepts are perceived by road users external to automated shuttles, with the goal of enhancing communication towards improving traffic flow. For this purpose, a mix of individuals–both familiar and unfamiliar with the vehicles–was invited to evaluate the design concepts. In contrast to typical eHMI studies, this study was based on a digital twin of a real-world established shuttle service, which the participants encountered. A virtual reality (VR) simulation setup based on the real-world shuttle model was hence used for experiencing the different eHMI designs.

2. Materials

2.1. Existing Shuttle Service

The automated shuttles operated in Linköping, a mid-sized city in southern Sweden. The service ran weekdays from 8:00 a.m. to 5:30 p.m., and on weekends from 11:00 a.m. to 3:40 p.m. The shuttles did not follow a fixed schedule but adhered to strategically placed stops to facilitate seamless transfers, particularly at the local university. From its official launch in 2020 to the data collection period in 2024, the shuttles had served over 18,000 passengers.

The vehicles in use at the time of the study were two identical electric prototypes: the EasyMile EZ10 Generation 2, made by EasyMile (Toulouse, France) (see Figure 1). These shuttles navigated using Lidar, 3D Lidar, and advanced GPS technology. The shuttles used in the study can be classified as Level 3 AVs according to [34,35].

Figure 1.

Shuttles from front (left) and back (right) with the current design. Photos: My Weidel.

The shuttles followed a pre-programmed route (see Figure 2) with centimeter-level precision, employing two digital safety zones. If an object entered the outer zone, the vehicle decelerated; if the inner zone was breached, it stopped immediately. These safety zones depended on the vehicle’s speed and extended around the shuttle, with a larger area in front. However, they did not take into account the direction or the size of approaching objects.

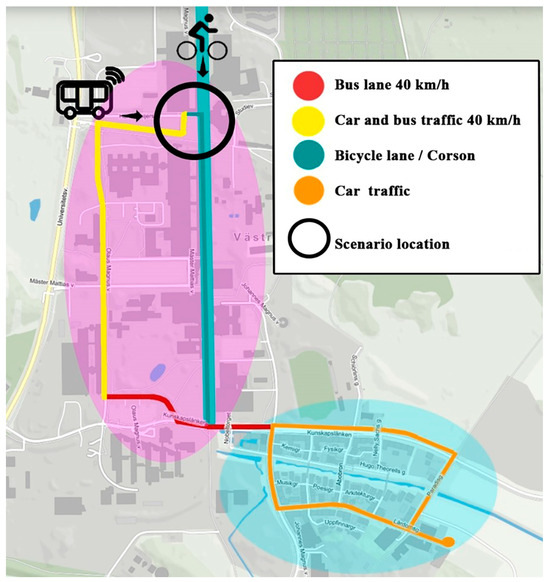

Figure 2.

Service area for the shuttle. Blue oval area indicating Vallastaden and purple showing the Campus area.

The shuttles had a colorful design, marked to indicate their research purpose. They were also equipped with an orange triangle on the back, indicating to other road users that they were slow-moving vehicles, in accordance with Swedish law [36]. To warn other road users about the shuttles’ harsh braking jerks, a sign was taped on the back of each shuttle saying “Keep distance, I can brake hard” in Swedish.

Due to legal requirements mandating a designated individual with legal accountability and the operational need for passenger service [37], a safety operator was present onboard the shuttle. Since the start of the project, trained safety operators have played a critical role in ensuring the safe operation of the shuttles and managing passenger interactions. Their responsibilities included coordinating software updates, monitoring sensor obstructions, and addressing passenger inquiries. At critical junctures, such as intersections or pedestrian crosswalks, operators actively confirmed safety before proceeding.

On the main corridor of the university, “Corson”, which spans 1.1 km, the shuttles shared pathways with cyclists, marked by signage. The corridor was lined with bike parking on one side and a pedestrian path on the other. During peak hours, congestion often occurred, especially around lecture starts. While pedestrians generally used separate paths, they frequently crossed the shuttle’s route to access facilities, creating dynamic traffic conditions.

In the residential area Vallastaden, the shuttles served areas near a school where, among others, children with cognitive disabilities go, and near a care home for individuals with dementia. These groups frequently used the shuttles for short trips, such as visits to parks or shops. The maximum speed was set to 13 km/h to make sure even fragile passengers would always feel safe traveling with the shuttles. Figure 2 depicts the situation on a map.

2.2. Previous Work: Development of eHMI Designs

An external Human–Machine Interface (eHMI) is an outward-facing interface on a vehicle that communicates the vehicle’s current state and intended actions to people outside the vehicle. The eHMI systems used on the bus were developed in earlier work based on semi-structured interviews with experienced safety operators of the shuttle service [38] described in Section 2.1. The interviews revealed three areas for improved communication to VRUs from the operator perspective: the shuttle needed to communicate its presence to VRUs, to communicate how close VRUs such as pedestrians and cyclists could approach the shuttle without making it stop, and to inform VRUs of when the shuttle was about to depart. Prior research and literature were then consulted to identify suitable concepts that could convey the operators’ expressed needs. This process led to the development of categorizations that contributed to the creation of four distinct messages requiring communication, which ultimately resulted in the design of four different explorative eHMI concepts that used different modalities.

With the assistance of design students from Linköping University, the four concepts were brought to life using the Unreal 5.3 software (Epic Games, Cary, NC, USA) and were demonstrated as an immersive experience for the participants. The students were given clear instructions about the purpose and method of communication. They were then allowed considerable freedom in the design process, but had to coordinate their ideas with the corresponding author. The final concepts were called “Purple Light”, “Eyes”, “Auditory Alert”, and “Text”, respectively (see Figure 3). Videos of the concepts are available in the Supplementary Materials.

Figure 3.

Design concepts evaluated in the study. Upper left: Purple Light. The light is projected on the ground indicating the sensor-sensitive area around the shuttle. Upper right: Eyes. The bicycle is triggering the sad eyes by getting too close. Lower left: Auditory Alert. The sound intensifies when an object or a person gets closer to the vehicle. Lower right: Text. The text (in Swedish) indicates how soon the shuttle will be departing.

The VR-simulated bus was modeled on the actual behavior of the physical vehicle, including factors such as speed (ranging between 5 and 7 km/h where the interaction occurs in the scenario), positioning, and safety zones. For example, when an object entered the internal safety zone of the real bus, the bus was programmed to stop. The specifics for this zone are not publicly known due to a non-disclosure agreement with the manufacturer, but it is defined in the shuttle’s software, version 12.3 (21.06.3-final). Physical tests showed that the internal safety zone was approximately 80 cm from the side of the vehicle at the speed used in the scenario. This was replicated in the simulated shuttle to accurately reflect the real vehicle’s programming. A simulated bicycle was used to demonstrate the bus’s reactions and to ensure that all participants experienced the same conditions.
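The two-zone behavior described in Section 2.1, combined with the inner-zone distance measured here, can be illustrated with a small decision function. This is only a minimal sketch under stated assumptions, not the manufacturer's software: the inner-zone value uses the approximately 80 cm measured at the scenario speed, while `OUTER_ZONE_M`, `ShuttleResponse`, and `shuttle_response` are hypothetical names and values introduced for illustration, since the real zone dimensions are not public and vary with speed.

```python
from enum import Enum


class ShuttleResponse(Enum):
    CONTINUE = "continue"
    DECELERATE = "decelerate"
    STOP = "stop"


# Approximate lateral inner-zone distance from the physical tests (about 80 cm)
# at the speed used in the scenario.
INNER_ZONE_M = 0.8
# Hypothetical placeholder: the real outer-zone size is not publicly known.
OUTER_ZONE_M = 2.0


def shuttle_response(lateral_distance_m: float) -> ShuttleResponse:
    """Return the shuttle's reaction to an object at a given lateral distance.

    As in the text, neither the direction nor the size of the object is
    considered; only its distance to the vehicle matters.
    """
    if lateral_distance_m < 0:
        raise ValueError("distance must be non-negative")
    if lateral_distance_m <= INNER_ZONE_M:
        return ShuttleResponse.STOP
    if lateral_distance_m <= OUTER_ZONE_M:
        return ShuttleResponse.DECELERATE
    return ShuttleResponse.CONTINUE
```

In the simulated scenario, a bicycle passing at 0.5 m would trigger a stop under these assumed values, whereas one passing at 3 m would not affect the shuttle.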

The different eHMI concepts were designed to serve distinct purposes, and while some interfaces communicated the bus’s intended actions, others conveyed the bus’s perception of its current interaction with a cyclist.

2.2.1. Purple Light

Safety operators expressed that it was difficult for surrounding road users to determine how close they could approach the bus without causing it to stop. Additionally, they noted that cyclists often passed by too quickly to notice whether the bus had stopped. To address this issue, visual and auditory communication methods were explored to illustrate the bus’s safety zones and hard braking.

To indicate the area monitored by the bus’s sensors, which causes the bus to stop when someone enters it, a purple light was projected onto the ground in the VR environment, inspired by the warning light used on forklifts [39]. The color purple was chosen specifically to avoid any resemblance to standard [40] or emergency vehicle lighting and was a color that could complement the bus’s color scheme. Projection has been used as an eHMI for road vehicles in multiple studies [14,15,16], but no studies illustrating the safety zone area have been found. Additionally, purple stripes on the side of the bus provide a visual indication that the bus has come to a stop. To alert passing cyclists who may be approaching too closely, the bus was equipped with a pre-recorded sound effect mimicking the abrupt screeching of tires during a sudden stop. Apart from this warning system, the bus operated silently.

2.2.2. Eyes

Based on the interviews, a general lack of caution around the vehicles was noted. VRUs appeared indifferent to whether the vehicles had to brake abruptly. Some speculated that this behavior stemmed from a lack of awareness that someone was inside the vehicle. Depictions of eyes have been utilized in previous studies to indicate that VRUs have been detected by a vehicle, signaling that it will stop to facilitate efficient and safe crossing [41]. However, this approach is not feasible in a scenario where an automated shuttle operates on a road with multiple VRUs. In this context, the eyes serve a different function. They are specifically designed to avoid giving the impression that the shuttle is looking in any particular direction. Aligned with the findings of [42], the eyes are instead employed to evoke sympathy and are hence more to be regarded emotional than informative. In this case, the intention is to encourage other road users to respect the shuttle, thereby ensuring a smoother journey for its passengers with fewer interruptions. In the VR implementation of the concept, the eyes remained neutral until a bicycle passed too closely, triggering a shift to a sad expression.

2.2.3. Auditory Alert

Since these electric buses lack traditional engine noise, alternative methods were sought to alert surrounding road users to the bus’s presence. This was combined with communication strategies to illustrate the bus’s safety zones, as safety operators reported that many individuals are unaware of how close they can approach the buses without affecting their operation. Similar to a parking sensor, the shuttles’ auditory alert implemented in VR became more intense as an object or person approached the areas monitored by its sensors. This feature could therefore serve as an early warning system before entering the sensitive zone. The closer one gets to the bus, the more intense and louder the sound, in line with ref. [21], which demonstrated that object-based sound systems can create perceivable spatial sound objects. That research further showed that dynamic sound objects (those that change in position or intensity) are easier for humans to locate than static ones. Conversely, as the individual or object moves away from the monitored zone, the intensity diminishes, and the volume decreases accordingly. The sound was designed to project in the direction of the person or object that entered the sensors’ detection area.
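The parking-sensor-like behavior can be sketched as a mapping from distance to a normalized alert intensity. The following is an illustrative sketch only: the linear ramp, the function name `alert_intensity`, and the default values for `zone_edge_m` and `detection_range_m` are assumptions made for this example and do not describe the actual VR implementation.

```python
def alert_intensity(
    distance_m: float,
    zone_edge_m: float = 0.8,        # assumed edge of the sensitive zone
    detection_range_m: float = 3.0,  # hypothetical range where the alert starts
) -> float:
    """Map distance to an alert intensity between 0.0 (silent) and 1.0 (maximum).

    Intensity rises linearly as the object approaches the sensitive zone and
    falls again as it moves away, mimicking a parking sensor.
    """
    if detection_range_m <= zone_edge_m:
        raise ValueError("detection range must exceed the zone edge")
    if distance_m >= detection_range_m:
        return 0.0
    if distance_m <= zone_edge_m:
        return 1.0
    return (detection_range_m - distance_m) / (detection_range_m - zone_edge_m)
```

Because the same function is evaluated continuously, a cyclist moving away from the shuttle hears the alert fade out along the same ramp.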

2.2.4. Text

The safety operators perceived that many road users were unaware of the bus’s intended route and purpose. To facilitate its use and improve understanding of when the bus would depart from the stop, a text-based eHMI was developed for the VR environment. By using display signs that showed a countdown and the destination of the bus standing still at a bus stop, the shuttle’s departure time was communicated clearly, in line with [19]. This was intended to help other road users determine whether they need to hurry to catch the bus, whether it is the right bus for them, or if they will be able to pass it before it moves. The signs were based on the displays commonly found on city buses today. This communication system would not only ease navigation for those sharing the roadway with the shuttle, but also assist potential passengers, making the system more accessible and user-friendly.

2.3. Evaluation Tool

To evaluate road users’ experiences of sharing the road with AVs, a measurement tool was developed, drawing inspiration from Kansei Engineering [43]. This approach seeks to translate emotions and experiences into design parameters, enabling the creation of products that better align with users’ emotional and functional needs.

Open-ended responses gathered from a survey on acceptance [38] served as input. In that previous study, participants were asked questions such as: “What do you generally think of the automated shuttles you see around the campus/Vallastaden?”, “What do you like about the shuttles?”, and “What do you dislike about the shuttles?” Responses from 67 participants were analyzed, with adjectives and nouns extracted and noted on post-it notes. Five researchers, including the authors of this paper, then categorized these terms into themes. From each theme, one representative word was selected, resulting in a set of nine descriptive words for the vehicle. These words encompassed positive, negative, and neutral descriptors and are as follows: cooperative, cool, communicative, dangerous, obstructive, irritating, attentive, predictable, and advanced.

The selected descriptive words were then treated according to the theory of the Semantic Space [44]. This theory posits that concepts can be understood and measured within a three-dimensional psychological space—Evaluation (e.g., good vs. bad), Potency (e.g., strong vs. weak), and Activity (e.g., active vs. passive). Applying this framework allows for a systematic analysis of how individuals perceive and evaluate the AVs in emotional and cognitive terms.

The final measurement tool incorporated these nine descriptors, with each statement formatted as “The vehicle is [descriptor]” and followed by a slider ranging from “Do not agree” on the left to “Fully agree” on the right, spanning 100 increments. The primary objective of this tool was to capture nuanced attitudes toward the AVs and their associated services.

3. Methods

This study employed an explorative within-subjects design, with each participant experiencing all eHMI systems and the shuttles without an eHMI. Below we describe the participants and the procedure that uses the materials described previously. The study was approved by the Swedish Ethical Review Authority.

3.1. Participants

Participants who expressed interest in the study were selected to ensure diversity across all participant groups. This included adults of various ages, cyclists with different levels of experience, and individuals with varying familiarity with the shuttles.

The study included 28 participants, comprising 18 men and 10 women. Previous research [45] has not suggested any gender-related differences in relation to experience of the shuttles. Consequently, participant selection was based on prior experience with shuttles, rather than a balanced gender distribution. Half of the participants (n = 14) had prior knowledge of the shuttles, as students, working in the area or living in Vallastaden, while the other half had not. The participants were aged between 18 and 72 years (M = 40.5, SD = 15.7). All individuals over the age of 18 who could ride a bicycle were eligible to participate. Recruitment was conducted through a Facebook advertisement. Participants selected received a registration link with detailed information about the study purpose and how to book a three-hour time slot.

The experiments were carried out every weekday over a three-week period. Participants included high school and university students, employees from Linköping and a neighboring town, as well as retirees. Rather than excluding individuals with visual impairments, the study also included participants who wore glasses and those predisposed to nausea. The only individuals excluded from participation were those who, via the recruitment questionnaire, reported having epilepsy or indicated that they were unable to wear a headset during the study.

3.2. Procedure

The study was carried out in situ at the VTI research institute. Participants received an introduction to the shuttle’s functionality. To ensure that all participants had a baseline experience of the shuttle’s current functionality, they were subjected to two situations while cycling: (A) overtaking the shuttle and (B) meeting the shuttle.

Each situation was practiced both on the campus area and in the simulator (see Figure 4).

Figure 4.

Top: Participant’s view, overtaking the shuttle. Bottom: Cyclist overtaking the shuttle in real life. Right: the simulator.

The order of these experiences was balanced, with half of the participants experiencing the real shuttle first and the other half starting with the simulator. After each experience, participants were asked to evaluate their impressions of the shuttle in its current state, without any eHMI.

After the baseline experience of the shuttle, the participants were exposed to the four different eHMI design concepts while standing still and observing the shuttle in the VR simulator. A test leader gave participants a brief explanation of the purpose of each system immediately before they experienced it. To minimize order effects, a fully counterbalanced sequence design was used, based on all 24 possible permutations of the four eHMIs.

The 28 participants were assigned these sequences so that each unique order was used at least once, and four sequences were randomly repeated for the remaining participants. Participants were allowed to revisit any system if they felt they had missed important details and were encouraged to ask questions regarding the system’s functionality. They were also given the opportunity to freely examine the eHMI systems, including inspecting specific features or details they regarded important. Most participants did not feel the need to revisit any system, and the total time in the simulator watching the eHMI concepts was usually between 5 and 8 min.
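The assignment of presentation orders described above can be sketched as follows. This is a minimal illustration, assuming a seeded pseudo-random generator; the function name `assign_orders` and the `seed` parameter are hypothetical, and the study's actual randomization procedure is not documented here.

```python
import itertools
import random

CONCEPTS = ("Purple Light", "Eyes", "Auditory Alert", "Text")


def assign_orders(n_participants: int, seed: int = 0) -> list:
    """Give each participant one presentation order of the four concepts.

    Every one of the 24 permutations is used once; the remaining participants
    receive distinct, randomly chosen permutations a second time.
    """
    orders = list(itertools.permutations(CONCEPTS))  # 4! = 24 orders
    if not len(orders) <= n_participants <= 2 * len(orders):
        raise ValueError("sketch supports between 24 and 48 participants")
    rng = random.Random(seed)  # seed is an assumption, for reproducibility
    repeated = rng.sample(orders, n_participants - len(orders))
    schedule = orders + repeated
    rng.shuffle(schedule)  # avoid tying order to participant number
    return schedule


schedule = assign_orders(28)
```

With 28 participants, this yields 24 unique orders plus four repeated ones, so no order is used more than twice.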

The XR-4 headset (from Varjo, Helsinki, Finland) equipped with built-in speakers, was used in the simulator for all participants except for six, who used the Varjo XR-3 when the XR-4 did not function. As the XR-3 headset lacks integrated speakers, neck headphones were used instead to provide a comparable auditory experience. In all other aspects relevant for this study, the headsets were equivalent.

All evaluations were conducted using the tool described in Section 2.3. After exposure to the various eHMI design concepts, participants completed questionnaires addressing all eHMI systems. The questionnaires comprised both qualitative items, allowing for open-ended responses, and quantitative items, see Section 2.3. The eHMI concepts were as noted above presented in a balanced order, and the questionnaires were completed in the same sequence, following the observation order. Finally, participants responded to five questions with 7-point Likert scales, regarding what they considered important when designing an eHMI system for automated shuttles. All participants were informed that honest feedback was sought and that there were no right or wrong responses. All responses were collected in Swedish and translated to English after analysis.

4. Results

One participant missed the questions about their overall impression of the eHMI, how easy it was to understand each eHMI, and whether they would like to have the eHMI on an automated shuttle, as well as the free-text responses. Of the remaining 27 participants, 25 found the Text and 24 the Auditory Alert easy to understand. In comparison, only 6 participants rated the Purple Light as easy to understand, while 11 found the Eyes eHMI comprehensible. When asked whether they would like to have the eHMI on an automated shuttle, 26 participants answered yes for the Text eHMI, 15 for the Auditory Alert, 8 for Purple Light, and 7 for Eyes.
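To make the counts above easier to compare, they can be converted into shares of the 27 respondents. The snippet below only restates the reported counts as percentages; the variable and function names are illustrative.

```python
N_RESPONDENTS = 27  # 28 participants minus one with missing answers

# Counts reported in the text.
easy_to_understand = {"Text": 25, "Auditory Alert": 24, "Eyes": 11, "Purple Light": 6}
would_like_to_have = {"Text": 26, "Auditory Alert": 15, "Purple Light": 8, "Eyes": 7}


def share_percent(count: int, n: int = N_RESPONDENTS) -> float:
    """Return a count as a percentage of respondents, rounded to one decimal."""
    return round(100 * count / n, 1)


easy_share = {name: share_percent(c) for name, c in easy_to_understand.items()}
want_share = {name: share_percent(c) for name, c in would_like_to_have.items()}
# easy_share -> Text 92.6, Auditory Alert 88.9, Eyes 40.7, Purple Light 22.2
# want_share -> Text 96.3, Auditory Alert 55.6, Purple Light 29.6, Eyes 25.9
```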

A Friedman test showed a significant difference between the average ratings of the clearly positive descriptors (cooperative, communicative, attentive, and predictable) across the five conditions in which the bus was presented (No eHMI and the four eHMI concepts), χ2(4) = 37.1, p < 0.001. The degree of agreement among participants was moderate, as indicated by Kendall’s W = 0.33. Post hoc comparisons using the Durbin–Conover test with Bonferroni correction showed the following significantly higher ratings on the positive indicators: Auditory Alert over No eHMI (p = 0.01); Text over No eHMI (p = 0.01); Auditory Alert over Purple Light (p = 0.01); Text over Purple Light (p = 0.01); Auditory Alert over Eyes (p = 0.01); Text over Eyes (p = 0.01).

A Friedman test also showed a significant difference between the average ratings of the clearly negative descriptors (dangerous, obstructive, and irritating) across the five conditions, χ2(4) = 22.3, p < 0.001, but with a lower degree of agreement among participants (Kendall’s W = 0.20). Post hoc comparisons using the Durbin–Conover test with Bonferroni correction showed the following significantly higher ratings on the negative indicators: No eHMI over Text (p = 0.03); Purple Light over Text (p = 0.01); Eyes over Text (p = 0.01); Auditory Alert over Text (p = 0.01).
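The reported effect sizes can be cross-checked against the Friedman statistics, because Kendall's W is related to the Friedman chi-square by W = χ2 / (N(k − 1)), where N is the number of raters and k the number of conditions. The sketch below assumes that all 28 participants contributed ratings for all five conditions, which is an assumption on our part rather than something stated explicitly; the function name is illustrative.

```python
def kendalls_w(friedman_chi2: float, n_raters: int, n_conditions: int) -> float:
    """Kendall's coefficient of concordance derived from a Friedman chi-square."""
    if n_raters < 1 or n_conditions < 2:
        raise ValueError("need at least one rater and two conditions")
    return friedman_chi2 / (n_raters * (n_conditions - 1))


# Assumed: N = 28 participants, k = 5 conditions (No eHMI plus four concepts).
w_positive = kendalls_w(37.1, n_raters=28, n_conditions=5)
w_negative = kendalls_w(22.3, n_raters=28, n_conditions=5)
# round(w_positive, 2) -> 0.33, round(w_negative, 2) -> 0.2
```

Under this assumption, both derived values agree with the reported W = 0.33 and W = 0.20.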

To summarize, when rating positive indicators, the Auditory Alert and Text were preferred over the No eHMI condition, as well as over Purple Light and Eyes. For negative indicators, only Text received significantly lower negative ratings compared to the other eHMIs and no eHMI. The Auditory Alert, in contrast, elicited roughly the same number of negative impressions as the other eHMIs and no eHMI.

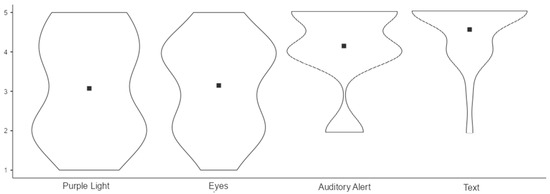

Participants were also asked to rate their overall impression of the eHMI (excluding the No eHMI condition; n = 27 due to missing data from one participant). Text (M = 4.56) and Auditory Alert (M = 4.11) received the most positive impressions from participants, whereas Eyes (M = 3.15) and Purple Light (M = 3.07) were rated lower. See Figure 5, which shows violin plots illustrating the distribution of responses for overall impressions of the eHMIs (1 = negative impression, 5 = positive impression). Black squares indicate the mean values.

Figure 5.

Violin plots showing the distribution of responses for the overall impression of the eHMIs. 1 = negative impression and 5 = positive impression. Black squares mark the means.

These results are consistent with the Durbin–Conover post hoc analyses reported above, showing that Text is the most preferred eHMI, while Auditory Alert is also preferred but with more mixed results compared to Text, whereas Purple Light and Eyes show clearly mixed preferences among participants. Self-rated experience of riding bicycles overall and in urban areas showed no significant relationships to overall impression of the eHMIs. Spearman’s rank-order correlation showed a significant negative correlation between participants’ self-rated experience with the self-driving buses and their overall impressions of the auditory alert, rs(25) = −0.397, p = 0.041. This indicates that participants with more experience of the self-driving buses tended to rate the auditory alert slightly lower, which is in line with the positive but somewhat mixed impressions of the auditory alert reported above. Participants’ self-rated experience with the self-driving buses showed no correlation with the other eHMIs. This is further explored in the qualitative analysis in Section 4.3.
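As a plausibility check of the reported correlation, the coefficient can be converted into a t statistic using the common approximation t = r·sqrt(df / (1 − r²)) with df = n − 2. This is a sketch of one standard way to test a Spearman coefficient; it is an assumption that the original analysis software used the same approximation. The critical value 2.060 is the two-sided 5% threshold of the t distribution with 25 degrees of freedom.

```python
import math


def spearman_t_statistic(r_s: float, n: int) -> float:
    """Approximate t statistic for a Spearman correlation with n paired ratings."""
    if n < 3 or not -1.0 < r_s < 1.0:
        raise ValueError("need n >= 3 and -1 < r_s < 1")
    df = n - 2
    return r_s * math.sqrt(df / (1.0 - r_s ** 2))


T_CRITICAL_DF25 = 2.060  # two-sided, alpha = 0.05, df = 25

t_value = spearman_t_statistic(-0.397, n=27)  # roughly -2.16
is_significant = abs(t_value) > T_CRITICAL_DF25
```

The statistic lies just beyond the critical value, which fits a p-value slightly below 0.05 as reported.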

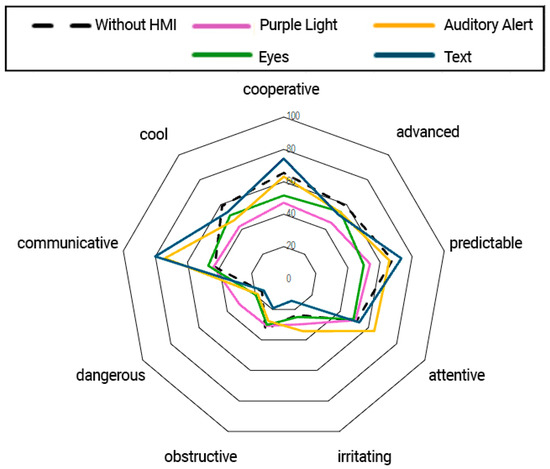

Figure 6 presents the results from the Kansei Engineering-inspired study conducted as part of this study. The graph depicts the average values for each of the tested eHMI concepts, with each colored line representing a unique “emotional fingerprint” for its respective concept. Upon analyzing the graph, several notable conclusions can be drawn.

Figure 6.

Emotional fingerprint: results of the Kansei Engineering-inspired evaluation.

Two concepts stand out prominently: the Text eHMI and the Auditory Alert. To compare how participants experienced the different eHMIs relative to the buses without an eHMI, composite average scores of the clearly positive (communicative, cooperative, attentive, and predictable) and clearly negative (dangerous, obstructive, and irritating) attitudes to the bus were calculated.
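The composite scores can be illustrated with a short sketch. The descriptor groupings follow the text, while the function name and the example ratings are hypothetical and are not data from the study; the descriptors "cool" and "advanced" are left out because they are not part of either composite.

```python
from statistics import mean
from typing import Mapping, Tuple

POSITIVE = ("communicative", "cooperative", "attentive", "predictable")
NEGATIVE = ("dangerous", "obstructive", "irritating")


def composite_scores(ratings: Mapping[str, float]) -> Tuple[float, float]:
    """Return (positive, negative) composite means of 0-100 slider ratings."""
    positive = mean(ratings[d] for d in POSITIVE)
    negative = mean(ratings[d] for d in NEGATIVE)
    return positive, negative


# Hypothetical slider ratings from one participant for one condition.
example_ratings = {
    "cooperative": 60, "cool": 40, "communicative": 80,
    "dangerous": 10, "obstructive": 20, "irritating": 30,
    "attentive": 70, "predictable": 50, "advanced": 55,
}
positive_score, negative_score = composite_scores(example_ratings)
```

For the hypothetical ratings above, the positive composite is 65 and the negative composite is 20.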

Looking at the positive attitudes investigated, as presented above, the Text-eHMI evoked more positive attitudes than the bus without an eHMI. The Auditory Alert also evoked more positive attitudes than the bus without an eHMI. A look at the emotional fingerprint (Figure 6) suggests that communicativeness accounted for most of the differences in positive attitudes towards the eHMIs in comparison to the buses without eHMI. A Friedman test showed that communicativeness was rated differently across the conditions, χ2(4) = 44.9, p < 0.001. Post hoc comparisons using the Durbin–Conover test with Bonferroni correction showed significantly higher ratings of communicativeness for Auditory Alert and Text-eHMI in pairwise comparison with all other conditions (p = 0.01 throughout).

As presented previously, the Text-eHMI was the only eHMI that evoked fewer negative attitudes than the bus without an eHMI. Overall, negative attitudes towards the buses remained relatively low regardless of which eHMI, if any, was present. However, a Friedman test showed that how dangerous the bus was perceived to be differed between the eHMIs, χ2(4) = 12.8, p = 0.012. Specifically, a Bonferroni-corrected Durbin–Conover test showed that the shuttle with Purple Light was experienced as more dangerous than with the Text-eHMI (p = 0.02). Predictability was also rated significantly differently across the eHMIs, χ2(4) = 23.5, p < 0.001. In particular, Eyes was experienced as less predictable than No eHMI (p = 0.01) and Text-eHMI (p = 0.01), as was Purple Light in comparison to Text-eHMI (p = 0.01).
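The p-values attached to the Friedman statistics can be checked by hand, because the chi-square upper-tail probability has a closed form for even degrees of freedom. The sketch below applies that formula to the three χ2(4) values reported in the text; the function name is hypothetical and the dictionary keys are labels chosen for this example.

```python
import math

def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X > x) of a chi-square variable with even df.

    For df = 2m: exp(-x/2) * sum_{i=0}^{m-1} (x/2)^i / i!
    """
    if df <= 0 or df % 2:
        raise ValueError("closed form only holds for positive even df")
    half = x / 2
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

# Friedman statistics as reported in the text, all with df = 4.
reported = {"communicativeness": 44.9, "dangerous": 12.8, "predictability": 23.5}
p_values = {name: chi2_sf_even_df(x, 4) for name, x in reported.items()}
for name, p in p_values.items():
    print(f"{name}: p = {p:.3g}")
```

For df = 4 the formula reduces to exp(−x/2)(1 + x/2), which gives roughly 0.012 for χ2 = 12.8 and values well below 0.001 for the other two statistics, in agreement with the reported results.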

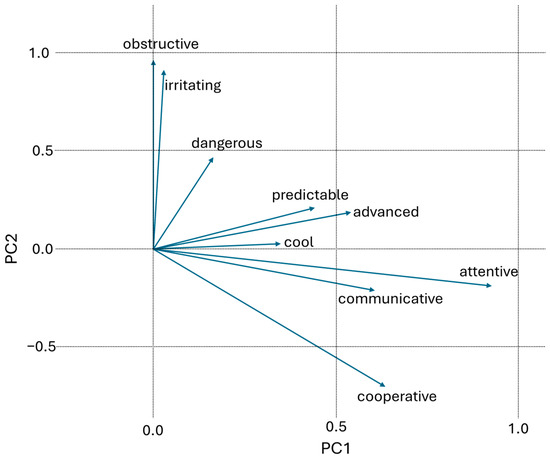

Further analysis of the collected data using Principal Component Analysis (PCA) with Varimax rotation reveals three distinct clusters of descriptors: Predictability, Endangerment, and Practicality. PCA is a statistical technique that reduces data complexity by identifying underlying components that explain the most variance. Varimax rotation enhances interpretability by rotating the components so that each descriptor loads strongly on few components, making clusters more distinct. In a Kansei Engineering context, PCA is primarily used to detect trends related to the above-mentioned dimensions of Osgood’s semantic space. In this context, a relatively small number of user responses is typically sufficient [43,46]. This clustering provides deeper insights into participants’ perceptions. Notably, predictability plays a crucial role in shaping trust: participants tend to perceive the automated bus as safe and reliable when they also find it predictable.
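To make the idea of Varimax rotation concrete, the following sketch rotates a two-component loading matrix and picks the angle that maximizes the raw varimax criterion (the summed variance of squared loadings per component). It is a simplified illustration under stated assumptions: the loadings are invented, only two components are handled, a grid search replaces the usual iterative algorithm, and no Kaiser normalization is applied. It does not reproduce the analysis in this study.

```python
import math

def rotate(loadings, theta):
    """Rotate two-component loadings (list of (a, b) pairs) by angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    return [(a * c + b * s, -a * s + b * c) for a, b in loadings]

def varimax_criterion(loadings):
    """Sum over components of the variance of the squared loadings."""
    p = len(loadings)
    total = 0.0
    for col in range(2):
        squared = [row[col] ** 2 for row in loadings]
        total += sum(v * v for v in squared) / p - (sum(squared) / p) ** 2
    return total

def varimax_2d(loadings, steps=900):
    """Grid-search the rotation angle in [0, 90) degrees that maximizes the criterion."""
    candidates = (k * (math.pi / 2) / steps for k in range(steps))
    best = max(candidates, key=lambda t: varimax_criterion(rotate(loadings, t)))
    return best, rotate(loadings, best)

# Invented loadings with a clean two-cluster structure (NOT study data),
# deliberately rotated by 30 degrees to mimic an unrotated PCA solution.
simple = [(0.8, 0.0), (0.7, 0.0), (0.9, 0.0), (0.0, 0.8), (0.0, 0.6), (0.0, 0.7)]
unrotated = rotate(simple, math.radians(30))

theta, rotated = varimax_2d(unrotated)
print(f"chosen rotation: {math.degrees(theta):.1f} degrees")
for a, b in rotated:
    print(f"{a:6.2f} {b:6.2f}")
```

After rotation each item loads on only one of the two components, which is the kind of separation that makes clusters in a loading plot easier to read. Columns may come out swapped or sign-flipped relative to the original structure, which does not affect interpretation.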

A closer examination of the clusters (see Figure 7) reveals that the descriptors “obstructive”, “dangerous”, and “irritating” form one group, directly opposing “cooperative” along the same principal component. This suggests a dichotomy in perception—participants tend to view the bus as either dangerous or cooperative, with little overlap. In contrast, “predictable” and “advanced” are positioned at a 90-degree angle to this dimension, indicating that perceptions of predictability are independent of the danger-cooperation axis. Meanwhile, descriptors like “communicative” and “attentive” form a third cluster positioned at a 45-degree angle, suggesting a semi-dependent relationship with both of the previously mentioned dimensions.

Figure 7.

Loading plot after PCA with Varimax rotation.

This reinforces the importance of predictability in fostering a sense of safety and trust in automated vehicle interfaces. By illustrating how perceptions of danger, functionality, and communication shape user experience, this analysis provides a more nuanced understanding of public acceptance of automated transport.
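The angular reading of the loading plot described above (opposing descriptors at roughly 180 degrees, independent ones at 90 degrees, and partly related ones at 45 degrees) can be sketched by computing the angle between two loading vectors. The coordinates below are invented to mirror that description and are not taken from Figure 7.

```python
import math

def angle_between(u, v):
    """Angle in degrees between two 2-D loading vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] to guard against floating-point overshoot.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

# Hypothetical loadings (NOT read from the figure).
loadings = {
    "dangerous": (0.8, 0.0),
    "cooperative": (-0.7, 0.0),
    "predictable": (0.0, 0.75),
    "communicative": (-0.5, 0.5),
}

pairs = [
    ("dangerous", "cooperative"),      # opposing ends of one component
    ("dangerous", "predictable"),      # independent dimensions
    ("communicative", "predictable"),  # partly related
]
for a, b in pairs:
    print(f"{a} vs {b}: {angle_between(loadings[a], loadings[b]):.0f} degrees")
```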

The following section presents a summary of 27 participants’ free-text responses for each eHMI, analyzed using what [47] call codebook thematic analysis to identify recurring patterns and themes for the respective eHMI.

4.1. Purple Light

Many respondents reported that the lights were weak and difficult to see, particularly as they were presented in daylight. The lights were not clearly visible either at a distance or at close range: “Difficult to see during the day; I would have liked to see the same test in an evening/night environment”. Several participants found the meaning conveyed by the lights unclear and unintuitive, as illustrated by the following quotations: “I do not understand what the purple light means”. “I did not think it was obvious what the purple lights were supposed to convey. It did not feel intuitive”.

4.2. Eyes

Participants thought that cyclists might have difficulty seeing the system’s “eyes”, particularly when approaching from behind, due to poor positioning and small size. “I couldn’t see the eyes as a cyclist”. Another opinion was that “It was hard to understand initially but might improve with experience”. Some found the system useful for conveying emotional feedback, but it required cyclists to adapt their behavior, which was not always clear or relevant. “It gave feedback on how the bus perceived the cyclist”. Some participants laughed and called it cute or sweet when it cried. It should also be taken into account that, in a university environment predominantly populated by students, some participants might deliberately attempt to trigger the ‘crying eyes’ display for amusement. Such behavior could have the opposite effect of that intended, leading to unnecessary braking events that may be uncomfortable for both the safety operator and the passengers. This was pointed out by two different participants while observing the eHMI.

4.3. Auditory Alert

The system was thought to be reassuring for cyclists and pedestrians, as auditory communication might help raise awareness of nearby automated shuttles. One respondent highlighted that it makes people realize when they are getting too close and described the sound as “clear and quite pleasant”. Participants noted that the system would need calibration to ensure the sound is noticeable without being disruptive, and raised concerns about its effectiveness in noisy settings and its potential to irritate nearby individuals. One participant pointed out that the alert came too late to be effective: “The sound came too late to be useful”. Some worried about the annoyance factor over time, and the possibility of cyclists not hearing the alert because of headphones was also noted.

4.4. Text

Of the four concepts presented, participants found the Text-eHMI most useful, pointing out that the system should be clear and easily recognizable, similar to the standard systems on regular buses. It should provide information about the shuttle’s destination and, ideally, include time indicators, which would enhance clarity. Familiarity with the area helps, but time indicators are still useful. There were also recommendations for signals about changes in speed or upcoming actions, so passengers can understand the shuttle’s maneuvers.

Additionally, the participants recommended that audio and text information be provided simultaneously to ensure that all users can access the necessary details. In general, the system’s interface resembled that of regular buses, which increased comfort and trust in the vehicle.

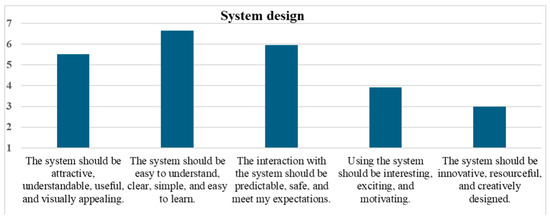

In the final question, participants were asked what they considered important in the design of an interaction system for automated shuttles (see Figure 8). They responded that the system needs to be easy to understand, simple, clear, and predictable. In contrast, qualities such as being creative, innovative, exciting, and motivating were ranked lower. This is in line with previous responses and favors the Auditory Alert and Text-eHMIs. This is discussed further in Section 5.

Figure 8.

Importance of system design features. 1 = not important at all, 7 = very important.

5. Discussion

The participants favored eHMI systems that were familiar and resembled features they had previously encountered in traffic. This preference may partly stem from ease of recognition, which enabled participants to understand the system’s planned movements effortlessly. The system’s clear and straightforward functionality, such as displaying the bus’s destination through text, is another probable explanation. The analyses showed that Text was clearly preferred, both in comparison to the other eHMIs and to the bus without an eHMI. This aligns with the findings of [17], who also reported higher preference and comprehensibility for text-based eHMI designs. Auditory Alert was also preferred over the Purple Light, Eyes and No eHMI designs, but did not differ from them in negative impressions. Participants with more experience with the self-driving buses reported a slightly lower overall impression of the Auditory Alert. Qualitative analysis of the interviews showed that the Auditory Alert has room for improvement in both the sound itself and its triggering mechanism. It was experienced as attentive but also irritating. Participants expressed a desire to hear the sound earlier, which would provide a preventive warning before they approached the bus closely enough for it to stop. This highlights the need for further exploration of sound concepts as eHMIs, particularly to enhance their effectiveness and intuitiveness. Nevertheless, auditory communication as an eHMI shows potential, in line with [21], even though the specific sound used in this study may not have been optimal.

The emotional fingerprint and statistical analysis showed that both Text and Auditory Alert were clearly perceived as the most communicative eHMIs. It is important to acknowledge that the Text- and Auditory Alert-eHMIs were conceptually different from each other, for instance in terms of perceptual modality and functional characteristics. This means that they are not necessarily competitors for the best future eHMI. They may have the potential to be used simultaneously, although this was not investigated in this study.

In our study, the eyes were difficult to interpret. It was unclear where they were looking, and their low level of detail made it challenging to recognize them as eyes. This likely contributed to the absence of the “cute” or empathetic effect that anthropomorphic eyes can provide [7]. Previous research has shown that eyes on vehicles can serve different communicative functions: [9] used eyes to establish eye contact with pedestrians, creating a sense of being seen, while [6] used eyes to indicate the intended driving direction of the vehicle.

What participants reported they would like to see or not see on AVs does not necessarily represent the best alternatives. This type of study cannot replace longitudinal studies that examine effects over time, and where people encounter shuttles equipped with an eHMI in real traffic. However, it can provide an indication of the types of eHMIs that should be explored further. The participants found the systems they were familiar with to be more useful and easier to understand. It remains unclear whether this is due to the more innovative eHMI systems having a function that is too abstract or simply because the participants were not accustomed to them. Several participants also highlighted the importance of turn signals, a feature not included in the scenarios. For instance, one participant stated that if the shuttle had used its turn signal before leaving the stop and entering the bike lane, its behavior would have resembled that of a “regular bus”, making it easier for individuals to understand how to react appropriately.

We acknowledge that the sample size of 28 participants is relatively small. However, such sample sizes are not uncommon in previous Kansei Engineering studies. While the data collected may not yield statistically robust results, it is often sufficient to support meaningful insights during early-stage product development. The eHMI concepts evaluated in this study fall within that category, making the findings relevant and informative despite the limited sample size.

In this study, all participants experienced interactions with the shuttle while cycling in the real world, but the eHMI systems were observed exclusively with participants in their role as pedestrians in the VR environment. The systems were partially designed to function for other road users, such as drivers and bus operators who share the road with the shuttles. However, the systems are not necessarily intended to communicate with operators of larger vehicles. For instance, sound effects may be challenging to detect from within a noisy vehicle. Similarly, the eyes may be difficult to notice once the vehicle has already passed. Additionally, projected purple marking on the ground may be particularly hard to see for drivers of vehicles with bright headlights.

The purple light projection drew criticism; for instance, it was rated as more dangerous than the Text-eHMI. Visibility would probably have been better if the scenario had not taken place in daylight, as the light projected on the ground turned out to be difficult to discern in daylight conditions. However, it is not practical to employ different communication strategies depending on weather and light conditions. The purple light indicates the area covered by the bus’s sensors, allowing other road users to avoid entering that zone. The higher the speed, the larger the programmed safety area becomes, and the light becomes progressively weaker as the safety zone increases in size. At the speed at which the bus travels along the bicycle lane (9 km/h), the sensor range in front of the bus is approximately 2 m. No lighting solution has yet been identified that can effectively illuminate this distance in daylight. At 13 km/h, the sensor range extends to approximately 4 m. According to [48], visible light consists of wavelengths with varying detectability. Purple has the shortest wavelength and is the most difficult to perceive, particularly when competing with other light sources. This study therefore cannot establish whether light projection is a suitable eHMI, but it does indicate that it is a challenging eHMI to implement. Ref. [14] reported similar findings: projected light was difficult to perceive, particularly from a distance, and could potentially be more distracting than helpful, because the projection drew attention away from assessing the vehicle’s speed and the surrounding traffic situation. These findings support our results, indicating that projected light as an eHMI not only faces practical visibility limitations but may also introduce cognitive distractions that could reduce safety rather than enhance it.

It is essential to establish a standard for potential eHMIs. For instance, if an eHMI with ground projection were to be used, one might reasonably assume that a vehicle without such a projection does not require maintaining a safe distance. However, this does not mean the vehicle is inherently safe. Therefore, it may be more prudent first to rely on other communication methods to manage interactions between VRUs and AVs. Several participants in the study highlighted that indicators and brake lights should be more visible and could serve as effective communication tools. Predictability, as noted from the PCA, was also important for people to perceive the shuttle as safe and trustworthy. Hence, increasing the predictability for VRUs to understand what to expect from the shuttle in the next few moments is crucial and would enhance interaction.

To determine whether a concept functions effectively in practice, longitudinal studies on the use of eHMIs are necessary. These studies are crucial for understanding whether the concept works, whether there is a learning curve, and how it facilitates interactions. However, such studies require a well-designed eHMI to ensure clear insights can be obtained. The timeline for this development remains uncertain. Furthermore, current laws and regulations in Sweden make it impossible to test lighting or dynamic eHMIs in traffic environments [49].

Overall, functionality was rated higher than creativity by the participants. This is important to note since eHMI solutions should work for all the intended users, and an external HMI that functions for people who have difficulties learning new things would probably also be applicable to a larger group of people.

6. Conclusions

The purpose of this exploratory study was to examine how four divergent eHMI design concepts are perceived by road users external to automated shuttles, with the goal of enhancing communication towards improving traffic flow. The purpose has been accomplished by means of a VR simulation setup based on a real-world shuttle model.

The findings emphasize that clarity and ease of understanding are crucial features for eHMI systems communicating shuttle maneuvers. Results indicated a preference for text-based eHMIs and auditory alerts over those using purple light or eyes. Future research should further explore sound and text-based eHMIs to improve traffic flow. Future research should also focus on long-term studies of carefully selected eHMIs in real traffic conditions to advance standardization efforts for communicative external HMIs.

Supplementary Materials

The following supporting information can be downloaded at: https://drive.google.com/drive/folders/1C8isTI5O0rmSGxP2LObpwjuLtxW979ez?usp=sharing (accessed on 22 October 2025): PurpleLight_sound (Purple Light concept), Eyes (Eyes concept), Auditory alert (Auditory Alert concept), Text (Text concept).

Author Contributions

Conceptualization, M.W.; methodology, M.W., S.S., S.N. and M.F.; formal analysis, M.F. and S.S.; investigation, M.W.; resources, M.W., S.S., S.N. and M.F.; data curation, M.W. and M.F.; writing—original draft preparation, M.W.; writing—review and editing, S.N., M.F., S.S. and M.W.; supervision, S.S., M.F. and S.N.; All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Vinnova, registration number 2023-01217.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Swedish Ethical Review Authority (2024-04594-01, 26 August 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data will be available upon request from the corresponding author.

Acknowledgments

This article is a revised and expanded version of a paper entitled Evaluation of External HMIs for Automated Shuttles Based on a Real-World Platform, which was presented at HUMANIST Conference 2025, Chemnitz, Germany, 28 August 2025. Our heartfelt thanks go to Rasmus Samuelsson, Hampus Ländin, and Emil Andersson, along with their project group, for their exceptional contributions in designing the eHMI systems tailored to the safety operators’ needs, as well as for creating the digital replica of the campus. Additionally, we extend our appreciation to the safety operators and all participants for their valuable involvement. We would also like to express our gratitude to Marius Brudvik Norell for his assistance with data collection and for preparing all survey data from paper format to our analysis software.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AV | Automated Vehicle |
| eHMI | External Human-Machine Interface |
| PCA | Principal Component Analysis |
| VR | Virtual Reality |
| VRU | Vulnerable Road User |

References

- Rasouli, A.; Tsotsos, J.K. Autonomous vehicles that interact with pedestrians: A survey of theory and practice. IEEE Trans. Intell. Transp. Syst. 2019, 21, 900–918.

- Habibovic, A.; Lundgren, V.M.; Andersson, J.; Klingegård, M.; Lagström, T.; Sirkka, A.; Fagerlönn, J.; Edgren, C.; Fredriksson, R.; Krupenia, S.; et al. Communicating Intent of Automated Vehicles to Pedestrians. Front. Psychol. 2018, 9, 1336.

- Carmona, J.; Guindel, C.; Garcia, F.; de la Escalera, A. eHMI: Review and Guidelines for Deployment on Autonomous Vehicles. Sensors 2021, 21, 2912.

- de Clercq, K.; Dietrich, A.; Núñez Velasco, J.P.; de Winter, J.; Happee, R. External Human-Machine Interfaces on Automated Vehicles: Effects on Pedestrian Crossing Decisions. Hum. Factors J. Hum. Factors Ergon. Soc. 2019, 61, 1353–1370.

- Nissan Motor Corporation. Nissan IDS Concept: Nissan’s Vision for the Future of EVs and Autonomous Driving; Nissan Motor Corporation: Franklin, TN, USA, 2015.

- Gui, X.; Toda, K.; Seo, S.H.; Chang, C.M.; Igarashi, T. “I am going this way”: Gazing Eyes on Self-Driving Car Show Multiple Driving Directions. In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seoul, Republic of Korea, 17–20 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 319–329.

- Wang, Y.; Wijenayake, S.; Hoggenmüller, M.; Hespanhol, L.; Worrall, S.; Tomitsch, M. My eyes speak: Improving perceived sociability of autonomous vehicles in shared spaces through emotional robotic eyes. Proc. ACM Hum. Comput. Interact. 2023, 7, 214.

- Chang, C.M.; Toda, K.; Gui, X.; Seo, S.H.; Igarashi, T. Can eyes on a car reduce traffic accidents? In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seoul, Republic of Korea, 17–20 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 349–359.

- Gui, X.; Toda, K.; Seo, S.H.; Eckert, F.M.; Chang, C.M.; Chen, X.A.; Igarashi, T. A field study on pedestrians’ thoughts toward a car with gazing eyes. In Proceedings of the Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–7.

- Schlackl, D.; Weigl, K.; Riener, A. eHMI visualization on the entire car body: Results of a comparative evaluation of concepts for the communication between AVs and manual drivers. In Proceedings of the Mensch und Computer, Magdeburg, Germany, 6–9 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 79–83.

- Izquierdo, R.; Alonso, J.; Benderius, O.; Sotelo, M.Á.; Fernández Llorca, D. Pedestrian and passenger interaction with autonomous vehicles: Field study in a crosswalk scenario. Int. J. Hum. Comput. Interact. 2025, 41, 9587–9605.

- Guo, F.; Lyu, W.; Ren, Z.; Li, M.; Liu, Z. A Video-Based, Eye-Tracking Study to Investigate the Effect of eHMI Modalities and Locations on Pedestrian–Automated Vehicle Interaction. Sustainability 2022, 14, 5633.

- Mahadevan, K.; Somanath, S.; Sharlin, E. Communicating awareness and intent in autonomous vehicle-pedestrian interaction. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–12.

- Eisma, Y.B.; van Bergen, S.; Ter Brake, S.M.; Hensen, M.T.T.; Tempelaar, W.J.; de Winter, J.C. External human–machine interfaces: The effect of display location on crossing intentions and eye movements. Information 2019, 11, 13.

- Nguyen, T.T.; Holländer, K.; Hoggenmueller, M.; Parker, C.; Tomitsch, M. Designing for projection-based communication between autonomous vehicles and pedestrians. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 284–294.

- Tran, T.T.M.; Parker, C.; Hoggenmüller, M. Exploring the Impact of Interconnected External Interfaces in Autonomous Vehicles on Pedestrian Safety and Experience. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–17.

- Bazilinskyy, P.; Dodou, D.; De Winter, J. Survey on eHMI concepts: The effect of text, color, and perspective. Transp. Res. F Traffic Psychol. Behav. 2019, 67, 175–194.

- Chen, X.; Li, X.; Hou, Y.; Yang, W.; Dong, C.; Wang, H. Effect of eHMI-equipped automated vehicles on pedestrian crossing behavior and safety: A focus on blind spot scenarios. Accid. Anal. Prev. 2025, 212, 107915.

- Hess, S.; Hensch, A.C.; Beggiato, M.; Krems, J.F. Effects of rearward countdown timers at highly automated shuttle buses to announce departing. Transp. Res. F Traffic Psychol. Behav. 2026, 116, 103379.

- Krefting, I.; Trende, A.; Unni, A.; Rieger, J.; Luedtke, A.; Fränzle, M. Evaluation of graphical human-machine interfaces for turning manoeuvres in automated vehicles. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 77–80.

- van Laack, A.; Torschmied, A.; Tuzar, G.D. Immersive Audio HMI to Improve Situational Awareness. In Mensch und Computer 2015—Workshopband; De Gruyter Oldenbourg: Berlin, Germany, 2015; pp. 501–508.

- Pelikan, H.R.M.; Jung, M.F. Designing Robot Sound-In-Interaction: The Case of Autonomous Public Transport Shuttle Buses. In Proceedings of the HRI ’23: 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; pp. 172–182.

- European Commission. Electric and Hybrid Cars: New Rules on Noise Emitting to Protect Vulnerable Road Users. 3 July 2019. Available online: https://single-market-economy.ec.europa.eu/news/electric-and-hybrid-cars-new-rules-noise-emitting-protect-vulnerable-road-users-2019-07-03_en (accessed on 13 October 2025).

- Lehet, D.; Novotný, J. Assessing the feasibility of using eye-tracking technology for assessment of external HMI. In Proceedings of the 2022 Smart City Symposium Prague (SCSP), Prague, Czech Republic, 26–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Merat, N.; Louw, T.; Madigan, R.; Wilbrink, M.; Schieben, A. What externally presented information do VRUs require when interacting with fully Automated Road Transport Systems in shared space? Accid. Anal. Prev. 2018, 118, 244–252.

- Mirnig, A.G.; Gärtner, M.; Fröhlich, P.; Wallner, V.; Dahlman, A.S.; Anund, A.; Pokorny, P.; Hagenzieker, M.; Bjørnskau, T.; Aasvik, O.; et al. External communication of automated shuttles: Results, experiences, and lessons learned from three European long-term research projects. Front. Robot. AI 2022, 9, 949135.

- Kalda, K.; Pizzagalli, S.-L.; Soe, R.-M.; Sell, R.; Bellone, M. Language of Driving for Autonomous Vehicles. Appl. Sci. 2022, 12, 5406.

- Larsson, S.; Sjörs Dahlman, A. I thought it was a logo: A co-simulation study on interaction between drivers and pedestrians and HMI. In Proceedings of the 12th Young Researchers Seminar, Bergisch Gladbach & Cologne, Germany, 3–5 June 2025.

- Dey, D.; Matviienko, A.; Berger, M.; Pfleging, B.; Martens, M.; Terken, J. Communicating the intention of an automated vehicle to pedestrians: The contributions of eHMI and vehicle behavior. IT Inf. Technol. 2021, 63, 123–141.

- Serrano, S.M.; Izquierdo, R.; Daza, I.G.; Sotelo, M.A.; Llorca, D.F. Digital twin in virtual reality for human-vehicle interactions in the context of autonomous driving. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; IEEE: Piscataway, NJ, USA, 2024; pp. 590–595.

- Pelikan, H. Why Autonomous Driving Is So Hard: The Social Dimension of Traffic. In Proceedings of the HRI ’21 Companion, Boulder, CO, USA, 8–11 March 2021; IEEE Computer Society: Los Alamitos, CA, USA, 2021; pp. 81–85, ISBN 9781450382908.

- Thellman, S.; Babel, F.; Ziemke, T. Cycling with Robots: How Long-Term Interaction Experience with Automated Shuttle Buses Shapes Cyclist Attitudes. In Proceedings of the Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, HRI 2024 Companion, Boulder, CO, USA, 11–15 March 2024; pp. 1043–1047.

- Flötteröd, Y.-P.; Pereira, I.; Olstam, J.; Bieker-Walz, L. Investigating the behaviors of cyclists and pedestrians under automated shuttle operation. In Proceedings of the SUMO User Conference 2022, Virtual Event, 9–11 May 2022; TIB Open Publishing (Technische Informationsbibliothek): Hannover, Germany, 2022; Volume 3, pp. 69–82.

- SAE International. SAE J3016 Update: Updates to SAE’s Levels of Driving Automation; SAE International: Warrendale, PA, USA, 2021; Available online: https://www.sae.org/blog/sae-j3016-update (accessed on 30 January 2025).

- Novakazi, F.; Johansson, M.; Strömberg, H.; Karlsson, M. Levels of What? Investigating Drivers’ Understanding of Different Levels of Automation in Vehicles. J. Cogn. Eng. Decis. Mak. 2021, 15, 116–132.

- Transportstyrelsen. Regler för Motorredskap. 2024. Available online: https://www.transportstyrelsen.se/sv/vagtrafik/fordon/fordonsregler/regler-for-olika-fordonsslag/motorredskap/ (accessed on 21 October 2025).

- Swedish Government. Förordning (2017:309) om Försöksverksamhet Med Automatiserade Fordon. 2017. Available online: https://www.riksdagen.se/sv/dokument-och-lagar/dokument/svensk-forfattningssamling/forordning-2017309-om-forsoksverksamhet-med_sfs-2017-309/ (accessed on 12 October 2025).

- Weidel, M.; Nygårdhs, S.; Forsblad, M.; Schütte, S. Evaluation of External HMIs for Automated Shuttles Based on a Real-World Platform. In Proceedings of the HUMANIST Conference 2025, Technische Universität Chemnitz. Chemnitz, Germany, 27–29 August 2025.

- Top Tree. The Importance of Tree Safety Inspections: A Guide for Property Owners. 2022. Available online: https://www.toptreesafety.com/new_detail/nid/74107.html (accessed on 2 January 2025).

- Werner, A. New colours for autonomous driving: An evaluation of chromaticities for the external lighting equipment of autonomous vehicles. Colour Turn 2018.

- Wang, Y.; Xue, Z.; Li, J.; Jia, S.; Yang, B. Human-Machine Interaction (HMI) Design for Intelligent Vehicles: From Human Factors Theory to Design Practice; Springer Nature: Berlin/Heidelberg, Germany, 2024.

- Schömbs, S.; Klein, J.; Roesler, E. Feeling with a robot—The role of anthropomorphism by design and the tendency to anthropomorphize in human-robot interaction. Front. Robot. AI 2023, 10, 1149601.

- Nagamachi, M. Kansei engineering: A new ergonomic consumer-oriented technology for product development. Int. J. Ind. Ergon. 1995, 15, 3–11.

- Osgood, C.E.; Suci, G.J.; Tannenbaum, P.H. The measurement of meaning. In Semantic Differential Technique—A Source Book; Osgood, C.E., Snider, J.G., Eds.; University of Illinois Press: Champaign, IL, USA, 1957.

- Anund, A.; Larsson, K.; Weidel, M.; Nygårdhs, S.; Hardestam, H.; Monstein, C.; Skogsmo, I.; Bröms, P. Autonoma Elektrifierade bussar: Sammanlagda Erfarenheter Med Fokus på Användare. Statens väg-och Transportforskningsinstitut Website. 2023. Available online: https://vti.diva-portal.org/smash/get/diva2:1775817/FULLTEXT01.pdf (accessed on 22 October 2025).

- Schütte, S.; Mohd Lokman, A.; Coleman, S.; Marco-Almagro, L. Unlocking Emotions in Design: A Comprehensive Guide to Kansei Engineering; BoD-Bok on Demand: Stockholm, Sweden, 2023.

- Braun, V.; Clarke, V. One size fits all? What counts as quality practice in (reflexive) thematic analysis? Qual. Res. Psychol. 2021, 18, 328–352.

- NASA. Visible Light. NASA Science. 2010. Available online: https://science.nasa.gov/ems/09_visiblelight/ (accessed on 2 January 2025).

- Swedish Transport Agency. TSFS 2013:63—Road Traffic: Regulations and General Advice on Cars and Trailers Towed by Cars; Swedish Transport Agency: Norrköping, Sweden, 2013; pp. 121–149. Available online: https://www.transportstyrelsen.se/tsfs/TSFS%202013_63.pdf (accessed on 13 October 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).