Abstract

This study reviews obstacle detection technologies in vegetation for autonomous vehicles or robots. Autonomous vehicles used in agriculture and as lawn mowers face many environmental obstacles that are difficult for vehicle sensors to recognize. This review provides information on choosing appropriate sensors to detect obstacles through vegetation, based on experiments carried out in different agricultural fields. The experimental setups from the literature consist of sensors placed in front of obstacles, including a thermal camera; red, green, blue (RGB) camera; 360° camera; light detection and ranging (LiDAR); and radar. These sensors were used either in combination or individually on agricultural vehicles to detect objects hidden inside the agricultural field. The thermal camera successfully detected hidden objects, such as barrels, human mannequins, and humans, as did LiDAR in one experiment. The RGB camera and stereo camera were less efficient at detecting hidden objects than at detecting protruding objects. Radar detects hidden objects easily but lacks resolution. Hyperspectral sensing systems can identify and classify objects, but they require large amounts of storage. To obtain clearer and more robust data on hidden objects in vegetation and under extreme weather conditions, further experiments combining active and passive sensors should be performed under various climatic conditions.

1. Introduction

Autonomous guided vehicles (AGVs) are widely used for different applications, such as military tasks, disaster recovery during natural calamities for safety operations, astronomy, lawn mowing, and agriculture. To improve workflow, optimize functionality, and reduce manual labor, AGVs need precise route plans guided by advanced sensing technology. However, the biggest challenges for these systems today are sensing the surrounding environment and applying the detected information to control vehicle motion. The environmental problems for AGVs include navigating through off-road terrain while sensing a variety of ferrous and non-ferrous materials, coping with illumination effects such as underexposure, overexposure, and shadow, and working in bad weather conditions []. Detecting organic obstacles such as tall grass, tufts, and small bushes in order to find a traversable path is difficult for AGVs. In addition to organic obstacles, camouflaged animals in the field are endangered when AGVs are working, as demonstrated in previous research []. Furthermore, on bumpy and dirt roads, AGVs should constantly scan their surroundings to determine a traversable path based on the size of bumps (positive obstacles) and holes (negative obstacles) []. In general, AGVs should efficiently classify different obstacles (organic obstacles, positive and negative obstacles, hidden obstacles, etc.) to navigate through a field, which remains a challenge. To help AGVs classify different obstacles using various sensors and avoid potential risks, this study aims to identify suitable sensors for AGVs by reviewing the latest technologies.

The advanced spatial sensing systems (including active and passive sensors) equipped on AGVs have the potential to detect all kinds of obstacles on the route. To navigate reliably through vegetation, it is necessary to find suitable sensors that are efficient, safe, robust, and cost-competitive, that work in all weather and light conditions, and that identify obstacles of various material compositions within an acceptable processing time. Sensor selection depends on the specific application and environmental conditions. The following section illustrates the importance of the electromagnetic spectrum for sensor selection.

2. Sensors and the Electromagnetic Spectrum

To detect obstacles through vegetation, it is important to know the range of the electromagnetic spectrum relevant to vegetation. Because plants use light for photosynthesis, the range used for their remote sensing spans the ultraviolet (UV) (10–380 nm), visible (450–750 nm), and infrared (850–1700 nm) spectra [].

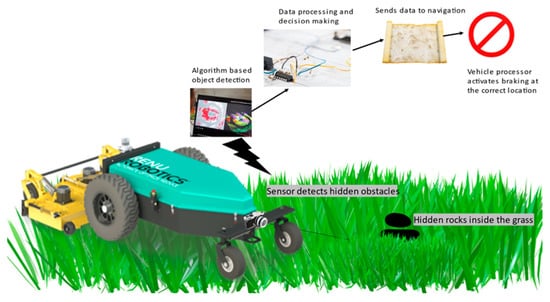

Based on the electromagnetic spectrum range for detecting plants, active and passive sensors are used for detection. Passive sensors detect electromagnetic radiation emitted by, or light reflected from, objects. They work in the visible, infrared, thermal infrared, and even microwave segments. Unlike passive sensors, active sensors have their own energy source: they emit pulses of energy and receive the reflected energy to detect objects. Typical active sensors include LiDAR, which emits laser light, and radar, which operates in the radio wave segment. Along with these sensors, position estimation sensors such as accelerometers, gyroscopes, and the global positioning system (GPS) [,] are needed to track the vehicle’s location, orientation, and velocity. The information from the position estimation sensors is further needed for synchronizing and registering subsequent frames from the imaging sensors. Tight integration of the position solution with object detection is needed if object persistence principles are used in the object detection algorithms. As shown in Figure 1, a passive sensor detects hidden objects in the field, such as rocks, and passes the data to the position estimation sensor, which guides the vehicle in the right direction without damaging the vehicle.

Figure 1. Concept of a self-driving vehicle in the field.

The sensors allow self-driving vehicles such as AGVs to detect obstacles while generating large amounts of sensing data, including point- and pixel-based data. Processing those data is a challenge. Popular data-processing methods focus on pointwise and pixelwise classification, commonly referred to as semantic segmentation, which serves as a generic representation that allows for subsequent clustering, tracking, or fusion with other modalities. Semantic segmentation can first be used to separate objects from the background [,]. Obstacle avoidance can then be performed using a 2D or 3D bounding box to describe object location, trajectory, and size. Vehicle motion behavior can further distinguish passable (traversable) obstacles from non-passable (non-traversable) obstacles.
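As a minimal sketch of how a pixelwise segmentation result can be reduced to a 2D bounding box, the following example scans a small label image. The label values (0 for background, 1 for obstacle), the function name `mask_to_bbox`, and the toy image are hypothetical and are not taken from the cited works.

```python
from typing import List, Optional, Tuple


def mask_to_bbox(mask: List[List[int]], label: int) -> Optional[Tuple[int, int, int, int]]:
    """Return (row_min, col_min, row_max, col_max) of all pixels equal to `label`.

    Returns None when the label does not occur in the mask.
    """
    rows = [r for r, row in enumerate(mask) if label in row]
    if not rows:
        return None
    cols = [c for row in mask for c, value in enumerate(row) if value == label]
    return min(rows), min(cols), max(rows), max(cols)


# Toy 5x6 label image: 0 = background (vegetation), 1 = obstacle (assumed labels).
segmentation = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

bbox = mask_to_bbox(segmentation, label=1)
print(bbox)
```

In practice, the box would be computed per connected component and tracked over time; this sketch treats all pixels of one label as a single object.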

2.1. Passive Sensors

Passive sensors measure the solar electromagnetic energy reflected from a surface in the presence of light [,]. These sensors do not have their own source of light, and hence their performance, except for that of thermal sensors, degrades at night or in poor illumination conditions. These sensors include cameras and computer vision technology that measures the distance of an object by receiving information about the position of the object []. Stereo cameras and RGB cameras detect protruding objects during the day. Thermal cameras work well in all light conditions. The following sections provide details on the passive sensors that may be used for obstacle detection under vegetation.

2.1.1. Stereo Cameras

There are two types of systems for passive sensing: monovision systems and stereovision systems. In a monovision system, one camera is used to estimate distance based on reference points in the camera field of view. The drawbacks of the monovision method lie in estimating distances, recognizing the detected objects, and the complexity of the algorithm needed to classify object categories while matching the real dimensions of objects in different positions []. A stereovision system uses stereoscopic ranging techniques to calculate distance. It is effective for depth sensing because it uses two cameras together and computes distance with high accuracy []. The depth estimate is constrained by the baseline, that is, the distance between the two cameras. A short baseline results in limited depth accuracy, whereas wide-baseline cameras provide better depth accuracy but result in partial loss of spatial data and frequent occlusions [].
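The baseline trade-off can be made concrete with the standard rectified pinhole stereo model. This is a textbook relation assumed here for illustration, not a formula taken from the cited works; the symbols $f$ (focal length in pixels), $B$ (baseline), $d$ (disparity in pixels), and $Z$ (depth) are introduced only for this sketch.

```latex
Z = \frac{f\,B}{d},
\qquad
\frac{\partial Z}{\partial d} = -\frac{f\,B}{d^{2}}
\;\;\Longrightarrow\;\;
|\delta Z| \approx \frac{Z^{2}}{f\,B}\,|\delta d|
```

Under this model, for a fixed disparity error $\delta d$, the depth error grows with the square of the distance and shrinks as the baseline $B$ increases, which is consistent with a wide baseline giving better depth accuracy.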

A stereo camera provides both depth information and a 2D color image. Zaarane et al.’s [] method starts by capturing the scene with both cameras. A vehicle detection algorithm (obstacle detection) is applied to one image, and a stereo matching algorithm is applied to the other to match the detected vehicle (obstacle) []. The horizontal centroids of the object in the two images are then used to calculate the distance to the obstacle.
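The centroid-based ranging step can be sketched as follows, assuming rectified cameras with a known focal length (in pixels) and baseline (in meters). The function name, parameter names, and numeric values are hypothetical, and the sketch is not the implementation of Zaarane et al.; it only illustrates how a horizontal centroid offset translates into a distance.

```python
def stereo_distance_m(x_left_px: float, x_right_px: float,
                      focal_px: float, baseline_m: float) -> float:
    """Distance to an object from the horizontal centroids of its two detections.

    Assumes rectified images, so the disparity is the horizontal offset between
    the object's centroid in the left image and in the right image.
    """
    disparity_px = x_left_px - x_right_px
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for an object in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 700 px focal length, 12 cm baseline, 14 px centroid offset.
distance = stereo_distance_m(x_left_px=420.0, x_right_px=406.0,
                             focal_px=700.0, baseline_m=0.12)
print(f"estimated distance: {distance:.2f} m")
```

With these assumed values, the estimate is about 6 m. A one-pixel error in either centroid changes the result noticeably at this range, which is why centroid matching quality matters.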

Protruding objects and visually camouflaged animals can be identified with both the depth information and the 2D imaging data. In this way, depth-aware algorithms can be created based on the different perceptible characteristics (e.g., color, texture, and shape) of the object and the depth data. The drawback of using a stereo camera is that image quality and obstacle detection are degraded by poor illumination and bad weather conditions.

2.1.2. RGB Cameras

An RGB camera captures the visible segment of the electromagnetic spectrum to provide information on the identified object’s color, texture, and shape in high resolution at a low cost. With RGB cameras, non-protruding (non-exposed) objects in tall grass are noticeable only to some extent. Camera performance, however, is affected by bad weather conditions (e.g., fog, snow, and rain) and by illumination conditions such as low light, night conditions, and shadows. An RGB camera provides only 2D image data and cannot provide depth information on objects in 3D space. To compensate for the loss of depth information and obtain the positions of surrounding obstacles, visual simultaneous localization and mapping (SLAM) [,] and structure from motion (SFM) [,] have been developed []. SLAM and SFM use multi-view geometry to estimate motion (rotation and translation) and reconstruct the unknown surrounding environment. However, their performance is limited in large scenes or under quick movements.

2.1.3. Thermal Infrared Cameras

All objects at a temperature above absolute zero emit infrared (or thermal) radiation. Infrared radiation lies within the wavelength range of 0.7–1000 μm. The mid-wavelength and long-wavelength infrared regions are often referred to as thermal infrared (TIR). TIR cameras use a detector that is sensitive to either mid-wave infrared (MWIR) (3–5 μm) or long-wave infrared (LWIR) (7–14 μm) radiation. Objects at temperatures of approximately 190–1000 K emit radiation in this spectral range []. Thermal cameras detect the radiation emitted by target objects to form thermal images, which show the heat rather than the visible light of objects. They continuously provide daytime and nighttime thermal image data for passive terrain perception under any light, as well as in foggy conditions. Rankin et al. [] introduced a TIR camera to provide imagery over the entire 24-h cycle.
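To relate object temperature to the MWIR and LWIR bands quoted above, the following sketch uses Wien's displacement law, which gives the wavelength of peak blackbody emission. Wien's law is brought in here as a standard physical relation and is not part of the cited studies; the function names and example temperatures are hypothetical. Real objects emit over a broad band around the peak, so the peak wavelength is only a rough indicator of which detector band is most suitable.

```python
WIEN_CONSTANT_UM_K = 2897.771955  # Wien displacement constant in micrometer-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (in micrometers) of peak blackbody emission at a given temperature."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_CONSTANT_UM_K / temperature_k


def tir_band(wavelength_um: float) -> str:
    """Classify a wavelength against the MWIR (3-5 um) and LWIR (7-14 um) bands in the text."""
    if 3.0 <= wavelength_um <= 5.0:
        return "MWIR"
    if 7.0 <= wavelength_um <= 14.0:
        return "LWIR"
    return "outside MWIR/LWIR"


# Example temperatures (assumed): a body near ambient temperature and a hot object.
for temperature in (300.0, 700.0):
    peak = peak_wavelength_um(temperature)
    print(f"{temperature:.0f} K -> peak at {peak:.2f} um ({tir_band(peak)})")
```

An object near 300 K peaks at roughly 9.7 μm, inside the LWIR band, which is one reason LWIR detectors are a common choice for detecting humans and animals against cooler vegetation.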

An emissivity signature is a potential way to distinguish vegetation from objects or materials such as soil or rock. Each object has a specific emissivity in each spectral band at a given temperature, so objects cluster in different regions of an infrared color space according to their emissivity, making it possible to separate them into several classes. Vegetation and soil/rock materials have sufficiently different emissivity in both the broad MWIR and LWIR bands, as illustrated in a study conducted by researchers from the California Institute of Technology, Jet Propulsion Laboratory (JPL) [].

2.1.4. Hyperspectral Sensing

Hyperspectral sensing is the technology of obtaining information about an object’s chemical composition from the energy it emits. The process involves dividing the electromagnetic spectrum into many narrow bands to read the spectral signatures of the materials in the generated image. This makes it easy to identify objects in a scene []. To detect a targeted object, its spectral signature is obtained and matched against the hyperspectral image using spectral signature matching algorithms.
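One widely used signature matching rule is the spectral angle between a pixel spectrum and a reference spectrum; it is shown here only as an illustrative stand-in, since the text does not say which matching algorithm the cited work used. The reference library, band values, and function names below are hypothetical.

```python
import math
from typing import Dict, Sequence


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two spectra; small angles mean similar spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)


def best_match(pixel: Sequence[float], library: Dict[str, Sequence[float]]) -> str:
    """Return the library entry whose spectrum has the smallest angle to the pixel."""
    return min(library, key=lambda name: spectral_angle(pixel, library[name]))


# Hypothetical five-band reflectance signatures (values invented for illustration).
library = {
    "vegetation": [0.05, 0.08, 0.04, 0.45, 0.50],
    "rock": [0.20, 0.22, 0.25, 0.28, 0.30],
}
# A brightly lit vegetation pixel: roughly twice the reference, with small noise.
pixel = [0.10, 0.16, 0.09, 0.88, 1.02]

print(best_match(pixel, library))
```

Because the angle ignores overall brightness, the measure is relatively insensitive to uniform illumination changes, although it does not remove the storage and band-selection costs of hyperspectral data.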

Kwon et al. [] provided the overall idea of using hyperspectral sensing technology for obstacle detection in military applications. Three hyperspectral sensors with different operating spectral ranges were used to provide spectral data to the hyperspectral detection algorithms: a dual-band hyperspectral imager (DBHSI); an acousto-optical tunable filter imager called SECOTS; and a visible to near-infrared spectral imager, SOC-700. DBHSI operates in the mid- and long-wave infrared bands and collects 128 bands of images simultaneously with a dual-color focal plane array to obtain hyperspectral images in two separate infrared spectral regions. SECOTS and SOC-700 are small, portable hyperspectral imagers operating in the visible to near-infrared bands. Newly developed hyperspectral anomaly and target detection algorithms were applied to the generated hyperspectral images and detected objects such as military vehicles, barbed wire, and a chain-link fence []. The developed hyperspectral sensing system is expected to help unmanned guided vehicles navigate safely in unknown areas.

It is possible to detect camouflaged animals using this system. However, it requires more data storage and adds computational complexity to object identification because of hyperspectral band selection. Gomez [] found that taking advantage of sensors such as radar and LiDAR, in addition to hyperspectral imaging, is advisable for developing remote sensing program strategies to produce specific application products.

2.2. Active Sensors

Active sensing methods measure the distance to objects by sending pulse signals to a target and receiving the signal that bounces back. They are generally based on computing the time of flight (ToF) of laser, ultrasound, or radio signals to measure and search for objects []. LiDAR and radar are both active range sensors that provide distance measurements useful for detecting obstacles based on geometry, whereas passive camera sensors (e.g., color, thermal) provide visual cues useful for discriminating object classes.
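The ToF principle reduces to halving the round-trip path travelled by the pulse. The sketch below shows this for light or radio pulses and for ultrasound; the speed of sound value assumes air at about 20 °C, and the function name and timing values are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # laser and radio pulses
SPEED_OF_SOUND_M_S = 343.0          # assumed value for ultrasound in air at about 20 degrees C


def tof_distance_m(round_trip_s: float, wave_speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Distance to a target from the round-trip travel time of an emitted pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so only half the path is the range.
    return wave_speed_m_s * round_trip_s / 2.0


# A laser echo received after 200 ns and an ultrasound echo received after 10 ms.
print(f"laser: {tof_distance_m(200e-9):.2f} m")
print(f"ultrasound: {tof_distance_m(10e-3, SPEED_OF_SOUND_M_S):.3f} m")
```

The 200 ns laser echo corresponds to a target about 30 m away, which illustrates the timing precision an optical ToF sensor needs compared with an ultrasonic one.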

2.2.1. LiDAR Sensors

LiDAR sensors use the ToF of reflected laser pulses to measure distances and detect objects []. A LiDAR sensor emits laser pulses at a high rate over a horizontal field of view of up to 360°, thus generating a 3D representation of the surrounding environment. Depending on the specifications of the product, a LiDAR sensor generates up to millions of distance measurement points, as well as information on the position, shape, and movement of objects, within seconds.

Although the benefits of LiDAR sensors are obvious, the main problems for obstacle detection and recognition in agricultural environments are (1) point cloud classification and (2) multimodal fusion. Point cloud classification deals with discriminating 3D point structures based on their shapes and neighborhoods for various applications, such as vehicle identification and tracking [], pedestrian-vehicle near-miss detection [], and background filtering []. Kragh [] proposed two methods for point classification of LiDAR-acquired 3D point clouds, which address sparsity and local point neighborhoods and were used for consistent feature extraction across entire point clouds. One method, based on a traditional processing pipeline, outperformed a generic 3D feature descriptor designed for dense point clouds. The other method used a 2D range image representation and performed semantic segmentation [] in 2D with deep learning. Together, the two methods showed that sparsity in LiDAR-acquired point clouds can be addressed intelligently by utilizing the known sampling patterns. A combination of multiple representations may therefore accumulate the benefits and potentially provide increased accuracy and robustness []. To sense obstacles in vegetation effectively, LiDAR can be used in combination with stereo cameras so that the point cloud data are analyzed together with 2D images.

Multimodal fusion can increase classification robustness and confidence. It addresses the question of how LiDAR technology can work with other sensing modalities in agricultural environments. Kragh [] proposed and evaluated methods for sensor fusion between LiDAR and other sensors, incorporating spatial, temporal, and multimodal relationships to increase detection accuracy and thus enhance the safety of self-driving vehicles. The method consists of a self-supervised classification system that uses LiDAR to continuously supervise a visual classifier of traversability. LiDAR and camera data are then fused at the decision level with deep learning on range images. Larson and Trivedi [] put forward a LiDAR-based method that uses geometric features of the outline of concave obstacles, which are sent to a support vector machine (SVM) classifier to detect obstacles. However, the laser can be reflected repeatedly within a pit, resulting in the loss of observational information; the suggestion is therefore to combine LiDAR with other sensors, such as thermal cameras, for better concave obstacle detection.

2.2.2. Radar

Radar transmits radio waves toward a target area and monitors the reflections from objects within the area, generating position and distance data for the objects []. Object detection in vegetation has been studied using radar for the remote sensing of trees and crops []. The detection performance of radar depends on two critical factors: the penetration depth of radar waves through the vegetation and the angular (or spatial) resolution of the radar system. Penetration depth is the depth at which the signal strength of the radar drops to 1/e (37%) [] of its original value. The wavelengths used for remote sensing in vegetation range from 70 cm (~0.5 GHz) to 1 cm (~30 GHz) []. Microwave attenuation through vegetation generally increases with frequency []. The signal effects that radar uses for obstacle classification can be summarized as attenuation, backscatter, phase variation, and depolarization []. Radar has also been used successfully for perception on autonomous vehicles in the presence of dust and fog [].
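The two quantities in this paragraph can be illustrated numerically. The sketch below converts a radar frequency to its free-space wavelength and evaluates the remaining signal fraction under a simple exponential attenuation model, in which the signal falls to 1/e at the penetration depth. The exponential model, the function names, and the 0.5 m penetration depth are assumptions made for illustration and are not measurements from the cited studies.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_cm(frequency_ghz: float) -> float:
    """Free-space wavelength in centimeters for a frequency given in gigahertz."""
    if frequency_ghz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_S / (frequency_ghz * 1e9) * 100.0


def remaining_fraction(path_m: float, penetration_depth_m: float) -> float:
    """Fraction of signal strength left after `path_m` of vegetation.

    Assumes exponential decay, so the fraction equals 1/e when the path
    length equals the penetration depth.
    """
    if penetration_depth_m <= 0:
        raise ValueError("penetration depth must be positive")
    return math.exp(-path_m / penetration_depth_m)


print(f"30 GHz  -> {wavelength_cm(30.0):.2f} cm")
print(f"0.5 GHz -> {wavelength_cm(0.5):.1f} cm")
# Hypothetical canopy with a 0.5 m penetration depth, traversed for 0.5 m and 1.5 m.
print(f"after 0.5 m: {remaining_fraction(0.5, 0.5):.2f}")
print(f"after 1.5 m: {remaining_fraction(1.5, 0.5):.2f}")
```

For a monostatic radar, the signal crosses the vegetation twice (out and back), so the effective path length in such a model would be doubled.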

Convex obstacles such as trees, slopes, and hills can be detected easily by radar, which has a strong penetrating ability and works well in bad weather conditions. Jing et al. [] used a Doppler-feature-based method to find the height of obstacles and classify convex obstacles. Because the accuracy is low, the millimeter wave radar is expected to be fused with other sensors.

One experiment by Gusland et al. [] used radar with a pulse length of 250 μs, 0.4-GHz bandwidth, and four horizontally polarized transmit antennas. The obstacles included in this experiment were a small rock, large rock, concrete support, tree stub, and paint can. The experiment confirmed system functioning and cross-range resolution improvement due to the multiple-input, multiple-output configuration, and the initial results indicate that the system is capable of detecting obstacles hidden in vegetation. The contrast between relevant obstacles and vegetation clutter is of critical importance, defined as the maximum reflected power of the resolution cell containing the obstacle compared with the maximum of the vegetation surrounding it [].
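Two quantities from this experiment can be sketched numerically. The range resolution below uses the textbook relation c/(2B) for a pulse-compressed radar, which is an assumption brought in here and not a figure reported by Gusland et al. The contrast follows the definition in the text (maximum obstacle-cell power compared with the maximum surrounding vegetation power); expressing it as a ratio in decibels, the function names, and the power values are hypothetical choices.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Theoretical range resolution c / (2B) of a radar with bandwidth B."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)


def contrast_db(obstacle_peak_power: float, vegetation_peak_power: float) -> float:
    """Obstacle-to-clutter contrast in dB from the two peak reflected powers."""
    if obstacle_peak_power <= 0 or vegetation_peak_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(obstacle_peak_power / vegetation_peak_power)


# 0.4 GHz bandwidth as quoted in the text.
print(f"range resolution: {range_resolution_m(0.4e9):.3f} m")
# Hypothetical peak powers in arbitrary linear units.
print(f"contrast: {contrast_db(2.0e-6, 5.0e-7):.1f} dB")
```

Under these assumptions, a 0.4 GHz bandwidth corresponds to a range cell of roughly 0.37 m, so small obstacles such as a paint can occupy about one resolution cell, and their detectability depends mainly on contrast against the vegetation clutter.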

3. Comparison of Sensors

Table 1 summarizes the advantages and disadvantages of each sensor and the potential use of each sensor to detect obstacles.

Table 1. Sensor comparison.

RGB cameras are low-cost compared with other sensors. Their disadvantage is that they cannot sense the depth of an object or work well in bad weather. By comparison, stereo cameras can sense obstacle depth, as well as identify camouflaged animals and protruding objects. A key strength of thermal cameras is that their sensing data are not affected by camouflage or illumination effects, although their performance is affected by the ambient temperature []. Other drawbacks of thermal cameras are low resolution and the loss of range data when the camera is in motion or when there are texture differences.

Hyperspectral imaging sensors provide better resolution and acquire images across several narrow spectral bands ranging from the visible region to mid-infrared region of the electromagnetic spectrum [,,,,,]. Thus, object identification and classification become easy. Although equipped with a wide spectral band, sensor performance is affected by variation in illumination, and the system is not robust with respect to the environment [].

LiDAR provides more accurate depth information over a longer range while capturing data horizontally up to 360°. The drawback of LiDAR lies in recognizing objects, owing to the lack of visual and thermal information []. Radar also lacks good resolution because of the sparsity of its data, which makes object recognition a challenging task.

Thus, both active and passive sensors have certain limitations. The main inconvenience of using active sensors is the potential confusion of echoes from subsequent emitted pulses, which results in sparse data, and the distance range over which these systems are accurate is usually bounded between 1 and 4 m []. Although active sensors have certain disadvantages, their sensing performance is not affected by climatic conditions. By comparison, passive sensors provide dense data but are affected by climatic conditions. Hence, fusing active and passive sensors can be an effective way to compensate for the drawbacks of each type.

4. Sensor Fusion Applications

Sensor fusion is performed at two levels: low-level and high-level. Low-level fusion combines the raw data of different sensors, whereas high-level fusion combines the outputs of the individual sensors, such as the obstacles each sensor has detected, to classify objects into different categories []. Cameras provide dense texture and semantic information about the scene but have difficulty directly measuring the shape and location of a detected object []. LiDAR provides an accurate distance measurement of an object; however, precise point cloud segmentation for object detection is computationally complex because of the sparsity of the scanning points in horizontal and vertical resolution. Radar provides object-level speed and location via range and range-rate but does not provide an accurate shape of objects. Thus, passive sensors are used for sensing the appearance of the environment and objects, whereas active sensors are used for geometric sensing. Several approaches have combined different sensors to detect obstacles hidden under vegetation. The following sections identify popular fusion methods from recent research.

No single sensor can reliably detect objects on its own in all weather conditions. Active sensors such as radar and LiDAR, and passive sensors such as RGB cameras, stereo cameras, thermal cameras, and hyperspectral sensors, have different pros and cons concerning illumination, weather, working range, and resolution; thus, a combination of these sensors is needed to accommodate various working environments [].

4.1. RGB and Infrared Camera

Microsoft’s Kinect sensor uses structured-light and ToF-based RGB-D cameras to detect obstacles []. It is a typical RGB and infrared combination camera. These cameras emit their own light and are more robust in low-light environments. However, they are not suitable for outdoor environments because the projected laser speckle is washed out under strong light. ToF depth cameras emit infrared light and measure its return from the observed object to obtain depth. They provide accurate depth measurements but have low resolution and significant acquisition noise [].

Nissimov et al. [] used the sensor to detect obstacles in a greenhouse robotic application. The RGB camera in the sensor takes images, and the infrared laser emitter projects infrared dots that are captured by the infrared camera to sense depth. Thus, the sensor uses color and depth information to detect obstacles. The gradient of the image is calculated, and based on a threshold value of the gradient, the object is classified as either traversable or non-traversable. Further, to improve detection, a local binary pattern (LBP) texture analysis was conducted for the pixels near the detected objects. The paper confirmed that, with the texture analysis, the system can detect unidentified objects near the detected objects. However, the drawback of this system is that it cannot be operated in all lighting conditions. The sensor requires the returning beams of the infrared emitter to be easily detectable within the image of the infrared camera, and thus the beams must be significantly stronger than the ambient light [].
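The gradient-threshold step can be sketched on a small depth map. The text does not specify which image the gradient is taken on or how it is computed, so the sketch assumes a depth image, forward finite differences, and an arbitrary threshold; the function names and values are hypothetical and do not reproduce the method of Nissimov et al.

```python
from typing import List


def gradient_magnitude(depth: List[List[float]]) -> List[List[float]]:
    """Per-pixel gradient magnitude using forward differences.

    At the last column or row, the neighbor index is clamped to the pixel
    itself, so the corresponding difference is zero.
    """
    rows, cols = len(depth), len(depth[0])
    grad = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            dx = depth[r][min(c + 1, cols - 1)] - depth[r][c]
            dy = depth[min(r + 1, rows - 1)][c] - depth[r][c]
            grad[r][c] = (dx * dx + dy * dy) ** 0.5
    return grad


def non_traversable_mask(depth: List[List[float]], threshold: float) -> List[List[bool]]:
    """Mark pixels whose depth gradient exceeds the threshold as non-traversable."""
    return [[g > threshold for g in row] for row in gradient_magnitude(depth)]


# Toy depth map in meters: flat ground at 2.0 m with one object sticking up at 1.2 m.
depth_map = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 2.0, 1.2, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]
mask = non_traversable_mask(depth_map, threshold=0.3)
for row in mask:
    print(["X" if blocked else "." for blocked in row])
```

Only the pixels bordering the depth discontinuity are flagged, which is why a follow-up texture analysis near the flagged pixels, as described above, can help recover the full extent of an object.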

4.2. Radar and Stereo Camera

Reina et al. [] fused a radar and a stereo camera. Radar is a good sensor for range measurement, and stereo cameras provide clear resolution for detected objects. Combining these sensors thus results in improved 3D localization of obstacles up to 30 m. Radar is used to obtain 2D points of the detected obstacles, which are then augmented with stereo camera data to obtain the obstacle information. The intensity range of the radar beam determines the obstacle detection performance. The range and contour of the detected obstacle are used to extract a sub-cloud from the stereo-generated 3D point cloud. The sub-cloud is the volume of interest around a given radar-labeled obstacle in the stereo-generated 3D point cloud. This helps to obtain stereoscopic 3D geometric and color information on the detected object.

Jha, Lohdi, and Chakravarty [] discussed the fusion of radar and stereo cameras, giving real-time information on the environment for navigation. The research used the 76.5-GHz millimeter wave radar to provide information on the range and azimuth of the detected objects. The camera output data were collected and processed framewise in real time by the YOLOv3 algorithm []. Thus, the weights of the trained YOLOv3 model were used to detect and identify objects, which were mapped with radar data to find the distance and angle of the objects for vehicle navigation. However, the study does not clearly explain the functionality of the fusion for detecting hidden objects; rather, it provides good results for object detection and identification.

4.3. LiDAR and Camera

Some researchers used self-supervised systems in which one device is used to monitor the other device (stereo-radar, RGB-radar, and RGB-LiDAR []) and improve the detection performance. The difference between such a system and sensor fusion is that sensor fusion combines the sensor data to provide more accurate data, rather than having one sensor supervise the other. According to Kragh and Underwood [], fusing LiDAR points with camera images assumes perfect calibration between the camera and the LiDAR sensor. Calibrating the sensors is challenging and involves considerable computational effort.

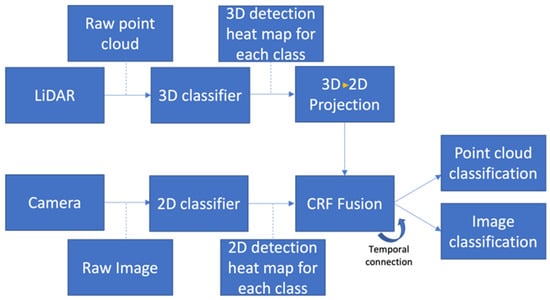

Semantic segmentation for object detection using LiDAR and a camera captures objects that are not easily detected by bounding boxes. In the LiDAR and camera fusion shown in Figure 2, visual information from color cameras is good for environmental sensing, and 3D LiDAR information serves to distinguish flat, traversable ground areas from non-traversable elements (including trees and other obstacles in the path) []. The appearance- and geometry-based detection processes are combined using a conditional random field (CRF) [], which predicts objects based on the current data provided by the LiDAR and camera. The results from Kragh and Underwood [] show that, for a two-class classification problem (ground and nonground), LiDAR distinguishes ground and nonground structures without the aid of the camera. To classify objects into more classes (ground, sky, vegetation, and object), both devices are needed to complement each other and improve performance using the CRF [].

Figure 2. Fusing LiDAR and camera data to obtain classification.

Vehicle and pedestrian detection commonly uses a fusion process of camera and LiDAR in which LiDAR data generate the regions of interest (ROIs) on the image by extrinsic calibration, and an image-based segmentation and detection method then detects vehicles and pedestrians [,,,].
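The ROI generation step can be sketched with a pinhole camera model: LiDAR points belonging to one object are transformed into the camera frame with the extrinsic calibration and projected onto the image, and their pixel extent forms the ROI. The calibration values, intrinsics, point coordinates, and function names below are hypothetical, and lens distortion is ignored.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[float, float]


def project_point(point: Sequence[float],
                  rotation: Sequence[Sequence[float]],
                  translation: Sequence[float],
                  fx: float, fy: float, cx: float, cy: float) -> Optional[Pixel]:
    """Project a 3D LiDAR point into the image; returns None if it is behind the camera."""
    # Extrinsic calibration: p_camera = R * p_lidar + t (camera z axis points forward).
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy


def roi_from_points(points: List[Sequence[float]],
                    rotation: Sequence[Sequence[float]],
                    translation: Sequence[float],
                    fx: float, fy: float, cx: float, cy: float
                    ) -> Optional[Tuple[float, float, float, float]]:
    """Image ROI (u_min, v_min, u_max, v_max) enclosing the projected points."""
    pixels = [uv for uv in (project_point(p, rotation, translation, fx, fy, cx, cy)
                            for p in points) if uv is not None]
    if not pixels:
        return None
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return min(us), min(vs), max(us), max(vs)


# Assumed calibration: LiDAR and camera frames coincide (identity rotation, zero offset).
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ZERO = [0.0, 0.0, 0.0]
cluster = [(0.5, 0.0, 5.0), (-0.5, 0.5, 5.0), (0.0, 0.0, -1.0)]  # last point is behind the camera

roi = roi_from_points(cluster, IDENTITY, ZERO, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(roi)
```

Any error in the rotation or translation shifts the ROI on the image, which is one reason the calibration between the two sensors is treated as a critical and demanding step.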

Fu et al. [] used a deep fusion architecture based on a convolutional neural network to fuse LiDAR data with RGB images and complete the depth map of the environment. This method can deal with sensor failure in AGVs, improving the robustness of the perception system.

Starr and Lattimer [] combined a thermal camera with a 3D LiDAR to obtain good results for low-visibility detection. In their work, the two sensors detect objects separately, and the results are then combined using an evidential fusion method (Dempster–Shafer theory []). Similarly, Zhang [] first proposed a two-step method of calibration between a 3D LiDAR and a thermal camera. The fusion algorithms between these two sensors are not limited to low-level fusion but can be extended to high-level fusion.
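Evidential fusion of two independent detections can be sketched with Dempster's rule of combination over a two-hypothesis frame (obstacle or free). This is a generic illustration of the rule and not the implementation of Starr and Lattimer; the mass values assigned to each sensor and the names used are hypothetical.

```python
from itertools import product
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]

OBSTACLE = frozenset({"obstacle"})
FREE = frozenset({"free"})
UNKNOWN = frozenset({"obstacle", "free"})  # mass left on the whole frame (ignorance)


def combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule: multiply masses, keep non-empty intersections, renormalize."""
    combined: Mass = {}
    conflict = 0.0
    for (set_a, mass_a), (set_b, mass_b) in product(m1.items(), m2.items()):
        intersection = set_a & set_b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict and cannot be combined")
    return {subset: mass / (1.0 - conflict) for subset, mass in combined.items()}


# Hypothetical belief masses from each sensor for one grid cell.
thermal: Mass = {OBSTACLE: 0.6, FREE: 0.1, UNKNOWN: 0.3}
lidar: Mass = {OBSTACLE: 0.5, FREE: 0.2, UNKNOWN: 0.3}

fused = combine(thermal, lidar)
for subset, mass in fused.items():
    print(sorted(subset), round(mass, 3))
```

With these assumed inputs, the fused obstacle mass is higher than either sensor's individual mass, and the mass left on ignorance shrinks, which is the behavior that makes evidential fusion attractive when each sensor is only partly reliable.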

4.4. LiDAR and Radar

Another study [] used low-power, ultra-wideband radar sensors in combination with higher-resolution range imaging devices (such as LiDAR and stereovision) for AGVs. This combination of LiDAR and radar proved effective at sensing sparse vegetation; however, it was less effective at sensing dense vegetation. This suggests treating dense vegetation as a non-traversable path and sparse vegetation as a traversable path, which is an advantage when detecting obstacles through vegetation [].

Kwon [] presents interesting research on detecting partly blocked pedestrians using LiDAR and radar. The method considers the projected depth of the blocked part of the object to determine whether a blocked object exists. According to this study, radar detects the partially blocked object more easily than LiDAR because of the Doppler (change in frequency) pattern. A partially blocked pedestrian is therefore detected by combining the occluded-depth LiDAR data on the human characteristic curve with the radar Doppler distribution of a pedestrian. This sensor fusion scheme is thus useful in an AGV to detect hidden objects and prevent collisions []. Similarly, object-characteristic point cloud classification with LiDAR could be tested on occluded hidden objects to obtain the desired results.

Table 2 summarizes the sensor fusion combinations used for different applications and shows areas for potential research. For example, for sparse vegetation or the detection of partially hidden objects, the LiDAR and radar combination works better. Radar and stereo camera are suitable for range measurement, object detection, and location tracking.

Table 2. Sensor fusion and application.

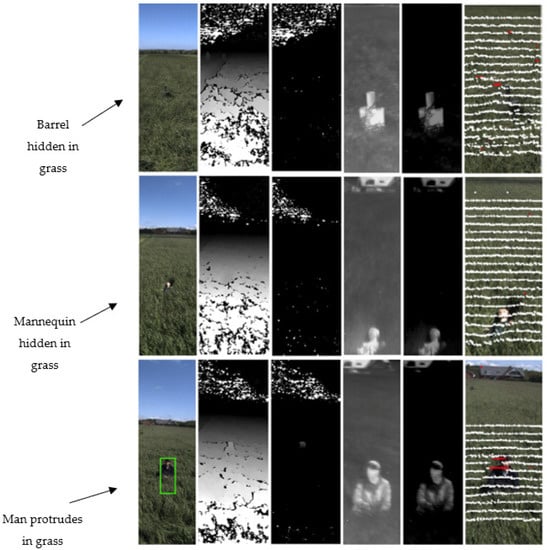

4.5. Multimodal Sensors

Kragh [] established a flexible vehicle-mounted sensor platform in Denmark (Figure 3), which recorded imaging and position data for a moving vehicle using an RGB camera (Logitech HD Pro C920), thermal camera (FLIR A65, 13 mm), stereo camera (Multisense S21 CMV2000), LiDAR (Velodyne HDL-32E), radar (Delphi ESR), and two position estimation sensors, GPS (Trimble BD982 GNSS) and IMU (Vectornav VN-100). The platform illustrated in Figure 3 includes seven sensors; the thermal and stereo cameras are linked with a frame grabber and provide image data to the controller via Ethernet. The platform collected real-time data from all the sensors, which were used for offline processing. The following paragraph describes the detection of a human, barrel, and mannequin hidden inside the field using the multimodal sensing platform.

Figure 3. Experimental setup for multimodal sensor platform [].

The experiment was conducted on an open lawn with high grass, and the objects were a partly hidden barrel, a lying child mannequin, and a sitting human (Figure 4). The RGB camera (extreme left column of Figure 4) was able to detect the sitting human but not the hidden objects. Similarly, the stereo camera (column next to the RGB images) detected only the sitting human and not the barrel. The LiDAR sensor (extreme right column) was more reliable and detected both the sitting human and the protruding barrel but not the lying child mannequin. Among all the sensors, the thermal camera (column next to the stereo camera images) achieved robust detection performance for all three objects. However, the thermal camera is affected by warm climates in which the temperatures of the objects are similar, creating sensing issues []. Thus, from this study [], we found that a combination of sensors is required for effective detection of all hidden or partly hidden objects in the field.

Figure 4.

Barrel, child mannequin, and human detection experiment result [].
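As an illustrative summary, the outcomes described above for Figure 4 can be encoded as a small lookup table and combined with a simple OR rule. This is only a sketch: the names `OUTCOMES`, `fused_coverage`, and `missed` are hypothetical, cells that the text does not state explicitly are left as `None`, and the OR rule is a simplification rather than the fusion method used in the cited study.

```python
# Detection outcomes as described in the text for Figure 4.
# True = detected, False = missed, None = not stated explicitly in the text.
OUTCOMES = {
    # The text says RGB found the sitting human; the other cells are ambiguous.
    "rgb": {"sitting_human": True, "barrel": None, "lying_mannequin": None},
    # "Only the sitting human" is read here as missing both other objects.
    "stereo": {"sitting_human": True, "barrel": False, "lying_mannequin": False},
    "thermal": {"sitting_human": True, "barrel": True, "lying_mannequin": True},
    "lidar": {"sitting_human": True, "barrel": True, "lying_mannequin": False},
}


def fused_coverage(sensors):
    """OR-fuse: an object counts as covered if any chosen sensor detected it.

    Unknown cells (None) are treated conservatively as "not detected".
    """
    objects = next(iter(OUTCOMES.values())).keys()
    return {
        obj: any(OUTCOMES[name][obj] is True for name in sensors)
        for obj in objects
    }


def missed(sensors):
    """Return the sorted list of objects that no chosen sensor detected."""
    return sorted(obj for obj, ok in fused_coverage(sensors).items() if not ok)


if __name__ == "__main__":
    for combo in (["stereo"], ["stereo", "lidar"], ["lidar", "thermal"]):
        print(combo, "misses", missed(combo))
```

Under these assumptions, the stereo camera and LiDAR together still miss the lying mannequin, whereas any combination that includes the thermal camera covers all three objects, which matches the qualitative reading of Figure 4 given in the text.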

The experiments and studies reviewed here show that using multiple sensors is difficult because the sensor data must be fused, which adds computational complexity. The data sets must also be expanded to cover inclement weather conditions so that detection can be evaluated thoroughly in complicated agricultural environments. In future work, experiments should be carried out on moving obstacles and organic objects (such as animal bodies).
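To make the idea of fusing sensor data concrete, the sketch below combines per-sensor detection confidences (for example, from an active sensor such as radar and a passive sensor such as a thermal camera) with a noisy-OR rule. This is a generic late-fusion technique chosen for illustration and is not taken from the reviewed studies; the function name `noisy_or` and the example confidence values are hypothetical, and the rule assumes that the sensors miss objects independently of one another.

```python
def noisy_or(confidences):
    """Fuse independent detection confidences with a noisy-OR rule.

    Each value is the probability (0..1) that one sensor reports the
    obstacle. Assuming the sensors fail independently, the fused
    confidence is 1 minus the probability that every sensor misses it.
    """
    miss_probability = 1.0
    for p in confidences:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"confidence out of range: {p}")
        miss_probability *= 1.0 - p
    return 1.0 - miss_probability


if __name__ == "__main__":
    # Hypothetical confidences for one candidate obstacle.
    radar_confidence = 0.6    # active sensor (assumed value)
    thermal_confidence = 0.5  # passive sensor (assumed value)
    print(noisy_or([radar_confidence, thermal_confidence]))
```

The computation is a single pass over the sensor list, so the cost of this particular fusion step grows only linearly with the number of sensors; in practice the heavier cost usually lies in producing the per-sensor confidences and in calibrating and time-aligning the sensors.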

5. Conclusions and Recommendations

This study presents a preliminary review of state-of-the-art obstacle detection in vegetation, introducing a range of sensors and technologies and discussing their attributes in various environments. Because using many sensors adds computational complexity, we found that fusing two sensors yields effective results while keeping the computational burden reasonable. As discussed, active sensors are effective for geometric sensing and accurate ranging of obstacles, whereas passive sensors provide a high-resolution appearance of the environment and objects. Fusing data from an active and a passive sensor was therefore found to detect hidden obstacles in vegetation effectively. Sensor selection should be made according to the application and environment. Radar is an affordable active sensor that penetrates vegetation and can be fused with a stereo camera. The second-most effective option is LiDAR in combination with radar or a stereo camera. Thermal cameras detect hidden objects effectively; nevertheless, combinations of these sensors that share their advantages are worth exploring for detecting obstacles in complicated vegetation environments. In addition, because the sensors are mounted on moving vehicles, stable and reliable detection from a self-driving vehicle should also be studied. Improving working conditions in the vegetation object detection sector, through policy support and technological advancements as well as the integration of other services, is critical for further deploying these sensor technologies in the real world.

Author Contributions

Conceptualization: all; methodology, S.L., L.Z. and M.B.; formal analysis, S.Y., L.Z., S.L., P.G. and M.B.; investigation, S.L.; data curation, S.L.; writing—original draft preparation, S.L. and L.Z.; writing—review and editing, S.Y., P.G. and M.B.; supervision, S.Y., L.Z., P.G. and M.B.; project administration, S.Y.; funding acquisition, M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

This work was authored in part by the National Renewable Energy Laboratory, operated by Alliance for Sustainable Energy, LLC, for the U.S. Department of Energy (DOE) under Contract No. DE-AC36-08GO28308. Funding was provided by U.S. Department of Energy AMERICAN-MADE CHALLENGES VOUCHER. The views expressed in the article do not necessarily represent the views of the DOE or the U.S. Government. The U.S. Government retains and the publisher, by accepting the article for publication, acknowledges that the U.S. Government retains a nonexclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this work, or allow others to do so, for U.S. Government purposes.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nguyen, D.-V. Vegetation Detection and Terrain Classification for Autonomous Navigation. 2013. Available online: https://www.researchgate.net/publication/265160356_Vegetation_Detection_and_Terrain_Classification_for_Autonomous_Navigation (accessed on 6 September 2021).

- Rasmussen, S.; Schrøder, A.; Mathiesen, R.; Nielsen, J.; Pertoldi, C.; Macdonald, D. Wildlife Conservation at a Garden Level: The Effect of Robotic Lawn Mowers on European Hedgehogs (Erinaceus europaeus). Animals 2021, 11, 1191.

- Jinru, X.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sens. 2017, 2017, 1353691.

- Zhu, L.; Holden, J.; Gonder, J. Trajectory Segmentation Map-Matching Approach for Large-Scale, High-Resolution GPS Data. Transp. Res. Rec. J. Transp. Res. Board 2017, 2645, 67–75.

- Zhu, L.; Gonder, J.; Lin, L. Prediction of Individual Social-Demographic Role Based on Travel Behavior Variability Using Long-Term GPS Data. J. Adv. Transp. 2017, 2017, 7290248.

- Laugraud, B.; Piérard, S.; van Droogenbroeck, M. Labgen-p-semantic: A first step for leveraging semantic segmentation in background generation. J. Imaging 2018, 4, 86.

- Zeng, D.; Chen, X.; Zhu, M.; Goesele, M.; Kuijper, A. Background Subtraction with Real-Time Semantic Segmentation. IEEE Access 2019, 7, 153869–153884.

- Zaarane, A.; Slimani, I.; Al Okaishi, W.; Atouf, I.; Hamdoun, A. Distance measurement system for autonomous vehicles using stereo camera. Array 2020, 5, 100016.

- Aggarwal, S. Photogrammetry and Remote Sensing Division Indian Institute of Remote Sensing, Dehra Dun. Available online: https://www.preventionweb.net/files/1682_9970.pdf#page=28 (accessed on 6 September 2021).

- Salman, Y.D.; Ku-Mahamud, K.R.; Kamioka, E. Distance measurement for self-driving cars using stereo camera. In Proceedings of the International Conference on Computing and Informatics, Kuala Lumpur, Malaysia, 25–27 April 2017.

- Zhang, Z.; Han, Y.; Zhou, Y.; Dai, M. A novel absolute localization estimation of a target with monocular vision. Optik 2013, 124, 1218–1223.

- Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A Comparison and Evaluation of Multi-View Stereo Reconstruction Algorithms. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006.

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

- Choset, H.; Nagatani, K. Topological simultaneous localization and mapping (SLAM): Toward exact localization without explicit localization. IEEE Trans. Robot. Autom. 2001, 17, 125–137.

- Schonberger, J.L.; Frahm, J.M. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Özyeşil, O.; Voroninski, V.; Basri, R.; Singer, A. A survey of structure from motion. Acta Numer. 2017, 26, 305–364.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Ulaby, F.T.; Wilson, E.A. Microwave attenuation properties of vegetation canopies. IEEE Trans. Geosci. Remote. Sens. 1985, GE-23, 746–753.

- Rankin, A.; Huertas, A.; Matthies, L.; Bajracharya, M.; Assad, C.; Brennan, S.; Bellutta, P.; Sherwin, G.W. Unmanned ground vehicle perception using thermal infrared cameras. In Proceedings of the Unmanned Systems Technology XIII. International Society for Optics and Photonics, SPIE Defense, Security and Sensing, Orlando, FL, USA, 25–29 April 2011.

- Gomez, R.B. Hyperspectral imaging: A useful technology for transportation analysis. Opt. Eng. 2002, 41, 2137–2143.

- Hyperspectral Imaging and Obstacle Detection for Robotics Navigation. Available online: https://apps.dtic.mil/sti/pdfs/ADA486436.pdf (accessed on 12 April 2021).

- Killinger, D. Lidar (Light Detection and Ranging); Elsevier: Amsterdam, The Netherlands, 2014; pp. 292–312.

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87.

- Wu, J.; Xu, H.; Zheng, Y.; Tian, Z. A novel method of vehicle-pedestrian near-crash identification with roadside LiDAR data. Accid. Anal. Prev. 2018, 121, 238–249.

- Wu, J.; Xu, H.; Zheng, J. Automatic background filtering and lane identification with roadside LiDAR data. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16 October 2017.

- Kragh, M.F. Lidar-Based Obstacle Detection and Recognition for Autonomous Agricultural Vehicles; AU Library Scholarly Publishing Services: Aarhus, Denmark, 2018.

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018.

- Larson, J.; Trivedi, M. Lidar based off-road negative obstacle detection and analysis. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011.

- Manjunath, A.; Liu, Y.; Henriques, B.; Engstle, A. Radar based object detection and tracking for autonomous driving. In Proceedings of the 2018 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 15–17 April 2018.

- Matthies, L.; Bergh, C.; Castano, A.; Macedo, J.; Manduchi, R. Obstacle Detection in Foliage with Ladar and Radar. In Proceedings of the Eleventh International Symposium Robotics Research, Siena, Italy, 19–22 October 2003; Springer: Berlin/Heidelberg, Germany, 2005; Volume 15, pp. 291–300.

- Richards, J. The Use of Multiple-Polarization Data in Foliage Penetrating (FOPEN) Synthetic Aperture Radar (SAR) Applications; SAND Report; Sandia National Laboratories: Albuquerque, NM, USA; Livermore, CA, USA, 2002.

- Reina, G.; Underwood, J.; Brooker, G.; Durrant-Whyte, H. Radar-based perception for autonomous outdoor vehicles. J. Field Robot. 2011, 28, 894–913.

- Jing, X.; Du, Z.C.; Li, F. Obstacle detection by Doppler frequency shift. Electron. Sci. Technol. 2013, 26, 57–60.

- Gusland, D.; Torvik, B.; Finden, E.; Gulbrandsen, F.; Smestad, R. Imaging radar for navigation and surveillance on an autonomous unmanned ground vehicle capable of detecting obstacles obscured by vegetation. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019.

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern Trends in Hyperspectral Image Analysis: A Review; IEEE: New York, NY, USA, 2018; pp. 14118–14129.

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

- Govender, M.; Chetty, K.; Bulcock, H. A review of hyperspectral remote sensing and its application in vegetation and water resource studies. J. Water S. Afr. 2007, 33, 145–151.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Wilcox, C.C.; Montes, M.; Yetzbacher, M.; Edelberg, J.; Schlupf, J. Micro-and Nanotechnology Sensors, Systems, and Applications X. In Proceedings of the SPIE Defence and Security, Orlando, FL, USA, 15 April 2018.

- Landgrebe, D. Information Extraction Principles and Methods for Multispectral and Hyperspectral Image Data. In Information Processing for Remote Sensing; World Scientific Publishing: Singapore, 1999.

- Stuart, M.B.; Mcgonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071.

- Kragh, M.; Underwood, J. Multimodal obstacle detection in unstructured environments with conditional random fields. J. Field Robot. 2019, 37, 53–72.

- Fu, C.; Mertz, C.; Dolan, J.M. LIDAR and Monocular Camera Fusion: On-road Depth Completion for Autonomous Driving. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 273–278.

- Zhang, Z. Microsoft Kinect Sensor and Its Effect. IEEE Multimed. 2012, 19, 4–10.

- Hu, J.-W. A survey on multi-sensor fusion based obstacle detection for intelligent ground vehicles in off-road environments. Front. Inf. Technol. Electron. Eng. 2020, 21, 675–692.

- Nissimov, S.; Goldberger, J.; Alchanatis, V. Obstacle detection in a greenhouse environment using the Kinect sensor. Comput. Electron. Agric. 2015, 113, 104–115.

- Reina, G.; Milella, A.; Rouveure, R. Traversability analysis for off-road vehicles using stereo and radar data. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 540–546.

- Jha, H.; Lodhi, V.; Chakravarty, D. Object Detection and Identification Using Vision and Radar Data Fusion System for Ground-Based Navigation. In Proceedings of the 2019 6th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 7–8 March 2019; pp. 590–593.

- Zhao, L.; Li, S. Object detection algorithm based on improved YOLOv3. Electronics 2020, 9, 537.

- Tseng, H.; Chang, P.C.; Andrew, G.; Jurafsky, D.; Manning, C.D. A conditional random field word segmenter for sighan bakeoff 2005. In Proceedings of the Fourth SIGHAN Workshop on Chinese Language Processing, Jeju Island, Korea, 14–15 October 2005.

- Premebida, C.; Nunes, U.J.C. Fusing LIDAR, camera and semantic information: A context-based approach for pedestrian detection. Int. J. Robot. Res. 2013, 32, 371–384.

- García, F.; Garcia, J.; Ponz, A.; de la Escalera, A.; Armingol, J.M. Context aided pedestrian detection for danger estimation based on laser scanner and computer vision. Expert Syst. Appl. 2014, 41, 6646–6661.

- Zhao, G.; Xiao, X.; Yuan, J.; Ng, G.W. Fusion of 3D-LIDAR and camera data for scene parsing. J. Vis. Commun. Image Represent. 2014, 25, 165–183.

- Rubaiyat, A.H.M.; Fallah, Y.; Li, X.; Bansal, G.; Infotechnology, T. Multi-sensor Data Fusion for Vehicle Detection in Autonomous Vehicle Applications. Electron. Imaging 2018, 2018, 257-1–257-6.

- Starr, J.W.; Lattimer, B.Y. Evidential Sensor Fusion of Long-Wavelength Infrared Stereo Vision and 3D-LIDAR for Rangefinding in Fire Environments. Fire Technol. 2017, 53, 1961–1983.

- Shafer, G. Dempster-shafer theory. Encycl. Artif. Intell. 1992, 1, 330–331.

- Zhang, J.; Siritanawan, P.; Yue, Y.; Yang, C.; Wen, M.; Wang, D. A Two-step Method for Extrinsic Calibration between a Sparse 3D LiDAR and a Thermal Camera. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1039–1044.

- Yamauchi, B. Daredevil: Ultra-wideband radar sensing for small UGVs. In Proceedings of the Unmanned Systems Technology IX. International Society for Optics and Photonics, Orlando, FL, USA, 2 May 2007.

- Kwon, S.K. A Novel Human Detection Scheme and Occlusion Reasoning using LIDAR-RADAR Sensor Fusion. Master’s Thesis, University DGIST, Daegu, Korea, February 2017; p. 57.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).