Using Large Language Models to Extract Structured Data from Health Coaching Dialogues: A Comparative Study of Code Generation Versus Direct Information Extraction

Abstract

1. Introduction

2. Materials and Methods

2.1. Large Language Models Used

2.2. Architecture

2.3. Input Format

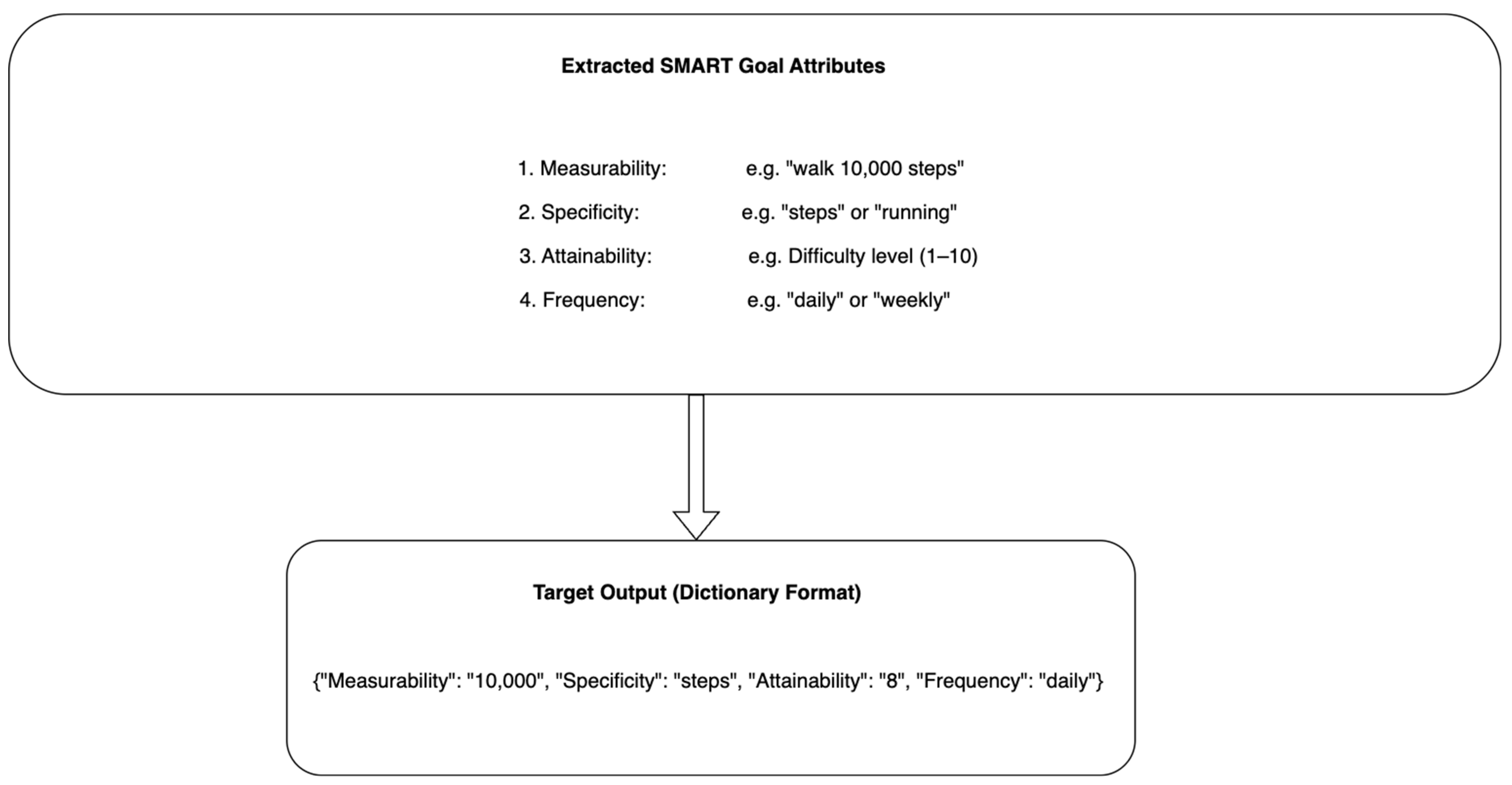

- Measurability (e.g., “walk 10,000 steps”);

- Specificity (e.g., “steps” or “running”);

- Attainability (estimated difficulty level);

- Frequency (e.g., “daily” or “weekly”).
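The four SMART attributes above correspond to the JSON keys that the prompts in Appendix A ask the models to return. The following is a minimal sketch of how one extracted goal could be represented in Python. It assumes string-valued fields, as in the Appendix A example outputs. The class name `GoalRecord` and its methods are hypothetical and are not taken from the authors' code.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class GoalRecord:
    """One step goal with the four SMART attributes used in this study (hypothetical class)."""

    measurability: str  # numeric target, e.g. "10000"
    specificity: str    # activity, e.g. "steps"
    attainability: str  # difficulty rating from "1" to "10"
    frequency: str      # e.g. "daily", "weekly", "Monday-Friday"

    def to_json(self) -> str:
        # Capitalised keys match the example outputs in Appendix A.
        return json.dumps({name.capitalize(): value for name, value in asdict(self).items()})

    @classmethod
    def from_dict(cls, data: dict) -> "GoalRecord":
        # Values are coerced to str in case a model emits bare numbers instead of strings.
        keys = ("Measurability", "Specificity", "Attainability", "Frequency")
        return cls(*(str(data[key]) for key in keys))


goal = GoalRecord("10000", "steps", "8", "daily")
print(goal.to_json())
# prints {"Measurability": "10000", "Specificity": "steps", "Attainability": "8", "Frequency": "daily"}
```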

2.4. Output Format

2.5. Dataset

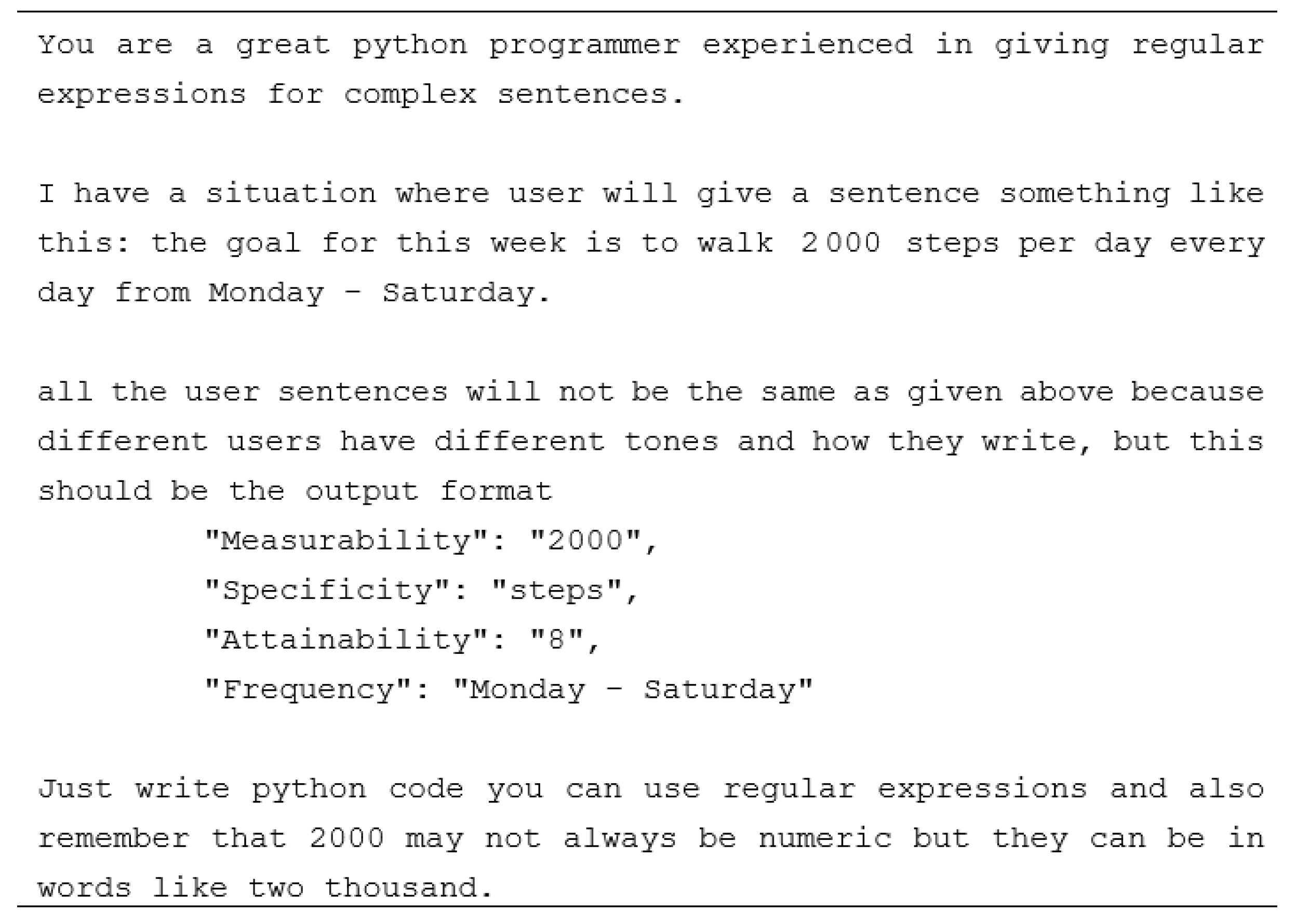

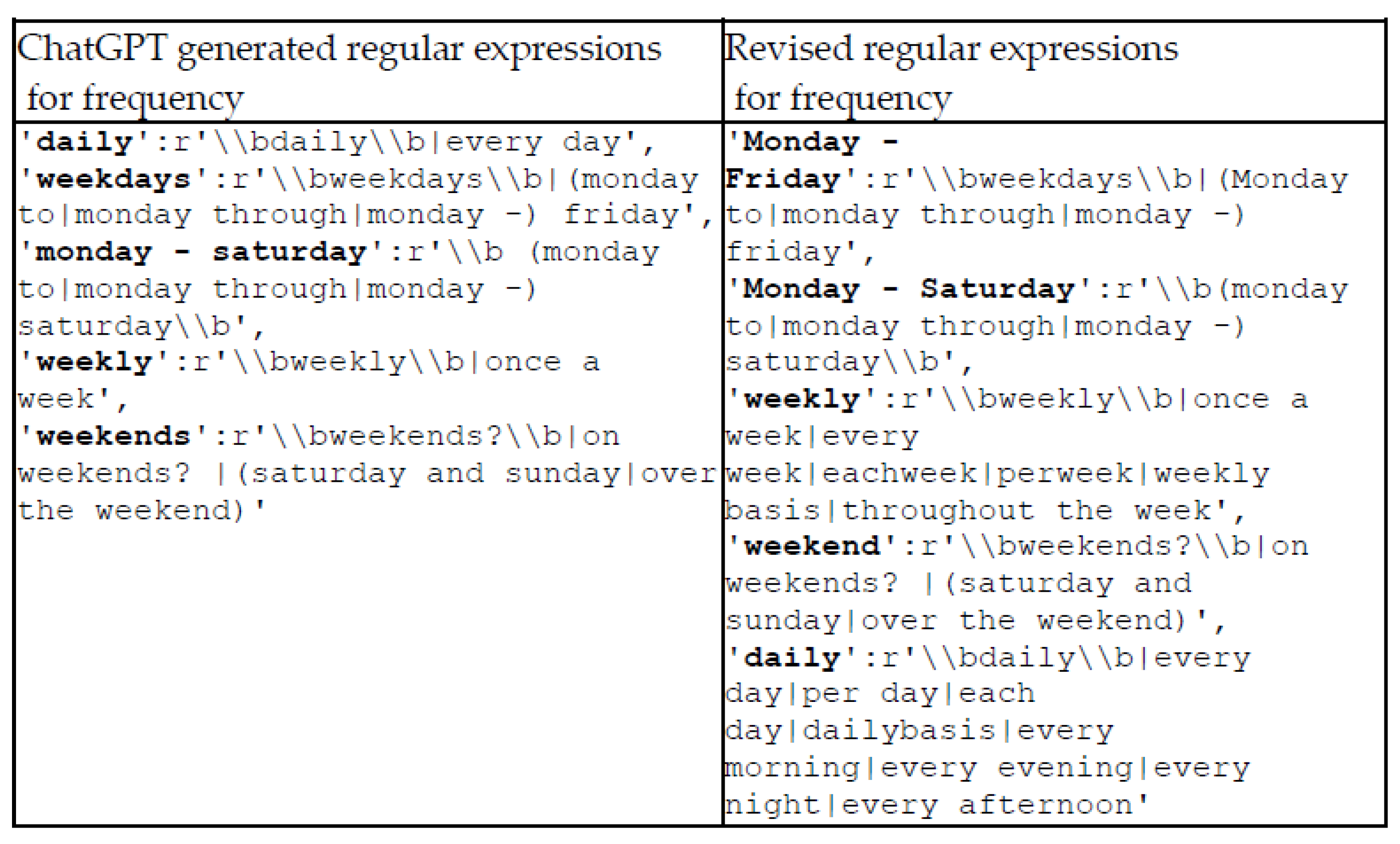

2.6. Automatic Generation of Pattern-Matching Functions

2.6.1. Initial Design and Setup

2.6.2. Extracting and Processing Data

2.6.3. Testing and Iterative Refinement

2.7. Direct Extraction of Goal-Related Attributes by LLMs

2.7.1. Development of Prompts for LLMs Without Fine-Tuning

- Zero-shot learning (ZSL)—Direct input parsing without prior examples;

- One-shot learning (1SL)—Input with just a single example to guide the model;

- Few-shot learning (FSL)—Input with multiple examples to improve accuracy.
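The three prompting regimes differ only in how many worked examples come before the new message. The sketch below assembles such a prompt using the layout shown in Appendix A: a `<<SYS>>` block, then `[INST] ... [/INST]` pairs, each followed by a `<result>` answer. It is an illustration, not the authors' code, and the helper name `build_prompt` is hypothetical. It follows the Appendix A layout rather than Meta's reference Llama 2 chat template. For zero-shot use, the system text would instead spell out the JSON template, as the Appendix A zero-shot prompt does.

```python
import json

# System text used in the one-, two- and five-shot prompts of Appendix A.
SYSTEM = "surround the answer in between <result> and </result> tags."

# First two worked examples from Appendix A (input text, expected JSON answer).
EXAMPLES = [
    (
        "the goal for this week is to walk 2,000 steps per day every day.",
        {"Measurability": "2000", "Specificity": "steps", "Attainability": "8", "Frequency": "daily"},
    ),
    (
        "I wanna TRY between 1,000 and 2,000 from Monday to Friday",
        {"Measurability": "1000", "Specificity": "steps", "Attainability": "8", "Frequency": "Monday-Friday"},
    ),
]


def build_prompt(examples, user_message, system=SYSTEM):
    """Build a k-shot prompt, where k = len(examples); k = 0 gives zero-shot."""
    parts = [f"<<SYS>> {system} <</SYS>>"]
    for text, answer in examples:
        parts.append(f'[INST] "{text}" [/INST] <result> {json.dumps(answer)} </result>')
    # The new message is left open so that the model writes the next <result> block.
    parts.append(f'[INST] "{user_message}" [/INST]')
    return " ".join(parts)


print(build_prompt(EXAMPLES[:1], "I want to walk 10,000 steps daily"))  # one-shot
```

Because the number of shots is just the length of `examples`, the same function covers one-, two- and five-shot prompting.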

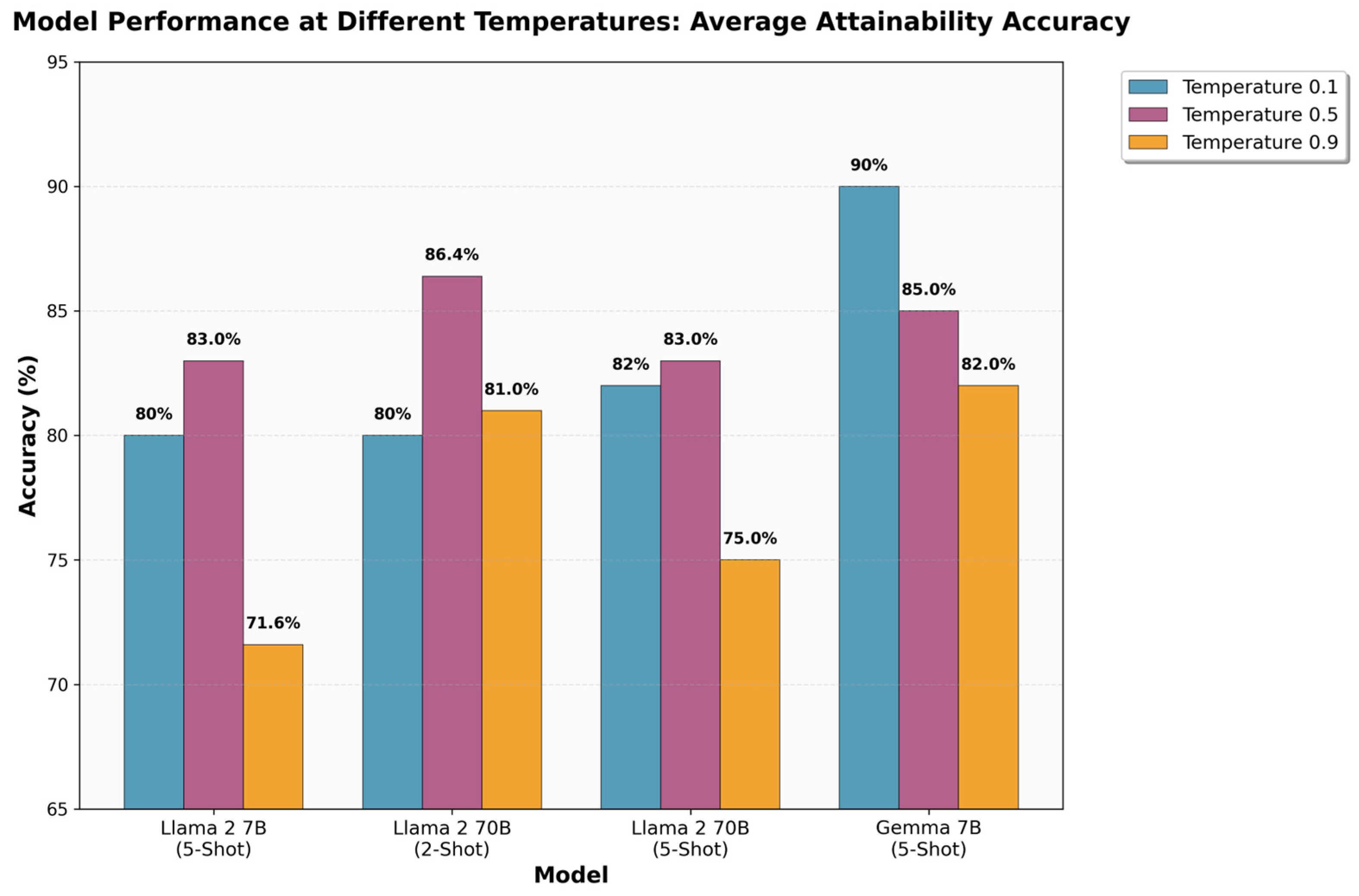

2.7.2. Evaluation and Optimization of Alternative Pretrained LLMs

- Zero-Shot Learning: Initial trials used direct instruction-based prompting, in which a simple user input, such as “I want to walk 1000 steps daily,” was given to the model. The response format was explicitly defined using a structured template enclosed within <result> tags. At lower temperature settings, the model generated structured responses with accurate frequency values but lacked specificity in certain parameters. Higher temperatures increased variability, sometimes producing outputs that were contextually relevant but incorrect.

- One-Shot Learning: To enhance the model’s comprehension of the expected output format, an explicit example was included in the prompt. This refined approach led to improved consistency in the output structure. Notably, repeated trials with identical inputs at lower temperatures yielded stable outputs, while higher temperatures introduced minor variations, particularly in the “attainability” field.

- Two-Shot Learning: Given the limitations observed in one-shot learning, an additional example with varied input characteristics was incorporated. This adjustment aimed to enhance the model’s understanding of different input styles. However, despite improvements at lower temperatures, inconsistencies persisted at higher temperatures.

- Five-Shot Learning: To further improve performance, the prompt was extended to include five diverse input examples. These covered a range of input styles, including numerical values, step-count ranges, and missing frequency attributes. Overall, five-shot prompting performed well for this small model across a wide range of inputs.
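Responses are delimited by `<result>` tags, so format consistency across temperatures can be checked mechanically. The following is a minimal sketch of such a check. It assumes the four keys used in Appendix A. `parse_result` is a hypothetical helper. Treating any malformed response as a failure, rather than attempting repair, is a design choice made here for illustration.

```python
import json
import re

# Non-greedy match of the first <result>...</result> block, across newlines.
RESULT_RE = re.compile(r"<result>(.*?)</result>", re.DOTALL)
REQUIRED_KEYS = ("Measurability", "Specificity", "Attainability", "Frequency")


def parse_result(response: str):
    """Return the goal dict from the first <result> block, or None on a format failure.

    A format failure is a missing tag pair, invalid JSON, or a missing key.
    """
    match = RESULT_RE.search(response)
    if match is None:
        return None
    try:
        data = json.loads(match.group(1))
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not all(key in data for key in REQUIRED_KEYS):
        return None
    return {key: str(data[key]) for key in REQUIRED_KEYS}


reply = 'Sure! <result> {"Measurability": "10000", "Specificity": "steps", "Attainability": "8", "Frequency": "daily"} </result>'
print(parse_result(reply))
print(parse_result('<result> {"Measurability": "10000" "Specificity": "steps"} </result>'))  # None
```

A strict parser like this one also rejects JSON written with typographic quotes. Counting `None` results over repeated runs gives a simple measure of format consistency.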

2.7.3. Creation of Synthetic Training Data and Evaluation of Fine-Tuned Models

- Basic Goal-Setting Sentences: First, basic dataset entries comprising goal-oriented statements related to daily steps were created. These entries included measurable goals (number of steps), specificity (mentioning “steps”), attainability (a rating from 1 to 10), and the frequency with which the goals should be achieved.

- Intelligent Attainability Calculation: The dataset was improved by introducing a mechanism that calculates the “attainability” score from the target number of steps and its frequency. This made the dataset more realistic, as it accounted for the greater difficulty of achieving higher step counts or more frequent activity.

- Inclusion of Ranges and Frequencies: To introduce more variability, step ranges such as “4000 to 5000 steps” were used instead of fixed numbers. To keep frequency values consistent, prompts instructed the model to choose a frequency from a provided list that included “daily,” “Monday–Friday,” “Monday–Saturday,” “weekly,” and “weekend.”

- Goal Update Sentences: To cover updates to an existing goal, examples were created using phrases such as “Let’s increase the daily target to 8000 steps.”

- Conversational Context: To make the dataset entries appear more natural, samples were created to integrate the step goals into casual or unrelated conversations. This approach incorporated additional context not directly related to a goal, such as remarks about the weather, personal motivation, or other daily activities.

- Variety in Sentence Structures: A variety of creative scenarios were included to enhance the dataset. These scenarios reflect a diverse range of motivations for walking, such as adopting a dog or starting a new job near a park. Alternative reply styles were also used to infuse the dataset with different tones, from casual to motivational. Additionally, scenarios that emphasize goals following medical advice or personal health commitments were crafted to focus on health awareness and motivation.
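The dataset-construction steps above can be approximated with a small template-based generator. This is a hypothetical sketch. The templates, the days-per-week mapping, and especially the `attainability` formula (including its direction, where 10 means easiest) are assumptions for illustration, because the exact scoring mechanism is not specified here. Only the frequency vocabulary comes from the text. Recording the lower bound of a range as `Measurability` follows the two-shot example in Appendix A.

```python
import random

# Frequency labels from Section 2.7.3 (hyphenated as in Appendix A), paired with
# a hypothetical surface phrase and an assumed number of active days per week.
FREQUENCIES = {
    "daily": ("every day", 7),
    "Monday-Friday": ("from Monday to Friday", 5),
    "Monday-Saturday": ("from Monday to Saturday", 6),
    "weekend": ("on weekends", 2),
    "weekly": ("per week", 1),
}

# Hypothetical templates: basic goal, range, goal update, conversational context.
TEMPLATES = [
    "I want to walk {lo:,} steps {when}.",
    "I wanna try between {lo:,} and {hi:,} steps {when}.",
    "Let's increase the target to {lo:,} steps {when}.",
    "Lovely weather today! I'm aiming for {lo:,} steps {when}.",
]


def attainability(steps: int, freq: str) -> int:
    """Hypothetical score from 1 (hardest) to 10 (easiest) based on weekly step volume."""
    weekly_volume = steps * FREQUENCIES[freq][1]
    return max(1, min(10, 10 - weekly_volume // 10_000))


def make_example(rng: random.Random) -> dict:
    freq = rng.choice(list(FREQUENCIES))
    lo = rng.randrange(1_000, 15_001, 500)
    hi = lo + rng.choice([1_000, 2_000])
    # str.format ignores the unused "hi" argument in single-value templates.
    text = rng.choice(TEMPLATES).format(lo=lo, hi=hi, when=FREQUENCIES[freq][0])
    label = {
        "Measurability": str(lo),  # lower bound for ranges, as in Appendix A
        "Specificity": "steps",
        "Attainability": str(attainability(lo, freq)),
        "Frequency": freq,
    }
    return {"input": text, "output": label}


def make_dataset(n: int, seed: int = 0) -> list:
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    return [make_example(rng) for _ in range(n)]


for row in make_dataset(3):
    print(row)
```

Richer variety, such as dog-adoption or medical-advice scenarios, would come from adding templates or from generating them with an LLM. This sketch does not attempt either.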

2.7.4. Comparison Tests with ChatGPT

3. Results

3.1. Results of Information Extraction Using a Pattern-Matching Function

3.2. Results of Direct Extraction Using Large Language Models (LLMs)

3.2.1. Results for Llama 2 7B and Gemma 7B Without Fine-Tuning

- Zero-Shot (ZS): The models completely failed to understand the requirement and were unable to generate a relevant output.

- One-Shot (1S): With the addition of a single example, the model produced appropriate output for inputs in the same style as the example. However, it failed to extract the correct data from other input formats, such as those containing a range. For instance, while the model accurately processed the input “I want to walk 10,000 steps daily,” it failed to capture and interpret the range in “I want to walk between 8000 and 12,000 steps daily.”

- Two-Shot (2S): No significant improvement was observed with 2S.

- Few-Shot (5S): Accuracy improved, but the output still contained several errors. Increasing the temperature did not help and sometimes caused format inconsistencies; even at lower temperatures, the model generated incorrect values for certain entities.
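The one-shot model failed on ranges. A pattern-matching function, by contrast, handles range inputs only when someone writes an explicit rule for them. The sketch below is a hypothetical example of the kind of Python and regular-expression code discussed in Section 2.6. It is not the function generated in this study. Within each rule list the first match wins, and any field that no rule covers is returned as `None` instead of a guessed value.

```python
import re

# Hypothetical rules; within each list the first matching rule wins, so order matters.
NUM = r"(\d{1,3}(?:,\d{3})+|\d+)"  # matches "12,000" or "8000"
RANGE_RE = re.compile(rf"between\s+{NUM}\s+and\s+{NUM}\s+steps", re.IGNORECASE)
SINGLE_RE = re.compile(rf"{NUM}\s+steps", re.IGNORECASE)
FREQ_RULES = [
    (re.compile(r"monday\s*(?:to|-|–)\s*friday", re.IGNORECASE), "Monday-Friday"),
    (re.compile(r"monday\s*(?:to|-|–)\s*saturday", re.IGNORECASE), "Monday-Saturday"),
    (re.compile(r"weekends?|saturday\s*(?:to|-|–)\s*sunday", re.IGNORECASE), "weekend"),
    (re.compile(r"daily|every day|per day|a day", re.IGNORECASE), "daily"),
    (re.compile(r"weekly|per week|a week", re.IGNORECASE), "weekly"),
]


def to_int(number: str) -> int:
    # Strip thousands separators before converting.
    return int(number.replace(",", ""))


def extract(text: str):
    """Return (low, high, frequency); a field is None when no rule covers it."""
    match = RANGE_RE.search(text)
    if match:
        low, high = to_int(match.group(1)), to_int(match.group(2))
    else:
        match = SINGLE_RE.search(text)
        low = high = to_int(match.group(1)) if match else None
    freq = next((label for rule, label in FREQ_RULES if rule.search(text)), None)
    return low, high, freq


print(extract("I want to walk between 8000 and 12,000 steps daily"))  # (8000, 12000, 'daily')
```

The two-shot example from Appendix A, “I wanna TRY between 1,000 and 2,000 from Monday to Friday,” omits the word “steps.” These rules therefore return no step count for it, which is the out-of-coverage behavior a rule author would need to anticipate.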

3.2.2. Results of Fine-Tuning a Smaller Model

3.2.3. Results of ChatGPT-3.5

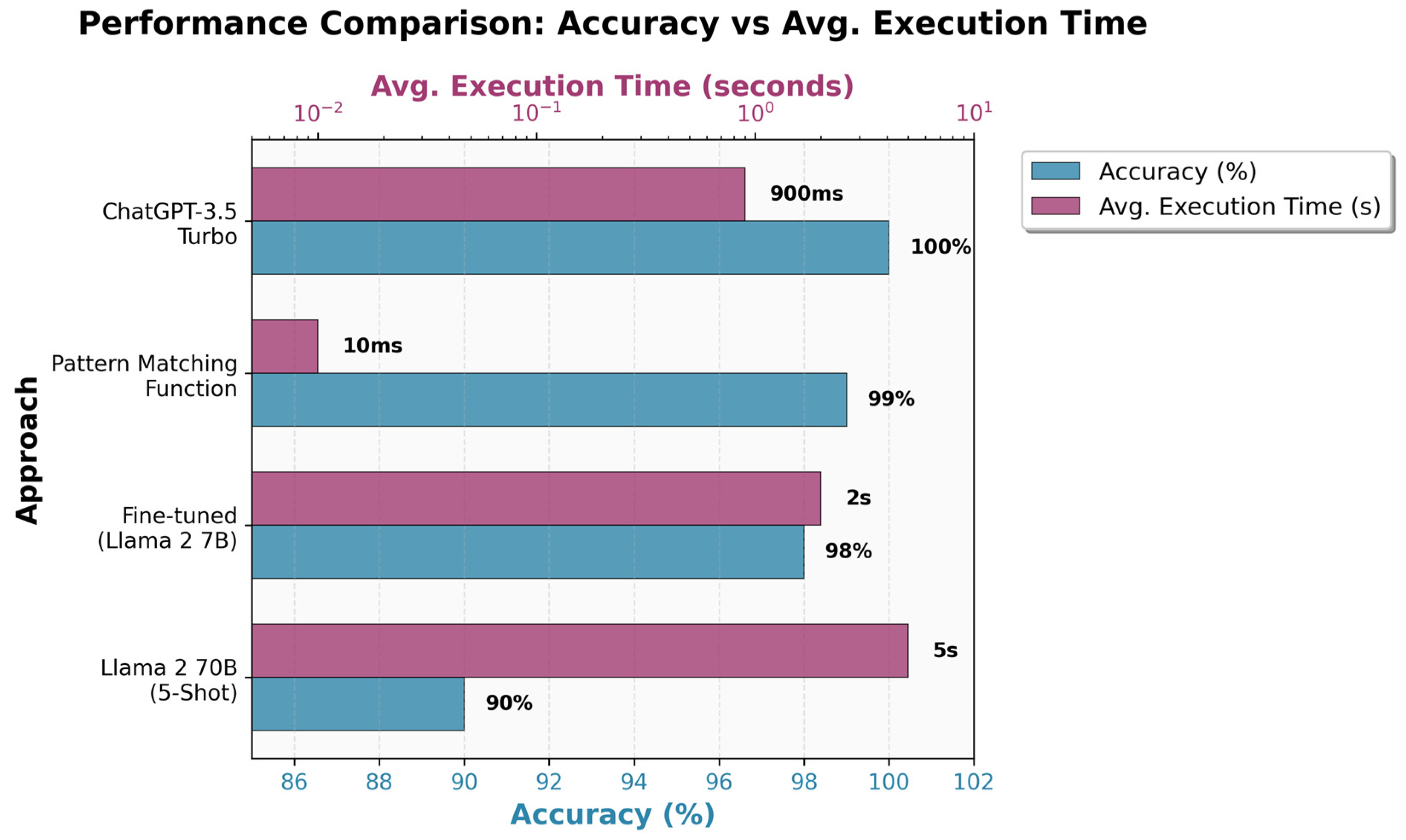

3.3. Comparative Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large language model |
| ML | Machine learning |
| LoRA | Low-Rank Adaptation |
| AI | Artificial intelligence |

Appendix A

| Prompt Type | Prompt |
|---|---|
| Zero-shot | <<sys>>For the given message, generate a response in JSON format that includes the following information: {Measurability: “value “, Specificity: “value”, Attainability: “value”, Frequency: “value”} surround the answer in between <result> and </result> tags. <<sys>> |
| One-shot | <<SYS>> surround the answer in between <result> and </result> tags. <</SYS>> [INST]”the goal for this week is to walk 2,000 steps per day every day.” [/INST] <result> { “Measurability”: “2000”, “Specificity”: “steps”, “Attainability”: “8”, “Frequency”: “daily” } </result> |
| Two-shot | <<SYS>>> surround the answer in between <result> and </result> tags. <</SYS>> [INST] “the goal for this week is to walk 2,000 steps per day every day.” [/INST] <result> { “Measurability”: “2000”, “Specificity”: “steps”, “Attainability”: “8”, “Frequency”: “daily” } </result> [INST] “I wanna TRY between 1,000 and 2,000 from Monday to Friday” [/INST] <result> { “Measurability”: “1000”, “Specificity”: “steps”, “Attainability”: “8”, “Frequency”: “Monday-Friday” } </result> |
| Five-shot | <<SYS>>> surround the answer in between <result> and </result> tags. <</SYS>> [INST] “the goal for this week is to walk 2,000 steps per day every day.” [/INST] <result> { “Measurability”: “2000”, “Specificity”: “steps”, “Attainability”: “8” “Frequency”: “daily” } </result> [INST] “I wanna TRY between 1,000 and 2,000 from Monday to Friday” [/INST] <result> { “Measurability”: “1000”, “Specificity”: “steps”, “Attainability”: “8”, “Frequency”: “Monday-Friday” } </result> [INST] “Hi! Ive been struggling a bit lately, so lets aim for a more achievable goal of 3,000 steps per day.” [/INST] <result> { “Measurability”: “3000”, “Specificity”: “steps”, “Attainability”: “5”, “Frequency”: “daily” } </result> [INST] “Good morning! Im feeling really determined this week. Lets push for 9,000 steps from Saturday to Sunday.” [/INST] <result> { “Measurability”: “9000”, “Specificity”: “steps”, “Attainability”: “10”, “Frequency”: “weekend” } </result> [INST] “15,000 steps…..” [/INST] <result> { “Measurability”: “15000”, “Specificity”: “steps”, “Attainability”: “7”, “Frequency”: “daily” } </result> |


| Model | Developer | Size | Type | Architecture | Context Window | Reproducibility |
|---|---|---|---|---|---|---|
| Meta-Llama 2 7B Chat | Meta | 7 billion | Open weights | Transformer decoder | 4096 tokens | High |
| Meta-Llama 2 70B Chat | Meta | 70 billion | Open weights | Transformer decoder | 4096 tokens | High |
| Gemma 7B Instruct | Google | 7 billion | Open weights | Transformer decoder | 8192 tokens | High |
| ChatGPT-3.5-Turbo | OpenAI | ~175 billion * | Commercial | Transformer | 16,385 tokens | Limited (API variations) |

| Criterion | Python Code with LLM-Drafted RegEx | Downloadable LLM Without Fine-Tuning | State-of-the-Art LLM Without Fine-Tuning | Downloadable LLM with Fine-Tuning |
|---|---|---|---|---|
| Accuracy | Very high; 99% on covered data, but no answer on out-of-coverage data (unless logic for missing or default values is explicitly specified) | Moderate; the most accurate models, such as Llama 2 70B or Gemma 7B, do well depending on the entity type (e.g., up to 100% for specificity, 98% for measurability, and 90% for frequency) | Excellent; ChatGPT-3.5-Turbo achieved 100% accuracy, with broad coverage | Very high; the best fine-tuned models, such as Llama 2 7B, achieved 98%, with broad coverage |
| Execution speed | Always real time; 10 ms on average | Not real time; the best of these models took 5 s on average | Nearly real time; ChatGPT-3.5-Turbo took 900 ms on average | Not real time; the best of these models took 2 s on average |
| Transparency | Mostly clear: rules are readable (with some expertise); clear when out of coverage; when rules overlap, the answer depends on the order of the rules | Opaque: weights have no interpretation; no indication of out of coverage (models may hallucinate) | Opaque: weights have no interpretation; no indication of out of coverage (models may hallucinate) | Opaque: weights have no interpretation; no indication of out of coverage (models may hallucinate) |
| Maintainability and reliability | High: can be updated through manual editing or by providing new labeled examples; no risk if out of coverage | Low: models are not editable, results do not improve with more data, and there is some risk if out of coverage | Mixed: models are not editable but perform well without effort; updates are controlled by the API owner | Moderate: models are not editable but can be downloaded and fine-tuned offline if new data become available; some risk if out of coverage |
| Best use case(s) | Either low- or high-budget real-time interaction, such as virtual or assisted counselling | Low-budget offline documentation tasks or quick prototyping | High-budget offline documentation tasks or creation of synthetic training data | Low-budget offline documentation tasks or creation of synthetic training data |
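The per-attribute accuracies in the comparison of approaches imply that each extracted field is scored separately. The following is a minimal sketch of such scoring. It assumes case-insensitive exact match per field and counts a missing answer (`None`) as incorrect. The study's actual scoring rules may differ, and `field_accuracy` and `coverage` are hypothetical helpers. Reporting coverage separately from accuracy mirrors the distinction drawn for the regex approach: high accuracy on covered data, and no answer otherwise.

```python
FIELDS = ("Measurability", "Specificity", "Attainability", "Frequency")


def normalise(value) -> str:
    # Assumed normalisation: compare as trimmed, lower-cased strings.
    return str(value).strip().lower()


def field_accuracy(predictions, gold):
    """Per-field exact-match accuracy; a None prediction (no answer) counts as wrong."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("need equally sized, non-empty prediction and gold lists")
    correct = dict.fromkeys(FIELDS, 0)
    for pred, ref in zip(predictions, gold):
        if pred is None:
            continue
        for field in FIELDS:
            if field in pred and normalise(pred[field]) == normalise(ref[field]):
                correct[field] += 1
    return {field: correct[field] / len(gold) for field in FIELDS}


def coverage(predictions):
    """Fraction of inputs for which the extractor returned any answer."""
    if not predictions:
        return 0.0
    return sum(p is not None for p in predictions) / len(predictions)


gold = [
    {"Measurability": "10000", "Specificity": "steps", "Attainability": "8", "Frequency": "daily"},
    {"Measurability": "3000", "Specificity": "steps", "Attainability": "5", "Frequency": "daily"},
]
pred = [{"Measurability": "10000", "Specificity": "Steps", "Attainability": "7", "Frequency": "daily"}, None]
print(field_accuracy(pred, gold))
# prints {'Measurability': 0.5, 'Specificity': 0.5, 'Attainability': 0.0, 'Frequency': 0.5}
print(coverage(pred))  # 0.5
```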

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kanduri, S.S.A.; Prasad, A.; McRoy, S. Using Large Language Models to Extract Structured Data from Health Coaching Dialogues: A Comparative Study of Code Generation Versus Direct Information Extraction. BioMedInformatics 2025, 5, 50. https://doi.org/10.3390/biomedinformatics5030050

Kanduri SSA, Prasad A, McRoy S. Using Large Language Models to Extract Structured Data from Health Coaching Dialogues: A Comparative Study of Code Generation Versus Direct Information Extraction. BioMedInformatics. 2025; 5(3):50. https://doi.org/10.3390/biomedinformatics5030050

Chicago/Turabian Style: Kanduri, Sai Sangameswara Aadithya, Apoorv Prasad, and Susan McRoy. 2025. "Using Large Language Models to Extract Structured Data from Health Coaching Dialogues: A Comparative Study of Code Generation Versus Direct Information Extraction" BioMedInformatics 5, no. 3: 50. https://doi.org/10.3390/biomedinformatics5030050

APA Style: Kanduri, S. S. A., Prasad, A., & McRoy, S. (2025). Using Large Language Models to Extract Structured Data from Health Coaching Dialogues: A Comparative Study of Code Generation Versus Direct Information Extraction. BioMedInformatics, 5(3), 50. https://doi.org/10.3390/biomedinformatics5030050