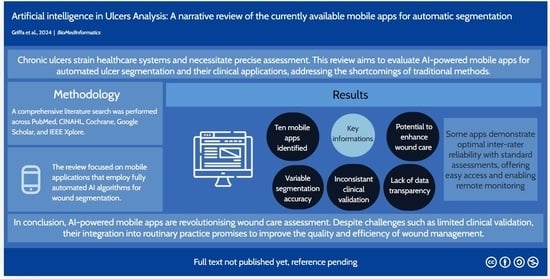

Artificial Intelligence in Wound Care: A Narrative Review of the Currently Available Mobile Apps for Automatic Ulcer Segmentation

Abstract

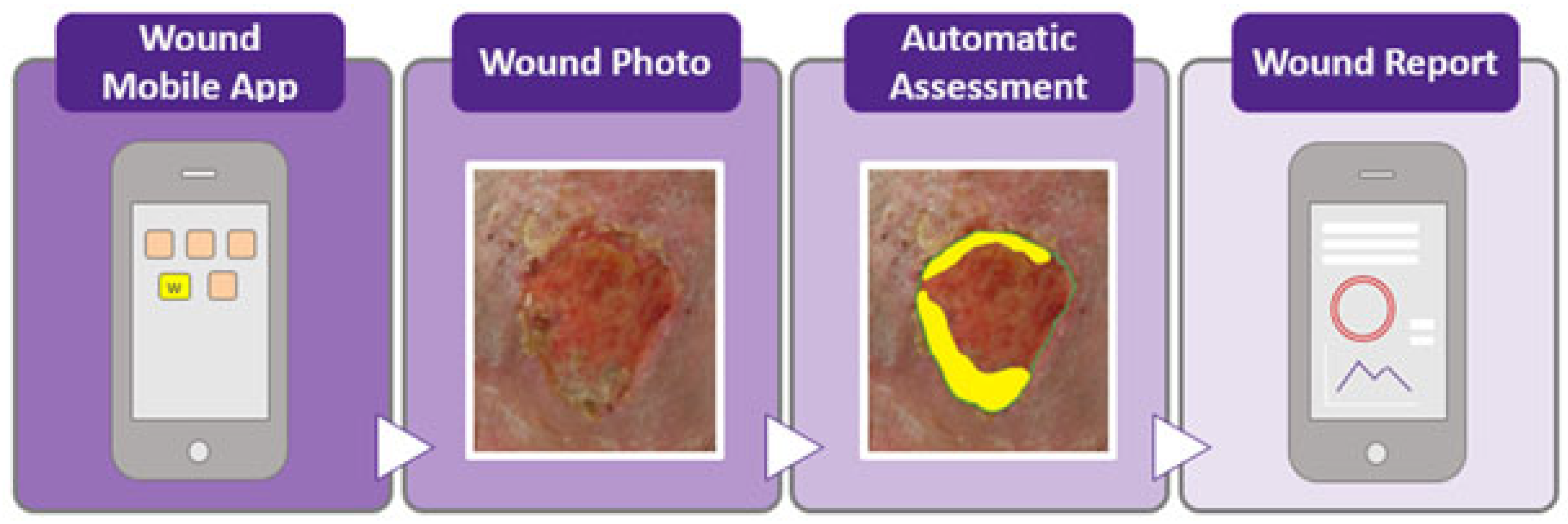

1. Introduction

2. Material and Methods

2.1. Literature Search Strategy

2.2. Data Extraction

2.3. App Selection Criteria and Scoring Classification

- Regulatory approval (up to 2 points): Applications were awarded up to two points if they had been approved or registered with recognised regulatory agencies such as the FDA or relevant European agencies. Regulatory approval indicates adherence to safety and efficacy standards, which is critical for clinical use. Although important, this criterion was given a relatively low weight so that it would not unduly influence the overall ranking relative to performance metrics.

- Platform availability (up to 2 points): Applications received up to two points for availability on major mobile platforms (iOS and Android). Platform accessibility is important for widespread adoption by healthcare providers and patients, but it was assigned a lower weight to reflect its supportive role in usability rather than core clinical effectiveness.

- Peer-reviewed studies or validation data (up to 2 points): Up to two points were awarded if the application had been validated in peer-reviewed studies, as this provides a measure of scientific credibility. This criterion was scored modestly to acknowledge validation without allowing it to disproportionately affect the overall ranking.

- Disclosure of methods/algorithms and public dataset (up to 2 points): Points were awarded for disclosing the segmentation method and for using a publicly available dataset (one point each). Applications that disclosed their segmentation algorithms and datasets scored higher, as transparency facilitates scientific scrutiny and reproducibility. This criterion was also capped at a low score so that it would not outweigh core performance metrics.

- Inter-rater reliability and performance metrics (up to 10 points): This parameter was the most heavily weighted, with applications awarded up to ten points based on statistical measures of performance such as inter-rater reliability, the Dice similarity coefficient, pixel-based accuracy, and AUC. High inter-rater reliability indicates that measurements are reproducible across different observers, which is crucial in clinical contexts. Because this criterion most directly reflects how well the segmentation algorithm performs, it carried the highest potential score so that it would meaningfully shape the overall ranking.
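The five criteria above add up to a maximum of 18 points (2 + 2 + 2 + 2 + 10), the denominator used in the results table. The Python sketch below shows how a total could be computed. The criterion keys are hypothetical names chosen for illustration, and the sketch assumes that criteria marked N/A score zero and that the transparency criterion splits into one point for a disclosed method and one for a public dataset, as the table's totals suggest.

```python
# Maximum points per criterion, following Section 2.3 (2 + 2 + 2 + 2 + 10 = 18).
# Key names are hypothetical labels, not terminology from the review.
MAX_POINTS = {
    "regulatory_approval": 2,
    "platform_availability": 2,
    "peer_reviewed_validation": 2,
    "methods_and_dataset_disclosure": 2,  # assumed: 1 for method, 1 for public dataset
    "reliability_and_performance": 10,
}
MAX_TOTAL = sum(MAX_POINTS.values())


def total_score(points: dict[str, int]) -> int:
    """Sum the per-criterion points, clamping each to [0, cap].

    Criteria that are missing (e.g. "N/A" in the table) count as 0.
    """
    unknown = set(points) - set(MAX_POINTS)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return sum(min(max(points.get(name, 0), 0), cap) for name, cap in MAX_POINTS.items())


# Example: the NDKare row of the results table.
ndkare = {
    "regulatory_approval": 2,
    "platform_availability": 2,
    "peer_reviewed_validation": 2,
    "methods_and_dataset_disclosure": 1,  # method disclosed, no public dataset
    "reliability_and_performance": 10,
}
print(f"{total_score(ndkare)}/{MAX_TOTAL}")  # 17/18
```

With these inputs the NDKare row reproduces its 17/18 total; the clamping only guards against an entry exceeding its criterion's cap.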
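Two of the performance metrics named above can be stated precisely for binary segmentation masks: the Dice similarity coefficient, 2|A ∩ B| / (|A| + |B|), and pixel accuracy, the fraction of pixels labelled identically in the predicted and reference masks. A minimal NumPy sketch follows. Treating two empty masks as a Dice of 1.0 is a convention assumed here, not something the reviewed studies specify.

```python
import numpy as np


def dice_coefficient(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    a, b = pred.astype(bool), truth.astype(bool)
    denom = int(a.sum()) + int(b.sum())
    if denom == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2.0 * int(np.logical_and(a, b).sum()) / denom


def pixel_accuracy(pred: np.ndarray, truth: np.ndarray) -> float:
    """Fraction of pixels whose wound/background label matches the reference."""
    return float(np.mean(pred.astype(bool) == truth.astype(bool)))


# Toy 10 x 10 example: the predicted 4 x 4 wound is shifted down by one row.
truth = np.zeros((10, 10), dtype=bool)
truth[2:6, 2:6] = True
pred = np.zeros((10, 10), dtype=bool)
pred[3:7, 2:6] = True
print(dice_coefficient(pred, truth))  # 0.75
print(pixel_accuracy(pred, truth))    # 0.92
```

The example also shows why the two metrics differ: a one-row shift costs 25% in Dice but only 8% in pixel accuracy, because the large, correctly labelled background inflates the latter.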

3. Results

- Healthy.io’s Minuteful for Wound (Israel, 2019)

- Wound Vision Scout Mobile App (USA, 2019)

- APD Skin Monitoring App (Singapore, 2019)

- GrabCut algorithm: This method performs interactive segmentation based on graph cuts and requires the user to draw a rectangle around the wound. Although accurate, it is slow and depends on manual input, which makes it less efficient [31].

- Colour thresholding: The second approach detects the colours typical of wounds (e.g., shades of red). It quickly separates wound pixels from the background and then uses contour detection to calculate the area. This method is faster than GrabCut and more accurate [32].
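The colour-thresholding approach can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the APD app's code: the thresholds `min_red` and `margin` are hypothetical, the area is obtained by counting mask pixels instead of by contour detection, and `cm_per_pixel` assumes the image scale is already known from some calibration.

```python
import numpy as np


def red_wound_mask(rgb: np.ndarray, min_red: int = 100, margin: int = 40) -> np.ndarray:
    """Mark pixels whose red channel clearly dominates green and blue.

    rgb is an H x W x 3 uint8 image. min_red and margin are illustrative
    (hypothetical) thresholds, not the values used by the APD app.
    """
    img = rgb.astype(np.int16)  # widen first so channel differences cannot wrap around
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return (r >= min_red) & (r - g >= margin) & (r - b >= margin)


def mask_area_cm2(mask: np.ndarray, cm_per_pixel: float) -> float:
    """Wound area = number of wound pixels x area of one pixel (assumes known scale)."""
    return float(np.count_nonzero(mask)) * cm_per_pixel ** 2


# Synthetic 100 x 100 photo: skin-toned background with a 20 x 30 red patch.
image = np.full((100, 100, 3), (210, 180, 160), dtype=np.uint8)
image[40:60, 30:60] = (180, 40, 50)
mask = red_wound_mask(image)
print(np.count_nonzero(mask))                            # 600
print(round(mask_area_cm2(mask, cm_per_pixel=0.05), 2))  # 1.5
```

The background fails the `margin` test (210 - 180 = 30 < 40), so only the 600 pixels of the red patch remain; real photographs would need thresholds tuned to lighting and skin tone.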

- NDKare App (Singapore, 2019)

- For 2D wound segmentation, the NDKare app uses an image processing technique that automatically distinguishes the wound area from the surrounding tissue through pixel analysis. The app identifies the ulcer boundaries and allows users to adjust the outline manually if needed. From this segmentation, it calculates 2D metrics such as length, width, perimeter, and surface area of wounds photographed with the smartphone camera.

- For 3D wound segmentation, the app relies on “structure from motion”. This technique builds a 3D model from a video of the wound: frames captured from different angles are analysed, key points are identified across them, and the wound is reconstructed in 3D by triangulation. The app then generates a “dense 3D point cloud” and a smooth surface reconstruction, from which depth and volume are measured.
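To make the 2D measurements concrete, the sketch below derives length, width, perimeter, and area from a binary wound mask. NDKare's exact definitions are not described here, so these choices are assumptions: length and width are taken as the sides of the axis-aligned bounding box, the perimeter counts pixel edges between wound and background, and `cm_per_pixel` stands for a hypothetical calibration factor.

```python
import numpy as np


def wound_metrics_2d(mask: np.ndarray, cm_per_pixel: float) -> dict[str, float]:
    """Length, width, perimeter and area of a binary wound mask.

    Length/width are the longer/shorter sides of the axis-aligned bounding box;
    perimeter counts pixel edges between wound and background (4-connectivity),
    which overestimates curved or diagonal outlines.
    """
    m = mask.astype(bool)
    if not m.any():
        return {"length": 0.0, "width": 0.0, "perimeter": 0.0, "area": 0.0}
    rows = np.flatnonzero(m.any(axis=1))
    cols = np.flatnonzero(m.any(axis=0))
    extent_y = int(rows[-1] - rows[0] + 1)
    extent_x = int(cols[-1] - cols[0] + 1)
    # Pad with background so outline edges on the image border are counted too.
    p = np.pad(m, 1, mode="constant", constant_values=False)
    edges = np.count_nonzero(p[1:, :] != p[:-1, :]) + np.count_nonzero(p[:, 1:] != p[:, :-1])
    return {
        "length": max(extent_y, extent_x) * cm_per_pixel,
        "width": min(extent_y, extent_x) * cm_per_pixel,
        "perimeter": edges * cm_per_pixel,
        "area": np.count_nonzero(m) * cm_per_pixel ** 2,
    }


mask = np.zeros((100, 100), dtype=bool)
mask[40:60, 30:60] = True  # 20 x 30 pixel wound
metrics = wound_metrics_2d(mask, cm_per_pixel=0.1)
print({k: round(v, 2) for k, v in metrics.items()})
# {'length': 3.0, 'width': 2.0, 'perimeter': 10.0, 'area': 6.0}
```

A clinical tool would more likely measure length along the wound's longest axis and width perpendicular to it; the bounding box keeps the sketch short.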
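The triangulation step of structure from motion can be illustrated with the standard linear (DLT) method for one point seen in two views. This is a simplified sketch: the camera matrices are assumed known, whereas a full structure-from-motion pipeline estimates them from the detected key points and refines everything jointly, and the dense point cloud and surface reconstruction are not shown. The intrinsics `K` and both camera poses are hypothetical values.

```python
import numpy as np


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a 3D point with a 3 x 4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(P1, P2, x1, x2) -> np.ndarray:
    """Linear (DLT) triangulation of one point seen in two views.

    Each view contributes u*P[2] - P[0] and v*P[2] - P[1], whose dot product
    with the homogeneous point must be zero; the solution is the right singular
    vector of the stacked system with the smallest singular value.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Hypothetical intrinsics and two camera poses 10 cm apart (units: metres).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])
point = np.array([0.05, -0.02, 0.5])  # a key point on the wound surface
recovered = triangulate_dlt(P1, P2, project(P1, point), project(P2, point))
print(np.round(recovered, 4))
```

With noise-free projections the recovered point matches the original; with real key-point detections the result is an algebraic least-squares estimate that is usually refined further.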

- Clinicgram (Barcelona, Spain, 2019)

- Swift Skin and Wound App (Canada, 2017)

- Cares4wounds (Singapore, 2019)

- Tissue Analytics (USA, 2014)

- ImitoWound (Switzerland, 2020)

- WoundWiseIQ (USA, 2015)

| App Name | Country | Developer | Available on App Stores and/or Public Repositories | Peer-Reviewed Studies | Regulatory Approval | Public Dataset | Segmentation Technique | Reliability | Total Score |
|---|---|---|---|---|---|---|---|---|---|
| Healthy.io Minuteful for Wound (Wound at home) | Israel | Healthy.io, private company | 2/2 (App Store, Android store) | 1/2 | 2/2, Yes | No | N/A | N/A | 5/18 |
| Wound Vision Scout Mobile App | USA | WoundVision LLC, private company | N/A | 2/2 | No | No | N/A | N/A | 2/18 |
| APD Skin Monitoring App | Singapore | APD Lab, private company | 2/2 (App Store, Android store) | 1/2, scarce | No | No | 1/1, GrabCut [31], RGB thresholds [32] | N/A | 4/18 |
| NDKare App | Singapore | Nucleus Dynamics Pte. Ltd., private company | 2/2 (App Store, Android store, other repositories) | 2/2 | 2/2, Yes | No | 1/1, 2D segmentation by pixel analysis [54] | 10/10 [55] | 17/18 |
| Clinicgram | Spain | Skilled Skin SL, private company | No | No | 2/2, Yes | No | N/A | N/A | 2/18 |
| Swift Skin and Wound | Canada | Swift Medical Inc. | No | 2/2 | 2/2, Yes | No | 1/1, AutoTissue (tissue segmentation model) and AutoTrace (wound segmentation model) | 10/10 [41] | 15/18 |
| Cares4wounds | Singapore | Tetsuyu Healthcare Holdings Pte Ltd. | 2/2 (App Store, Android store) | 2/2 | 2/2, Yes | No | N/A | 9/10 [43] | 15/18 |
| Tissue Analytics | USA | Net Health Company | 2/2 (App Store, Android store) | 2/2 | No | No | N/A | 10/10 [47] | 14/18 |
| ImitoWound | Switzerland | Imito AG | 2/2 (App Store, Android store) | 2/2 | No | No | N/A | 10/10 [56] | 14/18 |
| WoundWiseIQ | USA | Med-Compliance IQ, Inc. | No | No | 2/2 | No | N/A | N/A | 2/18 |

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Mustoe, T.A.; O’Shaughnessy, K.; Kloeters, O. Chronic wound pathogenesis and current treatment strategies: A unifying hypothesis. Plast. Reconstr. Surg. 2006, 117 (Suppl. S7), 35S–41S.

2. Bowers, S.; Franco, E. Chronic Wounds: Evaluation and Management. Am. Fam. Physician 2020, 101, 159–166.

3. Olsson, M.; Järbrink, K.; Divakar, U.; Bajpai, R.; Upton, Z.; Schmidtchen, A.; Car, J. The humanistic and economic burden of chronic wounds: A systematic review. Wound Repair Regen. Off. Publ. Wound Health Soc. Eur. Tissue Repair Soc. 2019, 27, 114–125.

4. Yee, A.; Harmon, J.; Yi, S. Quantitative Monitoring Wound Healing Status Through Three-Dimensional Imaging on Mobile Platforms. J. Am. Coll. Clin. Wound Spec. 2016, 8, 21–27.

5. Samaniego-Ruiz, M.-J.; Llatas, F.P.; Jiménez, O.S. Assessment of chronic wounds in adults: An integrative review. Rev. Esc. Enferm. USP 2018, 52, e03315.

6. Foltynski, P.; Ciechanowska, A.; Ladyzynski, P. Wound surface area measurement methods. Biocybern. Biomed. Eng. 2021, 41, 1454–1465.

7. Martinengo, L.; Olsson, M.; Bajpai, R.; Soljak, M.; Upton, Z.; Schmidtchen, A.; Car, J.; Järbrink, K. Prevalence of chronic wounds in the general population: Systematic review and meta-analysis of observational studies. Ann. Epidemiol. 2019, 29, 8–15.

8. Sen, C.K. Human Wounds and Its Burden: An Updated Compendium of Estimates. Adv. Wound Care 2019, 8, 39–48.

9. Smet, S.; Verhaeghe, S.; Beeckman, D.; Fourie, A.; Beele, H. The process of clinical decision-making in chronic wound care: A scenario-based think-aloud study. J. Tissue Viability 2024, 33, 231–238.

10. Khoo, R.; Jansen, S. The Evolving Field of Wound Measurement Techniques: A Literature Review. Wounds Compend. Clin. Res. Pract. 2016, 28, 175–181.

11. Wang, C.; Yan, X.; Smith, M.; Kochhar, K.; Rubin, M.; Warren, S.M.; Wrobel, J.; Lee, H. A unified framework for automatic wound segmentation and analysis with deep convolutional neural networks. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 5 November 2015; pp. 2415–2418.

12. Koepp, J.; Baron, M.V.; Martins, P.R.H.; Brandenburg, C.; Kira, A.T.F.; Trindade, V.D.; Dominguez, L.M.L.; Carneiro, M.; Frozza, R.; Possuelo, L.G.; et al. The Quality of Mobile Apps Used for the Identification of Pressure Ulcers in Adults: Systematic Survey and Review of Apps in App Stores. JMIR MHealth UHealth 2020, 8, e14266.

13. Sutton, R.T.; Pincock, D.; Baumgart, D.C.; Sadowski, D.C.; Fedorak, R.N.; Kroeker, K.I. An overview of clinical decision support systems: Benefits, risks, and strategies for success. NPJ Digit. Med. 2020, 3, 17.

14. Iglesias, L.L.; Bellón, P.S.; Del Barrio, A.P.; Fernández-Miranda, P.M.; González, D.R.; Vega, J.A.; Mandly, A.A.G.; Blanco, J.A.P. A primer on deep learning and convolutional neural networks for clinicians. Insights Imaging 2021, 12, 117.

15. Le, D.T.P.; Pham, T.D. Unveiling the role of artificial intelligence for wound assessment and wound healing prediction. Explor. Med. 2023, 4, 589–611.

16. Shamloul, N.; Ghias, M.H.; Khachemoune, A. The Utility of Smartphone Applications and Technology in Wound Healing. Int. J. Low. Extrem. Wounds 2019, 18, 228–235.

17. Anisuzzaman, D.M.; Wang, C.; Rostami, B.; Gopalakrishnan, S.; Niezgoda, J.; Yu, Z. Image-Based Artificial Intelligence in Wound Assessment: A Systematic Review. Adv. Wound Care 2022, 11, 687–709.

18. Poon, T.W.K.; Friesen, M.R. Algorithms for Size and Color Detection of Smartphone Images of Chronic Wounds for Healthcare Applications. IEEE Access 2015, 3, 1799–1808.

19. Zhang, R.; Tian, D.; Xu, D.; Qian, W.; Yao, Y. A Survey of Wound Image Analysis Using Deep Learning: Classification, Detection, and Segmentation. IEEE Access 2022, 10, 79502–79515.

20. Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.S.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.e9.

21. Scebba, G.; Zhang, J.; Catanzaro, S.; Mihai, C.; Distler, O.; Berli, M.; Karlen, W. Detect-and-segment: A deep learning approach to automate wound image segmentation. Inform. Med. Unlocked 2022, 29, 100884.

22. Sorour, S.E.; El-Mageed, A.A.A.; Albarrak, K.M.; Alnaim, A.K.; Wafa, A.A.; El-Shafeiy, E. Classification of Alzheimer’s disease using MRI data based on Deep Learning Techniques. J. King Saud Univ.—Comput. Inf. Sci. 2024, 36, 101940.

23. Healthy.io|Digital Wound Management. Available online: https://healthy.io/services/wound/ (accessed on 30 September 2024).

24. Nussbaum, S.R.; Carter, M.J.; Fife, C.E.; DaVanzo, J.; Haught, R.; Nusgart, M.; Cartwright, D. An Economic Evaluation of the Impact, Cost, and Medicare Policy Implications of Chronic Nonhealing Wounds. Value Health J. Int. Soc. Pharmacoeconomics Outcomes Res. 2018, 21, 27–32.

25. Keegan, A.C.; Bose, S.; McDermott, K.M.; Starks White, M.P.; Stonko, D.P.; Jeddah, D.; Lev-Ari, E.; Rutkowski, J.; Sherman, R.; Abularrage, C.J.; et al. Implementation of a patient-centered remote wound monitoring system for management of diabetic foot ulcers. Front. Endocrinol. 2023, 14, 1157518, Erratum in Front. Endocrinol. 2023, 14, 1235970.

26. Kivity, S.; Rajuan, E.; Arbeli, S.; Alcalay, T.; Shiri, L.; Orvieto, N.; Alon, Y.; Saban, M. Optimising wound monitoring: Can digital tools improve healing outcomes and clinic efficiency. J. Clin. Nurs. 2024, 33, 4014–4023.

27. Wound Imaging Solutions—WoundVision. Available online: https://woundvision.com/ (accessed on 30 September 2024).

28. Langemo, D.; Spahn, J.; Snodgrass, L. Accuracy and Reproducibility of the Wound Shape Measuring and Monitoring System. Adv. Skin Wound Care 2015, 28, 317–323.

29. Langemo, D.; Spahn, J.; Spahn, T.; Pinnamaneni, V.C. Comparison of standardized clinical evaluation of wounds using ruler length by width and Scout length by width measure and Scout perimeter trace. Adv. Skin Wound Care 2015, 28, 116–121.

30. Wu, W.; Yong, K.Y.W.; Federico, M.A.J.; Gan, S.K.-E. The APD Skin Monitoring App for Wound Monitoring: Image Processing, Area Plot, and Colour Histogram. Spamd [Internet]. 2019. Available online: https://scienceopen.com/hosted-document?doi=10.30943/2019/28052019 (accessed on 30 September 2024).

31. Tang, M.; Gorelick, L.; Veksler, O.; Boykov, Y. GrabCut in One Cut; CVF Open Access: Salt Lake City, UT, USA, 2013; pp. 1769–1776. Available online: https://openaccess.thecvf.com/content_iccv_2013/html/Tang_GrabCut_in_One_2013_ICCV_paper.html (accessed on 30 September 2024).

32. Gupta, S.; Girshick, R.; Arbeláez, P.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 345–360.

33. Nair, H.K.R. Increasing productivity with smartphone digital imagery wound measurements and analysis. J. Wound Care 2018, 27, S12–S19.

34. Clinicgram—The Revolutionary App that Allows You to Diagnose Diseases with Your Smartphone. Available online: https://www.clinicgram.com/ (accessed on 30 September 2024).

35. Swift Skin and Wound Mobile App and Dashboards. Swift. Available online: https://swiftmedical.com/solution/ (accessed on 30 September 2024).

36. Wang, S.C.; Anderson, J.A.E.; Evans, R.; Woo, K.; Beland, B.; Sasseville, D.; Moreau, L. Point-of-care wound visioning technology: Reproducibility and accuracy of a wound measurement app. PLoS ONE 2017, 12, e0183139.

37. Ramachandram, D.; Ramirez-GarciaLuna, J.L.; Fraser, R.D.J.; Martínez-Jiménez, M.A.; Arriaga-Caballero, J.E.; Allport, J. Fully Automated Wound Tissue Segmentation Using Deep Learning on Mobile Devices: Cohort Study. JMIR MHealth UHealth 2022, 10, e36977.

38. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

39. Liu, Z.; Agu, E.; Pedersen, P.; Lindsay, C.; Tulu, B.; Strong, D. Chronic Wound Image Augmentation and Assessment Using Semi-Supervised Progressive Multi-Granularity EfficientNet. IEEE Open J. Eng. Med. Biol. 2023, 5, 1–17.

40. EfficientNetB0—Furiosa Models. Available online: https://furiosa-ai.github.io/furiosa-models/latest/models/efficientnet_b0/ (accessed on 5 October 2024).

41. Au, Y.; Beland, B.; Anderson, J.A.E.; Sasseville, D.; Wang, S.C. Time-Saving Comparison of Wound Measurement Between the Ruler Method and the Swift Skin and Wound App. J. Cutan. Med. Surg. 2019, 23, 226–228.

42. CARES4WOUNDS Wound Management System|Tetusyu Healthcare. Tetsuyu Healthcare. Available online: https://tetsuyuhealthcare.com/solutions/wound-management-system/ (accessed on 5 October 2024).

43. Chan, K.S.; Chan, Y.M.; Tan, A.H.M.; Liang, S.; Cho, Y.T.; Hong, Q.; Yong, E.; Chong, L.R.C.; Zhang, L.; Tan, G.W.L.; et al. Clinical validation of an artificial intelligence-enabled wound imaging mobile application in diabetic foot ulcers. Int. Wound J. 2022, 19, 114–124.

44. Kitamura, A.; Nakagami, G.; Okabe, M.; Muto, S.; Abe, T.; Doorenbos, A.; Sanada, H. An application for real-time, remote consultations for wound care at home with wound, ostomy and continence nurses: A case study. Wound Pract. Res. 2022, 30, 158–162.

45. Home. Tissue Analytics. 2020. Available online: https://www.tissue-analytics.com/ (accessed on 5 October 2024).

46. Barakat-Johnson, M.; Jones, A.; Burger, M.; Leong, T.; Frotjold, A.; Randall, S.; Kim, B.; Fethney, J.; Coyer, F. Reshaping wound care: Evaluation of an artificial intelligence app to improve wound assessment and management amid the COVID-19 pandemic. Int. Wound J. 2022, 19, 1561–1577.

47. Fong, K.Y.; Lai, T.P.; Chan, K.S.; See, I.J.L.; Goh, C.C.; Muthuveerappa, S.; Tan, A.H.; Liang, S.; Lo, Z.J. Clinical validation of a smartphone application for automated wound measurement in patients with venous leg ulcers. Int. Wound J. 2023, 20, 751–760.

48. Wound Assessment Tool—imitoWound App. imito AG. Available online: https://imito.io/en/imitowound (accessed on 5 October 2024).

49. Guarro, G.; Cozzani, F.; Rossini, M.; Bonati, E.; Del Rio, P. Wounds morphologic assessment: Application and reproducibility of a virtual measuring system, pilot study. Acta Biomed. Atenei Parm. 2021, 92, e2021227.

50. Schroeder, A.B.; Dobson, E.T.A.; Rueden, C.T.; Tomancak, P.; Jug, F.; Eliceiri, K.W. The ImageJ ecosystem: Open-source software for image visualization, processing, and analysis. Protein Sci. Publ. Protein Soc. 2021, 30, 234–249.

51. Sia, D.K.; Mensah, K.B.; Opoku-Agyemang, T.; Folitse, R.D.; Darko, D.O. Mechanisms of ivermectin-induced wound healing. BMC Vet. Res. 2020, 16, 397.

52. Khac, A.D.; Jourdan, C.; Fazilleau, S.; Palayer, C.; Laffont, I.; Dupeyron, A.; Verdun, S.; Gelis, A. mHealth App for Pressure Ulcer Wound Assessment in Patients With Spinal Cord Injury: Clinical Validation Study. JMIR MHealth UHealth 2021, 9, e26443.

53. WoundWiseIQ—Image Analytics. Improved Outcomes. Available online: https://woundwiseiq.com/ (accessed on 5 October 2024).

54. Phung, S.L.; Bouzerdoum, A.; Chai, D. Skin segmentation using color pixel classification: Analysis and comparison. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 148–154.

55. Kuang, B.; Pena, G.; Szpak, Z.; Edwards, S.; Battersby, R.; Cowled, P.; Dawson, J.; Fitridge, R. Assessment of a smartphone-based application for diabetic foot ulcer measurement. Wound Repair Regen. 2021, 29, 460–465.

56. Younis, P.H.M.; El Sebaie, A.E.M.; Waked, I.S.; Bayoumi, M.B.I. Validity and Reliability of a Smartphone Application in Measuring Surface Area of Lower Limb Chronic Wounds. Egypt. J. Hosp. Med. 2022, 89, 6612–6616.

57. El-Rashidy, N.; El-Sappagh, S.; Islam, S.M.R.; El-Bakry, H.M.; Abdelrazek, S. Mobile Health in Remote Patient Monitoring for Chronic Diseases: Principles, Trends, and Challenges. Diagnostics 2021, 11, 607.

58. Ohura, N.; Mitsuno, R.; Sakisaka, M.; Terabe, Y.; Morishige, Y.; Uchiyama, A.; Okoshi, T.; Shinji, I.; Takushima, A. Convolutional neural networks for wound detection: The role of artificial intelligence in wound care. J. Wound Care 2019, 28, S13–S24.

59. Dabas, M.; Schwartz, D.; Beeckman, D.; Gefen, A. Application of Artificial Intelligence Methodologies to Chronic Wound Care and Management: A Scoping Review. Adv. Wound Care 2023, 12, 205–240.

60. Kim, P.J.; Homsi, H.A.; Sachdeva, M.; Mufti, A.; Sibbald, R.G. Chronic Wound Telemedicine Models Before and During the COVID-19 Pandemic: A Scoping Review. Adv. Skin Wound Care 2022, 35, 87–94.

61. Foltynski, P.; Ladyzynski, P. Internet service for wound area measurement using digital planimetry with adaptive calibration and image segmentation with deep convolutional neural networks. Biocybern. Biomed. Eng. 2023, 43, 17–29.

62. Lucas, Y.; Niri, R.; Treuillet, S.; Douzi, H.; Castaneda, B. Wound Size Imaging: Ready for Smart Assessment and Monitoring. Adv. Wound Care 2021, 10, 641–661.

63. Stoyanov, S.R.; Hides, L.; Kavanagh, D.J.; Zelenko, O.; Tjondronegoro, D.; Mani, M. Mobile app rating scale: A new tool for assessing the quality of health mobile apps. JMIR MHealth UHealth 2015, 3, e27.

64. Curti, N.; Merli, Y.; Zengarini, C.; Giampieri, E.; Merlotti, A.; Dall’Olio, D.; Marcelli, E.; Bianchi, T.; Castellani, G. Effectiveness of Semi-Supervised Active Learning in Automated Wound Image Segmentation. Int. J. Mol. Sci. 2023, 24, 706.

65. Curti, N.; Merli, Y.; Zengarini, C.; Starace, M.; Rapparini, L.; Marcelli, E.; Carlini, G.; Buschi, D.; Castellani, G.C.; Piraccini, B.M.; et al. Automated Prediction of Photographic Wound Assessment Tool in Chronic Wound Images. J. Med. Syst. 2024, 48, 14.

66. Deniz-Garcia, A.; Fabelo, H.; Rodriguez-Almeida, A.J.; Zamora-Zamorano, G.; Castro-Fernandez, M.; Ruano, M.d.P.A.; Solvoll, T.; Granja, C.; Schopf, T.R.; Callico, G.M.; et al. Quality, Usability, and Effectiveness of mHealth Apps and the Role of Artificial Intelligence: Current Scenario and Challenges. J. Med. Internet Res. 2023, 25, e44030.

67. General Data Protection Regulation (GDPR)|EUR-Lex. Available online: https://eur-lex.europa.eu/EN/legal-content/summary/general-data-protection-regulation-gdpr.html (accessed on 5 October 2024).

68. Huang, P.-H.; Pan, Y.-H.; Luo, Y.-S.; Chen, Y.-F.; Lo, Y.-C.; Chen, T.P.-C.; Perng, C.-K. Development of a deep learning-based tool to assist wound classification. J. Plast. Reconstr. Aesthetic Surg. JPRAS 2023, 79, 89–97.

69. Patel, Y.; Shah, T.; Dhar, M.K.; Zhang, T.; Niezgoda, J.; Gopalakrishnan, S.; Yu, Z. Integrated image and location analysis for wound classification: A deep learning approach. Sci. Rep. 2024, 14, 7043.

70. Malihi, L.; Hüsers, J.; Richter, M.L.; Moelleken, M.; Przysucha, M.; Busch, D.; Heggemann, J.; Hafer, G.; Wiemeyer, S.; Heidemann, G.; et al. Automatic Wound Type Classification with Convolutional Neural Networks. Stud. Health Technol. Inform. 2022, 295, 281–284.

71. Zhang, P. Image Enhancement Method Based on Deep Learning. Math. Probl. Eng. 2022, 2022, 6797367.

72. Chairat, S.; Chaichulee, S.; Dissaneewate, T.; Wangkulangkul, P.; Kongpanichakul, L. AI-Assisted Assessment of Wound Tissue with Automatic Color and Measurement Calibration on Images Taken with a Smartphone. Healthcare 2023, 11, 273.

73. Gagnon, J.; Probst, S.; Chartrand, J.; Lalonde, M. Self-supporting wound care mobile applications for nurses: A scoping review protocol. J. Tissue Viability 2023, 32, 79–84.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Griffa, D.; Natale, A.; Merli, Y.; Starace, M.; Curti, N.; Mussi, M.; Castellani, G.; Melandri, D.; Piraccini, B.M.; Zengarini, C. Artificial Intelligence in Wound Care: A Narrative Review of the Currently Available Mobile Apps for Automatic Ulcer Segmentation. BioMedInformatics 2024, 4, 2321-2337. https://doi.org/10.3390/biomedinformatics4040126
