Should AI-Powered Whole-Genome Sequencing Be Used Routinely for Personalized Decision Support in Surgical Oncology—A Scoping Review

Abstract

1. Introduction

2. The Evolution of DNA Sequencing

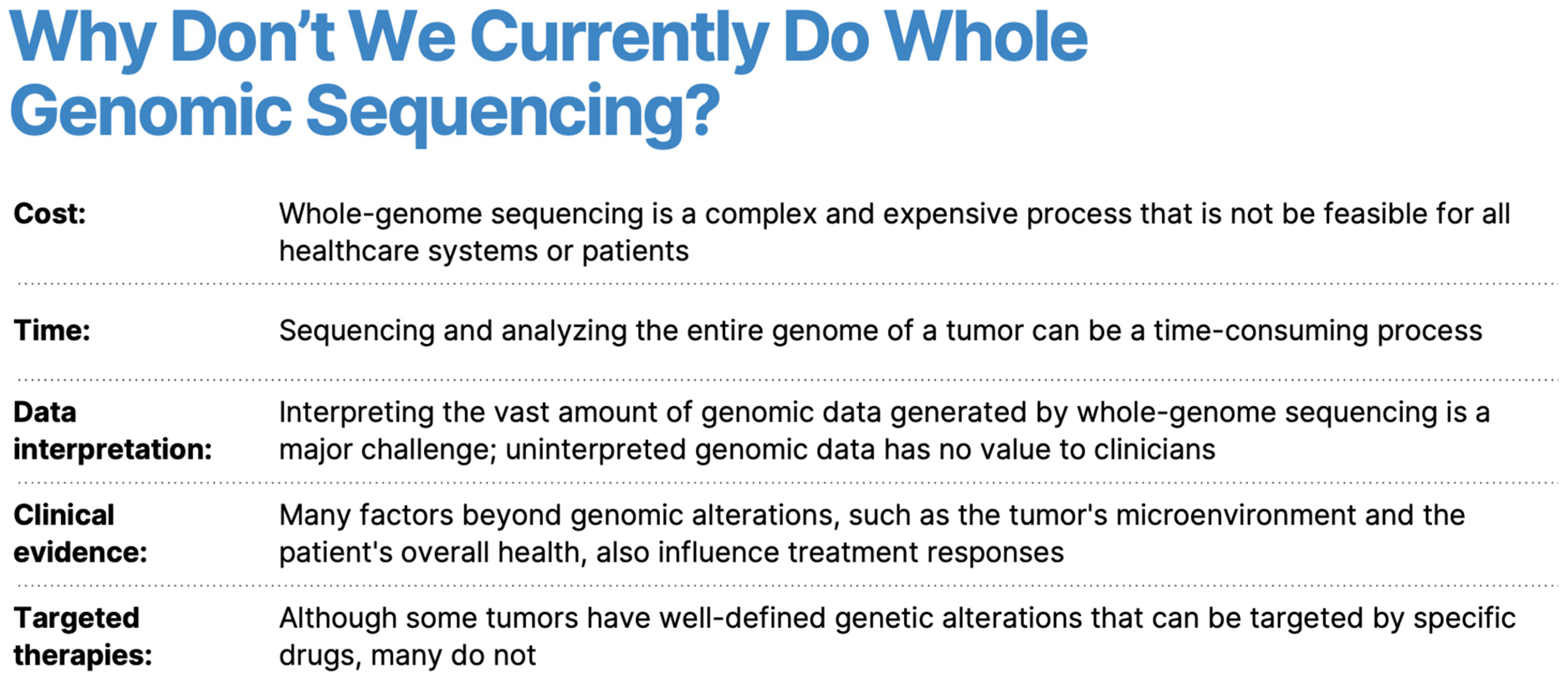

3. What Is Whole Genomic Sequencing (WGS)?

4. AI-Powered Whole Genomic Sequencing

5. Pharmacogenomic Deep Learning Models

6. Exploring AI-Powered Genomics in Multi-Omics Research

6.1. Radiomics, Pathomics and Surgomics

6.2. Proteomics, Transcriptomics, and Genomics

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alagarswamy, K.; Shi, W.; Boini, A.; Messaoudi, N.; Grasso, V.; Cattabiani, T.; Turner, B.; Croner, R.; Kahlert, U.D.; Gumbs, A. Should AI-Powered Whole-Genome Sequencing Be Used Routinely for Personalized Decision Support in Surgical Oncology—A Scoping Review. BioMedInformatics 2024, 4, 1757–1772. https://doi.org/10.3390/biomedinformatics4030096
