Cloud Detection Methods for Optical Satellite Imagery: A Comprehensive Review

Abstract

1. Introduction

- Land Cover Monitoring: RS images help monitor changes in land cover (such as forests, grasslands, urban areas, and agricultural fields), urban growth and expansion (such as new buildings, roads, and other urban infrastructure), deforestation, and mountain and canyon landscapes, and they support change detection [7,8,9].

- Geology and Natural Resource Management: RS is extensively used to locate mineral resources, monitor mining, manage freshwater resources (such as lakes, rivers, and glaciers), and support geological surveys that identify rock formations, faults, folds, and other geological structures [6,14].

- Military Surveillance: RS helps gather visual intelligence on enemy activities, troop movements, equipment deployment, and infrastructure changes [15]. It also helps monitor borders, track suspicious activities, and identify potential threats. At sea, it supports the monitoring of maritime activities such as naval vessel movements, illegal fishing, and smuggling [16].

- Oceanography: RS aids in estimating ocean currents and marine life distribution, understanding sea dynamics, and assessing the effects of climate change [17].

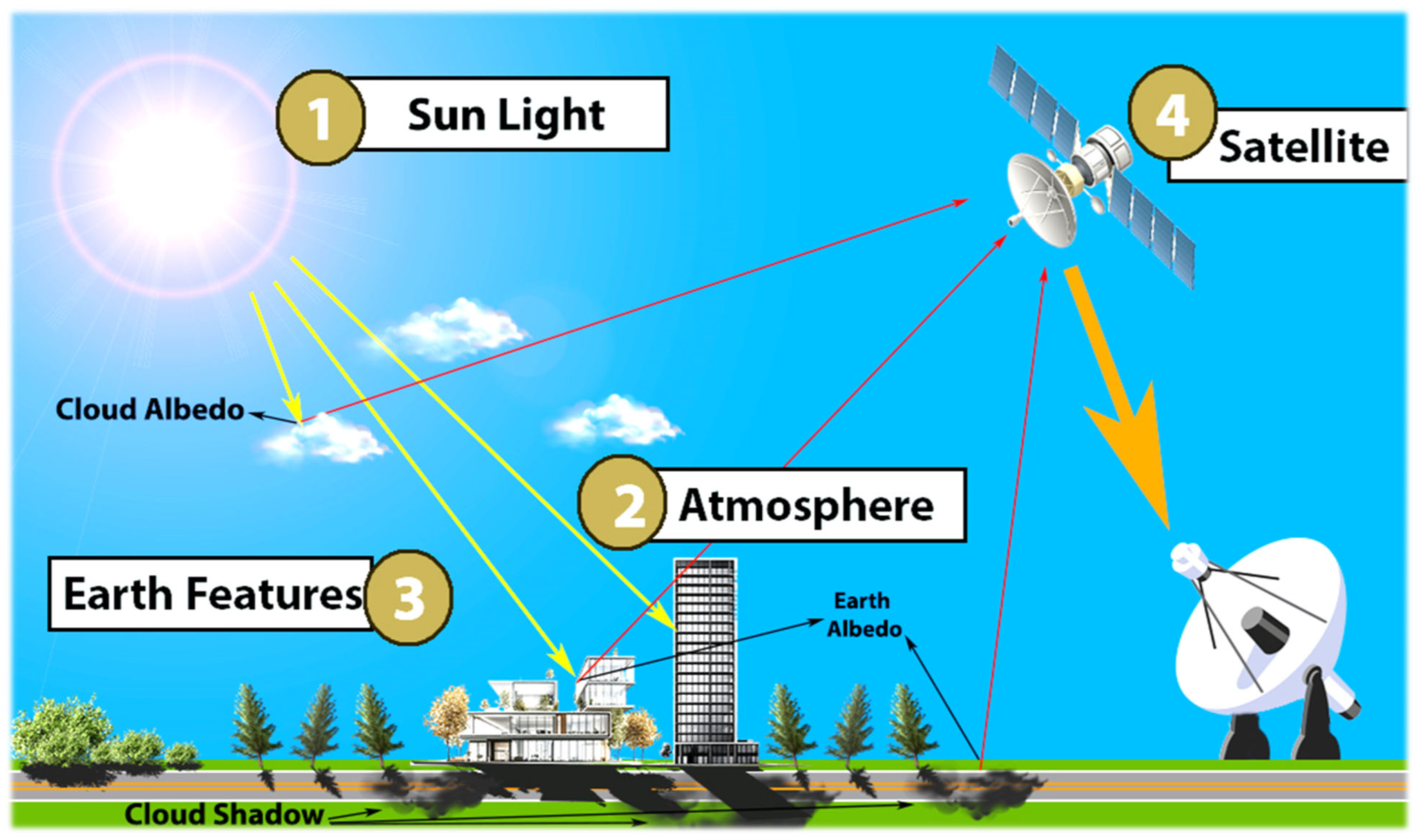

2. Cloud and Its Effect

- Low clouds: These lie close to the ground, within 2 km above ground level (AGL). They typically form when water vapor condenses into droplets, although in high-latitude regions they can also consist of ice particles. They usually comprise stratus, stratocumulus, and nimbostratus clouds. They are generally optically thick, so they largely obscure the objects beneath them and cast dark shadows. Vertical clouds typically extend through a wide range of altitudes, from low to high levels. Like low clouds, they are optically thick, meaning they strongly block or scatter incoming radiation, and they look and behave similarly in imagery.

- Medium clouds: These lie between low and high clouds (2 km to 6 km) and include altostratus and altocumulus clouds. Owing to the low temperatures at these altitudes, they are composed of both ice crystals and water droplets [31]. They are slightly less dense than low clouds, but objects beneath them are less than 50% visible, and their shadows fall slightly away from the cloud in satellite imagery. When only two cloud types (low and high) are considered, medium clouds fall in the low cloud category.

- High clouds: These usually occur above 6 km and include cirrus, cirrostratus, and cirrocumulus clouds formed by ice crystals in stable air [32]. They are optically thin, light, and streaky, and they cover large areas. Ground objects remain visible beneath them but look hazy and blurred [33]. Their shadows are usually very faint and are often removed during the atmospheric correction of satellite imagery.
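The height bands above can be written as a small lookup. The sketch below is illustrative only: the function name `cloud_level` is hypothetical, it assumes each cloud has one representative height (vertical clouds, which span several levels, do not fit this model), and it assumes that a height exactly on a boundary belongs to the lower class.

```python
# Height boundaries in km above ground level (AGL), as given in the text.
LOW_MAX_KM = 2.0
MEDIUM_MAX_KM = 6.0


def cloud_level(height_km: float, two_class: bool = False) -> str:
    """Return "low", "medium", or "high" for a cloud at height_km AGL.

    Assumptions (not from the review): a height exactly on a boundary is
    assigned to the lower class, and each cloud has one representative
    height. With two_class=True, medium clouds are merged into "low".
    """
    if height_km < 0:
        raise ValueError("height must be non-negative")
    if height_km <= LOW_MAX_KM:
        return "low"
    if height_km <= MEDIUM_MAX_KM:
        return "low" if two_class else "medium"
    return "high"
```

For example, a cloud at 4 km is labeled "medium" by default and "low" when only the two-category scheme is used.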

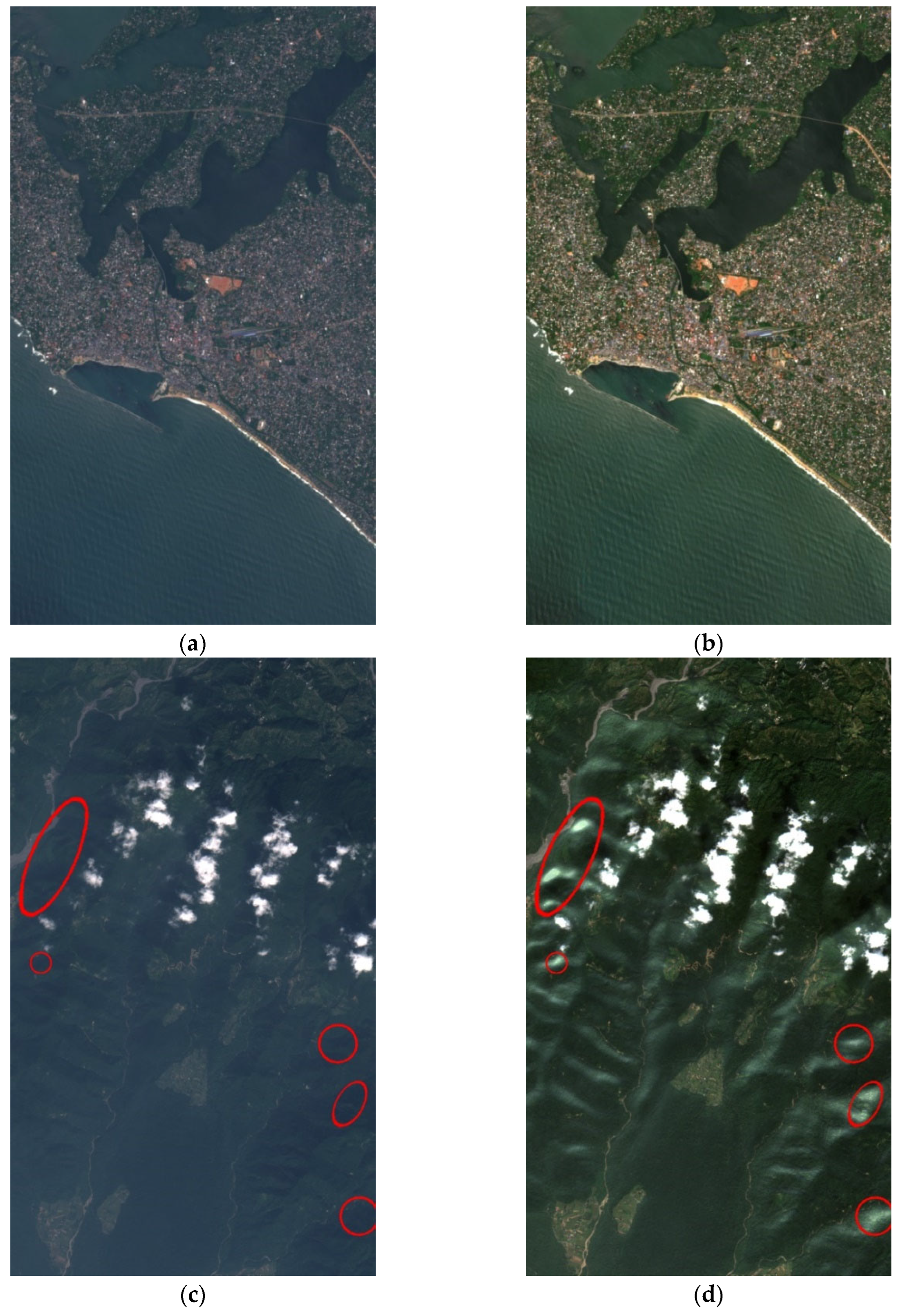

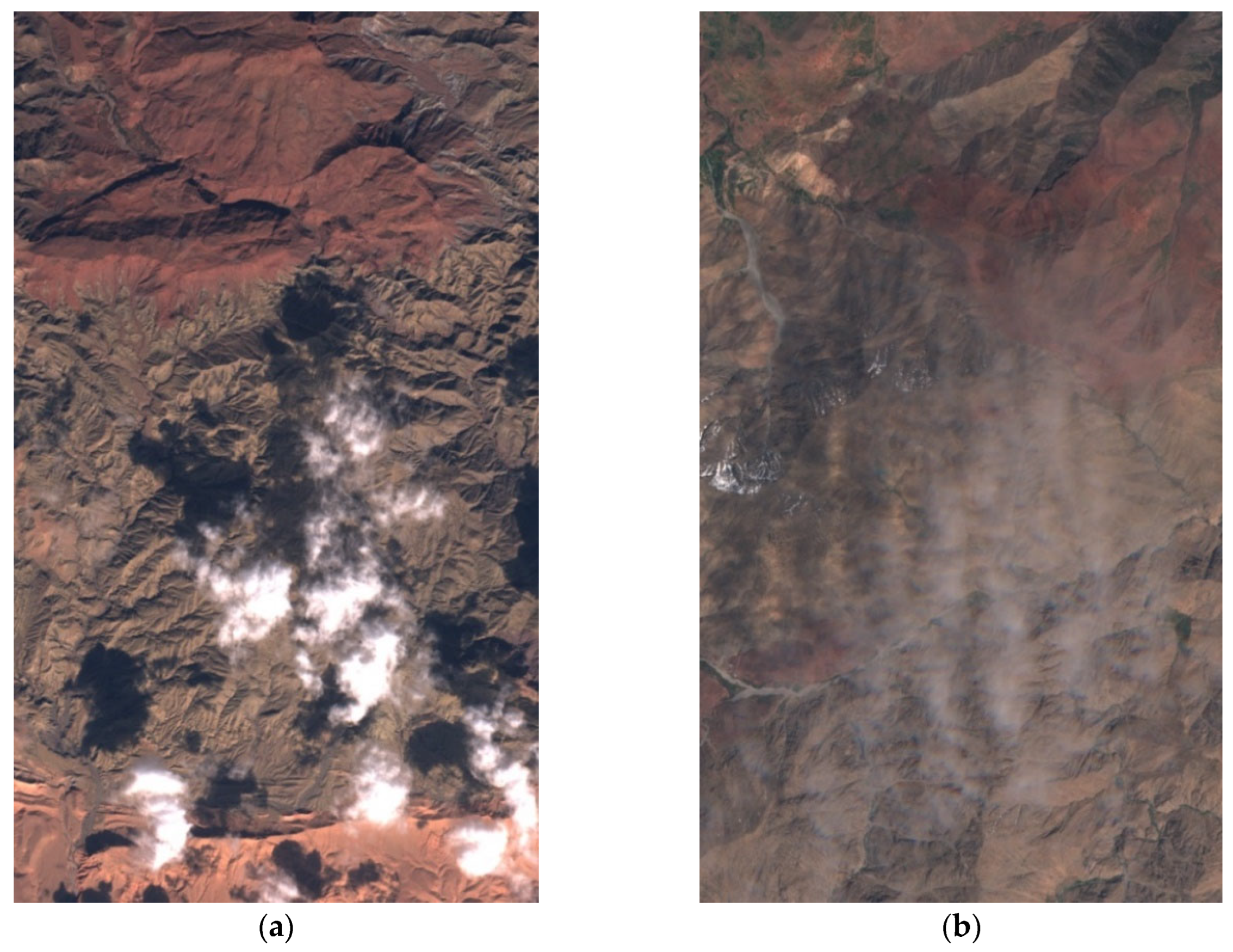

- Thick cloud: A cloud is classified as ‘thick’ when it obscures the underlying Earth’s surface with an opacity exceeding 50%, a criterion commonly used in remote sensing studies that corresponds to high optical thickness. Thick clouds hide surface details entirely, which hinders the interpretation of ground-level events, and they cast dark shadows that conceal the objects beneath (Figure 3a). The formation of cloud shadows depends on the altitude and thickness of the cloud, the sun angle, and the characteristics of the Earth’s surface on which the shadow falls [35].

- Thin cloud: In contrast, a cloud is termed ‘thin’ if it covers the surface with less than 50% opacity, corresponding to low optical thickness [36]. The ground remains partly visible, but the view is often blurred and distorted, making it difficult to interpret terrestrial activities accurately (Figure 3b).
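The dependence of shadow position on cloud altitude and sun angle can be made concrete with simple geometry: a cloud at height h casts its shadow a horizontal distance h·tan(θz) away, where θz is the solar zenith angle, on the side opposite the sun. The sketch below is a simplification under stated assumptions (flat terrain, a nadir-viewing sensor, a known cloud height, solar azimuth measured clockwise from north, image rows increasing southward); `shadow_offset` is a hypothetical helper, and operational processors use more elaborate procedures.

```python
import math


def shadow_offset(cloud_height_m: float, solar_zenith_deg: float,
                  solar_azimuth_deg: float, pixel_size_m: float):
    """Estimate where a cloud's shadow falls relative to the cloud.

    Assumes flat terrain and a nadir view; azimuth is clockwise from north.
    Returns (east_m, north_m, col_offset_px, row_offset_px), with columns
    increasing eastward and rows increasing southward.
    """
    if not 0 <= solar_zenith_deg < 90:
        raise ValueError("solar zenith must be in [0, 90) degrees")
    # Horizontal cloud-to-shadow distance grows with height and zenith angle.
    d = cloud_height_m * math.tan(math.radians(solar_zenith_deg))
    az = math.radians(solar_azimuth_deg)
    # The shadow lies in the direction opposite the sun.
    east_m = -d * math.sin(az)
    north_m = -d * math.cos(az)
    col_px = east_m / pixel_size_m
    row_px = -north_m / pixel_size_m  # moving north means fewer rows
    return east_m, north_m, col_px, row_px
```

With the sun due south (azimuth 180°) at a 45° zenith angle, a cloud 1 km high casts its shadow about 1 km to the north, i.e. about 50 rows up in a 20 m image; a higher cloud or a lower sun pushes the shadow farther away.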

3. Cloud Detection

- Binary cloud mask: A binary cloud mask has only two classes (values) and comes in two variants: cloud-only [42,43,44] and cloud-contaminated [45]. In the cloud-only variant, each pixel is labeled as either cloudy or cloud-free (Figure 4b). The cloud-contaminated variant shares the same two-class structure, but its cloudy class includes every cloud type as well as cloud shadow (Figure 4c). Both variants can easily be derived from multiclass cloud masks [33,40].

- Multiclass cloud mask: A multiclass cloud mask extends binary masking by classifying pixels into several distinct categories [38,44], each signifying a different type of cloud cover or atmospheric condition (Figure 4d). It can also include classes for Earth surfaces, such as snow/ice, water, and forests, that may resemble clouds or cloud shadows [33,46]. This approach yields a more detailed and comprehensive understanding of satellite imagery.
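Deriving the two binary variants from a multiclass mask amounts to a class-membership test. In the sketch below, the class codes are hypothetical (each dataset defines its own legend), and every class that is neither cloud nor cloud shadow, including snow/ice and water, is treated as clear.

```python
import numpy as np

# Hypothetical class codes for a multiclass mask; real datasets differ.
CLEAR, THICK_CLOUD, THIN_CLOUD, CLOUD_SHADOW, SNOW_ICE, WATER = range(6)

CLOUD_CLASSES = (THICK_CLOUD, THIN_CLOUD)
CONTAMINATED_CLASSES = CLOUD_CLASSES + (CLOUD_SHADOW,)


def cloud_only(mask: np.ndarray) -> np.ndarray:
    """1 where the pixel is any cloud type, 0 otherwise (shadow counts as clear)."""
    return np.isin(mask, CLOUD_CLASSES).astype(np.uint8)


def cloud_contaminated(mask: np.ndarray) -> np.ndarray:
    """1 where the pixel is any cloud type or cloud shadow, 0 otherwise."""
    return np.isin(mask, CONTAMINATED_CLASSES).astype(np.uint8)
```

For a multiclass mask `[[0, 1, 2], [3, 4, 5]]`, the cloud-only variant is `[[0, 1, 1], [0, 0, 0]]` and the cloud-contaminated variant is `[[0, 1, 1], [1, 0, 0]]`; the reverse derivation is impossible, which is why multiclass masks are the more general product.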

3.1. Generic Cloud Detection Classification

- Manual Cloud Detection: This is the most reliable and accurate cloud detection method used so far. A human expert visually interprets true-color and false-color composites of the satellite imagery and marks the boundaries of the different cloud-cover classes by drawing polygons [42]. Although highly accurate, the method is time-consuming, labor-intensive, and requires expertise [47]. It was viable when access to satellite data was limited, but with large volumes of satellite data now freely available, it is impractical for routine use. It remains, however, a good option for generating validation datasets to assess the performance of automated and active learning methods.

- Automated cloud detection: These methods use algorithms, rules, and programs to detect clouds without direct human intervention [26], and they are designed to be faster and more efficient than manual detection. They exploit the spectral and spatial signatures of clouds to distinguish them from objects on the Earth’s surface, and they range from simple threshold-based techniques to advanced machine learning/deep learning methods. They are designed to scale and can quickly process large data volumes [40]. However, they have limitations that researchers are still working to overcome:

- They produce commission errors (clear pixels labeled as cloud) and omission errors (cloudy pixels missed) [48], especially in complex scenes.

- They may fail under certain atmospheric conditions or for certain cloud types.

- They are not universally applicable and may require additional parameter tuning.

- Active Learning: This approach, usually rooted in machine learning, works with a human expert to reach a definitive decision on uncertain and ambiguous cloud-cover regions [33]. Human feedback is essential throughout, from training to refining the algorithm’s accuracy over time [41], which is why it is also termed a human-in-the-loop approach. It combines human expertise with machine learning capabilities, but it requires careful supervision in most instances.
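A common way to choose the "uncertain and ambiguous" pixels that active learning passes to the expert is uncertainty sampling: query the pixels whose predicted cloud probability is closest to 0.5. The sketch below assumes a probabilistic binary classifier has already produced a per-pixel cloud probability map; `most_uncertain` is a hypothetical helper, and the review does not prescribe this particular selection rule. In a full loop, the expert labels the selected pixels, they are added to the training set, and the model is retrained.

```python
import numpy as np


def most_uncertain(cloud_prob: np.ndarray, k: int) -> np.ndarray:
    """Return flat indices of the k pixels whose cloud probability is
    closest to 0.5, i.e. the least confident predictions.

    cloud_prob: per-pixel cloud probabilities in [0, 1] (any shape).
    """
    margin = np.abs(cloud_prob.ravel() - 0.5)  # 0 means maximally uncertain
    k = min(k, margin.size)
    # A stable sort keeps the original pixel order among ties.
    return np.argsort(margin, kind="stable")[:k]
```

For a 2 × 2 probability map `[[0.95, 0.52], [0.10, 0.40]]`, the two pixels sent to the expert are flat indices 1 and 3 (probabilities 0.52 and 0.40); `np.unravel_index` converts them back to row and column positions.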

3.2. Sensor Band-Based Cloud Detection Classification

- Single-band cloud detection: This approach relies solely on the spatial information in a single dominant spectral band that is sensitive to cloud properties, such as the blue or cirrus band [49,50]. Such approaches are rare, and their ability to distinguish clouds from other bright surfaces is limited, especially under complex atmospheric and surface conditions.

- Multi-band cloud detection: This approach combines multiple spectral bands to improve accuracy. It can be divided into the following sub-categories:

- 3-band approach: This method uses the standard color bands (RGB) for cloud masking [51]. Because most optical satellites carry these three bands, it is a generic, versatile, and scalable approach applicable to a wide range of satellites.

- 4-band approach: This method uses the visible bands (RGB) and the NIR band to generate cloud masks. Together, these four bands carry much of the information required for cloud detection [52]. Most advanced remote sensing satellites, such as Landsat, Sentinel, PlanetScope, GaoFen, and IRS, share these four bands, making this approach widely adaptable.

- All-band approach: These cloud detection methods are usually satellite-specific; they use all the bands available on a satellite to generate cloud masks. Most threshold-based cloud detection methods use this concept to generate cloud masks and to perform atmospheric and geometric corrections.
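To illustrate how a 4-band (RGB + NIR) threshold test can combine spectral cues, the sketch below flags pixels that are bright, spectrally flat across the visible bands ("white"), and non-vegetated. The thresholds are illustrative assumptions rather than values from any operational processor, the whiteness index is modeled loosely on the one used in Fmask, and the inputs are assumed to be top-of-atmosphere reflectance scaled to [0, 1].

```python
import numpy as np

# Illustrative thresholds only (assumptions); real processors tune per sensor.
BRIGHT_MIN = 0.3      # mean visible reflectance above which a pixel is "bright"
WHITENESS_MAX = 0.7   # relative spread across R, G, B (lower means whiter)
NDVI_MAX = 0.2        # clouds have low NDVI, vegetation high


def four_band_cloud_test(red, green, blue, nir):
    """Flag candidate cloud pixels from R, G, B, and NIR reflectance arrays."""
    red, green, blue, nir = (np.asarray(b, dtype=float)
                             for b in (red, green, blue, nir))
    mean_vis = (red + green + blue) / 3.0
    with np.errstate(divide="ignore", invalid="ignore"):
        # Whiteness: summed relative deviation of each visible band from the mean.
        whiteness = (np.abs(red - mean_vis) + np.abs(green - mean_vis)
                     + np.abs(blue - mean_vis)) / mean_vis
        ndvi = (nir - red) / (nir + red)
    bright = mean_vis > BRIGHT_MIN
    white = whiteness < WHITENESS_MAX   # NaN compares False, so never "white"
    not_vegetation = ndvi < NDVI_MAX
    return bright & white & not_vegetation
```

For three pixels representing a cloud, dense vegetation, and a bright reddish soil, only the cloud passes all three tests. Bright grey surfaces or snow can still pass, which reflects the confusion with bright surfaces noted above and is one reason all-band methods add further tests, for example with SWIR bands.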

4. Available Datasets

4.1. Baetens-Hagolle (CESBIO/CNES) Dataset

4.2. WHUS2-CD Dataset

4.3. KappaSet Dataset

4.4. IndiaS2 Dataset

5. Cloud Detection Methods

5.1. Threshold-Based Cloud Detection

5.1.1. Function of Mask (Fmask)

5.1.2. Sen2Cor

5.1.3. MAJA

5.2. Machine Learning (ML) Cloud Detection

5.3. Deep Learning (DL) Cloud Detection

5.4. Importance of Multiclass Cloud Detection in Cloud Removal

6. Intercomparison Framework and Performance Evaluation

6.1. Data Harmonization and Input Consistency

6.2. Performance Evaluation Metrics

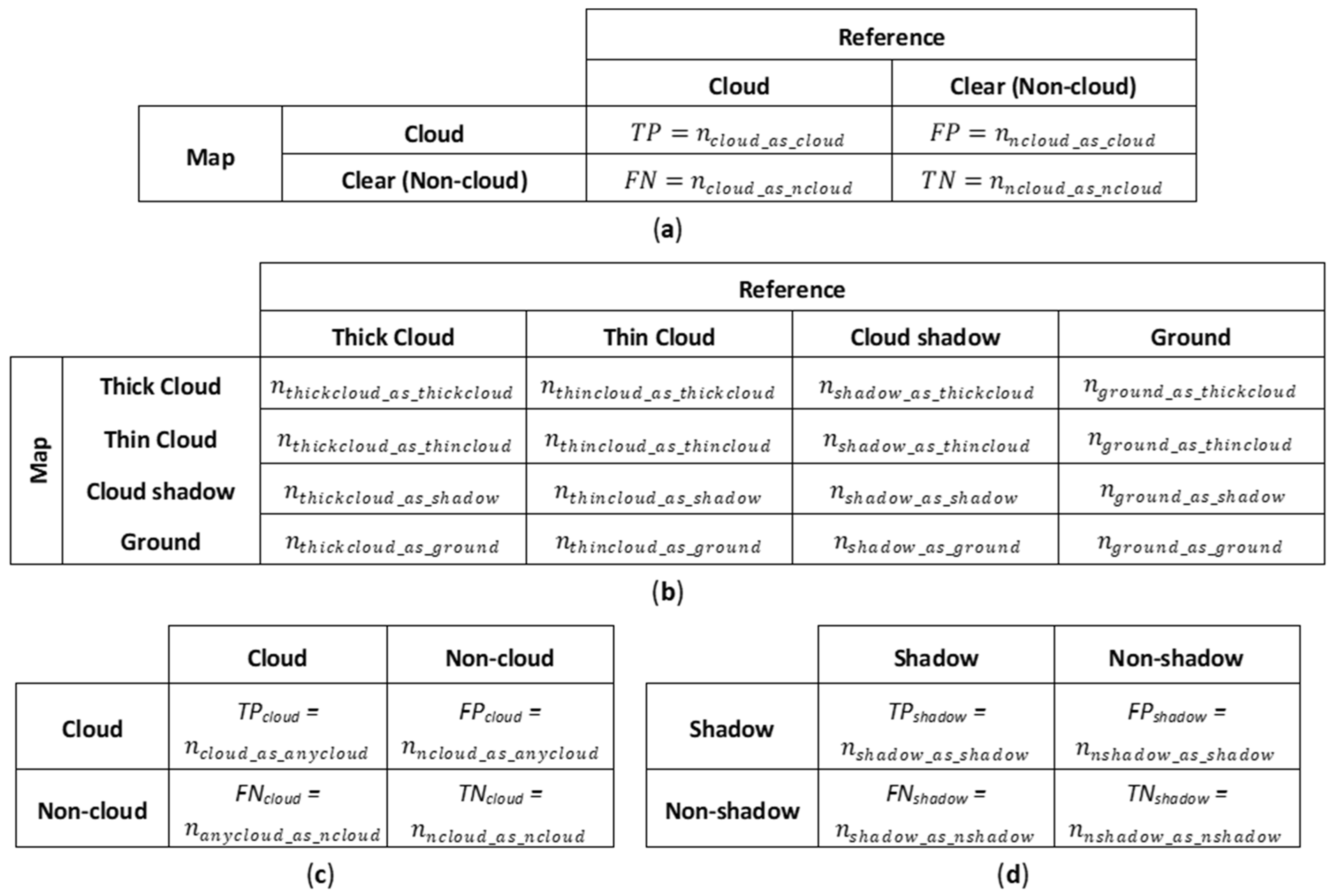

- For multiclass cloud detection, extended confusion matrices (Figure 5b–d) are used to compute the performance metrics (Table 10) for each class c, where n is the number of pixels, C is the number of classes, and the true positives (TPc), false positives (FPc), false negatives (FNc), and true negatives (TNc) are estimated as in [150,181]. The overall accuracy metrics are then computed either by macro-averaging, which gives each class equal weight and thus reduces the influence of the dominant class, or by micro-averaging, which aggregates the contributions of all classes (Table 10).
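Under the usual convention that the rows of the C × C confusion matrix hold reference classes and the columns hold predicted classes, TPc is the diagonal entry, FPc is the column sum minus TPc, FNc is the row sum minus TPc, and TNc = n − TPc − FPc − FNc. The sketch below computes these counts and the macro- and micro-averaged precision, recall, and F1. It is a generic formulation, not necessarily identical to Table 10 or [150,181], and it assigns 0 to any per-class ratio whose denominator is zero (an assumption).

```python
import numpy as np


def per_class_counts(cm):
    """TP, FP, FN, TN for every class from a C x C confusion matrix
    (rows = reference classes, columns = predicted classes)."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()                 # total number of pixels
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp     # predicted as c, but belonging elsewhere
    fn = cm.sum(axis=1) - tp     # belonging to c, but predicted elsewhere
    tn = n - tp - fp - fn
    return tp, fp, fn, tn


def macro_micro(cm):
    """Macro- and micro-averaged precision, recall, and F1."""
    tp, fp, fn, _ = per_class_counts(cm)
    if tp.sum() + fp.sum() == 0:
        raise ValueError("empty confusion matrix")
    # Macro: per-class scores first, then an unweighted mean over classes.
    with np.errstate(divide="ignore", invalid="ignore"):
        p_c = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        r_c = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f_c = np.where(p_c + r_c > 0, 2 * p_c * r_c / (p_c + r_c), 0.0)
    macro = {"precision": p_c.mean(), "recall": r_c.mean(), "f1": f_c.mean()}
    # Micro: pool the counts of all classes before dividing.
    p = tp.sum() / (tp.sum() + fp.sum())
    r = tp.sum() / (tp.sum() + fn.sum())
    f1 = 2 * p * r / (p + r) if p + r > 0 else 0.0
    micro = {"precision": p, "recall": r, "f1": f1}
    return macro, micro
```

For a three-class matrix (clear, cloud, shadow) `[[50, 2, 3], [4, 30, 6], [1, 2, 2]]`, the micro-averaged precision and recall are both 0.82, whereas the macro-averaged precision is about 0.66 because the small, poorly detected shadow class counts as much as the dominant clear class. In a single-label multiclass mask, every misclassified pixel is one FP and one FN, so micro-averaged precision, recall, and F1 all equal overall accuracy.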

6.3. Conversion Framework for Multiclass Comparison

7. Research Gaps

- Threshold-based methods have limited universality and scalability when applied to imagery from diverse locations. Thresholds are often static and may suit only specific regions and cloud types. Given the richness and diversity of remote sensing data, dynamic thresholds that adapt to different conditions through expert knowledge systems are needed. Although most methods detect thick clouds efficiently, improving the detection of thin clouds and cloud shadows remains a challenge. Comparing different approaches is also important but difficult, because the algorithms generate cloud masks at different pixel resolutions: Fmask produces cloud masks at 20 m, Sen2Cor at 30 m, and MAJA at 240 m.

- ML methods handle threshold selection automatically but depend on effective feature selection and extraction, since using spectral features alone generally leads to lower accuracy. Their performance can be enhanced by incorporating both spectral and spatial features, for example by combining them with handcrafted texture features from conventional methods. However, conventional feature extraction is time-consuming, and a mechanism is needed that considers spatial features along with spectral features automatically.

- DL methods perform well because they automatically learn low- to high-level spectral and spatial features. However, they have high computational costs and struggle to process large patch sizes, which contain rich information about large structures such as clouds, shadows, and snow regions. They also struggle to learn features that discriminate clouds from bright areas such as snow/ice, buildings, and riverbeds, and cloud shadows from dark areas such as water bodies and terrain shadows.

- Removing clouds leaves zero-valued gaps in the imagery, which are filled either by mosaicking multitemporal data or by pixel-wise reconstruction. However, mosaicking ignores radiometric variation between acquisitions and tends to overlook the spatial distribution of pixel intensity. Pixel-wise reconstruction performs better, but most existing cloud removal methods are unsuitable for thin clouds as well as for large areas covered by thick clouds. An efficient automated multiclass cloud detection technique that separates thick from thin clouds is also required. Finally, most cloud removal methods operate on true-color or false-color composites, whereas every spectral band is affected by clouds; a mechanism to handle clouds in each spectral band is therefore needed.
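The resolution mismatch noted in the first research gap means that masks must be brought onto a common grid before they can be compared pixel by pixel. The sketch below handles integer resolution ratios only (240 m / 20 m = 12), using nearest-neighbour replication to upsample and an "any" or "majority" rule to downsample. The function names and both aggregation rules are illustrative assumptions; the masks are assumed to be binary and already co-registered, and non-integer ratios such as 30 m to 20 m need a general-purpose resampler such as those in GDAL or rasterio.

```python
import numpy as np


def upsample_nearest(mask: np.ndarray, factor: int) -> np.ndarray:
    """Replicate every coarse pixel into a factor x factor block."""
    return np.repeat(np.repeat(mask, factor, axis=0), factor, axis=1)


def downsample(mask: np.ndarray, factor: int, rule: str = "any") -> np.ndarray:
    """Aggregate a binary (0/1) mask onto a grid `factor` times coarser.

    rule="any": a coarse pixel is cloudy if any fine pixel is cloudy.
    rule="majority": a coarse pixel is cloudy if at least half are cloudy.
    """
    h, w = mask.shape
    if h % factor or w % factor:
        raise ValueError("mask dimensions must be divisible by factor")
    blocks = mask.reshape(h // factor, factor, w // factor, factor)
    if rule == "any":
        out = blocks.any(axis=(1, 3))
    elif rule == "majority":
        out = blocks.mean(axis=(1, 3)) >= 0.5
    else:
        raise ValueError(f"unknown rule: {rule}")
    return out.astype(np.uint8)


def agreement(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Fraction of pixels on which two masks on the same grid agree."""
    if mask_a.shape != mask_b.shape:
        raise ValueError("masks must share a grid")
    return float(np.mean(mask_a == mask_b))
```

The choice of rule matters: "any" is conservative and inflates cloud cover at the coarse scale, while "majority" drops small clouds, so a comparison at 240 m can give a different ranking of algorithms than one at 20 m.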

8. Conclusions

Funding

Conflicts of Interest

Correction Statement

References

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Richards, J.A. Sources and Characteristics of Remote Sensing Image Data. In Remote Sensing Digital Image Analysis; Springer: Berlin, Heidelberg, 1993; pp. 1–37.

- Messina, G.; Peña, J.M.; Vizzari, M.; Modica, G. A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa Di Tropea’(Italy). Remote Sens. 2020, 12, 3424.

- Wulder, M.A.; Roy, D.P.; Radeloff, V.C.; Loveland, T.R.; Anderson, M.C.; Johnson, D.M.; Healey, S.; Zhu, Z.; Scambos, T.A.; Pahlevan, N.; et al. Fifty Years of Landsat Science and Impacts. Remote Sens. Environ. 2022, 280, 113195–113216.

- Qian, S.-E. Hyperspectral Satellites, Evolution, and Development History. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7032–7056.

- Adjovu, G.E.; Stephen, H.; James, D.; Ahmad, S. Overview of the Application of Remote Sensing in Effective Monitoring of Water Quality Parameters. Remote Sens. 2023, 15, 1938–1973.

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 Data for Land Cover/Use Mapping: A Review. Remote Sens. 2020, 12, 2291.

- Ahmed, R.; Ahmad, S.T.; Wani, G.F.; Ahmed, P.; Mir, A.A.; Singh, A. Analysis of Landuse and Landcover Changes in Kashmir Valley, India—A Review. GeoJournal 2022, 87, 4391–4403.

- Pandey, P.C.; Koutsias, N.; Petropoulos, G.P.; Srivastava, P.K.; Ben Dor, E. Land Use/Land Cover in View of Earth Observation: Data Sources, Input Dimensions, and Classifiers—A Review of the State of the Art. Geocarto Int. 2021, 36, 957–988.

- Casagli, N.; Intrieri, E.; Tofani, V.; Gigli, G.; Raspini, F. Landslide Detection, Monitoring and Prediction with Remote-Sensing Techniques. Nat. Rev. Earth Environ. 2023, 4, 51–64.

- Liu, L.; Li, C.; Lei, Y.; Yin, J.; Zhao, J. Volcanic Ash Cloud Detection from MODIS Image Based on CPIWS Method. Acta Geophys. 2017, 65, 151.

- Wu, B.; Zhang, M.; Zeng, H.; Tian, F.; Potgieter, A.B.; Qin, X.; Yan, N.; Chang, S.; Zhao, Y.; Dong, Q.; et al. Challenges and Opportunities in Remote Sensing-Based Crop Monitoring: A Review. Natl. Sci. Rev. 2023, 10, nwac290.

- Giardina, G.; Macchiarulo, V.; Foroughnia, F.; Jones, J.N.; Whitworth, M.R.Z.; Voelker, B.; Milillo, P.; Penney, C.; Adams, K.; Kijewski-Correa, T. Combining Remote Sensing Techniques and Field Surveys for Post-Earthquake Reconnaissance Missions. Bull. Earthq. Eng. 2024, 22, 3415–3439.

- Sun, W.; Chen, C.; Liu, W.; Yang, G.; Meng, X.; Wang, L.; Ren, K. Coastline Extraction Using Remote Sensing: A Review. GIScience Remote Sens. 2023, 60, 2243671.

- Kussul, N.; Yailymova, H.; Drozd, S. Detection of War-Damaged Agricultural Fields of Ukraine Based on Vegetation Indices Using Sentinel-2 Data. In Proceedings of the 2022 12th International Conference on Dependable Systems, Services and Technologies (DESSERT), Athens, Greece, 9–11 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–5.

- Ciocarlan, A.; Stoian, A. Ship Detection in Sentinel 2 Multi-Spectral Images with Self-Supervised Learning. Remote Sens. 2021, 13, 4255.

- Aulicino, G.; Cotroneo, Y.; de Ruggiero, P.; Buono, A.; Corcione, V.; Nunziata, F.; Fusco, G. Remote Sensing Applications in Satellite Oceanography; Springer: Berlin/Heidelberg, Germany, 2022.

- O’Reilly, D.; Herdrich, G.; Kavanagh, D.F. Electric Propulsion Methods for Small Satellites: A Review. Aerospace 2021, 8, 22.

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A Tutorial on Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

- Fritz, S. The Albedo of the Planet Earth and of Clouds. J. Atmos. Sci. 1949, 6, 277–282.

- Du, X.; Wu, H. Cloud-Graph: A Feature Interaction Graph Convolutional Network for Remote Sensing Image Cloud Detection. J. Intell. Fuzzy Syst. 2023, 45, 9123–9139.

- Young, N.E.; Anderson, R.S.; Chignell, S.M.; Vorster, A.G.; Lawrence, R.; Evangelista, P.H. A Survival Guide to Landsat Preprocessing. Ecology 2017, 98, 920–932.

- Frantz, D. FORCE—Landsat + Sentinel-2 Analysis Ready Data and Beyond. Remote Sens. 2019, 11, 1124–1145.

- Pflug, B.; Makarau, A.; Richter, R. Processing Sentinel-2 Data with ATCOR. In Proceedings of the EGU General Assembly, Vienna, Austria, 17–22 April 2016; p. 15488.

- Main-Knorn, M.; Pflug, B.; Louis, J.; Debaecker, V.; Müller-Wilm, U.; Gascon, F. Sen2Cor for Sentinel-2. In Proceedings of the Image and Signal Processing for Remote Sensing XXIII, Warsaw, Poland, 11–13 September 2017; Bruzzone, L., Bovolo, F., Benediktsson, J.A., Eds.; SPIE: Bellingham, WA, USA, 2017; Volume 10427, p. 3.

- Mahajan, S.; Fataniya, B. Cloud Detection Methodologies: Variants and Development—A Review. Complex Intell. Syst. 2020, 6, 251–261.

- Tapakis, R.; Charalambides, A.G. Equipment and Methodologies for Cloud Detection and Classification: A Review. Sol. Energy 2013, 95, 392–430.

- Li, L.; Li, X.; Jiang, L.; Su, X.; Chen, F. A Review on Deep Learning Techniques for Cloud Detection Methodologies and Challenges. Signal Image Video Process. 2021, 15, 1527–1535.

- King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and Temporal Distribution of Clouds Observed by MODIS Onboard the Terra and Aqua Satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852.

- Wang, J.; Rossow, W.B. Determination of Cloud Vertical Structure from Upper-Air Observations. J. Appl. Meteorol. Climatol. 1995, 34, 2243–2258.

- Parikh, J.A.; Rosenfeld, A. Automatic Segmentation and Classification of Infrared Meteorological Satellite Data. IEEE Trans. Syst. Man. Cybern. 1978, 8, 736–743.

- Mishchenko, M.I.; Rossow, W.B.; Macke, A.; Lacis, A.A. Sensitivity of Cirrus Cloud Albedo, Bidirectional Reflectance and Optical Thickness Retrieval Accuracy to Ice Particle Shape. J. Geophys. Res. Atmos. 1996, 101, 16973–16985.

- Baetens, L.; Desjardins, C.; Hagolle, O. Validation of Copernicus Sentinel-2 Cloud Masks Obtained from MAJA, Sen2Cor, and FMask Processors Using Reference Cloud Masks Generated with a Supervised Active Learning Procedure. Remote Sens. 2019, 11, 433–458.

- Zhang, Q.; Yuan, Q.; Li, J.; Li, Z.; Shen, H.; Zhang, L. Thick Cloud and Cloud Shadow Removal in Multitemporal Imagery Using Progressively Spatio-Temporal Patch Group Deep Learning. ISPRS J. Photogramm. Remote Sens. 2020, 162, 148–160.

- Li, Z.; Shen, H.; Weng, Q.; Zhang, Y.; Dou, P.; Zhang, L. Cloud and Cloud Shadow Detection for Optical Satellite Imagery: Features, Algorithms, Validation, and Prospects. ISPRS J. Photogramm. Remote Sens. 2022, 188, 89–108.

- Shen, Y.; Wang, Y.; Lv, H.; Qian, J. Removal of Thin Clouds in Landsat-8 OLI Data with Independent Component Analysis. Remote Sens. 2015, 7, 11481–11500.

- Wieland, M.; Li, Y.; Martinis, S. Multi-Sensor Cloud and Cloud Shadow Segmentation with a Convolutional Neural Network. Remote Sens. Environ. 2019, 230, 111203.

- Hollstein, A.; Segl, K.; Guanter, L.; Brell, M.; Enesco, M. Ready-to-Use Methods for the Detection of Clouds, Cirrus, Snow, Shadow, Water and Clear Sky Pixels in Sentinel-2 MSI Images. Remote Sens. 2016, 8, 666–684.

- Irish, R.R. Landsat 7 Automatic Cloud Cover Assessment. In Algorithms for Multispectral, Hyperspectral, and Ultraspectral Imagery VI; Shen, S.S., Descour, M.R., Eds.; SPIE: Orlando, FL, USA, 2000; Volume 4049, p. 348.

- Skakun, S.; Wevers, J.; Brockmann, C.; Doxani, G.; Aleksandrov, M.; Batič, M.; Frantz, D.; Gascon, F.; Gómez-Chova, L.; Hagolle, O.; et al. Cloud Mask Intercomparison EXercise (CMIX): An Evaluation of Cloud Masking Algorithms for Landsat 8 and Sentinel-2. Remote Sens. Environ. 2022, 274, 112990–113012.

- Shtym, T.; Wold, O.; Domnich, M.; Voormansik, K.; Rohtsalu, M.; Truupõld, J.; Murin, N.; Toqeer, A.; Odera, C.A.; Harun, F.; et al. KappaSet: Sentinel-2 KappaZeta Cloud and Cloud Shadow Masks 2022. Available online: https://data.niaid.nih.gov/resources?id=zenodo_7100326 (accessed on 14 December 2021).

- Mohajerani, S.; Krammer, T.A.; Saeedi, P. A Cloud Detection Algorithm for Remote Sensing Images Using Fully Convolutional Neural Networks. In Proceedings of the 2018 IEEE 20th International Workshop on Multimedia Signal Processing (MMSP), Vancouver, BC, Canada, 29–31 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- Mohajerani, S.; Saeedi, P. Cloud and Cloud Shadow Segmentation for Remote Sensing Imagery Via Filtered Jaccard Loss Function and Parametric Augmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4254–4266.

- U.S. Geological Survey L8 Biome Cloud Validation Masks. U.S. Geological Survey Data Release. Available online: https://landsat.usgs.gov/landsat-8-cloud-cover-assessment-validation-data (accessed on 14 December 2021).

- Candra, D.S.; Phinn, S.; Scarth, P. Cloud and Cloud Shadow Masking Using Multi-Temporal Cloud Masking Algorithm in Tropical Environmental. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 95–100.

- Hughes, M.J.; Kennedy, R. High-Quality Cloud Masking of Landsat 8 Imagery Using Convolutional Neural Networks. Remote Sens. 2019, 11, 2591.

- Mohajerani, S.; Saeedi, P. Cloud-Net: An End-To-End Cloud Detection Algorithm for Landsat 8 Imagery. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1029–1032.

- Foga, S.; Scaramuzza, P.L.; Guo, S.; Zhu, Z.; Dilley Jr, R.D.; Beckmann, T.; Schmidt, G.L.; Dwyer, J.L.; Hughes, M.J.; Laue, B. Cloud Detection Algorithm Comparison and Validation for Operational Landsat Data Products. Remote Sens. Environ. 2017, 194, 379–390.

- Breon, F.-M.; Colzy, S. Cloud Detection from the Spaceborne POLDER Instrument and Validation against Surface Synoptic Observations. J. Appl. Meteorol. Climatol. 1999, 38, 777–785.

- Zhan, Y.; Wang, J.; Shi, J.; Cheng, G.; Yao, L.; Sun, W. Distinguishing Cloud and Snow in Satellite Images via Deep Convolutional Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1785–1789.

- Ji, H.; Xia, M.; Zhang, D.; Lin, H. Multi-Supervised Feature Fusion Attention Network for Clouds and Shadows Detection. ISPRS Int. J. Geo-Information 2023, 12, 247–269.

- Grabowski, B.; Ziaja, M.; Kawulok, M.; Bosowski, P.; Longépé, N.; Le Saux, B.; Nalepa, J. Squeezing Adaptive Deep Learning Methods with Knowledge Distillation for On-Board Cloud Detection. Eng. Appl. Artif. Intell. 2024, 132, 107835.

- Tarrio, K.; Tang, X.; Masek, J.G.; Claverie, M.; Ju, J.; Qiu, S.; Zhu, Z.; Woodcock, C.E. Comparison of Cloud Detection Algorithms for Sentinel-2 Imagery. Sci. Remote Sens. 2020, 2, 100010.

- European Space Agency (ESA) Open Access Hub, Scihub.Copernicus.Eu. Available online: https://dataspace.copernicus.eu/ (accessed on 25 June 2025).

- SAFE Format. Available online: https://sentiwiki.copernicus.eu/web/safe-format (accessed on 25 June 2025).

- Li, J.; Wu, Z.; Hu, Z.; Jian, C.; Luo, S.; Mou, L.; Zhu, X.X.; Molinier, M. A Lightweight Deep Learning-Based Cloud Detection Method for Sentinel-2A Imagery Fusing Multiscale Spectral and Spatial Features. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–19.

- CVAT Computer Vision Annotation Tool. CVAT. Available online: https://www.cvat.ai/ (accessed on 28 March 2023).

- Segments.ai Dataset Tool. Available online: https://segments.ai/ (accessed on 28 March 2023).

- Saunders, R.W.; Kriebel, K.T. An Improved Method for Detecting Clear Sky and Cloudy Radiances from AVHRR Data. Int. J. Remote Sens. 1988, 9, 123–150.

- Kubota, M. A New Cloud Detection Algorithm for Nighttime AVHRR/HRPT Data. J. Oceanogr. 1994, 50, 31–41.

- Jedlovec, G. Automated Detection of Clouds in Satellite Imagery. In Advances in Geoscience and Remote Sensing; InTech: London, UK, 2009; pp. 303–316.

- Zhu, Z.; Woodcock, C.E. Object-Based Cloud and Cloud Shadow Detection in Landsat Imagery. Remote Sens. Environ. 2012, 118, 83–94.

- Lonjou, V.; Desjardins, C.; Hagolle, O.; Petrucci, B.; Tremas, T.; Dejus, M.; Makarau, A.; Auer, S. MACCS-ATCOR Joint Algorithm (MAJA). In Proceedings of the Remote Sensing of Clouds and the Atmosphere XXI, Edinburgh, UK, 19 October 2016; Comerón, A., Kassianov, E.I., Schäfer, K., Eds.; SPIE: Bellingham, WA, USA, 2016; Volume 10001, p. 1000107.

- Zhu, Z.; Woodcock, C.E. Automated Cloud, Cloud Shadow, and Snow Detection in Multitemporal Landsat Data: An Algorithm Designed Specifically for Monitoring Land Cover Change. Remote Sens. Environ. 2014, 152, 217–234.

- Zhu, Z.; Wang, S.; Woodcock, C.E. Improvement and Expansion of the Fmask Algorithm: Cloud, Cloud Shadow, and Snow Detection for Landsats 4–7, 8, and Sentinel 2 Images. Remote Sens. Environ. 2015, 159, 269–277.

- Qiu, S.; Zhu, Z.; He, B. Fmask 4.0: Improved Cloud and Cloud Shadow Detection in Landsats 4–8 and Sentinel-2 Imagery. Remote Sens. Environ. 2019, 231, 111205.

- Richter, R.; Louis, J.; Berthelot, B. Sentinel-2 MSI – Level 2A Products Algorithm Theoretical Basis Document. Eur. Sp. Agency 2012, 49, 1–72.

- Hagolle, O.; Huc, M.; Pascual, D.V.; Dedieu, G. A Multi-Temporal Method for Cloud Detection, Applied to FORMOSAT-2, VENµS, LANDSAT and SENTINEL-2 Images. Remote Sens. Environ. 2010, 114, 1747–1755.

- Hagolle, O.; Huc, M.; Villa Pascual, D.; Dedieu, G. A Multi-Temporal and Multi-Spectral Method to Estimate Aerosol Optical Thickness over Land, for the Atmospheric Correction of FormoSat-2, LandSat, VENμS and Sentinel-2 Images. Remote Sens. 2015, 7, 2668–2691.

- Li, P.; Dong, L.; Xiao, H.; Xu, M. A Cloud Image Detection Method Based on SVM Vector Machine. Neurocomputing 2015, 169, 34–42.

- Bai, T.; Li, D.; Sun, K.; Chen, Y.; Li, W. Cloud Detection for High-Resolution Satellite Imagery Using Machine Learning and Multi-Feature Fusion. Remote Sens. 2016, 8, 715–736.

- Tan, K.; Zhang, Y.; Tong, X. Cloud Extraction from Chinese High Resolution Satellite Imagery by Probabilistic Latent Semantic Analysis and Object-Based Machine Learning. Remote Sens. 2016, 8, 963–988.

- Shao, Z.; Deng, J.; Wang, L.; Fan, Y.; Sumari, N.; Cheng, Q. Fuzzy AutoEncode Based Cloud Detection for Remote Sensing Imagery. Remote Sens. 2017, 9, 311.

- Pérez-Suay, A.; Amorós-López, J.; Gómez-Chova, L.; Laparra, V.; Muñoz-Marí, J.; Camps-Valls, G. Randomized Kernels for Large Scale Earth Observation Applications. Remote Sens. Environ. 2017, 202, 54–63.

- Sun, X.; Yu, Q.; Li, Z. SVM-Based Cloud Detection Using Combined Texture Features. In Proceedings of the International Symposium of Space Optical Instrument and Application, Beijing, China, 5–7 September 2018; pp. 363–372.

- Ishida, H.; Oishi, Y.; Morita, K.; Moriwaki, K.; Nakajima, T.Y. Development of a Support Vector Machine Based Cloud Detection Method for MODIS with the Adjustability to Various Conditions. Remote Sens. Environ. 2018, 205, 390–407.

- Pérez-Suay, A.; Amorós-López, J.; Gómez-Chova, L.; Muñoz-Mari, J.; Just, D.; Camps-Valls, G. Pattern Recognition Scheme for Large-Scale Cloud Detection over Landmarks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3977–3987.

- Deng, C.; Li, Z.; Wang, W.; Wang, S.; Tang, L.; Bovik, A.C. Cloud Detection in Satellite Images Based on Natural Scene Statistics and Gabor Features. IEEE Geosci. Remote Sens. Lett. 2018, 16, 608–612.

- Ghasemian, N.; Akhoondzadeh, M. Introducing Two Random Forest Based Methods for Cloud Detection in Remote Sensing Images. Adv. Sp. Res. 2018, 62, 288–303.

- Fu, H.; Shen, Y.; Liu, J.; He, G.; Chen, J.; Liu, P.; Qian, J.; Li, J. Cloud Detection for FY Meteorology Satellite Based on Ensemble Thresholds and Random Forests Approach. Remote Sens. 2018, 11, 44.

- Joshi, P.P.; Wynne, R.H.; Thomas, V.A. Cloud Detection Algorithm Using SVM with SWIR2 and Tasseled Cap Applied to Landsat 8. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101898–101908.

- Chen, X.; Liu, L.; Gao, Y.; Zhang, X.; Xie, S. A Novel Classification Extension-Based Cloud Detection Method for Medium-Resolution Optical Images. Remote Sens. 2020, 12, 2365.

- Cilli, R.; Monaco, A.; Amoroso, N.; Tateo, A.; Tangaro, S.; Bellotti, R. Machine Learning for Cloud Detection of Globally Distributed Sentinel-2 Images. Remote Sens. 2020, 12, 2355.

- Wei, J.; Huang, W.; Li, Z.; Sun, L.; Zhu, X.; Yuan, Q.; Liu, L.; Cribb, M. Cloud Detection for Landsat Imagery by Combining the Random Forest and Superpixels Extracted via Energy-Driven Sampling Segmentation Approaches. Remote Sens. Environ. 2020, 248, 112005–112019.

- Ibrahim, E.; Jiang, J.; Lema, L.; Barnabé, P.; Giuliani, G.; Lacroix, P.; Pirard, E. Cloud and Cloud-Shadow Detection for Applications in Mapping Small-Scale Mining in Colombia Using Sentinel-2 Imagery. Remote Sens. 2021, 13, 736–756.

- Li, J.; Wang, L.; Liu, S.; Peng, B.; Ye, H. An Automatic Cloud Detection Model for Sentinel-2 Imagery Based on Google Earth Engine. Remote Sens. Lett. 2022, 13, 196–206.

- Yao, X.; Guo, Q.; Li, A.; Shi, L. Optical Remote Sensing Cloud Detection Based on Random Forest Only Using the Visible Light and Near-Infrared Image Bands. Eur. J. Remote Sens. 2022, 55, 150–167.

- Singh, R.; Biswas, M.; Pal, M. Cloud Detection Using Sentinel 2 Imageries: A Comparison of XGBoost, RF, SVM, and CNN Algorithms. Geocarto Int. 2023, 38, 1–32.

- Singh, R.; Biswas, M.; Pal, M. An Automated Cloud Detection Method for Sentinel-2 Imageries. In Proceedings of the 2023 IEEE India Geoscience and Remote Sensing Symposium (InGARSS), Bengaluru, India, 10–13 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Shang, H.; Letu, H.; Xu, R.; Wei, L.; Wu, L.; Shao, J.; Nagao, T.M.; Nakajima, T.Y.; Riedi, J.; He, J.; et al. A Hybrid Cloud Detection and Cloud Phase Classification Algorithm Using Classic Threshold-Based Tests and Extra Randomized Tree Model. Remote Sens. Environ. 2024, 302, 113957.

- Liu, Z.; Yang, J.; Wang, W.; Shi, Z. Cloud Detection Methods for Remote Sensing Images: A Survey. Chin. Sp. Sci. Technol. 2023, 43, 1–17.

- Caraballo-Vega, J.A.; Carroll, M.L.; Neigh, C.S.R.; Wooten, M.; Lee, B.; Weis, A.; Aronne, M.; Alemu, W.G.; Williams, Z. Optimizing WorldView-2, -3 Cloud Masking Using Machine Learning Approaches. Remote Sens. Environ. 2022, 284, 113332–113347.

- Gawlikowski, J.; Ebel, P.; Schmitt, M.; Zhu, X.X. Explaining the Effects of Clouds on Remote Sensing Scene Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9976–9986.

- AJ, G.C.; Jojy, C. A Survey of Cloud Detection Techniques For Satellite Images. Int. Res. J. Eng. Technol. 2015, 2, 2485–2490.

- Wang, Y.; Gu, L.; Li, X.; Gao, F.; Jiang, T. Coexisting Cloud and Snow Detection Based on a Hybrid Features Network Applied to Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5405515.

- Miroszewski, A.; Mielczarek, J.; Szczepanek, F.; Czelusta, G.; Grabowski, B.; Saux, B.L.; Nalepa, J. Cloud Detection in Multispectral Satellite Images Using Support Vector Machines with Quantum Kernels. arXiv 2023, arXiv:2307.07281.

- Miroszewski, A.; Mielczarek, J.; Czelusta, G.; Szczepanek, F.; Grabowski, B.; Saux, B.L.; Nalepa, J. Detecting Clouds in Multispectral Satellite Images Using Quantum-Kernel Support Vector Machines. arXiv 2023, arXiv:2302.08270.

- Bhagwat, R.U.; Shankar, B.U. A Novel Multilabel Classification of Remote Sensing Images Using XGBoost. In Proceedings of the IEEE 5th International Conference for Convergence in Technology, Pune, India, 29–31 March 2019; pp. 1–5.

- Zamani Joharestani, M.; Cao, C.; Ni, X.; Bashir, B.; Talebiesfandarani, S. PM2.5 Prediction Based on Random Forest, XGBoost, and Deep Learning Using Multisource Remote Sensing Data. Atmosphere 2019, 10, 373–392.

- Rumora, L.; Miler, M.; Medak, D. Impact of Various Atmospheric Corrections on Sentinel-2 Land Cover Classification Accuracy Using Machine Learning Classifiers. ISPRS Int. J. Geo-Inf. 2020, 9, 277–300.

- Jeppesen, J.H.; Jacobsen, R.H.; Inceoglu, F.; Toftegaard, T.S. A Cloud Detection Algorithm for Satellite Imagery Based on Deep Learning. Remote Sens. Environ. 2019, 229, 247–259.

- Xu, K.; Guan, K.; Peng, J.; Luo, Y.; Wang, S. DeepMask: An Algorithm for Cloud and Cloud Shadow Detection in Optical Satellite Remote Sensing Images Using Deep Residual Network. arXiv 2019, arXiv:1911.03607.

- Yang, J.; Guo, J.; Yue, H.; Liu, Z.; Hu, H.; Li, K. CDnet: CNN-Based Cloud Detection for Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6195–6211.

- Shendryk, Y.; Rist, Y.; Ticehurst, C.; Thorburn, P. Deep Learning for Multi-Modal Classification of Cloud, Shadow and Land Cover Scenes in PlanetScope and Sentinel-2 Imagery. ISPRS J. Photogramm. Remote Sens. 2019, 157, 124–136.

- Liu, C.-C.; Zhang, Y.-C.; Chen, P.-Y.; Lai, C.-C.; Chen, Y.-H.; Cheng, J.-H.; Ko, M.-H. Clouds Classification from Sentinel-2 Imagery with Deep Residual Learning and Semantic Image Segmentation. Remote Sens. 2019, 11, 119.

- Kanu, S.; Khoja, R.; Lal, S.; Raghavendra, B.S.; CS, A. CloudX-Net: A Robust Encoder-Decoder Architecture for Cloud Detection from Satellite Remote Sensing Images. Remote Sens. Appl. Soc. Environ. 2020, 20, 100417.

- Segal-Rozenhaimer, M.; Li, A.; Das, K.; Chirayath, V. Cloud Detection Algorithm for Multi-Modal Satellite Imagery Using Convolutional Neural-Networks (CNN). Remote Sens. Environ. 2020, 237, 111446–111463.

- Guo, J.; Yang, J.; Yue, H.; Tan, H.; Hou, C.; Li, K. CDnetV2: CNN-Based Cloud Detection for Remote Sensing Imagery with Cloud-Snow Coexistence. IEEE Trans. Geosci. Remote Sens. 2021, 59, 700–713.

- Zhang, J.; Wang, Y.; Wang, H.; Wu, J.; Li, Y. CNN Cloud Detection Algorithm Based on Channel and Spatial Attention and Probabilistic Upsampling for Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

- Chen, Y.; Tang, L.; Kan, Z.; Latif, A.; Yang, X.; Bilal, M.; Li, Q. Cloud and Cloud Shadow Detection Based on Multiscale 3D-CNN for High Resolution Multispectral Imagery. IEEE Access 2020, 8, 16505–16516.

- Kristollari, V.; Karathanassi, V. Convolutional Neural Networks for Detecting Challenging Cases in Cloud Masking Using Sentinel-2 Imagery. In Proceedings of the Eighth International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2020), Paphos, Cyprus, 16–18 March 2020; Themistocleous, K., Michaelides, S., Ambrosia, V., Hadjimitsis, D.G., Papadavid, G., Eds.; SPIE: Bellingham, WA, USA, 2020; p. 53.

- Luotamo, M.; Metsamaki, S.; Klami, A. Multiscale Cloud Detection in Remote Sensing Images Using a Dual Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4972–4983.

- Ma, N.; Sun, L.; Zhou, C.; He, Y. Cloud Detection Algorithm for Multi-Satellite Remote Sensing Imagery Based on a Spectral Library and 1D Convolutional Neural Network. Remote Sens. 2021, 13, 3319–3339.

- Jiao, L.; Gao, W. Refined UNet Lite: End-to-End Lightweight Network for Edge-Precise Cloud Detection. Procedia Comput. Sci. 2022, 202, 9–14.

- Li, X.; Yang, X.; Li, X.; Lu, S.; Ye, Y.; Ban, Y. GCDB-UNet: A Novel Robust Cloud Detection Approach for Remote Sensing Images. Knowl. Based Syst. 2022, 238, 107890–107902.

- Kumthekar, A.; Reddy, G.R. An Integrated Deep Learning Framework of U-Net and Inception Module for Cloud Detection of Remote Sensing Images. Arab. J. Geosci. 2021, 14, 1–13.

- Yin, M.; Wang, P.; Hao, W.; Ni, C. Cloud Detection of High-Resolution Remote Sensing Image Based on Improved U-Net. Multimed. Tools Appl. 2023, 82, 25271–25288.

- López-Puigdollers, D.; Mateo-Garcia, G.; Gómez-Chova, L. Benchmarking Deep Learning Models for Cloud Detection in Landsat-8 and Sentinel-2 Images. Remote Sens. 2021, 13, 992.

- Grabowski, B.; Ziaja, M.; Kawulok, M.; Cwiek, M.; Lakota, T.; Longepe, N.; Nalepa, J. Are Cloud Detection U-Nets Robust Against in-Orbit Image Acquisition Conditions? In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 239–242.

- Francis, A.; Sidiropoulos, P.; Muller, J.-P. CloudFCN: Accurate and Robust Cloud Detection for Satellite Imagery with Deep Learning. Remote Sens. 2019, 11, 2312–2334.

- Yang, X.; Gou, T.; Lv, Z.; Li, L.; Jin, H. Weakly-Supervised Cloud Detection and Effective Cloud Removal for Remote Sensing Images. J. Vis. Commun. Image Represent. 2023, 98, 104006.

- Ma, X.; Huang, Y.; Zhang, X.; Pun, M.-O.; Huang, B. Cloud-EGAN: Rethinking CycleGAN From a Feature Enhancement Perspective for Cloud Removal by Combining CNN and Transformer. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4999–5012.

- Pang, S.; Sun, L.; Tian, Y.; Ma, Y.; Wei, J. Convolutional Neural Network-Driven Improvements in Global Cloud Detection for Landsat 8 and Transfer Learning on Sentinel-2 Imagery. Remote Sens. 2023, 15, 1706.

- Li, J.; Wu, Z.; Sheng, Q.; Wang, B.; Hu, Z.; Zheng, S.; Camps-Valls, G.; Molinier, M. A Hybrid Generative Adversarial Network for Weakly-Supervised Cloud Detection in Multispectral Images. Remote Sens. Environ. 2022, 280, 113197.

- Yang, J.; Li, W.; Chen, K.; Liu, Z.; Shi, Z.; Zou, Z. Weakly Supervised Adversarial Training for Remote Sensing Image Cloud and Snow Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 15206–15221.

- Shi, C.; Zhou, Y.; Qiu, B.; Guo, D.; Li, M. CloudU-Net: A Deep Convolutional Neural Network Architecture for Daytime and Nighttime Cloud Images’ Segmentation. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1688–1692.

- Shi, C.; Zhou, Y.; Qiu, B. CloudU-Netv2: A Cloud Segmentation Method for Ground-Based Cloud Images Based on Deep Learning. Neural Process. Lett. 2021, 53, 2715–2728.

- Peng, L.; Chen, X.; Chen, J.; Zhao, W.; Cao, X. Understanding the Role of Receptive Field of Convolutional Neural Network for Cloud Detection in Landsat 8 OLI Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5407317.

- Zhao, C.; Zhang, X.; Kuang, N.; Luo, H.; Zhong, S.; Fan, J. Boundary-Aware Bilateral Fusion Network for Cloud Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Wu, K.; Xu, Z.; Lyu, X.; Ren, P. Cloud Detection with Boundary Nets. ISPRS J. Photogramm. Remote Sens. 2022, 186, 218–231.

- Liu, Y.; Wang, W.; Li, Q.; Min, M.; Yao, Z. DCNet: A Deformable Convolutional Cloud Detection Network for Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- He, Q.; Sun, X.; Yan, Z.; Fu, K. DABNet: Deformable Contextual and Boundary-Weighted Network for Cloud Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- De Souza, A.; Shokri, P. Residual U-Net with Attention for Detecting Clouds in Satellite Imagery. EarthArXiv 2023, 1–17. Available online: https://eartharxiv.org/repository/view/4910/ (accessed on 14 December 2021).

- Zhao, C.; Zhang, X.; Luo, H.; Zhong, S.; Tang, L.; Peng, J.; Fan, J. Detail-Aware Multiscale Context Fusion Network for Cloud Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Yao, X.; Guo, Q.; Li, A. Light-Weight Cloud Detection Network for Optical Remote Sensing Images with Attention-Based Deeplabv3+ Architecture. Remote Sens. 2021, 13, 3617–3640.

- Chen, Y.; Tang, L.; Huang, W.; Guo, J.; Yang, G. A Novel Spectral Indices-Driven Spectral-Spatial-Context Attention Network for Automatic Cloud Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3092–3103.

- Luo, C.; Feng, S.; Yang, X.; Ye, Y.; Li, X.; Zhang, B.; Chen, Z.; Quan, Y. LWCDnet: A Lightweight Network for Efficient Cloud Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Zheng, Y.; Ling, W.; Shifei, T. A Lightweight Network for Remote Sensing Image Cloud Detection. In Proceedings of the 4th International Conference on Power, Intelligent Computing and Systems, Shenyang, China, 29–31 July 2022; pp. 644–649.

- Li, X.; Chen, S.; Wu, J.; Li, J.; Wang, T.; Tang, J.; Hu, T.; Wu, W. Satellite Cloud Image Segmentation Based on Lightweight Convolutional Neural Network. PLoS ONE 2023, 18, e0280408.

- Zhang, G.; Gao, X.; Yang, J.; Yang, Y.; Tan, M.; Xu, J.; Wang, Y. A Multi-Task Driven and Reconfigurable Network for Cloud Detection in Cloud-Snow Coexistence Regions from Very-High-Resolution Remote Sensing Images. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103070–103086.

- Li, X.; Ye, H.; Qiu, S. Cloud Contaminated Multispectral Remote Sensing Image Enhancement Algorithm Based on MobileNet. Remote Sens. 2022, 14, 4815–4842.

- Bowen, Z.; Jianlin, Z.; Xiaoxing, F.; Yaxing, S. Cloud Detection in Cloud-Snow Co-Occurrence Remote Sensing Images Based on Convolutional Neural Network. In Proceedings of the 6th International Conference on Big Data Technologies, Qingdao, China, 22–24 September 2023; ACM: New York, NY, USA, 2023; Volume 52, pp. 396–402.

- Grabowski, B.; Ziaja, M.; Kawulok, M.; Bosowski, P.; Longépé, N.; Saux, B.L.; Nalepa, J. Squeezing nnU-Nets with Knowledge Distillation for On-Board Cloud Detection. arXiv 2023, arXiv:2306.09886.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Han, S.; Wang, J.; Zhang, S. Former-CR: A Transformer-Based Thick Cloud Removal Method with Optical and SAR Imagery. Remote Sens. 2023, 15, 1196–1218.

- Du, X.; Wu, H. Feature-Aware Aggregation Network for Remote Sensing Image Cloud Detection. Int. J. Remote Sens. 2023, 44, 1872–1899.

- Cao, Y.; Sui, B.; Zhang, S.; Qin, H. Cloud Detection From High-Resolution Remote Sensing Images Based on Convolutional Neural Networks with Geographic Features and Contextual Information. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Qian, J.; Ci, J.; Tan, H.; Xu, W.; Jiao, Y.; Chen, P. Cloud Detection Method Based on Improved DeeplabV3+ Remote Sensing Image. IEEE Access 2024, 12, 9229–9242.

- Zhang, B.; Zhang, Y.; Li, Y.; Wan, Y.; Yao, Y. CloudViT: A Lightweight Vision Transformer Network for Remote Sensing Cloud Detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Singh, R.; Biswas, M.; Pal, M. A Transformer-Based Cloud Detection Approach Using Sentinel 2 Imageries. Int. J. Remote Sens. 2023, 44, 3194–3208.

- Francis, A. Sensor Independent Cloud and Shadow Masking with Partial Labels and Multimodal Inputs. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–18.

- Jiao, W.; Zhang, Y.; Zhang, B.; Wan, Y. SCTrans: A Transformer Network Based on the Spatial and Channel Attention for Cloud Detection. In Proceedings of the IGARSS International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 615–618.

- Ge, W.; Yang, X.; Jiang, R.; Shao, W.; Zhang, L. CD-CTFM: A Lightweight CNN-Transformer Network for Remote Sensing Cloud Detection Fusing Multiscale Features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 4538–4551.

- Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. MLP-Mixer: An All-MLP Architecture for Vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.

- Rao, Y.; Zhao, W.; Zhu, Z.; Lu, J.; Zhou, J. Global Filter Networks for Image Classification. Adv. Neural Inf. Process. Syst. 2021, 34, 980–993.

- Singh, R.; Biswas, M.; Pal, M. Enhanced Cloud Detection in Sentinel-2 Imagery Using K-Means Clustering Embedded Transformer-Inspired Models. J. Appl. Remote Sens. 2024, 18, 034516.

- Gupta, R.; Nanda, S.J. Cloud Detection in Satellite Images with Classical and Deep Neural Network Approach: A Review. Multimed. Tools Appl. 2022, 81, 31847–31880.

- Wright, N.; Duncan, J.M.A.; Callow, J.N.; Thompson, S.E.; George, R.J. CloudS2Mask: A Novel Deep Learning Approach for Improved Cloud and Cloud Shadow Masking in Sentinel-2 Imagery. Remote Sens. Environ. 2024, 306, 114122.

- Gbodjo, Y.J.E.; Hughes, L.H.; Molinier, M.; Tuia, D.; Li, J. Self-Supervised Representation Learning for Cloud Detection Using Sentinel-2 Images. 2025.

- Ji, T.-Y.; Chu, D.; Zhao, X.-L.; Hong, D. A Unified Framework of Cloud Detection and Removal Based on Low-Rank and Group Sparse Regularizations for Multitemporal Multispectral Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Zhou, J.; Luo, X.; Rong, W.; Xu, H. Cloud Removal for Optical Remote Sensing Imagery Using Distortion Coding Network Combined with Compound Loss Functions. Remote Sens. 2022, 14, 3452.

- Zheng, W.-J.; Zhao, X.-L.; Zheng, Y.-B.; Lin, J.; Zhuang, L.; Huang, T.-Z. Spatial-Spectral-Temporal Connective Tensor Network Decomposition for Thick Cloud Removal. ISPRS J. Photogramm. Remote Sens. 2023, 199, 182–194.

- Li, Z.; Shen, H.; Cheng, Q.; Li, W.; Zhang, L. Thick Cloud Removal in High-Resolution Satellite Images Using Stepwise Radiometric Adjustment and Residual Correction. Remote Sens. 2019, 11, 1925–1944.

- Dai, J.; Shi, N.; Zhang, T.; Xu, W. TCME: Thin Cloud Removal Network for Optical Remote Sensing Images Based on Multidimensional Features Enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Zheng, J.; Liu, X.-Y.; Wang, X. Single Image Cloud Removal Using U-Net and Generative Adversarial Networks. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6371–6385.

- Liu, H.; Huang, B.; Cai, J. Thick Cloud Removal Under Land Cover Changes Using Multisource Satellite Imagery and a Spatiotemporal Attention Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–18.

- Ma, D.; Wu, R.; Xiao, D.; Sui, B. Cloud Removal from Satellite Images Using a Deep Learning Model with the Cloud-Matting Method. Remote Sens. 2023, 15, 904–921.

- Xiong, Q.; Li, G.; Yao, X.; Zhang, X. SAR-to-Optical Image Translation and Cloud Removal Based on Conditional Generative Adversarial Networks: Literature Survey, Taxonomy, Evaluation Indicators, Limits and Future Directions. Remote Sens. 2023, 15, 1137–1157.

- Li, J.; Wu, Z.; Hu, Z.; Zhang, J.; Li, M.; Mo, L.; Molinier, M. Thin Cloud Removal in Optical Remote Sensing Images Based on Generative Adversarial Networks and Physical Model of Cloud Distortion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 373–389.

- Chen, Y.; Tang, L.; Yang, X.; Fan, R.; Bilal, M.; Li, Q. Thick Clouds Removal from Multitemporal ZY-3 Satellite Images Using Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 13, 143–153.

- Zhu, S.; Li, Z.; Shen, H.; Lin, D. A Fast Two-Step Algorithm for Large-Area Thick Cloud Removal in High-Resolution Images. Remote Sens. Lett. 2022, 14, 1–9.

- Hu, G.; Sun, X.; Liang, D.; Sun, Y. Cloud Removal of Remote Sensing Image Based on Multi-Output Support Vector Regression. J. Syst. Eng. Electron. 2014, 25, 1082–1088.

- Li, X.; Feng, R.; Guan, X.; Shen, H.; Zhang, L. Remote Sensing Image Mosaicking: Achievements and Challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 8–22.

- Ebel, P.; Xu, Y.; Schmitt, M.; Zhu, X.X. SEN12MS-CR-TS: A Remote-Sensing Data Set for Multimodal Multitemporal Cloud Removal. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Zhang, Q.; Yuan, Q.; Li, Z.; Sun, F.; Zhang, L. Combined Deep Prior with Low-Rank Tensor SVD for Thick Cloud Removal in Multitemporal Images. ISPRS J. Photogramm. Remote Sens. 2021, 177, 161–173.

- Xu, Z.; Wu, K.; Wang, W.; Lyu, X.; Ren, P. Semi-Supervised Thin Cloud Removal with Mutually Beneficial Guides. ISPRS J. Photogramm. Remote Sens. 2022, 192, 327–343.

- Wu, R.; Liu, G.; Lv, J.; Fu, Y.; Bao, X.; Shama, A.; Cai, J.; Sui, B.; Wang, X.; Zhang, R. An Innovative Approach for Effective Removal of Thin Clouds in Optical Images Using Convolutional Matting Model. Remote Sens. 2023, 15, 2119–2143.

- Shao, Z.; Pan, Y.; Diao, C.; Cai, J. Cloud Detection in Remote Sensing Images Based on Multiscale Features-Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4062–4076.

- Tu, Z.; Guo, L.; Pan, H.; Lu, J.; Xu, C.; Zou, Y. Multitemporal Image Cloud Removal Using Group Sparsity and Nonconvex Low-Rank Approximation. J. Nonlinear Var. Anal. 2023, 7, 527–548.

- Li, Y.; Wei, F.; Zhang, Y.; Chen, W.; Ma, J. HS2P: Hierarchical Spectral and Structure-Preserving Fusion Network for Multimodal Remote Sensing Image Cloud and Shadow Removal. Inf. Fusion 2023, 94, 215–228.

- Theissler, A.; Thomas, M.; Burch, M.; Gerschner, F. ConfusionVis: Comparative Evaluation and Selection of Multi-Class Classifiers Based on Confusion Matrices. Knowl. Based Syst. 2022, 247, 108651.

| Band Name | Landsat 7 Band (Resolution) | Landsat 7 Wavelength (µm) | Landsat 8 Band (Resolution) | Landsat 8 Wavelength (µm) | Sentinel-2 Band (Resolution) | Sentinel-2 Wavelength (µm) |
|---|---|---|---|---|---|---|
| Coastal | - | - | Band 1 (30 m) | 0.435–0.451 | Band 1 (60 m) | 0.433–0.453 |
| Blue | Band 1 (30 m) | 0.441–0.514 | Band 2 (30 m) | 0.452–0.512 | Band 2 (10 m) | 0.458–0.523 |
| Green | Band 2 (30 m) | 0.519–0.601 | Band 3 (30 m) | 0.533–0.590 | Band 3 (10 m) | 0.543–0.578 |
| Red | Band 3 (30 m) | 0.631–0.692 | Band 4 (30 m) | 0.636–0.673 | Band 4 (10 m) | 0.650–0.680 |
| Red Edge 1 | - | - | - | - | Band 5 (20 m) | 0.698–0.713 |
| Red Edge 2 | - | - | - | - | Band 6 (20 m) | 0.733–0.748 |
| Red Edge 3 | - | - | - | - | Band 7 (20 m) | 0.765–0.785 |
| Wide NIR | - | - | - | - | Band 8 (10 m) | 0.785–0.900 |
| Narrow NIR | Band 4 (30 m) | 0.772–0.898 | Band 5 (30 m) | 0.851–0.879 | Band 8A (20 m) | 0.855–0.875 |
| Water Vapor | - | - | - | - | Band 9 (60 m) | 0.930–0.950 |
| Cirrus | - | - | Band 9 (30 m) | 1.363–1.384 | Band 10 (60 m) | 1.365–1.385 |
| SWIR1 | Band 5 (30 m) | 1.547–1.749 | Band 6 (30 m) | 1.566–1.651 | Band 11 (20 m) | 1.565–1.655 |
| SWIR2 | Band 7 (30 m) | 2.064–2.345 | Band 7 (30 m) | 2.107–2.294 | Band 12 (20 m) | 2.100–2.280 |
| Panchromatic | Band 8 (15 m) | 0.515–0.896 | Band 8 (15 m) | 0.503–0.676 | - | - |
| TIR-1 | Band 6 (60 m) | 10.31–12.36 | Band 10 (100 m) | 10.60–11.19 | - | - |
| TIR-2 | - | - | Band 11 (100 m) | 11.50–12.51 | - | - |
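
Because the same spectral region carries a different band number on each sensor, cross-sensor cloud masking code usually looks bands up by their role rather than by index. The sketch below is illustrative only: it assumes reflectance arrays scaled to 0–1, already resampled to a common grid, and stored under hypothetical `"B<n>"` keys. It maps the Green and SWIR1 rows of the table above to each sensor and computes the Normalized Difference Snow Index, NDSI = (Green − SWIR1)/(Green + SWIR1). Snow is bright in the green band but dark in SWIR1, while most clouds stay bright in both, which is why NDSI is a common feature for separating snow from cloud.

```python
import numpy as np

# Green and SWIR1 band labels per sensor, read from the table above.
# The sensor keys and the "B<n>" label format are illustrative assumptions.
BAND_INDEX = {
    "landsat7": {"green": "B2", "swir1": "B5"},
    "landsat8": {"green": "B3", "swir1": "B6"},
    "sentinel2": {"green": "B3", "swir1": "B11"},
}


def ndsi(bands: dict, sensor: str) -> np.ndarray:
    """NDSI = (Green - SWIR1) / (Green + SWIR1), set to 0 where both bands are 0.

    `bands` maps band labels to reflectance arrays that are already on a common
    grid (e.g. Sentinel-2 B11 resampled from 20 m to the 10 m grid of B3).
    """
    idx = BAND_INDEX[sensor]
    green = np.asarray(bands[idx["green"]], dtype=np.float64)
    swir1 = np.asarray(bands[idx["swir1"]], dtype=np.float64)
    numerator = green - swir1
    denominator = green + swir1
    out = np.zeros_like(denominator)
    # Divide only where the denominator is non-zero; other pixels keep the 0 fill.
    np.divide(numerator, denominator, out=out, where=denominator != 0)
    return out


# Two Sentinel-2 pixels: a snow-like pixel (bright green, dark SWIR1)
# and a pixel with equal green and SWIR1 reflectance.
s2_pixels = {"B3": np.array([[0.6, 0.3]]), "B11": np.array([[0.1, 0.3]])}
print(ndsi(s2_pixels, "sentinel2"))
```

Resolving bands through a lookup table keeps the index formula identical across Landsat 7, Landsat 8, and Sentinel-2; only the dictionary changes when another sensor is added.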

| Location | Tile ID | Satellite | Acquisition Date | Scene Information |
|---|---|---|---|---|
| Railroad Valley, USA | T11SPC | S2A | 5 January 2017 | Small cumulus over bright soil |
| Railroad Valley, USA | T11SPC | S2B | 27 August 2017 | Large cumulus over bright soil |
| Alta Floresta, Brazil | T21LWK | S2A | 5 May 2018 | Scattered small cumulus |
| Alta Floresta, Brazil | T21LWK | S2B | 9 June 2018 | Thin cirrus |
| Marrakech, Morocco | T29RPQ | S2A | 17 April 2016 | Scattered cumulus and thin cirrus |
| Marrakech, Morocco | T29RPQ | S2A | 21 June 2017 | Clear image with snow and thin cirrus |
| Arles, France | T31TFJ | S2A | 17 September 2017 | Large cloud cover |
| Arles, France | T31TFJ | S2B | 2 October 2017 | Thick and thin clouds |
| Orleans, France | T31UDP | S2A | 16 May 2017 | Thick and thin cirrus clouds |
| Orleans, France | T31UDP | S2B | 19 August 2017 | Large mid-altitude cloud cover |
| Ispra, Italy | T32TMR | S2A | 15 August 2017 | Clouds over mountains with snow |
| Ispra, Italy | T32TMR | S2B | 9 October 2017 | Clouds over mountains with snow and bright soil |
| Gobabeb, Namibia | T33KWP | S2A | 21 December 2016 | Thick clouds above the desert |
| Gobabeb, Namibia | T33KWP | S2B | 9 September 2017 | Small and low clouds |
| Mongu, Zambia | T34LGJ | S2A | 12 November 2016 | Large thick cloud cover and some cirrus |
| Mongu, Zambia | T34LGJ | S2B | 4 August 2017 | Clear image and a few mid-altitude clouds |
| Pretoria, South Africa | T35JPM | S2A | 13 March 2017 | Diverse cloud types |
| Pretoria, South Africa | T35JPM | S2A | 20 August 2017 | Scattered small clouds |
| Railroad Valley, USA | T11SPC | S2B | 13 February 2018 | Large stratus and some cumulus |
| Alta Floresta, Brazil | T21LWK | S2A | 14 July 2018 | Mid-altitude small clouds |
| Alta Floresta, Brazil | T21LWK | S2A | 13 August 2018 | Thin cirrus |
| Marrakech, Morocco | T29RPQ | S2A | 18 December 2017 | Scattered cumulus and snow |
| Arles, France | T31TFJ | S2B | 21 December 2017 | Mid-altitude thick clouds and snow |
| Orleans, France | T31UDP | S2B | 18 February 2018 | Stratus cloud |
| Ispra, Italy | T32TMR | S2B | 11 November 2017 | Clouds over mountains and mist |
| Gobabeb, Namibia | T33KWP | S2B | 9 February 2018 | High and thin clouds |
| Mongu, Zambia | T34LGJ | S2B | 13 October 2017 | Large thin cirrus cover |
| Pretoria, South Africa | T35JPM | S2B | 13 December 2017 | Altostratus and small scattered clouds |
| Munich, Germany | T32UPU | S2A | 22 April 2018 | Mostly cloud-free with a few small clouds |
| Munich, Germany | T32UPU | S2B | 24 April 2018 | Large cloud cover with cumulus and cirrus |
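
The Tile ID column follows the Sentinel-2 tiling convention derived from the Military Grid Reference System (MGRS): a leading `T`, a two-digit UTM zone (01–60), a latitude-band letter, and a two-letter 100 km grid-square code. The helper below is a hypothetical `parse_tile_id`, written only to illustrate that structure; it splits an ID into its parts and infers the hemisphere from the latitude band (bands C–M lie south of the equator, N–X north of it). The grid-square check is deliberately loose and only excludes the letters I and O.

```python
import re
from dataclasses import dataclass

# MGRS latitude bands run C..X from south to north, skipping I and O;
# bands C-M are in the southern hemisphere, N-X in the northern.
SOUTHERN_BANDS = frozenset("CDEFGHJKLM")
# "T", two-digit UTM zone, latitude band, two-letter 100 km square (no I or O).
TILE_PATTERN = re.compile(r"T(\d{2})([C-HJ-NP-X])([A-HJ-NP-Z]{2})")


@dataclass(frozen=True)
class TileInfo:
    utm_zone: int
    latitude_band: str
    grid_square: str
    hemisphere: str


def parse_tile_id(tile_id: str) -> TileInfo:
    """Split a Sentinel-2 tile ID such as 'T11SPC' into its MGRS components."""
    match = TILE_PATTERN.fullmatch(tile_id.strip().upper())
    if match is None:
        raise ValueError(f"not a Sentinel-2 tile ID: {tile_id!r}")
    zone, band, square = match.groups()
    utm_zone = int(zone)
    if not 1 <= utm_zone <= 60:
        raise ValueError(f"UTM zone out of range in {tile_id!r}")
    hemisphere = "south" if band in SOUTHERN_BANDS else "north"
    return TileInfo(utm_zone, band, square, hemisphere)


for tid in ("T11SPC", "T21LWK", "T32UPU"):
    print(tid, parse_tile_id(tid))
```

Applied to the table, the parser places Alta Floresta (T21LWK), Gobabeb (T33KWP), Mongu (T34LGJ), and Pretoria (T35JPM) in the southern hemisphere, consistent with their locations.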

| Label | Class Name |
|---|---|
| 0 | No Fill |
| 1 | No Data |
| 2 | Low Cloud |
| 3 | High Cloud |
| 4 | Cloud Shadow |
| 5 | Ground |
| 6 | Water |
| 7 | Snow |
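
Many of the methods reviewed here produce a binary cloud mask, so multi-class reference labels like those above are often collapsed before evaluation. A minimal sketch, assuming the label values in the table: Low Cloud and High Cloud become cloud (1), No Fill and No Data are set to a hypothetical ignore value (255) so they can be excluded from scoring, and every other class, including Cloud Shadow, becomes non-cloud (0). The constant names and `to_binary_cloud_mask` are illustrative, not part of any dataset's tooling.

```python
import numpy as np

# Label values from the table above.
NO_FILL, NO_DATA, LOW_CLOUD, HIGH_CLOUD, CLOUD_SHADOW, GROUND, WATER, SNOW = range(8)
IGNORE = 255  # hypothetical marker for pixels excluded from evaluation


def to_binary_cloud_mask(labels) -> np.ndarray:
    """Collapse the 8-class labels into cloud (1), non-cloud (0), and IGNORE."""
    labels = np.asarray(labels)
    binary = np.isin(labels, (LOW_CLOUD, HIGH_CLOUD)).astype(np.uint8)
    # Fill and no-data pixels carry no ground truth, so flag them for exclusion.
    binary[np.isin(labels, (NO_FILL, NO_DATA))] = IGNORE
    return binary


print(to_binary_cloud_mask([[0, 1, 2, 3], [4, 5, 6, 7]]))
```

Whether shadow should instead be merged with cloud (a "contaminated" class) depends on the downstream application; only the tuple passed to `np.isin` needs to change.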

| Location | Tile ID | Acquisition Date | Scene Information |
|---|---|---|---|
| Yiwu, China | T46TFN | 14 July 2019 | Cloud over snow cover and barren |
| Henan, China | T47SQU | 19 December 2019 | Cloud over barren with snow cover |
| Washixia, China | T45SWC | 30 June 2019 | Scattered cloud over the mountain with snow |
| Tibet | T46RGV | 15 December 2019 | Cloud over snow |
| Tibet | T45SXR | 30 September 2018 | Cloud over barren and water |
| Taila, China | T51TWM | 17 March 2020 | Cloud over ice, snow, and barren |
| Bachu, China | T44TKK | 16 August 2018 | Cloud over barren and clear farmland |
| Wentugaole, China | T47TQF | 23 October 2019 | Large cloud cover |
| Shangyi, China | T50TKL | 24 August 2018 | Cloud over forest and farmland |
| Yongding, China | T50RMN | 18 November 2019 | Cloud cover with forest and green area |
| Songyang, China | T50RQS | 16 September 2019 | Large cloud cover over greenery |
| Mengzhou, China | T49SFU | 19 August 2019 | Thin and thick clouds over urban area |
| Koldeneng, China | T44TPN | 15 August 2019 | Large cloud cover |
| Qingyuan, China | T51TXG | 10 April 2020 | Scattered cloud over dryland |
| Junhe, China | T50TQQ | 2 October 2019 | Scattered thick and thin cloud |
| Luochuan, China | T49SCV | 29 April 2018 | A few small clouds over the forest |
| Guyuan, China | T51UWS | 6 May 2020 | A few scattered clouds over barren |
| Yanyuan, China | T47RQL | 25 March 2020 | Scattered clouds with barren and forest |
| Rongjiang, China | T49RBJ | 28 September 2019 | Scattered clouds over the forest |
| Dazhou, China | T48RYV | 27 August 2018 | A few small clouds over greenery and urban area |
| Yangchun, China | T49QEE | 22 February 2020 | A few scattered clouds over the forest |
| Qianjiang, China | T49RFP | 22 July 2018 | A few scattered clouds over forest and urban area |
| Pingxiang, China | T49RGL | 29 July 2018 | Clouds over forest and urban area |
| Wulian, China | T50SPE | 6 May 2020 | Scattered thick and thin clouds over a diverse region |
| Zhanjiang, China | T49QDD | 30 September 2018 | Scattered small clouds over the coastal region |
| Changzhou & Wuxi, China | T51STR | 5 November 2019 | Scattered small clouds over shrubland |
| Linshui, China | T48RXU | 12 August 2019 | Scattered small thick clouds |
| Yilan, China | T52TES | 2 June 2019 | Thick cloud over barren and forest |
| Baotou, China | T49TCF | 28 March 2019 | Thick clouds over barren |
| Altay, China | T45TXN | 2 October 2019 | Scattered cloud with snow cover |
| Tibet | T46SFC | 16 April 2020 | Cloud over snow and bright mountain |
| Tibet | T44SPC | 28 May 2020 | Cloud over the mountain with small snow cover |

| Label | Class Name |
|---|---|
| 0 | Undefined (labeler uncertain) |
| 1 | Clear |
| 2 | Cloud Shadow |
| 3 | Semi-transparent Cloud |
| 4 | Cloud |
| 5 | Missing (No Data or Fill) |
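
With a labelling scheme that includes an explicit Undefined class, a scene's cloud-cover percentage is normally computed only over pixels that received a confident label. The sketch below assumes the label values in the table, excludes Undefined and Missing pixels from the denominator, and by default counts both Semi-transparent Cloud and Cloud as cloudy. Whether thin cloud should count is an application-specific choice, so it is exposed as a flag; `cloud_cover_percent` is a hypothetical helper, not part of the dataset's release.

```python
import math

import numpy as np

# Label values from the table above.
UNDEFINED, CLEAR, CLOUD_SHADOW, SEMI_TRANSPARENT, CLOUD, MISSING = range(6)


def cloud_cover_percent(labels, include_thin: bool = True) -> float:
    """Percentage of confidently labelled pixels that are cloudy (NaN if none are)."""
    labels = np.asarray(labels)
    # Undefined and missing pixels are excluded from both numerator and denominator.
    valid = ~np.isin(labels, (UNDEFINED, MISSING))
    cloud_classes = (SEMI_TRANSPARENT, CLOUD) if include_thin else (CLOUD,)
    cloudy = np.isin(labels, cloud_classes) & valid
    n_valid = int(valid.sum())
    if n_valid == 0:
        return math.nan
    return 100.0 * int(cloudy.sum()) / n_valid


scene = [[0, 1, 2], [3, 4, 5]]
print(cloud_cover_percent(scene))                      # 50.0
print(cloud_cover_percent(scene, include_thin=False))  # 25.0
```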

| Split | S. No. | Month | Total Tiles | Total Images | Total Sub-Tiles |
|---|---|---|---|---|---|
| Train | 1 | January | 60 | 60 | 290 |
| Train | 2 | February | 88 | 88 | 476 |
| Train | 3 | March | 84 | 86 | 698 |
| Train | 4 | April | 72 | 73 | 527 |
| Train | 5 | May | 121 | 126 | 2271 |
| Train | 6 | June | 115 | 117 | 745 |
| Train | 7 | July | 95 | 99 | 1172 |
| Train | 8 | August | 89 | 90 | 1066 |
| Train | 9 | September | 64 | 64 | 273 |
| Train | 10 | October | 87 | 88 | 556 |
| Train | 11 | November | 61 | 61 | 358 |
| Train | 12 | December | 3 | 3 | 16 |
| Test | 13 | All Months | 119 | 124 | 803 |
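
Summing the monthly training rows puts the test split in proportion: the training months add up to 939 tile entries, 955 images, and 8448 sub-tiles, so the 803 test sub-tiles are roughly 8.7% of all sub-tiles. The short calculation below simply re-adds the figures in the table. It assumes a tile observed in several months is counted once per month, since the table does not say whether tiles repeat across months.

```python
# Monthly training rows from the table: (month, tiles, images, sub-tiles).
TRAIN_ROWS = [
    ("January", 60, 60, 290),
    ("February", 88, 88, 476),
    ("March", 84, 86, 698),
    ("April", 72, 73, 527),
    ("May", 121, 126, 2271),
    ("June", 115, 117, 745),
    ("July", 95, 99, 1172),
    ("August", 89, 90, 1066),
    ("September", 64, 64, 273),
    ("October", 87, 88, 556),
    ("November", 61, 61, 358),
    ("December", 3, 3, 16),
]
TEST_SUB_TILES = 803

train_tiles = sum(row[1] for row in TRAIN_ROWS)
train_images = sum(row[2] for row in TRAIN_ROWS)
train_sub_tiles = sum(row[3] for row in TRAIN_ROWS)
test_share = TEST_SUB_TILES / (train_sub_tiles + TEST_SUB_TILES)

print(train_tiles, train_images, train_sub_tiles)  # 939 955 8448
print(f"test share of sub-tiles: {test_share:.1%}")  # test share of sub-tiles: 8.7%
```

The monthly spread is also uneven: May alone contributes 2271 of the 8448 training sub-tiles, while December contributes 16.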

| Split | Location | Tile ID | Acquisition Date | Scene Description |
|---|---|---|---|---|
| Train | Bhavnagar, Gujarat | T42QZJ | 28 March 2022 | Clear coastal area with bright urban objects |
| Train | Jodhpur, Rajasthan | T43RCK | 16 June 2022 | Thick clouds with bright urban and desert objects |
| Train | Hanumangarh, Rajasthan | T43RDN | 26 June 2022 | Mostly clear desert and urban area |
| Train | Srinagar, Jammu & Kashmir | T43SDT | 4 October 2022 | Thick and thin clouds over the mountains with snow patches |
| Train | Srikakulam, Andhra Pradesh | T44QRF | 28 November 2022 | Sparsely cloudy sea with clear coastal area |
| Train | Tinsukia, Assam | T46RGR | 29 November 2022 | Thick and thin clouds over forest and a clear, dried riverbed |
| Test | Thiruvananthapuram, Kerala | T43PFK | 16 August 2022 | Thick and thin clouds scattered over land and sea |
| Test | Bathinda, Punjab | T43RDP | 23 November 2022 | Thin cloud over cultivated farmland |
| Test | Shimla, Himachal Pradesh | T43RFQ | 20 November 2022 | Thick clouds over mountains with little snow |
| Test | Pithora, Chhattisgarh | T44QPJ | 21 December 2022 | Sparsely distributed, nearly invisible thin clouds |
| Test | Haldwani, Uttarakhand | T44RLT | 28 October 2022 | Thick clouds over forest and mountain ranges |

| Label | Class Name |
|---|---|
| 0 | No Fill |
| 1 | No Data |
| 2 | Thick Cloud |
| 3 | Thin Cloud |
| 4 | Cloud Shadow |
| 5 | Ground |
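
When a label scheme separates thick cloud, thin cloud, and shadow, results are usually reported per class rather than as one overall accuracy. The sketch below computes per-class intersection over union (IoU) directly from pixel counts, assuming the label values in the table. Pixels whose reference label is No Fill or No Data are dropped before counting, and a prediction of any other value counts as an error. The function name and the choice of IoU as the metric are illustrative; they are not the evaluation protocol of any particular study in this review.

```python
import numpy as np

# Label values from the table above.
NO_FILL, NO_DATA, THICK_CLOUD, THIN_CLOUD, CLOUD_SHADOW, GROUND = range(6)
EVAL_CLASSES = (THICK_CLOUD, THIN_CLOUD, CLOUD_SHADOW, GROUND)


def per_class_iou(reference, prediction) -> dict:
    """IoU for each evaluated class; NaN if a class is absent from both masks."""
    ref = np.asarray(reference).ravel()
    pred = np.asarray(prediction).ravel()
    # Keep only pixels with a usable reference label (drops No Fill / No Data).
    keep = np.isin(ref, EVAL_CLASSES)
    ref, pred = ref[keep], pred[keep]
    ious = {}
    for cls in EVAL_CLASSES:
        intersection = np.sum((ref == cls) & (pred == cls))
        union = np.sum((ref == cls) | (pred == cls))
        ious[cls] = float(intersection / union) if union else float("nan")
    return ious


reference = [[0, 2, 2, 3], [4, 5, 5, 1]]
prediction = [[2, 2, 3, 3], [4, 5, 2, 5]]
scores = per_class_iou(reference, prediction)
print(scores)
print("mean IoU:", np.nanmean(list(scores.values())))
```

Using `np.nanmean` for the mean IoU skips classes that never occur in a scene instead of counting them as zero.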

| S. No. | Author (Year) | Paper Title | Technique(s) | Satellite Sensor/Instrument (Dataset(s)) | Cloud Mask Type | Evaluation Parameter | Main Highlights |
|---|---|---|---|---|---|---|---|
| 1. | Bai et al. (2016) [71] | “Cloud detection for high-resolution satellite imagery using machine learning and multi-feature fusion” | SVM-RBF (GLCM + NDVI) | | Binary | | |
| 2. | Tan et al. (2016) [72] | “Cloud extraction from Chinese high-resolution satellite imagery by probabilistic latent semantic analysis and object-based machine learning” | SVM (PLSA + SLIC) | | Binary | | |
| 3. | Shao et al. (2017) [73] | “Fuzzy AutoEncode Based Cloud Detection for Remote Sensing Imagery” | Fuzzy Autoencode Model (FAEM) | | Binary | | |
| 4. | Pérez-Suay et al. (2017) [74] | “Randomized kernels for large scale Earth observation applications” | Randomized Kernels | | Binary | | |
| 5. | Sun et al. (2018) [75] | “SVM-Based Cloud Detection Using Combined Texture Features” | SVM (GLCM + RIULBP) | | Binary | | |
| 6. | Ishida et al. (2018) [76] | “Development of a support vector machine-based cloud detection method for MODIS with the adjustability to various conditions” | SVM | | Binary | | |
| 7. | Pérez-Suay et al. (2018) [77] | “Pattern recognition scheme for large-scale cloud detection over landmarks” | SVM | | Binary | | |
| 8. | Deng et al. (2018) [78] | “Cloud detection in satellite images based on natural scene statistics and Gabor features” | SVM (Gabor) | | Binary | | |
| 9. | Ghasemian and Akhoondzadeh (2018) [79] | “Introducing two Random Forest based methods for cloud detection in remote sensing images” | RF | | Multiclass (thick cloud, thin cloud, snow/ice, and background) | | |
| 10. | Fu et al. (2018) [80] | “Cloud detection for FY meteorology satellite based on ensemble thresholds and random forests approach” | RF | | Binary | | |
| 11. | Joshi et al. (2019) [81] | “Cloud detection algorithm using SVM with SWIR2 and tasseled cap applied to Landsat 8” | SVM | | Multiclass (cloud, shadow, and ground) | | |
| 12. | Chen et al. (2020) [82] | “A Novel Classification Extension-Based Cloud Detection Method for Medium-Resolution Optical Images” | Classification Extension-based Cloud Detection (CECD) using RF | | Binary | | |
| 13. | Cilli et al. (2020) [83] | “Machine learning for cloud detection of globally distributed Sentinel-2 images” | RF, SVM, MLP | | Binary | | |
| 14. | Wei et al. (2020) [84] | “Cloud detection for Landsat imagery by combining the random forest and superpixels extracted via energy-driven sampling segmentation approaches” | RFmask (using RF) | | Binary | | |
| 15. | Ibrahim et al. (2021) [85] | “Cloud and Cloud-Shadow Detection for Applications in Mapping Small-Scale Mining in Colombia Using Sentinel-2 Imagery” | SVM | | Multiclass (cloud, cloud shadow, cirrus, and clear) | | |
| 16. | Li et al. (2022) [86] | “An automatic cloud detection model for Sentinel-2 imagery based on Google Earth Engine” | SVM | | Binary | | |
| 17. | Yao et al. (2022) [87] | “Optical remote sensing cloud detection based on random forest only using the visible light and near-infrared image bands” | RFCD (Random Forest Cloud Detection) | | Binary | | |

| 18. | Singh et al. (2023) [88] | “Cloud detection using sentinel 2 images: a comparison of XGBoost, RF, SVM, and CNN algorithms” | XGBoost RF SVM (each using combination of spectral (S) +GLCM (G) + Morphological (M) + Bilateral (B), and ResNet14) |

| 6-class Binary |

|

|

| 19. | Singh et al. (2023) [89] | “An Automated Cloud Detection Method for Sentinel-2 Images” | XGBoost RF SVM (each using a pixel-wise patch-based mechanism) |

| 6-class |

|

|

| 20. | Shang et al. (2024) [90] | “A hybrid cloud detection and cloud phase classification algorithm using classic threshold-based tests and extra randomized tree model” | Threshold and Extra Randomized Tree (CARE algorithm) |

| Multiclass (cloud, probably cloud, clear, and probably clear) |

|

|

| Deep Learning-based Cloud Detection: | |||||||

| 21. | Jeppesen et al. (2019) [101] | “A cloud detection algorithm for satellite imagery based on deep learning” | Remote Sensing Network (RS-NET based on U-Net) |

| Binary |

|

|

| 22. | Xu et al. (2019) [102] | “DeepMask: an algorithm for cloud and cloud shadow detection in optical satellite remote sensing images using deep residual network” | Deepmask (based on ResNet) |

| Binary |

|

|

| 23. | Yang et al. (2019) [103] | “CDnet: CNN-based cloud detection for remote sensing imagery” | Cloud Detection Network (CD-NET based on CNN) |

| Binary |

|

|

| 24. | Shendryk et al. (2019) [104] | “Deep learning for multi-modal classification of cloud, shadow, and land cover scenes in PlanetScope and Sentinel-2 imagery” | Ensembled DenseNet201, ResNet50 and VGG10 |

| Multiclass (Clear, Partly cloudy, Cloudy, Haze) |

|

|

| 25. | Liu et al. (2019) [105] | “Clouds Classification from Sentinel-2 Imagery with Deep Residual Learning and Semantic Image Segmentation” | CloudNet (deep residual network) |

| Binary |

|

|

| 26. | Kanu et al. (2020) [106] | “CloudX-net: A robust encoder-decoder architecture for cloud detection from satellite remote sensing images” | CloudX-net (CNN) |

| Binary |

|

|

| 27. | Segal-Rozenhaimer et al. (2020) [107] | “Cloud detection algorithm for multi-modal satellite imagery using convolutional neural-networks (CNN)” | Deeplab (CNN based cloud and cloud shadow detection) |

| Binary |

|

|

| 28. | Kristollari et al. (2020) [111] | “Convolutional neural networks for detecting challenging cases in cloud masking using Sentinel-2 imagery” | Patch-to-pixel CNN architecture |

| 6-class |

|

|

| 29. | Luotamo et al. (2021) [112] | “Multiscale Cloud Detection in Remote Sensing Images Using a Dual Convolutional Neural Network” | Two-cascaded CNN architecture |

| Binary |

|

|

| 30. | Ma et al. (2021) [113] | “Cloud detection algorithm for multi-satellite remote sensing imagery based on a spectral library and 1D convolutional neural network” | Cloud detection based on CNN using spectral library (CD-SLCNN based on 1D Residual Network) |

| Binary |

|

|

| 31. | Lopez-Puigdollers et al. 2021 [118] | “Benchmarking Deep Learning Models for Cloud Detection in Landsat-8 and Sentinel-2 Images” | Fully convolutional neural networks (FCNN) based on UNet |

| Binary |

|

|

| 32. | Li et al. 2021 [56] | “A Lightweight Deep Learning-Based Cloud Detection Method for Sentinel-2A Imagery Fusing Multiscale Spectral and Spatial Features” | Cloud Detection-fusing multiscale spectral and spatial features (CD-FM3SFs) |

| Binary |

|

|

| 33. | Li et al. (2022) [124] | “A hybrid generative adversarial network for weakly-supervised cloud detection in multispectral images” | GAN-CDM |

| Binary |

|

|

| 34. | Grabowski et al. (2023) [143] | “Squeezing nnU-Nets with Knowledge Distillation for On-Board Cloud Detection” | nnU-Nets |

| Binary4-class |

|

|

| 35. | Zhang et al. (2023) [149] | “CloudViT: A Lightweight Vision Transformer Network for Remote Sensing Cloud Detection” | CloudViT |

| Binary |

|

|

| 36. | Singh et al. (2023) [150] | “A transformer-based cloud detection approach using Sentinel 2 images” | SSATR-CD (Spatial-spectral Attention Transformer using Cloud Detection) |

| 4-classBinary |

|

|

| 37. | Francis (2024) [151] | “Sensor Independent Cloud and Shadow Masking with Partial Labels and Multimodal Inputs” | SegFormer |

| Binary |

|

|

| 38. | Singh et al. (2024) [156] | “Enhanced cloud detection in Sentinel-2 imagery using K-means clustering embedded transformer-inspired models” | KET-CD (Kmeans embedded Transformer inspired methods) |

| 4-class |

|

|

| 39. | Wright et al. (2024) [158] | “CloudS2Mask: A novel deep learning approach for improved cloud and cloud shadow masking in Sentinel-2 imagery” | CloudS2Mask based on UNet |

| 4-class |

|

|

| 40. | Gbodjp et al. (2025) [159] | “Self-supervised representation learning for cloud detection using Sentinel-2 images” | DeepCluster |

| Binary |

|

|

| Metric | Computation formula (binary) | Computation formula (multiclass, macro-averaged) | Computation formula (multiclass, micro-averaged) |
|---|---|---|---|
| Accuracy | | | |
| F1-score | | | |
| Precision | | | |
| Recall | | | |
| Kappa coefficient | | | |
| mIoU | | | |
| User accuracy (UA) | | | |
| Producer accuracy (PA) | | | |
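
All of the metrics listed in the table above can be derived from a confusion matrix. The Python sketch below uses the standard textbook definitions (rows = reference class, columns = predicted class). These definitions are an assumption here and may differ in notation or detail from the formulas used in the review. `confusion_matrix` and `evaluation_metrics` are hypothetical helpers, not a library API. For a binary cloud mask, the per-class entry for the cloud class gives the binary precision, recall, and F1-score. Per-class precision corresponds to user's accuracy (UA), and per-class recall to producer's accuracy (PA).

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = reference class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def _safe_div(a, b):
    """Element-wise a / b, returning 0 where b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return np.divide(a, b, out=np.zeros_like(a), where=b > 0)


def evaluation_metrics(cm):
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k, but reference is another class
    fn = cm.sum(axis=1) - tp  # reference is class k, but predicted as another class

    precision = _safe_div(tp, tp + fp)  # per class; user's accuracy (UA)
    recall = _safe_div(tp, tp + fn)     # per class; producer's accuracy (PA)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    iou = _safe_div(tp, tp + fp + fn)

    p_o = tp.sum() / n                                     # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2   # chance agreement
    micro_p = tp.sum() / (tp.sum() + fp.sum())  # pool TP/FP over classes
    micro_r = tp.sum() / (tp.sum() + fn.sum())  # pool TP/FN over classes

    return {
        "accuracy": p_o,
        "precision": precision,  # per-class arrays
        "recall": recall,
        "f1": f1,
        "macro_precision": precision.mean(),  # unweighted mean over classes
        "macro_recall": recall.mean(),
        "macro_f1": f1.mean(),
        "micro_precision": micro_p,
        "micro_recall": micro_r,
        "micro_f1": 2 * micro_p * micro_r / (micro_p + micro_r),
        "kappa": (p_o - p_e) / (1 - p_e),
        "miou": iou.mean(),
    }


cm = np.array([[50, 10],   # reference cloud
               [5, 35]])   # reference clear
print(evaluation_metrics(cm))
```

Macro-averaging weights every class equally, so rare classes such as thin cloud or cloud shadow influence the score as much as dominant ones. Micro-averaging pools pixels across classes, and for single-label pixel classification it reduces to overall accuracy.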

| Binary value | Binary class | 4-class value | 4-class class | 6-class value | 6-class class | Fmask value | Fmask class | Sen2Cor value | Sen2Cor class |
|---|---|---|---|---|---|---|---|---|---|
| 0 | Cloud | 2 | Thick cloud | 2 | Low cloud | 4 | Cloud | 9 | Cloud high probability |
| | | 3 | Thin cloud | 3 | High cloud | - | - | 8 | Cloud medium probability |
| | | | | | | | | 10 | Thin cirrus |
| 1 | Clear (non-cloud) | 4 | Cloud shadow | 4 | Cloud shadow | 2 | Cloud shadow | 3 | Cloud shadow |
| | | 5 | Ground | 5 | Ground | 0 | Clear land | 2 | Dark area pixels |
| | | | | | | | | 4 | Vegetation |
| | | | | | | | | 5 | Bare soil |
| | | | | | | | | 7 | Unclassified |
| | | | | 6 | Water | 1 | Water | 6 | Water |
| | | | | 7 | Snow/ice | 3 | Snow | 11 | Snow |
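
Because each scheme assigns its own values, outputs from different methods have to be harmonized before they can be compared, and the table above amounts to a lookup. The Python sketch below collapses 4-class, 6-class, Fmask, or Sen2Cor codes into the binary scheme (0 = cloud, 1 = clear), following the table's grouping, in which cloud shadow falls under clear (non-cloud). Pixel values that do not appear in the table, such as no-data codes, receive a hypothetical fill value of 255. `to_binary` and the scheme keys are illustrative names.

```python
import numpy as np

# Values grouped as "Cloud" (binary 0) in the class-mapping table above.
CLOUD_VALUES = {
    "4-class": {2, 3},          # thick cloud, thin cloud
    "6-class": {2, 3},          # low cloud, high cloud
    "fmask": {4},               # cloud
    "sen2cor": {8, 9, 10},      # cloud medium/high probability, thin cirrus
}
# Values grouped as "Clear (non-cloud)" (binary 1), including cloud shadow.
CLEAR_VALUES = {
    "4-class": {4, 5},
    "6-class": {4, 5, 6, 7},
    "fmask": {0, 1, 2, 3},
    "sen2cor": {2, 3, 4, 5, 6, 7, 11},
}
FILL = 255  # assumption: marks pixels whose value is not listed in the table


def to_binary(labels, scheme):
    """Map a label array from `scheme` to the binary cloud/clear scheme."""
    labels = np.asarray(labels)
    out = np.full(labels.shape, FILL, dtype=np.uint8)
    out[np.isin(labels, list(CLEAR_VALUES[scheme]))] = 1  # clear (non-cloud)
    out[np.isin(labels, list(CLOUD_VALUES[scheme]))] = 0  # cloud
    return out


scl = np.array([[9, 4],
                [3, 10]])
print(to_binary(scl, "sen2cor"))
```

Whether thin cirrus or Sen2Cor's medium-probability class should count as cloud is a design choice that affects the resulting scores. This sketch simply mirrors the grouping in the table.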

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Singh, R.; Pal, M.; Biswas, M. Cloud Detection Methods for Optical Satellite Imagery: A Comprehensive Review. Geomatics 2025, 5, 27. https://doi.org/10.3390/geomatics5030027
