1. Introduction

Whether in distance or face-to-face teaching and learning, computer-based tools have become essential. Beyond supporting everyday teaching and learning tasks, these tools can further improve education in several ways. Moreover, the growing use of technology means that students spend much of their learning time in front of a computer. This makes it increasingly feasible to develop solutions that analyze student behavior, which can help teachers understand their students better and, consequently, teach them more effectively.

Gathering and exploiting emotion data may provide valuable insights for creating more effective instructional materials and strategies [1]. Analyzing students’ emotional reactions to different teaching approaches may help identify the most engaging and successful teaching strategies. Teachers can then refine their teaching strategies, implement practical ideas, and adapt the curriculum to better meet the academic and emotional needs of their students.

This study focuses on how students’ emotional data can be used to enhance learning through technology. First, we created an application that captures and displays emotions based on the learner’s facial expressions. Second, we used this software to record the students’ emotions while teaching a module. Finally, we analyzed the collected data to explore the relationship between academic achievement and emotions using a data-driven approach.

The main objective of the software we created for this study is to offer a tool for evaluating students’ emotions during in-person or remote learning by leveraging advances in computer vision. It aims to provide teachers with real-time monitoring of their students’ emotions. The software uses the front-facing camera to capture students’ facial expressions during teaching and learning sessions in order to identify their emotions. Additionally, the software can help build a large data source for data science studies that treat students’ emotions as a factor in improving instruction.

In this paper, we first present the emotion recognition application we developed, focusing on its main features, architecture, and technical specifications. We then describe how we used this application in a real course at the Private University of Fez to record the students’ emotions and analyze their correlation with the students’ academic performance.

4. Software Description

Given the importance of considering emotions in teaching, as described in the previous section, analyzing students’ emotions at scale without the help of technology would be impractical. Thanks to advances in artificial intelligence, particularly computer vision, facial expressions can now be recognized with high accuracy.

Whether studying in person or virtually, students spend most of their study time in front of a computer. This suggests that we can leverage their computers as data collection tools to examine their behavior. To accomplish this, we developed an application that runs on their devices. LearnerEmotions is a web application that can run on students’ computers and access their front-facing cameras in order to analyze facial expressions and detect emotions. Teachers or institutions can use this application to better understand their students by analyzing their emotions and using emotional data to optimize teaching techniques.

The current software is primarily based on an algorithm that uses a pretrained machine learning model to identify and store students’ emotions. The facial expression recognition model achieved an average accuracy of 0.91 and a loss of 0.3 in the experimental environment. This process runs in JavaScript on the front end (the student’s machine). The key phases of the algorithm are outlined below:

Access the student’s camera.

For each frame image, the algorithm crops the face first.

For each cropped face, the model detects the corresponding emotion.

For all frames in one second, the algorithm determines the dominant emotion.

The algorithm sends the dominant emotion and its timestamp to the back end.

The back-end algorithm receives the data and stores it in an object-oriented database linked to the specific student.
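Face cropping and emotion classification depend on the pretrained model, which is not specified here, so the following JavaScript sketch covers only the per-second aggregation step. It assumes, hypothetically, that each frame prediction is an object with a millisecond `timestamp` and an `emotion` label; the function name `dominantEmotionPerSecond` is illustrative and not part of LearnerEmotions.

```javascript
// Hypothetical shape of one per-frame prediction from the front-end model:
//   { timestamp: <milliseconds since epoch>, emotion: "happy" | "sad" | ... }

/**
 * Groups frame-level predictions into one-second windows and returns the
 * dominant (most frequent) emotion of each window, i.e. the value that
 * would be sent to the back end once per second.
 */
function dominantEmotionPerSecond(frames) {
  const windows = new Map(); // second -> Map(emotion -> count)
  for (const { timestamp, emotion } of frames) {
    const second = Math.floor(timestamp / 1000);
    if (!windows.has(second)) windows.set(second, new Map());
    const counts = windows.get(second);
    counts.set(emotion, (counts.get(emotion) || 0) + 1);
  }

  const result = [];
  for (const [second, counts] of windows) {
    let best = null;
    let bestCount = 0;
    for (const [emotion, count] of counts) {
      // Strict ">" keeps the emotion seen first when counts are tied.
      if (count > bestCount) {
        best = emotion;
        bestCount = count;
      }
    }
    result.push({ timestamp: second * 1000, emotion: best });
  }
  // Map keeps insertion order; sort to guarantee chronological output.
  return result.sort((a, b) => a.timestamp - b.timestamp);
}
```

For example, three frames within the same second labeled "happy", "neutral", and "happy" collapse into a single "happy" entry for that second. Resolving ties in favor of the first emotion seen is one arbitrary but deterministic choice.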

To record or analyze students’ emotions, the teacher first creates a group and adds the students participating in the experiment. Each student then receives an invitation email, which they must accept in order to participate. Next, the teacher creates a session in the application, which normally corresponds to a real course session held either in the classroom or remotely, and assigns the group to this session. At the scheduled time, students open the application, enter the session, and grant access to their front-facing cameras. Once access is granted, the application begins detecting their emotions from facial expressions. During the session, students can view their detected emotions in real time, and teachers can visualize their students’ emotions through the dashboards provided by the application.

The emotions detected by the application are not only displayed but also saved in a database, so the teacher, and even the student, can view this data at any time after the session ends. The application offers dashboards summarizing each session’s emotions, and teachers can also download the raw data for analysis in other tools or applications.

The application secures access to data and interfaces through user accounts. All users, whether instructors or students, must create an account and define a password, and they must authenticate before accessing their data and interfaces; every protected feature requires online identity verification. The application also lets users update their profiles and change their passwords.
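Authentication establishes who the user is; the application must also decide what that user may see. Section 4.2 states that teachers can access only their own groups and that students can view only their own emotions. The sketch below expresses those two rules as a single JavaScript check. The data shapes (`teacherId`, `studentIds`, `role`) and the function name are hypothetical, and a real deployment would apply such a check on the back end before querying the database.

```javascript
// Hypothetical data shapes (the real records live in the application's database):
//   user  = { id, role: "teacher" | "student" }
//   group = { id, teacherId, studentIds: [...] }

/**
 * Returns true if `user` may read the emotion data of `studentId` within `group`:
 * a teacher only for groups they own, a student only for their own data.
 */
function canViewEmotions(user, group, studentId) {
  if (!user || !group) return false; // unauthenticated user or unknown group
  if (!group.studentIds.includes(studentId)) return false; // student not in this group
  if (user.role === "teacher") return group.teacherId === user.id;
  if (user.role === "student") return user.id === studentId;
  return false; // any other role is denied by default
}
```

Denying by default keeps the rule safe if new roles are added later: they gain access only once a rule is written for them.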

4.1. Software Architecture

LearnerEmotions is built on the MERN stack, named after its four main building blocks: MongoDB, Express, React, and Node.js. The application consists of two cooperating parts: one runs on the client machine (front end) and the other on the server (back end). The client-side application is designed to:

Display the web interfaces.

Access the client’s front-facing camera.

Process the video frames captured by the camera.

Send asynchronous HTTP requests to the back end.

The server side receives and processes the HTTP requests from clients in order to:

Send the public web content (HTML, CSS, and JavaScript files) to the front end.

Save or update data (users, groups, sessions, emotions, etc.) to the object database.

Request the data from the object database.

Return the data requested by the front end in JSON format.

Figure 1 illustrates the process of detecting and storing students’ emotions based on the architecture of the application.

4.2. Software Functionalities

LearnerEmotions was developed to provide teachers with the tools they need to manage teaching sessions while monitoring their students’ emotional states. At the same time, the software allows students to engage with the platform and participate in recording their feelings. In this section, we present the features available for both teachers and students.

4.2.1. Teacher Features

As teachers are primarily responsible for managing teaching sessions, whether in the classroom or in distance learning, we decided to assign them the task of managing emotion recordings. Therefore, the instructor role in the application is responsible for managing student groups, sessions, and overseeing the recording of emotions. It should be noted that instructors can only access their own groups and cannot access those of other instructors. The features offered by this software to teachers are listed below:

Create Groups of Students: All teaching activities, whether in-person or through distance learning, take place within a framework that organizes students into groups. This feature allows the instructor to create and manage student groups according to their objectives or the arrangement used at their institution.

Add Students to a Group Individually or from a CSV File: To control which students join a group, the teacher invites them by email. The teacher enters the student data either by filling out a form for each student individually or, when several students need to be added at once, by uploading a CSV or Excel file containing the necessary information. This operation triggers the sending of emails inviting the students to join the group.

Create Sessions and Define Their Nature: The teacher designs specific sessions during which students’ emotions are recorded. For example, a teacher may create a session for the UML module, another for the Java module, and so on. Each session must be created for a particular group, and the instructor needs to specify the learning activities, such as lectures, practical work, guided projects, etc.

Start and Stop Emotion Recording: The teacher controls when students’ emotions are recorded for each session; this feature is used while students are attending the session. The teacher can also pause and resume recording at any time during the session.

Supervise Students’ Emotions: During the session, the teacher can visualize in real time the emotional changes in each student in the session. This is made possible through the dashboards provided by the application.

Display Students’ Emotions After the Session Ends: The recognized emotions can be viewed not only during the session but also after its completion, as the emotion data is stored in a database. This allows the teacher to review student-specific dashboards for an in-depth analysis of emotions even after the session has concluded.

Download Emotion Data as a CSV File: This feature lets teachers analyze student emotion data freely, without being limited to the application’s dashboards. Instructors can download the raw data and use it in other data analysis tools to gain more personalized insights.
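The export format is not specified beyond CSV. As a minimal sketch, assuming each stored emotion sample has hypothetical `studentId`, `sessionId`, `timestamp`, and `emotion` fields, the serialization could look like the following. Quoting follows the usual CSV convention (RFC 4180), so values containing commas, quotes, or line breaks survive the trip into spreadsheet or data analysis tools.

```javascript
/** Escapes one CSV field: wrap in quotes if needed and double any inner quotes. */
function csvField(value) {
  const text = String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

/**
 * Serializes emotion records to CSV text with a header row.
 * The default column names are hypothetical, not the application's schema.
 */
function emotionsToCsv(records, columns = ["studentId", "sessionId", "timestamp", "emotion"]) {
  const lines = [columns.join(",")];
  for (const record of records) {
    // Missing fields become empty cells instead of the string "undefined".
    lines.push(columns.map((c) => csvField(record[c] ?? "")).join(","));
  }
  return lines.join("\r\n"); // RFC 4180 uses CRLF line endings
}
```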

4.2.2. Student Features

Because emotions are recorded in relation to course sessions, the application lets students access the emotion recording session that corresponds to each course session. A student cannot view the emotions of other students, only their own. The following features are available to students:

Join a Student Group: This feature allows the student to become part of a group and participate in emotion recording sessions. The student can join a group after receiving an invitation from the teacher and has the option to accept or decline the invitation.

Join Session: This feature allows the student to enter a session when an emotion recording is starting, enabling the system to begin recognizing their emotions.

Start Emotion Registration: This feature allows the student to begin recording their emotions during a specific session. This operation requires the student to grant the application access to the front-facing camera.

Display Emotions in Real Time: The student has access to a dashboard that shows their identified emotions and how they vary in real time.

Display Personal Emotion Data After the Session Ends: The student can review a visual representation of their emotions from any previously recorded session at any time.

4.2.3. Software Interface Examples

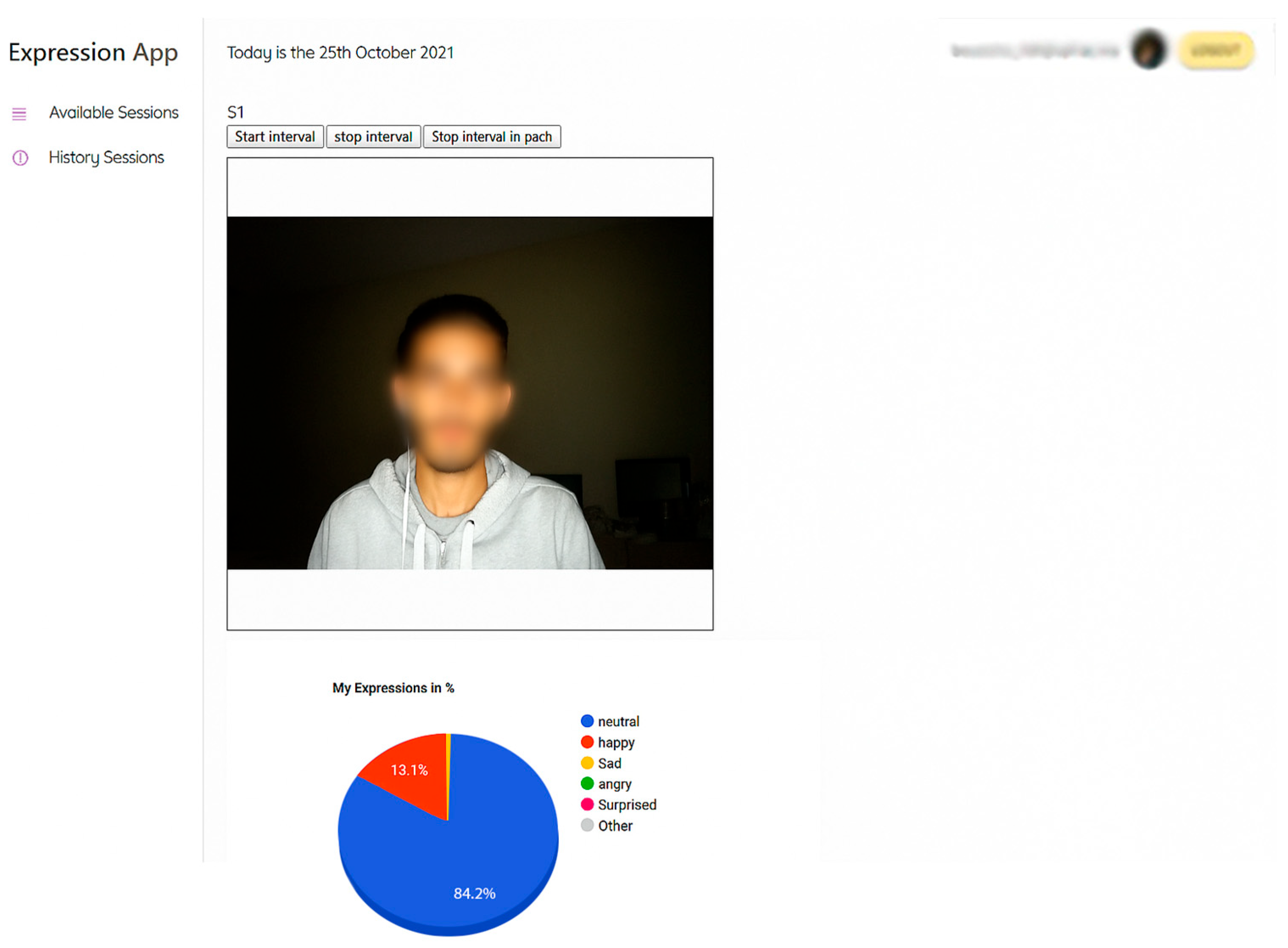

Figure 2 shows the student interface, which displays the student’s face in real time alongside a pie chart of the percentage of each emotion during the session. The detected emotions are sent to the server and stored in a database along with other parameters, such as the student, course title, and course type. This application is primarily designed for teachers who want to analyze their students’ emotions during lectures, practical work, or any other teaching activity. It allows teachers to view students’ emotions in real time, either in a simple mode or through detailed dashboards.

The software’s primary goal is to provide educators and educational institutions with tools to assess and analyze students’ emotions. By offering data extraction features, it allows for multiple use cases, enabling teachers to gain insights that meet their specific needs. Additionally, the application provides dashboards that display graphical charts, either in real time during sessions or after their completion.

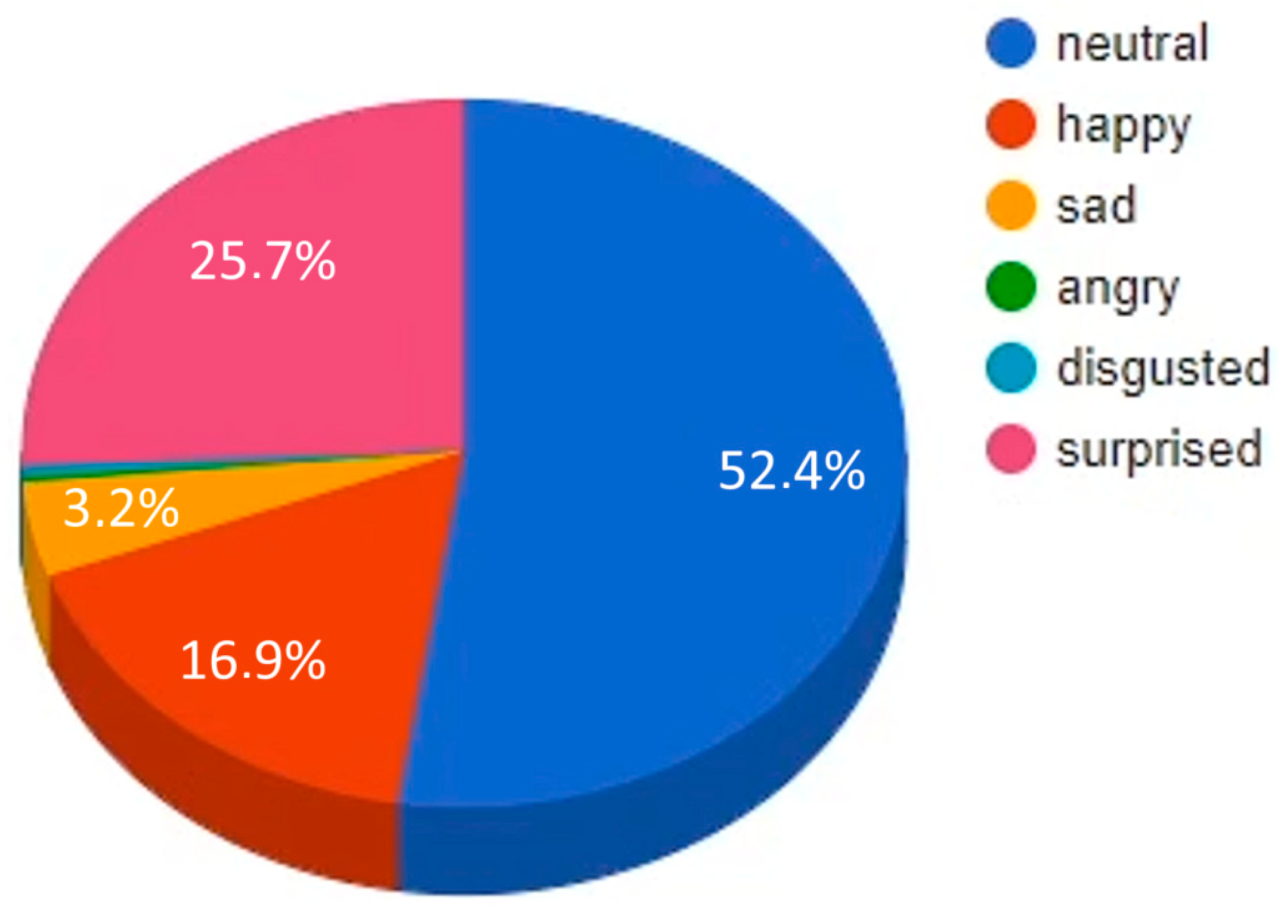

Figure 3 shows an example of a pie chart that a teacher can view for a student enrolled in a specific session, while

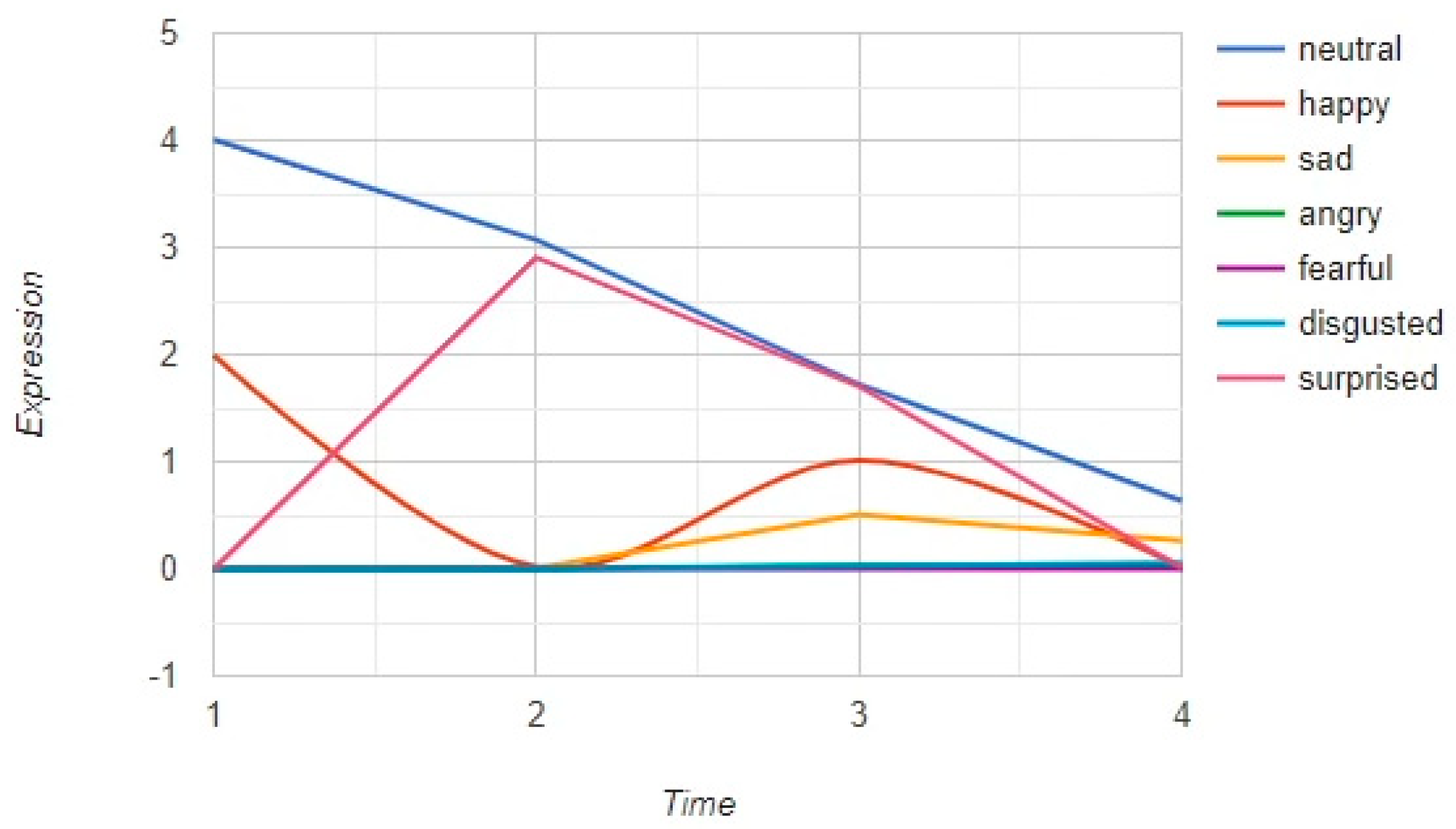

Figure 4 illustrates how a student’s emotions change throughout a course session.

For each of the previous charts, two separate algorithms were developed to implement these features according to the architecture in use: one on the back end to retrieve data from the database, and one on the front end to request data from the back end and display it in the user interface. Below, we provide an overview of the back-end algorithm’s main steps for the pie chart feature, which was developed using the Express framework:

Send a request to the database to retrieve all rows specific to the current student and session.

Count the number of occurrences of each emotion.

Calculate the percentage of each emotion.

Return the percentage of each emotion.
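The four back-end steps above can be sketched as a pure function. This is an illustration under assumptions, not the application's Express code: the record fields are hypothetical, and the filtering in step 1, which the real back end performs as a database query, is done here in memory so that the example runs on its own.

```javascript
/**
 * Pie-chart data for one student in one session: the percentage of stored
 * records labeled with each emotion. Field names are hypothetical.
 */
function emotionPercentages(records, studentId, sessionId) {
  // Step 1: keep only this student's records for this session
  // (a database query in the real back end).
  const rows = records.filter((r) => r.studentId === studentId && r.sessionId === sessionId);

  // Step 2: count the occurrences of each emotion.
  const counts = {};
  for (const { emotion } of rows) {
    counts[emotion] = (counts[emotion] || 0) + 1;
  }

  // Steps 3 and 4: convert counts to percentages and return them.
  const percentages = {};
  for (const [emotion, count] of Object.entries(counts)) {
    percentages[emotion] = (100 * count) / rows.length;
  }
  return percentages; // empty object when no records match
}
```

In the Express back end, a route handler would run the query, pass the resulting documents to a function like this, and return the object with `res.json(...)`. Alternatively, the counting could be delegated to MongoDB with an aggregation pipeline (`$match` followed by `$group`), which avoids transferring every record to the server process.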

The front end calls this back-end algorithm to display the chart in a specific area of the browser, depending on the view in which it is used. The general front-end algorithm for displaying a pie chart is as follows:

Asynchronously call the URL that provides emotion percentages, sending the student ID and session ID as parameters.

Display the received emotion percentages as a pie chart.

Repeat the process every second to update the chart in real time.
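The front-end loop can be sketched as follows. The endpoint path `/api/emotions/percentages` and its query parameter names are hypothetical, and the HTTP call is injected as `fetchJson` so the sketch stays independent of the HTTP and charting libraries actually used. In a browser, `fetchJson` could be `(u) => fetch(u).then((r) => r.json())`, and `render` would update the pie chart.

```javascript
/** Builds the URL of a (hypothetical) percentages endpoint. */
function percentagesUrl(baseUrl, studentId, sessionId) {
  const url = new URL("/api/emotions/percentages", baseUrl);
  url.searchParams.set("studentId", studentId);
  url.searchParams.set("sessionId", sessionId);
  return url.toString();
}

/**
 * Requests the percentages about once per interval and passes them to
 * `render`. Returns a function that stops the polling.
 */
function startChartPolling(fetchJson, render, url, intervalMs = 1000) {
  let stopped = false;
  let timer = null;

  async function tick() {
    try {
      render(await fetchJson(url));
    } catch (err) {
      console.error("Failed to refresh emotion chart:", err);
    }
    // Schedule the next request only after this one has finished,
    // so slow responses never pile up.
    if (!stopped) timer = setTimeout(tick, intervalMs);
  }

  tick();
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}
```

Scheduling each request with `setTimeout` after the previous one completes, rather than with `setInterval`, keeps the refresh rate close to one per second without letting overlapping requests accumulate when the server is slow.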

6. Results

Learning is a complex process influenced by many factors, among which emotions play a central role. Emotional states can directly affect students’ concentration, motivation, and, consequently, academic performance. Our application uses artificial intelligence to analyze emotions in real time from facial expressions. In this context, we use the data collected in this study to examine the relationship between emotional states and academic performance.

We therefore combined the students’ emotion percentages with two other parameters: the module grade and attendance.

Table 2 presents a sample of the dataset for 5 students. Grades are out of 20, and attendance is out of 28, the total number of sessions held (1 h 30 min per session). The emotion values represent the percentage of recordings in which each emotion was detected for the student across all sessions. For example, student 1 showed the emotion “angry” for 11.38% of the time they spent in this module.

To determine the relationship between performance and each emotion, we calculated the correlation coefficient between each emotion and the grade, and the same metric between each emotion and attendance.

Table 3 presents the correlation values of each emotion with grade and with attendance.
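The text does not state which correlation coefficient was used. Assuming Pearson's r, the usual default (for example, in pandas' `DataFrame.corr`), the computation behind Table 3 can be sketched as below. The column names (`joy`, `anger`, `grade`, and so on) are placeholders, and any data passed to these functions in examples is illustrative, not the study's dataset.

```javascript
/** Pearson correlation coefficient between two equal-length numeric arrays. */
function pearson(x, y) {
  if (x.length !== y.length || x.length < 2) {
    throw new Error("pearson: need two arrays of the same length (>= 2)");
  }
  const n = x.length;
  const meanX = x.reduce((a, b) => a + b, 0) / n;
  const meanY = y.reduce((a, b) => a + b, 0) / n;
  let cov = 0;
  let varX = 0;
  let varY = 0;
  for (let i = 0; i < n; i++) {
    const dx = x[i] - meanX;
    const dy = y[i] - meanY;
    cov += dx * dy;
    varX += dx * dx;
    varY += dy * dy;
  }
  return cov / Math.sqrt(varX * varY); // NaN if either column is constant
}

/** Correlates each emotion column with a target column such as grade or attendance. */
function correlateEmotions(rows, emotions, target) {
  const y = rows.map((r) => r[target]);
  return Object.fromEntries(emotions.map((e) => [e, pearson(rows.map((r) => r[e]), y)]));
}
```

If the emotion percentages are strongly skewed, Spearman's rank correlation (Pearson's r computed on ranks) is a common, more robust alternative.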

The results indicate that certain emotions are notably correlated with the academic performance of the students who participated in this experiment. In particular, joy and surprise, with correlations of 0.592 and 0.430, respectively, are associated with good grades. This suggests that students who experience positive emotions during the sessions, such as the joy of learning or curiosity about new content (expressed as surprise), tend to achieve better grades. Conversely, negative emotions such as anger (−0.410), disgust (−0.439), sadness (−0.273), and fear (−0.282) are negatively correlated with grades, although the strength of the correlation varies from one emotion to another. These observations suggest that negative emotions may impair concentration and motivation, leading to lower academic performance; in particular, anger and disgust are associated with lower grades.

Regarding class attendance, the correlations are considerably weaker, but they follow the same trend: students who experience positive emotions or remain neutral are slightly more likely to attend classes regularly. Conversely, negative emotions such as anger (−0.260), disgust (−0.176), and fear (−0.114) are weakly correlated with reduced attendance, suggesting that these emotions may contribute to gradual disengagement.

Although the correlation varies from one emotion to another, we observed a consistent pattern within the emotions deemed positive (joy, surprise) and within those perceived as negative (anger, sadness, fear, disgust). Therefore, to obtain a more accurate interpretation and a more robust analysis, it is preferable to group emotions into two broad categories: positive emotions and negative emotions. This makes it possible to assess the overall impact of positive and negative emotions on performance and class attendance while reducing the statistical fluctuations caused by the distinct nuances of each emotion.

Table 4 shows this transformation of the dataset for the first 5 students as an example. The positive value is the sum of the values of the emotions considered positive (joy, surprise), and the negative value is the sum of the values of those considered negative (anger, sadness, fear, disgust).
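The regrouping can be expressed as a small transformation of each student row. The sketch assumes, hypothetically, that each row stores emotion percentages under keys named after the emotions in the text; neutral belongs to neither group, and a missing emotion counts as zero.

```javascript
// Emotion groups as defined in the text; "neutral" belongs to neither.
const POSITIVE_EMOTIONS = ["joy", "surprise"];
const NEGATIVE_EMOTIONS = ["anger", "sadness", "fear", "disgust"];

/** Returns a copy of `row` with `positive` and `negative` sums added. */
function addEmotionGroups(row) {
  // Missing emotions count as 0 so incomplete rows do not produce NaN.
  const sum = (names) => names.reduce((total, name) => total + (row[name] || 0), 0);
  return { ...row, positive: sum(POSITIVE_EMOTIONS), negative: sum(NEGATIVE_EMOTIONS) };
}
```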

To establish the link between positive and negative emotions on the one hand, and academic success and class attendance on the other, we computed the correlations of the new emotion values with grades and with attendance.

Table 5 below presents the result of the calculation of the correlation coefficients.

The results in Table 5 reveal a notable link between students’ emotions, their academic performance, and their attendance. Specifically, a strong positive correlation (0.699) was observed between positive emotions and academic performance, which suggests that students who express more joy and surprise tend to perform better. Conversely, a strong negative correlation (−0.682) was found between negative emotions and grades, meaning that emotions such as sadness, anger, or fear are linked to lower performance.

Attendance is also positively correlated with positive emotions, but the correlation is very weak (0.179). This implies that students who experience positive feelings are slightly more inclined to attend class than students with negative emotions, although this association is much weaker than the one with academic performance. Conversely, there is a moderate negative correlation between negative emotions and class attendance (−0.312), indicating that students experiencing negative emotions such as anger, sadness, or fear tend to attend class less regularly. This outcome may be associated with gradual disaffection or discomfort with the educational setting.

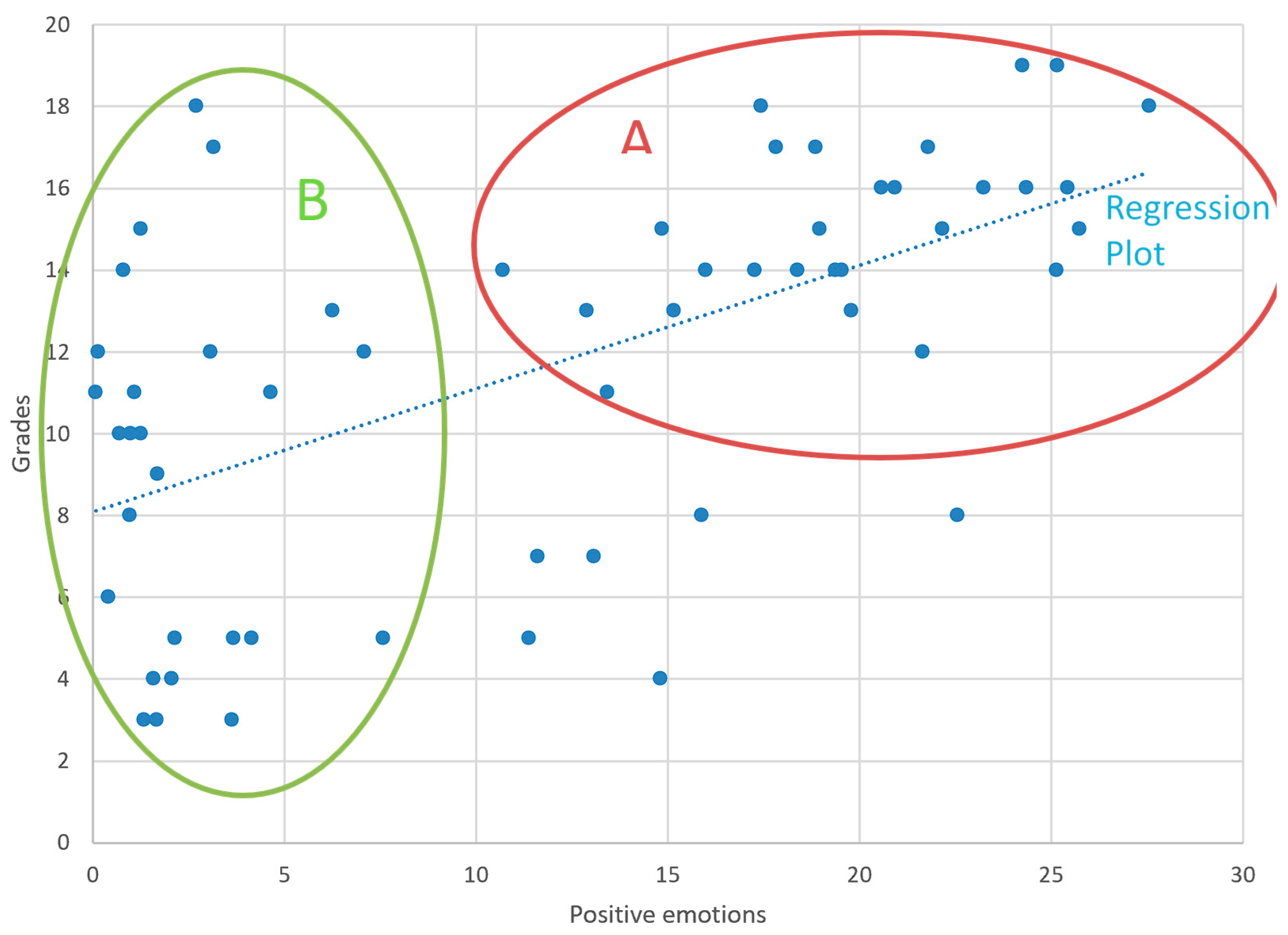

To visualize the relationship between positive emotions and students’ academic performance, we plotted each student as a point in a plane with positive emotions on the first axis and grades on the second axis.

Figure 5 shows the result. Almost all students with more positive emotions have grades ranging from good to excellent (Zone A), whereas students with fewer positive emotions have varied grades, and a substantial number have grades below average (Zone B).

To further investigate the link between emotions and academic performance, we divided students into four categories based on their grades: students in serious difficulty (grades < 5), vulnerable students (5 ≤ grades < 10), satisfactory-performing students (10 ≤ grades < 15), and brilliant students (grades ≥ 15). This categorization allows a more precise observation of how emotions progress across levels of success and helps identify the most fragile groups. It thus helps us understand how emotions relate to the transition from failure to excellence and to develop tailored pedagogical approaches for each type of profile.

For each student, we computed the difference between positive and negative emotions in order to examine each group separately. The larger this difference, the more the student’s positive emotions outweighed the negative ones; a negative difference indicates a predominance of negative emotions. Table 6 illustrates this computation for the first five students.
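The grade categories and the positive-minus-negative difference can be sketched as follows. Category boundaries follow the intervals defined above (grades out of 20); the short labels, field names (`grade`, `positive`, `negative`), and function names are hypothetical.

```javascript
/** Maps a grade out of 20 to one of the four categories used in the study. */
function gradeCategory(grade) {
  if (grade < 5) return "serious difficulty";
  if (grade < 10) return "vulnerable";
  if (grade < 15) return "satisfactory";
  return "brilliant";
}

/** Returns a copy of a student row with its category and emotion difference. */
function annotateStudent(row) {
  return {
    ...row,
    category: gradeCategory(row.grade),
    // > 0: positive emotions dominate; < 0: negative emotions dominate.
    difference: row.positive - row.negative,
  };
}
```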

We examined the distribution of students according to the sign of this difference within each grade category, and we computed the mean and standard deviation of the difference for each category. Table 7 presents the results.
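The per-category statistics reported in Table 7 (share of students by sign of the difference, mean, and standard deviation) could be computed as below. The paper does not say whether the sample or the population standard deviation was used; this sketch assumes the sample version (dividing by n − 1). Input rows are assumed to carry hypothetical `category` and `difference` fields.

```javascript
/**
 * For each grade category: number of students, percentage with a positive
 * and with a negative difference, and mean and standard deviation of the
 * difference (positive minus negative emotions).
 */
function statsByCategory(students) {
  // Collect the differences of each category.
  const groups = new Map();
  for (const s of students) {
    if (!groups.has(s.category)) groups.set(s.category, []);
    groups.get(s.category).push(s.difference);
  }

  const result = {};
  for (const [category, diffs] of groups) {
    const n = diffs.length;
    const mean = diffs.reduce((a, b) => a + b, 0) / n;
    // Sample standard deviation (divides by n - 1): an assumption, see text.
    const sd = n > 1
      ? Math.sqrt(diffs.reduce((acc, d) => acc + (d - mean) ** 2, 0) / (n - 1))
      : 0;
    result[category] = {
      n,
      positiveShare: (100 * diffs.filter((d) => d > 0).length) / n,
      negativeShare: (100 * diffs.filter((d) => d < 0).length) / n,
      mean,
      sd,
    };
  }
  return result;
}
```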

Analysis of the gap between positive and negative emotions reveals a distinct progression across grade groups, ranging from students with significant academic difficulties to those who excel.

A large majority (81.82%) of students with grades below 5 showed a negative difference, meaning that they experienced more negative than positive emotions. The mean difference is strongly negative (−18.13), indicating a clear predominance of unfavorable feelings, and the standard deviation of 10.29 signals a moderate dispersion of the emotions experienced. These findings indicate that students with major academic difficulties mainly experience negative emotions, which are likely to impair their motivation and engagement in the learning process.

Among students with grades between 5 and 10, negative emotions still predominate, but with a slight improvement: 70% of them show a negative difference, although the mean difference has narrowed (−6.21). The standard deviation is higher (11.81), suggesting greater variability in emotional experience. This indicates that some students are beginning to manage their emotions, while most still struggle to experience positive feelings in relation to their learning.

Students with grades between 10 and 15 represent a tipping point where positive emotions gradually begin to dominate: 60% of students in this group show a positive difference, signifying an improvement in their emotional well-being. The mean difference is slightly above zero (1.96), with a considerable standard deviation (14.01) reflecting a large disparity within this group. This transition indicates that students with average to good performance are developing a more balanced mindset, which could strengthen their engagement and motivation.

Finally, students with grades of 15 or above clearly stand out for the predominance of positive emotions: 85.71% of them show a positive difference, meaning that they generally experience more positive than negative emotions. The mean difference is 10.81, indicating significant emotional well-being, while the standard deviation is the lowest (9.54), suggesting greater uniformity within this group. These observations suggest that students who excel may have a more confident mindset, better stress management, and stronger motivation to succeed.

7. Discussion

7.1. Academic Performance

In this study, we investigated the influence of emotions on students’ academic achievement using our application. Overall, struggling students tend to express more negative emotions, while those who perform better mainly express positive emotions. These results support the view that the learner’s emotional state plays a key role in the learning process, affecting not only motivation but also attention and engagement in class.

Additionally, the difficulties students encounter in their studies can lead to frustration, anxiety, or discouragement. These emotions may cause gradual disengagement over time: students’ attention may decrease during class sessions, or they may develop an unfavorable perception of their own chances of success. This can create a harmful cycle in which diminished self-esteem accentuates negative emotions, further hindering learning.

On the other hand, students who perform better tend to display more positive emotions, such as joy or curiosity. These feelings foster a mindset conducive to learning by stimulating the desire to discover, understand, and persevere in the face of obstacles.

These findings suggest that an emotionally positive environment is important for learning success, as it helps learners manage their stress and stay positive in the face of learning challenges. Emotions also appear to be related to class attendance: students who experience primarily positive emotions tend to attend class regularly, while those dominated by negative emotions generally have lower attendance. This may be because an unfavorable emotional state makes learning more difficult, prompting some students to avoid situations that cause them stress or discomfort.

Our findings resonate with Pekrun’s Control-Value Theory of Achievement Emotions [40], which posits that positive activating emotions (e.g., joy, curiosity) enhance motivation and performance, whereas negative emotions undermine engagement. This theoretical lens supports the interpretation of our correlations and provides a psychological basis for understanding the role of emotions in learning.

These observations highlight the need for an educational environment that considers students’ emotional state. Implementing tactics to create a more pleasant classroom atmosphere, such as interactive teaching approaches, available psychological support, or stress management methods, could not only enhance students’ well-being but also optimize their academic performance.

Finally, we acknowledge that the present study used correlation analysis as an exploratory step. While this provides initial insights, more sophisticated approaches, such as regression models, classification algorithms, or machine learning validation, could yield deeper predictive insights. We intend to explore these methods in future work.

7.2. Impact of the Software

The solution proposed in this project opens new perspectives for a better understanding of students. By evaluating students’ emotions according to a set of criteria, the application enables transversal research based on the collected data. This approach helps answer a variety of questions about the assessment of teaching and learning processes, an evaluation that was not feasible at scale in traditional classroom settings. Universities and educational institutions can gain deeper insights into their students by using the application for emotional analysis. As explained in the first section, research has already shown that understanding learner emotions can help increase learner engagement [33,34], personalize learning [35,36], support struggling students [37,38], promote students’ self-awareness and self-regulation [39], and provide valuable insights for creating more effective instructional materials and strategies [1]. The following are cases in which the application can be particularly useful:

In face-to-face instruction, teachers are typically attentive to their students’ emotional states and adjust their teaching methods accordingly. However, this is not always possible, particularly in large classes, and this application can support teachers in such situations. Moreover, as machines increasingly take over instructional tasks, this application may help teachers better understand students’ emotions.

Classifying Students According to Their Emotional State and Correlating with Other Parameters: The software stores all emotional information in an object database, and because this data can be exported and reused, it enables further studies. The recorded data can be imported and analyzed in various contexts. For example, unsupervised learning can be used to identify groups of students with similar emotional patterns, which can then be correlated with other variables, such as grades in hard or soft skill modules.

Analyzing Changes in Students’ Emotions According to Content: For the same student, it is possible to analyze whether their emotions differ across various modules. This can provide an emotional diagnosis by module, helping to address issues with the student. For example, if a student consistently feels sad or angry in certain modules but happy in others, educators can discuss the underlying reasons with the student to promote greater engagement in the less positive modules.

Analyzing the Variation in Students’ Emotions by Educational Content (Course, Practical Work, Guided Project, etc.): Pedagogical techniques are fundamental to effective teaching and learning. Analyzing students’ emotions can help determine the effectiveness of these strategies. This application serves as a valuable tool for teachers to evaluate their teaching approaches by collecting emotional feedback from their students.

Detecting Extreme Cases: An algorithm could automatically identify students whose emotions differ significantly from those of their peers or who consistently display negative emotions across all modules. This would help pinpoint such individuals so that the underlying causes of their low emotional engagement can be addressed.

Reporting Proactive Alerts: Because emotion data is recorded in raw form, users have the flexibility to apply various analysis techniques to it, for example to raise alerts when a student’s emotional state deteriorates.

Bringing Distance Teaching Closer to Classroom Teaching: Many limitations were observed in distance learning by both educators and students during the COVID-19 period. This software can help address some of these gaps, allowing teachers and students to feel more comfortable and engaged in distance learning sessions.
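The "Detecting Extreme Cases" scenario above could be implemented in many ways. One simple option, sketched below as an assumption rather than a feature of LearnerEmotions, flags students whose share of negative emotions lies more than a chosen number of standard deviations above the group mean (a z-score rule). The threshold of 2 and the `id` and `negative` field names are hypothetical.

```javascript
/**
 * Returns the ids of students whose negative-emotion share has a z-score
 * above `threshold` relative to the whole group (population standard deviation).
 */
function flagExtremeStudents(students, threshold = 2) {
  const values = students.map((s) => s.negative);
  const n = values.length;
  if (n === 0) return [];
  const mean = values.reduce((a, b) => a + b, 0) / n;
  const sd = Math.sqrt(values.reduce((acc, v) => acc + (v - mean) ** 2, 0) / n);
  if (sd === 0) return []; // all students identical: nobody stands out
  return students
    .filter((s) => (s.negative - mean) / sd > threshold)
    .map((s) => s.id);
}
```

Because this uses the population standard deviation, no z-score in a group of n students can exceed √(n − 1), so with five or fewer students nobody can pass a threshold of 2. Small groups therefore need a lower threshold or a different rule, such as comparing each student with their own history across modules.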

Emotion recognition is a topic of significant interest in the field of computer vision. Considerable research has been conducted on this subject, particularly on developing effective machine learning models and applying them to practical use cases, such as the application described in this paper. To illustrate how our software differs from other published works, Table 8 compares existing emotion recognition applications across several features, which are as follows:

Language: The technologies and programming languages used to develop the software.

Display on Video: Indicates whether the application shows the identified emotions in real time on the video.

Teaching and Learning Workflow: Indicates whether the application supports aspects of the teaching and learning workflow, such as organizing classes and student groups, and recording emotions according to the type of session (lecture, lab, guided project, etc.).

Users Involved in Teaching and Learning: Indicates whether the application provides user accounts specific to educators, learners, and other participants in the teaching and learning process.

Dashboards: Indicates whether the software provides features that allow educators or other stakeholders to view charts visually depicting emotions in dashboards.

Data Storage: Indicates whether the application provides features to store emotional data in files, databases, or other types of storage.

Use Outside the Teaching and Learning Context: Indicates whether the application can be used to detect emotions in other contexts beyond teaching and learning.

7.3. Limitations

Although the proposed approach shows promising results, several limitations remain and should be addressed in future work:

One limitation of this study lies in the restricted dataset, as the experiment was conducted with 65 computer engineering students from a single Moroccan university. Consequently, the generalizability of the findings is limited, and further studies involving larger and more diverse populations across multiple universities and disciplines are necessary.

Another limitation concerns the fact that our experiment was carried out on a single course module. Emotional reactions and their influence on performance may vary across disciplines and types of learning activities. Future studies should therefore extend the experimentation to different subjects and pedagogical contexts.

This study did not stratify results by demographic variables such as gender or age, nor by prior academic performance. These factors may influence the relationship between emotions and grades, and they will be considered in future studies to refine our understanding.

Although multimodal information (e.g., voice, body language, physiological signals) is important for robust emotion detection, this study focused exclusively on facial expressions. Future work will extend LearnerEmotions to integrate multimodal signals.

On the software side, this study did not analyze scalability, cost, or hardware dependency. LearnerEmotions is lightweight and web-based, requiring only a webcam-enabled computer, which facilitates deployment. However, a formal analysis of scalability and costs will be included in future work.

Although the model used in LearnerEmotions demonstrated an accuracy of 0.91 in real deployment tests, this version of the software did not include a comprehensive evaluation of performance metrics such as latency and error rates. Future work will incorporate a real-time monitoring system to assess and ensure the reliability and efficiency of emotion detection during live sessions.
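As an illustration of the kind of monitoring envisaged here, the following minimal Python sketch times each call to an emotion-prediction function and, where a reference label is available (for instance from manually annotated frames, which is an assumption), counts mismatches. The `DetectionMonitor` class and the `predict` callable are hypothetical and do not describe the current LearnerEmotions code.

```python
import time
from statistics import quantiles


class DetectionMonitor:
    """Hypothetical helper that records per-frame inference latency and,
    when a reference label is supplied, whether the prediction matched it."""

    def __init__(self):
        self.latencies_ms = []  # one entry per timed prediction
        self.checked = 0        # predictions compared with a reference label
        self.errors = 0         # predictions that disagreed with the reference

    def timed_predict(self, predict, frame, reference=None):
        """Call predict(frame), record its latency, and optionally score it."""
        start = time.perf_counter()
        label = predict(frame)
        self.latencies_ms.append((time.perf_counter() - start) * 1000.0)
        if reference is not None:
            self.checked += 1
            if label != reference:
                self.errors += 1
        return label

    def summary(self):
        """Median and 95th-percentile latency (ms) plus the observed error rate."""
        if len(self.latencies_ms) < 2:
            raise ValueError("need at least two latency samples")
        # "inclusive" keeps every cut point within the observed latency range.
        cuts = quantiles(self.latencies_ms, n=100, method="inclusive")
        return {
            "p50_ms": cuts[49],
            "p95_ms": cuts[94],
            "error_rate": self.errors / self.checked if self.checked else None,
        }


# Toy usage with a stand-in predictor that labels even frames "happy".
monitor = DetectionMonitor()
for frame in range(10):
    monitor.timed_predict(lambda f: "happy" if f % 2 == 0 else "sad", frame, reference="happy")
stats = monitor.summary()
print(stats["error_rate"])  # 0.5
```

Percentiles are reported rather than a mean because occasional slow frames matter in a live session; an error rate measured this way is only as reliable as the reference labels it is compared against.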

Cultural differences in interpreting facial expressions may also influence emotion recognition. Before the application is extended to students from other cultures, cross-cultural validation is needed to ensure the robustness and general applicability of the system.

8. Conclusions

This research highlights the importance of considering learner emotions as a factor that directly impacts academic success and student engagement in their training. The results of this experimental study indicate that struggling students experience more negative emotions, which can harm their motivation and concentration, whereas more successful students show a higher prevalence of positive emotions, which contributes to more effective learning. The effect of emotions also appears in attendance and engagement: students who developed positive emotions tended to attend scheduled classes more regularly.

Applying artificial intelligence to emotion analysis in education opens several avenues for improvement, notably personalized learning and better pedagogy. The application we developed supports real-time recognition of students’ emotional states, giving teachers and institutions a valuable tool on which they can rely to adapt their teaching approaches to the emotional needs of learners.

These results highlight the need to consider learners’ emotional well-being within educational systems, whether in face-to-face or distance learning courses. Building on this work, pedagogical approaches and support mechanisms should be developed that promote a positive learning environment, where students feel both stimulated and supported. In future work, we will use our application to examine which strategies most effectively help learners regulate their emotions, thereby contributing to their academic success and personal development.

Future work will focus on extending this research in several directions. First, we aim to increase the sample size and include students from different universities and disciplines to enhance generalizability. Second, we plan to integrate multimodal data sources (e.g., voice, body language, physiological signals) into the system for more robust emotion recognition. Additionally, technical improvements will include real-time monitoring of performance metrics such as accuracy and latency, as well as a detailed analysis of scalability and deployment costs. Finally, cross-cultural validation will be conducted to ensure applicability in diverse educational contexts.