I Can’t Get No Satisfaction? From Reviews to Actionable Insights: Text Data Analytics for Utilizing Online Feedback

Abstract

1. Introduction

2. Related Background

2.1. Visitor Reviews for Museum Management

2.2. Prior Research on Visitor Reviews

2.3. Research Gap and Scope

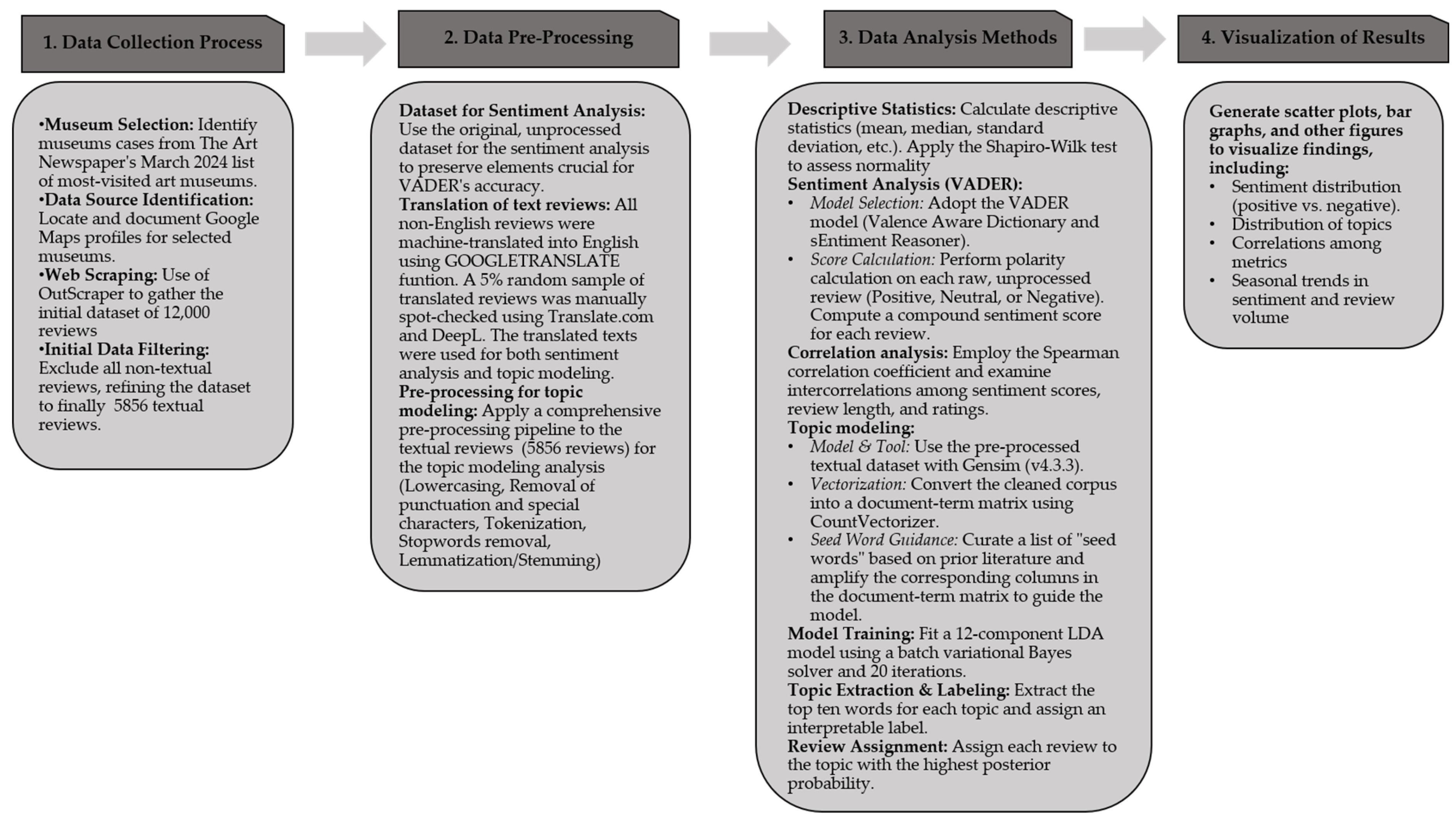

3. Materials and Methods

3.1. Data Collection

3.2. Data Pre-Processing

- Lowercasing: All textual data was transformed to lowercase to ensure uniformity and eliminate discrepancies arising from case sensitivity [13].

- Removal of punctuation and special characters: Punctuation marks and extraneous symbols (e.g., !, ?) were removed, as they do not contribute substantively to the analysis and may introduce unnecessary variance [14].

- Tokenization: The text was segmented into individual words (tokens) to facilitate subsequent processing and analysis [15].

- Stopword removal: Common stop words, such as “the,” “is,” and “and,” were removed because they offer minimal informational value and could introduce noise into the analysis [16].

- Lemmatization/Stemming: Words were reduced to their root forms to treat variations of the same lexeme as a single entity. For instance, “painter” and “painting” were standardized to their base form, “paint.” This mitigated redundancy and enhanced consistency in text representation [17].
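The pre-processing steps listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual pipeline: the `STOP_WORDS` set is a tiny hypothetical list, and `naive_lemma` is a toy stand-in that only strips a plural "s", where a real analysis would use a full stop word list and a proper lemmatizer.

```python
import re

# Hypothetical, deliberately tiny stop word list (the paper does not publish its list).
STOP_WORDS = {"the", "is", "and", "a", "an", "are", "there",
              "too", "on", "for", "were", "so"}


def naive_lemma(token: str) -> str:
    """Toy stand-in for a lemmatizer: strips a trailing plural 's' only."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def preprocess(text: str) -> list[str]:
    """Apply the five steps in order and return the cleaned tokens."""
    text = text.lower()                                   # 1. lowercasing
    text = re.sub(r"[^\w\s]", "", text)                   # 2. drop punctuation and symbols
    tokens = text.split()                                 # 3. whitespace tokenization
    tokens = [t for t in tokens if t not in STOP_WORDS]   # 4. stop word removal
    return [naive_lemma(t) for t in tokens]               # 5. reduce to base form


review = ("There are too many people on weekends. Go for a weekday afternoon. "
          "There are so many artifacts. The Rosetta Stone and Moai stone statues "
          "were impressive.")
print(" ".join(preprocess(review)))
```

For this sample review, the sketch yields the same token sequence as the corresponding row of the processed-reviews table, which makes it a convenient sanity check before swapping in real components.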

3.3. Analysis Methods

3.3.1. Descriptive Statistics

3.3.2. Sentiment Analysis

- Sentiment model selection: The VADER (Valence Aware Dictionary and sEntiment Reasoner) model was selected, owing to its proven efficacy in processing short texts and user-generated content [18,19]. VADER is particularly advantageous for sentiment analysis within social media and review-oriented datasets, as it adeptly accounts for both lexical features and the intensity of words through a rule-based approach [5].

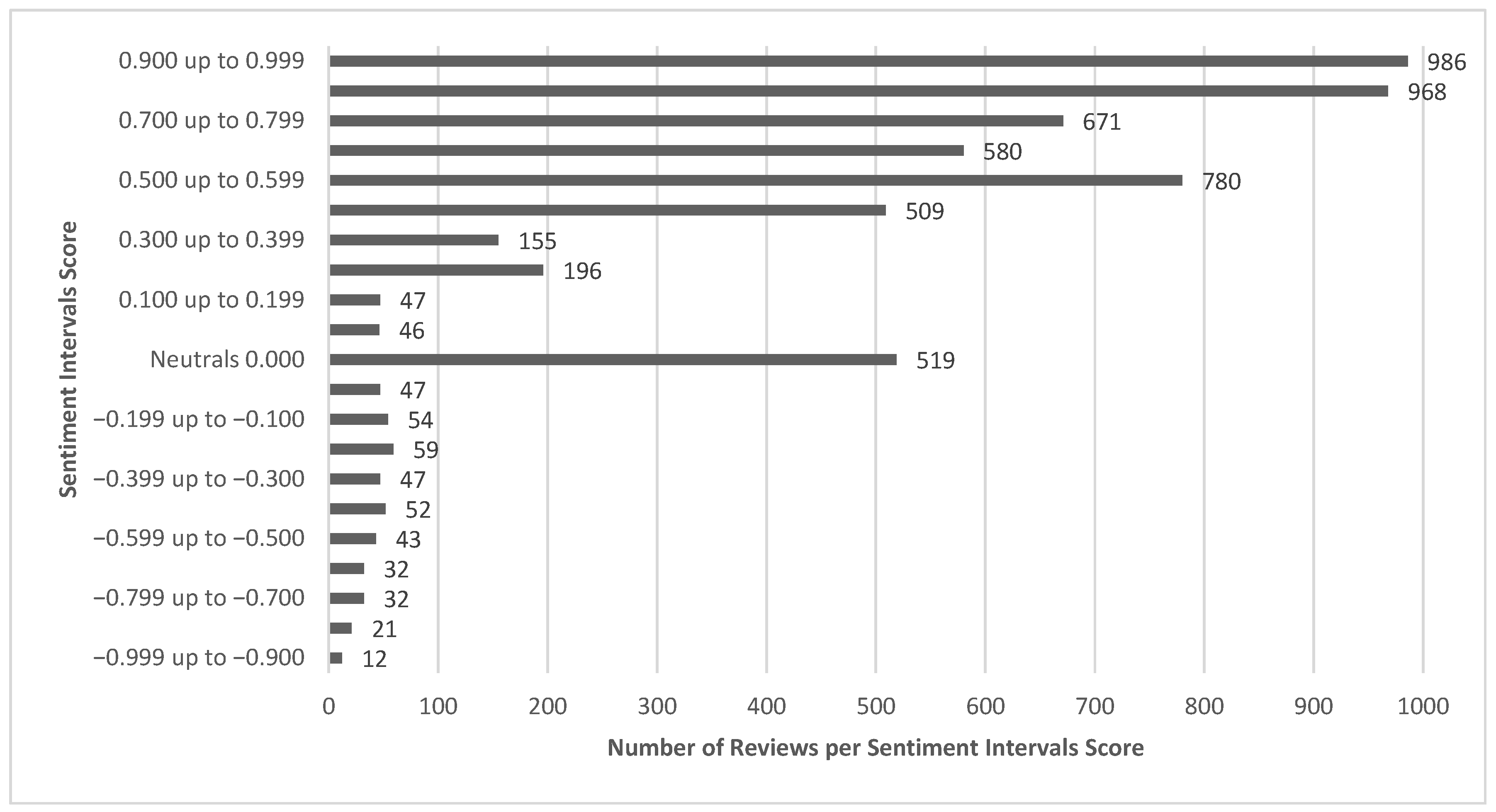

- Calculation of scores: The sentiment analysis was carried out in two phases. The first was polarity calculation: each review was scored and classified as positive, neutral, or negative according to the predefined thresholds of the VADER model. VADER was applied to the original, unfiltered review text rather than to the pre-processed corpus, because it draws on linguistic features such as stop words, punctuation, emoticons, and other syntactic cues when computing the compound sentiment score [20]. The second was aggregation: the individual sentiment scores of the reviews were combined into an overall sentiment distribution for the entire corpus. In line with Darraz et al. [21], this approach facilitated the identification of dominant sentiment trends and yielded both a micro- and a macro-level overview of audience perceptions.

- Visualization: To enhance the interpretability of the findings, various visualization techniques were employed to elucidate the sentiment distribution across the dataset. Scatter plots and bar graphs were generated to clearly represent the proportions of positive, neutral, and negative sentiments. These visualizations not only fostered a more intuitive comprehension of sentiment trends of the current corpus but also contributed to the identification of potential patterns within the data.
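The two phases described above, polarity classification and aggregation, can be sketched as follows. This is an illustration under assumptions rather than the authors' code. The cut-offs of ±0.05 are the conventional thresholds suggested in the VADER documentation, and the paper does not state which values were used. The function names `polarity_label` and `aggregate` are hypothetical. In practice, the compound scores would come from the `vaderSentiment` package, via `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]`, applied to the raw review text. Here they are supplied directly so that the sketch runs without extra dependencies.

```python
from collections import Counter
from statistics import mean

# Assumed conventional VADER cut-offs; the paper only refers to "predefined thresholds".
POS_THRESHOLD = 0.05
NEG_THRESHOLD = -0.05


def polarity_label(compound: float) -> str:
    """Phase 1: map a compound score to a polarity class."""
    if compound >= POS_THRESHOLD:
        return "positive"
    if compound <= NEG_THRESHOLD:
        return "negative"
    return "neutral"


def aggregate(compound_scores: list[float]) -> dict:
    """Phase 2: per-review labels plus the corpus-level distribution."""
    if not compound_scores:
        raise ValueError("at least one score is required")
    labels = [polarity_label(s) for s in compound_scores]
    counts = Counter(labels)
    n = len(labels)
    return {
        "labels": labels,  # micro level: one label per review
        "share": {k: counts.get(k, 0) / n
                  for k in ("positive", "neutral", "negative")},  # macro level
        "mean_compound": mean(compound_scores),
    }


# Example scores taken from the metrics table (0.4404, -0.3495, 0.000).
summary = aggregate([0.4404, -0.3495, 0.0])
print(summary["labels"])
print(summary["share"])
```

The `share` dictionary holds the class proportions that a bar chart of the sentiment distribution would display.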

3.3.3. Correlation Analysis

3.3.4. Topic Modeling

4. Results

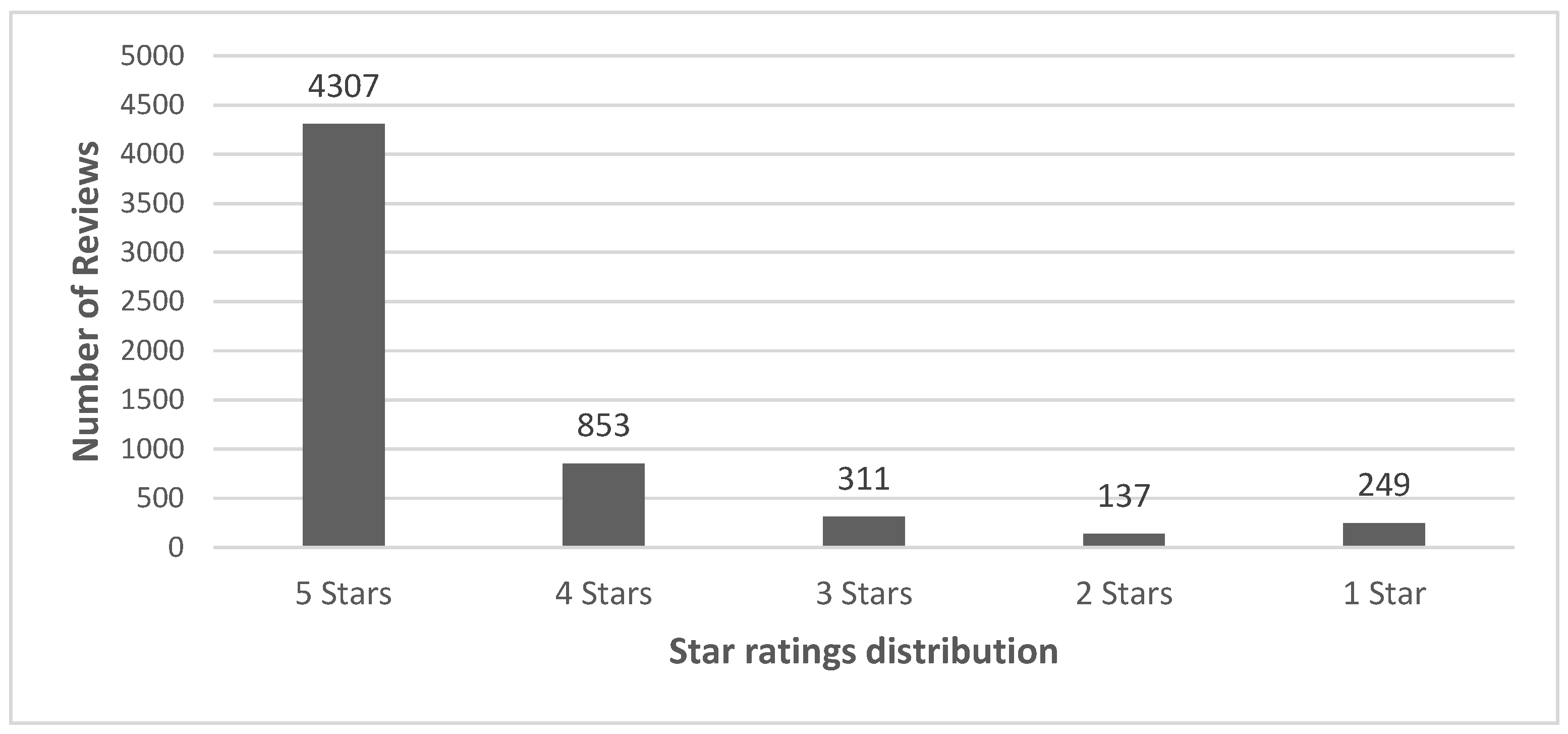

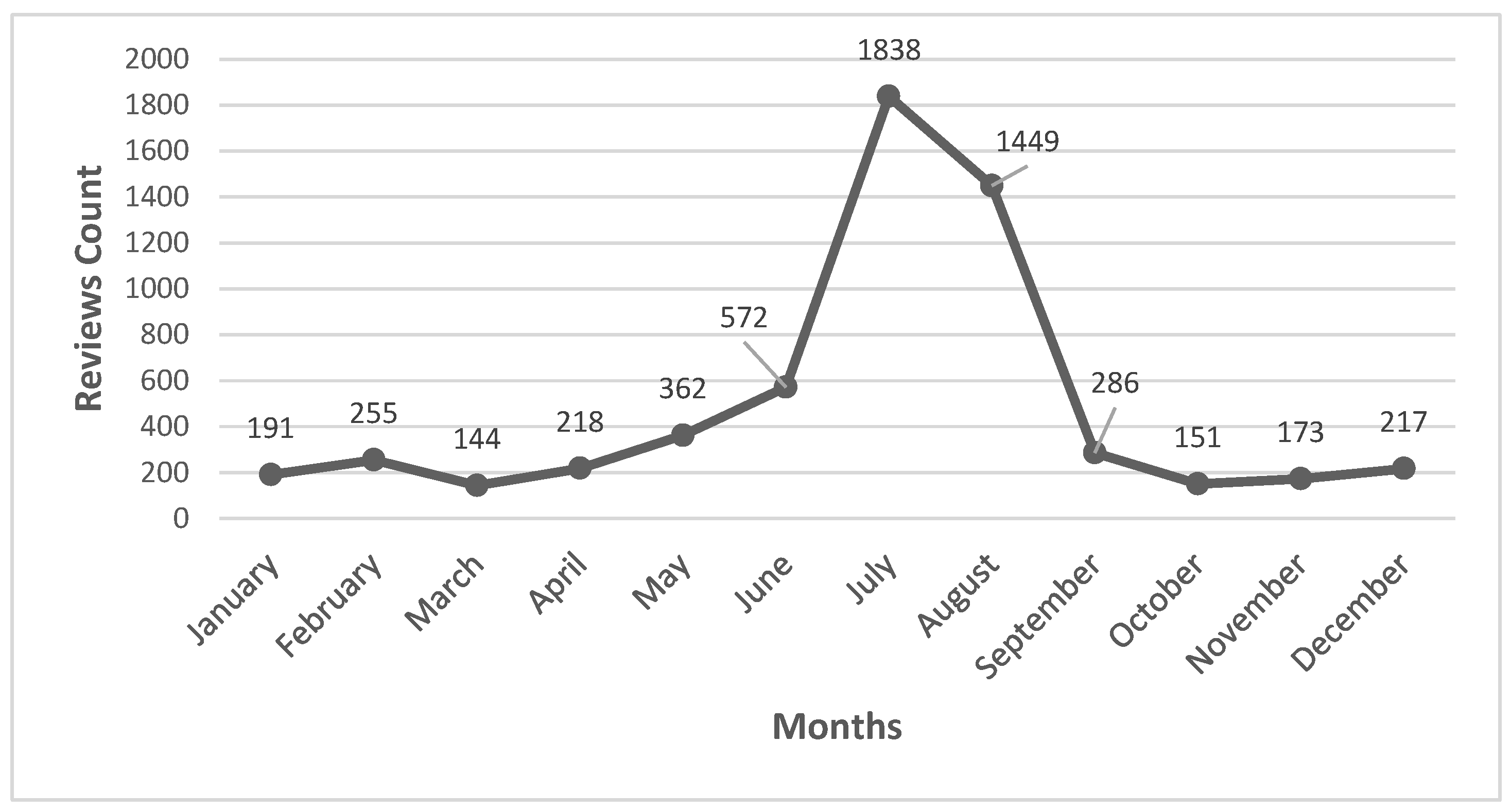

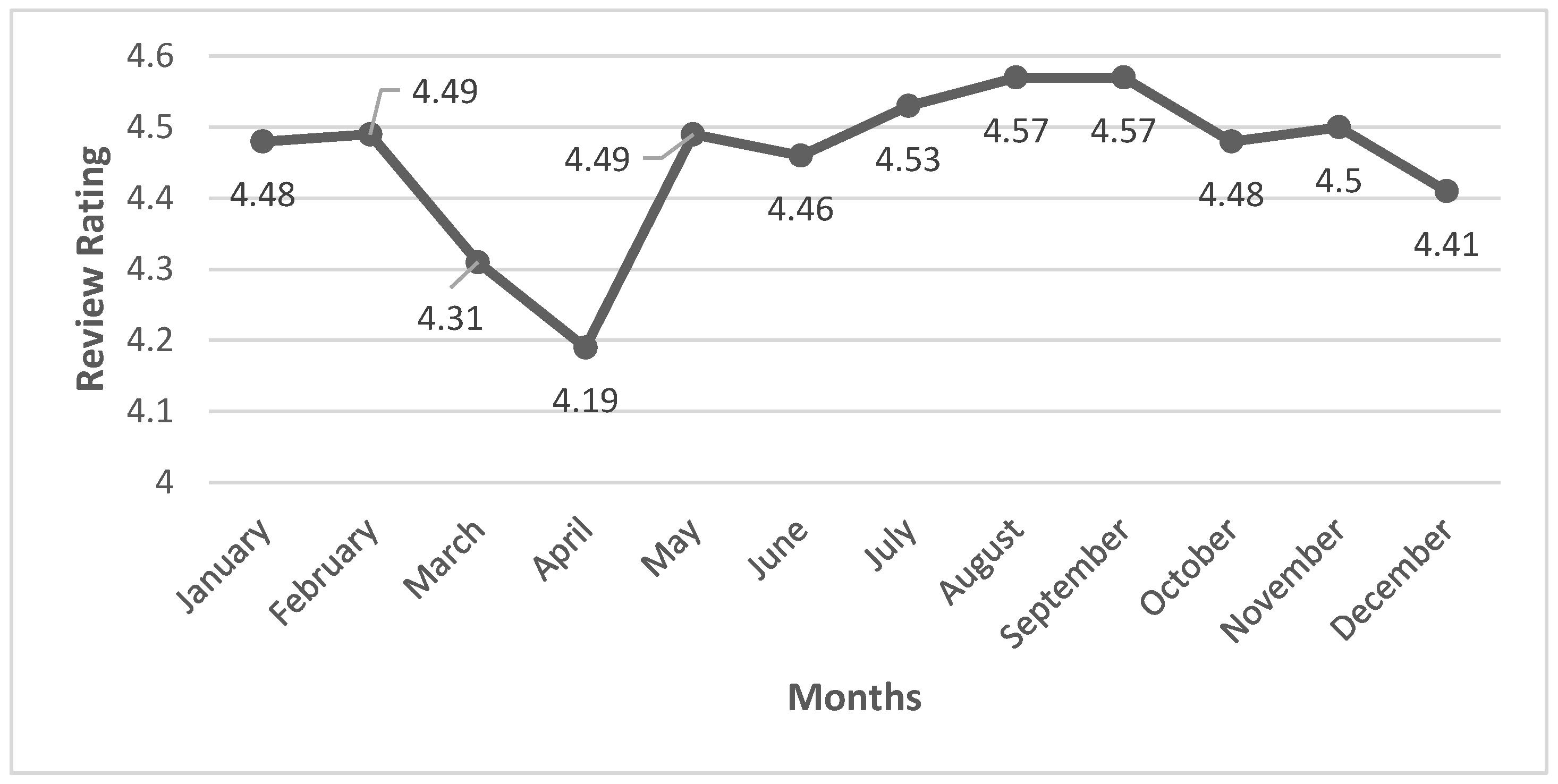

4.1. Dataset Characteristics

4.2. Descriptive Statistics Results

4.3. Sentiment Analysis Results

4.4. Correlations Results

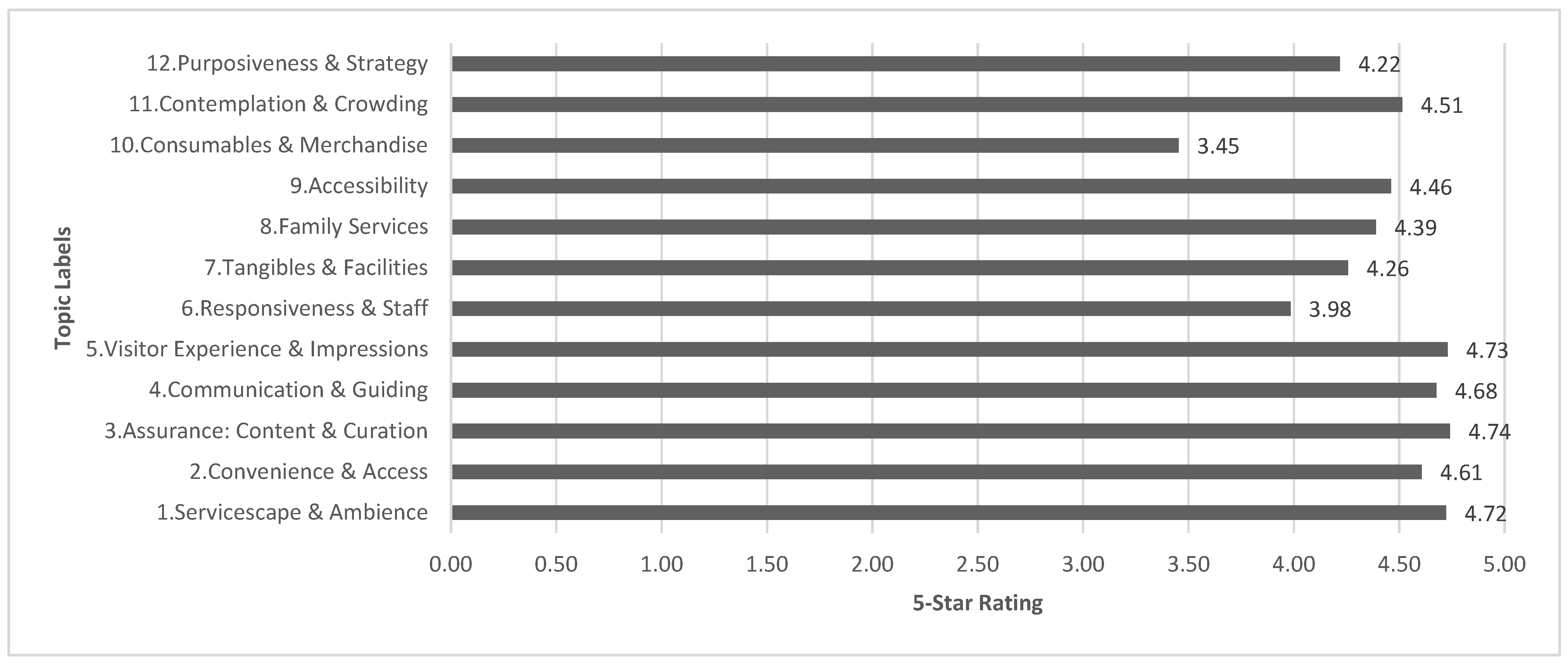

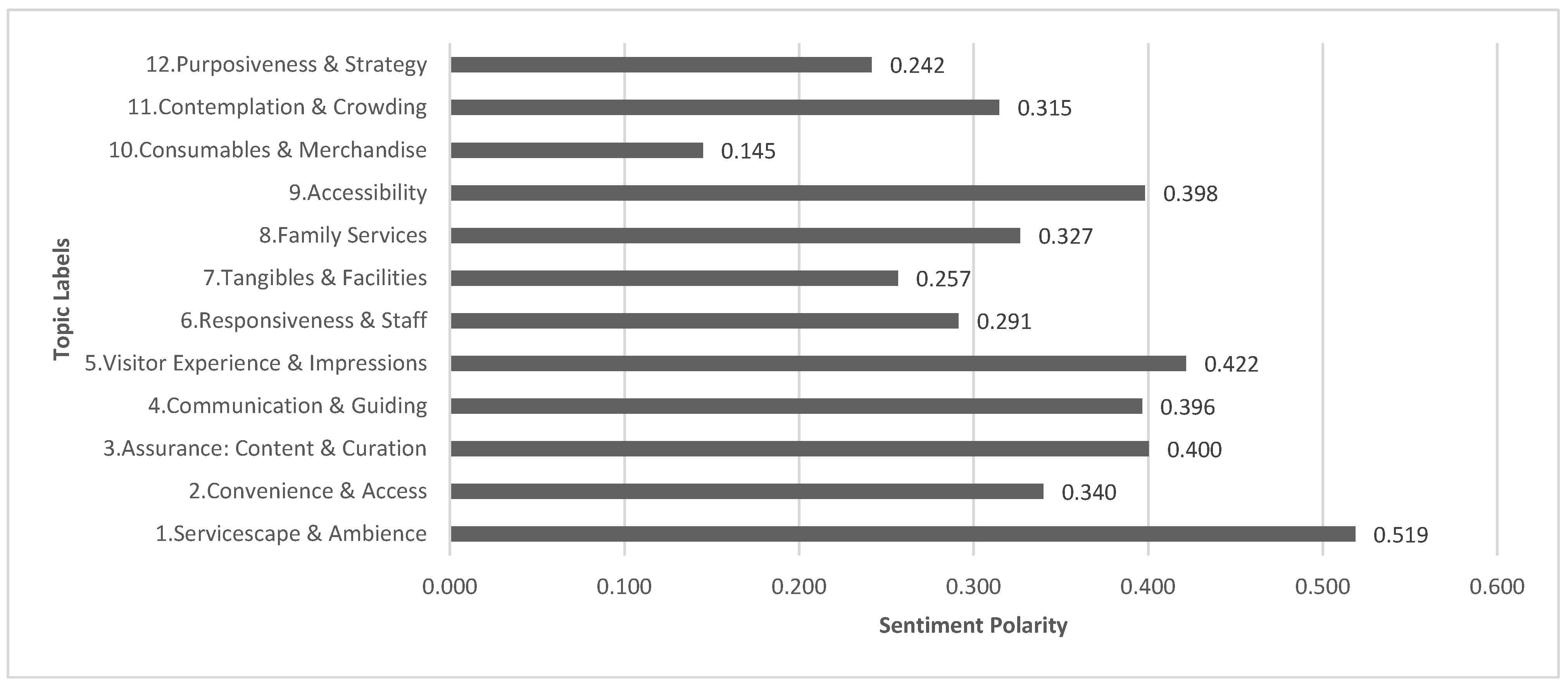

4.5. Topic Modeling Results

5. Discussion

5.1. Major Findings

5.2. Theoretical Contribution

5.3. Practical Contribution

5.4. Limitations and Future Steps

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Overview of Museum Cases

| Cases | Museum Name | Country | Address | Number of Reviews per Museum |

|---|---|---|---|---|

| 1 | Acropolis Museum | Greece | Dionysiou Areopagitou 15, 11742 Athens | 84 |

| 2 | Art Gallery of New South Wales | Australia | Art Gallery Road, The Domain Sydney NSW 2000 | 93 |

| 3 | Art Gallery of South Australia | Australia | North Terrace, Adelaide, SA 5000 | 110 |

| 4 | Crystal Bridges Museum of American Art | United States | 600 Museum Way, Bentonville, AR 72712 | 110 |

| 5 | Fondation Louis Vuitton | France | 8 Avenue du Mahatma Gandhi, 75116 Paris | 96 |

| 6 | Frederik Meijer Gardens & Sculpture Park | United States | 1000 East Beltline Avenue NE, Grand Rapids, MI 49525 | 111 |

| 7 | Guggenheim Museum Bilbao | Spain | Av. Abandoibarra, 2, 48009 Bilbao | 76 |

| 8 | Gyeongju National Museum | Republic of Korea | 186 Iljeong-ro, Inwang-dong, Gyeongju, Gyeongsangbuk-do | 93 |

| 9 | Hong Kong Museum of Art | Hong Kong | 10 Salisbury Road, Tsim Sha Tsui, Hong Kong | 105 |

| 10 | Humboldt Forum im Berliner Schloss | Germany | Schloßpl. 1, 10178 Berlin | 125 |

| 11 | Kunsthistorisches Museum Wien | Austria | Maria-Theresien-Platz, 1010 Vienna | 101 |

| 12 | Los Angeles County Museum of Art | United States | 5905 Wilshire Blvd, Los Angeles, CA 90036 | 93 |

| 13 | Louvre Museum | France | Rue de Rivoli, 75001 Paris | 87 |

| 14 | MMCA (National Museum of Modern and Contemporary Art) Seoul | Republic of Korea | 30 Samcheong-ro, Jongno-gu, Seoul 3062 | 96 |

| 15 | Moskovskiy Dom Fotografii | Russia | Ostozhenka Street, 16, Moscow, 119034 | 84 |

| 16 | Mucem—Museum of Civilizations of Europe and the Mediterranean | France | 7 promenade Robert Laffont, 13002 Marseille | 102 |

| 17 | Musée d’Orsay | France | Esplanade Valéry Giscard d’Estaing, 75007 Paris | 96 |

| 18 | Musée du quai Branly—Jacques Chirac | France | 37 Quai Branly, 75007 Paris | 99 |

| 19 | Museo Nacional Centro de Arte Reina Sofía | Spain | Calle de Santa Isabel, 52, 28012 Madrid | 78 |

| 20 | Museo Nacional del Prado | Spain | C. de Ruiz de Alarcón, 23, 28014 Madrid | 87 |

| 21 | Museum of Fine Arts, Boston | United States | 465 Huntington Avenue, Boston, MA 02115 | 93 |

| 22 | National Gallery of Art | United States | Constitution Avenue NW, Washington, D.C. 20565 | 103 |

| 23 | National Gallery of Australia | Australia | Parkes Place, Parkes, ACT 2600 | 125 |

| 24 | National Gallery of Victoria | Australia | 180 St Kilda Road, Melbourne, VIC 3006 | 98 |

| 25 | National Gallery Singapore | Singapore | 1 St Andrew’s Road, Singapore 178957 | 103 |

| 26 | National Museum in Kraków | Poland | al. 3 Maja 1, 30-001 Kraków | 79 |

| 27 | National Museum of Korean Contemporary History | Republic of Korea | 198 Sejong-daero, Jongno-gu, Seoul 03141 | 96 |

| 28 | National Museum of Scotland | United Kingdom | Chambers Street, Edinburgh EH1 1JF, UK | 106 |

| 29 | Petit Palais | France | Avenue Winston-Churchill, 75008 Paris | 108 |

| 30 | Philadelphia Museum of Art | United States | 2600 Benjamin Franklin Parkway, Philadelphia, PA 19130 | 89 |

| 31 | Queensland Art Gallery | Australia | Stanley Pl, South Brisbane, QLD 4101 | 120 |

| 32 | Rijksmuseum | Netherlands | Museumstraat 1, 1071 XX Amsterdam | 101 |

| 33 | Royal Academy of Arts | United Kingdom | Burlington House, Piccadilly, London W1J 0BD | 116 |

| 34 | Shanghai Museum | China | 201 Renmin Avenue, Huangpu District, Shanghai | 126 |

| 35 | Somerset House | United Kingdom | Strand, London WC2R 1LA | 154 |

| 36 | State Hermitage Museum | Russia | Palace Square, 2, St. Petersburg, 190000 | 147 |

| 37 | Tate Modern | United Kingdom | Bankside, London SE1 9TG | 159 |

| 38 | Tel Aviv Museum of Art | Israel | Sderot Sha’ul HaMelech 27, Tel Aviv-Yafo, 61332012 | 80 |

| 39 | The British Museum | United Kingdom | Great Russell Street, London WC1B 3DG | 80 |

| 40 | The Centre Pompidou | France | Place Georges-Pompidou, 75004 Paris | 121 |

| 41 | The Getty | United States | 1200 N Getty Center Dr, Los Angeles, CA 90049 | 96 |

| 42 | The Metropolitan Museum of Art | United States | 1000 Fifth Avenue, New York, NY 10028 | 93 |

| 43 | The Moscow Kremlin | Russia | Moscow Kremlin, Moscow, 103073 | 73 |

| 44 | The Museum of Fine Arts, Houston | United States | 1001 Bissonnet Street, Houston, TX 77005 | 78 |

| 45 | The Museum of Modern Art | United States | 11 West 53rd Street, New York, NY 10019 | 75 |

| 46 | The National Art Center, Tokyo | Japan | 7-22-2 Roppongi, Minato-ku, Tokyo 106-8558 | 96 |

| 47 | The National Gallery of London | United Kingdom | Trafalgar Square, London WC2N 5DN, United Kingdom | 96 |

| 48 | The Pushkin State Museum of Fine Arts | Russia | Volkhonka Street, 12, Moscow, 119019 | 93 |

| 49 | The Royal Castle in Warsaw | Poland | plac Zamkowy 4, 00-277 Warszawa | 66 |

| 50 | The State Russian Museum, Mikhailovsky Palace | Russia | Inzhenernaya Street, 4, St Petersburg, 191186 | 121 |

| 51 | The State Tretyakov Gallery | Russia | Lavrushinsky Ln, 10, Moscow, 119017 | 95 |

| 52 | Thyssen-Bornemisza National Museum | Spain | Paseo del Prado, 8, 28014 Madrid | 90 |

| 53 | Tokyo Metropolitan Art Museum | Japan | 8-36 Ueno-koen, Taito-ku, Tokyo | 97 |

| 54 | Tokyo National Museum | Japan | 13-9 Uenokoen, Taito City, Tokyo 110-8712 | 106 |

| 55 | Triennale di Milano | Italy | Viale Emilio Alemagna, 6, 20121 Milano | 68 |

| 56 | Uffizi Galleries | Italy | Piazzale degli Uffizi, 6, 50122 Florence | 107 |

| 57 | Vatican Museums | Vatican City | Viale Vaticano, 00165 Vatican City | 88 |

| 58 | Victoria and Albert Museum | United Kingdom | Cromwell Road, London SW7 2RL | 93 |

| 59 | Whitney Museum of American Art | United States | 99 Gansevoort Street, New York, NY 10014 | 93 |

References

- Su, Y.; Teng, W. Contemplating Museums’ Service Failure: Extracting the Service Quality Dimensions of Museums from Negative on-Line Reviews. Tour. Manag. 2018, 69, 214–222.

- Xu, Q.; Shih, J.-Y. Applying Text Mining Techniques for Sentiment Analysis of Museum Visitor Reviews. In Proceedings of the 2024 IEEE 4th International Conference on Electronic Communications, Internet of Things and Big Data (ICEIB), Taipei, Taiwan, 19–21 April 2024; pp. 270–274.

- Hua, L.; Wahid, W.A.; Ali, N.A.M.; Dong, J. Culture and Technology: Visitor Experiences at the Palace Museum and China Science Museum. Environ.-Behav. Proc. J. 2025, 10, 49–55.

- Wan, Y.N.; Forey, G. Exploring Collective Identity and Community Connections: An Interpersonal Analysis of Online Visitor Reviews at the Overseas Chinese Museum (2012–2023). Forum Linguist. Stud. 2024, 6, 149–170.

- Alexander, V.D.; Blank, G.; Hale, S.A. TripAdvisor Reviews of London Museums: A New Approach to Understanding Visitors. Mus. Int. 2018, 70, 154–165.

- Richmond, F.; Uchechukwu, N.C.; Ramos, C.M.Q. Analyses of Visitors’ Experiences in Museums Based on E-Word of Mouth and Tripadvisors Online Reviews: The Case of Kwame Nkrumah Memorial Park, Ghana and the Nike Center for Art and Culture, Nigeria. In Advances in Marketing, Customer Relationship Management, and E-Services; Munna, A.S., Shaikh, M.S.I., Kazi, B.U., Eds.; IGI Global: Hershey, PA, USA, 2023; pp. 192–216. ISBN 978-1-6684-7735-9.

- Grande-Ramírez, J.R.; Roldán-Reyes, E.; Aguilar-Lasserre, A.A.; Juárez-Martínez, U. Integration of Sentiment Analysis of Social Media in the Strategic Planning Process to Generate the Balanced Scorecard. Appl. Sci. 2022, 12, 12307.

- Nicola, S.; Schmitz, S. From Mining to Tourism: Assessing the Destination’s Image, as Revealed by Travel-Oriented Social Networks. Tour. Hosp. 2024, 5, 395–415.

- Drivas, I.; Vraimaki, E. Evaluating and Enhancing Museum Websites: Unlocking Insights for Accessibility, Usability, SEO, and Speed. Metrics 2025, 2, 1.

- Chauhan, U.; Shah, A. Topic Modeling Using Latent Dirichlet Allocation: A Survey. ACM Comput. Surv. 2022, 54, 1–35.

- Agostino, D.; Brambilla, M.; Pavanetto, S.; Riva, P. The Contribution of Online Reviews for Quality Evaluation of Cultural Tourism Offers: The Experience of Italian Museums. Sustainability 2021, 13, 13340.

- Cheshire, L.; da Silva, J.; Moller, L.E.; Palk, R. The 100 Most Popular Art Museums in the World—Blockbusters, Bots and Bounce-Backs. Available online: https://www.theartnewspaper.com/2024/03/26/the-100-most-popular-art-museums-in-the-world-2023 (accessed on 28 April 2025).

- Dhanalakshmi, P.; Kumar, G.A.; Satwik, B.S.; Sreeranga, K.; Sai, A.T.; Jashwanth, G. Sentiment Analysis Using VADER and Logistic Regression Techniques. In Proceedings of the 2023 International Conference on Intelligent Systems for Communication, IoT and Security (ICISCoIS), Coimbatore, India, 9–11 February 2023; pp. 139–144.

- Dao, T.A.; Aizawa, A. Evaluating the Effect of Letter Case on Named Entity Recognition Performance. In Natural Language Processing and Information Systems; Métais, E., Meziane, F., Sugumaran, V., Manning, W., Reiff-Marganiec, S., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; Volume 13913, pp. 588–598. ISBN 978-3-031-35319-2.

- Qais, E.; Veena, M.N. TxtPrePro: Text Data Preprocessing Using Streamlit Technique for Text Analytics Process. In Proceedings of the 2023 International Conference on Network, Multimedia and Information Technology (NMITCON), Bengaluru, India, 1–2 September 2023; pp. 1–6.

- Vayadande, K.; Kale, D.R.; Nalavade, J.; Kumar, R.; Magar, H.D. Text Generation & Classification in NLP: A Review. In How Machine Learning is Innovating Today’s World; Dey, A., Nayak, S., Kumar, R., Mohanty, S.N., Eds.; Wiley: New York, NY, USA, 2024; pp. 25–36. ISBN 978-1-394-21411-2.

- Sarica, S.; Luo, J. Stopwords in Technical Language Processing. PLoS ONE 2021, 16, e0254937.

- Jabbar, A.; Iqbal, S.; Tamimy, M.I.; Rehman, A.; Bahaj, S.A.; Saba, T. An Analytical Analysis of Text Stemming Methodologies in Information Retrieval and Natural Language Processing Systems. IEEE Access 2023, 11, 133681–133702.

- Borg, A.; Boldt, M. Using VADER Sentiment and SVM for Predicting Customer Response Sentiment. Expert Syst. Appl. 2020, 162, 113746.

- Cruz, C.A.A.; Balahadia, F.F. Analyzing Public Concern Responses for Formulating Ordinances and Laws using Sentiment Analysis through VADER Application. Int. J. Comput. Sci. Res. 2022, 6, 842–856.

- Darraz, N.; Karabila, I.; El-Ansari, A.; Alami, N.; Lazaar, M.; Mallahi, M.E. Using Sentiment Analysis to Spot Trending Products. In Proceedings of the 2023 Sixth International Conference on Vocational Education and Electrical Engineering (ICVEE), Surabaya, Indonesia, 14–15 October 2023; pp. 48–54.

- Ghasemaghaei, M.; Eslami, S.P.; Deal, K.; Hassanein, K. Reviews’ Length and Sentiment as Correlates of Online Reviews’ Ratings. Internet Res. 2018, 28, 544–563.

- Liu, S.; Wright, A.P.; Patterson, B.L.; Wanderer, J.P.; Turer, R.W.; Nelson, S.D.; McCoy, A.B.; Sittig, D.F.; Wright, A. Using AI-Generated Suggestions from ChatGPT to Optimize Clinical Decision Support. J. Am. Med. Inform. Assoc. 2023, 30, 1237–1245.

- Sedgwick, P. Spearman’s Rank Correlation Coefficient. BMJ 2014, 349, g7327.

- Khaled, E.; Omar, Y.M.K.; Hodhod, R. Towards an Enhanced Model For Contextual Topic Identification. In Proceedings of the 2023 5th Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 21–23 October 2023; pp. 188–193.

- Zhang, Y.; Zhang, Y.; Michalski, M.; Jiang, Y.; Meng, Y.; Han, J. Effective Seed-Guided Topic Discovery by Integrating Multiple Types of Contexts. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; pp. 429–437.

- Řehůřek, R. What Is Gensim? GENSIM Topic Modelling for Humans. 2024. Available online: https://radimrehurek.com/gensim/intro.html#what-is-gensim (accessed on 18 June 2025).

- Srinivasa-Desikan, B. Natural Language Processing and Computational Linguistics: A Practical Guide to Text Analysis with Python, Gensim, spaCy, and Keras, 1st ed.; Packt Publishing: Birmingham, UK, 2018; ISBN 978-1-78883-853-5.

- Cheng, H.; Liu, S.; Sun, W.; Sun, Q. A Neural Topic Modeling Study Integrating SBERT and Data Augmentation. Appl. Sci. 2023, 13, 4595.

- Id, I.D.; Kurniawan, R. Feedback Analysis of Learning Evaluation Applications Using Latent Dirichlet Allocation. In Proceedings of the 2023 Sixth International Conference on Vocational Education and Electrical Engineering (ICVEE), Surabaya, Indonesia, 14–15 October 2023; pp. 335–339.

- Watanabe, K.; Baturo, A. Seeded Sequential LDA: A Semi-Supervised Algorithm for Topic-Specific Analysis of Sentences. Soc. Sci. Comput. Rev. 2024, 42, 224–248.

- Hsu, C.-I.; Chiu, C. A Hybrid Latent Dirichlet Allocation Approach for Topic Classification. In Proceedings of the 2017 IEEE International Conference on Innovations in Intelligent Systems and Applications (INISTA), Gdynia, Poland, 3–5 July 2017; pp. 312–315.

- Hardiyanti, L.; Anggraini, D.; Kurniawati, A. Identify Reviews of Pedulilindungi Applications Using Topic Modeling with Latent Dirichlet Allocation Method. Indones. J. Comput. Cybern. Syst. 2023, 17, 441.

- Yun, J.-Y.; Lee, J.-H. Analysis of Museum Social Media Posts for Effective Social Media Management. In Cultural Space on Metaverse; Lee, J.-H., Ed.; KAIST Research Series; Springer Nature: Singapore, 2024; pp. 175–191. ISBN 978-981-99-2313-7.

- Drivas, I.C.; Kouis, D.; Kyriaki-Manessi, D.; Giannakopoulou, F. Social Media Analytics and Metrics for Improving Users Engagement. Knowledge 2022, 2, 225–242.

- Burkov, I.; Gorgadze, A. From Text to Insights: Understanding Museum Consumer Behavior through Text Mining TripAdvisor Reviews. Int. J. Tour. Cities 2023, 9, 712–728.

- Cappa, F.; Rosso, F.; Capaldo, A. Visitor-Sensing: Involving the Crowd in Cultural Heritage Organizations. Sustainability 2020, 12, 1445.

- Stemmer, K.; Gjerald, O.; Øgaard, T. Crowding, Emotions, Visitor Satisfaction and Loyalty in a Managed Visitor Attraction. Leis. Sci. 2024, 46, 710–732.

- Ma, Y.; Zhu, Y.; Chen, M.; Yang, R. Visitor-Oriented: A Study of the British Museum’s Visitor-Centred Operations Strategy. Int. J. Educ. Humanit. 2023, 11, 128–130.

- Lieto, A.; Striani, M.; Gena, C.; Dolza, E.; Marras, A.M.; Pozzato, G.L.; Damiano, R. A Sensemaking System for Grouping and Suggesting Stories from Multiple Affective Viewpoints in Museums. Hum.–Comput. Interact. 2024, 39, 109–143.

- Parasuraman, A.; Zeithaml, V.A.; Berry, L.L. SERVQUAL: A Multiple-Item Scale for Measuring Consumer Perceptions of Service Quality. J. Retail. 1988, 64, 12–40.

- Ladhari, R. A Review of Twenty Years of SERVQUAL Research. Int. J. Qual. Serv. Sci. 2009, 1, 172–198.

- Wirtz, J.; Lovelock, C.H. Services Marketing: People, Technology, Strategy, 9th ed.; World Scientific: Singapore, 2022; ISBN 978-1-944659-82-0.

- Black, G. Transforming Museums in the Twenty-First Century; Routledge: New York, NY, USA, 2012; ISBN 978-0-415-61573-0.

- Grönroos, C.; Voima, P. Critical Service Logic: Making Sense of Value Creation and Co-Creation. J. Acad. Mark. Sci. 2013, 41, 133–150.

- Poulopoulos, V.; Wallace, M. Digital Technologies and the Role of Data in Cultural Heritage: The Past, the Present, and the Future. Big Data Cogn. Comput. 2022, 6, 73.

- Zhang, Y.; Xiao, Y.; Wu, J.; Lu, X. Comprehensive World University Ranking Based on Ranking Aggregation. Comput. Stat. 2021, 36, 1139–1152.

- Blanco, R.D. First Steps to Create a Data-Driven Culture in Organizations. Case Study in a Financial Institution. In Proceedings of the 2024 IEEE Eighth Ecuador Technical Chapters Meeting (ETCM), Cuenca, Ecuador, 15–18 October 2024; pp. 1–6.

- Shahbazi, Z.; Byun, Y.-C. LDA Topic Generalization on Museum Collections. In Smart Technologies in Data Science and Communication; Fiaidhi, J., Bhattacharyya, D., Rao, N.T., Eds.; Lecture Notes in Networks and Systems; Springer: Singapore, 2020; Volume 105, pp. 91–98. ISBN 978-981-15-2406-6.

- Huang, M. Discussion of the Descriptive Metadata Schema for Museum Objects. Sci. Conserv. Archaeol. 2021, 33, 98–104.

- Meler, A. In The Beginning, Let There Be The Word: Challenges and Insights in Applying Sentiment Analysis to Social Research. In Proceedings of the Companion Proceedings of the ACM Web Conference 2024, Singapore, 13–17 May 2024; pp. 1214–1217.

- Adeyeye, O.J.; Akanbi, I. A Review of Data-Driven Decision Making in Engineering Management. Eng. Sci. Technol. J. 2024, 5, 1303–1324.

| Research Gaps Identified | How This Study Tackles These Gaps |

|---|---|

| Limited temporal scope: Outdated datasets (e.g., pre-2014). | Uses a contemporary dataset, primarily from 2024, capturing recent trends. |

| Geographical limitations: Studies focused on specific cities or regions. | Analyzes data from 59 museums across 19 countries, ensuring global relevance. |

| Language barriers: Focus on English or local language reviews, missing international perspectives. | Over 50% of the dataset consists of non-English reviews (3080 out of 5856), which were translated to ensure global inclusivity. |

| Limited methodological integration: Either qualitative or quantitative methods used in isolation. | Combines quantitative metrics (e.g., ratings and review length) and qualitative topic modeling for a more comprehensive analysis. |

| Practical applicability: Research lacked actionable insights for museum managers. | Provides actionable insights to help museums address service failures and prioritize improvements. |

| Metric Name | Definition | Example from the Dataset | Source |

|---|---|---|---|

| Museum name | The official name of the museum, as listed in Google My Business. | National Gallery of Australia, Somerset House, Kunsthistorisches Museum Wien, etc. | Google Maps |

| Review text | The content of an individual review. Some reviews only include a star rating without text. | Very thorough in regards to contemporary ROK history. Excellent audio guide via QR code. Museum is out of English language printed guides. But you really don’t need it. Unless you collect them as souvenirs. Great view from the 8F roof deck. | Google Maps |

| Number of characters on a unique review | Total number of characters in a single review. Calculated using = LEN() in spreadsheets. | 384 | Developed |

| Review sentiment score | A numerical score ranging from −0.999 (very negative) to 0.999 (very positive), indicating the emotional tone of the review. | 0.4404 = positive; −0.3495 = negative; 0.000 = neutral | Developed |

| Review rating | User-assigned score on a 1–5 scale reflecting satisfaction, where 1 is poor and 5 is excellent. | 1, 5, 4, 2, 3, etc. | Google Maps |

| Number of review likes | The number of users who marked the review as helpful or agreeable. Reflects how much the review resonated with others. | | Google Maps |

| Review date | The date the review was posted. | 31 August 2024, 3 September 2024, 16 June 2024, etc. | Google Maps |

| Unprocessed Reviews | Processed Reviews |

|---|---|

| Must go if you are art lover. Highly recommend to book time ahead of time. Otherwise you will be lining up for a while. Go to website and it’s free of charge to book time a lot and you will have to plan ahead and be there at the time slot. It will be definitely worth doing this if you see the regular line. A lot of art pieces for reviewing but some of the pieces got loan out until fall. Staffs are polite, friendly and knowledgable. Amazing experience and will definitely go back again. | must go art lover highly recommend book time ahead time otherwise lining go website free charge book time lot plan ahead time slot definitely worth see regular line lot art piece reviewing piece got loan fall staff polite friendly knowledgable amazing experience definitely go back |

| “Whitney Museum Free Fridays! I must recommend checking the Whitney out in the summer! They offer free entry from 5 pm to 10 pm. You can go online and get your tickets. Which I highly recommend so entry is a breeze. This was my first time at the Whitney and it was just amazing. Once you enter there is a security check and a DJ playing music on the First Floors. Gift shop and a Restaurant is also located on the first floor as well as a cafe on one of the upper floors. The museum has several floors of art. They also have lots of outdoor space that overlooks the city. If you’re in the city on a Friday this is a great place to visit. I will surely try my best to check it out again.” | whitney museum free friday must recommend checking whitney summer offer free entry 5 pm 10 pm go online get ticket highly recommend entry breeze first time whitney amazing enter security check dj playing music first floor gift shop restaurant also located first floor well cafe one upper floor museum several floor art also lot outdoor space overlook city youre city friday great place visit surely try best check |

| There are too many people on weekends. Go for a weekday afternoon. There are so many artifacts. The Rosetta Stone and Moai stone statues were impressive. | many people weekend go weekday afternoon many artifact rosetta stone moai stone statue impressive |

| Study | Identified Topics for Categorizing Museum Visitor Reviews |

|---|---|

| Lei et al. [3] | Historical atmosphere, crowd density, guide services, interactive exhibits, family-friendliness, educational value |

| Agostino et al. [11] | Ticketing and welcoming, space, comfort, activities, communication museum cultural heritage, personal experience, museum services |

| Alexander et al. [5] | Descriptive: Children, Hours, Queue, Early, Location, Cost, Meal, Staff, Asked, Toilets, Exhibition; Evaluative: Difficult (poor displays), Confusing (poor layout), Surprised (unexpectedly pleasant), Longer (wanted more time), Inspiring (awe-inspiring experiences); Museum-specific: Beefeaters (guides), Poppies (specific installation), Fashion (special exhibitions) |

| Su & Teng [1] | Convenience: Queuing, online ticketing issues, parking availability, opening times; Contemplation: Overcrowding, visitor behavior, photography and selfie issues; Assurance: Curation/display quality, collection relevance, visitor interest alignment; Responsiveness: Staff behavior, handling of complaints; Reliability: Unexpected closures, exhibit maintenance; Tangibles: Facilities quality, cleanliness, restrooms, Wi-Fi, elevators; Empathy: Services for people with disabilities, elderly, and children; Communication: Multi-language services, signage, exhibit interpretation, audio guides; Servicescape: Museum layout, visitor flow, ambient conditions (temperature, smell); Consumables: Quality and pricing of food services and shops; Purposiveness: Alignment with museum’s mission, commercialization level; First-hand experience: Interactive elements, proximity and engagement with exhibits |

| Museum Name | Review Text | Number of Characters on a Unique Review | Review Sentiment Score | Review Rating | Number of Review Likes | Review Date |

|---|---|---|---|---|---|---|

| Philadelphia Museum of Art | So excited to see some of the world’s famous paintings in just one location :). | 78 | 0.340 | 5 | 0 | 8 December 2024 |

| The National Gallery UK | A very good museum with lots of stuff to see. The paintings are loaded with history. Nothing vegan to eat except for an overpriced cookie, 3 cm × 3 cm, 6 GBP (3-4× bigger non-vegan food is 2–4 GBP). I was told to stop eating my vegan roll because it was not bought from there. There is no fairness in this, hence only 3 stars. | 322 | 0.178 | 3 | 0 | 15 August 2024 |

| Somerset House | Ice rink under an inch of water, with no visible drainage. People falling over and getting completely soaked. Staff refusing to give refunds to those who would prefer not to wreck their day in London with a soaking. Take your money elsewhere. | 243 | −0.755 | 1 | 4 | 1 February 2024 |

| Measures | Number of Characters | Review Sentiment Score | Review Rating | Review Likes |

|---|---|---|---|---|

| Median | 91.000 | 0.637 | 5.000 | 0.000 |

| Mean | 168.433 | 0.555 | 4.508 | 0.231 |

| Std. Deviation | 240.086 | 0.387 | 1.003 | 1.528 |

| Skewness | 4.364 | −1.320 | −2.297 | 18.172 |

| Shapiro–Wilk | 0.599 | 0.867 | 0.551 | NaN |

| p-value of Shapiro–Wilk | <0.001 | <0.001 | <0.001 | NaN |

| Minimum | 1.000 | −0.977 | 1.000 | 0.000 |

| Maximum | 3401.000 | 0.999 | 5.000 | 52.000 |

| Variables | Number of Characters | Review Sentiment Score | Review Rating | Review Likes |

|---|---|---|---|---|

| Number of Characters | - | | | |

| Review Sentiment Score | 0.450 *** (p < 0.001) | - | | |

| Review Rating | −0.147 *** (p < 0.001) | 0.272 *** (p < 0.001) | - | |

| Review Likes | 0.198 *** (p < 0.001) | 0.040 ** (p = 0.002) | −0.110 *** (p < 0.001) | - |

| Assigned Topic Label | Topic Description | Top Keywords Included |

|---|---|---|

| 1. Servicescape & Ambience | Focuses on the museum’s environment, including layout, lighting, and atmosphere, and their impact on visitor experience. | place, beautiful, flow, great, interesting, nice, see, art, amazing, exhibition |

| 2. Convenience & Access | Covers practical aspects like parking, ticketing, operating hours, and ease of navigation within the museum. | display, parking, museum, art, exhibit, free, work, de, lot, chirico |

| 3. Assurance, Content & Curation | Addresses the quality, relevance, and presentation of the museum’s collections and exhibitions. | collection, art, museum, painting, building, visit, beautiful, gallery, piece, rich |

| 4. Communication & Guiding | Evaluates the effectiveness of visitor support services, such as guides, signage, and multilingual support. | audio, guide, tour, museum, excellent, well, history, language, guided, multiple |

| 5. Visitor Experience & Impressions | Reflects visitors’ emotional reactions and overall impressions of their visit. | art, museum, exhibition, work, building, great, visit, beautiful, modern, photography |

| 6. Responsiveness & Staff | Reviews staff friendliness, professionalism, and how they handle visitor inquiries or issues. | staff, friendly, museum, helpful, experience, rude, great, ice, nice, lovely |

| 7. Tangibles & Facilities | Covers the condition of the museum’s physical amenities, like restrooms, seating, and Wi-Fi. | free, ticket, museum, exhibition, see, entrance, admission, went, day, get |

| 8. Family Services | Focuses on family-friendly features, including children’s areas and services for young visitors. | interactive, exhibition, smell, museum, child, floor, well, exhibit, time, see |

| 9. Accessibility | Addresses accommodations for visitors with disabilities or the elderly, such as wheelchair access and assistive devices. | wheelchair, good, cafe, great, also, button, access, lovely, gallery, elevator |

| 10. Consumables & Merchandise | Reviews food, beverage, and merchandise at the museum shops, including variety and quality. | signage, people, get, food, exhibition, souvenirs, restaurant, first, security, wifi |

| 11. Contemplation & Crowding | Discusses issues like overcrowding, noise, and behaviors affecting the visitor’s experience of exhibits. | museum, time, people, visit, day, place, many, one, hour, lot |

| 12. Purposiveness & Strategy | Reflects on the museum’s mission, the balance between commercial and cultural goals, and strategic direction. | layout, opening, museum, behavior, gallery, mission, exhibition, van, gogh, work |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Drivas, I.C.; Vraimaki, E.; Lazaridis, N. I Can’t Get No Satisfaction? From Reviews to Actionable Insights: Text Data Analytics for Utilizing Online Feedback. Digital 2025, 5, 35. https://doi.org/10.3390/digital5030035
