1. Introduction

The evolutionary development of APIs in microservice architectures presents a complex challenge that necessitates careful change management, rigorous testing, and continuous monitoring to ensure system stability and security.

Microservice architectures, increasingly adopted in enterprise and web systems [1,2,3,4], rely on APIs as a crucial means of interaction between services. This architectural approach offers flexibility, scalability, and fault tolerance [5,6]. Unlike traditional monolithic architectures, where components communicate through internal function calls or shared memory, microservices primarily interact through lightweight API endpoints. These APIs serve as well-defined contracts that enable independent services to exchange data and perform operations, often over network protocols and transports such as HTTP/REST, WebSocket, gRPC, or message queues.

However, the shift from tightly coupled internal interfaces in monolithic systems [1,2,3,4,7] to loosely coupled, network-based APIs introduces new challenges. Microservices require adaptable APIs that support independent evolution while ensuring backward compatibility and interoperability. API versioning, contract validation, and service discovery mechanisms become essential in managing these interactions effectively. With the growing prevalence of containerization and microservices, web-based APIs are utilized even when the interacting components operate on the same machine [8,9]. Changes in APIs [10,11,12,13,14], particularly between consumers (services using the API) and providers (services offering the API), often occur asynchronously, without coordination between stakeholders. This lack of synchronization can lead to unexpected consequences, such as system instability or service failures [12,15,16,17,18].

The importance of addressing these challenges lies in ensuring the seamless operation of microservice ecosystems while maintaining their benefits of modularity and independence. As microservices evolve independently, changes to an API can break existing clients that rely on earlier versions, making API evolution a critical concern. Maintaining backward compatibility becomes essential to ensure that legacy consumers continue to function as new versions are deployed.

The effective management of API evolution in microservice architectures necessitates not only the implementation of general strategic approaches but also the integration of well-established engineering practices that ensure system stability and reliability. Key aspects of this process include structured versioning, often based on semantic principles, which facilitates controlled interface evolution and compatibility verification. The use of granular versioning, applied at the level of individual endpoints or messages, enables the finer-grained management of changes. Backward compatibility is achieved through additive, non-breaking modifications and is supported by clearly defined deprecation policies that provide consumers with sufficient transition periods. In event-driven architectures, where services interact asynchronously, the evolution of message schemas must be carefully managed to prevent deserialization failures, often through schema registries and contract validation. Observability mechanisms such as monitoring, logging, and tracing are essential for detecting incompatibilities during and after deployment. Furthermore, continuous integration and deployment (CI/CD) pipelines play a critical role in automating compatibility checks, enforcing contract tests, and enabling safe deployment strategies such as canary or Blue/Green releases. Lastly, coordinated dependency management, often supported by centralized registries or orchestration platforms, ensures that API changes do not disrupt service interoperability, thereby preserving overall system integrity in dynamic and distributed environments.

To better illustrate cascading dependencies in microservice-based systems, we briefly introduce a simplified mathematical model. While optional, this helps to formalize the impact of asynchronous breaking changes.

Consider a system that supports functionalities A, B, and C, where functionality C is provided by a set of microservices $M = \{m_1, m_2, \ldots, m_n\}$. Changes in the API of one of these microservices can affect the performance of functionality C, as well as other functions that depend on C.

The dependency of functionality B on C is denoted $d$, where $d \in \{0, 1\}$. The value $d = 1$ means that B depends on C, while $d = 0$ indicates no such dependency.

Assume that $m_i \in M$ is a microservice whose API has undergone breaking changes, that is, changes that break backward compatibility by removing or modifying API elements [19]. This can be formalized as follows:

$$\delta(m_i) = \begin{cases} 1, & \text{if the API of } m_i \text{ has undergone breaking changes}, \\ 0, & \text{otherwise}. \end{cases} \tag{1}$$

The functionality C becomes fully or partially inoperable, written $F(C) = 1$, if at least one of the microservices $m_i \in M$ has undergone incompatible changes:

$$F(C) = \max_{m_i \in M} \delta(m_i) \tag{2}$$

The status of functionality B depends on the status of C and the dependency level $d$ (Figure 1):

$$F(B) = d \cdot F(C) \tag{3}$$

When a microservice modifies its API without proper notification or implementation of backward compatibility strategies, it can disrupt the functionality of dependent services, leading to system instability. One critical issue in such scenarios is the potential for uncontrolled shutdowns of vital application nodes due to API incompatibility [15,19,20,21].
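The cascading effect described above can be illustrated with a short Python sketch. It is only an illustration under simplifying assumptions: dependencies are binary, partial degradation is not modeled, and the names `propagate_failures`, `depends_on`, and `broken` are hypothetical rather than part of the proposed framework.

```python
from collections import deque


def propagate_failures(depends_on, broken):
    """Return every functionality affected directly or transitively.

    depends_on maps a functionality to the set of functionalities it
    depends on (dependency value 1 for each listed pair, 0 otherwise).
    broken is the set of functionalities whose microservices received
    breaking API changes.
    """
    # Invert the edges so we can walk from a provider to its consumers.
    consumers = {}
    for consumer, providers in depends_on.items():
        for provider in providers:
            consumers.setdefault(provider, set()).add(consumer)

    affected = set(broken)
    queue = deque(broken)
    while queue:
        current = queue.popleft()
        for consumer in consumers.get(current, ()):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    return affected


if __name__ == "__main__":
    # B depends on C; A is independent of both.
    deps = {"A": set(), "B": {"C"}, "C": set()}
    print(sorted(propagate_failures(deps, {"C"})))
```

With the example graph, a breaking change in C marks both C and B as affected, while A is untouched because it has no dependency on either.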

For instance, changes to response formats or request structures can prevent deserialization on the consumer side, which expects data in a different format. This mismatch can cause failures and delays in request processing workflows [12,19]. To mitigate these risks, modern approaches to API evolution in microservices incorporate several strategies, including API versioning, contract-based testing, backward compatibility support, and real-time microservice monitoring tools. API versioning enables the coexistence of multiple API versions, facilitating smooth transitions to new versions without service interruption. Contract-based testing ensures that changes adhere to agreed-upon standards and do not disrupt the operation of other system components. Backward compatibility allows consumers to operate with both the new and old API versions, minimizing the risk of disruptions. These measures collectively enhance the resilience and adaptability of microservice architectures in the face of API evolution.

Numerous studies [12,19,22] analyze various aspects of API breaking changes, including their frequency, characteristics, cascading effects, and impacts on software ecosystems, with some also considering implications for company performance and user experience. The central issues emphasized in this research are the challenges and risks associated with API breaking changes during software evolution, which can lead to unforeseen consequences stemming from interface modifications. For instance, changes to APIs can result in compatibility issues, causing failures in client applications that rely on these APIs for critical functionality. These failures may cascade through software ecosystems, disrupting dependent services and applications, as explored in studies focused on technical repercussions and ecosystem-level effects [19,23].

Additionally, analyses of the motivations for implementing breaking changes [24] indicate that decisions surrounding API evolution may sometimes prioritize innovation over stability, leading to client-side disruptions. These disruptions, in turn, can cause financial and reputational damage to companies, although most studies primarily focus on technical aspects rather than business outcomes. Nonetheless, the research [22,25,26] links breaking API changes to potential financial losses and reputational risks, especially when service failures affect clients’ business operations. While a substantial portion of the literature concentrates on the mechanics and patterns of API changes, it indirectly underscores the significant risk of stability issues in client applications.

Causes of API Changes

The evolution of APIs is driven by the need to update functionality, adapt to technological advancements, and improve design. Alexander Lercher [12] identifies four primary reasons for API changes. The first key driver is the addition of new functionality, where developers enhance API capabilities to meet emerging user or business requirements. While this increases the utility of the API, it may also alter its behavior, potentially impacting client applications. The second reason is technological adaptation, which involves migrating to new cloud platforms or updating programming language versions. These changes are essential to maintain infrastructure relevance, ensure security, and remain compatible with modern technologies. However, such transitions often require significant API restructuring, which can disrupt prior functionality.

The third cause is the improvement of existing functionality, including performance optimization or the consolidation of similar workflows to simplify client interactions. Although these enhancements add value to the API, they often necessitate adjustments on the client side to align applications with the updated configurations [12,24,27]. By understanding these motivations, stakeholders can better anticipate and manage the implications of API evolution, balancing innovation with stability.

Finally, API design optimization involves removing deprecated components or restructuring the API to make it more user-friendly and modern. While these optimizations improve ease of use and maintainability, they often necessitate significant changes in client applications to adapt to the updated API [12,27]. APIs, like any other part of a software system, require thoughtful design and continuous improvement. If an API effectively meets the needs of its clients, there is little incentive for change. However, studies show that web APIs are frequently updated over extended periods following their initial development [8,28].

These factors collectively explain the motivations behind API changes, despite their potential risks to client stability, underscoring the need to balance innovation with the impact on dependent systems.

Accordingly, the research gap lies in the absence of a comprehensive, empirically validated approach that simultaneously formalizes failure propagation across microservice dependencies and automatically enforces API-version compatibility during asynchronous evolution. The aim of this study is to design and experimentally verify a compatibility-driven version-orchestration framework that mitigates breaking changes and reduces service downtime. The main objectives and contributions are as follows:

The synthesis and taxonomy of existing strategies for managing API changes in microservice environments.

The formulation of a directed-acyclic-graph model for cascading failures, introducing the failure radius and propagation depth metrics.

The design of the VersionOrchestrator algorithm, integrating semantic versioning, contract testing, and CI/CD triggers.

The implementation of an open-source prototype with reproducible artifacts and deployment scripts.

Empirical evaluation on a simplified synthetic microservice chain, benchmarked against a Git-based schema version control approach.

The derivation of practical guidelines for DevOps adoption under Zero-Trust architecture requirements.

2. Strategies for Minimizing the Impact of Breaking API Changes

According to Lehman’s laws of software evolution [29], real-world software systems require maintenance and evolution to remain relevant. Providers must address critical API changes in a way that balances innovation and stability [12]. This balance is crucial for maintaining API stability for consumers, avoiding abrupt, compatibility-breaking changes that could disrupt client applications.

Evolutionary API changes, which often lead to compatibility issues, client application failures, and reputational risks for companies, demand the application of diverse strategies to mitigate potential problems. The lack of tools for predicting the impact of API changes on client applications and limited access to data on API usage add complexity to maintaining API stability. This underscores the need for structured methods to prevent breaking changes and robust mechanisms to monitor and manage their impacts effectively.

Researchers [12,19,20] agree that successful API evolution requires systematic approaches to minimize breaking changes. Below, we outline some key strategies that can help address this challenge.

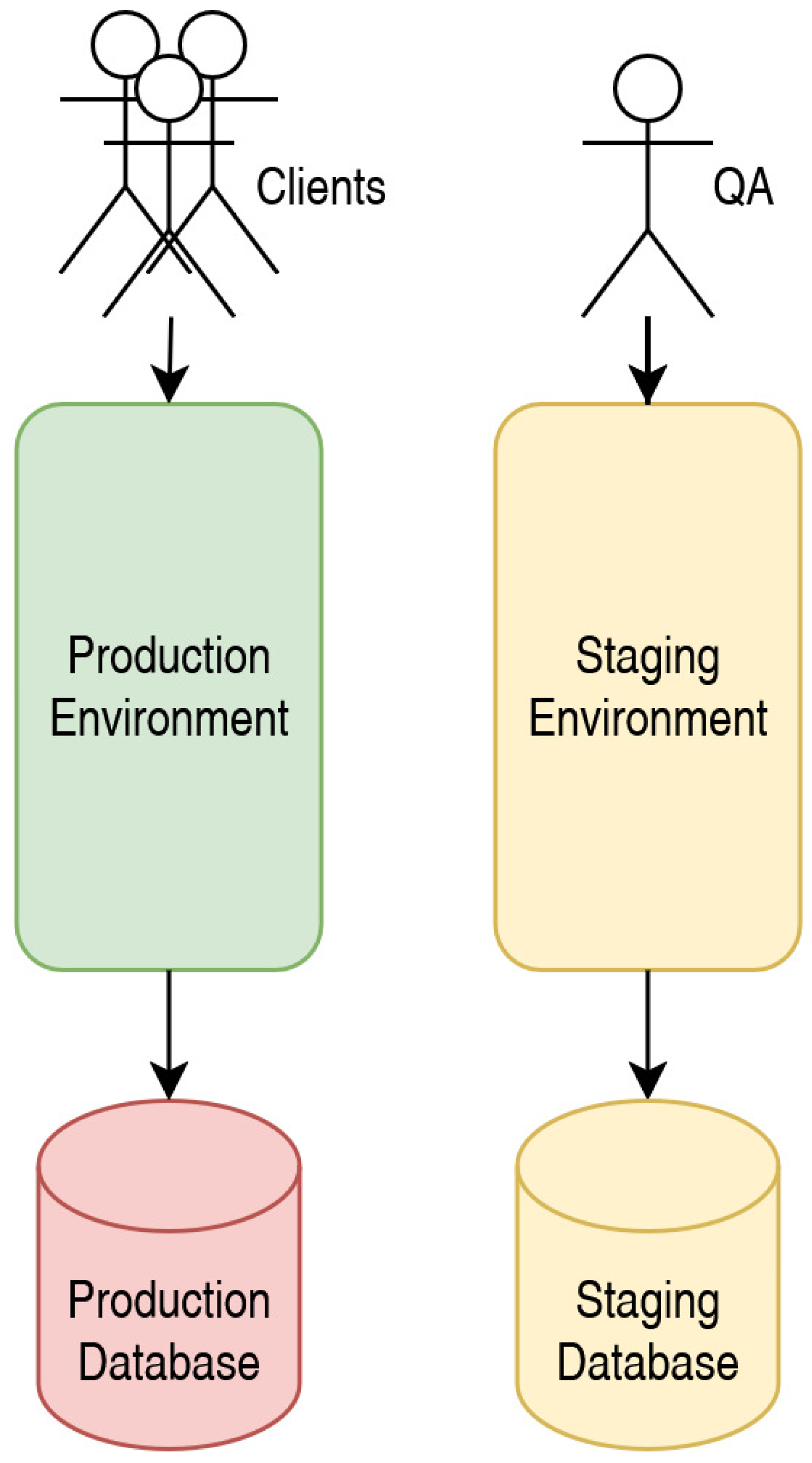

2.1. Procedural Approach (Staging Testing)

One of the effective methods for addressing API inconsistency issues is staging testing [30,31,32,33,34], a software testing phase conducted in an environment closely resembling the production environment. This staging environment typically uses the same configurations, databases, and third-party services as production but remains isolated from end users. Staging testing enables the simulation of real-world workloads and verification of new API changes against existing system components. It allows developers and quality assurance specialists to test changes automatically and manually, reducing the risk of unexpected failures in client applications by ensuring that all modifications are evaluated in production-like conditions (Figure 2).

In a staging environment, testing focuses not only on API functionality but also on compatibility between the new API version and existing system components and dependencies. This check includes testing service interactions, validating data formats, and ensuring the correct execution of all business processes dependent on the API. The automation of staging tests facilitates the rapid identification of potential incompatibilities and provides timely feedback to developers, which is particularly critical in microservice architectures [30,31,32]. However, achieving full test coverage by the API provider is often impractical, as the API may be used by a diverse range of companies and industries, each with unique configurations and dependencies. Due to this variability, predicting all possible usage scenarios and ensuring comprehensive test coverage across different clients is challenging.

For automated testing [31,32], tools such as Pact.io [35] are utilized, supporting contract testing between services. This approach verifies interactions between API clients and providers before deploying changes to the production environment, ensuring seamless integration and minimizing the risk of incompatibilities. Staging testing also facilitates regression testing [33,36], enabling developers to confirm that new changes do not negatively affect existing functionality. By leveraging a staging environment for API testing, teams can not only address potential inconsistencies but also ensure system stability and reliability for end users. This comprehensive testing approach significantly reduces the likelihood of disruptions while maintaining a high level of service quality [30,31,32,33].
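The idea behind contract testing can be sketched in a few lines of Python. The example below is a hand-rolled illustration and does not use the Pact API; the function `check_contract`, the contract `ORDER_CONTRACT`, and the sample responses are hypothetical. The consumer records the fields and types it relies on, and the provider's response is checked against that record before deployment.

```python
def check_contract(contract, provider_response):
    """Return a list of violations of the consumer's expectations.

    contract maps each field the consumer relies on to the Python type
    it expects. Extra fields in the provider response are allowed.
    """
    violations = []
    for field, expected_type in contract.items():
        if field not in provider_response:
            violations.append(f"missing field: {field}")
        elif not isinstance(provider_response[field], expected_type):
            actual = type(provider_response[field]).__name__
            violations.append(
                f"wrong type for {field}: "
                f"expected {expected_type.__name__}, got {actual}"
            )
    return violations


# Hypothetical contract recorded by a consumer of an order service.
ORDER_CONTRACT = {"order_id": str, "total": float}

# The old provider response satisfies the contract (extra field is fine).
old_response = {"order_id": "42", "total": 9.5, "currency": "EUR"}
# The new provider response renamed a field the consumer depends on.
new_response = {"id": "42", "total": 9.5}

if __name__ == "__main__":
    print(check_contract(ORDER_CONTRACT, old_response))
    print(check_contract(ORDER_CONTRACT, new_response))
```

An empty list means the provider still honors the consumer's expectations; a non-empty list would be a reason to stop the change before it reaches production.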

2.2. Protocol Description Scheme Generation Approach

One of the reliable methods for ensuring API compatibility amid continuous changes is the automated generation of protocol description schemas by the API provider [37,38,39,40]. This approach involves the provider creating an up-to-date schema that defines the structure and interaction rules for the API, including all available resources, data types, and supported versions. Incorporating version information into the generated schema allows clients to identify the API version they use, reducing the risk of incompatibilities caused by breaking changes [39,41]. However, successfully adapting to changes requires clients to conduct their own quality assurance processes to validate compatibility and ensure smooth integration.

All API clients have access to these generated schemas, ensuring consistency and minimizing errors arising from mismatches in data structures or invocation methods. Clients can implement periodic checks for version updates by referencing a central repository where the latest schemas are stored. This approach enables clients to promptly detect changes, compare their current API version with the latest, and adapt their systems to meet new requirements [39,41].
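A client-side version check of this kind might look like the following sketch. It assumes, purely for illustration, that schema versions are published as "major.minor" strings and that the list of published versions has already been fetched from the central repository; `parse_version` and `check_for_update` are hypothetical names.

```python
def parse_version(text):
    """Parse a 'major.minor' string into a tuple of integers."""
    major, minor = text.split(".")
    return int(major), int(minor)


def check_for_update(client_version, published_versions):
    """Classify the newest published schema relative to the client's.

    Returns 'up_to_date', 'compatible_update' (same major number), or
    'breaking_update' (a higher major number has been published).
    """
    current = parse_version(client_version)
    latest = max(parse_version(v) for v in published_versions)
    if latest <= current:
        return "up_to_date"
    if latest[0] == current[0]:
        return "compatible_update"
    return "breaking_update"


if __name__ == "__main__":
    print(check_for_update("1.2", ["1.0", "1.2", "1.10"]))
    print(check_for_update("1.2", ["1.3", "2.0"]))
```

Versions are compared as integer pairs rather than as strings, so that "1.10" is correctly treated as newer than "1.2".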

Based on the analysis, automated schema updates with versioning streamline the process of upgrading clients to newer API versions, maintaining compatibility and reducing risks to client application stability. This approach ensures that API providers can uphold stability and backward compatibility while minimizing disruptions and facilitating smoother integration with updated API versions for clients.

2.3. Adaptive API Compatibility Approach

Employing flexible data formats that support adaptability and are not strictly tied to predefined schemas is an effective method for maintaining backward compatibility in APIs. Formats such as JSON or Protocol Buffers, which allow adding optional attributes without breaking compatibility, enable APIs to evolve without requiring drastic structural changes [4,12,40,42]. This approach facilitates the addition of new data elements or functionalities without disrupting clients that continue to use the older API format. As a result, APIs become more resilient to change, a critical feature in dynamic systems where new requirements often demand rapid adaptation.

Before deploying new API versions with modified data structures, compatibility checks are conducted to ensure the new format remains backwards compatible. Such validation guarantees that all clients using previous API versions experience no disruptions due to changes in the data structure. For instance, new fields in JSON objects can be made optional, allowing clients to ignore these fields if they are not yet prepared to handle them. This strategy minimizes the impact of API evolution while maintaining seamless operation across diverse client applications.

A key strategy followed by API providers, as highlighted in [12], is to avoid unexpected breaking changes and maintain backward compatibility for API consumers. This approach prioritizes stability and minimizes the risks associated with critical modifications that could disrupt client applications. Following the principle of “no critical changes unless necessary”, breaking changes are implemented only when indispensable, thereby reducing the likelihood of unforeseen failures and preserving API reliability. Notably, API providers typically refrain from introducing breaking changes without notifying clients, except when such changes are explicitly requested to add new functionality. This strategy allows providers to effectively maintain API stability while retaining the ability to evolve and adapt to new requirements when necessary.

Data formats supporting backward compatibility significantly reduce the risk of breaking changes and ensure a smooth transition to new API versions. Moreover, this approach enables API providers to respond rapidly to emerging requirements without compromising the functionality of client applications. By conducting version compatibility checks, APIs remain both flexible and dependable, qualities that are critical in microservice architectures where stability and backward compatibility are paramount. This methodology not only supports seamless evolution but also strengthens the resilience and reliability of APIs in dynamic, high-demand environments. However, it should be noted that formats such as JSON or Protocol Buffers are adaptive only to a limited extent: they ensure backward compatibility only when the protocol is extended with optional attributes, not when existing attributes are removed, renamed, or retyped.

Several techniques support smooth transitions with this approach. Feature flags allow for the gradual introduction of new API functionalities without affecting existing consumers. The tolerant reader pattern encourages clients to ignore unrecognized fields instead of failing when encountering unexpected data. Additionally, deprecation notices provide consumers with advance warnings before older API versions are retired, facilitating planned and controlled migrations.
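The tolerant reader pattern can be sketched as follows. This is a minimal illustration, not code from the evaluated system: the `Customer` type and its fields are hypothetical, and the reader simply drops any JSON field it does not know while giving the newer optional field a default value.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Customer:
    id: int
    name: str
    email: str = ""  # optional attribute added in a later API version


def read_customer(payload):
    """Deserialize a customer, ignoring fields this client does not know."""
    data = json.loads(payload)
    known = {f.name for f in fields(Customer)}
    return Customer(**{k: v for k, v in data.items() if k in known})


if __name__ == "__main__":
    # A payload from a newer provider with an unknown extra field.
    print(read_customer('{"id": 1, "name": "Ann", "loyalty_tier": "gold"}'))
    # A payload from an older provider without the optional field.
    print(read_customer('{"id": 2, "name": "Bob"}'))
```

The sketch tolerates additions only. A payload that omits `id` or `name` still fails, which matches the limitation noted above: optional extensions are safe, while removed or renamed attributes are not.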

2.4. Blue/Green Deployment Approach

Blue/Green Deployment is a software deployment strategy that minimizes the downtime and risks associated with application updates, enabling a seamless transition between versions [43,44,45]. This approach involves maintaining two identical environments: the current environment (Blue) and a new environment (Green). While the Blue environment continues serving users, the Green one is used to deploy and test the updated application. Once testing is complete and the new version is confirmed stable, traffic is gradually or instantly redirected to the Green environment (Figure 3).

This method significantly reduces the risks associated with API changes, as the previous version remains fully accessible and can be quickly restored in case of issues with the new release. Such fallback capability is critical for mission-critical systems, where failures or prolonged downtime can result in significant losses. By allowing immediate rollback to the prior stable version, Blue/Green deployment provides an additional layer of reliability. It enables teams to implement new API versions with minimal impact on end users, ensuring a high level of service continuity and user satisfaction [43,45].
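The switch-and-rollback logic can be modeled with a small in-memory sketch. It is a toy model under stated assumptions: environments are plain Python callables rather than real deployments, the switch is instantaneous rather than gradual, and `BlueGreenRouter`, `healthy`, and the handlers are hypothetical names.

```python
class BlueGreenRouter:
    """Route requests to the live environment and allow fast rollback."""

    def __init__(self, blue, green):
        self.environments = {"blue": blue, "green": green}
        self.live = "blue"
        self._previous = None

    def handle(self, request):
        return self.environments[self.live](request)

    def switch(self, health_check):
        """Promote the idle environment if it passes the health check."""
        idle = "green" if self.live == "blue" else "blue"
        if not health_check(self.environments[idle]):
            return False  # keep serving from the current environment
        self._previous, self.live = self.live, idle
        return True

    def rollback(self):
        """Return traffic to the environment that was live before."""
        if self._previous is not None:
            self.live, self._previous = self._previous, None


def blue(request):
    return {"version": "1.0", "echo": request}


def green(request):
    return {"version": "2.0", "echo": request}


def healthy(handler):
    """Probe an environment with a test request."""
    try:
        return handler("probe")["echo"] == "probe"
    except Exception:
        return False


if __name__ == "__main__":
    router = BlueGreenRouter(blue, green)
    router.switch(healthy)
    print(router.handle("ping")["version"])
    router.rollback()
    print(router.handle("ping")["version"])
```

Because the Blue environment is never torn down during the switch, rollback in this model is only a pointer change, which is the property that makes the strategy attractive for mission-critical systems.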

2.5. Including API Versioning in the Protocol

Incorporating versioning directly into the API is an effective method for ensuring compatibility and managing updates. This can be achieved by embedding versioning information in the HTTP header or within the API structure itself, such as in the URL or response body. Each API version is assigned a unique identifier, enabling clients to automatically verify compatibility with the version they are using [46,47,48].

Client applications can use this versioning information to check whether their current interface aligns with the deployed API version. If the API version changes and the client system does not support the new version, clients can be notified of the incompatibility and take appropriate actions, such as adapting to the new version or updating dependencies. This approach prevents unexpected disruptions in client applications and ensures stable interactions, even during frequent API updates.

Although supporting multiple API versions offers flexibility, it also increases maintenance costs for older versions and can slow the adoption of innovations [12].
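A client-side check of a version carried in an HTTP header could be sketched as follows. The header name `X-API-Version`, the "major.minor" value format, and the rule that only the major number must match are assumptions made for this example, not conventions prescribed by the cited sources.

```python
SUPPORTED_MAJOR = 2  # major API version this client was built against


def is_compatible(headers, supported_major=SUPPORTED_MAJOR,
                  header_name="X-API-Version"):
    """Return True when the provider's major version matches the client's.

    headers is a mapping of response header names to values. Header
    names are compared case-insensitively, as HTTP requires.
    """
    value = None
    for name, header_value in headers.items():
        if name.lower() == header_name.lower():
            value = header_value
    if value is None:
        return False  # provider did not declare a version
    try:
        major = int(value.split(".")[0])
    except ValueError:
        return False  # malformed version string
    return major == supported_major


if __name__ == "__main__":
    print(is_compatible({"x-api-version": "2.3"}))
    print(is_compatible({"X-API-Version": "3.0"}))
```

A client that receives a negative result can stop before parsing the body and raise a clear incompatibility error, instead of failing later during deserialization.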

2.6. Analysis of Approaches

Based on the reviewed literature [4,12,30,31,32,33,34,37,38,39,40,42,43,44,45,49], the approaches described above can be compared. The results of this comparative analysis are presented in Table 1.

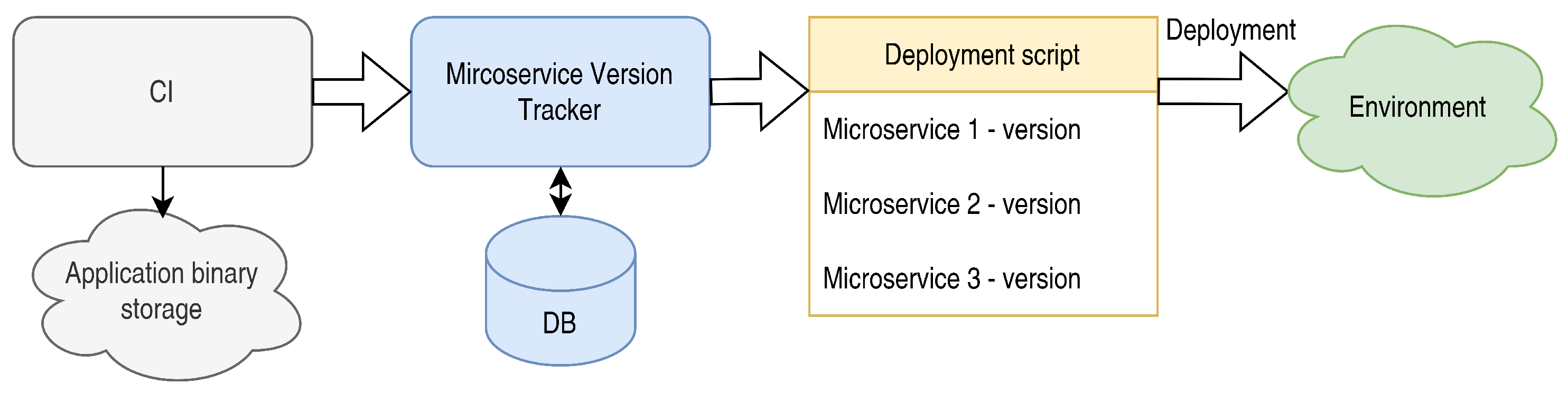

3. API Compatibility-Based Microservice Version Orchestration

The proposed approach represents an evolution of Protocol Description Scheme Generation with the addition of DevOps automation, enabling the development of a strategy termed “Microservice Version Orchestration Based on API Compatibility”. In systems employing a microservice architecture, application APIs can be automatically generated from versioned shared resources, such as JSON schemas or Proto2/3 schemas (Figure 4). The build system then records the service name and API version into an external application, which maintains a repository of all available services and their corresponding API versions.

This centralized repository facilitates the management of API changes and allows for the automatic monitoring of version relevance across all services. Unlike traditional versioning approaches, which primarily focus on maintaining API documentation history, our method introduces an orchestration mechanism that actively manages version compatibility before deployment. Such orchestration ensures that all microservices adhere to the compatibility requirements, providing a robust mechanism for maintaining consistency and stability in dynamic systems. This approach minimizes the risks associated with API updates, enabling seamless integration and coordination across microservices.

To operationalize the orchestration of microservice versions, the framework integrates protocol-specific compatibility validation tools. For gRPC-based services, we employ a containerized version of the protolock utility [50] to detect backward-incompatible changes in .proto interface definitions. This tool identifies breaking modifications in service signatures, such as removed fields or incompatible type changes, before deployment proceeds.

For protocols described with JSON Schema, the framework leverages IBM’s jsonsubschema [51], described in [52], which implements a subtype-based comparison. In this approach, if the new schema is a subtype of the previous one, the change is classified as non-breaking and triggers a minor version increment. Conversely, if the previous schema is a subtype of the new one (but not vice versa), a major version increment is applied due to potential incompatibility. The validator can handle nested data structures (e.g., DTOs or JSON objects with multiple fields) to detect semantic changes beyond purely syntactic signatures. This is essential since parameter structures may embed multiple data portions whose meaning could change while the method signature itself remains the same.
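The subtype-based classification can be illustrated with a deliberately simplified sketch. It does not call jsonsubschema; instead, `is_subschema` is a toy check that only understands flat object schemas with `properties` (each carrying a single `type`) and `required`, with additional properties allowed. Treating every change that fails the subtype test as major, and resetting the minor number to zero on a major increment, are assumptions of this example.

```python
def is_subschema(sub, sup):
    """Toy check: is every document valid under `sub` also valid under `sup`?

    Only flat object schemas with 'properties' and 'required' are handled.
    """
    sub_props = sub.get("properties", {})
    sup_props = sup.get("properties", {})
    # Everything `sup` requires must also be required by `sub`.
    if not set(sup.get("required", [])) <= set(sub.get("required", [])):
        return False
    # Every property `sup` constrains must be constrained identically.
    for name, spec in sup_props.items():
        if name not in sub_props:
            return False
        if sub_props[name].get("type") != spec.get("type"):
            return False
    return True


def classify_change(old_schema, new_schema):
    """Return 'minor' if the new schema is a subtype of the old, else 'major'."""
    return "minor" if is_subschema(new_schema, old_schema) else "major"


def bump(version, change):
    """Increment a 'major.minor' version string according to the change."""
    major, minor = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0"
    return f"{major}.{minor + 1}"


OLD = {"properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
       "required": ["id"]}
# Stricter variant: 'name' becomes required.
STRICTER = {"properties": OLD["properties"], "required": ["id", "name"]}
# Renamed field: 'name' becomes 'full_name'.
RENAMED = {"properties": {"id": {"type": "integer"},
                          "full_name": {"type": "string"}},
           "required": ["id"]}

if __name__ == "__main__":
    print(bump("1.4", classify_change(OLD, STRICTER)))
    print(bump("1.4", classify_change(OLD, RENAMED)))
```

In this toy model, renaming a field is classified as a major change because documents valid under the new schema are no longer guaranteed to satisfy the old one, whereas tightening the schema is classified as minor.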

An API version should consist of two numbers separated by a dot [53]. The first number indicates the introduction of breaking changes relative to the previous version, while the second represents minor updates. This versioning approach enables precise control over the deployment of microservice components, ensuring both interaction stability among services and the gradual implementation of new features.

An external application, independent of the core system, utilizes the provided versioning data to create deployment scenarios based on the compatibility of API versions between providers and consumers. From the subset of service versions that meet the declared compatibility criteria, the application automatically selects the latest release (i.e., the highest semantic-version number) so that deployments always run on the most up-to-date—but still compatible—implementation. The proposed method automates this process by programmatically verifying dependencies and alerting stakeholders when changes necessitate intervention. When a service introduces a new API version, the external application automatically verifies its compatibility with all dependent clients. If the API users are ready to interact with the new API version, the deployment proceeds. However, if incompatibilities are detected, the deployment may be delayed until the API users are adapted or backwards-compatible changes are implemented. This automation-driven approach ensures that developers receive actionable insights rather than raw version history, streamlining the adoption of API changes and reducing deployment friction. The process ensures a balance between maintaining system stability and facilitating innovation in a controlled manner.
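The selection step can be sketched as follows. The compatibility rule used here (same major number, and a provider minor number at least as high as the one each consumer was built against) is an assumption chosen for illustration; the actual criteria are whatever the services declare. The names `select_release` and `is_compatible` are hypothetical.

```python
def parse_version(text):
    """Parse a 'major.minor' string into a tuple of integers."""
    major, minor = text.split(".")
    return int(major), int(minor)


def is_compatible(provider, required):
    """Assumed rule: same major, provider minor >= required minor."""
    return provider[0] == required[0] and provider[1] >= required[1]


def select_release(available, consumer_requirements):
    """Pick the highest provider version compatible with every consumer.

    available: provider API versions present in the registry.
    consumer_requirements: the provider version each consumer targets.
    Returns None when no version satisfies all consumers, in which case
    the deployment would be delayed.
    """
    required = [parse_version(v) for v in consumer_requirements]
    candidates = [
        v for v in available
        if all(is_compatible(parse_version(v), r) for r in required)
    ]
    return max(candidates, key=parse_version) if candidates else None


if __name__ == "__main__":
    print(select_release(["1.0", "1.2", "1.10", "2.0"], ["1.1", "1.2"]))
    print(select_release(["2.0"], ["1.1"]))
```

In the first call the newest compatible release is chosen even though a newer, incompatible major version exists; in the second call nothing is compatible, so the function returns `None` and the deployment would wait for the consumers to be adapted.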

To enhance clarity and reproducibility, we provide a structured overview of the continuous integration (CI) pipeline adopted in our system. For ease of understanding, the core stages of the pipeline are represented using high-level pseudocode (see Algorithm 1), which abstracts away implementation-specific details while preserving logical flow. Additionally, a graphical diagram (Figure 5) is included to visually depict the sequence of stages, data flow, and key decision points within the CI/CD process. This dual representation aims to facilitate comprehension for both technical and non-technical readers, especially in contexts involving microservice architecture and API compatibility validation.

Algorithm 1. CI/CD pipeline with microservice API compatibility validation

    Input: commit (new code pushed to version control)
    Output: Build and deployment status notification

    Step 1: Code Commit Handling
    if commit is pushed to remote repository then
        Log “Commit received” with commit identifier
    end if

    Step 2: Code Retrieval
    Clone codebase from repository

    Step 3: Protocol Dependency Acquisition
    Fetch protocol definitions (OpenAPI, Proto, etc.)

    Step 4: Application Build and Test
    if language is compiled (Go, Java, C#, Rust) then
        Compile source code
        Run unit and integration tests
    else if language is interpreted (Python, JS, Ruby) then
        Verify dependencies
        Lint and test source code
        if packaging required then
            Package artifacts (e.g., wheel, tarball)
        end if
    end if
    if tests failed then
        Notify developer and exit
    end if

    Step 5: Registry Push
    Build container image
    Push image to registry (e.g., DockerHub)

    Step 6: API Metadata Registration
    Extract version, dependencies, and exposed API info
    Register service metadata in Microservice API Tracker system

    Step 7: Compatibility Validation and Deployment
    if API compatibility is valid then
        Generate deployment plan (e.g., Blue/Green)
    else
        Notify developer of API conflict and exit
    end if

    Step 8: Developer Notification
    Inform developer of success and deployment inclusion status

The proposed approach is best suited to systems where operational stability is critical [54]. For instance, in air traffic control systems [55,56] or power grid management infrastructures [57], even minor disruptions can result in substantial financial losses, threats to human safety, or public safety breaches. However, due to the complexity of design, management, and maintenance, employing “API compatibility-based microservice version orchestration” may be less applicable for systems where stability is not a primary concern. In contexts such as small websites, internal corporate applications, or experimental low-load platforms, the focus can shift toward development speed and cost reduction, as potential failures in such systems typically have minimal consequences. Thus, while this approach provides significant advantages for mission-critical systems, its complexity may outweigh its benefits in less stability-dependent environments.

The approach minimizes disruptions by combining compatibility validation with progressive traffic-shifting strategies such as Blue/Green deployments. After a new version is deployed and validated, traffic is gradually redirected from the stable (Blue) environment to the new (Green) environment, ensuring that in case of unexpected failures, the rollback to the previous version is instantaneous and risk free. This enables a controlled deployment process with minimal user-visible downtime, enhancing the reliability of interactions between microservices. Automated management of API versions and their compatibility guarantees system stability, even in complex architectures with numerous dependencies. Nonetheless, this strategy requires the coordination of near-simultaneous deployment of multiple microservices and sufficient infrastructure resources to support parallel environments, which can add implementation complexity.

4. Experimental Setup and Methodology

This experimental study aimed to evaluate the robustness of an automated microservice version orchestration framework under the conditions of breaking changes in the JSON Schema definitions. The core objective was to detect compatibility violations and assess the system’s ability to coordinate safe deployments based on version constraints. The experimental setup utilized a Kubernetes cluster running MicroK8s version 1.31.7 (revision 7979), deployed on two OrangePi 5 single-board computers, each featuring 16 GB of RAM and 64 GB of onboard storage, and powered by Rockchip RK3588 processors (Fuzhou, China). System load was simulated using a Gatling test scenario, configured to generate a sustained traffic of 100 HTTP requests per second for a total duration of 10 min. The microservice system consists of three components:

External HTTP load → MS1 → MS2 → MS3

Communication Protocols:

The API interface definitions (gRPC and JSON Schema) are stored in separate Git repositories and integrated into each microservice as subprojects. A Jenkins CI pipeline monitors any changes to the protocol definitions and performs the following steps:

- Executes backward compatibility checks.
- Applies semantic versioning:
  - Major increment for breaking changes.
  - Minor increment for non-breaking updates.
- Updates a version.info file and assigns Git tags.
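The pipeline steps above can be sketched in Python as follows. This is a minimal illustration, not the pipeline's actual implementation: the function names `is_breaking` and `next_version`, the specific rules used to classify a change as breaking, and the decision to leave the version unchanged when the schema is identical are all assumptions introduced here.

```python
def is_breaking(old: dict, new: dict) -> bool:
    """Heuristic backward-compatibility check for two JSON Schema objects.

    Assumed rules (not taken from the paper): a change is breaking if a
    property is removed or renamed, its type changes, or a new field
    becomes required.
    """
    old_props = old.get("properties", {})
    new_props = new.get("properties", {})
    for name, spec in old_props.items():
        # A removed or renamed property breaks peers still using the old name.
        if name not in new_props:
            return True
        # A type change breaks peers that parse the old type.
        if spec.get("type") != new_props[name].get("type"):
            return True
    # A newly required field breaks producers that do not send it yet.
    if set(new.get("required", [])) - set(old.get("required", [])):
        return True
    return False


def next_version(current: str, old: dict, new: dict) -> str:
    """Bump MAJOR for breaking changes and MINOR for other schema changes."""
    major, minor, _patch = (int(part) for part in current.split("."))
    if is_breaking(old, new):
        return f"{major + 1}.0.0"
    if old != new:
        return f"{major}.{minor + 1}.0"
    return current  # identical schema: no bump (an assumption of this sketch)
```

For example, renaming a property such as `orderId` to `order_id` (hypothetical field names) would move a schema from 1.4.0 to 2.0.0 under these rules, while adding an optional property would move it to 1.5.0.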

4.1. Manual Introduction of a Breaking Change

A breaking modification was introduced into the JSON schema governing the communication protocol between MS2 and MS3, specifically by renaming one of its fields. After this change was committed, the Jenkins pipeline automatically identified it as backward-incompatible and incremented the major version accordingly. Following this version update, the dependent microservice was modified to align with the revised schema.

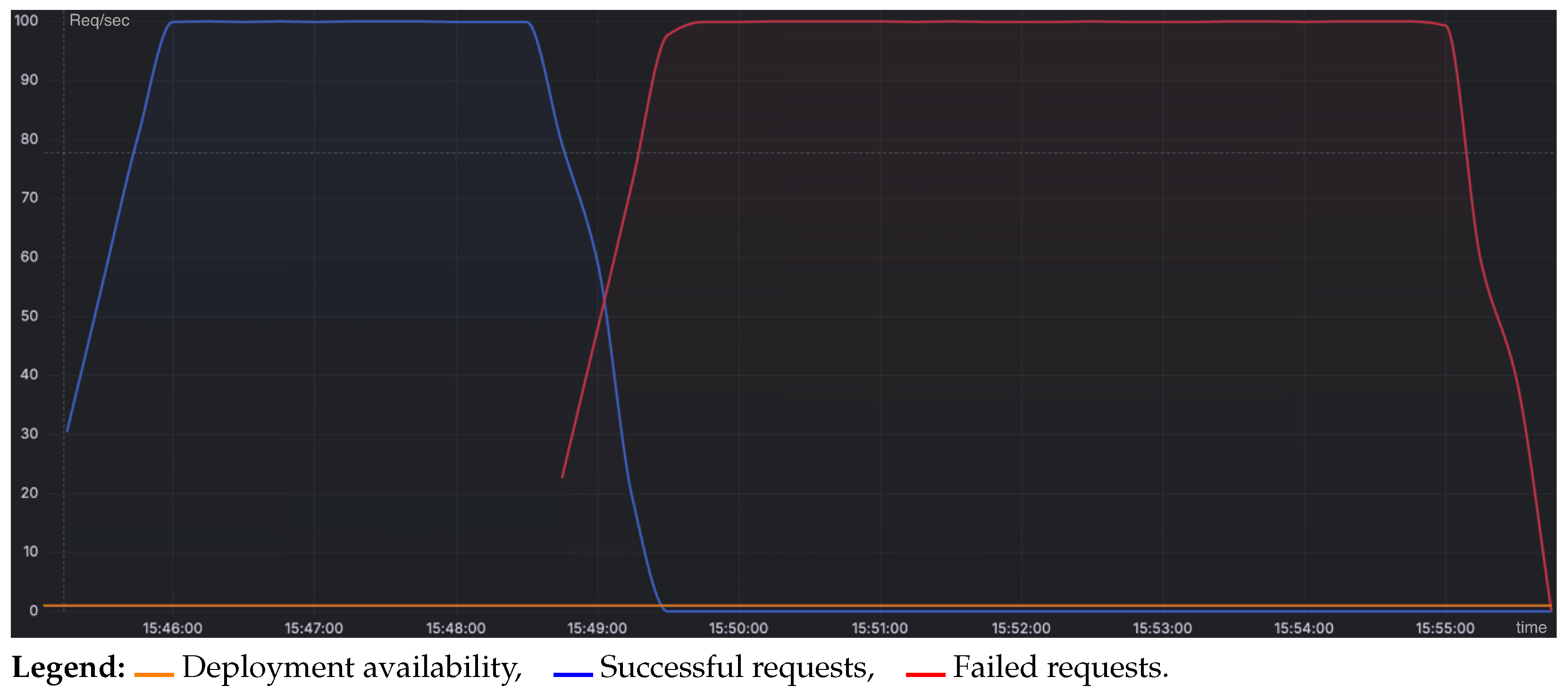

To rigorously evaluate the ramifications of this schema change, load testing was performed using Gatling, with requests routed sequentially through the microservice chain MS1 → MS2 → MS3 (Figure 6). During the testing process, the updated MS2 service was manually deployed to the Kubernetes cluster to simulate the production environment. As a consequence, Gatling (Figure 7) recorded a series of HTTP errors originating from MS3, which were attributable to schema validation failures resulting from the absence of the expected field. These results substantiate that the JSON schema modification introduced an incompatibility between MS2 and MS3.
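The failure mode described above can be illustrated with a small Python sketch. The field names (`orderId`, `order_id`, `amount`), the schema contents, and the 400 status code are hypothetical; the paper states only that a field was renamed and that MS3 returned HTTP errors caused by schema validation failures.

```python
# Hypothetical contract that the not-yet-updated MS3 still validates against.
MS3_SCHEMA = {"type": "object", "required": ["orderId", "amount"]}


def missing_required(schema: dict, payload: dict) -> list:
    """Return the required fields that are absent from the payload."""
    return [field for field in schema.get("required", []) if field not in payload]


def ms3_handle(payload: dict) -> tuple:
    """Simulate MS3: reject payloads that fail required-field validation."""
    missing = missing_required(MS3_SCHEMA, payload)
    if missing:
        return 400, missing  # status code is an assumption of this sketch
    return 200, []


# Payload produced by the old MS2 (matches the contract MS3 expects).
old_ms2_payload = {"orderId": "A-17", "amount": 42}
# Payload produced by the updated MS2 after the field was renamed.
new_ms2_payload = {"order_id": "A-17", "amount": 42}
```

Calling `ms3_handle(new_ms2_payload)` reports `orderId` as missing, which mirrors the absence of the expected field observed in the experiment, whereas `ms3_handle(old_ms2_payload)` succeeds.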

Furthermore, the observed failure radius r = 2 is consistent with the prediction of Equation (3), as the failure in MS2 propagated downstream to MS3 and subsequently impacted the external client.

4.2. Blue/Green Deployment with Incompatible Schema

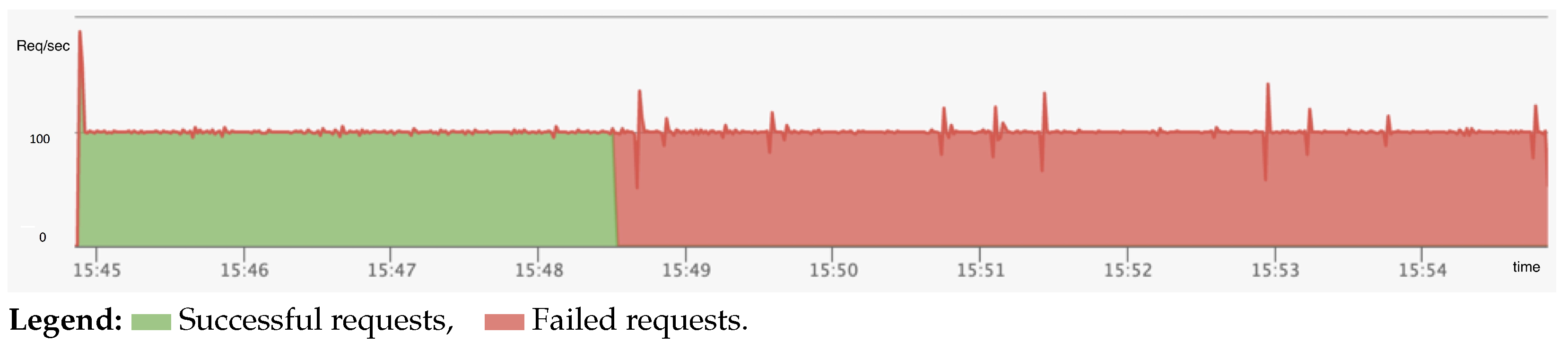

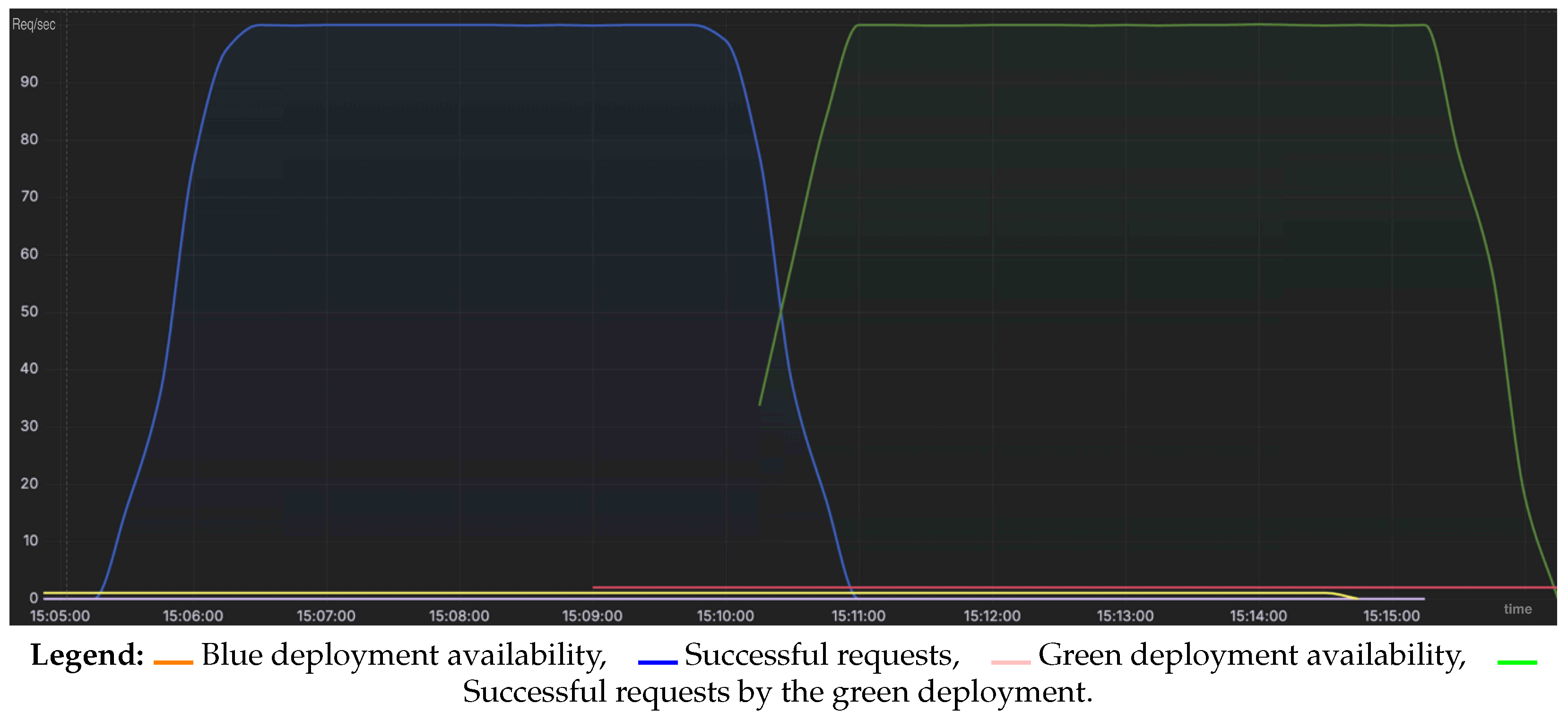

In this scenario, a Blue/Green deployment strategy was applied, in which the Green environment contained a version of microservice MS2 with a breaking JSON Schema change.

At 20:58, the Green version was introduced, and between 20:58 and 20:59, traffic was gradually shifted to the Green environment. By 21:01, Kubernetes’ built-in safeguards triggered traffic throttling and circuit breaking due to request failures caused by schema mismatches (Figure 8), and Gatling began to receive only failed responses (Figure 9).

This result confirmed the runtime instability arising from schema incompatibility under load, despite the partial isolation provided by the Blue/Green deployment approach.

4.3. Coordinated Compatible Deployment

In this scenario, MS3 was updated to support the newer version of the JSON schema already in use by MS2. The Microservice API Tracker generated a compatible deployment sequence for MS1, MS2, and MS3, with newer versions for MS2 and MS3, which was subsequently executed in a coordinated manner. During deployment under load, we observed systemic issues that arise in a distributed microservices architecture when breaking changes are introduced to service APIs, even though all microservices were updated to compatible versions. The core problem stems from non-uniform deployment timing: some services are downloaded and started faster than others. As a result, there is a transient state in which both old and new versions of microservices are simultaneously active, leading to API contract mismatches between interacting components (Figure 10).

This inconsistency creates a race condition where certain services may issue or receive requests incompatible with the current state of their peers, resulting in request failures or unexpected behavior. This phenomenon was particularly pronounced under load, where system stress magnified timing disparities between service startup times (

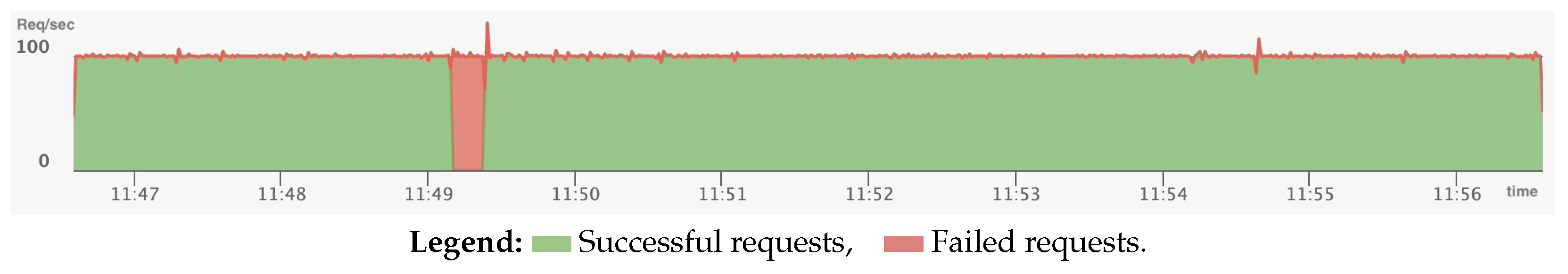

Figure 11).

As evident from the monitoring graphs, a spike in HTTP errors occurred at approximately 11:49, coinciding with the deployment of updated microservice versions. These errors persisted for about one minute and are indicative of temporary API incompatibility between microservices. Specifically, the data suggest that during this interval, a subset of services was still running the old API version, which was not compatible with the newly deployed versions.
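The transient mixed-version state can be approximated with a simple timing model, sketched below in Python. The startup times are invented for illustration and were not measured in the experiment; the helper names are hypothetical, and the estimate assumes that every request crosses the mismatched consumer/provider pair, so it is an upper bound rather than a prediction.

```python
def mismatch_window(ready_at: dict, consumer: str, provider: str) -> tuple:
    """Interval during which only one side of a contract runs the new version.

    ready_at maps a service name to the number of seconds after deployment
    start at which its new version begins serving traffic.
    """
    a, b = ready_at[consumer], ready_at[provider]
    return min(a, b), max(a, b)


def requests_at_risk(window: tuple, rate_per_s: float) -> int:
    """Upper bound on requests that hit the mismatched pair in the window."""
    start, end = window
    return int((end - start) * rate_per_s)


# Illustrative (not measured) startup times for a rolling update, in seconds.
ready_at = {"MS1": 5.0, "MS2": 12.0, "MS3": 72.0}
window = mismatch_window(ready_at, "MS2", "MS3")
at_risk = requests_at_risk(window, rate_per_s=100)
```

Under these assumed timings, MS2 and MS3 disagree on the contract for 60 s; at the 100 requests per second used in the load test, that puts up to 6000 requests at risk, which shows why even a short startup skew becomes visible under load.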

4.4. Coordinated Compatible Deployment Combined with Blue/Green Strategy

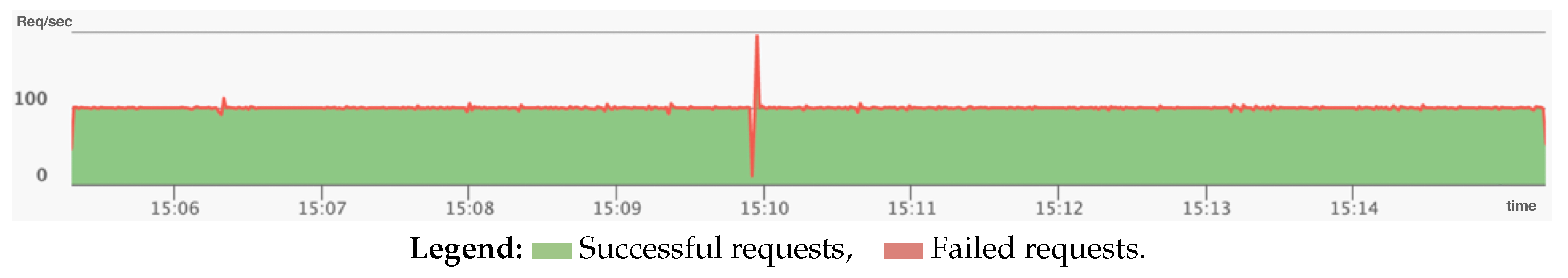

To validate our hypothesis and mitigate the API mismatch issue observed during rolling updates under load, we repeated the previous experiment using a Blue/Green deployment strategy. In this setup, the new version of the microservices (Green environment) was fully deployed in parallel with the existing production version (Blue environment). Traffic was switched to the updated version only after all Green services were confirmed to be healthy and fully operational.
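The gating rule described above, switching traffic only once every Green service is healthy, can be sketched in Python as follows. The function name, the probe callables, and the `switch` callback are hypothetical stand-ins; in a real cluster they would correspond to readiness checks and a routing update, neither of which is specified in this section.

```python
from typing import Callable, Dict


def switch_when_all_healthy(
    green_probes: Dict[str, Callable[[], bool]],
    switch: Callable[[], None],
) -> bool:
    """Route traffic to Green only if every Green service reports healthy.

    Returns True if the switch was performed, False if it was withheld.
    """
    unhealthy = [name for name, probe in green_probes.items() if not probe()]
    if unhealthy:
        # At least one Green service is not ready: keep traffic on Blue.
        return False
    switch()
    return True
```

Because the switch is a single all-or-nothing step, clients never see a mixture of old and new contract versions, which is the property the rolling update in the previous experiment lacked.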

The deployment of the new version at 15:09 was followed by traffic redirection by 15:10, with no errors or circuit-breaking events observed (Figure 12). A slight increase in response latency occurred during the transition period, consistent with the expected behavior under load conditions (Figure 13).

6. Conclusions

The evolutionary development of APIs in microservice architectures demands systematic change management to ensure stability, compatibility, and security in highly dynamic environments. This study demonstrated that the Compatibility-Driven Version Orchestrator is effective at mitigating the risks of breaking changes, reducing mean time to recovery (MTTR), and improving the resilience of microservice-based systems.

Experimental results confirmed that combining coordinated dependency updates with Blue/Green deployment strategies can practically eliminate the transient failures commonly seen with rolling updates, even when versions are otherwise compatible. This positions the orchestrator as a particularly valuable approach for mission-critical domains, including energy, aviation, and financial services, where downtime is unacceptable.

Nevertheless, this method requires investment in the supporting infrastructure, including a centralized API registry, automated compatibility verification, and sophisticated monitoring capabilities. Its complexity may be excessive for small-scale or experimental projects with lower risk profiles.

Future research should focus on scaling the framework and validating its effectiveness under industrial-grade workloads. In addition, aligning its security model with Zero-Trust requirements would help strengthen external validity and industry adoption.