Abstract

Workplace safety training is the cornerstone of workplace safety and accident prevention. Where people rotate frequently, as in the laboratories of higher education institutions, where students perform tasks as part of their education, workplace safety training demands considerable effort from the supervising instructors. Self-guided workplace safety training may therefore substantially reduce the instructors' workload. In this evaluation study, we investigated to what extent an augmented reality (AR) app is suitable for workplace safety training. The prototypical AR app is based on an AR platform (Google Tango) that performs tracking using visual Simultaneous Localization and Mapping (vSLAM). The workplace safety training covered two general stations and two devices in the workshop of an environmental engineering laboratory at a higher education institution. A total of 12 participants took part in the mixed-methods study. Standardized questionnaires were used to assess usability, cognitive load and the learning prerequisites of motivation and emotion. Qualitative data were collected in subsequent semi-structured interviews. The app achieved good usability, and the values for cognitive load, as well as those for the learning prerequisites of motivation and emotion, can be classified as conducive to learning. The interviews revealed strengths of the app as well as potential improvements. The study showed that using vSLAM-based AR apps for workplace safety training is a viable approach. However, to further establish AR-app-based workplace safety training, these insights need to be ported to a new AR platform, as the platform used has since been discontinued.

1. Introduction

The use of laboratories and workshops is part of higher education, not only in engineering. Using these facilities often involves risks to the health and safety of students. Workplace safety training (WST) is therefore indispensable for reducing these risks. WST is currently often provided in face-to-face sessions using conventional learning methods, such as presentations [1]. In recent years, new media, such as virtual reality (VR) or augmented reality (AR), have matured, allowing their affordances to be exploited for more memorable learning experiences aimed at improving learning outcomes. AR is a technology in which the real world is enriched with computer-generated (often visual) stimuli [2,3,4]. In educational contexts, additional information can thus be integrated into reality to promote learning. A further development of recent years is the spread of mobile handheld devices, such as smartphones, which in most cases can also be provided by the students themselves (bring your own device (BYOD)). Handheld devices using AR might therefore also be used for self-guided safety training in laboratories or workshops, reducing the instructors’ workload and making training less dependent on fixed schedules.

The ARTour app is a mobile AR app that was prototypically developed to introduce apprentices to a car manufacturer’s training workshops [5]. Initial evaluations have been positive [6]. A special characteristic of the app is its tracking: the app determines its position in space from the visual characteristics of the space itself. This visual Simultaneous Localization and Mapping (vSLAM) technique differs from the tracking methods frequently used in educational contexts, which mostly rely either on markers, i.e., optical features such as QR codes that are placed in the real world beforehand and also need to be maintained [7,8,9,10,11], or on digital localization technologies, such as beacons [12] or GPS [13,14,15]. It is also important to differentiate between indoor and outdoor tracking. While most tracking methods work in both indoor and outdoor environments, vSLAM is used as an indoor tracking method. Owing to this distinctive characteristic, this study addresses the research question (RQ) of whether self-guided WST using a vSLAM-based AR app on a handheld device is a viable option. In the study, we consider WST a learning setting, since its goal is to impart knowledge relevant to workplace safety to the students.

The rest of the article is structured as follows: The next section outlines the theoretical background, and this is followed by a presentation of the methodology in Section 3. Section 4 describes the quantitative and qualitative results, which are discussed in Section 5. Finally, Section 6 draws the conclusions.

2. Background

2.1. Workplace Safety

In 2022, there were 2.97 million non-fatal workplace accidents in the European Union involving at least 4 days of sick leave, as well as 3289 fatal accidents at work [16]. In addition to the individual human harm, these accidents cause significant economic damage, which comprehensive workplace safety measures should mitigate [17,18]. Workplace safety is defined by Beus et al. (2016) [19] as “an attribute of work systems reflecting the (low) likelihood of physical harm—whether immediate or delayed—to persons, property, or the environment during the performance of work”. Beus et al. present the Integrated Safety Model, in which safety-related knowledge, skills and motivation are considered determining factors for workplace safety. One of the measures used to achieve workplace safety is employee training [20,21]. In their review, Robson et al. (2012) [22] demonstrate the positive effects of training on workplace-safety-relevant behavior. Further studies suggest that WST influences the safety-related behavior of vulnerable employees and thus has the potential to reduce work-related injuries [23,24,25]. The construction industry is one of the largest areas of application for WST [26,27,28,29,30]. Other fields of application include healthcare, agriculture and energy [31], as well as transportation, manufacturing and the workplace in general [1].

2.2. Digital Educational Media

In recent decades, digital tools have become increasingly available for training and education [32,33]. In WST, too, a growing number of digital tools are available that are said to foster better learning outcomes than conventional methods [30,34]. The following examples illustrate the range of digital educational tools in the domain of workplace safety:

- Szabó (2024) [35] presents a combination of the learning management system Moodle and the video conferencing system BigBlueButton as a digital online environment for teaching workplace safety competencies.

- Pink et al. (2016) [28] evaluate digital videos to improve workplace safety in the construction industry.

- Vukićević et al. (2021) [36] introduce a mobile app to promote workplace safety.

- Castaneda et al. (2013) [37] examine the extent to which a telenovela promotes workplace safety.

- Hussain et al. (2024) [26] highlight the positive effect of using AI tools in the form of large language models to increase knowledge gain from WST for foreign-language employees in the construction industry.

- Fortuna et al. (2024) [38] have developed a serious game to teach workplace safety rules in a machining workshop. Pietrafesa et al. (2021) [39] have also responded to the integration of work-based learning into the curriculum by using a serious game for workplace safety training.

2.3. Immersive Media

Badea et al. (2024) [31] identify new media, such as VR and AR, as ways to reduce workplace accidents, improve knowledge acquisition and increase employee knowledge. Sacks et al. (2013) [30] recommend VR as a medium for WST based on their comparative study. Li et al. (2018) [27] conclude that the use of immersive media requires a solid theoretical basis in the technical domain of workplace safety.

VR-based WST and AR-based WST follow different approaches. VR-based WST uses a virtual environment; in construction WST, for example, 360° VR-based virtual field trips [40] are often used. In contrast, AR-based training takes place on site in the real world, which is augmented with additional information and instructions [1]. Accordingly, AR-based WST always requires access to the actual real-world environment, and there should be no risk to or from the trainees. VR-based WST is more independent in this respect, as it does not require access to the real environment and can also be used for risky environments without posing any additional danger to the trainees [41,42]. Differences between VR- and AR-based WST have also been identified from a learning psychology perspective, for example, with regard to presence, immersion, cognitive load and learning prerequisites such as motivation and emotion [43,44]. However, most findings are not yet conclusive, except that both VR and AR appear to have positive effects on motivation and engagement [14,42,43,45].

Babalola et al. (2023) [2] also conduct a systematic literature review (SLR) on immersive media, such as AR and VR, for improving workplace safety. Among other things, they criticize the small sample sizes of the studies examined, which indicate the early stage of these technologies’ development and integration into education, and point out the high effort required to conduct training with immersive media. For VR-based training, Stefan et al. (2023) [46] find an advantage over conventional learning methods, but also note that development is still at an early stage. Zhang et al. (2025) [47] exploit the advantages of dynamic AR-based instructions in a workplace assistance system for high-precision assembly tasks. Gong et al. (2024) [1] find a positive influence of AR on WST in their SLR.

2.4. Mobile AR

As already mentioned, AR extends the real world with digitally generated stimuli, usually visualizations [2,4,48,49]. A distinction is made between immersive (usually using headsets) and non-immersive (usually using handheld devices) experiences [1]. In the case of handheld devices, there are also differences between video see-through (the augmentations are integrated into a live video from the camera of the handheld device) and optical see-through (the augmentations are displayed on a semi-transparent optical element) [4]. When a smartphone is used, as intended here, to provide a low-threshold option for WST on hardware that is as widely available as possible, the result is a non-immersive video see-through variant. The tracking approach, i.e., the continuous localization of the handheld device in the environment, is also important; it should work without the marker scanning that is common in educational contexts [50]. In terms of subject matter, a tour of a workplace, such as a workshop or a laboratory, is required. Little appears to be known in the literature so far about applications that meet all four requirements: video see-through, smartphones as devices, markerless tracking and an indoor workplace tour. For example, Tatić and Tešić (2017) [51] use marker-based AR to conduct WST, and Ismael et al. (2024) [52] conduct laboratory safety training using AR glasses. Gong et al. (2024) [1] have conducted a recent SLR on safety training with AR; none of the 37 included articles describes a study that possesses all four characteristics.

3. Materials and Methods

3.1. AR App

The AR app was developed using the Google Tango platform as part of a research project [5]. Google Tango enables mobile devices to use sensors, in particular an RGB-D camera [53], for three-dimensional sensing and mapping of the environment in real time. An RGB-D camera simultaneously provides a color image of the environment and depth information for the objects depicted [54]. This information can be used to digitally reconstruct the environment while simultaneously localizing the device within it, which is known as Simultaneous Localization and Mapping (SLAM). When only visual information is used, this is referred to as visual SLAM (vSLAM). Although Google Tango also integrates data from depth sensors, its tracking is a form of vSLAM. Overall, the technical features of Google Tango can be used for (a) 3D spatial perception, which allows virtual objects to be placed precisely in the real environment, (b) motion tracking using cameras and sensors to follow the movement of the mobile device, (c) depth measurement to determine the distance to objects and (d) spatial mapping to create a 3D model of the environment. Google Tango enables the location and orientation (pose) of the handheld device to be determined without external positioning services, such as GPS [15,55,56]. However, Google Tango requires special devices because it depends on specific sensors [54]. The Asus ZenFone AR (Asus, Taipei, Taiwan) used in the study is one of these devices. Typical applications for Google Tango devices include indoor navigation [57], virtual furnishing [58] and assistance systems for vision-impaired people [59]. Google Tango has since been replaced by ARCore [60], which works without special devices and can therefore be used more widely.
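To illustrate what a tracked pose enables, the following Python sketch shows how a pose (a position plus an orientation quaternion) can be used to express a world-anchored virtual object in the device's own coordinate frame, which is the step needed before an augmentation can be drawn at the right place. It is a minimal sketch under stated assumptions: a right-handed frame, unit quaternions in (w, x, y, z) order and hypothetical function names. It does not reproduce the coordinate conventions of Google Tango or ARCore.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed to be unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q using v' = v + 2w(u x v) + 2u x (u x v)."""
    w, u = q[0], q[1:]
    t = cross(u, v)
    t2 = cross(u, t)
    return tuple(v[i] + 2 * w * t[i] + 2 * t2[i] for i in range(3))


def world_to_device(position: Vec3, orientation: Quat, point: Vec3) -> Vec3:
    """Express a world-frame point in the device frame, i.e. R^T (p - t).

    position/orientation form the hypothetical tracked pose of the device;
    point is, e.g., the world position of a virtual safety sign.
    """
    w, x, y, z = orientation
    inverse = (w, -x, -y, -z)  # the conjugate is the inverse of a unit quaternion
    offset = tuple(point[i] - position[i] for i in range(3))
    return rotate(inverse, offset)
```

For example, if the device is rotated by 90° about the z axis, an anchor lying on the world y axis ends up on the device's x axis. Which device axis points out of the camera is platform-specific and is not modeled in this sketch.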

The ARTour app consists of a JavaScript framework that builds on the Google Tango platform and manages objects with attributes and visualizations. In an edit mode for creating a tour, the environment is first captured and mapped by moving the smartphone systematically [5]. Then, virtual objects are placed in the environment to annotate physical objects. These objects are given attributes that also influence the visualizations and define the order of the locations to be visited (Figure 1). As soon as the device comes within a minimum distance of one of these locations, information about this location can be displayed, and interactions, such as answering multiple-choice questions (MCQs), can be configured. Navigation is supported by a distance indicator and an arrow pointing in the direction of the next location to be visited. At the end of a tour, a certificate of successful completion can be issued if the interactions have been carried out successfully, i.e., if the intended locations have been visited and the MCQs have been answered (mostly) correctly. After a tour has been configured in the edit mode, it can be carried out in the tour mode, as shown in the video [61]. The app had already been shown to have good usability during development [6]. However, studies on its actual learning effectiveness are scarce. The present study contributes to addressing this gap.
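The tour-mode behavior described above (a fixed station order, a minimum-distance trigger, and a distance indicator plus a direction arrow) can be sketched as follows. This is not the ARTour implementation, which is JavaScript-based and not shown in the source; it is a hypothetical Python illustration that assumes 2D floor-plane coordinates in metres, a device heading (yaw) in radians and a trigger radius of 1.0 m chosen only for illustration. Class and method names are likewise hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Station:
    name: str
    x: float  # hypothetical floor-plane coordinates in metres
    y: float


@dataclass
class Tour:
    stations: List[Station]        # visited strictly in this order
    trigger_radius: float = 1.0    # hypothetical "minimum distance" in metres
    current: int = 0               # index of the next station to visit
    visited: List[str] = field(default_factory=list)

    def finished(self) -> bool:
        return self.current >= len(self.stations)

    def update(self, dev_x: float, dev_y: float,
               heading: float) -> Optional[Tuple[str, float, float, bool]]:
        """Process one tracked device position.

        heading is the device yaw in radians (0 = +x axis, counter-clockwise
        positive). Returns (station, distance, arrow_angle, reached), where
        arrow_angle is relative to the heading and wrapped to [-pi, pi);
        returns None once every station has been visited.
        """
        if self.finished():
            return None
        target = self.stations[self.current]
        dx, dy = target.x - dev_x, target.y - dev_y
        distance = math.hypot(dx, dy)            # value for the distance indicator
        angle = math.atan2(dy, dx) - heading     # direction for the arrow
        angle = (angle + math.pi) % (2 * math.pi) - math.pi
        reached = distance < self.trigger_radius
        if reached:                              # show station content, then advance
            self.visited.append(target.name)
            self.current += 1
        return target.name, distance, angle, reached
```

In this sketch, a station counts as reached as soon as the device comes within the trigger radius, after which the arrow switches to the next station in the fixed order. A real app might add hysteresis or smoothing of the tracked position, which is not modeled here.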

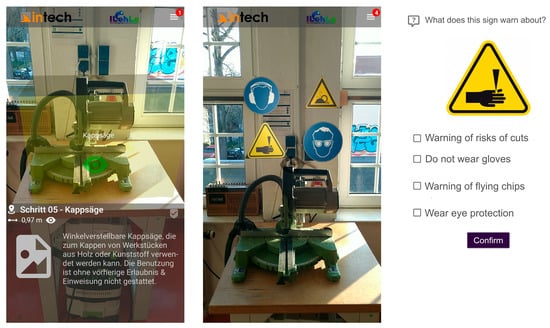

Figure 1.

Augmentation scheme in the app near an object (here, a cross-cut saw): information text and green eye-shaped cursor for selection of the object (left), warning symbol (center) and MCQ (right).

3.2. Safety Training Scenario

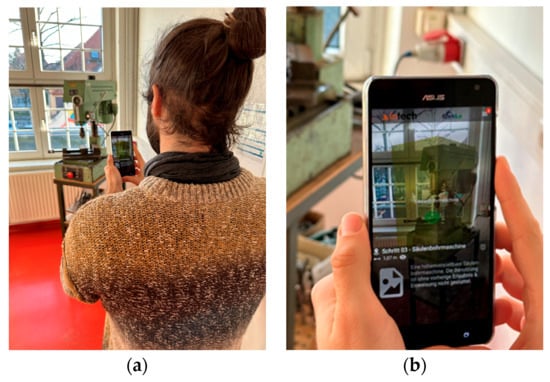

The environmental engineering laboratory (“Technikum”) of the higher education institution (HEI) is integrated into environmental engineering teaching. As part of their studies, students have to construct experimental facilities and carry out experiments. For the construction work, students have access to the laboratory’s workshop. Safety training is required to gain access to the workshop. It covers instructions on how to operate the machinery in the laboratory as well as explanations of where to find materials, tools and first aid kits. For this study, a shortened safety training program was designed and implemented in the AR app. This shortened version is based on the transcript of an exemplary safety training session provided to the researchers by the head of the laboratory. The participants were introduced to the app, and the experimenter gave them the instructions summarized below during the Introduction and Tutorial Activity (cf. Figure 2): The tour consists of four stations: first, a station that explains how tools can be borrowed from the workshop; then a station that provides general information about the permanently installed devices; and one station each for two of these devices, a box column drill and a cross-cut saw (Figure 3). Each station presents general information about its topic. In addition, the stations for the permanently installed devices present the safety guidelines that must be followed when using these devices (e.g., the requirement to wear safety glasses).

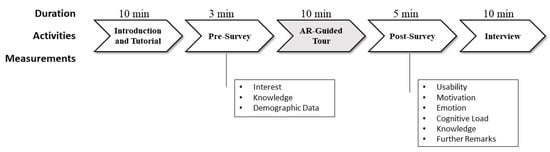

Figure 2.

Study design.

Figure 3.

Safety training equipment: cross-cut saw (front left) and box column drill (back right).

An introduction to the scenario is provided on a separate screen in the app itself. The tour sequence is predetermined, so the participants have to pass through the stations in a fixed order. At each station, the relevant information is displayed as explanatory text on an information screen. In addition, the relevant safety instructions for the devices are displayed as AR signs placed on the device (Figure 1). To read and check off these safety instructions, the participants have to select the signs in the app (by pointing at them with an eye-shaped cursor). There are three signs around the box column drill and four around the cross-cut saw. At the end of the tour, three MCQs, randomly selected from a pool of six, are used to assess the learning outcomes. A certificate is then displayed, which confirms that the participant either “passed” or “failed” depending on the answers to the MCQs. For a participant who is familiar with the app, the tour takes just under five minutes; for less experienced participants, it takes closer to ten minutes.
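The quiz and certificate step can be sketched in Python as follows: three questions are drawn without replacement from a pool of six, and a pass/fail certificate is derived from the answers. The pass threshold (two of three correct, as one reading of the “(mostly) correctly” criterion in Section 3.1) and the use of fully checked-off safety signs as the completion condition are hypothetical assumptions; the exact rule used by the app is not stated. All function names are illustrative.

```python
import random
from typing import Dict, List, Optional, Sequence

# Number of AR safety signs per device in the study tour (Section 3.2).
SIGNS_PER_DEVICE: Dict[str, int] = {"box column drill": 3, "cross-cut saw": 4}


def draw_quiz(pool: Sequence[str], k: int = 3,
              rng: Optional[random.Random] = None) -> List[str]:
    """Draw k distinct questions from the pool (sampling without replacement)."""
    if rng is None:
        rng = random.Random()
    return rng.sample(list(pool), k)


def all_signs_checked(checked: Dict[str, int]) -> bool:
    """Hypothetical completion rule: every sign at every device was selected."""
    return all(checked.get(device, 0) >= required
               for device, required in SIGNS_PER_DEVICE.items())


def certificate(tour_completed: bool, n_correct: int, min_correct: int = 2) -> str:
    """Hypothetical pass rule: tour completed and at least min_correct answers right."""
    return "passed" if tour_completed and n_correct >= min_correct else "failed"
```

Passing a seeded `random.Random` makes the draw reproducible in tests; an unseeded generator gives each participant a different subset of the pool.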

3.3. Study Design

The study followed a mixed-methods research design and aimed to evaluate the prototype of the AR app introduced in Section 3.1. The evaluation focused on the user friendliness of the app, the comprehensibility of the safety content and the assessment of learning-relevant factors, such as motivation and emotion, evoked when using the app.

Prior to the commencement of the study, participants were fully informed about its purpose and procedures. Each participant received a detailed written consent form, which they signed voluntarily after being provided with comprehensive information. The confidentiality of all collected data was ensured, with data being anonymized and used solely for research purposes. Participants were assured of their right to withdraw from the study at any time without facing any disadvantages.

3.4. Participants

A total of 12 people (8 male, 4 female) aged between 26 and 56 years (mean: 35.9, SD: 9.22) took part in the study: five scientific employees and seven students of the HEI, recruited through convenience sampling. They were not remunerated for their participation. The participants had varying degrees of previous experience with AR technologies and varying levels of prior knowledge of the safety-related aspects of the specific workshop. However, all participants had previous experience with WST: the scientific employees through their teaching activities, in which they instruct students in face-to-face settings in the laboratory, and the students through having each completed at least two of these WST programs as research assistants. In this way, a diverse range of perspectives on the app was elicited. The study was conducted entirely in German.

3.5. Evaluation

The entire evaluation study [62] consisted of several phases (Figure 2):

Figure 4.

Example sequence illustrating the app’s use. Exploring the box column drill: overview (a) and AR screen (b).

1. Introduction

The participants were welcomed by the experimenter at the beginning of the study. In this context, they were informed about the general course of the evaluation process. Care was taken to ensure that any questions or ambiguities were clarified in advance to ensure that participants fully understood the remainder of the study and their role in it. All participants gave their consent to take part in the study.

2. Tutorial

As AR technology was new to some of the participants, they were offered a short tutorial before using the actual prototype. This tutorial explained the basic functions of the AR app and guided the participants step by step through the handling of the app. The aim was to ensure that all participants achieved the minimum level of operating competence required to be able to complete the subsequent safety briefing. The tutorial, guided by a one-page information sheet, lasted around 5–10 min and was given by the experimenter. The experimenter was available during the safety training for supervision and support of the participants.

3. Pre-Survey

After the introduction and tutorial, participants completed a questionnaire about their demographic data. This questionnaire was used to collect relevant background information on the participants, allowing the detailed classification of the results. The questions covered demographic variables such as age, gender and technical affinity in relation to AR applications.

4. AR-Guided Tour

Following the pre-survey, the participants started the actual safety training using the AR app. This training was intended to convey the most important safety aspects for selected parts of the workshop. The participants moved through the workshop on a tour guided by the AR app.

5. Post-Survey

Following the safety training, participants were asked to complete a questionnaire consisting of the following standardized instruments:

(1) System Usability Scale (SUS, Brooke, 1996) [63]. A key feature of an app is its user friendliness, which is crucial, especially for prototypes under development, such as the one used here. Brooke’s SUS is an established, short 10-item questionnaire that can be used universally, including for apps. Condensing the responses into a single score between 0 and 100 makes usability values comparable.

(2) Cognitive Load (CL, Klepsch et al., 2017) [64]. In addition to usability, the mental load on the participants, also known as cognitive load (CL), was measured. Cognitive load theory [65] assumes that learning is associated with cognitive effort. A high CL can overwhelm users and limit the usability of an app, whereas a low CL often enables efficient task processing. In particular, the CL of AR apps might be high compared to traditional learning tools [45]. The CL was measured using the subscales Intrinsic Cognitive Load (ICL), Extraneous Cognitive Load (ECL) and Germane Cognitive Load (GCL).

(3) Motivation [66]. Motivation is one of the prerequisites for learning and has a significant influence on learning success since it determines how intensively learners engage with the subject matter [67]. The Isen & Reeve questionnaire systematically assesses the fundamentals of learning motivation in just 8 items.

(4) Achievement Emotions Questionnaire (AEQ) [68]. Emotions are also a prerequisite for learning because they influence attention and memory, among other things, and thus affect the learning process [69]. We used the eight items from the AEQ to assess learning-related emotions.

Apart from the SUS, which uses a five-point Likert scale, the other three instruments each utilize a seven-point Likert scale.

6. Semi-Structured Interview

Following the post-survey, the experimenter conducted a 10-min semi-structured interview with each participant. The interviews allowed participants to elaborate on specific aspects of the user experience and to raise unforeseen topics. The following questions structured the interview:

(1) How well did you get on with the app?

(2) Do you think that the app allows you to grasp the key elements of the workshop, i.e., can you get to know the workshop if all the important stations are included in the tour?

(3) How do you see the app compared to a face-to-face introduction to the workshop?

(4) What do you think of the concept of an app-based introduction overall? What do you see as the advantages? What are the disadvantages? (Compared to a face-to-face introduction/compared to a group-based introduction?)

The questions were aimed at obtaining a more detailed assessment of app usage and identifying any weaknesses or potential for improvement. The results were subjected to a qualitative analysis [70] performed by the experimenter and validated by one of the co-authors.

4. Results

4.1. Quantitative Results

4.1.1. Interest in the Workshop

Participants’ interest in the workshop environment was measured on a 10-point Likert scale (1 = “very little interest”; 10 = “very high interest”). The mean value was 6.8 (SD = 2.80), which indicates a rather average to slightly above average interest in the specific workshop environment.

4.1.2. Perceived Knowledge

One aim of the study was to assess the participants’ perceived knowledge of the workshop both before and after the WST in order to estimate the learning outcomes. Pre-training knowledge was rated at a mean of 4.6 out of 10 points (SD: 3.52) and post-training knowledge at 6.8 points (SD: 1.95). A Wilcoxon signed-rank test indicated a significant difference between pre-training knowledge (Mdn = 4.5, n = 12) and post-training knowledge (Mdn = 7, n = 12), Z = 2.1, p = 0.033, with an effect size of r = 0.8, which corresponds to a strong effect according to [71].

The significant difference reflects an increase in perceived knowledge of the workshop. Comparing perceived knowledge before and after the WST, seven participants indicated an improvement, four indicated the same level before and after, and one participant indicated a reduction of 2 points. However, this participant had rated their prior knowledge with the highest possible score of 10. The WST thus presumably made this person aware of gaps in their knowledge of the workshop, which might qualify as a learning outcome. The reduction in the standard deviation of perceived knowledge (from 3.52 to 1.95) should also be noted, as it indicates a more homogeneous level of knowledge after training.
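For readers less familiar with the procedure, the following Python sketch shows how a paired Wilcoxon signed-rank test with a normal approximation, and the effect size r = |Z|/√N, can be computed. It is not the authors’ analysis script. The handling of zero differences (discarded), tied ranks (averaged, with a tie-corrected variance) and the choice of N for r are assumptions, and statistical packages differ in these details; SciPy’s `scipy.stats.wilcoxon`, for instance, has a `zero_method` parameter that controls how zero differences are treated.

```python
import math
from statistics import NormalDist
from typing import Sequence


def wilcoxon_signed_rank(pre: Sequence[float], post: Sequence[float]) -> dict:
    """Paired Wilcoxon signed-rank test using a normal approximation.

    Assumptions: zero differences are discarded (Wilcox's method), tied
    absolute differences get average ranks, the variance is tie-corrected
    and no continuity correction is applied.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        # extend j over a run of equal absolute differences
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(variance)
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return {"n": n, "w_plus": w_plus, "w_minus": w_minus, "z": z, "p": p}


def effect_size_r(z: float, n_obs: int) -> float:
    """r = |Z| / sqrt(N); which N to use varies between textbooks."""
    return abs(z) / math.sqrt(n_obs)
```

Because this sketch discards zero differences, participants whose ratings did not change do not contribute to the test statistic; other zero-handling conventions yield slightly different Z values.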

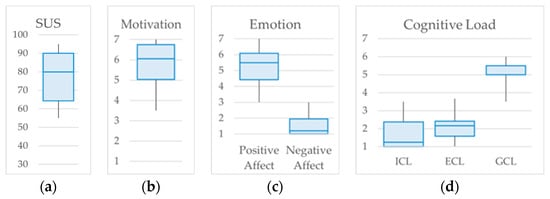

4.1.3. System Usability Scale (SUS)

The SUS score reached a mean of 77.1 (SD = 13.81), which indicates good overall usability of the app (for the boxplot, cf. Figure 5). Usability was thus rated slightly better than in the study conducted during the development of the app [6].

Figure 5.

Boxplots of the surveyed SUS (a), motivation (b), Positive and Negative Affect (c) and the three dimensions (intrinsic (ICL), extraneous (ECL) and germane (GCL)) of cognitive load (d).
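The SUS mean reported above is based on Brooke’s standard scoring rule, which the following Python sketch implements for one participant’s ten responses; the study mean is then the ordinary average of the per-participant scores. The function name is illustrative, and the sketch assumes complete responses, since the handling of missing items is not described.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS scoring for 10 items rated 1-5.

    Odd-numbered (positively worded) items contribute (response - 1),
    even-numbered (negatively worded) items contribute (5 - response);
    the sum (0-40) is multiplied by 2.5 to give a score from 0 to 100.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be between 1 and 5")
    total = 0
    for index, r in enumerate(responses, start=1):
        total += (r - 1) if index % 2 == 1 else (5 - r)
    return total * 2.5
```

A participant who answers “3” to every item thus receives a score of 50, the midpoint of the scale.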

4.1.4. Motivation

The motivation of the participants reached a mean value of 5.8 (SD = 1.17). This indicates a moderate to high level of motivation overall, which suggests that the participants were motivated to engage with the content of the AR app despite the technological requirements (for the boxplot, cf. Figure 5).

4.1.5. Emotion

The eight emotions queried were measured separately and subsequently combined into Positive Affect and Negative Affect for simplicity. Positive Affect had a mean value of 5.2 (SD = 1.56), which indicates predominantly positive emotional reactions of the participants to the use of the AR app. Negative Affect, on the other hand, had a mean value of 1.6 (SD = 0.99), which indicates that negative emotions hardly played a role during the use of the app. The low mean and low variance of Negative Affect show that almost all participants experienced few negative emotions, such as frustration or being overwhelmed (for the boxplot, cf. Figure 5).
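The aggregation of the individual emotion items into Positive Affect and Negative Affect can be illustrated with the following Python sketch. This section does not list which of the eight AEQ items were assigned to which scale, so the item names and the four-by-four split below are hypothetical. Each participant’s scale score is taken as the mean of their item ratings, and the group is summarized by the mean and sample SD, matching the form of the values reported above.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

# Hypothetical assignment of eight emotion items to the two scales.
POSITIVE_ITEMS = ("enjoyment", "hope", "pride", "relief")
NEGATIVE_ITEMS = ("anger", "anxiety", "shame", "boredom")


def affect_scores(ratings: Dict[str, int]) -> Tuple[float, float]:
    """Mean rating (1-7) on each scale for one participant."""
    positive = mean(ratings[item] for item in POSITIVE_ITEMS)
    negative = mean(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative


def summarize(participants: List[Dict[str, int]]) -> Dict[str, Tuple[float, float]]:
    """Group mean and sample SD of each scale across participants."""
    scores = [affect_scores(p) for p in participants]
    positive = [s[0] for s in scores]
    negative = [s[1] for s in scores]
    return {
        "positive": (mean(positive), stdev(positive)),
        "negative": (mean(negative), stdev(negative)),
    }
```

Averaging rather than summing the items keeps both scale scores on the original 1 to 7 response range, so they can be read directly against the seven-point scale.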

4.1.6. Cognitive Load

The Intrinsic Cognitive Load (ICL), which refers to the complexity of the task, had a mean value of 1.8 (SD = 1.24). This relatively low value indicates that the participants did not find the content-related requirements of the WST overly demanding. The Extraneous Cognitive Load (ECL), which reflects the load caused by the app design, had a mean of 2.1 (SD = 1.19). This value can be considered rather low, indicating that operating the app required little mental effort. The Germane Cognitive Load (GCL), which describes the load that is conducive to learning, had a mean value of 5.1 (SD = 1.90). This comparatively high value suggests that the participants were able to process the learning content and integrate it into their knowledge base. Overall, the combination of high GCL and low ECL results in a situation that seems conducive to learning for a topic that is not too complex (for the boxplot, cf. Figure 5).

4.2. Semi-Structured Interviews

During the interviews, numerous pieces of feedback and points of criticism regarding the use of the AR app in the context of the safety briefing were collected. The analysis of this feedback revealed both positive and negative aspects of the app. These aspects are of central importance both for the evaluation of the app’s effectiveness and for the further app development. The eight clusters identified from a total of 141 codes in the qualitative analysis are described in the following.

4.2.1. Previous AR Experience

Participants’ previous knowledge varied. Five of the twelve interviewees stated that they had never encountered AR before the WST. Three others described their previous experience as minimal and emphasized that they had only been confronted with AR in a few situations. Thus, most participants had no or very limited AR experience. Only one participant reported some relevant experience with AR.

4.2.2. AR Visualization

One of the main criticisms concerned the visibility and size of the AR objects. Several participants complained that the objects displayed in the app were sometimes difficult to recognize (three mentions) or too small (two) to be located easily in the room. This led to longer phases in which participants searched for the 3D objects, making the app more difficult to use. Furthermore, it was mentioned once each that the AR visualization distracts from the actual content, that the AR objects are arranged inconsistently and that the AR objects should show an animation when selected.

4.2.3. Learning Objectives

Seven participants stated that the app itself distracted from the actual content. They reported that interacting with the app led to a focus on using the technology rather than the content of the WST. One participant explicitly admitted that he had not internalized the learning content. A similar problem mentioned was the tendency to simply tick off all the tasks without reading the content thoroughly (four). This poses a potential risk to the learning effect, as there is a danger that participants will simply follow the formal training procedure without actively engaging with the content. In contrast, however, six participants also reported that they had absorbed the learning content overall. There appear to be differences in individual perception and learning styles that should be considered when designing the app and the learning scenarios. The MCQs at the end were seen as a constructive way to assess learning outcomes (two).

4.2.4. AR App vs. Face-to-Face Training

The participants mentioned advantages and disadvantages of the app-guided training compared to face-to-face training. The most frequently mentioned disadvantage was that no practical demonstration or practice of the movement sequences was possible with the app (10). The app did not offer the opportunity to directly simulate physical work processes or safety-relevant actions, which is usually the case in face-to-face training (two). Another criticism was the lack of individual interaction with an instructor. This limitation reduced opportunities for direct feedback and clarification of open questions (five). Additionally, it prevented instructors from providing personalized safety instructions. For example, they could not point out specific precautions, such as tying up long hair when using certain machines. The lower information density (two) and the lack of variation, and the correspondingly less memorable learning experience (two), were also mentioned.

On the other hand, participants also mentioned clear advantages of the AR app over face-to-face introductions (eight). Many participants emphasized the time aspect in particular, explaining that the app saves time, especially for the instructors. They also emphasized the option of working through the learning content at their own pace (five) and at a time of their choosing (eight), which some participants considered a major advantage over face-to-face training. Furthermore, individual participants mentioned that the app is more suitable for students who learn better by reading, that its game-based aspect is fun and that more information is remembered because of the self-guidance (one mention each).

4.2.5. User Interface

Participants were also critical of the app’s user interface. In particular, the checkmark for confirming that content had been read was described as too small and unintuitive, which made interaction unnecessarily difficult (three). The color scheme and low contrast were also criticized (three), as they made texts and information difficult to read. This was a particular problem for one participant with red–green color vision deficiency. Two participants pointed out that the arrow pointing to the next object was too small.

4.2.6. Improvements

Participants also made numerous suggestions for improving the AR app. Five participants suggested that multimedia content could promote learning by making the presentation of information more varied and appealing; for example, motion sequences for some tools could be integrated as videos. Another suggested feature was a built-in wiki in which participants could look up information later as required (four). To improve the clarity of the app, five participants suggested an overview map showing how many active objects there are in the environment and where they are located. Such a map would make navigating the environment easier and the learning process more comprehensible. Further, it was proposed that the introduction process should be more flexible, so that users can decide which station to visit next rather than being tied to a predetermined order (three). From a didactic point of view, a more concise introduction at the beginning and a summary of what has been learned at the end were requested (two). The following points were mentioned once each: (a) the lack of a hint that the visibility of the augmentations depends on lighting conditions, (b) the option of a joint tour with other participants, (c) repetition and (d) a higher level of interactivity, which could be beneficial to learning.

4.2.7. Comprehensibility of the App

Four participants reported that they needed additional explanations from the experimenter to clearly understand how the app works. The tutorial was also described as difficult to follow (four), so that three participants had difficulty recognizing what to do next after reaching a station. Navigating through the workshop with the app, especially searching for new stations, was perceived as cumbersome (three); the unclear navigational cues within the app were a particularly significant factor here. In general, participants needed some time to learn how to navigate and use the app effectively (three). Only one participant explicitly described the app as easy to understand.

4.2.8. Information and Safety

Apart from aspects of usability, participants also criticized the information provided. Three participants who were familiar with the relevant safety aspects stated that there was little or no information on the safety-relevant equipment in the workshop. In particular, no explanations were provided on how the individual devices worked (two), although such explanations are very important in WST. This gap in the content could potentially affect participants’ safety awareness. In addition, the app lacks a hint pointing to the existing physical information sheets next to the devices (one), and the explanation of the devices using symbols was not perceived as intuitive. Furthermore, the app was described as well suited for an overview but less suitable for detailed exploration (one). A self-guided workshop tour during an open-door event was suggested as another area of application. However, one participant considered the app unsuitable for WST.

4.2.9. Summary

Despite the many aspects with potential for optimization and a few negative assessments, the app was generally considered beneficial for WST. Many of these aspects can be addressed easily, for example, by improving and expanding the content, as described in the previous section. However, others, such as clearer and more prominent navigational cues and an overview map, require more extensive changes to the app.

5. Discussion

In this study, we evaluated a prototypical AR app based on the vSLAM tracking technique for self-guided WST in a workshop at an HEI. The aim of the study was to examine whether such self-guided WST is useful and can achieve the learning outcomes (RQ). The answer to this research question also indicates whether and to what extent vSLAM-based tracking is a viable tracking option in the context of a workshop. Because the RQ was answered positively, this implied sub-question can also be answered positively: vSLAM-based tracking proved viable, i.e., the smartphones were able to determine their pose correctly and display the augmentations accurately. However, the smartphones became warm after a certain period of use and sometimes stopped working, which limited the duration of the safety training. Nevertheless, we assume that these are teething problems that will be resolved as the app is developed further. In principle, the RQ received a positive answer: despite the negative aspects mentioned, the participants were able to use the app meaningfully and achieve the learning outcomes. It should be noted, however, that the study was conducted under the supervision of an experimenter, who was available to answer questions and to intervene if necessary. We nevertheless expect that further improvements to the tutorial and the app will allow the app to be used without supervision. It also remains to be examined to what extent group-based didactic scenarios can be implemented, for example, conducting the WST in pairs so that participants communicate with each other and jointly master possible problems (in the sense of situated learning). Since this study was primarily a technical feasibility study, follow-up studies should also examine further didactic scenarios.
As a technical feasibility study, this study was not specifically guided by learning theory; rather, a newly available technology was tested for its fundamental applicability in learning contexts. However, this shortcoming is frequently observed with newly available technologies [72].

Limitations of the study include the small sample size of only 12 participants, which limits the statistical power and generalizability of the quantitative results. Nevertheless, the interviews provided qualitative insights that will help in the further development of the app. A caveat, however, is that the Google Tango platform has since been discontinued, so further development must be based on a new technology platform. It is likely, though, that the results of the study can be meaningfully incorporated when the app is ported to the successor platform ARCore. Further, learning was measured by self-report. Future studies should rely on more detailed and objective measures, such as practical demonstrations of the knowledge gained. Another limitation is that not all of the app’s features were integrated into the safety training. For example, the virtual safety boundary, which makes the smartphone vibrate to provide haptic feedback when it is crossed, was not used. Integrating more of the supported features could likely further enhance the app’s learning experience.
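The virtual safety boundary is essentially a geofence evaluated against the tracked device pose. As a minimal sketch, assuming that a boundary is stored as a polygon on the floor plane of the vSLAM coordinate frame and that the device's (x, z) position is available every frame, a crossing can be detected with an even-odd (ray-casting) point-in-polygon test. The names `SafetyBoundary`, `point_in_polygon` and `on_enter` are hypothetical and do not reflect the actual implementation of the app; on a real device, the callback would invoke the platform's vibration service instead of appending to a list.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Point = Tuple[float, float]  # assumed (x, z) position on the floor plane, in metres


def point_in_polygon(p: Point, poly: List[Point]) -> bool:
    """Even-odd ray-casting test: toggle on each edge crossed by a ray in +x direction."""
    x, z = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, z1 = poly[i]
        x2, z2 = poly[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed
        if (z1 > z) != (z2 > z):
            # x-coordinate where this edge meets that line (z1 != z2 is guaranteed here)
            x_cross = x1 + (z - z1) * (x2 - x1) / (z2 - z1)
            if x < x_cross:
                inside = not inside
    return inside


@dataclass
class SafetyBoundary:
    polygon: List[Point]
    on_enter: Callable[[], None]  # hypothetical hook, e.g. a haptic vibration
    _was_inside: bool = field(default=False, init=False)

    def update(self, device_pos: Point) -> bool:
        """Call once per tracked pose; fires on_enter only on an outside-to-inside transition."""
        inside = point_in_polygon(device_pos, self.polygon)
        entered = inside and not self._was_inside
        if entered:
            self.on_enter()
        self._was_inside = inside
        return entered


# Usage: a 2 m x 2 m zone around a machine and a short, made-up walking path
events: List[str] = []
zone = SafetyBoundary([(0, 0), (2, 0), (2, 2), (0, 2)], on_enter=lambda: events.append("vibrate"))
for pos in [(-1.0, 1.0), (0.5, 1.0), (1.0, 1.0), (3.0, 1.0), (1.0, 1.0)]:
    zone.update(pos)
print(events)  # ['vibrate', 'vibrate']
```

Triggering only on the outside-to-inside transition, rather than on every frame spent inside the zone, avoids continuous vibration while the user remains within the boundary.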

There are certainly other options for conducting self-guided WST, for example, written instructions with a floor plan or an AR app with markers that participants have to scan. However, we believe that an app that recognizes the device pose independently offers a unique benefit to learners, as it allows them to focus on the content of the safety training without spending much time orienting themselves and analyzing their surroundings. Further research is needed to clarify how much maintenance a vSLAM-based app requires compared to a marker-based app. If the environment (here, the workshop) changes significantly, it has to be rescanned by the app; otherwise, tracking will fail. Moreover, in the current prototypical authoring environment, programming skills are still required to modify the safety training.

To the best of our knowledge, a scenario combining the four distinctive features of our study (video see-through, smartphones as devices, markerless tracking and an indoor workplace tour) has not yet been described in the literature. Nevertheless, key results of the study appear to be consistent with the central findings of the SLR on AR in safety training in [1]:

- (1) User-centered design: AR-based learning environments should be optimized in a user-centered and individualized (adaptive) way to promote immersion and thus learning success [73,74,75]. Although the quantitative results were positive, the feedback from the interviews should be incorporated into the necessary further development of the app.

- (2) Inclusion of further domains: We acknowledge that learning theories have not yet been fully integrated into the design of the app and the didactic scenario [76]. Integrating additional technologies may further enhance the learning experience; for example, the pose could be evaluated to provide AI-generated hints for the next action [77,78]. It is also conceivable to generate a VR environment from the model created by vSLAM, in which pre-training could take place for more complex environments [42,79]. Gamification could be used as a didactic tool to increase motivation and engagement [80,81]. By applying Metaverse or Internet of Things principles, current operational data of the devices could be integrated into the safety training via AR [82,83].

- (3) Assessment of learning success: Answering integrated MCQs provides a basic way of checking learning success. In the future, however, much more detailed data could be collected, such as usage times or retention times. Learning analytics could be used for this purpose, i.e., the data generated while using the AR app could serve as indicators for optimizing learning success [84,85,86]. The cognitive load assessment conducted as part of this study already yielded acceptable values overall. However, for continuous measurement, physiological measures such as EEG or heart rate are preferable to self-reports [87,88].
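Usage or dwell times per station are a simple example of such learning-analytics indicators. The sketch below assumes a hypothetical event log in which the app records when a learner enters and leaves a station; the log format, the station IDs and the 60-second threshold are illustrative assumptions, not features of the evaluated app. Unusually short dwell times might hint at the "ticking off" behavior reported in the interviews, but such an indicator would need validation before being used.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical log format: (timestamp in seconds, "enter" | "leave", station id)
Event = Tuple[float, str, str]


def dwell_times(log: List[Event]) -> Dict[str, float]:
    """Sum the time spent at each station from paired enter/leave events."""
    totals: Dict[str, float] = defaultdict(float)
    open_visits: Dict[str, float] = {}  # station id -> timestamp of the last "enter"
    for t, kind, station in sorted(log):  # process events in chronological order
        if kind == "enter":
            open_visits[station] = t
        elif kind == "leave" and station in open_visits:
            totals[station] += t - open_visits.pop(station)
    return dict(totals)


def possibly_skimmed(times: Dict[str, float], min_seconds: float) -> List[str]:
    """Stations whose total dwell time falls below an assumed minimum reading time."""
    return sorted(s for s, d in times.items() if d < min_seconds)


# Usage with a made-up log: station_1 is visited twice, station_2 once
log = [
    (0.0, "enter", "station_1"), (95.0, "leave", "station_1"),
    (120.0, "enter", "station_2"), (150.0, "leave", "station_2"),
    (200.0, "enter", "station_1"), (230.0, "leave", "station_1"),
]
times = dwell_times(log)
print(times)                          # {'station_1': 125.0, 'station_2': 30.0}
print(possibly_skimmed(times, 60.0))  # ['station_2']
```

Unmatched "leave" events are ignored and visits that are never closed are not counted, which keeps the sketch robust to incomplete logs at the cost of possibly underestimating dwell times.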

6. Conclusions

This study investigated the AR-app-based implementation of workplace safety training (WST) using vSLAM tracking in a workshop of an environmental engineering laboratory at a higher education institution. The results of the mixed-method evaluation study with 12 participants are positive overall. The app, and in particular its vSLAM-based tracking, was found to work and to be easy to use. Further, the cognitive load (mental effort) was estimated to be conducive to learning, and the values for the learning prerequisites of motivation and emotion were similarly favorable. A pre–post survey of perceived subject knowledge indicated a positive learning effect. Semi-structured interviews identified further possibilities for improving the app. Because the AR platform used has been discontinued, the findings now need to be ported to a newer platform, such as ARCore. Overall, the study suggests that AR-app-based WST is a viable option. Despite some challenges, such as content maintenance, AR-app-based WST might provide the necessary training with less effort than face-to-face training. The wide range of information that can be provided in the app may allow participants to achieve a level of knowledge not usually attained in face-to-face training. Accordingly, the results of the study contribute to the advancement of self-guided WST based on mobile vSLAM-based augmented reality.

Author Contributions

Conceptualization, H.S., T.F., F.W., J.W. and T.H.; methodology, H.S., T.F. and J.W.; software, T.F. and H.S.; validation, H.S., T.F., F.W., J.W. and T.H.; formal analysis, H.S. and J.W.; investigation, H.S., T.F., J.W., F.W. and T.H.; resources, H.S., T.F., J.W. and T.H.; data curation, T.F.; writing—original draft preparation, H.S., T.F., J.W. and T.H.; writing—review and editing, H.S., T.F., F.W., J.W. and T.H.; visualization, T.F.; supervision, H.S. and J.W.; project administration, H.S. and T.H.; funding acquisition, H.S. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge the funding of this work by the Digi-Fellowship for Innovations in Digital Higher Education Teaching “Lern Umwelt” funded by the Thüringer Ministerium für Wirtschaft, Wissenschaft und Digitale Gesellschaft and the Stifterverband. Further, the authors acknowledge support from the German Research Foundation (DFG) and Bauhaus-Universität Weimar within the program of Open-Access Publishing.

Institutional Review Board Statement

This study investigated the use of an augmented reality app within a supervised learning context. Specifically, the participants used a smartphone as they routinely would in a similar everyday context for a period of 10 minutes. At no time was there any danger to the life and well-being of the participants. In addition, the participants were accompanied by a supervisor who could intervene at any time. In Germany, there is no legal requirement for the ethical review of studies outside of medicine (§§ 40–42 Medicines Act (“Arzneimittelgesetz”) (AMG) and § 20 Medical Devices Act (“Medizinproduktegesetz”) (MPG)). In addition, the data were anonymized immediately after participation, so that the data are not subject to the nationally binding General Data Protection Regulation (GDPR). Thus, the research did not receive prior ethical clearance from an institutional review board (IRB) or ethics committee; however, all efforts were made to adhere to ethical research principles, including voluntary participation, informed consent, the protection of participant confidentiality and the minimization of potential risks. No personally identifiable information was collected or reported, and participants were not subjected to harm or coercion. While formal ethical approval was not obtained, the study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author.

Acknowledgments

The app under study has been developed as part of the Federal Ministry of Education and Research (BMBF)-funded project ILehLe (FKZ16SV7557) and was provided by the grant recipient (in-tech GmbH, Braunschweig, Germany) for further evaluation. Specifically, we cordially thank Frank Höwing (in-tech GmbH) for his excellent support in using the app.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gong, P.; Lu, Y.; Lovreglio, R.; Lv, X.; Chi, Z. Applications and Effectiveness of Augmented Reality in Safety Training: A Systematic Literature Review and Meta-Analysis. Saf. Sci. 2024, 178, 106624. [Google Scholar] [CrossRef]

- Babalola, A.; Manu, P.; Cheung, C.; Yunusa-Kaltungo, A.; Bartolo, P. Applications of Immersive Technologies for Occupational Safety and Health Training and Education: A Systematic Review. Saf. Sci. 2023, 166, 106214. [Google Scholar] [CrossRef]

- Dargan, S.; Bansal, S.; Kumar, M.; Mittal, A.; Kumar, K. Augmented Reality: A Comprehensive Review. Arch. Comput. Methods Eng. 2023, 30, 1057–1080. [Google Scholar] [CrossRef]

- Schmalstieg, D.; Höllerer, T. Augmented Reality, Principles and Practice; Pearson Education, Inc.: London, UK, 2016. [Google Scholar]

- Höwing, F. Adaptives Lehr-Leitsystem Mit Multisensorischem Feedback: Schlussbericht Des Teilvorhabens Der In-Tech GmbH: Im Verbundprojekt ILehLe-Die Intelligente Lehr-Lernfabrik-Die Lernende Lernfabrik-Eine Intelligente Lehr-Lernumgebung Zur Energie-Und Ressourceneffizienz; Intech: Garching, Germany, 2019; p. 41. [Google Scholar]

- Reining, N.; Müller-Frommeyer, L.C.; Höwing, F.; Thiede, B.; Aymans, S.; Herrmann, C.; Kauffeld, S. Evaluation neuer Lehr-Lern-Medien in einer Lernfabrik. Eine Usability-Studie zu App- und AR-Anwendungen. In Teaching Trends: Die Präsenzhochschule und Die Digitale Transformation (4.: 2018: Braunschweig); Digitale Medien in der Hochschullehre Waxmann: Waldkirchen, Germany, 2019; Volume 7. [Google Scholar]

- Baker, L.; Ventura, J.; Langlotz, T.; Gul, S.; Mills, S.; Zollmann, S. Localization and Tracking of Stationary Users for Augmented Reality. Vis. Comput. 2024, 40, 227–244. [Google Scholar] [CrossRef]

- Beltrán, G.; Huertas, A.P. Augmented Reality for the Development of Skilled Trades in Indigenous Communities: A Case Study. Electron. J. E-Learn. 2023, 22, 29–45. [Google Scholar] [CrossRef]

- Rani, S.; Mazumdar, S.; Gupta, M. Marker-Based Augmented Reality Application in Education Domain. In Machine Learning, Image Processing, Network Security and Data Sciences; Chauhan, N., Yadav, D., Verma, G.K., Soni, B., Lara, J.M., Eds.; Communications in Computer and Information Science; Springer Nature: Cham, Switzerland, 2024; Volume 2128, pp. 98–109. ISBN 978-3-031-62216-8. [Google Scholar]

- Syed, T.A.; Siddiqui, M.S.; Abdullah, H.B.; Jan, S.; Namoun, A.; Alzahrani, A.; Nadeem, A.; Alkhodre, A.B. In-Depth Review of Augmented Reality: Tracking Technologies, Development Tools, AR Displays, Collaborative AR, and Security Concerns. Sensors 2022, 23, 146. [Google Scholar] [CrossRef]

- Zhou, F.; Duh, H.B.-L.; Billinghurst, M. Trends in Augmented Reality Tracking, Interaction and Display: A Review of Ten Years of ISMAR. In Proceedings of the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, 15–18 September 2008; pp. 193–202. [Google Scholar]

- Foukarakis, M.; Faltakas, O.; Frantzeskakis, G.; Ntafotis, E.; Zidianakis, E.; Kontaki, E.; Manoli, C.; Ntoa, S.; Partarakis, N.; Stephanidis, C. A Mobile Tour Guide with Localization Features and AR Support. In HCI International 2023—Late Breaking Posters; Stephanidis, C., Antona, M., Ntoa, S., Salvendy, G., Eds.; Communications in Computer and Information Science; Springer Nature: Cham, Switzerland, 2024; Volume 1957, pp. 489–496. ISBN 978-3-031-49211-2. [Google Scholar]

- Capecchi, I.; Bernetti, I.; Borghini, T.; Caporali, A.; Saragosa, C. Augmented Reality and Serious Game to Engage the Alpha Generation in Urban Cultural Heritage. J. Cult. Herit. 2024, 66, 523–535. [Google Scholar] [CrossRef]

- Koo, S.; Kim, J.; Kim, C.; Kim, J.; Cha, H.S. Development of an Augmented Reality Tour Guide for a Cultural Heritage Site. J. Comput. Cult. Herit. 2020, 12, 1–24. [Google Scholar] [CrossRef]

- Osipova, M.; Das, S.; Riyad, P.; Söbke, H.; Wolf, M.; Wehking, F. High Accuracy GPS Antennas in Educational Location-Based Augmented Reality. In Proceedings of the DELFI Workshops 2022, Karlsruhe, Germany, 12 September 2022; pp. 37–46. [Google Scholar]

- Eurostat Accidents at Work Statistics. Available online: https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Accidents_at_work_statistics (accessed on 12 October 2024).

- Brown, K.A. Workplace Safety: A Call for Research. J. Oper. Manag. 1996, 14, 157–171. [Google Scholar] [CrossRef]

- Christian, M.S.; Bradley, J.C.; Wallace, J.C.; Burke, M.J. Workplace Safety: A Meta-Analysis of the Roles of Person and Situation Factors. J. Appl. Psychol. 2009, 94, 1103–1127. [Google Scholar] [CrossRef]

- Beus, J.M.; McCord, M.A.; Zohar, D. Workplace Safety: A Review and Research Synthesis. Organ. Psychol. Rev. 2016, 6, 352–381. [Google Scholar] [CrossRef]

- Becker, P.; Morawetz, J. Impacts of Health and Safety Education: Comparison of Worker Activities before and after Training. Am. J. Ind. Med. 2004, 46, 63–70. [Google Scholar] [CrossRef]

- Colligan, M.J.; Cohen, A. The Role of Training in Promoting Workplace Safety and Health. In The Psychology of Workplace Safety; American Psychological Association: Washington, DC, USA, 2004; pp. 223–248. ISBN 1-59147-068-4. [Google Scholar]

- Robson, L.S.; Stephenson, C.M.; Schulte, P.A.; Amick, B.C.; Irvin, E.L.; Eggerth, D.E.; Chan, S.; Bielecky, A.R.; Wang, A.M.; Heidotting, T.L.; et al. A Systematic Review of the Effectiveness of Occupational Health and Safety Training. Scand. J. Work. Environ. Health 2012, 38, 193–208. [Google Scholar] [CrossRef]

- Bayram, M.; Arpat, B.; Ozkan, Y. Safety Priority, Safety Rules, Safety Participation and Safety Behaviour: The Mediating Role of Safety Training. Int. J. Occup. Saf. Ergon. 2022, 28, 2138–2148. [Google Scholar] [CrossRef]

- Bęś, P.; Strzałkowski, P. Analysis of the Effectiveness of Safety Training Methods. Sustainability 2024, 16, 2732. [Google Scholar] [CrossRef]

- Nykänen, M.; Guerin, R.J.; Vuori, J. Identifying the “Active Ingredients” of a School-Based, Workplace Safety and Health Training Intervention. Prev. Sci. 2021, 22, 1001–1011. [Google Scholar] [CrossRef]

- Hussain, R.; Sabir, A.; Lee, D.-Y.; Zaidi, S.F.A.; Pedro, A.; Abbas, M.S.; Park, C. Conversational AI-Based VR System to Improve Construction Safety Training of Migrant Workers. Autom. Constr. 2024, 160, 105315. [Google Scholar] [CrossRef]

- Li, X.; Yi, W.; Chi, H.-L.; Wang, X.; Chan, A.P.C. A Critical Review of Virtual and Augmented Reality (VR/AR) Applications in Construction Safety. Autom. Constr. 2018, 86, 150–162. [Google Scholar] [CrossRef]

- Pink, S.; Lingard, H.; Harley, J. Digital Pedagogy for Safety: The Construction Site as a Collaborative Learning Environment. Video J. Educ. Pedagog. 2016, 1, 1–15. [Google Scholar] [CrossRef]

- Romero Barriuso, A.; Villena Escribano, B.M.; Rodríguez Sáiz, A. The Importance of Preventive Training Actions for the Reduction of Workplace Accidents within the Spanish Construction Sector. Saf. Sci. 2021, 134, 105090. [Google Scholar] [CrossRef]

- Sacks, R.; Perlman, A.; Barak, R. Construction Safety Training Using Immersive Virtual Reality. Constr. Manag. Econ. 2013, 31, 1005–1017. [Google Scholar] [CrossRef]

- Badea, D.O.; Darabont, D.C.; Chis, T.V.; Trifu, A. The Transformative Impact of E-Learning on Workplace Safety and Health Training in the Industry: A Comprehensive Analysis of Effectiveness, Implementation, and Future Opportunities. Int. J. Educ. Inf. Technol. 2024, 18, 105–118. [Google Scholar] [CrossRef]

- Nagy, A. The Impact of e-Learning. In E-Content: Technologies and Perspectives for the European Market; Springer: Berlin/Heidelberg, Germany, 2005; pp. 79–96. [Google Scholar]

- Schmidt, J.T.; Tang, M. Digitalization in Education: Challenges, Trends and Transformative Potential. In Führen und Managen in der Digitalen Transformation; Trends, Best Practices und Herausforderungen; Springer: Berlin/Heidelberg, Germany, 2020; pp. 287–312. [Google Scholar]

- Barati Jozan, M.M.; Ghorbani, B.D.; Khalid, M.S.; Lotfata, A.; Tabesh, H. Impact Assessment of E-Trainings in Occupational Safety and Health: A Literature Review. BMC Public Health 2023, 23, 1187. [Google Scholar] [CrossRef]

- Szabó, G. Developing Occupational Safety Skills for Safety Engineering Students in an Electronic Learning Environment. In Management, Innovation and Entrepreneurship in Challenging Global Times, Proceedings of the 16th International Symposium in Management (SIM 2021), Timisoara, Romania, 22–23 October 2021; Prostean, G.I., Lavios, J.J., Brancu, L., Şahin, F., Eds.; Springer International Publishing: Cham, Switzerland, 2024; pp. 211–221. ISBN 978-3-031-47164-3. [Google Scholar]

- Vukićević, A.M.; Mačužić, I.; Djapan, M.; Milićević, V.; Shamina, L. Digital Training and Advanced Learning in Occupational Safety and Health Based on Modern and Affordable Technologies. Sustainability 2021, 13, 13641. [Google Scholar] [CrossRef]

- Castaneda, D.E.; Organista, K.C.; Rodriguez, L.; Check, P. Evaluating an Entertainment–Education Telenovela to Promote Workplace Safety. Sage Open 2013, 3, 2158244013500284. [Google Scholar] [CrossRef]

- Fortuna, A.; Samala, A.D.; Andriani, W.; Rawas, S.; Chai, H.; Compagno, M.; Abbasinia, S.; Nabawi, R.A.; Prasetya, F. Enhancing Occupational Health and Safety Education: A Mobile Gamification Approach in Machining Workshops. Int. J. Inf. Educ. Technol. 2024, 14, 10–18178. [Google Scholar]

- Pietrafesa, E.; Bentivenga, R.; Lalli, P.; Capelli, C.; Farina, G.; Stabile, S. Becoming Safe: A Serious Game for Occupational Safety and Health Training in a WBL Italian Experience; Springer: Berlin/Heidelberg, Germany, 2021; pp. 264–271. [Google Scholar]

- Pham, H.C.; Dao, N.; Pedro, A.; Le, Q.T.; Hussain, R.; Cho, S.; Park, C. Virtual Field Trip for Mobile Construction Safety Education Using 360-Degree Panoramic Virtual Reality. Int. J. Eng. Educ. 2018, 34, 1174–1191. [Google Scholar]

- Klippel, A.; Zhao, J.; Oprean, D.; Wallgrün, J.O.; Stubbs, C.; La Femina, P.; Jackson, K.L. The Value of Being There: Toward a Science of Immersive Virtual Field Trips. Virtual Real. 2020, 24, 753–770. [Google Scholar] [CrossRef]

- Wolf, M.; Wehking, F.; Söbke, H.; Montag, M.; Zander, S.; Springer, C. Virtualised Virtual Field Trips in Environmental Engineering Higher Education. Eur. J. Eng. Educ. 2023, 48, 1312–1334. [Google Scholar] [CrossRef]

- Kim, J.-H.; Kim, M.; Park, M.; Yoo, J. Immersive Interactive Technologies and Virtual Shopping Experiences: Differences in Consumer Perceptions between Augmented Reality (AR) and Virtual Reality (VR). Telemat. Inform. 2023, 77, 101936. [Google Scholar] [CrossRef]

- Verhulst, I.; Woods, A.; Whittaker, L.; Bennett, J.; Dalton, P. Do VR and AR Versions of an Immersive Cultural Experience Engender Different User Experiences? Comput. Hum. Behav. 2021, 125, 106951. [Google Scholar] [CrossRef]

- Das, S.; Wolf, M.; Schmidt, P.; Söbke, H. Mental Workload in Augmented Reality-Based Urban Planning Education. In Proceedings of the IEEE International Symposium on Multimedia (ISM), Laguna Hills, CA, USA, 11–13 December 2023; pp. 303–308. [Google Scholar]

- Stefan, H.; Mortimer, M.; Horan, B. Evaluating the Effectiveness of Virtual Reality for Safety-Relevant Training: A Systematic Review. Virtual Real. 2023, 27, 2839–2869. [Google Scholar] [CrossRef]

- Zhang, X.; He, W.; Bai, J.; Billinghurst, M.; Qin, Y.; Dong, J.; Liu, T. Evaluation of Augmented Reality Instructions Based on Initial and Dynamic Assembly Tolerance Allocation Schemes in Precise Manual Assembly. Adv. Eng. Inform. 2025, 63, 102954. [Google Scholar] [CrossRef]

- Baer, M.; Tregel, T.; Laato, S.; Söbke, H. Virtually (Re) Constructed Reality: The Representation of Physical Space in Commercial Location-Based Games. In Proceedings of the 25th International Academic Mindtrek Conference, New York, NY, USA, 16–18 November 2022; pp. 9–22. [Google Scholar]

- Soyka, F.; Simons, J. Improving the Understanding of Low Frequency Magnetic Field Exposure with Augmented Reality. Int. J. Environ. Res. Public Health 2022, 19, 10564. [Google Scholar] [CrossRef]

- Guth, L.; Söbke, H.; Hornecker, E.; Londong, J. An Augmented Reality-Supported Facility Model in Vocational Training. In Proceedings of the DELFI Workshops 2021, Dortmund, Germany, 13 September 2021; pp. 15–27. [Google Scholar]

- Tatić, D.; Tešić, B. The Application of Augmented Reality Technologies for the Improvement of Occupational Safety in an Industrial Environment. Comput. Ind. 2017, 85, 1–10. [Google Scholar] [CrossRef]

- Ismael, M.; McCall, R.; McGee, F.; Belkacem, I.; Stefas, M.; Baixauli, J.; Arl, D. Acceptance of Augmented Reality for Laboratory Safety Training: Methodology and an Evaluation Study. Front. Virtual Real. 2024, 5, 1322543. [Google Scholar] [CrossRef]

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM Algorithms: A Survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16. [Google Scholar] [CrossRef]

- Marques, B.; Carvalho, R.; Dias, P.; Oliveira, M.; Ferreira, C.; Santos, B.S. Evaluating and Enhancing Google Tango Localization in Indoor Environments Using Fiducial Markers. In Proceedings of the IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Torres Vedras, Portugal, 25–27 April 2018; pp. 142–147. [Google Scholar]

- Weiss, S.; Scaramuzza, D.; Siegwart, R. Monocular-SLAM–Based Navigation for Autonomous Micro Helicopters in GPS-Denied Environments. J. Field Robot. 2011, 28, 854–874. [Google Scholar] [CrossRef]

- Yeh, Y.-J.; Lin, H.-Y. 3D Reconstruction and Visual SLAM of Indoor Scenes for Augmented Reality Application. In Proceedings of the 14th International Conference on Control and Automation (ICCA), Anchorage, AK, USA, 12–15 June 2018; pp. 94–99. [Google Scholar]

- Nguyen, K.A.; Luo, Z. On Assessing the Positioning Accuracy of Google Tango in Challenging Indoor Environments. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8. [Google Scholar]

- Rahimian, F.P.; Chavdarova, V.; Oliver, S.; Chamo, F.; Amobi, L.P. OpenBIM-Tango Integrated Virtual Showroom for Offsite Manufactured Production of Self-Build Housing. Autom. Constr. 2019, 102, 1–16. [Google Scholar] [CrossRef]

- Jafri, R.; Campos, R.L.; Ali, S.A.; Arabnia, H.R. Visual and Infrared Sensor Data-Based Obstacle Detection for the Visually Impaired Using the Google Project Tango Tablet Development Kit and the Unity Engine. IEEE Access 2017, 6, 443–454. [Google Scholar] [CrossRef]

- Voinea, G.-D.; Girbacia, F.; Postelnicu, C.C.; Marto, A. Exploring Cultural Heritage Using Augmented Reality Through Google’s Project Tango and ARCore; Springer: Berlin/Heidelberg, Germany, 2019; pp. 93–106. [Google Scholar]

- VDI/VDE Innovation + Technik GmbH ILEHLE-Ein vom BMBF Gefördertes Projekt zur Mensch-Technik-Interaktion. Available online: https://www.youtube.com/watch?v=2Gn1BHP8ijs (accessed on 20 January 2024).

- Reinking, D.; Alvermann, D.E. What Are Evaluation Studies, and Should They Be Published in “RRQ”? Read. Res. Q. 2005, 40, 142–146. [Google Scholar] [CrossRef]

- Brooke, J. SUS: A Quick and Dirty Usability Scale. Usability Eval. Ind. 1996, 189, 4–7. [Google Scholar]

- Klepsch, M.; Schmitz, F.; Seufert, T. Development and Validation of Two Instruments Measuring Intrinsic, Extraneous, and Germane Cognitive Load. Front. Psychol. 2017, 8, 1997. [Google Scholar] [CrossRef]

- Chandler, P.; Sweller, J. Cognitive Load Theory and the Format of Instruction. Cogn. Instr. 1991, 8, 293–332. [Google Scholar] [CrossRef]

- Isen, A.M.; Reeve, J. The Influence of Positive Affect on Intrinsic and Extrinsic Motivation: Facilitating Enjoyment of Play, Responsible Work Behavior, and Self-Control. Motiv. Emot. 2005, 29, 295–323. [Google Scholar] [CrossRef]

- Pintrich, P.R. A Motivational Science Perspective on the Role of Student Motivation in Learning and Teaching Contexts. J. Educ. Psychol. 2003, 95, 667. [Google Scholar] [CrossRef]

- Pekrun, R.; Goetz, T.; Frenzel, A.C.; Barchfeld, P.; Perry, R.P. Measuring Emotions in Students’ Learning and Performance: The Achievement Emotions Questionnaire (AEQ). Contemp. Educ. Psychol. 2011, 36, 36–48. [Google Scholar] [CrossRef]

- Tyng, C.M.; Amin, H.U.; Saad, M.N.; Malik, A.S. The Influences of Emotion on Learning and Memory. Front. Psychol. 2017, 8, 1454.

- Schmidt, C. The Analysis of Semi-Structured Interviews. In A Companion to Qualitative Research; Flick, U., von Kardorff, E., Steinke, I., Eds.; SAGE Publications: London, UK, 2004; pp. 253–258. ISBN 0761973753.

- Cohen, J. Statistical Power Analysis. Curr. Dir. Psychol. Sci. 1992, 1, 98–101.

- Makransky, G.; Petersen, G.B. The Cognitive Affective Model of Immersive Learning (CAMIL): A Theoretical Research-Based Model of Learning in Immersive Virtual Reality. Educ. Psychol. Rev. 2021, 33, 937–958.

- Bozzelli, G.; Raia, A.; Ricciardi, S.; De Nino, M.; Barile, N.; Perrella, M.; Tramontano, M.; Pagano, A.; Palombini, A. An Integrated VR/AR Framework for User-Centric Interactive Experience of Cultural Heritage: The ArkaeVision Project. Digit. Appl. Archaeol. Cult. Herit. 2019, 15, e00124.

- Peters, M.; Simó, L.P.; Amatller, A.M.; Liñan, L.C.; Kreitz, P.B. Toward A User-Centred Design Approach for AR Technologies in Online Higher Education. In Proceedings of the 7th International Conference of the Immersive Learning Research Network (iLRN), Eureka, CA, USA, 17 May–10 June 2021; pp. 1–8.

- Schweiß, T.; Thomaschewski, L.; Kluge, A.; Weyers, B. Software Engineering for AR-Systems Considering User Centered Design Approaches. In Proceedings of the MCI-WS07: Virtual and Augmented Reality in Everyday Context (VARECo), Hamburg, Germany, 8–11 September 2019.

- Sommerauer, P.; Müller, O. Augmented Reality for Teaching and Learning—A Literature Review on Theoretical and Empirical Foundations. In Proceedings of the Twenty-Sixth European Conference on Information Systems (ECIS2018), Portsmouth, UK, 23–28 June 2018; Volume 2, pp. 31–35.

- Herbert, B.; Ens, B.; Weerasinghe, A.; Billinghurst, M.; Wigley, G. Design Considerations for Combining Augmented Reality with Intelligent Tutors. Comput. Graph. 2018, 77, 166–182.

- Lampropoulos, G. Augmented Reality and Artificial Intelligence in Education: Toward Immersive Intelligent Tutoring Systems. In Augmented Reality and Artificial Intelligence: The Fusion of Advanced Technologies; Springer: Berlin/Heidelberg, Germany, 2023; pp. 137–146.

- Sato, Y.; Minemoto, K.; Nemoto, M.; Torii, T. Construction of Virtual Reality System for Radiation Working Environment Reproduced by Gamma-Ray Imagers Combined with SLAM Technologies. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2020, 976, 164286.

- Das, S.; Nakshatram, S.V.; Söbke, H.; Hauge, J.B.; Springer, C. Towards Gamification for Spatial Digital Learning Environments. Entertain. Comput. 2025, 52, 100893.

- Lampropoulos, G.; Keramopoulos, E.; Diamantaras, K.; Evangelidis, G. Augmented Reality and Gamification in Education: A Systematic Literature Review of Research, Applications, and Empirical Studies. Appl. Sci. 2022, 12, 6809.

- Ilic, A.; Fleisch, E. Augmented Reality and the Internet of Things; ETH Zurich: Zurich, Switzerland, 2016.

- Wolf, M.; Riyad, P.; Söbke, H.; Mellenthin Filardo, M.; Oehler, D.A.; Melzner, J.; Kraft, E. Metaverse-Based Approach in Urban Planning: Enhancing Wastewater Infrastructure Planning Using Augmented Reality. In Augmented and Virtual Reality in the Metaverse; Springer: Berlin/Heidelberg, Germany, 2024; pp. 311–338.

- Christopoulos, A.; Mystakidis, S.; Pellas, N.; Laakso, M.-J. ARLEAN: An Augmented Reality Learning Analytics Ethical Framework. Computers 2021, 10, 92.

- Kazanidis, I.; Pellas, N.; Christopoulos, A. A Learning Analytics Conceptual Framework for Augmented Reality-Supported Educational Case Studies. Multimodal Technol. Interact. 2021, 5, 9.

- Secretan, J.; Wild, F.; Guest, W. Learning Analytics in Augmented Reality: Blueprint for an AR/xAPI Framework. In Proceedings of the IEEE International Conference on Engineering, Technology and Education (TALE), Yogyakarta, Indonesia, 10–13 December 2019; pp. 1–6.

- Joseph, S. Measuring Cognitive Load: A Comparison of Self-Report and Physiological Methods. Ph.D. Thesis, Arizona State University, Tempe, AZ, USA, 2013.

- Solhjoo, S.; Haigney, M.C.; McBee, E.; van Merrienboer, J.J.; Schuwirth, L.; Artino, A.R., Jr.; Battista, A.; Ratcliffe, T.A.; Lee, H.D.; Durning, S.J. Heart Rate and Heart Rate Variability Correlate with Clinical Reasoning Performance and Self-Reported Measures of Cognitive Load. Sci. Rep. 2019, 9, 14668.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).