Artificial Intelligence to Detect Obstructive Sleep Apnea from Craniofacial Images: A Narrative Review

Abstract

1. Introduction

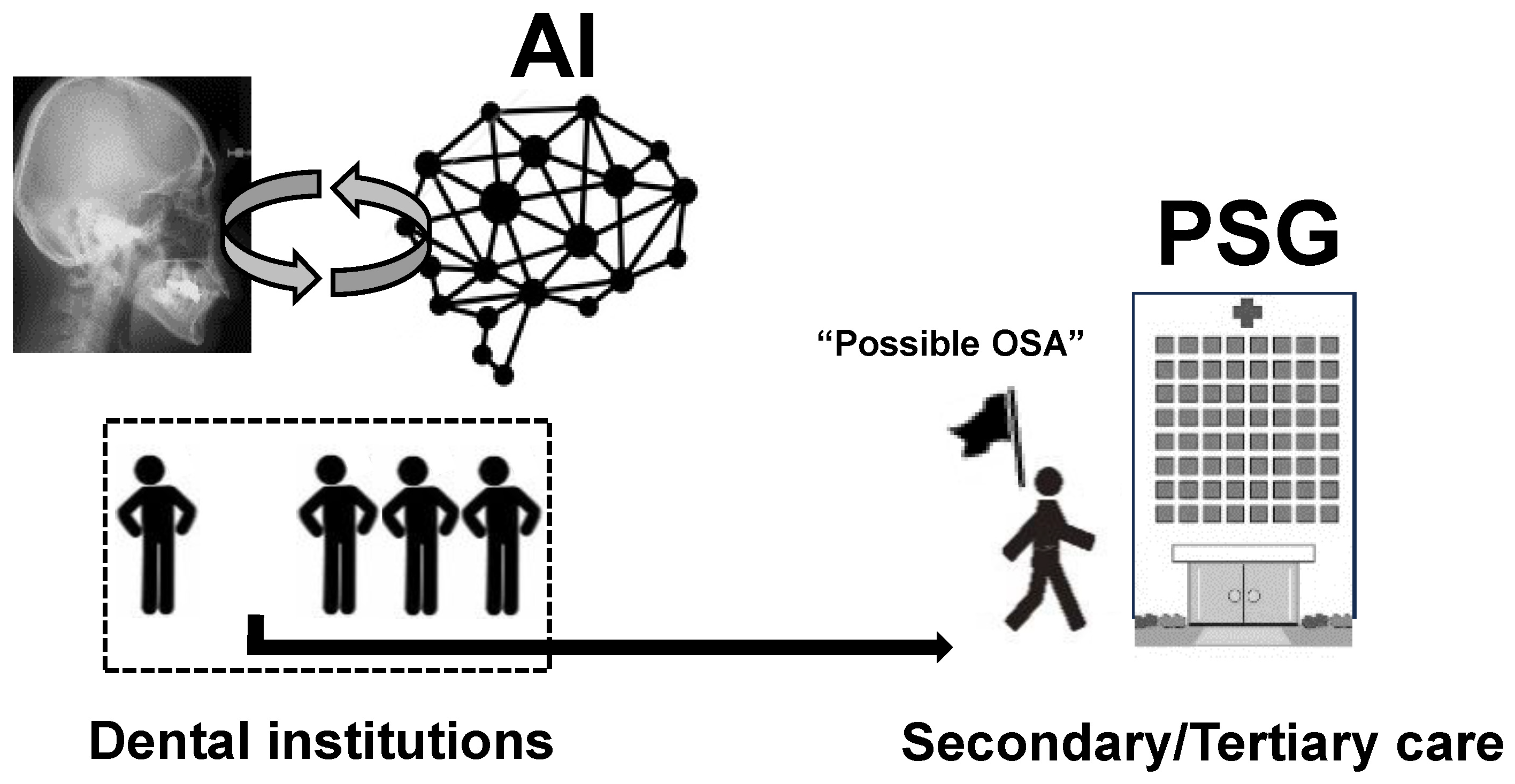

2. Understanding the Pathogenesis of OSA from a Dental Perspective

3. Concept of AI Use in Detecting OSA from Images

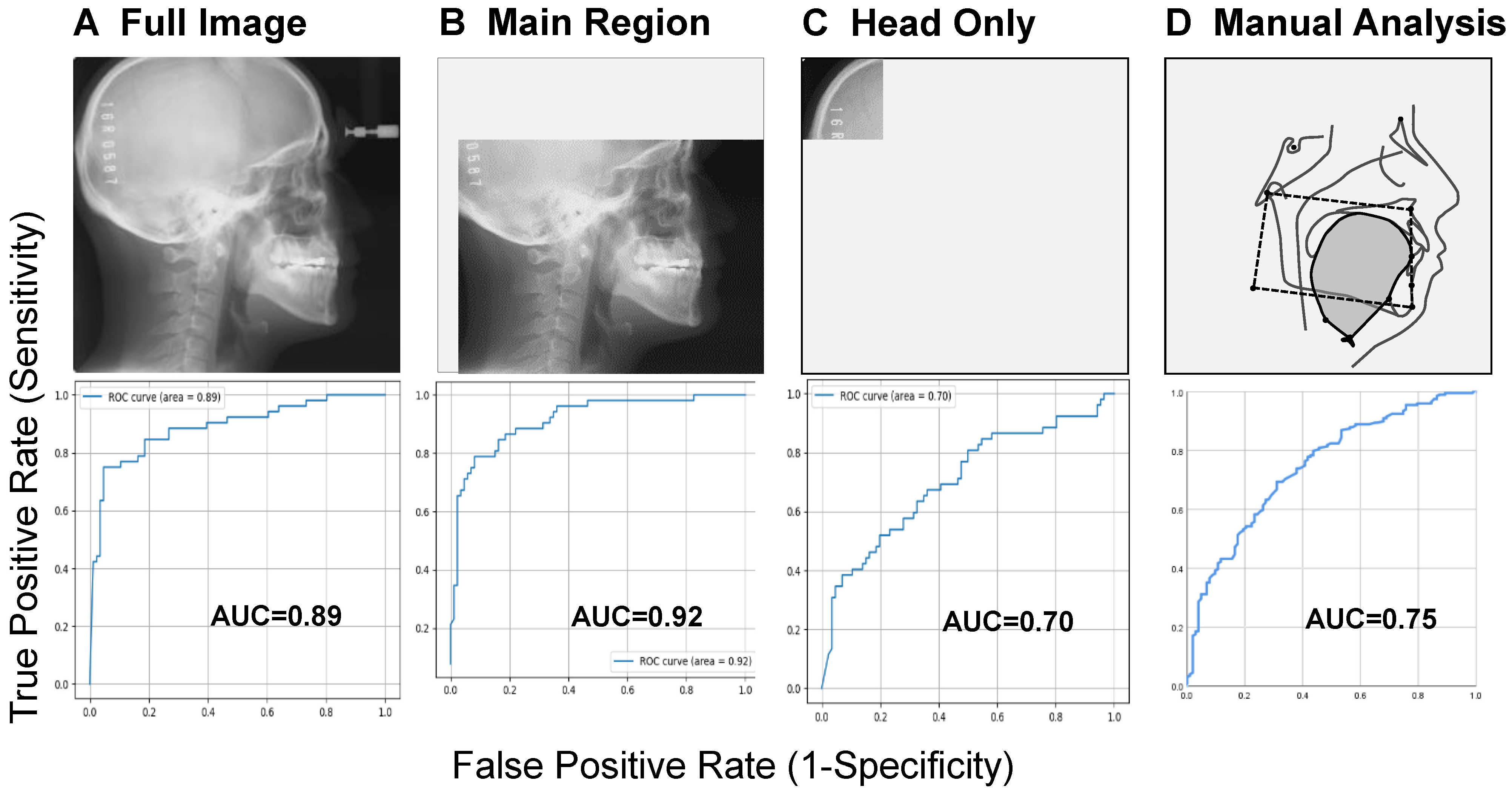

4. What Does AI Focus on in a Lateral Cephalometric Image?

5. Interpretability and Stratification in AI-Based OSA Detection

6. What AI Can See in Images That Humans Cannot: Perspectives

7. Conclusions

8. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsuiki, S.; Furuhashi, A.; Ito, E.; Fukuda, T. Artificial Intelligence to Detect Obstructive Sleep Apnea from Craniofacial Images: A Narrative Review. Oral 2025, 5, 76. https://doi.org/10.3390/oral5040076
