1. Introduction

Diagnosing oral white lesions is complex due to the broad spectrum of conditions they encompass, ranging from benign reactive lesions to potentially serious dysplastic or neoplastic ones. Characteristic features can help distinguish these lesions, but similarities often complicate the diagnosis, necessitating biopsies for confirmation. Establishing a definitive diagnosis is crucial to avoid delays in treating patients with more severe conditions [1,2].

Elementary white mucosal lesions (EWMLs) constitute only 5% of oral pathologies, yet some, such as leukoplakia and lichen planus, carry significant malignant potential, with reported transformation rates ranging from 0.5% to 100% [3]. Therefore, a thorough clinical diagnostic approach is essential to rule out malignancy or premalignancy.

The clinical signs of these conditions vary, and only senior oral medicine experts have demonstrated high reliability in distinguishing them; general dentists, especially trainees, may show lower diagnostic accuracy. An AI system tailored to assist dental students and trainees could therefore be a valuable tool for distinguishing EWMLs [3,4,5].

EWMLs can result from a thickened keratotic layer or the accumulation of non-keratotic material, arising from factors such as local frictional irritation, immunologic reactions, or more serious processes like premalignant or malignant transformation [3].

Frictional hyperkeratosis, a benign lesion, is characterized by epithelial thickening due to chronic irritation, commonly from ill-fitting dental appliances or habitual cheek biting [6]. Oral leukoplakia (OL) presents as a white patch or plaque that cannot be clinically or histologically attributed to any other disease and is often associated with irritants such as tobacco and alcohol [7]. Oral lichen planus (OLP) is a chronic mucocutaneous disorder believed to be immune-mediated, presenting with various patterns, including reticular and erosive forms [8,9]. Oral lichenoid reactions (OLR) resemble OLP but are triggered by medications or dental materials [9,10,11]. Oral condylomas and papillomas are benign HPV-related lesions that appear as whitish growths on the mucosa [12,13,14]. Oral candidiasis, or thrush, is a fungal infection that occurs in several forms, including pseudomembranous and hyperplastic candidiasis [15,16,17].

Several mobile applications have been proposed to improve the clinical recognition of oral lesions among medical and dental professionals [18,19]. Machine learning (ML), a subset of artificial intelligence (AI), has emerged as a potent tool for supporting diagnostic tasks in various fields, including healthcare and dentistry. Deep learning (DL) is a subset of ML that uses neural networks with many layers [8,9]. These networks are designed to automatically learn and extract features from raw data through hierarchical representations [10]. In the context of this study, DL refers to the use of such neural network architectures to analyze and classify clinical images of oral lesions, enabling the model to detect and differentiate between various types of lesions with high accuracy [11].

DL employs multi-layered neural networks, such as convolutional neural networks (CNNs), to discern intricate patterns in data, particularly in complex structures such as medical images. CNNs have played a crucial role in detecting a variety of medical conditions, such as breast cancer in mammograms, skin cancer in clinical screenings, and diabetic retinopathy in retinal images. Similarly, in dentistry, CNNs have shown high accuracy in identifying conditions such as periodontal bone loss, apical lesions, and caries lesions [8,9,12].
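To make the idea of automatic feature extraction more concrete, the sketch below applies one 3 × 3 filter to a tiny grayscale patch, which is the basic operation a convolutional layer performs (strictly a cross-correlation, as in most DL frameworks). The filter is a hand-picked, Sobel-like horizontal-gradient kernel chosen purely for illustration, and the patch values are hypothetical; in a trained CNN, filter weights are learned from data rather than fixed.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with no padding ('valid' mode) and stride 1.

    Like most deep learning frameworks, this computes a cross-correlation
    (the kernel is not flipped), which is what a convolutional layer applies.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hand-picked Sobel-like kernel that responds to left-to-right intensity increases.
# In a trained CNN, kernel weights are learned from data rather than fixed like this.
SOBEL_X = [[-1.0, 0.0, 1.0],
           [-2.0, 0.0, 2.0],
           [-1.0, 0.0, 1.0]]

# Toy 4x5 grayscale patch: darker mucosa on the left, a bright white area on the right.
patch = [[10.0, 10.0, 10.0, 200.0, 200.0] for _ in range(4)]

response = conv2d_valid(patch, SOBEL_X)
print(response)  # [[0.0, 760.0, 760.0], [0.0, 760.0, 760.0]]
```

The response is zero over the uniform dark region and large where intensity jumps from dark to white. Stacking many learned filters of this kind, interleaved with nonlinearities, is what allows deeper layers to respond to more complex shapes, contours, and textures.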

AI has transformed medical and dental diagnostics by analyzing and classifying data, enabling precise diagnoses. DL models, especially CNNs, extract features such as gradient differences and analyze shape, contour, and pattern, facilitating efficient detection and differentiation of EWMLs [20,21,22,23,24,25].

The relevance of AI to oral health is profound. By improving early detection of potentially malignant lesions, AI can enable timely interventions and better patient outcomes. The consistency and objectivity of AI analyses reduce variability and enhance diagnostic accuracy, making the diagnostic process more reliable. Moreover, AI tools can streamline workflows, saving time and resources for practitioners and patients. In educational settings, AI can aid in training future dental professionals to diagnose and manage various oral conditions effectively.

To date, however, there is a lack of AI systems tailored to differentiate EWMLs. Our research aims to fill this gap by exploring the effectiveness of a deep learning model in detecting and classifying EWMLs in oral images. The central research question driving this study is: How effective is a deep learning model in detecting and classifying EWMLs in oral images? We hypothesize that the model will achieve high accuracy, potentially outperforming traditional diagnostic methods, and will effectively assist trainee dentists and dental students in differentiating EWMLs, thereby improving their diagnostic skills and contributing to more accurate clinical outcomes.

2. Materials and Methods

The initial dataset consisted of 231 clinical oral photographs selected from the database of the Oral Medicine Unit at University Hospital “Policlinico Paolo Giaccone” Palermo, Italy.

The study protocol conformed to the ethical guidelines of the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

These photographs were selected from the database between 29 March 2024 and 8 July 2024. The photographs, which had been taken before this period, were included in the dataset after informed consent had been obtained from the patients (ethical approval no. 12, 7 May 2024). The dataset aimed to represent various oral conditions, including oral leukoplakia, striae and reticular figurations, morsicatio buccarum, linea alba, hyperplastic candidiasis, papilloma, and condyloma. Of these, 10 photos were discarded during the labeling phase because they did not meet the basic requirements for accurate lesion detection (e.g., poor focus, inadequate lighting, or repetitive content), resulting in a final dataset of 221 photos. Excluding such images was considered critical to ensure the integrity of the training and evaluation data and thus to support reliable model performance. After a definitive histopathologic diagnosis (when necessary), the photographs were categorized into six distinct classes depicting various manifestations of oral mucosal lesions.

The classification comprised 60 images of striate-reticular lesions, 30 of morsicatio buccarum, 24 of linea alba, 39 of leukoplakia, 40 of condyloma-papilloma, and 28 of oral candidiasis (Table 1). To ensure diversity and randomness in the dataset, photographs of both male and female patients were randomly selected.

A Nikon D7200 digital camera, equipped with a 105 mm lens and an SB-R200 macro flash, was used to photograph the oral mucosal lesions. Image dimensions were either 6000 × 4000 or 4800 × 3200 pixels, with a horizontal and vertical resolution of 300 dpi, a 24-bit color depth, and the sRGB color space. The input images were resized while preserving their original aspect ratio, with the maximum dimension set to 1024 pixels and the minimum dimension set to 600 pixels. These specifications ensured high-quality images suitable for detailed analysis and classification.
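The resizing rule can be read in more than one way. One common interpretation, used for example by the `keep_aspect_ratio_resizer` of the TensorFlow Object Detection API, scales the shorter side to the minimum dimension unless that would push the longer side past the maximum dimension, in which case the longer side is capped instead. Whether SentiSight.AI applies exactly this rule is an assumption; the sketch only shows what the stated limits would imply under it.

```python
from typing import Tuple


def keep_aspect_ratio_size(width: int, height: int,
                           min_dim: int = 600, max_dim: int = 1024) -> Tuple[int, int]:
    """Return (new_width, new_height) preserving the aspect ratio.

    Assumed rule: scale the shorter side to `min_dim`; if the longer side would
    then exceed `max_dim`, scale the longer side to `max_dim` instead.
    """
    short_side, long_side = min(width, height), max(width, height)
    scale = min_dim / short_side
    if long_side * scale > max_dim:
        scale = max_dim / long_side
    return round(width * scale), round(height * scale)


# The two native resolutions reported for the Nikon D7200 photographs
for w, h in [(6000, 4000), (4800, 3200)]:
    print((w, h), "->", keep_aspect_ratio_size(w, h))  # both -> (900, 600)
```

Under this reading, both 3:2 camera resolutions map to 900 × 600 pixels, and the 1024-pixel cap only applies to images more elongated than about 1.7:1 (1024/600).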

2.1. Labeling Phase

A manual annotation process was used to label the photographs in the dataset. Image labeling and model training were conducted using the SentiSight.AI web-based tool (Neurotechnology Co.®, Vilnius, Lithuania). Patient privacy was ensured through a confidentiality agreement with Neurotechnology, confirming that images on the SentiSight.AI platform were used only for model training, were not shared with third parties, and were stored securely. Each photograph was examined thoroughly by trained senior experts in oral medicine, who outlined the regions of interest (ROIs) corresponding to the lesions with bounding boxes. Each ROI was then assigned the class identifier corresponding to the type of oral mucosal lesion present.

When a lesion spanned a large area, a single box would have included irrelevant elements such as teeth; in these cases, clinicians drew multiple bounding boxes to make full use of the photograph without introducing elements unrelated to the lesion. This approach aimed to provide the model with cleaner training data and to minimize the risk of it learning extraneous information.
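A minimal sketch of how such annotations could be represented: each image carries a list of axis-aligned boxes, each with its own class identifier, so a single extended lesion simply appears as several boxes with the same label. In an IoU-based evaluation such as the one in Section 2.3, each of these boxes would typically count as a separate ground-truth object. The field names, pixel-coordinate convention, label strings, and file name are illustrative assumptions and do not reflect SentiSight.AI's actual export format.

```python
from dataclasses import dataclass, field
from typing import List

# Class identifiers used in this study (the exact label strings are illustrative)
CLASSES = frozenset({"striate-reticular", "morsicatio buccarum", "linea alba",
                     "leukoplakia", "condyloma-papilloma", "oral candidiasis"})


@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates with its class label."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class AnnotatedImage:
    file_name: str
    width: int
    height: int
    boxes: List[Box] = field(default_factory=list)

    def add_box(self, box: Box) -> None:
        """Append a box after checking its label and that it lies inside the image."""
        if box.label not in CLASSES:
            raise ValueError(f"unknown class: {box.label}")
        if not (0 <= box.x_min < box.x_max <= self.width
                and 0 <= box.y_min < box.y_max <= self.height):
            raise ValueError("box is degenerate or outside the image")
        self.boxes.append(box)


# One extended leukoplakia annotated as two adjacent boxes that avoid the teeth
# (hypothetical file name and coordinates).
img = AnnotatedImage("case_001.jpg", width=900, height=600)
img.add_box(Box(100, 250, 380, 420, "leukoplakia"))
img.add_box(Box(380, 300, 610, 470, "leukoplakia"))
print(len(img.boxes), sum(b.area() for b in img.boxes))  # 2 86700
```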

In some cases, clinicians inverted the image colors (negative) to facilitate the recognition of certain lesions.
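For 8-bit-per-channel images such as the 24-bit sRGB photographs described above, a color negative replaces each channel value v with 255 − v. The plain-Python sketch below illustrates this on hypothetical pixel values; the paper does not state which software was used for the inversion. Applying the operation twice returns the original image, so the negative view is fully reversible.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B) values, as in 24-bit sRGB images


def invert_rgb(image: List[List[Pixel]]) -> List[List[Pixel]]:
    """Return the photographic negative: each 8-bit channel v becomes 255 - v."""
    return [[(255 - r, 255 - g, 255 - b) for (r, g, b) in row] for row in image]


# Toy 1x2 image: a whitish keratotic pixel next to a pinkish mucosal pixel (hypothetical)
toy = [[(235, 230, 225), (200, 120, 130)]]
print(invert_rgb(toy))  # [[(20, 25, 30), (55, 135, 125)]]
```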

2.2. Model Training

The detection algorithm used for training was Faster R-CNN with a ResNet-101 backbone network (44.5 million parameters, 347 layers), which performed both the detection and classification tasks. The network was initialized with weights pre-trained on the MS COCO dataset, leveraging the rich feature representations learned from a diverse range of objects and scenes, and the entire network was then fine-tuned on our dataset of oral mucosal lesion images.

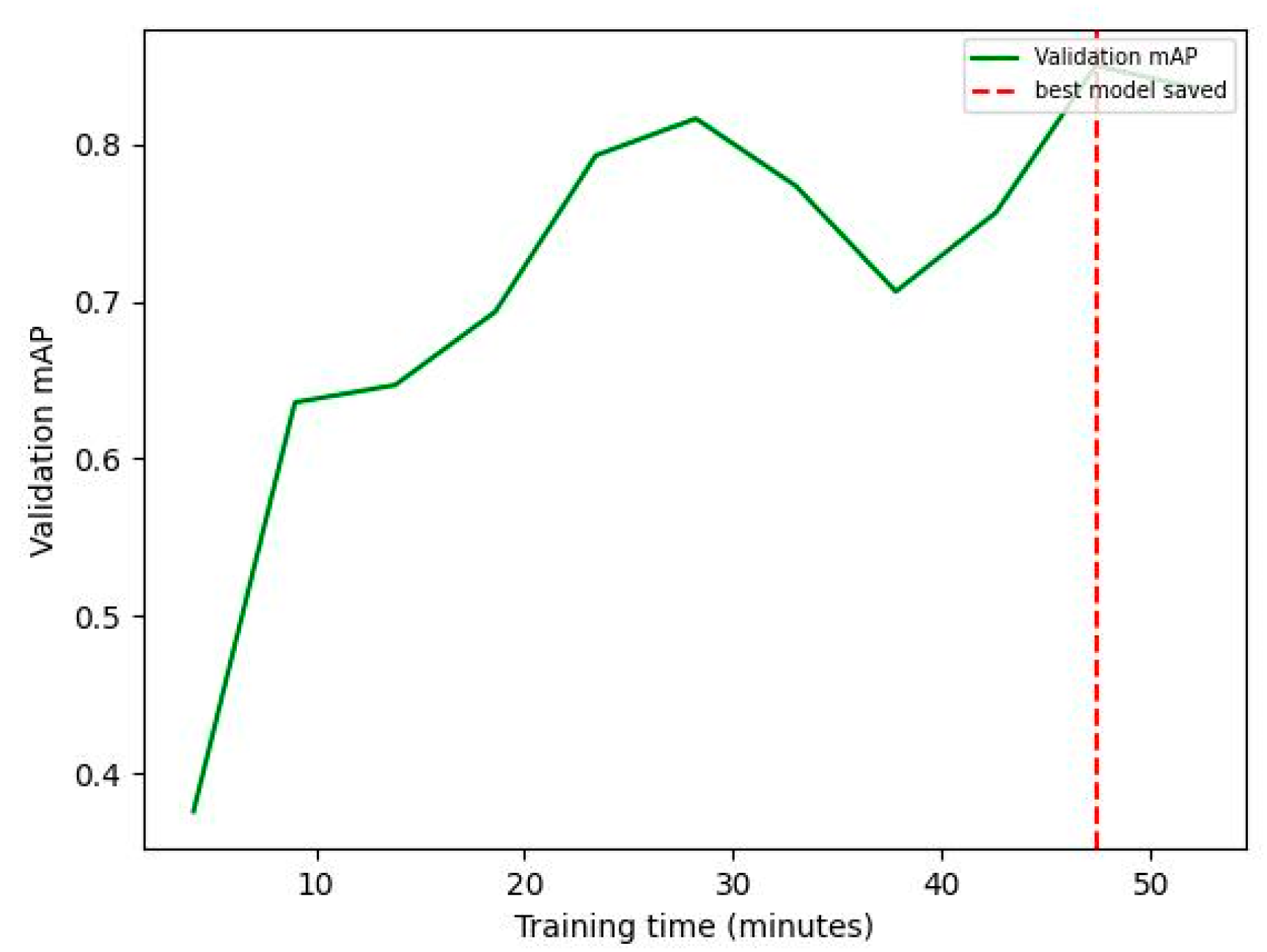

The total training time was 60 min and 27 s. For each of the six classes, a random subset of approximately 80% of the images (179 photos in total) served as the training set, while the remaining ~20% (42 photos) constituted the validation set. During fine-tuning, a constant learning rate of 10 × 10⁻³ (i.e., 0.01) and the large model size were used throughout training, ensuring stable convergence while updating the network parameters. The stochastic gradient descent (SGD) optimizer with momentum was used for efficient optimization, facilitating rapid convergence toward a locally optimal solution. A batch size of one image was used, which accommodates variation in image characteristics and lesion appearance across the dataset. The cross-entropy loss function was used. The model checkpoint was selected based on the highest mean average precision (mAP) achieved on the validation set, which occurred at 47 min and 22 s (Figure 1). Training was performed on the SentiSight.AI web-based platform; the hardware configuration is not expected to affect prediction accuracy and is therefore not reported, as the focus of this study lies on the methodology, algorithms, and data quality.
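The data split and optimizer described above can be sketched as follows, under stated assumptions. The split is stratified, i.e., shuffled and divided separately within each class using the per-class counts reported in Section 2. Simply rounding 80% per class yields 176 training and 45 validation images rather than the reported 179/42, so the platform presumably uses a different, undocumented allocation rule. The momentum coefficient of 0.9 is an assumption (the paper does not report it), the learning rate of 0.01 corresponds to the reported 10 × 10⁻³, and the quadratic toy loss is purely illustrative; the actual model was trained with a cross-entropy loss on SentiSight.AI.

```python
import random
from typing import Dict, List, Tuple

# Per-class image counts as reported in Section 2 (Table 1)
CLASS_COUNTS: Dict[str, int] = {
    "striate-reticular": 60, "morsicatio buccarum": 30, "linea alba": 24,
    "leukoplakia": 39, "condyloma-papilloma": 40, "oral candidiasis": 28,
}

ImageId = Tuple[str, int]  # hypothetical (class label, index) identifier


def stratified_split(counts: Dict[str, int], train_frac: float = 0.8,
                     seed: int = 0) -> Tuple[List[ImageId], List[ImageId]]:
    """Shuffle each class separately and keep ~train_frac of it for training."""
    rng = random.Random(seed)
    train: List[ImageId] = []
    val: List[ImageId] = []
    for label, n in counts.items():
        ids = [(label, i) for i in range(n)]
        rng.shuffle(ids)
        n_train = round(train_frac * n)  # rounding rule is an assumption
        train.extend(ids[:n_train])
        val.extend(ids[n_train:])
    return train, val


def sgd_momentum_step(w: float, velocity: float, grad: float,
                      lr: float = 0.01, momentum: float = 0.9) -> Tuple[float, float]:
    """Classical momentum: velocity <- momentum*velocity - lr*grad; w <- w + velocity."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


train, val = stratified_split(CLASS_COUNTS)
print(len(train), len(val))  # 176 45 under this rounding rule (the study reports 179/42)

# Toy example: minimize L(w) = (w - 3)^2, whose gradient is 2(w - 3).
w, velocity = 0.0, 0.0
for _ in range(500):
    w, velocity = sgd_momentum_step(w, velocity, grad=2.0 * (w - 3.0))
print(f"{w:.4f}")  # converges to approximately 3
```

Stratifying by class keeps small classes such as linea alba (24 images) represented in the validation set, which a single random split over all 221 images would not guarantee.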

2.3. Model Validation and Evaluation

Model performance was evaluated on the validation set, which comprised 42 images unseen during training, ensuring an independent assessment. An intersection over union (IoU) threshold of 0.5 was used to assess prediction performance. IoU is the area of overlap between the predicted bounding box and the ground truth box divided by the area of their union, with higher values indicating a closer match. IoU was used to classify predictions as true positives (TP) or false positives (FP) and to identify false negatives (FN): a predicted bounding box with an IoU ≥ 0.5 with the ground truth bounding box was considered a TP, whereas one with an IoU < 0.5 was considered an FP. A ground truth bounding box that was not detected by the model was considered an FN.
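The IoU criterion can be sketched as below. The text does not specify how detections are matched when several overlap the same lesion, or how class labels enter the matching, so this sketch assumes one common convention (Pascal VOC-style): matching is done per class and per image, predictions are visited in descending confidence, and a second detection of an already-matched lesion counts as an FP. The coordinates and confidence scores in the example are hypothetical.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Area of intersection divided by area of union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp_fn(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                   thr: float = 0.5) -> Tuple[int, int, int]:
    """Count TP/FP/FN for one class in one image (Pascal VOC-style matching).

    Predictions are visited in descending confidence. Each is a TP if its
    best-overlapping ground truth has IoU >= thr and is not yet matched;
    otherwise it is an FP. Ground truths never matched are FNs.
    """
    matched = [False] * len(gts)
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        overlaps = [iou(box, gt) for gt in gts]
        best = max(range(len(gts)), key=lambda j: overlaps[j], default=-1)
        if best >= 0 and overlaps[best] >= thr and not matched[best]:
            matched[best] = True
            tp += 1
        else:
            fp += 1  # low overlap, or a duplicate of an already-matched lesion
    fn = matched.count(False)
    return tp, fp, fn


gts = [(100, 100, 200, 200), (300, 300, 400, 400)]
preds = [((110, 110, 210, 210), 0.9),   # overlaps the first lesion well
         ((500, 500, 550, 550), 0.6)]   # spurious detection
print(round(iou(gts[0], preds[0][0]), 3))  # 0.681
print(count_tp_fp_fn(preds, gts))          # (1, 1, 1)
```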

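Global metrics for the validation set were computed using precision, sensitivity, F1 score (the harmonic mean of precision and sensitivity, balancing the two metrics), and mAP (Equation (1)):

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Sensitivity} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}},
$$

$$
AP = \int_0^1 p(r)\,dr, \qquad
mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i,
$$

where p(r) is precision as a function of recall (sensitivity) r, AP_i is the average precision of class i, and N is the number of classes.

Equation (1): Metrics of performance for CNN models. Abbreviations: FN, false negative; FP, false positive; TP, true positive; AP, average precision; mAP, mean average precision.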
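The definitions in Equation (1) translate directly into code. The sketch below computes precision, sensitivity, and F1 from TP/FP/FN counts (such as those reported in Table 4) and computes AP with all-point interpolation of the precision-recall curve, one common convention (used in Pascal VOC evaluation from 2010 onward). The paper does not state which AP interpolation SentiSight.AI uses, and all counts and detections in the example are hypothetical. Note also that global values can be obtained either by pooling counts across classes or by averaging per-class values, and the two generally differ.

```python
from typing import Dict, List, Tuple


def precision_sensitivity_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, sensitivity (recall), and F1 as defined in Equation (1)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return precision, sensitivity, f1


def average_precision(hits: List[bool], n_ground_truth: int) -> float:
    """All-point interpolated AP for one class.

    `hits` lists that class's detections sorted by descending confidence,
    True for a TP and False for an FP (e.g., from IoU >= 0.5 matching).
    """
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for hit in hits:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    # Add sentinels, then make precision monotonically non-increasing in recall.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the interpolated precision-recall curve.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical counts and detections, for illustration only
p, s, f1 = precision_sensitivity_f1(tp=8, fp=2, fn=4)
ap_a = average_precision([True, False, True], n_ground_truth=3)  # one lesion missed
print(round(p, 3), round(s, 3), round(f1, 3))
print(round(ap_a, 4))  # 0.5556
print(round(mean_average_precision({"class A": ap_a, "class B": 1.0}), 4))
```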

3. Results

The evaluation of the five-class object detection model was conducted on the validation set. Overall statistics for the entire validation test are summarized in

Table 2, while detailed statistics for each class are reported in

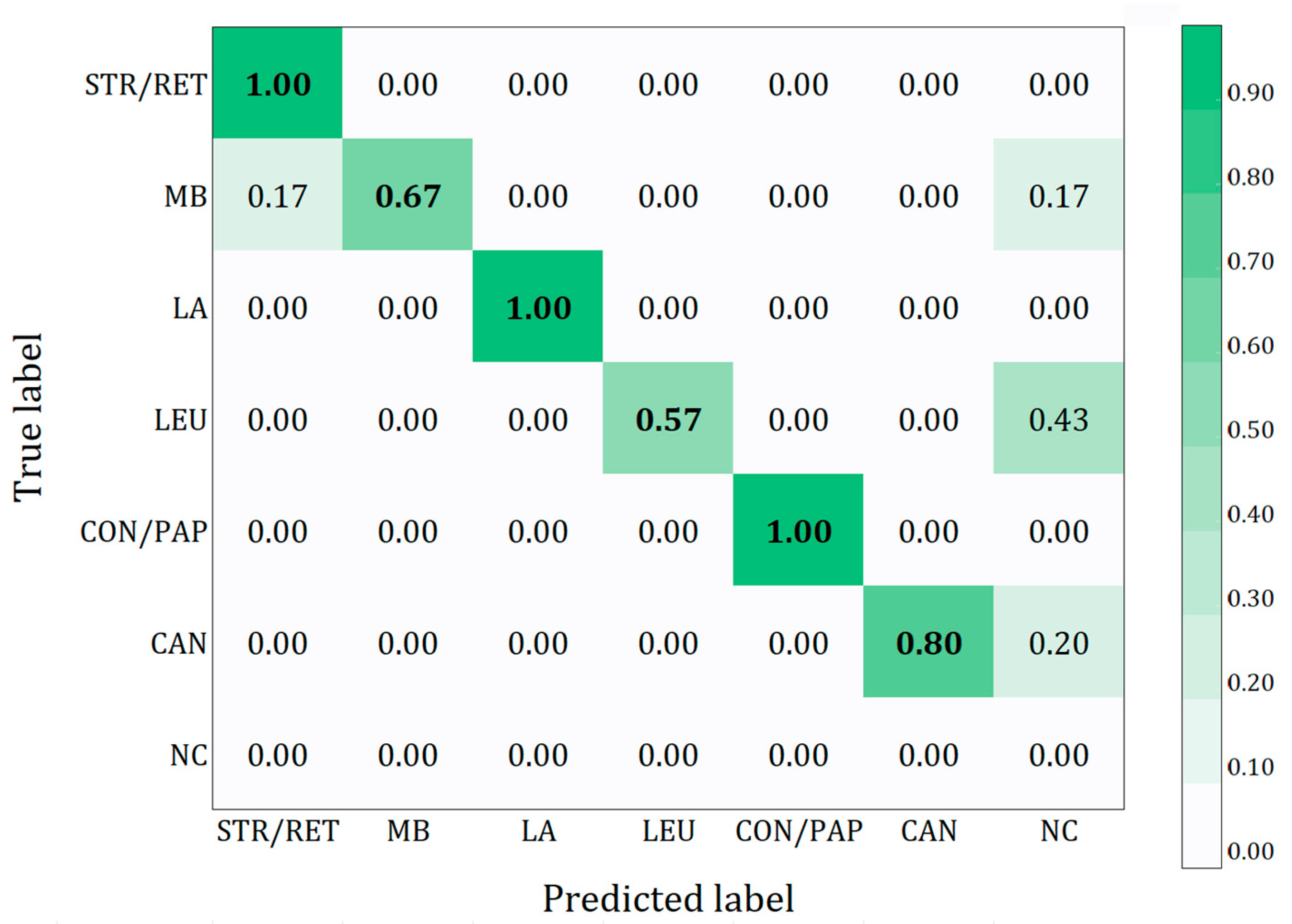

Table 3. Globally, the model achieved a precision of 77.2%, sensitivity of 76.0%, F1 score of 74.4%, and an mAP of 82.3%. To provide a clearer overview of the results, a confusion matrix was utilized, enabling a more comprehensive understanding of the model’s performance in terms of correctly and incorrectly classified instances for each class (

Figure 2). Additionally,

Table 4 shows the counts of TP, FP, and FN for each class. The confusion matrix provides a detailed overview of the model’s performance in correctly classifying different oral lesions in the study. The results show a high accuracy for the striate-reticular lesions, linea alba, and condyloma–papilloma classes, with a value of 1.00, indicating that all images in these classes were classified correctly. The morsicatio buccarum class achieved an accuracy of 0.67, with 0.17 of the images misclassified as striate-reticular lesions and an additional 0.17 not detected. The oral candidiasis class obtained an accuracy of 0.80, with 0.20 of the lesions not detected, while the leukoplakia class showed a lower accuracy of 0.57, with 0.43 of the lesions not detected. This matrix highlights the strengths and critical areas of the model, providing a clearer understanding of the results obtained.
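The proportions quoted above appear to be row-normalized values: each row of the confusion matrix is divided by the number of ground-truth instances of that class, with an extra "not detected" column for missed lesions (the FNs). The sketch below illustrates this with hypothetical counts (4, 1, 1), chosen only because they reproduce the 0.67/0.17/0.17 pattern of the morsicatio buccarum row; the actual values are those shown in Figure 2.

```python
from typing import Dict


def row_normalize(counts: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, float]]:
    """Turn raw counts (true class -> predicted class or 'not detected') into
    per-row proportions, as displayed in a normalized confusion matrix."""
    out: Dict[str, Dict[str, float]] = {}
    for true_cls, row in counts.items():
        total = sum(row.values())
        out[true_cls] = {pred: (c / total if total else 0.0) for pred, c in row.items()}
    return out


# Hypothetical counts whose proportions mirror the morsicatio buccarum row
counts = {"morsicatio buccarum": {"morsicatio buccarum": 4,
                                  "striate-reticular": 1,
                                  "not detected": 1}}
norm = row_normalize(counts)
print({k: round(v, 2) for k, v in norm["morsicatio buccarum"].items()})
# {'morsicatio buccarum': 0.67, 'striate-reticular': 0.17, 'not detected': 0.17}
```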

4. Discussion

In recent years, there has been significant interest in developing AI systems for the computer-aided diagnosis of oral lesions using clinical images. These systems, particularly deep learning algorithms, are designed to differentiate malignant oral lesions from benign ones using various imaging modalities, including clinical photographs and histopathological images [16,17,18,19,20].

AI techniques using CNNs for disease diagnosis have been extensively explored across various fields, particularly histopathology and dermatology [18,19,20]. Similarly, CNNs show promise in dentistry: AI-based detection using clinical photographs has demonstrated a high diagnostic odds ratio [18,21].

In this pilot study, we developed an object detection model capable of detecting the presence of EWMLs in images, a task that, while straightforward for an expert, remains challenging for trainee dentists and dental students.

Several studies have demonstrated the potential of AI-based systems for classifying oral lesions. CNN-based models have shown diagnostic performance comparable to that of expert clinicians in classifying oral squamous cell carcinoma (OSCC) and oral potentially malignant disorders (OPMDs) using oral photographs [19,20].

To date, however, AI systems enabling the detection and classification of EWMLs are still lacking.

The development of an effective AI system for detecting and diagnosing oral lesions faces several challenges. One of the primary difficulties is obtaining large, diverse, and well-annotated datasets of oral lesion images. Current datasets are often small and limited to specific types of lesions, making it challenging to train robust AI models [22,23]. Additionally, integrating these AI systems into clinical workflows is essential to assist clinicians, especially general practitioners. These systems should improve the accuracy of early cancer diagnosis and support expert-level decision-making in screening programs for oral malignant and potentially malignant lesions. AI functions as a complementary tool rather than a replacement for clinical expertise, reaffirming that dentists remain the key decision-makers in the diagnostic process [24,25,26].

Another significant challenge relates to medico-legal issues arising from data privacy concerns and the lack of explainability of the systems used [17,27,28].

The performance of CNN models is significantly influenced by image quality and the number of samples [29]. Capturing images of the oral cavity is particularly challenging because of the lack of natural lighting, the need to retract soft tissues (lips, cheeks, and tongue) to expose certain regions, and the difficulty of standardizing angles, distance, framing, and sharpness [30].

The condyloma–papilloma and hyperplastic candidiasis classes achieved outstanding performance across all statistics, demonstrating the model's ability to distinguish these lesions accurately. High values across all indicators (precision, sensitivity, F1 score, and mAP) for these classes underscore the robustness and reliability of the model in these areas.

The striate-reticular and morsicatio buccarum classes showed relatively balanced performance, with the striate-reticular class achieving a sensitivity of 84.6%. This highlights the model's ability to detect these lesions effectively, although further refinement could improve precision and reduce false positives.

The leukoplakia class exhibited a precision of 60.0% and a sensitivity of 25.0%. This imbalance suggests the need for more balanced and representative data for this class to improve the model's ability to generalize.

The linea alba class also showed very promising results, with a sensitivity of 100.0% and a precision of 80.0%, indicating that the model detects this class effectively.

The results suggest that linea alba, condyloma, and papilloma lesions are comparatively easy for the model to recognize, as their morphological characteristics are clinically distinct from those of the other classes analyzed, which is reflected in high values across all statistics.

This study explored the process of gathering and labeling images of the oral cavity showing EWMLs confirmed by the clinical team, and it showcased the outcomes of using DL to automate the early detection of EWMLs. Our initial findings are promising, indicating that DL methods could be effective in addressing this complex challenge. However, some questions remain open. First, who should perform the image segmentation, that is, draw the bounding boxes used for model training? Second, should oral medicine professionals be specifically trained in image segmentation? In our opinion, such training could also benefit dental trainees and dental students by helping them learn to recognize lesions. The use of DSLR photographs in this study might raise concerns about the applicability and future scalability of the AI model in real-world contexts. Although DSLRs provide high-quality images, smartphone photos are likely to become more prevalent in the future, especially with the development of apps for diagnosing oral lesions. However, smartphone images can vary in quality due to differences in models, camera specifications, lighting conditions, and user handling, which could impact AI performance if the model is not trained on a diverse dataset [4,31,32,33].

To our knowledge, no studies have yet compared the performance of AI systems on images acquired with DSLRs versus smartphones. Therefore, expanding datasets with smartphone images is crucial to enhance the model's generalization in real-world scenarios, such as screening programs or field diagnostics.

5. Limitations and Strengths

The deep learning model developed for detecting EWMLs yielded satisfactory initial results, and the overall outcomes are promising. Nevertheless, a significant limitation is the relatively small size of the dataset, which poses a considerable challenge during model training. Although the model performs well across several classes, specific areas require further improvement. Additional research with larger datasets is necessary to enhance the model's robustness and generalizability.

The study has several notable strengths. The use of Faster R-CNN with a ResNet-101 backbone, a well-established object detection architecture, showcases a sophisticated application of AI in oral medicine diagnosis. Another strength lies in the high performance metrics achieved for certain lesion classes, such as condyloma–papilloma and hyperplastic candidiasis. Additionally, the study suggests that AI-based tools could be especially valuable for training dental students and trainees, enhancing their ability to recognize and understand lesions; this educational aspect could improve training programs and support the development of diagnostic skills. Finally, an interesting aspect of this work is the use of image color inversion (negative) in certain cases to enhance the recognition of specific lesions.

6. Conclusions

The challenge of precise lesion localization in object detection can be addressed by expanding datasets, as larger datasets improve deep learning models’ ability to detect complex patterns and enhance generalizability. Image augmentation techniques like rotation, scaling, and color adjustments can further enhance model robustness. Incorporating images from various devices ensures adaptability to different image qualities and lighting conditions, while regular updates and user feedback help improve model accuracy. Today, there is a noticeable lack of apps that could facilitate the learning path for trainee dentists and dental students, and developing such applications in the future would be beneficial. Additionally, this tool could potentially be extended to identify red, pigmented, and red-and-white lesions. Early exposure to AI systems in dental education is crucial, as it will prepare students to integrate these technologies into future practice. Future research should focus on developing automatic segmentation tools to streamline image annotation, enhancing scalability and efficiency. While AI can assist in lesion detection, dentists are the ultimate decision-makers in diagnosis, ensuring that AI serves as a complementary tool rather than a replacement for their clinical expertise.
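A practical point for augmentation in object detection is that geometric transformations must be applied to the bounding boxes together with the pixels, whereas photometric (color) adjustments leave the boxes unchanged. The sketch below, assuming (x_min, y_min, x_max, y_max) pixel coordinates and hypothetical values, shows this for a horizontal flip, uniform scaling, and a brightness shift. Rotation is omitted because a rotated box must be replaced by a new enclosing box, which usually loosens the fit around the lesion. These helpers are illustrative and are not part of the pipeline used in this study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def hflip_boxes(boxes: List[Box], img_width: float) -> List[Box]:
    """Mirror boxes horizontally to match a left-right flipped image."""
    return [(img_width - x2, y1, img_width - x1, y2) for (x1, y1, x2, y2) in boxes]


def scale_boxes(boxes: List[Box], factor: float) -> List[Box]:
    """Rescale boxes to match an image resized by `factor` in both directions."""
    return [(x1 * factor, y1 * factor, x2 * factor, y2 * factor)
            for (x1, y1, x2, y2) in boxes]


def adjust_brightness(pixel_values: List[int], delta: int) -> List[int]:
    """Shift 8-bit intensities by `delta`, clipping to [0, 255]; boxes stay unchanged."""
    return [min(255, max(0, v + delta)) for v in pixel_values]


boxes = [(100, 250, 380, 420)]           # hypothetical lesion box in a 900-px-wide image
print(hflip_boxes(boxes, img_width=900))  # [(520, 250, 800, 420)]
print(scale_boxes(boxes, 0.5))            # [(50.0, 125.0, 190.0, 210.0)]
print(adjust_brightness([10, 250, 128], 20))  # [30, 255, 148]
```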

Author Contributions

Conceptualization, G.C. and O.D.F.; methodology, G.C., G.G., M.G.C.A.C. and O.D.F.; software, F.K., G.L.M. and M.G.C.A.C.; validation, G.P., F.K. and G.L.M.; investigation, G.L.M.; resources, G.O. and G.G.; data curation, G.P. and M.G.C.A.C.; formal analysis, M.G.C.A.C.; writing—original draft preparation, G.C., G.L.M. and F.K.; writing—review and editing, G.C., G.L.M. and O.D.F.; visualization, G.G., G.O. and G.P.; supervision, G.C. and M.G.C.A.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Local Ethics Committee of the University Hospital “Policlinico Paolo Giaccone”, Palermo, Italy (protocol code: 12/2024; date of approval: 7 May 2024).

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

We gratefully acknowledge the free technical support provided by the algorithm developers at Neurotechnology Co.®, Vilnius, Lithuania, for the analysis of the trained models during the completion of this study. The work was partially supported by the project “OCAX—Oral Cancer eXplained by DL-enhanced case-based classification” PRIN 2022 code P2022KMWX3.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. McKinney, R.; Olmo, H.; McGovern, B. Benign Chronic White Lesions of the Oral Mucosa. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2024.

2. Simi, S.M.; Nandakumar, G.; Anish, T.S. White Lesions in the Oral Cavity: A Clinicopathological Study from a Tertiary Care Dermatology Centre in Kerala, India. Indian J. Dermatol. 2013, 58, 269.

3. Shiragur, S.S.; Srinath, S.; Yadav, S.T.; Purushothaman, A.; Chavan, N. V Spectrum of White Lesions in the Oral Cavity—A Review. J. Oral Med. Oral Surg. Oral Pathol. Oral Radiol. 2024, 10, 3–13.

4. Moosa, Y.; Alizai, M.H.K.; Tahir, A.; Zia, S.; Sadia, S.; Fareed, M.T. Artificial Intelligence in Oral Medicine. Int. J. Health Sci. 2023, 7, 1476–1488.

5. Albagieh, H.; Alzeer, Z.O.; Alasmari, O.N.; Alkadhi, A.A.; Naitah, A.N.; Almasaad, K.F.; Alshahrani, T.S.; Alshahrani, K.S.; Almahmoud, M.I. Comparing Artificial Intelligence and Senior Residents in Oral Lesion Diagnosis: A Comparative Study. Cureus 2024, 16, e51584.

6. Di Fede, O.; Panzarella, V.; Buttacavoli, F.; La Mantia, G.; Campisi, G. Doctoral: A Smartphone-Based Decision Support Tool for the Early Detection of Oral Potentially Malignant Disorders. Digit Health 2023, 9, 20552076231177141.

7. Zain, R.B.; Pateel, D.G.S.; Ramanathan, A.; Kallarakkal, T.G.; Wong, G.R.; Yang, Y.H.; Zaini, Z.M.; Ibrahim, N.; Kohli, S.; Durward, C. Effectiveness of “OralDETECT”: A Repetitive Test-Enhanced, Corrective Feedback Method Competency Assessment Tool for Early Detection of Oral Cancer. J. Cancer Educ. 2022, 37, 319–327.

8. Fatima, A.; Shafi, I.; Afzal, H.; Díez, I.D.L.T.; Lourdes, D.R.S.M.; Breñosa, J.; Espinosa, J.C.M.; Ashraf, I. Advancements in Dentistry with Artificial Intelligence: Current Clinical Applications and Future Perspectives. Healthcare 2022, 10, 2188.

9. Krishnan, G.; Singh, S.; Pathania, M.; Gosavi, S.; Abhishek, S.; Parchani, A.; Dhar, M. Artificial Intelligence in Clinical Medicine: Catalyzing a Sustainable Global Healthcare Paradigm. Front. Artif. Intell. 2023, 6, 1227091.

10. Rakhmatulin, I.; Dao, M.S.; Nassibi, A.; Mandic, D. Exploring Convolutional Neural Network Architectures for EEG Feature Extraction. Sensors 2024, 24, 877.

11. Tanriver, G.; Soluk Tekkesin, M.; Ergen, O.; Koljenovic, S.; Bossy, P.; Bouaoud, J. Automated Detection and Classification of Oral Lesions Using Deep Learning to Detect Oral Potentially Malignant Disorders. Cancers 2021, 13, 2766.

12. Ding, H.; Wu, J.; Zhao, W.; Matinlinna, J.P.; Burrow, M.F.; Tsoi, J.K.H. Artificial Intelligence in Dentistry—A Review. Front. Dent. Med. 2023, 4, 1085251.

13. Parola, M.; La Mantia, G.; Galatolo, F.; Cimino, M.G.C.A.; Campisi, G.; Di Fede, O. Image-Based Screening of Oral Cancer via Deep Ensemble Architecture. In Proceedings of the 2023 IEEE Symposium Series on Computational Intelligence (SSCI), Mexico City, Mexico, 5–8 December 2023; pp. 1572–1578.

14. Chen, Y.-W.; Stanley, K.; Att, W. Artificial Intelligence in Dentistry: Current Applications and Future Perspectives. Quintessence Int. 2020, 51, 248–257.

15. Alzaid, N.; Ghulam, O.; Albani, M.; Alharbi, R.; Othman, M.; Taher, H.; Albaradie, S.; Ahmed, S. Revolutionizing Dental Care: A Comprehensive Review of Artificial Intelligence Applications Among Various Dental Specialties. Cureus 2023, 15, e47033.

16. Hegde, S.; Ajila, V.; Zhu, W.; Zeng, C. Artificial Intelligence in Early Diagnosis and Prevention of Oral Cancer. Asia-Pac. J. Oncol. Nurs. 2022, 9, 100133.

17. Dixit, S.; Kumar, A.; Srinivasan, K. A Current Review of Machine Learning and Deep Learning Models in Oral Cancer Diagnosis: Recent Technologies, Open Challenges, and Future Research Directions. Diagnostics 2023, 13, 1353.

18. Al-Rawi, N.; Sultan, A.; Rajai, B.; Shuaeeb, H.; Alnajjar, M.; Alketbi, M.; Mohammad, Y.; Shetty, S.R.; Mashrah, M.A. The Effectiveness of Artificial Intelligence in Detection of Oral Cancer. Int. Dent. J. 2022, 72, 436.

19. Sathishkumar, R.; Govindarajan, M. A Comprehensive Study on Artificial Intelligence Techniques for Oral Cancer Diagnosis: Challenges and Opportunities. In Proceedings of the 2023 International Conference on System, Computation, Automation and Networking, ICSCAN 2023, Puducherry, India, 17–18 November 2023.

20. Khanagar, S.B.; Alkadi, L.; Alghilan, M.A.; Kalagi, S.; Awawdeh, M.; Bijai, L.K.; Vishwanathaiah, S.; Aldhebaib, A.; Singh, O.G. Application and Performance of Artificial Intelligence (AI) in Oral Cancer Diagnosis and Prediction Using Histopathological Images: A Systematic Review. Biomedicines 2023, 11, 1612.

21. Decroos, F.; Springenberg, S.; Lang, T.; Päpper, M.; Zapf, A.; Metze, D.; Steinkraus, V.; Böer-Auer, A. A Deep Learning Approach for Histopathological Diagnosis of Onychomycosis: Not Inferior to Analogue Diagnosis by Histopathologists. Acta Derm. Venereol. 2021, 101, 107.

22. Rajendran, S.; Lim, J.H.; Yogalingam, K.; Kallarakkal, T.G.; Zain, R.B.; Jayasinghe, R.D.; Rimal, J.; Kerr, A.R.; Amtha, R.; Patil, K.; et al. The Establishment of a Multi-Source Dataset of Images on Common Oral Lesions; Preprint; Research Square: Durham, NC, USA, 2021.

23. Dinesh, Y.; Ramalingam, K.; Ramani, P.; Deepak, R.M. Machine Learning in the Detection of Oral Lesions with Clinical Intraoral Images. Cureus 2023, 15, e44018.

24. Bonny, T.; Al Nassan, W.; Obaideen, K.; Al Mallahi, M.N.; Mohammad, Y.; El-Damanhoury, H.M. Contemporary Role and Applications of Artificial Intelligence in Dentistry. F1000Res 2023, 12, 1179.

25. Batra, A.M.; Reche, A. A New Era of Dental Care: Harnessing Artificial Intelligence for Better Diagnosis and Treatment. Cureus 2023, 15, e49319.

26. Roganović, J.; Radenković, M.; Miličić, B. Responsible Use of Artificial Intelligence in Dentistry: Survey on Dentists’ and Final-Year Undergraduates’ Perspectives. Healthcare 2023, 11, 1480.

27. Di Fede, O.; La Mantia, G.; Cimino, M.G.C.A.; Campisi, G. Protection of Patient Data in Digital Oral and General Health Care: A Scoping Review with Respect to the Current Regulations. Oral 2023, 3, 155–165.

28. Parola, M.; Galatolo, F.A.; La Mantia, G.; Cimino, M.G.C.A.; Campisi, G.; Di Fede, O. Towards Explainable Oral Cancer Recognition: Screening on Imperfect Images via Informed Deep Learning and Case-Based Reasoning. Comput. Med. Imaging Graph. 2024, 117, 102433.

29. Guo, J.; Wang, H.; Xue, X.; Li, M.; Ma, Z. Real-Time Classification on Oral Ulcer Images with Residual Network and Image Enhancement. IET Image Process 2022, 16, 641–646.

30. Fabiane, R.; Gomes, T.; Schmith, J.; Marques De Figueiredo, R.; Freitas, S.A.; Nunes Machado, G.; Romanini, J.; Coelho Carrard, V. Use of Artificial Intelligence in the Classification of Elementary Oral Lesions from Clinical Images. Int. J. Environ. Res. Public Health 2023, 20, 3894.

31. Ciecierski-Holmes, T.; Singh, R.; Axt, M.; Brenner, S.; Barteit, S. Artificial Intelligence for Strengthening Healthcare Systems in Low- and Middle-Income Countries: A Systematic Scoping Review. npj Digit. Med. 2022, 5, 162.

32. Gore, J.C. Artificial Intelligence in Medical Imaging. Magn. Reson. Imaging 2020, 68, A1–A4.

33. Mintz, Y.; Brodie, R. Introduction to Artificial Intelligence in Medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).