Real-Time Caries Detection of Bitewing Radiographs Using a Mobile Phone and an Artificial Neural Network: A Pilot Study

Abstract

1. Introduction

2. Background

What Is AI?

3. Materials and Method

3.1. Ethics

3.2. Study Design

3.3. Data Acquisition and Annotation

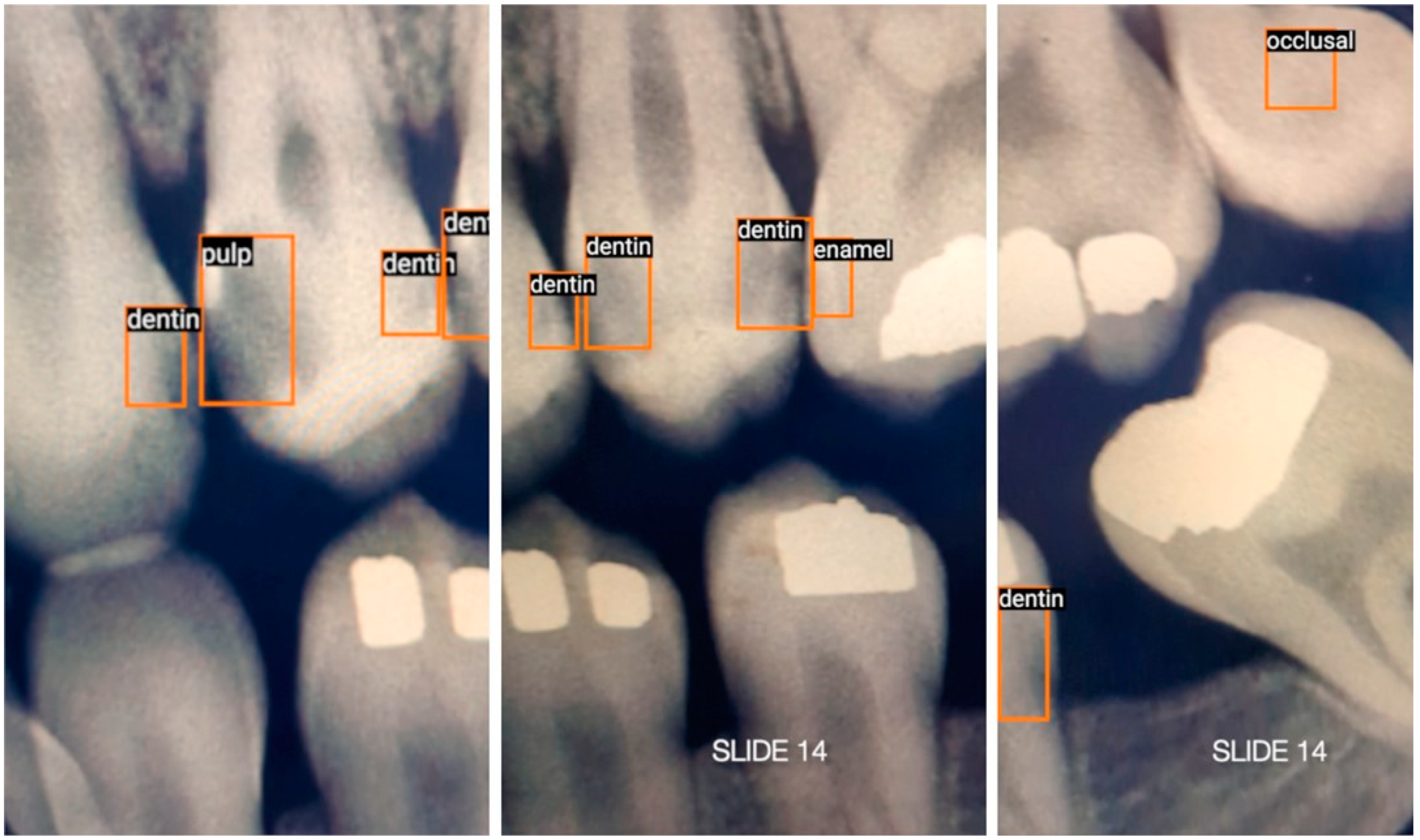

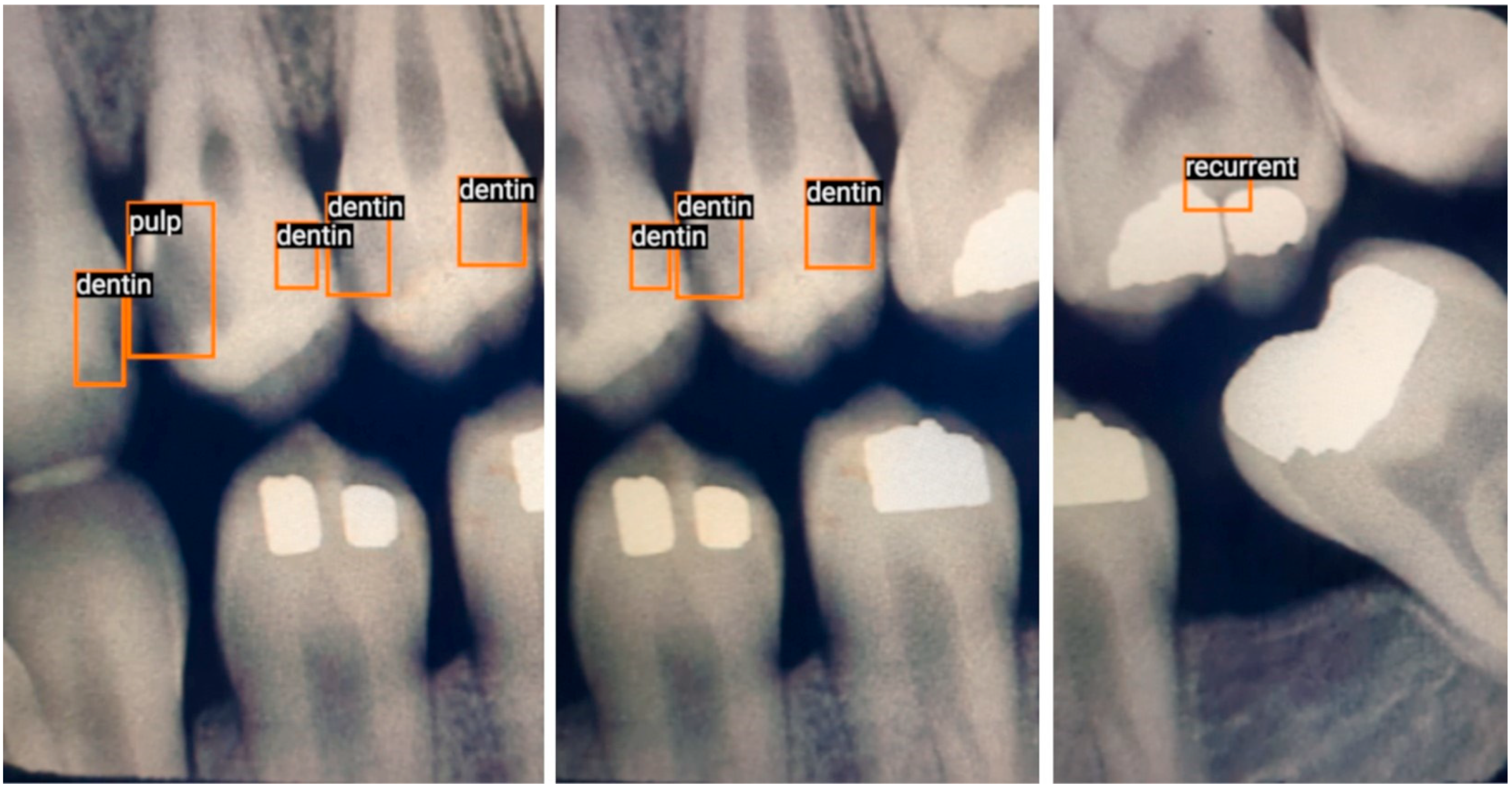

- Enamel: Interproximal caries visible only within enamel;

- Dentin: Interproximal caries visible within dentin. Caries within overlapping contacts were ignored if they did not extend to the dentinoenamel junction;

- Pulp: Caries near or extending to the pulp chamber;

- Recurrent: Caries under existing restorations;

- Occlusal: Caries under the occlusal surface, including superimposed buccal or lingual/palatal caries.

3.4. Training and Augmentation

3.5. Testing

3.6. Technical Methodology

3.7. Metrics

- True positive (TP): The number of correct detections in the presence of true caries.

- False positive (FP): The number of incorrect detections in the absence of true caries.

- False negative (FN): The number of missed detections in the presence of true caries.

Additionally, the following performance metrics were reported:

- Sensitivity (Recall, True Positive Rate (TPR)) = TP/(TP + FN).

- Precision (Positive Predictive Value (PPV)) = TP/(TP + FP).

- F1 Score = 2TP/(2TP + FP + FN).
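
As a minimal sketch of the definitions above (not the author's code), the three metrics can be computed directly from the detection counts. The `DetectionCounts` class and the function names are hypothetical and introduced only for illustration; the example counts (TP = 27, FP = 12, FN = 13) are those reported for handheld detection in the results table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionCounts:
    """Hypothetical container for detection counts (illustrative only)."""
    tp: int  # correct detections where true caries is present
    fp: int  # detections where no true caries is present
    fn: int  # true caries that were missed


def sensitivity(c: DetectionCounts) -> float:
    """Sensitivity (recall, TPR) = TP / (TP + FN)."""
    return c.tp / (c.tp + c.fn)


def precision(c: DetectionCounts) -> float:
    """Precision (PPV) = TP / (TP + FP)."""
    return c.tp / (c.tp + c.fp)


def f1_score(c: DetectionCounts) -> float:
    """F1 = 2TP / (2TP + FP + FN)."""
    return 2 * c.tp / (2 * c.tp + c.fp + c.fn)


# Handheld-detection counts taken from the results table.
handheld = DetectionCounts(tp=27, fp=12, fn=13)
print(f"sensitivity = {sensitivity(handheld):.3f}")  # 0.675
print(f"precision   = {precision(handheld):.3f}")    # 0.692
print(f"F1 score    = {f1_score(handheld):.3f}")     # 0.684
```

None of the three formulas needs a true-negative count. The sketch does not guard against a zero denominator, so it assumes at least one detection and at least one true lesion.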

4. Results

4.1. Moving Mobile Phone Detecting Stationary BWRs in Real Time

4.2. Stationary Mobile Phone Detection of Moving Video of BWRs in Real Time

5. Discussion

5.1. Subjective Goals

5.2. Comparing the Results

5.3. Comparison with Other Studies

5.4. Ethics of Using Internet Images

5.5. Potential Benefits of Using Internet Images

5.6. Limitations

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Schwendicke, F.; Dörfer, C.E.; Schlattmann, P.; Page, L.F.; Thomson, W.M.; Paris, S. Socioeconomic inequality and caries: A systematic review and meta-analysis. J. Dent. Res. 2015, 94, 10–18.

2. Wang, T.; Mathur, M.; Schmidt, H. Universal health coverage, oral health, equity and personal responsibility. Bull. World Health Organ. 2020, 98, 719–721.

3. Young, D.A.; Nový, B.B.; Zeller, G.G.; Hale, R.; Hart, T.C.; Truelove, E.L.; Ekstrand, K.R.; Featherstone, J.D.; Fontana, M.; Ismail, A.; et al. American Dental Association Caries Classification System for clinical practice: A report of the American Dental Association Council on Scientific Affairs. J. Am. Dent. Assoc. 2015, 146, 79–86.

4. GBD 2017 Disease and Injury Incidence and Prevalence Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: A systematic analysis for the Global Burden of Disease Study 2017. Lancet 2018, 392, 1789–1858.

5. Bashir, N. Update on the prevalence of untreated caries in the US adult population. J. Am. Dent. Assoc. 2022, 153, 300–308.

6. Benjamin, R.M. Oral health: The silent epidemic. Public Health Rep. 2010, 125, 158–159.

7. Griffin, S.O.; Wei, L.; Gooch, B.F.; Weno, K.; Espinoza, L. Centers for Disease Control and Prevention. Vital signs: Dental sealant use and untreated tooth decay among US school-aged children. Morb. Mortal. Wkly. Rep. 2016, 65, 1141–1145.

8. WHO. Sugars and Dental Caries. 9 November 2017. Available online: https://www.who.int/news-room/fact-sheets/detail/sugars-and-dental-caries (accessed on 24 February 2023).

9. Grieco, P.; Jivraj, A.; Da Silva, J.; Kuwajima, Y.; Ishida, Y.; Ogawa, K.; Ohyama, H.; Ishikawa-Nagai, S. Importance of bitewing radiographs for the early detection of interproximal carious lesions and the impact on healthcare expenditure in Japan. Ann. Transl. Med. 2022, 10, 2.

10. Schwendicke, F.; Göstemeyer, G. Conventional bitewing radiography. Clin. Dent. Rev. 2020, 4, 109–117.

11. Lino, J.R.; Ramos-Jorge, J.; Coelho, V.S.; Ramos-Jorge, M.L.; Moysés, M.R.; Ribeiro, J.C. Association and comparison between visual inspection and bitewing radiography for the detection of recurrent dental caries under restorations. Int. Dent. J. 2015, 65, 178–181.

12. Iannucci, J.M.; Howerton, L.J. Dental Radiography: Principles and Techniques, 6th ed.; Elsevier: St. Louis, MO, USA, 2022.

13. Choi, J.; Eun, H.; Kim, C. Boosting Proximal Dental Caries Detection via Combination of Variational Methods and Convolutional Neural Network. J. Signal Process. Syst. 2018, 90, 87–97.

14. Lee, S.; Oh, S.-I.; Jo, J.; Kang, S.; Shin, Y.; Park, J.-W. Deep learning for early dental caries detection in bitewing radiographs. Sci. Rep. 2021, 11, 16807.

15. Abdinian, M.; Razavi, S.M.; Faghihian, R.; Samety, A.A.; Faghihian, E. Accuracy of Digital Bitewing Radiography versus Different Views of Digital Panoramic Radiography for Detection of Proximal Caries. J. Dent. 2015, 12, 290–297.

16. Kositbowornchai, S.; Siriteptawee, S.; Plermkamon, S.; Bureerat, S.; Chetchotsak, D. An Artificial Neural Network for Detection of Simulated Dental Caries. Int. J. CARS 2006, 1, 91–96.

17. Bayrakdar, I.S.; Orhan, K.; Akarsu, S.; Çelik, Ö.; Atasoy, S.; Pekince, A.; Yasa, Y.; Bilgir, E.; Sağlam, H.; Aslan, A.F.; et al. Deep-learning approach for caries detection and segmentation on dental bitewing radiographs. Oral Radiol. 2022, 38, 468–479.

18. Cantu, A.G.; Gehrung, S.; Krois, J.; Chaurasia, A.; Rossi, J.G.; Gaudin, R.; Elhennawy, K.; Schwendicke, F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J. Dent. 2020, 100, 103425.

19. Geetha, V.; Aprameya, K.S.; Hinduja, D.M. Dental caries diagnosis in digital radiographs using back-propagation neural network. Health Inf. Sci. Syst. 2020, 8, 1–14.

20. Srivastava, M.M.; Kumar, P.; Pradhan, L.; Varadarajan, S. Detection of Tooth caries in Bitewing Radiographs using Deep Learning. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

21. McCarthy, J. What Is Artificial Intelligence? 12 November 2007. Available online: https://www-formal.stanford.edu/jmc/whatisai.pdf (accessed on 2 February 2023).

22. Kavlakoglu, E. AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the Difference? 27 May 2020. Available online: https://www.ibm.com/cloud/blog/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks (accessed on 25 February 2023).

23. Nguyen, T.T.; Larrivée, N.; Lee, A.; Bilaniuk, O.; Durand, R. Use of Artificial Intelligence in Dentistry: Current Clinical Trends and Research Advances. J. Can. Dent. Assoc. 2021, 87, I7.

24. Iannucci, J. Radiographic Caries Identification. Available online: http://dentalintranet.osu.edu/archive/instructional/RadiologyCarie/images.htm (accessed on 18 June 2023).

25. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070.

26. Tan, M.; Yu, A. EfficientDet: Towards Scalable and Efficient Object Detection. 15 April 2020. Available online: https://ai.googleblog.com/2020/04/efficientdet-towards-scalable-and.html (accessed on 18 December 2021).

27. Gholami, A.; Kim, S.; Dong, Z.; Yao, Z.; Mahoney, M.W.; Keutzer, K. A Survey of Quantization Methods for Efficient Neural Network Inference. arXiv 2021, arXiv:2103.13630.

28. Mander, G.T.; Munn, Z. Understanding diagnostic test accuracy studies and systematic reviews: A primer for medical radiation technologists. J. Med. Imaging Radiat. Sci. 2021, 52, 286–294.

29. Digital 2023: Global Overview Report. 26 January 2023. Available online: https://datareportal.com/reports/digital-2023-global-overview-report (accessed on 26 February 2023).

30. Pun, M. Video caries-detection on bitewing radiographs using an artificial neural network. Ont. Dent. 2023, 100, 26–30.

31. Al-Jallad, N.; Ly-Mapes, O.; Hao, P.; Ruan, J.; Ramesh, A.; Luo, J.; Wu, T.T.; Dye, T.; Rashwan, N.; Ren, J.; et al. Artificial intelligence-powered smartphone application, AICaries, improves at-home dental caries screening in children: Moderated and unmoderated usability test. PLoS Digit. Health 2022, 1, e0000046.

32. Thanh, M.T.G.; Van Toan, N.; Ngoc, V.T.N.; Tra, N.T.; Giap, C.N.; Nguyen, D.M. Deep Learning Application in Dental Caries Detection Using Intraoral Photos Taken by Smartphones. Appl. Sci. 2022, 12, 5504.

33. Ding, B.; Zhang, Z.; Liang, Y.; Wang, W.; Hao, S.; Meng, Z.; Guan, L.; Hu, Y.; Guo, B.; Zhao, R.; et al. Detection of dental caries in oral photographs taken by mobile phones based on the YOLOv3 algorithm. Ann. Transl. Med. 2021, 9, 1622.

34. Salahin, S.M.S.; Ullaa, M.D.S.; Ahmed, S.; Mohammed, N.; Farook, T.H.; Dudley, J. One-Stage Methods of Computer Vision Object Detection to Classify Carious Lesions from Smartphone Imaging. Oral 2023, 3, 176–190.

35. Krupinski, E.A. An Ethics Framework for Clinical Imaging Data Sharing and the Greater Good. Radiology 2020, 295, 683–684.

36. Larson, D.B.; Magnus, D.C.; Lungren, M.P.; Shah, N.H.; Langlotz, C.P. Ethics of using and sharing clinical imaging data for artificial intelligence: A proposed framework. Radiology 2020, 295, 675–682.

37. Faden, R.R.; Kass, N.E.; Goodman, S.N.; Pronovost, P.; Tunis, S.; Beauchamp, T.L. An ethics framework for a learning health care system: A departure from traditional research ethics and clinical ethics. Hastings Cent. Rep. 2013, 43, S16–S27.

38. Adeoye, J.; Hui, L.; Su, Y.X. Data-centric artificial intelligence in oncology: A systematic review assessing data quality in machine learning models for head and neck cancer. J. Big Data 2023, 10, 1–25.

39. Adeoye, J.; Tan, J.Y.; Choi, S.W.; Thomson, P. Prediction models applying machine learning to oral cavity cancer outcomes: A systematic review. Int. J. Med. Inform. 2021, 154, 104557.

| | Total Caries | True Positive (TP) | False Positive (FP) | False Negative (FN) | Sensitivity, TP/(TP + FN) | Precision, TP/(TP + FP) | F1 Score, 2TP/(2TP + FP + FN) |
|---|---|---|---|---|---|---|---|
| Handheld Detection * | 40 | 27 | 12 | 13 | 0.675 | 0.692 | 0.684 |
| Video Detection * | 40 | 23 | 9 | 17 | 0.575 | 0.719 | 0.639 |
| Average | | | | | 0.625 | 0.706 | 0.661 |
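
The Average row is consistent with an arithmetic mean of the two per-condition metrics. The sketch below reproduces it from the counts in the table above and, for comparison only, also computes the metrics from pooled counts. The pooled variant is an assumption added for illustration and is not reported in the source; the `metrics` helper is likewise hypothetical.

```python
from statistics import mean

# (TP, FP, FN) per test condition, copied from the table above.
counts = {
    "handheld": (27, 12, 13),
    "video": (23, 9, 17),
}


def metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (sensitivity, precision, F1) for one set of counts."""
    return tp / (tp + fn), tp / (tp + fp), 2 * tp / (2 * tp + fp + fn)


# Metrics per condition, then the arithmetic mean of each metric.
per_condition = [metrics(*c) for c in counts.values()]
averaged = tuple(mean(column) for column in zip(*per_condition))

# Illustrative alternative (not in the source): sum the counts first.
pooled = metrics(*(sum(column) for column in zip(*counts.values())))

print("averaged:", " ".join(f"{v:.3f}" for v in averaged))  # 0.625 0.706 0.661
print("pooled:  ", " ".join(f"{v:.3f}" for v in pooled))    # 0.625 0.704 0.662
```

Both conditions contain the same 40 true lesions, so the two approaches give the same sensitivity and differ only slightly in precision and F1 score.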

| | Sensitivity, TP/(TP + FN) | Precision, TP/(TP + FP) | F1 Score, 2TP/(2TP + FP + FN) |
|---|---|---|---|
| Geetha et al. [19] | 0.962 | 0.963 | 0.962 |
| Bayrakdar et al. [17] | 0.840 | 0.840 | 0.840 |
| Srivastava et al. [20] | 0.805 | 0.615 | 0.700 |
| Lee et al. [14] | 0.650 | 0.633 | 0.641 |
| EfficientDet-Lite1 Video Average * | 0.625 | 0.706 | 0.661 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pun, M.H.J. Real-Time Caries Detection of Bitewing Radiographs Using a Mobile Phone and an Artificial Neural Network: A Pilot Study. Oral 2023, 3, 437-449. https://doi.org/10.3390/oral3030035
