The Trail Making Test in Virtual Reality (TMT-VR): Examination of the Ecological Validity, Usability, Acceptability, and User Experience in Adults with ADHD

Abstract

1. Introduction

1.1. Ecological Validity of Virtual Reality Assessments

1.2. Trail Making Test in Virtual Reality

1.3. Neuropsychological Assessment in Attention Deficit Hyperactivity Disorder

1.4. Trail Making Test in ADHD

1.5. Virtual Reality and ADHD in Clinical and Non-Clinical Populations

1.6. Usability, User Experience, and Acceptability

1.7. Present Study

2. Materials and Methods

2.1. Participants/Sample

2.2. Materials

2.2.1. Immersive VR Setup

2.2.2. Demographics

2.2.3. Greek Version of the Adult ADHD Self-Report Scale (ASRS)

2.2.4. Trail Making Test (TMT)

2.2.5. Cybersickness in Virtual Reality Questionnaire (CSQ-VR)

2.2.6. Trail Making Test VR (TMT-VR)

- TMT-VR Task A: Participants connect 25 numbered cubes in ascending numerical order (1, 2, 3, …, 25). This task measures visual scanning, attention, and processing speed, similar to the traditional TMT-A but within a more immersive environment.

- TMT-VR Task B: Participants connect 25 cubes that alternate between numbers and letters in ascending order (1, A, 2, B, 3, C, …, 13). This task assesses more complex cognitive functions, including task-switching ability and cognitive flexibility, mirroring the traditional TMT-B within the immersive VR setting.
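The target orders described for the two tasks can be sketched in a few lines of Python. This is an illustrative sketch only, not the TMT-VR software: the function names `tmt_a_sequence` and `tmt_b_sequence` are hypothetical, and the assumption that Task B uses consecutive uppercase Latin letters follows from the alternation pattern given above.

```python
from string import ascii_uppercase


def tmt_a_sequence(n_targets: int = 25) -> list[str]:
    """Task A order: the numbers 1..n_targets in ascending order."""
    return [str(i) for i in range(1, n_targets + 1)]


def tmt_b_sequence(n_targets: int = 25) -> list[str]:
    """Task B order: numbers and letters alternate (1, A, 2, B, ...)."""
    # Assumption: letters are the uppercase Latin alphabet, so at most
    # 26 letters (and 27 numbers) are available.
    if n_targets > 2 * len(ascii_uppercase) + 1:
        raise ValueError("not enough letters for this many targets")
    sequence = []
    for position in range(n_targets):
        if position % 2 == 0:
            # Even positions hold numbers: 1, 2, 3, ...
            sequence.append(str(position // 2 + 1))
        else:
            # Odd positions hold letters: A, B, C, ...
            sequence.append(ascii_uppercase[position // 2])
    return sequence


task_a = tmt_a_sequence()
task_b = tmt_b_sequence()
print(task_a[:3], task_a[-1])
print(task_b[:6], task_b[-1])
```

With 25 targets, Task B under these assumptions contains 13 numbers and 12 letters, so the sequence both starts and ends on a number.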

2.2.7. System Usability Scale (SUS)

2.2.8. Short Version of the User Experience Questionnaire (UEQ-S)

2.2.9. Service User Technology Acceptability Questionnaire (SUTAQ)

2.3. Procedures

2.4. Statistical Analyses

2.4.1. Usability, User Experience, and Acceptability

2.4.2. Independent Samples t-Tests

2.4.3. Repeated Measures ANOVA

2.4.4. Correlation Analyses

2.4.5. Linear Regression Analysis

3. Results

3.1. Descriptive Statistics

3.2. Usability, User Experience, and Acceptability

3.3. Performance on TMT-VR and TMT

Group Comparisons: ADHD vs. Neurotypical

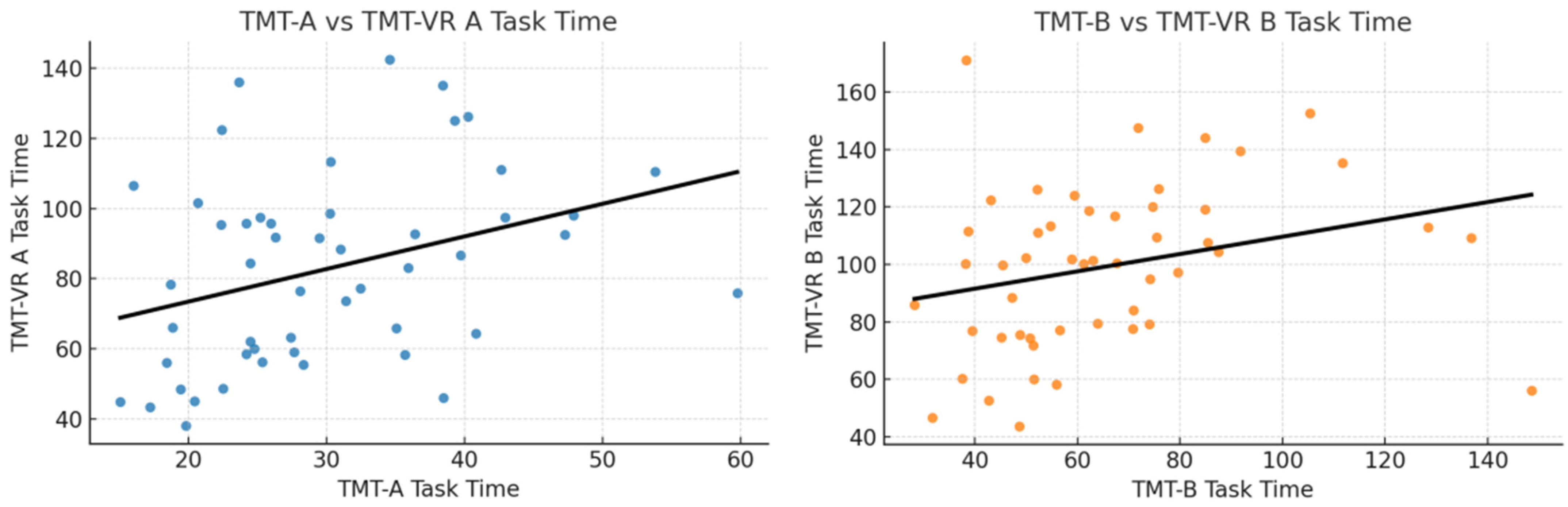

3.4. Convergent Validity

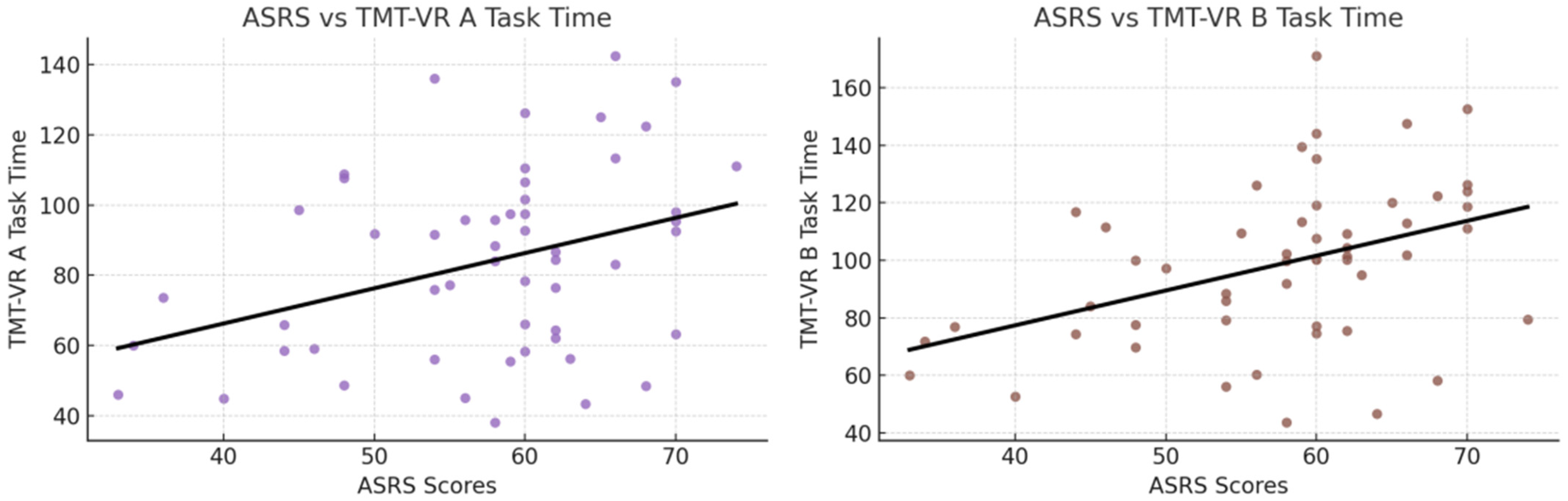

3.5. Ecological Validity

Regression Analyses for Predicting ASRS Scores

4. Discussion

4.1. Usability, User Experience, and Acceptability

Virtual Reality in Neurodivergent Individuals

4.2. Convergent Validity

4.3. Ecological Validity

4.4. Limitations and Future Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Mean | SD | Minimum | Maximum |
|---|---|---|---|---|
| Age | 23.868 | 3.8232 | 18 | 40 |
| Education | 15.981 | 3.2610 | 12 | 22 |
| TMT Task A–Task Time | 30.435 | 9.8485 | 15.080 | 59.730 |
| TMT Task A–Mistakes | 0.132 | 0.590 | 0 | 4 |
| TMT Task B–Task Time | 65.485 | 25.3947 | 28.200 | 148.500 |
| TMT Task B–Mistakes | 0.604 | 1.291 | 0 | 6 |
| TMT-VR Task A–Accuracy | 0.185 | 0.0572 | 0.152 | 0.318 |
| TMT-VR Task A–Task Time | 83.835 | 26.7971 | 38.174 | 142.443 |
| TMT-VR Task A–Mistakes | 1.151 | 2.3729 | 0 | 14 |
| TMT-VR Task B–Accuracy | 0.185 | 0.0568 | 0.151 | 0.313 |
| TMT-VR Task B–Task Time | 98.607 | 28.2016 | 43.681 | 171.150 |
| TMT-VR Task B–Mistakes | 1.585 | 2.4685 | 0 | 14 |
| SUS | 41.208 | 5.1004 | 29 | 49 |
| UEQ-S | 143.547 | 19.4971 | 101 | 174 |
| SUTAQ | 49.113 | 8.5522 | 20 | 60 |
| ASRS | 39.528 | 9.5507 | 15 | 56 |

| Diagnosis | Mean (SD) | Min–Max | t-Statistic | p-Value | Cohen’s d | |

|---|---|---|---|---|---|---|

| TMT Task A–Task Time | ADHD | 29.38 (10.24) | 16.05–59.73 | −0.73 | p = 0.467 | −0.20 |

| Neurotypical | 31.37 (9.57) | 15.08–53.80 | ||||

| TMT Task A–Mistakes | ADHD | 0.08 (0.27) | 0–1 | −0.60 | p = 0.549 | −0.17 |

| Neurotypical | 0.17 (0.77) | 0–4 | ||||

| TMT Task B–Task Time | ADHD | 65.40 (27.92) | 31.62–148.50 | −0.02 | p = 0.983 | −0.01 |

| Neurotypical | 65.55 (23.42) | 28.20–136.81 | ||||

| TMT Task B–Mistakes | ADHD | 0.52 (1.12) | 0–5 | −0.44 | p = 0.660 | −0.12 |

| Neurotypical | 0.67 (1.44) | 0–6 | ||||

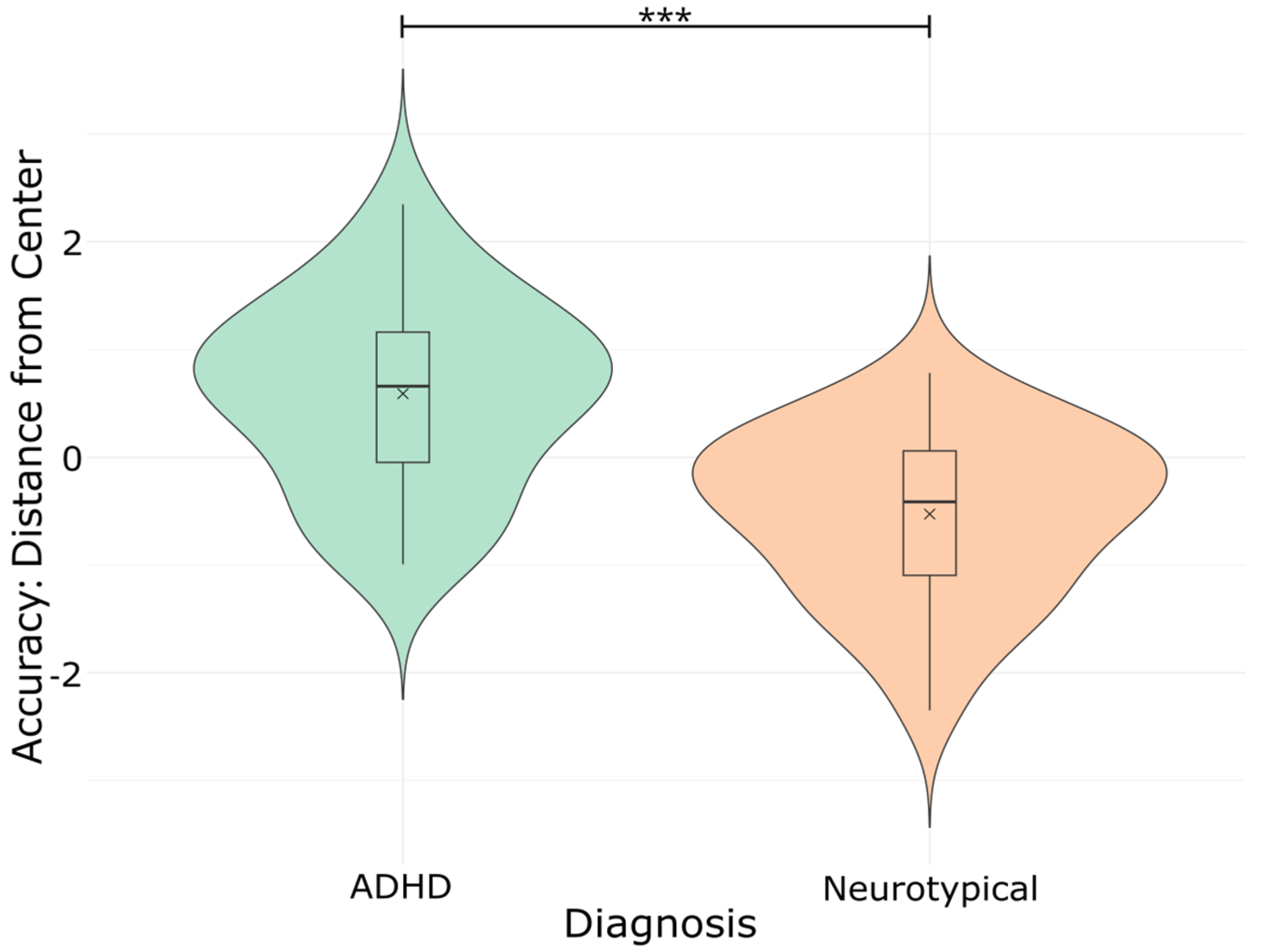

| TMT-VR Task A–Accuracy | ADHD | 0.21 (0.07) | 0.15–0.31 | 4.89 | p < 0.001 *** | 1.34 |

| Neurotypical | 0.15 (0.01) | 0.15–0.17 | ||||

| TMT-VR Task A–Task Time | ADHD | 80.75 (29.29) | 38.17–142.44 | −0.98 | p = 0.835 | −0.27 |

| Neurotypical | 86.58 (24.56) | 44.84–136.07 | ||||

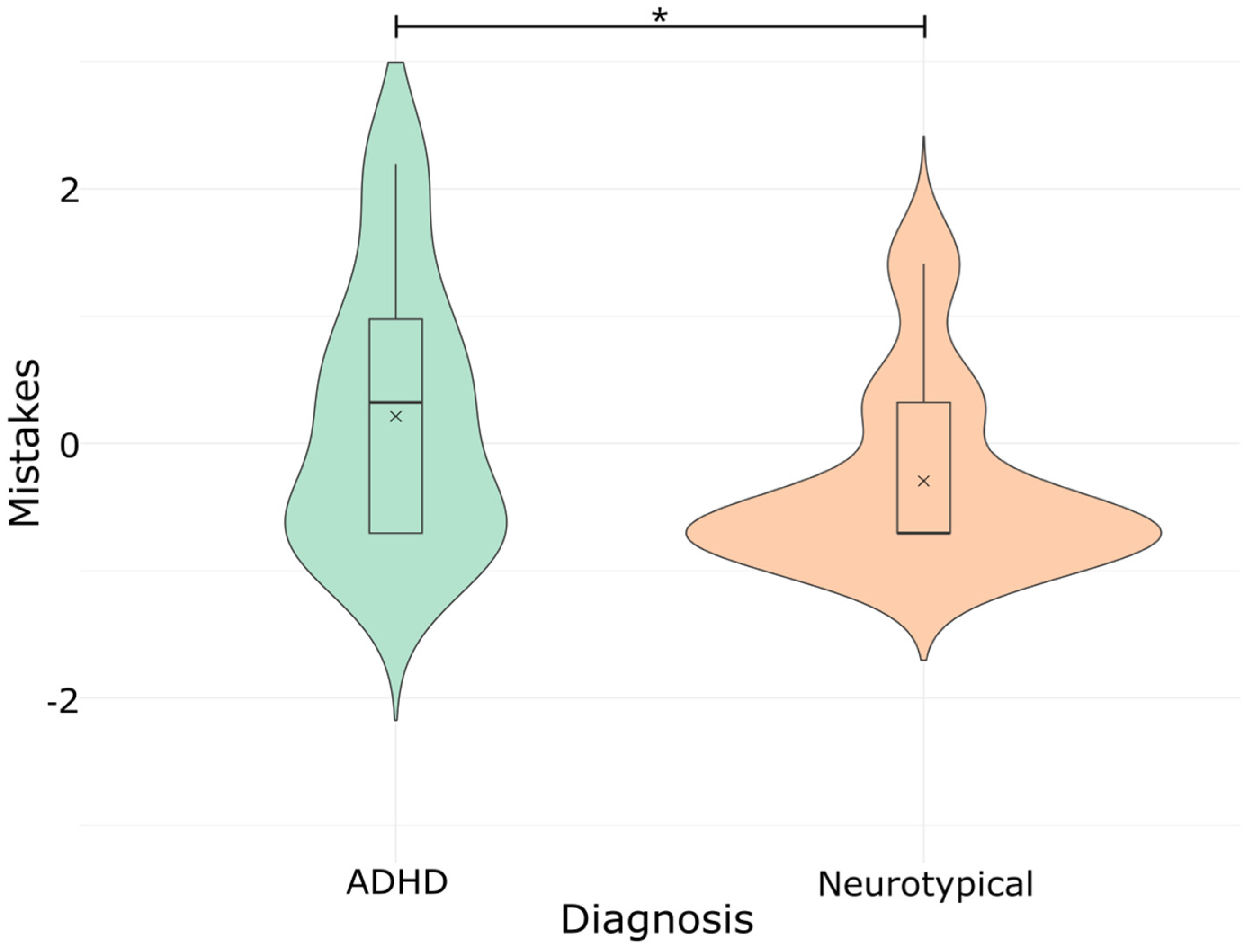

| TMT-VR Task A–Mistakes | ADHD | 1.88 (3.17) | 0–14 | 2.38 | p = 0.011 * | 0.65 |

| Neurotypical | 0.50 (0.96) | 0–3 | ||||

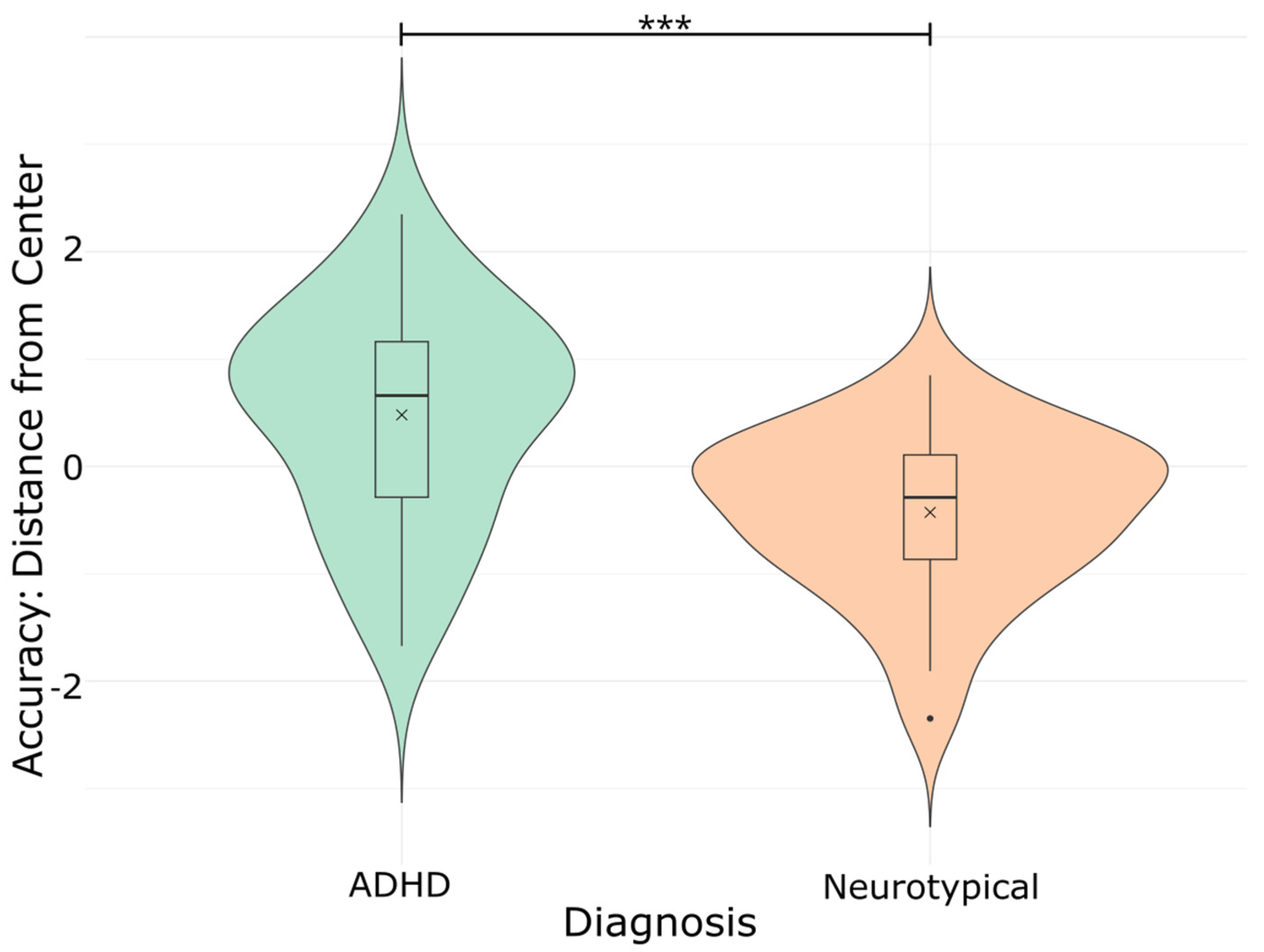

| TMT-VR Task B–Accuracy | ADHD | 0.21 (0.07) | 0.15–0.31 | 3.69 | p < 0.001 *** | 1.02 |

| Neurotypical | 0.15 (0.01) | 0.15–0.17 | ||||

| TMT-VR Task B–Task Time | ADHD | 94.47 (27.71) | 43.68–152.68 | −0.93 | p = 0.822 | −0.26 |

| Neurotypical | 102.29 (28.61) | 52.58–171.15 | ||||

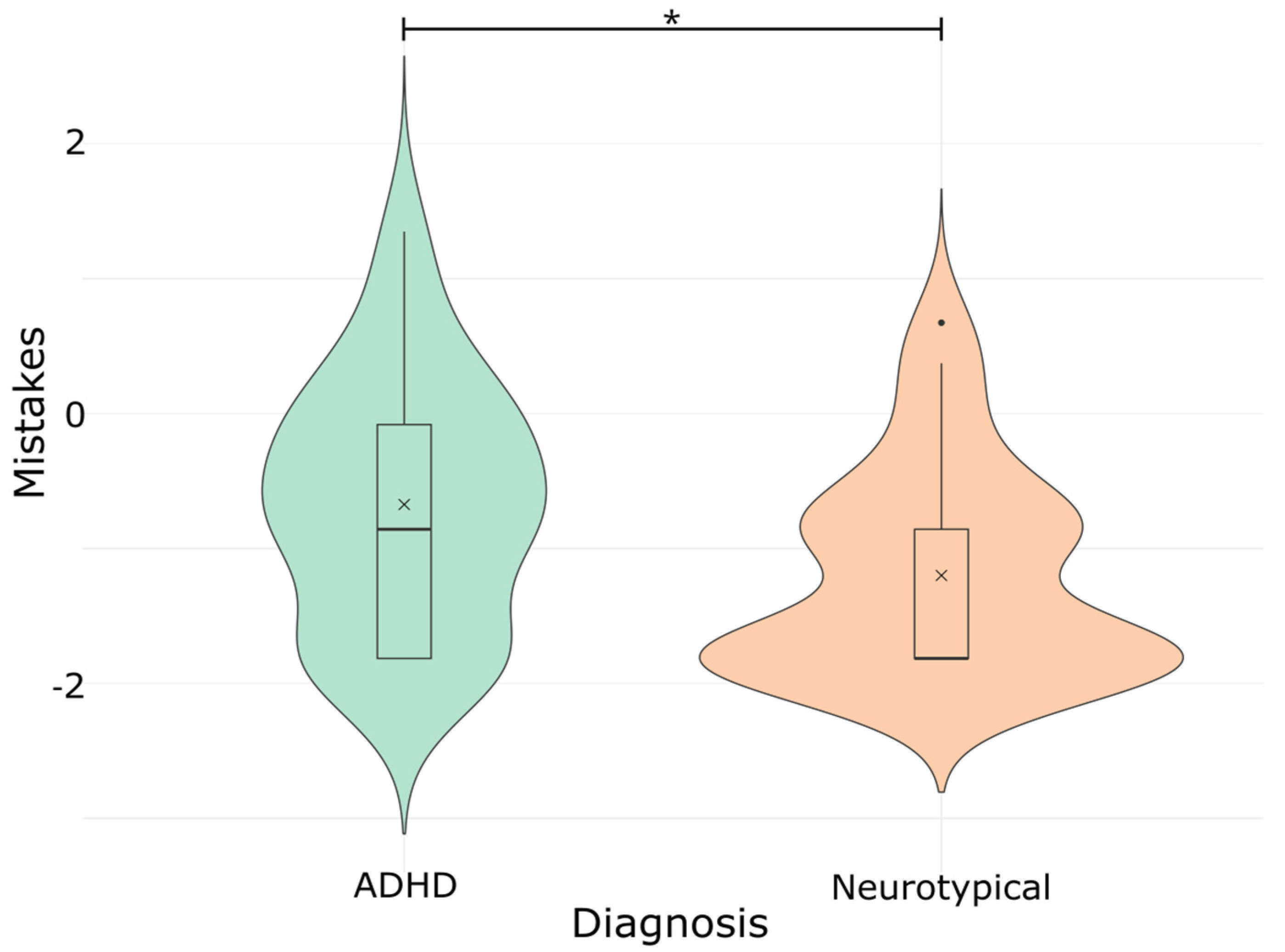

| TMT-VR Task B–Mistakes | ADHD | 2.28 (3.08) | 0–14 | 2.29 | p = 0.013 * | 0.63 |

| Neurotypical | 0.96 (1.57) | 0–6 | ||||

| ASRS | ADHD | 43.96 (6.81) | 28–56 | 3.57 | p < 0.001 *** | 0.98 |

| Neurotypical | 35.57 (9.99) | 15–52 |
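To illustrate how an effect size in the group-comparison table relates to the reported means and SDs, the following is a minimal sketch of Cohen's d computed from summary statistics. It assumes the equal-weight pooled SD (the root mean square of the two group SDs); the formula variant actually used for the table is not stated here, and the function name `cohens_d` is hypothetical.

```python
import math


def cohens_d(mean_1: float, sd_1: float, mean_2: float, sd_2: float) -> float:
    """Cohen's d from group means and SDs.

    Assumption: the pooled SD is the root mean square of the two SDs,
    which weights both groups equally regardless of sample size.
    """
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)
    return (mean_1 - mean_2) / pooled_sd


# ASRS row of the table: ADHD 43.96 (6.81) vs. Neurotypical 35.57 (9.99)
asrs_d = cohens_d(43.96, 6.81, 35.57, 9.99)
print(round(asrs_d, 2))  # prints 0.98
```

For the ASRS row this gives approximately 0.98, in line with the tabled value. Other rows need not reproduce exactly under this sketch, since the summary statistics are rounded and the pooling formula is an assumption.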

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gounari, K.A.; Giatzoglou, E.; Kemm, R.; Beratis, I.N.; Nega, C.; Kourtesis, P. The Trail Making Test in Virtual Reality (TMT-VR): Examination of the Ecological Validity, Usability, Acceptability, and User Experience in Adults with ADHD. Psychiatry Int. 2025, 6, 31. https://doi.org/10.3390/psychiatryint6010031
