1. Introduction

The digital news ecosystem is undergoing a profound shift as artificial intelligence increasingly intervenes in editorial processes. Gatekeeping theory, the study of how information is filtered and selected for news, has long centered on human decisions by journalists and editors (van Dalen, 2012, 2024). Classic work by White (1950) documented how a newspaper editor (“Mr. Gates”) accepts or rejects wire stories, illustrating the news gatekeeper’s role in shaping media agendas (White, 1950). Subsequent scholarship (Shoemaker & Reese, 1991; Shoemaker & Vos, 2009) expanded gatekeeping beyond individual editors to multiple levels (organizational policies, routines, societal factors) that influence news selection. Agenda-setting theory further complements this, positing that media may not tell people what to think but are stunningly effective in telling audiences what to think about, by elevating certain issues over others (McCombs & Shaw, 1972). Likewise, framing theory notes that media not only select topics but also shape how issues are presented (Entman, 1993), influencing public interpretation. These foundational theories presume a largely human-driven process of editorial judgment, news values, and institutional norms guiding what becomes news.

However, in the age of AI, traditional gatekeeping is being challenged on two fronts. First, AI-driven news curation, in which algorithms filter and recommend content on platforms like Facebook’s News Feed, Google News, or X (formerly Twitter) timelines, has assumed functions once dominated by editors. These platforms determine which news stories users see (and in what order), effectively acting as “algorithmic gatekeepers” between publishers and audiences. This raises critical questions: Do these recommender algorithms reinforce the editorial choices of news organizations, override them with personalized criteria, or create an entirely new set of gatekeeping biases? Early evidence shows that social media algorithms have “upended the gatekeeping role long held by traditional news outlets” (Elanjimattom, 2023), sometimes skewing users’ news diets in ways not fully under journalistic control. Second, AI-driven content creation is emerging via automated journalism (Danzon-Chambaud, 2023; Peng et al., 2024). News organizations are beginning to deploy large language models (LLMs) to draft news reports and summaries, or even to write articles autonomously. This automation of news writing introduces new gatekeeping considerations: the training data of an AI and its optimization objectives (e.g., maximizing reader engagement metrics) might implicitly determine which facts or angles are emphasized in a story (Guo et al., 2022). In other words, the algorithm becomes a gatekeeper in deciding how information is “massaged” into news (van Dalen, 2024), a function once reserved for human editors.

These developments motivate a reconceptualization of gatekeeping theory. The core purpose of this paper is to theoretically explore how AI-driven content curation and automated news generation challenge, and potentially reshape, traditional notions of gatekeeping in news production and dissemination. We undertake a comprehensive literature review spanning communication studies, journalism, computer science, and ethics to map current knowledge. Key focal points include: (1) AI News Recommenders vs. Journalistic Gatekeepers: how algorithmic news feeds and recommendation systems interact with or supersede the choices of human editors in determining what news reaches audiences; (2) Technical Features and Bias in AI Newswriting: how the design of AI writers (training data, model architecture, and reward functions like engagement versus diversity) influences which stories are told and how they are told, potentially introducing new forms of bias or homogeneity; (3) Autonomy and Control in AI-Augmented Newsrooms: how dependence on third-party AI platforms and tools (e.g., reliance on Google’s algorithms or OpenAI’s models) changes where power resides in journalism, raising concerns about editorial independence and accountability; and (4) Adaptive Algorithms and Feedback Loops: how AI systems that learn from user interactions can create self-reinforcing feedback cycles that alter news visibility and diversity dynamically (for example, privileging click-attractive content and marginalizing other topics, or vice versa).

By integrating these themes, we aim to develop a theoretical framework for “gatekeeping in the age of AI”. This framework will draw on classic theories (gatekeeping, agenda-setting, framing) and update them with insights from algorithmic media and platform studies, as well as science and technology studies (STS) perspectives that view technology and human actors as co-constitutive in news processes. We also incorporate AI ethical principles (fairness, transparency, accountability) as normative considerations in evaluating AI’s gatekeeping role. Ultimately, this paper seeks to clarify how the gatekeeping function in journalism is evolving and to identify what is at stake for the quality of the news and the health of the public sphere. In the sections that follow, we outline our review methodology and theoretical approach, present key insights from the literature, delve into thematic findings, and discuss implications for theory and practice.

2. Materials and Methods

This study employs a comprehensive literature review and theoretical synthesis, examining scholarly and industry sources at the intersection of journalism and AI. We prioritized peer-reviewed journal articles, academic books, and reputable reports (from industry bodies and think tanks) published in the last decade, while also including seminal works on gatekeeping theory. Our search spanned communication and media journals (e.g., Digital Journalism, Journalism, New Media & Society), conference proceedings on computational journalism, and interdisciplinary databases. Key search terms included combinations of “gatekeeping,” “algorithmic news,” “news recommender,” “automated journalism,” “AI and news bias,” “platform algorithms and news,” and “LLM journalism.” From an initial broad search across these databases yielding numerous sources, we thematically selected a focused set of approximately 80 works deemed most central to addressing our core theoretical questions regarding the evolution of gatekeeping in the AI era. This selection prioritized works offering key conceptual insights or empirical findings directly relevant to AI’s role in news curation and production. These include foundational gatekeeping theory and contemporary studies on algorithmic recommendations, research on algorithmic gatekeeping power, and analyses of AI adoption in newsrooms. We also incorporated insights from science and technology studies (STS) to understand the socio-technical assemblages of news algorithms and from the AI ethics literature regarding bias, transparency, and accountability.

Our method was essentially one of theoretical synthesis. We treated the literature review process not just as an end, but as the material from which to construct an updated theoretical model of gatekeeping. In practice, this meant iteratively mapping concepts from classic media theories onto current AI-driven phenomena and identifying where existing theories fall short or need extension. For example, we examined how Lewin’s gatekeeping concept (originally a metaphor of gates controlling food supply lines, later applied by White to news) (Lewin, 1943; White, 1950) might be reframed when “gates” are automated decision rules. We scrutinized Shoemaker and Vos’s gatekeeping model (Shoemaker & Vos, 2009), which describes multiple levels of influence (individual, routines, organizational, social system), to consider where algorithmic systems fit in, potentially as a new level or as part of existing levels. Similarly, we revisited agenda setting (McCombs & Shaw, 1972) in light of algorithms that prioritize certain content for users, and framing theory in the context of AI-written narratives. Throughout, we used interdisciplinary triangulation, e.g., combining a communication theory perspective on gatekeeping with an STS perspective on technological agency. STS scholarship encourages us to see algorithms not as neutral tools but as actors with agency (or actants in Actor-Network Theory terms) that participate in shaping news outcomes (Law, 1992). This guided us to conceptualize AI not just as a new context but as an active agent in gatekeeping processes.

In constructing the theoretical framework, we also paid attention to the technical underpinnings of AI systems. We considered two broad classes of algorithms: (1) deterministic algorithms, which follow predefined rules (e.g., older news ranking systems with fixed criteria), and (2) machine learning algorithms, which adapt their behavior based on data (including deep learning models) (van Dalen, 2024). This distinction is crucial because machine learning-based gatekeepers (such as personalized feeds or LLMs) learn and evolve, potentially leading to unpredictable gatekeeping outcomes over time. We examined how machine learning systems’ optimization goals (for instance, maximizing user click-through or watch time) serve as de facto editorial policies that might conflict with traditional journalistic values like diversity or public interest (Thäsler-Kordonouri et al., 2024).
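
The distinction between the two classes can be made concrete with a minimal Python sketch. It is purely illustrative and is not drawn from any system described in the reviewed literature: the `Story` fields, the `public_interest` score, the `LearningRanker` class, and its update rule are all hypothetical assumptions chosen for brevity. The rule-based ranker applies fixed criteria, whereas the learning ranker reorders stories as click feedback accumulates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    title: str
    topic: str
    hours_old: float
    public_interest: float  # hypothetical editor-assigned score in [0, 1]


def rule_based_rank(stories):
    """Deterministic gatekeeper: fixed criteria that never change with use."""
    # Highest public interest first; fresher stories break ties.
    return sorted(stories, key=lambda s: (-s.public_interest, s.hours_old))


class LearningRanker:
    """Learning gatekeeper: topic weights drift toward whatever gets clicked."""

    def __init__(self, learning_rate=0.5):
        self.learning_rate = learning_rate
        self.topic_weight = {}  # topic -> learned weight, starts empty

    def rank(self, stories):
        # sorted() is stable, so topics with equal weight keep their input order.
        return sorted(stories, key=lambda s: -self.topic_weight.get(s.topic, 0.0))

    def observe(self, story, clicked):
        # Move the topic weight part of the way toward 1 (click) or 0 (no click).
        old = self.topic_weight.get(story.topic, 0.0)
        target = 1.0 if clicked else 0.0
        self.topic_weight[story.topic] = old + self.learning_rate * (target - old)


stories = [
    Story("Budget vote", "policy", 2.0, 0.9),
    Story("Celebrity split", "gossip", 1.0, 0.2),
    Story("Flood warning", "weather", 3.0, 0.8),
]

print([s.title for s in rule_based_rank(stories)])

ranker = LearningRanker()
ranker.observe(stories[1], clicked=True)   # the gossip story is clicked
ranker.observe(stories[0], clicked=False)  # the policy story is ignored
print([s.title for s in ranker.rank(stories)])
```

In this toy setting, the rule-based ordering stays the same however users behave, while a single click is enough to move the gossip story to the top of the learned ordering. The click-driven update rule thus plays the role of an implicit editorial policy.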

Our analytical method was to extract from each source the insights relevant to AI and gatekeeping, and then categorize these insights under our key themes (recommender systems, automated writing, third-party control, feedback loops). Within each theme, we compared findings across studies to identify consensus, contradictions, and gaps. This thematic synthesis then informed the Results Section (theoretical insights) where we articulate the reconceptualized framework. We acknowledge that much literature on AI in journalism is nascent; where empirical evidence is limited, our framework remains provisional or speculative, highlighting the need for further research.

Our review is qualitative and integrative, rather than a quantitative meta-analysis. We emphasize theoretical depth and breadth of perspectives. To ensure rigor, we cross-verified claims with multiple sources where possible. We also included authoritative industry perspectives (Newman et al., 2023) to capture real-world developments that the academic literature may not yet fully cover (such as the very latest use of GPT-based tools in newsrooms). However, given the rapid pace of AI advancements, our framework should be revisited frequently.

3. Results

We present a set of theoretical insights that reconceptualize gatekeeping in the context of AI-driven news production and dissemination. These insights form the core of our proposed framework, highlighting how traditional concepts are being transformed or extended. Key results are grouped in five directions, as follows:

We find that the classic gatekeeping function, filtering and selecting information to become news, is increasingly shared between humans and algorithms. Algorithms now influence each stage of news processing, from what events are detected (through trends or data mining) to how stories are ranked and distributed to audiences (van Dalen, 2024). In our framework, AI-driven gatekeeping is defined as the “influence of automated procedures on selecting, writing, editing, and distributing news”, paralleling Shoemaker et al.’s definition of journalistic gatekeeping (Shoemaker et al., 2009). Crucially, these algorithms (especially machine learning-based ones) operate at a scale and speed beyond human capacity and can introduce new selection criteria (e.g., personalization for user preference, or optimization for engagement) that differ from traditional news values. The gatekeeping process thus becomes a hybrid human–AI system, where editorial judgment and algorithmic logic intersect (Régis et al., 2024). This challenges linear models of news flow: instead of information passing sequentially through a series of human “gates”, we have a networked system of parallel and iterative gatekeeping actions by both people and code. Gatekeeping is no longer a unidirectional chain but a dynamic, decentralized process (Wallace, 2018) involving interactions between journalists, algorithms, audiences, and platform engineers.

Interestingly, AI can both undermine and reinforce aspects of traditional gatekeeping. On the one hand, platform algorithms often usurp editorial control, determining what content “makes the cut” to user feeds irrespective of newsroom choices. This diminishes the gatekeeping authority of news editors, raising concerns that important public interest news might be downranked if it is not “engaging” enough. On the other hand, studies suggest that some AI-driven systems end up mirroring legacy gatekeeping structures. For example, Nechushtai and Lewis (2019) found Google News’ algorithm largely promoted the same mainstream news sources across different users, “replicating traditional industry structures more than disrupting them” (Nechushtai & Lewis, 2019). Major outlets dominated recommendations, indicating that algorithms can reinforce the established media agenda rather than diversify it. Our framework thus accounts for a spectrum: in some cases, algorithmic gatekeeping amplifies existing power hierarchies (privileging big publishers, popular voices), and in others, it introduces new patterns (potentially privileging niche interests or sensational content). The net effect on the diversity of news is context-dependent, prompting us to theorize gatekeeping not as uniformly weakened or strengthened by AI, but as redistributed. We introduce the concept of “gatekeeping symmetry”: when algorithmic choices align with journalistic choices (symmetry), traditional gatekeeping is reinforced; when they diverge (asymmetry), the gatekeeping function shifts toward the algorithm. This insight highlights the need to assess algorithmic influence case by case.
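
One possible way to operationalize gatekeeping symmetry for a case-by-case assessment is sketched below in Python. This is an illustrative assumption rather than a measure proposed in the reviewed literature: the function name `gatekeeping_symmetry`, the choice of Jaccard overlap between the top-k selections, and the example story labels are all hypothetical.

```python
def gatekeeping_symmetry(editorial, algorithmic, k):
    """Jaccard overlap between the top-k editorial and top-k algorithmic picks.

    Returns 1.0 when both gatekeepers select the same k stories (symmetry)
    and 0.0 when their selections share nothing (full asymmetry).
    """
    top_editorial = set(editorial[:k])
    top_algorithmic = set(algorithmic[:k])
    union = top_editorial | top_algorithmic
    if not union:
        return 1.0  # two empty selections trivially agree
    return len(top_editorial & top_algorithmic) / len(union)


# Hypothetical rankings of the same five stories, most prominent first.
editorial = ["budget", "flood", "election", "sports", "gossip"]
algorithmic = ["gossip", "sports", "budget", "flood", "election"]

print(gatekeeping_symmetry(editorial, algorithmic, k=3))
print(gatekeeping_symmetry(editorial, editorial, k=3))
```

With these example rankings, the two top-three selections share only one story out of five distinct ones, so the score is low. A set-based overlap ignores ordering within the top k; a rank correlation would be an alternative design if position matters.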

Traditional gatekeepers often use professional news values (e.g., relevance, timeliness, public significance, balance) to decide what becomes news. AI systems, in contrast, rely on quantifiable proxies—popularity metrics, user profiles, or learned patterns from data. Our review indicates a potential mismatch between journalistic values and algorithmic optimization metrics. For instance, a news feed algorithm typically aims to maximize user engagement (clicks, dwell time, shares) as a measure of success. This can skew gatekeeping toward emotionally arousing or partisan content that drives interaction, possibly at the expense of diversity or hard news. Likewise, an LLM generating news might be trained on a corpus that over-represents certain topics or styles, meaning its default “judgment” of what content to produce is shaped by data bias. One emerging insight is that the objectives coded into AI systems effectively act as a new set of gatekeeping criteria. If an algorithm is tuned for engagement, it will gatekeep in favor of attention-grabbing content; if tuned for personalization, it may narrow recommendations to match user taste (risking echo chambers); if tuned (intentionally) for diversity, it could rotate in under-exposed stories. Our framework posits that understanding who sets the AI’s objectives (engineers, platform policy, newsroom management) is part of modern gatekeeping theory. The decision to prioritize one metric over another is analogous to an editor’s decision to prioritize one news value over another. Thus, gatekeeping power shifts upstream to those who design and configure AI systems.
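
The claim that coded objectives act as gatekeeping criteria can be illustrated with a small Python sketch. It assumes a hypothetical candidate pool and three deliberately simplified objectives; the `Item` fields, the `predicted_engagement` scores, the user affinity values, and the greedy diversity heuristic are illustrative assumptions, not descriptions of any real platform’s ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    topic: str
    predicted_engagement: float  # hypothetical model output in [0, 1]


def rank_for_engagement(items):
    # Objective: attention. Most clickable items first.
    return sorted(items, key=lambda i: -i.predicted_engagement)


def rank_for_personalization(items, user_topic_affinity):
    # Objective: match user taste. Topics the user already favors come first.
    return sorted(items, key=lambda i: -user_topic_affinity.get(i.topic, 0.0))


def rank_for_diversity(items):
    # Objective: topical spread. Greedily pick from the least-shown topic,
    # using predicted engagement only to break ties.
    remaining = list(items)
    shown_per_topic = {}
    ranked = []
    while remaining:
        best = min(
            remaining,
            key=lambda i: (shown_per_topic.get(i.topic, 0), -i.predicted_engagement),
        )
        ranked.append(best)
        remaining.remove(best)
        shown_per_topic[best.topic] = shown_per_topic.get(best.topic, 0) + 1
    return ranked


pool = [
    Item("Star feud erupts", "gossip", 0.9),
    Item("Star wedding photos", "gossip", 0.8),
    Item("Council passes budget", "policy", 0.4),
    Item("River levels rising", "weather", 0.5),
]
affinity = {"policy": 0.9, "weather": 0.3}  # hypothetical user profile

for name, ranking in [
    ("engagement", rank_for_engagement(pool)),
    ("personalization", rank_for_personalization(pool, affinity)),
    ("diversity", rank_for_diversity(pool)),
]:
    print(name, [i.title for i in ranking])
```

The same four stories come out in three different orders depending solely on the objective, which is the sense in which whoever chooses the objective exercises gatekeeping power.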

The incorporation of proprietary AI systems from tech giants (Google, Meta, OpenAI, etc.) into news workflows has effectively created new gatekeepers outside the newsroom. News organizations using third-party AI tools may cede some control over how news is gathered, produced, or delivered. As a result, the locus of gatekeeping power shifts toward these technology providers. Our research supports Simon’s (2022) argument that the “AI goldrush” in news risks increasing the news industry’s dependence on platform companies (Simon, 2022). Where previously platforms mainly controlled distribution (e.g., social traffic to sites), now their influence extends into content production and curation algorithms embedded in news products. This raises theoretical implications: gatekeeping is no longer solely a media institution function but a shared function with technology institutions. We identify platform companies and AI developers as emergent gatekeepers in the news ecosystem. This is encapsulated in the idea of “secondary gatekeeping” by infrastructure: for example, if a newsroom relies on a content moderation AI to filter user submissions, the provider of that AI is indirectly gatekeeping what is published. Editorial autonomy is thus constrained by technical dependency, an insight that adds a political economy layer to gatekeeping theory: control over the “gates” can lie with actors who own the algorithms and data. This leads to questions of trust and legitimacy of algorithmic gatekeepers (van Dalen, 2024): do journalists and the public trust AI systems to play this role, and under what conditions is that power legitimate? Our framework emphasizes that gatekeeping authority is contested in the AI era, and legitimacy must be negotiated (through transparency, regulation, etc.).

Unlike static human judgments, AI gatekeeping systems continuously learn and adjust. This adaptive nature allows outcomes to evolve through self-reinforcing cycles. Evidence for these dynamics is particularly apparent in personalized news delivery: algorithms show users more of what they engage with, and as users engage primarily with what they like or agree with (confirmation bias), the algorithm further biases the selection of news along those lines (Mattis et al., 2021). Over time, this can narrow an individual’s exposure (creating a filter bubble effect) or simply cater to their preferences at the cost of serendipity. Additionally, feedback loops can operate at the ecosystem level. For example, if Facebook’s algorithm downranks news broadly, users might click news less, prompting the algorithm to show even less news, a loop that “deprioritizes news, decreasing exposure and interest in news over time” (Yu et al., 2024). In our theoretical model, we incorporate adaptive gatekeeping: the idea that what is gated in or out today can influence audience behavior, which in turn influences what is gated tomorrow. This dynamic challenges the static nature of traditional gatekeeping theory. It also implies that gatekeeping should be analyzed as an ongoing process with memory and path dependency, not just a single decision point. We also note that AI systems can be designed to include corrective reinforcement cycles (e.g., promoting diverse content to diversify user engagement), suggesting a possible intervention point for maintaining diversity. The notion of “algorithmic feedback gatekeeping” is thus a core insight: gatekeeping is partly delegated to a learning system that co-evolves with its audience. Any theoretical account of gatekeeping now must contend with these nonlinear dynamics.
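
The path dependency of such a loop can be illustrated with a toy simulation in Python. The model is an assumption made for illustration only and is not taken from Yu et al. (2024) or any platform: the feed’s next share of news slots is set equal to news’s share of the previous round’s clicks, the click rates are hypothetical constants, and `min_news_share` stands in for a simple corrective floor.

```python
def simulate_news_share(initial_share, news_click_rate, other_click_rate,
                        steps, min_news_share=0.0):
    """Return the share of feed slots given to news after each round.

    Toy rule: the next round's news share equals the fraction of the current
    round's clicks that went to news, never dropping below min_news_share.
    """
    share = initial_share
    shares = [share]
    for _ in range(steps):
        news_clicks = share * news_click_rate
        other_clicks = (1.0 - share) * other_click_rate
        total_clicks = news_clicks + other_clicks
        if total_clicks > 0:
            share = news_clicks / total_clicks
        share = max(min_news_share, share)  # corrective floor, if any
        shares.append(share)
    return shares


# News starts with half the feed but is clicked half as often per impression.
unchecked = simulate_news_share(0.5, 0.1, 0.2, steps=3)
with_floor = simulate_news_share(0.5, 0.1, 0.2, steps=3, min_news_share=0.25)

print([round(s, 3) for s in unchecked])
print([round(s, 3) for s in with_floor])
```

Under these assumptions, a modest per-impression disadvantage compounds round after round, and news steadily loses visibility even though nothing about the stories themselves changes. The floor shows one way a corrective cycle could halt the decline, at the cost of overriding the engagement signal.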

Collectively, these insights suggest a transformed gatekeeping paradigm. We propose that gatekeeping in the AI age is best understood as a socio-technical, multi-actor, and iterative process. Humans (journalists, editors, audiences), technologies (algorithms, AI writers), and organizations (newsrooms, platform firms) form a network of gatekeeping agents. Power within this network is fluid: editorial intent, algorithm design, and user behavior all exert influence. Our theoretical framework, therefore, moves away from viewing gatekeeping as a top-down editorial filter and toward a networked gatekeeping model, where influence is distributed and often opaque. In the next section, we support and elaborate on these insights with a detailed thematic literature review, linking empirical findings and scholarly arguments to each facet of the framework.

3.2. Automated News Generation by AI and Gatekeeping of Content

A parallel strand of research examines automated journalism—the use of algorithms, including LLMs, to generate news stories or news-like content. Prominent examples include The Associated Press using algorithms to write corporate earnings reports, and more recently, explorations of using GPT-like models to draft news articles. While initially these systems followed predetermined templates (for well-structured data like sports scores or financial data), the advent of powerful LLMs means AI can potentially decide what to write about and how to narrate it with much less human oversight. This raises the question: in automated news writing, where does gatekeeping occur and who (or what) is the gatekeeper?

Technical features of AI writers directly influence gatekeeping of content. One critical factor is the training data. An LLM trained predominantly on prior news articles will learn the prevailing news values and biases present in that corpus; it may mirror the story selection tendencies of mainstream media, including their blind spots. If certain communities or topics were underreported in the training data, the AI might also underrepresent them, effectively perpetuating existing gatekeeping biases. Indeed, researchers warn that “dependence on algorithms and data used by robots can lead to news that tends to reinforce existing biases”, whether political, social, or gender-based (Irwanto et al., 2024). For instance, an AI might write more stories on crime if the training data overemphasized crime news, reinforcing a crime-centric agenda that a human editor might consciously avoid. This reflects how algorithmic content generation inherits biases from past media output, a form of gatekeeping by precedent.

Another factor is the optimization goal or directive assigned to the AI. If a news organization directs an AI to optimize content for maximum clicks or SEO, the AI might choose topics and angles it predicts will garner attention, potentially emphasizing sensationalism or controversy (e.g., choosing to write about celebrity gossip over a complex policy issue). Conversely, if instructed to focus on a broad news agenda, it might produce a more balanced mix. However, without clear guidance, an AI lacks the editorial intuition to gauge public importance. Ethicists highlight that AI lacks an internal moral compass or news judgment; it will follow its objective function. One outcome is the risk of “sterile, uninspiring journalism that fails to engage or reflect diversity” if an AI is too blandly unbiased (Gondwe, 2024). The opposite risk is an AI that, in trying to maximize engagement, skews toward hyper-engaging content and neglects less immediately clickable but important news. In either case, the traditional gatekeeper’s role of curating a balanced news diet is in question.

The literature also discusses the need for editorial oversight and hybrid models. Demartini et al. (2020) suggest keeping “humans in the loop” (Demartini et al., 2020), with journalists acting as a check on AI outputs to maintain integrity and accuracy (Irwanto et al., 2024). Human editors might choose which AI-generated pieces to publish or edit them, thus reasserting a gatekeeping function at the end of the pipeline. Singer (2014) introduces the concept of “secondary gatekeepers” in the form of users providing feedback and scrutiny to AI-generated content. For example, if an automated story contains errors or bias, audience critique can prompt corrections, indirectly influencing what stays published. This distributed gatekeeping again blurs the lines of who is gatekeeper (Singer, 2014).

The function and impact of algorithmic curation on major content platforms offer significant parallels for understanding AI-driven gatekeeping in news curation and automated journalism. On platforms like YouTube, prioritization algorithms act as powerful gatekeepers, selecting and ranking content based on metrics designed to maximize platform goals, such as user engagement. Initially driven by clicks, YouTube’s algorithm shifted to prioritize “watch time” partly to counteract manipulation and enhance user retention. This algorithmic control dictates content visibility and potential revenue, thereby functioning similarly to traditional gatekeeping mechanisms but driven by automated, often opaque, processes.

The influence of these curation algorithms extends deeply into content production strategies. Creators must often adapt their work to align with the perceived preferences of the algorithm to maintain visibility and economic viability. Ribes (2020) provides a detailed case study of this adaptation among YouTube animators, who responded to algorithmic changes by significantly increasing the length of their videos, adjusting publishing frequency, and simplifying animation techniques or shifting to less labor-intensive formats. This dynamic suggests that news producers facing AI-driven distribution might similarly feel pressure to modify journalistic practices or content formats to achieve algorithmic favor (Ribes, 2020).

This interaction between the platform’s technical infrastructure and the cultural content it hosts can be understood through the lens of “transcoding”, where the logic of the computational layer influences and reshapes cultural expression. As Ribes (2020) documents, the algorithmic and policy shifts on YouTube led to the emergence and popularization of new animation-related genres (such as machinima, animated vlogs, anime parodies, tutorials, and Q&A formats) that better satisfied the platform’s requirements for length and regular output. This evolution highlights how technological constraints can foster specific forms of content, a process potentially mirrored in the news ecosystem as AI curation preferences shape news formats (Ribes, 2020).

Moreover, the inherent complexity and opacity of these systems, often employing sophisticated AI such as deep neural networks, raise critical questions about accountability and bias, echoing concerns about the “black box” nature of AI in news selection and personalization. Compounding the algorithmic influence are the platform’s explicit policies and monetization rules, which establish additional constraints. Ribes (2020) found that YouTube’s guidelines regarding monetization and acceptable content (e.g., favoring ‘family-friendly’ themes) led animators to self-censor and avoid mature or controversial topics. This demonstrates how platform governance, enacted through both code and policy, shapes the content landscape, offering a relevant framework for analyzing how AI gatekeeping in news operates within specific commercial and regulatory contexts (Ribes, 2020).

Ethical considerations are central in this area. Accuracy and verification are classic gatekeeping concerns; with AI, there is a risk of generating false or misleading content (the “fabrication” or “hallucination” problem of LLMs). Thus, editorial gatekeeping extends to vetting AI outputs. Moreover, AI acting as a journalistic gatekeeper raises accountability issues: if an AI decides to omit a certain fact or perspective, who is responsible? Some have called for “algorithmic accountability” in journalism (Diakopoulos, 2015), meaning news organizations should audit and disclose how their AI systems make decisions, similar to holding human journalists accountable for choices. A 2023 study in the Indian context (Elanjimattom, 2023) emphasized core values to uphold when employing AI, arguing journalists should act as “media literacy activists” to help the public understand AI’s role in news. A study of Romanian broadcasting, in turn, offers context-specific empirical data illustrating the practical manifestations of broader theoretical concerns about AI’s role in reshaping journalism, such as algorithmic gatekeeping (Vlăduțescu & Stănescu, 2025).

In summary, automated news generation introduces an internal gatekeeping mechanism within content creation. Instead of an editor assigning a story, an AI might spontaneously generate one from data patterns; instead of a reporter deciding which angle or sources to include, an AI picks based on its training. These micro-decisions by AI collectively amount to gatekeeping influences on what information is conveyed and how. The literature in this domain is still emerging, but it consistently calls for maintaining human oversight, transparency in AI content generation, and alignment of AI outputs with editorial standards (Irwanto et al., 2024). The gatekeeping role of training data and algorithms must be recognized and managed to prevent the erosion of journalistic quality and diversity. We see consensus that full automation without accountability could be harmful; thus, the field is moving toward frameworks where AI is a tool assisting journalists, rather than replacing the editorial gatekeeper entirely. Even so, the agency of AI in story selection and framing is a novel dimension that theories of gatekeeping must incorporate.

3.3. Locus of Control: Third-Party AI Systems and Newsroom Autonomy

As news organizations integrate AI technologies, many rely on third-party systems provided by tech companies—from content management plugins to cloud-based AI APIs (for translation, summarization, etc.). This outsourcing of AI capabilities has important implications for control and autonomy in journalism. The literature highlights a growing dependence on a few big technology firms for AI tools, which can shift power in subtle but significant ways.

Simon (2022) argues that the introduction of AI in news risks shifting even more control to platform companies, increasing newsrooms’ dependency on them (Simon, 2022). Traditionally, news organizations already depended on platforms for audience access (e.g., Facebook for distribution, Google for search traffic). Now, if those same companies or similar giants (Google Cloud AI, Amazon, OpenAI, etc.) provide the core AI infrastructure for news production, the tech firms’ influence permeates further into the news production chain. Our review of the Reuters Institute’s reports (Newman et al., 2023) and the Tow Center’s extensive interview study (Simon, 2024) supports this. Newsrooms often lack resources to develop AI in-house and turn to readily available solutions from tech firms. For example, many use Google’s natural language APIs for paywall recommendations or moderation, or rely on OpenAI’s GPT via licensing. As a result, the gatekeeping function is partly ceded to these external systems, e.g., an AI that flags comments as toxic (thus gating user input) or an AI that determines content placements on a personalized app (Sonni et al., 2024).

This dependency creates what the Tow Center report describes as “lock-in effects that risk keeping news organizations tethered to technology companies”, limiting their autonomy (Simon, 2024). If a newsroom builds its workflow around a certain AI service, it may be vulnerable to that service’s pricing changes, policy shifts, or technical constraints. More subtly, the lack of transparency in proprietary AI can obscure how decisions are made. A newsroom might not fully know why the AI’s recommendations look a certain way, making it difficult to challenge or adjust those gatekeeping decisions. This opacity can hide biases or errors, effectively meaning the editorial team is flying blind in certain aspects of content curation controlled by the algorithm.

Another dimension is how third-party AI may embody the values or priorities of its creators rather than the news organization’s. If a social media platform’s algorithm values user engagement above all, news that travels via that platform is subject to those values. As the Tow Center report notes, the balance of power tilts toward tech companies, raising concerns about “rent extraction” (platforms profiting from AI features with news content) and threats to publishers’ autonomy and business models (Simon, 2024). For example, if an AI-driven search provides answers directly (like Google’s AI snippets), users might not click through to the news site, depriving publishers of traffic and revenue, an outcome determined by the platform’s gatekeeping decisions.

From a theoretical standpoint, this theme touches on agenda-setting power as well: tech companies may increasingly set the agenda for what technical solutions are used and how news is delivered, indirectly setting the agenda for public conversation. If, say, an AI assistant (voice or chatbot) provides the “top news” via some algorithm, it influences what issues people perceive as top news. The locus of agenda setting moves further away from news editors and more towards AI system designers.

Scholars suggest mitigation strategies such as developing open-source or in-house AI tools to retain control (though this is costly) and pushing for transparency requirements from tech providers. Policy discussions around the EU’s Digital Services Act and AI Act have floated rules for algorithmic transparency in content recommendation, which could help news organizations regain some insight into third-party gatekeepers.

Helberger et al. (

2018) have also argued for a concept of “

platform responsibility” in curating news, given their quasi-editor role (

Helberger et al., 2018).

In sum, the literature portrays a delicate balance. Third-party AI systems deliver efficiency and advanced capabilities that newsrooms need (hence, the “

AI goldrush”), yet they also embed news production within the orbit of big tech’s power. The locus of gatekeeping is partially externalized, which is a sea change from when gatekeeping was fully internal to media institutions. Recognizing platform companies and tech providers as stakeholders in gatekeeping theory is essential (

DeIuliis, 2015). We must now analyze gatekeeping as occurring in a distributed ecosystem: editorial choices are interwoven with platform algorithms, and control over content flows involves negotiation (or conflict) between media and tech sectors. This reframing is critical for journalism studies, as it connects to issues of media freedom, independence, and the autonomy of journalism as a profession in an age of pervasive technology.

3.4. Feedback Loops, Adaptive Learning, and News Visibility

Modern AI systems in news distribution are not static gatekeepers; they continuously update what they show based on user interactions, leading to feedback loops that can amplify certain patterns in news consumption. This theme has attracted attention from both communication scholars and computer scientists concerned with recommender systems. The central insight is that algorithmic gatekeeping is dynamic—it shapes user behavior, which in turn shapes the algorithm’s future gatekeeping decisions.

One widely discussed consequence is the potential reinforcement of confirmation bias. As noted in a theoretical framework by

Mattis et al. (

2021), confirmation bias influences news consumption in recommender systems through biased content selection and algorithmic feedback loops (

Mattis et al., 2021). If a reader tends to click on partisan news favoring one side, the system learns this preference and feeds more of it, validating the user’s beliefs and reducing exposure to opposing perspectives. Over time, the feed becomes narrowly tailored, a phenomenon often described as an “

echo chamber” (

Morando, n.d.). While the degree to which algorithms alone create echo chambers is debated (as we saw, user choice and network homophily also play roles), algorithmic feedback can undeniably magnify engagement biases (

Lu et al., 2024).

Another effect is the rich-get-richer or popularity feedback loop. Content that initially receives slightly more clicks can be further boosted by the algorithm, gaining even more visibility and thus more clicks, while less immediately popular content is buried. This can lead to a skew in visibility where a few stories dominate attention. Such loops might explain why trivial or sensational stories sometimes “go viral” at the expense of more nuanced reporting. The algorithm doesn’t “know” which story is more important, only which is receiving interaction. As a result, initial fluctuations can be amplified—a form of algorithmic gatekeeping that exaggerates winners and losers among news items.
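The popularity loop described above can be illustrated with a minimal, deterministic sketch. It is not drawn from any platform's actual ranking code: the function name `simulate_popularity_loop`, the `boost` exponent, and the assumption that all stories are equally click-worthy are hypothetical choices made only for illustration. Visibility is allocated in proportion to past clicks raised to `boost`; when `boost` exceeds 1, a small initial lead grows round after round, and when it equals 1 the initial shares simply persist.

```python
def simulate_popularity_loop(initial_clicks, rounds=50, new_clicks_per_round=100.0, boost=1.5):
    """Expected-value sketch of a rich-get-richer visibility loop.

    Hypothetical model: each round, a fixed number of new clicks is split
    among stories in proportion to (past clicks ** boost). The stories are
    assumed to be equally interesting; only their prior visibility differs.
    """
    clicks = [float(c) for c in initial_clicks]
    for _ in range(rounds):
        weights = [c ** boost for c in clicks]
        total_weight = sum(weights)
        # Stories with more past clicks receive a larger slice of new attention.
        clicks = [
            c + new_clicks_per_round * w / total_weight
            for c, w in zip(clicks, weights)
        ]
    total_clicks = sum(clicks)
    # Return each story's final share of all clicks.
    return [c / total_clicks for c in clicks]


if __name__ == "__main__":
    # One story starts with a single extra click.
    print(simulate_popularity_loop([11, 10, 10, 10], boost=1.5))
    print(simulate_popularity_loop([11, 10, 10, 10], boost=1.0))
```

In this toy setting, the story with one extra initial click ends with a larger share than it started with whenever `boost` is above 1, even though nothing about its content differs from the others.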

Importantly, reinforcement cycles can also work at the level of news avoidance and declining news exposure. As one study suggested, if a platform’s algorithm notices a user seldom clicks on hard news, it might show even less news, leading the user to see and engage with even fewer news stories, in a downward spiral (

Yu et al., 2024). This feedback loop is concerning from a civic perspective—it could contribute to news deserts in individuals’ media diets.

E. Nguyen (

2023) refers to this as “

algorithmic aversion loops”, where low initial interest leads to algorithmic deprioritization of news, which then further dampens interest (

Liu et al., 2023;

E. Nguyen, 2023;

L. Nguyen et al., 2024).
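A minimal sketch can make the downward spiral concrete. The update rule below is an assumption chosen for illustration, not the mechanism reported in the cited studies: the feed's news share is nudged toward the fraction of the user's clicks that land on news. The names `simulate_news_share`, `learning_rate`, and `floor` are hypothetical; `floor` stands in for a possible moderation mechanism, such as a minimum quota of news items.

```python
def simulate_news_share(p_news, p_other, start_share=0.3, rounds=30,
                        learning_rate=0.5, floor=0.0):
    """Sketch of an aversion loop in a personalized feed.

    p_news / p_other: assumed probabilities that the user clicks a news item
    or a non-news item when it is shown.
    Returns the share of the feed devoted to news after each round.
    """
    share = start_share
    history = [share]
    for _ in range(rounds):
        news_clicks = share * p_news
        other_clicks = (1.0 - share) * p_other
        total = news_clicks + other_clicks
        # Fraction of observed clicks that went to news (unchanged if no clicks).
        observed = news_clicks / total if total > 0 else share
        # The feed moves its news share toward the observed click fraction.
        share = (1.0 - learning_rate) * share + learning_rate * observed
        # Optional lower bound, a hypothetical safeguard against news vanishing.
        share = max(share, floor)
        history.append(share)
    return history


if __name__ == "__main__":
    spiral = simulate_news_share(p_news=0.05, p_other=0.20)
    guarded = simulate_news_share(p_news=0.05, p_other=0.20, floor=0.1)
    print(spiral[-1], guarded[-1])
```

Under these assumptions, a user who clicks news less often than other content sees the news share shrink every round, while a nonzero `floor` holds it at the chosen minimum.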

Adaptive learning algorithms also mean that gatekeeping outcomes can shift in response to collective behavior. For instance, during breaking news events or crises, user interest spikes and algorithms may adapt to prioritize authoritative news sources (to meet user demand), which can temporarily counteract prior personalization. But once the event wanes, the algorithm might revert to usual patterns. Thus, what is gated through at any given time can be in flux, responding to trends and user signals.

The literature also explores interventions to steer these feedback loops towards positive outcomes. Diversity-aware recommender algorithms are a line of research: these systems explicitly incorporate a diversity criterion to ensure a variety of sources or viewpoints are shown (

Helberger et al., 2018;

Zuiderveen Borgesius et al., 2016). By doing so, they aim to break the loop of homogenization. Experiments with “

nudges” for users—such as interface cues encouraging people to read a wider range of news—have also been tested (

Möller et al., 2018). The idea is to create a

feedback loop for good, where increased engagement with diverse content trains the algorithm to keep offering diversity (

Bodó et al., 2019).
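As a rough illustration of what a diversity criterion can look like in practice, the sketch below greedily re-ranks candidate stories while penalizing sources that have already been selected. This is a generic greedy heuristic, not the method of any of the works cited above; the function name, the `penalty` parameter, and the item fields (`id`, `source`, `score`) are hypothetical.

```python
from collections import Counter


def rerank_with_source_diversity(items, k, penalty=0.3):
    """Pick k items, trading predicted relevance against source repetition.

    items: list of dicts with hypothetical keys "id", "source", "score".
    penalty: amount subtracted from an item's score for each item from the
             same source that has already been chosen.
    """
    remaining = list(items)
    chosen = []
    already_shown = Counter()
    while remaining and len(chosen) < k:
        # Adjusted score falls as a source accumulates slots in the feed.
        best = max(
            remaining,
            key=lambda item: item["score"] - penalty * already_shown[item["source"]],
        )
        chosen.append(best)
        already_shown[best["source"]] += 1
        remaining.remove(best)
    return chosen


if __name__ == "__main__":
    candidates = [
        {"id": "a1", "source": "Outlet A", "score": 0.90},
        {"id": "a2", "source": "Outlet A", "score": 0.85},
        {"id": "a3", "source": "Outlet A", "score": 0.80},
        {"id": "b1", "source": "Outlet B", "score": 0.70},
        {"id": "c1", "source": "Outlet C", "score": 0.60},
    ]
    print([item["id"] for item in rerank_with_source_diversity(candidates, k=3)])
```

With `penalty=0` the function reduces to plain relevance ranking, so the parameter makes the trade-off between engagement-style scores and source variety explicit and tunable.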

The interplay of user agency and algorithmic learning is crucial. Users are not passive; their choices feed the algorithm, but they can also make conscious choices to diversify their intake (or use platform settings if available). Some research suggests that more educated or aware users can escape negative loops by actively seeking varied news (

Bodó et al., 2019), whereas disengaged users might be more at the mercy of the default algorithm. This points to a need for digital literacy regarding how feeds work, empowering audiences in the gatekeeping process.

In theoretical terms, feedback loops mean that gatekeeping has a temporal dimension—it is a process that evolves. Traditional theory considered gatekeeping largely a moment of decision (publish or not, place on front page or not). Now, gatekeeping is iterative and cumulative. Each click or skip by users essentially votes on what the gatekeeping algorithm will do next. Our framework thus incorporates concepts from complex systems and feedback theory, e.g., path dependence, equilibrium vs. runaway effects, and potential need for moderation mechanisms to prevent undesirable equilibria (like extreme polarization or disengagement). Some scholars draw analogies to agenda setting’s second level (attribute agenda setting or framing) where feedback loops might also affect not just what topics are shown but what aspects of those topics are emphasized over time by algorithmic tweaking.

Ultimately, the presence of adaptive feedback loops in news algorithms underscores that gatekeeping is no longer solely a deliberate act by news editors, but an emergent phenomenon arising from many micro-interactions. It complicates the attribution of responsibility—an outcome (say, widespread belief in a false story) might be due to a loop between user behavior and algorithm, rather than a single gatekeeper’s error. This challenges researchers to develop new methods (algorithm audits, simulations) to understand these dynamics. The literature, while still developing, makes it clear that addressing the effects of feedback loops is key to fostering a healthy information ecosystem. Breaking harmful loops and reinforcing beneficial ones (like interest in high-quality news) could become a new goal for both platforms and news outlets, aligning technological gatekeeping with democratic ideals.

4. Discussion

The findings from our literature review and theoretical synthesis paint a picture of news gatekeeping in flux. In this discussion, we interpret these insights, connect them back to our theoretical foundations, and explore their implications for both scholarship and practice. We also identify limitations of the current knowledge and propose directions for future research and theory-building.

Our exploration demonstrates that, while the core concerns of classic media theories remain pertinent, their mechanisms manifest differently in an AI-permeated environment. The fundamental gatekeeping question (who controls content access?) now points to a complex interplay of editorial judgment, algorithmic design, and user interaction. This calls for an expansion of Shoemaker and Vos’s gatekeeping framework. We propose adding an algorithmic layer to the model of influences on media content. For instance, alongside individual and routine-level influences (e.g., a reporter’s instincts or newsroom policy), we must consider algorithmic influence: the role of platform algorithms and AI tools as both constraints and actors in decision-making. Similarly, agenda setting must now account for algorithmic influence on perceived issue importance. Platforms might set a de facto agenda via trending-topics algorithms or search autocompletion, which cue audiences on what matters. Traditional agenda-setters (news editors) operate in this shadow: they might publish a story, but if the algorithm does not amplify it, the issue may never reach the public agenda. Framing theory must also adapt, considering how AI-generated narratives might subtly shape presentation through learned language patterns and biases. These observations urge a conceptual update: we should view media theories through a socio-technical lens, recognizing technology not just as environment but as part of the communicative act.

Our framework benefits from STS and AI ethics perspectives. STS reminds us that technologies come loaded with the values and assumptions of their creators—in gatekeeping terms, which doors are built, and how they function, is a design choice influenced by corporate and engineering culture. For example, the choice by YouTube to prioritize “watch time” in recommendations was not value-neutral; it reflected a business goal that, in turn, affected the visibility of certain kinds of content (often favoring sensational videos that keep eyes glued). This resonates with STS ideas of values in design. We therefore incorporate the notion of “affordances as gatekeepers”—the idea that the technical affordances of platforms (like the Share button, the algorithm’s openness to user feedback, the character limit on Twitter) shape what information thrives. AI ethics contribute principles like transparency, fairness, and accountability that we argue should be applied to gatekeeping mechanisms. A transparent algorithmic gatekeeper would disclose, at least in broad terms, how it selects news (e.g., “stories are ranked by relevance and freshness” or “personalized to your reading history”). Fairness might mean ensuring minority viewpoints or local news have a fair shot in recommendations rather than being drowned out by majority preferences. Accountability would entail mechanisms to audit and correct gatekeeping biases or mistakes—for instance, an appeals process if certain content is consistently suppressed by an algorithm.

The reconceptualized gatekeeping model has practical implications. News organizations must recognize that gatekeeping is a responsibility shared with technology. This may require new roles in newsrooms, such as data scientists and algorithm auditors working alongside editors to tune and oversee algorithmic curation systems in line with editorial values. It also means engaging proactively with platforms. For example, if changes to Facebook’s algorithm drastically reduce the reach of critical civic information, news organizations might need to adapt their distribution or lobby for adjustments. The notion of “algorithmic literacy” comes into play: journalists and editors need a greater understanding of how algorithms work (much as they learned about SEO in the early 2000s) to effectively navigate and influence those external gatekeeping forces. Some newsrooms have begun creating AI ethical guidelines for themselves, to decide what tasks to automate, how to maintain human control, and how to be transparent with their audience about AI use.

Policy implications also arise, particularly concerning the platform power dynamics previously discussed. If a handful of algorithms are the new gatekeepers to the public sphere, society has a stake in how they operate. Regulatory approaches could include mandating transparency for major news recommenders (so researchers can study bias and influence), as well as ensuring competition (to avoid a monoculture of one dominant gatekeeping algorithm). There are parallels here to historical broadcasting regulations that aimed to ensure diverse viewpoints. One might envision an adaptation, e.g., requiring that algorithmic news feeds provide options to users (like a “diversity” mode vs “personalized” mode) or that they include some public service content. Another avenue is supporting public interest algorithms—initiatives to create open-source or public media-owned recommendation systems aligned with democratic values.

The public too plays a role. In our updated model, users are not merely endpoints but participants who can influence gatekeeping through their collective behavior. Media literacy efforts should inform audiences that their clicks and shares have consequences beyond individual preference—they shape the information landscape for themselves and others. Encouraging more mindful consumption (e.g., occasionally clicking on quality journalism even if it is not one’s usual fare, to signal interest) could be framed as a civic act in the digital age.

Despite the progress in understanding AI’s impact on news, there are areas where the literature is still nascent or inconclusive. One limitation is the black box nature of proprietary algorithms—researchers often rely on reverse-engineering or observational studies without full visibility into how platforms curate news. This makes it challenging to attribute causality (is a lack of diversity the algorithm’s doing, or due to user self-selection?). Future research could benefit from collaborations with platforms or from platforms granting data access to independent auditors. Another limitation is that much research has focused on Western contexts and on a few big platforms (Facebook, Google). We know less about how gatekeeping is changing in messaging apps (like WhatsApp), voice assistants, or in countries where different platforms dominate. Additionally, the impact of generative AI (LLMs) in newsrooms is very fresh—empirical studies are only beginning. How audiences respond to AI-generated news (in terms of trust and credibility) is an open question: early experiments indicate some readers find AI-written news surprisingly credible due to the “machine objectivity” heuristic (

Elanjimattom, 2023), which could either help reduce perceived bias or conversely mask subtle biases. This area needs more research.

Building on our analysis, we outline several directions to advance the theoretical and empirical understanding of gatekeeping in the AI age. Scholars should formulate models that quantitatively and qualitatively describe the interplay between human and algorithmic decisions. For example, how do editorial choices and algorithmic rankings correlate in shaping the final news that audiences see? Models could incorporate variables like algorithmic weight, editorial weight, and user feedback loops to simulate different scenarios of gatekeeping balance.
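One way such a model could be set up is sketched below. It is a toy under stated assumptions, not a validated model: each story carries a hypothetical `editorial` priority and a hypothetical `engagement` estimate (both on a 0 to 1 scale), visibility is a weighted blend of the two, and higher-ranked stories gain engagement in the next round. All names and the `exposure_gain` rule are illustrative.

```python
def rank_stories(stories, editorial_weight, algorithmic_weight):
    """Order stories by a weighted blend of editorial priority and engagement."""
    def blended(story):
        return (editorial_weight * story["editorial"]
                + algorithmic_weight * story["engagement"])
    return sorted(stories, key=blended, reverse=True)


def simulate_gatekeeping(stories, editorial_weight, algorithmic_weight,
                         rounds=5, exposure_gain=0.1):
    """Run several ranking rounds with a simple user-feedback loop.

    After each round, a story's engagement estimate rises by
    exposure_gain / rank position, so top slots attract more interaction.
    Returns the story ids in their final ranked order.
    """
    # Work on copies so the caller's data is left untouched.
    state = [dict(story) for story in stories]
    for _ in range(rounds):
        ranked = rank_stories(state, editorial_weight, algorithmic_weight)
        for position, story in enumerate(ranked):
            boost = exposure_gain / (position + 1)
            story["engagement"] = min(1.0, story["engagement"] + boost)
    final = rank_stories(state, editorial_weight, algorithmic_weight)
    return [story["id"] for story in final]


if __name__ == "__main__":
    stories = [
        {"id": "budget", "editorial": 0.9, "engagement": 0.2},
        {"id": "celebrity", "editorial": 0.2, "engagement": 0.8},
        {"id": "local", "editorial": 0.6, "engagement": 0.4},
    ]
    print(simulate_gatekeeping(stories, editorial_weight=1.0, algorithmic_weight=0.0))
    print(simulate_gatekeeping(stories, editorial_weight=0.0, algorithmic_weight=1.0))
```

Varying the two weights between these extremes gives a simple way to explore scenarios of gatekeeping balance, and the feedback step can be replaced with any empirically grounded engagement model.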

There is a need for longitudinal research following the introduction of AI systems in news contexts. For instance, if a newsroom starts using an AI recommender, how do the diversity and composition of its content output, and its audience engagement, change over time? Such studies can reveal the lasting effects of (or adaptations to) AI.

Future research should connect gatekeeping changes to effects on public knowledge, attitudes, and polarization. Does algorithmic gatekeeping measurably alter what the public knows about certain issues (agenda-setting outcomes)? Does it affect trust in news (for example, do people trust a selection more or less when they perceive it as less human)? This merges the media effects tradition with the new gatekeeping realities.

Interdisciplinary collaboration with ethicists and legal scholars could produce concrete frameworks for responsible algorithmic gatekeeping. Pilot programs where news organizations or platforms implement transparency reports (e.g., publishing a “nutrition label” for the day’s recommended news mix) could be studied. Similarly, if a platform allows users to toggle recommendation criteria, researchers could observe how many opt for more diverse feeds and what impact that has.
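To indicate what a simple “nutrition label” might contain, the sketch below summarizes a day's recommended items by the share of each topic and a normalized Shannon entropy score (0 when everything comes from one topic, 1 when topics are evenly spread). The label format, the function name, and the `topic` field are hypothetical; an actual transparency report would need categories agreed between platforms, newsrooms, and researchers.

```python
import math
from collections import Counter


def feed_nutrition_label(recommended, field="topic"):
    """Summarize the mix of a recommended feed along one attribute.

    recommended: list of dicts, each with the given field (e.g. "topic").
    Returns the share of each category and a diversity score in [0, 1]
    based on Shannon entropy, normalized by its maximum for the number
    of categories present.
    """
    if not recommended:
        return {"shares": {}, "diversity": 0.0}
    counts = Counter(item[field] for item in recommended)
    total = sum(counts.values())
    shares = {category: n / total for category, n in counts.items()}
    if len(shares) < 2:
        # A single category carries no diversity.
        return {"shares": shares, "diversity": 0.0}
    entropy = -sum(p * math.log2(p) for p in shares.values())
    return {"shares": shares, "diversity": entropy / math.log2(len(shares))}


if __name__ == "__main__":
    feed = [
        {"id": 1, "topic": "politics"},
        {"id": 2, "topic": "politics"},
        {"id": 3, "topic": "sports"},
        {"id": 4, "topic": "science"},
    ]
    print(feed_nutrition_label(feed))
```

The same function can be pointed at a different attribute, such as source or region, by changing `field`, which would let a study compare several notions of diversity on the same feed.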

Research in diverse global settings (such as India’s WhatsApp news circulation, China’s Toutiao AI-driven news app, or Africa’s use of radio + social hybrids) will test our theoretical framework’s universality. These contexts might reveal different configurations of human/AI gatekeeping—for example, heavier state influence or community influence interacting with algorithms. Studying these can enrich the theory or show its limits.

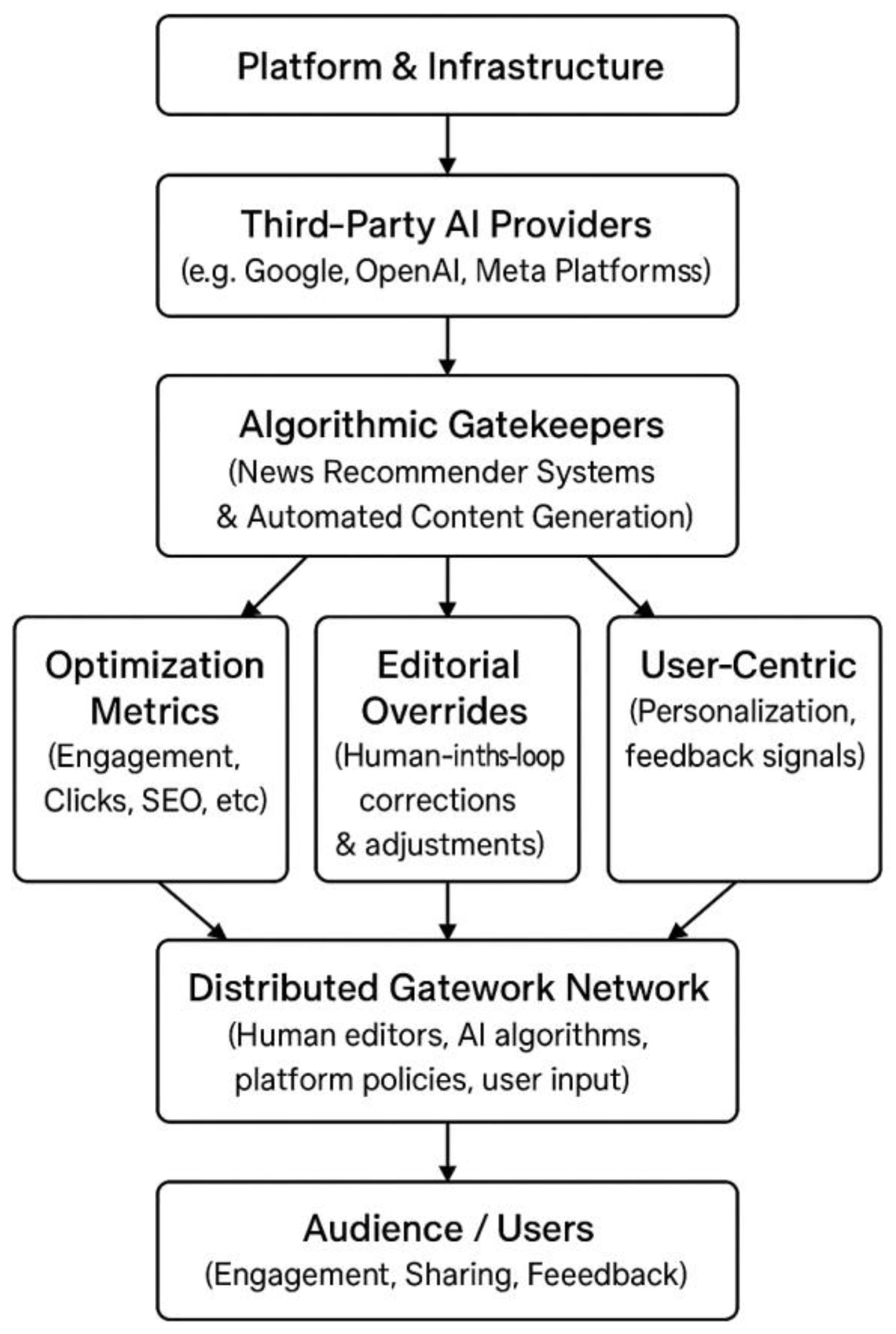

In light of everything, we propose that gatekeeping theory be reframed as

Algorithmically Networked Gatekeeping, visualized in

Figure 1. This theoretical perspective views gatekeeping not as a series of linear filters but as a networked process involving human and non-human agents connected via technological platforms. Information “

gates” are everywhere, from the code that screens out hate speech, to the editor deciding a headline, to the user’s own swiping behavior. Power is exercised through these network connections. Borrowing from network theory, we acknowledge that gatekeepers can be nodes of various types (people, AI, organizations) and that gatekeeping outcomes emerge from their interactions. The perspective also suggests multi-dimensional evaluation: success is not just getting important news through the gates, but ensuring that the network as a whole promotes a healthy information ecosystem (which might mean building in counter-loops to avoid runaway biases).

Reconceptualizing gatekeeping in the age of AI is not only an academic exercise but a practical necessity. As AI systems rapidly become entrenched in news dissemination, understanding and guiding their gatekeeping influence will be crucial to upholding the foundational goals of journalism: informing the public, holding power to account, and enabling deliberation. The emerging theoretical model shifts from a solely human-centric paradigm to one where algorithms act as influential actors within a broader socio-technical ecosystem.

Digital platforms (Facebook, Google, X/Twitter, etc.) provide the foundational infrastructure for news delivery, exerting influence through technical affordances, business models, and platform policies. The infrastructure’s affordances—such as ranking algorithms and APIs—implicitly set conditions and boundaries for what kind of content is prioritized, visible, or economically viable.

Providers like Google (Cloud AI), Meta (Recommendation Systems), and OpenAI (GPT models) supply proprietary AI technologies that news organizations increasingly rely on for content curation and generation. This dependence shifts journalistic autonomy towards these AI providers, embedding the providers’ values and biases (through training data, algorithmic parameters, and optimization goals) into the news production workflow.

Algorithmic gatekeepers perform two essential functions: (1) Curation, by selecting and ranking news based on quantitative engagement metrics; (2) Generation, by autonomously drafting content using large language models. They incorporate implicit biases through optimization goals, such as maximizing engagement (clicks, shares) or user satisfaction, potentially diverging from traditional journalistic values.

Gatekeeping criteria are triadic, based on algorithmic optimization, editorial oversight, and user feedback. Algorithms leverage user metrics (click-through rates, dwell times, engagement) to dynamically shape visibility. This introduces a quantifiable logic that prioritizes audience engagement over editorial values, often privileging sensational, polarizing, or emotionally appealing content. Journalists and editors retain agency, intervening to correct, modify, or enhance algorithmic outputs to maintain journalistic standards and ethics. These overrides represent critical human-in-the-loop processes that balance computational optimization with qualitative news values. User interactions provide ongoing feedback to algorithms, creating dynamic feedback loops. Audience behavior shapes future algorithmic decisions, potentially reinforcing confirmation bias, echo chambers, or algorithmic aversion loops.

Gatekeeping emerges as distributed “gatework”, involving diverse human and non-human actors (journalists, editors, algorithms, platform engineers, users). Each actor contributes partially and interdependently, rendering the final gatekeeping outcome an emergent phenomenon rather than an editorial decree. Audiences actively participate in gatekeeping via engagement behaviors (clicking, sharing, commenting), influencing algorithmic recommendations. User choices thus constitute indirect gatekeeping power.

5. Conclusions

The transformation of news gatekeeping in the age of AI is a double-edged sword. On the one hand, AI-driven systems offer opportunities: they can process vast information, potentially personalize news to individual needs, and free journalists from routine tasks to focus on deeper reporting. On the other hand, these systems introduce uncertainties and challenges to the values and theories that have underpinned journalism for decades (

Sonni, 2025). Our theoretical exploration illustrates that traditional gatekeeping theory must evolve to account for algorithmic curation, automated content generation, and the entanglement of newsroom practice with platform ecosystems. The once-clear lines between editor, distributor, and audience have blurred into a continuum of gatekeeping agents.

We found that AI has reconstituted the gatekeeping process into a socio-technical network, where human intent, algorithmic logic, and user feedback continuously shape the news agenda. This calls for a new vocabulary and framework—one that recognizes algorithms as gatekeepers in their own right, even as they remain tools designed by humans. It also calls for humility: some aspects of this new gatekeeping (like complex feedback loops) defy simple control and prediction, reminding us that our media environment is an evolving ecosystem.

In answering our guiding question—how do AI-driven content curation and automated news generation challenge and reshape gatekeeping?—we conclude that they do so in at least four pivotal ways. First, they redistribute gatekeeping power, diminishing the monopoly of editorial gatekeepers and introducing new influential actors (tech platforms and AI models). Second, they change the criteria and transparency of gatekeeping, often shifting from normative news values to optimization metrics hidden in code. Third, they create conditions for new biases and inequalities in information exposure (but also tools to potentially counteract them, if we choose to deploy those). Fourth, they necessitate a rethinking of accountability—when an algorithm filters out important information or an AI story misleads, who is responsible and how do we address it?

Crucially, this reconceptualization does not imply that human journalists are obsolete in gatekeeping. Rather, it highlights that their role must adapt. Journalists and news organizations are needed more than ever to guide, oversee, and correct the algorithmic processes; to inject ethical judgment where machines might err; and to advocate for the public interest in the design of news technologies. The future of gatekeeping will likely be a collaborative enterprise between humans and AI, and understanding that collaboration is where future research and practice should focus.

In closing, this study contributes to a theoretical foundation for AI-era journalism. It provides a scaffold for scholars to build upon and for practitioners to reflect on. By integrating multidisciplinary insights, we hope to have sketched a coherent picture of where we stand and where we might go in understanding gatekeeping today. Nonetheless, this is an unfolding story. As AI capabilities advance (e.g., more sophisticated personalization, multimodal news, deepfake content), each development will pose new questions that test our framework. The reconceptualization of gatekeeping is thus an ongoing project, one that must remain responsive to technological change and anchored by journalism’s enduring mission to inform society. Our work is a step in that direction, urging continuous dialogue between media theory, technological insight, and ethical considerations to ensure that the flow of news in the digital age remains conducive to an informed and empowered public.