Abstract

This study explores the factors influencing the sustainable adoption of AI technologies in journalism. It integrates the expectation confirmation model (ECM)—including expectation confirmation, perceived usefulness, and satisfaction—with knowledge management (KM) features (knowledge sharing, acquisition, and application), and other factors like ease of use, trust, and technological affinity. Data from an online survey of 396 Chinese journalists using AI for journalistic tasks were analyzed through structural equation modeling. Results show that expectation confirmation significantly influences perceived usefulness, while satisfaction is correlated with expectation confirmation, usefulness, and ease of use. Sustainable use of AI is impacted by usefulness, satisfaction, knowledge management practices, ease of use, trust, and technological affinity, with perceived usefulness being the most significant factor. These findings provide deeper insights into AI adoption in journalism, offering implications for AI developers, media enterprises, and training programs aimed at fostering sustainable AI use in journalism.

1. Introduction

In recent years, artificial intelligence (AI) has become a significant force in sectors like journalism (Canavilhas, 2022; Goni & Tabassum, 2020). The technological advancements of AI, combined with the Internet of Things and big data, have enhanced various sectors, particularly in design and performance (Romanova et al., 2021). This rapid technological evolution has driven the journalism industry to integrate AI for improving news collection, production, and distribution (Chiu et al., 2021; Barredo Ibáñez et al., 2023). AI can increase accuracy, efficiency, and productivity in journalism, enhancing competitiveness for media firms (Shi & Lin, 2024). However, challenges like poor training and insufficient infrastructure hinder AI adoption (Kuai et al., 2022). Moreover, issues such as the changing role of journalists and job structure need further exploration (Kothari & Cruikshank, 2022).

AI offers several advantages, such as providing journalists access to material anytime (Noain Sánchez, 2022) and assisting with knowledge management (Pavlik, 2023). Despite AI’s growing presence in newsrooms, a disparity exists between resource-rich and resource-constrained organizations (Sun et al., 2024). Research on AI’s long-term impact in journalism, especially in China, is still limited (Cui & Wu, 2021). This study addresses these gaps by combining knowledge management (KM) frameworks and the expectation confirmation model (ECM), along with factors like perceived ease of use, trust, and technology affinity. The results aim to provide insights for media organizations, lawmakers, and technologists on how to facilitate AI adoption while preserving journalistic values like honesty and credibility.

2. Review of the Literature

2.1. AI Technologies in Journalism

AI offers promising tools for the media and journalism industries. For example, AI may give journalists access to material in any location and at any time (Noain Sánchez, 2022). It can provide specialized help by retaining prior discussions (de-Lima-Santos & Ceron, 2021) and can offer prompt input in a manner similar to human interaction. Because of these characteristics, journalists might use AI systems for knowledge management and for executing their regular tasks (Pavlik, 2023). While newsrooms globally investigate the integration of AI to enhance information gathering, organization, and delivery, a disparity persists between resource-abundant organizations and those with constrained resources (Sun et al., 2024). Moreover, the understanding of the factors influencing the acceptance and use of AI remains at an early stage, particularly within the journalism industry in China (Cui & Wu, 2021). Although studies on AI in journalism are on the rise, most concentrate on new technological developments and their direct practical applicability. Research on the extensive, long-term impacts of AI on journalistic practices across various socioeconomic contexts is lacking (Kothari & Cruikshank, 2022). Furthermore, current studies often neglect the impact of cognitive, organizational, and trust-related aspects on journalists’ readiness to sustainably integrate AI into their profession (Trang et al., 2024).

Key AI technologies used in journalism include automation, machine learning, and data processing, which help in the collection, creation, and dissemination of news (Fubini, 2022). In a fragmented media environment, AI provides a strategic advantage to mass media companies (Chiu et al., 2021). However, there are challenges, including the need for AI models to be re-engineered for new initiatives, which can limit cost efficiency (Stray, 2021). Investigative journalism applications using AI, such as computer vision, require significant investments in technology and skilled professionals (de-Lima-Santos & Mesquita, 2021). Additionally, AI integration into newsrooms is often expensive (Broussard et al., 2019), and AI algorithms may be based on biased or outdated data, raising ethical concerns (Guzman & Lewis, 2020; Sharma & Bhardwaj, 2024).

2.2. AI Technologies in the Chinese News Industry

AI technology has been adopted rapidly by news organizations worldwide, with AI-based algorithms being used to analyze data from diverse sources, convert text into audio and video formats, and assess sentiment (Calvo-Rubio & Ufarte-Ruiz, 2021; Islam et al., 2021). Major news organizations such as The Washington Post, The New York Times, and the Associated Press have successfully integrated AI into their operations (Chan-Olmsted, 2019). In China, newsrooms like Media Brain use AI tools to automatically produce news bulletins (Yu & Huang, 2021). AI also plays a crucial role in establishing news proposal infrastructure and is essential for data exploration, trend analysis, and theme identification (Túñez-López et al., 2021; Noain Sánchez, 2022). Social networks and online platforms are increasingly popular among journalists for news research, allowing for swift investigations into various events (Parratt-Fernández et al., 2021). This integration of AI enhances content generation and audience understanding (Chiu et al., 2021).

The use of artificial intelligence (AI) within the Chinese news ecosystem reflects a distinctive interplay of technological advancement, regulatory context, and cultural particularity (Lucero, 2019). AI-supported tools such as automated content generation, personalized recommendation algorithms, and sentiment analysis have become widely adopted in an effort to improve efficiency and audience engagement (Upadhyaya, 2024). Nevertheless, the Chinese media system is also shaped by strict content governance policies that emphasize both ideological alignment and social stability (C. C. Lee, 2003; Zhao, 2012). These policies shape the extent to which AI tools can and will be used for news dissemination, often embedding compliance mechanisms into the design of the algorithms; the ‘algorithmic transparency’ that is advocated is currently limited to the platform economy and industry (Xu, 2024). Therefore, AI algorithms trained on Chinese news data may demonstrate distinct behavioral patterns (Zheng et al., 2018), such as elevated sensitivity to politically sensitive topics and output aligned with state narratives. In Western contexts, where press freedom and algorithmic transparency are often noted features (Diakopoulos & Koliska, 2017), AI applications used for disseminating news content are often built around a diversity of viewpoints and an adversarial approach (Saeidnia et al., 2025). Specifically, sentiment analysis models trained on Western data tend to cover more of the spectrum of political rhetoric and dissenting opinion, whereas their Chinese counterparts display a more limited output range because of mechanisms that modify and shape output before dissemination (Z. Wang et al., 2025).
Cultural differences in communication styles can also lead to differences in NLP model performance; a tendency to communicate indirectly, for example, can affect the accuracy and interpretability of algorithms across cultures (Shin et al., 2022). These observed differences speak to the need for contextually situated adaptations in AI research. While the sports communication model, as presented, seemed to function very well in the tested and prior contexts, it needed adjustments for transferability across other media ecosystems. This “calibration” process must account for regulatory, cultural, and linguistic dimensions to ensure transferability. Despite these challenges, AI is a valuable tool in journalism, requiring journalists to deepen their knowledge to enhance AI capabilities in the newsroom (Trang et al., 2024). Consideration must also be given to power dynamics, enforcement mechanisms, and adherence to ethical and legal norms (Broussard et al., 2019). AI tools may reflect the biases of their creators, and there are concerns about racial, gender, and other biases in AI models (Campolo & Crawford, 2020; Forja-Pena et al., 2024). Models carrying these biases may not adapt to new cultural contexts, posing challenges for AI implementation across different nations (Sun et al., 2024). Therefore, understanding the factors that drive journalists’ use of AI is crucial for sustainable application in journalism.

In addressing the identified gap in the literature, this research empirically investigates the major factors that affect sustained use of AI technologies in journalism in the Chinese context as a differentiated regulatory and technological environment. The research asks three important questions:

- RQ1: How do expectation confirmation and perceived usefulness influence journalists’ satisfaction and continued use of AI?

- RQ2: How can knowledge sharing, acquisition, and application help sustain AI integration?

- RQ3: What roles do ease of use, personal trust, and technological affinity have in sustained use?

The study also examines new challenges that occur in resource-limited contexts, especially infrastructure, regulatory, and technological challenges. Overall, the study offers significant implications for media systems for both the developed and developing world.

3. Theoretical Approach

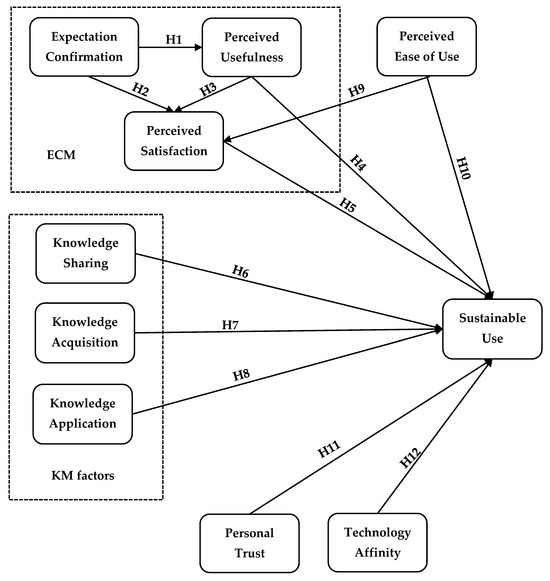

To investigate journalists’ continued use of AI for journalistic purposes, a comprehensive framework was developed. The model draws its core constructs from the expectation confirmation model (expectation confirmation, perceived usefulness, and satisfaction), combines them with knowledge management factors (knowledge sharing, knowledge acquisition, and knowledge application), and adds ease of use, trust, and technology affinity. Four reasons motivate this combination. First, the key elements of the ECM (expectation confirmation, perceived usefulness, and satisfaction) have been used extensively to evaluate the continued use of information technologies (Bhattacherjee, 2001). As AI technologies are still comparatively new, incorporating these variables should give an analytical view of how journalists may use AI in a sustainable manner. Second, KM aspects were included because the persistence of AI use is contingent upon the perception that these tools allow journalists to successfully acquire, share, and apply their knowledge. The knowledge management attributes were first used to assess how organizations adopted technological innovations (García-Sánchez et al., 2017); however, recent research has also validated their use at the individual level (Al-Sharafi et al., 2023). Third, according to the technology acceptance model (TAM), ease of use and usefulness are the most essential features in determining the spread of technological advancements (Davis, 1989). TAM posits that the use of a technology is affected by the intention to use it, that is, an individual’s conscious plan to use the technology.
Journalists’ perception of how easy AI technology is to use is therefore a critical determinant of their inclination towards sustainable use (Davis, 1989). Finally, research indicates that persons with a greater affinity for technology are more inclined to accept new technologies than those with a lesser affinity (Aldás-Manzano et al., 2009). Moreover, technological affinity has an indirect influence on the desire to embrace the technology (Trautwein et al., 2021). A number of studies have also shown that consumers’ views and decisions about the use of technology are highly influenced by the level of trust (Pham & Nguyet, 2023; Zhu et al., 2021). Consequently, integrating technology affinity and personal trust into the model is expected to better explain journalists’ motivation to use AI in journalism over the long term. Figure 1 illustrates the proposed research model, summarizing all hypothesized relationships among the constructs.

Figure 1. Conceptual research framework.

3.1. Expectation Confirmation

Expectation confirmation is defined as “users’ perceptions of the congruence between the expectation of information system usage and its actual performance” (Bhattacherjee, 2001). When the expectations people hold before using AI are met during their actual experience, those expectations are confirmed (Eren, 2021). Users form a favorable impression of the technology’s usefulness when their actual experience exceeds their pre-usage expectations (C. Y. Li & Fang, 2019). Prior research has substantiated the positive impact of expectation confirmation on perceived usefulness across many settings, including social networking platforms (Ambalov, 2021), electronic financial services (Rahi et al., 2021), and distance education (J. Wang et al., 2021). Likewise, previous studies have shown that expectation confirmation positively affects perceived usefulness in the setting of AI chatbots (Nguyen et al., 2021). Consequently, we suggest that if the effectiveness of AI meets journalists’ expectations, they are more inclined to see these technologies as beneficial for journalistic objectives. Therefore, we propose the following:

H1. Expectation confirmation positively influences the perceived usefulness of AI technologies for journalistic purposes.

Prior research identified a favorable correlation between expectation confirmation and perceived satisfaction (Dai et al., 2020). According to Pereira and Tam (2021), one of the most important factors that determines satisfaction is the degree to which one’s expectations are fulfilled. Individuals experience satisfaction with a technology when it fulfills their pre-usage expectations (Gupta et al., 2020). Some claim that when expectations are met, people are more satisfied with the technology they use (Eren, 2021). Consequently, it is anticipated that journalists will be content with AI technologies if these tools fulfill their expectations and enhance their journalistic practice. Therefore, we propose:

H2. Expectation confirmation positively influences perceived satisfaction with utilizing AI technologies for journalistic purposes.

3.2. Perceived Usefulness

Perceived usefulness is defined as “the degree to which a person believes that using a particular system would enhance his/her job performance” (Davis, 1989). Prior research has shown a positive relationship between perceived usefulness and satisfaction (Rahi et al., 2021). According to Jumaan et al. (2020), one of the most important factors influencing post-adoption satisfaction is how beneficial the product is judged to be. The favorable effect of usefulness on perceived satisfaction has been confirmed in the setting of AI use (Ashfaq et al., 2020). Journalists may use AI technologies for a variety of objectives, including, but not limited to, finding answers to their inquiries (Pérez et al., 2020), improving peer communication abilities (A. M. Al-Rahmi et al., 2021), and enhancing learning efficiency (Wu et al., 2020). As a result, journalists are more likely to be satisfied with AI if these technologies fulfill their requirements. Therefore, we propose the following:

H3. Perceived usefulness positively influences perceived satisfaction with utilizing AI technologies for journalistic purposes.

Research on post-adoption technology behavior has consistently treated perceived usefulness as an essential prerequisite of continued use intention (Pereira & Tam, 2021). Both technology continuance theory (TCT) and the ECM hold that perceived usefulness is a crucial factor in determining whether an individual intends to continue using a technology (Rahi et al., 2021). During the post-adoption phase, perceived usefulness is formed by journalists’ post-experience beliefs about the capability of AI to ease the process of gaining knowledge and to improve results in practice (Winkler & Söllner, 2018). Users are therefore motivated to continue using such technology because they believe that advanced AI technology improves functionality (Jumaan et al., 2020). It is thus reasonable to anticipate that journalists will continue to use AI technologies in the future if they believe that these technologies are beneficial. Therefore, we propose the following:

H4. Perceived usefulness positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.3. Perceived Satisfaction

Perceived satisfaction is defined as “the affective response that an individual derives from their prior IT usage experience” (Jumaan et al., 2020). A user’s level of satisfaction is determined by how a product’s actual performance compares with the user’s expectations (Yan et al., 2021). Studies in several settings have confirmed that satisfaction influences the intention to continue using a technology, for example, mobile banking (Foroughi et al., 2019), health and fitness apps (Chiu et al., 2021), LMSs (Ashfaq et al., 2020), educational chatbots (Al-Sharafi et al., 2023), and mobile government apps (Mandari & Koloseni, 2022). According to Zhang et al. (2022), continued use intention is driven mostly by satisfaction. Previous studies have shown that users are more likely to want to use AI continuously if they are satisfied with its use (X. Li et al., 2021). Consistent with earlier research, we postulate that journalists’ satisfaction with AI technologies leads them to keep using these tools in their media work. Therefore, we propose the following:

H5. Perceived satisfaction positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.4. Knowledge Sharing

Knowledge sharing is defined as “the process of disseminating various resources among the individuals taking part in specific activities” (Al-Emran & Teo, 2020). Central to the learning process, knowledge sharing facilitates the flow of information from one individual to another, which in turn generates new knowledge (Feiz et al., 2019). According to Lin and Huang (2020), peers engage in active communication and share both explicit and implicit information. The dissemination of information is an essential component of knowledge management and has a substantial impact on the quality of job performance (Wu et al., 2020). Previous research has shown a positive relationship between the knowledge-sharing capability of an AI information system and the pace at which it is adopted (Arpaci et al., 2020). The capacity of AI technologies to provide a platform for the exchange of information is what motivates individuals to use these tools (Al-Emran & Teo, 2020). As a result, we suggest that journalists will be more likely to continue using AI tools if they think these technologies help them share information with their colleagues. Therefore, we propose:

H6. Knowledge sharing positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.5. Knowledge Acquisition

Knowledge acquisition is defined as “the processes that use existing knowledge and capture new knowledge” (C. P. Lee et al., 2007). Acquiring new information is an essential part of both the process of learning and the management of existing information (Cukurova et al., 2018). The literature has provided evidence that supports the hypothesis that there is a correlation between the capacity of AI tools to aid knowledge acquisition and the adoption of such technologies (Arpaci et al., 2020). According to Al-Emran and Teo (2020), one of the main reasons why people start using AI tools is to obtain more information about the subject. With the help of AI, micro-learning processes are made more efficient and effective, and both the acquisition and retention of knowledge may be improved (Vázquez-Cano et al., 2021). AI systems can make it easier to locate and learn new information from course materials, forum threads, and other sources (Farkash, 2018). As an example, libraries may use AI techniques to improve patron–library engagement and the flow of information for trainees (Sheth et al., 2019). It is thus reasonable to anticipate that the favorable impact that AI tools have on the process of learning new knowledge would inspire journalists to continue making use of AI technologies to acquire new information. Therefore, we propose the following:

H7. Knowledge acquisition positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.6. Knowledge Application

Knowledge application is defined as “the process that enables the individuals to access the knowledge smoothly via the existing efficient storage and retrieval techniques” (Arpaci et al., 2020). Several pieces of research have shown that there is a correlation between the capacity of artificial intelligence tools to assist in the application of information and the adoption of these technologies (Al-Sharafi et al., 2023). According to Al-Emran and Mezhuyev (2019), the knowledge application ability of a technology has a substantial impact on the rate of adoption and utilization. When users are deciding whether or not to utilize AI technologies, one of the most essential factors they take into account is how they will apply their knowledge (Al-Emran & Teo, 2020). It has been discovered that the application of knowledge is a crucial factor in determining the performance of the users and their desire to utilize artificial intelligence technology (Al-Emran & Mezhuyev, 2019). In light of this, if journalists see AI technologies as useful tools that facilitate the application of knowledge in the profession of journalism, then they are more inclined to continue utilizing these advanced tools. Therefore, we propose the following:

H8. Knowledge application positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.7. Perceived Ease of Use

Perceived ease of use refers to how simple a technology appears to be to understand and operate (Davis, 1989). Kasinphila et al. (2023) defined it as the ease with which new technology can be comprehended and utilized. It relates to users’ perceptions of a technology’s advantages over existing alternatives, simplifying decision-making through intuitive interfaces offering various options (Rodgers et al., 2021). Ease of use suggests that AI technologies should require minimal effort, as users are unlikely to adopt systems that are difficult to navigate. AI products perceived as user-friendly lead to higher user satisfaction (Djakasaputra et al., 2020). Conversely, poorly functioning AI technologies result in frustration and dissatisfaction (Alqasa, 2023). Research, particularly within the technology acceptance model (TAM), shows a positive correlation between ease of use and intention to use (W. M. Al-Rahmi et al., 2020; Bhagat et al., 2023). Therefore, if journalists perceive AI tools as user-friendly, they are more likely to integrate and continue using them in journalism. Hence, we propose the following:

H9. Perceived ease of use positively influences journalists’ perceived satisfaction with AI technologies for journalistic purposes.

H10. Perceived ease of use positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.8. Personal Trust

Trust is an essential component of behavioral intention. Trust in AI has strongly shaped the broad use of technological advances to improve journalism (Trang et al., 2024). A number of studies find that consumers’ views and intentions toward the adoption of AI technology are substantially influenced by personal trust (Pham & Nguyet, 2023). Users are more likely to embrace and want to use technology when they trust it, which helps them overcome their fears and doubts about the process. People who have faith in AI will be more careful when deciding which AI approach to adopt, and they will stick with it for the long haul (Stanciu & Rîndaşu, 2021). Studies indicate that AI technologies enhance trust and awareness in decision-making processes (Duan et al., 2019). According to a study conducted by Kim et al. (2008), users’ trust and belief in AI positively impact their inclination to use it. Although other elements, such as performance expectancy and social influence, affect sustainable use, trust is the most important (Stojanović et al., 2023). For this reason, it is reasonable to assume that journalists who trust AI technology will keep using it in the future. Therefore, we propose the following:

H11. Personal trust positively influences the sustainable utilization of AI technologies for journalistic purposes.

3.9. Technology Affinity

Technology affinity refers to an individual’s natural inclination and comfort level with technology (Trang et al., 2024), and it plays a crucial role in the adoption of new technologies. Aldás-Manzano et al. (2009) suggest that while traditional TAM characteristics influence technology adoption, technological affinity can modify these effects. Trautwein et al. (2021) further emphasize that technological affinity shapes attitudes, which subsequently affect usage intention. Perceived usefulness and societal influences also contribute to the acceptance of new technologies (Barzilai et al., 2018). Studies show that individuals with stronger technology affinity are more likely to embrace innovations (Trang et al., 2024). In the context of journalism, technological affinity significantly affects journalists’ intentions to use AI technology (Trang et al., 2024; Pham & Nguyet, 2023). Thus, journalists with a strong affinity for technology are more likely to continue using AI tools in their work. Therefore, we propose the following:

H12. Technology affinity positively influences the sustainable utilization of AI technologies for journalistic purposes.

4. Research Methodology

4.1. Questionnaire Design

A structured questionnaire survey was used to collect primary data, focusing on ECM constructs (expectation confirmation, perceived usefulness, and satisfaction), KM factors (knowledge sharing, knowledge acquisition, and knowledge application), personal trust, ease of use, and technology affinity. This study adhered to ethical guidelines for research involving human participants. All participants were provided with a clear explanation of the study’s purpose, procedures, and the voluntary nature of participation. Informed consent was obtained from each participant before data collection began, and respondents were assured that their participation was completely voluntary, with the option to withdraw at any time without consequence. Confidentiality and anonymity were strictly maintained, and data were stored securely for research purposes only. The study received ethical approval from the University’s Academic Commission. We consulted five experts in journalism and communication to ensure content relevance and then conducted a pilot study with 30 journalists to check for grammatical and clarity issues. Minor revisions were made based on feedback. The questionnaire, available in English and Chinese, is divided into two parts: demographic information (e.g., gender, age, experience) and 41 items measuring the 10 constructs using a seven-point Likert scale (1 = strongly disagree, 7 = strongly agree). Items were adapted from established scales (Bhagat et al., 2023; Trang et al., 2024). Construct items are listed in Appendix A. Since this study utilized self-reported survey data to examine journalists’ perceptions of AI technologies, the potential for social desirability bias exists, as respondents may select responses they believe are acceptable in either the professional or public social sphere. This is especially true for perceived usefulness, satisfaction, and trust. 
To mitigate potential social desirability bias, the researchers guaranteed the anonymity of responses, assured participants that their responses would be kept confidential and used only for academic purposes, and administered the survey online as a self-completed questionnaire, which lessens social pressure on responses. Despite these efforts, some residual social desirability bias may remain. Future research is encouraged to use observational behavioral data (e.g., system logs, digital interactions) to triangulate and contrast self-reports.

4.2. Data Collection and Participants

A cross-sectional online survey was conducted to collect data from journalists across China, with the aim of validating the proposed framework and examining the hypothesized relationships. The target group consisted of journalists using AI for journalistic purposes (Trang et al., 2024). The questionnaire was distributed via Google Forms to various groups on Facebook Messenger, WhatsApp, and WeChat, and journalists were encouraged to participate. Media colleagues were also asked to share the survey link. Participation was voluntary, and responses were kept confidential. To prevent missing data, all questions were mandatory.

Since there is no preexisting sampling frame for this population and no equitable way to choose people from the population at large, a non-probability sampling approach was adopted (Bougie & Sekaran). Consequently, this research employed convenience sampling, whereby individuals were selected according to their accessibility and availability at a certain moment (Creswell & Creswell, 2017). Convenience sampling also saves time and money compared to random sampling (Chowdhury et al., 2019; Kawser et al., 2023). A total of 421 journalists participated in the study. The final sample comprised 396 journalists after duplicate responses were eliminated.
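The screening step that reduced 421 responses to 396 (25 removed) is not described in detail. As a minimal sketch, assuming that a removed reply is one whose full answer vector exactly matches an earlier reply, the filter could look like the following. The function name `drop_identical_responses` and the toy answers are hypothetical and are not the study's data.

```python
from typing import Hashable, Iterable, List, Tuple

Response = Tuple[Hashable, ...]


def drop_identical_responses(responses: Iterable[Response]) -> List[Response]:
    """Keep the first occurrence of each answer vector, preserving order."""
    seen = set()
    unique: List[Response] = []
    for row in responses:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


# Hypothetical toy data: each tuple is one respondent's answers on a 7-point scale.
toy_responses = [
    (7, 6, 5, 6),
    (4, 4, 3, 5),
    (7, 6, 5, 6),  # exact repeat of the first row
    (2, 3, 2, 1),
]

cleaned = drop_identical_responses(toy_responses)
removed = len(toy_responses) - len(cleaned)
print(f"kept {len(cleaned)} of {len(toy_responses)} responses; removed {removed}")
```

Exact matching is a conservative rule; whether the authors also screened on timestamps or other metadata is not stated.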

4.3. Sample Size Determination

To identify the minimum sample size, we used G*Power software, version 3.1.9.4 (Faul et al., 2009). The minimum sample size is determined by considering statistical power and effect size. We used an effect size of 0.05 and a statistical power of 0.95 (Nahar et al., 2023). According to the software, the minimum sample size is 262. With a sample size of 396, the study has an adequate number of journalists for the analysis.
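The exact G*Power settings (test family, significance level, number of predictors) are not reported. As a rough plausibility check rather than a reproduction, the sketch below assumes that the effect size is Cohen's f², that the significance level is 0.05, and that a single predictor is tested, and it uses a large-sample normal approximation instead of G*Power's noncentral F computation. The function name `approx_min_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist


def approx_min_sample_size(f2: float, power: float, alpha: float = 0.05) -> int:
    """Large-sample approximation for a test with one numerator degree of freedom.

    Solves f2 * N = (z_{1 - alpha/2} + z_{power})^2 for N and rounds up.
    Assumptions (not stated in the text): Cohen's f2, two-sided alpha.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    required_noncentrality = (z_alpha + z_power) ** 2
    return math.ceil(required_noncentrality / f2)


# Values reported in the text: effect size 0.05, power 0.95.
n_approx = approx_min_sample_size(f2=0.05, power=0.95)
print(n_approx)
```

Because the approximation ignores the finite denominator degrees of freedom, it tends to land a little below an exact noncentral F calculation, so a result close to the reported 262 would be consistent with these assumed settings without confirming them.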

4.4. Journalists’ Demographic Information

The sample consisted of 396 Chinese journalists, with a higher female participation of 61.1%, compared to 38.9% male participants, reflecting changing gender roles in Chinese journalism. The age distribution showed dominance among early and mid-career professionals, with 31.6% aged 26–30, followed by 21.5% aged 31–40, and 18.4% aged 41–50. Journalists aged 18–25 made up 16.7%, while those aged 51–60 and above 60 represented 9.6% and 2.3%, respectively, highlighting the strong involvement of younger professionals, likely due to their adaptability to AI.

In terms of experience, 28.3% had 1–5 years, 23.5% had 6–10 years, and 9.6% had over 30 years. A significant portion had limited AI exposure: 35.4% had two years of experience, and 33.8% had three years. The main AI applications included content creation (30.3%), content editing (26.8%), text-to-speech (25.3%), and video/image processing (15.4%). These data suggest that while AI adoption is still recent, it is already integral to content-related tasks in Chinese newsrooms, signaling AI’s transformative impact on the industry. Table 1 outlines the demographic data.

Table 1.

Journalists’ information (N = 396).

5. Data Analysis and Results

5.1. Data Analysis

The data were analyzed using three software packages: Excel (version 19), SPSS (version 24), and SmartPLS (version 4), with Partial Least Squares-Structural Equation Modeling (PLS-SEM) employed for evaluating the proposed research framework. PLS-SEM is widely recognized as a key tool in information systems and social sciences (V.-H. Lee et al., 2020; Khan et al., 2018), offering the ability to examine measurement and structural models simultaneously, leading to more accurate estimates (Al-Saedi et al., 2020). Given the exploratory nature of this study, PLS-SEM was preferred over CB-SEM due to its suitability for large numbers of items and model complexity (Al-Sharafi et al., 2023). PLS-SEM can handle data that does not follow a normal distribution (Hair et al., 2019) and is effective with small samples (Roy et al., 2024). A bootstrapping method was used to determine parameter significance by checking the path coefficient (Kashyap & Agrawal, 2020). The analysis followed a two-step approach: first, evaluating the measurement model, followed by the structural model calculation.

5.2. Normality Test

We used an online calculator to run Mardia’s (1970) multivariate normality test and determine whether the data are multivariate normal (Oppong & Agbedra, 2016). Multivariate normality is required for reliable model prediction. The test revealed multivariate non-normality, as shown by skewness (β = 451.1603, p < 0.001) and kurtosis (β = 2516.6074, p < 0.001) (Mardia, 1970). Because PLS-SEM handles non-normal data efficiently (Hair et al., 2019), this result is a further justification for using PLS-SEM in this context.
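The two statistics reported above can be computed directly from raw item scores. The following is a minimal sketch in plain Python, not the online calculator used in this study; the function names `invert` and `mardia_statistics` and the four-point toy data set are illustrative assumptions, and the sketch returns only the statistics, not their p-values.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity: [M | I] becomes [I | M^-1].
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mardia_statistics(data):
    """Return Mardia's multivariate skewness and kurtosis for rows of observations."""
    n, p = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(p)]
    centered = [[row[j] - means[j] for j in range(p)] for row in data]
    # Maximum-likelihood covariance (divide by n), as in Mardia (1970).
    cov = [[sum(r[a] * r[b] for r in centered) / n for b in range(p)]
           for a in range(p)]
    inv = invert(cov)
    # Pre-multiply each centered row by the inverse covariance once.
    weighted = [[sum(inv[a][b] * r[b] for b in range(p)) for a in range(p)]
                for r in centered]

    def d(i, j):
        # Mahalanobis-type cross product between observations i and j.
        return sum(centered[i][a] * weighted[j][a] for a in range(p))

    skewness = sum(d(i, j) ** 3 for i in range(n) for j in range(n)) / n ** 2
    kurtosis = sum(d(i, i) ** 2 for i in range(n)) / n
    return skewness, kurtosis


# Hypothetical toy data: four points placed symmetrically around the origin.
toy = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
skew, kurt = mardia_statistics(toy)
```

Under multivariate normality the skewness statistic is close to zero and the kurtosis statistic is close to p(p + 2) for p variables, so values far from these benchmarks point to non-normal data.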

5.3. Common Method Bias and Multicollinearity

This research assessed multicollinearity among the independent constructs, because collinearity between them affects the path coefficients. Variance inflation factor (VIF) values higher than 3.3 indicate collinearity issues (Hair et al., 2019; Nahar et al., 2023). Since common method variance is a potential issue in self-reported research, Harman’s single-factor test was also run. The maximum variance explained by a single component was 48.46%, below the suggested cut-off of 50%, so the findings did not indicate any problems (Podsakoff et al., 2003). Therefore, neither common method bias nor multicollinearity is a concern in this investigation.
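The two cut-offs in this subsection can be expressed as simple checks. The sketch below assumes the standard definition VIF = 1 / (1 − R²), where R² comes from regressing one predictor on the others; the function names and the example R² values are hypothetical, and only the 3.3 and 50% thresholds and the 48.46% figure come from the text.

```python
VIF_CUTOFF = 3.3          # threshold cited in the text
HARMAN_CUTOFF = 50.0      # percent of variance, threshold cited in the text


def vif(r_squared):
    """Variance inflation factor for a predictor whose regression on the
    remaining predictors yields the given R-squared."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R-squared must lie in [0, 1)")
    return 1.0 / (1.0 - r_squared)


def has_collinearity_issue(r_squared, cutoff=VIF_CUTOFF):
    """True when the implied VIF exceeds the cut-off."""
    return vif(r_squared) > cutoff


def passes_harman(single_factor_variance_pct, cutoff=HARMAN_CUTOFF):
    """True when one factor explains less than the cut-off share of variance."""
    return single_factor_variance_pct < cutoff


# Hypothetical R-squared values for two predictors.
moderate = has_collinearity_issue(0.5)   # VIF = 2.0
severe = has_collinearity_issue(0.8)     # VIF is about 5
harman_ok = passes_harman(48.46)         # share reported in the text
```

A VIF of 3.3 corresponds to an R² of roughly 0.70 between a predictor and the remaining predictors.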

5.4. Measurement Model Assessment

In the first step, we evaluated the measurement model’s reliability and validity by assessing the internal consistency reliability, convergent validity, and discriminant validity of the constructs. To estimate internal consistency reliability, we employed Cronbach’s alpha (α) and composite reliability (CR). For every construct, both the α and CR scores were higher than the recommended limit of 0.70 (Hair et al., 2019), indicating a high degree of reliability among the indicators. The average variance extracted (AVE) and the outer loadings (λ) of the items were used to assess convergent validity. All λ values exceeded the benchmark of 0.70, and all AVE values exceeded the benchmark of 0.50 (Hair et al., 2019). Thus, there are no convergent validity issues. See Table 2.
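The three reliability measures named above follow standard formulas, sketched here in plain Python. This is an illustration under stated assumptions rather than the SmartPLS computation: the loadings and item scores are hypothetical, and the function names are chosen for this example.

```python
from statistics import variance


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized outer loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 over itself plus the summed error variances,
    where each error variance is 1 - loading^2."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1.0 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores holds one list of respondent scores per item."""
    k = len(item_scores)
    totals = [sum(scores) for scores in zip(*item_scores)]
    summed_item_variance = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1.0 - summed_item_variance / variance(totals))


# Hypothetical loadings for a three-item construct.
loadings = [0.80, 0.85, 0.90]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)

# Hypothetical scores: three items answered identically by four respondents.
alpha = cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]])
```

With these hypothetical loadings, both the AVE and the CR clear the 0.50 and 0.70 benchmarks cited above.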

Table 2.

Construct reliability and validity.

Discriminant validity describes the degree to which different constructs are distinct from one another. To evaluate it, we employed the Fornell and Larcker criterion (Fornell & Larcker, 1981) and the heterotrait-monotrait ratio (HTMT). HTMT values should be less than 0.85 (Kline, 2023). All values are below this threshold; hence, the HTMT condition was satisfied. See Table 3.
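Both discriminant validity checks can be sketched in a few lines. The example below assumes the usual definitions: the Fornell and Larcker criterion requires the square root of each construct’s AVE to exceed its correlations with other constructs, and HTMT divides the mean between-construct item correlation by the geometric mean of the mean within-construct item correlations. All item names, correlations, and AVE values are hypothetical.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean


def htmt(item_corr, items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs.
    item_corr maps frozenset({item_x, item_y}) to their correlation."""
    def r(x, y):
        return abs(item_corr[frozenset((x, y))])

    hetero = mean(r(x, y) for x, y in product(items_a, items_b))
    mono_a = mean(r(x, y) for x, y in combinations(items_a, 2))
    mono_b = mean(r(x, y) for x, y in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


def fornell_larcker_ok(ave, construct_corr):
    """True when sqrt(AVE) of both constructs in every pair exceeds
    the absolute correlation between them."""
    return all(
        abs(r) < min(sqrt(ave[a]), sqrt(ave[b]))
        for (a, b), r in construct_corr.items()
    )


# Hypothetical item correlations for two constructs with two items each.
item_corr = {
    frozenset(("a1", "a2")): 0.8,
    frozenset(("b1", "b2")): 0.8,
    frozenset(("a1", "b1")): 0.4,
    frozenset(("a1", "b2")): 0.4,
    frozenset(("a2", "b1")): 0.4,
    frozenset(("a2", "b2")): 0.4,
}
ratio = htmt(item_corr, ["a1", "a2"], ["b1", "b2"])
htmt_satisfied = ratio < 0.85  # cut-off cited in the text

# Hypothetical AVE values and construct correlation.
fl_satisfied = fornell_larcker_ok({"A": 0.70, "B": 0.65}, {("A", "B"): 0.60})
```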

Table 3.

Discriminant validity.

5.5. Structural Model Assessment

After validating the measurement model, we proceeded to the structural model assessment. We examined the significance of the path coefficients (β), the coefficient of determination (R²), predictive relevance (Q²), and effect sizes (f²) to evaluate the structural model. We used bootstrapping with 10,000 resamples (Hair et al., 2019).
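The idea behind the bootstrap significance test can be illustrated with a simplified stand-in. The sketch below resamples respondents with replacement, re-estimates a coefficient on each resample, and divides the original estimate by the standard deviation of the resampled estimates to obtain a t-value. It uses an ordinary least-squares slope on synthetic data as a proxy for a path coefficient; it is not the PLS algorithm or the SmartPLS procedure, and all names and data are assumptions.

```python
import random
from statistics import stdev


def slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def bootstrap_t(x, y, n_resamples=10_000, seed=0):
    """Return (estimate, bootstrap standard error, t-value) for the slope."""
    rng = random.Random(seed)
    n = len(x)
    estimate = slope(x, y)
    resampled = []
    for _ in range(n_resamples):
        # Draw n respondents with replacement and re-estimate the slope.
        idx = [rng.randrange(n) for _ in range(n)]
        resampled.append(slope([x[i] for i in idx], [y[i] for i in idx]))
    std_error = stdev(resampled)
    return estimate, std_error, estimate / std_error


# Synthetic data with a true slope of 0.6 (hypothetical, for illustration only).
data_rng = random.Random(1)
x = [data_rng.gauss(0, 1) for _ in range(100)]
y = [0.6 * a + data_rng.gauss(0, 0.5) for a in x]

# A small number of resamples keeps the example fast; the study used 10,000.
estimate, std_error, t_value = bootstrap_t(x, y, n_resamples=500)
```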

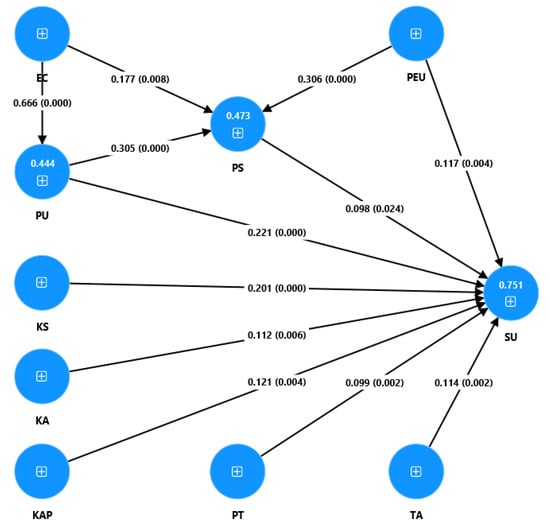

According to the findings shown in Table 4, each of the proposed hypotheses was confirmed. The results revealed that EC is significantly associated with PU (β = 0.666, p < 0.001), supporting hypothesis H1. Similarly, PS was significantly predicted by EC (β = 0.177, p < 0.01), PU (β = 0.305, p < 0.001), and PEU (β = 0.306, p < 0.001), supporting hypotheses H2, H3, and H9. Finally, SU was significantly and positively affected by PU (β = 0.221, p < 0.001), PS (β = 0.098, p < 0.05), KS (β = 0.201, p < 0.001), KA (β = 0.112, p < 0.01), KAP (β = 0.121, p < 0.05), PEU (β = 0.117, p < 0.01), PT (β = 0.099, p < 0.01), and TA (β = 0.114, p < 0.05), supporting hypotheses H4, H5, H6, H7, H8, H10, H11, and H12. See Figure 2. Of all these independent constructs, PU has the strongest effect on SU (β = 0.221, p < 0.001).

Table 4.

Hypothesis validity.

Figure 2.

Results of the structural model. Note: EC = Expectation confirmation, PU = perceived usefulness, PS = perceived satisfaction, PEU = perceived ease of use, KS = knowledge sharing, KA = knowledge acquisition, KAP = knowledge application, PT = personal trust, TA = technology affinity, and SU = sustainable use.

Again, according to Table 2 (outer model) and Table 4 (inner model), all of the VIF values are within the acceptable range. The coefficients of determination (R²) show that the model explains a substantial share of the variance in the three endogenous constructs: 44.4%, 47.3%, and 75.1% for perceived usefulness, perceived satisfaction, and sustainable use, respectively (see Figure 2). Therefore, the hypothesized model is statistically meaningful. We then checked the framework’s predictive relevance (Q²) (Geisser, 1974). A Q² value above zero indicates predictive relevance. In this study, we employed the blindfolding method with an omission distance of seven. The Q² values were 0.314, 0.341, and 0.613 for perceived usefulness, perceived satisfaction, and sustainable use, respectively. Therefore, the model has high predictive relevance.
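The effect size and predictive relevance measures follow simple formulas, sketched below under the usual definitions: f² = (R² included − R² excluded) / (1 − R² included), and Q² = 1 − SSE / SSO, where SSE is the sum of squared prediction errors for the omitted data points and SSO is the sum of squared observations. The R² of 0.751 is the value reported for sustainable use; the R² with a predictor excluded and the SSE and SSO figures are hypothetical.

```python
def f_squared(r2_included, r2_excluded):
    """Effect size of one predictor: the drop in R-squared when the predictor
    is removed, relative to the unexplained variance of the full model."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def q_squared(sse, sso):
    """Stone-Geisser Q-squared from blindfolding sums of squares."""
    return 1.0 - sse / sso


# R-squared for sustainable use as reported in the text; the value with one
# predictor removed (0.730) is a hypothetical number for illustration.
effect = f_squared(0.751, 0.730)

# Hypothetical blindfolding sums of squares.
relevance = q_squared(sse=387.0, sso=1000.0)
has_predictive_relevance = relevance > 0
```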

6. Discussion and Implications

This study explores the sustainable use of AI technologies in journalism by integrating constructs from the expectation confirmation model (ECM) and knowledge management (KM), along with factors such as trust, technological affinity, and ease of use. Analysis of survey data from journalists using structural equation modeling revealed several key findings.

6.1. Key Drivers of AI Tool Adoption in Journalism

Expectation confirmation positively impacted the perceived utility of AI tools, aligning with previous research (Nguyen et al., 2021). When AI technologies meet journalists’ expectations, they are considered more beneficial, enhancing satisfaction. This satisfaction further strengthens their intention to use AI tools sustainably (Eren, 2021). Additionally, the perceived utility of AI tools positively influences their sustained use, supporting the notion that effective tools enhance performance (Jumaan et al., 2020). Satisfaction with AI tools was also linked to their continued use in journalistic work, reinforcing findings that happiness fosters technology adoption (X. Li et al., 2021). Media organizations should ensure that the functions of AI tools are aligned with journalists’ expectations and workflows to increase perceived utility. This alignment could take the form of participatory design, iterative feedback loops, or customized training that adapts to the ongoing needs of newsroom professionals. Moreover, since user satisfaction is essential for continued use, all parties should focus on improving the usability and contextual appropriateness of AI tools. By understanding how expectation confirmation, perceived utility, and satisfaction interact, media organizations can design more thoughtful frameworks for integrating AI seamlessly and sustainably into journalistic practice.

6.2. Enhancing Sustainable AI Use in Newsrooms

Knowledge sharing and knowledge acquisition were found to significantly contribute to the sustainable use of AI in journalism. AI technologies facilitate the flow of information, allowing journalists to stay updated without investing extra time (Al-Emran & Teo, 2020). Knowledge application also plays a critical role, as AI helps journalists access and apply relevant information, promoting long-term usage (Al-Emran & Teo, 2020). Media organizations must embed AI systems into editorial processes via real-time intelligence dissemination, dynamic content curation, and the automation of routine tasks, thus freeing cognitive bandwidth for higher-order reporting. Equally important is empowering journalists through targeted training that builds their confidence in using AI-derived insights. These approaches would not only deepen journalists’ epistemic relationship with AI but also cultivate a robust, knowledge-based journalism ecology that encourages long-term technological integration.

6.3. Foundations for Long-Term AI Integration in Journalism

Ease of use was a major factor influencing both satisfaction and sustainable use, with journalists who found AI tools intuitive and efficient showing higher satisfaction and a greater likelihood of continued use (Bhagat et al., 2023; Chen, 2022). Trust and technological affinity were significant predictors of sustainable AI use. Trust in AI reduced journalists’ concerns about risks and uncertainties, enhancing their willingness to use the technology long-term (Pham & Nguyet, 2023). Technological affinity also played a crucial role, with journalists more likely to embrace AI if they have a strong affinity for technology (Trautwein et al., 2021). Journalists comfortable with technology are more likely to sustain the use of AI tools in their daily journalistic activities.

Media organizations must ensure user satisfaction while building long-term commitment among their users. Thus, as media organizations develop AI systems, they should emphasize usability and efficiency, particularly within journalists’ current workflows. Usability is paramount for overcoming resistance to adoption and use, and for normalizing the acceptance of AI technologies among user groups, particularly journalists. Trust is vital once journalists are introduced to AI, as it reduces concerns about the risks and uncertainties of the technology. Organizations can foster trust through algorithmic transparency, clear data privacy policies, and reliable tools. Given the role of technology affinity, organizations should offer training tailored to users’ differing levels of experience with technology. If AI adoption matches journalists’ technology affinity, organizations can capitalize on that affinity for deeper and more sustained integration into journalists’ practices.

7. Limitations and Future Directions

7.1. Limitations

In discussing the limitations of this study, it is important to consider methodological limitations, particularly those related to the cross-sectional design and self-report data. First, the cross-sectional nature of the research precludes causal inference and comparisons over time. While the research offers useful insights into the factors driving AI adoption at a single point in time, it does not account for the dynamic changes in journalists’ perceptions and behaviors that may occur as they engage with AI tools. Longitudinal studies would better capture shifts in attitudes, satisfaction, and usage, producing a more detailed understanding of how those shifts develop over time. Additionally, self-report data introduce potential response bias, including social desirability bias, that is, a tendency to give answers that align with perceived social or professional norms. These biases were mitigated through anonymous surveys, but self-reports can still skew findings towards more socially or professionally acceptable conclusions. This is especially true for highly subjective constructs such as perceived usefulness and satisfaction with AI tools, and future studies would benefit from incorporating objective behavioral tracking data to obtain a more accurate representation of the actual use of AI tools and their impact on journalism practice. Finally, a more comprehensive sample across regions or professional scopes would enhance the generalizability of the findings, as the current sample consists only of Chinese journalists, whose experiences and perceptions may be specific to China and may differ widely across geopolitical and cultural contexts.

Future studies should also consider the potentially negative consequences of complexity and perception of risk for AI tools in journalism. For example, if an AI system is too complex or cognitively demanding to use, users may be left with a higher perceived burden; as a result, users may feel less satisfied with AI use, ultimately affecting the intent to continue using AI. Additionally, journalists’ higher perception of risk, or even ethical issues, especially regarding algorithmic transparency, data privacy, or editorial responsibility, may undermine trust in AI technologies, and may create less intent to continue to use AI over time. Similarly, lower trust levels, lower affinity or connection with technology, or high perceived costs to knowledge sharing may also diminish the perceived potential of AI technology and deter sustainable use of AI technologies in newsroom practices. These considerations imply that future studies should consider utilizing more complicated constructs, including moderating variables, to promote a more nuanced understanding of human–AI interaction behavior in the professional context of journalism.

7.2. Future Direction

Future studies should address the temporal and methodological limitations of this study through longitudinal designs that capture journalists’ changing relationships with AI over time. Such designs would allow changes in attitudes, usage behaviors, and perceived usefulness to be captured in richer detail, contributing to a more nuanced understanding of sustained adoption. Future work should also include objective measures, such as behavioral tracking or system usage logs, to address potential self-report bias. It is also important to expand the sample beyond a single geopolitical and cultural context, since the development and use of AI have been, and will continue to be, shaped by region-specific rules, regulatory practices, and sociocultural circumstances. Future work should also acknowledge the role of AI literacy and ethical issues, particularly around algorithmic bias and ethics in journalism. Together, these research pathways would contribute to a more comprehensive and globally minded framework for responsible and sustainable AI integration into journalism.

8. Conclusions

This research study examined the factors influencing the sustainable use of artificial intelligence (AI) technologies in journalism by integrating the expectation confirmation model (ECM) and knowledge management (KM) factors. The study utilized data from an online survey of 396 Chinese journalists who utilize AI for journalistic purposes. The findings revealed that expectation confirmation significantly predicts perceived usefulness of AI technologies. Satisfaction with AI technologies was found to be positively correlated with expectation confirmation, perceived usefulness, and ease of use. Moreover, sustainable use of AI was significantly impacted by perceived usefulness, satisfaction, knowledge sharing, knowledge acquisition, knowledge application, ease of use, personal trust, and technological affinity. Among these factors, perceived usefulness had the most substantial influence on sustainable use. The study contributes to the theoretical understanding of AI adoption in journalism by highlighting the importance of KM factors and additional constructs such as ease of use, trust, and technology affinity. Practical implications suggest that AI developers and service providers should focus on enhancing the ease of use, reliability, and perceived usefulness of AI tools to promote their sustainable adoption in journalism. This research offers significant practical and theoretical contributions to the field of journalism by providing insights into the factors influencing the sustainable use of AI technologies. It underscores the importance for AI creators and developers to carefully consider usability, reliability, and rationality when designing AI tools for journalists, ensuring a seamless experience that encourages continued use. Emphasizing the perceived usefulness of AI services, the study advises service providers to prioritize attributes that grant journalists access to credible information, enhancing their work efficiency. 
Furthermore, the research highlights that journalists prefer straightforward and relevant tools, while also acknowledging the potential benefits of more intricate technologies with increased cognitive dedication. To promote the sustainable use of AI tools, service providers should focus on perceived utility, expectation confirmation, and knowledge management, ensuring alignment with the socioeconomic backgrounds of journalists. Overall, this study provides a roadmap for the development and promotion of AI tools in journalism, advising service providers to create user-friendly products that demonstrate enhanced performance.

Author Contributions

Conceptualization, F.L. and H.W.; methodology, F.L.; validation, H.W.; formal analysis, F.L.; investigation, F.L. and H.W.; resources, F.L.; data curation, H.W.; writing—original draft preparation, F.L.; writing—review and editing, F.L.; visualization, F.L.; supervision, H.W.; project administration, F.L.; funding acquisition, F.L. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the academic and ethical standards required by the Academic Committee of the Communication University of China. According to institutional regulations, no specific ethical approval was necessary for this type of research.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are not openly available in order to protect the anonymity of the respondents. However, anonymized data sets are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

| Constructs | References |
| --- | --- |
| Technology affinity (TA) | Trang et al. (2024) |
| 1. I am very good at using AI technologies. | |
| 2. I like to experiment with new technologies. | |
| 3. I don’t regret time to get used to new technologies. | |
| 4. I didn’t take too much time to master new technologies. | |
| Personal trust (PT) | Trang et al. (2024) |
| 1. I believe in the feasibility of applying artificial intelligence in journalism. | |
| 2. I believe in the potential of artificial intelligence in journalism. | |
| 3. I believe I can use artificial intelligence in a pure way. | |
| 4. I believe that artificial intelligence will make my work more efficient. | |
| Ease of use (EU) | Bhagat et al. (2023) |
| 1. AI-enabled journalism is much easier and simpler. | |
| 2. AI-enabled journalism increases efficiency. | |
| 3. AI-enabled journalism provides the best alternatives to choose from. | |
| Expectation confirmation (EC) | Al-Sharafi et al. (2023) |
| 1. My experience with using AI was better than what I expected. | |
| 2. The service level provided by AI technologies was better than what I expected. | |
| 3. The benefits of using AI were better than what we expected. | |
| 4. Overall, most of my expectations from using AI were confirmed. | |
| Perceived usefulness (PU) | Khan et al. (2018) |
| 1. AI technologies are useful for my journalism. | |
| 2. AI technologies are effective for journalism. | |
| 3. AI technologies are beneficial for doing journalism. | |
| 4. AI technologies are quick and convenient for getting information. | |
| 5. AI technologies are time convenient for journalism. | |
| Perceived satisfaction (PS) | Al-Sharafi et al. (2023) |
| 1. My experience of using AI was very satisfying. | |
| 2. My experience of using AI was very pleasing. | |
| 3. My experience of using AI was very contenting. | |
| 4. My experience of using AI was very delightful. | |
| Knowledge sharing (KS) | Al-Sharafi et al. (2023) |
| 1. AI application allows me to share knowledge with my co-workers. | |
| 2. AI application supports various types of discussions. | |
| 3. AI application facilitates the process of knowledge sharing at any time anywhere settings. | |
| 4. AI application enables me to share different types of resources with my co-workers. | |
| 5. AI application facilitates the collaborative learning process. | |
| Knowledge acquisition (KA) | Al-Sharafi et al. (2023) |
| 1. AI application facilitates the process of acquiring knowledge from the course material. | |
| 2. AI application facilitates the process of acquiring knowledge through discussions. | |
| 3. AI application allows me to generate a new knowledge based on my existing knowledge. | |
| 4. AI application enables me to acquire the knowledge through various resources. | |
| 5. AI application assists me to acquire the knowledge that suits my needs. | |
| Knowledge application (KAP) | Al-Sharafi et al. (2023) |
| 1. AI application provides me with instant access to various types of knowledge. | |
| 2. AI application enables me to apply the knowledge in performing the learning activities. | |
| 3. AI application allows me to integrate different types of knowledge. | |
| 4. AI application can help us for better managing the course materials within the journalism industry. | |
| Sustainable use (SU) | Al-Sharafi et al. (2023) |
| 1. I intend to continue using AI rather than discontinue its use. | |
| 2. I intend to continue using AI than other alternative means. | |
| 3. If I could, I would like to sustain my use of AI technologies. | |

References

- Al-Emran, M., & Mezhuyev, V. (2019, October). Examining the effect of knowledge management factors on mobile learning adoption through the use of importance-performance map analysis (IPMA). In International conference on advanced intelligent systems and informatics (pp. 449–458). Springer International Publishing. [Google Scholar] [CrossRef]

- Al-Emran, M., & Teo, T. (2020). Do knowledge acquisition and knowledge sharing really affect e-learning adoption? An empirical study. Education and Information Technologies, 25(3), 1983–1998. [Google Scholar] [CrossRef]

- Aldás-Manzano, J., Ruiz-Mafé, C., & Sanz-Blas, S. (2009). Exploring individual personality factors as drivers of M-shopping acceptance. Industrial Management & Data Systems, 109(6), 739–757. [Google Scholar] [CrossRef]

- Alqasa, K. M. A. (2023). Impact of artificial intelligence-based marketing on banking customer satisfaction: Examining moderating role of ease of use and mediating role of brand image. Transnational Marketing Journal, 11(1), 167–180. [Google Scholar]

- Al-Rahmi, A. M., Shamsuddin, A., Alturki, U., Aldraiweesh, A., Yusof, F. M., Al-Rahmi, W. M., & Aljeraiwi, A. A. (2021). The influence of information system success and technology acceptance model on social media factors in education. Sustainability, 13(14), 7770. [Google Scholar] [CrossRef]

- Al-Rahmi, W. M., Alzahrani, A. I., Yahaya, N., Alalwan, N., & Kamin, Y. B. (2020). Digital communication: Information and communication technology (ICT) usage for education sustainability. Sustainability, 12(12), 5052. [Google Scholar] [CrossRef]

- Al-Saedi, K., Al-Emran, M., Ramayah, T., & Abusham, E. (2020). Developing a general extended UTAUT model for M-payment adoption. Technology in Society, 62, 101293. [Google Scholar] [CrossRef]

- Al-Sharafi, M. A., Al-Emran, M., Iranmanesh, M., Al-Qaysi, N., Iahad, N. A., & Arpaci, I. (2023). Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interactive Learning Environments, 31(10), 7491–7510. [Google Scholar] [CrossRef]

- Ambalov, I. A. (2021). An investigation of technology trust and habit in IT use continuance: A study of a social network. Journal of Systems and Information Technology, 23(1), 53–81. [Google Scholar] [CrossRef]

- Arpaci, I., Al-Emran, M., & Al-Sharafi, M. A. (2020). The impact of knowledge management practices on the acceptance of Massive Open Online Courses (MOOCs) by engineering students: A cross-cultural comparison. Telematics and Informatics, 54, 101468. [Google Scholar] [CrossRef]

- Ashfaq, M., Yun, J., Yu, S., & Loureiro, S. M. C. (2020). I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telematics and Informatics, 54, 101473. [Google Scholar] [CrossRef]

- Barredo Ibáñez, D., Jamil, S., & de la Garza Montemayor, D. J. (2023). Disinformation and artificial intelligence: The case of online journalism in China. Estudios Sobre el Mensaje Periodistico, 29(4), 761–770. [Google Scholar] [CrossRef]

- Barzilai, O., Voloch, N., Hasgall, A., Steiner, O. L., & Ahituv, N. (2018). Traffic control in a smart intersection by an algorithm with social priorities. Contemporary Engineering Sciences, 11(31), 1499–1511. [Google Scholar] [CrossRef]

- Bhagat, R., Chauhan, V., & Bhagat, P. (2023). Investigating the impact of artificial intelligence on consumer’s purchase intention in e-retailing. Foresight, 25(2), 249–263. [Google Scholar] [CrossRef]

- Bhattacherjee, A. (2001). Understanding information systems continuance: An expectation-confirmation model. MIS Quarterly, 25, 351–370. [Google Scholar] [CrossRef]

- Broussard, M., Diakopoulos, N., Guzman, A. L., Abebe, R., Dupagne, M., & Chuan, C. H. (2019). Artificial intelligence and journalism. Journalism & Mass Communication Quarterly, 96(3), 673–695. [Google Scholar] [CrossRef]

- Calvo-Rubio, L. M., & Ufarte-Ruiz, M. J. (2021). Artificial intelligence and journalism: Systematic review of scientific production in Web of Science and Scopus (2008–2019). Communication & Society, 34, 159–176. [Google Scholar] [CrossRef]

- Campolo, A., & Crawford, K. (2020). Enchanted determinism: Power without responsibility in artificial intelligence. Engaging Science, Technology, and Society, 6, 1–19. [Google Scholar] [CrossRef]

- Canavilhas, J. (2022). Artificial intelligence and journalism: Current situation and expectations in the Portuguese sports media. Journalism and Media, 3(3), 510–520. [Google Scholar] [CrossRef]

- Chan-Olmsted, S. M. (2019). A review of artificial intelligence adoptions in the media industry. International Journal on Media Management, 21(3–4), 193–215. [Google Scholar] [CrossRef]

- Chen, J. (2022). Adoption of M-learning apps: A sequential mediation analysis and the moderating role of personal innovativeness in information technology. Computers in Human Behavior Reports, 8, 100237. [Google Scholar] [CrossRef]

- Chiu, W., Cho, H., & Chi, C. G. (2021). Consumers’ continuance intention to use fitness and health apps: An integration of the expectation–confirmation model and investment model. Information Technology & People, 34(3), 978–998. [Google Scholar] [CrossRef]

- Chowdhury, S. H., Roy, S. K., Arafin, M., & Siddiquee, S. (2019). Green HR practices and its impact on employee work satisfaction-a case study on IBBL, Bangladesh. International Journal of Research and Innovation in Social Science, Delhi, 3(3), 129–138. [Google Scholar] [CrossRef]

- Creswell, J. W., & Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. Sage Publications. [Google Scholar] [CrossRef]

- Cui, D., & Wu, F. (2021). The influence of media use on public perceptions of artificial intelligence in China: Evidence from an online survey. Information Development, 37(1), 45–57. [Google Scholar] [CrossRef]

- Cukurova, M., Bennett, J., & Abrahams, I. (2018). Students’ knowledge acquisition and ability to apply knowledge into different science contexts in two different independent learning settings. Research in Science & Technological Education, 36(1), 17–34. [Google Scholar] [CrossRef]

- Dai, H. M., Teo, T., Rappa, N. A., & Huang, F. (2020). Explaining Chinese university students’ continuance learning intention in the MOOC setting: A modified expectation confirmation model perspective. Computers & Education, 150, 103850. [Google Scholar] [CrossRef]

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13, 319–340. [Google Scholar] [CrossRef]

- de-Lima-Santos, M. F., & Ceron, W. (2021). Artificial intelligence in news media: Current perceptions and future outlook. Journalism and Media, 3(1), 13–26. [Google Scholar] [CrossRef]

- de-Lima-Santos, M. F., & Mesquita, L. (2021). Data journalism beyond technological determinism. Journalism Studies, 22(11), 1416–1435. [Google Scholar] [CrossRef]

- Diakopoulos, N., & Koliska, M. (2017). Algorithmic transparency in the news media. Digital Journalism, 5(7), 809–828. [Google Scholar] [CrossRef]

- Djakasaputra, A., Pramono, R., & Hulu, E. (2020). Brand image, perceived quality, ease of use, trust, price, service quality on customer satisfaction and purchase intention of Blibli website with digital technology as dummy variable in the use of eviews. Journal of Critical Reviews, 7(11), 3987–4000. [Google Scholar] [CrossRef]

- Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2019). Artificial intelligence for decision making in the era of Big Data–evolution, challenges and research agenda. International Journal of Information Management, 48, 63–71. [Google Scholar] [CrossRef]

- Eren, B. A. (2021). Determinants of customer satisfaction in chatbot use: Evidence from a banking application in Turkey. International Journal of Bank Marketing, 39(2), 294–311.

- Farkash, Z. (2018). Higher education chatbot: Chatbots are the future of higher education. Chatbots Life. Available online: https://blog.chatbotslife.com/higher-education-chatbot-chatbots-are-the-future-of-higher-education-51f151e93b02 (accessed on 23 April 2025).

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160.

- Feiz, D., Dehghani Soltani, M., & Farsizadeh, H. (2019). The effect of knowledge sharing on the psychological empowerment in higher education mediated by organizational memory. Studies in Higher Education, 44(1), 3–19.

- Forja-Pena, T., García-Orosa, B., & López-García, X. (2024). The ethical revolution: Challenges and reflections in the face of the integration of artificial intelligence in digital journalism. Communication & Society, 37, 237–254.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Foroughi, B., Iranmanesh, M., & Hyun, S. S. (2019). Understanding the determinants of mobile banking continuance usage intention. Journal of Enterprise Information Management, 32(6), 1015–1033.

- Fubini, A. (2022). New powers, new responsibilities. A global survey of journalism and artificial intelligence. Problemi Dell’informazione, 47(2), 297–301.

- García-Sánchez, E., García-Morales, V. J., & Bolívar-Ramos, M. T. (2017). The influence of top management support for ICTs on organisational performance through knowledge acquisition, transfer, and utilisation. Review of Managerial Science, 11, 19–51.

- Geisser, S. (1974). A predictive approach to the random effect model. Biometrika, 61(1), 101–107.

- Goni, A., & Tabassum, M. (2020). Artificial intelligence (AI) in journalism: Is Bangladesh ready for it? A study on journalism students in Bangladesh. Athens Journal of Mass Media and Communications, 6(4), 209–228.

- Gupta, A., Yousaf, A., & Mishra, A. (2020). How pre-adoption expectancies shape post-adoption continuance intentions: An extended expectation-confirmation model. International Journal of Information Management, 52, 102094.

- Guzman, A. L., & Lewis, S. C. (2020). Artificial intelligence and communication: A human–machine communication research agenda. New Media & Society, 22(1), 70–86.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24.

- Islam, S., Hossain, M. S., & Roy, S. K. (2021). Performance evaluation using CAMELS model: A comparative study on private commercial banks in Bangladesh. International Journal for Asian Contemporary Research, 1(4), 170–176.

- Jumaan, I. A., Hashim, N. H., & Al-Ghazali, B. M. (2020). The role of cognitive absorption in predicting mobile internet users’ continuance intention: An extension of the expectation-confirmation model. Technology in Society, 63, 101355.

- Kashyap, A., & Agrawal, R. (2020). Scale development and modeling of intellectual property creation capability in higher education. Journal of Intellectual Capital, 21(1), 115–138.

- Kasinphila, P., Dowpiset, K., & Nuangjamnong, C. (2023). Influence of web design, usefulness, ease of use, and enjoyment on beauty and cosmetics online purchase intention towards a popular brand in Thailand. Research Review, 5(12).

- Kawser, M. S. A. A., Roy, S. K., & Uddin, M. R. (2023). Investigating the influencing factors of study retention for CSE undergraduate students. International Journal of Emerging Technologies and Innovative Research, 10(5), i648–i657.

- Khan, I. U., Hameed, Z., Yu, Y., Islam, T., Sheikh, Z., & Khan, S. U. (2018). Predicting the acceptance of MOOCs in a developing country: Application of task-technology fit model, social motivation, and self-determination theory. Telematics and Informatics, 35(4), 964–978.

- Kim, D. J., Ferrin, D. L., & Rao, H. R. (2008). A trust-based consumer decision-making model in electronic commerce: The role of trust, perceived risk, and their antecedents. Decision Support Systems, 44(2), 544–564.

- Kline, R. B. (2023). Principles and practice of structural equation modeling. Guilford Publications.

- Kothari, A., & Cruikshank, S. A. (2022). Artificial intelligence and journalism: An agenda for journalism research in Africa. African Journalism Studies, 43(1), 17–33.

- Kuai, J., Ferrer-Conill, R., & Karlsson, M. (2022). AI ≥ journalism: How the Chinese copyright law protects tech giants’ AI innovations and disrupts the journalistic institution. Digital Journalism, 10(10), 1893–1912.

- Lee, C. C. (2003). The global and the national of the Chinese media. In Chinese media, global contexts (pp. 1–31). Routledge.

- Lee, C. P., Lee, G. G., & Lin, H. F. (2007). The role of organizational capabilities in successful e-business implementation. Business Process Management Journal, 13(5), 677–693.

- Lee, V.-H., Hew, J.-J., Leong, L.-Y., Tan, G. W.-H., & Ooi, K.-B. (2020). Wearable payment: A deep learning-based dual-stage SEM-ANN analysis. Expert Systems with Applications, 157, 113477.

- Li, C. Y., & Fang, Y. H. (2019). Predicting continuance intention toward mobile branded apps through satisfaction and attachment. Telematics and Informatics, 43, 101248.

- Li, X., Wang, S., Xue, H., Zhao, X., & Huang, J. (2021). Understanding consumers’ continuance intention to use AI-powered services. International Journal of Information Management, 58, 102366.

- Lin, C. Y., & Huang, C. K. (2020). Understanding the antecedents of knowledge sharing behaviour and its relationship to team effectiveness and individual learning. Australasian Journal of Educational Technology, 36(2), 89–104.

- Lucero, K. (2019). Artificial intelligence regulation and China’s future. Columbia Journal of Asian Law, 33, 94.

- Mandari, H., & Koloseni, D. (2022). Examining the antecedents of continuance usage of mobile government services in Tanzania. International Journal of Public Administration, 45(12), 917–929.

- Mardia, K. V. (1970). Measures of multivariate skewness and kurtosis with applications. Biometrika, 57(3), 519–530.

- Nahar, S., Akter, M., Roy, S. K., & Alim, M. A. (2023). Determining the shortest distance using fuzzy triangular method. Journal for New Zealand Herpetology BioGecko, 12(03), 2188–2194.

- Nguyen, D. M., Chiu, Y. T. H., & Le, H. D. (2021). Determinants of continuance intention towards banks’ chatbot services in Vietnam: A necessity for sustainable development. Sustainability, 13(14), 7625.

- Noain Sánchez, A. (2022). Addressing the impact of artificial intelligence on journalism: The perception of experts, journalists and academics. Communication & Society, 35(3), 105–121.

- Oppong, F. B., & Agbedra, S. Y. (2016). Assessing univariate and multivariate normality: A guide for non-statisticians. Mathematical Theory and Modeling, 6(2), 26–33.

- Parratt-Fernández, S., Mayoral-Sánchez, J., & Mera-Fernández, M. (2021). The application of artificial intelligence to journalism: An analysis of academic production. Profesional de la información, 30(3).

- Pavlik, J. V. (2023). Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education. Journalism & Mass Communication Educator, 78(1), 84–93.

- Pereira, R., & Tam, C. (2021). Impact of enjoyment on the usage continuance intention of video-on-demand services. Information & Management, 58(7), 103501.

- Pérez, J. Q., Daradoumis, T., & Puig, J. M. M. (2020). Rediscovering the use of chatbots in education: A systematic literature review. Computer Applications in Engineering Education, 28(6), 1549–1565.

- Pham, C. T., & Nguyet, T. T. T. (2023). Determinants of blockchain adoption in news media platforms: A perspective from the Vietnamese press industry. Heliyon, 9(1), e12747.

- Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903.

- Rahi, S., Khan, M. M., & Alghizzawi, M. (2021). Extension of technology continuance theory (TCT) with task technology fit (TTF) in the context of Internet banking user continuance intention. International Journal of Quality & Reliability Management, 38(4), 986–1004.

- Rodgers, W., Yeung, F., Odindo, C., & Degbey, W. Y. (2021). Artificial intelligence-driven music biometrics influencing customers’ retail buying behavior. Journal of Business Research, 126, 401–414.

- Romanova, T., Stoyan, Y., Pankratov, A., Litvinchev, I., Avramov, K., Chernobryvko, M., Yanchevskyi, I., Mozgova, I., & Bennell, J. (2021). Optimal layout of ellipses and its application for additive manufacturing. International Journal of Production Research, 59(2), 560–575.

- Roy, S. K., Musfika, M., & Habiba, U. (2024). Moderated mediating effect on undergraduates’ mobile social media addiction: A three-stage analytical approach. Journal of Technology in Behavioral Science, 1–20.

- Saeidnia, H. R., Hosseini, E., Lund, B., Tehrani, M. A., Zaker, S., & Molaei, S. (2025). Artificial intelligence in the battle against disinformation and misinformation: A systematic review of challenges and approaches. Knowledge and Information Systems, 104, 9–17.

- Sharma, H., & Bhardwaj, S. K. (2024). Ethical explorations: AI’s role in shaping the journalism ecosystem across Asia. New Global Studies, 18(2), 201–209.

- Sheth, A., Yip, H. Y., Iyengar, A., & Tepper, P. (2019). Cognitive services and intelligent chatbots: Current perspectives and special issue introduction. IEEE Internet Computing, 23(2), 6–12.

- Shi, Y., & Lin, S. (2024). How generative AI is transforming journalism: Development, application and ethics. Journalism and Media, 5, 582–594.

- Shin, D., Chotiyaputta, V., & Zaid, B. (2022). The effects of cultural dimensions on algorithmic news: How do cultural value orientations affect how people perceive algorithms? Computers in Human Behavior, 126, 107007.

- Stanciu, V., & Rîndaşu, S.-M. (2021). Artificial intelligence in retail: Benefits and risks associated with mobile shopping applications. Amfiteatru Economic, 23(56), 46–64.

- Stojanović, M., Radenković, M., Popović, S., Mitrović, S., & Bogdanović, Z. (2023). A readiness assessment framework for the adoption of 5G based smart-living services. Information Systems and e-Business Management, 21(2), 389–413.

- Stray, J. (2021). Making artificial intelligence work for investigative journalism. In Algorithms, automation, and news (pp. 97–118). Routledge.

- Sun, M., Hu, W., & Wu, Y. (2024). Public perceptions and attitudes towards the application of artificial intelligence in journalism: From a China-based survey. Journalism Practice, 18(3), 548–570.

- Trang, T. T. N., Chien Thang, P., Hai, L. D., Phuong, V. T., & Quy, T. Q. (2024). Understanding the adoption of artificial intelligence in journalism: An empirical study in Vietnam. SAGE Open, 14(2), 21582440241255241.

- Trautwein, S., Lindenmeier, J., & Arnold, C. (2021). The effects of technology affinity, prior customer journey experience, and brand familiarity on the acceptance of smart service innovations. SMR-Journal of Service Management Research, 5(1), 36–49.

- Túñez-López, J. M., Fieiras-Ceide, C., & Vaz-Álvarez, M. (2021). Impact of artificial intelligence on journalism: Transformations in the company, products, contents and professional profile. Communication & Society, 34(1), 177–193.

- Upadhyaya, N. (2024). Artificial intelligence in web development: Enhancing automation, personalization, and decision-making. Artificial Intelligence, 4(1), 534–540.

- Vázquez-Cano, E., Mengual-Andrés, S., & López-Meneses, E. (2021). Chatbot to improve learning punctuation in Spanish and to enhance open and flexible learning environments. International Journal of Educational Technology in Higher Education, 18, 1–20.

- Wang, J., Li, X., Wang, P., Liu, Q., Deng, Z., & Wang, J. (2021). Research trend of the unified theory of acceptance and use of technology theory: A bibliometric analysis. Sustainability, 14(1), 10.

- Wang, Z., Huang, D., Cui, J., Zhang, X., Ho, S. B., & Cambria, E. (2025). A review of Chinese sentiment analysis: Subjects, methods, and trends. Artificial Intelligence Review, 58(3), 75.

- Winkler, R., & Söllner, M. (2018, July). Unleashing the potential of chatbots in education: A state-of-the-art analysis. In Academy of management proceedings (Vol. 2018, No. 1, p. 15903). Academy of Management.