A Hierarchical RNN-LSTM Model for Multi-Class Outage Prediction and Operational Optimization in Microgrids

Abstract

1. Introduction

- Proposes a hierarchical temporal learning framework based on an RNN-LSTM architecture for multi-class outage prediction in microgrids. On real-time microgrid data, the model achieves an accuracy of 86.52%, a precision of 86%, a recall of 86.20%, and an F1-score of 86.12%, outperforming conventional models including CNN, XGBoost, SVM, and Random Forest.

- Applies mutual information-based feature selection to retain the most relevant and interpretable features for outage prediction, preserving the transparency and physical traceability that real-time microgrid operations require.
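Mutual information between a discrete feature X and the outage class Y is I(X;Y) = Σ p(x,y) log[p(x,y) / (p(x) p(y))]; it is zero when the feature carries no information about the class. The sketch below ranks already-discretized features by a plug-in estimate of this quantity and keeps the top k. It is a minimal illustration, not the paper's pipeline: the feature names, toy records, and k are hypothetical, and continuous telemetry would first need binning (or a nearest-neighbour estimator), which this sketch does not attempt.

```python
from collections import Counter
from math import log


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats for two equal-length discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        pxy = count / n
        mi += pxy * log(pxy / ((px[x] / n) * (py[y] / n)))
    return mi


def select_top_k(features, labels, k):
    """Rank features (name -> list of discrete values) by MI with the labels; keep the top k."""
    scores = {name: mutual_information(vals, labels) for name, vals in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


if __name__ == "__main__":
    # Hypothetical, already-binned features and outage-class labels.
    labels = ["weather", "weather", "equipment", "equipment", "vandalism", "vandalism"]
    features = {
        "irradiance_bin": ["low", "low", "high", "high", "mid", "mid"],  # tracks the label
        "hour_bin": ["am", "pm", "am", "pm", "am", "pm"],                # independent of the label
    }
    kept, scores = select_top_k(features, labels, k=1)
    print(kept, {name: round(s, 3) for name, s in scores.items()})
```

Because the score is computed per feature against the class label, each retained feature keeps its original physical meaning, which is the traceability property the contribution above emphasizes.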

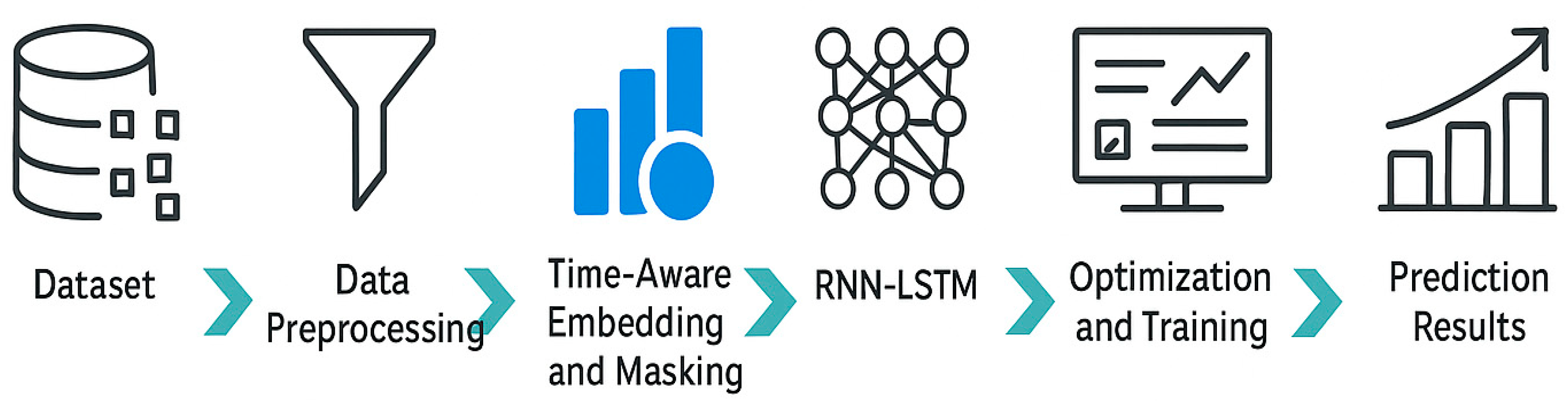

- Introduces a time-aware embedding and masking strategy to preprocess categorical and sparse temporal features. This lets the model learn rich, low-dimensional representations of categorical outage causes while ignoring padding tokens, improving generalization without compromising temporal continuity.

- Implements hierarchical temporal learning by structuring the LSTM layers to operate at multiple time scales, such as 5-min, hourly, and daily resolutions. This enables the model to learn, at the same time, micro-events such as short-term fluctuations and macro-behaviors such as seasonal outage trends, improving forecasting depth and robustness.

- Formulates outage prediction as a fine-grained multiclass classification task rather than binary classification. The model distinguishes between diverse outage types such as equipment failures, cyber-attacks, and weather-induced disruptions, enabling more actionable predictions compared to traditional approaches.
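One simple way to expose the same signal at 5-min, hourly, and daily resolutions is to average non-overlapping windows of the base series (12 five-minute samples per hour, 24 hours per day), so that separate LSTM branches can each see one resolution. The sketch below covers only this resampling step. The window sizes follow the resolutions named above, while the mean aggregation and the function names are assumptions for illustration, not the paper's documented procedure.

```python
from statistics import fmean


def downsample(series, window):
    """Average consecutive non-overlapping windows; a trailing partial window is dropped."""
    usable = len(series) - len(series) % window
    return [fmean(series[i:i + window]) for i in range(0, usable, window)]


def multi_scale_views(five_min_series):
    """Return the 5-min, hourly, and daily views of one telemetry channel."""
    hourly = downsample(five_min_series, 12)  # 12 x 5 min = 1 h
    daily = downsample(hourly, 24)            # 24 x 1 h = 1 day
    return {"5min": list(five_min_series), "hourly": hourly, "daily": daily}


if __name__ == "__main__":
    # Two days of a hypothetical 5-min power signal (288 samples per day).
    views = multi_scale_views([100.0] * 288 + [50.0] * 288)
    print(len(views["5min"]), len(views["hourly"]), views["daily"])
```

Dropping the trailing partial window keeps every aggregated value based on a full hour or day; padding and masking the remainder would be an alternative design.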

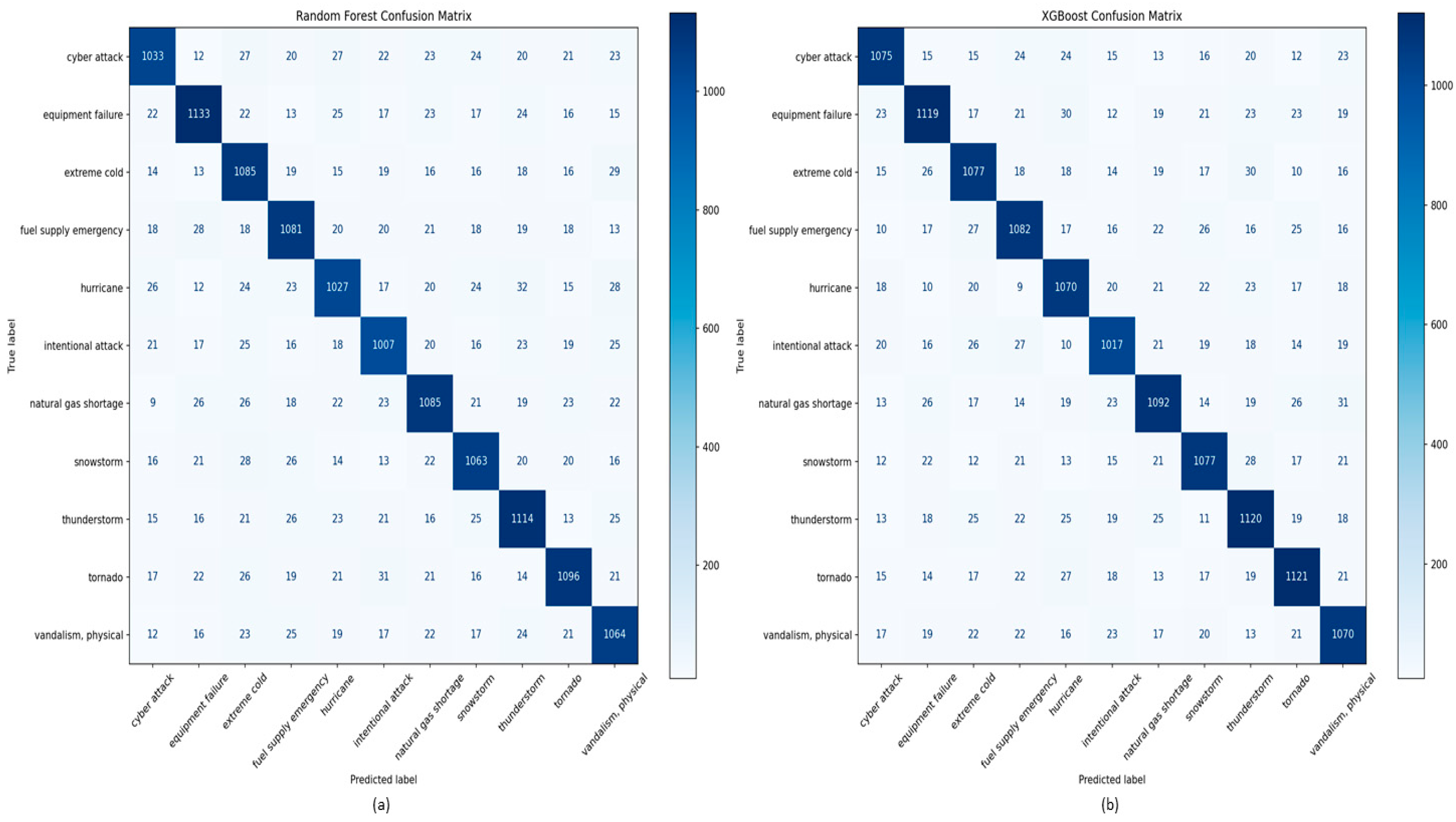

- Presents a unified comparative study of temporal deep learning (RNN-LSTM) and ensemble machine learning models (e.g., Random Forest, XGBoost) across two heterogeneous datasets, offering a holistic performance benchmark under both real-time and historical outage conditions.

- Highlights the RNN-LSTM’s ability to capture long-term temporal dependencies through gated memory units, which retain critical patterns over extended sequences. This improves predictive accuracy for time-dependent outage events that unfold over hours or days.

- Validates the proposed framework using two real-world datasets: (i) a high-frequency, real-time telemetry dataset from a 5 MW microgrid at Maple Cement Factory, and (ii) a 15-year national power outage dataset from Kaggle. This dual-source validation demonstrates the model’s adaptability across local and national reliability contexts.
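The gated memory mentioned above is the standard LSTM cell update: forget, input, and output gates f, i, o (sigmoids), a candidate g (tanh), cell state c_t = f ⊙ c_{t−1} + i ⊙ g, and hidden state h_t = o ⊙ tanh(c_t). The pure-Python sketch below implements one time step for small dimensions to make the mechanism concrete. The weight layout (one matrix per gate acting on the concatenation [x_t, h_{t−1}]) and the toy weights are illustrative assumptions, not the trained model.

```python
from math import exp, tanh


def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


def affine(W, b, v):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step. params maps gate name -> (W, b), with W of shape H x (D + H)."""
    z = list(x_t) + list(h_prev)                       # concatenated input [x_t, h_{t-1}]
    f = [sigmoid(v) for v in affine(*params["f"], z)]  # forget gate
    i = [sigmoid(v) for v in affine(*params["i"], z)]  # input gate
    o = [sigmoid(v) for v in affine(*params["o"], z)]  # output gate
    g = [tanh(v) for v in affine(*params["g"], z)]     # candidate memory
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h_t = [ok * tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t


if __name__ == "__main__":
    # Toy weights (input size 1, hidden size 1): a large forget-gate bias keeps f near 1
    # and a negative input-gate bias keeps i near 0, so the stored cell value persists.
    params = {
        "f": ([[0.0, 0.0]], [5.0]),
        "i": ([[0.0, 0.0]], [-5.0]),
        "o": ([[0.0, 0.0]], [0.0]),
        "g": ([[1.0, 0.0]], [0.0]),
    }
    h, c = [0.0], [1.0]
    for x in [0.3, -0.2, 0.1]:
        h, c = lstm_step([x], h, c, params)
    print(c, h)
```

The forget gate is what lets information survive many steps: when f stays close to 1, the cell state decays slowly regardless of the sequence length.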

2. Literature Review

3. Methodology

3.1. Dataset Overview

3.2. Data Preprocessing

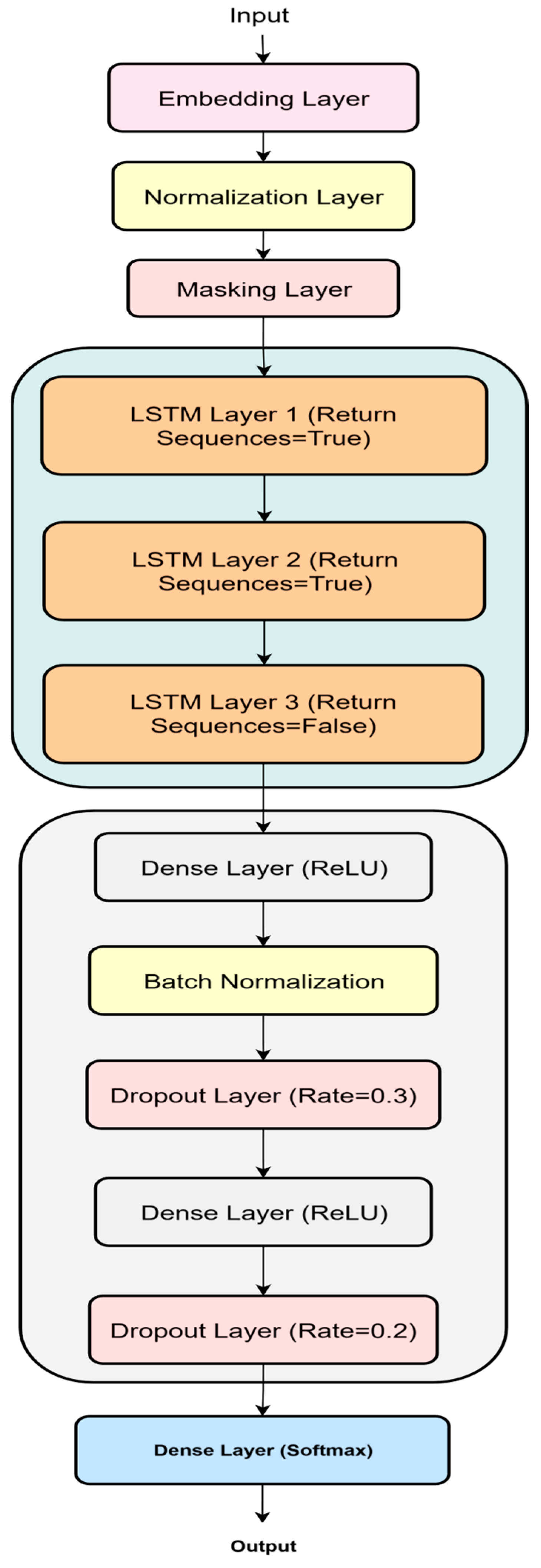

3.3. Model Architecture

- (a) Time-Aware Embedding

- (b) Masking Layer

- (c) Stacked LSTM Layers

- i. LSTM Layer 1: Comprises 128 memory cells and receives the embedded input sequence. This layer captures short-term temporal dependencies and low-level time-series fluctuations using gated memory mechanisms.

- ii. LSTM Layer 2: Contains 64 memory cells and builds on the output of the first layer to learn intermediate temporal abstractions, capturing transitions and evolving patterns related to outage precursors.

- iii. LSTM Layer 3: Includes 32 memory cells and functions as a high-level temporal aggregator, integrating long-range dependencies and consolidating sequence-level context before passing the representation to the dense classifier.

- (d) Hierarchical Temporal Fusion

- (e) Dropout Regularization

- (f) Dense Classifier

- (g) Optimization and Training

- Learning rate (η): 0.001, determined through empirical tuning to balance fast convergence against gradient stability.

- Exponential decay rates for the moment estimates (β1 and β2): the default values of 0.9 and 0.999, respectively.

- Epsilon (ϵ): 1 × 10⁻⁸, which prevents division by zero during parameter updates.
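The hyperparameters listed under (g) match the default settings of the Adam optimizer. Assuming Adam is the optimizer in use, one parameter update with η = 0.001, β1 = 0.9, β2 = 0.999, and ε = 10⁻⁸ can be sketched as follows. The function name is hypothetical and the quadratic loss in the usage example is purely illustrative.

```python
from math import sqrt


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update (t is the 1-based step count). Returns new (theta, m, v)."""
    new_theta, new_m, new_v = [], [], []
    for p, g, mk, vk in zip(theta, grad, m, v):
        mk = beta1 * mk + (1 - beta1) * g      # first-moment (mean) estimate
        vk = beta2 * vk + (1 - beta2) * g * g  # second-moment (uncentred variance) estimate
        m_hat = mk / (1 - beta1 ** t)          # bias correction for zero initialisation
        v_hat = vk / (1 - beta2 ** t)
        new_theta.append(p - lr * m_hat / (sqrt(v_hat) + eps))  # eps guards the division
        new_m.append(mk)
        new_v.append(vk)
    return new_theta, new_m, new_v


if __name__ == "__main__":
    # Minimise the illustrative loss L(theta) = theta^2, whose gradient is 2 * theta.
    theta, m, v = [1.0], [0.0], [0.0]
    for t in range(1, 4):
        grad = [2 * p for p in theta]
        theta, m, v = adam_step(theta, grad, m, v, t)
    print(theta)
```

On the first step the bias-corrected ratio m̂/√v̂ equals the sign of the gradient, so each parameter moves by about η = 0.001 regardless of the gradient's scale.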
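Items (a) and (b) name an embedding for categorical outage causes and a masking layer that ignores padding tokens. A minimal sketch of that idea follows, assuming index 0 is reserved for padding (a common convention in deep-learning embedding layers) and using a masked mean as the downstream consumer. The vocabulary, embedding size, and random initialisation are hypothetical; in the actual model the embedding table is trained, the time-aware component is not reproduced here, and the mask would be propagated to the LSTM layers rather than used for averaging.

```python
import random

PAD = 0  # index reserved for padding tokens (assumption)


def build_vocab(tag_sequences):
    """Map each categorical tag to an integer id, starting at 1 so that 0 stays free for padding."""
    vocab = {}
    for seq in tag_sequences:
        for tag in seq:
            vocab.setdefault(tag, len(vocab) + 1)
    return vocab


def encode_and_pad(tag_sequences, vocab):
    """Convert tags to ids, right-pad to the longest sequence, and return the boolean mask."""
    max_len = max(len(s) for s in tag_sequences)
    ids = [[vocab[t] for t in s] + [PAD] * (max_len - len(s)) for s in tag_sequences]
    mask = [[tok != PAD for tok in row] for row in ids]
    return ids, mask


def masked_mean_embedding(row_ids, row_mask, table):
    """Average the embedding vectors of real tokens only; padded positions contribute nothing."""
    dim = len(table[0])
    kept = [table[tok] for tok, keep in zip(row_ids, row_mask) if keep]
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


if __name__ == "__main__":
    seqs = [["severe weather", "thunderstorm"], ["equipment failure"]]
    vocab = build_vocab(seqs)
    ids, mask = encode_and_pad(seqs, vocab)
    rng = random.Random(0)
    # Row 0 of the table belongs to PAD and is never read because of the mask.
    table = [[rng.uniform(-0.1, 0.1) for _ in range(4)] for _ in range(len(vocab) + 1)]
    print(ids, mask)
    print(masked_mean_embedding(ids[1], mask[1], table))
```

Reserving id 0 for padding means the mask can be derived directly from the token ids, so no separate bookkeeping is needed when sequences of different lengths are batched.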

4. Results

5. Discussion

Practical Implications for Microgrid Operations

- Early Warning System: The model’s ability to classify outage types, such as cyberattacks or weather-related failures, in advance enables operators to initiate differentiated mitigation protocols, improving response time and resource allocation.

- Dynamic Scheduling and Load Shedding: Accurate forecasts can be integrated into energy management systems (EMS) to dynamically adjust load prioritization, storage dispatch, or demand response actions during high-risk periods.

- SCADA Integration: The model can be integrated into existing SCADA or monitoring dashboards, where it processes incoming data streams and outputs real-time predictions through lightweight RESTful APIs.

- Grid Resilience Planning: Historical insights from the model can inform resilience strategies, maintenance scheduling, and investment prioritization, such as reinforcing nodes that experience recurrent weather-related failures.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Time Event Began | Irradiation (W/m²) | Total Power (kW) | Insolation (kWh/m²) | Total Energy (kWh) | Expected Energy (kWh) | Lost Energy (kWh) | Tags |
|---|---|---|---|---|---|---|---|
| 8/1/2022 5:50 | 13.2 | 14.9 | 1.1 | 1.2 | 4.4 | 0 | severe weather, thunderstorm |
| 8/1/2022 5:55 | 13.2 | 30.8 | 1.1 | 2.6 | 4.4 | 0 | severe weather, thunderstorm |
| 8/1/2022 6:00 | 13.2 | 31.1 | 1.1 | 2.6 | 4.4 | 0 | severe weather, thunderstorm |
| 8/1/2022 6:05 | 13.2 | 31.4 | 1.1 | 2.6 | 4.4 | 0 | fuel supply emergency, coal |
| 8/1/2022 6:10 | 37.5 | 70.2 | 3.1 | 5.8 | 12.5 | 0 | vandalism, physical |
| 8/1/2022 6:15 | 37.5 | 99.5 | 3.1 | 8.3 | 12.5 | 0 | vandalism, physical |
| 8/1/2022 6:20 | 37.5 | 100.7 | 3.1 | 8.4 | 12.5 | 0 | vandalism, physical |
| 8/1/2022 6:25 | 37.5 | 102.1 | 3.1 | 8.5 | 12.5 | 0 | severe weather, thunderstorm |
| 8/1/2022 6:30 | 37.5 | 103.7 | 3.1 | 8.6 | 12.5 | 0 | severe weather, thunderstorm |
| 8/6/2022 14:10 | 793.3 | 353.8 | 66.1 | 29.5 | 264 | −29.5 | severe weather, wind, rain |
| 28/8/2022 14:55 | 716.4 | 1359.1 | 59.7 | 113.3 | 238.4 | −113.3 | equipment failure |
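Judging from the timestamps, the telemetry in the table above is sampled every 5 minutes, and the Total Energy column is consistent with Total Power integrated over one such interval (E = P × 5/60 h); for example, 14.9 kW × 5/60 h ≈ 1.24 kWh, listed as 1.2 kWh. The short check below reproduces this relation for every row shown. Treating each row as a 5-minute interval is an assumption, particularly for the last two rows, which are not contiguous with the others.

```python
INTERVAL_H = 5 / 60  # assumed 5-minute sampling interval, in hours

# (Total Power kW, Total Energy kWh) pairs copied from the table above.
ROWS = [
    (14.9, 1.2), (30.8, 2.6), (31.1, 2.6), (31.4, 2.6), (70.2, 5.8),
    (99.5, 8.3), (100.7, 8.4), (102.1, 8.5), (103.7, 8.6), (353.8, 29.5), (1359.1, 113.3),
]


def interval_energy_kwh(power_kw, interval_h=INTERVAL_H):
    """Energy delivered at a constant power over one sampling interval."""
    return power_kw * interval_h


def consistent(rows, tol=0.051):
    """True if each reported energy matches P x interval to within one-decimal rounding.

    The tolerance is half a unit in the last reported digit (0.05) plus float slack.
    """
    return all(abs(interval_energy_kwh(p) - e) <= tol for p, e in rows)


if __name__ == "__main__":
    for p, e in ROWS:
        print(f"{p:>7} kW -> {interval_energy_kwh(p):7.3f} kWh (table: {e})")
    print(consistent(ROWS))
```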

| Date Event Began | Date of Restoration | Geographic Areas | Demand Loss (kW) | Customers Affected (count) | Time Event Began (HH:MM:SS) | Time of Restoration (HH:MM:SS) | Tags |
|---|---|---|---|---|---|---|---|
| 6/30/2014 | 7/2/2014 | Illinois | −999 | 420,000 | 20:00:00 | 18:30:00 | severe weather, thunderstorm |
| 6/30/2014 | 7/1/2014 | North Central Indiana | −999 | 127,000 | 23:20:00 | 17:00:00 | severe weather, thunderstorm |
| 6/30/2014 | 7/1/2014 | Southeast Wisconsin | 424 | 120,000 | 17:55:00 | 2:53:00 | severe weather, thunderstorm |
| 6/27/2014 | −999 | Wisconsin | −999 | −999 | 13:21:00 | −999 | fuel supply emergency, coal |
| 6/24/2014 | 6/24/2014 | Nashville, Tennessee | −999 | −999 | 14:54:00 | 14:55:00 | vandalism, physical |
| 6/19/2014 | 6/19/2014 | Nashville, Tennessee | −999 | −999 | 8:47:00 | 8:48:00 | vandalism, physical |
| 6/18/2014 | 6/18/2014 | Washington | −999 | −999 | 9:52:00 | 19:00:00 | vandalism, physical |
| 6/18/2014 | 6/20/2014 | Southeast Michigan | −999 | 138,802 | 17:00:00 | 15:00:00 | severe weather, thunderstorm |
| 6/15/2014 | 6/15/2014 | Central Minnesota | −999 | 55,951 | 0:00:00 | 1:00:00 | severe weather, thunderstorm |
| 6/12/2014 | 6/12/2014 | Somervell County, Texas | −999 | −999 | 9:10:00 | 9:11:00 | vandalism, physical |
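In the national dataset above, −999 marks a missing value, and the start and restoration of each event are split across separate date and time columns. A minimal preprocessing sketch follows, assuming the dates are in month/day/year order (as the 6/30/2014 entries imply) and that −999 is the only missing-value code. It converts a row to an outage duration in hours, or None when either endpoint is missing; the function names are hypothetical.

```python
from datetime import datetime

MISSING = -999  # missing-value sentinel used in the dataset


def clean(field):
    """Normalise the Unicode minus sign and map the -999 sentinel to None."""
    text = field.strip().replace("\u2212", "-")
    return None if text == str(MISSING) else text


def outage_hours(date_began, time_began, date_restored, time_restored):
    """Outage duration in hours, or None if any endpoint is missing."""
    parts = [clean(f) for f in (date_began, time_began, date_restored, time_restored)]
    if any(p is None for p in parts):
        return None
    fmt = "%m/%d/%Y %H:%M:%S"  # month/day/year order is an assumption
    start = datetime.strptime(f"{parts[0]} {parts[1]}", fmt)
    end = datetime.strptime(f"{parts[2]} {parts[3]}", fmt)
    return (end - start).total_seconds() / 3600


if __name__ == "__main__":
    print(outage_hours("6/30/2014", "20:00:00", "7/2/2014", "18:30:00"))  # Illinois row: 46.5
    print(outage_hours("6/27/2014", "13:21:00", "\u2212999", "\u2212999"))  # Wisconsin row: None
```

Mapping the sentinel to None rather than leaving −999 in place keeps it from being read as a real (negative) quantity by later scaling or feature-selection steps.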

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Logistic Regression | 71.1 | 70 | 69.5 | 69.75 |
| Naive Bayes (NB) | 78.2 | 76.8 | 77.5 | 77.15 |
| KNN | 79.7 | 78.4 | 78 | 78.2 |
| Stochastic Gradient Descent (SGD) | 80.2 | 79.1 | 79.5 | 79.3 |
| SVM | 80.9 | 80 | 80.4 | 80.2 |
| Decision Tree (DT) | 81.32 | 81 | 80.6 | 80.8 |
| Random Forest (RF) | 84.43 | 83.5 | 84 | 83.75 |
| XGBoost | 85.5 | 85.1 | 84.8 | 84.94 |
| Gradient Boosting Classifier | 85.9 | 85.6 | 85.3 | 85.45 |
| CNN | 86.20 | 85.90 | 85.80 | 85.85 |
| RNN-LSTM | 86.52 | 86 | 86.20 | 86.12 |
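The table above reports accuracy, precision, recall, and F1 for a multiclass task, but the averaging scheme is not stated in this section. Assuming macro averaging (per-class scores averaged with equal weight), the metrics can be computed from a confusion matrix as sketched below. Under macro averaging, F1 is the mean of the per-class F1 values, so it need not equal the harmonic mean of macro precision and macro recall. The confusion matrix in the example is hypothetical.

```python
from statistics import fmean


def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1 from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and whose predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum: everything predicted as c
        actual = sum(cm[c])                          # row sum: everything truly in c
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "precision": fmean(precisions),
        "recall": fmean(recalls),
        "f1": fmean(f1s),
    }


if __name__ == "__main__":
    # Hypothetical 3-class matrix (e.g., weather, equipment, vandalism).
    cm = [[8, 2, 0], [1, 7, 2], [0, 1, 9]]
    print({name: round(val, 4) for name, val in macro_metrics(cm).items()})
```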

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liaqat, N.; Zubair, M.; Waleed, A.; Abid, M.I.; Shahid, M. A Hierarchical RNN-LSTM Model for Multi-Class Outage Prediction and Operational Optimization in Microgrids. Electricity 2025, 6, 55. https://doi.org/10.3390/electricity6040055
