1. Introduction

Image recognition technology has matured and is widely used in healthcare [1], transportation [2], and agriculture [3]. However, its practical application in product design is less common. With the rapid development of artificial intelligence (AI) in recent years, many related tools have emerged. Stable Diffusion has become a tool for generating digital art and modifying images [4]. These advancements have brought fundamental changes to the operational models of the design industry. Many designers with limited programming skills use AI-generated images in the initial stages of design ideation to produce a large number of creative concepts. This approach enhances creative efficiency and promotes diversity in design thinking. To verify whether AI-generated images reflect the typical characteristics of a brand, we employ machine learning and image classification techniques to provide a solid analytical foundation for innovative design. Through this method, designers can find an appropriate balance between innovation and brand typicality.

2. Related Work

2.1. Waikato Environment for Knowledge Analysis (WEKA)

Deep learning has become ubiquitous through tools such as TensorFlow and PyTorch 1.8.1. However, these tools require a foundational knowledge of programming. Industrial or automotive designers may have limited programming skills, so a simple machine learning tool, WEKA, is an alternative for image classification. WEKA can be used without programming, which makes it easy to conduct machine learning (ML) and deep learning (DL) image analysis. Convolutional neural networks (CNNs) are an important DL architecture. CNNs consist of convolutional layers, pooling layers, and fully connected layers, which respectively extract feature maps, downsample them, and perform recognition [5]. Image recognition in machine learning is common, and WEKA image recognition has been applied in analyzing car shapes and distinguishing between fuel vehicles and electric vehicles [6]. Therefore, this method was used in this study to integrate machine learning into product design.
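To make the roles of the convolutional and pooling layers concrete, the following minimal sketch applies one convolution and one max-pooling step to a tiny grayscale image in plain Python. The image, the kernel, and the function names are illustrative assumptions; a real CNN learns its kernels during training and is not limited to a single fixed one.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (the 'convolution' used in CNNs) on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool2d(features)      # 2x2 summary of the feature map
```

The feature map responds only where the edge lies, and pooling keeps that response while shrinking the map. A fully connected layer would then take the pooled values as input for recognition.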

2.2. AI in Design

AI has advanced significantly in recent years, and its adoption has become a clear trend. Sbai et al. emphasized the potential of generative networks in providing design inspiration for human designers [7]. AI image generation is categorized into text-to-image generation and image-to-image generation. Automotive designers need to create ideas before developing innovative prototypes, but this stage is time-consuming. Including AI can help break through creative bottlenecks and significantly reduce time and costs [8]. In education, AI image generation has also shown significant benefits: AI-generated images effectively present the context of words, thereby enhancing learning efficiency [9].

2.3. Analysis of Automotive Typicality

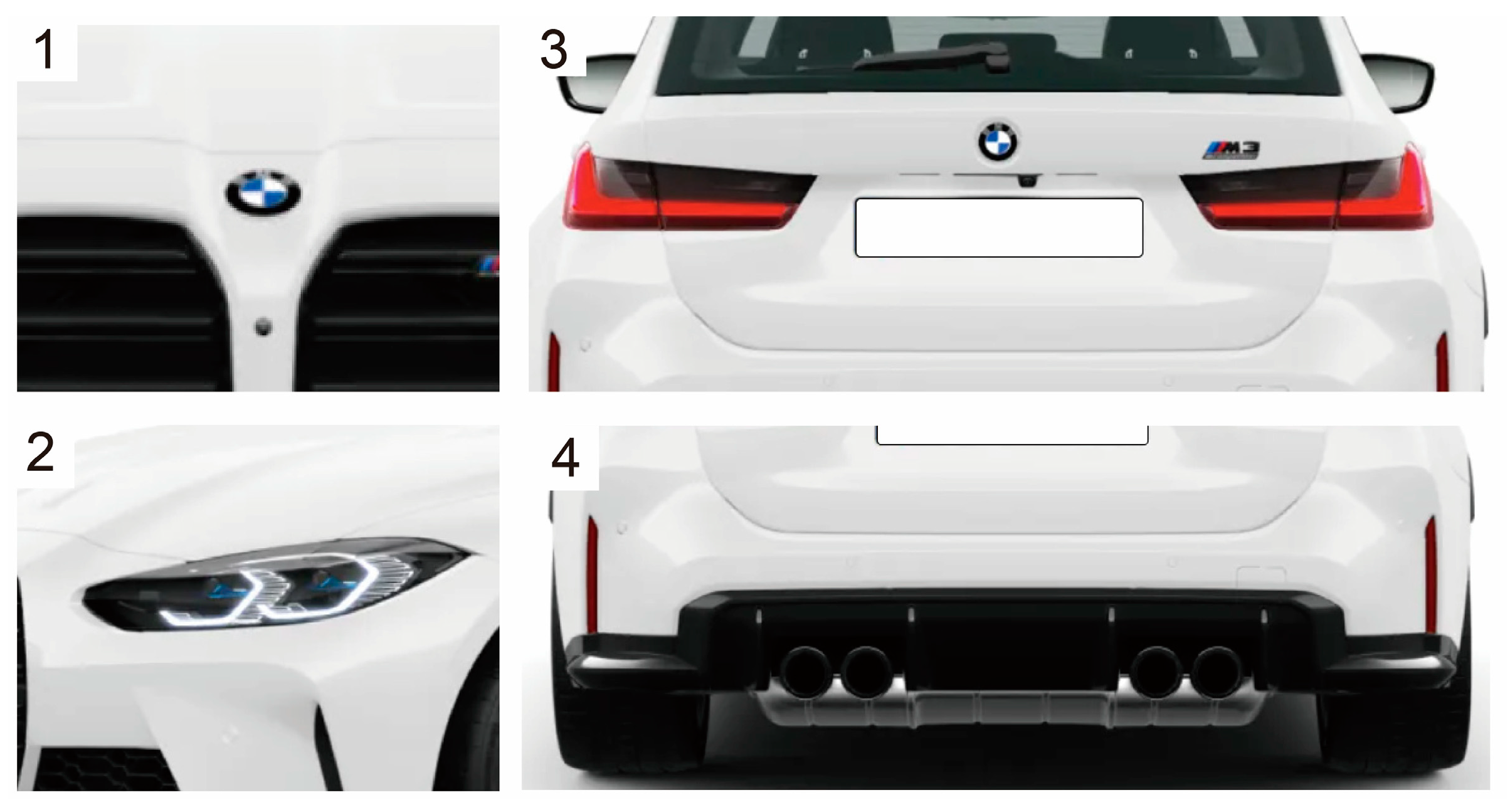

There are four design elements of a car to help identify its brand. These are (1) front badge, (2) headlights, (3) taillights, and (4) rear bumper [

10] (

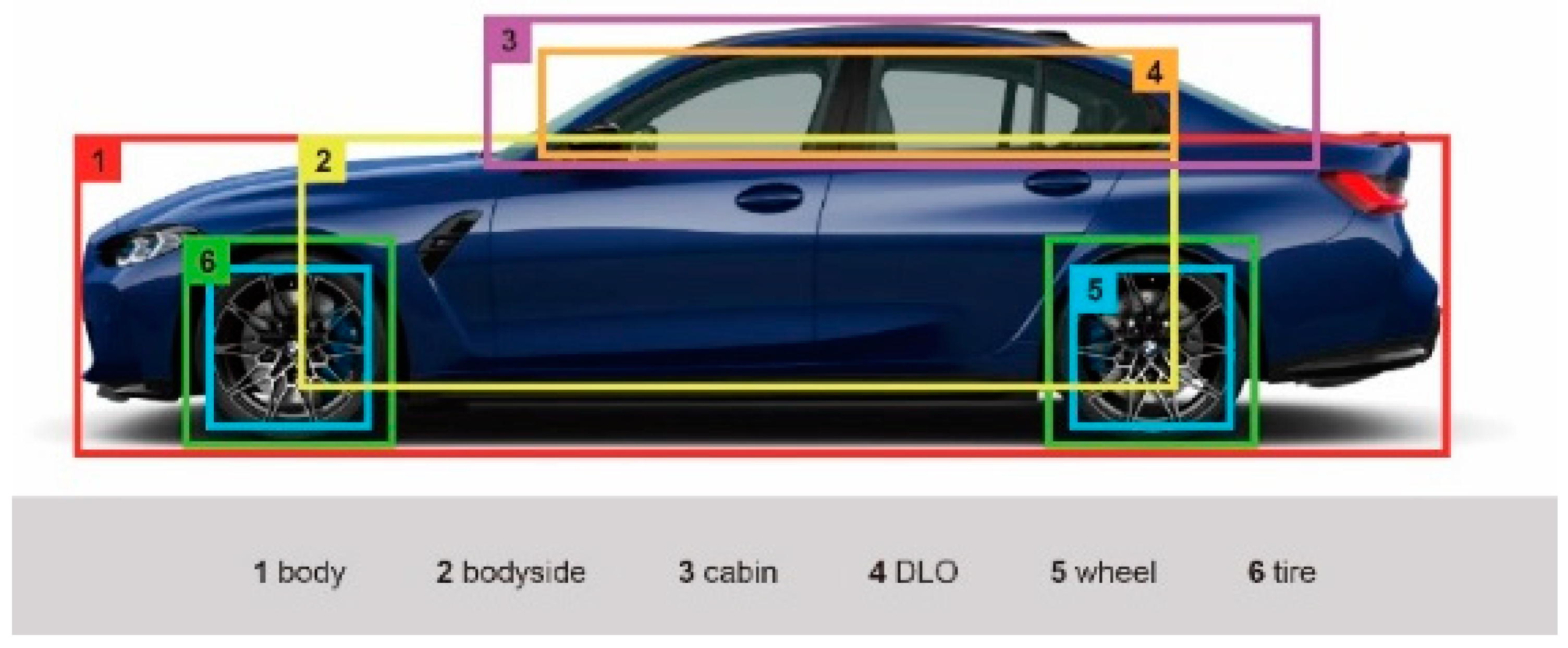

Figure 1). In initial sketching, the side view of the vehicle serves as the defining standard for the vehicle’s stance. The side view is divided into six features: body, bodyside, cabin (greenhouse), daylight opening (DLO), wheel, and tire [

11] (

Figure 2).

Each brand has a unique design DNA. For example, Lexus has its spindle grille, BMW features a kidney grille, and Lamborghini’s exterior is muscular with Y-shaped and hexagonal accents. Such signature design elements are key to recognizing a vehicle’s brand.

3. Methods

We used open-source software throughout, including WEKA for machine learning and Stable Diffusion for AI image generation. DL methods for image classification were applied through the ML tool. The study is divided into the following three stages:

In the first stage, WEKA is chosen as the machine learning tool. Using its image classification feature, we classify images and train models, adjusting parameters to obtain the most suitable and effective model samples.

In the second stage, Stable Diffusion is used to generate images that will be used as the test dataset for the next stage.

The third stage is the validation phase, in which the trained classification model is tested on the AI-generated images to assess how accurately they are classified.

3.1. Vehicle Features and Brand Style

Based on the application of typicality in predicting product appearance using WEKA [12], we collected images of electric and fuel vehicles from BMW’s official website in 2024. The collected images include visual elements such as the full vehicle, body, bodyside, headlights, taillights, front bumper, and rear bumper.

These images were divided into the individual components listed above, and each part underwent image processing to facilitate training a model that identifies the design features and key elements. The designs of electric vehicles and fuel vehicles were compared to provide data on their visual differences. Backgrounds were removed to ensure all images were clean and focused on the vehicle. Using the post-processed image data, we trained models with machine learning techniques to automatically recognize and classify the design elements of both types of vehicles and to understand the relationship between brand style and vehicle type characteristics. Training and validation were based on a deep learning (DL) framework, and the models with the highest accuracy in distinguishing the vehicles were retained.

3.2. AI-Generated Images

AI tools present a new creative approach for designers with limited programming skills. According to Liu et al.’s tests on Stable Diffusion in industrial design, product styles were successfully transformed, demonstrating that AI has a high capability to judge color, material, and style [4]. Thus, we used Stable Diffusion to generate images of new electric vehicles, utilizing the text-to-image generation feature (Figure 3). This process involves both positive and negative prompts.

For the vehicle body, the positive prompts include “clean exterior, BMW style, electric vehicle, future electric concept vehicle, concept, creative, car design, smooth and soft lines”. Additional prompts were added based on vehicle type (“sedan, hatchback, wagon, SUV”) and view angle (“front view, side view, rear view”). The negative prompts for the vehicle body include “old car design, old design language, boxy cars, air intake, radiator grille, exhaust”. The generated images underwent image processing to be used for evaluation in the third stage.
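The prompt structure described above can be sketched in a few lines of Python. The prompt terms are taken from the text; the function name, the dictionary layout, and the choice to generate one prompt pair for every combination of vehicle type and view angle are assumptions made for illustration, not a record of how the prompts were actually entered.

```python
from itertools import product

BASE_POSITIVE = [
    "clean exterior", "BMW style", "electric vehicle",
    "future electric concept vehicle", "concept", "creative",
    "car design", "smooth and soft lines",
]
NEGATIVE = [
    "old car design", "old design language", "boxy cars",
    "air intake", "radiator grille", "exhaust",
]
VEHICLE_TYPES = ["sedan", "hatchback", "wagon", "SUV"]
VIEW_ANGLES = ["front view", "side view", "rear view"]


def build_prompts():
    """Return one positive/negative prompt pair per vehicle type and view angle."""
    negative = ", ".join(NEGATIVE)
    pairs = []
    for vehicle_type, view in product(VEHICLE_TYPES, VIEW_ANGLES):
        # Append the type and view terms to the shared base prompt.
        positive = ", ".join(BASE_POSITIVE + [vehicle_type, view])
        pairs.append({
            "type": vehicle_type,
            "view": view,
            "positive": positive,
            "negative": negative,
        })
    return pairs


prompts = build_prompts()
```

Each resulting pair could then be passed to a text-to-image interface that accepts a positive and a negative prompt.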

3.3. Evaluation of Generated Image

As AI-generated images are increasingly applied in design, ensuring their quality becomes critically important. Before evaluating the electric vehicle images generated by Stable Diffusion, we processed and converted the images to match the format of the training images. The preprocessed images were then fed into the previously trained deep learning model to test accuracy, using a CNN to identify and classify vehicle images. By observing whether the model classified the Stable Diffusion-generated images as electric vehicles, we assessed the usefulness of AI-generated images for designers.
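One simple way to summarize this check is the share of generated images that the classifier labels as electric vehicles. The sketch below assumes the classifier outputs are available as a list of label strings; the label names and the example predictions are hypothetical and are not results from this study.

```python
from collections import Counter


def electric_share(predicted_labels, target="electric"):
    """Fraction of generated images that the classifier labelled as `target`."""
    if not predicted_labels:
        raise ValueError("no predictions to evaluate")
    counts = Counter(predicted_labels)
    return counts[target] / len(predicted_labels)


# Hypothetical classifier outputs for eight generated images.
predictions = ["electric", "electric", "fuel", "electric",
               "electric", "fuel", "electric", "electric"]
share = electric_share(predictions)
```

A share close to 1 would suggest that the generated concepts carry the visual cues the model associates with electric vehicles.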

4. Results and Discussions

4.1. Usability of Machine Learning

We divided the test dataset into three parts: front three-quarter view and front detail images, side view and side detail images, and rear three-quarter view and rear detail images. The images were converted to grayscale (Figure 4). For training the model, we used Python 3 to apply a fixed amount of stretching deformation to some images and added the deformed images to the training dataset. The dataset was split into 80% of the collected images for training and 20% for testing.
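The preprocessing steps described here (grayscale conversion, a fixed stretch, and an 80/20 split) can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions: images are represented as nested lists of pixels, the stretch is horizontal with nearest-neighbour sampling, and the stretch factor and random seed are hypothetical values, since the text does not specify them.

```python
import random


def to_grayscale(rgb_image):
    """Convert a nested list of (R, G, B) pixels to luminance (ITU-R BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def stretch_horizontally(gray_image, factor):
    """Widen an image by `factor` using nearest-neighbour sampling."""
    width = len(gray_image[0])
    new_width = max(1, round(width * factor))
    return [[row[min(width - 1, int(x / factor))] for x in range(new_width)]
            for row in gray_image]


def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle reproducibly, then split into training and test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical one-row image: a white pixel followed by a black pixel.
gray = to_grayscale([[(255, 255, 255), (0, 0, 0)]])
stretched = stretch_horizontally(gray, 1.5)

# Hypothetical dataset of ten image identifiers.
train_ids, test_ids = split_dataset(range(10))
```

In this sketch the split would be done before augmentation, so that a stretched copy of a test image never ends up in the training set.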

In the selection of classifiers, we used sequential minimal optimization (SMO) and random forest. SMO, an algorithm for training support vector machines, is appropriate for high-dimensional data: it finds the optimal hyperplane that separates the categories, can be used for classification and regression analysis, and handles large-scale datasets efficiently. Random forest is known for its robustness and accuracy in classification and regression tasks and deals effectively with high-dimensional data and complex problems. Both are effective in image classification. In the feature extraction phase, we used various texture image filters in WEKA, including the pyramid histogram of oriented gradients (PHOG)-Filter, the BinaryPatternsPyramid-Filter, the EdgeHistogram-Filter, the Gabor-Filter, and the fuzzy color and texture histogram (FCTH)-Filter.
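To give an intuition for what gradient-based filters of this kind measure, the sketch below computes a simplified histogram of gradient orientations for a grayscale image. It is not the WEKA implementation of any of the filters named above; the function name, the bin count, and the example image are assumptions chosen for illustration.

```python
import math


def orientation_histogram(gray_image, bins=8):
    """Histogram of gradient orientations, weighted by magnitude and normalized to sum to 1."""
    height, width = len(gray_image), len(gray_image[0])
    hist = [0.0] * bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the horizontal and vertical gradients.
            gx = gray_image[y][x + 1] - gray_image[y][x - 1]
            gy = gray_image[y + 1][x] - gray_image[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Unsigned orientation in [0, pi); the clamp guards the upper edge.
            angle = math.atan2(gy, gx) % math.pi
            index = min(bins - 1, int(angle / math.pi * bins))
            hist[index] += magnitude
    total = sum(hist)
    return [value / total for value in hist] if total else hist


# Hypothetical 4x4 image with a vertical edge, so all gradients are horizontal.
edge_image = [[0, 0, 255, 255] for _ in range(4)]
histogram = orientation_histogram(edge_image)
```

The resulting vector is the kind of fixed-length feature a classifier such as random forest or SMO can consume in place of raw pixels.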

Classifiers were paired with filters, and each pairing was evaluated on the training set using 10-fold cross-validation. The results of image training and testing showed that the random forest classifier performed the best for the classification of front three-quarter view and front detail images. When using the EdgeHistogram-Filter, the training dataset achieved an accuracy of 72.6%, and the test dataset achieved an accuracy of 90.4%. When using the PHOG-Filter, the training dataset achieved an accuracy of 78.5%, and the test dataset achieved an accuracy of 95% (Table 1). For the classification of rear three-quarter view and rear detail images, the combination of the random forest classifier and the BinaryPatternsPyramid-Filter achieved an accuracy of 80.5% on the training dataset and 90% on the test dataset. The combination of the SMO classifier and the BinaryPatternsPyramid-Filter achieved an accuracy of 70% on the training dataset and 100% on the test dataset (Table 2). In the classification of side view and side detail images, both the random forest and SMO classifiers achieved accuracies below 70%.
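The evaluation design can be sketched as a grid of classifier and filter pairings, each assessed with 10-fold cross-validation. The sketch below only enumerates the pairings and builds the fold indices; it does not train any model. Pairing every classifier with every filter, the contiguous fold layout, and the sample count of 25 are assumptions for illustration.

```python
from itertools import product

CLASSIFIERS = ["SMO", "RandomForest"]
FILTERS = ["PHOG", "BinaryPatternsPyramid", "EdgeHistogram", "Gabor", "FCTH"]


def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


combinations = list(product(CLASSIFIERS, FILTERS))   # 2 x 5 = 10 pairings
folds = list(k_fold_indices(25, k=10))                # 25 is a hypothetical sample count
```

Every sample is used for validation exactly once, and the cross-validated accuracy of a pairing is the average over its ten folds. In practice the samples would typically be shuffled or stratified by class before the folds are formed.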

When selecting models, we considered generalization ability, requiring that performance on the training dataset and the test dataset be relatively consistent. This helps ensure that the model maintains stable classification capabilities when encountering new data. The random forest classifier paired with the PHOG-Filter and the BinaryPatternsPyramid-Filter showed better training and testing accuracy across different perspectives, indicating generalization ability. The SMO classifier reached a test dataset accuracy of 100%, but its training dataset accuracy was only 70%; such a large gap indicates an unstable result rather than reliable generalization. Therefore, it is not recommended to rely solely on such results.
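The consistency criterion can be made explicit by ranking the reported models by the absolute gap between training and test accuracy. The accuracies below are the values reported in this section; using the absolute gap as the ranking score and the names in the code are assumptions for illustration.

```python
# Accuracies (%) reported in this section: (classifier, filter, view) -> (training, test)
REPORTED = {
    ("RandomForest", "EdgeHistogram", "front"): (72.6, 90.4),
    ("RandomForest", "PHOG", "front"): (78.5, 95.0),
    ("RandomForest", "BinaryPatternsPyramid", "rear"): (80.5, 90.0),
    ("SMO", "BinaryPatternsPyramid", "rear"): (70.0, 100.0),
}


def accuracy_gaps(results):
    """Return (model, absolute train/test gap) pairs, smallest gap first."""
    gaps = [(model, round(abs(test - train), 1))
            for model, (train, test) in results.items()]
    return sorted(gaps, key=lambda item: item[1])


ranked = accuracy_gaps(REPORTED)
```

Under this score, the SMO pairing has the largest gap (30 percentage points) despite its perfect test accuracy, which is why it is treated with caution.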

4.2. Distinguishing Electric and Fuel Vehicles

To understand the differences between these two types of vehicles, we categorized the images into electric vehicles and fuel vehicles and experimented with different combinations of feature extraction methods and classifiers. The results indicated that machine learning analyzed AI-generated images effectively. Front and rear view images provide relevant feature information about the vehicle’s power system, resulting in better classification. In contrast, side view features are less effective in determining the vehicle’s power system. Side view features and shapes are thus less significant for judging automotive designs.

5. Conclusions

We used the machine learning tool WEKA to successfully distinguish AI-generated images of electric vehicles and fuel vehicles using image classification from different perspectives. The random forest classifier performed best in classifying front and rear view images, with an accuracy exceeding 90%. However, the accuracy for classifying side-view images was relatively low. This indicates that electric vehicles and fuel vehicles have more distinct characteristics in front and rear views. In using WEKA for image classification, various image filters and classifiers were paired and evaluated with 10-fold cross-validation to ensure the accuracy and stability of the model.

The developed method enables designers to quickly and accurately identify and distinguish different types of automotive features using machine learning techniques. This helps companies precisely convey product semantics during the design and development process for innovative or iterative designs. By incorporating AI image generation, designers can generate a large number of concept images in the early stages of the design process, improving design efficiency and fostering innovation. Machine learning effectively assesses whether the AI-generated images meet expectations. Future research is necessary to expand the dataset size, explore other machine learning algorithms and image filters, and apply this technology to home design, consumer electronics design, and other fields to improve the automation of the design process.

Author Contributions

Conceptualization, H.-H.W. and H.-K.C.; methodology, H.-K.C.; software, H.-K.C.; validation, H.-H.W. and H.-K.C.; formal analysis, H.-K.C.; investigation, H.-K.C.; resources, H.-K.C.; data curation, H.-K.C.; writing—original draft preparation, H.-K.C.; writing—review and editing, H.-H.W.; visualization, H.-K.C.; supervision, H.-H.W.; project administration, H.-H.W.; funding acquisition, H.-H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NSTC, Taiwan, through grant 111-2410-H-027-019-MY2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are unavailable due to privacy reasons.

Acknowledgments

The authors gratefully acknowledge the financial support of NSTC, Taiwan, through grant 111-2410-H-027-019-MY2.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Shen, D.; Wu, G.; Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

2. Hu, W.; Wang, W.; Ai, C.; Wang, J.; Wang, W.; Meng, X.; Liu, J.; Tao, H.; Qiu, S. Machine vision-based surface crack analysis for transportation infrastructure. Autom. Constr. 2021, 132, 103973.

3. Chiu, M.T.; Xu, X.; Wei, Y.; Huang, Z.; Schwing, A.G.; Brunner, R.; Khachatrian, H.; Karapetyan, H.; Karapetyan, H.; Dozier, I.; et al. Agriculture-vision: A large aerial image database for agricultural pattern analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2828–2838.

4. Liu, M.; Hu, Y. Application potential of stable diffusion in different stages of industrial design. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 23–28 July 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 590–609.

5. Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.; Zhang, X.; Huang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423.

6. Wang, H.-H.; Shen, Y.-Y.; Hung, Y.-Y. Car Types and Semantics Classification Using Weka. In Proceedings of the 2022 5th Artificial Intelligence and Cloud Computing Conference, Osaka, Japan, 17–19 December 2022; pp. 34–41.

7. Sbai, O.; Elhoseiny, M.; Bordes, A.; LeCun, Y.; Couprie, C. Design: Design inspiration from generative networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

8. Radhakrishnan, S.; Bharadwaj, V.; Manjunath, V.; Srinath, R. Creative intelligence-automating car design studio with generative adversarial networks (GAN). In Proceedings of the International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Hamburg, Germany, 27–30 August 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 160–175.

9. Aktay, S. The usability of images generated by artificial intelligence (AI) in education. Int. Technol. Educ. J. 2022, 6, 51–62.

10. Liem, A.; Abidin, S.; Warell, A. Designers’ perceptions of typical characteristics of form treatment in automobile styling. In Proceedings of the 5th International Workshop on Design & Semantics of Form and Movement, DesForm, Taipei, Taiwan, 26–27 October 2009; pp. 144–155.

11. Lee, G.; Kim, T.; Suk, H.-J. GP22: A Car Styling Dataset for Automotive Designers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 2268–2272.

12. Wang, H.-H.; Lin, Y.-Y.; Huang, H.-T. Application of Typicality in Predicting Product Appearance. Eng. Proc. 2023, 55, 66.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).