Enhancing Autonomous Navigation: Real-Time LIDAR Detection of Roads and Sidewalks in ROS 2 †

Abstract

1. Introduction

2. Methodology

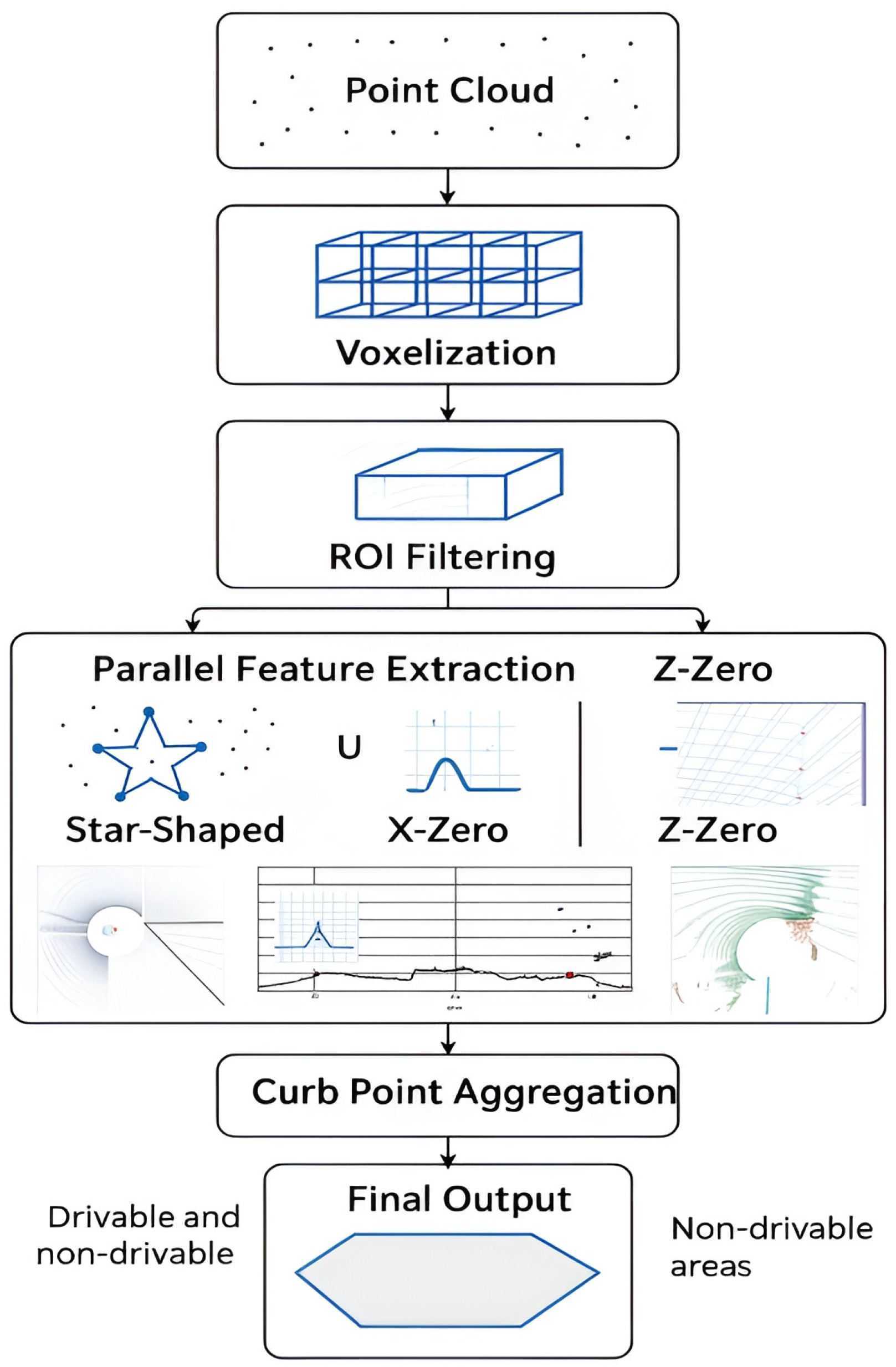

2.1. System Architecture

- Region of Interest (ROI) Filtering, which discards points outside user-defined X, Y, and Z bounds.

- Parallel Feature Extraction using the Star-Shaped, X-Zero, and Z-Zero algorithms.

- Curb Point Aggregation via logical disjunction (OR) of the candidate outputs of the three algorithms.

- Polygonal Road Modeling, which produces a simplified road representation using the lightweight Douglas–Peucker line-simplification algorithm [10].
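The stages listed above can be illustrated with a minimal, pure-Python sketch. It is not the authors' implementation: the function names (`roi_filter`, `aggregate_curb_flags`, `douglas_peucker`), the data layout (points as `(x, y, z)` tuples, one boolean flag per point per detector), and the tolerance `epsilon` are all assumptions made for illustration. The Star-Shaped, X-Zero, and Z-Zero detectors themselves are not reproduced here, and only their outputs are combined.

```python
from math import hypot


def roi_filter(points, x_bounds, y_bounds, z_bounds):
    """Keep only points inside the axis-aligned box given by (min, max) bounds."""
    (x0, x1), (y0, y1), (z0, z1) = x_bounds, y_bounds, z_bounds
    return [p for p in points
            if x0 <= p[0] <= x1 and y0 <= p[1] <= y1 and z0 <= p[2] <= z1]


def aggregate_curb_flags(*detector_flags):
    """Logical OR across detectors: a point is a curb candidate if any detector flags it."""
    return [any(flags) for flags in zip(*detector_flags)]


def _point_segment_distance(p, a, b):
    """Distance from 2D point p to the segment a-b (a common Douglas-Peucker variant)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return hypot(px - ax, py - ay)
    # Clamp the projection parameter so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return hypot(px - (ax + t * dx), py - (ay + t * dy))


def douglas_peucker(polyline, epsilon):
    """Simplify a 2D polyline, dropping vertices closer than epsilon to the chord."""
    if len(polyline) < 3:
        return list(polyline)
    first, last = polyline[0], polyline[-1]
    split_index, max_dist = 0, 0.0
    for i in range(1, len(polyline) - 1):
        d = _point_segment_distance(polyline[i], first, last)
        if d > max_dist:
            split_index, max_dist = i, d
    if max_dist <= epsilon:
        return [first, last]
    left = douglas_peucker(polyline[:split_index + 1], epsilon)
    right = douglas_peucker(polyline[split_index:], epsilon)
    return left[:-1] + right  # the split vertex appears in both halves; keep it once


# Hypothetical example data, chosen only to exercise each stage.
cloud = [(1.0, 0.5, -1.6), (30.0, 0.0, -1.5), (2.0, -0.4, -1.4)]
kept = roi_filter(cloud, (0.0, 20.0), (-5.0, 5.0), (-2.0, 0.0))
curb_mask = aggregate_curb_flags([False, True], [True, False], [False, False])
boundary = [(0.0, 0.0), (1.0, 0.05), (2.0, 0.0), (3.0, 2.0), (4.0, 0.0)]
simplified = douglas_peucker(boundary, epsilon=0.1)
```

With these inputs, the ROI filter drops the point at x = 30 m, the OR step marks both remaining points as curb candidates, and the simplification removes only the vertex that deviates by 0.05 from its chord. A larger `epsilon` yields a coarser polygon with fewer vertices.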

2.2. Sidewalk Detection

2.3. ROS 2 Integration

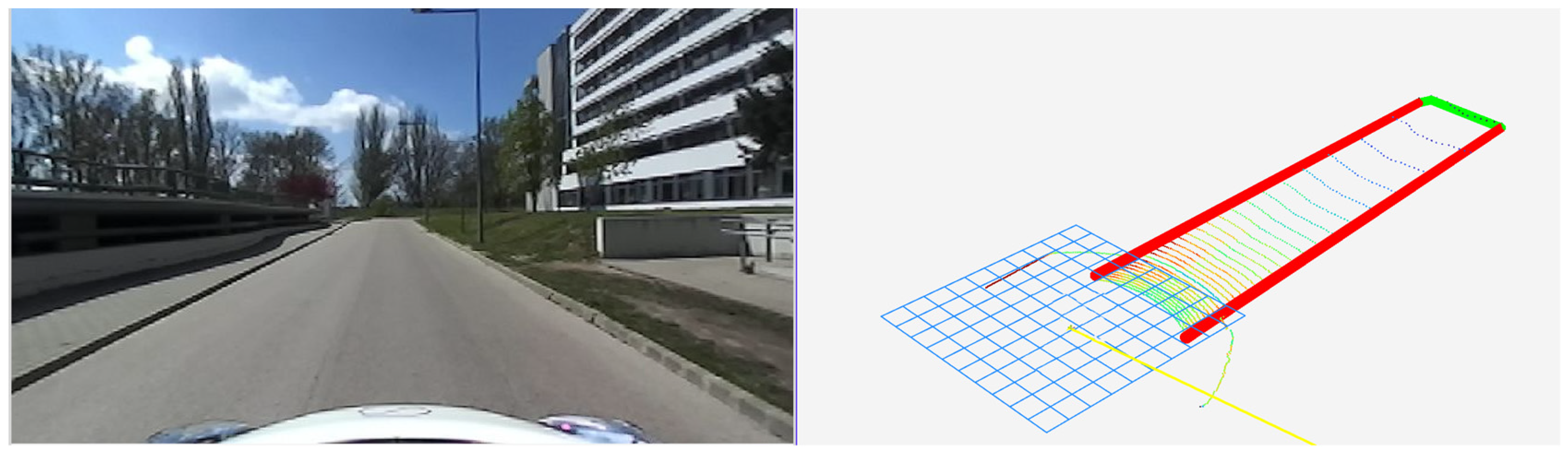

3. Results

3.1. Evaluation

- Speed: The entire pipeline, including voxelization, feature extraction, and polygon modeling, maintained execution times below 45 ms per frame on average, thereby supporting continuous operation at over 20 Hz.

- Robustness: The combination of the Star-Shaped, X-Zero, and Z-Zero algorithms provided complementary strengths, allowing the system to generalize across uneven curbs, occlusions, and shallow sidewalk geometries.
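The throughput figure in the speed result follows from the per-frame time budget. As a short check, assuming frames are processed one at a time with no pipelining, an average execution time $T$ below 45 ms gives a sustainable frame rate $f$ of

$$
f = \frac{1}{T} > \frac{1}{0.045\ \text{s}} \approx 22.2\ \text{Hz},
$$

which is consistent with the stated operation at over 20 Hz. Equivalently, a 20 Hz rate allows 50 ms per frame, so a 45 ms average leaves about 5 ms of headroom.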

3.2. Limitations and Future Work

- Enhancing robustness in dynamic environments via noise filtering and predictive edge tracking;

- Improving parameter adaptability to reduce manual tuning;

- Optimizing the system for efficient, reliable deployment on in-vehicle hardware.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep learning for LiDAR point clouds in autonomous driving: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3412–3432.

- Yang, G.; Mentasti, S.; Bersani, M.; Wang, Y.; Braghin, F.; Cheli, F. LiDAR point-cloud processing based on projection methods: A comparison. arXiv 2020, arXiv:2008.00706.

- Liu, H.; Wu, C.; Wang, H. Real-time object detection using LiDAR and camera fusion for autonomous driving. Sci. Rep. 2023, 13, 8942.

- Royo, S.; Ballesta-Garcia, M. An overview of LiDAR imaging systems for autonomous vehicles. Appl. Sci. 2019, 9, 4093.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End learning for point cloud-based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

- Huang, Z.; Huang, Y.; Zheng, Z.; Hu, H.; Chen, D. HybridPillars: Hybrid Point-Pillar Network for real-time two-stage 3D object detection. IEEE Sens. J. 2024, 24, 11853–11863.

- Zhao, Z.; Chen, K.; Yamane, S. CBAM-Unet++: Easier to find the target with the attention module “CBAM”. In Proceedings of the 2021 IEEE Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 12–15 October 2021; pp. 655–657.

- Das, D.; Adhikary, N.; Chaudhury, S. Sensor fusion in autonomous vehicle using LiDAR and camera sensor. In Proceedings of the IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Hyderabad, India, 30 September–2 October 2022; pp. 336–341.

- Zhang, Y.; Wang, J.; Wang, X.; Dolan, J.M. Road-segmentation-based curb detection method for self-driving via a 3D-LiDAR sensor. IEEE Trans. Intell. Transp. Syst. 2018, 19, 3981–3991.

- Douglas, D.H.; Peucker, T.K. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Cartographica 1973, 10, 112–122.

- Horváth, E.; Pozna, C.; Unger, M. Real-time LIDAR-based urban road and sidewalk detection for autonomous vehicles. Sensors 2022, 22, 10194.

- Rezaei, M. Computer Vision for Road Safety: A System for Simultaneous Monitoring of Driver Behaviour and Road Hazards; Springer: Cham, Switzerland, 2014.

- Murray, R.M.; Li, Z.; Sastry, S.S. A Mathematical Introduction to Robotic Manipulation; CRC Press: Boca Raton, FL, USA, 2017.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Farraj, B.J.B.; Alabdallah, A.; Unger, M.; Horváth, E. Enhancing Autonomous Navigation: Real-Time LIDAR Detection of Roads and Sidewalks in ROS 2. Eng. Proc. 2025, 113, 24. https://doi.org/10.3390/engproc2025113024
