ROS 2-Based Framework for Semi-Automatic Vector Map Creation in Autonomous Driving Systems †

Abstract

1. Introduction

- Multi-Sensor Fusion: A robust pipeline fuses LIDAR, GPS, and IMU data to improve the accuracy of vehicle pose estimation and map alignment.

- Lanelet2 Compatibility: Our system converts map outputs into Lanelet2 format for integration with the existing AV software stack.

- Semi-Automation: We propose a workflow that automates most of the map-making process while allowing targeted human correction and validation of the results.

- Scalability and Adaptability: The framework is designed from the ground up to operate across different vehicle platforms and urban environments, enabling scalable HD map generation for diverse AV applications.
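To illustrate what Lanelet2 compatibility involves: Lanelet2 maps are commonly exchanged as OSM XML. Each lane boundary is a `way` made of `node`s, and each lanelet is a `relation` with `left` and `right` way members. The minimal sketch below uses only the Python standard library to write a single lanelet in this form. The function name `build_lanelet_osm`, the ID scheme, and the chosen linestring tags are illustrative assumptions, not the framework's actual exporter.

```python
import xml.etree.ElementTree as ET


def build_lanelet_osm(left_bound, right_bound, start_id=1):
    """Return Lanelet2-style OSM XML (as a string) for a single lanelet.

    Hypothetical helper. left_bound / right_bound: lists of (lat, lon)
    tuples, both ordered in the direction of travel. Tags are illustrative.
    """
    nodes, ways = [], []
    next_id = start_id

    def add_linestring(points, tags):
        nonlocal next_id
        refs = []
        for lat, lon in points:
            nodes.append(ET.Element("node", id=str(next_id),
                                    lat=f"{lat:.9f}", lon=f"{lon:.9f}"))
            refs.append(next_id)
            next_id += 1
        way_id = next_id
        next_id += 1
        way = ET.Element("way", id=str(way_id))
        for ref in refs:
            ET.SubElement(way, "nd", ref=str(ref))
        for key, value in tags.items():
            ET.SubElement(way, "tag", k=key, v=value)
        ways.append(way)
        return way_id

    # Assumed layout: lane marking on the left, curb (towards the sidewalk) on the right.
    left_id = add_linestring(left_bound, {"type": "line_thin", "subtype": "dashed"})
    right_id = add_linestring(right_bound, {"type": "curbstone", "subtype": "high"})

    relation = ET.Element("relation", id=str(next_id))
    ET.SubElement(relation, "member", type="way", ref=str(left_id), role="left")
    ET.SubElement(relation, "member", type="way", ref=str(right_id), role="right")
    for key, value in {"type": "lanelet", "subtype": "road",
                       "location": "urban", "one_way": "yes"}.items():
        ET.SubElement(relation, "tag", k=key, v=value)

    # OSM convention: nodes first, then ways, then relations.
    root = ET.Element("osm", version="0.6")
    root.extend(nodes + ways + [relation])
    return ET.tostring(root, encoding="unicode")
```

Both bounds should run in the direction of travel, because Lanelet2 expects the left and right bounds of a lanelet to share an orientation. A file produced this way can then be checked by loading it with Lanelet2's own I/O (e.g., `lanelet2.io.load` together with a projector).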

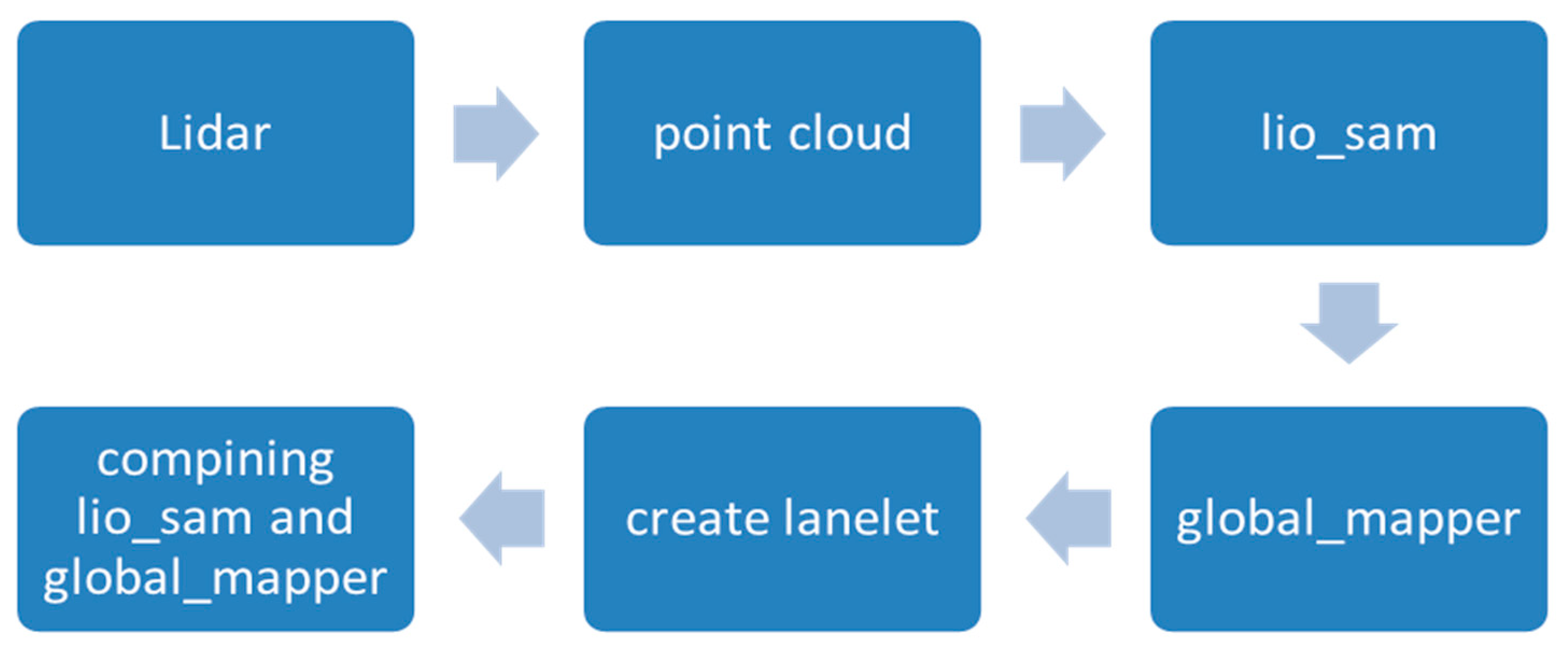

2. Methodology

2.1. System Architecture

- Sensor Acquisition Node: This node captures synchronized data streams from a 64-channel Ouster OS1 LIDAR (San Francisco, CA, USA), GNSS receivers (NovAtel PwrPak7D-E1, SwiftNav Duro Inertial), and an IMU (Lord MicroStrain 3DMGX5-AHRS; Williston, VT, USA). The data are published on ROS 2 topics for consumption by downstream nodes.

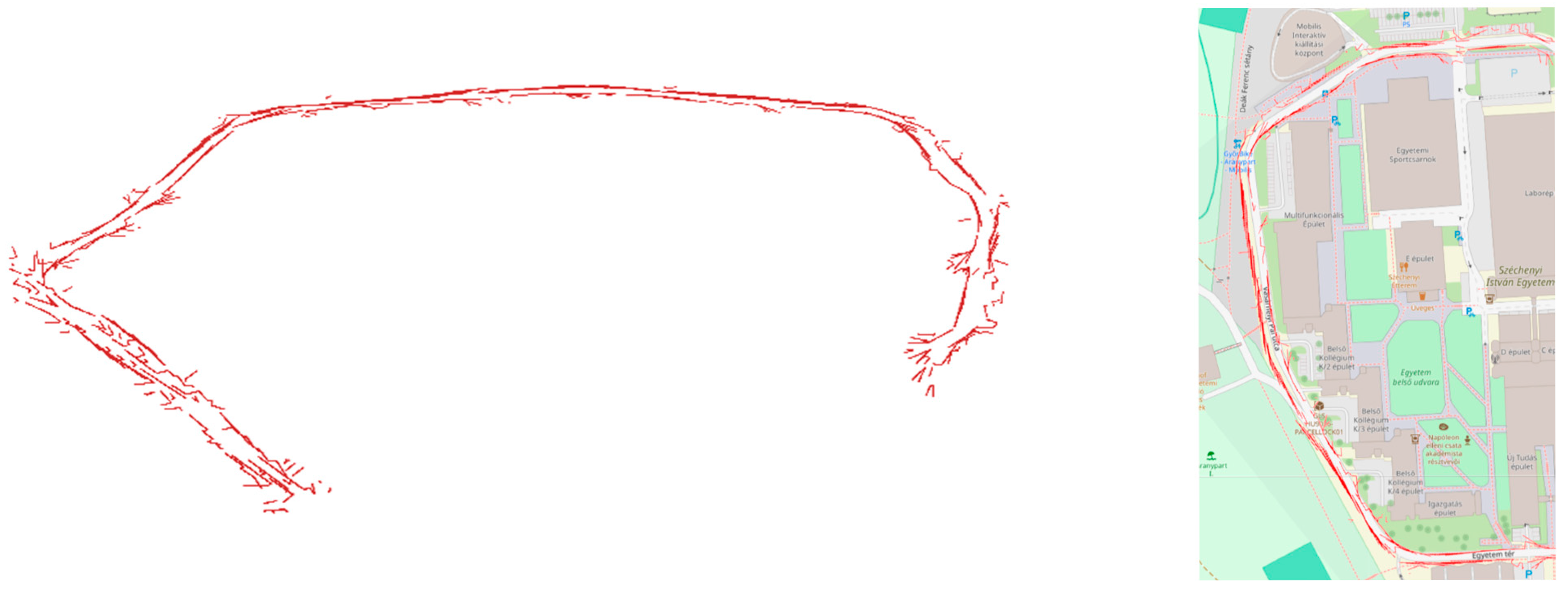
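The Sensor Acquisition Node has to deliver LIDAR, GNSS, and IMU samples that refer to the same instant. In ROS 2 this is typically handled by the approximate-time synchronizer in the `message_filters` package. The standard-library sketch below only illustrates the underlying idea: nearest-timestamp matching within a tolerance (`slop`). It is not that package's algorithm. The function names and the 50 ms default tolerance are hypothetical, not values taken from the framework.

```python
from bisect import bisect_left


def nearest_index(stamps, t):
    """Index of the value in the sorted list `stamps` closest to t."""
    i = bisect_left(stamps, t)
    if i == 0:
        return 0
    if i == len(stamps):
        return len(stamps) - 1
    # Choose whichever neighbour is closer in time.
    return i if stamps[i] - t < t - stamps[i - 1] else i - 1


def match_streams(lidar_stamps, gnss_stamps, imu_stamps, slop=0.05):
    """Pair each LIDAR scan with its nearest GNSS and IMU samples.

    Hypothetical helper. All stamps are in seconds and sorted ascending.
    A scan is dropped unless both partners lie within `slop` seconds of it.
    """
    if not gnss_stamps or not imu_stamps:
        return []
    matches = []
    for t in lidar_stamps:
        g = gnss_stamps[nearest_index(gnss_stamps, t)]
        m = imu_stamps[nearest_index(imu_stamps, t)]
        if abs(g - t) <= slop and abs(m - t) <= slop:
            matches.append((t, g, m))
    return matches
```

Dropping unmatched scans instead of extrapolating keeps poorly timed points out of the map, at the cost of discarding some data. A tighter `slop` trades coverage for temporal consistency.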

- Pose Estimation Node: This node implements LIO-SAM (LIDAR Inertial Odometry via Smoothing and Mapping) to fuse LIDAR and IMU data in real time for accurate vehicle localization. A GNSS receiver could in principle provide the same pose information, but in our experiments the SLAM-based method proved more accurate and robust; see Figure 1. The node publishes drift-minimized 6-DOF vehicle pose estimates at 20 Hz.

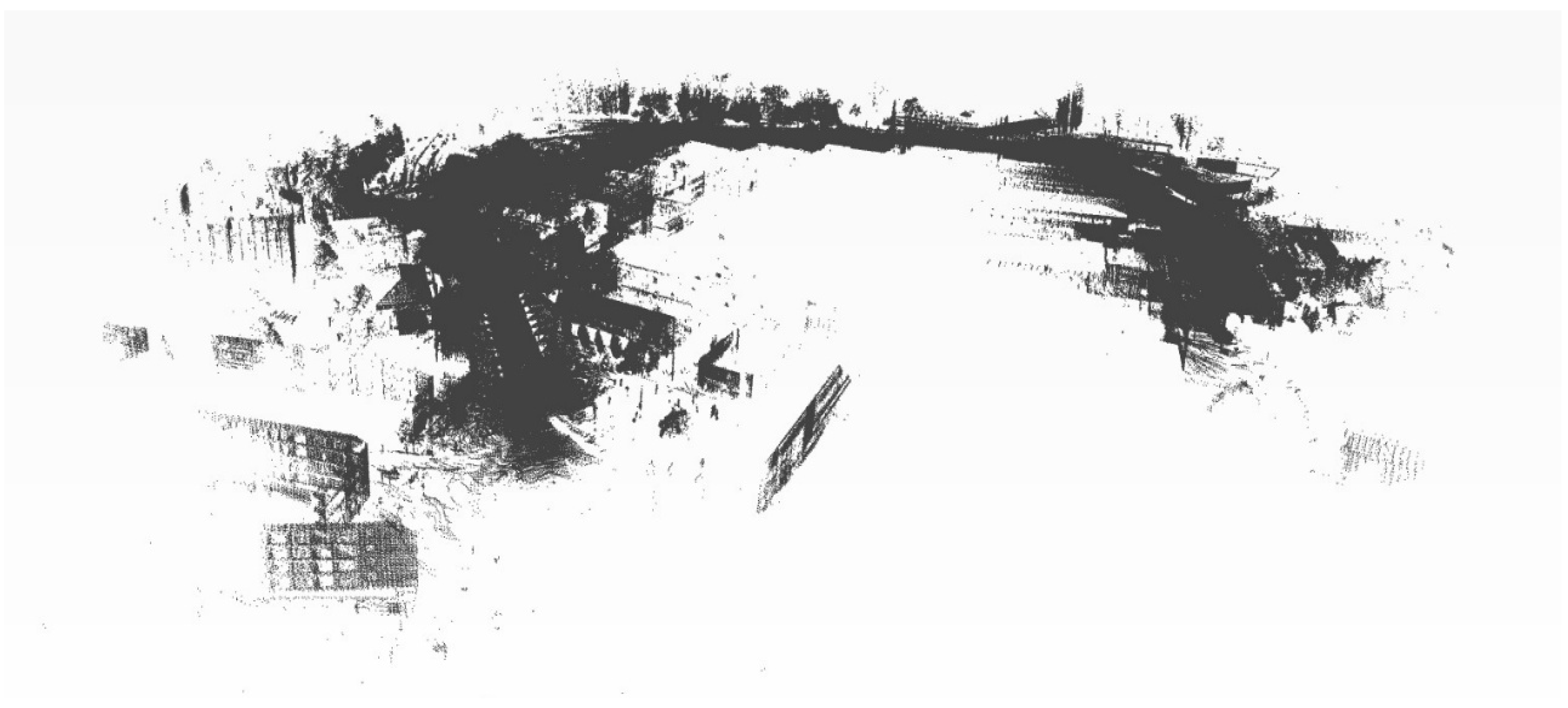
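Once LIO-SAM supplies a 6-DOF pose for a scan, the scan's points can be moved into the map frame as p_map = R p + t. Here R is the rotation encoded by the pose quaternion and t is the translation. The sketch below assumes that the LIDAR frame coincides with the pose frame (no extrinsic calibration) and uses the (x, y, z, w) quaternion order of ROS messages. `scan_to_map` is a hypothetical helper and is not part of LIO-SAM.

```python
import math


def quat_to_matrix(qx, qy, qz, qw):
    """3x3 rotation matrix of a quaternion given in (x, y, z, w) order."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    qx, qy, qz, qw = qx / n, qy / n, qz / n, qw / n  # normalize slightly non-unit input
    return [
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)],
        [2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)],
        [2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)],
    ]


def scan_to_map(points, position, orientation):
    """Transform sensor-frame (x, y, z) points into the map frame: R @ p + t.

    Hypothetical helper. position: (tx, ty, tz); orientation: (qx, qy, qz, qw).
    """
    r = quat_to_matrix(*orientation)
    tx, ty, tz = position
    return [
        (r[0][0] * px + r[0][1] * py + r[0][2] * pz + tx,
         r[1][0] * px + r[1][1] * py + r[1][2] * pz + ty,
         r[2][0] * px + r[2][1] * py + r[2][2] * pz + tz)
        for px, py, pz in points
    ]
```

For example, a 90° yaw corresponds to the quaternion (0, 0, sin 45°, cos 45°). Before the translation is added, this rotation maps a point 1 m ahead of the sensor to a point 1 m to its left, following the ROS convention of x forward and y left.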

- Semantic Segmentation Node: This node uses a modified urban_road_filter algorithm to classify and extract road and sidewalk regions from LIDAR point clouds; see Figure 2. The results are published on a ROS 2 topic for feature extraction.

- Feature Extraction Node: Part of the urban_road_filter ecosystem, this node extracts curb and sidewalk boundaries.
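Within a single LIDAR ring, a curb appears as an abrupt height change between road and sidewalk. The sketch below flags such steps by comparing the mean height of a few points before and after each position, then keeps only the peak of each run of flagged positions. It is a simplified stand-in for illustration only and is not the modified urban_road_filter algorithm used by the framework. The function name, the window size, and the 8 to 30 cm step limits are hypothetical.

```python
def curb_candidates(heights, window=3, min_step=0.08, max_step=0.30):
    """Return ring indices where the height jumps by a curb-like step.

    Hypothetical helper. heights: z values (metres) of one LIDAR ring,
    ordered by azimuth. The step at index i is
    mean(heights[i:i+window]) - mean(heights[i-window:i]).
    """
    steps = {}
    for i in range(window, len(heights) - window + 1):
        before = sum(heights[i - window:i]) / window
        after = sum(heights[i:i + window]) / window
        steps[i] = after - before

    candidates = []
    for i, step in steps.items():
        size = abs(step)  # rises (road to sidewalk) and drops both count
        if not min_step <= size <= max_step:
            continue
        # Keep only the peak of each run so that one curb yields one index.
        if size >= abs(steps.get(i - 1, 0.0)) and size > abs(steps.get(i + 1, 0.0)):
            candidates.append(i)
    return candidates
```

Running such a detector ring by ring produces curb points. Once these are transformed into the map frame, they can be chained into the boundary polylines that the vector map requires.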

2.2. SLAM Integration

3. Discussion

3.1. Significance of Findings

3.2. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alabdallah, A.; Farraj, B.J.B.; Horváth, E. ROS 2-Based Framework for Semi-Automatic Vector Map Creation in Autonomous Driving Systems. Eng. Proc. 2025, 113, 13. https://doi.org/10.3390/engproc2025113013
