1. Introduction

Glioma is the most common malignant brain tumor, accounting for 77% of all malignant tumors of the central nervous system worldwide [1]. Each year, roughly 3 to 6 new cases are diagnosed per 100,000 individuals, a figure that varies with region and the availability of diagnostic methods [2]. Gliomas comprise many subtypes, ranging from slowly progressing tumors such as low-grade astrocytomas and oligodendrogliomas to highly aggressive anaplastic gliomas and glioblastomas. Because of this diversity, survival varies considerably: some low-grade gliomas are associated with survival of more than 10 years, whereas high-grade gliomas reduce life expectancy to less than two years even under treatment [3].

Gliomas are diverse in nature and progression, making imaging essential for diagnosis, classification, and treatment planning. Among the available methods, MRI is the most reliable tool for visualizing brain anatomy and tumor characteristics. Beyond structural imaging, advanced techniques like DWI, PWI, and MRS offer insights into tumor cellularity, vascularity, and metabolic activity. These multiparametric MRI approaches enhance diagnostic accuracy, support grading, and enable better prognosis and treatment monitoring in glioma care.

Accurate survival prediction in glioma patients is critical for guiding treatment, managing expectations, and personalizing care. Given gliomas’ aggressive and heterogeneous nature, prognostic models can stratify patients for therapy intensity or trial eligibility. Traditional clinical and histopathological assessments often fall short in capturing tumor complexity. Recently, multimodal MRI has gained prominence as a non-invasive prognostic tool, integrating structural, functional, and microenvironmental insights. Studies show that combining features from T1, T2, FLAIR, and DWI sequences enhances prognostic accuracy over single-modality approaches [4]. Deep-learning methods, such as CNN-based MRI embeddings, further improve survival prediction, as demonstrated by Yoon et al. in glioblastoma [5].

This research aims to predict the overall survival of glioma patients using deep features obtained from multimodal MRI images. We examined both the conventional structural sequences—T1, T2, and FLAIR—and specialized diffusion imaging metrics, such as fractional anisotropy (Eddy_FA) and mean diffusivity (Eddy_MD), derived from high angular resolution diffusion imaging (HARDI). Each of these imaging techniques provides complementary information on the tumor, edema, and the microstructural integrity of the tumor–host interface. To capture complex patterns within each imaging modality, we employed the VGG16 convolutional neural network as both a feature extractor and classifier. A separate VGG16 model was trained for each MRI modality, enabling the network to learn modality-specific representations for predicting patient survival status. This approach allows for a direct comparison of the prognostic value of each modality. Our work contributes to a better understanding of how different imaging sequences—particularly diffusion-based metrics—can enhance non-invasive survival prediction in glioma patients.

The rest of the paper is structured as follows. Section 2 reviews the literature on glioma survival prediction with MRI and deep learning, focusing on uni-modal and multi-modal approaches. Section 3 describes the dataset, the data preparation steps, and the methodological framework, including embedding extraction and model training. Section 4 presents and analyzes the classification results for the different MRI modalities. Section 5 discusses the findings, emphasizing the contributions as well as the limitations of the study for clinical practice. Section 6 concludes the paper and offers suggestions for further work.

2. Related Works

Predicting survival outcomes in glioma patients remains one of the greatest challenges in neuro-oncology [6]. Recently, applying deep-learning models to MRI has become popular because of their ability to identify subtle imaging patterns associated with prognosis. Most of this work has used the conventional MRI modalities: T1, T2, and FLAIR. For instance, features extracted through deep learning from T1, T2, and FLAIR MRIs have been shown to be independently prognostic in diffuse gliomas, regardless of genetic and clinical markers [7]. Chaddad et al. were among the first to propose a radiomic feature extraction approach using CNNs on several standard modalities, and they correlated these features with immune markers and overall survival [8].

Aside from structural imaging, diffusion MRI has shown potential for survival prediction. Liu et al. demonstrated that overall survival in pediatric glioma was strongly associated with radiomic features from DWI as well as FLAIR intensity and tumor volume ratios [9]. Also, Rui et al. showed that QSM, which incorporates microstructural susceptibility signals, had greater value than conventional sequences like FLAIR and T1 for glioma grading and IDH mutation prediction [10].

More recent models have attempted to address modality heterogeneity in survival prediction. U-Net and 3D CNN models trained on multiple MRI modalities, for instance, performed robustly despite incomplete imaging data, showcasing the importance of modality-aware strategies [11].

Other research has focused on training a separate model per modality. For instance, a hybrid CNN–LSTM model processing each sequence separately showed considerable variability in performance across modalities, with FLAIR and T2 often offering the best features for survival classification [12]. In addition, work on the BraTS dataset provides further evidence that some single MRI sequences, such as FLAIR, outperform fused multimodal inputs in certain survival prediction tasks [13].

While these studies showcase the potential of MRI for forecasting survival in glioma, most have either used a limited set of modalities or integrated them without assessing their individual importance. In contrast, our study trains and evaluates VGG16-based classifiers on embeddings derived from separate MRI modalities, including advanced diffusion metrics like Eddy FA and MD. This approach gives a finer-grained view of each modality’s contribution to survival modeling and highlights the prognostic value of diffusion-informed sequences that have been largely overlooked.

3. Material and Method

3.1. Dataset

For this study, we used the UCSF Preoperative Diffuse Glioma MRI (UCSF-PDGM) dataset [

14], which is publicly available through The Cancer Imaging Archive (TCIA) [

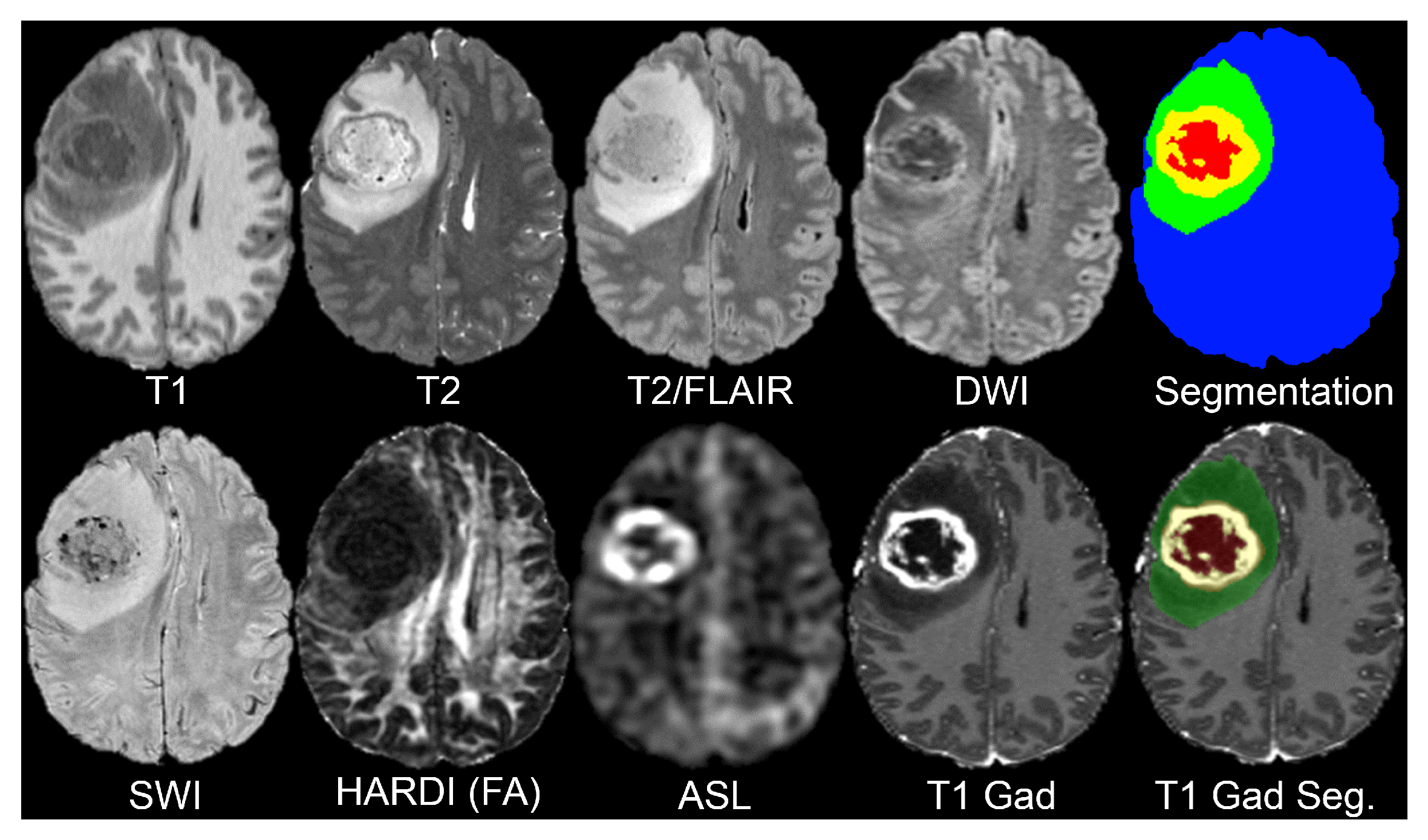

15]. The dataset consists of preoperative multimodal MRI scans from 495 adult patients with histopathologically confirmed diffuse gliomas (WHO grades II–IV). All scans were acquired at UCSF using a standardized 3 Tesla MRI protocol between 2015 and 2021 (see

Figure 1).

This dataset includes a comprehensive collection of MRI sequences, each offering unique insights into the brain structure and pathology (see Table 1). The T1-weighted scans, both pre- and post-contrast, provide detailed anatomical information and help delineate the tumor’s structural boundaries and contrast-enhancing regions. T2-weighted imaging highlights fluid content and edema, making it useful for identifying the surrounding tissue changes. T2/FLAIR (Fluid-Attenuated Inversion Recovery) suppresses cerebrospinal fluid (CSF) signals, enhancing the visibility of peritumoral lesions and infiltrative edema often missed in standard T2 scans.

To capture microstructural tissue properties, the dataset includes Diffusion-Weighted Imaging (DWI), which measures the motion of water molecules and is often used to evaluate cellular density within the tumor. Susceptibility-Weighted Imaging (SWI) is sensitive to magnetic field variations and can therefore detect blood products, calcifications, and other vascular abnormalities found in high-grade gliomas.

Tumor perfusion is assessed with Arterial Spin Labeling (ASL), a non-contrast perfusion imaging method that provides functional information. The dataset also contains High Angular Resolution Diffusion Imaging (HARDI) with 55 diffusion directions, which allows more sophisticated diffusion modeling to evaluate white matter integrity and to analyze patterns of tumor infiltration more precisely.

Lastly, segmentation maps are provided for each subject to delineate tumor regions and supply anatomical landmarks for model training and assessment. These maps are critical for localizing disease-related regions of interest across modalities and for ensuring standardized preprocessing.

This variety of imaging data underpins our investigation and supports an automated, per-modality assessment of MRI-derived mortality predictors in glioma patients.

3.2. Methodology

In this work, we designed a modality-specific deep-learning framework based on the VGG16 classification model to evaluate the risk of patient mortality using features from individual MRI sequences. For each MRI modality of the UCSF-PDGM dataset, a separate CNN was trained to classify the survival outcome as binary: deceased or surviving at follow-up.

All MRI images were processed through a shared automated preprocessing pipeline. This step consisted of standard imaging practices meant to reduce inter-scanner variability, such as intensity normalization and resizing each 2D image slice to 224 × 224 pixels, which is required for input into VGG16. Only image data were utilized; no clinical or genomic factors like age, IDH mutation, or MGMT status were included.
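The two preprocessing steps named above can be sketched in a few lines. This is only an illustration: the text does not say which normalization or interpolation scheme was used, so min-max scaling to [0, 1] and nearest-neighbour resizing are assumptions, and the function names are hypothetical.

```python
def min_max_normalize(slice_2d):
    """Rescale the intensities of one 2D slice to [0, 1] (assumed scheme)."""
    flat = [v for row in slice_2d for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Constant slice (for example, pure background): map everything to 0.
        return [[0.0 for _ in row] for row in slice_2d]
    scale = hi - lo
    return [[(v - lo) / scale for v in row] for row in slice_2d]


def resize_nearest(slice_2d, out_h=224, out_w=224):
    """Nearest-neighbour resize of a 2D slice to out_h x out_w (assumed scheme)."""
    in_h, in_w = len(slice_2d), len(slice_2d[0])
    # For every output row/column, pick the source row/column it maps to.
    rows = [min(in_h - 1, (i * in_h) // out_h) for i in range(out_h)]
    cols = [min(in_w - 1, (j * in_w) // out_w) for j in range(out_w)]
    return [[slice_2d[r][c] for c in cols] for r in rows]


def preprocess_slice(slice_2d):
    """Normalize intensities, then resize to the 224 x 224 VGG16 input size."""
    return resize_nearest(min_max_normalize(slice_2d))
```

In practice an imaging library with bilinear or bicubic interpolation would normally replace `resize_nearest`; the pure-Python version is used here only to keep the sketch self-contained.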

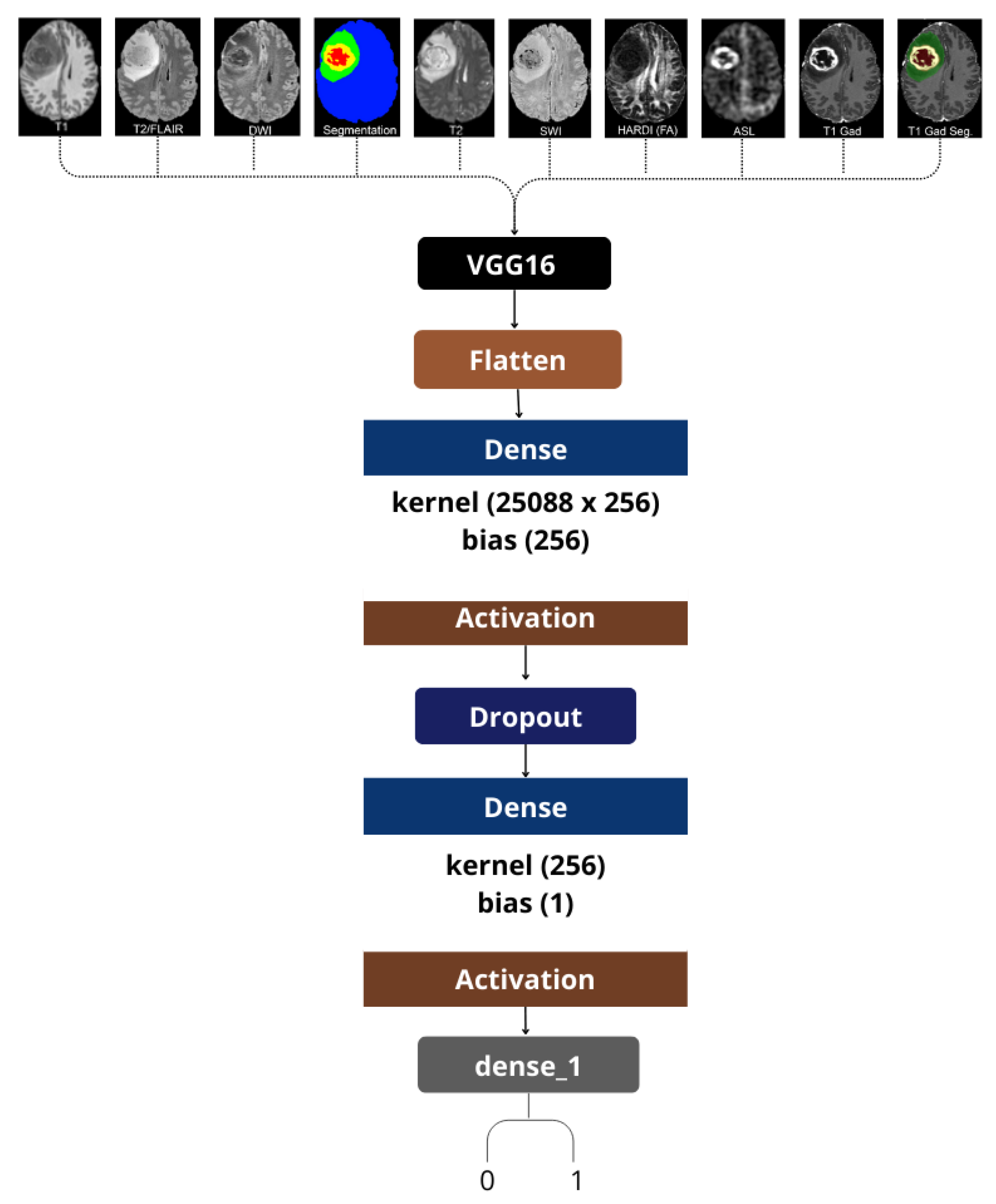

We utilized a single-modality approach, which meant that each model was only trained and assessed on one image type at a time, be it T2, DWI, or ASL (see Figure 2). This strategy allowed us to evaluate the unique predictive value of each MRI modality separately. It is worth noting that even sophisticated diffusion-derived maps, like HARDI-FA, and ASL perfusion images were utilized as separate inputs to demonstrate their prognostic capabilities.

For feature extraction, we utilized VGG16, a deep convolutional neural network architecture widely used for image classification and trained on ImageNet. We first replaced the final classification layers of the network to fit our binary classification problem. More specifically, each image was passed through the convolutional part of VGG16, and the resulting features were flattened and passed to a custom dense classification head, which included dropout layers to prevent overfitting during training.
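To make the size of the flattened feature vector concrete, the short sketch below traces a 224 × 224 input through the five convolutional blocks of the standard VGG16 architecture. The block widths and the 2 × 2 pooling are properties of VGG16 itself; the hidden-layer width used in the parameter count is a hypothetical value, since the size of the custom head is not stated here.

```python
# Output channels of the five VGG16 convolutional blocks. Each block ends with
# a 2x2 max-pool (stride 2); the 3x3 convolutions use padding 1 and therefore
# leave the spatial size unchanged.
VGG16_BLOCK_CHANNELS = (64, 128, 256, 512, 512)


def vgg16_flattened_features(height=224, width=224):
    """Return (height, width, channels, flattened_length) after the conv part."""
    channels = 3
    for out_channels in VGG16_BLOCK_CHANNELS:
        channels = out_channels
        height //= 2  # effect of the max-pool that closes the block
        width //= 2
    return height, width, channels, height * width * channels


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


h, w, c, n_features = vgg16_flattened_features()
print(h, w, c, n_features)  # 7 7 512 25088

# Hypothetical head: one hidden layer of 256 units, then a single output unit.
HIDDEN_UNITS = 256  # assumption, not taken from the paper
print(dense_params(n_features, HIDDEN_UNITS) + dense_params(HIDDEN_UNITS, 1))
```

Even with a modest hidden layer, the first dense layer holds several million weights, which is one reason dropout in the head is a sensible safeguard when the number of patients is in the hundreds.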

Each model was trained separately and assessed with stratified cross-validation. For each modality, the accuracy, precision, recall, and F1-score metrics were calculated to assess the overall performance in predicting mortality. This modular design enables prospective incorporation into ensemble or attention-based fusion systems while preserving interpretability for each imaging source.

With this framework, we aimed to determine which of the standard or advanced MRI modalities carries the strongest prognostic signal for survival among glioma patients, and to show that less frequently employed sequences such as ASL and HARDI are useful predictors of patient outcomes when analyzed independently.
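A minimal sketch of stratified cross-validation is given below. The number of folds and the level at which data were split are not specified in the text, so `k=5` and splitting by patient (rather than by 2D slice) are assumptions; splitting by patient is the usual way to keep slices from one person out of both the training and test sets. The function names are hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Split sample indices into k folds with similar class proportions.

    labels: one class label per patient (e.g. 0 = surviving, 1 = deceased).
    Returns a list of k lists of indices.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal this class round-robin so every fold receives its share.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def train_test_splits(folds):
    """Yield (train_indices, test_indices), using each fold once as test set."""
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

Each modality-specific model would then be trained and scored once per split, and the per-fold metrics averaged.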

4. Results

To assess how each MRI modality predicts the survival of patients, we trained and tested separate VGG16 models on all modalities of the UCSF-PDGM dataset. Performance for each MRI-derived input of the UCSF-PDGM dataset is summarized in Table 2, which reports classification accuracy, precision, recall, and F1-score.

Among the anatomically derived inputs, the model trained on Segmentation maps achieved the best performance overall, with an accuracy of 0.909 and an F1-score of 0.911. This indicates that annotations of tumor regions contributed strongly to the prediction of mortality. The model trained on Parenchyma segmentation also performed well, achieving an accuracy of 0.895 and an F1-score of 0.893, confirming the value of structural labels.

In diffusion imaging, Eddy L2, an eigenvalue of the diffusion tensor, reached the highest recall at 0.956 and a strong F1-score of 0.893. These results show a high sensitivity in identifying deceased patients. Eddy FA, corresponding to fractional anisotropy derived from HARDI data, also performed very well, with an accuracy of 0.891 and an F1-score of 0.886. Eddy MD (mean diffusivity), which is derived from the DWI input, achieved balanced metrics, with accuracy and F1-score both at 0.881, indicating a reliable contribution to prognosis.

The “Misc” category, which includes conventional T1, T2, T2/FLAIR, SWI, and possibly T1 Gad images, also presented consistent results (accuracy = 0.883, F1 = 0.877). This reflects the fact that conventional MRI modalities contain significant structural information about tumors, although they lack the more sophisticated physiological information provided by diffusion and perfusion imaging.

Interestingly, the Bias image type, typically representing bias-corrected anatomical images used for preprocessing, also performed well (accuracy = 0.905, F1 = 0.903), suggesting that even general anatomical normalization can yield predictive features when paired with a robust CNN.

Lastly, the individual diffusion tensor eigenvalues (Eddy L1, L2, and L3) showed varied contributions, highlighting the subtle but distinct microstructural signals captured by advanced diffusion modeling. For instance, Eddy L3 had a lower recall (0.774) but maintained decent precision (0.910), showing a trade-off between sensitivity and precision.
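The four reported metrics are linked by simple formulas, which makes it possible to sanity-check the figures quoted in this section. The sketch below is plain arithmetic on the rounded values from the text, so the derived numbers are approximations and do not replace the entries in Table 2; the function names are hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


def f1_from(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def precision_from(f1, recall):
    """Solve F1 = 2PR / (P + R) for P."""
    return f1 * recall / (2 * recall - f1)


# Eddy L3: precision 0.910 and recall 0.774 imply an F1-score of about 0.837.
print(round(f1_from(0.910, 0.774), 3))

# Eddy L2: recall 0.956 and F1 0.893 imply a precision of about 0.838.
print(round(precision_from(0.893, 0.956), 3))
```

The second calculation shows the other side of the trade-off: the very high recall of Eddy L2 comes with a noticeably lower implied precision.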

To give a complete picture of model performance, a grouped bar chart (Figure 3) shows the F1-score, accuracy, precision, and recall for all MRI modalities used in this study.

This chart makes it possible to compare the strengths and weaknesses of each input type at a glance. For example, segmentation-based inputs showed high values across all metrics, confirming their ability to capture survival-relevant features. Diffusion-derived inputs like Eddy L2 and FA demonstrated recall greater than 0.9, supporting the hypothesis that these derived metrics are sensitive to high-risk cases, while their F1 and precision values remained balanced. Standard anatomical sequences under “Misc” showed moderate performance on all metrics, without exceeding the more sophisticated modalities. The visualization underscores the differing predictive value of the various MRI types and consolidates the findings discussed above: more sophisticated inputs such as HARDI-derived maps and segmentation provide greater prognostic value for mortality prediction than conventional scans.

5. Discussion

This research investigated the mortality classification of diffuse glioma patients utilizing individual MRI modalities with a VGG16-based convolutional neural network framework. With the intent of evaluating the prognostic value of standard and advanced MRI sequences, we trained models independently on each modality. The findings indicate that models trained on specific modalities can be insightful for predicting survival and reveal the varying predictive power of each imaging type.

Segmentation-derived inputs appeared to be the most informative, likely due to their capability to isolate specific areas of interest and eliminate extraneous noise. These maps enhance discriminative power as they direct the model focus toward biologically meaningful structures. Tissue parenchyma segmentation, which concentrates on the tissue surrounding tumors, was also helpful, confirming the value of anatomical guidance in predictive modeling.

Among the other imaging modalities, diffusion-derived measures, especially those obtained from HARDI, showed the highest prognostic value. This is consistent with other research showing that diffusion features capture microstructural properties, such as cell density and tissue anisotropy, that are highly correlated with the aggressiveness and infiltrative capacity of the tumor. Each eigenvalue-based metric (for instance, L2) showed its own sensitivity to distinct survival patterns, reinforcing the idea that diffusion profiles contain highly intricate information.

Anatomical sequences that fall under “Misc”, such as T1, T2, FLAIR, and SWI, demonstrated a reasonable predictive capability. These sequences are commonly used in clinical practice, but overall they were outperformed by more focused or functionally rich modalities. This suggests that while structural data remain fundamental to diagnosis, their utility for outcome prediction may be limited unless augmented by physiological or segmented information.

In comparison to other works in the field, our modality-specific strategy demonstrates at least equal, if not better, results. For instance, Lee et al. achieved a C-index of up to 0.709 using multichannel 3D CNNs trained on T1, T2, and FLAIR MRI sequences for survival prediction [7], and Jeong et al. reported 0.86 sensitivity and 0.63 specificity when predicting PET-based tumor volumes from multimodal MRI for survival tasks [11]. Chaddad et al. integrated immune markers and radiomic features across standard MRI and reached an AUC of 0.72 in survival classification [8]. In contrast, our highest-performing models achieved accuracy exceeding 0.90 with balanced precision and recall, indicating that separating and fine-tuning models per modality, rather than fusing modalities prematurely, can yield highly effective survival prediction tools.

A crucial advantage of our methodology is its interpretability. Instead of a black-box fusion of modalities, our system sheds light on which specific types of MRIs are most relevant for predicting outcomes, and thus offers insight and understanding. This feature becomes indispensable in clinical settings where imaging is sparse or resources are limited. As an illustration, if only T2 or diffusion scans are provided, clinicians can still make decisions based on specific model performance for each imaging modality.
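As a rough illustration of how per-modality results could guide model choice when only some scans exist, the sketch below picks the best-scoring model among the inputs available for a patient. The accuracy values are the ones quoted in the Results section; the dictionary, the function name, and the use of accuracy as the ranking criterion are assumptions made for this example.

```python
# Accuracy values quoted in the Results section (inputs without a quoted
# accuracy are left out).
REPORTED_ACCURACY = {
    "Segmentation": 0.909,
    "Bias": 0.905,
    "Parenchyma": 0.895,
    "Eddy FA": 0.891,
    "Misc": 0.883,
    "Eddy MD": 0.881,
}


def best_available(available, scores=REPORTED_ACCURACY):
    """Return the available input with the highest reported score, or None."""
    candidates = [name for name in available if name in scores]
    if not candidates:
        return None
    return max(candidates, key=lambda name: scores[name])


# A site that only has diffusion-derived maps for a patient:
print(best_available(["Eddy MD", "Eddy FA"]))  # Eddy FA
```

A real deployment would need validated per-site performance rather than the figures from a single dataset, so this is a sketch of the selection logic only.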

Despite promising results, several limitations warrant discussion. First, our models operate on 2D slices, which may prevent them from capturing the full 3D tumor context and spatial progression. Future work will explore 3D CNN architectures and temporal modeling with LSTMs or Transformers that incorporate volumetric and longitudinal data. Second, clinical and molecular data such as IDH mutation or MGMT status, which are known to affect survival, were not included; a multimodal model integrating these features alongside imaging could make predictions more robust. Third, VGG16 was chosen for consistency and comparability across modalities. Exploring more contemporary architectures such as EfficientNet, Vision Transformers, or attention-based fusion models could improve performance, interpretability, and model clarity.

6. Conclusions

This research demonstrates the effectiveness of training separate deep-learning models on individual MRI modalities to predict mortality in diffuse glioma patients. Segmentation maps and diffusion-derived metrics outperformed standard structural sequences. Compared to fused multimodal models, this approach is more adaptable and clinically practical, allowing imaging strategies to be tailored based on availability and prognostic value. By isolating each modality’s contribution, the framework remains both high-performing and interpretable. Future work will extend this approach to 3D architectures, incorporate non-imaging features, and develop explainable fusion methods that preserve its modular advantages.