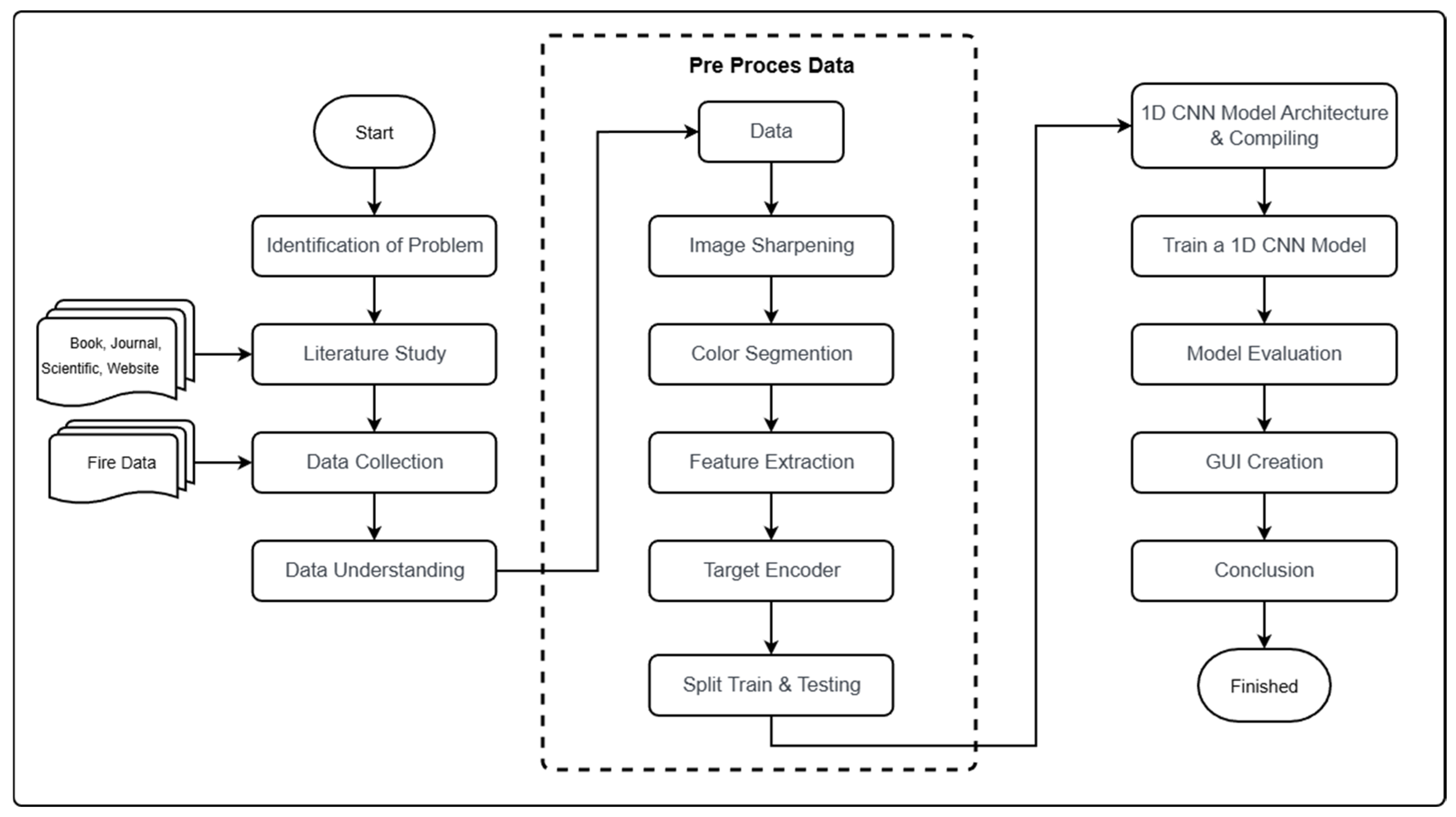

3.3. The Result of Data Pre-Processing

Once the characteristics of the dataset are known, the researchers pre-process the data accordingly. The pre-processing stages are image sharpening, color segmentation, feature extraction, target encoding, and the train-test split. Observation of the CCTV fire images shows that they contain noise, which makes them blurry. The researchers therefore applied a Gaussian Blur process to suppress the noise and improve the perceived sharpness and clarity of the CCTV images, as shown in Figure 4.

As seen in Figure 4, the processed image differs from the original in sharpness and color at various pixels, although the differences do not appear significant. This is because the Gaussian Blur process convolves the image with a kernel of a chosen size whose weights are first normalized. Once the CCTV image has been processed, it can be passed to a color segmentation step to obtain the color values of interest. For fire detection, the colors to be segmented and counted are red, orange, and white, because flames in CCTV images fall within this color range.
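The kernel normalization and convolution described above can be illustrated with a minimal sketch on a single-channel image. The kernel size, the sigma value, and the edge-replication border handling are assumptions, since the source does not state them, and the function names are hypothetical.

```python
import math

def gaussian_kernel(size, sigma):
    """Build a size x size Gaussian kernel (size must be odd) whose weights sum to 1."""
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    # Normalizing keeps the overall brightness of the image unchanged.
    return [[value / total for value in row] for row in raw]

def convolve(image, kernel):
    """Filter a 2D grayscale image with the kernel, replicating edge pixels."""
    height, width = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            acc = 0.0
            for kr, kernel_row in enumerate(kernel):
                for kc, weight in enumerate(kernel_row):
                    # Clamp neighbor coordinates to the image border.
                    rr = min(max(r + kr - half, 0), height - 1)
                    cc = min(max(c + kc - half, 0), width - 1)
                    acc += weight * image[rr][cc]
            out[r][c] = acc
    return out

# Assumed illustrative settings: a 3 x 3 kernel with sigma = 1.0.
kernel = gaussian_kernel(3, 1.0)
noisy = [[0.0] * 5 for _ in range(5)]
noisy[2][2] = 100.0  # a single noisy pixel
smoothed = convolve(noisy, kernel)
```

Because the kernel is symmetric, the weighted sum computed here is identical to a true convolution. The isolated bright pixel is spread over its neighbors, which is how the filter attenuates pixel-level noise.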

To carry out color segmentation, the researchers define a lower and an upper threshold for each color to be segmented, chosen according to which pixels should be captured. The RGB value of each color is first converted into the HSV (Hue, Saturation, Value) color format. With the thresholds fixed, a mask is created that detects whether the target color appears in the input CCTV image. The mask is built from the lower and upper thresholds in HSV format, and applying it to the image returns only the regions whose colors fall within those limits.

In Figure 5, it can be seen that the mask detects the colors within the defined thresholds, and that the detected areas are predominantly orange rather than the other colors. Once the color areas have been detected, the next step is to count the pixels detected by each mask. These counts are later entered into the data frame as feature parameters derived from the masking.
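The thresholding and counting steps can be sketched as follows, using only the Python standard library. The HSV bounds for orange are hypothetical values chosen for illustration, not the thresholds used in the study, and the function names are likewise assumptions.

```python
import colorsys

def rgb_to_hsv_degrees(pixel):
    """Convert an (R, G, B) pixel in 0..255 to (H in degrees, S in 0..1, V in 0..1)."""
    r, g, b = (channel / 255.0 for channel in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0, s, v

def color_mask(image, lower, upper):
    """Return a boolean mask that is True where the HSV value lies within [lower, upper]."""
    mask = []
    for row in image:
        mask_row = []
        for pixel in row:
            hsv = rgb_to_hsv_degrees(pixel)
            inside = all(lo <= x <= hi for x, lo, hi in zip(hsv, lower, upper))
            mask_row.append(inside)
        mask.append(mask_row)
    return mask

def count_masked(mask):
    """Count the pixels selected by the mask; this count becomes one color feature."""
    return sum(sum(row) for row in mask)

# Hypothetical bounds for orange: hue 10..40 degrees, fairly saturated and bright.
ORANGE_LOWER = (10.0, 0.5, 0.5)
ORANGE_UPPER = (40.0, 1.0, 1.0)

image = [
    [(255, 128, 0), (0, 0, 255)],      # orange, blue
    [(128, 128, 128), (255, 140, 0)],  # gray, orange
]
orange_count = count_masked(color_mask(image, ORANGE_LOWER, ORANGE_UPPER))
```

Red sits at both ends of the hue circle (near 0 and near 360 degrees), so a red mask would normally combine two hue ranges, while white is selected by low saturation and high value rather than by hue.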

Next, the researchers pre-processed the CCTV fire images for texture feature extraction. The CCTV images have a size of 224 × 224 pixels with three channels representing RGB (Red, Green, Blue). The researchers use the GLCM (Gray Level Co-occurrence Matrix) method for feature extraction, so the first stage is to convert each RGB image to grayscale. The grayscale image keeps the 224 × 224 pixel size but has only one channel. The next step is a binning process that assigns each pixel in the image to an intensity group. The bin edges are 0, 16, 32, 48, 64, 80, 96, 112, 128, 144, 160, 176, 192, 208, 224, 240, and 255, as shown in Figure 6. The binned pixels are then searched to determine which group appears most often in the image, and that group is used as the reference level for the image. Its value is calculated from the pixel intensities in adjacent bins along a predetermined direction (angle) in order to identify a number of features. Once the co-occurrence matrix has been obtained for the specified direction, statistics can be computed from it, namely contrast, homogeneity, and correlation. These statistics characterize the image from several aspects and make it easier for the learning model to capture the characteristics of the image data.
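A minimal sketch of the binning and co-occurrence statistics follows. It uses the textbook GLCM definition with a single horizontal offset (0 degrees, distance 1) and a non-symmetric, normalized matrix. These choices, and the function names, are assumptions rather than the exact procedure of the study.

```python
import math

def quantize(image, bin_width=16, levels=16):
    """Map 0..255 intensities to 16 gray levels (bin edges 0, 16, ..., 240, 255)."""
    return [[min(pixel // bin_width, levels - 1) for pixel in row] for row in image]

def glcm(level_image, levels=16, offset=(0, 1)):
    """Normalized co-occurrence matrix for one (row, column) offset."""
    d_row, d_col = offset
    height, width = len(level_image), len(level_image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(height):
        for c in range(width):
            rr, cc = r + d_row, c + d_col
            if 0 <= rr < height and 0 <= cc < width:
                counts[level_image[r][c]][level_image[rr][cc]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("image is too small for the requested offset")
    return [[value / total for value in row] for row in counts]

def glcm_features(p):
    """Contrast, homogeneity, and correlation of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n) if p[i][j] > 0]
    contrast = sum(v * (i - j) ** 2 for i, j, v in cells)
    homogeneity = sum(v / (1 + (i - j) ** 2) for i, j, v in cells)
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = math.sqrt(sum(v * (i - mu_i) ** 2 for i, _, v in cells))
    sd_j = math.sqrt(sum(v * (j - mu_j) ** 2 for _, j, v in cells))
    if sd_i == 0 or sd_j == 0:
        correlation = 1.0  # convention used here when one marginal has no variance
    else:
        correlation = sum(v * (i - mu_i) * (j - mu_j) for i, j, v in cells) / (sd_i * sd_j)
    return {"contrast": contrast, "homogeneity": homogeneity, "correlation": correlation}

# Tiny illustrative image: left column black, right column white.
stripes = [[0, 255], [0, 255]]
features = glcm_features(glcm(quantize(stripes)))
```

Each additional direction (for example 45, 90, or 135 degrees) corresponds to a different `offset` and yields another set of three statistics.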

The CCTV fire data has target values in the form of strings: default, fire, and smoke. These must be converted into integers because the model cannot take string targets as input, so a label encoding process is applied to the target data. The label encoder maps the string values to integers in alphabetical order, so default becomes 0, fire becomes 1, and smoke becomes 2, as shown in Table 1.
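The alphabetical mapping can be reproduced with a few lines of Python. This is an illustrative sketch with a hypothetical helper name, not the encoder implementation used in the study.

```python
def fit_label_encoder(labels):
    """Map each distinct label to an integer following alphabetical order."""
    classes = sorted(set(labels))
    return {name: index for index, name in enumerate(classes)}

# Example target column (illustrative values only).
targets = ["smoke", "default", "fire", "fire", "default"]
mapping = fit_label_encoder(targets)
encoded = [mapping[label] for label in targets]
```

Here `mapping` is `{"default": 0, "fire": 1, "smoke": 2}`, matching the assignment described above.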

The final step before building and training the 1D CNN model is to divide the data into training data and test data. The CCTV fire data is split into 80% training data and 20% test data. This ratio was chosen with reference to the Pareto principle: 80% of the data is used for training, and the remaining 20% is used to evaluate whether the performance of the model is good or bad.
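A minimal sketch of an 80/20 split is shown below. The shuffling and the fixed seed are assumptions; the source does not say whether the split was random or stratified.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and hold out test_fraction of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# 100 placeholder sample indices stand in for the feature rows.
train_rows, test_rows = train_test_split(range(100))
```

With 100 samples this yields 80 training rows and 20 test rows, and no row appears in both sets.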

3.4. Model 1D CNN

The results of the data pre-processing are used to train the model on the CCTV image data. Before training, the researchers designed a number of model architectures suited to the dataset in order to achieve the expected performance in classifying the CCTV fire data. The 1D CNN architecture has three types of layers: convolutional layers, pooling layers, and fully connected layers. Two different architectural arrangements are tested. Each has two convolutional layers, two pooling layers, and four fully connected layers.

The difference between the two is the addition of a dropout layer in one of the architectures. The purpose of the dropout layer is to determine how the performance of the model changes when some of the connections between nodes are dropped [8]. Dropout can also reduce overfitting. When a model overfits, it fits the training data too closely and generalizes poorly to the test data [9], so it becomes unstable and ineffective to use, as shown in Figure 7.
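The effect of a dropout layer can be illustrated with a short sketch of "inverted" dropout, the variant in which kept activations are rescaled during training. The dropout rate of 0.5 is an assumption, as the source does not report the rate used.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Zero each activation with probability `rate` and rescale the survivors."""
    if not training or rate == 0.0:
        # At inference time the layer passes its input through unchanged.
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
hidden = [1.0] * 8
train_out = dropout(hidden, rate=0.5, rng=rng)
eval_out = dropout(hidden, rate=0.5, rng=rng, training=False)
```

During training each unit is either silenced or scaled by 1 / (1 - rate), so the expected activation stays the same while the network cannot rely on any single node.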

In both experimental architectures, the first convolution layer uses the same kernel size of 5. The input to the model has 14 features with one channel, i.e., a shape of (14, 1), so the first convolution layer computes each output value from the weights of 5 adjacent features at a time, as shown in Figure 8.
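The sliding of a size-5 kernel over the 14 input features can be sketched for a single filter as follows. A stride of 1 and "valid" padding (no padding) are assumptions, and the kernel weights are placeholders rather than learned values.

```python
def conv1d_valid(signal, kernel, bias=0.0):
    """Slide the kernel over the signal with stride 1 and no padding."""
    k = len(kernel)
    return [
        sum(w * x for w, x in zip(kernel, signal[i:i + k])) + bias
        for i in range(len(signal) - k + 1)
    ]

features = [float(i) for i in range(14)]  # 14 placeholder feature values
kernel = [0.2] * 5                        # placeholder weights (a moving average)
feature_map = conv1d_valid(features, kernel)
```

Under these assumptions the feature map has 14 - 5 + 1 = 10 values per filter. With "same" padding the length would remain 14.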

Once the 1D CNN architectures have been compiled, the models can be trained on the CCTV fire data that has undergone feature extraction. The final dataset combines the texture extraction values and the color extraction values.

The experiments at 500 and 1000 epochs show that the 1D CNN architecture with dropout achieves quite good accuracy in evaluation. To assess the training process further, the accuracy curves of the training data and test data need to be examined.

In the accuracy plots of the training data and validation data in Table 2, the model looks quite stable at 500 epochs. At 1000 epochs, the accuracy curve behaves much the same as at 500 epochs, but the more epochs the model is trained for, the more it tends to overfit. This can also be seen in the training results (Table 2), which show more overfitting at 1000 epochs than at 500 epochs. Having determined the performance of the architecture with dropout, we can experiment with the architecture without dropout.

The training and test accuracy in Table 3 is better than that of the 1D CNN architecture with dropout. However, the differences between the training data and test data in accuracy, precision, and recall are clearly visible and exceed those of the architecture with dropout. From Table 3, it can therefore be concluded that the 1D CNN model without dropout also experiences overfitting.

As seen in the plots in Table 3, the accuracy patterns at 500 and 1000 epochs for the architecture without dropout are similar to those for the architecture with dropout. In each architecture, accuracy is still increasing at 500 epochs and becomes stable by 1000 epochs. The difference between Table 2 and Table 3 lies in the level of overfitting: the architecture without dropout tends to overfit more than the architecture with dropout, which is visible in the plots. A comparison of the amount of overfitting can be seen in Table 4.
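One simple way to quantify the amount of overfitting is the gap between training and test accuracy, expressed in percentage points. The source does not define its measure explicitly, so this definition is an assumption, and the accuracy values below are hypothetical rather than results from Table 4.

```python
def overfitting_gap(train_accuracy, test_accuracy):
    """Gap between training and test accuracy, in percentage points."""
    return (train_accuracy - test_accuracy) * 100.0

# Hypothetical accuracies, for illustration only.
gap_with_dropout = overfitting_gap(0.90, 0.88)
gap_without_dropout = overfitting_gap(0.95, 0.89)
```

A larger positive gap means the model performs noticeably better on the data it was trained on than on unseen data.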

Based on the accuracy and level of overfitting of each 1D CNN architecture, shown in Table 4 along with the graphs in Figure 8, the researchers use the architecture with dropout to reduce overfitting. Even with dropout layers, some overfitting remains in the model, although its value is below 3%. The researchers therefore carried out another experiment by adding the kernel_regularizer parameter to one of the layers, which aims to keep the weight values of the model small during training.
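The way a kernel regularizer shrinks weights can be sketched with an L2 penalty, which adds a term proportional to the sum of squared weights to the loss. The choice of L2 (rather than L1) and the factor of 0.01 are assumptions, since the source does not state which regularizer or strength was used.

```python
def l2_penalty(weights, factor):
    """Extra loss term: factor * sum of squared weights."""
    return factor * sum(w * w for w in weights)

def sgd_step_with_l2(weights, grads, learning_rate, factor):
    """One gradient step on (data loss + L2 penalty).

    The penalty contributes 2 * factor * w to each gradient, which pulls
    every weight toward zero at each update.
    """
    return [w - learning_rate * (g + 2.0 * factor * w) for w, g in zip(weights, grads)]

weights = [1.0, -2.0, 3.0]
penalty = l2_penalty(weights, factor=0.01)
# With a zero data gradient, the update shows the pure shrinkage effect.
shrunk = sgd_step_with_l2(weights, [0.0, 0.0, 0.0], learning_rate=0.1, factor=0.01)
```

Smaller weights limit how closely the model can fit the training data, which is consistent with the lower training metrics and the reduced overfitting reported for the regularized model.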

Based on the accuracy, precision, and recall values in Table 5, the metrics obtained with the kernel regularizer at both epoch settings are lower than those obtained without it. This is due to the smaller weight values learned during training, and it is consistent with the purpose of the kernel regularizer, namely to suppress overfitting in the 1D CNN model [10]. It can also be observed in the graphs, which give a clearer comparison between the architectures with and without a kernel regularizer.

The plots of the training results in Table 5 show that the gap between the training and validation curves is smaller than for the dropout architecture without a kernel regularizer. Using the kernel_regularizer parameter in the layer therefore further reduces overfitting in this 1D CNN model. The test metrics for the dropout architecture with and without a kernel regularizer can be seen in Table 6 below.

However, Table 6 above shows that the dropout architecture without a kernel regularizer gives better metric values at 500 epochs. We therefore use the dropout architecture without a kernel regularizer, trained for 500 epochs, for the classification and detection of the input data.

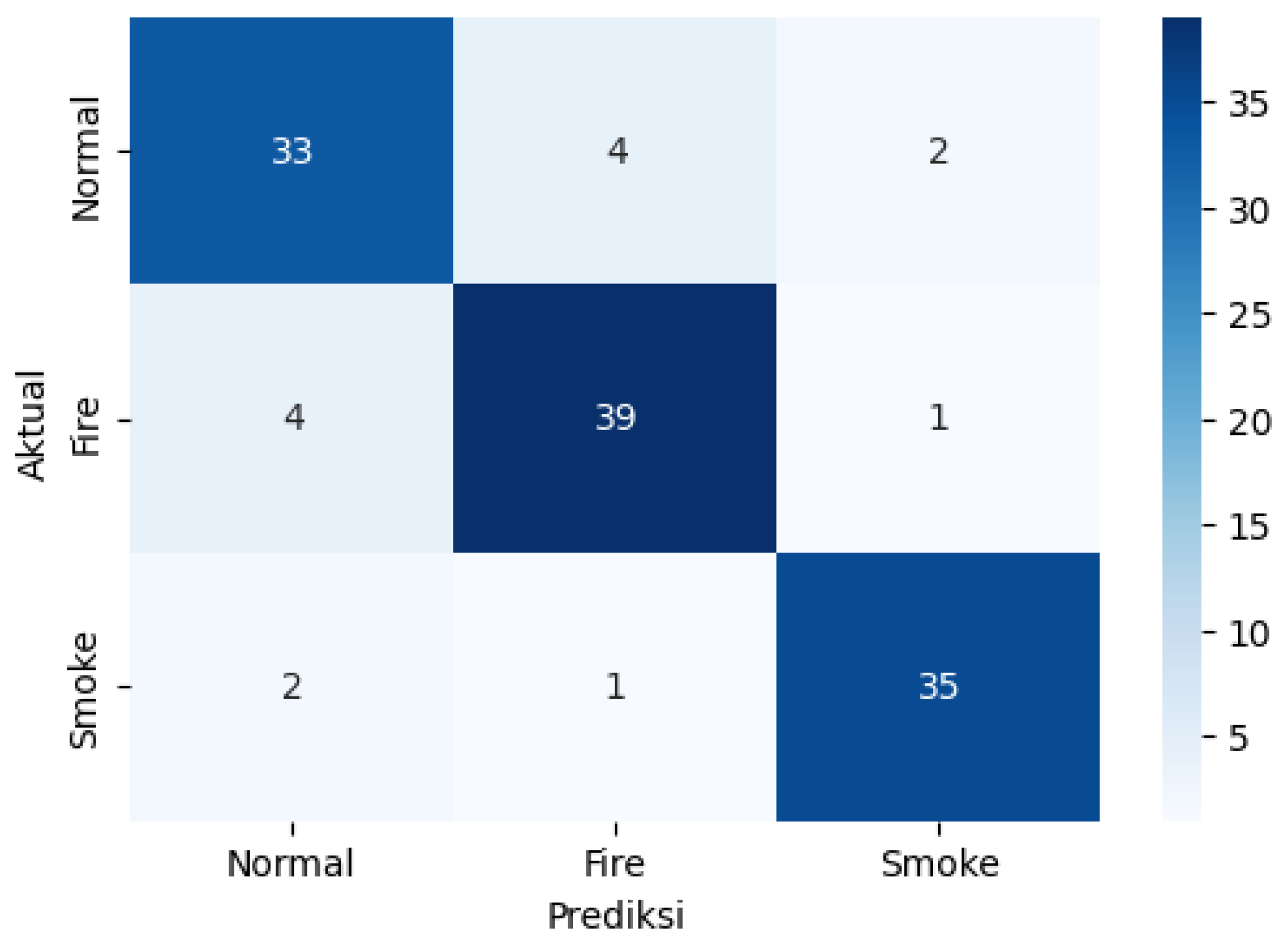

As seen in the confusion matrix (Figure 9), the model detects the normal, fire, and smoke classes well. A total of 107 data points were detected correctly: 33 for normal, 39 for fire, and 35 for smoke. In percentage terms, 84% of the normal data, 88% of the fire data, and 92% of the smoke data were detected correctly. Next, the researchers ran detection experiments on CCTV input images separate from the training data and test data. This experiment used three CCTV images, one showing fire, one showing no fire, and one showing smoke, which were entered into the model manually.
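Per-class percentages of this kind are obtained by dividing each diagonal entry of the confusion matrix by its row total (recall) or column total (precision). The sketch below shows the calculation on a small hypothetical matrix; its counts are illustrative and are not the values in Figure 9.

```python
def per_class_metrics(matrix, class_names):
    """matrix[i][j] is the number of samples of true class i predicted as class j."""
    metrics = {}
    for k, name in enumerate(class_names):
        true_positive = matrix[k][k]
        actual = sum(matrix[k])                    # row total
        predicted = sum(row[k] for row in matrix)  # column total
        metrics[name] = {
            "recall": true_positive / actual if actual else 0.0,
            "precision": true_positive / predicted if predicted else 0.0,
        }
    return metrics

def accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)

# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class).
toy_matrix = [
    [8, 1, 1],  # normal
    [2, 7, 1],  # fire
    [0, 1, 9],  # smoke
]
toy_metrics = per_class_metrics(toy_matrix, ["normal", "fire", "smoke"])
toy_accuracy = accuracy(toy_matrix)
```

In this toy case 24 of 30 samples are on the diagonal, so the accuracy is 0.8, and the recall of the smoke class is 9 / 10 = 0.9.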

The prepared CCTV input images have different dimensions, so in this experiment they are resized to 224 × 224 pixels. For the fire image, the 1D CNN model is expected to detect a fire and return the output “Fire!”. For the image without fire, it is expected to return “Normal”, and for the smoky image, it is expected to return “Smoke!”.

The detection results for these separate input images in Table 7 above show that some input images were not detected correctly. This happens because, when the two-dimensional input image is reduced to a one-dimensional feature vector, its texture values or color levels can fall within the range of values that the model has learned for a different class. The model then assigns the input to a class it studied previously, which indicates that more elaborate two-dimensional to one-dimensional processing is required than texture feature extraction and color segmentation alone.