Multimedia-Based Assessment of Scientific Inquiry Skills: Evaluating High School Students’ Scientific Inquiry Abilities Using Cloud Classroom Software

Abstract

1. Introduction

2. Background Knowledge

2.1. Theoretical Framework of Inquiry Competence

2.1.1. Scientific Inquiry Competencies Across Research Institutions

2.1.2. Assessment Approaches and Disciplinary Variations in Scientific Inquiry Competencies

2.2. Role of Interactive Animations in Science Education

2.2.1. Cognitive Load Theory (CLT) and Multimedia Learning Theory (MLT)

2.2.2. Supportive Effects of Animation and Interaction Design on Inquiry Learning

2.3. Current Development of Online Inquiry Assessments

2.3.1. Development of International Online Inquiry Platforms

2.3.2. Current Status and Challenges in Taiwan

3. Materials and Methods

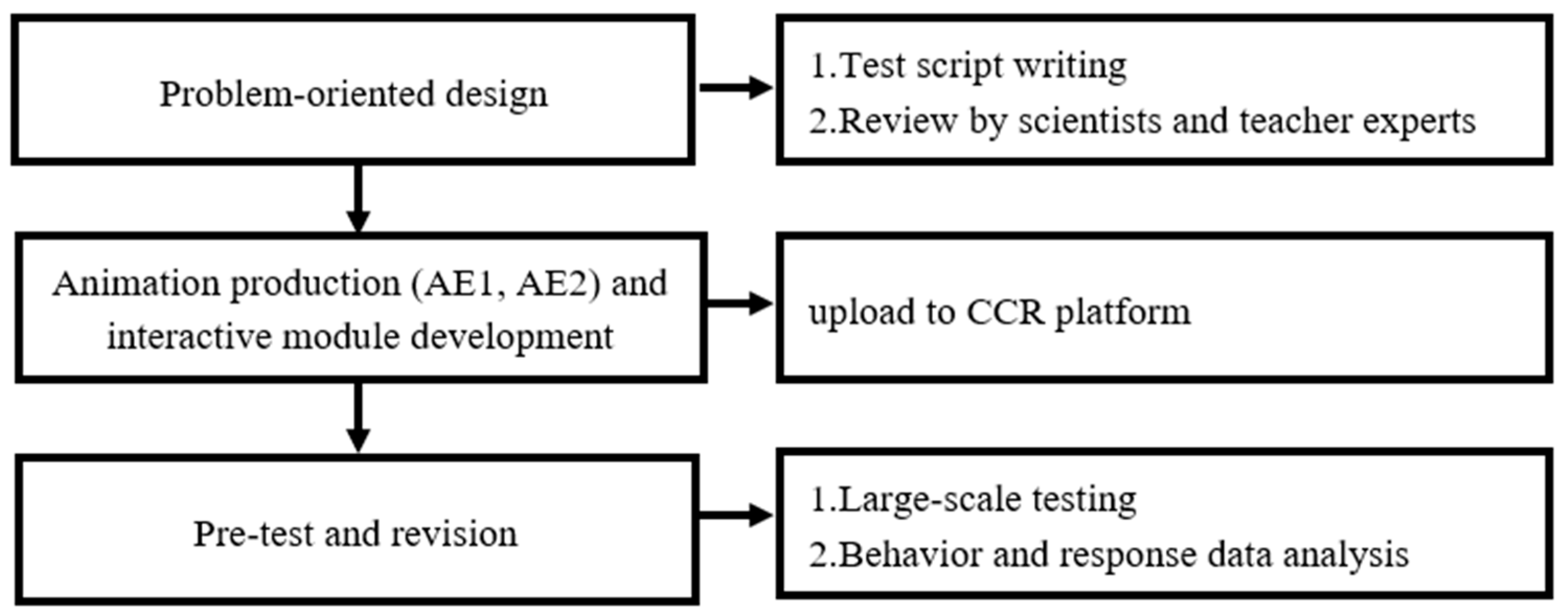

3.1. Implementation of the Inquiry-Based Assessment

Inquiry Competency Framework and Items

3.2. Participants and Data Collection

3.3. Evaluation Framework and Scoring Method

Scoring Process

- Scoring for multiple-choice questions

- Scoring rubric for reasoning and argumentation (open-ended questions)

- Full credit: Responses in this category demonstrate the ability to provide logical and comprehensive reasoning supported by coherent explanations. Students accurately interpret scientific phenomena, establish clear connections between variables, and justify their conclusions with evidence. For example, a full-credit response might explain how hydroxyl radicals react with methane under ultraviolet light to produce carbon dioxide, linking the reaction mechanism to observed changes in experimental conditions.

- Partial credit: Responses in this category provide reasonable but incomplete reasoning. Students may correctly identify some aspects of the scientific phenomena but fail to fully explain the mechanisms or relationships between variables. For example, a partial-credit response might note that hydroxyl radicals react with methane but omit details about the resulting chemical products or their effects on the experimental outcomes.

- No credit: Responses in this category are irrelevant, incorrect, or absent. Students fail to address the scientific phenomena or provide reasoning that aligns with the question’s context. For example, a no-credit response might present unrelated information or omit an explanation entirely.

- Scoring rubric for critical thinking (open-ended questions)

- Full credit: Responses in this category exhibit a comprehensive understanding of the experimental objectives, providing accurate reasoning and clear justification for the inclusion of specific variables. Students demonstrate the ability to align experimental choices with research goals and support their conclusions with logical explanations. For example, a full-credit response may explain why both experiments are necessary to compare the effects of hydroxyl radicals on methane oxidation under different conditions.

- Partial credit: Responses in this category demonstrate a basic understanding of the experimental objectives but lack depth or completeness. Students may correctly identify the need for certain variables but fail to fully justify their choices or provide detailed reasoning. For example, a partial-credit response may state that both experiments are necessary but omit specific references to the variables or their interactions.

- No credit: Responses in this category fail to demonstrate an understanding of the experimental objectives or provide reasoning that aligns with the question’s requirements. Students may offer unrelated, incorrect, or incomplete answers, such as suggesting the omission of one experiment without justification.
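The three rubric levels above can be read as an ordered scale. The following is a minimal sketch of how such a scale might be combined with the multiple-choice items into one total. The point values (2/1/0 for full/partial/no credit, 1 point per correct multiple-choice answer) and the names `Credit` and `total_score` are illustrative assumptions; the source does not state the actual weights.

```python
from enum import IntEnum


class Credit(IntEnum):
    """Rubric levels for open-ended items; the point values are assumed."""
    NO = 0
    PARTIAL = 1
    FULL = 2


def total_score(mc_correct: list[bool], open_ended: list[Credit]) -> int:
    """Sum 1 point per correct multiple-choice item plus the rubric points."""
    return sum(mc_correct) + sum(int(level) for level in open_ended)


# Hypothetical student: Tasks 1-4 are multiple-choice, Tasks 5-6 are open-ended.
score = total_score(
    [True, True, False, True],
    [Credit.FULL, Credit.PARTIAL],
)
print(score)
```

Using an ordered enumeration keeps the rubric levels comparable (`Credit.FULL > Credit.PARTIAL`) while leaving the numeric weights easy to change.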

3.4. Scoring Method

4. Results

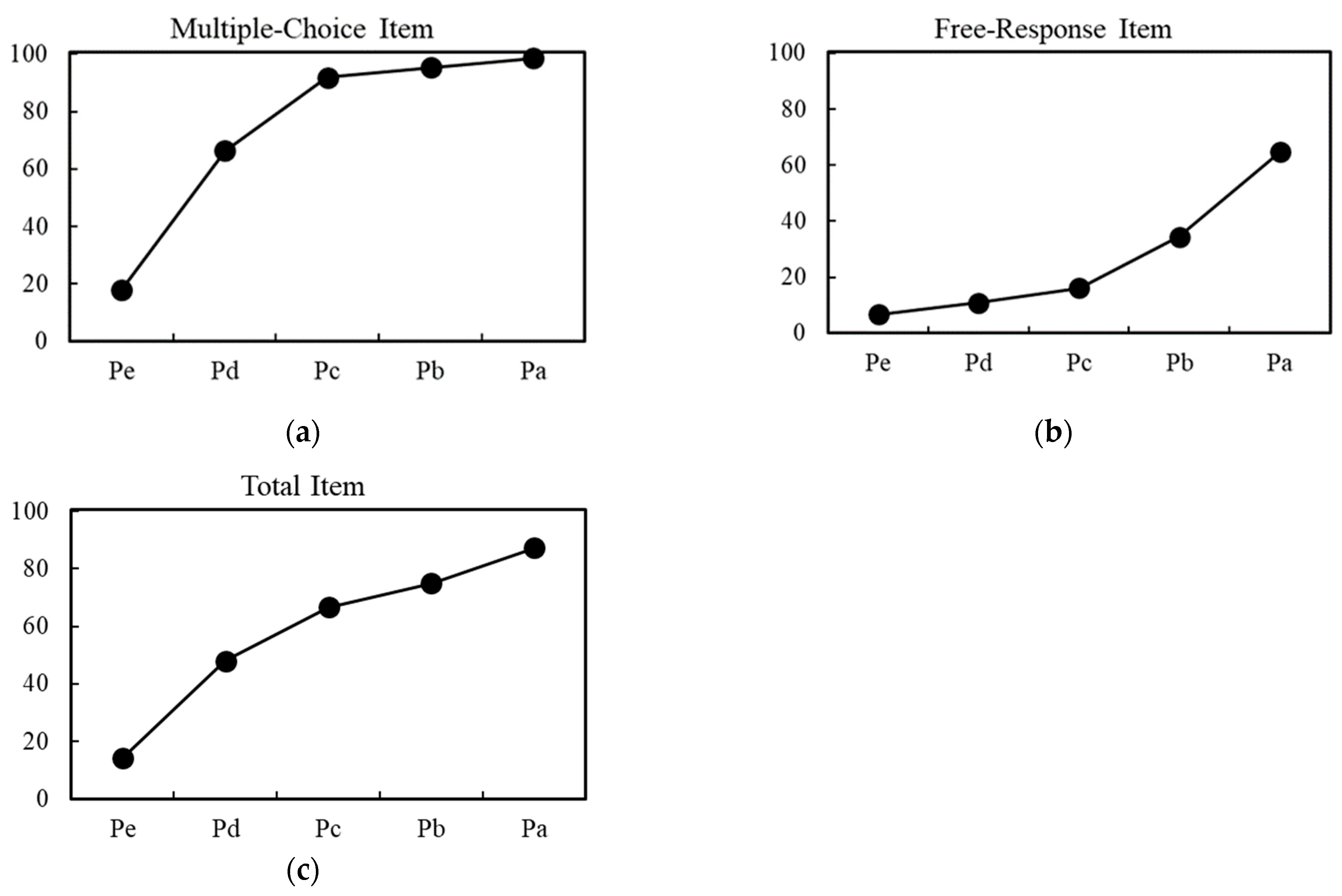

4.1. Inquiry Performance

4.2. Visual Analysis of Item Discrimination

5. Discussion

5.1. Educational and Assessment Implications

- Scaffolding inquiry skills through multistage task design:

- Aligning assessment tools with competency-based curricula:

- Promoting inquiry culture through assessment innovation:

- Balancing equity and scalability through interactive assessments:

5.2. Differences and Challenges of Student Performance

- Disciplinary background

- Gender difference

- Further alignment of items with inquiry competencies

5.3. Limitations and Future Research

- Limited coverage of inquiry competency dimensions

- Need for advanced automated scoring tools

6. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Institution | Core Dimensions | Strengths | Limitations |
|---|---|---|---|
| Taiwan Curriculum | Cognitive intelligence and problem-solving | Integrates creativity with logical rigor | Neglects ethics and interdisciplinary links |
| OECD PISA | Explanation, evaluation, and data-driven | Real-world relevance | Limited hypothesis-generation tasks |
| NGSS (U.S.) | Practices and crosscutting concepts | Aligns with STEM careers | Overlooks metacognitive strategies |

| No. | Type | Description | Objective |
|---|---|---|---|
| AE1 | Animation experiment 1 (visual representation) | Students observe an animated experiment showing the temperature changes in three bottles containing different gases. | To provide a foundational understanding of how gas composition influences temperature in a controlled environment. |
| Task 1 | Data analysis (multiple-choice) | Based on the table, Kevin drew the graph; however, he forgot to describe the symbols. Help Kevin match the description to each line. | To develop the ability to interpret data and link graphical representations to descriptive elements. |
| Task 2 | Data analysis (multiple-choice) | Based on the table, what role does Group A play in the experiment? The experimental group or the control group? | To understand the experimental design and distinguish between the experimental and control groups. |
| AE2 | Animation experiment 2 (understanding chemical reactions) | Students watch a second animation where the bottles are exposed to ultraviolet light, simulating chemical reactions involving hydroxyl radicals. | To visualize and understand the dynamic interactions between ultraviolet light, hydroxyl radicals, and methane in atmospheric processes. |
| Task 3 | Data analysis (multiple-choice) | Kevin paired the curves wrong again. Please help Kevin match the correct description to each line. | To refine students’ skills in analyzing experimental data and interpreting graphical trends accurately. |
| Task 4 | Data analysis (multiple-choice) | Compare (Experiment 1) and (Experiment 2) just drawn. What are the changes in lines A, B, and C? | To assess the ability to compare and interpret changes in experimental outcomes across multiple experimental setups. |
| Task 5 | Reasoning and argumentation (open-ended) | If the data you collected are correct, in the change line of Experiment 2, the A and B lines have hardly changed, but the C line has become closer to the B line. Why? | To encourage critical thinking and reasoning about experimental outcomes based on observed data. |
| Task 6 | Critical thinking (open-ended) | Was only Experiment 2 required for experimental purposes, or were both experiments required? Why? | To evaluate students’ understanding of experimental design, the necessity of controls, and the role of multiple trials in validating scientific findings. |

| Variable | Number of Students | P | Ph | Pl | Pa | Pb | Pc | Pd | Pe | D | D1 | D2 | D3 | D4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All | 26,823 | 61 | 83 | 30 | 88 | 76 | 68 | 50 | 16 | 0.53 | 0.11 | 0.08 | 0.19 | 0.34 |
| Male | 12,747 | 60 | 83 | 28 | 87 | 76 | 67 | 44 | 15 | 0.54 | 0.11 | 0.09 | 0.23 | 0.30 |
| Female | 13,989 | 63 | 87 | 35 | 88 | 76 | 68 | 50 | 16 | 0.52 | 0.12 | 0.08 | 0.18 | 0.34 |
| Science and Engineering | 19,776 | 65 | 87 | 42 | 88 | 76 | 68 | 56 | 21 | 0.45 | 0.12 | 0.08 | 0.12 | 0.35 |
| Arts and Humanities | 7,047 | 51 | 76 | 18 | 81 | 69 | 58 | 31 | 11 | 0.57 | 0.12 | 0.11 | 0.27 | 0.20 |
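The column headers in this table are not defined in the surviving text. One plausible reading, offered here only as an assumption, is that Ph and Pl are the pass rates (in percent) of the high- and low-scoring groups and that D is the classical item discrimination index, D = (Ph − Pl) / 100. The sketch below checks that reading against the reported values; the function name `discrimination` and the dictionary layout are hypothetical.

```python
# (Ph, Pl, reported D) copied from the table above.
rows = {
    "All": (83, 30, 0.53),
    "Male": (83, 28, 0.54),
    "Female": (87, 35, 0.52),
    "Science and Engineering": (87, 42, 0.45),
    "Arts and Humanities": (76, 18, 0.57),
}


def discrimination(ph: float, pl: float) -> float:
    """Classical discrimination index from high/low group pass rates in percent.

    Assumes Ph and Pl are percentages; this interpretation is not confirmed
    by the source.
    """
    return (ph - pl) / 100


for name, (ph, pl, reported) in rows.items():
    computed = discrimination(ph, pl)
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Under this assumption the computed values agree with the reported D column to within 0.01, a gap that could arise if the published percentages were rounded before tabulation.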

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yeh, S.-C.; Chang, C.-Y.; Ngo, V.T.H. Multimedia-Based Assessment of Scientific Inquiry Skills: Evaluating High School Students’ Scientific Inquiry Abilities Using Cloud Classroom Software. Eng. Proc. 2025, 103, 16. https://doi.org/10.3390/engproc2025103016
