Abstract

Autonomous driving systems have the potential to reduce traffic accidents dramatically; however, conventional modules often struggle to accurately detect risks in complex environments. This study presents a novel risk recognition system that integrates the reasoning capabilities of a large language model (LLM), specifically GPT-4, with traffic engineering domain knowledge. By incorporating surrogate safety measures such as time-to-collision (TTC) alongside traditional sensor and image data, our approach enhances the vehicle’s ability to interpret and react to potentially dangerous situations. Utilizing the realistic 3D simulation environment of CARLA, the proposed framework extracts comprehensive data—including object identification, distance, TTC, and vehicle dynamics—and reformulates this information into natural language inputs for GPT-4. The LLM then provides risk assessments with detailed justifications, guiding the autonomous vehicle to execute appropriate control commands. The experimental results demonstrate that the LLM-based module outperforms conventional systems by maintaining safer distances, achieving more stable TTC values, and delivering smoother acceleration control during dangerous scenarios. This fusion of LLM reasoning with traffic engineering principles not only improves the reliability of risk recognition but also lays a robust foundation for future real-time applications and dataset development in autonomous driving safety.

1. Introduction

Recent NHTSA reports indicate that 94% of traffic accidents stem from human error [1], prompting significant interest in the use of autonomous driving for accident prevention [2]. However, current systems still struggle to interpret the complex interactions among small vehicles, emergency vehicles, and pedestrians [3]. To address these shortcomings, recent research has begun leveraging large language models (LLMs) such as GPT-4 [4], which exhibit emergent reasoning capabilities comparable to human judgment [5]. Many of these studies, though, overlook transportation domain knowledge, particularly surrogate safety measures (SSMs), and rely on unrealistic 2D simulations.

This study enhances risk recognition and system reliability by integrating GPT-4 with the time-to-collision (TTC) metric in a realistic 3D simulation environment, CARLA 0.9.14. Our approach combines advanced LLM reasoning with critical transportation engineering insights, offering a promising direction for more robust and context-aware autonomous driving systems.

2. Methods

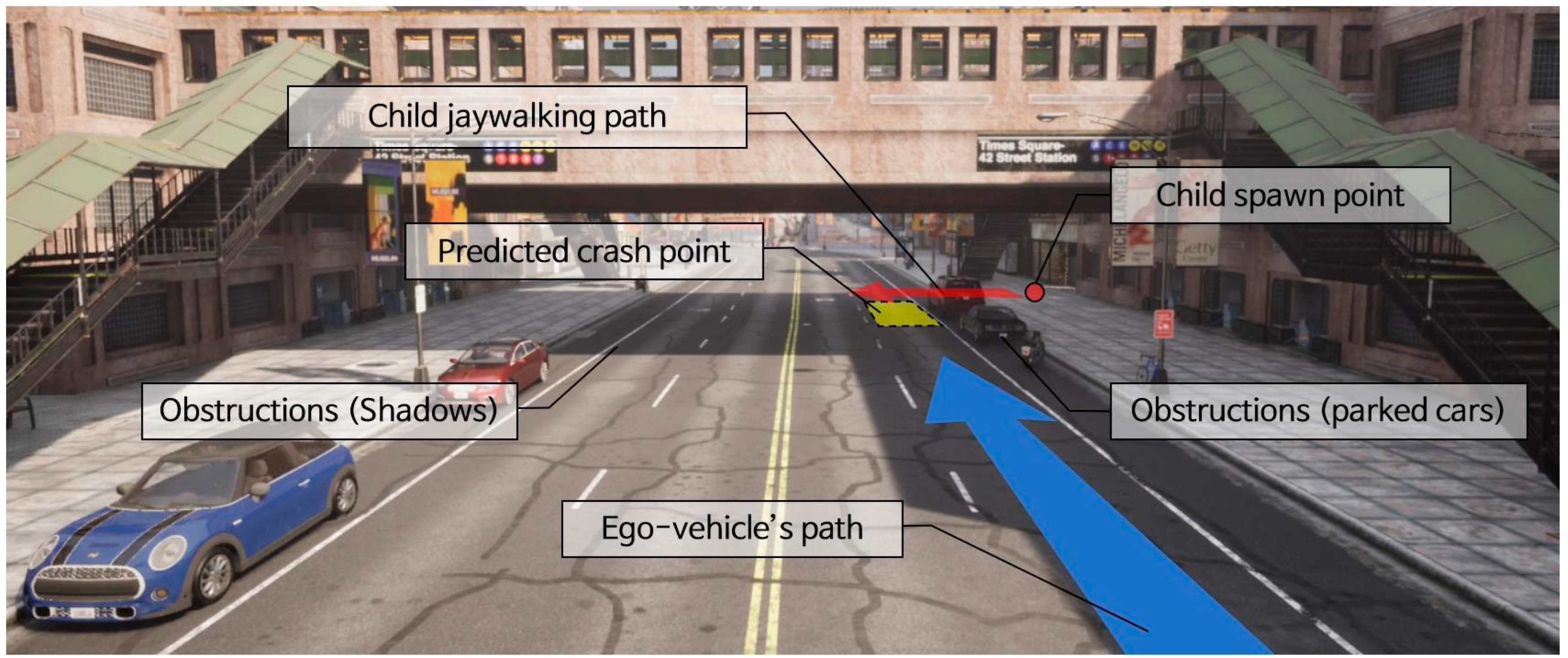

This study aims to detect potentially dangerous situations that are apparent to humans but challenging for conventional models. We implemented a dangerous scenario in CARLA, an open-source 3D simulation platform. Specifically, we selected the viaduct area of CARLA, where shadows and parked vehicles create visibility obstacles, as shown in Figure 1.

Figure 1. Experimental scenario with potential risk.

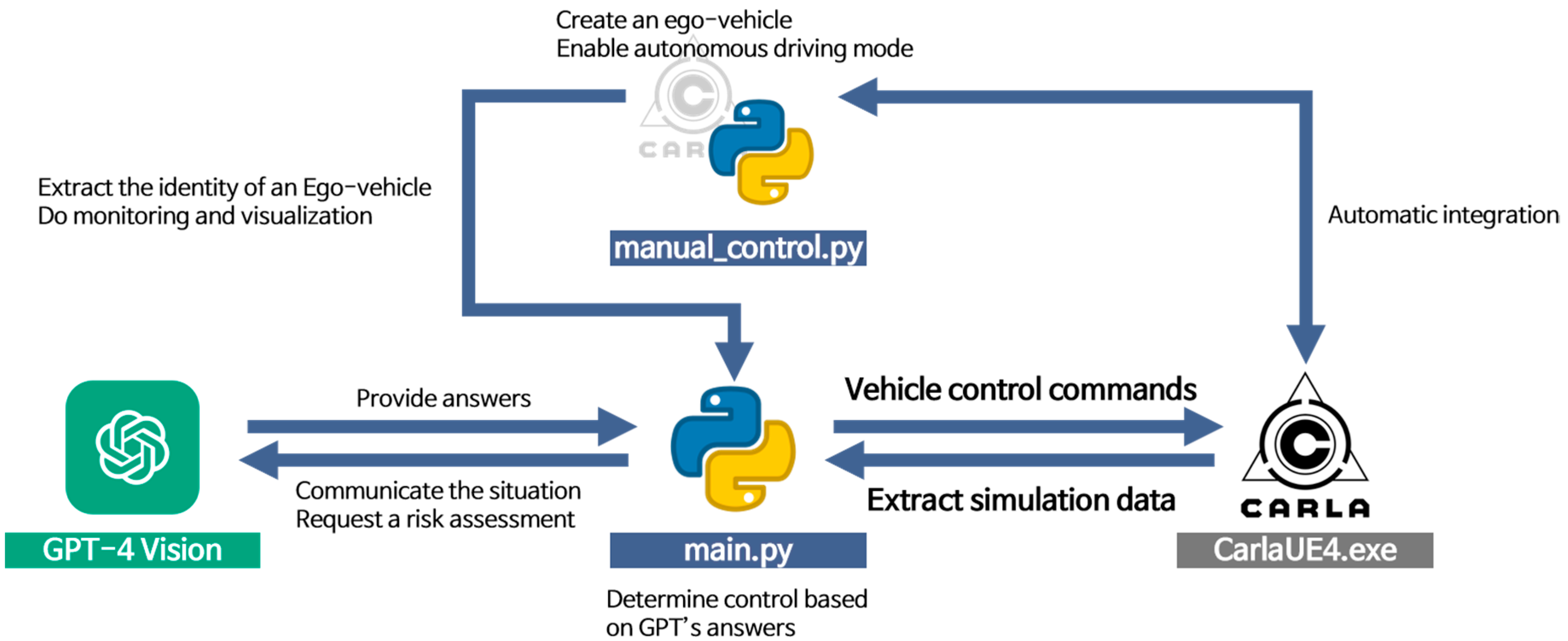

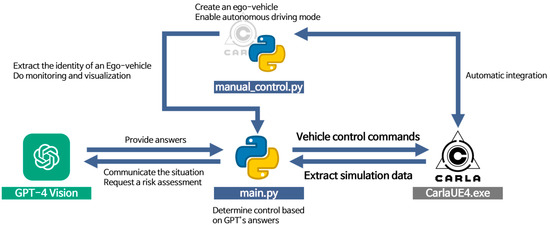

In our scenario, a child moving at 5 m/s unexpectedly crosses in front of a parked vehicle, requiring the autonomous vehicle to detect this risk from approximately 30 m away and respond appropriately. Our framework, shown in Figure 2, operates the ego-vehicle in autonomous mode within CARLA and extracts key data: a front RGB image, four pieces of surrounding object information (object ID, type, Euclidean distance, and time-to-collision), and five driving parameters (speed, acceleration, throttle, steering, and brake). This information is reformatted into natural language and input into GPT-4, which evaluates the traffic situation and determines whether a dangerous condition exists, responding with “YES” or “NO” along with its reasoning. Based on GPT-4’s judgment, vehicle control commands are issued, and CARLA simulates the resulting traffic safety outcomes.
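The data-to-text step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the paper gives neither the TTC formula nor the prompt wording, so the sketch assumes a constant-velocity TTC (distance divided by closing speed) and an invented sentence template. `NearbyObject`, `time_to_collision`, and `describe_scene` are hypothetical names and are not part of the CARLA or OpenAI APIs.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class NearbyObject:
    """One surrounding object (hypothetical container, not a CARLA type)."""
    object_id: int
    object_type: str
    distance_m: float         # Euclidean distance to the ego-vehicle
    closing_speed_mps: float  # assumed: positive when the gap is shrinking


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Constant-velocity TTC (an assumption); infinite if the gap is not shrinking."""
    if closing_speed_mps <= 0.0:
        return math.inf
    return distance_m / closing_speed_mps


def describe_scene(objects: List[NearbyObject], speed_mps: float,
                   accel_mps2: float, throttle: float, steer: float,
                   brake: float) -> str:
    """Turn driving parameters and object data into a natural-language prompt."""
    lines = [
        f"Ego vehicle: speed {speed_mps:.1f} m/s, "
        f"acceleration {accel_mps2:.1f} m/s^2, throttle {throttle:.2f}, "
        f"steering {steer:.2f}, brake {brake:.2f}."
    ]
    for obj in objects:
        ttc = time_to_collision(obj.distance_m, obj.closing_speed_mps)
        ttc_text = f"{ttc:.2f} s" if math.isfinite(ttc) else "no closing course"
        lines.append(
            f"Object {obj.object_id} ({obj.object_type}): "
            f"distance {obj.distance_m:.1f} m, TTC {ttc_text}."
        )
    # Hypothetical question wording; the paper only states the YES/NO format.
    lines.append('Is this situation dangerous? Answer "YES" or "NO" and explain why.')
    return "\n".join(lines)


# Illustrative values only (not measurements from the experiment).
prompt = describe_scene(
    [NearbyObject(7, "pedestrian", 30.0, 10.0)],
    speed_mps=10.0, accel_mps2=0.0, throttle=0.4, steer=0.0, brake=0.0,
)
print(prompt)
```

The resulting text would be sent to the LLM together with the front RGB image; that call is omitted here because the paper does not specify the request format.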

Figure 2. Modular roles of the proposed framework.
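The last stage of the framework, turning the LLM's “YES”/“NO” answer into a control command, might look like the following sketch. It is an assumption-laden illustration rather than the method used in the study: the paper does not say how replies are parsed or how hard the vehicle brakes, so the sketch reads the first word of the reply, treats any unclear reply as dangerous, and applies full braking. `ControlCommand`, `parse_risk_decision`, and `control_from_decision` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ControlCommand:
    """Hypothetical stand-in for a vehicle control message."""
    throttle: float
    brake: float


def parse_risk_decision(reply: str) -> bool:
    """Return True if the reply signals danger.

    Only a leading "NO" counts as safe; an empty or unclear reply is
    treated as dangerous (a conservative choice assumed for this sketch).
    """
    words = reply.strip().split()
    first = words[0].strip('"“”\'.,:;!').upper() if words else ""
    return first != "NO"


def control_from_decision(is_dangerous: bool, current_throttle: float) -> ControlCommand:
    """Brake fully when danger is flagged; otherwise keep the current throttle."""
    if is_dangerous:
        return ControlCommand(throttle=0.0, brake=1.0)  # assumed full braking
    return ControlCommand(throttle=current_throttle, brake=0.0)


# Illustrative reply text, not an actual GPT-4 output from the experiment.
command = control_from_decision(
    parse_risk_decision("YES. A child is crossing ahead of the parked vehicle."),
    current_throttle=0.4,
)
print(command)
```

Defaulting to the dangerous branch when the reply cannot be parsed trades some unnecessary braking for safety; a real system would need to weigh that choice.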

3. Results

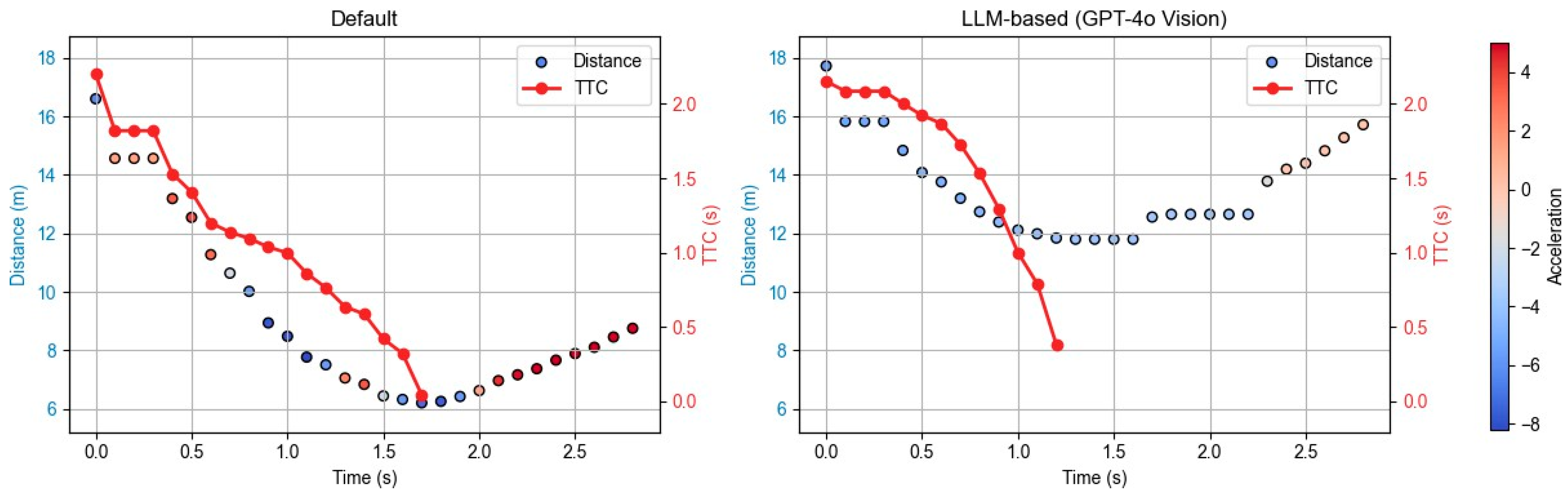

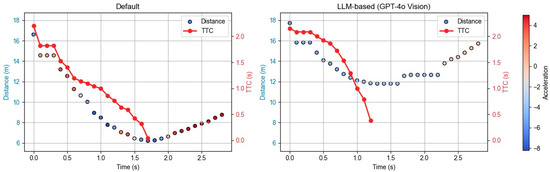

Given this information and a request to assess the risk, GPT-4 recommended applying the brakes of the autonomous vehicle, as shown in Table 1. To evaluate performance, we extracted the distance to the child pedestrian, TTC, and vehicle acceleration at 0.1 s intervals. Figure 3 indicates that the LLM-based module significantly improves traffic safety compared to the default module. With the default module, the distance was reduced to 6.2 m at 1.7 s after control, whereas the LLM-based module maintained a distance of 11.8 m at 1.3 s, about 5.6 m greater. Moreover, the LLM-based module provided a more stable TTC, achieving 0.38 s at 1.2 s versus 0.04 s at 1.7 s with the default module. Additionally, the default module exhibited erratic acceleration patterns, failing to recognize dangerous situations.

These findings highlight the benefits of integrating an LLM with domain knowledge, using TTC as a critical safety metric, to enhance autonomous driving performance. While CARLA-based simulations validate the approach, future work must incorporate real-time capabilities. The proposed framework serves as a foundation for building a learning dataset for further advancements in autonomous perception technology.

Table 1.

Results of GPT-4-based risk assessment.

Figure 3. The safety performance of the default and LLM-based modules.
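The comparison in this section rests on series sampled at 0.1 s intervals. The sketch below shows one way such series could be summarized; it is illustrative only. The helper names are hypothetical, the sample lists are placeholder values rather than experimental data, and the mean absolute step used for acceleration is just one possible roughness measure, not the one used in the study. The only figures taken from the text are the two reported distances, 11.8 m and 6.2 m.

```python
from typing import List, Tuple


def minimum_with_time(samples: List[float], step_s: float = 0.1) -> Tuple[float, float]:
    """Return the smallest sample and the time (s) at which it occurs."""
    index = min(range(len(samples)), key=lambda i: samples[i])
    return samples[index], round(index * step_s, 3)


def mean_absolute_step(samples: List[float]) -> float:
    """Average absolute change between consecutive samples (a simple roughness proxy)."""
    steps = [abs(b - a) for a, b in zip(samples, samples[1:])]
    return sum(steps) / len(steps) if steps else 0.0


# Placeholder series for illustration; these are NOT the experiment's data.
distance_m = [12.0, 9.5, 7.0, 8.2]
acceleration_mps2 = [0.0, -1.0, -2.0, -2.0]

print(minimum_with_time(distance_m))          # closest approach and when it happened
print(mean_absolute_step(acceleration_mps2))  # lower means smoother control

# Distance margin reported in the text: LLM-based minus default module.
margin_m = round(11.8 - 6.2, 1)
print(margin_m)
```

Applying `minimum_with_time` to the distance and TTC series of each module would yield the kind of value-and-time pairs quoted above.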

Author Contributions

Conceptualization, D.M. and D.-K.K.; methodology, D.M. and D.-K.K.; software, D.M.; validation, D.M.; formal analysis, D.M. and D.-K.K.; investigation, D.M.; resources, D.M.; data curation, D.M.; writing—original draft preparation, D.M.; writing—review and editing, D.M. and D.-K.K.; visualization, D.M.; supervision, D.-K.K.; project administration, D.M.; funding acquisition, D.-K.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Korea Institute of Police Technology (No.092021C28S02000), the National Research Foundation of Korea (No.2022R1A2C2012835), and the Korea Ministry of Land, Infrastructure, and Transport’s Innovative Talent Education Program for Smart City.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- National Highway Traffic Safety Administration (NHTSA). Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey. Available online: http://www-nrd.nhtsa.dot.gov/Pubs/812115.pdf (accessed on 15 February 2025).

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

- Fu, D.; Li, X.; Wen, L.; Dou, M.; Cai, P.; Shi, B.; Qiao, Y. Drive like a human: Rethinking autonomous driving with large language models. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D.; et al. Emergent abilities of large language models. arXiv 2022, arXiv:2206.07682.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).