Spatio-Temporal PM2.5 Forecasting Using Machine Learning and Low-Cost Sensors: An Urban Perspective †

Abstract

1. Introduction

2. Materials and Methods

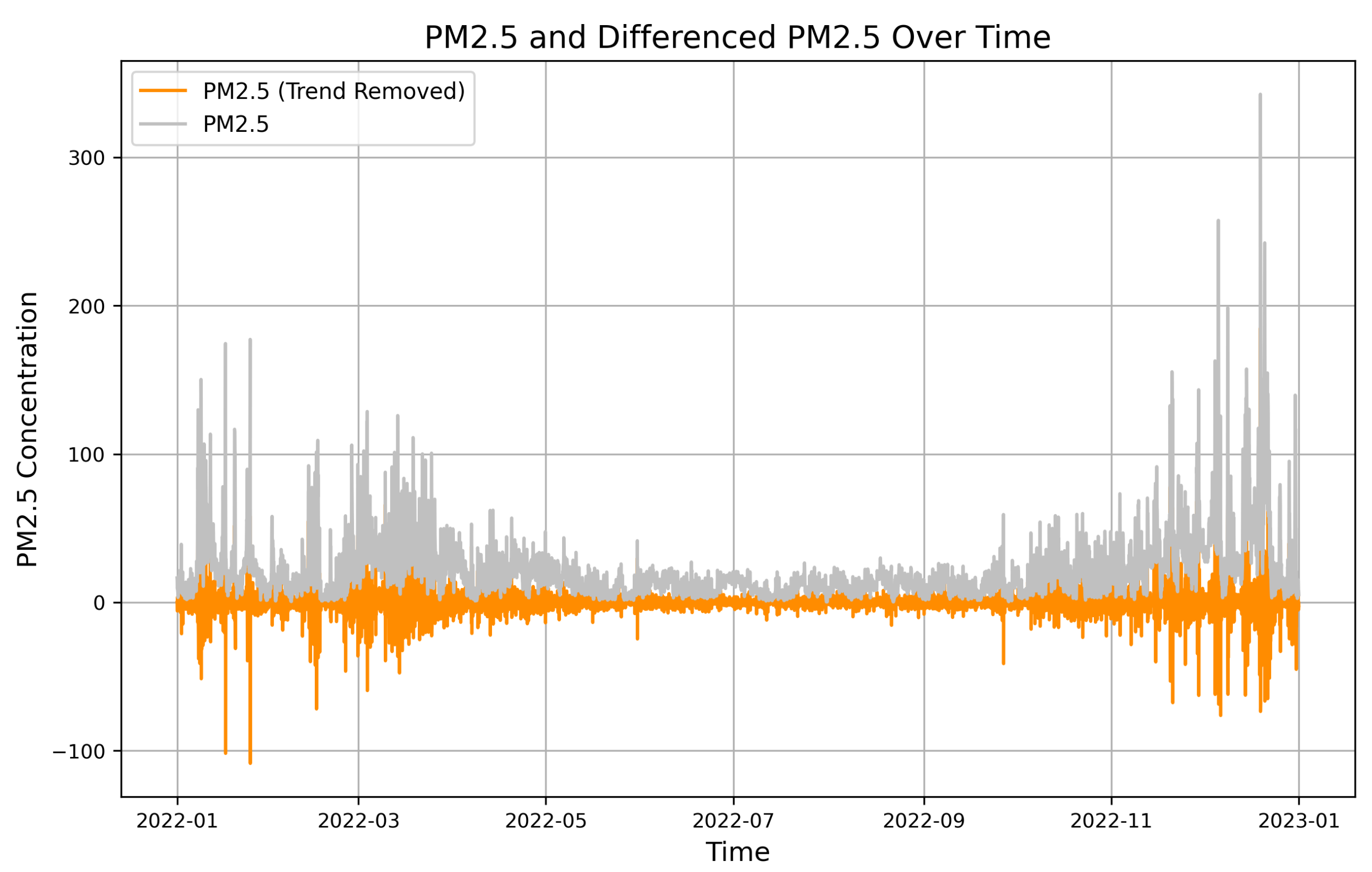

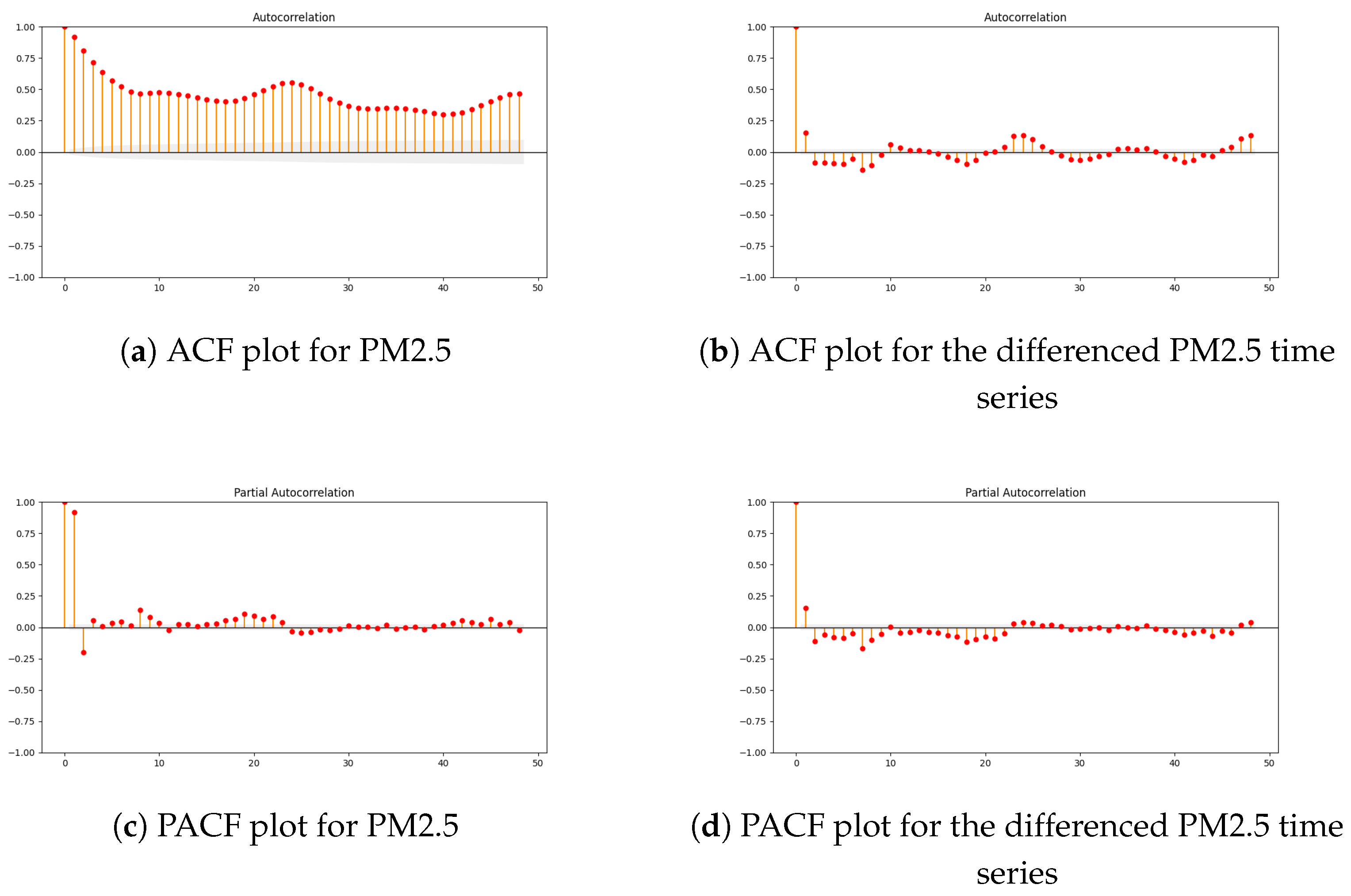

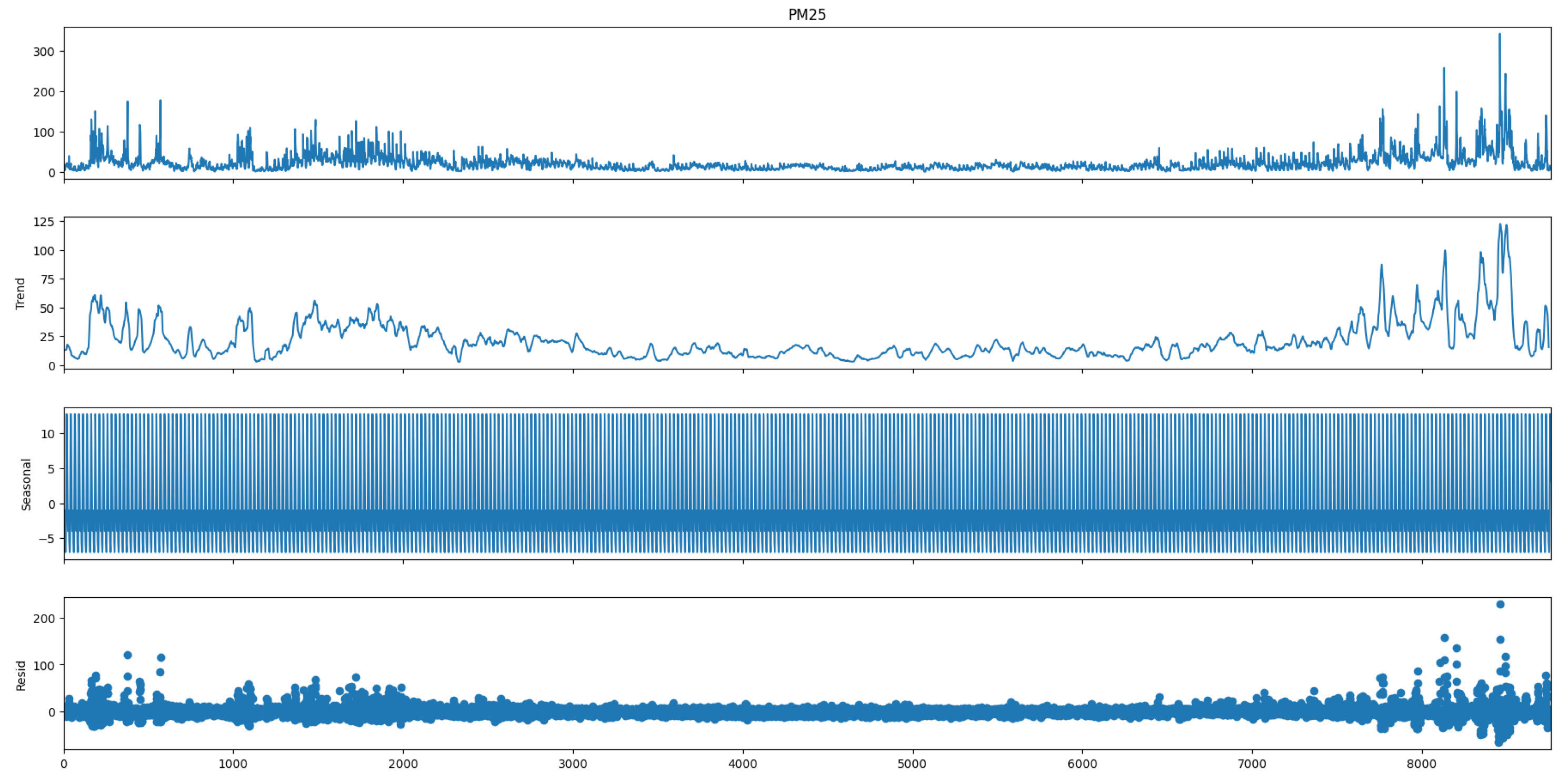

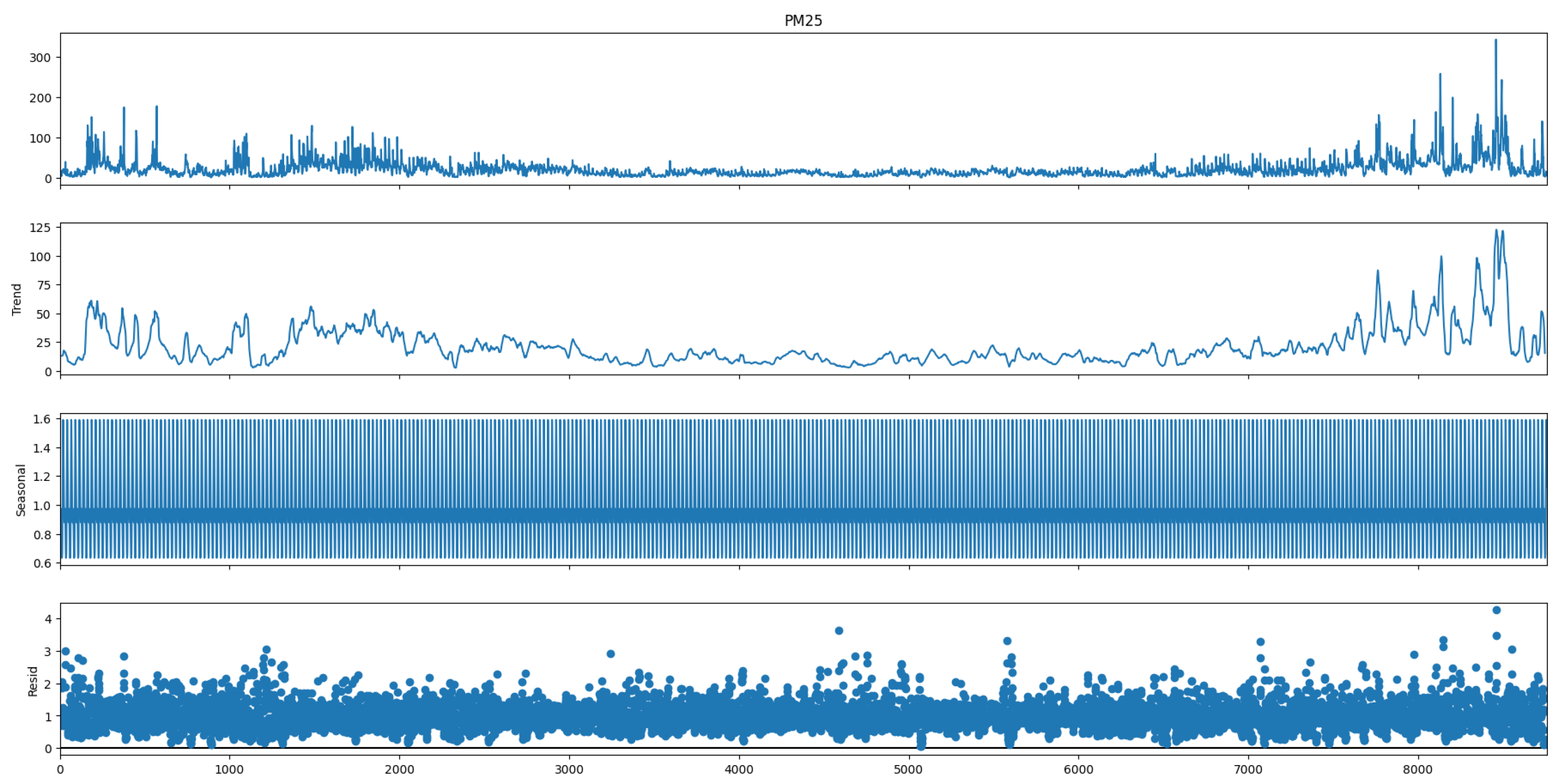

2.1. Data Analysis

- $y'_t$—the change in the observation value between time $t$ and $t-1$,

- $y_t$—the observation value at time $t$,

- $y_{t-1}$—the observation value at time $t-1$.
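First-order differencing, the change between consecutive observations, can be sketched in plain Python. This is a minimal illustration that assumes a lag of one time step; the function name `first_difference` and the sample readings are hypothetical and not taken from the authors' code.

```python
from typing import List, Sequence


def first_difference(series: Sequence[float]) -> List[float]:
    """Return the change between each observation and the one before it.

    The result is one element shorter than the input because the first
    observation has no predecessor.
    """
    return [curr - prev for prev, curr in zip(series, series[1:])]


# Hypothetical hourly PM2.5 readings in µg/m3 (illustrative values only).
pm25 = [12.0, 15.0, 14.0, 20.0, 18.0]
diffs = first_difference(pm25)
print(diffs)  # [3.0, -1.0, 6.0, -2.0]
```

Differencing of this kind is a common way to remove a slowly varying level before re-checking stationarity, for example with the KPSS test.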

- $y_t$—the time series,

- $S_t$—seasonality in the time series,

- $T_t$—trend in the time series,

- $R_t$—residual values in the time series.
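An additive split of a series into trend, seasonality, and residual can be illustrated with a classical moving-average decomposition. Note that this sketch is a simpler stand-in for the LOESS-based STL procedure [22], not an implementation of it; the function name `additive_decompose`, the odd-period restriction, and the sample values are assumptions made for illustration.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple


def additive_decompose(
    y: Sequence[float], period: int
) -> Tuple[List[Optional[float]], List[float], List[Optional[float]]]:
    """Split y into (trend, seasonal, residual) so that y = trend + seasonal + residual.

    Classical decomposition with a centred moving average (not STL).
    Trend and residual are None at the edges where the window does not fit.
    """
    if period < 3 or period % 2 == 0:
        raise ValueError("this sketch supports odd periods >= 3 only")
    n = len(y)
    if n < 2 * period:
        raise ValueError("need at least two full periods of data")

    half = period // 2
    trend: List[Optional[float]] = [None] * n
    for t in range(half, n - half):
        # Averaging over one full period cancels the seasonal component.
        trend[t] = mean(y[t - half : t + half + 1])

    # Seasonal component: mean detrended value for each position in the period.
    phase_means = []
    for p in range(period):
        detrended = [y[t] - trend[t] for t in range(p, n, period) if trend[t] is not None]
        phase_means.append(mean(detrended))
    offset = mean(phase_means)  # centre the seasonal pattern on zero
    seasonal = [phase_means[t % period] - offset for t in range(n)]

    residual = [
        None if trend[t] is None else y[t] - trend[t] - seasonal[t] for t in range(n)
    ]
    return trend, seasonal, residual


# Synthetic series: linear trend (10 + t) plus a repeating pattern (+1, 0, -1).
y = [11.0, 11.0, 11.0, 14.0, 14.0, 14.0, 17.0, 17.0, 17.0]
trend, seasonal, residual = additive_decompose(y, period=3)
print(seasonal[:3])  # [1.0, 0.0, -1.0]
```

On this synthetic input the recovered seasonal pattern matches the one used to build the series, and the interior residuals are zero. Real sensor data would leave a non-trivial residual.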

2.2. Data Workflow

2.3. PM2.5 Concentrations Forecasting

- LSTM (Long Short-Term Memory) is a type of recurrent neural network (RNN) designed to process sequential data while retaining long-term dependencies. In time-series forecasting, LSTMs learn patterns from past observations and use their memory cells to retain relevant information, which mitigates the vanishing-gradient problem of plain RNNs and supports more accurate predictions of future values [19].

- XGBoost (Extreme Gradient Boosting) is an optimized gradient boosting framework that improves predictive performance through regularization, parallel processing, and efficient handling of missing data. In time-series forecasting, XGBoost constructs an ensemble of decision trees by iteratively minimizing the residual errors, effectively capturing complex temporal dependencies and nonlinear relationships in the data [18]. XGBoost has shown strong performance in spatiotemporal tasks, consistent with prior studies [26], though LightGBM is also a strong alternative [27].

- Ridge Regression is a linear regression technique that incorporates an $L_2$ regularization term to prevent overfitting by penalizing large coefficient magnitudes. In time-series forecasting, Ridge Regression helps model temporal dependencies by keeping parameter estimates stable, especially when dealing with multicollinearity or highly correlated lagged features [17].
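Of the three models, Ridge Regression is simple enough to sketch without external libraries. The example below builds lagged features from a series and fits the closed-form ridge solution on centred data with an unpenalised intercept. All names (`make_lagged`, `fit_ridge`, `predict`), the number of lags, the penalty `alpha`, and the readings are illustrative assumptions, not the authors' configuration.

```python
from typing import List, Sequence, Tuple


def make_lagged(series: Sequence[float], n_lags: int) -> Tuple[List[List[float]], List[float]]:
    """Build rows [y_{t-1}, ..., y_{t-n_lags}] with target y_t."""
    X = [[series[t - k] for k in range(1, n_lags + 1)] for t in range(n_lags, len(series))]
    y = list(series[n_lags:])
    return X, y


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w


def fit_ridge(X: List[List[float]], y: List[float], alpha: float) -> Tuple[List[float], float]:
    """Closed-form ridge: w = (Xc^T Xc + alpha * I)^-1 Xc^T yc on centred data."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    # The L2 penalty adds alpha to the diagonal of the Gram matrix.
    gram = [
        [sum(r[i] * r[j] for r in Xc) + (alpha if i == j else 0.0) for j in range(p)]
        for i in range(p)
    ]
    rhs = [sum(r[i] * v for r, v in zip(Xc, yc)) for i in range(p)]
    coef = solve(gram, rhs)
    intercept = y_mean - sum(c * m for c, m in zip(coef, x_mean))
    return coef, intercept


def predict(coef: List[float], intercept: float, row: Sequence[float]) -> float:
    """Linear prediction for one feature row."""
    return intercept + sum(c * v for c, v in zip(coef, row))


# Hypothetical hourly PM2.5 readings in µg/m3 (illustrative values only).
pm25 = [18.0, 21.0, 25.0, 24.0, 22.0, 19.0, 17.0, 18.0, 20.0, 23.0]
X, y = make_lagged(pm25, n_lags=2)
coef, intercept = fit_ridge(X, y, alpha=1.0)
next_value = predict(coef, intercept, [pm25[-1], pm25[-2]])
print(coef, intercept, next_value)
```

Adding `alpha` to the diagonal keeps the linear system well conditioned even when neighbouring lags are almost collinear, which is the stabilising effect described for Ridge Regression above.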

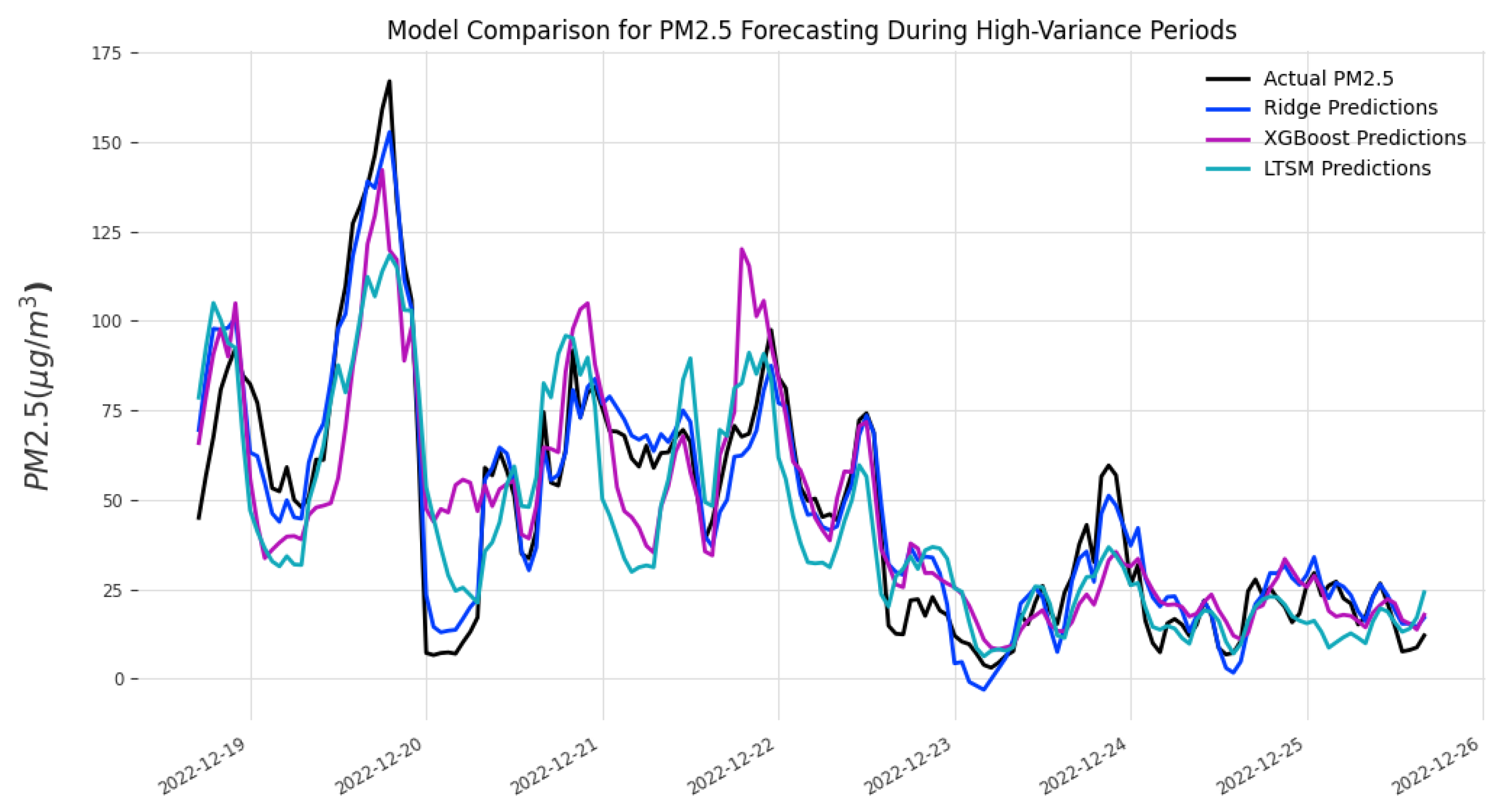

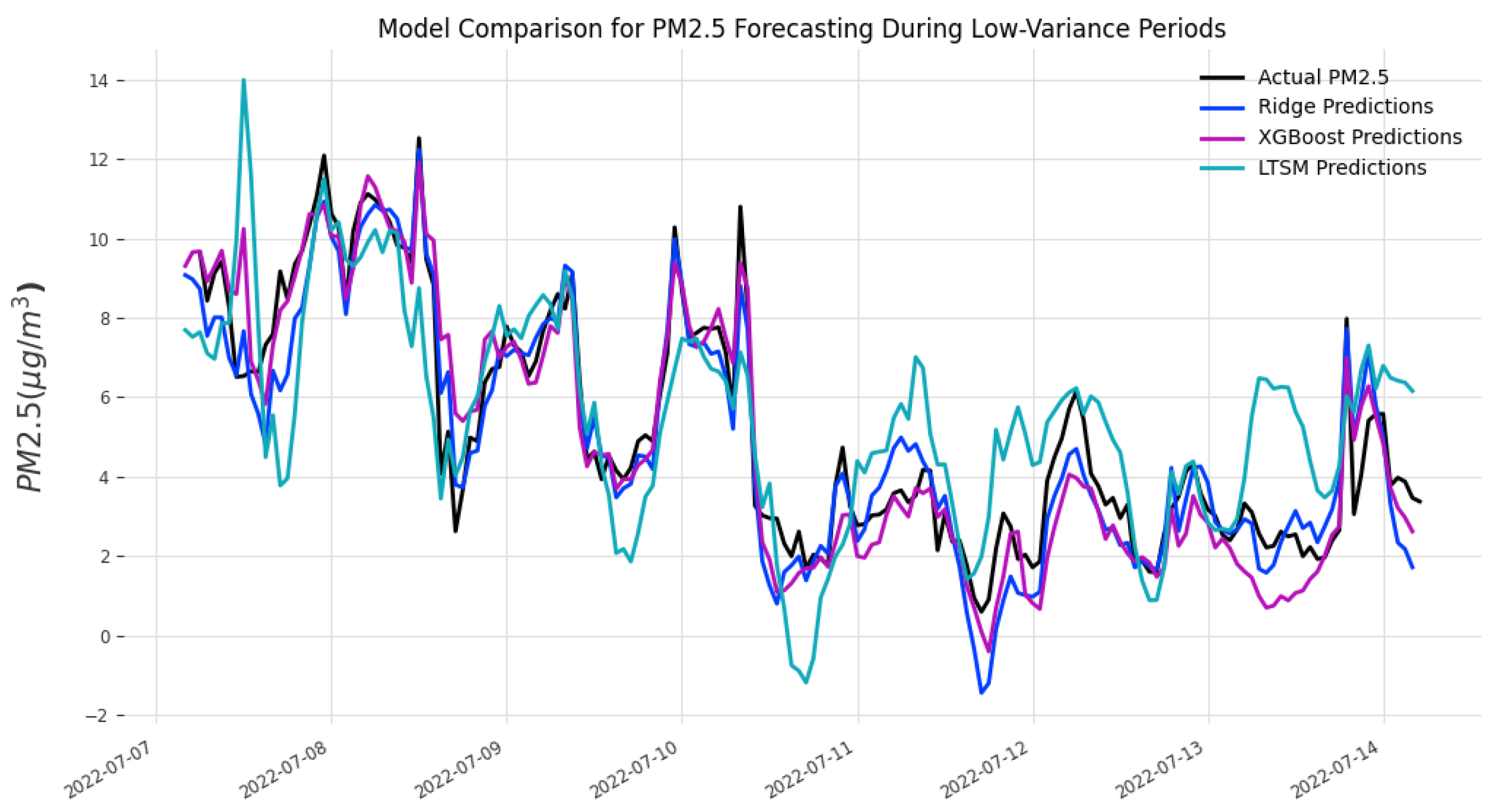

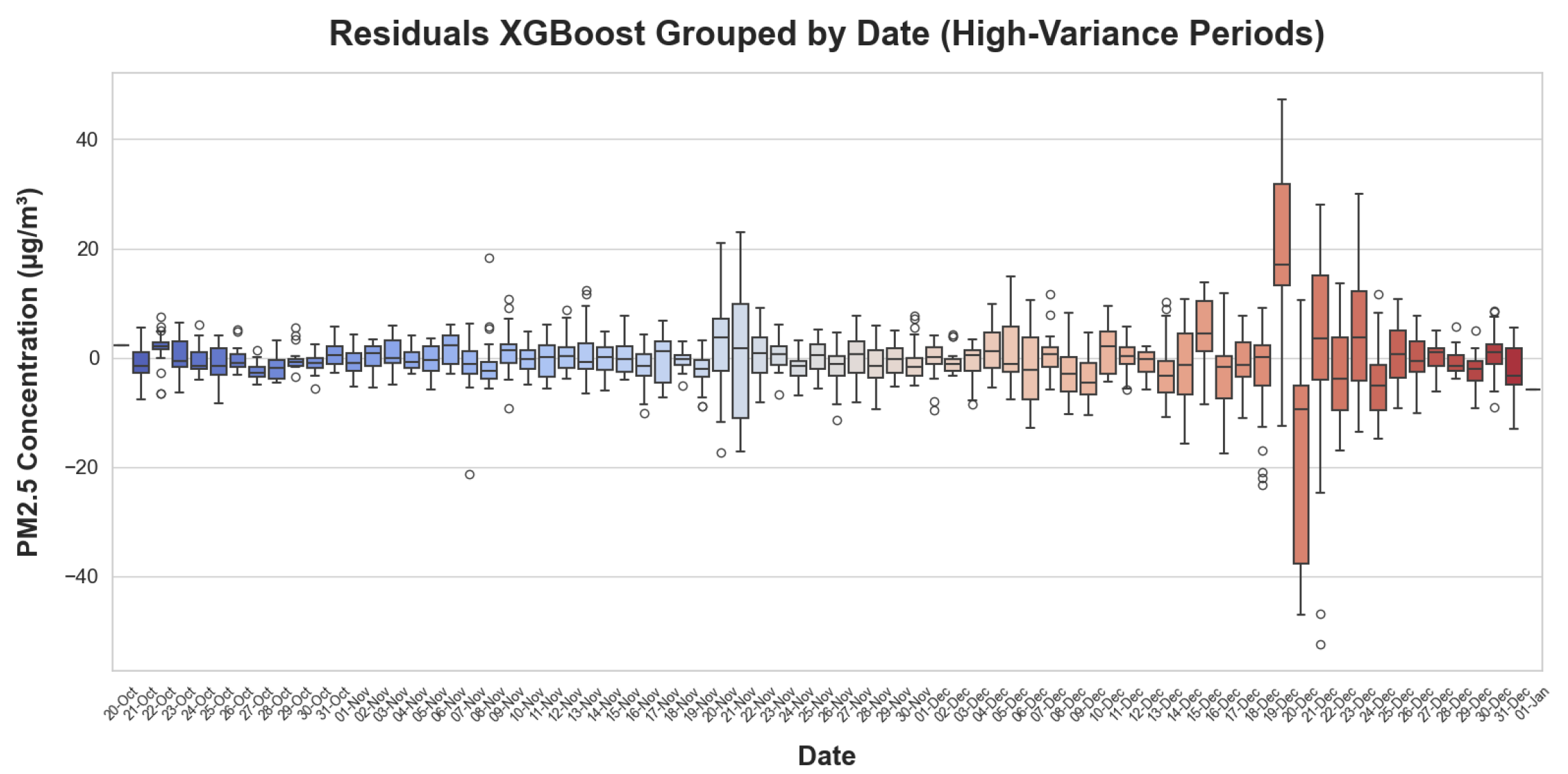

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| ACF | Autocorrelation Function |
| CI/CD | Continuous Integration/Continuous Deployment |
| LCS | Low-Cost Sensors |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| ML | Machine Learning |
| MSE | Mean Squared Error |
| PACF | Partial Autocorrelation Function |
| KPSS | Kwiatkowski–Phillips–Schmidt–Shin Test |
| PM | Particulate Matter |
| R² | Coefficient of Determination |
| RSE | Rare Smog Episode |
| XAI | Explainable AI |
| XGBoost | Extreme Gradient Boosting |

References

- Cohen, A.; Brauer, M.; Burnett, R.; Anderson, H.; Frostad, J.; Estep, K.; Balakrishnan, K.; Brunekreef, B.; Dandona, L.; Dandona, R.; et al. Estimates and 25-year trends of the global burden of disease attributable to ambient air pollution: An analysis of data from the Global Burden of Diseases Study. Lancet 2017, 389, 1907–1918.

- MacIntyre, E.; Gehring, U.; Molter, A.; Fuertes, E.; Klumper, C.; Kramer, U.; Quass, U.; Hoffmann, B.; Gascon, M.; Brunekreef, B.; et al. Air Pollution and Respiratory Infections during Early Childhood: An Analysis of 10 European Birth Cohorts within the ESCAPE Project. Environ. Health Perspect. 2014, 122, 107–113.

- Raaschou-Nielsen, O.; Andersen, Z.; Beelen, R.; Samoli, E.; Stafoggia, M.; Weinmayr, G.; Hoffmann, B.; Fischer, P.; Nieuwenhuijsen, M.; Brunekreef, B.; et al. Air pollution and lung cancer incidence in 17 European cohorts: Prospective analyses from the European Study of Cohorts for Air Pollution Effects (ESCAPE). Lancet Oncol. 2013, 14, 813–822.

- Cesaroni, G.; Forastiere, F.; Stafoggia, M.; Andersen, Z.J.; Badaloni, C.; Beelen, R.; Caracciolo, B.; de Faire, U.; Erbel, R.; Eriksen, K.T.; et al. Long term exposure to ambient air pollution and incidence of acute coronary events: Prospective cohort study and meta-analysis in 11 European cohorts from the ESCAPE Project. BMJ 2014, 348, f7412.

- Pedersen, M.; Giorgis-Allemand, L.; Bernard, C.; Aguilera, I.; Andersen, A.M.; Ballester, F.; Beelen, R.M.; Chatzi, L.; Cirach, M.; Danileviciute, A.; et al. Ambient air pollution and low birthweight: A European cohort study (ESCAPE). Lancet Respir. Med. 2013, 1, 695–704.

- Thurston, G.; Kipen, H.; Annesi-Maesano, I.; Balmes, J.; Brook, R.; Cromar, K.; De Matteis, S.; Forastiere, F.; Forsberg, B.; Frampton, M.; et al. A joint ERS/ATS policy statement: What constitutes an adverse health effect of air pollution? An analytical framework. Eur. Respir. J. 2017, 49, 1600419.

- Manisalidis, I.; Stavropoulou, E.; Stavropoulos, A.; Bezirtzoglou, E. Environmental and Health Impacts of Air Pollution: A Review. Front. Public Health 2020, 8, 14.

- Chantara, S.; Sillapapiromsuk, S.; Wiriya, W. Atmospheric pollutants in Chiang Mai (Thailand) over a five-year period (2005–2009), their possible sources and relation to air mass movement. Atmos. Environ. 2012, 60, 88–98.

- Ribeiro, I.; Andreoli, R.; Kayano, M.; Sousa, T.; Medeiros, A.; Godoi, R.; Godoi, A.; Duvoisin, S.; Martin, S.; Souza, R. Biomass burning and carbon monoxide patterns in Brazil during the extreme drought years of 2005, 2010, and 2015. Environ. Pollut. 2018, 243, 1008–1014.

- Zareba, M.; Danek, T. Analysis of Air Pollution Migration during COVID-19 Lockdown in Krakow, Poland. Aerosol Air Qual. Res. 2022, 22, 210275.

- Zareba, M. Assessing the Role of Energy Mix in Long-Term Air Pollution Trends: Initial Evidence from Poland. Energies 2025, 18, 1211.

- Danek, T.; Zareba, M. The Use of Public Data from Low-Cost Sensors for the Geospatial Analysis of Air Pollution from Solid Fuel Heating during the COVID-19 Pandemic Spring Period in Krakow, Poland. Sensors 2021, 21, 5208.

- Danek, T.; Weglinska, E.; Zareba, M. The influence of meteorological factors and terrain on air pollution concentration and migration: A geostatistical case study from Krakow, Poland. Sci. Rep. 2022, 12, 11050.

- Urbanowicz, J.; Mlost, A.; Binda, A.; Dobrzańska, J.; Dusza, M.; Godzina, P.; Kostrzewa, J.; Majkowska, A.; Motak, E.; Pietras-Goc, B.; et al. Strategia Rozwoju Województwa "Małopolska 2030". 2020. Załącznik do uchwały Nr XXXI/422/20 Sejmiku Województwa Małopolskiego z dnia 17 grudnia 2020 r. Available online: https://www.malopolska.pl/_userfiles/uploads/Rozwoj%20Regionalny/Strategia%20Ma%C5%82opolska%202030/2020-12-17_Zalacznik_Strategia_SWM_2030.pdf (accessed on 8 October 2021). (In Polish).

- Vary, G. Geology of the Carpathian Region; World Scientific Publishing: Sydney, Australia, 1998.

- Zareba, M.; Danek, T.; Zając, J. On Including Near-surface Zone Anisotropy for Static Corrections Computation—Polish Carpathians 3D Seismic Processing Case Study. Geosciences 2020, 10, 66.

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Lindemann, B.; Müller, T.; Vietz, H.; Jazdi, N.; Weyrich, M. A survey on long short-term memory networks for time series prediction. Procedia CIRP 2021, 99, 650–655.

- Lai, S.; Feng, N.; Sui, H.; Ma, Z.; Wang, H.; Song, Z.; Zhao, H.; Yue, Y. FTS: A Framework to Find a Faithful TimeSieve. arXiv 2024, arXiv:cs.LG/2405.19647.

- Kwiatkowski, D.; Phillips, P.C.; Schmidt, P.; Shin, Y. Testing the null hypothesis of stationarity against the alternative of a unit root: How sure are we that economic time series have a unit root? J. Econom. 1992, 54, 159–178.

- Cleveland, R.B.; Cleveland, W.S. STL: A Seasonal-Trend Decomposition Procedure Based on Loess. J. Off. Stat. 1990, 6, 3–33.

- Athanasopoulos, G.; Hyndman, R.J. Forecasting: Principles and Practice; Monash University: Melbourne, Australia, 2013.

- Kedro Developers. Kedro: A Python Framework for Reproducible, Maintainable and Modular Data Science. 2025. Available online: https://github.com/kedro-org/kedro (accessed on 3 January 2024).

- Herzen, J.; Lässig, F.; Piazzetta, S.G.; Neuer, T.; Tafti, L.; Raille, G.; Pottelbergh, T.V.; Pasieka, M.; Skrodzki, A.; Huguenin, N.; et al. Darts: User-Friendly Modern Machine Learning for Time Series. J. Mach. Learn. Res. 2022, 23, 1–6.

- Zareba, M.; Weglinska, E.; Danek, T. Air pollution seasons in urban moderate climate areas through big data analytics. Sci. Rep. 2024, 14, 3058.

- Tang, R.; Ning, Y.; Li, C.; Feng, W.; Chen, Y.; Xie, X. Numerical Forecast Correction of Temperature and Wind Using a Single-Station Single-Time Spatial LightGBM Method. Sensors 2022, 22, 193.

- Banachewicz, K.; Thakur, A. Curve Fitting Is (Almost) All You Need. YouTube video. 2022. Available online: https://www.youtube.com/@konradbanachewicz8641 (accessed on 3 January 2024).

| Metric | Ridge (Winter) | XGBoost (Winter) | LSTM (Winter) | Ridge (Summer) | XGBoost (Summer) | LSTM (Summer) |
|---|---|---|---|---|---|---|
| MAPE | 0.1413 | 0.2253 | 0.2373 | 0.1597 | 0.1918 | 0.3985 |
| MAE (µg/m³) | 2.5971 | 4.2149 | 5.4401 | 1.0239 | 1.1841 | 2.5572 |
| MSE ((µg/m³)²) | 14.8806 | 46.8883 | 66.7815 | 2.2520 | 2.6351 | 14.2388 |
| R² | 0.9577 | 0.8667 | 0.8101 | 0.9325 | 0.9211 | 0.5734 |
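The four metrics in the table follow standard definitions, sketched here in plain Python. MAPE is returned as a fraction (not a percentage), which is an assumption based on the magnitude of the tabulated values; the function name `forecast_metrics` and the sample numbers are hypothetical and unrelated to the reported results.

```python
from typing import Dict, Sequence


def forecast_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Compute MAPE (as a fraction), MAE, MSE and R^2 for paired observations."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    # MAPE is undefined when an observed value is zero.
    mape = sum(abs(e / t) for e, t in zip(errors, y_true)) / n

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot

    return {"MAPE": mape, "MAE": mae, "MSE": mse, "R2": r2}


# Hypothetical observed and forecast PM2.5 values in µg/m3.
observed = [10.0, 20.0, 30.0, 40.0]
forecast = [12.0, 18.0, 33.0, 36.0]
metrics = forecast_metrics(observed, forecast)
print(metrics)
```

For these sample values the errors are -2, 2, -3 and 4, giving an MAE of 2.75 µg/m³, an MSE of 8.25 (µg/m³)², a MAPE of 0.125 and an R² of 0.934.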

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zareba, M.; Cogiel, S.; Danek, T. Spatio-Temporal PM2.5 Forecasting Using Machine Learning and Low-Cost Sensors: An Urban Perspective. Eng. Proc. 2025, 101, 6. https://doi.org/10.3390/engproc2025101006
