Abstract

This article presents the design, development, and evaluation of an AI-based assistant tailored to support users in the application of the Specific Operations Risk Assessment (SORA) methodology for unmanned aircraft systems. Built on a customized language model, the assistant was trained using system-level instructions with the goal of translating complex regulatory concepts into clear and actionable guidance. The approach combines structured definitions, contextualized examples, constrained response behavior, and references to authoritative sources such as JARUS and EASA. Rather than substituting expert or regulatory roles, the assistant provides process-oriented support, helping users understand and complete the various stages of risk assessment. The model’s effectiveness is illustrated through practical interaction scenarios, demonstrating its value across educational, operational, and advisory use cases, and its potential to contribute to the digital transformation of safety and compliance processes in the drone ecosystem.

Keywords:

SORA; AI assistant; UAS; drones; context-aware dialogue; regulatory compliance; operational safety

1. Introduction

The growing use of Unmanned Aircraft Systems (UAS) in civilian operations brings significant regulatory and safety challenges, particularly for missions falling under the “specific” category as defined by the European Union Aviation Safety Agency (EASA). These operations often involve higher risk profiles such as beyond visual line of sight (BVLOS) flights, urban environments, or proximity to uninvolved people and infrastructure. While the SORA (Specific Operations Risk Assessment) methodology offers a structured and accepted framework for risk evaluation, its effective application requires a detailed understanding of aviation regulation, operational design constraints, and formal documentation procedures.

For many small operators, startups, and institutional actors, the procedural rigor and regulatory literacy demanded by SORA represent a significant barrier. Errors in completing risk assessments or compliance evidence files may result in operational delays, denials of authorization, or safety oversights. Recent literature reviews have also highlighted a general lack of awareness of operational risks in the drone domain [1], which further hinders innovation and limits market entry for new participants. In this context, a clear need emerges for a tool that facilitates the understanding and application of the SORA methodology in an accessible, automated, and interactive manner.

This paper explores the integration of an AI-based assistant into the SORA workflow as a mechanism to reduce entry barriers and improve documentation quality. Rather than reiterating publicly available definitions and categories, we focus on the assistant's functional contribution: providing real-time guidance, detecting omissions and inconsistencies, explaining regulatory expectations, and synthesizing user input into submission-ready outputs. The research evaluates the assistant's performance through a real-world test case involving a partially completed application for a BVLOS mission in controlled airspace. (Disclaimer: the assistant provides only educational and procedural guidance. It does not substitute for legal advice, operational authorization, or certified aviation decision-making.)

Importantly, the assistant is designed solely as an informational and educational support tool. It does not make autonomous decisions, authorize operations, or replace certified aviation personnel or regulators. All actions and decisions remain the responsibility of the operator and must be validated by the appropriate national authorities. The aim of this system is to enhance understanding, accuracy, and compliance—not to substitute accountability or professional judgment.

2. The SORA Methodology

The Specific Operations Risk Assessment (SORA) methodology emerged in response to the need for a structured, transparent, and flexible framework for managing risks associated with unmanned aircraft systems (UAS). Developed by the Joint Authorities for Rulemaking on Unmanned Systems (JARUS) [2], SORA has been widely adopted by the European Union Aviation Safety Agency (EASA) as a primary tool for assessing risk in operations falling under the so-called “specific category.” This category encompasses a significant portion of commercial and professional drone missions that involve elevated levels of operational risk and therefore cannot be classified within the “open” category. These operations require case-by-case authorization by the competent regulatory authority [3].

Within the SORA framework, operators are expected to demonstrate a clear understanding of the risks associated with their proposed mission, implement adequate technical and organizational mitigation measures, and ensure that the operation can be conducted safely and in compliance with applicable regulatory requirements. For both European and national authorities, SORA serves as a critical mechanism for informed decision-making when issuing flight authorizations, while offering the necessary flexibility to accommodate new and innovative operational scenarios that fall outside the scope of standard scenarios (STS). Its application in complex airport environments involving multiple coordinated drone inspections, for instance, has been explored in detail by Martinez [4].

In this context, a precise understanding and correct application of the SORA methodology is not merely a recommended practice; it is a prerequisite for ensuring the legality and safety of modern drone operations. Given the dynamic operational environment and the constantly evolving regulatory landscape, there is a growing need for intelligent tools that make SORA easier to understand and implement. Such a tool can guide users through the process, reduce the likelihood of errors, delays, and administrative inconsistencies, and ultimately foster greater regulatory compliance and operational safety, an approach also reflected in the development of the SORA Tool for civil drone operations [5].

3. The Role of an AI-Based Assistant

The SORA methodology is far more than a formal administrative procedure—it requires a thorough analysis of the operational environment, an assessment of potential risks to people and airspace users, and the selection of appropriate risk mitigation measures. For operators without prior experience in aviation safety or regulatory frameworks, navigating these steps can be confusing, time-consuming, and often overwhelming.

In response to these challenges, artificial intelligence (AI) offers a promising way to support operators: an intelligent assistant that guides them through the SORA risk assessment process. Such a tool can translate specialized terminology into clear, comprehensible language, making it accessible not only to new operators but also to students and trainees in aviation safety. Moreover, the assistant can help users navigate the phases of the SORA methodology by providing structured guidance and explanations tailored to the specific operational scenario, whether it involves BVLOS missions, urban environments, or VLOS flights.

The AI assistant is designed to provide context-sensitive support aligned with the nature of the operation and applicable requirements, thereby aiding the decision-making process without replacing regulatory authorities or domain experts. It can also offer sample scenarios along with recommended risk assessment and mitigation strategies, assisting users in building their own Concept of Operations (ConOps). This is particularly relevant in industrial or construction zones, where drone operations require clearly defined safety frameworks [6]. Importantly, the assistant is not intended to offer legal advice; rather, it directs users toward applicable regulatory limitations, good practices, and authoritative sources, thereby supporting compliance with the expectations of oversight bodies. The need for such AI-based support also extends to adjacent risk domains: Tran [7], for example, identifies a critical need for AI-based tools in assessing cybersecurity risks in UAS operations.

By combining the strengths of real-time consultation and interactive learning, the assistant helps reduce errors, accelerate the approval process, and raise overall awareness of operational safety—an essential condition for the sustainable development of the unmanned aviation sector. The aim of developing such an AI assistant is not to replace human experts or regulatory authorities, but to provide users—especially new entrants in the drone ecosystem—with intelligent support that enables more informed and justified decisions. The assistant explicitly avoids providing deterministic answers about operational legality and is designed to prevent users from bypassing formal authorization processes.

4. Methodological Approach to Model Development

A generalized block diagram of the model development process is presented in Figure 1. Based on the identified need for an intelligent tool to assist users in navigating the logic and procedures of the SORA framework, a custom language model was developed using the OpenAI GPT infrastructure (Figure 1, Block 2). This platform was selected for its capabilities in contextual understanding, controlled generation of domain-specific content, and adaptability to regulatory communication requirements. The approach focuses on transforming expert-level content into understandable explanations while strictly adhering to regulatory constraints and avoiding any form of legal or regulatory interpretation.

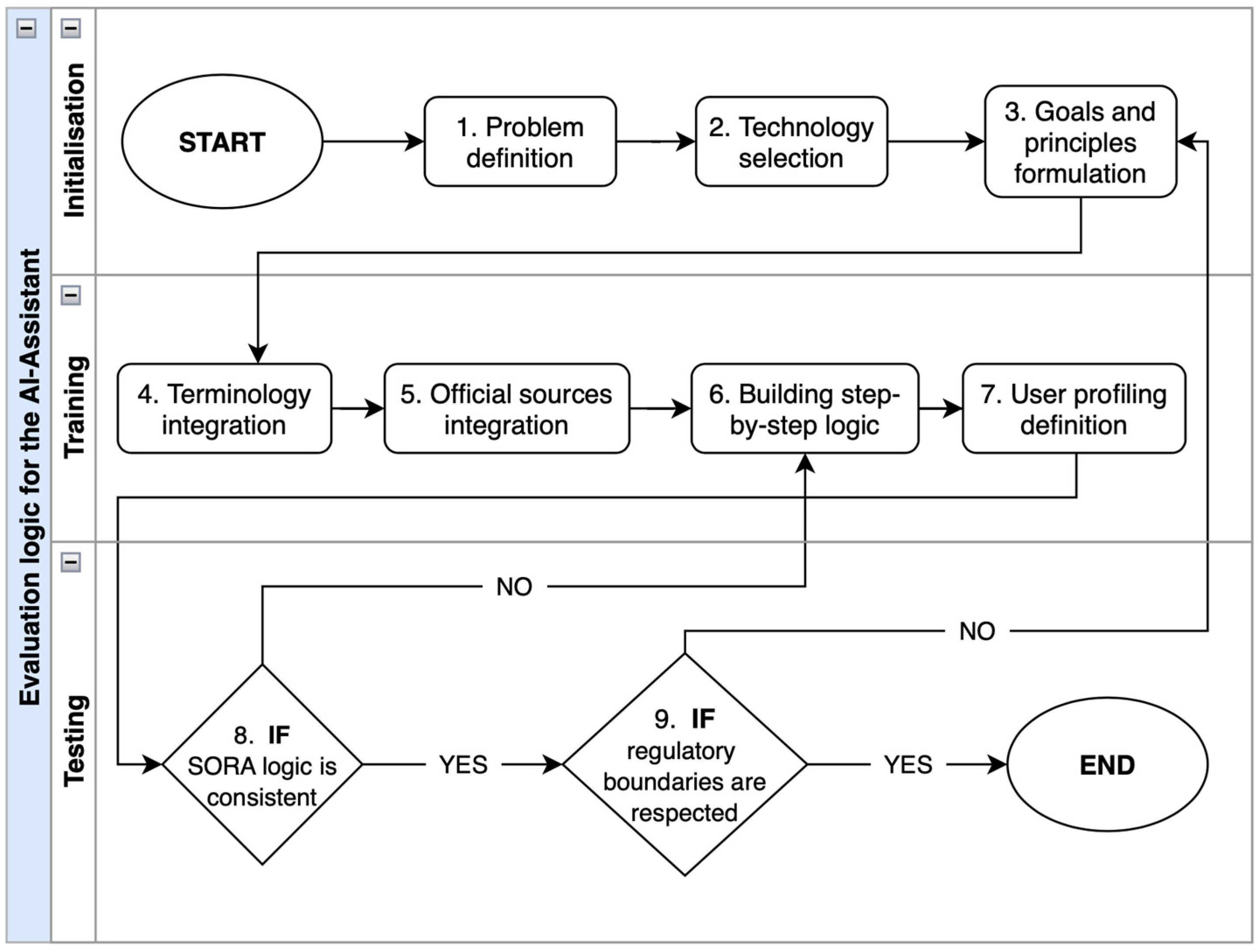

Figure 1.

Evaluation logic for AI assistant behavior validation. The diagram illustrates the core testing phases of the model, including goal definition, consistency and boundary checks, and feedback-driven retraining in cases of noncompliance.

The goal was not to create a substitute for a human consultant or regulatory authority, but rather to develop a digital assistant capable of supporting users through structured access to established terminology and logical relationships within the SORA methodology. To achieve this, a set of core principles was defined, including the ability to explain complex concepts in accessible language, contextualize guidance based on the operational scenario, and provide references to official documentation (Figure 1, Block 3).

Blocks 2–9 of Figure 1 outline the key stages and decision points used for evaluation and feedback during the training and adaptation of the AI assistant.

4.1. Terminology Processing and Knowledge Structuring

As a starting point, the model was trained to recognize and explain the core elements of the SORA methodology, including the Ground Risk Class (GRC), Air Risk Class (ARC), Operational Safety Objectives (OSOs), tactical mitigations, and the Specific Assurance and Integrity Level (SAIL). To ensure accuracy and alignment with accepted definitions, official sources were used during the training phase, most notably the JARUS Guidelines on SORA v2.5, EASA's Easy Access Rules for Unmanned Aircraft Systems (UAS), and practical examples from published assessments available via the JARUS [8] and EUROCONTROL [9] platforms (see Figure 1, Block 4). In addition, a training structure based on examples drawn from real user interactions was implemented to further refine the assistant's response capabilities and contextual awareness (Table 1).

Table 1.

Contextual interpretation of technical terms in SORA.

This response illustrates the model’s ability to present technical information in a structured and comprehensible manner, introducing key terminology and placing it within the broader context of the SORA methodology. In this case, ARC is explained not only as a standalone concept but also in relation to GRC, the other fundamental component in risk assessment. This interconnection enhances the user’s ability to grasp the underlying logic of SORA and provides a foundation for follow-up questions and further clarification.
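One way to hold such structured definitions is a small glossary in which each term carries links to related terms, so that an explanation of ARC can point to GRC and SAIL. The Python sketch below assumes a hypothetical `Term` record and `GLOSSARY` dictionary; the one-line summaries paraphrase SORA concepts and are not official JARUS wording.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Term:
    acronym: str
    expansion: str
    summary: str
    related: Tuple[str, ...] = ()


# Illustrative entries only; summaries paraphrase SORA concepts, not official JARUS text.
GLOSSARY = {
    "GRC": Term("GRC", "Ground Risk Class",
                "risk of a person on the ground being struck by the UA", ("ARC", "SAIL")),
    "ARC": Term("ARC", "Air Risk Class",
                "qualitative class of the rate of encounters with manned aircraft", ("GRC", "SAIL")),
    "SAIL": Term("SAIL", "Specific Assurance and Integrity Level",
                 "level derived from the final GRC and the residual ARC", ("GRC", "ARC", "OSO")),
    "OSO": Term("OSO", "Operational Safety Objective",
                "objective whose required robustness depends on the SAIL", ("SAIL",)),
}


def explain(acronym: str) -> str:
    """Return a short definition plus links to related terms (e.g. ARC linked to GRC)."""
    term = GLOSSARY[acronym.upper()]
    text = f"{term.acronym} ({term.expansion}): {term.summary}."
    if term.related:
        links = ", ".join(f"{r} ({GLOSSARY[r].expansion})" for r in term.related)
        text += f" Related: {links}."
    return text
```

Calling `explain("arc")` returns the ARC definition followed by its links to GRC and SAIL, which is the kind of cross-referencing described for Table 1.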

4.2. Contextual Responsibility and Interpretive Boundaries

A critical component in the development of the model was the design of content governance mechanisms to ensure that the AI assistant remains strictly within the bounds of informational support and does not cross into the domain of regulatory or legal interpretation. Although the model operates on the basis of language prediction, its behavior was carefully structured to maintain the boundaries of acceptable informational assistance.

To this end, the model was configured to clearly distinguish between informal guidance and official positions (Figure 1, Block 9). In its responses, it consistently emphasizes that the information provided is educational in nature and encourages users to consult authoritative sources—most notably, JARUS and EASA (Figure 1, Block 5). In cases of regulatory uncertainty or potential national-level variation, the assistant deliberately avoids definitive statements and reiterates that it does not act as a certified expert or authorized advisor [10]. It is structurally prevented from issuing permissions or authorizing actions. All determinations regarding operational legality must be made by certified authorities in compliance with applicable national and international regulations. This design aligns with established approaches in safety assurance literature, which emphasize the separation between technical advice and legal responsibility [11].

A representative example of this approach is the assistant’s handling of the question “Can I perform a mission without authorization if I use a parachute?” Instead of issuing a definitive yes or no, the assistant highlights key considerations such as maximum impact energy, characteristics of the operational zone, and national regulatory differences (Table 2). In doing so, it preserves the distinction between information and interpretation, and encourages the user to seek verification from competent authorities.
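This boundary behavior can be illustrated with a minimal guard, assuming a simple keyword heuristic that flags questions asking the assistant to decide legality and then frames the answer as considerations plus a referral. The patterns, `is_legality_question`, and `frame_answer` are hypothetical; the assistant itself relies on system-level instructions, not regular expressions.

```python
import re
from typing import List

# Hypothetical patterns for questions that ask the assistant to decide legality.
LEGALITY_PATTERNS = [
    r"\bwithout (an )?authori[sz]ation\b",
    r"\bam i allowed\b",
    r"\bis it legal\b",
    r"\bdo i need (a |an )?(permit|authori[sz]ation)\b",
]


def is_legality_question(question: str) -> bool:
    """True if the question matches any legality-seeking pattern (case-insensitive)."""
    text = question.lower()
    return any(re.search(p, text) for p in LEGALITY_PATTERNS)


def frame_answer(question: str, considerations: List[str]) -> str:
    """List considerations; for legality questions, withhold a verdict and add a referral."""
    if not is_legality_question(question):
        return "\n".join(considerations)
    lines = ["I cannot confirm whether this operation is permitted. Points to review:"]
    lines += [f"- {c}" for c in considerations]
    lines.append("Please verify with EASA guidance or your national competent authority.")
    return "\n".join(lines)
```

For the parachute question above, `frame_answer` would list items such as impact energy and the operational zone, then close with the referral instead of a yes or no.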

Table 2.

Interpretive boundaries.

Another important function is the model’s ability to tailor the level of detail in its responses to the context and profile of the user. Beginners are presented with basic definitions and conceptual explanations, while more advanced users receive structurally organized guidance for building argumentation within the SORA documentation process (Figure 1, Block 7). A similar logic of adaptive interaction is observed in other contextualized AI systems developed for technical domains [12].

By embedding such contextual safeguards, combined with ethical programming and reliance on verified regulatory sources, the AI assistant becomes a trustworthy and responsible tool. It supports users without compromising the principles of aviation safety or regulatory compliance, and helps expand digital access to the SORA methodology.

The practical dimensions of safety in UAS operations have been examined by Nogueira et al. [13].

4.3. Integration of the SORA Process Logic

Once the core terms and acronyms used within the SORA methodology had been defined, the next essential step in developing the assistant involved familiarizing the model with the sequential logic and step-by-step structure of the SORA framework. The objective was not only to convey the meaning of individual elements—such as GRC, ARC, OSO, and SAIL—but also to build an understanding of the interrelationships between them and their roles within the broader risk assessment process.

To achieve this, contextual prompts were embedded to represent the process flow—beginning with the formulation of the Concept of Operations (ConOps), followed by the identification and evaluation of risk classes, and culminating in the selection of appropriate mitigation measures and the documentation of the assessment. The model was guided using a structure derived from JARUS and EASA documentation, including the logic behind combining GRC and ARC to determine SAIL and the corresponding reliability requirements for a given operation (Figure 1, Block 6).
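The combination of GRC and ARC into SAIL is essentially a table lookup. The sketch below encodes the SAIL matrix as we read it in the JARUS SORA v2.0 main body; the values should be checked against the edition in use (this paper also cites v2.5), and `determine_sail` is a hypothetical helper, not part of any official tool.

```python
# SAIL by final GRC (rows) and residual ARC (columns a, b, c, d), as we read the
# JARUS SORA v2.0 SAIL determination table; verify against the edition in use.
_SAIL_ROWS = {
    2: ("I", "II", "IV", "VI"),    # used for any final GRC <= 2
    3: ("II", "II", "IV", "VI"),
    4: ("III", "III", "IV", "VI"),
    5: ("IV", "IV", "IV", "VI"),
    6: ("V", "V", "V", "VI"),
    7: ("VI", "VI", "VI", "VI"),
}
_ARC_INDEX = {"a": 0, "b": 1, "c": 2, "d": 3}


def determine_sail(final_grc: int, residual_arc: str) -> str:
    """Return the SAIL (Roman numeral) for a final GRC and a residual ARC such as 'ARC-c'."""
    if final_grc < 1:
        raise ValueError("final GRC must be at least 1")
    if final_grc > 7:
        # SORA treats a final GRC above 7 as outside the specific category.
        raise ValueError("final GRC above 7 is outside the SAIL table")
    arc = residual_arc.lower().removeprefix("arc-")
    if arc not in _ARC_INDEX:
        raise ValueError(f"unknown ARC: {residual_arc!r}")
    return _SAIL_ROWS[max(final_grc, 2)][_ARC_INDEX[arc]]
```

Keying the table on the final GRC and letting the ARC select the column keeps the lookup explicit and easy to audit against the published matrix (Python 3.9+ for `removeprefix`).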

This process-level awareness enabled the model to comprehend not only what each element represents, but also when and why it is applied in the context of the complete assessment (Table 3). This behavior laid the groundwork for the development of a guided dialog strategy, discussed in the next section.

Table 3.

How the model applies SORA logic to guide the user.

An analysis of the assistant’s response reveals that it first recognizes the user’s question as corresponding to Step 3 of the SORA process. It then checks whether Step 2 (GRC) has already been completed—if not, the assistant redirects the user to that prior stage; if yes, it proceeds to Step 4 (SAIL). Finally, it offers a logical continuation to maintain consistency and forward momentum, as discussed in the following section.

This behavior is not the result of pre-programmed responses, but rather stems from an embedded process structure that enables the model to act as a navigator within the SORA methodology. As a result, the interaction becomes not only informative but also goal-oriented. A similar logic-based structuring approach, grounded in safety-critical process design, has been applied in architectures for UAS operations [14].
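Although the assistant realizes this navigation through prompt structure rather than code, the prerequisite check can be made explicit in a minimal sketch. It assumes the simplified phase order used in this paper (ConOps, GRC, ARC, SAIL, OSO, documentation), not the official JARUS step numbering; `route` is a hypothetical name.

```python
from typing import Set

# Simplified phase order used in this paper; not the official JARUS step numbering.
STEPS = ["ConOps", "GRC", "ARC", "SAIL", "OSO", "Documentation"]


def route(requested: str, completed: Set[str]) -> str:
    """Return the phase to address: the first unmet prerequisite, else the requested phase."""
    if requested not in STEPS:
        raise ValueError(f"unknown step: {requested}")
    for step in STEPS[: STEPS.index(requested)]:
        if step not in completed:
            return step
    return requested
```

For example, a SAIL question from a user who has completed only the ConOps and GRC is redirected to ARC.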

During the development process, the model evolved from a general-purpose language tool into a specialized assistant aligned with the structure and logic of the SORA methodology. After integrating the foundational terminology and sequential process logic, efforts were directed toward ensuring behavioral consistency that would provide contextual clarity and logical continuity across each stage of the assessment. The reliability of this functionality was validated through an internal testing cycle (Figure 1), with iterative refinements applied whenever deviations were identified. As a result, the model achieved stable and coherent performance, in line with the methodological, ethical, and user-oriented requirements of SORA.

This level of development underscored the need for deliberate dialog modeling, enabling the assistant to guide the user consistently and effectively through all key stages of the risk assessment process.

In contrast to the static and prescriptive nature of EASA’s official documentation tools—such as checklists, explanatory templates, and regulatory guidance leaflets—the developed AI-based assistant introduces a layer of procedural intelligence that actively supports the decision-making process during mission preparation. While traditional tools rely on the operator’s ability to interpret regulatory flowcharts and map scenario-specific elements to risk categories, the assistant interprets operational context dynamically and offers real-time guidance on selecting ARC levels, identifying OSOs, and structuring mitigation narratives.

This approach transforms the assistant from a passive documentation helper into an adaptive agent capable of validating inputs, detecting inconsistencies, and recommending corrective actions based on integrated knowledge of the SORA methodology. Rather than simply following a linear form-filling path, the assistant can respond to missing information, suggest regulatory references, and ensure alignment between operational intent and safety assurance expectations. These functionalities are absent in current EASA-provided materials and represent a significant methodological differentiation.

As such, the assistant serves not only as an interpreter of static procedures but also as an interactive regulatory companion capable of bridging the gap between rule-based frameworks and practical user application. This capacity is particularly critical for non-expert operators or small organizations with limited internal compliance resources.

4.4. Behavioral Modeling for Guiding the Completion of the Process

Designing an assistant that not only explains individual elements of the SORA methodology but also supports users throughout the entire assessment process required a specific approach to dialog structuring. The development of a logically guided conversational flow drew on models from safety-oriented engineering design [15]. To this end, the model was trained to maintain process continuity and to track the user’s progress through the core phases of SORA.

In particular, the assistant was trained with guidance mechanisms that activate follow-up prompts and suggestions for the next step once information has been provided on a given topic. For example, in response to a question about GRC, the model may conclude with a prompt such as “If you’d like, we can proceed to the next step—determining the air risk class (ARC) and how it affects the SAIL level for your operation.”

This behavior is realized through a combination of context-sensitive prompts suggesting subsequent actions; structured logic embedded within the system prompt that defines the order of progression; and redirecting logic that gently returns the focus to the main SORA phases if the user’s query strays from the process. The result is an AI assistant that not only answers questions but helps the user build a comprehensive understanding of the full risk assessment cycle—from formulating a Concept of Operations (ConOps) and evaluating risk, to selecting mitigation measures and documenting the rationale behind the operation. Similar strategies for iterative enhancement are applied in enterprise-grade AI assistants [16].
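A deterministic approximation of the follow-up and redirecting behavior might look like the sketch below. It assumes a hypothetical keyword map for tying a free-text query to a SORA phase and reuses the simplified phase order from this paper; the assistant described here achieves this through its system prompt, so the code only illustrates the control flow.

```python
import re
from typing import Optional

# Simplified phase order used in this paper; not the official JARUS step numbering.
STEPS = ["ConOps", "GRC", "ARC", "SAIL", "OSO", "Documentation"]

# Hypothetical keyword map tying free-text queries to SORA phases.
KEYWORDS = {
    "ConOps": ["concept of operations", "conops"],
    "GRC": ["ground risk", "grc"],
    "ARC": ["air risk", "arc", "airspace"],
    "SAIL": ["sail"],
    "OSO": ["oso", "operational safety objective"],
    "Documentation": ["documentation", "operations manual", "application form"],
}


def classify(query: str) -> Optional[str]:
    """Map a query to the first phase whose keywords appear as whole words."""
    q = query.lower()
    for step, words in KEYWORDS.items():
        if any(re.search(rf"\b{re.escape(w)}\b", q) for w in words):
            return step
    return None


def closing_prompt(query: str) -> str:
    """Suggest the next phase, or redirect to the process if the query is off-topic."""
    step = classify(query)
    if step is None:
        return "That question is outside the SORA phases; shall we return to your current step?"
    i = STEPS.index(step)
    if i == len(STEPS) - 1:
        return "All phases are covered; shall we review the documentation outline?"
    return f"If you'd like, we can proceed to the next step: {STEPS[i + 1]}."
```

Whole-word matching avoids false hits such as "arc" inside "search"; a production system would need far richer intent detection than a keyword list.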

This conversational strategy is particularly effective for novice users who need more than just information—they need guidance through the steps in the correct sequence. For more experienced users, the model also provides added value by helping them verify the completeness and consistency of their risk assessment. The concept of progressive user guidance within a SORA context is also reflected in the system modeling work of Castro and García [17].

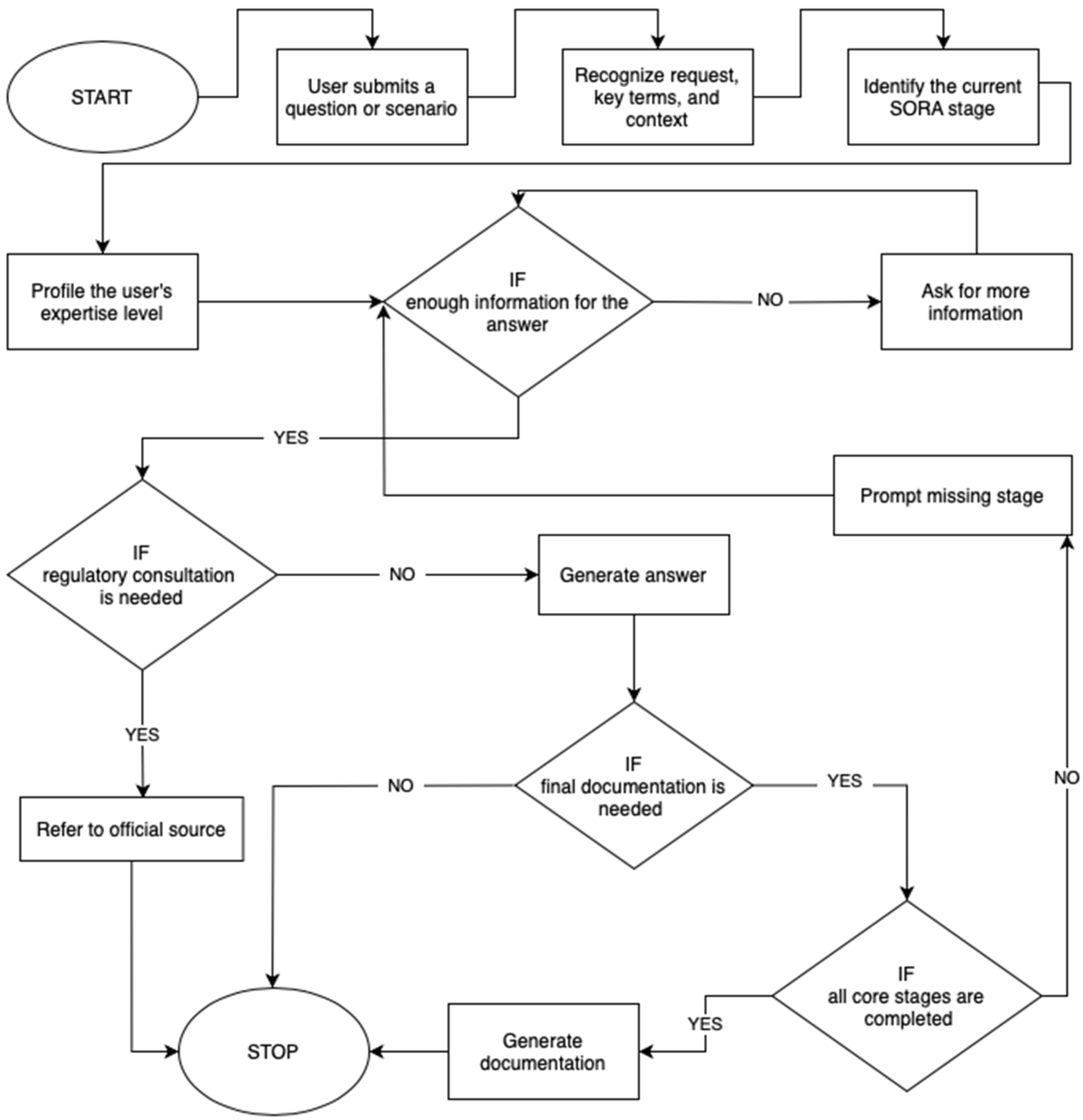

The interaction logic between the user and the AI assistant is implemented as a dynamic process in which each step depends on the context and content of the user’s input (Figure 2). Each session begins with a user-submitted question or scenario related to SORA risk assessment. The assistant first identifies the relevant terminology, the subject of the request, and the current stage of the SORA process to which it relates. It then performs user profiling, as the style and depth of the response are adapted depending on whether the user is a beginner or an advanced operator.

Figure 2.

UX logic of user interaction with the SORA AI assistant. This flowchart depicts the user’s journey through the key stages of SORA with support from the assistant—from initial inquiry to final documentation generation—featuring context adaptation and regulatory checks.

If the information provided by the user is insufficient to formulate a relevant response, the assistant prompts the user to supply the missing data or redirects them to a previous stage of the process. Once sufficient input is available, a structured and context-specific answer is generated. Before proceeding to documentation generation, the assistant checks whether regulatory consultation is necessary. If so, the user is referred to an appropriate official source—such as EASA or a national competent authority. If no consultation is required, the assistant evaluates whether the response should include a structured framework for generating final documentation.

In cases where the user requests support with documentation but has not yet completed all key SORA stages (i.e., GRC, ARC, SAIL, OSO), the assistant will guide the user back to the relevant step in order to complete the logical sequence. Only once all critical elements have been addressed and there is no need for regulatory referral does the assistant proceed to generate a structured documentation outline and conclude the process (Table 4). This ensures that the user progresses through the SORA methodology in a coherent, traceable, and logically substantiated manner.
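The decision order described for Figure 2 can be condensed into a function that returns the assistant's next action. This is a minimal sketch assuming that profiling, the input-sufficiency check, and the need for regulatory referral have already been determined upstream; the `Request` fields and action strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Core SORA stages that must be completed before documentation is generated.
CORE_STEPS = ("GRC", "ARC", "SAIL", "OSO")


@dataclass
class Request:
    user_level: str                                    # "beginner" or "advanced" (profiling)
    missing_inputs: List[str] = field(default_factory=list)
    needs_authority: bool = False                      # e.g. national variation, legality question
    wants_documentation: bool = False


def next_action(req: Request, completed: Set[str]) -> str:
    """Return one next action, checked in the order described for Figure 2."""
    if req.missing_inputs:
        return "ask for: " + ", ".join(req.missing_inputs)
    if req.needs_authority:
        return "refer to EASA or the national competent authority"
    if req.wants_documentation:
        pending = [s for s in CORE_STEPS if s not in completed]
        if pending:
            return f"return to step {pending[0]}"
        return "generate documentation outline"
    depth = "basic definitions" if req.user_level == "beginner" else "structured argumentation"
    return f"answer with {depth}"
```

Returning a single action per call keeps each turn traceable: a documentation request with ARC still open yields "return to step ARC" rather than a partial outline.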

Table 4.

Example dialog: the AI-assistant guides the user through the SORA process.

This type of guided dialog is built upon a predefined logic designed to enable progressive, practice-based learning that leads to a comprehensive understanding and execution of the risk assessment. A similar approach to mission analysis using SORA logic has been implemented in the web-based application developed by Del Guercio [18].

4.5. Real-World Scenario Validation: Application of the Assistant in a Mission Planning Context

To further validate the practical utility of the AI-based assistant, a real-world testing scenario was conducted involving partially completed documentation for a drone mission under the SORA framework. The test simulated a full workflow—from initial user input to the generation of a final document structure—centered on a BVLOS operation in controlled airspace. The assistant was tasked with interpreting and completing the provided inputs, identifying missing or inconsistent elements, and guiding the user through the key SORA stages, including assessment of GRC, identification of ARC, and derivation of the appropriate SAIL level. Throughout the session, the assistant demonstrated the ability to request missing information, offer structured and context-sensitive guidance, and refer to official sources when regulatory clarification was needed, thereby showcasing its capabilities through the following concrete actions.

1. Identification of missing regulatory references in official forms

During document completion for a real SORA-based application process, the assistant demonstrated awareness of formal regulatory requirements by identifying unmarked or absent elements in official templates. In particular, within the “Application for Operational Authorisation,” the assistant detected that the field for referencing the applicable risk assessment methodology was left blank. Drawing on its procedural knowledge of SORA documentation standards, it proposed an exact text completion to ensure proper traceability and regulatory alignment.

AI-assistant output: “We should tick ‘☒ SORA version 2.0’ or specify another version, if different.”

This behavior illustrates the assistant's utility not only as a form-filler but also as a procedural compliance validator, helping ensure that submitted documentation meets the expectations of aviation authorities such as the Bulgarian Civil Aviation Authority.

2. Determination of Air Risk Class (ARC) based on operational and spatial context

The assistant was able to determine the appropriate ARC for the operation by integrating information about location, airspace class, and altitude. In this case, the user declared that the operation would take place in a city park in Sofia, under VLOS conditions, at an altitude below 30 m. Recognizing that the operation would be conducted within Class C controlled airspace, the assistant explained that, despite the low altitude, the ARC should conservatively be set to ARC-c, since no strategic mitigation such as special coordination with air traffic control or a NOTAM had been declared.

AI-assistant output: “In your case: Sofia is within controlled airspace (Class C) … so we should assume ARC-c. … For your operation, you should mark ARC-c for the operational volume. ARC-c is also assumed for the adjacent volume.”

This scenario demonstrates how the assistant functions as a domain-aware advisor, capable of applying qualitative rules from the SORA methodology to real-world mission profiles and translating them into accurate risk classification values.

3. Structured population of the Compliance evidence table using cross-references

When tasked with completing the compliance table for a SAIL I operation, the assistant guided the user through referencing the appropriate mitigation measures and their supporting documentation. For each required mitigation and Operational Safety Objective (OSO), it proposed a short justification statement and linked it to the corresponding section of the Operations Manual or the Emergency Response Plan (ERP). For example:

AI-assistant output: “The 1:1 buffer procedure is described in the Operations Manual, section X.X.”

AI-assistant output: “The operator’s competence is proven through an A2 certificate and training, as described in the Operations Manual, section X.X.”

This structured approach allowed the user to construct a traceable and verifiable compliance portfolio, enhancing both clarity and acceptability for regulatory review. The assistant effectively acted as a synthesis engine for linking technical content with procedural justification.

4. Identification of scenario inconsistencies based on technical specifications

During the interaction with partially completed documentation for a real drone operation scenario, the assistant detected contextual discrepancies between the declared environmental conditions and the platform's technical limitations. Specifically, when the scenario left the weather conditions unspecified, the assistant analyzed the provided equipment details and found that the UAV lacked certification for wet or adverse weather. It therefore proposed revising the scenario to specify dry weather explicitly as an operational constraint. This recommendation improved internal consistency and aligned the risk assessment with realistic mitigation assumptions, ultimately supporting a lower and more defensible Ground Risk Class (GRC).

AI-assistant output: “Since the drone is not certified for wet conditions, I recommend modifying the scenario to assume dry weather operation only. This will improve plausibility and help reduce the baseline GRC.”

This behavior highlights the assistant’s potential to act not merely as a static explainer, but as an intelligent reviewer capable of validating scenario logic and proactively suggesting corrections based on cross-referenced parameters.
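The four behaviors above share a pattern: comparing declared inputs against expected values and reporting findings. The sketch below combines them into one hypothetical review pass. The form field names are invented, the `X.X` check mirrors the placeholder in the assistant's outputs, and `initial_arc` covers only the below-500-ft branch of the SORA v2.0 initial air-risk decision tree as we understand it (outside airport environments and atypical airspace), so it is not a substitute for the full tree.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical field names; real authorisation templates differ by authority and version.
REQUIRED_FIELDS = ("operator_name", "risk_assessment_method", "operation_type")


@dataclass
class Draft:
    form: Dict[str, str]
    evidence: Dict[str, str] = field(default_factory=dict)  # requirement -> manual/ERP reference
    weather: Optional[str] = None                           # None: left unspecified in the ConOps
    uav_rated_for_wet: bool = False
    below_500ft_agl: bool = True
    controlled_airspace: bool = False
    mode_s_veil_or_tmz: bool = False
    urban: bool = False


def initial_arc(d: Draft) -> Optional[str]:
    """Below-500-ft branch only (simplified reading of the SORA v2.0 air-risk tree)."""
    if not d.below_500ft_agl:
        return None  # other branches of the decision tree are not modelled here
    if d.controlled_airspace or d.mode_s_veil_or_tmz or d.urban:
        return "ARC-c"
    return "ARC-b"  # uncontrolled airspace over a rural area


def review(d: Draft) -> List[str]:
    findings = []
    # 1. Required form fields that are absent or blank.
    for name in REQUIRED_FIELDS:
        if not str(d.form.get(name) or "").strip():
            findings.append(f"missing field: {name}")
    # 2. Declared ARC versus the ARC suggested by the simplified tree.
    suggested, declared = initial_arc(d), d.form.get("initial_arc")
    if suggested and declared and declared != suggested:
        findings.append(f"declared {declared}, tree suggests {suggested}")
    # 3. Compliance entries whose evidence reference is empty or still a placeholder.
    for requirement, ref in d.evidence.items():
        if not ref.strip() or "X.X" in ref:
            findings.append(f"open evidence reference: {requirement}")
    # 4. Weather left open (or wet) for a platform not rated for wet conditions.
    if not d.uav_rated_for_wet and d.weather in (None, "wet"):
        findings.append("restrict scenario to dry weather")
    return findings
```

Applied to a draft resembling the Sofia case (controlled airspace, weather left open, a blank methodology field), this review would report the blank field and the dry-weather constraint, and would flag any declared ARC other than ARC-c.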

This test scenario confirmed the assistant's ability to guide users through key SORA processes by identifying missing data, validating assumptions, and generating structured, regulation-aligned content. Beyond simple question-answering, it functioned as a co-pilot, enhancing completeness, consistency, and compliance in operational planning. In this test, the assistant proved especially valuable for less experienced operators, supporting them in preparing submission-ready documentation with a reduced risk of rejection.

5. Conclusions

The AI assistant developed in this study, based on a customized language model, produced encouraging results in interpreting and conveying the logic of the SORA methodology in a manner that is accessible and adaptable to various user profiles. The project demonstrates that, through carefully structured terminology, embedded step-by-step dialog logic, and strict adherence to regulatory constraints, it is possible to create a digital tool that does more than provide answers: it supports users throughout the entire risk assessment process, from defining the operational concept to justifying mitigation measures and assembling the final documentation.

The scientific contribution of this work lies in the design of a UX-driven interaction model tailored to regulatory contexts, as well as in the translation of specialized aviation terminology into an intuitive language interface. The assistant has demonstrated potential for application in self-training, educational settings, and operational environments, particularly where time efficiency and assessment accuracy are critical. Similar capabilities are increasingly reflected in intelligent learning systems across educational domains [19].

Future development of the model will include the integration of the Cyber Safety Extension [20], in alignment with the requirements of SORA 2.5 and the conceptual directions outlined in relevant academic literature [21]. This extension will introduce the processing of cybersecurity-related concepts such as the MITRE ATT&CK framework, NIST SP 800-53, and Zero Trust architectures, enabling the assessment of cyber risks in more complex drone operations. Additional development pathways include multilingual adaptation, embedding into UTM systems and simulation environments, and validation through real-world scenarios and user testing.

The creation of such an assistant represents a step toward the digital transformation of risk assessment and regulatory alignment in unmanned aviation. By making expert guidance more widely available through automation, it can support the safe, informed, and sustainable integration of more operators into the airspace ecosystem. Future iterations of the model may also incorporate Bayesian risk modeling, drawing on the Bayesian reasoning framework described by Barber [22].
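One of the simplest forms such Bayesian risk modeling could take is a conjugate Beta-Binomial update. Here, a belief about the per-flight probability of an adverse event is revised as flight evidence accumulates. The sketch below is illustrative only: the `BetaBelief` class, the prior Beta(1, 99) and the flight counts are hypothetical. It is not part of the current assistant and not a SORA-prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief over a per-flight event probability p (hypothetical model)."""
    alpha: float
    beta: float

    def update(self, events: int, flights: int) -> "BetaBelief":
        # Conjugate Beta-Binomial update:
        # observing k events in n flights gives Beta(alpha + k, beta + n - k).
        if not 0 <= events <= flights:
            raise ValueError("events must be between 0 and flights")
        return BetaBelief(self.alpha + events, self.beta + flights - events)

    def mean(self) -> float:
        # Posterior mean estimate of p.
        return self.alpha / (self.alpha + self.beta)


if __name__ == "__main__":
    prior = BetaBelief(1, 99)                          # assumed prior, mean 0.01
    posterior = prior.update(events=0, flights=200)    # assumed evidence
    print(posterior)                                   # BetaBelief(alpha=1, beta=299)
    print(round(posterior.mean(), 5))                  # 0.00333
```

The conjugate form keeps each update to a simple addition of counts, which makes the reasoning easy to explain to users. Richer Bayesian models, such as networks linking threats, barriers and harms, would require approximate inference.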

Author Contributions

Conceptualization, A.P. and V.S.; methodology, A.P.; software development and validation, A.P.; formal analysis, V.S.; investigation, A.P.; resources, V.S.; data curation, A.P.; writing—original draft preparation, A.P.; writing—review and editing, V.S.; visualization, A.P.; supervision, V.S.; project administration, V.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting this study are not publicly available due to security concerns. The research involves the development and analysis of an AI-based assistant for SORA risk assessment and operational safety consulting in unmanned aircraft systems. Public release of the underlying data, models, or logical structures could pose potential risks by enabling misuse or adversarial exploitation, thereby compromising the safety and integrity of drone operations. Access to data may be granted under specific conditions and upon reasonable request, subject to ethical and security review.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ARC | Air Risk Class |
| BVLOS | Beyond Visual Line of Sight |
| ConOps | Concept of Operations |
| EASA | European Union Aviation Safety Agency |
| GRC | Ground Risk Class |
| JARUS | Joint Authorities for Rulemaking on Unmanned Systems |
| MITRE ATT&CK | MITRE Adversarial Tactics, Techniques, and Common Knowledge |
| NIST | National Institute of Standards and Technology |
| OSO | Operational Safety Objective |
| SAIL | Specific Assurance and Integrity Level |
| SORA | Specific Operations Risk Assessment |
| STS | Standard Scenario |
| UAS | Unmanned Aircraft Systems |
| UTM | Unmanned Traffic Management |
| UX | User Experience |
| VLOS | Visual Line of Sight |

References

1. Andrysiak, J.; Fraczek, S. Risks of Drone Use in Light of Literature Studies. Sensors 2024, 24, 1205. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10892979 (accessed on 13 February 2025).

2. JARUS. Guidelines on Specific Operations Risk Assessment (SORA). 2017. Available online: https://jarus-rpas.org (accessed on 10 February 2025).

3. EASA. Easy Access Rules for Unmanned Aircraft Systems; European Union Aviation Safety Agency: Cologne, Germany, 2024.

4. Martinez, C.; Sanchez-Cuevas, P.J.; Gerasimou, S.; Bera, A. SORA Methodology for Multi-UAS Airframe Inspections in an Airport. Drones 2021, 5, 141. Available online: https://www.mdpi.com/2504-446X/5/4/141 (accessed on 15 January 2025).

5. Schnüriger, P.; Schreiber, J.; Widmer, K.; Lenhart, P.M. SORA Tool—A Specific Operation Risk Assessment Tool for Civilian Drone Operations. Drones 2025, 9, 1–11.

6. Jeelani, I.; Gheisari, M. Safety Challenges of UAV Integration in Construction. Saf. Sci. 2021, 144, 105473. Available online: https://www.sciencedirect.com/science/article/pii/S0925753521003167 (accessed on 12 February 2025).

7. Tran, T.D. Cybersecurity Risk Assessment for Unmanned Aircraft Systems. Ph.D. Thesis, Université Grenoble Alpes, Saint-Martin-d’Hères, France, 2021. Available online: https://hal.science/tel-03200719v1 (accessed on 4 February 2025).

8. JARUS. Publications. Available online: http://jarus-rpas.org/publications/ (accessed on 19 January 2025).

9. EUROCONTROL. Available online: https://www.eurocontrol.int/ (accessed on 22 February 2025).

10. Wyszywacz, W. SORA: Assumptions and Evaluation of the Method. Rev. Eur. De Derecho De La Naveg. Marítima Y Aeronáutica 2023, 39, 25–45.

11. Denney, E.; Pai, G. Tool Support for Assurance Case Development. Autom. Softw. Eng. 2018, 25, 435–499.

12. Pinto, G.; De Souza, C.; Rocha, T.; Steinmacher, I.; Souza, A.; Monteiro, E. Developer Experiences with a Contextualized AI Coding Assistant. arXiv 2023, arXiv:2311.18452.

13. Nogueira, A.; Silva, J.; Reis, A. Unmanned Aircraft Systems: A Pathway to Obtain a Design Verification Report. J. Airl. Airpt. Manag. 2024, 14, 19–37. Available online: https://www.jairm.org/index.php/jairm/article/viewFile/421/160 (accessed on 9 February 2025).

14. Denney, E.; Pai, G.; Whiteside, I. Modeling the Safety Architecture of UAS Flight Operations. In Proceedings of the Computer Safety, Reliability, and Security (SAFECOMP 2017), LNCS 10488, Trento, Italy, 13–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 162–178.

15. Denney, E.; Pai, G.; Whiteside, I. Model-Driven Development of Safety Architectures. In Proceedings of the 2017 ACM/IEEE 20th International Conference on Model Driven Engineering Languages and Systems (MODELS), Austin, TX, USA, 17–22 September 2017; pp. 156–166.

16. Maharaj, A.V.; Qian, K.; Bhattacharya, U.; Fang, S.; Galatanu, H.; Garg, M.; Hanessian, R.; Kapoor, N.; Russell, K.; Vaithyanathan, S.; et al. Evaluation and Continual Improvement for an Enterprise AI Assistant. arXiv 2024, arXiv:2407.12003.

17. Castro, D.G.; García, E.V. Safety Challenges for Integrating U-Space in Urban Environments. In Proceedings of the IEEE International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021. Available online: https://ieeexplore.ieee.org/document/9476883 (accessed on 15 January 2025).

18. Del Guercio, A.M. Development of a WebApp for Safe Mission Planning for UAS in Urban Areas. Master’s Thesis, Politecnico di Torino, Turin, Italy, 2024. Available online: https://webthesis.biblio.polito.it/secure/33138/1/tesi.pdf (accessed on 24 February 2025).

19. Rana, M.; Saboor, K.; Vishal, S.; Hussain, A. AI-Enabled Intelligent Assistant for Personalized and Adaptive Learning in Higher Education. arXiv 2023, arXiv:2309.10892.

20. JARUS. SORA v2.5 Cyber Extension (JAR_doc_31). Available online: http://jarus-rpas.org/wp-content/uploads/2024/06/SORA-v2.5-Cyber-Extension-Release-JAR_doc_31.pdf (accessed on 23 January 2025).

21. Tran, T.D.; Thiriet, J.M.; Marchand, N.; El Mrabti, A. A Cybersecurity Risk Framework for Unmanned Aircraft Systems under Specific Category. J. Intell. Robot. Syst. 2022, 104, 4. Available online: https://link.springer.com/article/10.1007/s10846-021-01512-0 (accessed on 16 January 2025).

22. Barber, D. Bayesian Reasoning and Machine Learning; Cambridge University Press: Cambridge, UK, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).