A Secure and Robust Multimodal Framework for In-Vehicle Voice Control: Integrating Bilingual Wake-Up, Speaker Verification, and Fuzzy Command Understanding

Abstract

1. Introduction

- An optimized ECAPA-TDNN model enhanced with spectral augmentation, sliding window feature fusion, and an adaptive threshold mechanism, designed for accurate and efficient speaker verification on resource-constrained hardware [25].

- A comprehensive integration of the proposed modules into a fully functional, hardware-agnostic framework, validated through extensive experiments that demonstrate end-to-end performance gains and practical viability.

2. Methodology: Key Technologies of the Proposed System

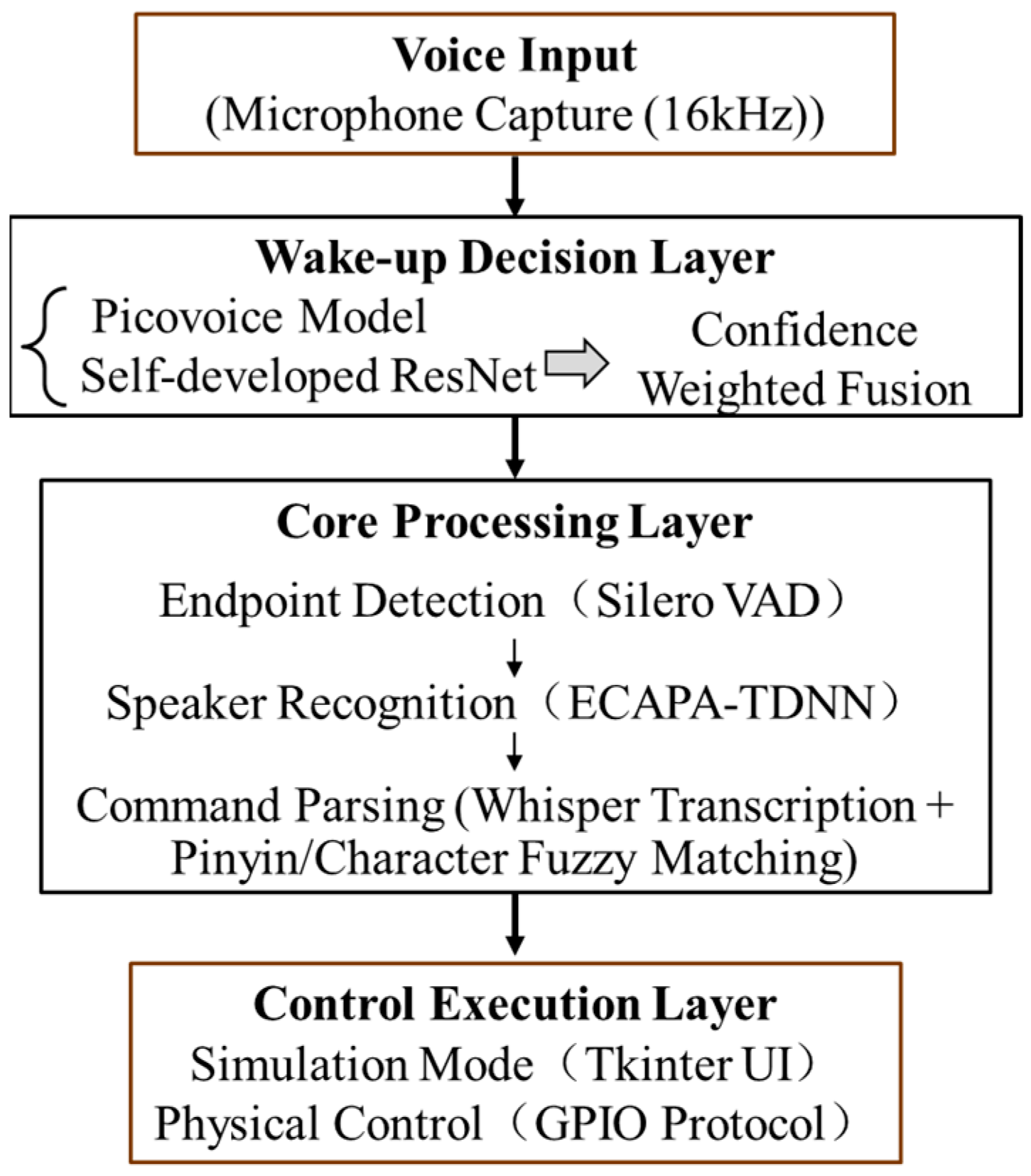

2.1. System Architecture Overview

2.1.1. Audio Preprocessing and Input

2.1.2. System Workflow

- (1) Audio Input Layer: Raw audio is continuously captured and preprocessed as described in Section 2.1.1. The stream of processed audio features is then delivered to the subsequent layer.

- (2) Wake-up Decision Layer: The feature stream is continuously analyzed by a dual-channel wake-up mechanism (detailed in Section 2.2). This mechanism operates two models in parallel: a commercial engine (Picovoice Porcupine) for the English wake word (“Hey Porcupine”) and a custom, lightweight ResNet-Lite model for the Chinese wake word (“Xiaotun”). An arbitration logic fuses their confidence scores to make a robust, language-agnostic wake-up decision. Upon a positive detection, the system activates, and a segment of audio containing the user’s subsequent command is forwarded to the next layer.

- (3) Core Processing Layer: Upon receiving the audio segment from the Wake-up Decision Layer, this layer performs three tasks in sequence:

  - Voice Activity Detection (VAD) and Endpointing: A Silero VAD module first processes the audio segment to detect the start and end points of the user’s spoken command, removing leading and trailing silence.

  - Speaker Verification: The segmented speech is then fed into our improved ECAPA-TDNN model (Section 2.3), which generates a speaker embedding and verifies the user’s identity against the enrolled profiles.

  - Command Parsing: Only after successful speaker verification is the audio segment transcribed to text by the Whisper ASR engine. The transcript is then interpreted by our dual-tier fuzzy command matching algorithm (Section 2.4), which determines the user’s intent by computing its similarity to a set of predefined commands at both the character and pinyin levels.

- (4) Control Execution Layer: This layer acts as the execution endpoint. It receives the validated command from the Core Processing Layer and executes it either on a custom Tkinter-based software simulator (developed in-house using Python libraries for prototyping and validation) or on actual in-vehicle hardware via a hardware-agnostic control interface (for deployment), facilitating a seamless “simulation-to-deployment” transition.
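The layered workflow is essentially a chain of gates, each of which can stop the interaction before the next, more expensive stage runs. The sketch below shows that control flow only; every stage is a hypothetical callable standing in for the real module (Porcupine/ResNet-Lite, Silero VAD, ECAPA-TDNN, Whisper, the fuzzy matcher), and the status strings are illustrative, not the system's actual interface.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# Hypothetical stage signatures; real modules are replaced by plain callables.
Audio = Sequence[float]


@dataclass
class Pipeline:
    wake: Callable[[Audio], bool]            # Wake-up Decision Layer
    trim: Callable[[Audio], Audio]           # VAD endpointing
    verify: Callable[[Audio], bool]          # speaker verification
    transcribe: Callable[[Audio], str]       # ASR
    match: Callable[[str], Optional[str]]    # fuzzy command matching
    execute: Callable[[str], None]           # Control Execution Layer

    def handle(self, wake_audio: Audio, command_audio: Audio) -> str:
        """Run one interaction and return a status string for logging."""
        if not self.wake(wake_audio):
            return "idle"
        speech = self.trim(command_audio)
        if not speech:
            return "no_speech"
        # ASR is only invoked after the speaker has been verified.
        if not self.verify(speech):
            return "rejected_speaker"
        command = self.match(self.transcribe(speech))
        if command is None:
            return "unrecognized"
        self.execute(command)
        return f"executed:{command}"
```

Ordering verification before transcription means that audio from an unverified speaker never reaches the ASR engine or the command matcher, which is where the resource savings claimed for the layered design would come from.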

2.2. Dual-Model Wake-Up Mechanism

2.2.1. Hybrid Architecture Design

- English Channel: Utilizes the commercially available Picovoice Porcupine engine, optimized for the wake word “Hey Porcupine”.

- Chinese Channel: Employs a custom-developed ResNet-Lite model, specifically designed for accurate detection of the Chinese wake word “Xiaotun”.
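The arbitration rule that fuses the two channels' confidence scores is not spelled out at this point, so the following is only one plausible rule, sketched under assumptions: each channel reports a score in [0, 1], each has its own (hypothetical) threshold, and when both fire, the channel with the larger margin above its threshold wins. How a score is obtained from each engine is left open.

```python
from typing import Dict, Optional, Tuple


def arbitrate(scores: Dict[str, float],
              thresholds: Dict[str, float]) -> Optional[Tuple[str, float]]:
    """Return (channel, margin) for the winning channel, or None if none fires.

    A channel is a candidate when its score reaches its own threshold;
    among candidates, the largest margin above threshold wins.
    """
    best: Optional[Tuple[str, float]] = None
    for channel, score in scores.items():
        margin = score - thresholds[channel]
        if margin >= 0 and (best is None or margin > best[1]):
            best = (channel, margin)
    return best
```

Comparing margins rather than raw scores is a deliberate choice in this sketch: a commercial engine and a self-trained CNN are unlikely to be calibrated on the same scale, so per-channel thresholds absorb that difference.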

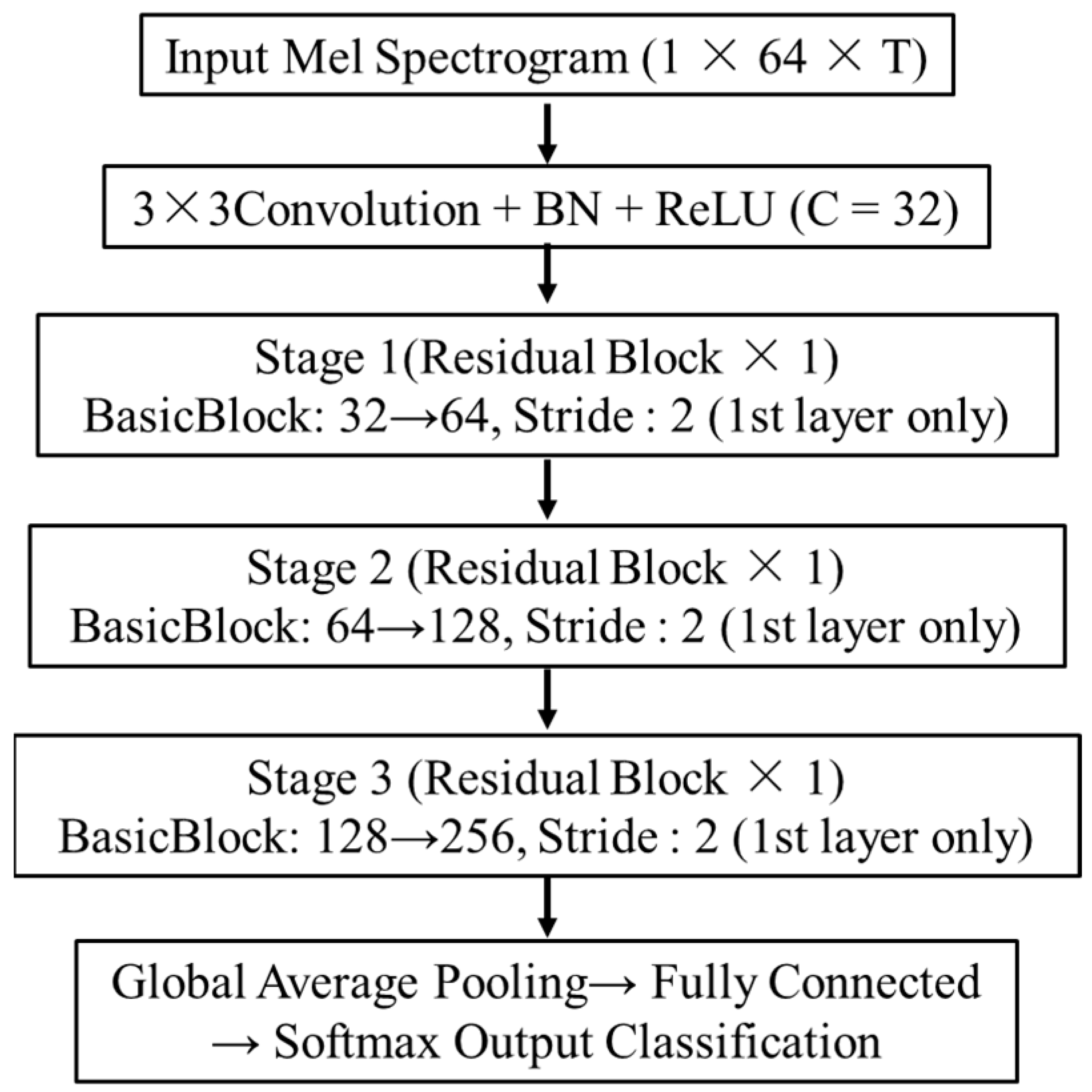

2.2.2. Lightweight Optimization of ResNet-Lite

- (1) Stage Reduction: The original four residual stages were reduced to three, halving the network depth from 18 to 9 weighted layers.

- (2) Kernel Size Reduction: The large 7 × 7 convolution in the input stem was replaced with a smaller 3 × 3 kernel.

- (3) Pooling Removal: The initial max-pooling layer was omitted to preserve fine-grained temporal and spectral features crucial for discerning short wake-word syllables and Mandarin tones.

- (4) Progressive Channel Scaling: The number of convolutional channels scales as 32 → 64 → 128 → 256 across stages. A down-sampling stride of 2 is applied only at the first convolutional layer of each stage, while all other layers use a stride of 1 to better preserve temporal details: $s_{k,j} = 2$ for $j = 1$ and $s_{k,j} = 1$ for $j > 1$, where $s_{k,j}$ denotes the stride of the $j$-th convolutional layer in stage $k$.
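The effect of removing max-pooling and shrinking the stem can be checked with plain convolution arithmetic. The sketch traces the (time, frequency) size of the feature map through only the layers that can change it. The ResNet-18 layout is the standard one from He et al. [26]; for ResNet-Lite, a stem stride of 1 is an assumption, as is the 98 × 80 input (1 s of audio framed at 25 ms / 10 ms with 80 Mel bins, as in Section 3.1.2).

```python
from typing import List, Tuple

Layer = Tuple[int, int, int]  # (kernel, stride, padding), same on both axes


def conv_out(n: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling layer along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def trace(shape: Tuple[int, int], layers: List[Layer]) -> List[Tuple[int, int]]:
    """(time, frequency) feature-map size after each listed layer."""
    t, f = shape
    sizes = []
    for k, s, p in layers:
        t, f = conv_out(t, k, s, p), conv_out(f, k, s, p)
        sizes.append((t, f))
    return sizes


# Stride-1 3x3 convolutions with padding 1 keep the size, so only the
# layers that may down-sample are listed.
RESNET18 = [(7, 2, 3),   # 7x7 stem, stride 2
            (3, 2, 1),   # 3x3 max-pooling, stride 2
            (3, 1, 1),   # stage 1: no down-sampling
            (3, 2, 1),   # stage 2
            (3, 2, 1),   # stage 3
            (3, 2, 1)]   # stage 4
RESNET_LITE = [(3, 1, 1),  # 3x3 stem, stride 1 assumed, no max-pooling
               (3, 2, 1),  # stage 1: stride 2 on its first conv
               (3, 2, 1),  # stage 2
               (3, 2, 1)]  # stage 3

print(trace((98, 80), RESNET18)[-1])     # (4, 3)
print(trace((98, 80), RESNET_LITE)[-1])  # (13, 10)
```

Under these assumptions, the final ResNet-Lite feature map keeps roughly ten times more time steps than ResNet-18 for the same input, which is the kind of resolution the pooling-removal step is meant to preserve for short syllables.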

2.3. Voiceprint Recognition Optimization: Real-Time Speaker Verification Based on an Improved ECAPA-TDNN

- At the model architecture level, we design a structurally enhanced ECAPA-TDNN to improve feature representation and deep modeling capabilities (Section 2.3.1);

- During acoustic feature extraction, we introduce a sliding window feature fusion mechanism to enhance robustness against short utterances (Section 2.3.2);

- At the decision stage, we develop an adaptive threshold mechanism to improve system reliability under multi-speaker and noisy conditions (Section 2.3.3).

2.3.1. Improved ECAPA-TDNN Architecture

- (1) Input Augmentation with FbankAug

- (2) Feature Processing Pipeline Enhancement (log + CMVN)

- (3) Forward Path Improvement via Cross-Layer Residuals

- (4) Lightweight Output Design
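FbankAug, as its name and the SpecAugment reference [25] suggest, masks random bands of the filterbank input during training. A minimal SpecAugment-style sketch follows, assuming one frequency mask and one time mask per utterance with hypothetical maximum widths, and assuming masking happens after mean normalization so that a zero equals the average value.

```python
import random
from typing import List, Optional

Fbank = List[List[float]]  # [frames][mel_bins]


def fbank_aug(feats: Fbank, max_freq_width: int = 8, max_time_width: int = 10,
              rng: Optional[random.Random] = None) -> Fbank:
    """Zero one random frequency band and one random time span.

    Returns a new matrix; the input is left unchanged.
    """
    rng = rng or random.Random()
    out = [row[:] for row in feats]
    n_frames, n_bins = len(out), len(out[0])
    # Frequency mask: bins [f0, f0 + fw) are zeroed in every frame.
    fw = rng.randint(0, min(max_freq_width, n_bins))
    f0 = rng.randint(0, n_bins - fw)
    # Time mask: frames [t0, t0 + tw) are zeroed across all bins.
    tw = rng.randint(0, min(max_time_width, n_frames))
    t0 = rng.randint(0, n_frames - tw)
    for t in range(n_frames):
        for f in range(n_bins):
            if f0 <= f < f0 + fw or t0 <= t < t0 + tw:
                out[t][f] = 0.0
    return out
```

Augmentation of this kind is applied only during training; at enrollment and verification time the features pass through unmasked.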

2.3.2. Sliding Window Feature Fusion
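One common way to realize sliding-window feature fusion for speaker embeddings is to embed overlapping windows of the utterance and average the normalized embeddings. The sketch below assumes exactly that; the window and hop sizes (200 and 100 frames, i.e., 2 s and 1 s at a 10 ms shift), mean fusion, and the embedding callable are hypothetical stand-ins, not the authors' reported settings.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def sliding_windows(n_frames: int, win: int, hop: int) -> List[range]:
    """Frame ranges covering the utterance; the last window ends at n_frames."""
    if n_frames <= win:
        return [range(0, n_frames)]          # short utterance: one window
    starts = list(range(0, n_frames - win + 1, hop))
    if starts[-1] + win < n_frames:          # make sure the tail is covered
        starts.append(n_frames - win)
    return [range(s, s + win) for s in starts]


def l2_normalize(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def fused_embedding(frames: Sequence[Sequence[float]],
                    embed: Callable[[Sequence[Sequence[float]]], Vector],
                    win: int = 200, hop: int = 100) -> Vector:
    """Average the L2-normalized embeddings of overlapping windows."""
    embs = [l2_normalize(embed([frames[i] for i in r]))
            for r in sliding_windows(len(frames), win, hop)]
    dim = len(embs[0])
    mean = [sum(e[d] for e in embs) / len(embs) for d in range(dim)]
    return l2_normalize(mean)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embeddings."""
    return sum(x * y for x, y in zip(a, b)) / (
        math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))
```

Averaging over overlapping windows smooths out windows dominated by noise or silence, which is one reason such fusion can help with short or uneven utterances.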

2.3.3. Adaptive Threshold Decision Mechanism

- The SNR of the input audio is estimated, yielding a raw value $\mathrm{SNR}_{\mathrm{raw}}$ (in dB).

- The SNR is clamped to the range [0, 20] dB: $\mathrm{SNR}_c = \min(\max(\mathrm{SNR}_{\mathrm{raw}}, 0), 20)$.

- The dynamic threshold is computed via linear interpolation, increasing gradually from 0.34 (in high-noise conditions) to 0.44 (in quiet conditions) as the SNR improves: $\tau = 0.34 + (0.44 - 0.34)\cdot \mathrm{SNR}_c / 20$.
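The clamping and interpolation steps translate directly into code. In the sketch below, the bounds (0 and 20 dB) and thresholds (0.34 and 0.44) come from the text; the energy-based SNR estimator, which assumes separate speech and noise-only segments (for example, as delimited by the VAD), and the use of a cosine score in `accept` are assumptions for illustration.

```python
import math
from typing import Sequence

SNR_MIN_DB, SNR_MAX_DB = 0.0, 20.0
TAU_NOISY, TAU_QUIET = 0.34, 0.44   # thresholds at 0 dB and 20 dB


def estimate_snr_db(speech: Sequence[float], noise: Sequence[float]) -> float:
    """Crude SNR: (speech+noise power - noise power) / noise power, in dB."""
    p_mix = sum(x * x for x in speech) / len(speech)
    p_noise = max(sum(x * x for x in noise) / len(noise), 1e-12)
    p_clean = max(p_mix - p_noise, 1e-12)
    return 10.0 * math.log10(p_clean / p_noise)


def adaptive_threshold(snr_db: float) -> float:
    snr_c = min(max(snr_db, SNR_MIN_DB), SNR_MAX_DB)        # clamp to [0, 20] dB
    alpha = (snr_c - SNR_MIN_DB) / (SNR_MAX_DB - SNR_MIN_DB)
    return TAU_NOISY + alpha * (TAU_QUIET - TAU_NOISY)       # linear interpolation


def accept(score: float, snr_db: float) -> bool:
    """Accept the claimed speaker if the similarity score reaches the threshold."""
    return score >= adaptive_threshold(snr_db)
```

For example, a score of 0.40 is accepted at 0 dB (threshold 0.34) but rejected at 20 dB (threshold 0.44). Relaxing the threshold in noise trades some security for fewer false rejections when noise depresses genuine scores.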

2.4. Fuzzy Command Matching Algorithm
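A minimal sketch of how a two-tier (character, then pinyin) matcher could work, assuming Python's standard `difflib.SequenceMatcher` ratio as the similarity measure, hypothetical thresholds of 0.8 for both tiers, and a toy pinyin table; a real system would use a full converter such as the pypinyin package. The command phrases and intent names are illustrative.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy pinyin table (toneless); unknown characters map to themselves.
TOY_PINYIN: Dict[str, str] = {
    "打": "da", "开": "kai", "关": "guan", "闭": "bi", "车": "che",
    "窗": "chuang", "床": "chuang", "调": "tiao", "高": "gao",
    "温": "wen", "度": "du",
}


def toy_pinyin(text: str) -> List[str]:
    return [TOY_PINYIN.get(ch, ch) for ch in text]


def similarity(a, b) -> float:
    """Similarity in [0, 1] between two strings or two syllable lists."""
    return SequenceMatcher(None, a, b).ratio()


def match_command(text: str, commands: Dict[str, str],
                  to_pinyin: Callable[[str], List[str]] = toy_pinyin,
                  char_thr: float = 0.8, pinyin_thr: float = 0.8
                  ) -> Optional[Tuple[str, str, float]]:
    """Return (intent, tier, score), or None if neither tier matches.

    Tier 1 compares characters; tier 2 compares pinyin syllables so that
    homophone ASR errors (e.g. 床 transcribed for 窗) can still match.
    """
    scored = [(similarity(text, p), intent) for p, intent in commands.items()]
    best_score, best_intent = max(scored)
    if best_score >= char_thr:
        return best_intent, "char", best_score
    py = to_pinyin(text)
    scored = [(similarity(py, to_pinyin(p)), intent) for p, intent in commands.items()]
    best_score, best_intent = max(scored)
    if best_score >= pinyin_thr:
        return best_intent, "pinyin", best_score
    return None
```

With the commands {"打开车窗": "open_window", "关闭车窗": "close_window"}, the ASR error "打开车床" scores only 0.75 at the character level but 1.0 on pinyin, so the second tier recovers "open_window"; the colloquial "打开窗" already matches at the character tier (6/7).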

3. Experiments and Results

3.1. Experimental Data and Setup

3.1.1. Data Composition and Statistics

- Wake-up Word Data: The training and test sets for the self-developed Chinese wake-up word “Xiaotun” were constructed from a private dataset recorded by 20 native speakers (aged 22–45, representing 10 major Chinese dialect regions, e.g., Qingdao and Dezhou in Shandong; Shaoguan and Guangzhou in Guangdong; Nanchang in Jiangxi; Xiamen, Quanzhou, and Sanming in Fujian; Zhengzhou in Henan; Wuhan in Hubei; Chengdu in Sichuan; and Chongqing), supplemented with synthetic speech generated by a text-to-speech (TTS) engine. The data includes a balanced mix of positive (wake-word) and negative (non-wake-word) samples. The English wake-up word “Hey Porcupine” was handled by the commercial Picovoice engine using its built-in model.

- Speaker Verification Data: The model was trained on the large-scale English VoxCeleb2 dataset. For testing, the official test sets of VoxCeleb2 (English) and CN-Celeb (Chinese) were used to evaluate cross-lingual and accent generalization. The test sets were augmented with three typical in-vehicle acoustic conditions: quiet, background music, and simulated high-speed driving noise.

- Command Recognition Data: A private dataset was collected for command recognition, comprising 6871 real recordings of 16 in-vehicle command categories (e.g., “open window,” “adjust temperature”) spoken by 20 native Chinese speakers (the same speakers as in the wake-up word data) across the three noise conditions.

3.1.2. Data Preprocessing and Feature Extraction

- Audio Preprocessing: Raw audio was resampled to a 16 kHz mono channel. The waveform amplitude was normalized to the range [−1, 1].

- Feature Extraction: We extracted 80-dimensional log-Mel filterbank features. The features were computed using a 25 ms Hamming window with a 10 ms frame shift.

- Feature Normalization: CMVN was applied to the Fbank features at the utterance level to reduce session variability and improve model convergence. This was integrated within the model’s forward pass.
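The preprocessing parameters fix the framing arithmetic: at 16 kHz, a 25 ms window is 400 samples and a 10 ms shift is 160 samples. The sketch below covers amplitude normalization, framing, the Hamming window, and utterance-level CMVN; the Mel filterbank and logarithm are omitted. Two details are assumptions: peak normalization is one reading of "normalized to [−1, 1]" (dividing 16-bit samples by 32768 is another), and frames are counted without edge padding.

```python
import math
from typing import List, Sequence

SAMPLE_RATE = 16_000                 # Hz, mono
WIN = SAMPLE_RATE * 25 // 1000       # 25 ms window -> 400 samples
HOP = SAMPLE_RATE * 10 // 1000       # 10 ms shift  -> 160 samples


def peak_normalize(x: Sequence[float]) -> List[float]:
    """Scale the waveform by its peak so every sample lies in [-1, 1]."""
    peak = max((abs(v) for v in x), default=0.0)
    return [v / peak for v in x] if peak > 0 else list(x)


def num_frames(n_samples: int, win: int = WIN, hop: int = HOP) -> int:
    """Number of full frames when no edge padding is used."""
    return 0 if n_samples < win else 1 + (n_samples - win) // hop


def hamming(win: int = WIN) -> List[float]:
    """Symmetric Hamming window of length `win`."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (win - 1)) for n in range(win)]


def cmvn(feats: Sequence[Sequence[float]], eps: float = 1e-8) -> List[List[float]]:
    """Utterance-level CMVN: zero mean, unit variance per feature dimension."""
    n, dim = len(feats), len(feats[0])
    mean = [sum(row[d] for row in feats) / n for d in range(dim)]
    std = [math.sqrt(sum((row[d] - mean[d]) ** 2 for row in feats) / n)
           for d in range(dim)]
    return [[(row[d] - mean[d]) / (std[d] + eps) for d in range(dim)] for row in feats]


# One second of 16 kHz audio yields 98 frames (each later mapped to 80 log-Mel bins).
print(num_frames(SAMPLE_RATE))
```

Computing the statistics per utterance, rather than over the whole corpus, is what lets CMVN compensate for session-specific channel effects such as a particular microphone or cabin.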

3.1.3. Experimental Platform and Hyperparameters

3.2. Evaluation of the Dual-Channel Wake-Up Mechanism

3.2.1. Experimental Design and Rationale

- (1) The Commercial Baseline: The native performance of the Picovoice Porcupine engine for the English wake-word “Hey Porcupine” was measured to establish a reference benchmark for a mature, commercial-grade solution.

- (2) The Integrated Commercial Channel: The performance of the same Picovoice engine was re-evaluated within our integrated system framework. This direct comparison aims to quantify any performance overhead or degradation introduced by our unified front-end audio processing pipeline and system orchestration.

- (3) The Self-Developed ResNet-Lite Channel: The performance of our custom, lightweight ResNet-Lite model for the Chinese wake-word “Xiaotun” was evaluated. In the absence of a directly comparable open-source Chinese model, this evaluation serves to demonstrate the standalone viability and effectiveness of our custom-developed solution in fulfilling a critical system requirement.

3.2.2. Results and Discussion

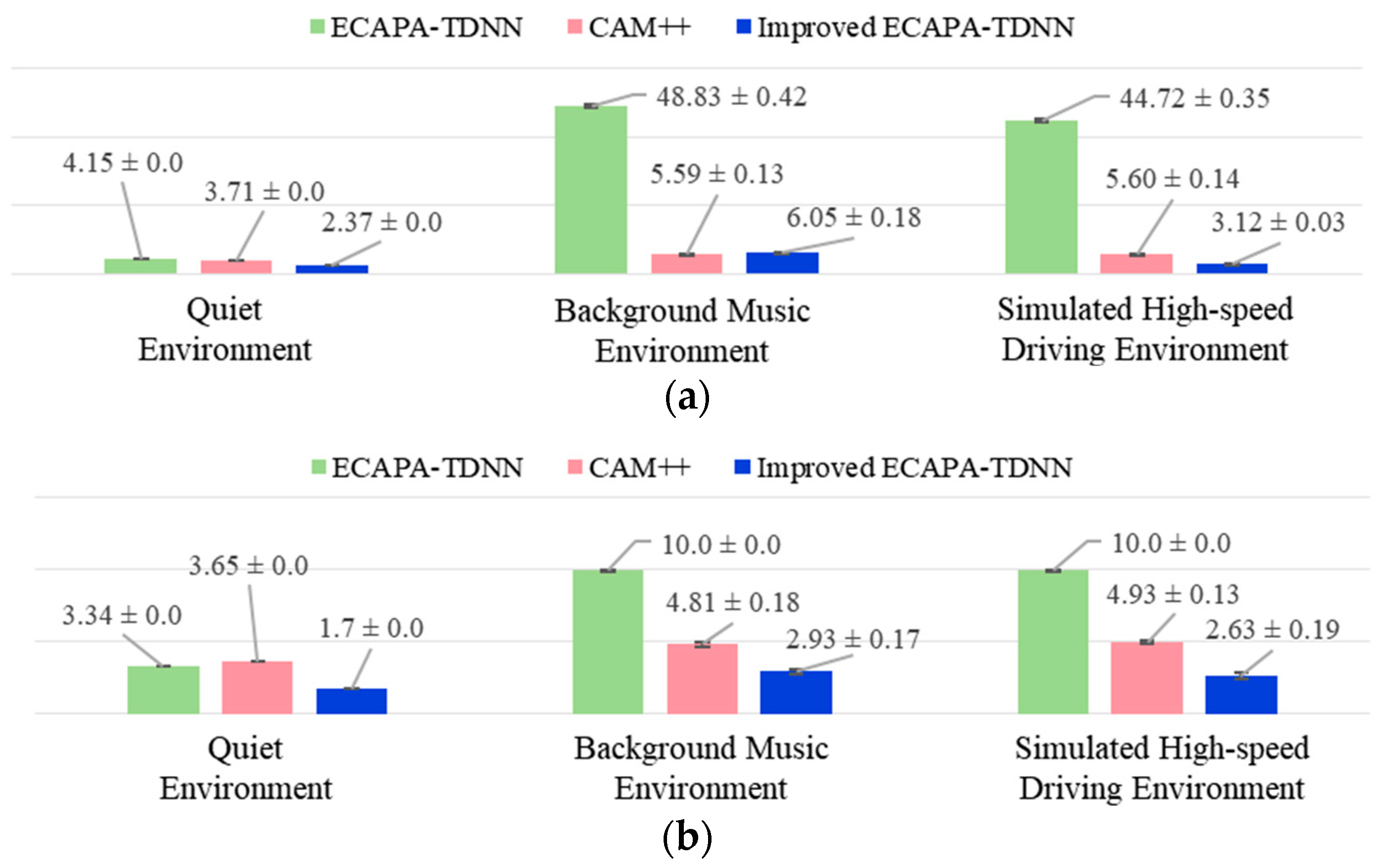

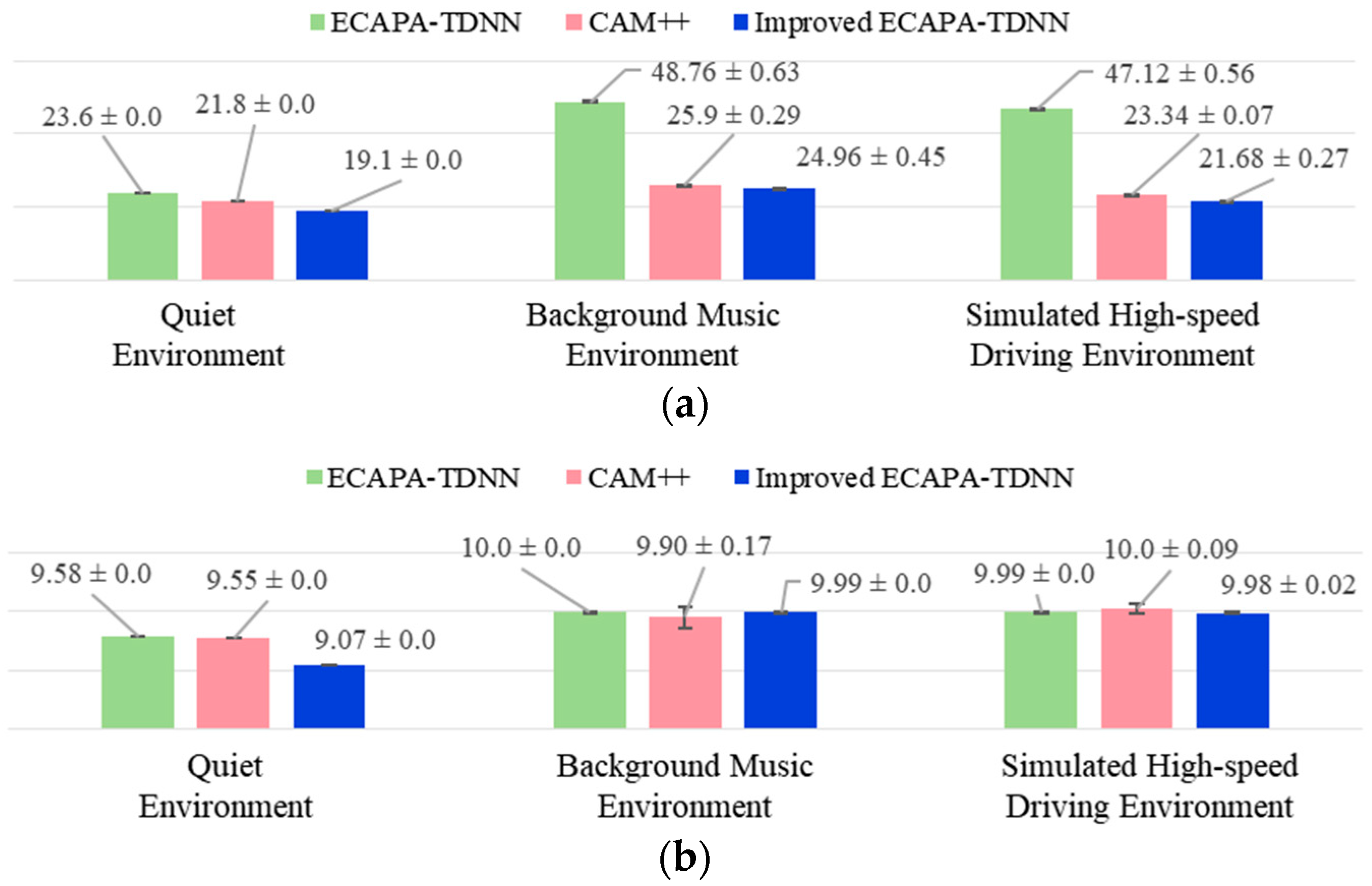
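The native-versus-integrated comparison is meant to quantify integration overhead, and the arithmetic can be checked directly against the Picovoice rows of the wake-up results table. The sketch below copies those values and computes the percentage-point change per metric and noise condition; the largest change is a 2.19-point drop in wake-up success rate under condition B (95.38% to 93.19%).

```python
# Values copied from the wake-up results table (Picovoice, English channel).
NATIVE = {"accuracy": [99.95, 99.76, 99.96], "success": [99.51, 95.38, 99.76]}
INTEGRATED = {"accuracy": [99.95, 99.66, 99.97], "success": [99.51, 93.19, 99.76]}
CONDITIONS = ["A", "B", "C"]


def deltas(native, integrated):
    """Percentage-point change (integrated minus native) per metric and condition."""
    return {
        metric: {c: round(i - n, 2)
                 for c, n, i in zip(CONDITIONS, native[metric], integrated[metric])}
        for metric in native
    }


for metric, per_condition in deltas(NATIVE, INTEGRATED).items():
    print(metric, per_condition)
```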

3.3. Performance Evaluation of Speaker Recognition

3.3.1. Comparative Models and Evaluation Plan

3.3.2. Comparative Results and Analysis

- (1) Superiority over the Standard Baseline

- (2) Highly Competitive Performance against the State of the Art

- (3) Conclusion on Practical Value
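Comparisons of speaker verification models are usually reported as an equal error rate (EER); whether that is the exact metric used here is an assumption. A simple threshold-sweep estimate is sketched below: it tries every observed score as a threshold and reports the mean of the false acceptance and false rejection rates where the two are closest. It is quadratic in the number of trials, which is fine for illustration but not for the full VoxCeleb2 or CN-Celeb trial lists.

```python
from typing import Sequence


def equal_error_rate(target: Sequence[float], impostor: Sequence[float]) -> float:
    """Approximate EER by sweeping thresholds over all observed scores.

    FAR(t) = share of impostor scores >= t; FRR(t) = share of target scores < t.
    Returns (FAR + FRR) / 2 at the threshold where |FAR - FRR| is smallest.
    """
    best_gap, eer = float("inf"), 1.0
    for t in sorted(set(target) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)
        frr = sum(s < t for s in target) / len(target)
        if abs(far - frr) < best_gap:
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return eer
```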

3.4. Robustness Evaluation of Command Recognition

3.4.1. Experimental Design for Fuzzy Matching Validation
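Evaluating the matcher "across different ASR performance levels" requires a way to measure ASR quality; for Mandarin, the character error rate (CER) is the usual choice. The sketch computes CER from a Levenshtein distance; treating CER as the grouping criterion is an assumption, not the reported protocol.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance (substitution, insertion, deletion each cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate of an ASR hypothesis against its reference."""
    return edit_distance(ref, hyp) / max(len(ref), 1)
```

For instance, transcribing “打开车窗” as the homophone error “打开车床” gives a CER of 0.25 (one substitution in four characters).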

3.4.2. Results and Analysis of Fuzzy Matching Strategy

4. Integrated System Performance and Discussion

4.1. Analysis of Integrated Advantages and Synergistic Effects

- (1) Synergy between Robust Wake-up and Secure Verification: The dual-channel wake-up mechanism (Section 3.2) serves as a robust gatekeeper, ensuring that only intentional speech triggers the computationally intensive downstream processes. It is complemented by the improved ECAPA-TDNN model (Section 3.3), which acts as a security checkpoint. The effectiveness of this sequential filter is supported by the speaker verification module’s high accuracy under noise, which prevents commands from unverified speakers from being executed, closing a vulnerability in conventional systems that respond to any speaker. This layered approach reserves system responsiveness for authenticated users, enhancing both security and resource efficiency.

- (2) Decoupling of Authentication from Semantic Understanding: A key architectural advantage is the clear separation of speaker identity verification from command intent recognition. The fuzzy matching algorithm (Section 3.4) operates only on transcripts from verified speakers, so the system’s semantic flexibility (its ability to understand varied and imprecise commands) is granted exclusively after successful authentication. The results in Section 3.4 show that this flexibility is maintained across different ASR performance levels and noise conditions, indicating that the security module does not compromise the user experience for authorized individuals.

- (3) End-to-End Robustness through Modular Optimization: The experimental results collectively demonstrate robustness against a range of real-world challenges. The wake-up module handles cross-lingual activation in noise, the speaker verification module maintains low error rates despite short, noisy utterances, and the command matching algorithm tolerates ASR errors and colloquial expressions. Once integrated, these capabilities allow the system to handle compound scenarios. For instance, a command issued with a strong accent in a noisy cabin can be processed successfully: the system is reliably woken up, the speaker is correctly verified, and the non-standard command phrase is accurately understood. This end-to-end resilience results from the targeted optimizations in each module and their orchestration within the proposed architecture.

4.2. Limitations and Future Work

- (1) Lack of Embedded Platform Validation: The system has not yet been deployed on actual embedded processing platforms; all tests were conducted via a PC-based simulator. Future work will therefore prioritize the deployment and evaluation of the system’s operational efficiency on resource-constrained automotive-grade hardware.

- (2) Insufficient Diversity in Speech Samples: The current test sets, while covering multiple scenarios, do not fully capture the vast diversity of accents, speech rates, and real-world noise encountered in vehicles. A key future direction is to collect and incorporate richer, more challenging corpora to further validate and enhance the system’s universality and robustness.

- (3) Limited Context Understanding: The system currently operates on a single-command basis, lacking multi-turn dialog memory and contextual analysis. To enable more natural interaction, future enhancements will focus on integrating lightweight semantic parsing and context-aware intent recognition technologies.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rochat, J.L. Highway Traffic Noise. Acoust. Today 2016, 12, 38–47.

- Chakroun, R.; Frikha, M. Robust Features for Text-Independent Speaker Recognition with Short Utterances. Neural Comput. Appl. 2020, 32, 13863–13883.

- Hanifa, R.M.; Isa, K.; Mohamad, S. A Review on Speaker Recognition: Technology and Challenges. Comput. Electr. Eng. 2021, 90, 107005.

- Li, J.; Chen, C.; Azghadi, M.R.; Ghodosi, H.; Pan, L.; Zhang, J. Security and Privacy Problems in Voice Assistant Applications: A Survey. Comput. Secur. 2023, 134, 103448.

- Chen, T.; Chen, C.; Lu, C.; Chan, B.; Cheng, Y.; Chuang, H. A Lightweight Speaker Verification Model For Edge Device. In Proceedings of the 2023 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Taipei, Taiwan, 31 October–3 November 2023; pp. 1372–1377.

- Cihan, H.; Wu, Y.; Peña, P.; Edwards, J.; Cowan, B. Bilingual by Default: Voice Assistants and the Role of Code-Switching in Creating a Bilingual User Experience. In Proceedings of the 4th Conference on Conversational User Interfaces, Glasgow, UK, 26–28 July 2022.

- Hu, Q.; Zhang, Y.; Zhang, X.; Han, Z.; Yu, X. CAM: A Cross-Lingual Adaptation Framework for Low-Resource Language Speech Recognition. Inf. Fusion 2024, 111, 102506.

- Kunešová, M.; Hanzlíček, Z.; Matoušek, J. An Exploration of ECAPA-TDNN and x-Vector Speaker Representations in Zero-Shot Multi-Speaker TTS. In Text, Speech, and Dialogue, Proceedings of the TSD 2025; Ekštein, K., Konopík, M., Pražák, O., Pártl, F., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2026; Volume 16029.

- Desplanques, B.; Thienpondt, J.; Demuynck, K. ECAPA-TDNN: Emphasized Channel Attention, Propagation and Aggregation in TDNN Based Speaker Verification. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020.

- Liu, S.; Song, Z.; He, L. Improving ECAPA-TDNN Performance with Coordinate Attention. J. Shanghai Jiaotong Univ. Sci. 2024.

- Wang, S. Overview of Speaker Modeling and Its Applications: From the Lens of Deep Speaker Representation Learning. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 4971–4998.

- Snyder, D.; Garcia-Romero, D.; Sell, G.; Povey, D.; Khudanpur, S. X-Vectors: Robust DNN Embeddings for Speaker Recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5329–5333.

- Karo, M.; Yeredor, A.; Lapidot, I. Compact Time-Domain Representation for Logical Access Spoofed Audio. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 946–958.

- Zhang, Z.; Zhao, Z.; Wu, S.; Zhang, X.; Zhang, P.; Yan, Y. Multi-Branch Coordinate Attention with Channel Dynamic Difference for Speaker Verification. IEEE Signal Process. Lett. 2025, 32, 3225–3229.

- Li, W.; Yao, S.; Wan, B. TDNN Architecture with Efficient Channel Attention and Improved Residual Blocks for Accurate Speaker Recognition. Sci. Rep. 2025, 15, 23484.

- Yang, W.; Wei, J.; Lu, W.; Li, L.; Lu, X. Robust Channel Learning for Large-Scale Radio Speaker Verification. IEEE J. Sel. Top. Signal Process. 2025, 19, 248–259.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Volume 202, pp. 28492–28518.

- Graham, C.; Roll, N. Evaluating OpenAI’s Whisper ASR: Performance Analysis across Diverse Accents and Speaker Traits. JASA Express Lett. 2024, 4, 025206.

- Rong, Y.; Hu, X. Fuzzy String Matching Using Sentence Embedding Algorithms. In Neural Information Processing, Proceedings of the ICONIP 2016; Hirose, A., Ozawa, S., Doya, K., Ikeda, K., Lee, M., Liu, D., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9949.

- Bini, S.; Carletti, V.; Saggese, A.; Vento, M. Robust Speech Command Recognition in Challenging Industrial Environments. Comput. Commun. 2024, 228, 107938.

- Kheddar, H.; Hemis, M.; Himeur, Y. Automatic Speech Recognition Using Advanced Deep Learning Approaches: A Survey. Inf. Fusion 2024, 109, 102422.

- Horiguchi, S.; Tawara, N.; Ashihara, T.; Ando, A.; Delcroix, M. Can We Really Repurpose Multi-Speaker ASR Corpus for Speaker Diarization? In Proceedings of the 2025 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Honolulu, HI, USA, 6–10 December 2025.

- Lim, H. Lightweight Feature Encoder for Wake-Up Word Detection Based on Self-Supervised Speech Representation. In Proceedings of the ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Sun, X.; Fu, J.; Wei, B.; Li, Z.; Li, Y.; Wang, N. A Self-Attentional ResNet-LightGBM Model for IoT-Enabled Voice Liveness Detection. IEEE Internet Things J. 2023, 10, 8257–8270.

- Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.-C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. SpecAugment: A Simple Data Augmentation Method for Automatic Speech Recognition. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- OpenSLR. Open Speech and Language Resources. Available online: http://www.openslr.org (accessed on 25 August 2025).

| Item | ResNet-18 | ResNet-Lite | Description |
|---|---|---|---|
| Number of Residual Stages | 4 | 3 | ResNet-Lite removes one residual stage |
| Convolution Kernel Size | 7 × 7 | 3 × 3 | Smaller kernels reduce computational load |
| Max Pooling | Yes | No | Preserves more speech detail |
| Total Weighted Layers | 18 | 9 | Network depth halved |
| Parameters (Approx.) | 11.2 M | 3.5 M | ~70% reduction in parameters |
| Inference Speed (Desktop CPU) | ~35 ms/sample | ~12 ms/sample | Test environment: Intel i5-12400 + PyTorch |
| Memory Usage (Desktop GPU) | ~180 MB | ~64 MB | Test environment: NVIDIA GTX 1660Ti |
| Suitable Deployment | General servers/GPU platforms | Resource-constrained edge devices (Automotive) | Optimized for embedded voice control environments |

| Data Type | Source | Language | # Speakers *1 | # Utterances *1 | Real/Synthetic | Noise Conditions *3 | Purpose |
|---|---|---|---|---|---|---|---|
| Wake-up Word | Private + TTS | Chinese (“Xiaotun”) | 20 + TTS | 2055 (481:1574) *2 | ~1:5 (Real vs. Synthetic) | A, B, or C | Training |
| | Private + TTS | Chinese (“Xiaotun”) | 20 + TTS | 491 (100:391) *2 | ~1:5 (Real vs. Synthetic) | A, B, or C | Testing (Chinese Channel) |
| | Picovoice | English (“Hey Porcupine”) | N/A | (Built-in) | N/A | N/A | Testing (English Channel) |
| Speaker Verification | VoxCeleb2 | English | 5994 | ~710 k | 100% Real | A, B, C, or their random superposition | Training |
| | VoxCeleb2 | English | 118 | 20 k (1:1) *2 | 100% Real | A, B, or C | Testing (English Channel) |
| | CN-Celeb | Chinese | 195 | 10 k (1:1) *2 | 100% Real | Inherent noise + A, B, or C | Testing (Chinese Channel) |
| Command Recognition | Private Dataset | Chinese | 20 | 6871 | 100% Real | A, B, or C | Testing |

| Model | Optimizer | Initial Learning Rate | Batch Size | Max Epochs | Weight Decay | Framework |
|---|---|---|---|---|---|---|
| Improved ECAPA-TDNN | Adam | 0.001 | 64 | 80 | 2 × 10⁻⁵ | PyTorch |
| ResNet-Lite | Adam | 0.001 | 16 | 100 | Not Applied | PyTorch |

| System | Module | Wake Word | Language | Accuracy (%), Noise A * | Accuracy (%), Noise B * | Accuracy (%), Noise C * | Wake-Up Success Rate (%), Noise A * | Wake-Up Success Rate (%), Noise B * | Wake-Up Success Rate (%), Noise C * |
|---|---|---|---|---|---|---|---|---|---|
| Commercial Picovoice | Picovoice (Native) | “Hey Porcupine” | English | 99.95 | 99.76 | 99.96 | 99.51 | 95.38 | 99.76 |
| Integrated Dual-Channel System | Picovoice (In Our System) | “Hey Porcupine” | English | 99.95 | 99.66 | 99.97 | 99.51 | 93.19 | 99.76 |
| | ResNet-Lite | “Xiaotun” | Chinese | 78.41 | 70.26 | 65.17 | 66.0 | 56.0 | 75.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Li, Y.; Ren, W.; Wang, X. A Secure and Robust Multimodal Framework for In-Vehicle Voice Control: Integrating Bilingual Wake-Up, Speaker Verification, and Fuzzy Command Understanding. Eng 2025, 6, 319. https://doi.org/10.3390/eng6110319