A Practical Tutorial on Spiking Neural Networks: Comprehensive Review, Models, Experiments, Software Tools, and Implementation Guidelines

Abstract

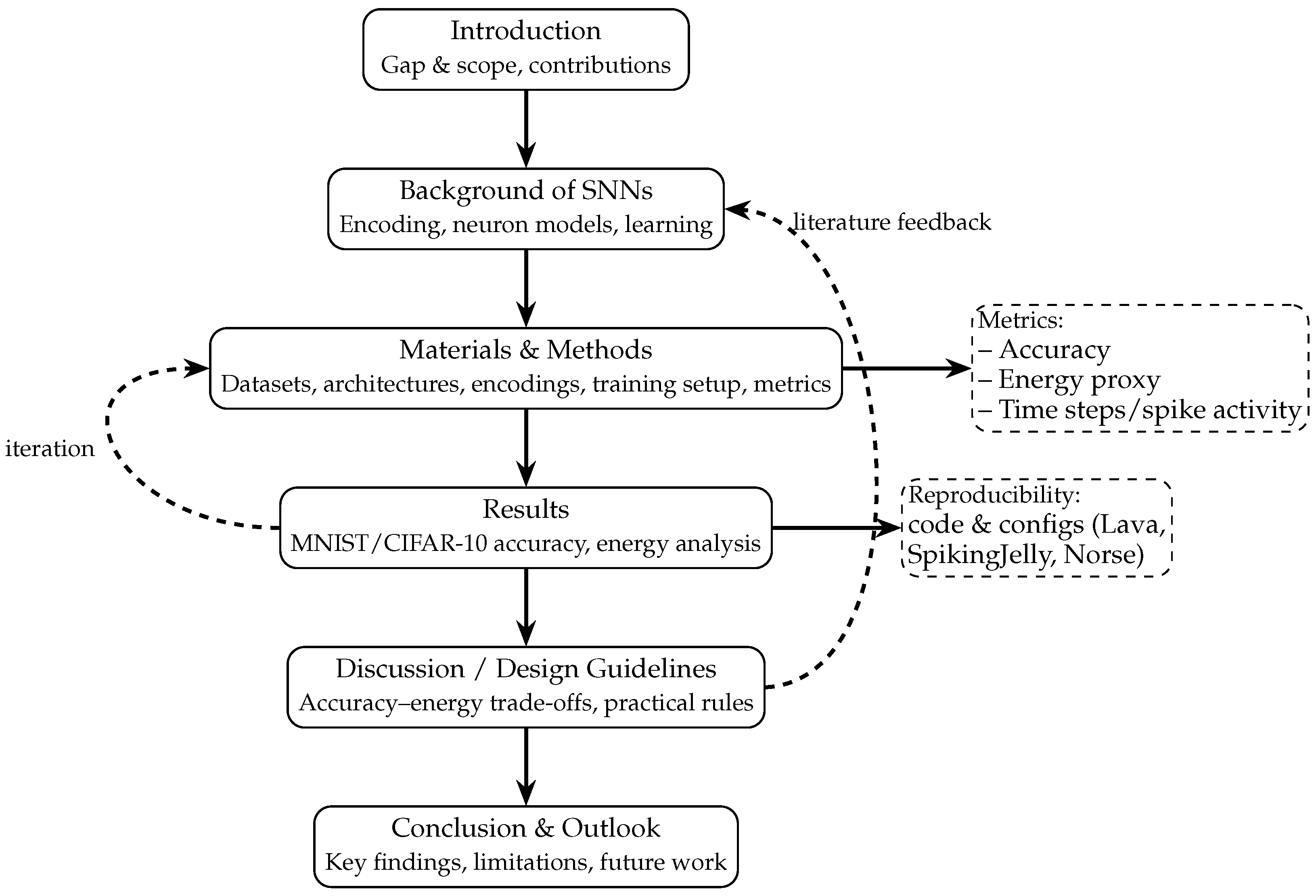

1. Introduction

- We systematize SNN components, as follows: neuron models (integrate-and-fire (IF)/leaky integrate-and-fire (LIF), adaptive leaky integrate-and-fire (ALIF), exponential integrate-and-fire/adaptive exponential integrate-and-fire (EIF/AdEx), resonate-and-fire (RF), Hodgkin–Huxley (HH), Izhikevich (IZH), resonate-and-fire–Izhikevich (RF–IZH) hybrid, current-based neuron (CUBA), sigma–delta (ΣΔ)); neural encodings (direct/single-value encoding, rate coding, temporal variants including time-to-first-spike (TTFS), ranked-N-of-M (R–NoM), population coding, phase-of-firing coding (PoFC), burst coding, sigma–delta encoding (ΣΔ)); and learning paradigms: supervised (backpropagation-through-time (BPTT) with surrogate gradients, e.g., SLAYER, SuperSpike, EventProp), unsupervised (spike-timing–dependent plasticity (STDP) and variants), reinforcement (reward-modulated STDP (R-STDP), e-prop), hybrid supervised STDP (SSTDP), and ANN→SNN conversion. The practical pipeline used in this study relies on supervised training via BPTT with surrogate gradients (aTan by default; SLAYER/SuperSpike-style updates) for the tutorial and benchmark experiments [16,17,18,19].

- We provide a practical tutorial (with reference to a representative neuromorphic software stack, e.g., Intel Lava) covering model construction, encoding choices, and training–inference workflows suitable for resource-constrained deployment.

- We establish a side-by-side evaluation protocol that compares SNNs with architecturally matched ANNs on a shallow setting (MNIST) and a deeper convolutional setting (CIFAR-10 with VGG-style backbones [34]). Metrics include task accuracy, time steps, spike activity, and power-oriented proxies to highlight accuracy–efficiency trade-offs.

- We distill design guidelines that map application goals—accuracy targets and per-inference energy budgets—onto actionable choices of neuron model, encoding scheme, number of time steps, and supervised surrogate-gradient training (e.g., SLAYER, SuperSpike, aTan).

2. Background of Spiking Neural Networks

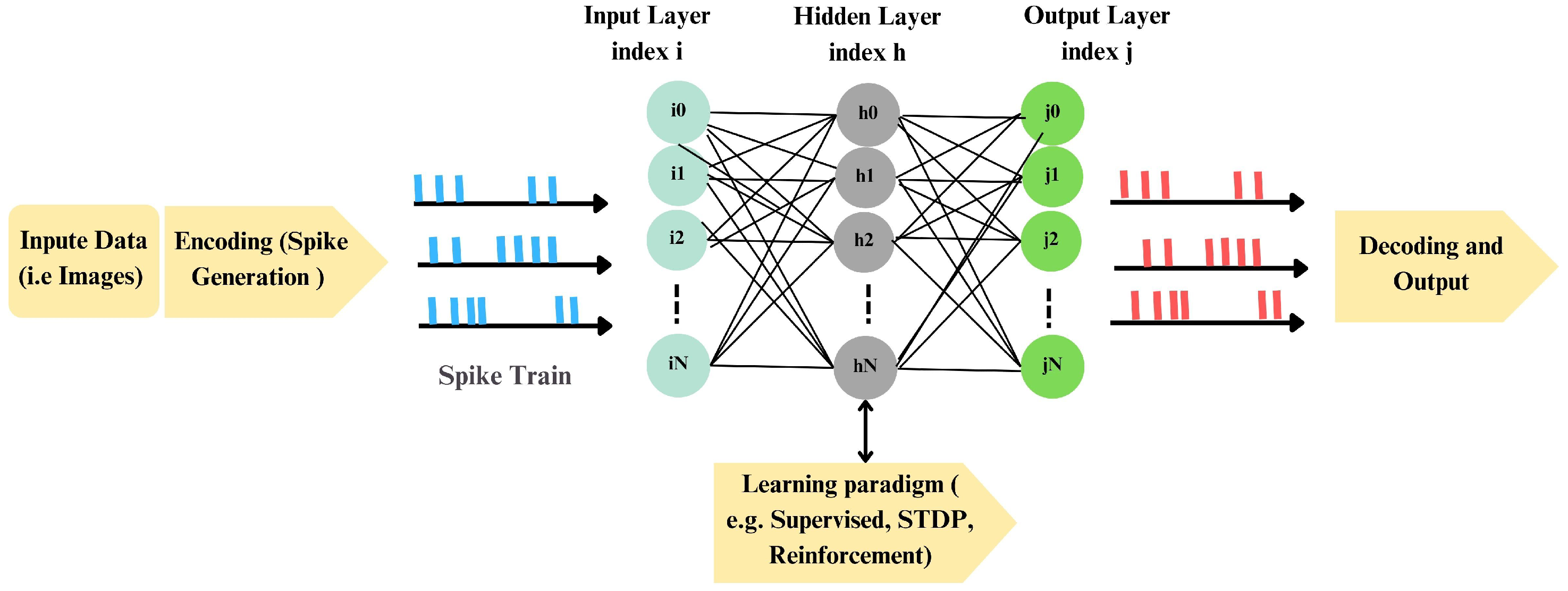

2.1. Key Aspects of Spiking Neural Networks

2.1.1. Processing Pipeline

2.1.2. Power Efficiency: Mechanisms and Practice

2.1.3. Advantages and Challenges

2.1.4. Learning and Encoding

2.1.5. Real-World Applications

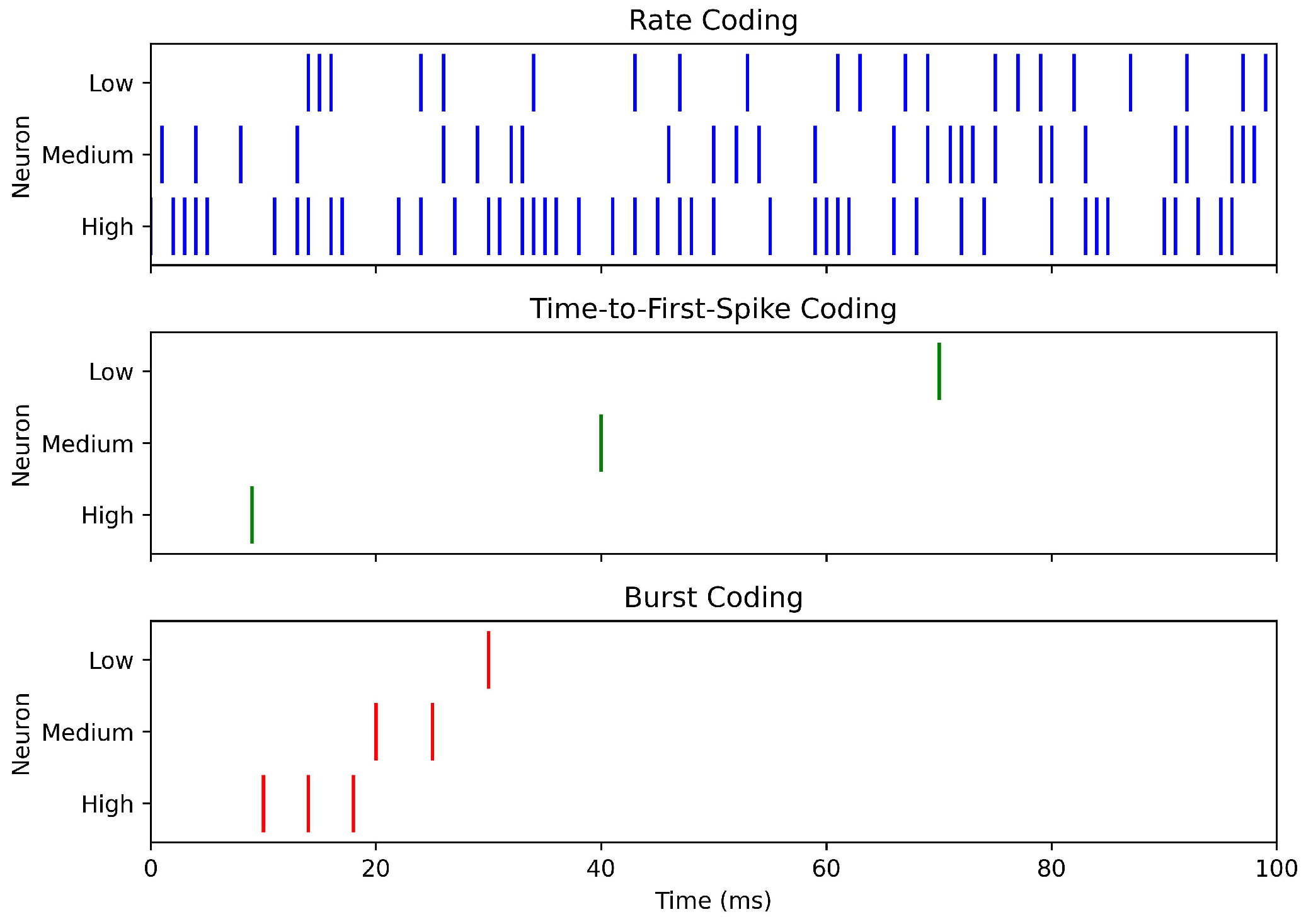

2.2. Encoding in SNNs

2.2.1. Rate Coding

2.2.2. Direct Input Encoding

2.2.3. Temporal Coding

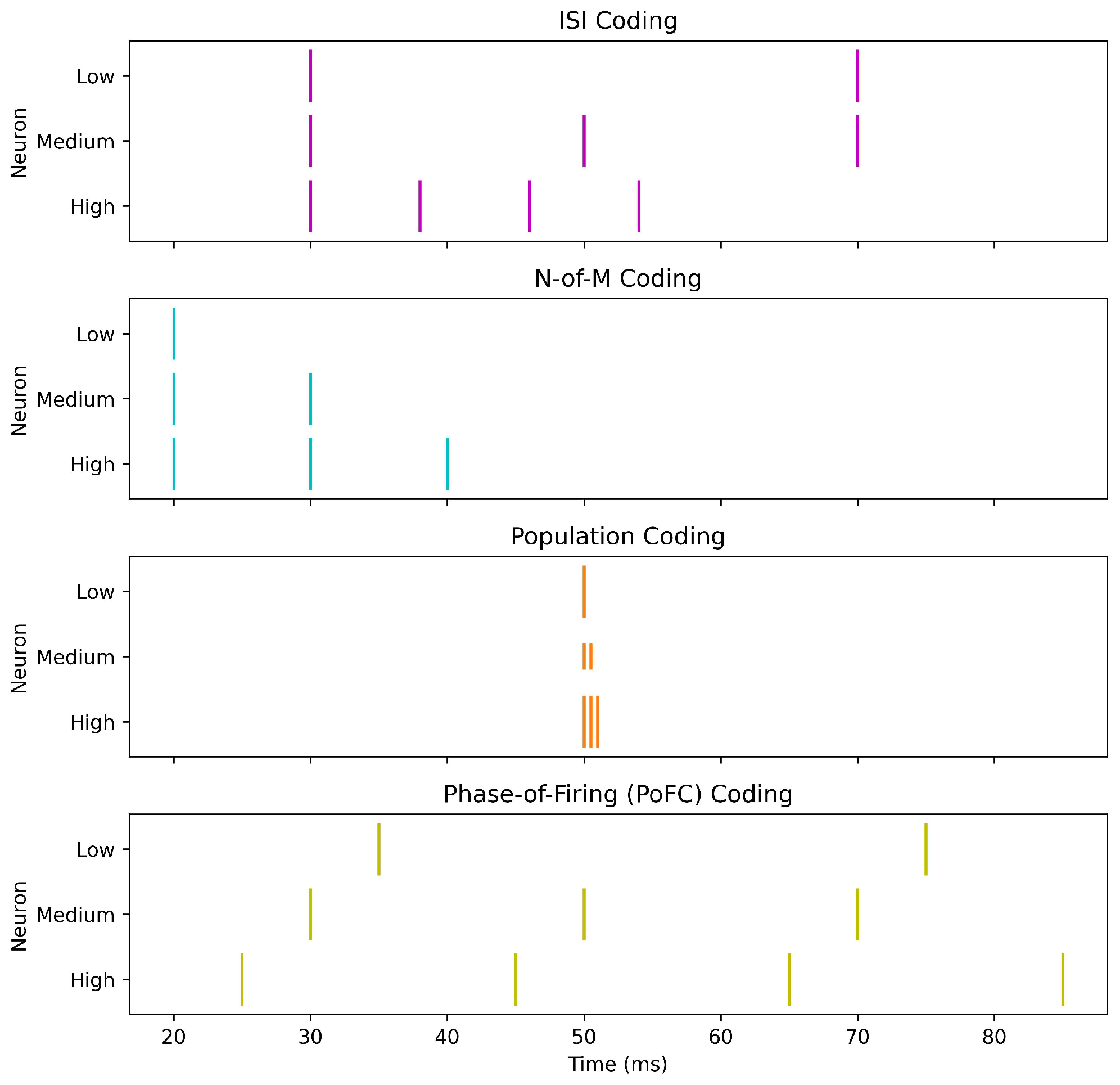

- Time-to-first-spike (TTFS): Encodes stimulus strength in the latency of the first spike: $t_{\text{spike}} = t_{\text{onset}} + \Delta t$, where $t_{\text{onset}}$ is the stimulus onset time and $\Delta t$ is the delay before firing [14]. TTFS is highly power-efficient, as minimal spiking activity can support rapid decisions, although it requires precise timing and complicates learning.

- Inter-spike interval (ISI): Encodes information in the time gap between consecutive spikes: $\Delta t_{\text{ISI}} = t_{i+1} - t_{i}$, where $t_{i}$ is the time of the $i$-th spike, providing richer temporal detail at the cost of increased spiking and energy use [14].

- N-of-M (NoM) coding: Transmits only the first N out of M possible spikes, improving hardware efficiency but discarding spike-order information [82].

- Rank order coding (ROC): Utilizes the sequence of spike arrivals according to synaptic weights, providing high discriminability but at the cost of computational intensity and sensitivity to precise timing [24].

- Ranked-N-of-M (R–NoM): Combines ROC and NoM by propagating only the first N spikes while weighting each one by a modulation function that decreases with spike order [82].
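The timing codes listed above can be illustrated with a minimal sketch. The function names, the linear intensity-to-latency map, and the `t_max` window are assumptions made for illustration and are not taken from the experiments in this paper.

```python
def ttfs_latency(intensity, t_onset=0.0, t_max=10.0):
    """Map a normalized intensity in [0, 1] to a first-spike time.

    Stronger stimuli fire earlier: the delay shrinks linearly from t_max
    (intensity 0) to 0 (intensity 1). The linear map is an assumption.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie in [0, 1]")
    delay = (1.0 - intensity) * t_max
    return t_onset + delay


def inter_spike_intervals(spike_times):
    """Return the gaps between consecutive spikes of a sorted spike train."""
    return [later - earlier
            for earlier, later in zip(spike_times, spike_times[1:])]


def first_n_of_m(first_spike_times, n):
    """N-of-M: keep the n earliest-firing inputs and discard their order.

    Returns a set of input indices, so rank information is dropped on purpose.
    """
    order = sorted(range(len(first_spike_times)),
                   key=lambda i: first_spike_times[i])
    return set(order[:n])
```

Returning a set from `first_n_of_m` mirrors the point made in the list: plain NoM keeps which inputs fired first but not their rank, which is what R–NoM adds back.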

2.2.4. Population Coding

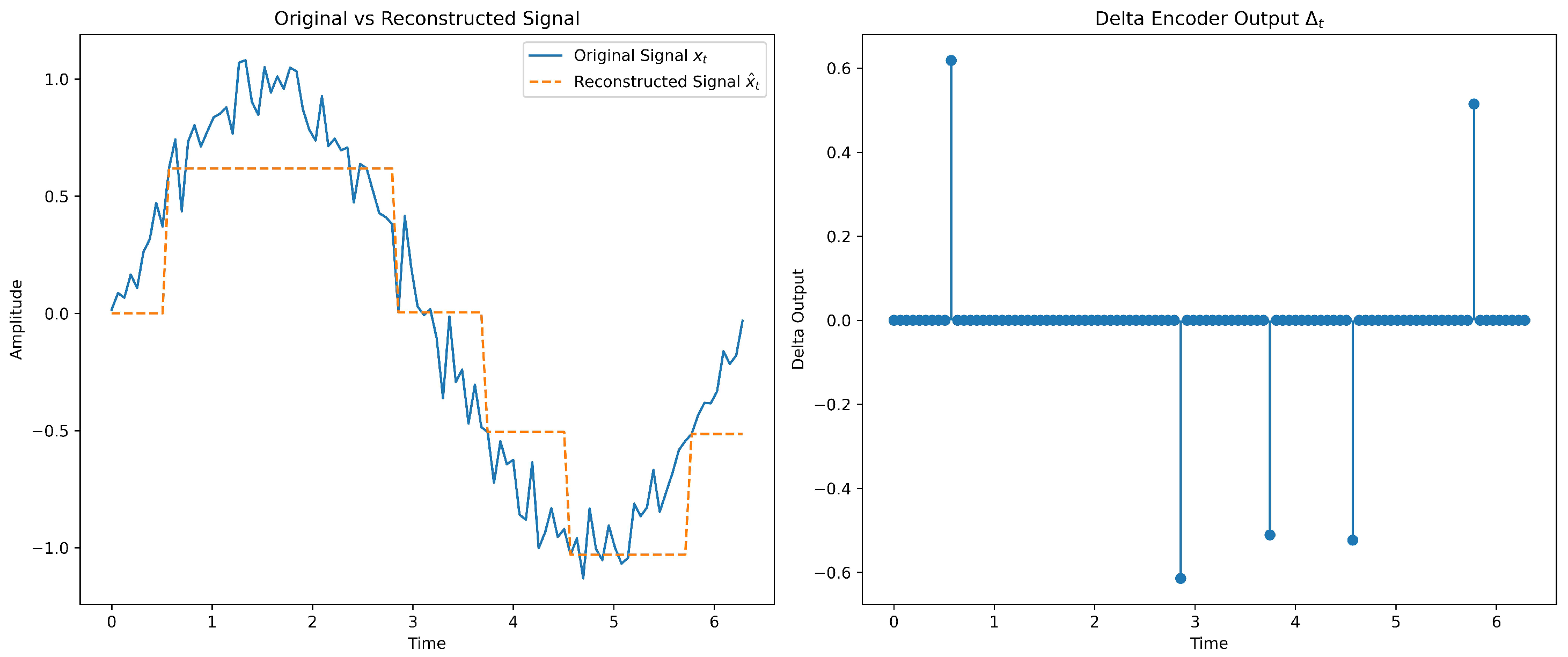

2.2.5. ΣΔ Encoding

2.2.6. Burst Coding

2.2.7. PoFC Coding

2.2.8. Impact of Encoding Schemes on SNN Performance and Power Efficiency

Guidelines for Selecting an Encoding Scheme

2.3. Spiking Neuron Models

2.3.1. Spike Response Neuron (SRM)

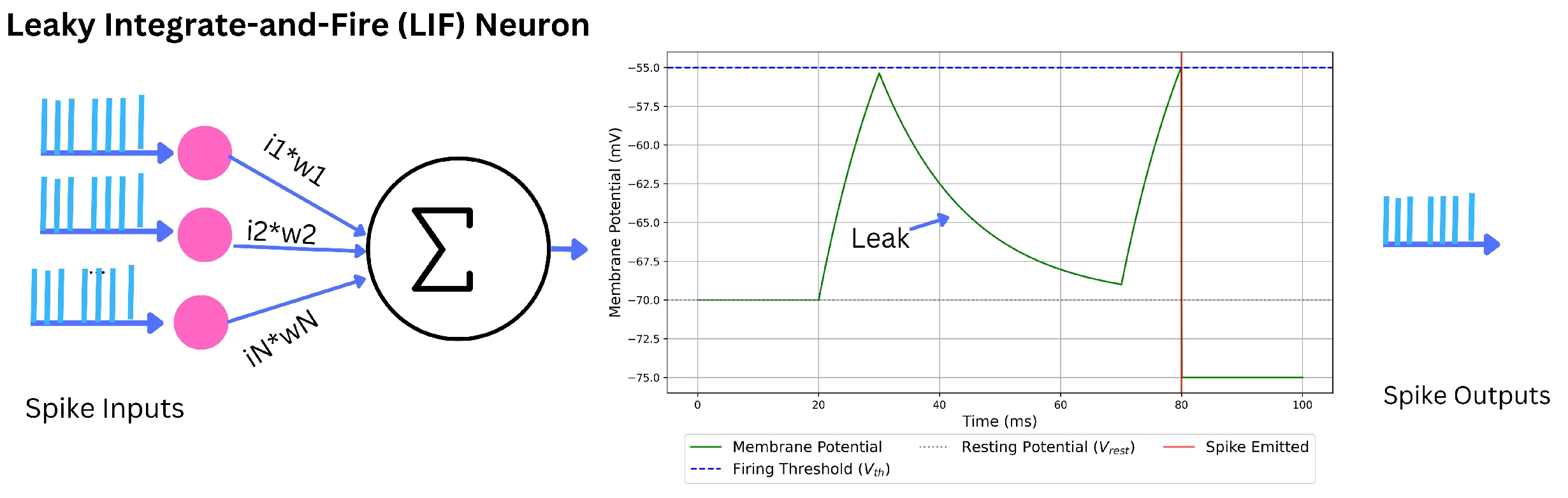

2.3.2. Integrate-and-Fire Neuron Family (IF, LIF)

Perfect IF (PIF)

Leaky IF (LIF)

2.3.3. Adaptive Leaky Integrate-and-Fire Neuron (ALIF)

Dynamic-Threshold ALIF

Current-Based ALIF

2.3.4. Exponential Integrate-and-Fire Neuron (EIF)

2.3.5. Adaptive Exponential Integrate-and-Fire Neuron (AdEx)

2.3.6. Resonate-and-Fire Neuron (RF)

2.3.7. Hodgkin–Huxley Neuron (HH)

| Neuron Model | Main Applications | Complexity | Biological Plausibility | Advantages | Challenges |
|---|---|---|---|---|---|
| SRM [38,100] | STDP studies; analytical probes of SNNs; event-driven/hardware simulation | Low | Mod. | Kernel-based, tractable; separates synaptic and refractory effects; efficient | Limited subthreshold nonlinearities; requires kernel/threshold calibration |
| IF [101,103] | Baselines; large-scale SNNs; theoretical analysis | V. Low | Low | Extremely simple; fast; closed-form insights | Unrealistic integration (no leak); no adaptation/resonance |
| LIF [38,102] | Neuromorphic inference; brain–computer interfaces; large-scale simulations | Low | Mod. | Good accuracy–efficiency balance; event-driven; well-studied statistics | No intrinsic adaptation/bursting; linear subthreshold |
| ALIF [100,104] | Temporal/sequential tasks; speech-like streams; robotics control | Mod. | Mod. | Spike-frequency adaptation; better temporal credit assignment | Extra state and parameters; tuning sensitivity |
| EIF [103,105] | Fast fluctuating inputs; spike initiation studies; neuromorphic surrogates | Mod. | High | Sharp, smooth onset; improved gain/phase vs. LIF | Parameter calibration; stiffer near threshold |
| AdEx [106] | Cortical pattern repertoire; adapting/bursting cells; efficient yet rich neurons | Mod.–High | High | Diverse firing patterns with compact model; efficient integration | More parameters; careful numerical and hardware calibration |
| RF [110] | Resonance/phase codes; frequency-selective processing; edge sensing prototypes | Mod. | High | Captures subthreshold oscillations and resonance; phase selectivity | Phenomenological spike; added second-order state; parameter tuning |
| HH [97] | Biophysical mechanism studies; channelopathies; pharmacology; single-cell fidelity | V. High | V. High | Gold-standard fidelity; reproduces ionic mechanisms and refractoriness | Computationally expensive; stiff; many parameters |
| IZH [39,89] | Large-scale networks with rich firing; cortical microcircuits; plasticity studies | Mod. | High | Wide repertoire at low cost; simple 2D form with reset | Lower biophysical interpretability; heuristic fitting |
| RF–IZH [110,115] | Phase-aware resonance with lightweight reset; recurrent SNNs; neuromorphic stacks | Mod. | High | Preserves phase; efficient event rules; toolchain support (Lava) | Phenomenological; parameter calibration; still costlier than IF/LIF |
| CUBA [98] | Large-scale SNNs; theory; fast prototyping; hardware with current-mode synapses | Low | Low | Very fast; analytically convenient; event-driven synapses independent of V | Ignores reversal/shunt; biases in high-conductance regimes vs. COBA |
| ΣΔ [88,115,116] | Energy-constrained streaming (audio/vision); edge sensing; ΣΔ–LIF layers | Mod. | Mod. | Sparse, error-driven spikes; low switching energy; good reconstruction | Feedback/threshold tuning; integration details for stability and latency |

2.3.8. Izhikevich Neuron (IZH)

2.3.9. Resonate-and-Fire Izhikevich Neuron (RF–IZH)

2.3.10. Current-Based Neuron (CUBA)

2.3.11. Sigma–Delta Neuron (ΣΔ)

2.3.12. Trade-Offs in Neuron Model Selection for SNNs

- HH—Gold-standard ion-channel fidelity for mechanism studies; too costly/stiff for large or ultra-low-power networks; useful as a reference [97].

- CUBA—Current-based synapses are simple and fast for scaling and analysis; reduced realism vs. COBA grows in high-conductance regimes [98].

Practical Guidelines

- Energy/scale: LIF/ALIF or ΣΔ; use CUBA when speed > realism.

- Temporal richness: ALIF, EIF/AdEx, or RF/RF–IZH for adaptation, resonance, or phase coding.

- Mechanistic fidelity: HH for channel-level questions; validate reduced models against HH.

- Trainability: Prefer models with robust surrogate-gradient practice; constrain parameters to avoid stiffness/instability.

- Hardware fit: Match state and nonlinearities to the target fabric (fixed-point, exponentials/CORDIC, event-driven kernels); layer-wise hybrids (e.g., LIF front ends + AdEx/ALIF deeper) often optimize accuracy–efficiency.
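To make the LIF versus ALIF distinction in these guidelines concrete, the following is a minimal discrete-time sketch of a leaky integrator with an optional dynamic threshold. The update rule, the soft reset, and all parameter names and defaults (`beta`, `theta0`, `adapt_gain`, `rho`) are illustrative assumptions; they are not the settings or framework implementations used in the experiments.

```python
def simulate_alif(inputs, beta=0.9, theta0=1.0, adapt_gain=0.0, rho=0.95):
    """Simulate one discrete-time (A)LIF neuron and return its spike train.

    beta:       membrane leak factor applied at every step
    theta0:     baseline firing threshold
    adapt_gain: threshold increase per unit of adaptation (0 gives plain LIF)
    rho:        per-step decay factor of the adaptation variable
    """
    v = 0.0        # membrane potential
    a = 0.0        # adaptation variable (low-pass filtered spike history)
    spikes = []
    for current in inputs:
        v = beta * v + current              # leaky integration of the input
        theta = theta0 + adapt_gain * a     # dynamic threshold
        s = 1 if v >= theta else 0
        if s:
            v -= theta                      # soft reset: subtract the threshold
        a = rho * a + s                     # adaptation grows with each spike
        spikes.append(s)
    return spikes
```

With a constant input, raising `adapt_gain` above zero lowers the firing rate over time, which is the spike-frequency adaptation that the extra ALIF state buys at the cost of one more variable and two more parameters per neuron.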

2.4. Learning Paradigms in SNNs

2.4.1. Supervised Learning

- SpikeProp

- SuperSpike

- SLAYER

- EventProp
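Surrogate-gradient methods in this family keep the hard threshold in the forward pass and substitute a smooth derivative in the backward pass. The sketch below illustrates the idea with an arctangent surrogate of the form $g(x) = \frac{1}{\pi}\arctan\left(\frac{\pi}{2}\alpha x\right) + \frac{1}{2}$; the function names and the default `alpha=2.0` are assumptions chosen for illustration, and the code is a plain-Python stand-in rather than any framework's API.

```python
import math


def heaviside(x):
    """Forward pass: emit a spike when the membrane potential minus the
    threshold (x) is non-negative."""
    return 1.0 if x >= 0.0 else 0.0


def atan_surrogate(x, alpha=2.0):
    """Smooth stand-in for the step function, bounded in (0, 1)."""
    return math.atan(math.pi / 2.0 * alpha * x) / math.pi + 0.5


def atan_surrogate_grad(x, alpha=2.0):
    """Derivative of atan_surrogate, used in place of the step function's
    derivative (zero almost everywhere) during backpropagation."""
    return (alpha / 2.0) / (1.0 + (math.pi / 2.0 * alpha * x) ** 2)
```

The surrogate derivative peaks at the threshold and decays on both sides, so neurons close to firing receive the largest weight updates; larger `alpha` narrows that window.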

2.4.2. Unsupervised Learning in SNNs

- STDP

- Adaptive STDP (aSTDP)

- Multiplicative STDP

- Triplet STDP
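As a rough illustration of the first and third rules above, the following sketch implements the textbook pair-based STDP window and a multiplicative variant in which updates are scaled by the current weight. The amplitudes, time constants, and function names are placeholder assumptions, not values from this study.

```python
import math


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (in ms).

    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def multiplicative_stdp_dw(delta_t, w, w_max=1.0, **window):
    """Multiplicative STDP: potentiation shrinks as w approaches w_max and
    depression shrinks as w approaches 0, which keeps weights bounded."""
    dw = stdp_dw(delta_t, **window)
    return dw * (w_max - w) if dw > 0 else dw * w
```

Both rules are local: the update depends only on the spike times of the two connected neurons and, in the multiplicative case, on the synapse's own weight.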

2.4.3. Reinforcement Learning in SNNs

- R-STDP

- ReSuMe (Remote Supervised Method)

- Eligibility Propagation (e-prop)

2.4.4. Hybrid Learning Paradigms

- Supervised STDP (SSTDP)

- ANN-to-SNN Conversion

- Training a conventional ANN using backpropagation.

- Converting neuron activations to spike rates or spike times.

- Adjusting weights, thresholds, and normalization parameters to match the target SNN framework.

- Temporal coding conversion: Encodes information in spike timing to capture temporal patterns, reducing latency and improving performance on dynamic datasets [89].
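The rate-based variant of the conversion steps above rests on the observation that an integrate-and-fire neuron driven by a constant input fires at a rate proportional to that input, provided the input stays below the threshold. The sketch below shows this correspondence and a simple layer-wise rescaling; the soft reset, the helper names, and the use of a single maximum activation per layer are assumptions made for illustration.

```python
def if_rate(activation, timesteps=100, threshold=1.0):
    """Firing rate of an IF neuron driven by a constant input.

    Negative inputs are clamped to zero, mimicking a ReLU. At most one spike
    is emitted per step, so rates saturate at 1.0 for inputs >= threshold.
    """
    drive = max(0.0, activation)
    v, count = 0.0, 0
    for _ in range(timesteps):
        v += drive
        if v >= threshold:
            v -= threshold      # soft reset keeps the residual charge
            count += 1
    return count / timesteps


def normalize_weights(weights, max_activation):
    """Rescale a layer's weights so its largest observed ANN activation maps
    to the firing threshold (1.0), avoiding rate saturation."""
    return [[w / max_activation for w in row] for row in weights]
```

The saturation at 1.0 is why the adjustment step matters: activations above the threshold cannot be represented by a rate code with one spike per step, so weights or thresholds are rescaled before deployment.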

| Algorithm Name | Learning Paradigm | Main Applications | Complexity | Biological Plausibility | Advantages | Challenges |
|---|---|---|---|---|---|---|
| SpikeProp [122] | Supervised | Temporal pattern recognition | Low | Low | Enables precise spike timing learning; first event-based backpropagation for SNNs | Limited to shallow networks; affected by spike non-differentiability |
| SuperSpike [123] | Supervised | Temporal pattern recognition; deep SNN training | Medium | Medium | Uses surrogate gradients; enables multilayer training | Requires careful surrogate design; added computational cost |
| SLAYER [119] | Supervised | Complex temporal data; sequence prediction | High | Medium | Addresses temporal credit assignment; handles sequences well | Computationally intensive; complex to implement |
| EventProp [124] | Supervised | Exact gradient computation; neuromorphic hardware | High | High | Computes exact gradients; efficient for event-driven processing | Complex discontinuity handling; implementation challenges |
| STDP [51,121] | Unsupervised | Pattern recognition; feature extraction | Low | High | Biologically plausible; local weight updates | Limited scalability; lower accuracy for large-scale tasks |
| aSTDP [125,126] | Unsupervised | Adaptive feature learning | Medium | High | Dynamically adapts learning parameters; robust | Parameter tuning complexity; additional computation |
| Multiplicative STDP [127] | Unsupervised | Weight updates scaled by current weight; prevents unbounded growth/decay | High | Medium | Improves biological plausibility; stabilizes learning | Requires careful parameter tuning |
| Triplet-STDP [128] | Unsupervised | Frequency-dependent learning | Medium | Medium | Captures multi-spike interactions; models frequency effects | Complex spike attribution; higher computation |
| R-STDP [52] | RL | Adaptive learning; decision making | Medium | High | Integrates reward signals; adaptive to reinforcement tasks | Requires carefully designed reward schemes |
| ReSuMe [141] | RL | Temporal precision learning | Medium | High | Combines supervised targets with reinforcement feedback | Dependent on reward design; non-gradient-based |
| e-prop [130] | RL | Temporal dependencies; complex dynamics | High | High | Tracks synaptic influence with eligibility traces | Computationally intensive; eligibility tracking complexity |
| SSTDP [131] | Hybrid | High temporal precision; visual recognition | Medium | High | Merges backpropagation and STDP; energy-efficient | Requires precise timing data; integration complexity |
| ANN-to-SNN Conversion [134,135] | Hybrid | Neuromorphic deployment | Medium | Low | Leverages pre-trained ANNs; fast deployment | Accuracy loss in conversion; parameter mapping issues |

2.5. Evolution of Supervised Learning in SNNs and Broader Context

3. Materials and Methods

3.1. Experimental Design

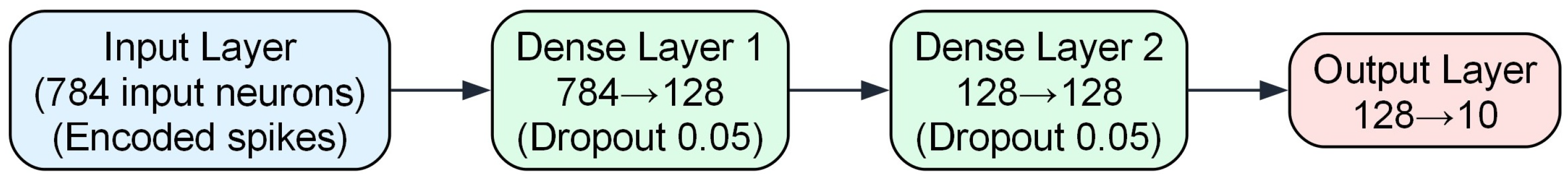

- Fully connected network (FCN): two hidden fully connected layers and a classification head, applied to MNIST; approximately 118,288 trainable parameters.

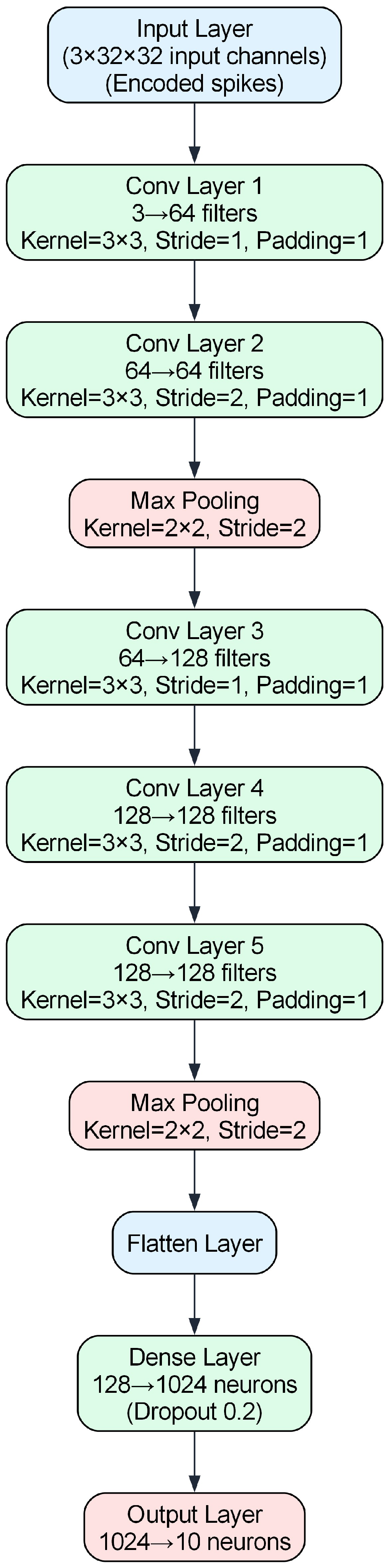

- Deep convolutional network (VGG7): five convolutional layers, two max-pooling layers, one hidden fully connected layer, and a classifier, applied to CIFAR-10; approximately 548,554 trainable parameters.

- Neuron models: IF, LIF, ALIF, CUBA, ΣΔ, RF, RF–IZH, EIF, and AdEx.

- Encoding schemes: Direct encoding, rate encoding, temporal TTFS, sigma–delta (ΣΔ) encoding, burst coding, PoFC, and R–NoM. Each method transforms continuous pixel intensities into discrete spike trains distributed across specific time steps.

- Predictive efficacy (accuracy): proportion of correctly classified samples.

- Energy efficiency (power consumption): theoretical power usage estimated per inference.

- Training models on MNIST and CIFAR-10 under varying time-step configurations;

- Encoding input images using the predefined schemes;

- Measuring predictive accuracy and estimating energy consumption; and

- Comparing SNN performance against equivalent ANN baselines to quantify accuracy–energy trade-offs.

3.2. Data Collection and Preprocessing

3.3. Implementation Frameworks and Tools

3.3.1. Lava

3.3.2. SpikingJelly

3.3.3. Norse

3.3.4. PyTorch

3.4. Neural Network Architectures

3.5. Training Configuration and Procedures

3.6. Evaluation Metrics

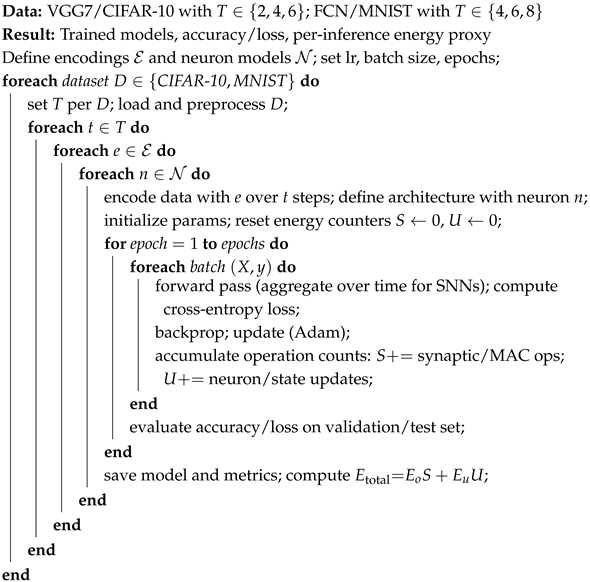

3.7. Algorithms

- Direct input: the image is duplicated across T time steps without spike synthesis.

- Rate (Poisson): intensities mapped to firing rates (maximum 100 Hz); Bernoulli sampling applied at each step.

- TTFS: a single spike latency monotonically mapped from intensity across T bins.

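- ΣΔ: dead-zone delta encoder with feedback; a spike is emitted when the magnitude of the residual between the input and its running reconstruction reaches the threshold, and the reconstruction is updated through the feedback path.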

- R–NoM: rank-modulated top-N spiking from sorted intensities; N tuned by validation.

- PoFC: phase derived from normalized intensity over a cycle.

- Burst: intensity-dependent bursts capped at a maximum burst length (selected via validation) within T.

- IF and LIF (SpikingJelly; ATan surrogate gradient).

- ALIF (Lava; dynamic threshold or current adaptation).

- CUBA (Lava; current-based neuron).

- ΣΔ neuron (Lava; event-driven, error-based spiking).

- RF and RF–IZH (Lava; resonance and phase sensitivity).

- EIF and AdEx (Norse; exponential onset and adaptive dynamics).

- SLAYER surrogate gradient (Lava/SLAYER 2.0).

- SuperSpike surrogate gradient (Norse).

- ATan surrogate function (SpikingJelly).
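Four of the encoders listed above (direct, rate, TTFS, and burst) can be sketched in a few lines each. This is an illustrative reading of the descriptions, not the code used for the experiments: the 1 ms step duration `dt_s`, the linear latency map, the rounding rules, the treatment of zero-intensity pixels, and all function names are assumptions. Pixels are taken to be normalized to [0, 1], and each encoder returns T frames.

```python
import random


def direct_encode(pixels, T):
    """Direct input: repeat the analog image at every time step."""
    return [list(pixels) for _ in range(T)]


def rate_encode(pixels, T, max_rate_hz=100.0, dt_s=1e-3, rng=None):
    """Rate coding: Bernoulli sampling with p = intensity * max_rate * dt."""
    rng = rng or random.Random(0)
    return [[1 if rng.random() < p * max_rate_hz * dt_s else 0 for p in pixels]
            for _ in range(T)]


def ttfs_encode(pixels, T):
    """TTFS: one spike per pixel; brighter pixels fire in earlier bins.
    Zero-intensity pixels stay silent (an assumption)."""
    frames = [[0] * len(pixels) for _ in range(T)]
    for i, p in enumerate(pixels):
        if p > 0:
            t = int(round((1.0 - p) * (T - 1)))
            frames[t][i] = 1
    return frames


def burst_encode(pixels, T, max_burst):
    """Burst coding: emit round(p * n_max) consecutive spikes from step 0,
    with the burst length capped at n_max = min(max_burst, T)."""
    n_max = min(max_burst, T)
    frames = [[0] * len(pixels) for _ in range(T)]
    for i, p in enumerate(pixels):
        for t in range(int(round(p * n_max))):
            frames[t][i] = 1
    return frames
```

Under these assumptions TTFS emits at most one spike per pixel regardless of T, whereas the spike count of rate coding grows with T, which is the mechanism behind the energy differences reported for the two schemes.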

Algorithm 1: Training/evaluation for VGG7 on CIFAR-10 and FCN on MNIST with operation counting for energy estimation.

Notes: Thresholds were dataset-specific (one value for FCN/MNIST and another for VGG7/CIFAR-10). Shared SNN settings fixed the current decay, voltage decay, and tau gradient, with scale gradient 3 and refractory decay 1. Frameworks: PyTorch (ANNs); SpikingJelly/Lava/Norse (SNNs). Energy is reported via operation counts using hardware constants cited in Section 3.6.
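Operation counting for energy estimation is commonly done by charging a multiply-accumulate (MAC) for every ANN connection and an accumulate (AC) for every spike-triggered synaptic operation in the SNN. The sketch below follows that convention. The per-operation energies (4.6 pJ per MAC and 0.9 pJ per AC, figures often quoted for 45 nm CMOS) are placeholder assumptions and may differ from the hardware constants in Section 3.6; the function names and the per-layer spike-rate inputs are likewise hypothetical.

```python
# Placeholder per-operation energies in joules (assumed, see lead-in).
E_MAC_J = 4.6e-12
E_AC_J = 0.9e-12


def ann_energy(macs_per_layer, e_mac=E_MAC_J):
    """ANN estimate: every connection costs one MAC per inference."""
    return sum(macs_per_layer) * e_mac


def snn_energy(macs_per_layer, spike_rates, timesteps, e_ac=E_AC_J):
    """SNN estimate: a connection costs one AC only when its input spikes.

    spike_rates[l] is the average number of spikes per neuron per time step
    arriving at layer l, so synaptic operations scale with rate and timesteps.
    """
    if len(macs_per_layer) != len(spike_rates):
        raise ValueError("need one spike rate per layer")
    synops = sum(m * r * timesteps
                 for m, r in zip(macs_per_layer, spike_rates))
    return synops * e_ac
```

For example, `snn_energy([1000, 500], [0.1, 0.2], timesteps=4)` counts 800 synaptic operations against 1500 MACs for the matching ANN. Because the operation count scales linearly with both spike rate and the number of time steps, sparse encodings and small T dominate the energy results.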

4. Results

4.1. Performance on the MNIST Dataset

4.1.1. Classification Accuracy Results

Observation

- ΣΔ neurons achieving the highest accuracy: ΣΔ neurons attained the highest accuracy of 98.10% using rate encoding at eight time steps. They also performed strongly across other encodings, reaching 98.00% with ΣΔ encoding at both eight and six time steps. These results highlight their effectiveness in precise spike-based computation and suitability for tasks requiring accurate temporal processing and efficient encoding.

- Adaptive neuron models showing competitive performance: Adaptive neuron models such as ALIF and AdEx achieved competitive accuracy, particularly with direct and burst encodings. ALIF reached a maximum of 97.30% with rate encoding at eight time steps, while AdEx achieved 97.50% with direct encoding at six time steps. Their adaptation mechanisms enable better capture of temporal dynamics, providing a favorable trade-off between accuracy and computational efficiency.

- Solid performance of simpler neuron models: Simpler models such as IF and LIF also demonstrated strong performance with rate and ΣΔ encodings. IF achieved 97.70% accuracy with ΣΔ encoding at eight time steps, while LIF reached 97.50% at six time steps. Although slightly below the ANN baseline of 98.23%, these results confirm that simpler neuron models can still perform effectively when combined with appropriate encoding schemes.

- Performance of RF neurons: The standard RF neuron achieved lower accuracies compared with other models, with a maximum of 97.20% using direct encoding at eight time steps. The RF–IZH variant performed better, reaching 97.70% with rate and direct encodings at eight time steps.

- Robustness of CUBA neurons: CUBA neurons achieved consistent results, with a maximum accuracy of 97.66% using rate encoding at eight time steps and 97.60% using direct encoding. Their stability across encoding schemes highlights robustness and adaptability.

- EIF neurons’ performance: EIF neurons achieved 97.60% accuracy using direct encoding at six time steps, reflecting their capability to process direct input representations efficiently. Models such as CUBA and EIF that perform well across multiple encoding strategies demonstrate strong generalization and are suitable for applications requiring flexibility in encoding.

- Effectiveness of encoding schemes: Direct and ΣΔ encodings emerged as the most effective across multiple neuron types, frequently producing the highest accuracies. Burst encoding also performed well, particularly when paired with adaptive neuron models such as ALIF and AdEx. Temporal encodings (TTFS and PoFC) improved with increasing time steps but generally did not surpass direct or ΣΔ encoding.

- Effect of time steps on accuracy: Increasing the number of time steps generally improved accuracy for most neuron and encoding combinations, especially for temporal encodings such as TTFS and PoFC. However, models such as ALIF and ΣΔ neurons maintained high accuracy even with fewer time steps, demonstrating their efficiency in capturing temporal dynamics while minimizing computational and energy costs.

- Advanced versus simpler neuron models: Advanced neuron models such as ΣΔ, ALIF, and AdEx were competitive with simpler models like IF and LIF, and ΣΔ exceeded them with the highest overall accuracy. This underscores the importance of mechanisms such as spike-frequency adaptation and precise spike timing in enhancing classification performance. Although some SNN configurations slightly lagged behind the ANN baseline, several achieved near-equivalent accuracy while maintaining superior energy efficiency and temporal processing capabilities.

- Variable performance of R–NoM encoding: R–NoM encoding showed variable performance across neuron models. ALIF neurons achieved a maximum of 58.00%, whereas EIF neurons reached 88.10%. This variability suggests that R–NoM’s effectiveness depends heavily on the underlying neuron dynamics.

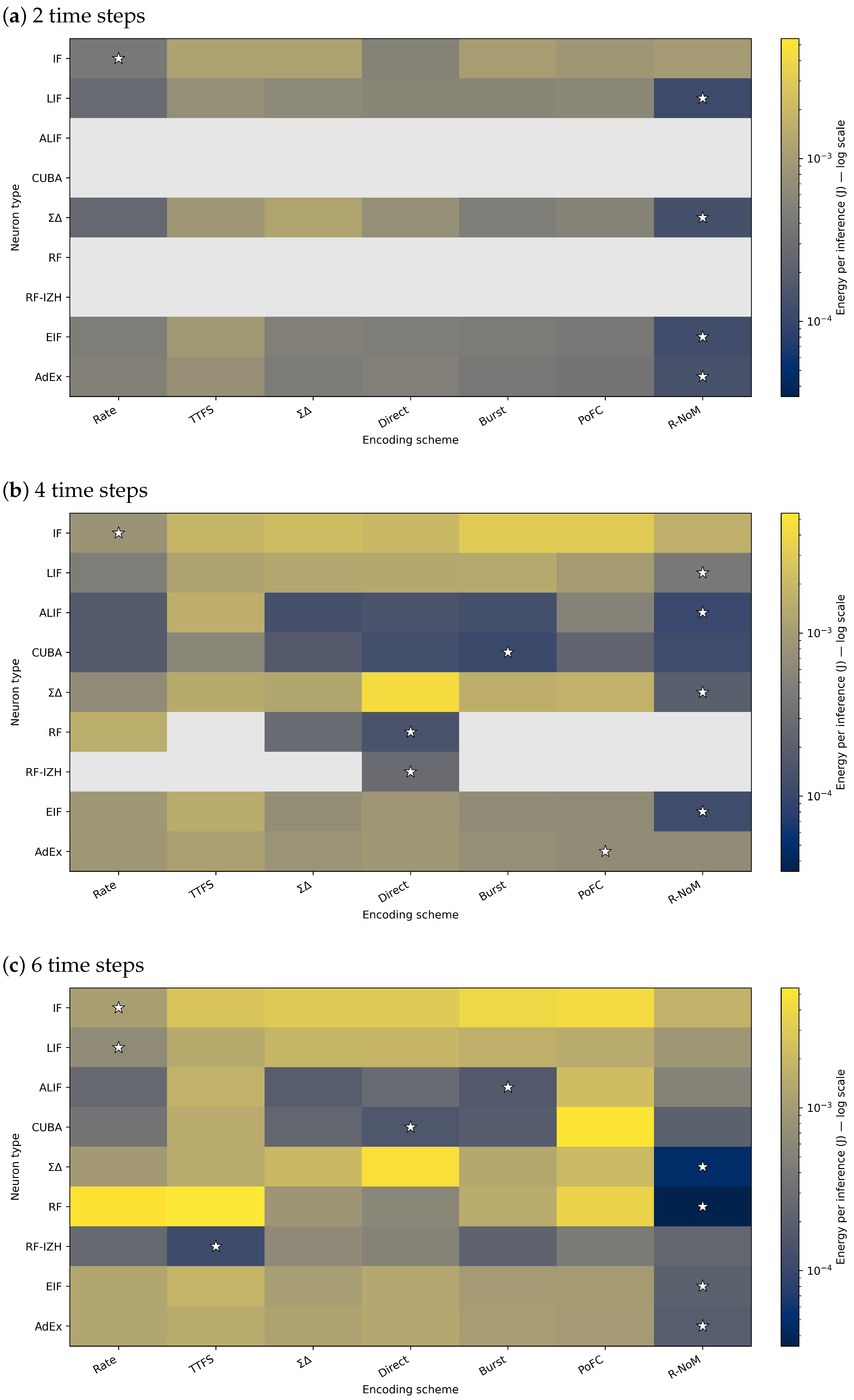

4.1.2. Energy Consumption

Trade-Off Between Accuracy and Power Consumption

- R–NoM: minimal energy, lower accuracy. R–NoM encoding achieves exceptionally low energy usage, for instance with the IF neuron at six time steps. However, these configurations typically exhibit weaker accuracies (approximately 70–75% for IF and considerably lower for ALIF), illustrating a clear trade-off between energy savings and predictive performance.

- High-accuracy configurations remain energy-efficient. Several neuron types, including ΣΔ, ALIF, and CUBA, achieve accuracies near or above 97–98% while consuming far less energy than the ANN baseline. For example, ΣΔ neurons with rate encoding at eight time steps yield 98.10% accuracy (Table 6), and their per-inference energy (Table 7) represents about a tenfold improvement in energy efficiency relative to the ANN baseline.

- AdEx neurons: best energy with burst coding, highest accuracy with direct coding. AdEx neurons achieve their minimum energy consumption under burst coding (as reported in Table 7) but attain their best accuracy, around 97.4–97.5%, using direct coding (Table 6). This illustrates that the most energy-efficient configuration does not necessarily coincide with the highest-accuracy one, even within the same neuron model.

- Fewer time steps reduce energy but may lower accuracy. Most neuron types consume less energy at four or six time steps than at eight. However, for temporal encodings such as TTFS and PoFC, reducing the number of steps can lower accuracy by several percentage points. Models with adaptive dynamics (ALIF, AdEx, and ΣΔ) maintain relatively high accuracy at lower time steps, making them favorable for energy-constrained applications.

- Overall SNN advantage. Almost all SNN configurations, including those achieving 97–98% accuracy, consume substantially less energy than the ANN baseline. This confirms the suitability of SNNs for edge and low-power deployments, where minor accuracy losses may be acceptable in exchange for significant energy savings.

- Balancing encoding and neuron type. Although R–NoM leads in energy reduction, it consistently trails in accuracy. Encodings such as ΣΔ or direct coding achieve near-ANN accuracy with only moderate energy overhead compared with R–NoM, while still maintaining far lower energy usage than ANNs. Selecting the optimal combination of a neuron model and encoding scheme, therefore, requires balancing accuracy requirements against available power budgets, as each pairing exhibits distinct performance–efficiency characteristics.

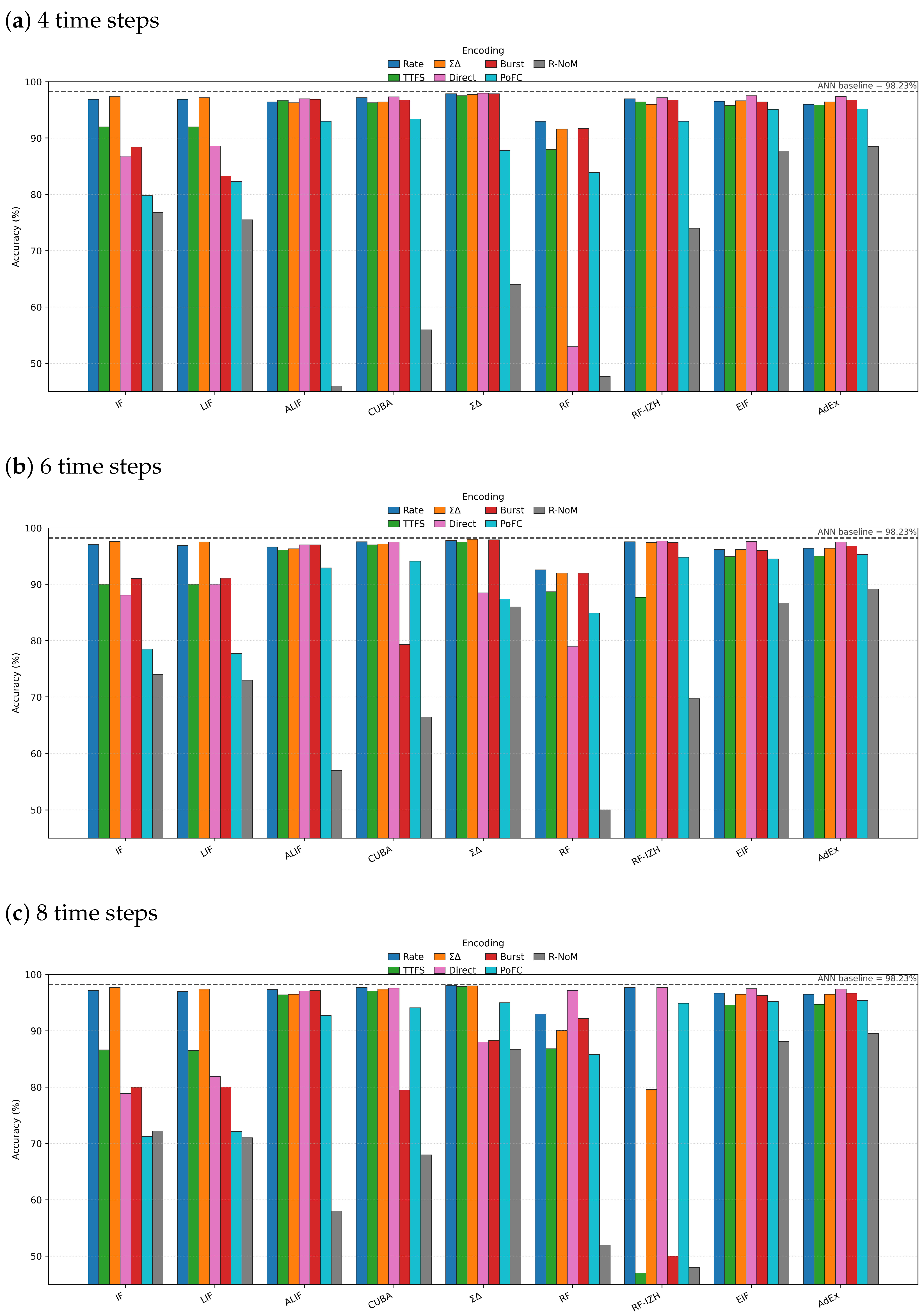

4.2. Performance on CIFAR-10 Dataset

4.2.1. Classification Accuracy Results

Observation

- ΣΔ neurons achieving the highest accuracy. ΣΔ neurons attained the highest SNN accuracy of 83.00% with direct coding at two time steps, closely matching the ANN baseline of 83.60%. This strong performance with a minimal number of time steps highlights the efficiency of ΣΔ neurons in capturing complex spatial–temporal patterns. In addition, TTFS encoding with ΣΔ neurons achieved accuracies up to 72.50%, confirming their versatility across different encoding schemes.

- Performance of IF and LIF neurons. IF and LIF neurons achieved comparable performance, with maximum accuracies of 74.50% each using direct coding at four time steps. These findings suggest that even simpler neuron models can perform effectively on complex datasets when combined with appropriate encoding methods. Accuracy improved modestly with additional time steps, indicating that temporal dynamics contribute positively to their classification capability.

- Performance of ALIF neurons. The adaptive LIF (ALIF) model achieved a maximum accuracy of 51.00% with rate encoding at 6 time steps. The relatively lower accuracy compared with other neuron types suggests that ALIF may require further tuning or more advanced encoding strategies to fully exploit its adaptation mechanisms on CIFAR-10.

- Performance of CUBA neurons. CUBA neurons reached a peak accuracy of 50.00% with TTFS encoding at 4 time steps. Although this exceeds random performance, it indicates that CUBA neurons alone may not effectively capture the complex features in CIFAR-10 without additional optimization.

- Performance of RF and RF–IZH neurons. RF and its Izhikevich variant (RF–IZH) achieved peak accuracies of 47.00% and 45.00%, respectively. These results suggest that resonate-and-fire dynamics are less suited for complex vision tasks such as CIFAR-10 image classification.

- Performance of EIF and AdEx neurons. EIF neurons reached an accuracy of 70.00% with direct coding at 2 time steps, while AdEx achieved 70.10% with direct coding at 6 time steps. These findings suggest that exponential spike initiation and adaptive dynamics enhance the processing of complex input patterns.

- Effectiveness of direct coding and TTFS encoding. Across neuron models, direct coding proved the most effective scheme, consistently yielding higher accuracies. This is likely because it preserves detailed spatial information without depending heavily on temporal representation. TTFS also performed well, particularly with ΣΔ neurons, indicating that precise spike timing benefits certain configurations.

- Impact of time steps. The number of time steps affected performance, though less strongly than in the MNIST experiments. Some models, such as ΣΔ, achieved high accuracy even at two time steps, highlighting computational efficiency. Increasing time steps generally provided modest accuracy gains, suggesting a balance between temporal resolution and computational cost.

- Comparison with ANN baseline. Although several SNN configurations approached the ANN baseline, many remained slightly below. Careful selection of neuron models and encoding schemes is, therefore, crucial to achieving high performance on complex datasets. In particular, ΣΔ neurons combined with direct coding exhibit strong potential, offering high accuracy at low time steps while maintaining energy and computational efficiency.

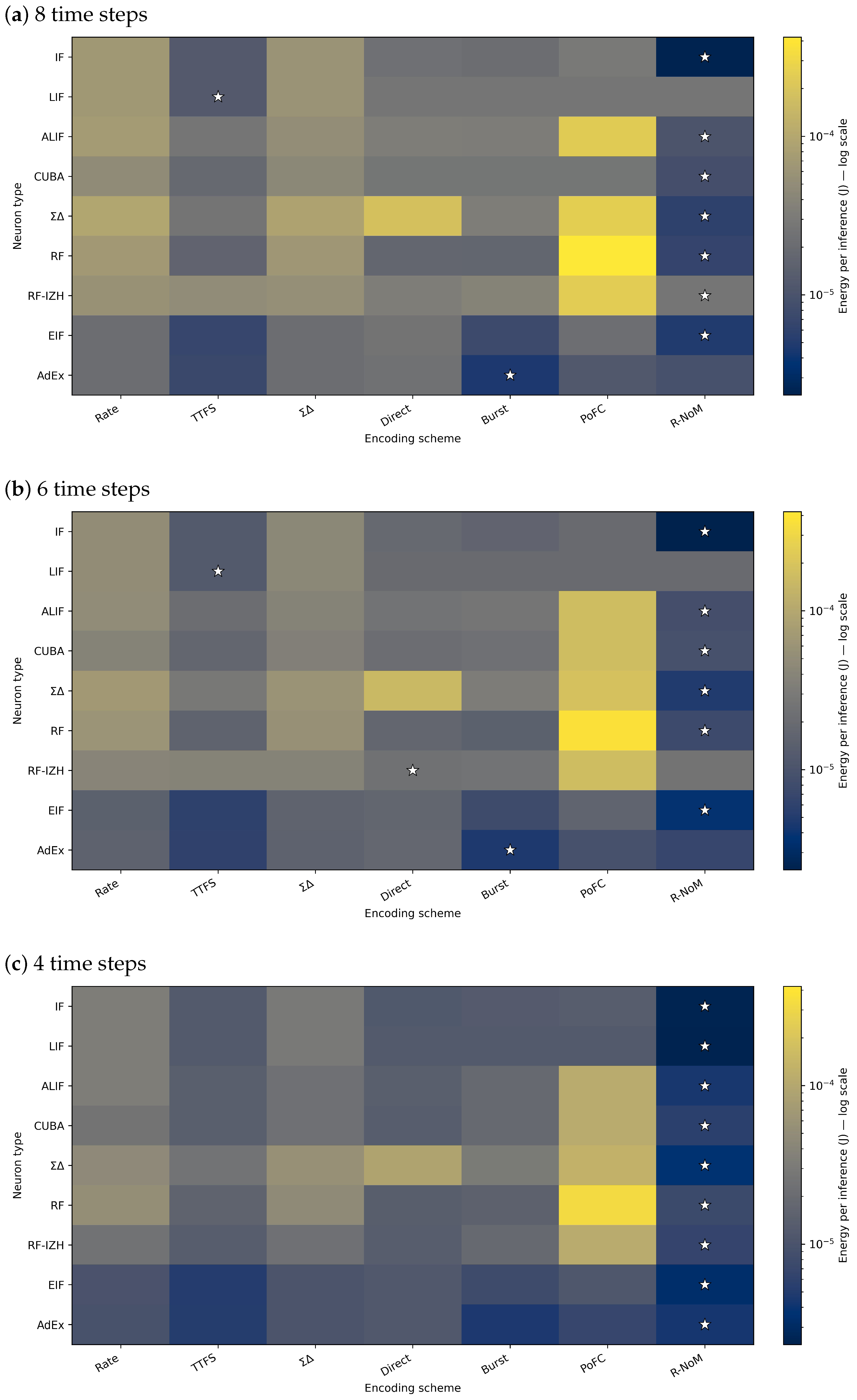

4.2.2. Energy Consumption on the CIFAR-10 Dataset

Observation

- Overall energy trends. SNNs consistently consume less energy than the baseline ANN across neuron types and encoding schemes. Models achieving higher classification accuracy, such as those using direct or temporal encodings, typically exhibit higher energy usage than simpler schemes. Nevertheless, even the most energy-demanding SNN configurations remain below the reference value set by the ANN. This finding reinforces SNNs’ potential for substantial energy savings in complex tasks such as CIFAR-10 classification.

- Influence of time steps. A consistent pattern emerges in which increasing the number of time steps leads to higher energy consumption. For example, the energy of IF neurons under rate encoding rises between two and six time steps. Although additional time steps can enhance classification accuracy, they also raise energy cost. Consequently, applications requiring real-time performance or low power consumption may favor configurations with fewer time steps, provided accuracy remains within acceptable limits.

- Neuron models and encoding schemes. Simpler neuron models (e.g., IF, LIF) generally exhibit moderate energy usage, but their efficiency depends strongly on the encoding strategy. For instance, IF neurons with rate encoding at two time steps sit at the low end of the energy range, whereas LIF neurons combined with temporal encodings consume more energy but often achieve better accuracy. Advanced models such as ALIF and AdEx may improve classification performance but do not always minimize energy consumption. Their adaptive dynamics can reduce spike activity under certain conditions, although this benefit varies depending on the encoding scheme and the number of time steps.

- Balancing accuracy and efficiency. Encoding schemes with low energy requirements often exhibit lower accuracy. Thus, selecting an optimal combination of neuron model, encoding method, and time steps is essential for balancing performance and efficiency. Overall, the results confirm that SNNs consistently maintain lower energy consumption than conventional ANNs, even when configured for higher accuracy. This balance between accuracy and energy efficiency underscores SNNs’ suitability for edge devices and other power-sensitive applications.

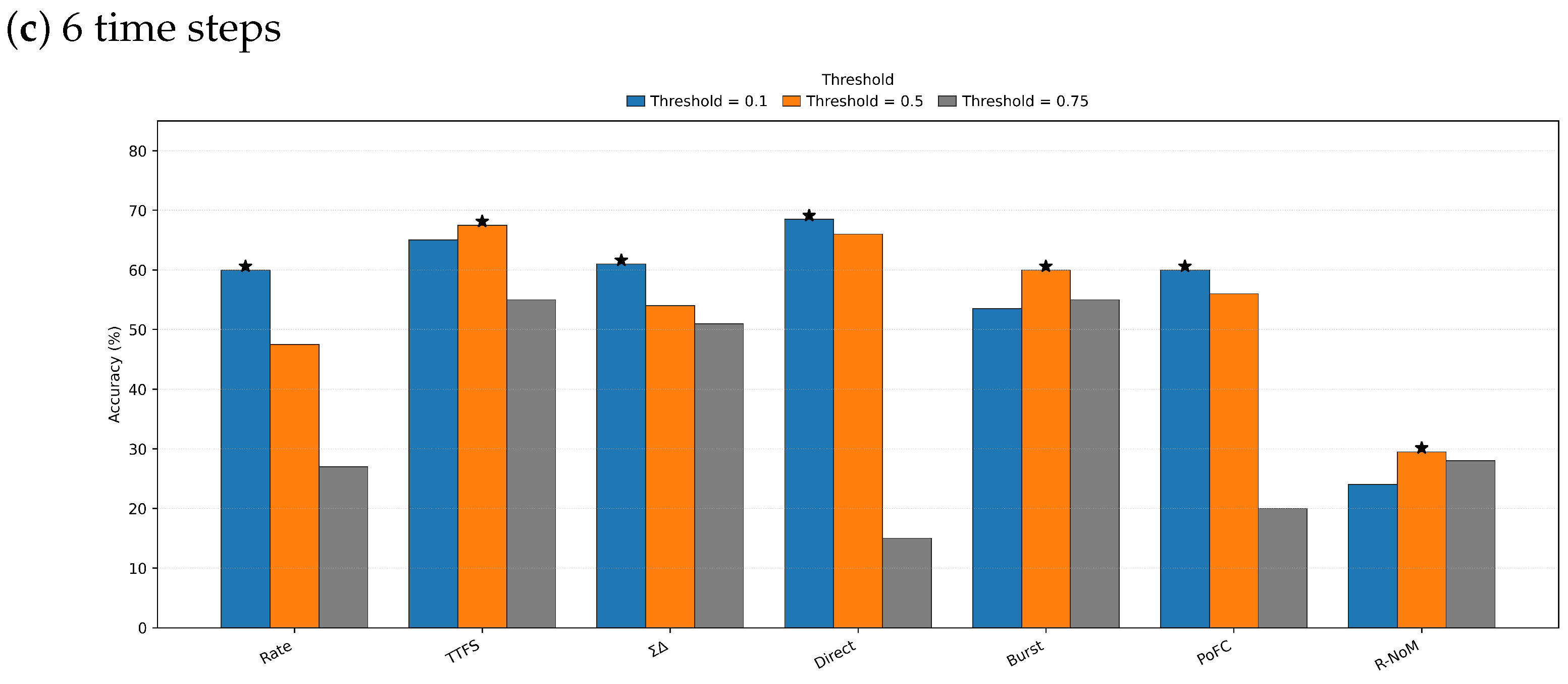

4.3. Effect of Thresholding and Encoding Schemes on Model Performance and Energy Consumption

4.3.1. Impact of Threshold Values on Classification Accuracy

4.3.2. Impact of Threshold Values on Energy Consumption

4.3.3. Trade-Off Between Accuracy and Energy Consumption

4.3.4. Comparison with Related Studies

4.4. Comparative Analysis and Discussion

- Accuracy-critical tasks: ΣΔ neurons with direct (CIFAR-10) or rate/ΣΔ encodings (MNIST) achieve near-ANN accuracy, albeit with higher energy costs than ultra-sparse alternatives, yet still well below ANN levels.

- Energy-constrained or edge deployments: IF or LIF neurons combined with burst or R–NoM encoding and fewer time steps provide strong energy savings. Thresholds can be tuned to meet power constraints, accepting minor accuracy trade-offs.

5. Conclusions and Outlook

- (1) Accuracy–energy trade-off is real but tunable.

- MNIST (FCN): ΣΔ neurons with rate/ΣΔ encodings reached up to 98.1% (vs. 98.23% ANN) while remaining energetically below the ANN proxy. ALIF/AdEx and even LIF/IF also performed strongly when paired with effective encodings.

- CIFAR-10 (VGG7): ΣΔ neurons with direct input achieved 83.0% at two time steps (vs. 83.6% ANN), indicating that well-chosen neuron–encoding pairs can approach ANN performance even on a deeper, more complex task.

- Encodings that are extremely frugal (e.g., R–NoM) minimized the energy proxy but incurred the largest accuracy drops; conversely, settings that closed the accuracy gap (e.g., ΣΔ with direct/rate) used more energy, but still generally below the ANN reference on our GPU-targeted model.

- (2) Practical configuration rules.

- Accuracy-critical: Prefer ΣΔ neurons with direct input (CIFAR-10) or with rate/ΣΔ encodings (MNIST); AdEx/EIF are solid fallbacks. Keep T as low as possible once the accuracy target is met.

- Energy-constrained: Favor simpler neurons (IF/LIF) with burst or R–NoM encoding and small T; expect some accuracy loss. Intermediate thresholds typically balance activity with correctness better than very low or very high ones.

- General tip: Tune thresholds jointly with the encoding; moderate T and carefully chosen thresholds often deliver the best accuracy-per-joule.

- (3) Neuromorphic potential.

- Limitations

- Future work

- Distinct contribution and comparison with existing literature

- Takeaway

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and Policy Considerations for Deep Learning in NLP. arXiv 2019, arXiv:1906.02243.

- Schwartz, R.; Dodge, J.; Smith, N.A.; Etzioni, O. Green AI. arXiv 2020, arXiv:2007.10792.

- Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning both weights and connections for efficient neural networks. In Proceedings of the 28th Conference on Neural Information Processing Systems (NeurIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Shi, Y.; Nguyen, L.; Oh, S.; Liu, X.; Kuzum, D. A soft-pruning method applied during training of spiking neural networks for in-memory computing applications. Front. Neurosci. 2019, 13, 405.

- Hubara, I.; Courbariaux, M.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Quantized neural networks: Training neural networks with low precision weights and activations. J. Mach. Learn. Res. 2017, 18, 6869–6898.

- Patterson, D.A.; Gonzalez, J.; Le, Q.V.; Liang, P.; Munguia, L.M.; Rothchild, D.; So, D.R.; Texier, M.; Dean, J. Carbon emissions and large neural network training. arXiv 2021, arXiv:2104.10350.

- Paschek, S.; Förster, F.; Kipfmüller, M.; Heizmann, M. Probabilistic Estimation of Parameters for Lubrication Application with Neural Networks. Eng 2024, 5, 2428–2440.

- Nashed, S.; Moghanloo, R. Replacing Gauges with Algorithms: Predicting Bottomhole Pressure in Hydraulic Fracturing Using Advanced Machine Learning. Eng 2025, 6, 73.

- Sumon, R.I.; Ali, H.; Akter, S.; Uddin, S.M.I.; Mozumder, M.A.I.; Kim, H.C. A Deep Learning-Based Approach for Precise Emotion Recognition in Domestic Animals Using EfficientNetB5 Architecture. Eng 2025, 6, 9.

- Maass, W. Networks of spiking neurons: The third generation of neural network models. Neural Netw. 1997, 10, 1659–1671.

- Adrian, E.D.; Zotterman, Y. The impulses produced by sensory nerve endings: Part 3. impulses set up by touch and pressure. J. Physiol. 1926, 61, 465–483.

- Rueckauer, B.; Liu, S.C. Conversion of analog to spiking neural networks using sparse temporal coding. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS 2018), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Park, S.; Kim, S.; Choe, H.; Yoon, S. Fast and efficient information transmission with burst spikes in deep spiking neural networks. In Proceedings of the 56th Annual Design Automation Conference (DAC 2019), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

- Gollisch, T.; Meister, M. Rapid neural coding in the retina with relative spike latencies. Science 2008, 319, 1108–1111.

- Yamazaki, K.; Vo-Ho, V.K.; Bulsara, D.; Le, N. Spiking neural networks and their applications: A review. Brain Sci. 2022, 12, 863.

- Neftci, E.O.; Mostafa, H.; Zenke, F. Surrogate gradient learning in spiking neural networks: Bringing the power of gradient-based optimization to spiking neural networks. IEEE Signal Process. Mag. 2019, 36, 51–63.

- Lee, J.; Delbrück, T.; Pfeiffer, M. Enabling Gradient-Based Learning in Spiking Neural Networks with Surrogate Gradients. Front. Neurosci. 2020, 14, 123.

- Zhou, C.; Zhang, H.; Yu, L.; Ye, Y.; Zhou, Z.; Huang, L.; Tian, Y. Direct training high-performance deep spiking neural networks: A review of theories and methods. arXiv 2024, arXiv:2405.04289.

- Auge, D.; Hille, J.; Mueller, E.; Knoll, A. A survey of encoding techniques for signal processing in spiking neural networks. Neural Process. Lett. 2021, 53, 4693–4710.

- Zhou, X.; Hanger, D.P.; Hasegawa, M. DeepSNN: A Comprehensive Survey on Deep Spiking Neural Networks. Front. Neurosci. 2020, 14, 456.

- Nguyen, D.A.; Tran, X.T.; Iacopi, F. A review of algorithms and hardware implementations for spiking neural networks. J. Low Power Electron. Appl. 2021, 11, 23.

- Dora, S.; Kasabov, N. Spiking neural networks for computational intelligence: An overview. Big Data Cogn. Comput. 2021, 5, 67.

- Pietrzak, P.; Szczęsny, S.; Huderek, D.; Przyborowski, Ł. Overview of spiking neural network learning approaches and their computational complexities. Sensors 2023, 23, 3037.

- Thorpe, S.; Gautrais, J. Rank order coding. In Computational Neuroscience: Trends in Research; Springer: Boston, MA, USA, 1998; pp. 113–118.

- Paugam-Moisy, H. Spiking Neuron Networks: A Survey; Technical Report EPFL-REPORT-83371; École Polytechnique Fédérale de Lausanne (EPFL): Lausanne, Switzerland, 2006.

- Davies, M.; Wild, A.; Orchard, G.; Sandamirskaya, Y.; Guerra, G.A.F.; Joshi, P.; Risbud, S.R. Advancing neuromorphic computing with Loihi: A survey of results and outlook. Proc. IEEE 2021, 109, 911–934.

- Paul, P.; Sosik, P.; Ciencialova, L. A survey on learning models of spiking neural membrane systems and spiking neural networks. arXiv 2024, arXiv:2403.18609.

- Rathi, N.; Chakraborty, I.; Kosta, A.; Sengupta, A.; Ankit, A.; Panda, P.; Roy, K. Exploring neuromorphic computing based on spiking neural networks: Algorithms to hardware. ACM Comput. Surv. 2023, 55, 1–49.

- Dampfhoffer, M.; Mesquida, T.; Valentian, A.; Anghel, L. Backpropagation-based learning techniques for deep spiking neural networks: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 11906–11921.

- Nunes, J.D.; Carvalho, M.; Carneiro, D.; Cardoso, J.S. Spiking neural networks: A survey. IEEE Access 2022, 10, 60738–60764.

- Schliebs, S.; Kasabov, N. Evolving spiking neural network—A survey. Evol. Syst. 2013, 4, 87–98.

- Martinez, F.S.; Casas-Roma, J.; Subirats, L.; Parada, R. Spiking neural networks for autonomous driving: A review. Eng. Appl. Artif. Intell. 2024, 138, 109415.

- Wu, J.; Wang, Y.; Li, Z.; Lu, L.; Li, Q. A review of computing with spiking neural networks. Comput. Mater. Contin. 2024, 78, 2909–2939.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Dayan, P.; Abbott, L.F. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems; MIT Press: Cambridge, MA, USA, 2001.

- Nielsen, M.A. Neural Networks and Deep Learning; Determination Press: San Francisco, CA, USA, 2015; Volume 25, pp. 15–24.

- Diehl, P.U.; Cook, M. Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 2015, 9, 99.

- Gerstner, W.; Kistler, W.M. Spiking Neuron Models: Single Neurons, Populations, Plasticity; Cambridge University Press: Cambridge, UK, 2002.

- Izhikevich, E.M. Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 2004, 15, 1063–1070.

- Horowitz, M. Computing’s energy problem (and what we can do about it). In Proceedings of the 2014 IEEE International Solid-State Circuits Conference (ISSCC) Digest of Technical Papers, San Francisco, CA, USA, 9–13 February 2014; pp. 10–14.

- Sze, V.; Chen, Y.-H.; Yang, T.-J.; Emer, J.S. How to Evaluate Deep Neural Network Processors: TOPS/W (Alone) Considered Harmful. IEEE Solid-State Circuits Mag. 2020, 12, 28–41.

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 2018, 38, 82–99.

- Akopyan, F.; Sawada, J.; Cassidy, A.; Alvarez-Icaza, R.; Arthur, J.; Merolla, P.; Imam, N.; Nakamura, Y.; Datta, P.; Nam, G.J.; et al. TrueNorth: Design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2015, 34, 1537–1557.

- Furber, S.B.; Galluppi, F.; Temple, S.; Plana, L.A. The SpiNNaker project. Proc. IEEE 2014, 102, 652–665.

- Roy, K.; Jaiswal, A.; Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 2019, 575, 607–617.

- Plank, J.S.; Rizzo, C.P.; Gullett, B.; Dent, K.E.M.; Schuman, C.D. Alleviating the Communication Bottleneck in Neuromorphic Computing with Custom-Designed Spiking Neural Networks. J. Low Power Electron. Appl. 2025, 15, 50.

- Wang, X.; Zhu, Y.; Zhou, Z.; Chen, X.; Jia, X. Memristor-Based Spiking Neuromorphic Systems Toward Brain-Inspired Perception and Computing. Nanomaterials 2025, 15, 1130.

- Panda, P.; Aketi, S.A.; Roy, K. Toward scalable, efficient, and accurate deep spiking neural networks with backward residual connections, stochastic softmax, and hybridization. Front. Neurosci. 2020, 14, 653.

- Lemaire, Q.; Cordone, L.; Castagnetti, A.; Novac, P.E.; Courtois, J.; Miramond, B. An Analytical Estimation of Spiking Neural Networks Energy Efficiency. In Proceedings of the International Conference on Artificial Neural Networks (ICANN), Heraklion, Greece, 26–29 September 2023; pp. 585–597.

- Shen, S.; Zhang, R.; Wang, C.; Huang, R.; Tuerhong, A.; Guo, Q.; Lu, Z.; Zhang, J.; Leng, L. Evolutionary Spiking Neural Networks: A Survey. Memetic Comput. 2024, 16, 123–145.

- Bi, G.Q.; Poo, M.M. Synaptic modifications in cultured hippocampal neurons: Dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 1998, 18, 10464–10472.

- Mozafari, M.; Ganjtabesh, M.; Nowzari-Dalini, A.; Thorpe, S.J.; Masquelier, T. Bio-inspired digit recognition using reward-modulated spike-timing-dependent plasticity in deep convolutional networks. Pattern Recognit. 2019, 94, 87–95.

- Amir, A.; Taba, B.; Berg, D.; Melano, T.; McKinstry, J.; Nolfo, C.D.; Nayak, T.; Andreopoulos, A.; Garreau, G.; Mendoza, M.; et al. A low power, fully event-based gesture recognition system. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 7388–7397.

- Massa, R.; Marchisio, A.; Martina, M.; Shafique, M. An efficient spiking neural network for recognizing gestures with a DVS camera on the Loihi neuromorphic processor. arXiv 2020, arXiv:2006.09985.

- Ma, S.; Pei, J.; Zhang, W.; Wang, G.; Feng, D.; Yu, F.; Song, C.; Qu, H.; Ma, C.; Lu, M.; et al. Neuromorphic Computing Chip with Spatiotemporal Elasticity for Multi-Intelligent-Tasking Robots. Sci. Robot. 2022, 7, eabk2948.

- Stewart, K.; Orchard, G.; Shrestha, S.B.; Neftci, E. Online few-shot gesture learning on a neuromorphic processor. arXiv 2020, arXiv:2008.01151.

- Bartolozzi, C.; Indiveri, G.; Donati, E. Embodied Neuromorphic Intelligence. Nat. Commun. 2022, 13, 1–14.

- Leitão, D.; Cunha, R.; Lemos, J.M. Adaptive Control of Quadrotors in Uncertain Environments. Eng 2024, 5, 544–561.

- Velarde-Gomez, S.; Giraldo, E. Nonlinear Control of a Permanent Magnet Synchronous Motor Based on State Space Neural Network Model Identification and State Estimation by Using a Robust Unscented Kalman Filter. Eng 2025, 6, 30.

- Tan, C.; Šarlija, M.; Kasabov, N. NeuroSense: Short-Term Emotion Recognition and Understanding Based on Spiking Neural Network Modelling of Spatio-Temporal EEG Patterns. Neurocomputing 2021, 434, 137–148.

- Yang, G.; Kang, Y.; Charlton, P.H.; Kyriacou, P.A.; Kim, K.K.; Li, L.; Park, C. Energy-Efficient PPG-Based Respiratory Rate Estimation Using Spiking Neural Networks. Sensors 2024, 24, 3980.

- Kumar, N.; Tang, G.; Yoo, R.; Michmizos, K.P. Decoding EEG with Spiking Neural Networks on Neuromorphic Hardware. Transactions on Machine Learning Research. 2022, pp. 1–15. Available online: https://openreview.net/forum?id=ZPBJPGX3Bz (accessed on 23 October 2025).

- Garcia-Palencia, O.; Fernandez, J.; Shim, V.; Kasabov, N.K.; Wang, A.; the Alzheimer’s Disease Neuroimaging Initiative. Spiking Neural Networks for Multimodal Neuroimaging: A Comprehensive Review of Current Trends and the NeuCube Brain-Inspired Architecture. Bioengineering 2025, 12, 628.

- Ayasi, B.; Vázquez, I.X.; Saleh, M.; Garcia-Vico, A.M.; Carmona, C.J. Application of Spiking Neural Networks and Traditional Artificial Neural Networks for Solar Radiation Forecasting in Photovoltaic Systems in Arab Countries. Neural Comput. Appl. 2025, 37, 9095–9127.

- Sopeña, J.M.G.; Pakrashi, V.; Ghosh, B. A Spiking Neural Network Based Wind Power Forecasting Model for Neuromorphic Devices. Energies 2022, 15, 7256.

- Thangaraj, V.K.; Nachimuthu, D.S.; Francis, V.A.R. Wind Speed Forecasting at Wind Farm Locations with a Unique Hybrid PSO-ALO Based Modified Spiking Neural Network. Energy Syst. 2023, 16, 713–741.

- AbouHassan, I.; Kasabov, N.; Bankar, T.; Garg, R.; Sen Bhattacharya, B. PAMeT-SNN: Predictive Associative Memory for Multiple Time Series based on Spiking Neural Networks with Case Studies in Economics and Finance. TechRxiv 2023.

- Joseph, G.V.; Pakrashi, V. Spiking Neural Networks for Structural Health Monitoring. Sensors 2022, 22, 9245.

- Reid, D.; Hussain, A.J.; Tawfik, H. Financial Time Series Prediction Using Spiking Neural Networks. PLoS ONE 2014, 9, e103656.

- Du, X.; Tong, W.; Jiang, L.; Yu, D.; Wu, Z.; Duan, Q.; Deng, S. SNN-IoT: Efficient Partitioning and Enabling of Deep Spiking Neural Networks in IoT Services. IEEE Trans. Serv. Comput. 2025; in press.

- Li, H.; Tu, B.; Liu, B.; Li, J.; Plaza, A. Adaptive Feature Self-Attention in Spiking Neural Networks for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–15.

- Chunduri, R.K.; Perera, D.G. Neuromorphic Sentiment Analysis Using Spiking Neural Networks. Sensors 2023, 23, 7701.

- Schuman, C.D.; Plank, J.S.; Bruer, G.; Anantharaj, J. Non-Traditional Input Encoding Schemes for Spiking Neuromorphic Systems. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2019), Budapest, Hungary, 14–19 July 2019; pp. 1–10.

- Datta, G.; Liu, Z.; Abdullah-Al Kaiser, M.; Kundu, S.; Mathai, J.; Yin, Z.; Jacob, A.P.; Jaiswal, A.R.; Beerel, P.A. In-sensor and neuromorphic computing are all you need for energy-efficient computer vision. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Guo, W.; Fouda, M.E.; Eltawil, A.M.; Salama, K.N. Neural coding in spiking neural networks: A comparative study for robust neuromorphic systems. Front. Neurosci. 2021, 15, 638474.

- Mostafa, H. Supervised learning based on temporal coding in spiking neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 1–9.

- Sakemi, Y.; Morino, K.; Morie, T.; Aihara, K. A supervised learning algorithm for multilayer spiking neural networks based on temporal coding toward energy-efficient VLSI processor design. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 394–408.

- Kim, Y.; Park, H.; Moitra, A.; Bhattacharjee, A.; Venkatesha, Y.; Panda, P. Rate coding or direct coding: Which one is better for accurate, robust, and energy-efficient spiking neural networks? In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2022), Singapore, 23–27 May 2022; pp. 71–75.

- Zhou, S.; Li, X.; Chen, Y.; Chandrasekaran, S.T.; Sanyal, A. Temporal-coded deep spiking neural network with easy training and robust performance. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI 2021), Virtual Event, 2–9 February 2021; Volume 35, pp. 11143–11151.

- Gautrais, J.; Thorpe, S. Rate coding versus temporal order coding: A theoretical approach. Biosystems 1998, 48, 57–65.

- Duarte, R.C.; Uhlmann, M.; Van Den Broek, D.; Fitz, H.; Petersson, K.; Morrison, A. Encoding symbolic sequences with spiking neural reservoirs. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2018), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Bonilla, L.; Gautrais, J.; Thorpe, S.; Masquelier, T. Analyzing time-to-first-spike coding schemes: A theoretical approach. Front. Neurosci. 2022, 16, 971937.

- Averbeck, B.B.; Latham, P.E.; Pouget, A. Neural correlations, population coding and computation. Nat. Rev. Neurosci. 2006, 7, 358–366.

- Pan, Z.; Wu, J.; Zhang, M.; Li, H.; Chua, Y. Neural population coding for effective temporal classification. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2019), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Cheung, K.F.; Tang, P.Y. Sigma-delta modulation neural networks. In Proceedings of the IEEE International Conference on Neural Networks (ICNN 1993), San Francisco, CA, USA, 28 March–1 April 1993; pp. 489–493.

- Yousefzadeh, A.; Hosseini, S.; Holanda, P.; Leroux, S.; Werner, T.; Serrano-Gotarredona, T.; Simoens, P. Conversion of synchronous artificial neural network to asynchronous spiking neural network using sigma-delta quantization. In Proceedings of the IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS 2019), Hsinchu, Taiwan, 18–20 March 2019; pp. 81–85.

- Nasrollahi, S.A.; Syutkin, A.; Cowan, G. Input-layer neuron implementation using delta-sigma modulators. In Proceedings of the 2022 20th IEEE Interregional NEWCAS Conference (NEWCAS 2022), Québec City, QC, Canada, 19–22 June 2022; pp. 533–537.

- Nair, M.V.; Indiveri, G. An ultra-low power sigma-delta neuron circuit. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS 2019), Sapporo, Japan, 26–29 May 2019; pp. 1–5.

- Izhikevich, E.M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 2003, 14, 1569–1572.

- Eyherabide, H.G.; Rokem, A.; Herz, A.V.; Samengo, I. Bursts generate a non-reducible spike-pattern code. Front. Neurosci. 2009, 3, 490.

- O’Keefe, J.; Recce, M.L. Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 1993, 3, 317–330.

- Montemurro, M.A.; Rasch, M.J.; Murayama, Y.; Logothetis, N.K.; Panzeri, S. Phase-of-firing coding of natural visual stimuli in primary visual cortex. Curr. Biol. 2008, 18, 375–380.

- Masquelier, T.; Hugues, E.; Deco, G.; Thorpe, S.J. Oscillations, Phase-of-Firing Coding, and Spike Timing-Dependent Plasticity: An Efficient Learning Scheme. J. Neurosci. 2009, 29, 13484–13493.

- Wang, Z.; Yu, N.; Liao, Y. Activeness: A Novel Neural Coding Scheme Integrating the Spike Rate and Temporal Information in the Spiking Neural Network. Electronics 2023, 12, 3992.

- Qiu, X.; Zhu, R.J.; Chou, Y.; Wang, Z.; Deng, L.J.; Li, G. Gated attention coding for training high-performance and efficient spiking neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI 2024), Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 601–610.

- Paugam-Moisy, H.; Bohte, S.M. Computing with Spiking Neuron Networks. In Handbook of Natural Computing; Rozenberg, G., Back, T., Kok, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 335–376.

- Hodgkin, A.L.; Huxley, A.F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952, 117, 500–544.

- Brette, R.; Rudolph, M.; Carnevale, T.; Hines, M.; Beeman, D.; Bower, J.M.; Diesmann, M.; Morrison, A.; Goodman, P.H.; Harris, F.C., Jr.; et al. Simulation of networks of spiking neurons: A review of tools and strategies. J. Comput. Neurosci. 2007, 23, 349–398.

- Indiveri, G.; Liu, S.C. Neuromorphic VLSI circuits for spike-based computation. Proc. IEEE 2011, 99, 2414–2435.

- Gerstner, W.; van Hemmen, J.L. Why spikes? Hebbian learning and retrieval of time-resolved excitation patterns. Biol. Cybern. 1993, 69, 503–515.

- Lapicque, L. Recherches quantitatives sur l’excitation électrique des nerfs traitée comme une polarisation. J. Physiol. Pathol. Générale 1907, 9, 620–635.

- Burkitt, A.N. A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol. Cybern. 2006, 95, 1–19.

- Brunel, N.; Van Rossum, M.C.W. Lapicque’s 1907 paper: From frogs to integrate-and-fire. Biol. Cybern. 2007, 97, 337–339.

- Benda, J.; Herz, A.V.M. A universal model for spike-frequency adaptation. Neural Comput. 2003, 15, 2523–2564.

- Fourcaud-Trocmé, N.; Hansel, D.; Van Vreeswijk, C.; Brunel, N. How spike generation mechanisms determine the neuronal response to fluctuating inputs. J. Neurosci. 2003, 23, 11628–11640.

- Gerstner, W.; Brette, R. Adaptive exponential integrate-and-fire model. Scholarpedia 2009, 4, 8427.

- Makhlooghpour, A.; Soleimani, H.; Ahmadi, A.; Zwolinski, M.; Saif, M. High-accuracy implementation of adaptive exponential integrate-and-fire neuron model. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2016), Vancouver, BC, Canada, 24–29 July 2016; pp. 192–197.

- Haghiri, S.; Ahmadi, A. A Novel Digital Realization of AdEx Neuron Model. IEEE Trans. Circuits Syst. II Express Briefs 2020, 67, 1444–1451.

- Ahmadi, A.; Zwolinski, M. A modified Izhikevich model for circuit implementation of spiking neural networks. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS 2010), Paris, France, 30 May–2 June 2010; pp. 4253–4256.

- Izhikevich, E.M. Resonate-and-fire neurons. Neural Netw. 2001, 14, 883–894.

- Higuchi, S.; Kairat, S.; Bohte, S.M.; Otte, S. Balanced resonate-and-fire neurons. arXiv 2024, arXiv:2402.14603.

- Lehmann, H.M.; Hille, J.; Grassmann, C.; Issakov, V. Direct Signal Encoding with Analog Resonate-and-Fire Neurons. IEEE Access 2023, 11, 71985–71995.

- Leigh, A.J.; Heidarpur, M.; Mirhassani, M. A Resource-Efficient and High-Accuracy CORDIC-Based Digital Implementation of the Hodgkin–Huxley Neuron. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2023, 31, 1377–1388.

- Devi, M.; Choudhary, D.; Garg, A.R. Information processing in extended Hodgkin–Huxley neuron model. In Proceedings of the 2020 3rd International Conference on Emerging Technologies in Computer Engineering: Machine Learning and Internet of Things (ICETCE 2020), Jaipur, India, 7–8 February 2020; pp. 176–180.

- Intel Neuromorphic Computing Lab. Lava: A Software Framework for Neuromorphic Computing. 2021. Available online: https://lava-nc.org (accessed on 23 October 2025).

- Zambrano, D.; Bohte, S.M. Fast and efficient asynchronous neural computation with adapting spiking neural networks. arXiv 2016, arXiv:1609.02053.

- Wu, Y.; Deng, L.; Li, G.; Zhu, J.; Shi, L. Spatio-Temporal Backpropagation for Training High-Performance Spiking Neural Networks. Front. Neurosci. 2018, 12, 331.

- Lee, J.H.; Delbruck, T.; Pfeiffer, M. Training deep spiking neural networks using backpropagation. Front. Neurosci. 2016, 10, 508.

- Shrestha, S.B.; Orchard, G. SLAYER: Spike layer error reassignment in time. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Volume 31.

- Lian, S.; Shen, J.; Liu, Q.; Wang, Z.; Yan, R.; Tang, H. Learnable surrogate gradient for direct training spiking neural networks. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence (IJCAI-23), Macao SAR, China, 19–25 August 2023; pp. 3002–3010.

- Sjöström, J.; Gerstner, W. Spike-timing-dependent plasticity. Scholarpedia 2010, 5, 1362.

- Bohte, S.; Kok, J.; Poutré, J. SpikeProp: Backpropagation for Networks of Spiking Neurons. In Proceedings of the 8th European Symposium on Artificial Neural Networks, ESANN 2000, Bruges, Belgium, 26–28 April 2000; Volume 48, pp. 419–424.

- Zenke, F.; Ganguli, S. SuperSpike: Supervised learning in multilayer spiking neural networks. Neural Comput. 2018, 30, 1514–1541.

- Wunderlich, T.C.; Pehle, C. Event-based backpropagation can compute exact gradients for spiking neural networks. Sci. Rep. 2021, 11, 12829.

- Gautam, A.; Kohno, T. Adaptive STDP-Based On-Chip Spike Pattern Detection. Front. Neurosci. 2023, 17, 1203956.

- Li, S. aSTDP: A more biologically plausible learning. arXiv 2022, arXiv:2206.14137.

- Paredes-Vallès, F.; Scheper, K.Y.; Croon, G.C.D. Unsupervised Learning of a Hierarchical Spiking Neural Network for Optical Flow Estimation: From Events to Global Motion Perception. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2051–2064.

- Caporale, N.; Dan, Y. Spike Timing–Dependent Plasticity: A Hebbian Learning Rule. Annu. Rev. Neurosci. 2008, 31, 25–46.

- Ponulak, F. ReSuMe—New Supervised Learning Method for Spiking Neural Networks; Technical Report; Institute of Control and Information Engineering, Poznań University of Technology: Poznań, Poland, 2005.

- Bellec, G.; Scherr, F.; Hajek, E.; Salaj, D.; Legenstein, R.; Maass, W. Biologically inspired alternatives to backpropagation through time for learning in recurrent neural nets. arXiv 2019, arXiv:1901.09049.

- Liu, F.; Zhao, W.; Chen, Y.; Wang, Z.; Yang, T.; Jiang, L. SSTDP: Supervised Spike Timing Dependent Plasticity for Efficient Spiking Neural Network Training. Front. Neurosci. 2021, 15, 756876.

- Diehl, P.U.; Neil, D.; Binas, J.; Cook, M.; Liu, S.C.; Pfeiffer, M. Fast-classifying, high-accuracy spiking deep networks through weight and threshold balancing. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2015), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

- Rueckauer, B.; Lungu, I.A.; Hu, Y.; Pfeiffer, M.; Liu, S.C. Conversion of continuous-valued deep networks to efficient event-driven networks for image classification. Front. Neurosci. 2017, 11, 682.

- Cao, Y.; Chen, Y.; Khosla, D. Spiking deep convolutional neural networks for energy-efficient object recognition. Int. J. Comput. Vis. 2015, 113, 54–66.

- Hunsberger, E.; Eliasmith, C. Spiking deep networks with LIF neurons. arXiv 2015, arXiv:1510.08829.

- Han, B.; Srinivasan, G.; Roy, K. RMP-SNN: Residual membrane potential neuron for enabling deeper, high-accuracy, and low-latency spiking neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 14–19 June 2020; pp. 13558–13567.

- Bu, T.; Fang, W.; Ding, J.; Dai, P.; Yu, Z.; Huang, T. Optimal ANN–SNN conversion for high-accuracy and ultra-low-latency spiking neural networks. In Proceedings of the 10th International Conference on Learning Representations (ICLR 2022), Virtual Conference, 25–29 April 2022; OpenReview: Online. Available online: https://openreview.net/forum?id=7B3IJMM1k_M (accessed on 23 October 2025).

- LeCun, Y.; Cortes, C.; Burges, C.J. The MNIST Database of Handwritten Digits; Technical Report; AT&T Labs: Florham Park, NJ, USA, 1998; Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 23 October 2025).

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report UTML TR 2009; University of Toronto: Toronto, ON, Canada, 2009.

- Gaurav, R.; Tripp, B.; Narayan, A. Spiking approximations of the MaxPooling operation in deep SNNs. arXiv 2022, arXiv:2205.07076.

- Ponulak, F.; Kasinski, A. Introduction to spiking neural networks: Information processing, learning and applications. Acta Neurobiol. Exp. 2011, 71, 409–433.

- Xin, J.; Embrechts, M.J. Supervised learning with spiking neural networks. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2001), Washington, DC, USA, 15–19 July 2001; Volume 3, pp. 1772–1777.

- Ponulak, F.; Kasiński, A. Supervised learning in spiking neural networks with ReSuMe: Sequence learning, classification, and spike shifting. Neural Comput. 2010, 22, 467–510.

- Xu, Y.; Zeng, X.; Zhong, S. A New Supervised Learning Algorithm for Spiking Neurons. Neural Comput. 2013, 25, 1472–1511.

- Xu, Y.; Zeng, X.; Han, L.; Yang, J. A supervised multi-spike learning algorithm based on gradient descent for spiking neural networks. Neural Netw. 2013, 43, 99–113.

- Ahmed, F.Y.; Shamsuddin, S.M.; Hashim, S.Z.M. Improved SpikeProp for using particle swarm optimization. Math. Probl. Eng. 2013, 2013, 257085.

- Yu, Q.; Tang, H.; Tan, K.C.; Yu, H. A brain-inspired spiking neural network model with temporal encoding and learning. Neurocomputing 2014, 138, 3–13.

- Huh, D.; Sejnowski, T.J. Gradient descent for spiking neural networks. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Volume 31.

- Datta, G.; Kundu, S.; Beerel, P.A. Training energy-efficient deep spiking neural networks with single-spike hybrid input encoding. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2021), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Zheng, H.; Wu, Y.; Deng, L.; Hu, Y.; Li, G. Going deeper with directly trained larger spiking neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI 2021), Virtual Event, 2–9 February 2021; Volume 35, pp. 11062–11070.

- Shi, X.; Hao, Z.; Yu, Z. SpikingResFormer: Bridging ResNet and Vision Transformer in spiking neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024), Seattle, WA, USA, 17–21 June 2024; pp. 5610–5619.

- Zhou, C.; Zhang, H.; Zhou, Z.; Yu, L.; Huang, L.; Fan, X.; Yuan, L.; Ma, Z.; Zhou, H.; Tian, Y. QKFormer: Hierarchical spiking transformer using Q-K attention. arXiv 2024, arXiv:2403.16552.

- Sorbaro, M.; Liu, Q.; Bortone, M.; Sheik, S. Optimizing the Energy Consumption of Spiking Neural Networks for Neuromorphic Applications. Front. Neurosci. 2020, 14, 516916.

- Sengupta, A.; Ye, Y.; Wang, R.; Liu, C.; Roy, K. Going deeper in spiking neural networks: VGG and residual architectures. Front. Neurosci. 2019, 13, 95.

- Kucik, A.S.; Meoni, G. Investigating spiking neural networks for energy-efficient on-board AI applications: A case study in land cover and land use classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW 2021), Virtual Event, 19–25 June 2021; pp. 2020–2030.

- Applied Brain Research. KerasSpiking—Estimating Model Energy. 2024. Available online: https://www.nengo.ai/keras-spiking/examples/model-energy.html (accessed on 12 April 2024).

- Fang, W.; Chen, Y.; Ding, J.; Yu, Z.; Masquelier, T.; Chen, D.; Yu, Z.; Zhou, H.; Tian, Y. SpikingJelly: An open-source machine learning infrastructure platform for spike-based intelligence. Sci. Adv. 2023, 9, eadi1480.

- Pehle, C.G.; Pedersen, J.E. Norse—A Deep Learning Library for Spiking Neural Networks. 2021. Available online: https://doi.org/10.5281/zenodo.4422025 (accessed on 23 October 2025).

- Ali, H.A.H.; Dabbous, A.; Ibrahim, A.; Valle, M. Assessment of recurrent spiking neural networks on neuromorphic accelerators for naturalistic texture classification. In Proceedings of the 2023 18th Conference on Ph.D. Research in Microelectronics and Electronics (PRIME 2023), Valencia, Spain, 18–21 June 2023; pp. 145–148.

- Herbozo Contreras, L.F.; Huang, Z.; Yu, L.; Nikpour, A.; Kavehei, O. Biologically plausible algorithm for seizure detection: Toward AI-enabled electroceuticals at the edge. APL Mach. Learn. 2024, 2, 026113.

- Takaghaj, S.M.; Sampson, J. Rouser: Robust SNN training using adaptive threshold learning. arXiv 2024, arXiv:2407.19566.

| Encoding Type | Main Applications | Complexity | Biological Plausibility | Advantages | Challenges |
|---|---|---|---|---|---|
| Rate Coding [38,75,78] | Image and signal processing; ANN-to-SNN conversion; resource-constrained inference | Low | High | Simple implementation; noise–adversarial robustness; hardware-friendly mapping from activations | Loses fine temporal structure; window-length–latency sensitivity; can require high spike counts that raise energy [80] |
| Direct Input Encoding [73,74,76,78,81] | Deep vision and real-time pipelines with large datasets; accuracy- and latency-critical use | Moderate–high | Low | Fewer time steps; preserves input fidelity; simplifies front end; fast inference | Not event driven; multi-bit input raises compute–energy; lower biological realism |
| Temporal Coding [14,24,38,76,82] | Rapid sensory processing; real-time decisions; fine temporal discrimination–patterns | High | High | High information per spike; low-latency responses; potentially energy efficient with sparse spiking | Sensitive to jitter/noise; complex decoding; training with precise timings is challenging |
| Population Coding [83,84] | Speech–audio; noisy environments; improving separability with simple classifiers | High | High | Noise robustness via redundancy; improved linear separability | More neurons increase energy; decoding large populations adds computational overhead |
| Sigma–Delta Encoding [74,78,85,86,87,88] | Dynamic signals (wearables, biomedical, streaming sensors); energy-aware neuromorphic platforms | Moderate–high | Moderate | Encodes changes (fewer spikes) with good fidelity; noise shaping; strong energy savings | Requires feedback-loop–circuit tuning; trade-offs among fidelity, latency, and energy |
| Burst Coding [13,75,89,90] | Biologically realistic simulations; temporally complex signals; long-activity tasks | Moderate–high | High | Rapid information transfer in spike packets; can be energy saving when bursts are well managed | Synchronizing bursts complicates decoding; scalability and parameter tuning on hardware |
| PoFC [87,91,92,93] | Spatial navigation/sensory processing with oscillations; high-fidelity temporal representation | High | High | Dense information per spike via phase; strong discriminability; potential spike-count/energy reduction | Requires precise global phase reference; sensitive to timing noise; complex decoding and STDP integration |
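
To make the contrast between the first and third rows of the table concrete, the following minimal sketch encodes a single normalized intensity as a spike train under rate coding and under TTFS, one common temporal code. It is an illustration only: the function names, the Bernoulli sampling rule, and the linear intensity-to-latency mapping are assumptions made here, not the encoders used in the experiments.

```python
import random

def rate_encode(intensity, num_steps, rng):
    """Bernoulli rate coding (assumed variant): at every time step the neuron
    spikes with probability equal to the intensity, expected in [0, 1]."""
    return [1 if rng.random() < intensity else 0 for _ in range(num_steps)]

def ttfs_encode(intensity, num_steps):
    """Time-to-first-spike coding (assumed linear mapping): at most one spike,
    emitted earlier for stronger inputs; a zero input stays silent."""
    train = [0] * num_steps
    if intensity > 0.0:
        latency = round((1.0 - intensity) * (num_steps - 1))
        train[latency] = 1
    return train

rng = random.Random(0)            # fixed seed so the rate code is repeatable
print(rate_encode(0.8, 8, rng))   # many spikes; the count carries the value
print(ttfs_encode(0.8, 8))        # one spike; its timing carries the value
```

Under these assumptions the rate code spends up to one spike per step, whereas TTFS spends at most one spike per input, which is the spike-count versus timing-sensitivity trade-off summarized in the table.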

| Layer | FCN Architecture | VGG7 Architecture |
|---|---|---|
| Input Layer | 784 input neurons | 3 channels, pixels |
| Layer 1 | Dense, 128 neurons, | Conv, 64 filters, kernel, stride 1, padding 1 |
| Layer 2 | Dense, 128 neurons, | Conv, 64 filters, kernel, stride 2, padding 1 |
| – | Output, 10 neurons | Max Pooling, kernel, stride 2 |
| Layer 3 | – | Conv, 128 filters, kernel, stride 1, padding 1 |
| Layer 4 | – | Conv, 128 filters, kernel, stride 2, padding 1 |
| Layer 5 | – | Conv, 128 filters, kernel, stride 2, padding 1 |
| – | – | Max Pooling, kernel, stride 2 |
| Flatten Layer | – | Flatten feature maps |
| Layer 6 | – | Dense, 1024 neurons, |
| Layer 7 | Output, 10 neurons | Output, 10 neurons |
| Special Components | Weight Normalization, Weight Scaling | Weight Normalization, Weight Scaling |
| Total Parameters | ∼118,016 | ∼548,554 |
| Frameworks Used | PyTorch for ANNs; Lava, Norse, SpikingJelly for SNNs | PyTorch for ANNs; Lava, Norse, SpikingJelly for SNNs |
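
The FCN total in the table above can be checked with a few lines of arithmetic. The sketch below counts the parameters of a 784–128–128–10 fully connected stack; the helper name `dense_param_count` is hypothetical. The weights-only count matches the reported ∼118,016, which suggests (as an inference, not a statement from the table) that bias terms are not included in that figure.

```python
def dense_param_count(layer_sizes, include_bias=False):
    """Count trainable parameters of a stack of fully connected layers.

    layer_sizes lists the widths from input to output, e.g. [784, 128, 128, 10].
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out          # weight matrix of this layer
        if include_bias:
            total += n_out             # one bias per output neuron
    return total

fcn = [784, 128, 128, 10]
print(dense_param_count(fcn))                     # 118016 (weights only)
print(dense_param_count(fcn, include_bias=True))  # 118282 (weights + biases)
```

The VGG7 total is not reproduced here because the kernel sizes and input resolution are missing from the table as extracted.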

| Parameter | ANN (FCN/VGG7) | SNN (FCN) | SNN (VGG7) |
|---|---|---|---|
| Optimizer | Adam | Adam | Adam |
| Learning rate | 0.001 | 0.001 | 0.001 |
| Weight decay | | | |
| Loss function | Cross-Entropy | CrossEntropyLoss | CrossEntropyLoss |
| Number of epochs | FCN: 100; VGG7: 150 | 100 | 150 |
| Batch size | 64 | 64 | 64 |
| Dropout rate | FCN: 5%; VGG7: 20% | 5% | 20% |
| Activation function | ReLU | – | – |
| Weight initialization | PyTorch defaults | – | – |
| Neuron parameters | – | Threshold: 1.25; Current decay: 0.25; Voltage decay: 0.03; Tau gradient: 0.03; Scale gradient: 3; Refractory decay: 1 | Threshold: 0.5; Current decay: 0.25; Voltage decay: 0.03; Tau gradient: 0.03; Scale gradient: 3; Refractory decay: 1 |
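
As a rough illustration of how the threshold, current decay, and voltage decay listed above interact, the sketch below simulates one current-based (CUBA) leaky integrate-and-fire neuron in plain Python. The update rule (exponential decay of current and voltage, hard reset to zero after a spike) is the common discrete-time CUBA form and is an assumption here, not a transcription of the framework code used in the experiments. The refractory and gradient parameters from the table are not modelled, since they concern refractoriness and surrogate-gradient training rather than this forward pass.

```python
def cuba_lif(inputs, threshold=1.25, current_decay=0.25, voltage_decay=0.03):
    """Simulate one CUBA LIF neuron (assumed dynamics) over a list of inputs.

    current:  u[t] = (1 - current_decay) * u[t-1] + x[t]
    voltage:  v[t] = (1 - voltage_decay) * v[t-1] + u[t]
    spike when v[t] >= threshold, followed by a hard reset of v to zero.
    """
    u = 0.0
    v = 0.0
    spikes = []
    for x in inputs:
        u = (1.0 - current_decay) * u + x
        v = (1.0 - voltage_decay) * v + u
        fired = v >= threshold
        if fired:
            v = 0.0                    # hard reset (assumption)
        spikes.append(1 if fired else 0)
    return spikes

drive = [0.5] * 6                          # constant input, hypothetical value
print(cuba_lif(drive))                     # FCN setting: [0, 1, 0, 1, 1, 1]
print(cuba_lif(drive, threshold=0.5))      # lower VGG7 threshold fires sooner
```

With the same drive, lowering the threshold from 1.25 to 0.5 makes the neuron fire from the first step onward, which illustrates why the threshold is a sensitive knob for spike activity.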

| Neuron Type | Time Steps | Rate Encoding | TTFS | Sigma–Delta Encoding | Direct Coding | Burst Coding | PoFC | R-NoM |
|---|---|---|---|---|---|---|---|---|
| ANN (Baseline) | – | 98.23% | | | | | | |
| IF | 8 | 97.20 | 86.60 | 97.70 | 78.90 | 80.00 | 71.20 | 72.20 |
| | 6 | 97.10 | 90.00 | 97.60 | 88.10 | 91.00 | 78.50 | 74.00 |
| | 4 | 96.90 | 92.00 | 97.40 | 86.80 | 88.40 | 79.80 | 76.80 |
| LIF | 8 | 97.00 | 86.50 | 97.40 | 81.90 | 80.06 | 72.10 | 71.00 |
| | 6 | 96.90 | 90.00 | 97.50 | 90.00 | 91.10 | 77.70 | 73.00 |
| | 4 | 96.90 | 92.00 | 97.20 | 88.60 | 83.30 | 82.30 | 75.50 |
| ALIF | 8 | 97.30 | 96.40 | 96.50 | 97.10 | 97.14 | 92.70 | 58.00 |
| | 6 | 96.60 | 96.10 | 96.30 | 97.00 | 97.00 | 92.90 | 57.00 |
| | 4 | 96.40 | 96.70 | 96.30 | 97.00 | 96.90 | 93.00 | 46.00 |
| CUBA | 8 | 97.66 | 97.10 | 97.40 | 97.60 | 79.50 | 94.10 | 68.00 |
| | 6 | 97.56 | 97.00 | 97.18 | 97.50 | 79.30 | 94.10 | 66.50 |
| | 4 | 97.20 | 96.30 | 96.40 | 97.30 | 96.80 | 93.40 | 56.00 |
| Sigma–Delta | 8 | 98.10 | 97.90 | 98.00 | 88.00 | 88.30 | 95.00 | 86.70 |
| | 6 | 97.80 | 97.50 | 98.00 | 88.50 | 97.90 | 87.40 | 86.00 |
| | 4 | 97.90 | 97.50 | 97.70 | 98.00 | 97.90 | 87.80 | 64.00 |
| RF | 8 | 93.00 | 86.80 | 90.05 | 97.20 | 92.20 | 85.80 | 52.00 |
| | 6 | 92.60 | 88.70 | 92.00 | 79.00 | 92.00 | 84.90 | 50.00 |
| | 4 | 93.00 | 88.00 | 91.60 | 53.00 | 91.70 | 83.90 | 47.70 |
| RF-IZH | 8 | 97.70 | 47.00 | 79.60 | 97.70 | 50.00 | 94.90 | 48.00 |
| | 6 | 97.55 | 87.70 | 97.40 | 97.70 | 97.40 | 94.80 | 69.70 |
| | 4 | 97.00 | 96.40 | 96.00 | 97.20 | 96.80 | 93.00 | 74.00 |
| EIF | 8 | 96.70 | 94.60 | 96.50 | 97.50 | 96.30 | 95.20 | 88.10 |
| | 6 | 96.20 | 94.90 | 96.20 | 97.60 | 96.00 | 94.50 | 86.70 |
| | 4 | 96.55 | 95.80 | 96.60 | 97.50 | 96.40 | 95.10 | 87.70 |
| AdEx | 8 | 96.50 | 94.70 | 96.50 | 97.40 | 96.70 | 95.40 | 89.50 |
| | 6 | 96.40 | 95.00 | 96.40 | 97.50 | 96.80 | 95.30 | 89.20 |
| | 4 | 96.00 | 95.90 | 96.44 | 97.39 | 96.80 | 95.20 | 88.50 |

| Neuron Type | Time Steps | Rate Encoding | TTFS | Sigma–Delta Encoding | Direct Coding | Burst Coding | PoFC | R-NoM |

|---|---|---|---|---|---|---|---|---|

| IF | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| LIF | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| ALIF | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| CUBA | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| Sigma–Delta | 8 | | | | | | | |

| 6 | ||||||||

| 4 | ||||||||

| RF | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| RF-IZH | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| EIF | 8 | |||||||

| 6 | ||||||||

| 4 | ||||||||

| AdEx | 8 | |||||||

| 6 | ||||||||

| 4 |

| Neuron Type | Time Steps | Rate Encoding | TTFS | Sigma–Delta Encoding | Direct Coding | Burst Coding | PoFC | R-NoM |
|---|---|---|---|---|---|---|---|---|
| ANN (Baseline) | – | 83.60% | | | | | | |
| IF | 2 | 57.00 | 60.00 | 62.00 | 74.00 | 60.00 | 57.00 | 29.00 |
| | 4 | 56.50 | 62.00 | 65.00 | 74.50 | 64.00 | 65.00 | 27.00 |
| | 6 | 57.00 | 62.50 | 65.00 | 74.50 | 64.50 | 68.00 | 30.00 |
| LIF | 2 | 50.00 | 61.50 | 62.50 | 74.30 | 57.60 | 59.00 | 28.00 |
| | 4 | 51.00 | 62.00 | 64.50 | 74.50 | 61.00 | 63.00 | 23.00 |
| | 6 | 50.00 | 59.50 | 65.00 | 74.00 | 61.50 | 67.00 | 21.00 |
| ALIF | 2 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 |
| | 4 | 39.00 | 34.00 | 20.00 | 46.00 | 20.00 | 27.00 | 14.00 |
| | 6 | 51.00 | 38.00 | 28.00 | 49.00 | 27.00 | 29.00 | 24.00 |
| CUBA | 2 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 |
| | 4 | 34.00 | 50.00 | 33.50 | 40.00 | 30.00 | 24.00 | 25.00 |
| | 6 | 45.00 | 43.00 | 40.00 | 50.00 | 30.00 | 31.00 | 27.00 |
| Sigma–Delta | 2 | 57.00 | 72.00 | 56.00 | 83.00 | 57.00 | 57.00 | 30.00 |
| | 4 | 61.00 | 72.00 | 66.00 | 78.00 | 66.00 | 62.00 | 27.00 |
| | 6 | 60.00 | 72.50 | 68.00 | 79.00 | 66.00 | 67.00 | 24.00 |
| RF | 2 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 |
| | 4 | 22.00 | 10.00 | 10.00 | 42.00 | 10.00 | 10.00 | 10.00 |
| | 6 | 37.00 | 36.00 | 32.00 | 47.00 | 39.00 | 37.00 | 31.00 |
| RF-IZH | 2 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 | 10.00 |
| | 4 | 10.00 | 10.00 | 10.00 | 33.00 | 10.00 | 10.00 | 10.00 |
| | 6 | 45.00 | 37.00 | 30.00 | 39.00 | 32.00 | 32.00 | 27.00 |
| EIF | 2 | 58.50 | 56.00 | 53.50 | 70.00 | 58.50 | 54.00 | 25.00 |
| | 4 | 59.50 | 64.00 | 60.00 | 69.00 | 57.00 | 57.00 | 22.50 |
| | 6 | 60.00 | 65.00 | 61.00 | 68.50 | 53.50 | 60.00 | 24.00 |
| AdEx | 2 | 59.00 | 55.50 | 60.00 | 69.50 | 58.50 | 54.00 | 27.00 |
| | 4 | 60.00 | 62.00 | 61.50 | 70.00 | 56.50 | 59.00 | 29.00 |
| | 6 | 60.50 | 63.00 | 60.50 | 70.10 | 52.00 | 61.00 | 25.50 |

| Neuron Type | Time Steps | Rate Encoding | TTFS | Sigma–Delta Encoding | Direct Coding | Burst Coding | PoFC | R-NoM |

|---|---|---|---|---|---|---|---|---|

| IF | 2 | |||||||

| 4 | ||||||||

| 6 | ||||||||

| LIF | 2 | |||||||

| 4 | ||||||||

| 6 | ||||||||

| ALIF | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | ||||||||

| 6 | ||||||||

| CUBA | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | ||||||||

| 6 | ||||||||

| Sigma–Delta | 2 | | | | | | | |

| 4 | ||||||||

| 6 | ||||||||

| RF | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | ||||

| 6 | ||||||||

| RF-IZH | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | ||

| 6 | ||||||||

| EIF | 2 | |||||||

| 4 | ||||||||

| 6 | ||||||||

| AdEx | 2 | |||||||

| 4 | ||||||||

| 6 |

| Encoding Scheme | Threshold | 2 Steps | 4 Steps | 6 Steps |
|---|---|---|---|---|
| Rate encoding | 0.1 | 58.50 | 59.50 | 60.00 |
| | 0.5 | 47.00 | 53.00 | 47.50 |
| | 0.75 | 40.00 | 41.00 | 27.00 |
| TTFS encoding | 0.1 | 56.00 | 64.00 | 65.00 |
| | 0.5 | 57.00 | 65.50 | 67.50 |
| | 0.75 | 54.00 | 65.00 | 55.00 |
| Sigma–Delta encoding | 0.1 | 53.50 | 60.00 | 61.00 |
| | 0.5 | 39.00 | 38.50 | 54.00 |
| | 0.75 | 51.50 | 30.00 | 51.00 |
| Direct coding | 0.1 | 70.00 | 69.00 | 68.50 |
| | 0.5 | 68.00 | 67.50 | 66.00 |
| | 0.75 | 16.00 | 17.00 | 15.00 |
| Burst Coding | 0.1 | 58.50 | 57.00 | 53.50 |
| | 0.5 | 32.00 | 56.00 | 60.00 |
| | 0.75 | 51.00 | 57.00 | 55.00 |
| PoFC | 0.1 | 54.00 | 57.00 | 60.00 |
| | 0.5 | 42.50 | 53.50 | 56.00 |
| | 0.75 | 17.00 | 39.50 | 20.00 |
| R-NoM | 0.1 | 25.00 | 22.50 | 24.00 |
| | 0.5 | 28.00 | 28.00 | 29.50 |
| | 0.75 | 29.00 | 28.50 | 28.00 |

| Encoding Scheme | Threshold | 2 Steps | 4 Steps | 6 Steps |

|---|---|---|---|---|

| Rate encoding | 0.1 | |||

| 0.5 | ||||

| 0.75 | ||||

| TTFS encoding | 0.1 | |||

| 0.5 | ||||

| 0.75 | ||||

| Sigma–Delta encoding | 0.1 | | | |

| 0.5 | ||||

| 0.75 | ||||

| Direct coding | 0.1 | |||

| 0.5 | ||||

| 0.75 | ||||

| Burst Coding | 0.1 | |||

| 0.5 | ||||

| 0.75 | ||||

| PoFC | 0.1 | |||

| 0.5 | ||||

| 0.75 | ||||

| R-NoM | 0.1 | |||

| 0.5 | ||||

| 0.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ayasi, B.; Carmona, C.J.; Saleh, M.; García-Vico, A.M. A Practical Tutorial on Spiking Neural Networks: Comprehensive Review, Models, Experiments, Software Tools, and Implementation Guidelines. Eng 2025, 6, 304. https://doi.org/10.3390/eng6110304
