1. Introduction

Stress is a physiological response to various factors arising in individuals unable to consciously handle a specific situation. During a stressful situation, the sympathetic nervous system (SNS) is responsible for the fight-or-flight physiological reaction of the body, resulting in vasoconstriction and increased blood pressure and heart rate [1]. In the long term, stress can lead to different health problems, being directly related to several physiological processes such as those involving the autonomic nervous system [2], the immune system [3], and the cardiovascular and respiratory systems [4].

Stress has been deeply investigated in recent years [1,5] given the relevant consequences of a prolonged stressful condition on the body. Nevertheless, the detection of stress remains a challenging task since no standardized and validated methodology for stress assessment has been established as the gold standard. Among the methods used to measure stress, there are questionnaires [6,7], the visual analogue scale [8], and the detection of specific biomarkers (e.g., cortisol) related to the stress level [9,10]. Scale-related stress assessment methods do not require expensive tools but are often time-consuming and non-objective and pose difficulties for continuous monitoring. Conversely, biomarker detection methods allow for continuous monitoring by sensing biomarker fluctuations over time. However, these detection methods usually still require invasive and sophisticated tools, often based on non-reusable materials, although significant advancements in biomaterials and biofabrication have made them much less invasive [11].

Given the drawbacks of the above-indicated detection methods, stress assessment through wearable sensors capable of acquiring physiological signals has emerged as a highly promising approach in recent times. Wearable devices are now even smaller in size and more affordable [12], thus becoming non-intrusive tools able to handle continuous monitoring. Furthermore, the recent growth of classification and machine learning algorithms in the physiological data analysis area has profoundly improved stress evaluation [13,14]. In this context, the most employed biosignals are the electrocardiographic (ECG) [15,16], electromyographic (EMG) [17], electroencephalographic (EEG) [18], and photoplethysmographic (PPG) [19] signals. These signals are often combined in a multi-domain approach which takes into account the interaction between multiple physiological data to better analyze the dynamics of each signal and extract further useful information.

Starting from the acquired biosignals, several methods have been reported in the literature to determine the stress level of a subject [13,20], mostly based on the extraction of various features from the signals, followed by a classifier to predict the stress level. For example, Gupta and colleagues [21] employed a support vector machine (SVM) classification on features extracted from the EEG signal. This classificatory approach was also used in [22] with the combination of ECG and EMG signals, obtaining an excellent binary classification accuracy. Other studies used the K-Nearest Neighbors (KNN) algorithm for classification using either ECG [16] or EEG [18] signals.

Although most machine learning approaches can predict the stress level of a subject with good accuracy, they do not consider a critical aspect related to physiological data: the inter-subject variability. Physiological signals, whether acquired at rest or in response to stimuli, exhibit significant subject dependency. While the normal resting heart rate (HR) can depend on factors like fitness [23] and age [24], the normal physiological range for resting HR itself exhibits high variability. This range typically spans 60 to 100 beats per minute (bpm) across healthy adults [25]. Whether measured in a clinical setting [26] or in real-world, out-of-clinic environments, this variability persists, with little change in the upper limits: the 95th percentile of HR is typically less than 110 bpm in individuals aged 18–45, less than 100 bpm in those aged 45–60, and less than 95 bpm in individuals older than 60 years [27].

Furthermore, other critical subject-specific physiological features are linked to respiratory or electrodermal activity signals [28]. For instance, the respiratory rate in adults shows large inter-subject variability, generally ranging from 12 to 20 breaths per minute [29]. In this context, some studies aiming to detect stress have employed either feature extraction or deep learning approaches, often incorporating amplitude normalization to account for inter-subject amplitude differences. However, time-related differences among subjects are often not adequately addressed. Common normalization techniques like scaling [30] and feature standardization [31] do not account for individual subject dependencies in the time domain, particularly concerning raw signals.

One possible approach to overcome this limitation is to normalize features by transforming the original feature vector into a common feature space where the feature exhibits the same mean or an arbitrary value across all subjects. However, this approach has a limitation: normalization is applied to a single feature independently (e.g., the RR interval), leading to a loss of correspondence with other features derived from the same signal. For instance, in the case of normalized RR features [32,33], the RR series is normalized by the mean value of all RR intervals within one ECG recording. Yet the remaining extracted features are often derived from the original, unnormalized signal, thereby losing their association with the normalized RR feature.

Therefore, it is crucial to develop a normalization procedure that addresses this limitation. This procedure must allow for the extraction of all features from a signal that is already in a normalized domain, ensuring that all features remain interconnected and associated within the same normalized framework. The aim of this work is to introduce a novel normalization approach for physiological signals to optimize the entire feature extraction pipeline. This allows the features to effectively account for inter-subject variability across the entire signal. Our algorithm builds upon the previous work of Gasparini et al. [34], which introduced a methodology for classifying PPG signal features that included an inter-subject normalization procedure to mitigate variability between individuals. As a key novelty, we propose a new interpretation of this inter-subject normalization algorithm, specifically tailored for multi-domain feature extraction within the context of multilevel stress classification. We validated our approach using an open-access database of multimodal physiological data collected during various driving conditions in a controlled environment [30]. A key distinction from Gasparini et al.’s method is that we applied the inter-subject normalization procedure to two physiological signals: ECG and respiratory data. Notably, our novel methodology operates directly on the raw physiological signals, transforming them into a different domain. This means that any features subsequently extracted from these normalized signals are inherently normalized within a common framework. The structure of this normalization is directly determined by the specific features used in the normalization process itself (heart rate for ECG signals and breath rate for respiratory signals in this project). This ensures a strong and inherent interrelation among all extracted features.

The performance of this algorithm was tested in a feature-based classification of driving stress using an SVM classifier. The features, derived from various physiological signals, were combined during the classification step. The classification performances were then compared when either skipping or utilizing this inter-subject normalization procedure in the preprocessing step. Additionally, these results were compared with those obtained using other commonly employed feature normalization approaches.

2. Materials and Methods

2.1. Physiological Data

The data used in this study belong to the database of J.A. Healey and colleagues [30], a multimodal dataset of synchronized physiological data and video recordings. This dataset, previously employed in stress assessment studies [35,36], specifically comprises data collected from subjects under different driving conditions. Three different stress states were elicited during the experimental session, each one associated with a specific driving condition: low stress (resting, no driving), high stress (city driving) and medium stress (highway driving). The low-stress state was collected in two 15 min sessions, at the beginning and at the end of the experiment, respectively (herein referred to as resting1 and resting2). In this condition, the subjects were sitting in a garage with their eyes closed, while the car was parked and idle. The medium-stress state was monitored while driving on the highway in two sessions (highway1 and highway2). The high-stress state was induced by driving in the congested streets of Boston (three driving phases named city1, city2 and city3). Depending on the traffic, acquisitions lasted from 50 to 90 min including the resting state.

The signals taken into account in this study were the ECG waveform, acquired through lead II configuration (sampling frequency of 496 Hz), and the respiration signal, recorded through an elastic Hall effect sensor monitoring the chest cavity expansion (acquired at 31 Hz). Consistent with the methodology of Lee’s study, only acquisitions with complete data, including ECG and respiratory data for each stress state and temporal division information for each driving state, were considered. The analysis thus comprises ten unique acquisitions.

2.2. Signal Preprocessing

The data preprocessing procedure prepared the raw physiological data for the successive feature extraction step. Following previous works [14], the preprocessing in this study consisted of filtering the raw physiological data and then partitioning the data based on the labelled stress state.

The raw ECG signal was filtered using a zero-phase bandpass Butterworth filter (0.1–115 Hz cutoff frequencies). For the raw respiratory signal, its mean value was first removed, and then the signal was filtered with a zero-phase bandpass Butterworth filter (0.01–5 Hz cutoff frequencies). Both the ECG and the respiratory signals were then resampled to 250 Hz, ensuring high data quality while limiting the computational load. The filtered signals were subsequently organized into a structure containing the three primary stress phases based on the reported labels: resting, highway driving, and city driving.

Subsequently, each signal was segmented into 20-s windows with a 5-s overlap, thus performing a data augmentation, as this increased the number of samples available for classification. The choice of a 20-s window length was made to maximize the number of windows while preserving temporal information within each window [37,38,39]. The overlap was limited to 5 s to contain the dependence between consecutive samples, which, in turn, supports the model’s ability to generalize during stress state prediction. According to the study by Farias da Silva and colleagues [40], a 5-s overlap was found to be the best compromise for the overlapping window data augmentation technique.
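The segmentation described above can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB code: it assumes that a 5-s overlap between 20-s windows corresponds to a 15-s step between window starts, and that incomplete trailing windows are discarded. The function names are hypothetical.

```python
def window_starts(n_samples, fs, window_s=20.0, overlap_s=5.0):
    """Start indices of fixed-length windows that overlap by `overlap_s` seconds."""
    win = int(round(window_s * fs))
    step = int(round((window_s - overlap_s) * fs))  # assumed 15-s hop
    if win <= 0 or step <= 0:
        raise ValueError("window must be positive and longer than the overlap")
    # Only complete windows are kept (assumption: trailing samples are dropped)
    return list(range(0, n_samples - win + 1, step))


def segment(signal, fs, window_s=20.0, overlap_s=5.0):
    """Split a sampled signal into overlapping windows."""
    win = int(round(window_s * fs))
    starts = window_starts(len(signal), fs, window_s, overlap_s)
    return [signal[s:s + win] for s in starts]


# Example: a 60-s recording sampled at 250 Hz
fs = 250
signal = [0.0] * (60 * fs)
windows = segment(signal, fs)
print(len(windows), len(windows[0]))
```

With these assumptions, a 60-s recording yields three complete windows (starting at 0, 15 and 30 s), each 5000 samples long.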

In the following analyses, two different sets of data originating from the same database were utilized to emphasize the effects of the inter-subject normalization procedure. The first dataset comprised raw signals subjected to the preprocessing procedure without any additional modifications, as described in this paragraph. The second dataset was generated by applying the inter-subject normalization process before the feature extraction step. Specifically, for each driver, the resting phases were considered for subject normalization. From now on, the term original signal will be used to identify the first dataset, while the second dataset will be identified by the term subject-normalized signal.

All the procedures, including the preprocessing steps, were conducted in the MATLAB environment, version R2024b [41].

2.3. Inter-Subject Normalization

This study employs an inter-subject normalization algorithm to account for inherent physiological variability among individuals during stress classification. During resting states, characterized by low stress, each driver exhibits unique physiological characteristics. For instance, a healthy individual’s resting heart rate typically ranges from 60 to 100 beats per minute (bpm), while their respiratory rate can vary from 12 to 20 breaths per minute [25,42]. This physiological diversity persists across all stress levels and can significantly impact classification accuracy.

Consider an example: a heart rate of 80 bpm might be recorded for one subject during rest, but the same heart rate could indicate moderate stress in another subject. This observation extends to other physiological conditions and stress levels. Without addressing this inter-subject variability, classification algorithms trained and tested with inconsistent data can lead to misclassification errors.

To mitigate this issue, this paper presents a novel resampling procedure applied to raw ECG and respiratory signals. This approach extends previous work by Gasparini and colleagues [34], offering a multidomain solution. The primary goal of this procedure is to reduce inter-subject variability in specific physiological features during the resting state. This reduction improves the ability to first identify changes in these features across different stress levels and, indirectly, extends the normalization to all other features extracted from the signals, as the signals themselves are transformed into a new normalized domain.

The normalization procedure involves selecting a specific feature from each physiological signal (ECG and respiration) to serve as the basis for normalization. Subsequently, a new sampling frequency is assigned to the original signal. This adjustment ensures consistency of the selected feature among all subjects within the resting phase. The inter-subject normalization procedure for both ECG and breath signals is further detailed in the following subsections.

2.3.1. ECG Signal

The inter-subject normalization procedure on the ECG signal was conducted considering the heart rate as the normalized feature. This ensured that, after normalization, all subjects exhibited the same average heart rate during the resting state.

Starting from the raw ECG signal with a sampling frequency $f_s$ equal to 250 Hz, the Pan-Tompkins algorithm [43] was applied to detect the R peaks during the two resting phases. Subsequently, the R-R interval (RRI) time series were extracted (Figure 1a), from which the heart rate series was derived as the inverse of the RRI time series. The mean heart rate $\overline{HR}_{rest}$ across the two resting phases was then calculated as a reference value using the following equation:

$$\overline{HR}_{rest} = \frac{60000}{N+M-2}\left[\sum_{i=1}^{N-1}\frac{1}{t^{(1)}_{i+1}-t^{(1)}_{i}} + \sum_{j=1}^{M-1}\frac{1}{t^{(2)}_{j+1}-t^{(2)}_{j}}\right] \qquad (1)$$

where $t^{(k)}_{i}$ is the time (expressed in milliseconds) corresponding to the $i$-th R peak of the $k$-th resting phase, and $N$ and $M$ represent the number of R-peaks detected in the first and second resting phases, respectively.

The inter-subject normalization procedure aimed to transform all subjects’ data into a subject-normalized domain. In this domain, each subject’s resting heart rate was standardized to a predefined value $HR_{target}$, which was set to 70 beats per minute (bpm). This normalization was achieved by resampling the raw ECG signals at a new sampling frequency $f_{norm}$, which varied across subjects. The determination of $f_{norm}$ was based on the ratio between the chosen resting frequency $HR_{target}$ and each subject’s mean resting heart rate $\overline{HR}_{rest}$, as follows:

$$f_{norm} = f_s \cdot \frac{HR_{target}}{\overline{HR}_{rest}} \qquad (2)$$

where $f_s$ is the original sampling frequency (250 Hz). The resampling frequency $f_{norm}$, determined from the resting phase heart rate, was then consistently applied to the ECG signals recorded during other stress phases (highway driving and city driving). This crucial step ensured that the temporal relationships and feature characteristics across different stress states were preserved following normalization.

Each subject could potentially exhibit a different heart rate during resting conditions. Therefore, following the described inter-subject normalization procedure, the raw ECG of each subject was resampled into a new domain characterized by a resampling frequency value determined by the ratio as described in (2). As an example, given a device that acquired the ECG signal with an original sampling frequency equal to 250 Hz:

If a driver exhibited a heart rate higher than 70 bpm at rest in the original signal, the value of the new resampling frequency was lower than 250 Hz.

If the driver presented a heart rate lower than 70 bpm at rest, the value of the new resampling frequency was higher than 250 Hz.

The inter-subject normalization procedure had two main consequences. First, it shifted the signals of each subject into a new common domain based on the chosen specific features (heart rate during resting in the case of ECG). In this new domain, each subject exhibited the same average value of the considered feature, while the relative intra-subject differences between resting and stressful conditions remained unaffected. Second, the length of the resampled signals differed from that of the original ones (Figure 1b). This was caused by the resampling procedure, which lengthened or shortened the signal when the resampling frequency was decreased or increased, respectively.
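The ECG normalization described in this subsection can be illustrated with a short numerical sketch. It assumes that the mean resting heart rate is the average of the instantaneous rates (60000 divided by each R-R interval in milliseconds), that the new sampling frequency equals the original one multiplied by the ratio between the target and the measured resting heart rate, and that the samples are retained and re-labelled with the new frequency. The function names and the example subject are hypothetical and are not taken from the study.

```python
def mean_resting_hr(phase1_peaks_ms, phase2_peaks_ms):
    """Mean heart rate (bpm) from R-peak times (ms) of the two resting phases."""
    rates = []
    for peaks in (phase1_peaks_ms, phase2_peaks_ms):
        # Instantaneous rate for each pair of consecutive R peaks
        rates += [60000.0 / (t1 - t0) for t0, t1 in zip(peaks, peaks[1:])]
    return sum(rates) / len(rates)


def normalized_sampling_frequency(fs_hz, resting_rate, target_rate):
    """Sampling frequency that maps the resting rate onto the target rate."""
    return fs_hz * target_rate / resting_rate


# Hypothetical subject with one beat every 750 ms at rest (80 bpm)
rest1 = [0, 750, 1500, 2250]
rest2 = [0, 750, 1500]
hr_rest = mean_resting_hr(rest1, rest2)
fs_new = normalized_sampling_frequency(250.0, hr_rest, 70.0)

# Same samples, new time axis: the interval between beats expands
rr_samples = 0.750 * 250.0                     # samples between two beats
hr_normalized = 60.0 / (rr_samples / fs_new)   # rate seen in the new domain
print(hr_rest, fs_new, round(hr_normalized, 6))
```

For this hypothetical subject the resting heart rate is 80 bpm, so the new sampling frequency is 218.75 Hz (lower than 250 Hz) and the resting rate in the normalized domain becomes 70 bpm, in line with the two cases listed above.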

2.3.2. Respiratory Signal

Analogous to the procedure carried out on the ECG signal, the inter-subject normalization procedure aimed to resample the original respiratory signal. This was done with a new frequency to ensure all subjects exhibited the same breath rate during the resting state. Therefore, the breath rate feature was chosen as the reference. Specifically, the resampling frequency $f^{resp}_{norm}$, defined by the following equation, was employed:

$$f^{resp}_{norm} = f_s \cdot \frac{BR_{target}}{\overline{BR}_{rest}} \qquad (3)$$

Here, $f_s$ is the original sampling frequency (250 Hz) and $BR_{target}$ is the selected resting breathing frequency (in this project, set to 14 breaths per minute). The value of $\overline{BR}_{rest}$ indicates the mean breath frequency during the two resting periods detected on the original signal and derived as the inverse of the breath-to-breath interval (BBI) series. To obtain this value, the inspiration peak positions were first determined on the original signal (Figure 1c). Subsequently, the time differences between consecutive peaks were calculated. The breath rate values, expressed in breaths per minute, were obtained by dividing 60 by each peak-to-peak interval expressed in seconds (i.e., the interval in milliseconds divided by 1000). The final $\overline{BR}_{rest}$ value was obtained by averaging all the breath rate values within the two resting phases. The value of $f^{resp}_{norm}$ derived in Equation (3) was then used to resample the respiratory signal across all stress phases, thereby preserving the temporal relationship between stress states for each considered feature.

Given that the original sampling frequency was consistent across different drivers, variations in the obtained resampling frequency across drivers were solely determined by the ratio between $BR_{target}$ and $\overline{BR}_{rest}$. Consequently, a breath rate on the original signal higher than 14 breaths per minute resulted in a resampling frequency lower than 250 Hz. Conversely, a breath rate lower than 14 breaths per minute resulted in a resampling frequency higher than 250 Hz. The same implications of the inter-subject normalization outlined in the previous subsection for the ECG traces also apply to the respiratory signals.
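As a worked instance of this relation, assume that the resampling frequency equals the original sampling frequency (250 Hz) multiplied by the ratio between the target breath rate (14 breaths per minute) and the measured mean resting breath rate. The two drivers below, with resting breath rates of 18 and 12 breaths per minute, are hypothetical and the subscripts are illustrative notation only:

```latex
\begin{align*}
f_{18} &= 250\,\mathrm{Hz}\times\frac{14}{18} \approx 194.4\,\mathrm{Hz} \quad (<250\,\mathrm{Hz}),\\
f_{12} &= 250\,\mathrm{Hz}\times\frac{14}{12} \approx 291.7\,\mathrm{Hz} \quad (>250\,\mathrm{Hz}).
\end{align*}
```

Under the same assumption, 20 s of the original recording would span about 25.7 s in the normalized domain for the first driver and about 17.1 s for the second, which is consistent with the lengthening and shortening of the signals noted for the ECG.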

2.4. Feature Extraction

Feature extraction was performed on 20-s windows of the signal. This methodology required the exclusive use of time-domain features, as a 20-s duration was considered inadequate for a comprehensive frequency-domain analysis. Accurate frequency-domain analysis typically requires signals of greater length and a higher density of data points to yield statistically significant results [44,45]. By segmenting the original signal into shorter windows, the number of resultant feature vectors available for the subsequent classification step was augmented, thereby contributing to a more generalizable algorithm. The 20-s window length was specifically chosen to balance the need for sufficient temporal information with the necessity to generate a substantial number of samples.

In the context of feature extraction in the time domain, it was essential to accurately identify key morphological points of interest in each signal. For instance, in the ECG signal, the identification of R peaks was crucial, while in the respiratory signal, the focus was on detecting respiratory peaks.

In this study, we employed a modified version of the Pan–Tompkins algorithm [46] to detect the positions of R peaks in the ECG signal from each window, resulting in the creation of the RR interval time series. From this, the obtained time-domain features were the average RR interval (meanRR), the standard deviation of the RR intervals (SDRR), and the root mean square of successive RR interval differences (RMSSD) [47]. In addition, the standard deviation of successive RR interval differences (SDSD), the number of successive RR interval pairs in each window that differ by more than 50 milliseconds (NN50), and the associated percentage (pNN50) were calculated [48].
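The six ECG indices listed above can be computed from an RR interval series as in the following sketch. It follows the standard textbook definitions of these heart rate variability indices; the use of the sample (n − 1) standard deviation and the expression of pNN50 as a percentage of successive differences are assumptions, and the function name and example values are illustrative.

```python
import math
import statistics


def hrv_time_features(rr_ms):
    """Time-domain HRV indices from RR intervals expressed in milliseconds."""
    # Differences between successive RR intervals
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    nn50 = sum(1 for d in diffs if abs(d) > 50)
    return {
        "mean_rr": statistics.mean(rr_ms),
        "sd_rr": statistics.stdev(rr_ms),          # sample SD (assumption)
        "rmssd": math.sqrt(sum(d * d for d in diffs) / len(diffs)),
        "sdsd": statistics.stdev(diffs),           # sample SD (assumption)
        "nn50": nn50,
        "pnn50": 100.0 * nn50 / len(diffs),
    }


# Illustrative RR series (ms), not taken from the dataset
rr = [800, 860, 790, 810, 900]
features = hrv_time_features(rr)
print(features)
```

For this illustrative series, three of the four successive differences exceed 50 ms, so NN50 is 3 and pNN50 is 75%.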

To extract the time-domain indices of the respiratory signal, the maximum and minimum points within each window were identified. The time difference between consecutive maximum points was considered as a respiratory act. Therefore, the respiratory rate was determined by calculating the average of the inverse of the time distances between the maxima. Inspiration and expiration times were investigated by computing the average rise time and fall time of the breathing signal within each respiratory act. Additionally, the average inspiration and expiration areas were extracted by considering the area under the inspiration and expiration phases in the respiratory signal, respectively.

It is worth noting that the difference in the length of raw signals and those subjected to the inter-subject normalization did not result in a difference in the length of the time series used in the feature extraction phase, for both the RRI and the BBI series. Additionally, any potential changes introduced by the inter-subject normalization process, particularly those associated with discrepancies in the time length between ECG signal samples and respiratory signal samples, did not affect stress classification. This is because the features were independently extracted from each physiological signal.

2.5. Data Organization and Imbalance Class Management

The feature dataset was organized in a table where each row represented a 20-s window, and each column corresponded to a specific feature. In total, 12 features were extracted from each of the 2979 windows for the original signals and each of the 2974 windows for the normalized signals. This slight difference in the number of samples was due to variations in the length of the normalized signals. These length changes resulted from different resampling frequencies applied to each subject, which in turn led to a different number of 20-s windows being obtainable from the complete signals.

An analysis of the number of windows associated with each stress label revealed a clear imbalance in class distribution. Specifically, for the time series derived from the original signals,

The resting condition had 1132 samples.

The city driving condition had 1275 samples.

The highway driving condition had 572 samples.

A similar sample distribution across the different classes was observed for the normalized signals. To address this class imbalance during classification and mitigate potential bias and inaccuracy that can arise when training a machine learning model on imbalanced data, the Synthetic Minority Oversampling Technique (SMOTE) [49] was employed. This algorithm generates synthetic samples for classes with fewer existing samples by interpolating between points in the feature space. Applying SMOTE ensured an equal representation of samples across all classes.
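The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified illustration of the technique, not the implementation used in the study: the function name, the neighbourhood size `k`, and the toy data are assumptions.

```python
import random


def smote_like_oversample(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Other minority samples, sorted by squared Euclidean distance to `base`
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Toy minority class with two features per sample
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_like_oversample(minority, n_new=6, k=2)
print(new_points)
```

If the classes are balanced up to the size of the largest one (an assumption about how SMOTE was configured), the counts reported above imply 1275 − 572 = 703 synthetic highway samples and 1275 − 1132 = 143 synthetic resting samples for the original signals.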

2.6. Stress State Classification

To compare our findings with prior research on the same dataset, we employed a Support Vector Machine (SVM) for classification, mirroring the methodology of a previous study [14]. Specifically, we utilized a Gaussian SVM, characterized by its Gaussian kernel function, a common choice for classification tasks [50,51]. The SVM was trained on 80% of the dataset, with the remaining 20% reserved for testing. This random partitioning strategy was employed to mitigate overfitting and ensure an unbiased evaluation of the model’s ability to generalize and accurately predict stress in unseen data.

Additionally, to account for potential variations in model performance due to different training and testing splits, we repeated the training and testing phases 100 times. This repetition minimizes the stochastic behavior of the process, and the final classification performances were obtained by averaging the values across these 100 iterations.
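The repeated random partitioning can be sketched as below. The scoring callback is a deliberately trivial majority-class placeholder standing in for the Gaussian SVM, which is not reproduced here; the function names, the seed, and the toy labels are all hypothetical.

```python
import random
import statistics


def repeated_holdout(samples, labels, train_and_score,
                     n_repeats=100, test_fraction=0.2, seed=0):
    """Average a score over repeated random train/test partitions."""
    rng = random.Random(seed)
    indices = list(range(len(samples)))
    n_test = int(round(test_fraction * len(samples)))
    scores = []
    for _ in range(n_repeats):
        rng.shuffle(indices)  # new random 80/20 partition at each iteration
        test_idx, train_idx = indices[:n_test], indices[n_test:]
        train = [(samples[i], labels[i]) for i in train_idx]
        test = [(samples[i], labels[i]) for i in test_idx]
        scores.append(train_and_score(train, test))
    return statistics.mean(scores), statistics.stdev(scores)


def majority_class_score(train, test):
    """Placeholder model: always predicts the most frequent training label."""
    train_labels = [y for _, y in train]
    guess = max(set(train_labels), key=train_labels.count)
    return sum(1 for _, y in test if y == guess) / len(test)


samples = list(range(50))
labels = ["rest"] * 30 + ["city"] * 20
mean_acc, sd_acc = repeated_holdout(samples, labels, majority_class_score)
print(round(mean_acc, 3), round(sd_acc, 3))
```

Replacing `majority_class_score` with a function that trains and evaluates an actual classifier would give the mean and standard deviation of its performance across the 100 partitions.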

For each set of results, a global confusion matrix was generated. This matrix illustrates the relationship between predicted and true labels, from which key performance metrics—including accuracy, sensitivity, specificity, precision, and F-measure—were derived to comprehensively evaluate the model’s performance.
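The metrics named above can be derived from a multi-class confusion matrix in a one-vs-rest fashion, as in the following sketch. The matrix values are invented for illustration and are not results from this study; the function name is hypothetical.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    metrics = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                          # true c, predicted other
        fp = sum(cm[r][c] for r in range(n)) - tp     # other, predicted c
        tn = total - tp - fn - fp
        precision = tp / (tp + fp)
        sensitivity = tp / (tp + fn)
        specificity = tn / (tn + fp)
        f_measure = 2 * precision * sensitivity / (precision + sensitivity)
        metrics.append({
            "precision": precision,
            "sensitivity": sensitivity,
            "specificity": specificity,
            "f_measure": f_measure,
        })
    accuracy = sum(cm[c][c] for c in range(n)) / total
    return accuracy, metrics


# Illustrative 3-class matrix (rows: true class, columns: predicted class)
cm = [
    [90, 5, 5],
    [10, 60, 30],
    [5, 25, 70],
]
accuracy, metrics = per_class_metrics(cm)
print(round(accuracy, 3), metrics[0])
```

The sketch does not guard against classes that are never predicted, for which precision would be undefined.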

3. Inter-Subject Normalization Validation

The inter-subject normalization procedure was evaluated by comparing features extracted from subject-normalized signals with those from original signals. This evaluation also included comparisons to features processed using two common machine learning normalization techniques: standardization [52] and scaling [53].

Standardization

In the standardization procedure, each feature (derived from a window composed of a fixed number of seconds of the original signal) was normalized as follows:

$$x_{std} = \frac{x - \mu_x}{\sigma_x}$$

Here, $x$ indicates the original feature array, $\mu_x$ the mean of $x$, and $\sigma_x$ the standard deviation of $x$. This normalization resulted in a feature array with an average value equal to zero and a standard deviation equal to one.

Scaling

The scaling procedure remapped all features to the same scale, ensuring comparable ranges across features and subjects. For a single feature, the scaling procedure was represented as

$$x_{scaled} = \frac{x - \min(x)}{\max(x) - \min(x)}$$

Here, $x$ is the original features array and $x_{scaled}$ indicates the array with the considered features rescaled to the range [0, 1].
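The two feature-level transformations can be sketched as follows, assuming the usual z-score and min-max definitions. The use of the population standard deviation, the function names, and the example values are assumptions.

```python
import statistics


def standardize(x):
    """Z-score: subtract the mean and divide by the standard deviation."""
    mu = statistics.mean(x)
    sigma = statistics.pstdev(x)  # population SD (assumption)
    return [(v - mu) / sigma for v in x]


def min_max_scale(x):
    """Rescale the values of a feature to the range [0, 1]."""
    lo, hi = min(x), max(x)
    return [(v - lo) / (hi - lo) for v in x]


# Illustrative feature array (e.g., one feature across four windows)
feature = [60.0, 70.0, 80.0, 90.0]
z = standardize(feature)
scaled = min_max_scale(feature)
print(z, scaled)
```

Both functions act on one feature at a time, so the relationship between different features of the same window is not taken into account by either transformation.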

Inter-Subject Normalization

Unlike standardization and scaling, which directly operated on the features, inter-subject normalization modified the original signal. Consequently, features derived from inter-subject-normalized signals retained their original relationships. In contrast, standardization and scaling procedures acted independently on each feature, potentially leading to a loss of inter-feature information.

To evaluate the performance of each normalization procedure, the Chi-square goodness-of-fit test was applied to assess the normality of the distributions of performance metrics (i.e., precision, sensitivity, and accuracy). These metrics were obtained from 100 iterations of training and testing an SVM model. The original data served as the baseline for comparison. Specifically, the test was conducted on performance distributions derived from the original features, comparing them with distributions from subject-normalized features, standardized features, and scaled features.

To ensure a robust statistical comparison among the four groups, the ANOVA test was employed. Additionally, the Bonferroni method was applied to account for multiple comparisons in the statistical analysis. A two-sample parametric unpaired Student’s t-test was performed to detect significant differences between groups. This test specifically aimed to compare the performances achieved using the three different normalization procedures with the performances derived from the original data. The null hypothesis for all conditions was that there were no significant differences between the original features and the features obtained after inter-subject normalization, standardization, or scaling procedures.

4. Results

Following the inter-subject normalization procedure, both the electrocardiogram (ECG) and respiratory signals were resampled to a new domain. This resampling was performed using the individual’s resting heart rate (HR) and resting breath rate as normalization features, respectively.

Table 1 details the heart rates from the original signals and their associated resampling frequencies for each subject, while Table 2 provides the same information for breath rates. It is important to note that a heart rate exceeding 70 bpm (or a breath rate greater than 14 breaths per minute) resulted in a resampling frequency lower than 250 Hz, and vice versa. This adaptive resampling ensured that the signals were transformed to a common domain relevant to each subject’s physiological baseline.

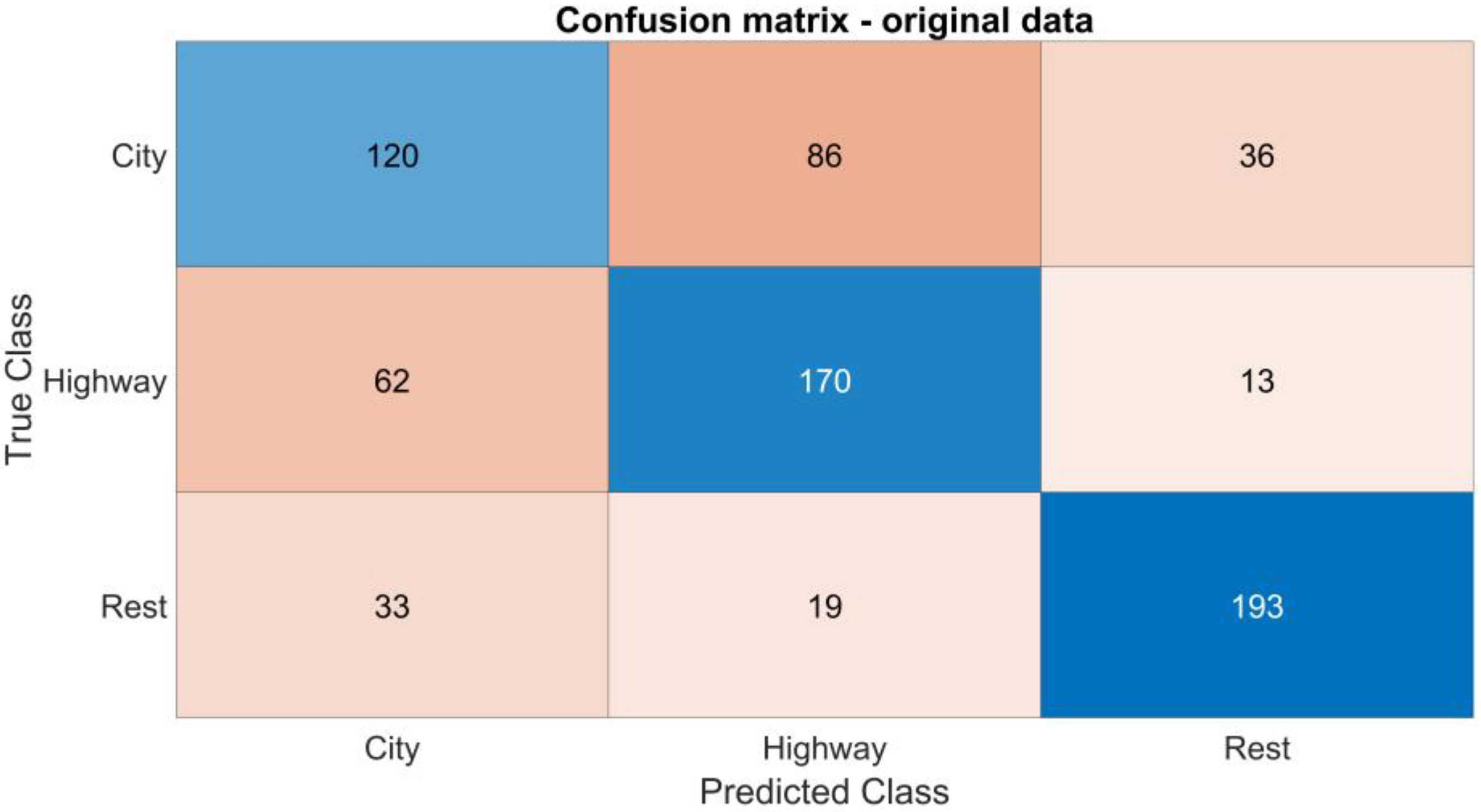

Starting from the inter-subject-normalized signals, and following the feature extraction and classification procedures, the developed Support Vector Machine (SVM) model was rigorously assessed. A confusion matrix was generated for each of the four experimental conditions examined in this study:

Original signals;

Subject-normalized signals;

Feature transformations through standardization;

Feature transformations using scaling procedures.

An example of a confusion matrix for a single iteration is illustrated in Figure 2. This matrix was derived by training the SVM model on the training dataset and subsequently testing it on the unseen test data.

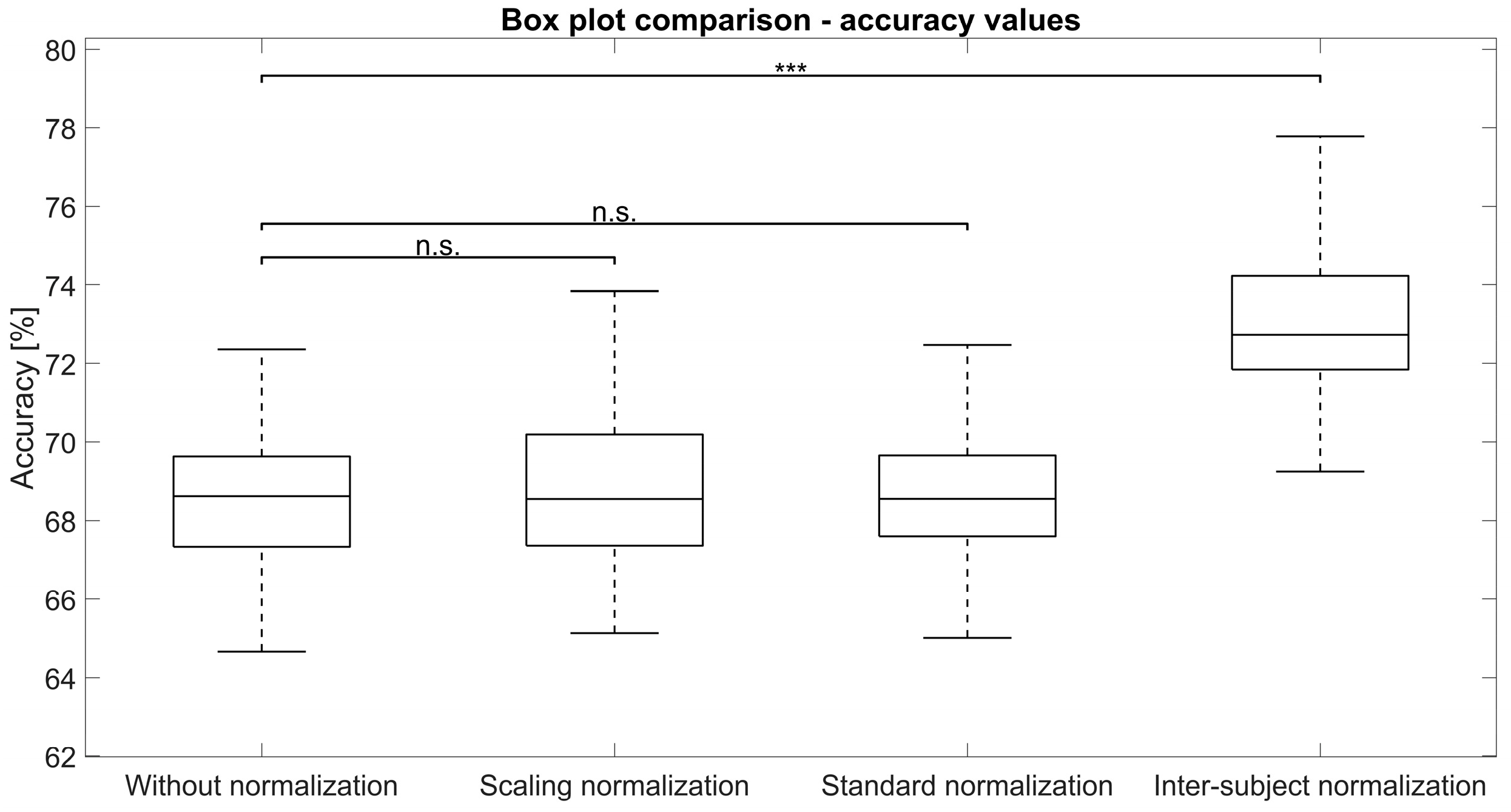

The performance metrics, averaged across 100 iterations for all four conditions, are presented in Table 3 in terms of mean and standard deviation. Accuracy results are also visually represented in Figure 3. In these figures, ‘n.s.’ as a superscript denotes no statistical significance, while three asterisks (***) indicate statistical significance (p < 0.001) when comparing the original data to the considered group.

Our analysis revealed several key findings regarding the impact of different preprocessing techniques on model performance. When using the original, unprocessed data, the model consistently achieved precision, sensitivity, and accuracy of 68%, with specificity around 84%. Both standardization and scaling procedures yielded very similar results to the original data, showing no substantial changes in precision, sensitivity, specificity, or accuracy. This suggests that these linear transformations alone did not significantly enhance the model’s ability to discriminate between classes in this context. In contrast, inter-subject normalization demonstrated an improvement in model performance. Precision, sensitivity, and accuracy all increased to 73%, while specificity also showed a slight improvement, reaching 86%. The statistically significant difference observed with subject-normalized data, as indicated by the three asterisks in Figure 3, further underscores the effectiveness of this method. These improvements suggest that inter-subject normalization holds promise for enhancing the overall performance of the model in similar physiological signal analysis tasks.

5. Discussion

This study investigated the feasibility of an inter-subject normalization procedure on electrocardiographic (ECG) and respiratory signals to account for physiological variability and thus improve classification performance. We selected ECG and respiratory data due to their established relevance in stress research, as evidenced by previous studies [14,38,54]. These signals provide valuable insights into an individual’s physiological response to stress.

We performed a feature-based multilevel stress classification using a Support Vector Machine (SVM) classifier. This classification was conducted after applying inter-subject normalization and two widely used normalization procedures: standardization and scaling. Subsequently, we compared the classification performances derived from these procedures with those obtained from the original, unnormalized data.

Regarding overall classification performance, the confusion matrices (Figure 2) showed that discriminating between high stress (city driving) and medium stress (highway driving) is more difficult than identifying resting conditions. This may be because the physiological responses to city and highway driving differ from each other less than either differs from physiological activity at rest. A possible way to improve this classification is to incorporate features from other physiological signals to better capture the changes in physiological behavior associated with these stress conditions. However, the main aim of this work is to present the contribution of the novel inter-subject normalization procedure and to compare its performance with that of other normalization procedures. The extracted features and the classification methodology were therefore kept identical across all conditions. This allows the study to focus fully on the contribution of the different normalization methodologies on the same dataset, as demonstrated in Table 3.

When using the original ECG and respiratory signals, our classification model consistently achieved a precision, sensitivity, and accuracy of 68%, while specificity was approximately 84%. Applying standardization and scaling techniques yielded comparable performance values (Table 3), suggesting these methods do not significantly alter the model’s performance compared to using raw data. This outcome aligns with expectations, as both standardization and scaling primarily adjust data distribution without introducing substantial changes to the underlying information.
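For reference, the two amplitude-domain transformations compared here can be sketched as follows. This is a minimal illustration that assumes "scaling" means min–max rescaling to [0, 1] and that each feature is transformed over the whole dataset; neither detail is spelled out in this section. The heart rate values are hypothetical. Both functions are affine maps applied to one feature at a time, so they preserve the ordering and relative spacing of the values.

```python
from statistics import mean, pstdev


def standardize(values):
    """Z-score: subtract the mean and divide by the (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mu) / sigma for v in values]


def min_max_scale(values):
    """Min-max scaling: map the smallest value to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical mean heart rate feature (bpm) for four segments.
heart_rate = [62.0, 70.0, 78.0, 90.0]
z_scores = standardize(heart_rate)
scaled = min_max_scale(heart_rate)

print([round(v, 3) for v in z_scores])
print([round(v, 3) for v in scaled])
```

Because neither transformation uses any subject-specific information, differences between individuals at rest remain in the transformed features.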

The inter-subject normalization procedure successfully reduced inter-subject variability in physiological features during the resting state, effectively aligning subjects to a common physiological domain. This normalization enhances the classification of stress levels by ensuring consistency in physiological data across resting and stressful conditions for different acquisitions. By minimizing the inherent physiological differences present in the original data, this approach allows the classification model to focus specifically on the true, stress-induced changes. While this strong mitigation of differences in the resting state could introduce a risk of misinterpreting the physiological data, the sole goal of this procedure is to enhance stress assessment. It achieves this by providing a common reference point from which to highlight any differences observed under stressful conditions. The results demonstrated the effectiveness of this approach, showing significant improvements over the original data, standardization, and scaling procedures. Precision, sensitivity, and accuracy increased from 68% to 73%, and specificity improved by approximately two percentage points. The enhancement in precision and sensitivity is particularly noteworthy, as these metrics directly reflect the model’s ability to accurately identify instances of stress.

Traditional feature normalization methods, such as standardization (z-score) [52], min–max normalization [53], and decimal scaling [55], are widely used in processing physiological signals. These approaches typically modify each feature independently by operating solely on the amplitude domain of the data (e.g., ECG or respiratory signal values) and ignoring the temporal relationships. While these methods often improve results compared to using the raw signal, their isolated treatment of features can disrupt the intrinsic consistency between features, since each feature is normalized to a different degree, potentially impacting the subsequent classification step. In contrast, the proposed inter-subject normalization procedure operates directly on the time axis of the original signals rather than on their amplitude scale. This involves placing the signals into a transformed space defined by a distinct sampling frequency. As a result, features subsequently derived from the inter-subject-normalized signals are indirectly normalized in time. Crucially, this normalization depends only on the specific physiological metrics used in the process (e.g., heart rate for ECG and breath rate for respiratory signals), thereby establishing a strong interconnection between all extracted features. This approach moves beyond simple, isolated feature adjustments, such as merely shifting a heart rate baseline to a fixed value like 70 bpm, which leave the natural, intrinsic relationships with other features unaccounted for or disrupted. Furthermore, since the proposed procedure primarily acts on the time axis, a common amplitude-domain normalization technique like z-score standardization could subsequently be applied to the time-normalized data. This two-step normalization would result in features being normalized in both the time axis (via the proposed inter-subject method) and the amplitude axis (via z-score), which could further enhance overall classification performance.
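The idea of acting on the time axis can be illustrated with a small sketch. The exact rescaling rule of the proposed procedure is defined elsewhere in the paper and is not reproduced here; the code below is only one plausible reading, under the assumption that each subject's signal is stretched or compressed in time by the ratio of the subject's resting rate to a common reference rate. This is equivalent to reinterpreting the same samples at a new sampling frequency and interpolating back onto the original grid. The function name, the linear interpolation, and the numerical values are all hypothetical.

```python
def rescale_time_axis(signal, resting_rate, reference_rate):
    """Resample `signal` so that events occurring at `resting_rate`
    (e.g., beats per minute) occur at `reference_rate` instead.

    Equivalent to treating the samples as acquired at
    fs * reference_rate / resting_rate and linearly interpolating
    back onto the original sampling grid. Illustrative assumption only.
    """
    if resting_rate <= 0 or reference_rate <= 0:
        raise ValueError("rates must be positive")
    if not signal:
        return []
    # Factor by which every time interval is stretched (>1) or compressed (<1).
    stretch = resting_rate / reference_rate
    last = len(signal) - 1
    n_out = int(last * stretch) + 1
    out = []
    for i in range(n_out):
        pos = min(i / stretch, last)   # fractional index into the original signal
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(signal[lo] * (1.0 - frac) + signal[hi] * frac)
    return out


# Toy pulse train with one "beat" every 6 samples.
pulses = [1.0 if k % 6 == 0 else 0.0 for k in range(13)]

# Hypothetical subject resting at 90 bpm mapped to a 60 bpm reference:
# every interval is stretched by 1.5, so beats fall every 9 samples.
normalized = rescale_time_axis(pulses, resting_rate=90.0, reference_rate=60.0)
beat_positions = [i for i, v in enumerate(normalized) if v == 1.0]
print(beat_positions)  # [0, 9, 18]
```

Because every inter-beat interval is multiplied by the same factor, any time-domain feature computed afterwards is rescaled consistently, which is the sense in which the features stay interconnected. An amplitude-domain step such as z-score standardization could then be applied to the features extracted from the rescaled signal.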

A critical aspect of implementing this technique is identifying the most appropriate feature for defining the inter-subject normalization rescaling. In this study, we used the mean heart rate and the mean breath rate during resting phases for the ECG and respiratory signals, respectively, given their distinct physiological meanings.

Previous studies have demonstrated the use of physiological signals for stress assessment with various experimental protocols. For example, Smets and colleagues [38] achieved approximately 82% classification accuracy using an SVM in a binary stress classification problem by combining ECG and respiratory features. Similarly, Han et al. [54] discriminated among three stress states with 84% accuracy using similar features. These studies often incorporated both time- and frequency-domain features, which typically requires longer signal windows to ensure adequate frequency resolution. More recent studies on the specific dataset we consider have employed deep learning techniques, reaching an overall accuracy of about 90%. However, these promising results were achieved without addressing inter-subject variability. The aim of our study is to validate the effectiveness of an inter-subject normalization procedure and to conduct a direct comparison with other widely used normalization techniques. By adopting the exact same data processing pipeline and changing only the data used for classification, we ensure a fair and rigorous comparison. Our work uses 20-s segments and the inter-subject normalization procedure to achieve efficient classification with strong performance, even without the inclusion of frequency-domain features. This is a significant advantage, as it suggests our framework could be employed for real-time stress classification based on ultra-short-term recordings. Our approach using inter-subject-normalized data could potentially be combined with deep learning techniques, providing the algorithm with more robust input than data that ignores inter-subject variability. Future studies should investigate this combination in depth.
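As a simple illustration of working with 20-s segments, the sketch below splits a signal into consecutive windows of that length. It assumes non-overlapping windows and drops any incomplete trailing window; the section does not specify either choice, and the sampling frequency used in the example is hypothetical.

```python
def segment_signal(signal, fs, window_s=20.0):
    """Split a 1-D signal into consecutive, non-overlapping windows.

    fs is the sampling frequency in Hz and window_s the window length in
    seconds. Samples left over after the last full window are discarded.
    """
    if fs <= 0 or window_s <= 0:
        raise ValueError("fs and window_s must be positive")
    win = int(round(fs * window_s))
    if win == 0:
        raise ValueError("window shorter than one sample")
    n_windows = len(signal) // win
    return [signal[i * win:(i + 1) * win] for i in range(n_windows)]


# 65 s of placeholder data at a hypothetical 10 Hz sampling frequency.
recording = [0.0] * 650
segments = segment_signal(recording, fs=10.0)
print(len(segments), len(segments[0]))  # 3 200
```

Each window would then be passed to feature extraction independently, which is what makes short, fixed-length segments attractive for near-real-time use.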

Further investigation is required to thoroughly understand the effect of inter-subject normalization on the frequency domain and to explore the potential contribution of frequency-domain features in the stress classification step. While the framework shows promise for short recordings, considering longer time windows for analysis would support a more comprehensive understanding. Additionally, it would be valuable to investigate the incorporation of additional physiological data suitable for the inter-subject normalization approach, such as electrodermal activity (EDA), electromyography (EMG), or advanced features from other wearable sensors like accelerometers and skin temperature sensors. Combining these data types has the potential to enhance stress classification accuracy and offer a more comprehensive understanding of an individual’s stress response.

6. Conclusions

This study investigated the impact of an inter-subject normalization procedure on stress classification. It used electrocardiogram (ECG) and respiratory signals acquired from drivers across varying stress levels. Employing a feature-driven methodology, the analysis used a total of approximately 3000 samples.

The findings demonstrate that this novel normalization procedure significantly improves stress classification compared to traditional standardization and scaling methods. The inter-subject normalization approach effectively reduced variability between individuals, leading to consistent results in both resting and stressful states. This enhancement was reflected in improved precision, sensitivity, and accuracy, indicating a greater ability to correctly identify stress. A key advantage of inter-subject normalization is its direct operation on the original signals, fostering strong interconnectivity among features. This differs from other methods that modify features independently. This novel procedure therefore establishes a deeper link between features, as they originate from a shared signal domain.

Future research should explore incorporating additional physiological data, extracting advanced features from wearable devices, and analyzing frequency-domain features. Such efforts could further enhance stress classification accuracy and provide a more complete understanding of stress responses. Given these results, this innovative inter-subject normalization technique shows strong potential for real-time stress classification in diverse applications like healthcare and stress management.