1. Introduction

Code-introspection methods, such as software debugging, play a critical role in analyzing and understanding program and system behavior. They enable the detection, diagnosis, and mitigation of issues, e.g., security-related problems, and facilitate the measurement of execution performance. Virtual Machine Introspection (VMI) encompasses techniques for monitoring, analyzing, and manipulating the internal guest state of a VM from an external environment such as the host system or another VM. Introspection tools implement these techniques by combining semantic interpretation with the values of the virtual CPU (vCPU) registers and the data in the main memory of the monitored VM; these values are read and, if necessary, modified.

A vCPU is allocated by the hypervisor to a VM; it represents scheduled access to a physical core rather than a physical processor itself. A CPU refers to the physical processor chip, which may contain multiple cores, each capable of executing instructions independently. Thus, a vCPU runs on a core of a CPU, but its timing and performance can vary depending on the hypervisor and other VM activity.

Garfinkel and Rosenblum first introduced VMI as a concept in 2003 [1]. Because VMI allows administrators and defenders to detect and analyze malicious activities within VMs, the security and forensics fields have made practical use of it [1]. Hence, there is significant interest in applications within the fast-growing cloud computing environment [2]. Dynamic malware-analysis techniques, whether manual or highly automated in sandboxes, also make use of VMI-based tracing mechanisms [3,4].

VMI-based intrusion-detection or malware-analysis systems have several advantages over kernel-mode or user-mode methods that run on the monitored system itself (inside the same VM). Two important aspects are (i) isolation (separation of the sensor from the analysis target) and (ii) transparency (invisibility of the sensor to the analysis target). Virtualization ensures strong isolation between the monitored guest software and the sensor software. This separation makes it significantly more difficult for an attacker to detect and manipulate the monitoring software [5], allowing for more resilient observation. However, a key challenge for VMI applications is bridging the semantic gap [6], i.e., accurately interpreting the guest software’s semantics based on guest memory. Typically, this requires an in-depth understanding of OS and application data structures [7], which may be derived from debugging symbols or through complex reverse-engineering efforts, particularly when the guest software is closed-source. Once this challenge is addressed, VMI monitoring enables comprehensive event tracing and memory analysis, providing critical information on system and application behavior.

Modern VMI solutions primarily perform inspections in response to VM events (e.g., page faults, Control Register 3 (CR3) writes, or breakpoint interrupts). Dangl et al. [8] refer to this reactive approach as active (or synchronous) VMI, whereas passive VMI tasks are scheduled asynchronously by the external monitoring software. A key method in active VMI is placing breakpoints at particular locations in the guest code. Whenever the guest triggers one of these breakpoints, the VMI software can analyze the guest state in relation to the intercepted execution (e.g., it can read the function arguments of an invocation).

Beyond placing breakpoints, more invasive manipulations of the guest state can be useful. For example, the VMI application DRAKVUF [9] uses function-call injection to interact with the guest Operating System (OS) Application Programming Interfaces (APIs), either to transfer data between the target VM and external environments or to start processes (an approach called process injection), which is valuable functionality in malware-analysis sandboxes.

In most use cases, runtime performance is critical. For sandboxes, minimizing the overhead of VMI sensor interceptions is advantageous because the additional cycles spent while the vCPUs are paused extend the real-world execution time without affecting the effective execution time within the VM. Longer execution times reduce analysis throughput in sandbox clusters and provide angles for timing-based evasion checks [10], so they should be avoided. Similarly, for VMI on endpoint VMs with user interaction, low-latency VMI interceptions are very important because applications with soft real-time requirements do not tolerate long interruptions. This is especially true for graphical user interface activity in VMs operated by human users. Customers of VMI-monitored VMs expect their systems to be responsive.

The novelty of this study for VMI researchers and developers is that it is the first to compare common methods for hyper-breakpoint handling and hiding in VMI solutions and to provide comparable measurements of their runtime overhead. To this end, we address the following research question: How do the existing breakpoint implementations compare in terms of runtime performance? We measured the execution times of the various breakpoint implementations under the XEN hypervisor on different Intel Core processors; other virtualization or hardware platforms were not considered. On closer examination, this seemingly simple question has many facets, which we discuss in the following sections. In summary, we make the following contributions.

We provide reproducible breakpoint-benchmark results for 20 devices with Intel Core processors ranging from the fourth to the thirteenth generation.

We provide our benchmark software stack to make our measurements reproducible and to enable benchmarking of other custom systems. For that purpose, we prepared a portable disk image containing recent releases of Ubuntu and XEN with QEMU, our modified versions of DRAKVUF and SmartVMI, our benchmark tool bpbench inside a Windows VM, and tools for VM snapshot management.

The rest of this paper is structured as follows:

Section 2 provides a thorough explanation of the breakpoint-handling implementations.

Section 3 highlights relevant existing work.

Section 4 first discusses all the aspects to consider when trying to answer the research question and eventually introduces our measurement study.

Section 5 gives additional specifications regarding the software and configurations of the measurement setup.

Section 6 gives an overview of the hardware platforms used.

Section 7 presents and interprets the measurement results.

Section 8 concludes the paper by summarizing the work, discussing the results, and providing a view on future work.

2. Background

Virtual Machine Introspection (VMI) is a technique that allows the state and behavior of a virtual machine (VM) to be monitored and analyzed from the outside, i.e., from the host environment (type 2 HV), a control VM (type 1 HV), or a special monitoring VM, without installing agent software within the VM itself. It is often used for security monitoring in malware sandboxes or for malware analysis. In the scientific community, there are a few generally useful open-source applications for security-related monitoring of VM behavior on different virtualization platforms. We identified two of them, both designed for use as malware-sandbox sensors, as particularly relevant. The first is DRAKVUF [11], designed by Tamas Lengyel as a plugin-based framework for writing VMI applications; it supports the XEN-QEMU virtualization platform and includes many built-in monitoring features for Linux and Windows guest software [12]. The second is SmartVMI [13], a plugin-based VMI software for Windows guests, which was developed by GDATA CyberDefense AG as a component of the SmartVMI research project [14]. SmartVMI supports two virtualization platforms: XEN-QEMU (type 1 HV) and KVM-QEMU (type 2 HV). The latter requires KVMI, a VMI patch set for Linux KVM.

2.1. VMI Software Architecture

In order to better understand the technical workflows involved in breakpoint handling, as described in the following sections, we will first clarify a few terms and provide an overview of the software components involved, along with their tasks and locations inside a VMI software architecture.

The architecture of VMI software can typically be divided into several layers or components.

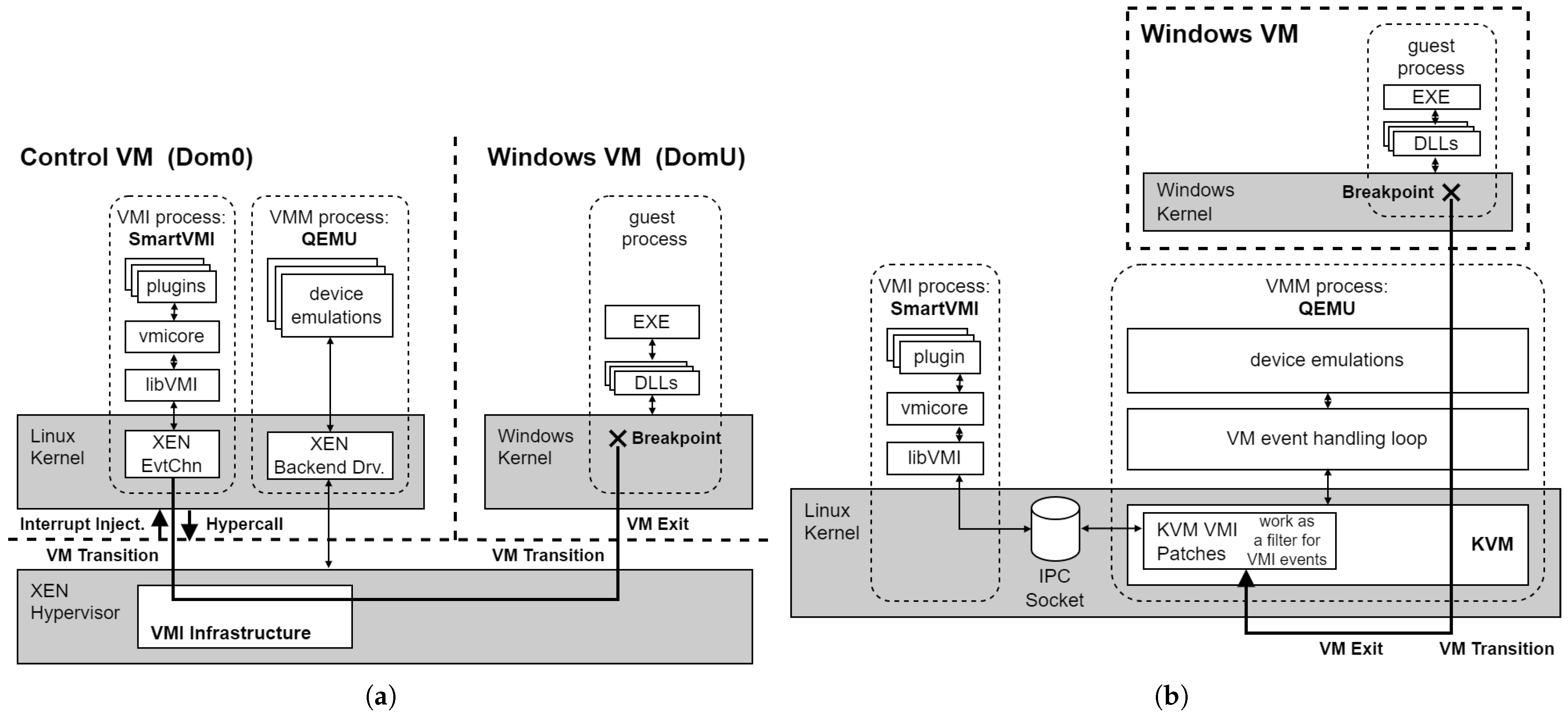

Figure 1 provides a visual overview. The foundation is the virtualization platform, which consists of a hypervisor (e.g., XEN, KVM) and a Virtual Machine Monitor (VMM) (e.g., QEMU, Cloud Hypervisor). The virtualization platform implements functions for accessing the vCPU registers and the guest physical memory of the monitored VMs, as well as an event system with which traps can be configured and notifications of VM exits can be delivered. These functionalities can be referred to as VMI infrastructure; the virtualization platform provides them via interfaces to the VMI application software. For XEN (type 1 HV), the corresponding mechanisms are located in the hypervisor itself and are accessed via hypercalls by VMI software running within a VM. For KVM (type 2 HV), the VMI infrastructure functions (KVMI) are also located in the hypervisor, i.e., in the KVM driver in the Linux kernel of the host system. The KVM driver is used by the VMM process of the VM (the QEMU process). The VMI application software communicates with the QEMU process, or rather with the KVM driver in the kernel part of the QEMU process, via a UNIX domain socket. Implementing VMI infrastructure functions within the VMM software would theoretically be conceivable, but no such implementations are known.

The VMI application software builds on the VMI infrastructure. It is the core of a VMI software stack and implements the sensor logic that interprets the guest software state (bridging the semantic gap) to monitor the behavior of guest systems. In the open-source VMI ecosystem, the LibVMI library [15] is used by many VMI applications. It implements VMI access procedures and trap handling.

LibVMI forms an intermediate layer between the VMI application or sensor logic and the VMI infrastructure of the hypervisor. It generalizes VMI access to provide the VMI software with a uniform API independent of the underlying hypervisor. Beyond that, LibVMI also plays a central role in dealing with the semantic gap. It translates guest virtual addresses (GVAs) into guest physical addresses (GPAs), which allows the VMI software to work with virtual addresses from the address spaces of the guest processes. To do this, LibVMI reads and interprets the guest page tables and performs the page-table walk in software. The mechanism is made efficient by software-side caching.
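The software page-table walk can be illustrated with a minimal Python sketch. It assumes x86-64 four-level paging with 4 KiB pages only (no large pages, no 5-level paging), models guest physical memory as a dictionary of 64-bit entries, and caches translations per (CR3, virtual page). The class and method names are hypothetical and do not reflect LibVMI's actual API.

```python
PRESENT = 0x1
ADDR_MASK = 0x000F_FFFF_FFFF_F000  # bits 12..51 of a paging-structure entry
PAGE_OFFSET_MASK = 0xFFF


class GuestMemory:
    """Toy guest physical memory holding 64-bit paging entries."""

    def __init__(self):
        self.qwords = {}

    def read_u64(self, gpa):
        return self.qwords.get(gpa, 0)

    def write_u64(self, gpa, value):
        self.qwords[gpa] = value


class Translator:
    """Hypothetical GVA to GPA translator with a software-side cache."""

    def __init__(self, memory):
        self.memory = memory
        self.cache = {}  # (page-table root, virtual page) -> guest frame address

    def gva_to_gpa(self, cr3, gva):
        key = (cr3 & ADDR_MASK, gva & ~PAGE_OFFSET_MASK)
        if key not in self.cache:
            self.cache[key] = self._walk(cr3, gva)
        return self.cache[key] | (gva & PAGE_OFFSET_MASK)

    def _walk(self, cr3, gva):
        table = cr3 & ADDR_MASK
        # PML4, PDPT, PD, PT: each level is indexed by 9 bits of the GVA
        for shift in (39, 30, 21, 12):
            index = (gva >> shift) & 0x1FF
            entry = self.memory.read_u64(table + index * 8)
            if not entry & PRESENT:
                raise LookupError(f"GVA {gva:#x} is not mapped")
            table = entry & ADDR_MASK
        return table  # guest physical address of the 4 KiB frame


# Example: map one virtual page through four tables rooted at CR3 = 0x1000
mem = GuestMemory()
gva = (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 0x678
mem.write_u64(0x1000 + 1 * 8, 0x2000 | PRESENT)
mem.write_u64(0x2000 + 2 * 8, 0x3000 | PRESENT)
mem.write_u64(0x3000 + 3 * 8, 0x4000 | PRESENT)
mem.write_u64(0x4000 + 4 * 8, 0x5000 | PRESENT)

translator = Translator(mem)
first = translator.gva_to_gpa(0x1000, gva)       # full walk
second = translator.gva_to_gpa(0x1000, gva + 8)  # served from the cache
```

A real implementation additionally has to invalidate cached entries when the guest modifies its page tables and must handle large pages and permission bits.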

2.2. Hyper-Breakpoint Handling

Modern VMI-based monitoring methods use active VMI as an instrumentation method, which enables event-based monitoring of the guest software. In order for VMI software to respond to events in the guest software (active VMI), traps must be installed. One of the most important types of traps is code breakpoints realized by breakpoint instructions (INT3 on x86) patched into the guest code.

The VMI software uses the hypervisor to configure the vCPUs of the VM to be monitored via Virtual Machine Control Structure (VMCS) structs so that code breakpoints lead to a VM exit and handling by the hypervisor and the VMI software. The hypervisor checks whether the breakpoint is a hyper breakpoint installed by the VMI software or a debug breakpoint set by guest software within the VM. In the former case, the VMI software is notified to handle the breakpoint. In the latter case, the breakpoint is injected as an interrupt into the VM during the VM resume process so that the guest software that installed the breakpoint can handle it.
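The dispatch decision described above can be sketched as follows. This is a simplified Python model, assuming that hyper breakpoints are identified by their guest physical address and that re-injection is represented by a pending exception vector; the names `Vcpu`, `BreakpointDispatcher`, and `handle_int3_exit` are hypothetical and not part of XEN, KVM, or LibVMI.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

BP_VECTOR = 3  # x86 #BP exception vector raised by INT3


@dataclass
class Vcpu:
    rip: int
    pending_exception: Optional[int] = None  # injected on the next VM entry


@dataclass
class BreakpointDispatcher:
    hyper_breakpoints: Set[int] = field(default_factory=set)  # GPAs patched by VMI
    vmi_notifications: List[int] = field(default_factory=list)

    def handle_int3_exit(self, vcpu: Vcpu, gpa: int) -> str:
        if gpa in self.hyper_breakpoints:
            # Hyper breakpoint: hand the event to the VMI software
            self.vmi_notifications.append(gpa)
            return "vmi"
        # Guest's own debug breakpoint: re-inject #BP during VM resume
        vcpu.pending_exception = BP_VECTOR
        return "guest"


dispatcher = BreakpointDispatcher(hyper_breakpoints={0x5000})
vcpu = Vcpu(rip=0x7FF6_0000_1000)
first = dispatcher.handle_int3_exit(vcpu, gpa=0x5000)
second = dispatcher.handle_int3_exit(vcpu, gpa=0x6000)
```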

A significant difference between hyper breakpoints and classic in-VM breakpoints with regard to inserting the breakpoint instruction is that, due to the copy-on-write (COW) policy for shared user-mode code pages within the guest systems, inserting an in-VM breakpoint results in a copy of the page. The new copy is private for the target process and will be patched with the breakpoint, which means that the breakpoint takes effect only in the user address space of the target process. In contrast, such guest-page policies do not exist for hyper breakpoints, which means that breakpoints in shared code pages are effective in all processes in which the corresponding shared and patched page is mapped.
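The difference in scope can be made concrete with a toy model of two guest processes that map the same shared code frame. The class and method names are hypothetical; the model assumes that the guest OS handles a debugger write by copying the page (copy-on-write), whereas a hyper breakpoint is written directly into the frame underneath the guest page tables.

```python
PAGE_MASK = ~0xFFF
INT3 = 0xCC


class ToyGuest:
    def __init__(self):
        # Frame 0 holds the start of a shared library function (push rbp; mov rbp, rsp)
        self.frames = {0: bytearray(b"\x55\x48\x89\xe5")}
        # Both processes map virtual page 0x1000 to the same shared frame
        self.page_tables = {"A": {0x1000: 0}, "B": {0x1000: 0}}
        self.next_frame = 1

    def fetch(self, process, va):
        frame = self.page_tables[process][va & PAGE_MASK]
        return self.frames[frame][va & 0xFFF]

    def in_vm_breakpoint(self, process, va):
        # Guest OS copy-on-write: the target process gets a private copy
        shared = self.page_tables[process][va & PAGE_MASK]
        private = self.next_frame
        self.next_frame += 1
        self.frames[private] = bytearray(self.frames[shared])
        self.page_tables[process][va & PAGE_MASK] = private
        self.frames[private][va & 0xFFF] = INT3

    def hyper_breakpoint(self, frame, offset):
        # VMI writes the shared frame itself; guest page tables are untouched
        self.frames[frame][offset] = INT3


in_vm = ToyGuest()
in_vm.in_vm_breakpoint("A", 0x1000)
hyper = ToyGuest()
hyper.hyper_breakpoint(0, 0)
```

After the in-VM breakpoint, only process A fetches `INT3`; after the hyper breakpoint, both processes do.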

To complete code breakpoint handling after the VMI sensor logic has processed the event, the original instruction that was overwritten by the patching with the breakpoint instruction must be executed before execution can continue with the next instruction of the guest code. Three basic concepts in VMI-based breakpoint handling ensure that the original instruction is executed. First, there is the repair mechanism, which restores the original instruction in main memory. As an alternative to changing the data in memory, the instruction can be emulated; this is the second method. The third method only works in the context of virtualization and is based on the fact that the hypervisor can provide a vCPU with different views of the memory.

Table 1 provides an overview of the three breakpoint-handling concepts: Instruction Repair, Instruction Emulation and Second Level Address Translation (SLAT) View Switch, all of which will be discussed in the following subsections. The first column contains the concept name as used throughout this work, accompanied by a concise identifier for its implementation. The subsequent columns reflect concept properties—such as multiprocessor safety, reliance on single-stepping, or the kind of trap mechanism employed—and provide a summary of the corresponding processing sequence.

2.2.1. Instruction Repair (1)

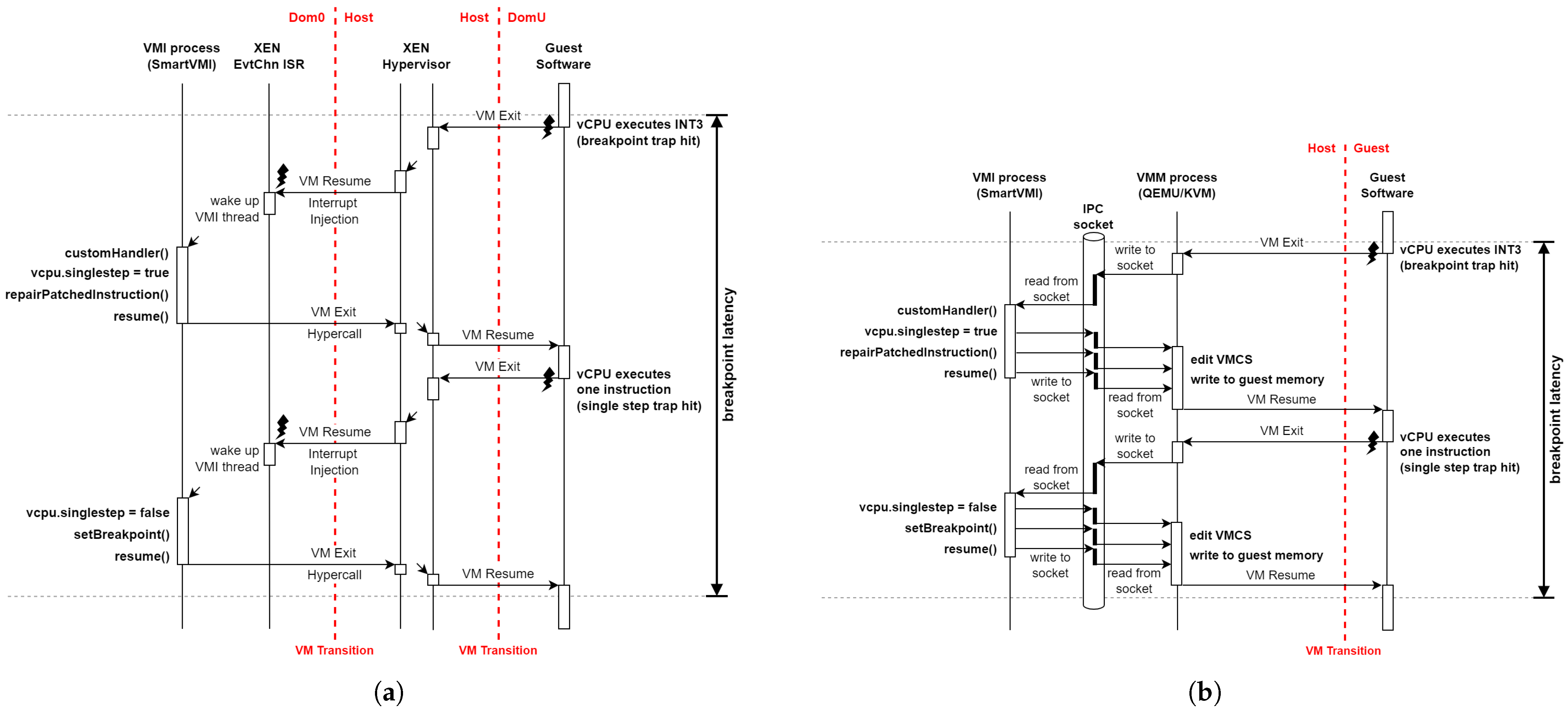

Figure 2 shows the control flow of the instruction-repair mechanism. After the VMI sensor logic has processed the breakpoint, the original byte of the instruction that was overwritten by the breakpoint instruction INT3 is written back to the VM’s memory. The original instruction is thus restored, or repaired. However, before control returns to the VM to execute the repaired original instruction, the interrupted vCPU must be set to single-stepping mode. This is done via the Monitor Trap Flag (MTF) in the VMCS of the vCPU, which is managed by the hypervisor. The MTF configures the vCPU to operate in virtualized single-step mode, executing only one instruction of the guest software and then automatically triggering another VM exit. The MTF thus corresponds to the Trap Flag (TF) of the RFLAGS register in classic non-virtualized environments. After the repaired instruction of the guest software has been executed, the VMI software can patch it again with the breakpoint instruction INT3 in response to the single-step VM exit, so that the breakpoint trap is armed again for future executions.

During the period in which the original instruction is persistent in memory without a breakpoint, parallel executions could pass the code location without triggering the trap. For this reason, it should be ensured that no parallel executions exist, e.g., by deactivating all other vCPUs of the VM for this period or by strictly avoiding multiprocessing (using a single-vCPU VM). This solution must therefore be considered to be non-multiprocessor-safe.
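The repair sequence, including the window that makes it non-multiprocessor-safe, can be traced with a small Python model. It is a sketch under simplifying assumptions: guest code is a `bytearray`, the MTF is a boolean on a toy vCPU object, and the two methods stand for the handlers of the breakpoint VM exit and the single-step VM exit. None of these names correspond to a real VMI API.

```python
from dataclasses import dataclass, field
from typing import List

INT3 = 0xCC


@dataclass
class ToyVcpu:
    mtf: bool = False  # Monitor Trap Flag: VM exit after one guest instruction


@dataclass
class RepairBreakpoint:
    memory: bytearray
    address: int
    original: int = 0
    log: List[str] = field(default_factory=list)

    def __post_init__(self):
        self.original = self.memory[self.address]
        self.memory[self.address] = INT3  # install the hyper breakpoint

    def on_breakpoint_exit(self, vcpu: ToyVcpu) -> None:
        self.log.append("sensor logic")            # VMI processes the event
        self.memory[self.address] = self.original  # repair the instruction
        vcpu.mtf = True                            # single-step it on resume

    def on_single_step_exit(self, vcpu: ToyVcpu) -> None:
        self.memory[self.address] = INT3           # re-arm the breakpoint
        vcpu.mtf = False


code = bytearray(b"\x55\x48\x89\xe5")
bp = RepairBreakpoint(code, 0)
vcpu0 = ToyVcpu()
armed_before = code[0] == INT3
bp.on_breakpoint_exit(vcpu0)
# A second vCPU fetching this byte now sees the original opcode and is not trapped
byte_seen_in_window = code[0]
bp.on_single_step_exit(vcpu0)
armed_after = code[0] == INT3
```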

Another disadvantage of this method is that single-stepping requires two round trips between the monitored VM and the VMI software (at least four VM transitions). With a type 1 hypervisor such as XEN, this number doubles to eight VM transitions, as the VMI software itself executes within a VM that must be entered and exited. These additional VM transitions, along with the required communication between the hypervisor and the VMI software, introduce considerable overhead and significantly increase execution latency.

2.2.2. Instruction Emulation (2)

To avoid the single-step overhead of the instruction-repair mechanism and to create a method for hyper-breakpoint handling that is multiprocessor-safe, it is better to find an approach that avoids touching the VM’s memory with the patched guest code. The alternative is to emulate the original instruction outside the VM by using a software emulator that operates like a CPU instead of executing it on the real CPU. Because single-stepping is not needed, the control flow is simpler than for instruction repair.

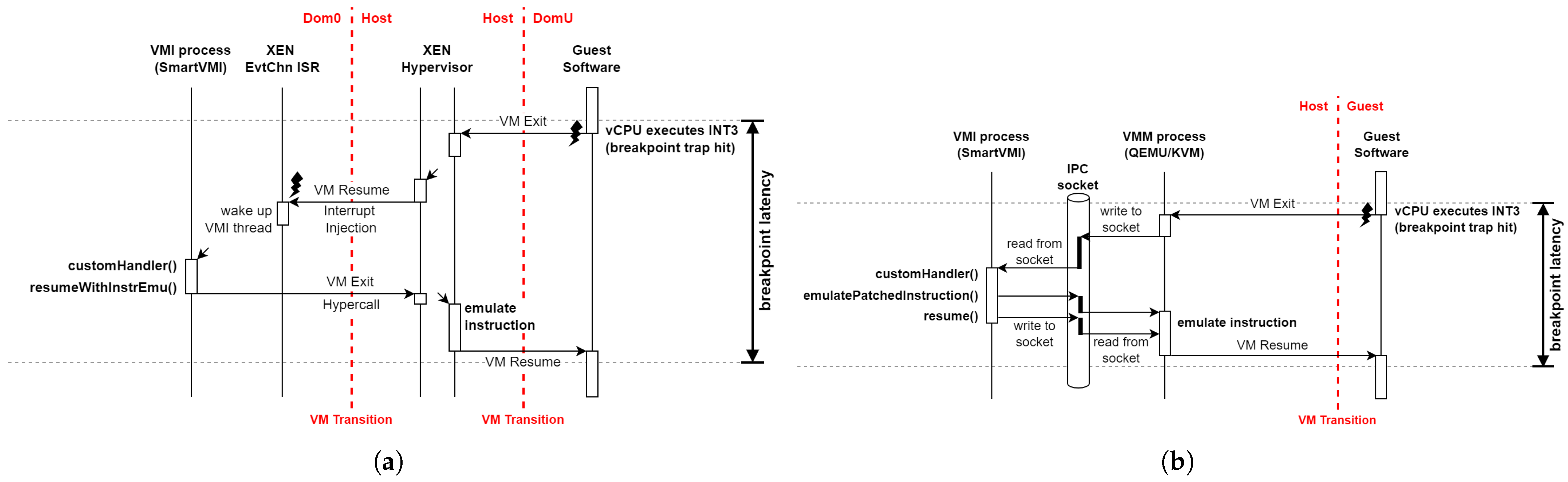

Figure 3 displays the emulation control flow. The emulator is given the original guest instruction, as it was before the VMI software patched in the breakpoint, and executes it on the state (registers, memory) of the vCPU. Afterwards, the vCPU can resume execution (VMRESUME) with the next instruction of the guest software. Practical implementations of hyper-breakpoint handling using instruction emulation in VMI software utilize the x86/IA32/AMD64 instruction emulation integrated into the KVM and XEN hypervisors.

This method is multiprocessor-safe because the patched code with the breakpoint that is seen by other parallel vCPUs is never modified. The number of context switches and VM transitions also remains minimal (Type 1 HV: four, Type 2 HV: two), and single-step overhead is avoided.
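To illustrate why emulation leaves the patched code untouched, the following toy Python model emulates the saved original instruction on a vCPU state. It supports only three instructions (`push rbp`, `mov rbp, rsp`, `nop`) and models the stack as a dictionary; it is a hypothetical illustration, far simpler than the x86 emulators in XEN or KVM.

```python
from dataclasses import dataclass, field
from typing import Dict

INT3 = 0xCC


@dataclass
class VcpuState:
    rip: int
    rsp: int
    rbp: int
    stack: Dict[int, int] = field(default_factory=dict)  # address -> 64-bit value


def emulate_original(state: VcpuState, original: bytes) -> None:
    """Apply the effect of the original instruction to the vCPU state."""
    if original == b"\x55":               # push rbp
        state.rsp -= 8
        state.stack[state.rsp] = state.rbp
    elif original == b"\x48\x89\xe5":     # mov rbp, rsp
        state.rbp = state.rsp
    elif original == b"\x90":             # nop
        pass
    else:
        # Unsupported instruction: a sensor should not place a breakpoint here
        raise NotImplementedError(f"cannot emulate {original.hex()}")
    state.rip += len(original)            # continue with the next instruction


code = bytearray(b"\x55\x48\x89\xe5")
saved = bytes(code[0:1])                  # original `push rbp`
code[0] = INT3                            # hyper breakpoint stays in memory

vcpu = VcpuState(rip=0x1000, rsp=0x8000, rbp=0x1234)
emulate_original(vcpu, saved)             # handles the breakpoint VM exit
```

Because the guest code is never rewritten, other vCPUs always fetch the `INT3` byte, which is what makes the method multiprocessor-safe.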

Nevertheless, a notable limitation is that the emulation may be incomplete and, as a result, may diverge from actual CPU execution. The extent of this issue largely depends on the quality of the selected emulator and its commitment to completeness and correctness. The x86/IA32/AMD64 instruction emulation integrated into KVM, XEN, and QEMU can be assumed to be highly accurate, as these systems are mature, widely used, and have undergone extensive testing over time. However, edge cases involving specific instructions and operand combinations may arise; in such cases, emulation is best avoided. Because the VMI sensor logic knows which guest instruction it patches with a hyper breakpoint, the issue can be largely mitigated by not placing breakpoints on rare instructions whose emulation may be unreliable or faulty.

2.2.3. SLAT View Switch (altp2m) (3)

Second Level Address Translation (SLAT)—implemented by Extended Page Table (EPT) in Intel x86-64 processors—provides opportunities for realizing execution-context-dependent manipulations of guest software by VMI software. This enables another option for handling hyper breakpoints, although it is possible only for hyper breakpoints in virtualized environments. The reason for this limitation is that it is based on SLAT, which forms the basis of address translation in virtualization solutions. SLAT is the translation stage in the host that enables guest physical addresses of the VM to be translated into host or machine physical addresses of the host.

Using multiple EPT page table sets for one VM or one vCPU, the hypervisor can implement multiple views of memory from the perspective of a vCPU (SLAT views). By configuring the VMCS, the hypervisor can change the vCPU’s view of its memory. This allows a page of VM memory to be mapped to different page frames of machine memory, depending on the view. This option can be used for breakpoint handling: the page of the VM’s memory that is to be patched with a hyper breakpoint is mapped to two different page frames via two SLAT views. One page frame contains the original version of the code without the breakpoint instruction, while the other contains the patched version with the INT3 instruction. The hypervisor configures the vCPUs so that the patched version is used during normal execution, which causes the breakpoint to trigger when the code is executed. During breakpoint handling, the interrupted vCPU is switched to the view containing the original version, and the original instruction is executed in single-stepping mode. After the original instruction has been executed, the single step causes another interruption, and the VMI software can switch the interrupted vCPU back to the view with the breakpoint instruction. Then, execution of the guest code can continue.
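The per-vCPU view switch can be sketched in Python as two machine frames backing one guest frame. The view names, the guest frame number `0x5`, and the `SlatViews` class are hypothetical; real EPT views are configured through the hypervisor (in XEN, via altp2m), not through a structure like this.

```python
INT3 = 0xCC
GFN = 0x5  # guest frame number of the patched code page (illustrative)


class SlatViews:
    def __init__(self, code: bytes):
        # Two machine frames back the same guest frame
        self.machine = {0: bytearray(code), 1: bytearray(code)}
        self.views = {"original": {GFN: 0}, "patched": {GFN: 1}}
        self.active = {}  # vCPU id -> active view (default: patched)

    def fetch(self, vcpu: int, gfn: int, offset: int) -> int:
        view = self.active.get(vcpu, "patched")
        return self.machine[self.views[view][gfn]][offset]


slat = SlatViews(b"\x55\x48\x89\xe5")
slat.machine[1][0] = INT3                 # patch only the frame behind "patched"

hit = slat.fetch(0, GFN, 0) == INT3       # vCPU 0 runs into the breakpoint
slat.active[0] = "original"               # breakpoint exit: switch vCPU 0, set MTF
step_v0 = slat.fetch(0, GFN, 0)           # vCPU 0 executes the original opcode
step_v1 = slat.fetch(1, GFN, 0)           # vCPU 1 still sees the breakpoint
slat.active[0] = "patched"                # single-step exit: switch back
rearmed = slat.fetch(0, GFN, 0) == INT3
```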

Using this method, the original instruction is executed in hardware on the real CPU without the need for emulation. Real execution on the CPU is faster and more reliable in terms of correctness than emulation. In addition, this method is also multiprocessor-safe, as each vCPU has its own configuration specifying which EPT page table set, and thus which view of memory, is active. However, it again has the disadvantage that the VMI software and/or the hypervisor must intervene twice to switch the memory view back and forth. This results in the previously mentioned single-step overhead, as it requires four switches between VM execution and VMI software (type 1 HV: eight VM transitions; type 2 HV: four VM transitions).
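The VM-transition counts given for the breakpoint-handling methods follow from a simple product: the number of round trips between the monitored VM and the VMI software, multiplied by the transitions per round trip (two with a type 2 HV, i.e., VM exit and VM entry; four with a type 1 HV, where the VMI VM must additionally be entered and exited). The short Python sketch below encodes this counting model; it reproduces the counts given for emulation and SLAT view switching and deliberately ignores all other costs.

```python
ROUND_TRIPS = {"repair": 2, "emulation": 1, "altp2m": 2}
TRANSITIONS_PER_ROUND_TRIP = {"type1": 4, "type2": 2}


def vm_transitions(method: str, hv_type: str) -> int:
    # Round trips to the VMI software times VM transitions per round trip
    return ROUND_TRIPS[method] * TRANSITIONS_PER_ROUND_TRIP[hv_type]


for method in ROUND_TRIPS:
    print(method, vm_transitions(method, "type1"), vm_transitions(method, "type2"))
```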

Another major advantage of SLAT view switching in the VMI context is that breakpoint traps can be enabled and disabled by switching views, as required by the VMI software. For example, breakpoints in operating system libraries used by user-mode processes can be enabled or disabled for a vCPU depending on the process or thread currently being executed. This allows monitoring to be restricted to individual threads and processes of a system, thereby filtering out unimportant behavioral noise. In particular, for hyper breakpoints in shared libraries, whose code is mapped into several process address spaces and whose breakpoints are therefore effective in all of them, SLAT view switching still allows the breakpoints to be enabled selectively per process.

For VMI software to use SLAT view switching, the hypervisor must offer the feature via its VMI infrastructure API. In the XEN hypervisor project, the feature is referred to by the acronym “altp2m” (alternative guest physical memory to machine physical) [12]. SLAT view switching as a method for hyper-breakpoint handling was first used in the VMI framework DRAKVUF, which was developed in the same community concurrently with the VMI infrastructure functions in XEN; this established the term “altp2m” as a synonym for SLAT view switching in the VMI context.

2.3. Acceleration: Breakpoint Handling by the Hypervisor

In addition to the breakpoint-handling method itself, the layer of the VMI stack on which the method is implemented and executed also has a significant impact on execution speed. In principle, the mechanisms described (repair, emulation, SLAT view switching) can be implemented either within the VMI application (SmartVMI, DRAKVUF, LibVMI) or within the VMI infrastructure as part of the hypervisor or VMM. The latter option has the advantage that, after the original instruction has been executed as a single step, the hypervisor does not have to notify the VMI application so that breakpoint handling can be completed; instead, breakpoint handling is finished directly in the hypervisor. Communication between the hypervisor and the VMI process requires at least interprocess communication, which is avoidable overhead that carries the risk of delays and thus higher latencies. With a type 2 hypervisor such as KVM, this involves interprocess communication between the VMM process (QEMU) and the VMI process (SmartVMI). With a type 1 hypervisor such as XEN, the overhead is significantly greater, as communication must take place between the VM running the VMI software and the hypervisor in the host, requiring additional VM transitions.

To avoid this overhead, DRAKVUF offers the Fast-Singlestep (FSS) option, whereby breakpoint handling during switching of SLAT views is performed not by DRAKVUF itself, but instead by the XEN hypervisor, which provides the necessary VMI infrastructure feature.

FSS works by delegating the single-step execution and associated breakpoint checks directly to the hypervisor, eliminating the need for interprocess communication with the DRAKVUF process. During this process, the hypervisor monitors the execution of the target instruction and applies any necessary modifications or checks before resuming normal execution. As a result, FSS significantly reduces latency and improves performance for operations that require frequent single-stepping, such as breakpoint handling in dynamic malware analysis.
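Under a simplified counting model (four VM transitions per round trip to a VMI application running in a separate VM on XEN), the saving of FSS can be estimated with a few lines of Python. The assumption here, not a measured result, is that with FSS the single-step exit is completed inside the hypervisor and therefore costs only the guest's own VM exit and VM entry.

```python
def xen_altp2m_transitions(fast_singlestep: bool) -> int:
    breakpoint_round_trip = 4  # guest exit, VMI VM entry, VMI VM exit, guest entry
    # Single-step exit: handled by the VMI VM (4) or directly by XEN with FSS (2)
    single_step = 2 if fast_singlestep else 4
    return breakpoint_round_trip + single_step


without_fss = xen_altp2m_transitions(False)
with_fss = xen_altp2m_transitions(True)
```

The model ignores the cost of the hypervisor-to-VMI communication itself, which the text identifies as an additional source of latency.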

The DRAKVUF feature is available only for breakpoint handling and is not used to accelerate read traps in relation to breakpoint hiding.

2.4. Hiding Breakpoints for Stealth

When VMI-based monitoring is used in a security context, it may be important that the attacker or the monitored malware cannot detect the monitoring, for example, in malware sandboxes or high-interaction honeypots. Code-integrity checks, in which software reads code and verifies its integrity, are performed not only by malware or attackers looking for monitoring hooks or breakpoints. Benign software also performs such checks to protect itself against malicious hooks and breakpoints. For example, Kernel Patch Protection (KPP), also known as PatchGuard, protects the Windows kernel against modifications of its code, including inserted breakpoints. Therefore, a VMI-based instrumentation solution must provide mechanisms that hide installed INT3 breakpoint instructions from reads by the guest software.

In principle, breakpoint instructions can be hidden using the same three concepts that are used for breakpoint handling (repair, emulation, SLAT view switching), with the same advantages and disadvantages. In breakpoint handling, the original instruction is executed; in breakpoint hiding, the original instruction must be read. To intercept a corresponding read access by the guest software, a read trap is established using EPT permissions for the page in which the breakpoint is located. EPT permissions work at page granularity, so the trap is triggered not only by reads of the memory location where the breakpoint is set, but by all reads of any page containing a hyper breakpoint; each trigger leads to an interception by the hypervisor and the VMI software. The VMI software can handle the intercepted read in two ways. Either every read of the page is handled as if it were a read of the breakpoint location, or the VMI software checks whether the read address range covers the breakpoint location and handles the read differently if it does not. Both options result in a correct implementation of breakpoint hiding; the latter may offer potential for performance optimization.
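Both handling options can be contrasted in a short Python sketch. It assumes 4 KiB pages, identifies breakpoints by guest physical address, and uses hypothetical function names; it only decides whether an intercepted read touches a breakpoint byte and does not model how the read is then completed.

```python
from typing import Iterable, List, Set, Tuple

PAGE_SIZE = 4096


def read_protected_pages(breakpoints: Iterable[int]) -> Set[int]:
    # EPT permissions are per page: every page holding a breakpoint loses read access
    return {bp // PAGE_SIZE for bp in breakpoints}


def classify_read(breakpoints: Iterable[int], gpa: int, size: int) -> Tuple[str, List[int]]:
    # Second option from the text: check whether the read covers a breakpoint byte
    hits = sorted(bp for bp in breakpoints if gpa <= bp < gpa + size)
    return ("hide", hits) if hits else ("no breakpoint touched", hits)


breakpoints = {0x5010, 0x5FF0}
pages = read_protected_pages(breakpoints)
far_read = classify_read(breakpoints, 0x5100, 8)   # same page, no breakpoint byte
near_read = classify_read(breakpoints, 0x500C, 8)  # covers 0x5010
```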

If the breakpoint instruction is read, guest execution is trapped, and the VMI software must give the read operation special treatment so that the original code is read instead of the breakpoint. To do this, SmartVMI uses the read emulation available in XEN and KVM via LibVMI. The hypervisor is provided with the original bytes of the patched instruction, which it uses to emulate the read operation of the guest software, with the advantages of multiprocessor safety and no single-step overhead. DRAKVUF, on the other hand, does not use emulation but SLAT view switching (alternative guest physical to machine translation (altp2m)). The read access is handled by first switching the memory view of the reading vCPU to the original page without the breakpoint. Then, single-stepping is used to execute the read instruction on the CPU. The single step leads to another VM exit and interception by the hypervisor and the VMI software, during which the memory view is switched back to the page with the breakpoint before guest execution continues. This method is also multiprocessor-safe, but it has the disadvantage known from altp2m that the necessary single-stepping results in considerable overhead [12].
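The effect of read emulation, in which the guest observes the original bytes while the patched page stays unchanged, can be sketched as follows. The function name and parameters are hypothetical; in the actual stack, the substitution is performed by the hypervisor's emulator with the original bytes supplied via LibVMI, as described above.

```python
from typing import Dict

INT3 = 0xCC


def emulated_read(page: bytearray, page_base: int, gpa: int, size: int,
                  originals: Dict[int, int]) -> bytes:
    """Return the bytes a guest read should observe, with INT3 patches hidden."""
    start = gpa - page_base
    data = bytearray(page[start:start + size])
    for bp_gpa, original_byte in originals.items():
        if gpa <= bp_gpa < gpa + size:
            data[bp_gpa - gpa] = original_byte  # substitute the overwritten byte
    return bytes(data)


page = bytearray(b"\x55\x48\x89\xe5")  # first bytes of a code page at GPA 0x5000
originals = {0x5000: page[0]}          # remember the byte before patching
page[0] = INT3                         # hyper breakpoint installed
seen_by_guest = emulated_read(page, 0x5000, 0x5000, 4, originals)
```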

To conclude this section, Table 2 provides an overview of the three explained breakpoint-hiding strategies.

3. Related Work

Related work can be classified into three categories: (i) papers related directly to our work on the different techniques for (hyper-) breakpoint mechanisms and their performance; (ii) papers presenting security implementations where hyper breakpoints are utilized to collect data about a guest system, as well as commercial tools in this field; and (iii) papers with architectural proposals for designing hyper breakpoints in the CPU.

In our previous work [16], we presented the existing implementations for hyper breakpoints, discussing their strengths, limitations, and trade-offs. Furthermore, we proposed and implemented a benchmark for x86_64 breakpoints that is suitable for measuring the execution performance of a VMI-based breakpoint implementation. This paper differs from our earlier work mainly in that we are now able to benchmark and compare all breakpoint implementations across different Intel Core CPU generations, whereas previously only Instruction Emulation breakpoints could be tested, on a single evaluation system with an Intel Core i5 7300U.

In [17], Wahbe emphasized the difference between hardware and software implementations of data breakpoints. He described how hardware breakpoints can deliver the best performance but are more expensive to provide and support only a very limited number of concurrent breakpoints. Software approaches, on the other hand, patch the debuggee’s code or insert checks at relevant write instructions. These are easier to deploy and scale to arbitrary numbers of breakpoints, but they incur higher runtime overhead and can perturb the program’s behavior. Wahbe also discussed a third category, using the virtual memory system to monitor writes, which strikes a balance between the two previous approaches but depends heavily on operating-system support.

Wahbe et al. expanded on this work in [18] by presenting the design and implementation of practical data breakpoints that improve the feasibility of software-based implementations. In particular, they introduced compiler optimizations and efficient run-time data structures, such as segmented bitmaps, to reduce the overhead of checking monitored memory locations. This approach made data breakpoints more scalable and portable than hardware solutions while achieving acceptable performance for interactive debugging.
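The idea of a segmented bitmap can be illustrated with a hypothetical Python sketch: the address space is split into segments, a bitmap with one bit per word is allocated only for segments that contain a monitored address, and a write to an unmonitored segment is rejected with a single lookup. The segment size (4 KiB) and word size (4 bytes) are assumptions for illustration and are not taken from Wahbe et al.

```python
SEGMENT_BITS = 12          # each segment covers 4 KiB of address space (assumed)
WORD_SIZE = 4              # one bitmap bit per 4-byte word (assumed)
SEGMENT_MASK = (1 << SEGMENT_BITS) - 1


class SegmentedBitmap:
    def __init__(self):
        self.segments = {}  # segment number -> bitmap, allocated on demand

    def watch(self, addr: int) -> None:
        size = (1 << SEGMENT_BITS) // WORD_SIZE // 8
        bitmap = self.segments.setdefault(addr >> SEGMENT_BITS, bytearray(size))
        bit = (addr & SEGMENT_MASK) // WORD_SIZE
        bitmap[bit // 8] |= 1 << (bit % 8)

    def is_watched(self, addr: int) -> bool:
        bitmap = self.segments.get(addr >> SEGMENT_BITS)
        if bitmap is None:  # fast path: nothing monitored in this segment
            return False
        bit = (addr & SEGMENT_MASK) // WORD_SIZE
        return bool(bitmap[bit // 8] & (1 << (bit % 8)))


watches = SegmentedBitmap()
watches.watch(0x1004)
```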

Dangl et al. introduced RapidVMI to address multi-core and shared-memory issues in active VMI. Their system, presented in [8], implements process-bound and core-selective introspection by leveraging XEN’s alternative EPT mappings (altp2m). Breakpoints or injected code are mapped to shadow pages that apply only to a specific process or core, preventing unintended side effects across shared libraries or concurrent threads.

The Spider framework [19] emphasized the importance of stealthiness during malware execution and therefore introduced the concept of invisible breakpoints using VMI. In particular, it leveraged EPT to maintain separate read and execute views of code pages. The guest therefore reads unmodified instructions, while execution uses patched pages containing breakpoints. Once a breakpoint is hit, a VM exit is triggered and handled externally.

Karvandi et al. presented HyperDbg in [20], a modern hypervisor-assisted malware debugger that integrates breakpoint handling directly into its custom VMX-root hypervisor. It uses hidden EPT hooks that avoid patching code directly, which makes breakpoints faster and stealthier than traditional INT3s. HyperDbg implements classic EPT hooks by injecting a #BP (0xCC) into the target VM memory, along with Detours-style hooks that redirect control flow with a jump to the patched instruction. Normal execution resumes after the callback.

To the best of our knowledge, only a few companies disclose that they leverage VMI in their detection products. VMRay provides a sandbox solution for malware analysis and acknowledges in its white paper [21] that it uses VMI to monitor the target system from outside the VM, thereby achieving a high level of stealth. Joe Sandbox, on the other hand, does not explicitly state that it uses VMI, but it promotes its technology as Hypervisor-based Introspection (HBI), which overlaps with our understanding of virtual machine introspection [22]. As an example outside the sandbox domain, RYZOME is a startup offering a security-monitoring solution based on VMI; it promises tamper resistance and resilience against APT actors [23].

Finally, Price proposed an architectural extension of the Memory Management Unit (MMU) to overcome the inherent flaws of existing breakpoints [24]. The paper identified three core issues: (i) corruption of program bytes due to INT3 patching (the “critical byte problem”), (ii) detectability of both software patching and the limited hardware debug registers, and (iii) inefficiency of fallbacks such as single-stepping or emulation. The proposed solution is a buddy-frame mechanism, in which each page table entry can reference a companion frame containing per-byte breakpoint metadata (read/write/execute flags). When the breakpoint bit is set in a page table entry, the MMU consults this buddy frame during instruction fetch or memory access and raises a trap if a breakpoint condition matches. This design removes the need to patch code, provides effectively unlimited and invisible breakpoints, and enables robust and efficient debugging directly at the hardware level.
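The buddy-frame check can be simulated in software to make the control flow concrete. The following Python sketch is only a model of the described behavior: the flag encoding, the `PageTableEntry` fields, and the function names are our assumptions, and a real MMU would perform this check in hardware on every access.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096
# Assumed per-byte flag encoding in the buddy frame (illustrative only).
BP_READ, BP_WRITE, BP_EXEC = 0x1, 0x2, 0x4


class BreakpointTrap(Exception):
    """Raised when an access matches a breakpoint condition."""


@dataclass
class PageTableEntry:
    frame: bytearray                     # the page's actual contents
    breakpoint_bit: bool = False         # tells the MMU to consult the buddy frame
    buddy: Optional[bytearray] = None    # one metadata byte per data byte


def set_breakpoint(pte: PageTableEntry, offset: int, flags: int) -> None:
    if pte.buddy is None:
        pte.buddy = bytearray(PAGE_SIZE)
    pte.buddy[offset] |= flags
    pte.breakpoint_bit = True            # the frame's code bytes stay untouched


def mmu_access(pte: PageTableEntry, offset: int, kind: int) -> int:
    """Simulated access: the buddy frame is consulted only if the PTE bit is set."""
    if pte.breakpoint_bit and pte.buddy is not None and pte.buddy[offset] & kind:
        raise BreakpointTrap(f"breakpoint at offset {offset:#x}")
    return pte.frame[offset]
```

Because the breakpoint lives in metadata rather than in the frame, a read of the protected byte returns the original instruction unless a read breakpoint is also set.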

4. Experimental Design

This section describes, at a high level, our considerations and decisions regarding the design of the measurement study. The relevant topics are the metrics, the choice of hypervisor, and the hardware and software setup. Section 5 provides more detailed information about the hardware and software.

4.1. A Metric for Breakpoint Performance

Our main research question is as follows: How do the existing breakpoint implementations compare with respect to performance? As noted earlier, addressing this seemingly straightforward question entails a variety of nontrivial considerations. This section discusses the relevant aspects from which the foundations for our measurement study are derived.

What does performance mean for a breakpoint implementation? Beierlieb et al. [16] address this question in detail and identify three key aspects: the execution time required to handle a breakpoint hit, the execution time required to process a read operation at a breakpoint location, and the overhead incurred even when no breakpoint is triggered. Since this last overhead is independent of the specific breakpoint implementation, it is excluded from consideration in this paper. The read-handling execution time depends on the stealth mechanism and thus on the breakpoint-handling mechanism. This metric is usually less important than the time to handle a breakpoint hit because code regions are typically read only during occasional code-integrity checks; nonetheless, we include it in our evaluation. We regard the handling time of breakpoint hits as the most significant factor in assessing breakpoint performance. We use bpbench to measure both the execution and the read times.

As the above explanation and our previous study show, it is reasonable in this case to reduce the question of runtime performance to the length of the overhead time span. To measure it, it is legitimate to consider the mechanism under investigation in isolation and to design the conditions so that the effect is captured as cleanly as possible, which ensures comparability.

To achieve this, we designed micro-benchmarks consisting of specific hardware and software conditions and simple workloads that allow the breakpoint overhead to be determined cleanly and comparably by measuring execution time. We do not claim that such micro-benchmarks, evaluated under laboratory conditions, represent VMI performance under real-world system load; rather, the results represent the execution-time overhead under optimal conditions. This makes the costs of breakpoint implementations visible independently of system load.

4.2. Choosing the Hypervisor

Using the same hypervisor is necessary to ensure that measurement results are comparable. As a type 1 hypervisor, XEN incurs more VM entries and exits during transitions from the guest to the VMI application than does KVM, a type 2 hypervisor. Consequently, the same breakpoint implementation is expected to exhibit different execution times on different hypervisors. Measuring two implementations on different hypervisors makes them incomparable because the performance influence of the approach cannot be separated from the influence of the hypervisor. KVM was excluded from this study because it is not supported by DRAKVUF. Moreover, there are further reasons not to use KVM for such measurements, at least currently. The kernel with the KVMI patchset is based on Linux 5.4.24 (released in March 2020) and may have trouble working on more recent hardware. KVMI/LibVMI also do not work perfectly with breakpoints in user-space processes. For our preliminary measurements in the bpbench paper [16], we had to rewrite the breakpoint logic to support only a single breakpoint because the interrupt event resulting from a breakpoint did not report the corresponding instruction pointer, which is normally used to identify the breakpoint. Further, only the instruction-repair implementation works on KVM because LibVMI currently does not support instruction emulation for KVMI. In contrast, all implementations work flawlessly on XEN, which makes it the most suitable hypervisor for this study.

4.3. Hardware Platforms Evaluated

The same principle applies to hardware: evaluating different implementations on different hardware platforms prevents the formulation of any reliable conclusions regarding the impact of the implementation itself. Breakpoint handling requires operations such as VM exits, VM entries, system calls, context switches between processes, interprocess communication, EPT view switches, and regular instructions. All operations depend on the CPU clock speed, but architectural changes between CPU generations could affect the speed of some operations regardless of the clock speed. Thus, only measurements of different implementations conducted on the same hardware setup are directly comparable. We performed the same measurements on 20 systems with Intel Core processors ranging from the fourth (released 2014) to the thirteenth generation (released 2023), spanning nine years. While this sample size is relatively small for precise statistical generalization, it is sufficient to reveal general trends and differences in breakpoint performance across CPU generations. The dataset captures a broad range of architectures, providing meaningful insights even if fine-grained quantitative conclusions would require more devices. Section 6 provides a detailed overview.

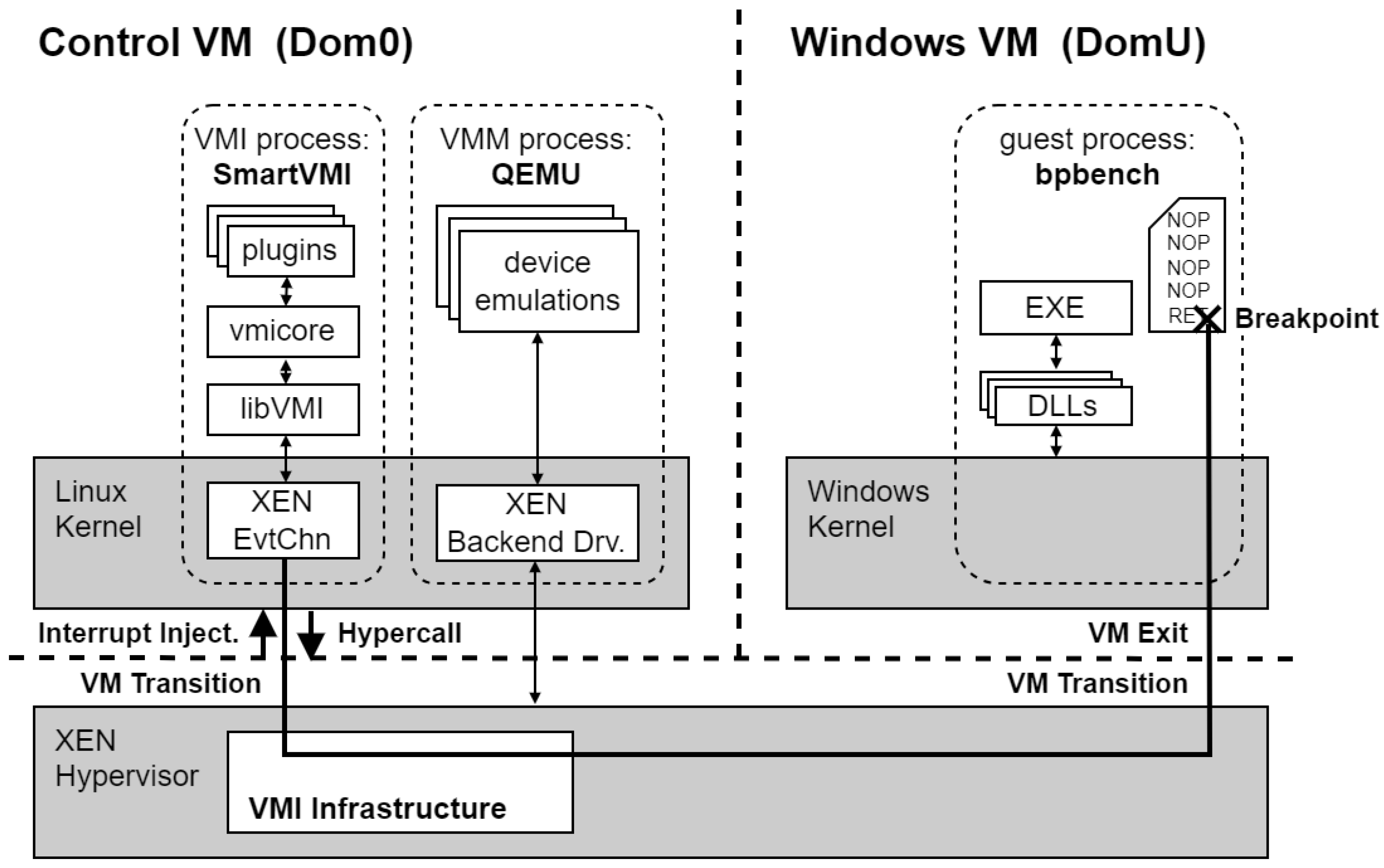

4.4. The bpbench Benchmark Tool

Our benchmark tool bpbench allocates a new page-aligned memory region in user space, sized at one page (4 KiB), with read, write, and execute permissions. It then fills the whole page with NOP instructions and overwrites the last byte of the region with a RET instruction. The hyper breakpoint is to be set on this return instruction. To that end, bpbench reports its process ID and the virtual address at which the hyper breakpoint should be placed, and then waits for the user's confirmation.
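The page layout described above can be sketched in a few lines of Python. This sketch reproduces only the byte image and the breakpoint address computation; allocating executable memory inside the Windows guest is out of scope here, and the function names and the example base address are hypothetical.

```python
PAGE_SIZE = 4096  # one page (4 KiB), as in bpbench
NOP = 0x90        # x86 one-byte NOP
RET = 0xC3        # x86 near RET


def build_benchmark_page(page_size: int = PAGE_SIZE) -> bytearray:
    """Return the byte image described for bpbench: NOPs ending in a single RET."""
    page = bytearray([NOP]) * page_size
    page[-1] = RET
    return page


def breakpoint_address(page_base: int, page_size: int = PAGE_SIZE) -> int:
    """Virtual address of the RET byte, where the hyper breakpoint is placed."""
    return page_base + page_size - 1
```

For a page at a hypothetical base address `0x7FF600000000`, the breakpoint address reported to the user would be `0x7FF600000FFF`.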

The user communicates the breakpoint location to the MOCK-Breakpoint plugins for SmartVMI and DRAKVUF, which were developed for this experiment. The VMI plugins install the hyper breakpoint (a code breakpoint via INT3) in the address space of the bpbench process at the specified location. To handle the breakpoint event in the VMI software, both plugins register an empty callback function. The breakpoint-handling mechanism itself is not the plugin's responsibility; it is implemented in the core logic of the SmartVMI/DRAKVUF framework or in the LibVMI library and is performed by the VMI software after the empty callback function returns. To investigate and measure the various breakpoint-handling methods, we perform several measurement runs with bpbench, configuring DRAKVUF differently and loading different versions of SmartVMI. We use our own modified versions of both DRAKVUF and SmartVMI, which contain changes to support the various breakpoint-handling methods and are extended with our plugins.
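The division of labor between plugin and framework core can be modeled as follows. This Python sketch is a toy stand-in under stated assumptions: guest memory is a plain `bytearray`, and `HypotheticalVmiCore` and its methods are invented for illustration. They are not the SmartVMI, DRAKVUF, or LibVMI APIs.

```python
from typing import Callable, Dict

INT3 = 0xCC  # x86 software breakpoint opcode


class HypotheticalVmiCore:
    """Toy stand-in for a VMI framework core (not a real framework API)."""

    def __init__(self, memory: bytearray) -> None:
        self.memory = memory
        self.saved: Dict[int, int] = {}  # address -> original byte
        self.callbacks: Dict[int, Callable[[int], None]] = {}
        self.handled = 0

    def install_breakpoint(self, addr: int, callback: Callable[[int], None]) -> None:
        self.saved[addr] = self.memory[addr]  # remember the original byte
        self.memory[addr] = INT3              # patch in the INT3 trap
        self.callbacks[addr] = callback

    def on_int3(self, addr: int) -> None:
        self.callbacks[addr](addr)  # the plugin's callback runs first
        self.handled += 1           # then the core's own handling follows
        # (instruction repair, emulation, or an altp2m view switch would go here)


def mock_breakpoint_callback(addr: int) -> None:
    """Empty callback, mirroring the MOCK-Breakpoint plugins."""
    pass
```

Because the callback does nothing, any time measured for a breakpoint hit is attributable to the core's handling path and the VM transitions around it.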

After the VMI software has installed the breakpoint trap, the user starts the benchmark process in bpbench. The various workloads are applied to the selected breakpoint-handling configuration one after another. Depending on the workload, a CALL instruction jumps either directly to the RET instruction carrying the breakpoint or to the first NOP instruction of the written page, from which execution runs through the NOPs to the RET. In both cases, this triggers the hyper breakpoint to be measured. Immediately before and after the CALL instruction, a timestamp is taken via the Windows API function QueryPerformanceCounter. This process is repeated 200,000 times, and each individual time span is logged.
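The measurement loop can be sketched in Python as follows. As an assumption for portability, `time.perf_counter_ns` stands in for QueryPerformanceCounter, and an arbitrary callable stands in for the CALL into the prepared page; the function name is hypothetical.

```python
import time
from typing import Callable, List

ITERATIONS = 200_000  # repetitions per workload, as in bpbench


def measure_workload(workload: Callable[[], None],
                     iterations: int = ITERATIONS) -> List[int]:
    """Log one time span (in ns) per workload invocation."""
    spans: List[int] = []
    for _ in range(iterations):
        start = time.perf_counter_ns()  # stand-in for QueryPerformanceCounter
        workload()                      # stand-in for the CALL into the page
        end = time.perf_counter_ns()
        spans.append(end - start)
    return spans
```

Logging every span individually, rather than only a running total, is what later allows medians, minima, and outliers to be analyzed separately.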

Since one of the methods considered is instruction emulation, the question arises as to what extent the runtime of instruction emulation depends on the instruction being emulated. The execution time of emulation clearly depends on the instruction. Very simple instructions such as NOP or simple register assignments take much less time than complex instructions that involve memory accesses, because x86 instructions access memory through virtual addresses. During emulation, address translation, including possible page table walks, must be performed in software, which increases the emulation time significantly. This raises the question of whether the instruction we have chosen is complex enough to yield a representative, conservative estimate. We conclude that it is, since the return instruction involves both a jump and a memory access with virtual addressing when the return address is read from the stack.
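The following Python sketch shows why emulating RET is not trivial: the emulator must translate the stack address in software before it can read the return address. As simplifying assumptions, translation uses a single-level page table (real x86-64 uses a four-level walk), and `VCpu`, `translate`, and `emulate_ret` are hypothetical names.

```python
import struct
from dataclasses import dataclass
from typing import Dict

PAGE_SIZE = 4096


@dataclass
class VCpu:
    rip: int
    rsp: int


def translate(page_table: Dict[int, int], vaddr: int) -> int:
    """Simplified software address translation (one level instead of four)."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        raise LookupError(f"page fault at {vaddr:#x}")
    return page_table[vpn] * PAGE_SIZE + offset


def read_u64(phys: bytearray, page_table: Dict[int, int], vaddr: int) -> int:
    # Translate each byte separately, since an 8-byte read may cross a page.
    raw = bytes(phys[translate(page_table, vaddr + i)] for i in range(8))
    return struct.unpack("<Q", raw)[0]


def emulate_ret(cpu: VCpu, phys: bytearray, page_table: Dict[int, int]) -> None:
    """Emulate a 64-bit near RET: pop the return address from the stack into RIP."""
    cpu.rip = read_u64(phys, page_table, cpu.rsp)  # memory read via virtual address
    cpu.rsp += 8                                   # stack pointer moves past it
```

Even in this reduced form, a single RET requires a translation and a memory read in addition to the control-flow change, unlike a NOP.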

For the stealth-related workloads, bpbench does not jump into the page but reads from it, which should trigger the breakpoint-hiding mechanism, in which the VMI software handles the read operation specially. In these two workloads, bpbench reads the RET instruction and the entire page, respectively, 200,000 times each, measuring and logging the execution time of every read operation.

The execution-time measurements performed by bpbench are based on the difference between two timestamps taken immediately before the start and immediately after the end of the workload. The timestamps are obtained via the Windows API function QueryPerformanceCounter. The timestamps provided by Windows can be based on different time-source devices (TSC, LAPIC timer, HPET, ACPI PM timer, RTC). Windows automatically selects the most stable time source based on the platform conditions [25]. In virtualized environments, some time sources may be emulated devices. A timestamp query to such a device triggers a VM exit and is handled by the device emulation in the host or control VM, which adds delay and makes the timestamp less accurate. The preferred time source also depends on whether Windows runs within a VM or on bare metal: TSC is usually selected for bare-metal execution, whereas guest Windows in our XEN environment usually selected HPET. Regardless of whether the time-source device was emulated or a real counter circuit, the question arises as to whether the time source is virtualized. A virtualized time source measures VM time, which stops when the VM or vCPU is not running. Both real time sources, such as the TSC, and emulated counter devices can be virtualized. While a virtualized time source is well suited for measuring the performance of isolated guest software, it is useless for measuring execution time that spans different VMs and the host environment. It was therefore important to ensure that the timestamp source selected for each measurement was not virtualized. Furthermore, we had to ensure sufficient accuracy by checking that the resolution of the time source was significantly finer than the smallest measured time span. As a reference for the correctness and accuracy of our time source, we also determine the time required to query a timestamp via QueryPerformanceCounter and report this value in all our measurement results. We found that attached VMI software degrades the performance of device emulation, slowing down the retrieval of timestamps. In most cases, the time source was still fast enough for accurate measurement. In a few cases involving SmartVMI, however, the impact was so severe that the measurements had to be discarded (Section 7.3).
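The two sanity checks on the time source can be sketched in Python. This is an analogue, not the bpbench code: `time.perf_counter_ns` and `time.get_clock_info` replace the Windows API, and the factor of 10 used as "significantly finer" is our own assumption. Neither check can detect whether a time source is virtualized; that property had to be verified separately.

```python
import time
from typing import List


def timer_query_overhead_ns(samples: int = 10_000) -> List[int]:
    """Time two back-to-back timestamp queries, as a reference value."""
    spans = []
    for _ in range(samples):
        t0 = time.perf_counter_ns()
        t1 = time.perf_counter_ns()
        spans.append(t1 - t0)
    return spans


def resolution_is_sufficient(resolution_ns: float, smallest_span_ns: float,
                             margin: float = 10.0) -> bool:
    """Require the timer tick to be at least `margin` times finer than the
    smallest measured span (the factor 10 is an assumption)."""
    return resolution_ns * margin <= smallest_span_ns


# Reported tick resolution of the stand-in clock, converted to nanoseconds.
resolution_ns = time.get_clock_info("perf_counter").resolution * 1e9
```

A sharp rise in the reference overhead while VMI software is attached would be the kind of warning sign that led to discarding some SmartVMI runs.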

4.5. Workloads

We identify the following four specialized workloads, each focusing on a different aspect of the measured breakpoint-handling and -hiding implementations. Taken together, their results provide a full overview of the overhead associated with these implementations. The first two workloads, WL1 and WL2, focus on the execution of the breakpoint and its handling. The last two workloads, WL3 and WL4, measure the breakpoint-hiding methods by reading data from the page where the breakpoint is placed.

WL1: Execute the breakpoint. This workload measures how long the whole VMI stack takes to handle a breakpoint. This latency comprises multiple factors: VM transitions (exits and entries), processing in the hypervisor, communication between the hypervisor and the VMI application, and processing in the VMI application.

WL2: Execute the page with the breakpoint. Techniques such as altp2m change the EPT configuration of individual vCPUs, which could also affect caching and TLB performance. Such an effect may become noticeable when other instructions on the same page as the breakpoint are executed. Because WL1 does not capture this, WL2 measures the latency of executing the breakpoint together with the additional instructions (NOP) located on the same page.

In the rest of the paper, we use the tags introduced in Table 3 to designate which workload–breakpoint-approach combination a measurement belongs to.

WL3: Reading the breakpoint. The stealth-related breakpoint-hiding methods use EPT permissions to implement a read trap on the page where the breakpoint is located and therefore incur overhead for the same reasons as mentioned for WL1. This workload quantifies this latency by reading from the exact memory location where the breakpoint is placed.

WL4: Reading the page with the breakpoint. The read trap based on EPT permissions has page granularity: it not only intercepts and handles the read operation at the breakpoint address but also triggers for every other read operation on the page. This workload reflects this by performing read operations on all bytes of the page where the breakpoint is located. The assumption that code pages are hardly ever read does not hold in every case: code-integrity checks, such as those performed by KPP/PatchGuard, read entire code pages, which gives this workload a real use case.
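The consequence of page granularity can be quantified with a short Python sketch that counts intercepted reads. It assumes one-byte reads and a single trapped 4 KiB page; the function names are hypothetical.

```python
from typing import Iterable

PAGE_SIZE = 4096


def page_of(addr: int) -> int:
    return addr // PAGE_SIZE


def count_read_traps(breakpoint_addr: int, read_addrs: Iterable[int]) -> int:
    """Count reads intercepted by an EPT read trap on the breakpoint's page.
    The trap is page-granular, so every read on that page traps."""
    trapped_page = page_of(breakpoint_addr)
    return sum(1 for a in read_addrs if page_of(a) == trapped_page)
```

With the breakpoint on the last byte of a page, WL3 causes one trap per iteration, whereas WL4 causes 4096 traps per iteration, one for every byte of the page.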

As for the breakpoint-execution workloads, we use the tags introduced in Table 4 to designate which workload–breakpoint-approach combination a measurement belongs to.

6. Hardware Platforms

In this section, we describe the hardware platforms utilized for the hyper-breakpoint benchmark. Our selection encompasses Intel Core processors from the fourth generation onward, with the exception of the fifth generation, for which no representative CPU was available. To capture a broader performance spectrum, we also include comparatively weak devices, such as the Intel Core i3-6100 and the Intel Core i5-7300U, both of which are limited to two cores. The complete set of devices employed in the experiments is summarized in Table 6.

For all systems, we attempted to establish stable operating conditions for the processor by adjusting the firmware settings. As far as the corresponding CPU features were available on the devices and the UEFI firmware setup allowed them to be disabled, the following features were disabled:

all efficiency cores (on processors with performance and efficiency cores)

Simultaneous Multithreading (SMT), also known as Intel Hyper-Threading (Intel HT)

Intel SpeedStep

Intel Speed Shift

Intel Turbo Boost Mode

CPU Power Management

CPU Power-Saving Mode (C-states)

Not every system's firmware setup provided settings to disable the aforementioned features; on systems without the corresponding options, the features remained enabled. Table 7 provides an overview of which features could be disabled on which systems. In one case (ThinkPad T14 Gen3), SpeedStep was intentionally kept enabled (ENABLED) because of operational issues that arose when SpeedStep was disabled. In two other cases, SpeedStep could not be disabled, but a performance policy could be set via the firmware setup instead. In these cases, the processor performance was set to maximum (max perf.).

6.1. Special Cases

Intel NUC7i5DNHE (7th gen i5 7300U)

- Processor model has only two cores (0,1).
- We chose to run system threads on core 0 and the VM, VMM process, and VMI software on core 1.
- The system has only 8 GB of main memory, so we had to reduce the memory of both VMs, giving 4 GB to the control VM (Dom0) and 3.6 GB to the Windows VM (DomU).

Fujitsu ESPRIMO D757 (6th gen i3 6100)

- Processor model has only two cores (0,1).
- Similar pinning and memory configuration as for the previous device.

Lenovo ThinkPad L14 Gen3 (12th gen i7 1265U)

- Processor model has only two performance cores (0,1) and eight efficiency cores (2,3,4,5,6,7,8,9).
- The control VM (Dom0) was running on CPUs 0,1,2,3 (two performance cores, two efficiency cores).
- SmartVMI and DRAKVUF were running on CPU 1 (performance core).
- The Windows VM with bpbench and the VMM were running on CPU 2 (efficiency core).
- All other system processes were pinned to CPUs 0,1,3.

InfinityBook Pro Gen8 (13th gen i7 13700H)

- Hyper-Threading (SMT) could not be disabled via the firmware setup.
- We ensured that only one logical CPU of each HT core was used by assigning only the first logical CPU of each core to the VMs via the XEN configuration, so that Hyper-Threading was effectively not used.

6.2. XEN Performance Adjustments

Because the firmware settings (SpeedStep and power management disabled) could not guarantee that all processor models consistently ran at their base clock speed, we additionally tried to fix the clock speed at the base clock speed via the performance settings of the XEN hypervisor to prevent any form of dynamic clock-speed adjustment. This worked for most models on which the firmware-setup approach had failed. Details can be found in Table 8.

7. Measurements

As described in the previous sections, the time measurements for the four breakpoint-handling methods and two breakpoint-hiding mechanisms were performed with the four workloads on all 20 systems. Even though not all CPU features could be disabled on every system, the observed relative ordering of breakpoint implementations was consistent across all 20 platforms. This suggests that residual features such as SpeedStep or Turbo Boost may shift absolute timings slightly but cannot explain the systematic ranking, so the performance differences are indeed attributable to the breakpoint mechanisms.

7.1. Initial Analysis and Filtering

Across all measurement results, workload WL2 (“executing a page with a breakpoint”) consistently shows values almost identical to those of WL1 (“executing the breakpoint”) for all four breakpoint-handling variants. Since WL2 differs from WL1 only in the execution of 4095 additional NOP instructions, the near-identical results indicate that neither these instructions nor possible EPT-related caching or TLB effects add noticeable latency; we therefore omit WL2 from subsequent plots.

A further observation is that the timer overhead (column 1) is generally very low, typically between 0.5 µs and 2.5 µs on standard CPU cores or performance cores. An exception occurs on the ThinkPad L14 (i7-1265U), where the Windows VM must run on an efficiency core, resulting in slightly higher values. For every bpbench run and method, the timer overhead is remeasured. The values of the two DRAKVUF runs (altp2m, altp2m_fss) and the two SmartVMI runs (instr_rep, instr_emul) are always very close to each other, but differences exist between DRAKVUF and SmartVMI. Therefore, timer overhead values can be merged only per VMI application and per system.

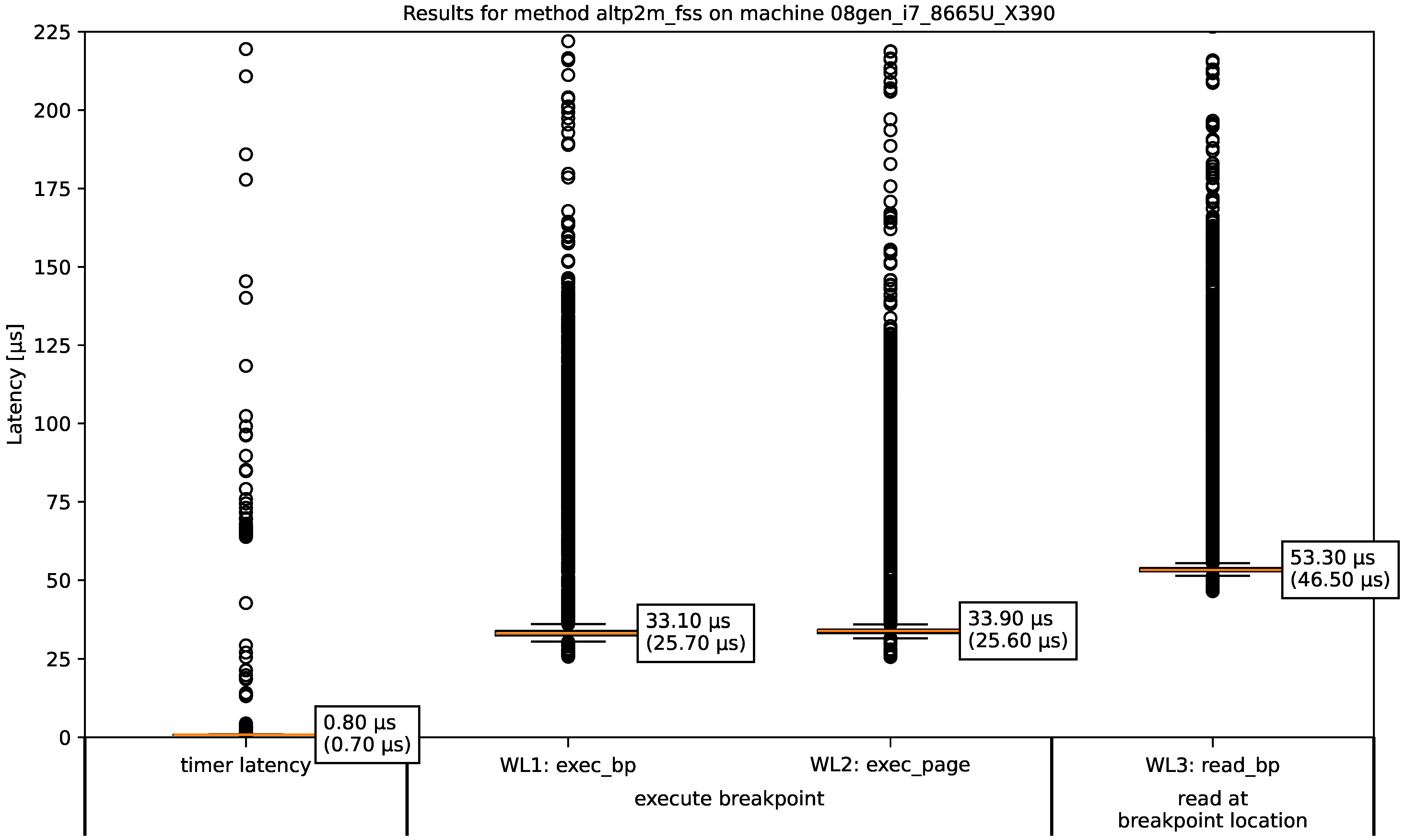

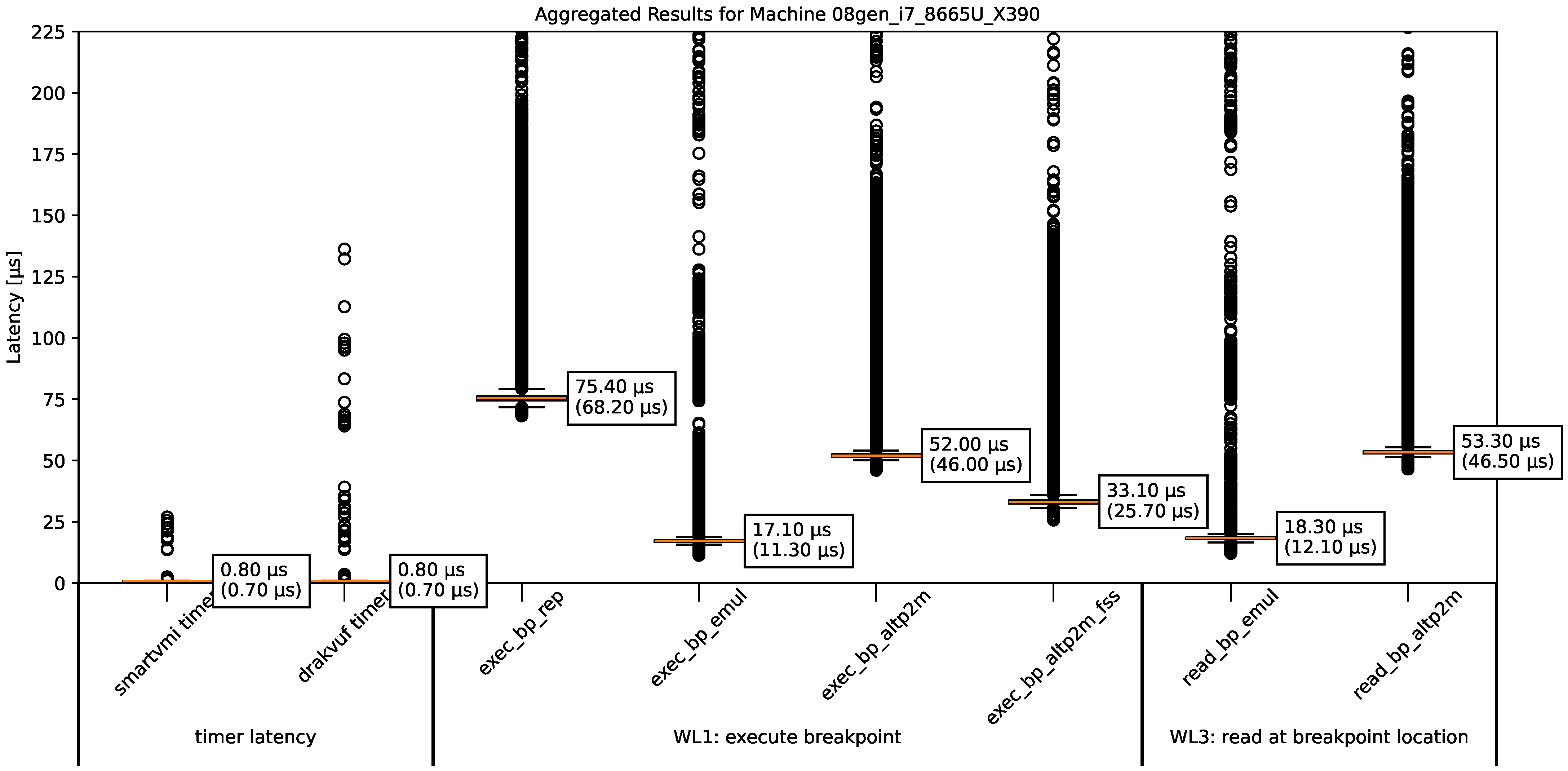

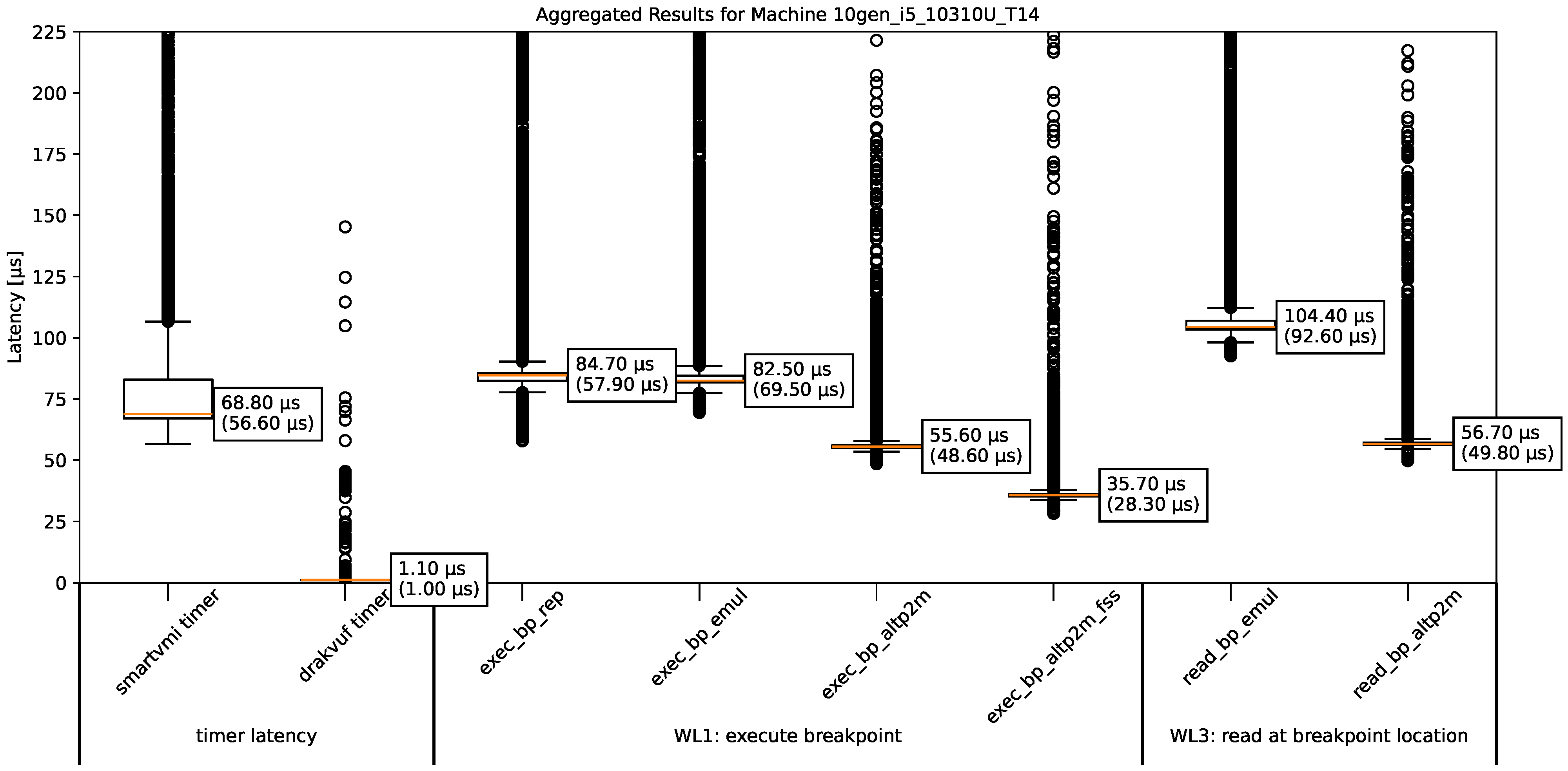

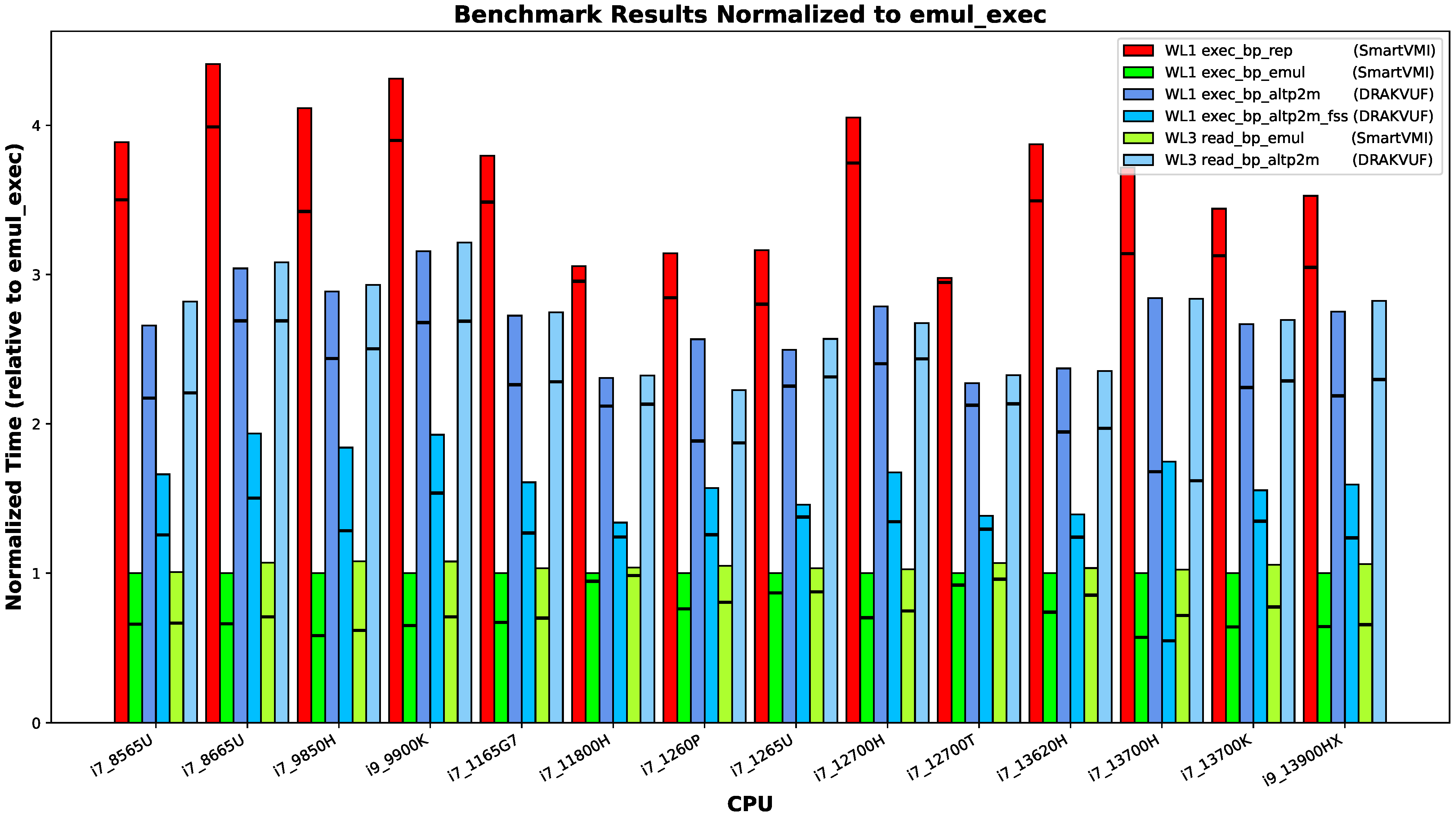

The boxplot in the figure illustrates the measured latencies of workloads WL1 (column 2), WL2 (column 3) and the stealth-related workload WL3 (column 4). Workload WL4 (“read a whole page with a breakpoint”) is not plotted, since reading the entire page through 4096 VMI-intercepted one-byte operations leads to latencies in the millisecond range, which cannot be meaningfully displayed with the other measurements. Column 1 serves as a reference, showing how long it takes to query the timestamp source.

The boxes indicate the interquartile range (IQR), enclosing the middle 50% of the values. An orange line inside each box marks the median. The whiskers extend to the smallest and largest values within 1.5 times the IQR beyond the box edges, while any values outside this range are plotted individually as outliers (circles). In all boxplots, very large outliers are omitted from the plot because they exceed the scale by several orders of magnitude. These stem from interruptions or context switches inside the Windows VM that pause bpbench’s execution. Nevertheless, all values, including outliers, are used to compute the IQR and median. Next to each box, the median and the minimum value (in brackets) are displayed, with the latter representing the best-case technical limit of the respective method.
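The boxplot statistics described above can be reproduced with the Python standard library. This sketch assumes linearly interpolated quartiles (the `"inclusive"` method of `statistics.quantiles`); the exact quartile convention of the plotting tool used for the figures is not stated, so values may differ slightly. Note that, as in the figures, all values, including outliers, enter the quartile computation.

```python
import statistics
from typing import Dict, List


def box_stats(values: List[float]) -> Dict[str, object]:
    """Quartiles, median, 1.5*IQR whiskers, outliers, and minimum."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "iqr": iqr,
        "whisker_low": min(inside),    # smallest value within the fences
        "whisker_high": max(inside),   # largest value within the fences
        "outliers": [v for v in values if v < low_fence or v > high_fence],
        "minimum": min(values),        # best-case technical limit (in brackets)
    }
```

For the samples `[10, 11, 12, 13, 14, 1000]`, the quartiles are 11.25, 12.5, and 13.75; the single large value (for example, a span interrupted by a context switch) becomes an outlier without shifting the median much.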

7.2. Comparison of the Breakpoint Methods

We can see the following from the measurement results:

The breakpoint-handling mechanism using SLAT view switching (exec_bp_altp2m, exec_page_altp2m) incurs the same temporal costs as the SLAT-based breakpoint-hiding method (read_bp_altp2m, read_page_altp2m). This is expected, since both rely on the same operations (EPT switch, single-step execution, EPT switch back).

Xen’s fast single-step extension (FSS) makes breakpoint handling considerably faster. The difference between exec_bp_altp2m and exec_bp_altp2m_fss essentially reflects the cost of switching from Xen to DRAKVUF and back again, which FSS avoids. Since FSS optimizes only breakpoint handling, the stealth-related read-trapping mechanism is unaffected, and thus read_bp_altp2m shows the same results regardless of whether FSS is enabled.

The instruction-repair method (exec_bp_rep) is considerably slower than the SLAT view-switching variant (exec_bp_altp2m). This matches our expectations, as both approaches require the same number of transitions, but instruction repair additionally performs a guest memory write, which appears to be more costly than VMCS manipulation.

The emulation of the original instruction as a breakpoint-handling method (exec_bp_emul) is roughly as fast as stealth-related read emulation (read_bp_emul) on all machines, which is reasonable.

The timer latencies were determined during each run of bpbench for each method. They are all very low and similar across all platforms, and therefore are not shown separately. Instead, we have combined them into a common timer latency dataset.

Each time bpbench is run, all four workloads are executed. Because bpbench is run once per breakpoint-handling method, this results in four sets of measurements for the breakpoint-hiding workloads (WL3, WL4) on each machine. Since only two breakpoint-hiding mechanisms exist in our experiments with the selected VMI software, some mechanisms appear twice. We present only read_bp_altp2m, since DRAKVUF with and without fast single-stepping produces identical results (fast single-stepping is not implemented for breakpoint hiding). Similarly, only read_bp_emul is shown, because it is the only breakpoint-hiding method implemented in SmartVMI. A breakpoint-hiding mechanism using instruction repair does not exist.

7.3. SmartVMI Anomalies

Before comparing the hardware platforms, we need to investigate some anomalies. As a representative example, Figure 7 presents the aggregated measurement results for the i5-10310U.

We can see that the timer latency with SmartVMI is very high; even the lowest measurement is roughly equal to the median value of the SLAT view-switching breakpoint-handling methods (altp2m). This is the case even though no breakpoint is triggered when determining the timer latency. SmartVMI also registers handlers for CR3 writes (context switches) to disable process-specific breakpoints for inactive processes. We suspect, but cannot confirm, that this causes the problem. In these runs, the measurements of the SmartVMI-based breakpoint-handling methods also differ markedly from those of runs with normal timer latencies. We therefore have to discard them, because we cannot determine how much of the measured time is spent on breakpoint handling and how much on the timestamp queries before and after workload execution. This mostly affects older and lower-performance processor models, such as those of the i5 and i3 series, but the Intel Core i7-9750H is affected as well, even though it falls into neither category.

7.4. Measurements on All Hardware Platforms

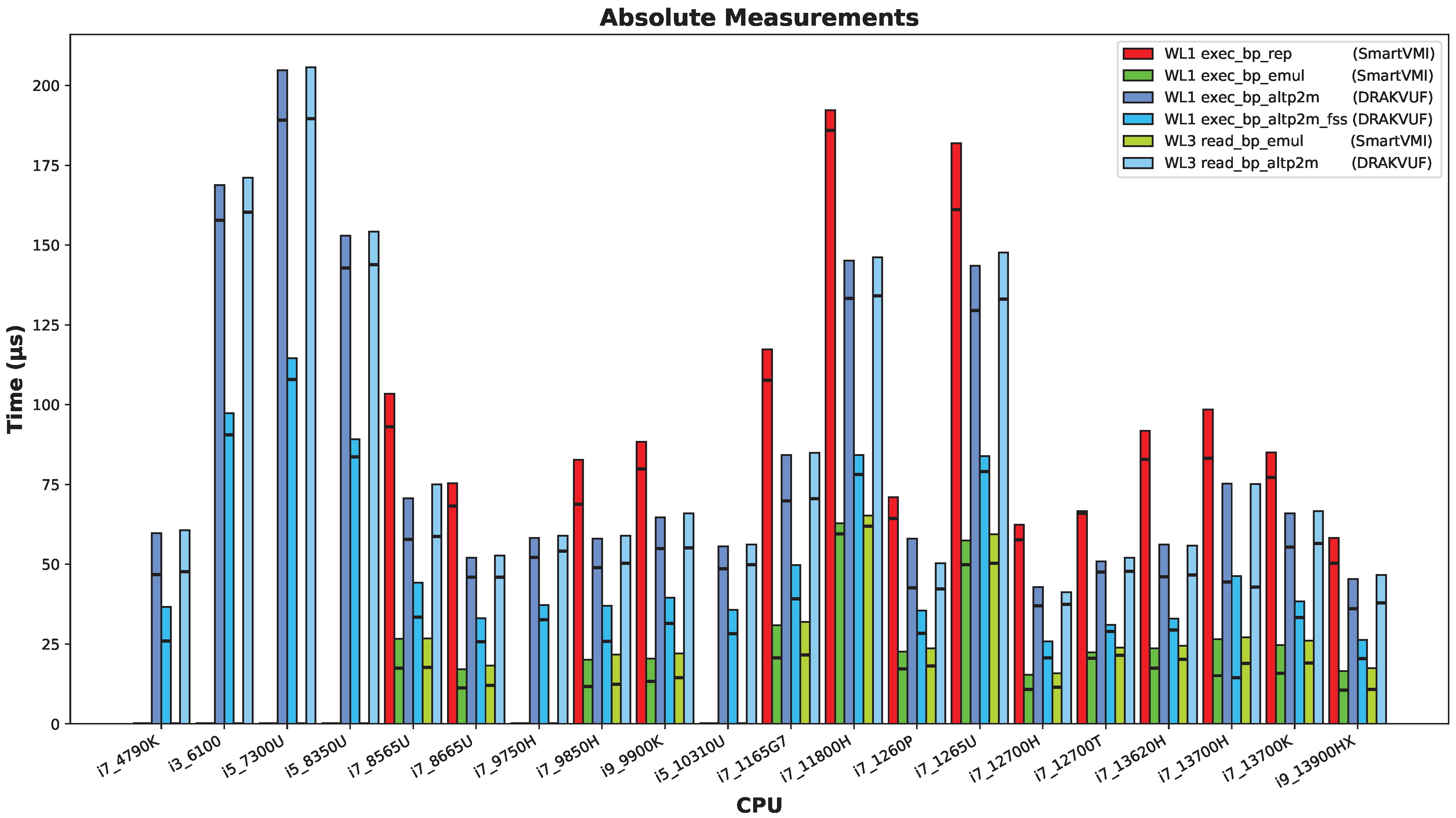

To find out how different processor models influence the measurement results, we aggregate the results of the individual measurements for each machine in Figure 8. The aim here is not to compare the hardware platforms with each other but to determine whether the pattern found in the method comparison holds across different systems or changes visibly.

As described in Section 7.3, a few SmartVMI runs showed very high timer latencies, which rendered the results unusable. We exclude such SmartVMI results whenever the timer latency appears suspicious.

The results shown are absolute (raw) values of the measured time spans.

The height of each bar indicates the median of all measurement points of a workload run. The horizontal black line within each bar marks the minimum measured time, i.e., the technical limit of each measurement.

Since the exec_bp_emul mechanism was the fastest in all experiments on all machines, it can be used as a baseline for normalizing the time measurements of all methods. However, this requires removing from the dataset all machines for which no valid measurements are available for the exec_bp_emul method because of suspicious timer latencies, as discussed in Section 7.3. Figure 9 displays the normalized results.
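The normalization step can be sketched in Python. We assume here that per-method medians are divided by the exec_bp_emul median of the same machine, consistent with the bars showing medians; whether the figures normalize medians or individual samples is our assumption, and the nested-dictionary layout is hypothetical. Method tags follow the paper's naming.

```python
import statistics
from typing import Dict, List

# machine -> method tag -> raw samples (hypothetical layout)
Results = Dict[str, Dict[str, List[float]]]


def normalize_to_baseline(results: Results,
                          baseline: str = "exec_bp_emul") -> Dict[str, Dict[str, float]]:
    """Divide each method's median by the baseline median on the same machine.
    Machines without valid baseline samples are dropped entirely."""
    normalized: Dict[str, Dict[str, float]] = {}
    for machine, methods in results.items():
        base_samples = methods.get(baseline)
        if not base_samples:  # e.g., discarded because of suspicious timer latency
            continue
        base_median = statistics.median(base_samples)
        normalized[machine] = {
            tag: statistics.median(samples) / base_median
            for tag, samples in methods.items() if samples
        }
    return normalized
```

After normalization, the baseline method equals 1.0 on every remaining machine, so the other values read directly as multiples of the emulation cost.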

Turning to common patterns across systems, we can confidently state that instruction emulation incurs lower overhead than SLAT view switching (altp2m) because it avoids the single-stepping overhead. Both SLAT view-switching variants, with and without fast single-step acceleration (altp2m_fss vs. altp2m), are slower than emulation; in every case, however, enabling fast single-stepping (FSS) makes the procedure faster. The instruction-repair mechanism consistently shows the highest overhead. These relative differences are remarkably consistent across all tested platforms.

However, the exceptions among the machines are also clearly visible in the diagram. Both the Intel Core i3-6100 and the Intel Core i5-7300U exhibit relatively slow performance. These CPUs each have only two cores, which prevents us from applying the default pinning scheme (core 1 for the VMI software, core 3 for the VM and VMM processes, and all other system threads on cores 0 and 2); instead, the VM, the VMM process, and the VMI software share core 1. On other machines, we observed performance degradation when the VM and VMI software were pinned to the same core. The Intel Core i7-1265U runs the VM on an efficiency core, which, as expected, explains its comparatively lower speed. For the Intel Core i7-11800H, however, the cause of its performance behavior remains unclear; since it was connected to external power during measurement, battery power management can be ruled out.

7.5. Does Hardware Advancement Have an Effect?

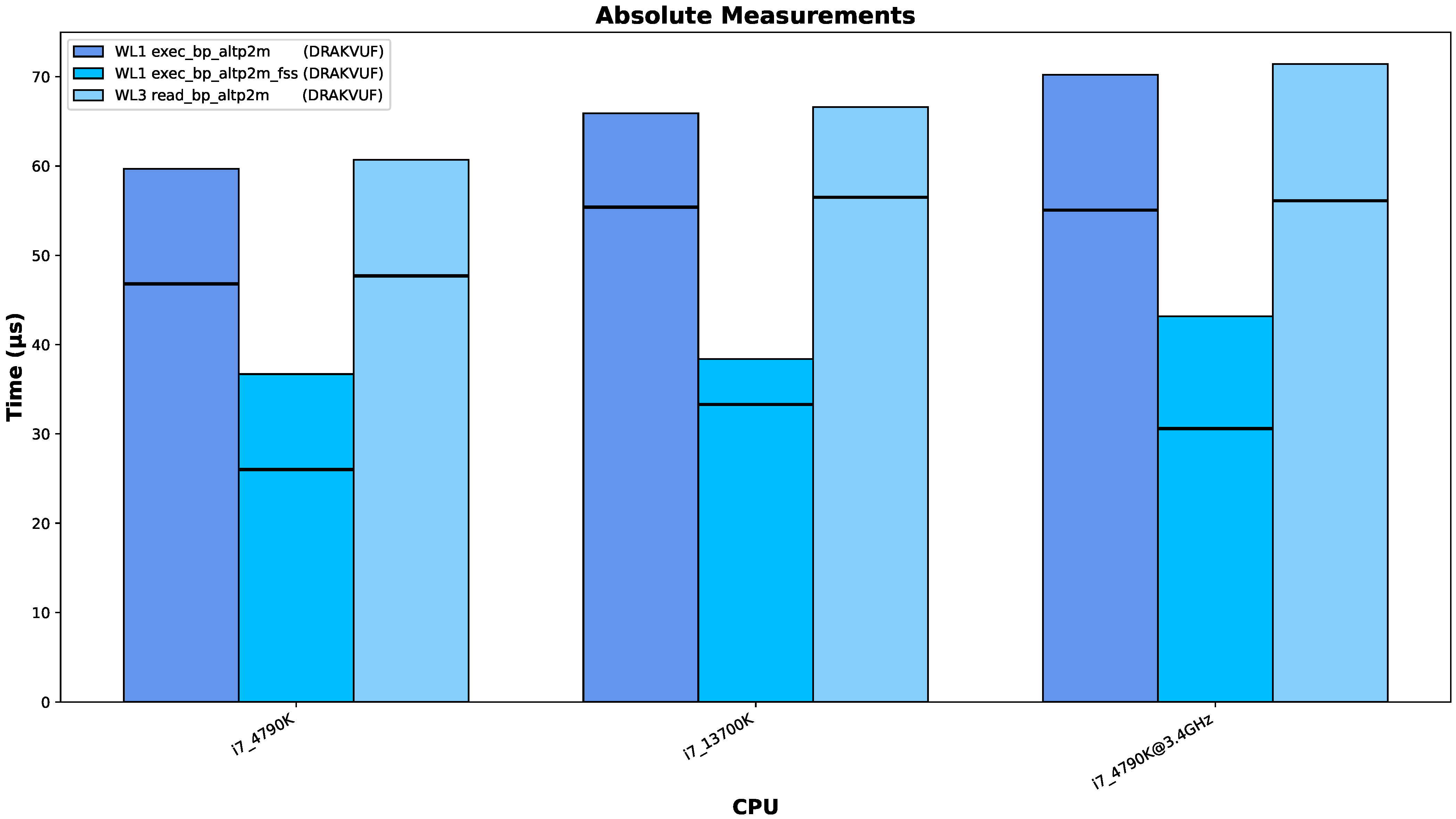

We aimed to investigate whether advancements in processor architecture have led to improvements in the performance of breakpoint-handling and -hiding methods and whether these developments result in changes to the observed outcomes.

However, direct comparisons prove challenging in many cases, as laptops typically employ aggressive speed-stepping techniques and often operate at reduced frequencies. Additionally, disabling Intel Turbo Boost appears to be rarely supported on newer models. Furthermore, querying the actual CPU frequency with standard Linux tools is not easily feasible in our setup: we run our VMI tools within the control VM (Dom0) and our measurement tool inside another VM, so we can observe only virtual CPU information, which does not reflect true hardware frequencies. For these reasons, we limit our comparison to a small subset of processors for which we can ensure comparability. Specifically, we select the Intel Core i7-4790K and Intel Core i7-13700K as representative examples; both systems have Turbo Boost disabled and operate at fixed frequencies of 4 GHz and 3.4 GHz, respectively. Intel has clearly improved the CPU architecture in terms of computational performance. The thirteenth-generation processor more than makes up for its clock-speed deficit, probably through a combination of higher instructions per cycle (IPC) and better branch prediction, achieving a single-core sysbench score of 2921, while the fourth-generation processor achieved only 1292 points. However, these speed-ups do not carry over to breakpoint-handling performance.
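A short worked ratio puts these scores in perspective. Under the simplifying assumption (ours, not a claim derived from the measurements) that a single-core sysbench score scales with clock frequency times per-clock throughput, the raw and clock-adjusted advantages of the 13700K over the 4790K are roughly:

$$
\frac{2921}{1292} \approx 2.26, \qquad
\frac{2921 / 3.4\,\mathrm{GHz}}{1292 / 4.0\,\mathrm{GHz}} \approx \frac{859}{323} \approx 2.66
$$

The first ratio compares raw scores; the second removes the clock-speed difference between the two processors.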

Figure 10 shows the same measurements for the two processors as Figure 8, but in a form that is easier to compare visually. Additionally, the bars on the right show the times of the 4790K as if the CPU ran at 3.4 GHz, assuming that performance scales linearly with clock speed. The 4790K slightly outperforms the 13700K in the EPT-switching-based workloads. Compared with the down-scaled 4790K, however, the 13700K is faster, at least in the median results.
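The linear-scaling assumption behind the added bars can be written out as follows, where $t$ denotes a measured time on the 4790K. This is an idealization, since not every operation involved (for example, memory or device accesses) necessarily scales with the core clock:

$$
t_{3.4\,\mathrm{GHz}} = t_{4.0\,\mathrm{GHz}} \cdot \frac{4.0\,\mathrm{GHz}}{3.4\,\mathrm{GHz}} \approx 1.18 \cdot t_{4.0\,\mathrm{GHz}}
$$

For instance, a hypothetical median of 10 µs at 4.0 GHz would correspond to about 11.8 µs at 3.4 GHz.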

From these measurements and calculations, we cannot conclude whether there are architectural speed-ups for the crucial operations: VM context switches, EPT switches, and single-stepping. If there are speed-ups, they are only marginal, especially compared to the increase in compute performance.

8. Conclusions

This section concludes this paper. In Section 8.1, we summarize the main takeaways from the paper, before we discuss planned and potential future work in Section 8.2.

8.1. Summary and Discussion

In this work, we present a measurement study that compares approaches to handling and hiding VMI breakpoints. Our results give VMI developers a clear, evidence-based hierarchy of breakpoint techniques to prioritize for performance-critical applications.

In the course of our work, we created a portable OS image containing Ubuntu, Xen, DRAKVUF, SmartVMI, and a Windows 10 VM with bpbench. We contribute this image to give VMI researchers the tool set to reproduce our measurements and benchmark other systems in the same way. To obtain the data needed to answer our research question, we configured the UEFI firmware for more consistent measurements on a range of devices with various Intel Core CPUs from the fourth to the thirteenth generation, booted the image, and performed the breakpoint-benchmark measurements for all handling and hiding approaches.

We stated the research question that led to the creation of this work in the introduction: How do the existing breakpoint implementations compare in terms of performance?

Fortunately, our measurement results now allow us to give a conclusive answer to this question. On all measured CPUs, instruction emulation is the fastest breakpoint-handling approach, followed by EPT switching with fast single-stepping (FSS), EPT switching with normal single-stepping, and, finally, instruction repair in memory. For keeping breakpoints stealthy, read emulation is consistently faster than switching EPT tables and single-stepping the reading instruction.
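This ranking can serve directly as a selection order when a VMI tool supports several approaches. The sketch below picks the fastest supported approach from the measured ordering; the string labels and the `pick_fastest` helper are hypothetical and do not correspond to configuration names in DRAKVUF, SmartVMI, or LibVMI.

```python
from typing import Iterable, Optional, Tuple

# Breakpoint-handling approaches, fastest first, as measured on all CPUs.
# Labels are hypothetical, not tool configuration names.
HANDLING_RANKING: Tuple[str, ...] = (
    "instruction_emulation",
    "ept_switching_fast_single_step",
    "ept_switching_single_step",
    "instruction_repair",
)

# Breakpoint-hiding approaches, fastest first.
HIDING_RANKING: Tuple[str, ...] = (
    "read_emulation",
    "ept_switching_single_step_read",
)


def pick_fastest(supported: Iterable[str], ranking: Tuple[str, ...]) -> Optional[str]:
    """Return the highest-ranked approach that is supported, or None if none is."""
    available = set(supported)
    for approach in ranking:
        if approach in available:
            return approach
    return None


# All handling approaches available, as in the Xen setup used for the measurements.
print(pick_fastest(HANDLING_RANKING, HANDLING_RANKING))  # instruction_emulation
# Only instruction repair available.
print(pick_fastest({"instruction_repair"}, HANDLING_RANKING))  # instruction_repair
```

For example, on a KVM setup where, as described in Section 8.2, only SmartVMI's instruction repair is available for breakpoint handling but read emulation works, the helper falls back to `"instruction_repair"` for handling and still selects `"read_emulation"` for hiding.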

Some devices did not allow us to disable TurboBoost technology. Additionally, because of the Xen layer, we had no feasible way of measuring the actual clock speeds, and the CPUs all came with different base frequencies and boosting behavior. All these factors make it difficult, if not impossible, to distinguish between the influences of CPU architecture and clock speed. Comparing the two desktop processors i7-4790K and i7-13700K, which we can be reasonably certain were running at their base frequencies, we could see that while the prime-number computation performance (as measured by sysbench) increased significantly, breakpoint handling was affected only marginally, if at all.

Since the methods do not change when a different hypervisor is used, the trend, and thus the performance ranking of the methods, should be the same on other hypervisors. However, specific values cannot be transferred, because the overhead varies greatly, especially between Type 1 and Type 2 hypervisors.

8.2. Future Work

In this paper, we considered only the Xen hypervisor because the breakpoint approaches either already existed for Xen or were easy to implement (SmartVMI instruction emulation). The only working breakpoint implementation for introspection with KVM is SmartVMI's default instruction-repair mechanism. DRAKVUF is not compatible with KVM, and we are unsure how much work it would take to make the two compatible. According to the maintainer, SmartVMI supports EPT switching, so implementing DRAKVUF's altp2m approach in SmartVMI should be possible (see https://github.com/GDATASoftwareAG/smartvmi/issues/140#issue-2046303351, accessed on 2 September 2025). SmartVMI's instruction emulation, which we implemented for Xen, does not directly work with KVM because LibVMI lacks an instruction-emulation implementation for KVM. However, SmartVMI's read emulation is functional with KVM, so we hope that the required changes in LibVMI and KVMI are small and that we will be able to implement instruction-emulation-based breakpoint handling for KVM soon.

Finally, we want to improve the deployment of VMI infrastructure. Our prepared image for the measurements has a size of 18 GB. It has to be stored on a file server for sharing, and making changes or updates is a tedious process (deploying the image on a system, booting, updating, and recreating a compressed image). Ideally, all components would be packaged with Nix, so that we could simply share a NixOS configuration or NixOS module on GitHub that reproducibly builds the OS locally and allows for simple configuration changes. Xen and SmartVMI are already usable with Nix, so DRAKVUF is the main component that still lacks a Nix package.