1. Introduction

In underground mining, technological growth is reflected in the real-time monitoring of mobile assets, a practice that optimizes operational efficiency, equipment condition, and overall performance [1]. However, these advancements contrast with persistent challenges, such as the deterioration of mine haul roads, whose deficiencies cause premature wear on tires and structural components, while also reducing equipment reliability and productivity [2]. These issues are exacerbated by unplanned downtime, which significantly impacts production and generates substantial economic losses [3].

Although much of the literature focuses on open-pit mining, where the low efficiency of haul trucks directly affects economic indicators [4], the underground context faces equally critical challenges. The absence of predictive maintenance (PdM) in this field leads to suboptimal operational decisions, a lack of strategic planning, and, consequently, high maintenance costs alongside decreased equipment reliability [5].

A promising solution lies in implementing PdM through advanced techniques, such as artificial intelligence (AI) models. Notably, the low availability of mining equipment can drastically disrupt the operational chain, especially when maintenance costs account for 35% to 50% of the mine’s total budget [6]. Even with conventional preventive and predictive maintenance strategies, the recurrence of failures continues to impact operations, underscoring the need for more sophisticated, data-driven approaches.

The low availability of equipment in underground mining stems directly from the lack of advanced failure analysis techniques and poor planning/implementation of maintenance strategies. Adopting innovative approaches in this area would optimize operational costs, extend equipment lifespan, and ultimately increase availability for critical operations [7]. While conventional strategies like Preventive Maintenance (PM), Corrective Maintenance (CM), and Predictive Maintenance (PdM) have proven their ability to enhance equipment reliability and reduce costs [8], their effectiveness is compromised by two key factors: (i) the absence of real-time monitoring systems and (ii) the lack of historical records integrated into computerized maintenance management systems (CMMSs). This operational gap results in inadequate equipment condition supervision and, ultimately, suboptimal maintenance decisions [9].

The progressive deterioration of equipment operational condition associated with these limitations highlights the critical need to implement PdM techniques based on advanced models (e.g., machine learning or vibration analysis). These technologies would not only enable more accurate failure prediction but also ensure operational continuity through proactive, data-driven interventions.

Recent research in predictive maintenance (PdM) has developed diverse methodologies applicable to mining equipment. For instance, a hybrid model combining metaheuristic algorithms to predict failure time in mining machinery has been proposed, achieving exceptional accuracy (R² = 0.99) [10]. Similarly, data mining techniques have been applied to haul trucks in open-pit mining, enabling the diagnosis of critical failures and prediction of their remaining useful life (RUL) [11].

In underground mining environments, autoregressive fault detection methods have demonstrated effectiveness in monitoring electrical machinery, improving reliability while reducing operational costs [12]. Concurrently, Lubricant Condition Monitoring (LCM) has emerged as a key strategy for maintenance diagnosis and prognosis. By analyzing lubricant properties (such as wear particles, viscosity, and sulfur content), LCM facilitates data-driven decision-making [13]. On the other hand, recent studies show that 3D laser scanning outperforms ultrasonic testing in predicting lining wear in mining ball mills [14]. Additionally, the integration of Industry 4.0 technologies with predictive maintenance (PdM) in mining improves not only efficiency and profitability but also the accuracy of fault detection, offering key guidelines for its implementation [15]. These findings support the superiority of PdM over traditional corrective or preventive approaches, as demonstrated in systematic reviews of the area [16].

The most advanced LCM systems employ inline sensors (capacitive, inductive, acoustic, and optical) to proactively assess machine health [17]. Among the most relevant parameters are:

Temperature anomalies, indicating excessive friction or inadequate lubrication.

Viscosity deviations, reflecting lubricant degradation or contamination.

Metallic particle concentration, signaling wear in critical components.

These indicators enable early fault detection, allowing interventions before catastrophic failures occur. In parallel, the Industrial Internet of Things (IIoT) has gained particular relevance in predictive maintenance, as it enables real-time data collection, a fundamental requirement for developing robust predictive models [18]. These data streams, which include operational variables and component states, not only facilitate failure anticipation through trend analysis but also optimize intervention scheduling, significantly reducing unplanned downtime.

This trend of adopting advanced PdM techniques extends beyond the mining sector, as evidenced by the broader literature. PdM is recognized for its ability to predict failures and optimize mining equipment operation through early fault detection. Unlike Preventive Maintenance (PM), which prevents failures, and Corrective Maintenance (CM), which acts after failures occur, PdM offers a proactive solution. Numerous studies have proposed advanced predictive techniques to enhance mining equipment performance. For instance, the Internet of Things (IoT) was used to monitor equipment in a coal mine, achieving higher operational efficiency [19].

Other studies recommend implementing real-time condition monitoring for ore haul trucks, reducing equipment downtime and maintenance costs [8]. However, researchers argue that PM and CM are insufficient for critical equipment, especially in mineral concentration plant mills, and propose PdM with mathematical algorithms to optimize maintenance for such assets [16]. Additionally, laser scanning has been employed to predict wear in ball mill linings [14], while PdM was used to forecast fatigue fractures in mining truck turbocharger shafts [20].

A hybrid model for mining equipment achieved a 10.9% reduction in fuel consumption [21]. Meanwhile, integrating PdM with the Weibull distribution improved sustainability and resource optimization in maintenance planning [22]. To increase availability, reduce maintenance costs, and extend equipment lifespan, combining PdM with other techniques is essential [23].

Machine learning provides more detailed and interpretable results for decision-making, significantly improving spare parts management [24]. Specifically, AI enables the prediction of critical moments in underground mining machinery operation, such as sudden turns and dynamic overload analysis [25]. Techniques like logistic regression and Random Forest have proven effective in this context. Furthermore, digital twins have demonstrated higher fault prediction accuracy, outperforming conventional models like CNNs and LSTMs [26].

The integration of Digital Twins (DTs) and IoT with PdM has transformed manufacturing, optimizing production by up to 30% and reducing costs by 40% [27]. These technologies enable real-time monitoring, advanced simulations, and predictive decision-making, enhancing supply chain resilience and visibility in the Industry 4.0 era. In the same sector, Explainable AI and sensor fusion with techniques like Random Forest and Fast Fourier Transform (FFT) achieved 95% fault detection accuracy, reducing downtime and improving operational efficiency while bolstering supply chain resilience [28].

In the mechanical industry, a deep learning and Wavelet Transform approach was applied to analyze gearbox health, detecting faults with 97.11% accuracy and surpassing traditional methods while improving reliability and reducing costs and downtime [29]. Deep learning is revolutionizing multiple sectors. In the energy sector, it optimizes the use of second-life batteries to maximize profits [30]. Similarly, in networks, it enhances dynamic routing without human intervention [31]. In automotive manufacturing, a DT-based methodology incorporating data-driven maintenance prioritization, genetic optimization, and dispatching rules optimized maintenance task allocation, reducing idle time and boosting production line efficiency [32].

Recent literature reports significant advances in maintenance strategies across multiple sectors. In manufacturing, maintenance policies have been optimized by incorporating energy costs and emissions, leading to the development of energy-based maintenance (EBM) approaches. Through the use of digital twins, such methods have achieved cost reductions of up to 38%, demonstrating the potential of digital technologies to integrate sustainability criteria into asset management [33]. In the field of energy infrastructure, condition-based monitoring (CBM) has been shown to enhance the resilience of multi-energy systems to failures, thereby reducing unplanned downtime, an equally critical principle for underground mining machinery [34]. Furthermore, several studies have identified a strong correlation between digitalization and sustainable maintenance (SM), highlighting the capacity of digital tools to overcome organizational barriers and underscoring the need for enabling frameworks [35]. Pioneering research has also optimized maintenance scheduling by simultaneously considering costs, carbon footprint, and technical fatigue through Deep Reinforcement Learning, thereby laying the foundation for SM [36]. In photovoltaic generation, the implementation of non-periodic maintenance policies has reduced costs by up to 21.4% while increasing availability, providing evidence of the superiority of condition-based models [37]. Finally, digital twin prototypes that integrate machine learning have been developed to prioritize actions based on sustainability and safety criteria, advancing the broader objective of SM-oriented decision-making [38].

Despite notable advancements, two critical research gaps remain: (1) no existing study integrates field-acquired sensor data (such as pressure, temperature, and vibration) with oil analysis through artificial intelligence techniques specifically applied to underground mining equipment; and (2) existing models fail to adequately address data processing and fusion under highly dynamic and adverse operating conditions, including extreme temperatures, high humidity, airborne particulate matter, oxygen-deficient environments, and restricted continuous data acquisition. To overcome these limitations, this study proposes an innovative methodological framework that integrates multiple machine learning techniques to enhance predictive maintenance strategies in underground mining operations, with the following objectives:

Improve accuracy in early fault detection.

Optimize maintenance resource allocation.

Reduce operational costs by minimizing unplanned stoppages.

In contrast to the studies reviewed [10,11,12,13,14,15,16], this is the first work to apply and validate this integrated approach specifically within the context of underground mining, demonstrating tangible improvements in the reliability of critical equipment such as diesel engines and hydraulic systems.

2. Materials and Methods

Despite the advances in PdM applied in sectors such as manufacturing, energy, and automotive, a significant gap is identified in its implementation within the context of underground mining. This gap is particularly evident regarding the prediction of critical component wear through oil analysis and operational variables. Although existing studies have employed AI to improve operational efficiency and reduce machinery failures, the specialized literature reveals a limited direct application of these approaches in real-world underground mining conditions. In this environment, factors such as humidity, temperature, accessibility, and limited data availability hinder the deployment of robust predictive systems. In this context, the present study poses the following hypothesis: the application of artificial intelligence models trained with historical data from oil analysis and operational variables can reliably anticipate the wear of critical components, allowing for the adjustment of maintenance frequency and a reduction in unexpected failures in underground mining equipment. This hypothesis guides the methodological structure of the work and enables an evaluation of the real-world applicability of ML techniques in complex and demanding industrial environments like mining.

For this study, historical data on mining machinery failures will be utilized, including oil analysis reports and equipment failure records.

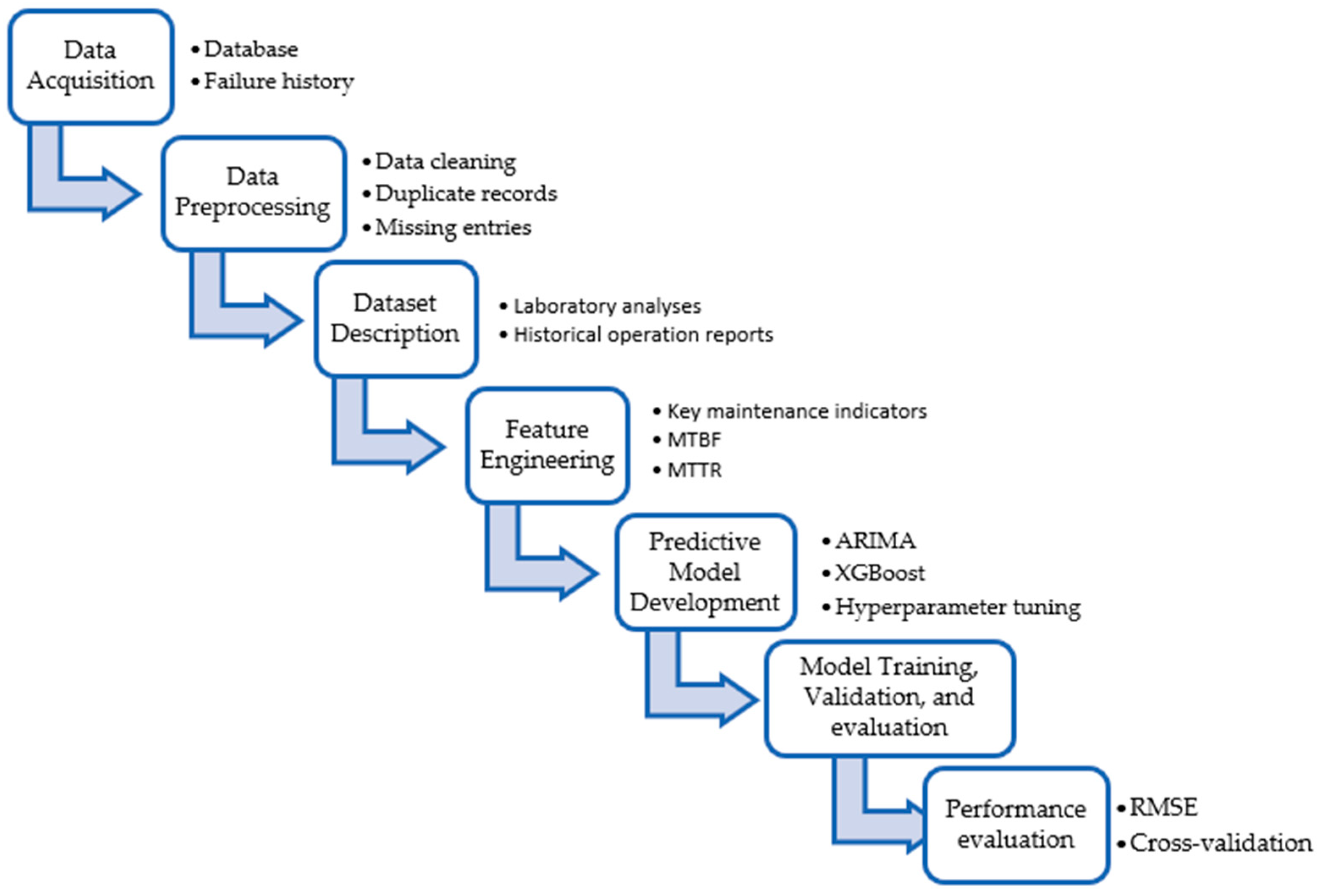

Figure 1 outlines the development methodology.

Data Acquisition: First, system downtime records for each equipment unit, motor system oil analysis data, and failure reports will be collected. The dataset covers the period from January 2023 to May 2024.

For this study, a comprehensive compilation of historical mining machinery failure data will be performed, including detailed records of classified downtime events and failures per system for each equipment unit. Additionally, motor system oil analysis reports will be evaluated to both assess current equipment condition and adjust preventive maintenance frequencies based on observed wear trends and specific operational conditions.

In underground mining, mineral extraction involves multiple interconnected processes, with mechanized support equipment representing the final link in the production chain. Consequently, this study focuses on analyzing failures of this critical equipment, collecting data from January 2023 to April 2024. The acquired data will be essential for evaluating operational reliability and optimizing maintenance strategies.

To ensure the coherence of the data sources, the mechanism used for integrating the different types of recorded data is described below. The dataset consisted of two primary sources: (1) equipment sensor measurements (oil pressure, air pressure, temperature, and vibration) recorded as hourly averages, and (2) laboratory analyses of oil samples collected every 125 h of equipment operation. Both data types were processed separately during the acquisition and preprocessing stages. The integration between these two sources was performed through an approximate temporal alignment by timestamp, followed by the concatenation of the relevant variables into a single feature vector. We did not employ an advanced multi-source fusion scheme, as the asynchrony in the sampling frequency and the differing nature of the variables precluded a more complex joint integration. This approach allowed us to leverage the complementary value of both sources without compromising the integrity of the original data.
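The approximate temporal alignment described above could be sketched as follows. This is only an illustration under stated assumptions: the column names (`timestamp`, `oil_pressure`, `Fe_ppm`, and so on), the "most recent lab result" matching rule, the seven-day tolerance, and all values are hypothetical and are not taken from the study.

```python
import pandas as pd

def align_oil_to_sensors(sensors: pd.DataFrame, oil: pd.DataFrame,
                         tolerance: str = "7D") -> pd.DataFrame:
    """Attach the most recent oil-lab result to each hourly sensor row.

    Rows with no lab sample inside the tolerance window keep NaN in the
    oil columns. The 7-day tolerance is an illustrative assumption.
    """
    sensors = sensors.sort_values("timestamp")
    oil = oil.sort_values("timestamp")
    return pd.merge_asof(
        sensors, oil, on="timestamp",
        direction="backward",              # only look at past lab samples
        tolerance=pd.Timedelta(tolerance),
    )

# Hypothetical hourly sensor averages
sensors = pd.DataFrame({
    "timestamp": pd.date_range("2023-01-01", periods=6, freq="h"),
    "oil_pressure": [410, 405, 400, 398, 402, 399],
    "temperature": [88, 90, 91, 93, 92, 90],
})
# Hypothetical single lab report, dated 02:00
oil = pd.DataFrame({
    "timestamp": pd.date_range("2023-01-01 02:00", periods=1, freq="h"),
    "Fe_ppm": [35.0],
    "Si_ppm": [12.0],
})

# One concatenated feature vector per hourly row
features = align_oil_to_sensors(sensors, oil)
```

Matching backward in time keeps each feature vector free of laboratory values that would not yet have been available at that hour.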

As shown in Figure 2, the highest failure frequency occurs in the motor system (followed by hydraulic and electrical systems). These three systems account for over 80% of total failures, indicating they should be prioritized in maintenance planning. Steering, additive, and brake systems demonstrate significantly lower failure incidence, contributing minimally to cumulative downtime.
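The cumulative-share reasoning behind this prioritization can be illustrated with a short Pareto-style tally. The failure counts below are hypothetical placeholders chosen only to show the calculation; they are not the study's data.

```python
from collections import Counter

def pareto_share(failure_systems):
    """Return (system, count, cumulative % of failures), most frequent first."""
    counts = Counter(failure_systems).most_common()
    total = sum(n for _, n in counts)
    running, table = 0, []
    for system, n in counts:
        running += n
        table.append((system, n, round(100 * running / total, 1)))
    return table

# Hypothetical failure log: one entry per recorded failure event
records = (["motor"] * 9 + ["hydraulic"] * 5 + ["electrical"] * 3
           + ["steering"] + ["additive"] + ["brake"])

table = pareto_share(records)
for system, n, cumulative in table:
    print(f"{system:<11}{n:>3}{cumulative:>7}%")
```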

Data Preprocessing: The dataset was subjected to a rigorous preprocessing procedure covering missing-data handling, correction of inconsistencies, and normalization. Duplicate records were first identified and removed to prevent bias in subsequent analyses. Variables exhibiting more than 20% missing values were discarded, as such levels of incompleteness could compromise the reliability of the statistical inferences. For the remaining variables, missing entries within the sensor time series were imputed through temporal linear interpolation, thereby preserving the continuity of the measurements without introducing artificial variance. All multi-source sensor time series were then aligned to an hourly frequency; this alignment by timestamp ensured temporal synchronization and chronological and causal coherence across heterogeneous data sources, and enabled consistent feature extraction. Subsequently, feature scaling was performed using the StandardScaler class from the scikit-learn library (v1.2.2). This method standardizes each variable by subtracting the mean and dividing by the standard deviation, which improves the convergence of many machine learning algorithms. Importantly, the scaling parameters (mean and standard deviation) were estimated exclusively from the training dataset to avoid data leakage and subsequently applied to transform the test dataset, thereby ensuring the validity of the model evaluation process.
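A minimal sketch of these preprocessing steps is given below, assuming a pandas DataFrame with a `timestamp` column. The column names and values are hypothetical, and the positional 80/20 split is used here only to show that the scaler is fitted on the training portion alone; it is not meant to reproduce the study's partitioning.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

def preprocess(raw: pd.DataFrame, max_missing: float = 0.20) -> pd.DataFrame:
    df = raw.drop_duplicates()                        # exact duplicate records
    df = df.loc[:, df.isna().mean() <= max_missing]   # drop columns with >20% missing
    df = df.set_index("timestamp").sort_index()
    df = df.interpolate(method="time")                # linear in time
    return df.resample("1h").mean()                   # align to an hourly grid

# Hypothetical raw sensor table
ts = pd.date_range("2023-01-01", periods=10, freq="h")
raw = pd.DataFrame({
    "timestamp": ts,
    "oil_pressure": np.linspace(400, 409, 10),
    "temperature": [90.0, 91, np.nan, 93, 94, 95, 96, 97, 98, 99],
    "air_pressure": [np.nan] * 5 + [7.0] * 5,         # 50% missing, will be dropped
})
raw = pd.concat([raw, raw.iloc[[0]]], ignore_index=True)  # inject one duplicate row

clean = preprocess(raw)

# Fit the scaler on the training part only, then reuse it on the test part
split = int(len(clean) * 0.8)
train, test = clean.iloc[:split], clean.iloc[split:]
scaler = StandardScaler().fit(train)
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)
```

Fitting `StandardScaler` before the split would let test-set statistics influence the training features, which is the leakage the paragraph above warns against.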

The selected variables were sourced from sensors installed on equipment operating in the field, including oil pressure, temperature, vibration, and laboratory data from oil analyses, such as the levels of iron (Fe), silicon (Si), copper (Cu), and vanadium (V). The choice of these variables was based on their proven correlation with friction processes, internal degradation, lubricant contamination, and anomalous thermal conditions. This selection was supported by both relevant scientific literature on predictive maintenance and by accumulated operational experience in an underground mining environment.

Dataset Description: The dataset used in this study was collected from a real-world underground mining operation, spanning from January 2023 to May 2024. Records from three main sources were integrated: embedded sensors, laboratory oil analyses, and historical operational reports.

Embedded Sensors in Critical Equipment:

Monitored Variables: Oil pressure (diesel and hydraulic systems), air/fuel pressure, engine temperature, and vibration analysis.

Frequency: Hourly sampling, with real-time transmission.

Laboratory Analysis of Lubricants:

Samples: Collected every 125 h of operation.

Measured Parameters: Metal concentration (Fe, Cu, Si, V) and viscosity values, which are useful for estimating internal engine condition and lubricant degradation.

Historical Operational Records:

Documented Failure Events: Type (mechanical/electrical/hydraulic), affected component, downtime (minutes), and applied corrective actions.

These reports allowed us to identify pre-failure behavior and train functional predictive models.

Feature Engineering: From the preprocessed data, key maintenance indicators will be calculated, such as Mean Time Between Failures (MTBF) and Mean Time To Repair (MTTR). Additionally, wear trends in diesel engine internal components will be analyzed based on oil analysis, generating relevant features for prediction.

In the feature engineering stage, essential metrics will be calculated to evaluate equipment reliability and efficiency. The Mean Time Between Failures (MTBF) will be determined, which quantifies failure frequency, and the Mean Time To Repair (MTTR), which measures corrective action efficiency. These metrics are fundamental for optimizing equipment availability and refining maintenance planning.
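For reference, the two indicators can be computed as in the sketch below, which uses the common definitions (operating time divided by number of failures, and total repair time divided by number of failures). The function name, the derived availability ratio, and the sample figures are illustrative assumptions; the study's own records store downtime in minutes, so a unit conversion would be needed first.

```python
def mtbf_mttr(operating_hours: float, repair_hours: list) -> tuple:
    """Return (MTBF, MTTR, inherent availability) for one equipment unit.

    operating_hours: total productive hours in the period
    repair_hours:    repair duration of each failure, in hours
    """
    n_failures = len(repair_hours)
    if n_failures == 0:
        raise ValueError("at least one failure is needed to compute MTBF/MTTR")
    mtbf = operating_hours / n_failures
    mttr = sum(repair_hours) / n_failures
    availability = mtbf / (mtbf + mttr)
    return mtbf, mttr, availability

# Hypothetical month: 600 operating hours and three failures
mtbf, mttr, availability = mtbf_mttr(600.0, [2.0, 4.0, 6.0])
print(f"MTBF={mtbf:.1f} h, MTTR={mttr:.1f} h, availability={availability:.3f}")
```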

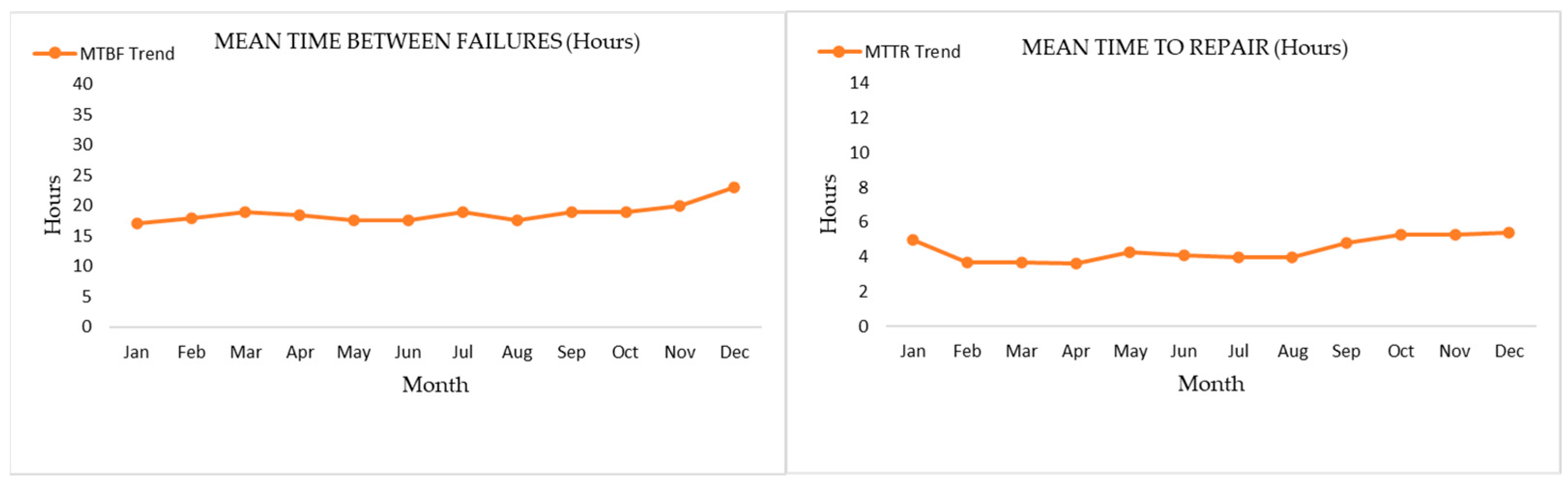

MTBF analysis reveals an upward trend throughout the year, suggesting improved system reliability, with failures occurring increasingly farther apart. In contrast, MTTR shows a slight downward trend, indicating an overall reduction in the time required to repair breakdowns over the year (see Figure 3). These trends will help understand equipment behavior and prioritize maintenance actions.

Predictive Model Development: For failure prediction, the ARIMA (Autoregressive Integrated Moving Average) algorithm will be used, which is effective for modeling and forecasting future events based on historical patterns. This approach will identify trends and behaviors in past failure data. The application process of this algorithm is illustrated in Figure 4.
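To make the ARIMA idea concrete, the sketch below implements a deliberately simplified ARIMA(1,1,0)-style forecast with NumPy only: the series is differenced once (the "I" part), an AR(1) model with intercept is fitted to the differences by least squares, and forecasts are integrated back to the original scale. It has no moving-average term and is a stand-in for illustration, not the optimized model used in the study; the model order and the sample series are assumptions.

```python
import numpy as np

def arima_110_forecast(series, steps):
    """Forecast `steps` values with a least-squares ARIMA(1,1,0)-style model."""
    y = np.asarray(series, dtype=float)
    d = np.diff(y)                                  # first difference (d = 1)
    x, target = d[:-1], d[1:]                       # lag-1 regression on differences
    X = np.column_stack([np.ones_like(x), x])
    (c, phi), *_ = np.linalg.lstsq(X, target, rcond=None)

    level, last_diff, out = y[-1], d[-1], []
    for _ in range(steps):
        last_diff = c + phi * last_diff             # AR(1) step on the differences
        level = level + last_diff                   # integrate back to the level
        out.append(level)
    return np.array(out), phi

# Hypothetical monthly failure counts
failures = [14, 12, 13, 11, 12, 10, 11, 9, 10, 9, 8, 9]
forecast, phi = arima_110_forecast(failures, steps=3)
print(np.round(forecast, 2))
```

A full ARIMA implementation would also estimate moving-average terms and select the orders (p, d, q) from the data, typically with a dedicated time-series library.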

The study will employ a hybrid approach combining traditional statistical models with advanced machine learning techniques to optimize failure prediction in underground mining equipment. As a baseline, the ARIMA model will be implemented to establish comparisons with the proposed methods. Subsequently, the following machine learning algorithms will be applied:

XGBoost (eXtreme Gradient Boosting): Selected for its ability to handle nonlinear relationships in structured data and its robustness against outliers.

Random Forest: Implemented to evaluate the importance of operational features through multiple decision trees.

Recurrent Neural Networks (RNN) and LSTM: Specifically designed to capture temporal patterns in historical equipment data.

These models will be trained using historical failure data, operational variables (load, temperature, vibration), and previous maintenance records. Cross-validation (k-fold) will ensure result generalization.

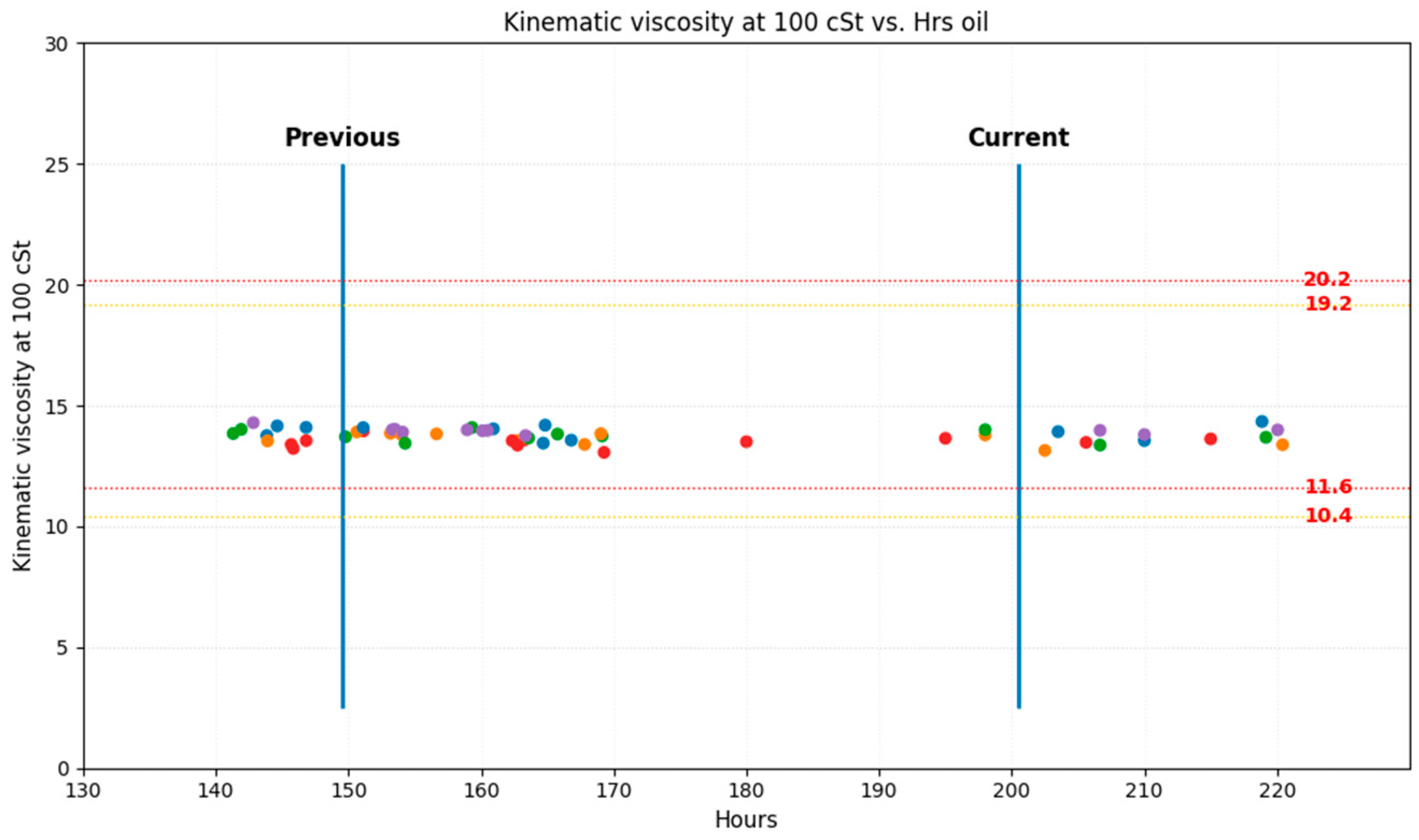

Additionally, oil analysis will be incorporated as a complementary technique to monitor the internal condition of systems, including engines and hydraulic systems. Oil analysis will act as a “window” into the machinery, enabling the evaluation of current maintenance frequency (Table 1), optimizing it, and extending equipment lifespan (Figure 5).

These parameters will be correlated with predictive model data through sensor fusion, serving as early indicators of wear (see Table 2). The integration of this data will allow:

Model Training, Validation, and Evaluation: For model evaluation, the dataset was randomly partitioned into 80% for training and 20% for testing using the train_test_split function with a fixed seed (random_state = 42) to ensure reproducibility. During the training phase, 5-fold stratified cross-validation was implemented to mitigate overfitting and guarantee model stability against data variability. The hyperparameters of each algorithm were optimized using either Grid Search (GridSearchCV) or Random Search (RandomizedSearchCV), depending on the specific model. The optimization process evaluated various combinations based on the Root Mean Square Error (RMSE) or F1-score, as appropriate for the predictive task (regression or classification). This methodological design aims to reflect real-world operational conditions, thereby maximizing the transferability of the results to underground industrial environments where continuous monitoring and accurate fault prediction are essential for reducing costs and improving equipment availability.
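The partitioning and cross-validation scheme can be sketched with scikit-learn as follows. The synthetic, imbalanced dataset and the Random Forest settings are placeholders (assumptions), since the study's feature matrix is not available here; only the 80/20 split, the fixed seed of 42, and the 5-fold stratified cross-validation mirror the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split

# Placeholder data standing in for the fused sensor and oil-analysis features
X, y = make_classification(n_samples=300, n_features=8,
                           weights=[0.8, 0.2], random_state=42)

# 80/20 random partition with a fixed seed for reproducibility
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42)

# 5-fold stratified cross-validation on the training portion only
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
model = RandomForestClassifier(n_estimators=50, random_state=42)
f1_per_fold = cross_val_score(model, X_train, y_train, cv=cv, scoring="f1")

print("F1 per fold:", f1_per_fold.round(3))
```

Stratification keeps the proportion of failure cases similar in every fold, which matters when failures are much rarer than normal operation.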

Performance Evaluation: The predictive system was evaluated using key metrics such as the Mean Squared Error (MSE) and the coefficient of determination (R²). The results were compared against historical records to validate the model’s effectiveness.

To optimize the models’ performance, a systematic hyperparameter tuning was conducted using RandomizedSearchCV, with 100 iterations and 3-fold cross-validation. The primary metric used for this optimization was the F1-score, with a clear priority on minimizing false negatives, given the critical impact of an undetected failure. A summary of the optimized hyperparameters for the Random Forest model is presented in Table 2:

This procedure was also applied to other models, such as XGBoost and LSTM, where we sought configurations that would improve the F1-score and reduce the prediction error on the test set.
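A randomized search of this kind might look like the sketch below. The search space is hypothetical (the study's actual ranges are those reported in its hyperparameter table), the data are synthetic, and the demonstration call uses only 5 iterations to keep it quick; the function's default of 100 iterations and the 3-fold, F1-scored setup follow the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

def tune_random_forest(X, y, n_iter=100, seed=42):
    # Hypothetical search space, not the study's reported ranges
    param_distributions = {
        "n_estimators": [50, 100, 200, 400],
        "max_depth": [None, 5, 10, 20],
        "min_samples_split": [2, 5, 10],
        "min_samples_leaf": [1, 2, 4],
        "class_weight": [None, "balanced"],
    }
    search = RandomizedSearchCV(
        RandomForestClassifier(random_state=seed),
        param_distributions,
        n_iter=n_iter,        # 100 sampled configurations by default
        cv=3,                 # 3-fold cross-validation
        scoring="f1",         # optimize the F1-score
        random_state=seed,
    )
    return search.fit(X, y)

# Placeholder data; a small n_iter is used here only to keep the demo fast
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
search = tune_random_forest(X, y, n_iter=5)
print(search.best_params_, round(search.best_score_, 3))
```

If missed failures are the main concern, one option is to score on recall or an F-beta measure with beta above 1, since the plain F1-score weights precision and recall equally.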

Model Evaluation: Model performance was evaluated using metrics tailored to the study’s objectives. For the failure prediction task (a classification problem), we employed Accuracy, Recall, and the F1-score. For time series forecasting, the Root Mean Square Error (RMSE) was utilized. Additionally, the Early Detection Time was considered; this metric, defined as the interval between the model’s prediction of a failure and its actual occurrence, is key for maintenance planning.
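The classification metrics and the Early Detection Time can be computed as in this short sketch. The labels, predictions, and timestamps are invented for illustration, and `early_detection_hours` is a hypothetical helper name rather than a function from the study.

```python
from datetime import datetime
from sklearn.metrics import accuracy_score, f1_score, recall_score

# Hypothetical ground truth (1 = failure) and model predictions
y_true = [0, 0, 1, 1, 0, 1, 0, 1]
y_pred = [0, 0, 1, 0, 0, 1, 1, 1]

accuracy = accuracy_score(y_true, y_pred)
recall = recall_score(y_true, y_pred)      # share of real failures that were caught
f1 = f1_score(y_true, y_pred)

def early_detection_hours(alert_time: datetime, failure_time: datetime) -> float:
    """Hours between the model's alert and the actual failure."""
    return (failure_time - alert_time).total_seconds() / 3600.0

lead_time = early_detection_hours(datetime(2024, 3, 1, 6, 0),
                                  datetime(2024, 3, 3, 18, 0))
print(accuracy, recall, f1, lead_time)
```

In this toy example one failure is missed and one false alarm is raised, so accuracy, recall, and F1 all equal 0.75, and the alert precedes the failure by 60 hours.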

Table 1 details the scope and duration of Preventive Maintenance (PM) activities for each proposed plan. It specifies which set of systems is being addressed in each case, allowing for a comparison of the complexity and effort required. For example, Plan A focuses exclusively on the drive system, with an estimated duration of 5 h. The more comprehensive Plan B also includes a general inspection of all equipment, increasing its scope. Finally, Plan C includes maintenance of the drive and transmission systems, requiring 10 h of work. The objective of comparing these plans was to evaluate their efficiency and prioritize them. For this purpose, it was crucial to predict failures in critical systems and thus optimize the scheduling of maintenance outages.

Given the challenges of real-time multivariate data acquisition in mining environments, our initial strategy focused on the ARIMA model. We applied this model to key variables with trend and seasonality patterns, such as oil pressure, engine temperature, and vibration, to establish a baseline for short-term forecasting. These variables exhibit regular patterns under the extreme conditions of the underground operating environment, which justified our choice due to the existing restrictions on continuous multivariate data acquisition in the field. This allowed us to project local variations associated with wear and loss of efficiency. However, to achieve a more robust prediction, this analysis was complemented by more advanced machine learning models, such as XGBoost and LSTM, which enabled us to integrate multiple variables and capture nonlinear relationships. This staged approach, which combines the simplicity of an interpretable model with the power of advanced techniques, is a consolidated methodology in the industry and served as our reference framework for this study [39,40,41].

The main objective of this approach is for the models to develop the ability to discern subtle patterns and establish meaningful relationships between observed trends in oil analysis and the probability of future failures. Understanding these interconnections will enable more accurate and proactive prediction of failure events, which in turn will allow optimization of preventive and corrective maintenance strategies, maximizing equipment lifespan and minimizing unplanned downtime.

3. Results

Figure 3 illustrates the direct relationship between operational reliability indicators and maintenance effectiveness. An increase in Mean Time Between Failures (MTBF) reflects higher equipment reliability, while a decrease in Mean Time To Repair (MTTR) suggests improvements in maintainability. These results underscore the need to integrate artificial intelligence models capable of accurately predicting failure events, enabling more efficient maintenance planning and data-driven technical decision-making. This strategy would contribute to increased equipment availability and, consequently, to optimized performance in demanding operational contexts such as underground mining.

Figure 6 displays the failure projection obtained using an optimized ARIMA model. The training data (blue line) exhibit high variability, whereas the actual observations (green line) reflect a more stable trend. The generated predictions (red line) for May 2024 exhibit a generally stable trend with minor fluctuations, indicating a low and stable probability of failure for the analyzed components. These results reinforce the appropriateness of maintaining the current predictive maintenance strategy, complemented by continuous monitoring to enable timely interventions and minimize the risk of critical failures.

Figure 7 shows the failure prediction generated by a second ARIMA model applied to a distinct time series. The historical data (blue line) exhibit high dispersion. The future predictions (red lines), projected from June 2024 onward, indicate stationary behavior without significant variations. This stabilization may be interpreted as a period of lower failure risk, provided operational and maintenance conditions remain constant.

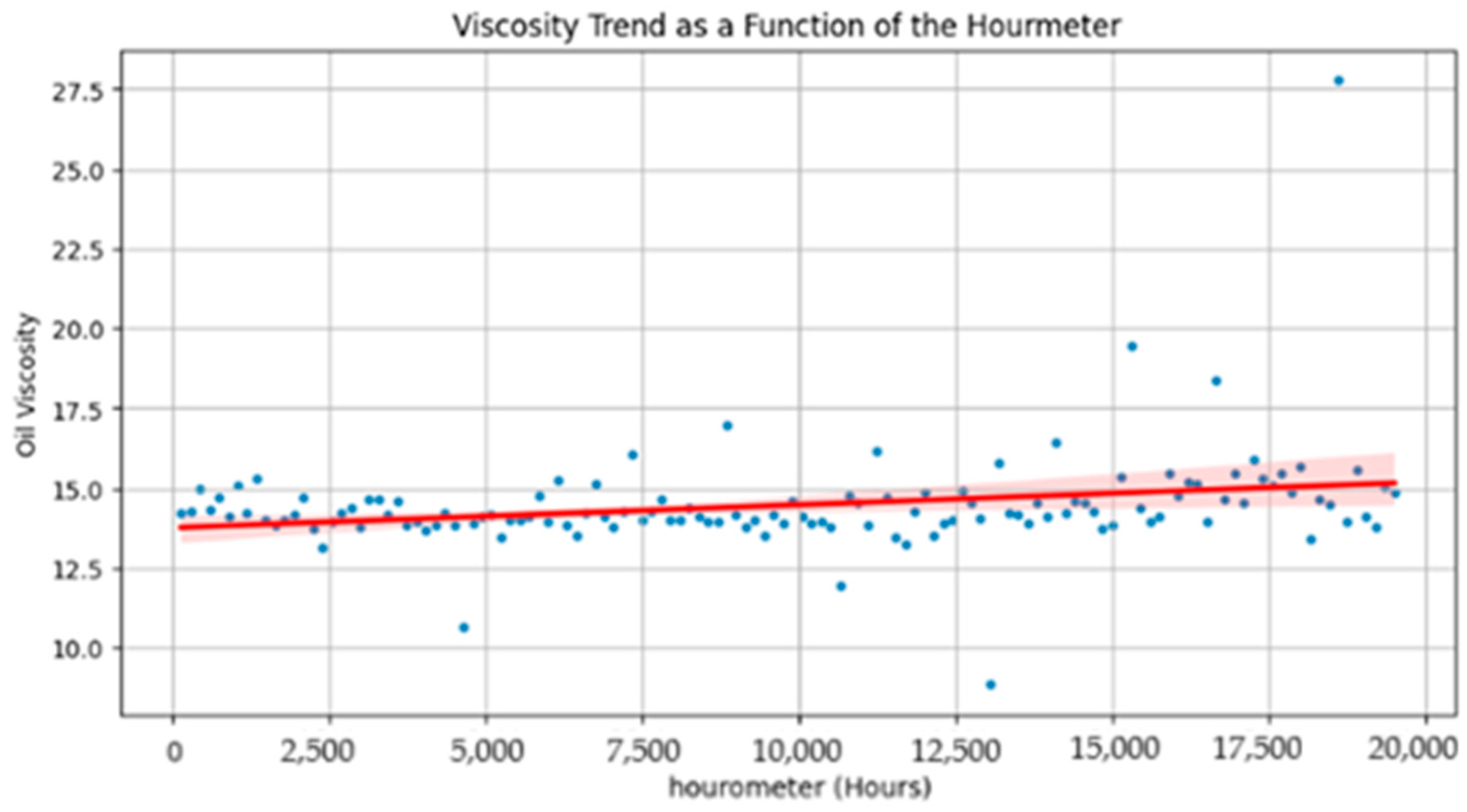

Figure 8 analyzes the evolution of kinematic viscosity at 100 °C as a function of oil service hours, using data processed through machine learning techniques. Two operational periods were compared: one before preventive maintenance adjustment and another after. The results show that in both cases, viscosity remains within the established technical limits (10.4 to 20.2 cSt), though with greater dispersion in the earlier period. This pattern suggests improved thermal stability of the lubricant in the current period and it supports adjusting the maintenance frequency to once every 200 h.
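The comparison described here (values checked against the 10.4 to 20.2 cSt limits, and dispersion compared between the two periods) can be expressed in a few lines. Only the limits come from the text; the sample readings are hypothetical.

```python
from statistics import stdev

VISCOSITY_LIMITS_CST = (10.4, 20.2)   # technical limits quoted in the text

def out_of_range(samples, limits=VISCOSITY_LIMITS_CST):
    """Return the (service_hours, viscosity) pairs that fall outside the limits."""
    low, high = limits
    return [(h, v) for h, v in samples if not low <= v <= high]

# Hypothetical readings: (oil service hours, kinematic viscosity at 100 °C in cSt)
before = [(50, 12.1), (100, 16.8), (125, 13.0), (150, 17.5)]
after = [(50, 14.2), (100, 14.6), (150, 14.4), (200, 14.8)]

violations = out_of_range(before) + out_of_range(after)
spread_before = stdev(v for _, v in before)
spread_after = stdev(v for _, v in after)
print(violations, round(spread_before, 2), round(spread_after, 2))
```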

Figure 9 shows the time-based evolution of six metals in the lubricating oil (Al, Cu, Cr, Sn, Pb, and Fe), correlated with equipment operating hours. In most cases, concentrations remain within the manufacturer-defined operational ranges. However, a moderate increase in iron (Fe) and chromium (Cr) levels is observed, along with a slight upward trend in aluminum (Al) and copper (Cu) during specific phases of the operating cycle. This may be associated with fretting wear on internal components such as bearings, heat-treated surfaces, and aluminum alloy components. These results highlight the utility of oil analysis as an early indicator of mechanical degradation.

The integration of results from Figure 8 and Figure 9 suggests a technical opportunity to extend preventive maintenance intervals without compromising equipment operational integrity (see Table 3). The observed stability in oil viscosity and acceptable wear metal levels support this decision. This extension would optimize asset availability and utilization while reducing costs associated with unplanned maintenance.

Figure 10 depicts the copper (Cu) wear progression in the lubricating oil, correlated with accumulated operating hours of the diesel engine. The individual data points (blue) represent periodic measurements, while the trend curve (red), accompanied by its shaded confidence interval, demonstrates a gradual increase in Cu levels over time. This pattern suggests progressive metallic particle release, likely associated with wear of internal components such as bearings or oil coolers, justifying its monitoring as an early warning indicator.

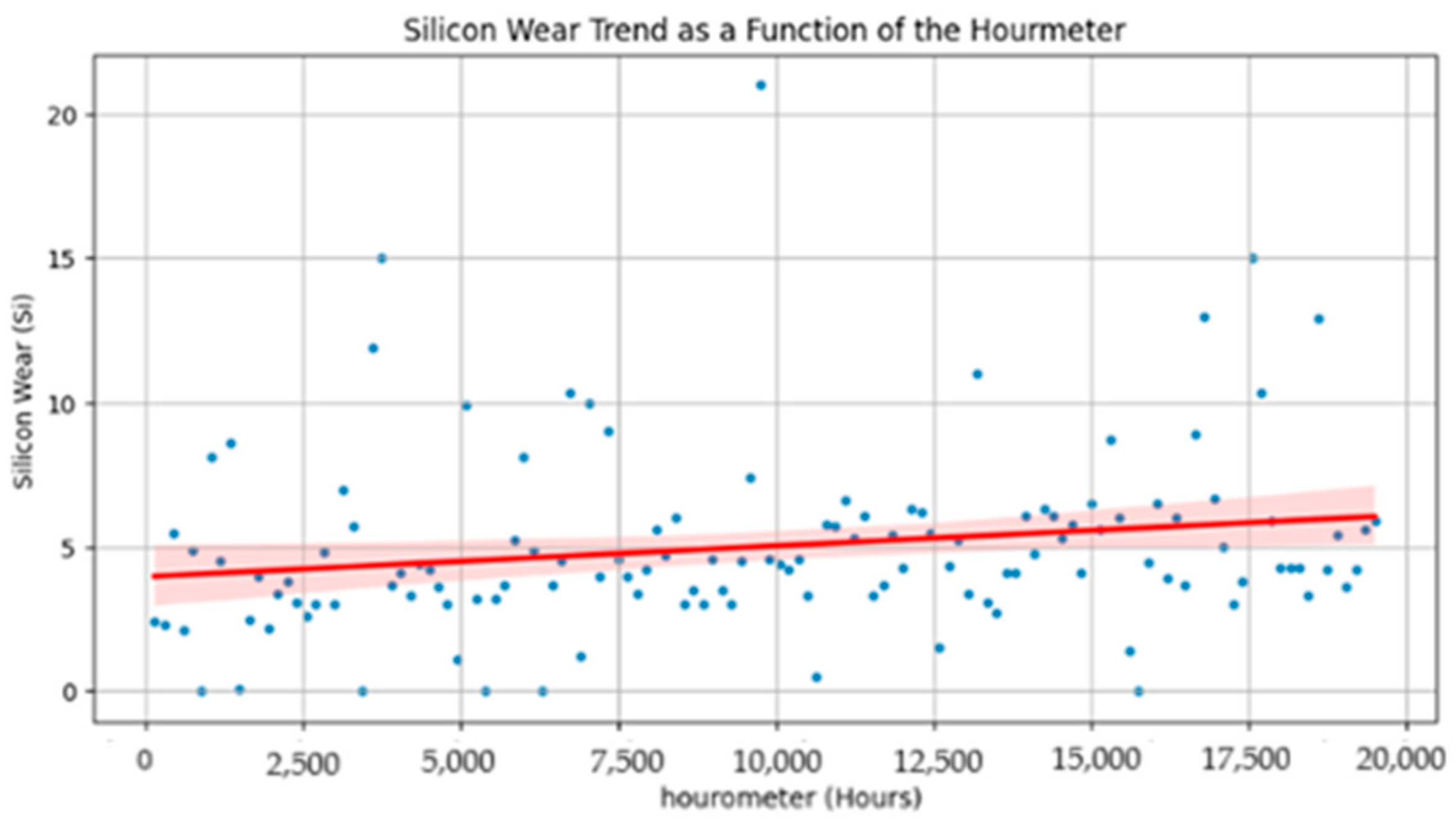

Figure 11 shows the silicon (Si) concentration trend in the lubricating oil as a function of accumulated operating hours of the diesel engine. Blue points indicate measured values in sequential order, while the red curve, accompanied by its shaded confidence interval, reveals a sustained upward trend. This behavior may be associated with abrasive contaminants, typically originating from external particle ingress (e.g., atmospheric dust or filtration system deficiencies). Since elevated silicon levels correlate with accelerated wear in internal components, this parameter constitutes a critical variable for predictive monitoring and for scheduling preventive and corrective maintenance.

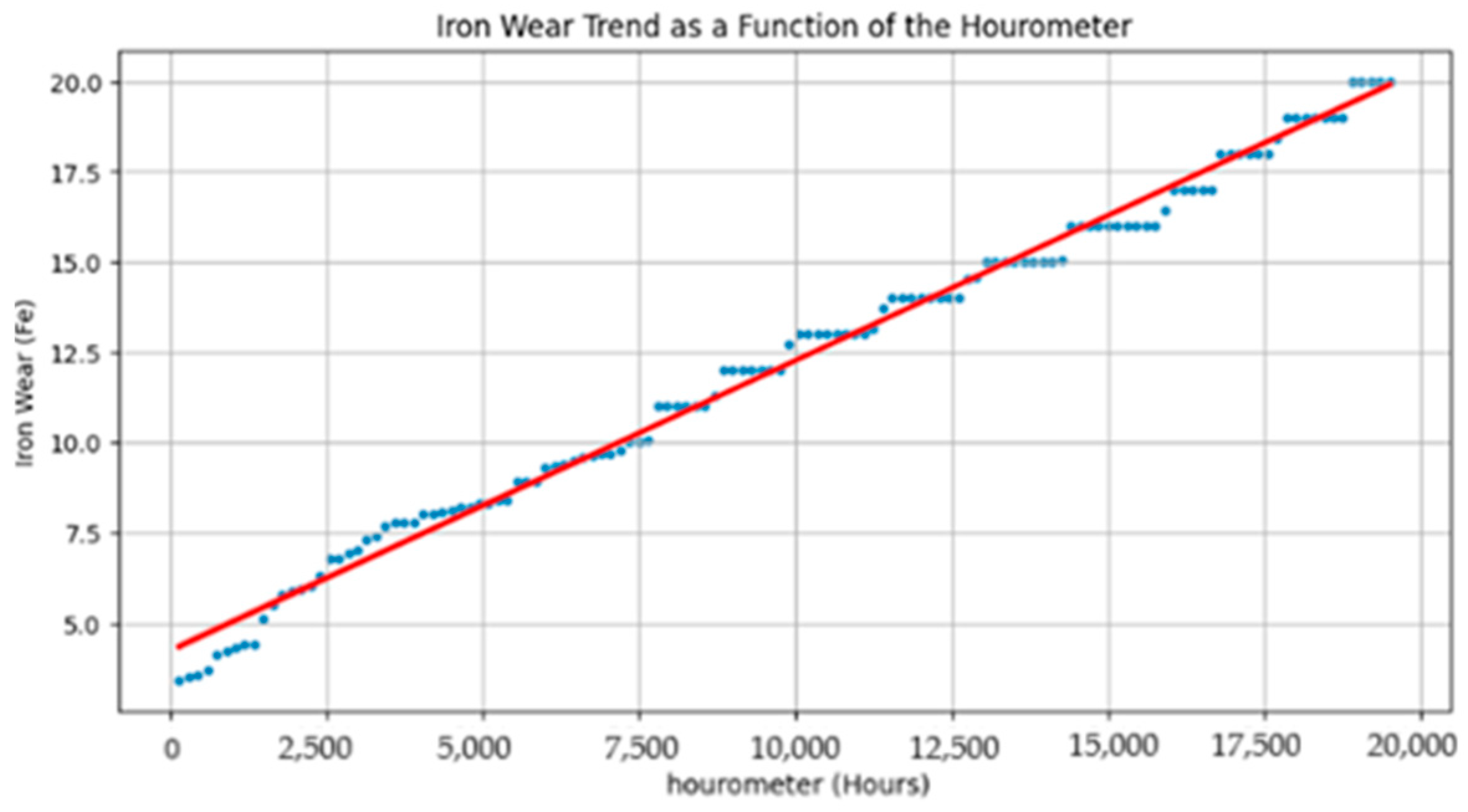

Figure 12 presents the temporal evolution of iron (Fe) particle concentration in the lubricating oil as a function of accumulated operating hours of the diesel engine. Both individual measurements (blue) and the trend curve (red) demonstrate a progressive increase in this metal over time. This behavior is typically associated with mechanical wear of internal components such as cylinder liners, bearings, or gears. The rising Fe concentration in the oil reinforces its value as a functional degradation indicator and suggests the need to incorporate this parameter into the continuous predictive monitoring system.
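The wear-metal trends discussed for Cu, Si, and Fe can be approximated with a simple fitted line and confidence band, as sketched below. The text does not state how the trend curves in the figures were produced, so the ordinary least-squares line, the normal-quantile (1.96) approximation for a 95% band, and the sample concentrations are all assumptions made for illustration.

```python
import numpy as np

def linear_trend_with_band(hours, ppm, z=1.96):
    """Least-squares line plus an approximate 95% band for the fitted mean."""
    x = np.asarray(hours, dtype=float)
    y = np.asarray(ppm, dtype=float)
    n = len(x)
    slope, intercept = np.polyfit(x, y, 1)
    fitted = intercept + slope * x
    resid = y - fitted
    s = np.sqrt(resid @ resid / (n - 2))              # residual standard error
    sxx = np.sum((x - x.mean()) ** 2)
    se_mean = s * np.sqrt(1.0 / n + (x - x.mean()) ** 2 / sxx)
    return slope, fitted, fitted - z * se_mean, fitted + z * se_mean

# Hypothetical Fe readings at successive 125 h oil samples
hours = [125, 250, 375, 500, 625, 750]
fe_ppm = [4.0, 5.0, 7.0, 8.0, 10.0, 11.0]

slope, fitted, lower, upper = linear_trend_with_band(hours, fe_ppm)
print(f"trend: {slope * 100:.2f} ppm per 100 h")
```

A positive slope whose band stays clear of zero growth is the kind of signal that would justify tracking the metal as an early warning indicator.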

Figure 13 presents the evolution of the lubricating oil’s kinematic viscosity as a function of accumulated operating hours of the diesel engine. The blue points represent individual measurements, and the red curve illustrates the overall trend, with the shaded band indicating its confidence interval. A slight upward trend is observed toward the end of the evaluated period, which may reflect either thermal degradation of the oil or contamination by combustion products. A sustained viscosity increase can impair the oil’s ability to lubricate efficiently, particularly during cold starts, thereby increasing the risk of accelerated wear in the engine’s internal components.
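The trend curves and shaded bands in Figures 10–13 can be approximated in several ways; the smoothing method actually used is not stated here. As a minimal sketch, assuming a straight-line trend and a constant-width band based on the residual standard deviation, the following fits one oil-analysis variable against operating hours. The readings, the function name `linear_trend_with_band`, and the factor `z = 1.96` are illustrative assumptions, not values from the study.

```python
import math


def linear_trend_with_band(hours, values, z=1.96):
    """Fit values ~ intercept + slope * hours by ordinary least squares.

    Returns (intercept, slope, half_width). half_width is z times the
    residual standard deviation: a simple constant-width band around the
    trend, not the exact confidence interval of the fitted mean.
    """
    n = len(hours)
    mean_h = sum(hours) / n
    mean_v = sum(values) / n
    sxx = sum((h - mean_h) ** 2 for h in hours)
    sxy = sum((h - mean_h) * (v - mean_v) for h, v in zip(hours, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_h
    residuals = [v - (intercept + slope * h) for h, v in zip(hours, values)]
    # n - 2 degrees of freedom because two parameters were estimated.
    resid_sd = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return intercept, slope, z * resid_sd


# Hypothetical Cu readings (ppm) at hypothetical accumulated engine hours.
hours = [250, 500, 750, 1000, 1250, 1500]
cu_ppm = [4.0, 5.1, 5.9, 7.2, 7.8, 9.1]

intercept, slope, half_width = linear_trend_with_band(hours, cu_ppm)
print(f"slope = {slope:.4f} ppm/h, band = +/-{half_width:.2f} ppm")
```

A positive slope whose band excludes a flat line is the kind of evidence that would justify treating the variable as an early warning indicator; a nonlinear smoother would be needed to reproduce curved trends such as the late rise in viscosity.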

Physical validation of predictive models in underground mining remains a critical challenge due to the limited access to equipment operating continuously and the extreme environmental conditions within mine shafts. Nevertheless, during a scheduled maintenance shutdown, the condition of a turbocharger coupled to a diesel engine was thoroughly documented. This turbocharger had accumulated 8050 operating hours under maximum dynamic load, an interval identified by the predictive model as presenting a high risk of degradation from thermo-mechanical fatigue. Macrographic analysis of the components (Figure 14) revealed only minor surface wear (≤0.2 mm of material loss) on the turbine blades, with no evidence of microcracks or significant plastic deformation (red circle). Furthermore, the absence of axial play in the turbine shaft confirmed the structural integrity of the rotating assembly (yellow arrow line). The working pressure at full load remained within nominal parameters (217 ± 5 kPa), indicating preserved aerodynamic efficiency and compliance with the manufacturer’s technical specifications for underground mining operations. The strong correlation between the low predicted degradation and the actual physical condition of the component supports the model’s reliability in forecasting not only imminent failures but also extended periods of safe operation, thereby optimizing proactive maintenance strategies. These results demonstrate that monitored wear indicators (e.g., metallic content, viscosity) serve as robust predictors of the actual physical condition of components, establishing a solid foundation for the development of accurate predictive models.

Figure 15 demonstrates the Random Forest model’s capability to estimate iron (Fe) particle evolution in lubricating oil as a function of operating time. The model successfully captures nonlinear patterns in historical data, enabling accurate prediction of future Fe concentrations. This predictive capability proves critical for anticipating wear processes in engine internal components and for designing condition-based maintenance interventions.

Figure 16 shows the performance of the XGBoost model in predicting Fe wear particles. The model fits the historical data closely and accurately forecasts future values. The gradient boosting-based architecture enables capturing complex relationships between operational variables and wear levels, making this model a robust tool for predictive maintenance applications in demanding industrial environments.

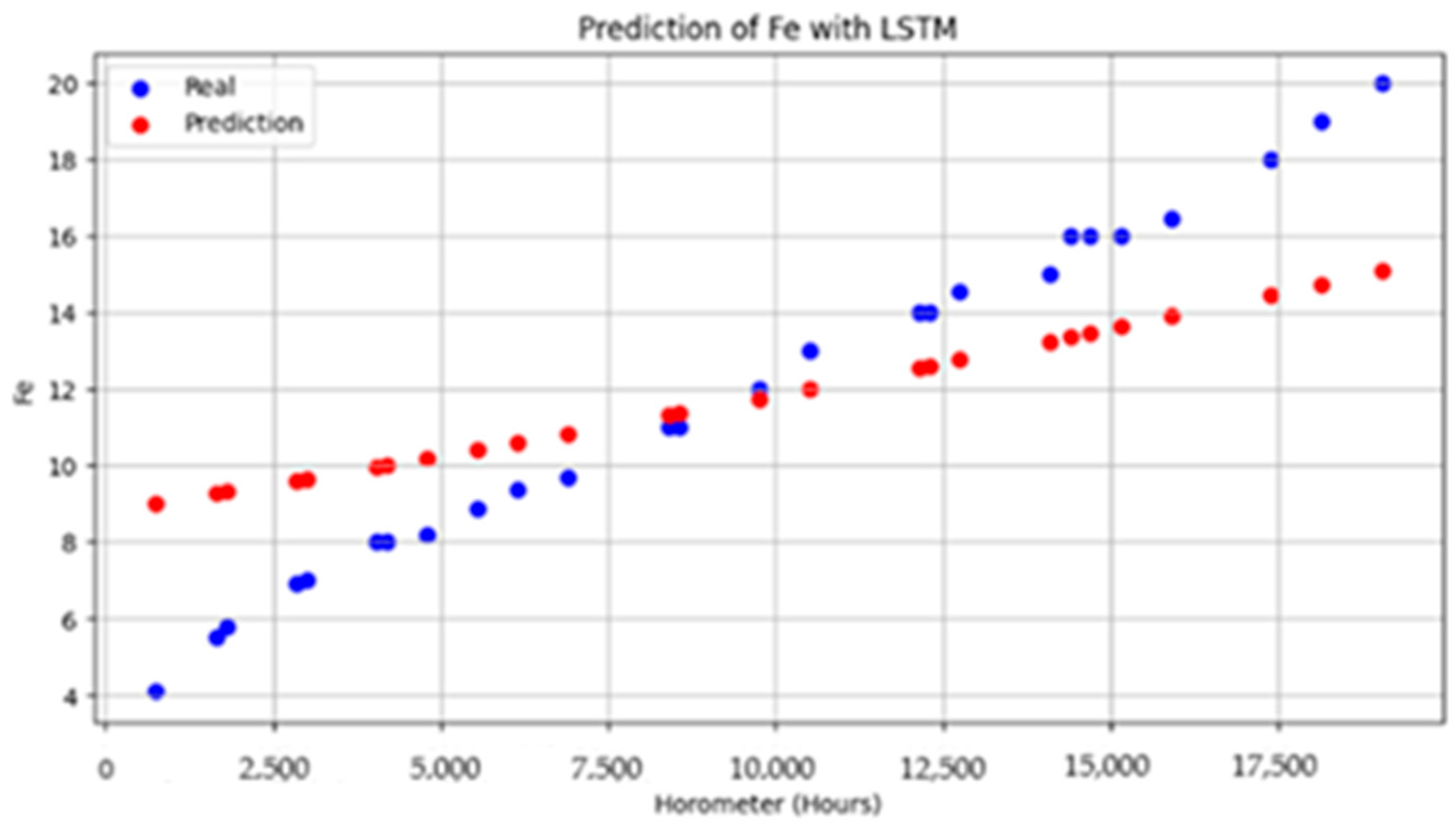

Figure 17 presents the Fe results obtained with the LSTM (Long Short-Term Memory) model, a recurrent neural network specialized in time series processing. While it partially captures the dynamics of historical data, its predictions show higher error levels compared to Random Forest and XGBoost models. This difference may stem from LSTM’s sensitivity to training data quantity and quality, as well as its greater structural complexity. Consequently, its predictions prove less accurate for wear particle estimation in this specific context.
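One reason sequence models are sensitive to data quantity is that they are usually trained on sliding windows, so every extra step of history consumed as input removes one training pair. The windowing scheme used for the LSTM is not described here, so the sketch below is only an assumption about a typical setup; the readings and the helper `make_windows` are hypothetical.

```python
def make_windows(series, window):
    """Turn a 1-D series into (input window, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        pairs.append((series[start:start + window], series[start + window]))
    return pairs


# Hypothetical Fe readings (ppm), one per oil sample.
fe_ppm = [12, 14, 13, 17, 19, 22, 21, 25, 28, 30]

for window in (2, 4, 8):
    # 10 samples yield 8, 6, and 2 training pairs respectively.
    print(window, len(make_windows(fe_ppm, window)))
```

With oil samples taken only at maintenance intervals, a series of a few dozen points leaves very few windows once a useful history length is chosen, which is consistent with the weaker LSTM fit reported above.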

Table 4 reports the evaluation metrics: MAE (Mean Absolute Error), MSE (Mean Squared Error), and R² (coefficient of determination).

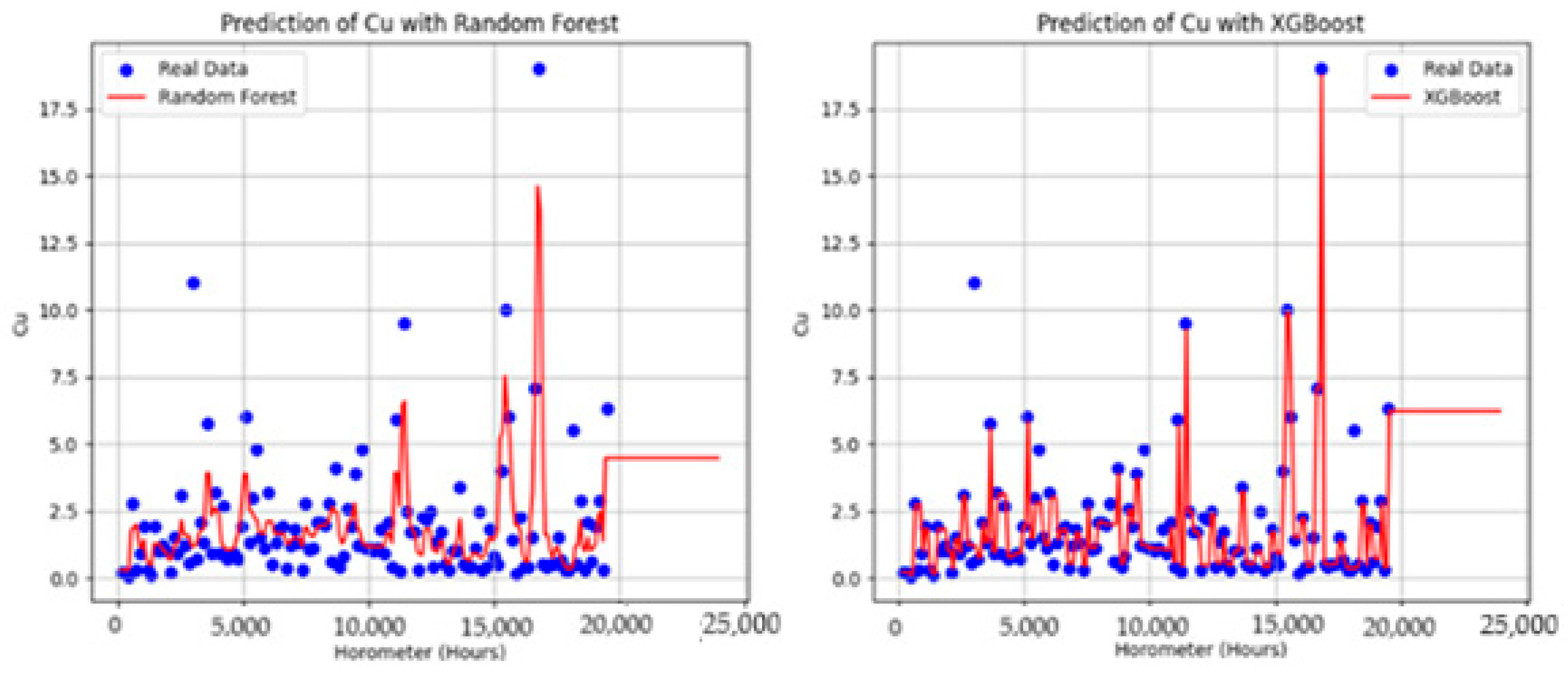
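For reference, the three metrics reported in Table 4 can be computed from paired observed and predicted values as in the following sketch. It uses the standard definitions; the sample values are hypothetical and are not taken from the study's data.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average magnitude of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean Squared Error: average squared prediction error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical observed and predicted Fe concentrations (ppm).
y_true = [10, 12, 15, 19]
y_pred = [11, 12, 14, 18]

print(f"MAE = {mae(y_true, y_pred):.3f}")       # MAE = 0.750
print(f"MSE = {mse(y_true, y_pred):.3f}")       # MSE = 0.750
print(f"R2  = {r2_score(y_true, y_pred):.3f}")  # R2  = 0.935
```

MAE and MSE carry the units of the measured variable (ppm and ppm² here), whereas R² is dimensionless, so only R² is directly comparable across Fe, Cu, and Si.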

Figure 18 compares the predictive performance of Random Forest (left) and XGBoost (right) models in estimating copper (Cu) wear as a function of engine operating time. Both models demonstrate good fit to historical data, though XGBoost shows better capability to capture wear fluctuations, particularly during high-variability intervals. This difference suggests XGBoost’s greater sensitivity to complex patterns, which could translate into more precise decisions in real operational contexts.

Figure 19 presents the comparison between Random Forest (left) and XGBoost (right) models for predicting silicon (Si) wear as a function of accumulated operating time. Both algorithms demonstrate adequate fitting capability to historical data; however, XGBoost delivers more stable performance during high-dispersion intervals, reducing prediction error for atypical events. This characteristic positions it as a more robust alternative for anticipating Si wear behavior, which can be decisive for optimizing maintenance planning in mining environments.

Recent studies in other industrial sectors, such as textiles, have demonstrated the potential of machine learning models for predictive maintenance tasks. For instance, the AdaBoost algorithm achieved 92% accuracy in fault classification [42], while deep neural networks reached R² = 0.86 and RMSE = 0.097 in quality control-oriented regression tasks [43]. Although these results originate from different domains, they showcase the versatility of these techniques and support their potential applicability in mining contexts, particularly when combining signals like vibrations and wear particles. This cross-sector knowledge transfer strengthens the justification for the approach adopted in the present study.

4. Discussion

The analysis of historical failure data (January–April 2024) and its SARIMA-model projection (May–December 2024) partially supports the proposed hypothesis, demonstrating that a model trained on historical data can capture relevant temporal patterns for failure prediction. The generated forecast shows an oscillatory trend with marked periodicity, indicating that SARIMA identified a seasonal component in the data. This pattern suggests future failures will fluctuate around a mean value following a repetitive cycle—information valuable for preventive maintenance planning. However, the discrepancy between projected oscillations and historical failure distribution reveals the model’s lingering limitations in fully representing real operational dynamics. This fitting gap creates an opportunity to explore hybrid models integrating SARIMA’s seasonal component with machine learning’s nonlinear capabilities, ultimately enhancing predictive accuracy and strengthening operational decision-making.
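Before relying on a forecast's periodicity, it can be useful to check whether the historical series itself supports a seasonal cycle. The sketch below is a diagnostic and not the SARIMA model: it computes the sample autocorrelation at several lags, where a strong positive value at lag s is consistent with a season of length s. The failure counts and the four-period cycle are hypothetical.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of a 1-D series at the given positive lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    num = sum(dev[t] * dev[t + lag] for t in range(n - lag))
    return num / denom


# Hypothetical failure counts with a built-in cycle of four periods.
failures = [1, 3, 5, 3] * 6

for lag in range(1, 7):
    print(lag, round(autocorrelation(failures, lag), 3))
```

On this synthetic series the autocorrelation is strongly positive at lag 4 and strongly negative at lag 2. A real failure history that shows no comparable peak would suggest that the oscillation in the projection comes from the model specification rather than from the data.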

The results for iron (Fe) particle concentration prediction demonstrate that both Random Forest and XGBoost models robustly fulfill the proposed hypothesis, showing that AI models trained with historical oil analysis data and operational variables can reliably anticipate critical component wear. In this case, both models achieved near-zero MSE values and determination coefficients (R²) approaching 1 (Figure 16), confirming their ability to capture complex nonlinear relationships and hidden patterns in time-series data. In contrast, the LSTM model exhibited higher MSE and lower R², indicating reduced accuracy in representing Fe wear dynamics in this context. This performance gap opens opportunities for future research focused on optimizing recurrent neural network architectures or developing hybrid approaches that combine LSTM’s sequential processing capability with the robustness of ensemble models, ultimately enhancing predictive performance.

The results obtained with Random Forest (R² = 0.9972) and XGBoost (R² = 0.9949) support the hypothesis that AI models trained with historical oil analysis data and operational variables can reliably predict critical component wear. These values surpass the performance of the neural network model (R² = 0.86 [34]) reported in the textile sector, demonstrating that tree-based models offer superior predictive capability for this context. Furthermore, while AdaBoost [36] and Random Forest [37] have shown high effectiveness in classification tasks (92–98.26% accuracy) in sectors like textiles and manufacturing, our results confirm their adaptability to continuous-data domains like mining, for vibration and wear particle analysis. This cross-sector consistency strengthens the possibility of transferring methodologies proven in manufacturing to mining, provided specific adjustments are made in feature selection to accurately capture this sector’s unique operational conditions.

The lower performance observed in the LSTM model for predicting Fe levels may be related to data characteristics. As LSTMs are primarily designed to capture temporal dependencies in sequences, their effectiveness diminishes when the relationship between features and critical component wear lacks strong sequential patterns. Furthermore, these recurrent neural networks’ inherent complexity requires meticulous hyperparameter tuning and larger training datasets to achieve optimal performance. These results suggest that—under the analyzed conditions and data—tree-based models like Random Forest and XGBoost better align with the proposed hypothesis, demonstrating superior predictive capability for wear in mining predictive maintenance applications.

The observed performance difference between LSTM and tree-based models (Random Forest and XGBoost) can be attributed to the inherent limitations of recurrent neural networks. While LSTMs are designed to capture temporal dependencies, their advantage diminishes significantly when input features lack clear sequential patterns. Furthermore, their performance depends on fine-tuned hyperparameters and requires large volumes of labeled sequential data for effective generalization, making them particularly sensitive to noise and imbalanced distributions. In contrast, tree-based models efficiently process tabular data, possess implicit regularization, and demonstrate greater tolerance to data imperfections. This behavior, consistent with the proposed hypothesis, reinforces that in mining predictive maintenance contexts, tree algorithms offer a competitive advantage by reliably predicting critical component wear from historical oil analysis data and operational variables.

For copper (Cu), Random Forest tends to smooth predictions, limiting its ability to capture abrupt peaks in the time series. In contrast, XGBoost shows greater sensitivity to these variations, more accurately identifying some peaks, though it still struggles with extreme maximum values. These differences stem from each algorithm’s inherent characteristics: while Random Forest prioritizes stability and overfitting reduction through averaging multiple trees, XGBoost iteratively optimizes residual errors, enhancing its capacity to model local fluctuations. However, as the study hypothesis posits, selecting the optimal model for predicting critical component wear will depend on operational data characteristics, variable structure, and hyperparameter optimization—with both models remaining valid for predictive maintenance scenarios involving complex time series.
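The contrast between averaging and residual fitting can be illustrated on a toy series with a single spike. The sketch below is not the study's configuration: it uses one-split regression stumps, bootstrap averaging as a stand-in for Random Forest, and plain squared-error gradient boosting as a stand-in for XGBoost (which additionally uses regularization and second-order information). All data, function names, and settings are hypothetical.

```python
import random


def fit_stump(xs, ys):
    """Least-squares regression stump: (threshold, left_mean, right_mean)."""
    best = None
    for thr in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= thr]
        right = [y for x, y in zip(xs, ys) if x > thr]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, thr, lm, rm)
    if best is None:  # all x identical: predict the mean on both sides
        m = sum(ys) / len(ys)
        return (xs[0], m, m)
    return best[1:]


def predict_stump(stump, x):
    thr, lm, rm = stump
    return lm if x <= thr else rm


def bagged_predict(xs, ys, x, n_trees=50, seed=0):
    """Average the predictions of stumps fit on bootstrap resamples."""
    rng = random.Random(seed)
    preds = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]
        stump = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(predict_stump(stump, x))
    return sum(preds) / len(preds)


def boosted_fit(xs, ys, n_rounds=50, lr=0.5):
    """Squared-error gradient boosting: each stump fits the current residuals."""
    base = sum(ys) / len(ys)
    resid = [y - base for y in ys]
    stumps = []
    for _ in range(n_rounds):
        stump = fit_stump(xs, resid)
        stumps.append(stump)
        resid = [r - lr * predict_stump(stump, x) for x, r in zip(xs, resid)]
    return base, stumps, lr


def boosted_predict(model, x):
    base, stumps, lr = model
    return base + lr * sum(predict_stump(s, x) for s in stumps)


# Hypothetical Cu series: flat at 10 ppm with one spike of 40 ppm at index 10.
hours = list(range(20))
cu_ppm = [10.0] * 20
cu_ppm[10] = 40.0

bagged_peak = bagged_predict(hours, cu_ppm, 10)
boosted_peak = boosted_predict(boosted_fit(hours, cu_ppm), 10)
print(f"observed peak: {cu_ppm[10]:.1f}")
print(f"bagged estimate at peak:  {bagged_peak:.1f}")
print(f"boosted estimate at peak: {boosted_peak:.1f}")
```

Each averaged stump can only return the mean of a leaf that mixes the spike with its neighbors, so the ensemble average stays well below the observed peak. Boosting keeps correcting the remaining residual at the spike, which moves it closer to the peak and also explains its higher exposure to overfitting.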

In the silicon (Si) analysis, both models—Random Forest and XGBoost—show high agreement with empirical data, particularly in low-to-medium value ranges, confirming their ability to predict general wear trends. However, challenges persist in forecasting sharp peaks and valleys. Random Forest provides more stable projections, favoring global trend interpretation, while XGBoost’s greater sensitivity to local variations enables detection of abrupt changes but with increased overfitting risk. This behavior aligns with the study’s hypothesis that AI models trained on historical data and operational variables can reliably predict critical component wear, though their ultimate effectiveness depends on balancing stability versus sensitivity according to operational context.

The selection of Random Forest and XGBoost is based on their proven robustness against noise, ability to process multivariate industrial data, and high accuracy in operationally variable environments. These tree-based models excel at failure classification and prediction, even with incomplete or heterogeneous data. The LSTM model was included as an alternative to explore its capacity for capturing complex temporal dependencies, particularly relevant in progressive wear processes where time is a determining factor. The results support the hypothesis that implementing AI models trained on historical oil analysis data and operational variables enables reliable prediction of critical component wear. Their integration into condition monitoring systems would help optimize maintenance interventions, reduce unplanned downtime, and improve equipment operational availability.

While the developed predictive models demonstrated robust performance in estimating wear trends and operational conditions, projections should be interpreted cautiously, considering the inherent variability in data and operating conditions. Specifically, adjusting oil change frequency based on viscosity allowed for a reduction in scheduled downtime, with an estimated operational benefit of up to 10 h per maintenance interval. Additionally, wear prediction projected a potential increase of up to 2000 h in the service life of critical components, such as the turbocharger, whose standard replacement occurred at 7000 h but could be extended to 9000 h under certain operating conditions. These findings support the hypothesis that using AI models trained on historical oil analysis data and operational variables can reliably predict wear, contributing to maintenance optimization and improved availability. Future research should validate these results across different operational contexts and evaluate their integration into automated maintenance management systems.
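The turbocharger figures quoted above can be put in relative terms with simple arithmetic. In the sketch below, the 7000 h and 9000 h intervals come from the text, whereas the 63,000 h planning horizon is a hypothetical value chosen only because both intervals divide it evenly.

```python
standard_replacement_h = 7000   # standard replacement interval (from the text)
extended_replacement_h = 9000   # projected extended interval (from the text)

extension_h = extended_replacement_h - standard_replacement_h
extension_pct = 100 * extension_h / standard_replacement_h

# Hypothetical planning horizon, used only to compare replacement counts.
horizon_h = 63_000
replacements_standard = horizon_h // standard_replacement_h
replacements_extended = horizon_h // extended_replacement_h

print(f"extension: {extension_h} h ({extension_pct:.1f}% of the standard interval)")
print(f"replacements over {horizon_h} h: "
      f"{replacements_standard} standard vs {replacements_extended} extended")
```

Under these assumptions the extension amounts to about 28.6% of the standard interval and two fewer replacements over the horizon (7 instead of 9), provided the operating conditions that justify the extension actually hold.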

However, these estimates have not yet been cross-validated with physical wear measurements or documented field replacement records. Consequently, they should be regarded as preliminary approximations derived from historical analysis rather than definitive guides for operational decision-making without further verification. To enhance their applicability in demanding industrial environments, validation through controlled laboratory tests, systematic technical inspections, and comparison with actual maintenance logs is recommended. This process will not only corroborate the proposed hypothesis—that AI models can reliably predict critical component wear—but also enable model adjustments to operational specificities, thereby improving their generalization capacity and predictive robustness.

5. Conclusions

The Random Forest and XGBoost models demonstrated robust performance in predicting silicon wear, with XGBoost showing superior accuracy in detecting peaks and valleys. However, its sensitivity to fluctuations increases overfitting risk, underscoring the need for additional validation with data from diverse operational conditions to ensure generalization.

For copper prediction, both models captured the overall wear trend but showed limitations in reproducing sharp peaks and abrupt variations. These results suggest optimizing feature selection and hyperparameter tuning, as well as exploring hybrid approaches to improve accuracy in high-variability scenarios.

Regarding iron (Fe), Random Forest and XGBoost consistently outperformed the LSTM model, achieving low MSE values and R² scores close to 1. LSTM’s lower performance indicates that its architecture is suboptimal for this type of tabular data, reinforcing the suitability of tree-based models for predicting metal wear in industrial environments.

The SARIMA model identified a seasonal pattern in failure prediction but exhibited oscillations not fully aligned with historical behavior. Incorporating exogenous variables like workload, environmental conditions, and maintenance cycles could enhance its accuracy and strengthen its utility for predictive maintenance planning.

As future work, we propose expanding this framework into an integrated and autonomous maintenance system that combines the principles of Sustainable Maintenance (SM), Energy-Based Maintenance (EBM), and Prescriptive Maintenance. This would involve:

- Developing prescriptive models that not only predict failures but also generate optimized action plans considering operational constraints and environmental impact (e.g., rescheduling maintenance activities or prioritizing the use of spare parts with a lower carbon footprint).

- Implementing advanced IoT sensors to monitor the real-time energy consumption of air compressor equipment in underground mining and coupling them with EBM algorithms that correlate technical degradation with energy efficiency.

- Integrating a digital twin of the maintenance system that simulates scenarios under multi-objective criteria (availability, cost, and CO₂ emissions) to recommend interventions aligned with sustainability goals.

This approach would position the mining operation toward a circular autonomous maintenance standard, where decisions are technically sound, energy-efficient, and environmentally responsible.

This work recognizes the potential lack of generalizability of the models as a main limitation due to the dynamic and aggressive conditions in underground mining, such as water infiltration, confined spaces, and operational variability. Additionally, the scarcity of specialized literature on predictive maintenance for mobile equipment in underground mining hampers a comparative validation of the results.