Pose Detection and Recurrent Neural Networks for Monitoring Littering Violations

Abstract

1. Introduction

- (1) Reducing cleaning costs: Palembang City PUPR must deploy many workers every day to clean the Sekanak area, so less rubbish means fewer workers are needed;

- (2) Reducing maintenance and repair costs associated with environmental conservation and restoration;

- (3) Increasing the aesthetic appeal of public spaces, which raises property values in those areas and, in turn, tax revenues for the city government;

- (4) Improving public health: handling litter is important because littering tends to increase the number of pests;

- (5) Changing society’s perspective: the proposed device supports efforts to prevent littering, including public awareness campaigns and law enforcement actions;

- (6) Producing valuable data and insights on littering behavior patterns, which enable evidence-based decision-making for resource allocation and policy development;

- (7) Automating monitoring processes, which minimizes the need for continuous human supervision and can reduce expenses related to law enforcement personnel. Additionally, municipal governments can earn revenue through fines and penalties imposed on violators who litter.

- (1) It can monitor air quality and inform the user when the air quality is poor.

- (2) It monitors the temperature, humidity, and water level. IoT technology allows devices to be accessed by many people from different places using different devices.

2. Related Work

3. Materials and Methods

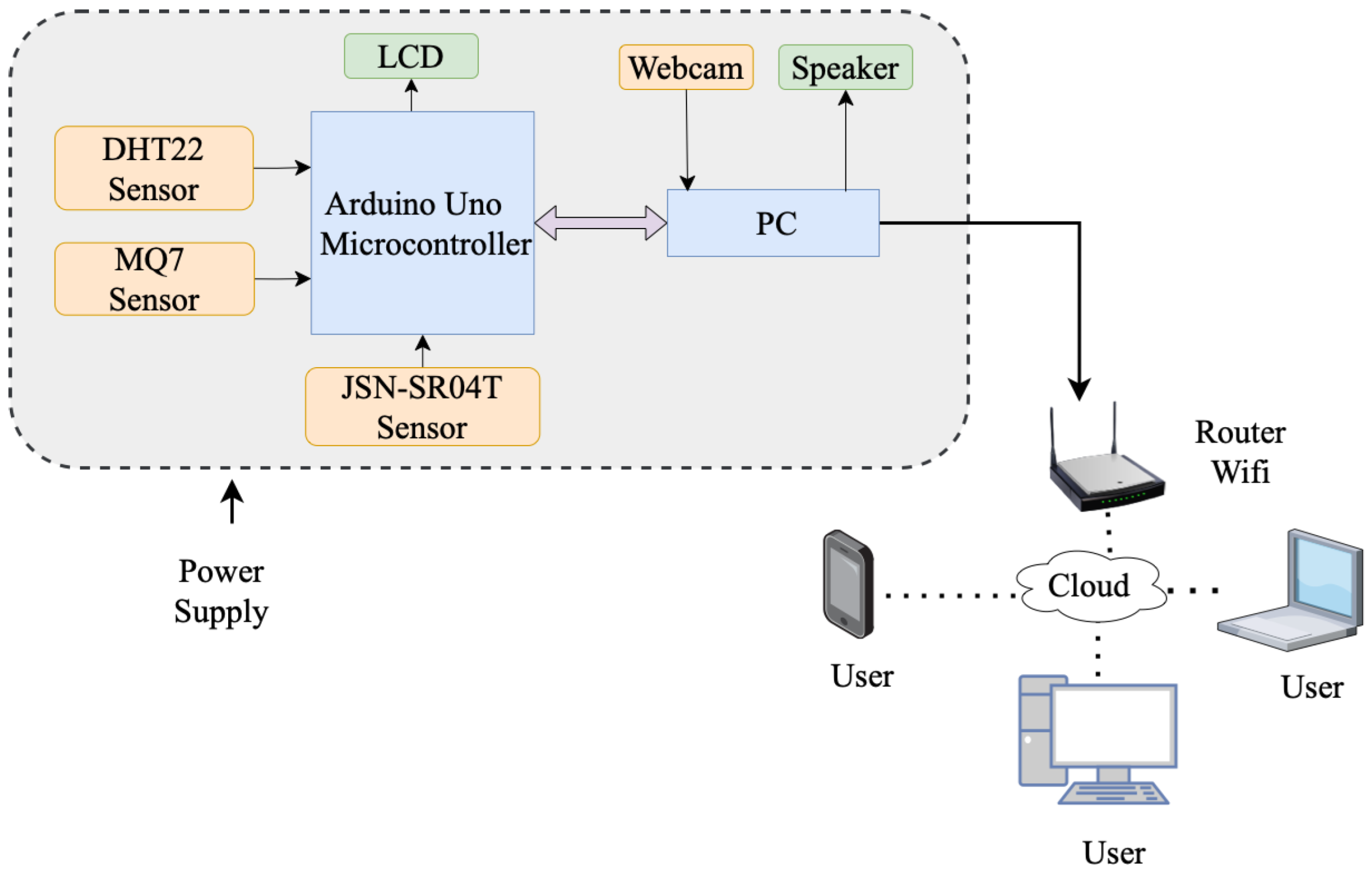

3.1. Hardware

3.2. Software

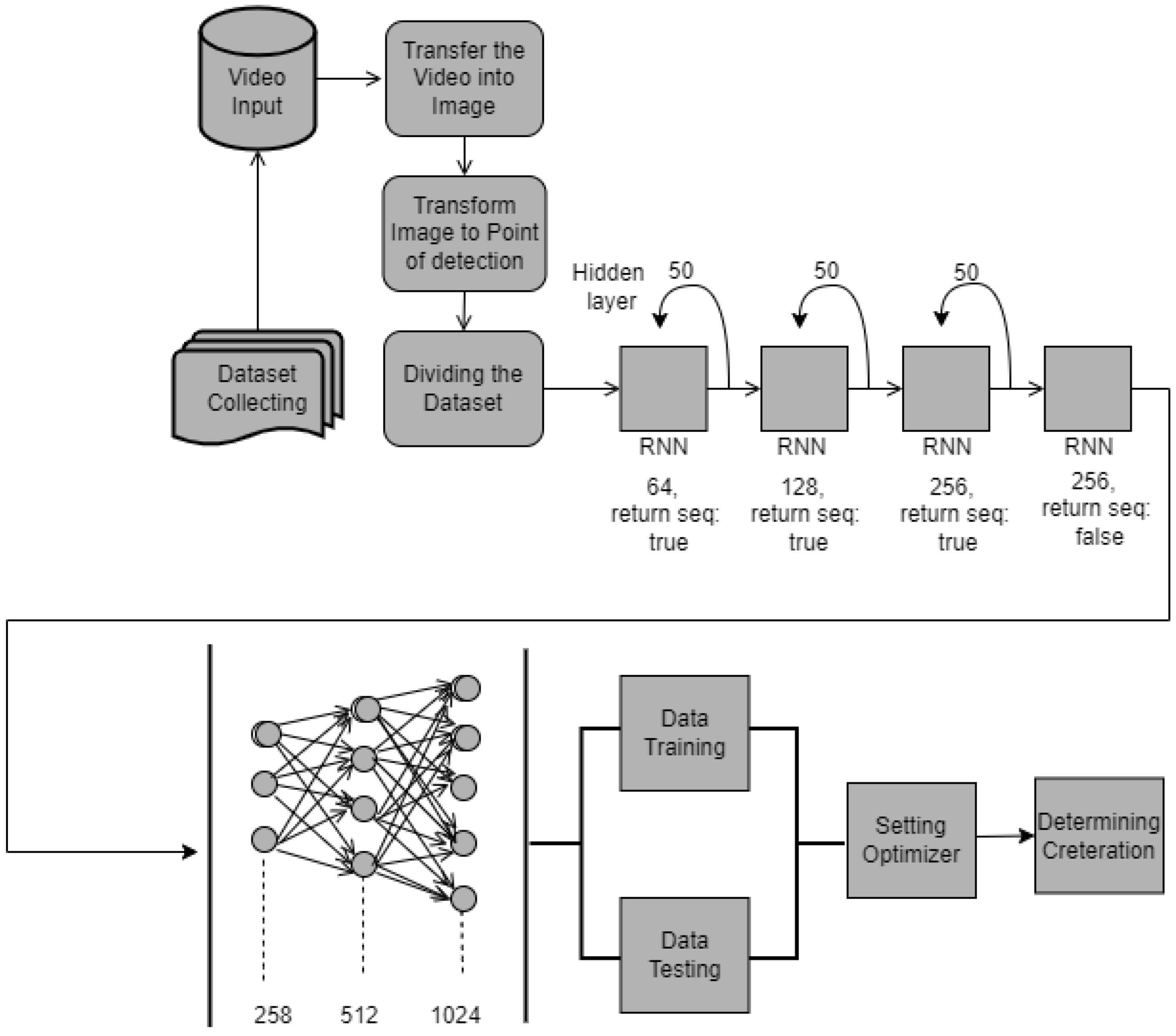

3.2.1. Dataset

3.2.2. Pre-Processing

3.2.3. Proposed Model

- Reading the provided sequential data (images) and processing them step by step.

- Recognizing how consecutive images relate to one another and capturing their dependencies and temporal patterns.

- Maintaining information about previously observed actions and using it to predict future movements.

- Analyzing the context in which littering occurs. This can include factors such as the person’s location, the presence of trash cans, and other environmental cues, which help the system decide whether a series of actions should be categorized as littering.

- Operating in real-time, continuously processing incoming data and making predictions as new information becomes available.

- Learning and adapting the system’s recognition capabilities over time by adjusting to changing patterns and behavior.

- Triggering an alert or taking action when an anomaly is detected.
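The behaviors listed above can be illustrated with a minimal sketch of a recurrent classifier over pose keypoints. This is not the authors' model: the 17-keypoint skeleton, the hidden size, the two class labels, the 0.5 alert threshold, and the names `TinyPoseRNN` and `should_alert` are all assumptions made for illustration, and the weights are random rather than trained. It only shows how a hidden state carries information from earlier frames forward and how a final prediction could trigger an alert.

```python
import math
import random

NUM_KEYPOINTS = 17             # assumption: a COCO-style skeleton
FEATURES = NUM_KEYPOINTS * 2   # (x, y) per keypoint
HIDDEN = 8                     # assumption: small hidden state for the sketch
CLASSES = ("normal", "littering")


def _matrix(rows, cols, rng):
    """Small random weight matrix (untrained, illustration only)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def _vec_mat(vec, mat):
    """Row vector times matrix: result[j] = sum_i vec[i] * mat[i][j]."""
    return [sum(v * row[j] for v, row in zip(vec, mat))
            for j in range(len(mat[0]))]


class TinyPoseRNN:
    """Elman-style RNN: h_t = tanh(x_t W_xh + h_{t-1} W_hh)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_xh = _matrix(FEATURES, HIDDEN, rng)
        self.w_hh = _matrix(HIDDEN, HIDDEN, rng)
        self.w_hy = _matrix(HIDDEN, len(CLASSES), rng)

    def step(self, x_t, h_prev):
        """Consume one frame; the returned state remembers earlier frames."""
        from_input = _vec_mat(x_t, self.w_xh)
        from_state = _vec_mat(h_prev, self.w_hh)
        return [math.tanh(a + b) for a, b in zip(from_input, from_state)]

    def predict_proba(self, sequence):
        """Process frames in order, then apply a softmax to the last state."""
        h = [0.0] * HIDDEN
        for x_t in sequence:
            h = self.step(x_t, h)
        logits = _vec_mat(h, self.w_hy)
        peak = max(logits)
        exps = [math.exp(z - peak) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]


def should_alert(probs, threshold=0.5):
    """Hypothetical alert rule: fire when P(littering) reaches the threshold."""
    return probs[CLASSES.index("littering")] >= threshold


# Usage: 30 frames of placeholder keypoints in [0, 1) stand in for real poses.
frame_rng = random.Random(1)
frames = [[frame_rng.random() for _ in range(FEATURES)] for _ in range(30)]
model = TinyPoseRNN()
probs = model.predict_proba(frames)
print(dict(zip(CLASSES, probs)), "alert:", should_alert(probs))
```

For real-time use, `step` could be called once per incoming frame while the hidden state is kept between calls, so a prediction is available as each new frame arrives.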

3.3. Web Integration

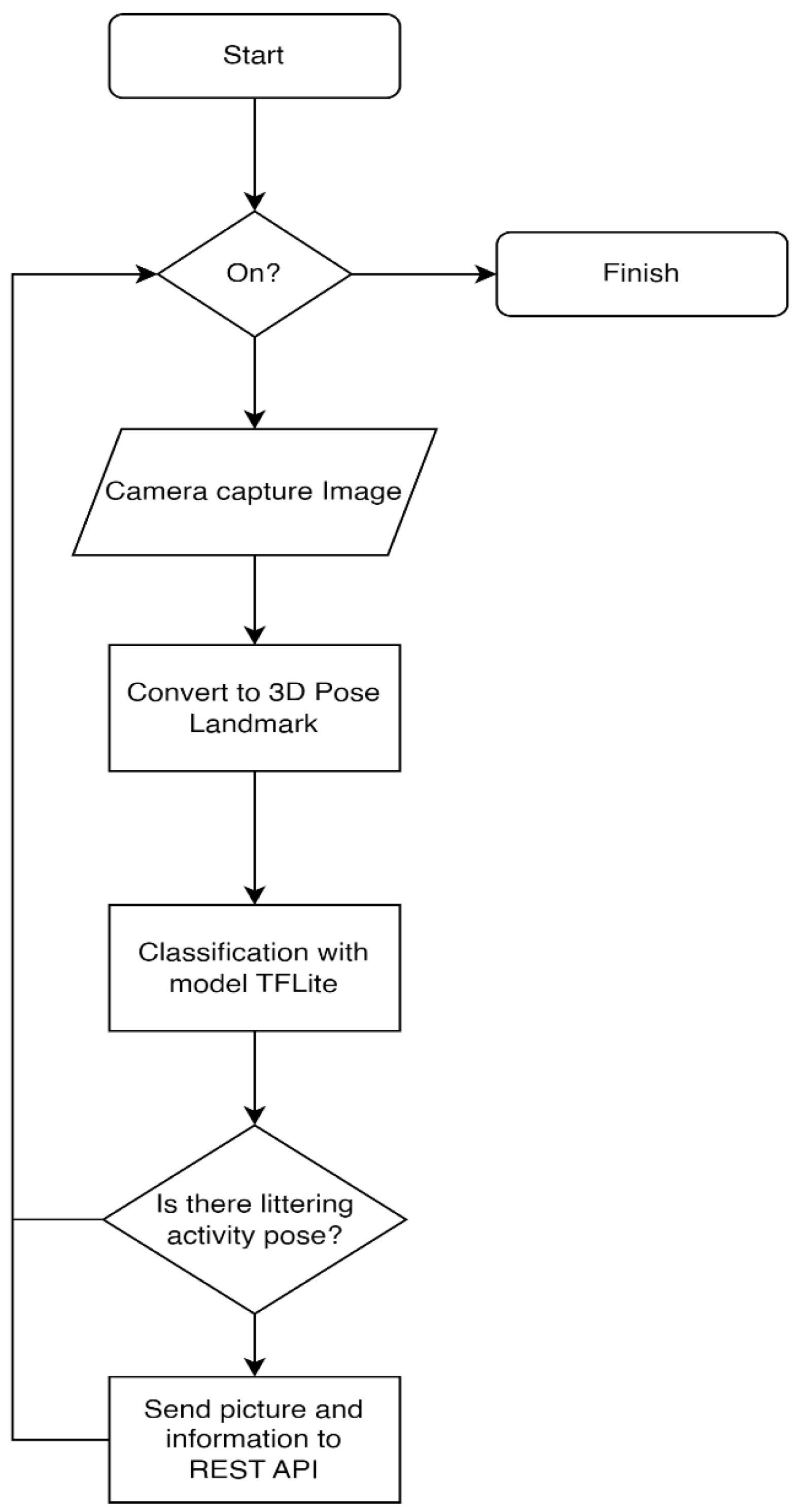

3.4. Flowchart

4. Results and Discussion

4.1. Standing Still Object

4.2. Moving Object

4.3. Sitting Object

- (1) Traffic management and smart cities: identifying real-time traffic violations such as illegal parking, running signals, or speeding.

- (2) Traffic flow analysis: monitoring traffic flow and predicting congestion or accidents.

- (3) Security and surveillance: detecting unauthorized entry into restricted zones and potential threats in crowded places or critical infrastructure.

- (4) Environmental monitoring: identifying illegal logging or forest clearing, detecting illegal hunting activities, or ensuring the safety of endangered species in their habitat.

- (5) Health: using sensors to monitor patient movement in care facilities to detect falls or other anomalies.

- (6) Agriculture and livestock: using sensors to detect disease and pest activity or monitor soil health, and observing livestock movement and health in real time.

- (7) Retail: monitoring customer movements in retail stores to gain insight into shopping patterns and preferences and to detect potential shoplifting activity in real time.

- (8) Facility usage analysis: monitoring public facilities such as parks, fitness centers, or libraries to collect data about peak times, user behavior, or facility health.

- (9) Early warning systems: using integrated sensors to detect early signs of natural disasters such as earthquakes, tsunamis, or volcanic eruptions, and monitoring affected areas to assess damage, track relief efforts, or detect secondary hazards.

- (10) Audience engagement analysis: observing audience behavior during a performance, concert, or exhibition to gather insights about engagement and preferences.

5. Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Marfuah, D.; Noviyanti, R.D.; Kusudaryati, D.P.D.; Rahmala, G.U. Health education in improving clean and healthy life behavior (PHBS) at community in the Jebol Ngrombo village Baki Sukoharjo. J. Pengabdi. Dan Pemberdaya. Masy. Indones. 2022, 2, 309–320.

- Kustiari, T.; Suryadi, U.; Nadipah, I. Counseling on processing of waste into solid organic fertilizer for GAPOKTAN farmers rukun tani Segobang village, Licin district, Banyuwangi regency, East Java. J. Pengabdi. Dan Pemberdaya. Masy. Indones. 2022, 2, 165–174.

- Permatasari, A.; Triyono, T.; Walinegoro, B.G. Training on processing household waste into organic liquid fertilizer for PKK Cadres in Baturetno village. J. Pengabdi. Dan Pemberdaya. Masy. Indones. 2022, 2, 134–140.

- Herdiansyah, H.; Brotosusilo, A.; Negoro, H.A.; Sari, R.; Zakianis, Z. Parental education and good child habits to encourage sustainable littering behavior. Sustainability 2021, 13, 8645.

- Siddiqua, A.; Hahladakis, J.N.; Al-Attiya, W.A.K.A. An overview of the environmental pollution and health effects associated with waste landfilling and open dumping. Environ. Sci. Pollut. Res. 2022, 29, 58514–58536.

- Abubakar, I.R.; Maniruzzaman, K.M.; Dano, U.L.; AlShihri, F.S.; AlShammari, M.S.; Ahmed, S.M.S.; Al-Gehlani, W.A.G.; Alrawaf, T.I. Environmental sustainability impacts of solid waste management practices in the global south. Int. J. Environ. Res. Public Health 2021, 19, 12717.

- Efendi, Y.; Imardi, S.; Muzawi, R.; Syaifullah, M. Application of RFID internet of things for school empowerment towards smart school. J. Pengabdi. Dan Pemberdaya. Masy. Indones. 2021, 1, 67–77.

- Qasim, A.B.; Pettirsch, A. Recurrent neural networks for video object detection. arXiv 2020, arXiv:2010.15740.

- Husni, N.L.; Sari, P.A.R.; Handayani, A.S.; Dewi, T.; Seno, S.A.H.; Caesarendra, W.; Glowacz, A.; Oprzędkiewicz, K.; Sułowicz, M. Real-time littering activity monitoring based on image classification method. Smart Cities 2021, 4, 1496–1518.

- Xia, K.; Huang, J.; Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 2020, 8, 56855–56866.

- Mohsen, S. Recognition of human activity using GRU deep learning algorithm. Multimed. Tools Appl. 2023.

- Hernández, Ó.G.; Morell, V.; Ramon, J.L.; Jara, C.A. Human pose detection for robotic-assisted and rehabilitation environments. Appl. Sci. 2021, 11, 4183.

- Noori, F.M.; Wallace, B.; Uddin, M.Z.; Torresen, J. A robust human activity recognition approach using OpenPose, motion features, and deep recurrent neural network. LNCS 2019, 11482, 299–310.

- Yu, T.; Chen, J.; Yan, N.; Liu, X. A multi-layer parallel LSTM network for human activity recognition with smartphone sensors. In Proceedings of the 10th International Conference on Wireless Communications and Signal Processing (WCSP), Hangzhou, China, 18–20 October 2018; pp. 1–6.

- Luvizon, D.C.; Picard, D.; Tabia, H. 2D/3D pose estimation and action recognition using multitask deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5137–5146.

- Debnath, B.; Orbrien, M.; Yamaguchi, M.; Behera, A. Adapting MobileNets for mobile based upper body pose estimation. In Proceedings of the AVSS 2018—2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Alessandrini, M.; Biagetti, G.; Crippa, P.; Falaschetti, L.; Turchetti, C. Recurrent Neural Network for Human Activity Recognition in Embedded Systems Using PPG and Accelerometer Data. Electronics 2021, 10, 1715.

- Park, S.U.; Park, J.H.; Al-Masni, M.A.; Al-Antari, M.A.; Uddin, M.Z.; Kim, T.S. A depth camera-based human activity recognition via deep learning recurrent neural network for health and social care services. Procedia Comput. Sci. 2016, 100, 78–84.

- Singh, D.; Merdivan, E.; Psychoula, I.; Kropf, J.; Hanke, S.; Geist, M.; Holzinger, A. Human Activity recognition using recurrent neural networks. arXiv 2018, arXiv:1804.07144.

- Perko, R.; Fassold, H.; Almer, A.; Wenighofer, R.; Hofer, P. Human Tracking and Pose Estimatin for Subsurface Operations. Available online: https://pure.unileoben.ac.at/en/publications/human-tracking-and-pose-estimatin-for-subsurface-operations (accessed on 26 October 2023).

- Zhang, Y.; Wang, C.; Wang, X.; Liu, W.; Zeng, W. VoxelTrack: Multi-person 3D human pose estimation and tracking in the wild. arXiv 2021, arXiv:2108.02452.

- Megawan, S.; Lestari, W.S. Deteksi spoofing wajah menggunakan Faster R-CNN dengan arsitektur Resnet50 pada video. J. Nas. Tek. Elektro dan Teknol. Inf. 2020, 9, 261–267.

- Rikatsih, N.; Supianto, A.A. Classification of posture reconstruction with univariate time series data type. In Proceedings of the 2018 International Conference on Sustainable Information Engineering and Technology (SIET), Malang, Indonesia, 10–12 November 2018; pp. 322–325.

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D human pose estimation: New benchmark and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

- Fassold, H.; Gutjahr, K.; Weber, A.; Perko, R. A real-time algorithm for human action recognition in RGB and thermal video. arXiv 2023, arXiv:2304.01567v1.

- Cheng, Y.; Yang, B.; Wang, B.; Tan, R.T. 3D human pose estimation using spatio-temporal networks with explicit occlusion training. In Proceedings of the AAAI 2020—34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 10631–10638.

- Zhang, J.; Wang, Y.; Zhou, Z.; Luan, T.; Wang, Z.; Qiao, Y. Learning dynamical human-joint affinity for 3D pose estimation in videos. IEEE Trans. Image Process. 2021, 30, 7914–7925.

- Uddin, M.Z.; Torresen, J. A deep learning-based human activity recognition in darkness. In Proceedings of the 2018 Colour and Visual Computing Symposium, Gjovik, Norway, 19–20 September 2018; pp. 1–5.

- Wang, J.; Xu, E.; Xue, K.; Kidzinski, L. 3D pose detection in videos: Focusing on occlusion. arXiv 2020, arXiv:2006.13517.

- Steven, G.; Purbowo, A.N. Penerapan 3D human pose estimation indoor area untuk motion capture dengan menggunakan YOLOv4-Tiny, EfficientNet simple baseline, dan VideoPose3D. J. Infra 2022, 10, 1–7.

- Liu, R.; Shen, J.; Wang, H.; Chen, C.; Cheung, S.-C.; Asari, V.K. Enhanced 3D human pose estimation from videos by using attention-based neural network with dilated convolutions. Int. J. Comput. Vis. 2021, 129, 1596–1615.

- Llopart, A. LiftFormer: 3D human pose estimation using attention models. arXiv 2020, arXiv:2009.00348.

- Yu, C.; Wang, B.; Yang, B.; Tan, R.T. Multi-scale networks for 3D human pose estimation with inference stage optimization. arXiv 2020, arXiv:2010.06844.

- Krizhevsky, B.A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2012, 60, 84–90.

- Wu, D.; Zheng, S.-J.; Zhang, X.-P.; Yuan, C.-A.; Cheng, F.; Zhao, Y.; Lin, Y.-J.; Zhao, Z.-Q.; Jiang, Y.-J.; Huang, D.-S. Deep learning-based methods for person re-identification: A comprehensive review. Neurocomputing 2019, 337, 354–371.

- Chen, Y.; Tian, Y.; He, M. Monocular human pose estimation: A survey of deep learning-based methods. Comput. Vis. Image Underst. 2020, 192, 102897.

- Wang, Z.; Chen, R.; Liu, M.; Dong, G.; Basu, A. SPGNet: Spatial projection guided 3D human pose estimation in low dimensional space. Smart Multimed. LNCS 2022, 13497, 1–15.

| No. | Area | Method/Technique | Datasets | Focus | Ref. |
|---|---|---|---|---|---|
| 1. | Indoor | CNN-based | Images of humans. | For robotic-assisted and rehabilitation environments. | [12] |
| 2. | Indoor | OpenPose, RNNs, LSTM | Multimodal human action dataset, such as standing up, sitting down, jumping, bending, waving, clapping, and throwing. | Using motion features. | [13,14] |
| 3. | Indoor | CNNs | High-level pose representation, such as drinking water and making a phone call. | Using multitask deep learning. | [15,16] |
| 4. | Indoor | RNN using PPG | Three different activities (resting, squat, and stepper). | Embedded systems using PPG and accelerometer data. | [17] |
| 5. | Indoor | RNN | Twelve activities, such as left arm, push right, goggles, and so on. | For health and social care services. | [18,19] |
| 6. | Indoor | YOLOv3 to Scaled-YOLOv4, TCN | COCO, MPII, and HumanEva-1, such as smoking. | For subsurface operations. | [20,21] |
| 7. | Outdoor | CNN, LSTM, Faster R-CNN | Walking, walking upstairs, laying, and yoga. | Lightweight deep learning model using smartphones. | [22] |
| 8. | Outdoor | LSTM, RNN | Walking, jogging, standing, walking class, jumping, and running. | Implemented in darkness. | [23,24] |
| 9. | Outdoor | K-NN | Walking, running, swimming, and jumping. | Univariate time series data. | [25] |
| 10. | Indoor and outdoor | GCN | MPII and HumanEva-1. | Using learning dynamics. | [26] |
| 11. | Indoor and outdoor | Mask-RCNN | Walking and boxing. | Using attention models. | [27] |

| No. | Captured Position | Time | Activity | Device Detection | Notification | Note |
|---|---|---|---|---|---|---|
| 1. | FRONT | Morning | Normal | Normal | Off | Success |
| 2. | FRONT | Morning | Littering | Littering | On | Success |
| 3. | FRONT | Afternoon | Normal | Normal | Off | Success |
| 4. | FRONT | Afternoon | Littering | Littering | On | Success |
| 5. | FRONT | Night | Normal | Normal | Off | Success |
| 6. | FRONT | Night | Littering | Littering | On | Success |
| 7. | LEFT | Morning | Normal | Normal | Off | Success |
| 8. | LEFT | Morning | Littering | Littering | On | Success |
| 9. | LEFT | Afternoon | Normal | Normal | Off | Success |
| 10. | LEFT | Afternoon | Littering | Littering | On | Success |
| 11. | LEFT | Night | Normal | Normal | Off | Success |
| 12. | LEFT | Night | Littering | Littering | On | Success |
| 13. | RIGHT | Morning | Normal | Normal | Off | Success |
| 14. | RIGHT | Morning | Littering | Littering | On | Success |
| 15. | RIGHT | Afternoon | Normal | Normal | Off | Success |
| 16. | RIGHT | Afternoon | Littering | Littering | On | Success |
| 17. | RIGHT | Night | Normal | Normal | Off | Success |
| 18. | RIGHT | Night | Littering | Littering | On | Success |
| 19. | BACK | Morning | Normal | Normal | Off | Success |
| 20. | BACK | Morning | Littering | Littering | On | Success |
| 21. | BACK | Afternoon | Normal | Normal | Off | Success |
| 22. | BACK | Afternoon | Littering | Littering | On | Success |
| 23. | BACK | Night | Normal | Normal | Off | Success |
| 24. | BACK | Night | Littering | Littering | On | Success |

| No. | Captured Position | Time | Activity | Device Detection | Notification | Note |
|---|---|---|---|---|---|---|
| 1. | FRONT | Morning | Normal | Normal | Off | Success |
| 2. | FRONT | Morning | Littering | Littering | On | Success |
| 3. | FRONT | Afternoon | Normal | Normal | Off | Success |
| 4. | FRONT | Afternoon | Littering | Littering | On | Success |
| 5. | FRONT | Night | Normal | Normal | Off | Success |
| 6. | FRONT | Night | Littering | Littering | On | Success |
| 7. | LEFT | Morning | Normal | Normal | Off | Success |
| 8. | LEFT | Morning | Littering | Littering | On | Success |
| 9. | LEFT | Afternoon | Normal | Normal | Off | Success |
| 10. | LEFT | Afternoon | Littering | Littering | On | Success |
| 11. | LEFT | Night | Normal | Normal | Off | Success |
| 12. | LEFT | Night | Littering | Littering | On | Success |
| 13. | RIGHT | Morning | Normal | Normal | Off | Success |
| 14. | RIGHT | Morning | Littering | Littering | On | Success |
| 15. | RIGHT | Afternoon | Normal | Normal | Off | Success |
| 16. | RIGHT | Afternoon | Littering | Littering | On | Success |
| 17. | RIGHT | Night | Normal | Normal | Off | Success |
| 18. | RIGHT | Night | Littering | Littering | On | Success |
| 19. | BACK | Morning | Normal | Normal | Off | Success |
| 20. | BACK | Morning | Littering | Littering | On | Success |
| 21. | BACK | Afternoon | Normal | Normal | Off | Success |
| 22. | BACK | Afternoon | Littering | Littering | On | Success |
| 23. | BACK | Night | Normal | Normal | Off | Success |
| 24. | BACK | Night | Littering | Littering | On | Success |

| No. | Captured Position | Time | Activity | Device Detection | Notification | Note |
|---|---|---|---|---|---|---|
| 1. | FRONT | Morning | Normal | Normal | Off | Success |
| 2. | FRONT | Morning | Littering | Littering | On | Success |
| 3. | FRONT | Afternoon | Normal | Normal | Off | Success |
| 4. | FRONT | Afternoon | Littering | Littering | On | Success |
| 5. | FRONT | Night | Normal | Normal | Off | Success |
| 6. | FRONT | Night | Littering | Littering | On | Success |
| 7. | LEFT | Morning | Normal | Normal | Off | Success |
| 8. | LEFT | Morning | Littering | Littering | On | Success |
| 9. | LEFT | Afternoon | Normal | Normal | Off | Success |
| 10. | LEFT | Afternoon | Littering | Littering | On | Success |
| 11. | LEFT | Night | Normal | Normal | Off | Success |
| 12. | LEFT | Night | Littering | Littering | On | Success |
| 13. | RIGHT | Morning | Normal | Normal | Off | Success |
| 14. | RIGHT | Morning | Littering | Littering | On | Success |
| 15. | RIGHT | Afternoon | Normal | Normal | Off | Success |
| 16. | RIGHT | Afternoon | Littering | Littering | On | Success |
| 17. | RIGHT | Night | Normal | Normal | Off | Success |
| 18. | RIGHT | Night | Littering | Littering | On | Success |
| 19. | BACK | Morning | Normal | Normal | Off | Success |
| 20. | BACK | Morning | Littering | Littering | On | Success |
| 21. | BACK | Afternoon | Normal | Normal | Off | Success |
| 22. | BACK | Afternoon | Littering | Littering | On | Success |
| 23. | BACK | Night | Normal | Normal | Off | Success |
| 24. | BACK | Night | Littering | Littering | On | Success |
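Each of the three detection tables above lists the same 24 trials (four captured positions, three times of day, two activities), and in every row the device detection matches the performed activity. The sketch below shows one way such a table could be tallied. The record layout and the names `build_trials`, `match_rate`, and `notifications_consistent` are assumptions for illustration; the trial values are generated to mirror the rows as printed, not read from the authors' logs.

```python
from itertools import product

POSITIONS = ("FRONT", "LEFT", "RIGHT", "BACK")
TIMES = ("Morning", "Afternoon", "Night")
ACTIVITIES = ("Normal", "Littering")


def build_trials():
    """Recreate the 24 rows of one detection table as dictionaries."""
    trials = []
    for position, time_of_day, activity in product(POSITIONS, TIMES, ACTIVITIES):
        trials.append({
            "position": position,
            "time": time_of_day,
            "activity": activity,
            # As printed in the tables: detection equals the activity.
            "detected": activity,
            # As printed in the tables: notification is On only for littering.
            "notification": "On" if activity == "Littering" else "Off",
        })
    return trials


def match_rate(trials):
    """Fraction of trials where the detected label equals the activity."""
    hits = sum(1 for t in trials if t["detected"] == t["activity"])
    return hits / len(trials)


def notifications_consistent(trials):
    """True when a notification is On exactly for detected littering."""
    return all((t["notification"] == "On") == (t["detected"] == "Littering")
               for t in trials)


trials = build_trials()
rate = match_rate(trials)
print(f"{len(trials)} trials, match rate {rate:.0%}, "
      f"notifications consistent: {notifications_consistent(trials)}")
```

With rows recorded from a real run, the same two functions would report how many detections disagree with the ground-truth activity and whether any notification fired incorrectly.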

| No. | Temperature (°C) | Humidity (%) | Water Level (cm) | Air Quality (ADC) |
|---|---|---|---|---|
| 1. | 29 | 72 | 107 | 156 |
| 2. | 36 | 55 | 116 | 146 |
| 3. | 35 | 60 | 105 | 138 |
| 4. | 27 | 72 | 99 | 127 |
| 5. | 37 | 55 | 102 | 137 |
| 6. | 35 | 60 | 100 | 172 |
| 7. | 37 | 57 | 107 | 122 |
| 8. | 34 | 64 | 98 | 147 |
| 9. | 32 | 68 | 101 | 166 |
| 10. | 31 | 75 | 120 | 128 |
| 11. | 30 | 78 | 161 | 145 |
| 12. | 32 | 69 | 191 | 162 |
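As a small worked example, the twelve sensor readings in the table above can be summarized per column. The sketch below uses only the values printed in the table; the column keys and the `summarize` helper are hypothetical names chosen for illustration, and no alert thresholds are assumed because the text does not state any.

```python
from statistics import mean

# Rows copied from the table: temperature (°C), humidity (%),
# water level (cm), air quality (raw ADC value).
READINGS = [
    (29, 72, 107, 156),
    (36, 55, 116, 146),
    (35, 60, 105, 138),
    (27, 72, 99, 127),
    (37, 55, 102, 137),
    (35, 60, 100, 172),
    (37, 57, 107, 122),
    (34, 64, 98, 147),
    (32, 68, 101, 166),
    (31, 75, 120, 128),
    (30, 78, 161, 145),
    (32, 69, 191, 162),
]

# Hypothetical column keys for the four measured quantities.
COLUMNS = ("temperature_c", "humidity_pct", "water_level_cm", "air_quality_adc")


def summarize(readings):
    """Return the min, max, and mean of each column."""
    summary = {}
    for index, name in enumerate(COLUMNS):
        values = [row[index] for row in readings]
        summary[name] = {
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
    return summary


summary = summarize(READINGS)
for name, stats in summary.items():
    print(f"{name}: min={stats['min']} max={stats['max']} mean={stats['mean']:.2f}")
```

The summary makes the spread in the water-level column easy to see: most readings sit near 100 cm, while the last two rows rise to 161 cm and 191 cm.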

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Husni, N.L.; Felia, O.; Abdurrahman; Handayani, A.S.; Pasarella, R.; Bastari, A.; Sylvia, M.; Rahmaniar, W.; Seno, S.A.H.; Caesarendra, W. Pose Detection and Recurrent Neural Networks for Monitoring Littering Violations. Eng 2023, 4, 2722-2740. https://doi.org/10.3390/eng4040155
