A Basic Study for Predicting Dysphagia in Panoramic X-ray Images Using Artificial Intelligence (AI) Part 2: Analysis of the Position of the Hyoid Bone on Panoramic Radiographs

Abstract

1. Introduction

2. Materials and Methods

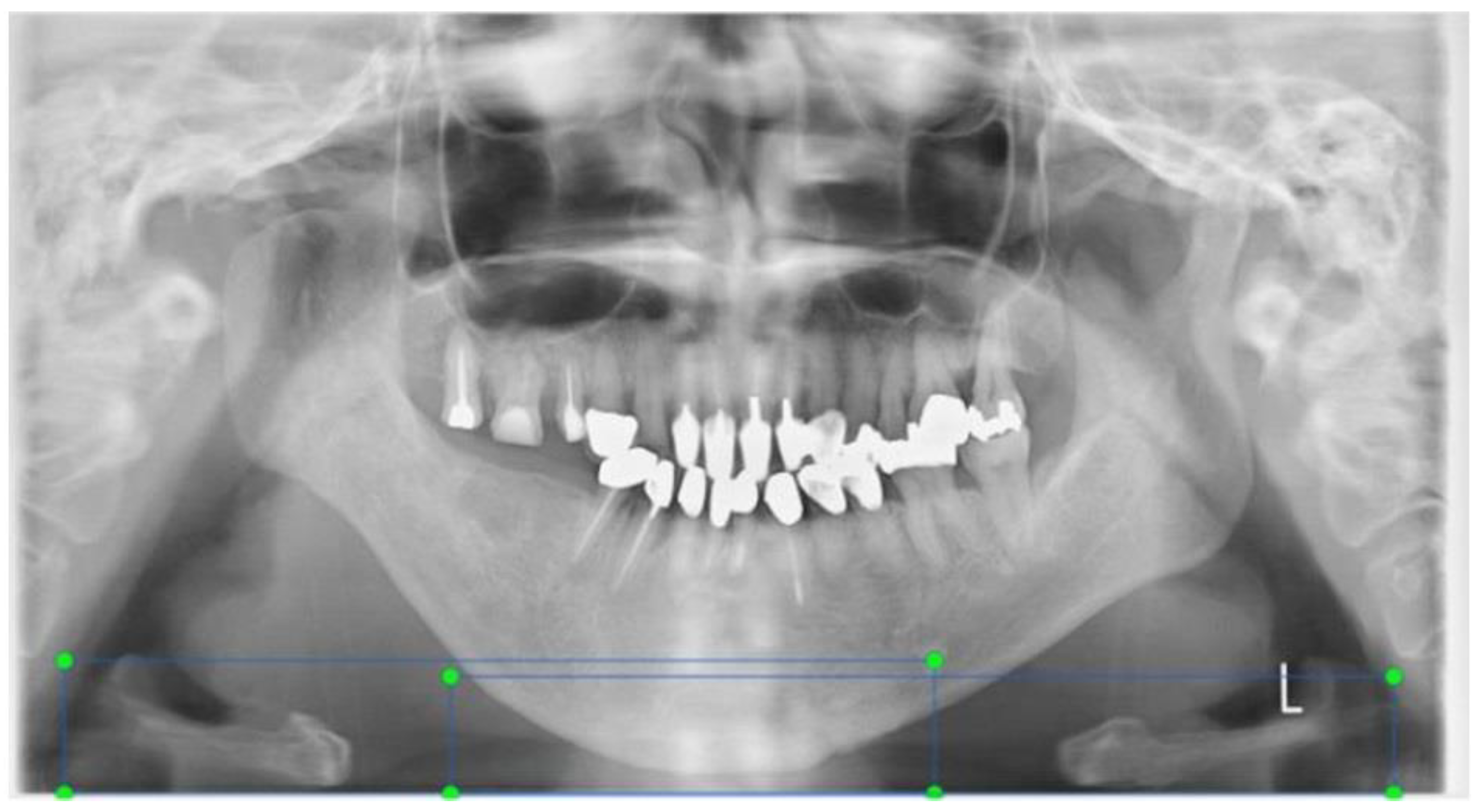

2.1. Acquisition of Panoramic X-ray Images

2.2. Ethical Statement

2.3. Vertical Position Classification of the Hyoid Bone

- The bilateral mandibular line: A simulated line connecting the right and left angles of the mandible.

- The mandibular border line: The bilateral mandibular line translated in parallel until it passes through the lowest point of the lower border of the mandible.
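The two reference lines can be sketched geometrically as follows. This is a minimal illustration only: the landmark names, the pixel coordinates, and the helper functions `signed_distance` and `hyoid_offsets` are hypothetical, and the sketch does not reproduce how the hyoid position types were actually assigned.

```python
import math
from typing import NamedTuple, Tuple


class Point(NamedTuple):
    x: float  # pixel column
    y: float  # pixel row; a larger y is lower in the image


def signed_distance(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the line through a and b.

    Positive when p lies below the line in image coordinates.
    """
    # Order the two line points left to right so the sign is stable.
    if a.x > b.x:
        a, b = b, a
    dx, dy = b.x - a.x, b.y - a.y
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("the two mandibular angle points coincide")
    return (dx * (p.y - a.y) - dy * (p.x - a.x)) / length


def hyoid_offsets(right_angle: Point, left_angle: Point,
                  lowest_border: Point, hyoid: Point) -> Tuple[float, float]:
    """Offsets of the hyoid from both reference lines (hypothetical helper).

    Returns (offset from the bilateral mandibular line,
             offset from the mandibular border line).
    """
    d_hyoid = signed_distance(hyoid, right_angle, left_angle)
    # The border line is the bilateral line shifted in parallel so that it
    # passes through the lowest point of the lower border of the mandible.
    d_border = signed_distance(lowest_border, right_angle, left_angle)
    return d_hyoid, d_hyoid - d_border


# Made-up landmark coordinates, for illustration only.
offsets = hyoid_offsets(Point(100, 400), Point(900, 400),
                        Point(500, 460), Point(500, 430))
print(offsets)
```

In this made-up example the hyoid point lies below the bilateral mandibular line and above the mandibular border line, so the first offset is positive and the second is negative.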

2.4. Convolutional Neural Network Selection

Annotations

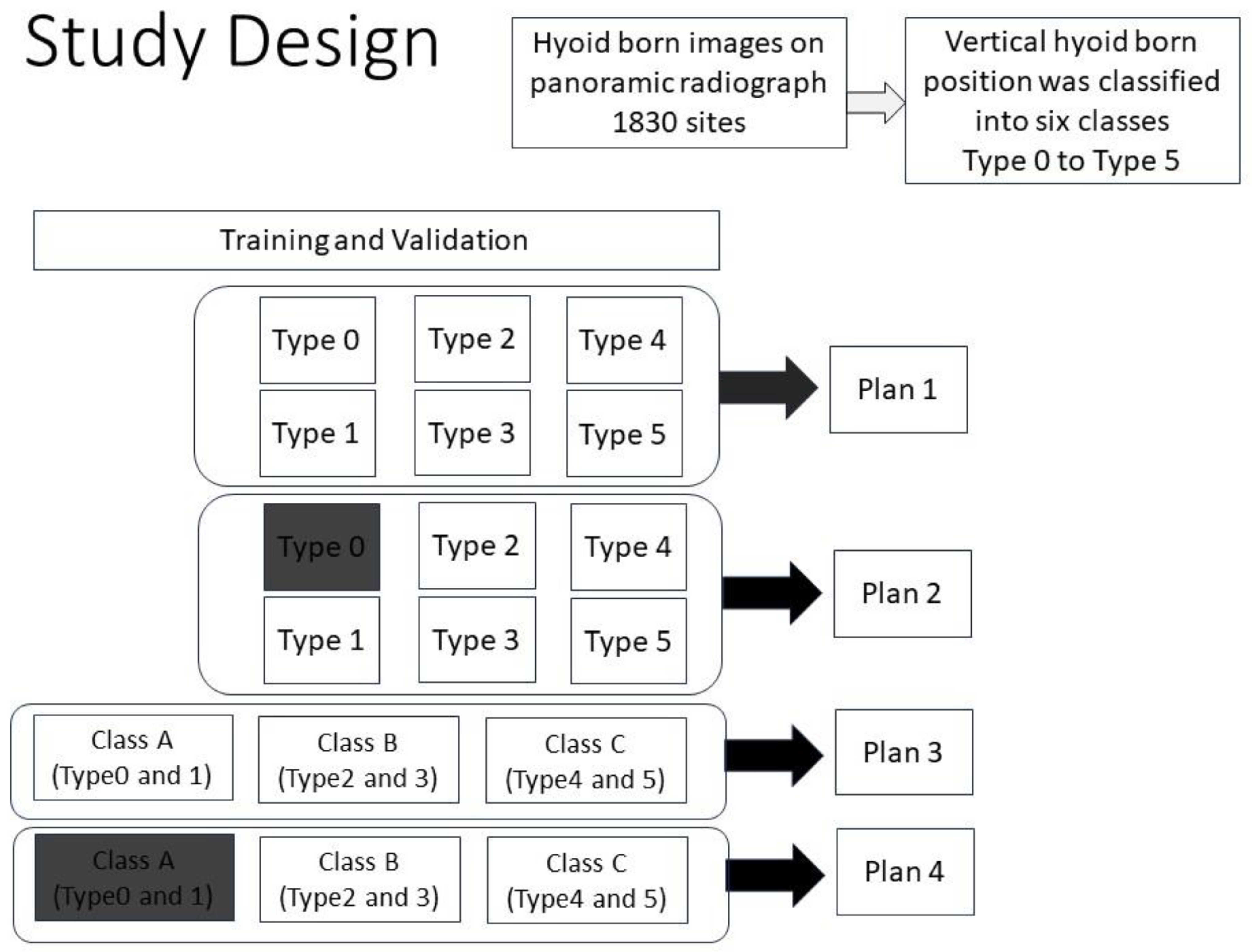

2.5. Learning Method

- Plan 1 (study of 6 types).

- Plan 2 (study of 5 types).

- Plan 3 (study of 3 classes).

- Plan 4 (study of 2 classes).
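The regrouping from six types into three classes can be sketched as below. The mapping follows the labels used in the results table (Class A: Types 0 and 1; Class B: Types 2 and 3; Class C: Types 4 and 5); the names `TYPE_TO_CLASS` and `regroup` are hypothetical and are not taken from the study's software.

```python
from collections import Counter
from typing import Iterable, List

# Mapping taken from the class labels in the results table.
TYPE_TO_CLASS = {0: "A", 1: "A", 2: "B", 3: "B", 4: "C", 5: "C"}


def regroup(type_labels: Iterable[int]) -> List[str]:
    """Convert per-image type labels (0-5) into class labels (A-C)."""
    return [TYPE_TO_CLASS[t] for t in type_labels]


# Illustrative labels only, not study data.
example_types = [0, 1, 2, 3, 4, 5, 4, 1]
example_classes = regroup(example_types)
class_counts = Counter(example_classes)
print(example_classes, dict(class_counts))
```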

2.6. Cross Validation

2.7. Evaluation

- Recall (true positive rate, TPR) = TP/(TP + FN).

- Precision = TP/(TP + FP).

- Accuracy = (TP + TN)/(TP + FP + TN + FN).

- PR-AUC (area under the precision–recall curve): PR curves were plotted to evaluate classification ability, and the area under each PR curve (AUC) was calculated. The AUC of a random model was 0.5, and the predictive/diagnostic ability was judged based on the AUC value.
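A minimal sketch of the measures listed above, computed from confusion-matrix counts and from ranked prediction scores. Two points are assumptions rather than statements from the study: the F-value is taken to be the usual harmonic mean of precision and recall, and the area under the PR curve is estimated by step-wise summation (average precision), which is only one common estimator. All function names and numbers are illustrative.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if (tp + fn) else 0.0


def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0


def f_value(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (assumed definition)."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def pr_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise area under the PR curve (average precision).

    labels are 1 for the positive class and 0 otherwise; tied scores are
    not treated specially in this sketch.
    """
    positives = sum(labels)
    if positives == 0:
        raise ValueError("at least one positive label is required")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = 0
    area = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            tp += 1
            # Precision at this rank times the recall increment 1/positives.
            area += (tp / rank) / positives
    return area


# Hypothetical counts and scores, not study data.
p = precision(tp=40, fp=10)
r = recall(tp=40, fn=20)
acc = accuracy(tp=40, fp=10, tn=30, fn=20)
f = f_value(p, r)
auc = pr_auc([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
print(p, r, acc, f, auc)
```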

3. Results

3.1. PR Curves and AUC Values

3.2. Precision, Recall, F-Values, and Accuracy

4. Discussion

4.1. Hyoid Bone Detection

4.2. Regarding Research Plan Setting Conditions

4.2.1. Plan 1: Learning the Position of the Hyoid Bone in Six Groups

4.2.2. Plan 2: Learning the Position of the Hyoid Bone in Five Groups

4.2.3. On Reducing Grouping

4.3. Recognition of Missing Images

4.4. Limitations of This Study

4.5. About the AI Program

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Age (years) | Class 0 | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 |
|---|---|---|---|---|---|---|
| 20–29 | 4 | 24 | 22 | 14 | 124 | 92 |
| 30–39 | 36 | 58 | 26 | 50 | 90 | 72 |
| 40–49 | 60 | 92 | 16 | 50 | 88 | 66 |
| 50–59 | 74 | 72 | 24 | 18 | 54 | 72 |
| 60–69 | 56 | 46 | 30 | 22 | 30 | 44 |
| 70–79 | 40 | 44 | 28 | 24 | 36 | 4 |
| 80–95 | 8 | 2 | 22 | 52 | 48 | 2 |

(a) Plan 1

| Dataset | Type 0 | Type 1 | Type 2 | Type 3 | Type 4 | Type 5 |
|---|---|---|---|---|---|---|
| Training set | 222 | 240 | 114 | 154 | 324 | 244 |
| Validation set | 14 | 30 | 18 | 24 | 50 | 36 |
| Test set | 42 | 68 | 36 | 48 | 96 | 70 |

(b) Plan 2

| Dataset | Type 0 | Type 1 | Type 2 | Type 3 | Type 4 | Type 5 |
|---|---|---|---|---|---|---|
| Training set | - | 240 | 114 | 154 | 324 | 244 |
| Validation set | - | 30 | 18 | 24 | 50 | 36 |
| Test set | 278 | 68 | 36 | 48 | 96 | 70 |

(c) Plan 3

| Dataset | Class A | Class B | Class C |
|---|---|---|---|
| Training set | 462 | 268 | 524 |
| Validation set | 44 | 42 | 130 |
| Test set | 110 | 84 | 166 |

(d) Plan 4

| Dataset | Class A | Class B | Class C |
|---|---|---|---|
| Training set | - | 268 | 524 |
| Validation set | - | 42 | 130 |
| Test set | 616 | 84 | 166 |
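As a small worked example, the Plan 1 counts in panel (a) can be tallied to see how the images are divided among the training, validation, and test sets. The variable names are illustrative; only the counts come from the table.

```python
# Image counts for Plan 1, Types 0-5, copied from panel (a).
PLAN1 = {
    "training": [222, 240, 114, 154, 324, 244],
    "validation": [14, 30, 18, 24, 50, 36],
    "test": [42, 68, 36, 48, 96, 70],
}

# Total number of images in each split.
split_totals = {name: sum(counts) for name, counts in PLAN1.items()}
grand_total = sum(split_totals.values())

# Share of all images that falls into each split.
split_fractions = {name: total / grand_total
                   for name, total in split_totals.items()}

# Total number of images per type across the three splits.
type_totals = [sum(column) for column in zip(*PLAN1.values())]

for name in PLAN1:
    print(f"{name}: {split_totals[name]} images "
          f"({split_fractions[name]:.1%})")
print("per type:", type_totals)
```

With these counts the split is roughly 71% training, 9% validation, and 20% test.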

| Model | Classification | Precision | Recall | F-Score | Accuracy |
|---|---|---|---|---|---|
| Plan 1 | Type 0 | 0.43 | 0.69 | 0.53 | |
| Plan 1 | Type 1 | 0.58 | 0.46 | 0.51 | |
| Plan 1 | Type 2 | 0.39 | 0.36 | 0.38 | |
| Plan 1 | Type 3 | 0.77 | 0.42 | 0.54 | |
| Plan 1 | Type 4 | 0.90 | 0.93 | 0.91 | |
| Plan 1 | Type 5 | 1.00 | 0.89 | 0.94 | |
| Plan 1 | Average (overall) | 0.68 | 0.62 | 0.64 | 0.68 |
| Plan 2 | Type 0 | 0.87 | 0.80 | 0.83 | |
| Plan 2 | Type 1 | 0.42 | 0.66 | 0.52 | |
| Plan 2 | Type 2 | 0.46 | 0.50 | 0.48 | |
| Plan 2 | Type 3 | 0.68 | 0.48 | 0.56 | |
| Plan 2 | Type 4 | 0.87 | 0.89 | 0.88 | |
| Plan 2 | Type 5 | 0.92 | 0.81 | 0.86 | |
| Plan 2 | Average (overall) | 0.70 | 0.69 | 0.69 | 0.76 |
| Plan 3 | Class A (Types 0 and 1) | 0.81 | 0.95 | 0.87 | |
| Plan 3 | Class B (Types 2 and 3) | 0.93 | 0.62 | 0.74 | |
| Plan 3 | Class C (Types 4 and 5) | 0.95 | 0.93 | 0.94 | |
| Plan 3 | Average (overall) | 0.90 | 0.83 | 0.85 | 0.86 |
| Plan 4 | Class A (Types 0 and 1) | 0.97 | 0.95 | 0.96 | |
| Plan 4 | Class B (Types 2 and 3) | 0.66 | 0.71 | 0.69 | |
| Plan 4 | Class C (Types 4 and 5) | 0.95 | 0.95 | 0.95 | |
| Plan 4 | Average (overall) | 0.86 | 0.87 | 0.87 | 0.93 |
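The "Average (overall)" rows appear to be consistent with an unweighted mean of the per-class values, although how they were computed is not stated here. The sketch below checks this for Plan 3 using the per-class figures from the table; the function name `macro_average` is illustrative.

```python
from statistics import mean
from typing import Dict, List

# Per-class values for Plan 3 (Classes A, B, C), copied from the table.
PLAN3: Dict[str, List[float]] = {
    "precision": [0.81, 0.93, 0.95],
    "recall": [0.95, 0.62, 0.93],
    "f_score": [0.87, 0.74, 0.94],
}


def macro_average(values: List[float]) -> float:
    """Unweighted mean over classes, rounded to two decimals."""
    return round(mean(values), 2)


plan3_averages = {name: macro_average(vals) for name, vals in PLAN3.items()}
print(plan3_averages)
```

The three results match the 0.90, 0.83, and 0.85 reported for Plan 3. Accuracy is not included because it is reported only once per plan.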

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Matsuda, Y.; Ito, E.; Kuroda, M.; Araki, K.; Nakada, W.; Hayakawa, Y. A Basic Study for Predicting Dysphagia in Panoramic X-ray Images Using Artificial Intelligence (AI) Part 2: Analysis of the Position of the Hyoid Bone on Panoramic Radiographs. Eng 2023, 4, 2542-2552. https://doi.org/10.3390/eng4040145
