Image-Based Vehicle Classification by Synergizing Features from Supervised and Self-Supervised Learning Paradigms

Abstract

1. Introduction

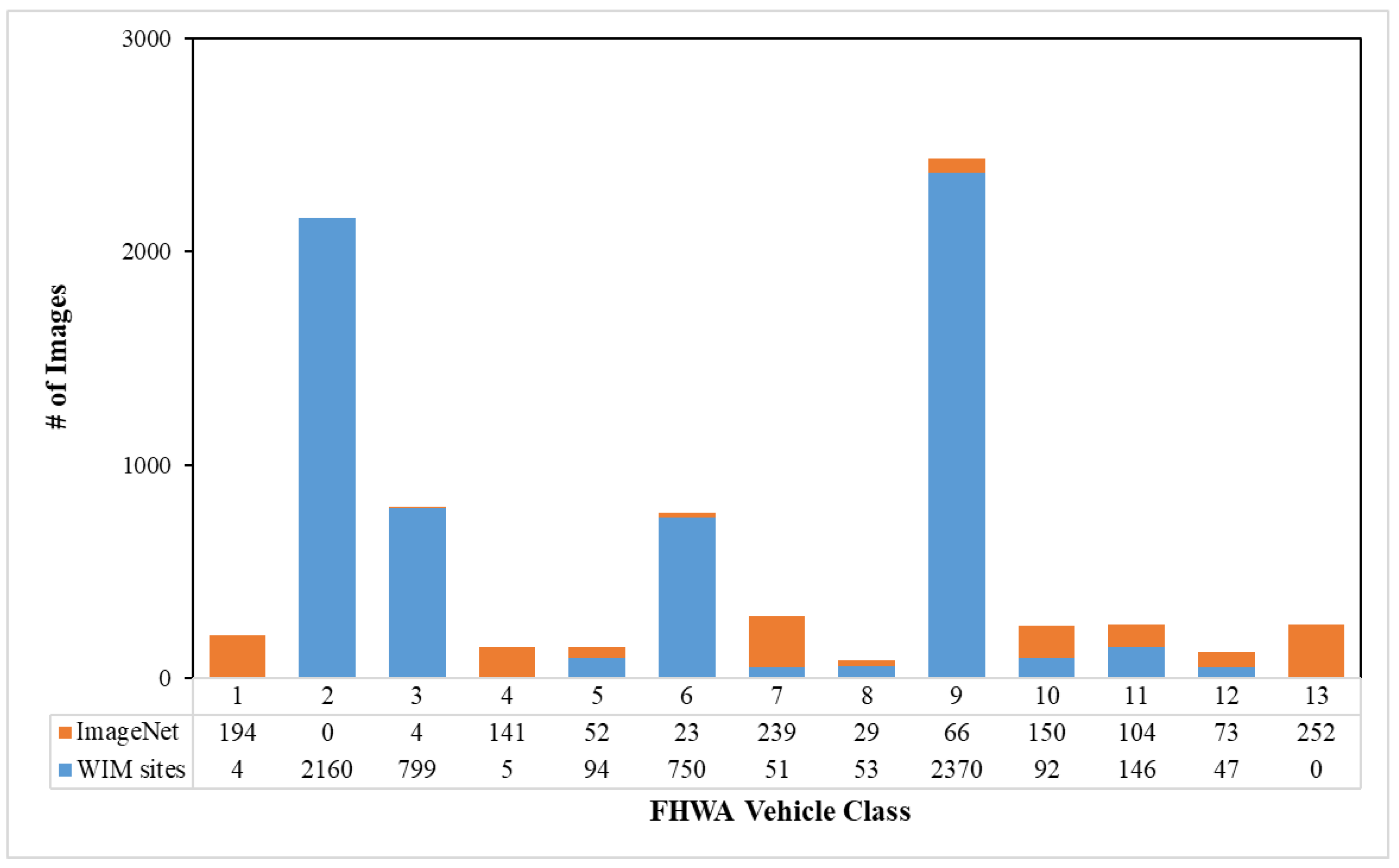

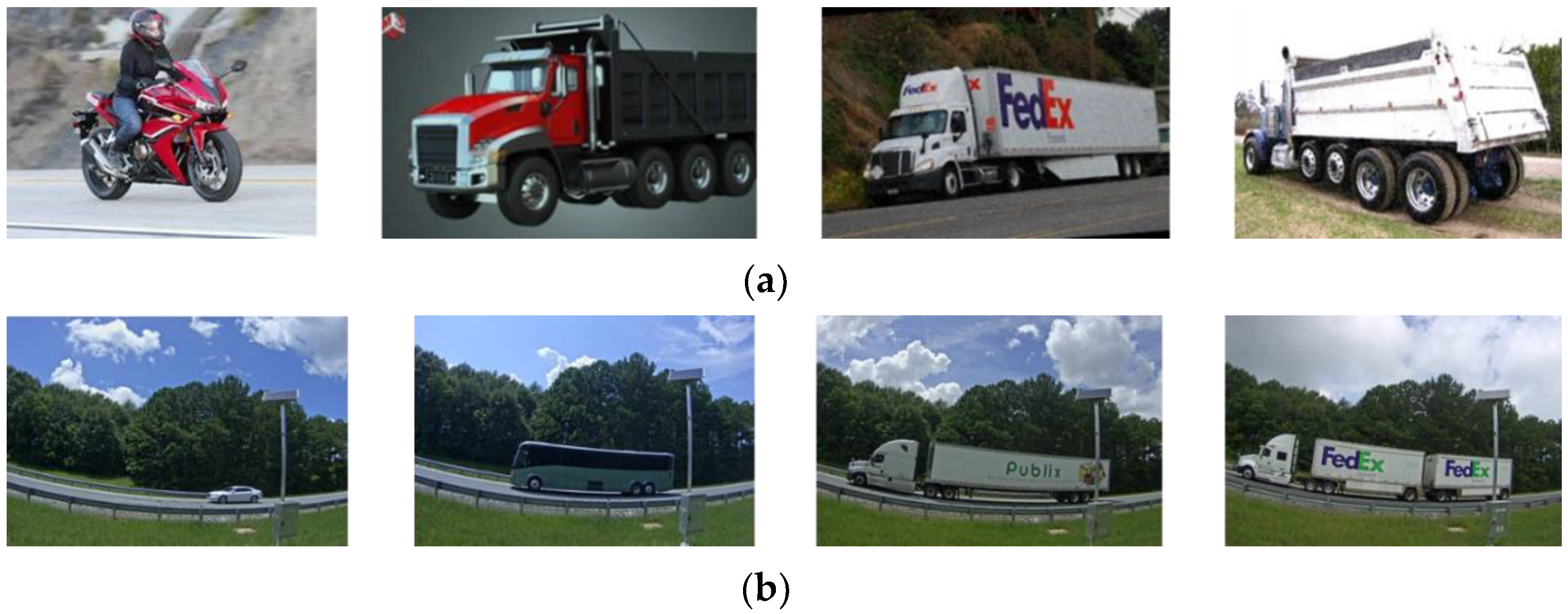

2. Data Description

3. Method

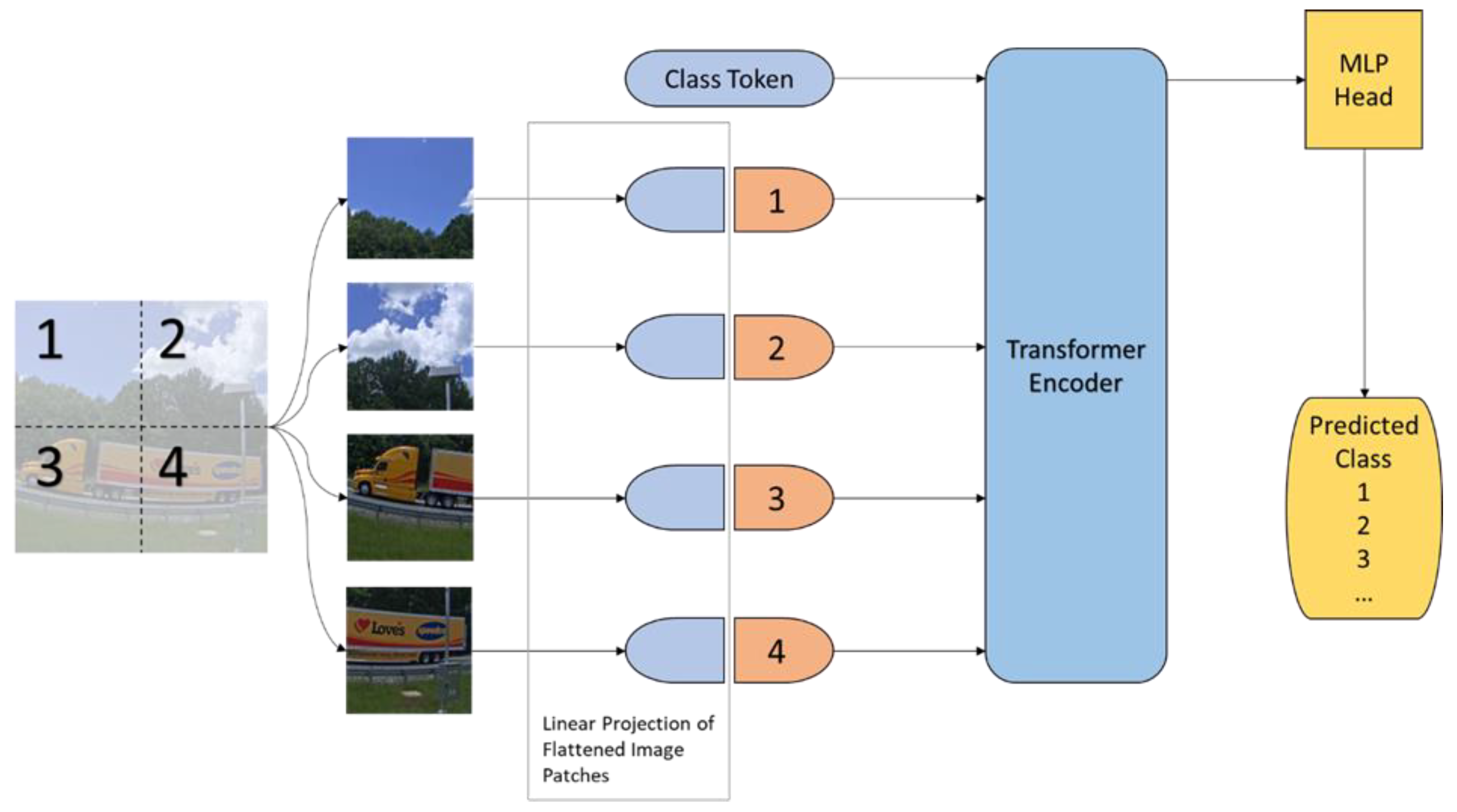

3.1. Vision Transformer

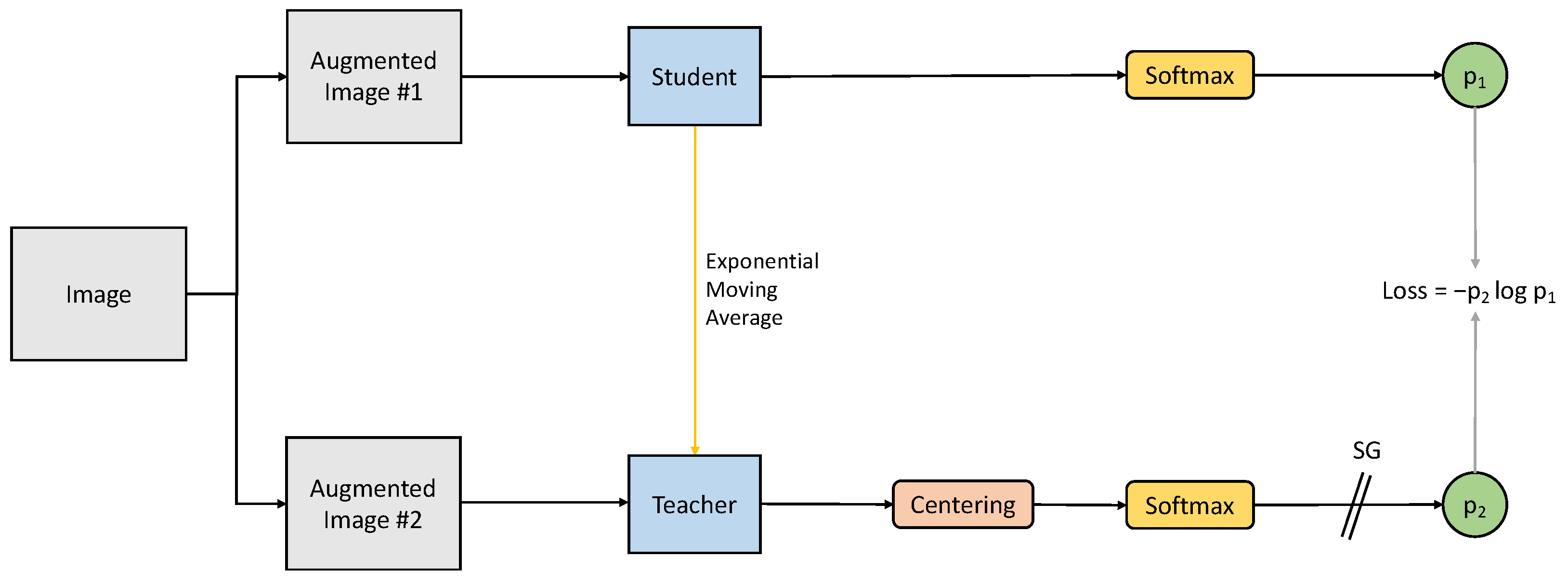

3.2. Self-Supervised Pretraining

3.3. Wheel Detection

3.4. Composite Model Architecture

4. Experiments

4.1. Effects of Self-Supervised Pretraining

4.2. Performance of Composite Models

4.3. Random Wheel Masking Strategy

5. Conclusions and Discussions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Vehicle Class | Class Includes | Number of Axles | Vehicle Class | Class Includes | Number of Axles |
|---|---|---|---|---|---|
| 1 | Motorcycles | 2 | 8 | Four or fewer axle single-trailer trucks | 3 or 4 |
| 2 | All cars; cars with one- and two-axle trailers | 2, 3, or 4 | 9 | Five-axle single-trailer trucks | 5 |
| 3 | Pick-ups and vans; pick-ups and vans with one- and two-axle trailers | 2, 3, or 4 | 10 | Six or more axle single-trailer trucks | 6 or more |
| 4 | Buses | 2 or 3 | 11 | Five or fewer axle multi-trailer trucks | 4 or 5 |
| 5 | Two-axle, six-tire, single-unit trucks | 2 | 12 | Six-axle multi-trailer trucks | 6 |
| 6 | Three-axle single-unit trucks | 3 | 13 | Seven or more axle multi-trailer trucks | 7 or more |
| 7 | Four or more axle single-unit trucks | 4 or more | | | |
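Several classes in the table above share the same axle counts, so an axle (wheel) count narrows the set of candidate classes without pinning one down. The sketch below transcribes the table into a lookup to make that overlap explicit; `AXLE_RANGES` and `candidate_classes` are hypothetical names chosen for illustration and are not taken from the paper's implementation.

```python
from typing import Dict, List, Optional, Tuple

# (min_axles, max_axles) per FHWA vehicle class, transcribed from the table above.
# max_axles = None encodes "or more" (no upper bound).
AXLE_RANGES: Dict[int, Tuple[int, Optional[int]]] = {
    1: (2, 2),      # Motorcycles
    2: (2, 4),      # All cars, incl. cars with one- and two-axle trailers
    3: (2, 4),      # Pick-ups and vans, incl. those with trailers
    4: (2, 3),      # Buses
    5: (2, 2),      # Two-axle, six-tire, single-unit trucks
    6: (3, 3),      # Three-axle single-unit trucks
    7: (4, None),   # Four or more axle single-unit trucks
    8: (3, 4),      # Four or fewer axle single-trailer trucks
    9: (5, 5),      # Five-axle single-trailer trucks
    10: (6, None),  # Six or more axle single-trailer trucks
    11: (4, 5),     # Five or fewer axle multi-trailer trucks
    12: (6, 6),     # Six-axle multi-trailer trucks
    13: (7, None),  # Seven or more axle multi-trailer trucks
}


def candidate_classes(num_axles: int) -> List[int]:
    """Return, in ascending order, every class whose axle range contains num_axles."""
    matches = []
    for vehicle_class, (lo, hi) in sorted(AXLE_RANGES.items()):
        if num_axles >= lo and (hi is None or num_axles <= hi):
            matches.append(vehicle_class)
    return matches


if __name__ == "__main__":
    for axles in range(2, 8):
        print(axles, candidate_classes(axles))
```

For example, `candidate_classes(5)` returns `[7, 9, 11]`, and a two-axle vehicle could belong to any of Classes 1 to 5, so visual cues beyond the axle count are still needed to separate them.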

| Network | Top-1 Acc. (%) | Weighted Avg. Precision (%) | Weighted Avg. Recall (%) |
|---|---|---|---|
| ViT | 90.7 | 90.7 | 90.7 |
| ViT + DINO (freeze-encoder) | 94.6 | 94.6 | 94.6 |
| ViT + DINO | 95.6 | 95.7 | 95.6 |
| ViT + data2vec (freeze-encoder) | 93.5 | 93.5 | 93.5 |
| ViT + data2vec | 95.0 | 95.0 | 95.0 |
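In every row of the table above, the weighted average recall equals the Top-1 accuracy. Assuming the usual support weighting (class c weighted by n_c / N, its share of the N test samples), this is an identity rather than a coincidence: weighted recall = Σ_c (n_c / N)(TP_c / n_c) = Σ_c TP_c / N, which is accuracy. Weighted precision has no such cancellation, so it can differ slightly. The sketch below computes all three from label lists; `weighted_metrics` is a hypothetical helper, and the zero-precision rule for never-predicted classes is an assumed convention.

```python
from collections import Counter
from typing import Dict, Sequence


def weighted_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Top-1 accuracy plus support-weighted average precision and recall."""
    n = len(y_true)
    support = Counter(y_true)    # true samples per class (n_c)
    predicted = Counter(y_pred)  # predictions per class
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class

    accuracy = sum(tp.values()) / n
    wap = 0.0
    war = 0.0
    for cls, n_c in support.items():
        weight = n_c / n
        # Assumed convention: precision is 0 for a class that is never predicted.
        precision = tp[cls] / predicted[cls] if predicted[cls] else 0.0
        recall = tp[cls] / n_c
        wap += weight * precision
        war += weight * recall
    return {"accuracy": accuracy, "weighted_precision": wap, "weighted_recall": war}


if __name__ == "__main__":
    # Toy labels, not data from the paper.
    y_true = [1, 1, 1, 2, 2, 3]
    y_pred = [1, 1, 2, 2, 3, 3]
    print(weighted_metrics(y_true, y_pred))
```

On the toy labels, accuracy and weighted recall are both 4/6 while weighted precision is 0.75, mirroring the pattern in the table where only the precision column occasionally departs from accuracy.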

| Class | ViT without Pretraining: Precision (%) | ViT without Pretraining: Recall (%) | ViT without Pretraining: F1-Score (%) | ViT Pretrained with DINO: Precision (%) | ViT Pretrained with DINO: Recall (%) | ViT Pretrained with DINO: F1-Score (%) | ViT Pretrained with data2vec: Precision (%) | ViT Pretrained with data2vec: Recall (%) | ViT Pretrained with data2vec: F1-Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Class 1 | 88.0 | 55.0 | 67.7 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| Class 2 | 96.6 | 98.6 | 97.6 | 98.4 | 99.1 | 98.7 | 98.2 | 99.8 | 99.0 |
| Class 3 | 93.5 | 89.4 | 91.4 | 97.5 | 95.7 | 96.6 | 99.4 | 95.0 | 97.1 |
| Class 4 | 73.5 | 86.2 | 79.4 | 100.0 | 93.1 | 96.4 | 96.4 | 93.1 | 94.7 |
| Class 5 | 90.0 | 62.1 | 73.5 | 88.5 | 79.3 | 83.6 | 88.5 | 79.3 | 83.6 |
| Class 6 | 90.3 | 96.1 | 93.1 | 87.2 | 96.8 | 91.7 | 88.2 | 96.1 | 92.0 |
| Class 7 | 66.2 | 74.1 | 69.9 | 95.8 | 79.3 | 86.8 | 84.9 | 77.6 | 81.1 |
| Class 8 | 57.1 | 50.0 | 53.3 | 78.6 | 68.8 | 73.3 | 90.0 | 56.3 | 69.2 |
| Class 9 | 96.2 | 98.2 | 97.2 | 96.8 | 98.4 | 97.6 | 97.0 | 98.4 | 97.7 |
| Class 10 | 64.4 | 60.4 | 62.4 | 90.0 | 75.0 | 81.8 | 81.4 | 72.9 | 76.9 |
| Class 11 | 78.9 | 82.0 | 80.4 | 97.8 | 90.0 | 93.8 | 94.0 | 94.0 | 94.0 |
| Class 12 | 88.2 | 62.5 | 73.2 | 92.3 | 100.0 | 96.0 | 95.7 | 91.7 | 93.6 |
| Class 13 | 68.6 | 68.6 | 68.6 | 90.6 | 94.1 | 92.3 | 80.4 | 80.4 | 80.4 |
| Accuracy (%) | 90.7 | | | 95.6 | | | 95.0 | | |
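Each F1-score in the per-class table above is the harmonic mean of the precision and recall beside it, F1 = 2PR / (P + R). The short check below recomputes three entries from the table; `f1_score` is a hypothetical helper written for this example. Because the listed precision and recall are already rounded to one decimal, a recomputed F1 may occasionally differ from the listed value in the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
ROWS = {
    "Class 1, ViT without pretraining": (88.0, 55.0, 67.7),
    "Class 8, ViT without pretraining": (57.1, 50.0, 53.3),
    "Class 12, ViT pretrained with DINO": (92.3, 100.0, 96.0),
}

if __name__ == "__main__":
    for name, (p, r, reported) in ROWS.items():
        print(f"{name}: computed {f1_score(p, r):.1f}, reported {reported}")
```

The harmonic mean punishes imbalance: Class 1 without pretraining has 88.0% precision but only 55.0% recall, and its F1 of 67.7% sits much closer to the weaker of the two.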

| Network | Top-1 Acc. (%) | Weighted Avg. Precision (%) | Weighted Avg. Recall (%) |
|---|---|---|---|
| ViT | 90.7 | 90.7 | 90.7 |
| ViT + DINO (freeze-encoder) | 94.6 | 94.6 | 94.6 |
| ViT + DINO | 95.6 | 95.7 | 95.6 |
| ViT + data2vec (freeze-encoder) | 93.5 | 93.5 | 93.5 |
| ViT + data2vec | 95.0 | 95.0 | 95.0 |
| ViT + YOLOR | 91.4 | 91.6 | 91.4 |
| ViT + DINO (freeze-encoder) + YOLOR | 95.4 | 95.5 | 95.4 |
| ViT + DINO + YOLOR | 96.0 | 96.0 | 96.0 |
| ViT + data2vec (freeze-encoder) + YOLOR | 95.0 | 95.0 | 95.0 |
| ViT + data2vec + YOLOR | 95.3 | 95.2 | 95.3 |

| Class | DINO (Pretrained), without Wheel Features: Precision (%) | DINO (Pretrained), without Wheel Features: Recall (%) | DINO (Pretrained), without Wheel Features: F1-Score (%) | DINO (Pretrained) + YOLOR, with Wheel Features: Precision (%) | DINO (Pretrained) + YOLOR, with Wheel Features: Recall (%) | DINO (Pretrained) + YOLOR, with Wheel Features: F1-Score (%) |
|---|---|---|---|---|---|---|
| Class 1 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| Class 2 | 99.1 | 99.5 | 99.3 | 99.1 | 99.5 | 99.3 |
| Class 3 | 98.7 | 97.5 | 98.1 | 98.7 | 97.5 | 98.1 |
| Class 4 | 100.0 | 93.1 | 96.4 | 100.0 | 86.2 | 92.6 |
| Class 5 | 88.5 | 79.3 | 83.6 | 80.7 | 86.2 | 83.3 |
| Class 6 | 90.5 | 98.7 | 94.4 | 92.2 | 98.7 | 95.3 |
| Class 7 | 91.4 | 91.4 | 91.4 | 92.7 | 87.9 | 90.3 |
| Class 8 | 70.6 | 75.0 | 72.7 | 92.9 | 81.3 | 86.7 |
| Class 9 | 98.2 | 98.0 | 98.1 | 97.6 | 98.6 | 98.1 |
| Class 10 | 88.6 | 81.3 | 84.8 | 88.9 | 83.3 | 86.0 |
| Class 11 | 86.2 | 100.0 | 92.6 | 88.9 | 96.0 | 92.3 |
| Class 12 | 100.0 | 87.5 | 93.3 | 95.8 | 95.8 | 95.8 |
| Class 13 | 95.1 | 76.5 | 84.8 | 100.0 | 80.4 | 89.1 |
| Accuracy (%) | 96.3 | | | 96.6 | | |

| Network | Without Wheel Masking: Top-1 Acc. (%) | Without Wheel Masking: WAP (%) | Without Wheel Masking: WAR (%) | Randomly Masking One Wheel: Top-1 Acc. (%) | Randomly Masking One Wheel: WAP (%) | Randomly Masking One Wheel: WAR (%) |
|---|---|---|---|---|---|---|
| ViT + YOLOR | 91.4 | 91.6 | 91.4 | 91.7 | 91.6 | 91.7 |
| ViT + DINO (freeze-encoder) + YOLOR | 95.4 | 95.5 | 95.4 | 96.0 | 96.0 | 96.0 |
| ViT + DINO + YOLOR | 96.3 | 96.3 | 96.3 | 96.7 | 96.8 | 96.7 |
| ViT + data2vec (freeze-encoder) + YOLOR | 95.0 | 95.0 | 95.0 | 96.5 | 96.6 | 96.5 |
| ViT + data2vec + YOLOR | 95.3 | 95.2 | 95.3 | 97.2 | 97.2 | 97.2 |

WAP: weighted average precision; WAR: weighted average recall.

| Class | ViT + data2vec + YOLOR, without Wheel Masking: Precision (%) | ViT + data2vec + YOLOR, without Wheel Masking: Recall (%) | ViT + data2vec + YOLOR, without Wheel Masking: F1-Score (%) | ViT + data2vec + YOLOR, with Wheel Masking: Precision (%) | ViT + data2vec + YOLOR, with Wheel Masking: Recall (%) | ViT + data2vec + YOLOR, with Wheel Masking: F1-Score (%) |
|---|---|---|---|---|---|---|
| Class 1 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| Class 2 | 98.2 | 99.8 | 99.0 | 99.3 | 99.8 | 99.5 |
| Class 3 | 99.4 | 95.0 | 97.1 | 99.4 | 98.1 | 98.8 |
| Class 4 | 93.3 | 96.6 | 94.9 | 96.6 | 96.6 | 96.6 |
| Class 5 | 88.5 | 79.3 | 83.6 | 92.6 | 86.2 | 89.3 |
| Class 6 | 88.6 | 95.5 | 91.9 | 93.2 | 97.4 | 95.3 |
| Class 7 | 84.9 | 77.6 | 81.1 | 94.2 | 84.5 | 89.1 |
| Class 8 | 100.0 | 62.5 | 76.9 | 100.0 | 81.3 | 89.7 |
| Class 9 | 97.2 | 98.6 | 97.9 | 97.0 | 99.4 | 98.2 |
| Class 10 | 81.8 | 75.0 | 78.3 | 97.6 | 83.3 | 89.9 |
| Class 11 | 94.0 | 94.0 | 94.0 | 94.2 | 98.0 | 96.1 |
| Class 12 | 95.8 | 95.8 | 95.8 | 88.5 | 95.8 | 92.0 |
| Class 13 | 83.7 | 80.4 | 82.0 | 97.8 | 88.2 | 92.8 |
| Accuracy (%) | 95.3 | | | 97.2 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, S.; Yang, J.J. Image-Based Vehicle Classification by Synergizing Features from Supervised and Self-Supervised Learning Paradigms. Eng 2023, 4, 444-456. https://doi.org/10.3390/eng4010027
