Abstract

Biometrics deals with the recognition of humans based on their unique physical characteristics. It can be based on the face, iris, fingerprint or DNA. In this paper, we consider the iris as a source of biometric verification because it is a unique part of the eye that cannot be altered and remains the same throughout the life of an individual. We propose an improved iris recognition system that includes image registration as a main step and uses edge detection for feature extraction. A PCA-based method is also proposed as an independent iris recognition method based on a similarity score. Experiments conducted using our own database demonstrate that the first proposed system reduces the computation time to 6.56 s and improves the accuracy to 99.73%, while the PCA-based method is less accurate than this system.

1. Introduction

The classical human identification system was based on physical keys, passwords, ID cards, etc., which can easily be lost or forgotten, while the modern identification system is based on distinct and unique traits, i.e., physical or behavioral characteristics. The human eye has a very sensitive part named the iris, which has a unique pattern in every individual. The iris is a thin structure containing a sphincter muscle, and it lies between the sclera and the pupil of the human eye. It acts like a living password that can never be altered. Although fingerprint, face and voice recognition have also been widely used as proofs of identity [1], the iris pattern is more reliable and non-invasive, and it has a higher recognition accuracy [2,3,4]. The iris pattern does not change significantly throughout a person’s life, and even the left and right eyes have different iris patterns [5]. Every eye has its own iris features with a very high degree of freedom [6]. These benefits make iris recognition better than other recognition systems [7]. The idea dates back to 1936, when Dr. Frank Burch proposed using iris patterns as a method to recognize an individual. In 1995, the first commercial product was made available [8]. Nowadays, iris recognition is extensively applied in many organizations for identification, for example in security systems, immigration systems, border control systems, attendance systems and many more [9].

The iris recognition framework is divided into four stages: iris segmentation, iris normalization, iris feature extraction and matching [10]. Daugman proposed capturing the image at very close range using a camera and a point light source [2,11]. After an iris image has been captured, a series of integro-differential operators can be used for its segmentation [12]. In [13], the author proposed that the active contour method is better than fixed-shape modelling for describing the inner and outer boundaries of the iris [14]. Wildes proposed localizing the iris boundaries using the Hough transform and represented the iris pattern via a Laplacian pyramid. The author used normalized correlation to measure the goodness of match between two iris patterns [15]. Boles et al. proposed a wavelet transform (WT) zero-crossing representation for a finer approximation of the iris features at different resolution levels, and an average dissimilarity at each resolution level was calculated to determine the overall dissimilarity between two irises [16].

Zhu et al. used a multi-scale texture analysis for global feature extraction, 2D texture analysis for feature extraction and a weighted Euclidean distance classifier for iris matching [17]. Daouk et al. used the Hough transform and the Canny edge detector to detect the inner and outer boundaries of an iris [18]. Tan et al. proposed the iterative pulling and pushing method for the localization of the iris boundaries, and they used key local variations and ordinal measure encoding to represent the iris pattern [18,19,20,21]. Patil et al. proposed the lifting wavelet scheme for the iris features [22]. Sundaram et al. used the circular Hough transform for iris localization, the 2D Haar wavelet and the Grey Level Co-occurrence Matrix (GLCM) to describe the iris features, and a Probabilistic Neural Network (PNN) for matching the computed iris features [23].

Shin et al. proposed pre-classification based on the left or right eye and the color information of the iris, and then authenticated the iris by comparing the texture information in terms of binary code [24]. Other authors used Contrast Limited Adaptive Histogram Equalization (CLAHE) to remove noise and occlusions from the image, together with SURF (Speeded Up Robust Features)-based descriptors for feature extraction [25,26]. Jamaludin et al. proposed an improved Chan–Vese active contour method for iris localization and a 1D log-Gabor filter for feature extraction for non-ideal iris recognition [9]. Kamble et al. built their own database and used Fourier descriptors for image quality assessment [27]. Dua et al. proposed an integro-differential operator and the Hough transform for segmentation, a 1D log-wavelet filter for feature encoding and a radial basis function neural network (RBFNN) for classification and matching [28]. This algorithm achieved very high precision, but it involved massive calculations that increased the computation time, and the method was not tested practically on humans or animals. To improve the recognition efficiency and reduce the computation time, we build on the state of the art in iris recognition and propose an improved iris recognition system based on image registration along with feature extraction, which employs the physiological characteristics of the human eye. We propose two different methods, i.e., a classical image processing-based method and a PCA-based method, to determine which better handles noisy conditions, illumination problems and camera-to-face distance problems in a real-time implementation.

In this paper, Section 2 describes the steps of the proposed iris recognition method, in which we have added image registration (to align the image) as a compulsory step to reduce the FAR (False Acceptance Rate) and FRR (False Rejection Rate). Section 3 describes principal component analysis, our second proposed method, which describes the iris texture in terms of its eigenvectors, eigenvalues and similarity score. Section 4 presents the evaluation, and the last section concludes the paper.

2. Proposed System

The proposed iris recognition system consists of six main steps: data acquisition, pre-processing, image registration, segmentation, feature extraction and matching. Principal component analysis is the second proposed method for iris recognition. Both methods are proposed with the aim of achieving a short computation time and a high level of accuracy.

2.1. Data Acquisition

The data in our case are real-time images, whereas pre-captured images are generally used. Data acquisition can be done in two ways: either using images that are already available for testing the system, e.g., the CASIA database, or using images taken directly from a camera. The iris pattern is clearly visible only under infrared illumination, so an ordinary camera cannot be used for this purpose. A real-time implementation demonstrates the system’s effectiveness, so we have used our own database of real-time images captured instantly using an IR-based iris scanner. The core characteristics of the iris scanner are auto-iris-detection, auto-capturing and auto-storage to an assigned directory. The specifications of the iris scanner include a monocular IR camera, a capture distance of 4.7 cm to 5.3 cm, a capturing speed of 1 s, an image dimension of 640 × 480 (grayscale) and the BMP image format.

The iris database created using this scanner consists of 454 images of 43 persons. For 12 persons, we captured ten images of the right eye and five images of the left eye. For 15 persons, we captured five images of the right eye and five images of the left eye, and for 16 persons, we captured two images of the left eye and two images of the right eye. All of the images were captured at different angles and different iris locations.

2.2. Pre-Processing

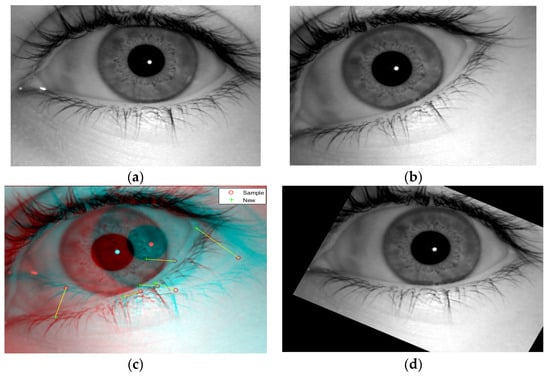

After loading the images, the next step was pre-processing. This generally involves RGB-to-grayscale conversion, contrast adjustment, brightness adjustment and deblurring. Figure 1 shows four right eye images of the same person taken using the iris scanner. As our images were already in grayscale, there was no need for grayscale conversion. The iris scanner took images after focusing, so there was very little chance that an image would be blurred, but we still used a weighted average filter to perform deblurring.

Figure 1.

Four right eye images of one person.

In a weighted average filter, the mask coefficients are largest at the center of the mask, so the pixel at the center of each neighborhood contributes most to the output. Filtering an image of size M × N with a weighted average filter of size m × n is given by Equation (1):

$$g(x,y)=\frac{\sum_{s=-a}^{a}\sum_{t=-b}^{b} w(s,t)\,f(x+s,\,y+t)}{\sum_{s=-a}^{a}\sum_{t=-b}^{b} w(s,t)} \qquad (1)$$

where w (s, t) is the weight, f (x + s, y + t) is the input image, g (x, y) is the output image, m = 2a + 1, n = 2b + 1, x = 0, 1, 2, …, M − 1 and y = 0, 1, 2, …, N − 1. This results in an image with better intensities in the iris portion, as shown in Figure 2. During the iris recognition process, the pre-processing steps can be repeated whenever they are required.

Figure 2.

(a) Original Image. (b) Output of weighted average filter image.
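The weighted averaging of Equation (1) can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in this work: the 3 × 3 mask `MASK`, the function name `weighted_average_filter` and the border policy (replicating the nearest edge pixel) are assumptions made for the example.

```python
def weighted_average_filter(image, weights):
    """Apply a normalized weighted-average mask to a 2D grayscale image.

    Border pixels are handled by replicating the nearest edge pixel
    (an assumption; the border policy is not stated in the text).
    """
    rows, cols = len(image), len(image[0])
    a, b = len(weights) // 2, len(weights[0]) // 2   # mask half-sizes
    total = sum(sum(row) for row in weights)          # normalizing constant
    out = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            acc = 0.0
            for s in range(-a, a + 1):
                for t in range(-b, b + 1):
                    xs = min(max(x + s, 0), rows - 1)  # clamp row index
                    yt = min(max(y + t, 0), cols - 1)  # clamp column index
                    acc += weights[s + a][t + b] * image[xs][yt]
            out[x][y] = acc / total
    return out


# Illustrative 3x3 mask with the largest weight at the center.
MASK = [[1, 2, 1],
        [2, 4, 2],
        [1, 2, 1]]

impulse = [[0, 0, 0],
           [0, 16, 0],
           [0, 0, 0]]
smoothed = weighted_average_filter(impulse, MASK)
```

Because the mask is divided by the sum of its weights, a region of constant intensity is left unchanged, while an isolated bright pixel is spread over its neighbors in proportion to the mask weights.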

2.3. Image Registration

Image registration is a process during image processing that overlaps two or more images from various imaging equipment or sensors which are taken at different angles to geometrically align the images for an analysis and to reduce the problems of misalignment, rotation and scale, etc. If the angle of the iris is changed (i.e., the person kept their eye near the scanner at a different angle or a different position), then the iris pattern at the same position as in other image will be changed, and it can cause mismatch. As it can be seen in Figure 1, the images were taken at different positions as well as angles, so we needed to perform image registration. If the new image In (x, y) is rotated or tilted at any angle, it will be compared with the sample image Is (x, y), and it will automatically be rotated to the ideal position. There are different processes in image registration based on point mapping, multimodal configurations and feature matching. These points will be detected in both of the images to find whether they are at same angle and position or not, and if they are not, then the images will be aligned by adjusting these points, respectively. When we were choosing a mapping function (u (x, y), v (x, y)) for the ordinal coordinates transformation, the intensity values of the new image were made to be close to the corresponding points in the sample image. The mapping function must simplify to Equation (2).

It is constrained to capture the similarity transformation of the image coordinates from (x, y) to (x′, y′) as shown in Equation (3).

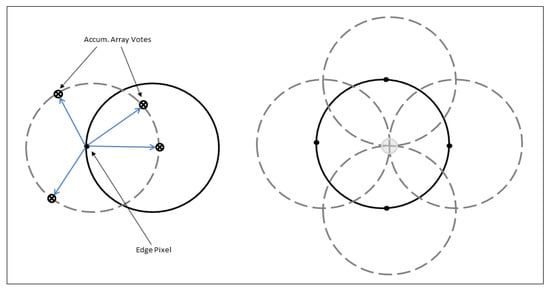

where s is a scaling factor and R() is the rotation matrix, which is represented by . Practically, when a pair of iris images In and Id are given, the warping parameters s and are recovered via an iterative minimization procedure. The output of image registration is shown in Figure 3, and image registration data are represented in Algorithm 1.

| Algorithm 1: Image Registration. |
| --- |
| Step 1: Read the sample iris image and the new (i.e., tilted or rotated) grayscale eye image. |
| Step 2: Detect surface features of both images. |
| Step 3: Extract features from both images. |
| Step 4: Find the matching features using Equation (2). |
| Step 5: Retrieve the locations of corresponding points for both images using Equation (3). |
| Step 6: Find a transformation corresponding to the matching point pairs using the M-estimator Sample Consensus (MSAC) algorithm. |
| Step 7: Use the geometric transform to recover the scale and angle of the new image relative to the sample image. Let sc = scale ∗ cos (theta) and ss = scale ∗ sin (theta), then Tinv = [sc −ss 0; ss sc 0; tx ty 1], where tx and ty are the x and y translations of the new image relative to the sample image, respectively. |
| Step 8: Make the size of the new image the same as that of the sample image and display both in the same frame. |

Figure 3.

Image Registration. (a) Sample Image. (b) New Image. (c) Matching Points Between both images. (d) Rotated/Registered Image.
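Step 7 of Algorithm 1 can be illustrated with a short sketch that recovers the scale and the rotation angle from a matrix laid out as Tinv above. The helper names `make_tinv` and `recover_scale_and_angle` are hypothetical and only serve this example; the feature detection and MSAC estimation of Steps 2 to 6 are not reproduced here.

```python
import math


def make_tinv(scale, theta_deg, tx=0.0, ty=0.0):
    """Build a 3x3 similarity matrix [sc -ss 0; ss sc 0; tx ty 1]."""
    theta = math.radians(theta_deg)
    sc = scale * math.cos(theta)
    ss = scale * math.sin(theta)
    return [[sc, -ss, 0.0],
            [ss, sc, 0.0],
            [tx, ty, 1.0]]


def recover_scale_and_angle(tinv):
    """Recover (scale, angle in degrees) from sc = s*cos(theta), ss = s*sin(theta)."""
    sc = tinv[0][0]
    ss = tinv[1][0]
    scale = math.hypot(sc, ss)                 # sqrt(sc^2 + ss^2)
    theta = math.degrees(math.atan2(ss, sc))   # angle whose tangent is ss/sc
    return scale, theta


scale, theta = recover_scale_and_angle(make_tinv(1.2, 30.0, tx=4.0, ty=-2.0))
```

Using `atan2` rather than a plain arctangent keeps the recovered angle in the correct quadrant for rotations beyond ±90°.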

2.4. Segmentation

Segmentation mainly involves the separation of the iris portion from the eye. The iris region is bounded by two circles: the outer iris–sclera boundary and the inner iris–pupil boundary. The eyelids and eyelashes sometimes hide the upper and lower parts of the iris region. Correct detection of the outer and inner boundaries of the iris is therefore a crucial stage. Commonly used methods include the integro-differential operator [29], moving agent [30], Hough transform [31], circular Hough transform [32], iterative algorithm [33], Chan–Vese active contour method [34] and Fourier spectral density [35]. We have used the circular Hough transform (CHT) for the detection of the iris boundaries due to its robust performance even in the presence of noise, occlusion and varying illumination. It depends upon the equation of a circle, which is given by Equation (4):

$$(x-a)^2 + (y-b)^2 = r^2 \qquad (4)$$

where (a, b) is the center, r is the radius and (x, y) are the coordinates of a point on the circle. Equations (5) and (6) show the parametric representation of this circle:

$$x = a + r\cos\theta \qquad (5)$$

$$y = b + r\sin\theta \qquad (6)$$

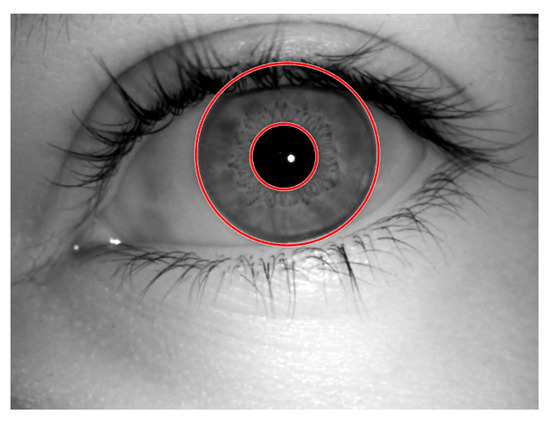

The CHT uses a 3D accumulator array in which the first two dimensions represent the coordinates of the circle center and the third specifies the radius. When a circle of the desired radius is drawn around every edge point, the accumulator cells through which these circles pass are incremented. The accumulator thus counts the circles passing through each candidate center, and the cell with the highest count is selected, as shown in Figure 4.

Figure 4.

Circular Hough transform voting pattern.

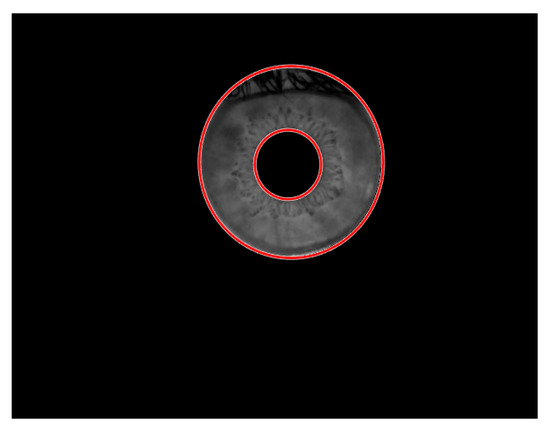

The coordinates of the center of a circle in the image are the coordinates with the highest count. For efficient recognition, the circular Hough transform was performed on the iris–sclera boundary first, and then on the iris–pupil boundary. The segmentation using the circular Hough transform method is shown in Figure 5. After the circular portion was detected, the next step was to separate it from the eye. We applied a mask of zeros to extract the iris from the eye, as shown in Figure 6, and the circle detection procedure is given in Algorithm 2.

| Algorithm 2: Circle Detection Using Circular Hough Transform. |
| --- |
| Step 1: Define iris radius range [50, 155] and pupil radius range [20, 55]. |
| Step 2: Define object polarity bright as dark. |
| Step 3: Define sensitivity of 0.98. |
| Step 4: Define edge threshold value of 0.05. |
| Step 5: Apply circular Hough transform for boundary detection. |
| Step 6: Find centers and radii and display only required circles. |
| Step 7: Display the detected iris portion. |
| Step 8: Apply mask to separate the iris from eye. |

Figure 5.

Output of circle detection.

Figure 6.

Output of segmentation.
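The voting scheme of the circular Hough transform can be sketched as follows. This is a simplified illustration under stated assumptions, not the routine used in Algorithm 2: the function name `circular_hough`, the angular sampling `n_angles` and the synthetic edge points are hypothetical, and edge detection is assumed to have been done already.

```python
import math
from collections import Counter


def circular_hough(edge_points, radii, n_angles=360):
    """Vote for circle parameters (a, b, r) from a list of (x, y) edge points.

    For each radius r, every edge point votes for all centers (a, b) lying
    at distance r from it, following x = a + r*cos(t), y = b + r*sin(t).
    Returns the best ((a, b, r), votes) pair.
    """
    votes = Counter()
    for r in radii:
        for (x, y) in edge_points:
            seen = set()  # one vote per accumulator cell per edge point
            for k in range(n_angles):
                theta = 2 * math.pi * k / n_angles
                a = round(x - r * math.cos(theta))
                b = round(y - r * math.sin(theta))
                if (a, b) not in seen:
                    seen.add((a, b))
                    votes[(a, b, r)] += 1
    return votes.most_common(1)[0]


# Synthetic edge points: the 12 integer points on a circle of radius 5
# centered at (10, 10).
offsets = [(5, 0), (-5, 0), (0, 5), (0, -5),
           (3, 4), (3, -4), (-3, 4), (-3, -4),
           (4, 3), (4, -3), (-4, 3), (-4, -3)]
points = [(10 + dx, 10 + dy) for dx, dy in offsets]
best_circle, best_votes = circular_hough(points, radii=[4, 5, 6])
```

In this toy case all 12 edge points vote for the cell (10, 10, 5), which is the behavior depicted in Figure 4: the true center collects one vote from every point on the boundary.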

2.5. Feature Extraction

Feature extraction is one of the most important steps in the process. We have used two different methods for feature extraction: the two-dimensional Discrete Wavelet Transform (DWT) and edge detection.

2.5.1. Two-Dimensional Discrete Wavelet Transform (2-D DWT)

The 2D wavelet and scaling functions are obtained by taking the separable (tensor) product of the 1D wavelet and scaling functions. This leads to the decomposition of the approximation coefficients at level j into four components, i.e., the approximation at level j + 1 and the details in three orientations (horizontal, vertical and diagonal). The two-dimensional wavelet transform is generally obtained from separable products of the scaling function φ and the wavelet function ψ; the 2D scaling function is given by Equation (7):

$$\varphi(x,y) = \varphi(x)\,\varphi(y) \qquad (7)$$

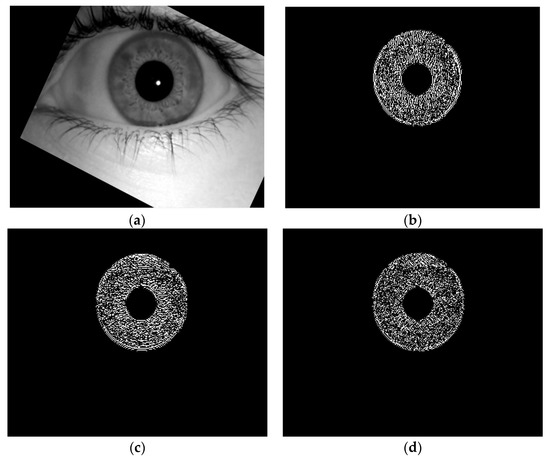

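The detail coefficient images, which are obtained from the three wavelets given by Equations (8)–(10), are shown in Figure 7.

$$\psi^{H}(x,y) = \psi(x)\,\varphi(y) \qquad (8)$$

$$\psi^{V}(x,y) = \varphi(x)\,\psi(y) \qquad (9)$$

$$\psi^{D}(x,y) = \psi(x)\,\psi(y) \qquad (10)$$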

Figure 7.

Feature extraction using 2D DWT. (a) Original eye image. (b) Vertical wavelet. (c) Horizontal wavelet. (d) Diagonal wavelet.

The energy of each of the three detail coefficient images (horizontal, vertical and diagonal) is computed by Equation (11):

$$E = \sum_{m}\sum_{n} \left\lvert X(m,n) \right\rvert^{2} \qquad (11)$$

where X (m, n) is a discrete function whose energy is to be computed.
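As an illustration of the decomposition into one approximation and three detail images, the following sketch applies a single level of the Haar wavelet, chosen here only because it is the simplest separable wavelet (the wavelet family used in this work is not stated). The sub-band energy is assumed to be the sum of squared coefficients; the function names are hypothetical.

```python
import math


def haar_1d(signal):
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    s = 1 / math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def filter_columns(matrix):
    """Apply haar_1d down every column; return (low-pass, high-pass) images."""
    low, high = [], []
    for col in transpose(matrix):
        a, d = haar_1d(col)
        low.append(a)
        high.append(d)
    return transpose(low), transpose(high)


def haar_2d(image):
    """One-level separable 2D Haar DWT of an image with even dimensions.

    Returns (LL, LH, HL, HH), where the first letter is the filter applied
    along the rows and the second the filter applied along the columns.
    """
    low_rows, high_rows = [], []
    for row in image:
        a, d = haar_1d(row)
        low_rows.append(a)
        high_rows.append(d)
    ll, lh = filter_columns(low_rows)
    hl, hh = filter_columns(high_rows)
    return ll, lh, hl, hh


def energy(band):
    """Sum of squared coefficients of a sub-band."""
    return sum(v * v for row in band for v in row)


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
ll, lh, hl, hh = haar_2d(image)
detail_energies = [energy(lh), energy(hl), energy(hh)]
```

Because the Haar filters used here are orthonormal, the energies of the four sub-bands add up to the energy of the input image, which gives a quick sanity check on an implementation.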

2.5.2. Edge Detection

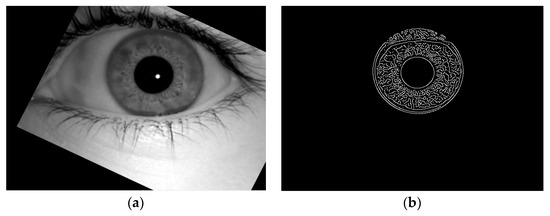

Edge detection comprises a variety of mathematical methods that identify the points in an image where the image brightness changes sharply or has discontinuities; these points are generally organized into a set of curved line segments. There are many edge detection techniques, and we have used the Canny edge detector, which is based on the image gradient. This technique uses two thresholds, a high threshold for low edge sensitivity and a low threshold for high edge sensitivity, to detect strong as well as weak edges. This makes the detector less affected by noise and more likely to detect the true weak edges. Bing Wang and Shaosheng Fan developed a filter that evaluates the discontinuity between the grayscale values of each pixel [36]. For a higher discontinuity, a lower weight value is set for the smoothing filter at the corresponding point, and for a lower discontinuity between the grayscale values, a higher weight value is set. The resultant image after applying edge detection is shown in Figure 8, and the feature extraction procedure is given in Algorithm 3.

| Algorithm 3: Feature Extraction using Edge Detection. |
| --- |
| Step 1: Convolve the Gaussian filter with the image to smooth it using $H_{ij} = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{(i-(k+1))^{2} + (j-(k+1))^{2}}{2\sigma^{2}}\right)$, $1 \le i, j \le 2k+1$, where σ is the standard deviation and the kernel size is (2k + 1) × (2k + 1). |
| Step 2: Compute the local gradient at each point. |
| Step 3: Find the edge direction at each point. |
| Step 4: Apply an edge thinning technique to get a more accurate representation of the real edges. |
| Step 5: Apply hysteresis thresholding based on two thresholds, T1 and T2 with T1 < T2, to determine potential edges in the image. |
| Step 6: Perform edge linking by incorporating the weak pixels connected to the strong pixels. |

Figure 8.

Feature extraction using canny edge detector. (a) Original eye image. (b) Output image of feature extraction using edge detection.
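Steps 5 and 6 of Algorithm 3 (hysteresis thresholding and edge linking) can be sketched as below. The sketch assumes that a gradient-magnitude image has already been computed and thinned; the function name `hysteresis`, the threshold values and the use of 8-connectivity are illustrative assumptions.

```python
def hysteresis(magnitude, low, high):
    """Return a binary edge map from a gradient-magnitude image.

    Pixels with magnitude >= high are strong edges. Pixels with magnitude
    >= low are kept only if they are 8-connected to a strong edge,
    directly or through other weak pixels.
    """
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    # Seed the search with all strong pixels.
    stack = [(r, c) for r in range(rows) for c in range(cols)
             if magnitude[r][c] >= high]
    for r, c in stack:
        edges[r][c] = True
    # Grow the edges into neighboring weak pixels (edge linking).
    while stack:
        r, c = stack.pop()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc]
                        and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    stack.append((nr, nc))
    return edges


magnitude = [[0.9, 0.3, 0.0, 0.3],
             [0.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 0.0, 0.0]]
edge_map = hysteresis(magnitude, low=0.2, high=0.8)
```

In this example the weak pixel next to the strong one is kept, while the isolated weak pixel at the end of the first row is discarded, which is how the two thresholds suppress noise without losing faint but genuine edges.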

2.6. Feature Matching

For matching, we have used two different methods, i.e., the Hamming distance and the absolute differencing method.

2.6.1. Hamming Distance

The Hamming distance makes use of only those parts of both iris patterns which correspond to “0”. It is calculated using the formula in Equation (12). Its value is zero when both iris patterns match exactly, but unfortunately this never happens in practice because of light variation while the image is being captured, noise that remains undetected during normalization, environmental effects on the sensor, etc. So, a distance of up to 0.5, a threshold chosen by trial and error, is usually considered to indicate a match. If the Hamming distance is below 0.5, the two iris patterns are considered to be the same, but if the distance is greater than 0.5, the iris patterns may or may not match. If the distance has a value of 1, the iris patterns clearly do not match.

$$HD = \frac{1}{N}\sum_{j=1}^{N} X_j \oplus Y_j \qquad (12)$$

where N is the number of parts to be compared and X_j ⊕ Y_j is the difference between the two iris patterns X and Y at part j.

2.6.2. Absolute Differencing

The absolute differencing method finds the absolute difference between each element of one iris pattern and the corresponding element of the other iris pattern, and it returns this absolute difference in the corresponding element of the output. If one pattern is identical to the other, then the absolute difference is zero.

We have combined these techniques so that the system first finds the Hamming distance and then finds the absolute difference between the two images. If the distance between the two images is zero, the features are displayed as matched, but if it is not zero, we calculate the absolute difference between the two patterns. If the absolute difference is also non-zero, the features are displayed as not matched.
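The two measures and one possible reading of the combined decision rule can be sketched as follows. The function `decide` is a hypothetical interpretation of the rule described in this paper (accept on a zero distance, reject when the Hamming distance exceeds the threshold and a non-zero absolute difference exists, accept otherwise); the binary patterns are assumed to be flat lists of 0s and 1s of equal length.

```python
def hamming_distance(code_a, code_b):
    """Fraction of positions at which two equal-length binary codes differ."""
    if len(code_a) != len(code_b):
        raise ValueError("patterns must have the same length")
    return sum(a != b for a, b in zip(code_a, code_b)) / len(code_a)


def absolute_difference(code_a, code_b):
    """Element-wise absolute difference between two patterns."""
    return [abs(a - b) for a, b in zip(code_a, code_b)]


def decide(code_a, code_b, threshold=0.5):
    """Assumed decision rule combining both measures."""
    hd = hamming_distance(code_a, code_b)
    if hd == 0:
        return "matched"
    diff = absolute_difference(code_a, code_b)
    if hd > threshold and any(diff):
        return "not matched"
    return "matched"


enrolled = [1, 0, 1, 1]
probe = [1, 1, 1, 0]
distance = hamming_distance(enrolled, probe)        # 2 of 4 positions differ
difference = absolute_difference(enrolled, probe)
```

For purely binary patterns the absolute difference is non-zero exactly where the bits differ, so the second test mainly adds information when the compared features are not strictly binary.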

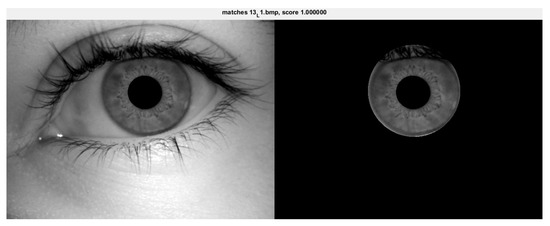

3. Principal Component Analysis (PCA)

Principal component analysis is a method used to extract strong patterns from a given dataset. It makes the data easier to visualize, and it converts a set of correlated variables into a set of linearly uncorrelated variables. This process reveals the differences and similarities in the dataset. The direction along which the data have the highest variance becomes the first axis, which is called the first principal component. The direction with the second highest variance becomes the second axis, which is called the second principal component, and so on. PCA reduces the dimensions of the dataset while retaining its main features and characteristics. We have used PCA to reduce the number of steps while aiming for the same results as the traditional image processing steps. However, it does not detect the inner features of the iris, which is a very important step for our system. It makes a decision based on the eigenvectors, eigenvalues and matching score between the two images. The results obtained using PCA are shown in Figure 9, and the PCA procedure is given in Algorithm 4.

| Algorithm 4: Principal Component Analysis (PCA). |
| --- |
| Step 1: Create a MAT file of the database and load the database. |
| Step 2: Find the mean of the images using $\mu = \frac{1}{N}\sum_{i=1}^{N} x_i$, where N is the number of images and $x_i$ is the i-th image. |
| Step 3: Find the mean-shifted input image. |
| Step 4: Calculate the eigenvectors and eigenvalues using $A\upsilon = \lambda\upsilon$, where λ is the eigenvalue of the non-zero square matrix A corresponding to the eigenvector ʋ. |
| Step 5: Find the cumulative energy content for each eigenvector by $g_j = \sum_{k=1}^{j} \lambda_k$, j = 1, 2, 3, …, p. Only the top principal components are retained. |
| Step 6: Create the feature vector by taking the product of the cumulative energy content of the eigenvectors and the mean-shifted input image. |
| Step 7: Separate out the feature vector (iris section) from the input image. |
| Step 8: Find the similarity score with the images in the database. |
| Step 9: Display the image having the highest similarity score with the input image. |

Figure 9.

Results of applying principal component analysis.
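Step 5 of Algorithm 4 retains only the top principal components according to their cumulative energy content. A minimal sketch of this selection is given below; the function name `components_to_keep` and the 90% energy fraction are assumptions made for the example, since the fraction used in this work is not stated, and the eigenvalues are assumed to have been computed already.

```python
def components_to_keep(eigenvalues, energy_fraction=0.9):
    """Number of leading components whose cumulative energy reaches the
    requested fraction of the total energy (sum of all eigenvalues)."""
    ordered = sorted(eigenvalues, reverse=True)   # largest variance first
    total = sum(ordered)
    cumulative = 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value                       # cumulative energy g_j
        if cumulative / total >= energy_fraction:
            return count
    return len(ordered)


eigenvalues = [4.0, 2.0, 1.0, 1.0]
kept = components_to_keep(eigenvalues, energy_fraction=0.75)
```

With these example eigenvalues the first two components already carry 6 of the 8 units of variance, so two components suffice for a 75% energy fraction, whereas a 90% fraction requires all four.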

4. Results and Discussion

Our proposed work was implemented on a laptop with an Intel Core 5Y10C processor, 4 GB RAM, Windows 8, IriCore software and MATLAB R2017a. A total of 454 images of 43 persons, taken with an MK-2120U camera using the IriCore software, were used to test the performance of the proposed system. Multiple samples of individual eyes were recorded in our database. Each image was captured as a 640 × 480, 8-bit grayscale image and saved in the BMP format. Figure 10 shows the left and right eye images of different persons.

Figure 10.

Iris images of different persons in our database.

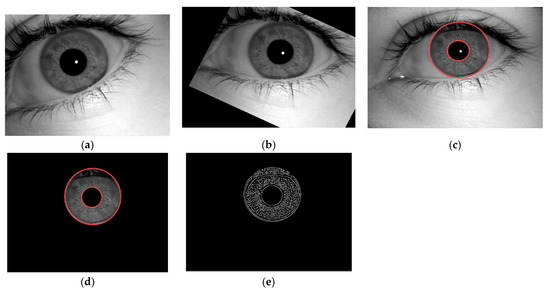

- Image registration aligns the input image with the reference image. We took an eye image, as shown in Figure 11a, and performed image registration to align it, as shown in Figure 11b. It is one of the major steps performed to align the images for analysis, and it reduces the problems of misalignment, rotation and scale.

Figure 11. (a) Original eye image. (b) Image after registration. (c) Outer and inner boundaries of image. (d) Iris portion separation from an eye image. (e) Features of an Iris.

- Segmentation involves the detection of the circular portion and its extraction from an eye image. Iris segmentation combines edge detection and the Hough transform to detect the circular edges in the image. The segmented iris is shown in Figure 11c. It also involves the extraction of the iris region from the eye image, as shown in Figure 11d, which was achieved by combining the circular Hough transform and masking methods, resulting in the extraction of the circular iris portion.

- Feature extraction was realized using the two-dimensional discrete wavelet transform and Canny edge detection. The 2D DWT yields the horizontal, vertical and diagonal components of the iris features, which contain a lot of information that is difficult to handle, while the edge detection technique provides all of the features in a single matrix. Comparing both techniques, the 2D DWT takes more time to execute and provides the desired results in three matrices, which further increases the matching time, while the edge detection technique takes less time to provide the desired result and makes it easy to find matched features, as can be seen in Figure 11e. So, the results of the edge detection technique were employed for further processing.

- Matching comprises two different methods to reduce the FAR and FRR as much as possible: the Hamming distance and the absolute differencing method. First, the Hamming distance method was implemented to find the distance between the iris features, and if this distance is zero, then access is granted. This is difficult to achieve because occlusions such as eyelids and eyelashes, changes in lighting conditions and noise affect the features of an iris. So, a threshold of 0.5 was set to pass the barrier (gate), but the iris pattern was still subjected to the absolute differencing method. This method checks the difference between the features of the two iris patterns, and it grants or denies access to the barrier. If the Hamming distance is greater than 0.5 and an absolute difference also exists, access to the system is denied.

- Principal component analysis comprises all of the steps from reading an image to matching it. It consists of extracting the iris from an eye image and calculating the mean, eigenvalues, eigenvectors and similarity score of the image to compare them with those of the images in the database. The decision is based on the similarity score between two images, as shown in Figure 9. The similarity score is 1 with ID 13_L1, which means that the features are similar to those of the person having this ID. This method mainly captures the surface features, while the iris pattern contains detailed hidden information that needs to be taken into account during processing.

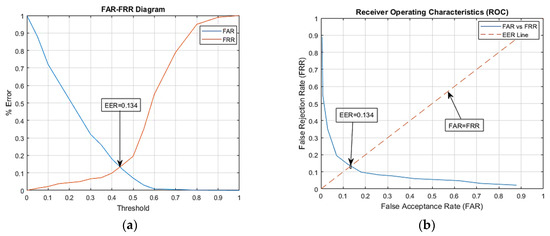

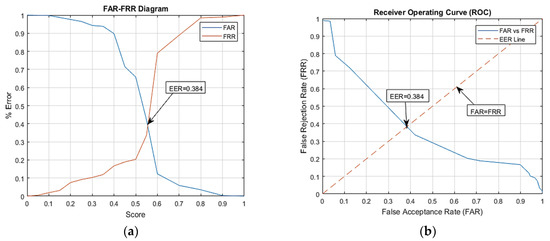

To check the accuracy of the proposed system, it was evaluated using the false acceptance rate (FAR), the false rejection rate (FRR) and the equal error rate (EER). The EER is the point at which the FAR and FRR curves intersect, i.e., where the FRR equals the FAR; it is considered to be the most important measure of a biometric system’s accuracy, and the lower the EER is, the better the accuracy of the system will be. The matching algorithm uses a threshold value that determines how close the input iris must be to the database iris. The lower the threshold value is, the lower the FRR and the higher the FAR will be, while a higher threshold value leads to a lower FAR and a higher FRR, as shown in Figure 12 and Figure 13. The proposed iris recognition system gives an EER = 0.134 as shown in Figure 12, while the PCA-based method for iris recognition gives an EER = 0.384 as shown in Figure 13.

Figure 12.

Performance graph for proposed method. (a) % Error for threshold distance (b) ROC curve.

Figure 13.

Performance graph for PCA-based method. (a) % Error for threshold distance. (b) ROC curve.
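The relationship between the threshold, the FAR, the FRR and the EER can be sketched as follows. The sketch assumes that each comparison yields a similarity score in [0, 1] and that a comparison is accepted when its score is at least the threshold, which matches the direction described above (a lower threshold lowers the FRR and raises the FAR). The score lists are made-up illustrative values, not measurements from our database, and the function names are hypothetical.

```python
def far_frr(genuine, impostor, threshold):
    """FAR and FRR when a comparison is accepted if score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor, steps=100):
    """Scan thresholds in [0, 1]; return (threshold, EER) at the point
    where FAR and FRR are closest to each other."""
    best = None
    for i in range(steps + 1):
        t = i / steps
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    return best[1], best[2]


# Illustrative scores only: same-eye comparisons and different-eye comparisons.
genuine_scores = [0.9, 0.8, 0.85, 0.6]
impostor_scores = [0.2, 0.3, 0.65, 0.1]
threshold, eer = equal_error_rate(genuine_scores, impostor_scores)
```

For a distance-based matcher such as the Hamming distance, the same code applies after reversing the comparisons, since a comparison is then accepted when the distance falls below the threshold.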

Table 1 describes the performance of different methodologies for iris recognition. It shows the false acceptance rates (FAR), the false rejection rates (FRR) and the recognition accuracy obtained using different methodologies. It can be observed from the table that our proposed system outperforms the existing techniques in terms of processing time. Our proposed system has a recognition rate of 99.73% and an execution time of 6.56 s, while the other system, based on PCA, has a recognition rate of 88.99% and an execution time of 21.52 s. Therefore, the proposed system without PCA is more proficient for identification than the PCA-based system, which is less accurate and slower.

Table 1.

Performance comparison of different methodologies.

It can be seen from the above table that the accuracy of our proposed system is better than that of the other methods because it includes image registration, which provides much better results. The PCA-based system is less efficient than the other proposed system, but it could be made more efficient by using a camera with a higher resolution. So, the proposed system with image registration is proficient for the identification and verification of the iris.

5. Conclusions

Iris recognition is an emerging field in biometrics because the iris has a data-rich, unique structure, which makes it one of the best ways to identify an individual. The designed project is an innovation over the security systems that are in use today. Due to the unique nature of the iris, it can be used as a password for life. As the iris is the only part of the human body that can never be altered, there is no chance of trespassing by any means when an iris detection system is used. In this paper, an efficient approach for an iris recognition system using image registration and PCA is presented, using a database built from images taken with an iris scanner. The characteristics of the iris that enhance its suitability for automatic identification include the ease of image registration, its natural protection from the external environment and the impossibility of altering it surgically without loss of vision.

Iris recognition systems are applied in various areas of life such as crime detection, airports, business applications, banks and industries. Image registration adjusts the angle and alignment of the input image to the reference image. The iris segmentation uses the circular Hough transform method for detecting the iris portion, and then a mask is applied to extract the iris segment from the eye. Feature extraction is achieved using the two-dimensional discrete wavelet transform (2-D DWT) method and an edge detection technique so that the most important areas of the iris pattern can be extracted, and hence a high recognition rate and a short computation time are achieved. The Hamming distance and absolute differencing methods were applied to the extracted features, which gave an accuracy of 99.73%.

PCA was also applied to the same database, and its decision is based purely on the matching score. This system gives a recognition rate of 89.99% as it does not analyze the deep features. Then, the doors were automated using serial communication between MATLAB and Arduino. The door automation using iris recognition has reduced the manual work of opening and closing the barrier. The system proved to be efficient, and it saved time as it had a processing time of 6.56 s, which could be reduced further if more advanced programming software were used. In the future, the system can be improved by investigating the proposed iris recognition system under different constraints and environments.

Author Contributions

H.H.: proposed topic, basic study design, data collection, methodology, statistical analysis and interpretation of results; M.N.Z.: manuscript writing, review and editing; C.A.A.: literature review, writing and referencing; H.E.: review and editing; M.O.A.: review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors acknowledge the National Development Complex (NDC, NESCOM), Islamabad, Pakistan, for sponsoring the project, and the authorized faculty for their technical and non-technical help in accomplishing its goal.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, D. Biometrics technologies and applications. In Proceedings of the International Conference on Image and Graphics, Vancouver, BC, Canada, 10–13 September 2000.

- Daugman, J.G. High confidence visual recognition of persons by a test of statistical independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

- Sanchez-Avila, C.; Sanchez-Reillo, R. Two different approaches for iris recognition using Gabor filters and multiscale zero-crossing representation. Pattern Recognit. 2005, 38, 231–240.

- Tisse, C.-L.; Martin, L.; Torres, L.; Robert, M. Person identification technique using human iris recognition. In Proceeding Vision Interface; Citeseer: Princeton, NJ, USA, 2002.

- Daugman, J.; Downing, C. Epigenetic Randomness, Complexity and Singularity of Human Iris patterns. Proc. R. Soc. Lond. Ser. B Biol. Sci. 2001, 268, 1737–1740.

- Jeong, D.S.; Hwang, J.W.; Kang, B.J.; Park, K.R.; Won, C.S.; Park, D.-K.; Kim, J. A new iris segmentation method for non-ideal iris images. Image Vis. Comput. 2010, 28, 254–260.

- Szewczyk, R.; Grabowski, K.; Napieralska, M.; Sankowski, W.; Zubert, M.; Napieralski, A. A reliable iris recognition algorithm based on reverse biorthogonal wavelet transform. Pattern Recognit. Lett. 2012, 33, 1019–1026.

- Sreekala, P.; Jose, V.; Joseph, J.; Joseph, S. The human iris structure and its application in security system of car. In Proceedings of the 2012 IEEE International Conference on Engineering Education: Innovative Practices and Future Trends (AICERA), Kottayam, India, 19–21 July 2012; IEEE: Piscataway, NJ, USA, 2012.

- Jamaludin, S.; Zainal, N.; Zaki, W.M.D.W. Sub-iris Technique for Non-ideal Iris Recognition. Arab. J. Sci. Eng. 2018, 43, 7219–7228.

- Daugman, J. Demodulation by complex-valued wavelets for stochastic pattern recognition. Int. J. Wavelets Multiresolution Inf. Process. 2003, 1, 1–17.

- Daugman, J. Statistical richness of visual phase information: Update on recognizing persons by iris patterns. Int. J. Comput. Vis. 2001, 45, 25–38.

- Daugman, J. How iris recognition works. In The Essential Guide to Image Processing; Elsevier: Amsterdam, The Netherlands, 2009; pp. 715–739.

- Daugman, J. New methods in iris recognition. IEEE Trans. Syst. Man Cybern. Part B 2007, 37, 1167–1175.

- Daugman, J. Information theory and the iriscode. IEEE Trans. Inf. Forensics Secur. 2015, 11, 400–409.

- Wildes, R.P. Iris recognition: An emerging biometric technology. Proc. IEEE 1997, 85, 1348–1363.

- Boles, W.W.; Boashash, B. A human identification technique using images of the iris and wavelet transform. IEEE Trans. Signal Process. 1998, 46, 1185–1188.

- Zhu, Y.; Tan, T.; Wang, Y. Biometric personal identification based on iris patterns. In Proceedings of the 15th International Conference on Pattern Recognition. ICPR-2000, Barcelona, Spain, 3–7 September 2000; IEEE: Piscataway, NJ, USA, 2000.

- Ma, L.; Wang, Y.; Tan, T. Iris recognition using circular symmetric filters. In Object Recognition Supported by User Interaction for Service Robots; IEEE: Piscataway, NJ, USA, 2002.

- Ma, L.; Tan, T.; Wang, Y.; Zhang, D. Efficient iris recognition by characterizing key local variations. IEEE Trans. Image Process. 2004, 13, 739–750.

- He, Z.; Tan, T.; Sun, Z.; Qiu, X. Toward accurate and fast iris segmentation for iris biometrics. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 1670–1684.

- Sun, Z.; Tan, T. Ordinal measures for iris recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 2211–2226.

- Patil, C.M.; Patilkulkarani, S. Iris feature extraction for personal identification using lifting wavelet transform. In Proceedings of the 2009 International Conference on Advances in Computing, Control, and Telecommunication Technologies, Bangalore, India, 28–29 December 2009; IEEE: Piscataway, NJ, USA, 2009.

- Sundaram, R.M.; Dhara, B.C. Neural network based Iris recognition system using Haralick features. In Proceedings of the 2011 3rd International Conference on Electronics Computer Technology, Kanyakumari, India, 8–10 April 2011; IEEE: Piscataway, NJ, USA, 2011.

- Shin, K.Y.; Nam, G.P.; Jeong, D.S.; Cho, D.H.; Kang, B.J.; Park, K.R.; Kim, J. New iris recognition method for noisy iris images. Pattern Recognit. Lett. 2012, 33, 991–999.

- Ismail, A.I.; Hali, S.; Farag, F.A. Efficient enhancement and matching for iris recognition using SURF. In Proceedings of the 2015 5th national symposium on information technology: Towards new smart world (NSITNSW), Riyadh, Saudi Arabia, 17–19 February 2015; IEEE: Piscataway, NJ, USA, 2015.

- Ali, H.S.; Ismail, A.I.; Farag, F.A.; El-Samie, F.E.A. Speeded up robust features for efficient iris recognition. Signal Image Video Process. 2016, 10, 1385–1391.

- Kamble, U.R.; Waghmare, L. Person Identification Using Iris Recognition: CVPR_IRIS Database. In Proceedings of the International Conference on ISMAC in Computational Vision and Bio-Engineering, Palladam, India, 16–17 May 2018; Springer: Berlin/Heidelberg, Germany, 2018.

- Dua, M.; Gupta, R.; Khari, M.; Crespo, R.G. Biometric iris recognition using radial basis function neural network. Soft Comput. 2019, 23, 11801–11815.

- Labati, R.D.; Genovese, A.; Piuri, V.; Scotti, F. Iris segmentation: State of the art and innovative methods. In Cross Disciplinary Biometric Systems; Springer: Berlin/Heidelberg, Germany, 2012; pp. 151–182.

- Rankin, D.M.; Scotney, B.W.; Morrow, P.J.; McDowell, D.R.; Pierscionek, B.K. Dynamic iris biometry: A technique for enhanced identification. BMC Res. Notes 2010, 3, 182.

- Proença, H.; Alexandre, L.A. Iris segmentation methodology for non-cooperative recognition. IEE Proc.-Vis. Image Signal Process. 2006, 153, 199–205.

- de Martin-Roche, D.; Sanchez-Avila, C.; Sanchez-Reillo, R. Iris recognition for biometric identification using dyadic wavelet transform zero-crossing. In Proceedings of the IEEE 35th Annual 2001 International Carnahan Conference on Security Technology (Cat. No. 01CH37186), London, UK, 16–19 October 2001; IEEE: Piscataway, NJ, USA, 2001.

- Sreecholpech, C.; Thainimit, S. A robust model-based iris segmentation. In Proceedings of the 2009 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Kanazawa, Japan, 7–9 December 2009; IEEE: Piscataway, NJ, USA, 2009.

- Puhan, N.B.; Sudha, N.; Kaushalram, A.S. Efficient segmentation technique for noisy frontal view iris images using Fourier spectral density. Signal Image Video Process. 2011, 5, 105–119.

- Teo, C.C.; Ewe, H.T. An efficient one-dimensional fractal analysis for iris recognition. In Proceedings of the 13th WSCG International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision, Plzen-Bory, Czech Republic, 31 January–4 February 2005.

- Ad, S.; Sasikala, T.; Kumar, C.U. Edge Detection Algorithm and its application in the Geo-Spatial Technology. In Proceedings of the 2017 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Coimbatore, India, 14–16 December 2017; IEEE: Piscataway, NJ, USA, 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).