Brain-Computer Interfaces and AI Segmentation in Neurosurgery: A Systematic Review of Integrated Precision Approaches

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Advances in AI-Driven Medical Image Segmentation

- U-Net and Variants: Initially introduced for biomedical image segmentation, U-Net has been widely adopted due to its encoder–decoder structure, which effectively captures spatial and contextual information. Variants like Attention U-Net and 3D U-Net have further improved segmentation accuracy for volumetric imaging [8].

- Transformers in Segmentation: Vision transformers (ViTs) and Swin transformers have recently demonstrated superior performance in segmenting medical images by leveraging self-attention mechanisms to capture long-range dependencies [9].

- Generative Adversarial Networks (GANs): GAN-based segmentation models enhance the precision of medical image delineation by generating realistic synthetic data and refining segmentation boundaries [10].

- EEG and MEG: While traditionally used for functional brain mapping, AI-assisted segmentation techniques now improve spatial resolution by segmenting source-localized brain activity. DL enhances artifact removal and signal interpretation [11].

- fNIRS: AI models segment hemodynamic responses from fNIRS data, distinguishing oxygenated and deoxygenated hemoglobin concentrations to map cortical activity with higher precision.

- EMG: AI-driven segmentation aids in the precise identification of muscle activity patterns, improving applications in neuromuscular disorder diagnosis and prosthetic control.

- ECoG and High-Density Arrays: AI models segment cortical activity recorded from ECoG and high-density electrode arrays, enabling more refined brain mapping for epilepsy monitoring and BCI applications [14].

1.3. Challenges and Future Directions

1.4. Importance of Precision Neurosurgery

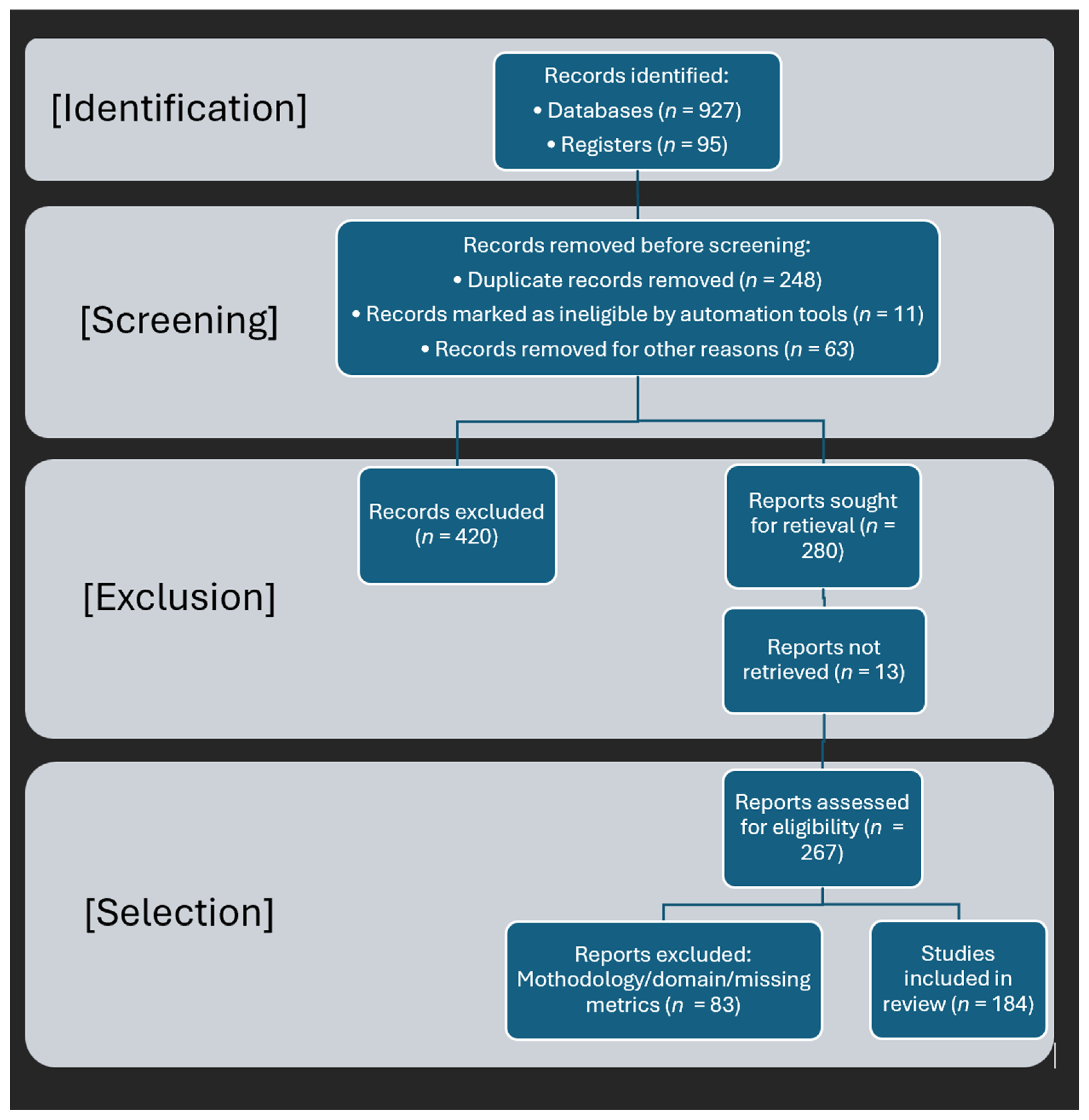

1.5. Methodology and Literature Selection

1.6. Contributions of This Review

1.7. Structure of the Paper

2. Methods

2.1. Advanced Neuroimaging Modalities for Precision Neurosurgery

2.1.1. Role of Neuroimaging in Precision Neurosurgery

- MRI and CT: MRI and CT scans serve as foundational tools for visualizing anatomical structures, aiding in tumor resection, and identifying vascular abnormalities [22].

- FGS: The use of fluorescence agents such as 5-ALA enhances real-time intraoperative tumor visualization, thereby improving the accuracy of surgical resection [28].

2.1.2. Clinical Relevance in Neurosurgical Practice

- Brain Tumor Resection: AI-enhanced segmentation assists in accurately distinguishing tumor margins from healthy tissue, thereby reducing the risk of postoperative neurological deficits [40]. Studies have demonstrated that DL models such as CNNs and transformers outperform traditional segmentation methods in identifying tumor boundaries, leading to improved surgical planning [41].

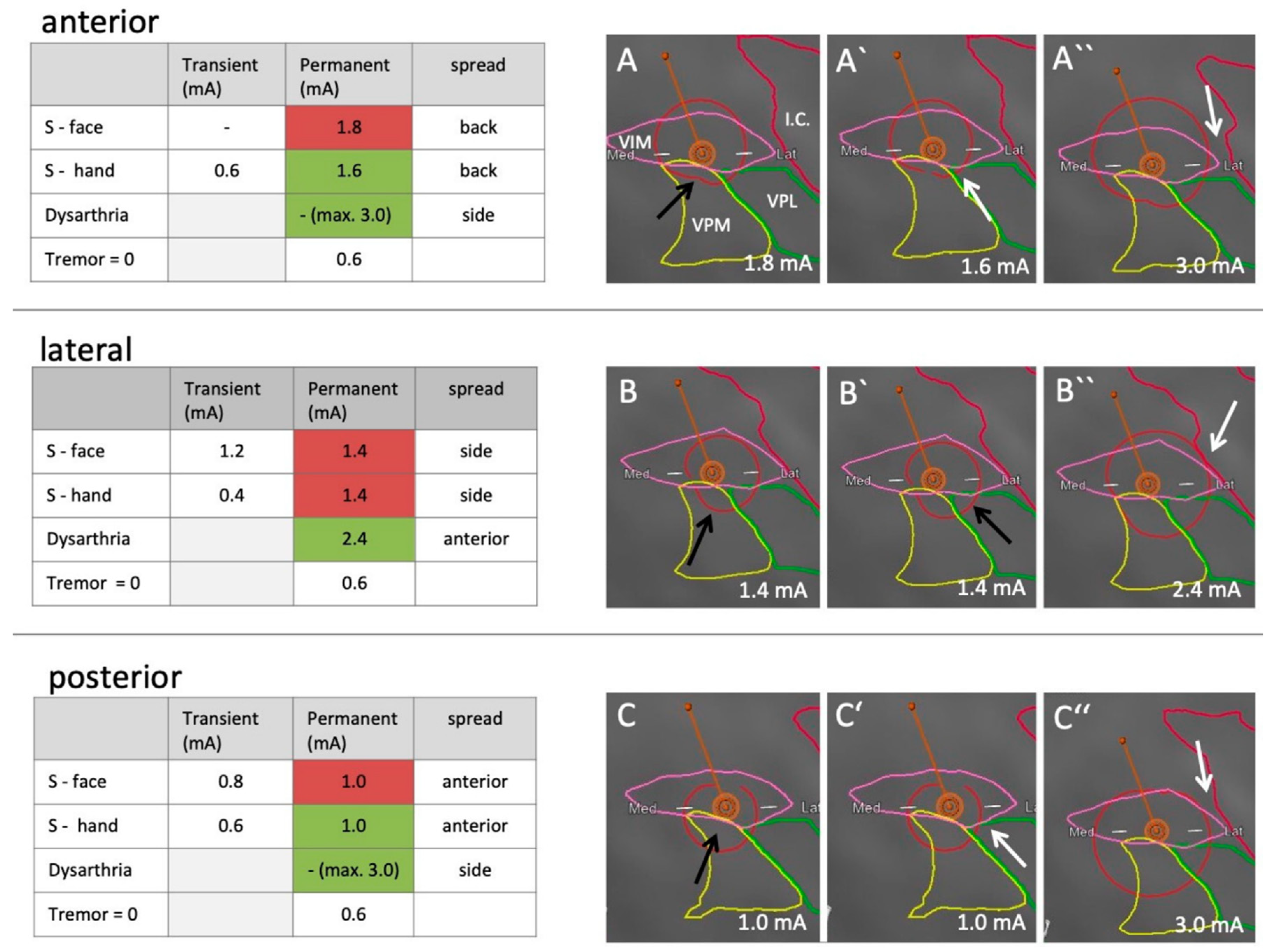

- Deep Brain Stimulation (DBS) Planning: Accurate segmentation of subcortical structures is crucial for optimal electrode placement in DBS procedures used to treat movement disorders such as Parkinson’s disease [43]. AI-based volumetric segmentation has been shown to enhance the precision of target selection in DBS, thereby improving therapeutic outcomes [44].

- Epilepsy Surgery: AI-based identification of seizure foci enhances the precision of both resective and neuromodulatory treatments for epilepsy [46]. Machine learning algorithms, particularly support vector machines (SVMs) and recurrent neural networks (RNNs), have been employed to analyze intracranial EEG (iEEG) signals and detect epileptogenic zones with high accuracy [47].

- Interpretability: The “black box” nature of many AI models remains a significant barrier to clinical adoption. To improve transparency, XAI approaches such as attention mechanisms and saliency maps are being explored to provide visual interpretability of AI-generated segmentations [49]. These techniques enhance clinician trust and facilitate regulatory approval [50].

- Regulatory Approvals: AI-driven medical imaging tools require rigorous validation and regulatory clearance, such as approval by the U.S. Food and Drug Administration (FDA) or CE (Conformité Européenne) marking in the European Union, before they can be deployed in clinical settings [51]. Regulatory frameworks are continually evolving to address concerns related to data privacy, bias, and reliability.

- Intraoperative Validation: Real-time validation of AI-generated segmentations during surgery remains a challenge. AI must seamlessly integrate with intraoperative imaging systems, such as neuronavigation platforms, to ensure reliable guidance during neurosurgical procedures [52,53]. Additionally, AR and AI-assisted robotics are emerging as potential solutions for improving intraoperative accuracy [54].

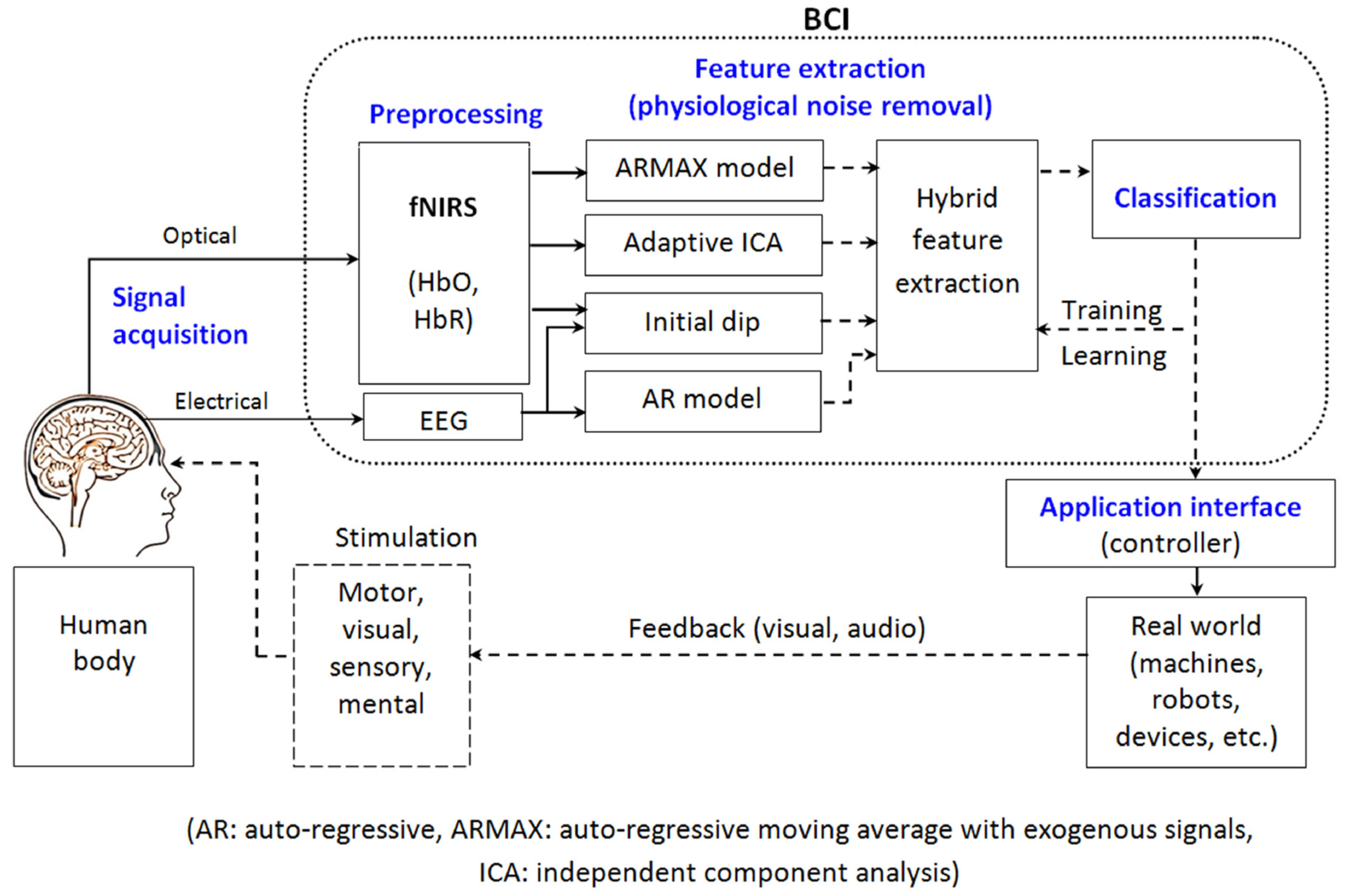

2.2. Brain–Computer Interfaces: Principles and Applications

2.2.1. Fundamentals of BCIs

Signal Acquisition

Signal Processing and Feature Extraction

Control and Feedback Mechanisms

2.2.2. BCI Paradigms

Motor Imagery (MI)

P300 Event-Related Potential (ERP)

Steady-State Visual Evoked Potentials (SSVEPs)

2.2.3. Neuroimaging Modalities for BCI

Electrophysiological Modalities

EEG

ECoG

LFPs

Single-Unit and Multi-Unit Recordings

Hemodynamic and Metabolic Modalities

fNIRS

fMRI

MEG

Emerging and Hybrid Modalities

EEG-fNIRS Hybrid Systems

EEG-fMRI Hybrid Systems

Invasive Hybrid BCIs

2.2.4. Latest Developments

2.3. AI-Driven Brain Image Segmentation: State-of-the-Art

2.3.1. Machine Learning and Deep Learning in Image Segmentation

2.3.2. Mathematical Formulation of CNN-Based Segmentation

- Cross-entropy loss, used for pixel-wise classification:

- Dice loss, which quantifies the degree of overlap between the predicted and true segmentation masks:

- Focal loss, designed to mitigate class imbalance by down-weighting easily classified examples:
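The three losses listed above can be written in their standard textbook forms as follows. This is a sketch under assumed notation that does not come from the text itself: $N$ is the number of pixels (or voxels), $C$ the number of classes, $y_{i,c}$ the one-hot ground-truth label, $p_{i,c}$ the predicted class probability, $\epsilon$ a small smoothing constant, $\alpha_c$ a per-class weight, and $\gamma \ge 0$ the focusing parameter. The Dice loss is shown for a single foreground class, with $p_i$ and $y_i$ the predicted probability and the label for that class.

```latex
\begin{align}
\mathcal{L}_{\mathrm{CE}} &= -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log p_{i,c} \\
\mathcal{L}_{\mathrm{Dice}} &= 1 - \frac{2\sum_{i=1}^{N} p_{i}\,y_{i} + \epsilon}{\sum_{i=1}^{N} p_{i} + \sum_{i=1}^{N} y_{i} + \epsilon} \\
\mathcal{L}_{\mathrm{Focal}} &= -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} \alpha_{c}\,\bigl(1 - p_{i,c}\bigr)^{\gamma}\, y_{i,c}\,\log p_{i,c}
\end{align}
```

With $\gamma = 0$ and $\alpha_c = 1$ the focal loss reduces to the cross-entropy loss, which is one way to check that the two expressions are consistent.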

2.4. Hybrid BCI and Image Segmentation Model for Precision Neurosurgery

2.4.1. System Architecture and Workflow

- Neural Signal Acquisition: EEG and ECoG signals are collected using high-resolution sensors to capture real-time brain activity. Recent advances in non-invasive and minimally invasive BCI techniques improve spatial resolution and signal fidelity, enabling finer neuro-modulatory applications [79,90,124].

- Preprocessing Pipeline: Raw neural signals undergo artifact removal, band-pass filtering, and feature extraction to reduce noise in the input passed to classification. State-of-the-art signal processing frameworks integrate ICA and wavelet decomposition to enhance the robustness of feature extraction [125].

- DL-Based Image Segmentation: MRI and CT images are processed using transformer-based segmentation models, such as Swin UNETR, for precise delineation of brain structures. The combination of CNNs and self-attention mechanisms significantly improves segmentation accuracy in glioma detection and tumor boundary definition [38].

- Decision Support System (DSS): The integration of BCI-derived cognitive feedback and AI-based image analysis aids neurosurgeons in optimizing surgical interventions. Multimodal data fusion techniques enhance real-time surgical decision-making, reducing intraoperative errors and improving patient outcomes [126].

- Cloud Integration: A cloud-based AI/ML framework ensures scalability and real-time computational efficiency. Federated learning models deployed in cloud-based medical AI systems facilitate secure, distributed model training while maintaining patient data privacy [127].
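The federated learning step mentioned under Cloud Integration can be illustrated with federated averaging (FedAvg), in which a server combines client model weights in proportion to each client's local sample count. The following is a minimal sketch, assuming weights are stored as plain Python lists keyed by layer name; the names `federated_average`, `hospital_a`, and `hospital_b` are hypothetical and do not describe the reviewed systems.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: layer name -> flat list of parameters.
Weights = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    reference = updates[0][0]
    averaged: Weights = {}
    for name, values in reference.items():
        acc = [0.0] * len(values)
        for weights, n in updates:
            for j, w in enumerate(weights[name]):
                acc[j] += w * n / total
        averaged[name] = acc
    return averaged


# Two illustrative clients; only weights and counts leave each site,
# never the underlying patient images.
hospital_a = ({"conv1": [0.2, 0.4], "bias": [0.0]}, 100)
hospital_b = ({"conv1": [0.6, 0.0], "bias": [1.0]}, 300)
global_weights = federated_average([hospital_a, hospital_b])
```

In practice the averaging is usually combined with secure aggregation or differential privacy, which this sketch omits.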

2.4.2. Signal Processing for Real-Time Neurosurgical Assistance

- Fourier and Wavelet Transforms: Fourier and wavelet transforms are essential mathematical tools for analyzing EEG signals in the frequency and time–frequency domains. The Fourier transform (FT) decomposes EEG waveforms into constituent frequency components, allowing researchers to identify specific oscillatory patterns associated with cognitive processes and motor intentions. However, the FT assumes stationarity in the signal, an assumption that dynamic brain activity often violates [128]. Wavelet transforms relax this assumption by localizing frequency content in time, which makes them better suited to transient EEG events.

- Independent Component Analysis (ICA): Neural signal recordings, especially EEG, often contain artifacts from non-neural sources such as eye blinks, muscle movements, and external electrical noise. ICA is a powerful statistical technique used to separate and remove these unwanted artifacts while preserving relevant neural information.

- Deep Neural Networks (DNNs): Recent advancements in DL have significantly improved EEG-based neural decoding. CNNs and RNNs are particularly effective in extracting spatial and temporal features from EEG data, enabling the classification of brain states with high precision [130].

  - CNNs: These networks process EEG signals as spatially structured data, identifying patterns related to motor imagery, cognitive load, and surgical stress responses. CNNs efficiently learn hierarchical representations, making them robust against variations in electrode placement and signal noise.

  - RNNs and Long Short-Term Memory (LSTM) Networks: Unlike CNNs, RNNs capture temporal dependencies in EEG signals. LSTM networks, a variant of RNNs, are particularly effective in modeling sequential EEG data, predicting user intent, and tracking dynamic changes in brain activity over time.

- Kalman Filters (KFs) and Hidden Markov Models (HMMs): Decoding neural signals in real time involves inherent uncertainty due to noise, signal fluctuations, and measurement errors. KFs and HMMs are probabilistic frameworks designed to address these challenges by smoothing and predicting neural signal patterns.

  - Kalman Filters: These are widely used in brain–computer interfaces to estimate dynamic brain states based on noisy EEG measurements. In neurosurgical applications, Kalman filters improve the real-time tracking of neural activity, making it possible to predict intended movements with greater precision.

  - Hidden Markov Models: These are particularly effective for modeling sequential neural events, such as transitions between different mental states or MI patterns. HMMs assign probabilistic states to EEG sequences, enhancing the accuracy of neurofeedback and BCI-driven assistive technologies.
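The smoothing role of the Kalman filter described above can be sketched with a scalar filter that tracks one slowly varying quantity from noisy samples. This is a minimal illustration under stated assumptions: a random-walk state model, hand-picked noise variances, and a synthetic input. The function name `kalman_smooth` and all parameter values are hypothetical and are not taken from any reviewed BCI system.

```python
from typing import List, Sequence


def kalman_smooth(
    measurements: Sequence[float],
    process_var: float = 1e-3,      # assumed variance of the state random walk
    measurement_var: float = 0.1,   # assumed variance of the sensor noise
    x0: float = 0.0,                # initial state estimate
    p0: float = 1.0,                # initial estimate variance
) -> List[float]:
    """Run a scalar Kalman filter and return the filtered estimates."""
    x, p = x0, p0
    estimates: List[float] = []
    for z in measurements:
        # Predict: the state is modeled as a random walk, so only the
        # uncertainty grows between samples.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p / (p + measurement_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates


# Synthetic input: a constant level of 1.0 with alternating +/-0.5 disturbance.
noisy = [1.0 + (0.5 if i % 2 == 0 else -0.5) for i in range(200)]
filtered = kalman_smooth(noisy)
```

Real neural decoders typically use a multivariate state (for example, cursor position and velocity) with matrices in place of the scalars here; the predict and update steps keep the same structure.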

2.4.3. Automated Brain Image Analysis Using DL

- Transformer-Based Segmentation: Traditional convolutional networks often struggle to maintain spatial consistency in brain MRI segmentation. Transformer-based models such as Swin UNETR and TransUNet address this limitation by incorporating self-attention mechanisms that improve feature representation across long-range spatial dependencies.

  - Swin UNETR: A hierarchical vision transformer that refines feature extraction while preserving high-resolution structural details in brain MRI scans.

  - TransUNet: A hybrid model that combines CNN feature extraction with transformer-based contextual modeling, leading to superior segmentation accuracy in neurosurgical planning and brain tumor delineation [132].

- Hybrid Attention Mechanisms: DL-based brain segmentation benefits from hybrid attention models, which combine self-attention (global feature learning) and spatial attention (local feature refinement). This approach enhances the precision of region delineation, crucial for neurosurgical decision-making [133].

- Self-Supervised Learning (SSL): One major limitation of DL in medical imaging is the reliance on large manually labeled datasets. SSL mitigates this issue by leveraging contrastive learning techniques to pre-train models using unlabeled data. This method significantly reduces annotation requirements while maintaining high segmentation accuracy [134].

- Multi-Modal Fusion: Combining data from multiple imaging modalities, including MRI, CT, and fMRI, enhances diagnostic accuracy by integrating complementary information. DL models perform multi-modal fusion using attention mechanisms, improving robustness against modality-specific noise and artifacts [135].
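The self-attention mechanism that the transformer-based models above rely on can be sketched as scaled dot-product attention over a short sequence of feature vectors. The example below is a simplified illustration, assuming identity projections (queries, keys, and values are all the input tokens) and a single head; actual models such as Swin UNETR and TransUNet use learned projections and multiple heads. The names `self_attention` and `tokens` are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    weights: Matrix = []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * value[j] for w, value in zip(row, tokens)) for j in range(dim)]
        for row in weights
    ]
    return outputs, weights


# Three toy patch embeddings: the first two are identical, the third differs.
tokens = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Because every token is compared with every other token, two distant image patches with similar features can influence each other directly, which is the long-range dependency property the text refers to.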

2.4.4. Integration with Cloud-Based AI/ML Platforms

- Edge Computing for Low-Latency Processing: To ensure real-time inference in surgical settings, edge computing is employed, enabling on-device processing with minimal latency. This is critical for applications requiring immediate neural signal decoding and feedback mechanisms [136]. Additionally, transformer-based segmentation models used in the system are quantized for on-device inference, enabling real-time processing on edge hardware such as embedded ARM-based systems or neurosurgical workstations with limited GPU capabilities. This significantly reduces latency and reliance on high-bandwidth connectivity, allowing responsive decision support in intraoperative and bedside settings.

- AutoML for Continuous Model Optimization: AutoML techniques automate model selection, hyperparameter tuning, and retraining, allowing continuous improvement of neurosurgical AI models [139]. To further address hardware constraints, knowledge distillation pipelines are employed to generate lightweight student models from large pre-trained segmentation networks. These distilled models retain diagnostic performance while reducing parameter count and computational load, making them suitable for deployment in clinics with modest computational infrastructure. AutoML-guided pruning strategies also dynamically trim non-contributing network branches, reducing memory footprint and accelerating inference. To address inter-subject heterogeneity and intra-session variability in neural signals, the system integrates adaptive learning mechanisms, including transfer learning and few-shot learning, which enable the model to recalibrate to individual neural signatures using minimal new data. This dynamic personalization helps maintain performance across subjects and sessions. Finally, real-time signal quality estimators such as entropy-based thresholds and SNR filters are incorporated to detect and reject artifact-heavy or physiologically implausible EEG segments before feature extraction. These estimators operate in conjunction with established preprocessing routines, such as ICA, Kalman filtering, and wavelet decomposition, to enhance the reliability of extracted neural features.

- Blockchain for Data Integrity: Blockchain technology provides tamper-evident medical records, enhancing transparency and security in neurosurgical data management. Smart contracts automate the record-keeping of surgical decisions and patient data so that entries cannot be altered unnoticed [140].
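The tamper-evidence property described in the blockchain item can be illustrated with a hash-chained log, in which each record stores the hash of its predecessor so that any later edit invalidates the chain. This is a deliberately simplified sketch: it is neither a distributed ledger nor a smart contract, and the record fields, the `GENESIS` constant, and the function names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # assumed placeholder hash for the first record


def _digest(payload: Dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the payload and link."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: List[Dict], payload: Dict) -> None:
    """Append a record that commits to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {"payload": payload, "prev": prev_hash, "hash": _digest(payload, prev_hash)}
    )


def verify_chain(chain: List[Dict]) -> bool:
    """Return True only if every record matches its stored hash and link."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != _digest(block["payload"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


# Illustrative audit entries with made-up identifiers.
log: List[Dict] = []
append_record(log, {"event": "segmentation_approved", "case": "demo-001"})
append_record(log, {"event": "trajectory_planned", "case": "demo-001"})
```

Editing the payload of any earlier record after the fact makes `verify_chain(log)` return `False`, because the stored hash no longer matches the recomputed one.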

2.5. Performance Evaluation and Statistical Analysis

2.5.1. Performance Metrics for BCI Systems

2.5.2. Evaluating Segmentation Accuracy

2.5.3. Statistical Significance Testing

- Paired t-test: Used when comparing the performance of two models on the same dataset, evaluating whether the mean difference between paired observations is statistically significant.

- Wilcoxon Signed-Rank Test: A non-parametric alternative to the paired t-test, suitable when the paired differences do not follow a normal distribution.

- Analysis of Variance (ANOVA): Applied when comparing multiple models or experimental conditions to determine whether significant differences exist among them.

- Permutation Testing: A robust statistical method used to assess the significance of performance differences by randomly shuffling labels and recalculating metrics to generate a null distribution.
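Permutation testing, as listed above, can be sketched for the common case of two segmentation models scored on the same cases. For paired data, shuffling the model labels within each case is equivalent to flipping the sign of that case's score difference. The example below enumerates every sign pattern exactly rather than sampling at random, which is feasible only for small samples. The function name and the Dice values are illustrative assumptions, not results from the reviewed studies.

```python
from itertools import product
from typing import Sequence


def paired_permutation_test(
    scores_a: Sequence[float], scores_b: Sequence[float]
) -> float:
    """Exact two-sided sign-flip permutation test on paired differences.

    Returns the fraction of sign patterns whose absolute summed difference
    is at least as large as the observed one.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))

    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        stat = abs(sum(s * d for s, d in zip(signs, diffs)))
        if stat >= observed - 1e-12:  # tolerance for floating-point ties
            extreme += 1
        total += 1
    return extreme / total


# Made-up per-case Dice scores for two hypothetical models.
dice_model_a = [0.91, 0.88, 0.93, 0.90, 0.89, 0.92]
dice_model_b = [0.88, 0.86, 0.90, 0.87, 0.88, 0.89]
p_value = paired_permutation_test(dice_model_a, dice_model_b)
```

With n paired cases there are 2^n sign patterns, so larger studies would sample patterns at random instead of enumerating them.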

3. Results and Discussion

3.1. Challenges in Real-World Implementation

3.2. Ethical Considerations in AI-Driven Neurosurgical Systems

3.3. Future Research Directions

3.4. Translational Impact of AI-Based Segmentation Models in Neurosurgical Practice

- TransUNet: One of the first architectures to integrate transformers into medical segmentation [167]. TransUNet combines a CNN encoder with a transformer module for long-range dependency capture, and a decoder for precise localization. This hybrid design has shown improved accuracy over pure CNNs—for instance, TransUNet yielded ~1–4% higher Dice scores than the robust nnU-Net on multi-organ and tumor segmentation tasks. In neurosurgical imaging, added self-attention allows better identification of diffuse or irregular tumor margins than convolution alone.

- Swin UNETR: A 3D segmentation model using a Swin transformer-based encoder with a U-Net style decoder [168]. By employing hierarchical transformers (Swin) that compute self-attention in shifted windows, Swin UNETR excels at capturing multi-scale context in volumetric MRI. This model achieved state-of-the-art performance on the Brain Tumor Segmentation (BraTS) challenge, with reported average Dice scores of roughly 90% or higher across tumor subregions. Such performance illustrates the ability of transformer-based models to handle the variable sizes and shapes of neurosurgical pathologies.

- nnU-Net: A self-configuring framework that automatically tunes the segmentation pipeline to a given dataset. Rather than a novel network architecture, nnU-Net optimizes preprocessing, architecture selection, training, and postprocessing in an all-in-one manner [169]. It has dominated many medical segmentation benchmarks, including neurosurgical tasks, by adapting U-Net variants to the data at hand. Remarkably, nnU-Net out-of-the-box has matched or surpassed custom models on 23 public datasets. In neurosurgical applications (tumor, vessel, and tract segmentation), nnU-Net’s optimized approach yields Dice scores often above 90%, essentially setting a performance ceiling that new architectures strive to beat.
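The shifted-window idea mentioned for Swin UNETR can be illustrated by listing which grid cells fall into each attention window before and after a cyclic shift. The sketch below is a 2D simplification of what is a 3D operation in Swin UNETR, and it omits the attention masks that real implementations apply to the wrapped-around windows. The helper names `window_partition` and `same_window` are hypothetical.

```python
from typing import List, Tuple

Cell = Tuple[int, int]


def window_partition(
    height: int, width: int, window: int, shift: int = 0
) -> List[List[Cell]]:
    """Group grid cells into non-overlapping windows after a cyclic shift.

    Each window is returned as a list of original (row, col) coordinates.
    """
    if height % window or width % window:
        raise ValueError("grid size must be divisible by the window size")
    windows: List[List[Cell]] = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            cells = [
                ((top + r + shift) % height, (left + c + shift) % width)
                for r in range(window)
                for c in range(window)
            ]
            windows.append(cells)
    return windows


def same_window(windows: List[List[Cell]], a: Cell, b: Cell) -> bool:
    """True if cells a and b fall inside the same attention window."""
    return any(a in cells and b in cells for cells in windows)


regular = window_partition(4, 4, window=2)
shifted = window_partition(4, 4, window=2, shift=1)
```

On this 4×4 grid, the neighbouring cells (0, 1) and (0, 2) sit in different windows in the regular partition but share a window after the shift, so alternating the two layouts lets information cross window borders.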

| Model | Key Characteristics | Example Application (Dataset) | Performance (Dice) | Ref. |
|---|---|---|---|---|
| U-Net | CNN encoder–decoder with skip connections; first widely adopted medical segmentation network. | Brain tumor MRI segmentation (BraTS) [170] | ~85% (whole tumor Dice) | [171,172] |
| TransUNet | Hybrid transformer + U-Net architecture capturing long-range context. | Multiorgan CT; also applied to brain tumors. | Outperforms basic U-Net (e.g., +1–4% Dice vs. nnU-Net). | [167,173] |
| Swin UNETR | Swin transformer encoder with U-Net decoder for 3D volumes. | Brain tumors (BraTS 2021) | ~90–93% (Dice across tumor subregions) | [168] |
| nnU-Net | Auto-configuring U-Net pipeline; no manual tuning needed. | Multiple (tumors, vessels, etc.; various challenges) | ~90%+ (top performance on numerous tasks) | [159] |
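The Dice values reported in the table measure overlap between a predicted mask and a reference mask. A minimal sketch of the computation on flattened binary masks follows. The function name `dice_score`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice coefficient 2|A∩B| / (|A| + |B|) for flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of elements")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    size = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if size == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / size


# Toy 1D masks: three predicted voxels, three true voxels, two shared.
predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
score = dice_score(predicted, reference)
```

Here two of the three voxels in each mask overlap, giving 2·2 / (3 + 3), or about 0.67. A reported Dice of 0.90 therefore still leaves a noticeable share of boundary voxels in disagreement.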

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mudgal, S.K.; Sharma, S.K.; Chaturvedi, J.; Sharma, A. Brain Computer Interface Advancement in Neurosciences: Applications and Issues. Interdiscip. Neurosurg. 2020, 20, 100694.

- Vidal, J.J. Toward Direct Brain-Computer Communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180.

- Maiseli, B.; Abdalla, A.T.; Massawe, L.V.; Mbise, M.; Mkocha, K.; Nassor, N.A.; Ismail, M.; Michael, J.; Kimambo, S. Brain-Computer Interface: Trend, Challenges, and Threats. Brain Inform. 2023, 10, 20.

- Wang, Y.; Wang, R.; Gao, X.; Hong, B.; Gao, S. A Practical VEP-Based Brain-Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 234–240.

- Ang, K.K.; Guan, C.; Phua, K.S.; Wang, C.; Zhou, L.; Tang, K.Y.; Ephraim Joseph, G.J.; Keong Kuah, C.W.; Geok Chua, K.S. Brain-Computer Interface-Based Robotic End Effector System for Wrist and Hand Rehabilitation: Results of a Three-Armed Randomized Controlled Trial for Chronic Stroke. Front. Neuroeng. 2014, 7, 30.

- Yuste, R.; Goering, S.; Agüera y Arcas, B.; Bi, G.; Carmena, J.M.; Carter, A.; Fins, J.J.; Friesen, P.; Gallant, J.; Huggins, J.E.; et al. Four Ethical Priorities for Neurotechnologies and AI. Nature 2017, 551, 159–163.

- Brocal, F. Brain-Computer Interfaces in Safety and Security Fields: Risks and Applications. Saf. Sci. 2023, 160, 106051.

- Xu, Y.; Quan, R.; Xu, W.; Huang, Y.; Chen, X.; Liu, F. Advances in Medical Image Segmentation: A Comprehensive Review of Traditional, Deep Learning and Hybrid Approaches. Bioengineering 2024, 11, 1034.

- Khan, R.F.; Lee, B.D.; Lee, M.S. Transformers in Medical Image Segmentation: A Narrative Review. Quant. Imaging Med. Surg. 2023, 13, 8747–8767.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Liu, Z.; Ding, L.; He, B. Integration of EEG/MEG with MRI and fMRI in Functional Neuroimaging. IEEE Eng. Med. Biol. Mag. 2006, 25, 46.

- Christ, P.F.; Ettlinger, F.; Grün, F.; Elshaera, M.E.A.; Lipkova, J.; Schlecht, S.; Ahmaddy, F.; Tatavarty, S.; Bickel, M.; Bilic, P.; et al. Automatic Liver and Tumor Segmentation of CT and MRI Volumes Using Cascaded Fully Convolutional Neural Networks. arXiv 2017, arXiv:1702.05970.

- Panayides, A.S.; Amini, A.; Filipovic, N.D.; Sharma, A.; Tsaftaris, S.A.; Young, A.; Foran, D.; Do, N.; Golemati, S.; Kurc, T.; et al. AI in Medical Imaging Informatics: Current Challenges and Future Directions. IEEE J. Biomed. Health Inform. 2020, 24, 1837–1857.

- Hamilton, L.S.; Chang, D.L.; Lee, M.B.; Chang, E.F. Semi-Automated Anatomical Labeling and Inter-Subject Warping of High-Density Intracranial Recording Electrodes in Electrocorticography. Front. Neuroinform. 2017, 11, 272432.

- Ruberto, C.D.; Stefano, A.; Comelli, A.; Putzu, L.; Loddo, A.; Kebaili, A.; Lapuyade-Lahorgue, J.; Ruan, S. Deep Learning Approaches for Data Augmentation in Medical Imaging: A Review. J. Imaging 2023, 9, 81.

- Chen, X.; Konukoglu, E. Unsupervised Detection of Lesions in Brain MRI Using Constrained Adversarial Auto-Encoders. arXiv 2018, arXiv:1806.04972.

- Pham, D.L.; Xu, C.; Prince, J.L. Current Methods in Medical Image Segmentation. Annu. Rev. Biomed. Eng. 2000, 2, 315–337.

- Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable Deep Learning Models in Medical Image Analysis. J. Imaging 2020, 6, 52.

- Chen, H.; Gomez, C.; Huang, C.M.; Unberath, M. Explainable Medical Imaging AI Needs Human-Centered Design: Guidelines and Evidence from a Systematic Review. NPJ Digit. Med. 2022, 5, 156.

- Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the Devil in the Details: Delving Deep into Convolutional Nets. arXiv 2014, arXiv:1405.3531.

- Mahmood, A.; Patille, R.; Lam, E.; Mora, D.J.; Gurung, S.; Bookmyer, G.; Weldrick, R.; Chaudhury, H.; Canham, S.L. Correction: Mahmood et al. Aging in the Right Place for Older Adults Experiencing Housing Insecurity: An Environmental Assessment of Temporary Housing Program. Int. J. Environ. Res. Public Health 2023, 20, 6260.

- Fathi Kazerooni, A.; Arif, S.; Madhogarhia, R.; Khalili, N.; Haldar, D.; Bagheri, S.; Familiar, A.M.; Anderson, H.; Haldar, S.; Tu, W.; et al. Automated Tumor Segmentation and Brain Tissue Extraction from Multiparametric MRI of Pediatric Brain Tumors: A Multi-Institutional Study. Neurooncol. Adv. 2023, 5, vdad027.

- Belliveau, J.W.; Kennedy, D.N.; McKinstry, R.C.; Buchbinder, B.R.; Weisskoff, R.M.; Cohen, M.S.; Vevea, J.M.; Brady, T.J.; Rosen, B.R. Functional Mapping of the Human Visual Cortex by Magnetic Resonance Imaging. Science 1991, 254, 716–719.

- Ogawa, S.; Tank, D.W.; Menon, R.; Ellermann, J.M.; Kim, S.G.; Merkle, H.; Ugurbil, K. Intrinsic Signal Changes Accompanying Sensory Stimulation: Functional Brain Mapping with Magnetic Resonance Imaging. Proc. Natl. Acad. Sci. USA 1992, 89, 5951–5955.

- Sterman, M.B.; Friar, L. Suppression of Seizures in an Epileptic Following Sensorimotor EEG Feedback Training. Electroencephalogr. Clin. Neurophysiol. 1972, 33, 89–95.

- Alarcon, G.; Garcia Seoane, J.J.; Binnie, C.D.; Martin Miguel, M.C.; Juler, J.; Polkey, C.E.; Elwes, R.D.C.; Ortiz Blasco, J.M. Origin and Propagation of Interictal Discharges in the Acute Electrocorticogram. Implications for Pathophysiology and Surgical Treatment of Temporal Lobe Epilepsy. Brain 1997, 120, 2259–2282.

- Pan, R.; Yang, C.; Li, Z.; Ren, J.; Duan, Y. Magnetoencephalography-Based Approaches to Epilepsy Classification. Front. Neurosci. 2023, 17, 1183391.

- Schupper, A.J.; Rao, M.; Mohammadi, N.; Baron, R.; Lee, J.Y.K.; Acerbi, F.; Hadjipanayis, C.G. Fluorescence-Guided Surgery: A Review on Timing and Use in Brain Tumor Surgery. Front. Neurol. 2021, 12, 682151.

- Hassan, A.M.; Rajesh, A.; Asaad, M.; Nelson, J.A.; Coert, J.H.; Mehrara, B.J.; Butler, C.E. Artificial Intelligence and Machine Learning in Prediction of Surgical Complications: Current State, Applications, and Implications. Am. Surg. 2022, 89, 25.

- Spyrantis, A.; Woebbecke, T.; Rueß, D.; Constantinescu, A.; Gierich, A.; Luyken, K.; Visser-Vandewalle, V.; Herrmann, E.; Gessler, F.; Czabanka, M.; et al. Accuracy of Robotic and Frame-Based Stereotactic Neurosurgery in a Phantom Model. Front. Neurorobot. 2022, 16, 762317.

- Matsuzaki, K.; Kumatoriya, K.; Tando, M.; Kometani, T.; Shinohara, M. Polyphenols from Persimmon Fruit Attenuate Acetaldehyde-Induced DNA Double-Strand Breaks by Scavenging Acetaldehyde. Sci. Rep. 2022, 12, 10300.

- Belkacem, A.N.; Jamil, N.; Khalid, S.; Alnajjar, F. On Closed-Loop Brain Stimulation Systems for Improving the Quality of Life of Patients with Neurological Disorders. Front. Hum. Neurosci. 2023, 17, 1085173.

- Mokienko, O.A. Brain-Computer Interfaces with Intracortical Implants for Motor and Communication Functions Compensation: Review of Recent Developments. Mod. Technol. Med. 2024, 16, 78.

- Vadhavekar, N.H.; Sabzvari, T.; Laguardia, S.; Sheik, T.; Prakash, V.; Gupta, A.; Umesh, I.D.; Singla, A.; Koradia, I.; Patiño, B.B.R.; et al. Advancements in Imaging and Neurosurgical Techniques for Brain Tumor Resection: A Comprehensive Review. Cureus 2024, 16, e72745.

- Livanis, E.; Voultsos, P.; Vadikolias, K.; Pantazakos, P.; Tsaroucha, A. Understanding the Ethical Issues of Brain-Computer Interfaces (BCIs): A Blessing or the Beginning of a Dystopian Future? Cureus 2024, 16, e58243.

- Iftikhar, M.; Saqib, M.; Zareen, M.; Mumtaz, H. Artificial Intelligence: Revolutionizing Robotic Surgery: Review. Ann. Med. Surg. 2024, 86, 5401.

- Abu Mhanna, H.Y.; Omar, A.F.; Radzi, Y.M.; Oglat, A.A.; Akhdar, H.F.; Al Ewaidat, H.; Almahmoud, A.; Bani Yaseen, A.B.; Al Badarneh, L.; Alhamad, O.; et al. Systematic Review of Functional Magnetic Resonance Imaging (fMRI) Applications in the Preoperative Planning and Treatment Assessment of Brain Tumors. Heliyon 2025, 11, e42464.

- Yue, W.; Zhang, H.; Zhou, J.; Li, G.; Tang, Z.; Sun, Z.; Cai, J.; Tian, N.; Gao, S.; Dong, J.; et al. Deep Learning-Based Automatic Segmentation for Size and Volumetric Measurement of Breast Cancer on Magnetic Resonance Imaging. Front. Oncol. 2022, 12, 984626.

- Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A Review of Machine Learning and Deep Learning for Object Detection, Semantic Segmentation, and Human Action Recognition in Machine and Robotic Vision. Technologies 2024, 12, 15.

- Agadi, K.; Dominari, A.; Tebha, S.S.; Mohammadi, A.; Zahid, S. Neurosurgical Management of Cerebrospinal Tumors in the Era of Artificial Intelligence: A Scoping Review. J. Korean Neurosurg. Soc. 2022, 66, 632.

- Rayed, M.E.; Islam, S.M.S.; Niha, S.I.; Jim, J.R.; Kabir, M.M.; Mridha, M.F. Deep Learning for Medical Image Segmentation: State-of-the-Art Advancements and Challenges. Inform. Med. Unlocked 2024, 47, 101504.

- Ranjbarzadeh, R.; Bagherian Kasgari, A.; Jafarzadeh Ghoushchi, S.; Anari, S.; Naseri, M.; Bendechache, M. Brain Tumor Segmentation Based on Deep Learning and an Attention Mechanism Using MRI Multi-Modalities Brain Images. Sci. Rep. 2021, 11, 10930.

- Fariba, K.A.; Gupta, V. Deep Brain Stimulation. In Encyclopedia of Movement Disorders; Lang, A.E., Lozano, A.M., Eds.; Elsevier: Oxford, UK, 2010; Volume 1, pp. 277–282.

- Chandra, V.; Hilliard, J.D.; Foote, K.D. Deep Brain Stimulation for the Treatment of Tremor. J. Neurol. Sci. 2022, 435, 120190.

- Krüger, M.T.; Kurtev-Rittstieg, R.; Kägi, G.; Naseri, Y.; Hägele-Link, S.; Brugger, F. Evaluation of Automatic Segmentation of Thalamic Nuclei through Clinical Effects Using Directional Deep Brain Stimulation Leads: A Technical Note. Brain Sci. 2020, 10, 642.

- Miller, K.J.; Fine, A.L. Decision Making in Stereotactic Epilepsy Surgery. Epilepsia 2022, 63, 2782.

- Mirchi, N.; Warsi, N.M.; Zhang, F.; Wong, S.M.; Suresh, H.; Mithani, K.; Erdman, L.; Ibrahim, G.M. Decoding Intracranial EEG With Machine Learning: A Systematic Review. Front. Hum. Neurosci. 2022, 16, 913777.

- Courtney, M.R.; Sinclair, B.; Neal, A.; Nicolo, J.P.; Kwan, P.; Law, M.; O’Brien, T.J.; Vivash, L. Automated Segmentation of Epilepsy Surgical Resection Cavities: Comparison of Four Methods to Manual Segmentation. Neuroimage 2024, 296, 120682.

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cognit. Comput. 2024, 16, 45–74. [Google Scholar] [CrossRef]

- Mienye, I.D.; Obaido, G.; Jere, N.; Mienye, E.; Aruleba, K.; Emmanuel, I.D.; Ogbuokiri, B. A Survey of Explainable Artificial Intelligence in Healthcare: Concepts, Applications, and Challenges. Inform. Med. Unlocked 2024, 51, 101587. [Google Scholar] [CrossRef]

- Liu, Y.; Yu, W.; Dillon, T. Regulatory Responses and Approval Status of Artificial Intelligence Medical Devices with a Focus on China. NPJ Digit. Med. 2024, 7, 255. [Google Scholar] [CrossRef]

- Khan, D.Z.; Valetopoulou, A.; Das, A.; Hanrahan, J.G.; Williams, S.C.; Bano, S.; Borg, A.; Dorward, N.L.; Barbarisi, S.; Culshaw, L.; et al. Artificial Intelligence Assisted Operative Anatomy Recognition in Endoscopic Pituitary Surgery. NPJ Digit. Med. 2024, 7, 314. [Google Scholar] [CrossRef]

- Nam, S.M.; Byun, Y.H.; Dho, Y.-S.; Park, C.-K. Envisioning the Future of the Neurosurgical Operating Room with the Concept of the Medical Metaverse. J. Korean Neurosurg. Soc. 2025, 68, 137–149. [Google Scholar] [CrossRef] [PubMed]

- Brockmeyer, P.; Wiechens, B.; Schliephake, H. The Role of Augmented Reality in the Advancement of Minimally Invasive Surgery Procedures: A Scoping Review. Bioengineering 2023, 10, 501. [Google Scholar] [CrossRef] [PubMed]

- Tangsrivimol, J.A.; Schonfeld, E.; Zhang, M.; Veeravagu, A.; Smith, T.R.; Härtl, R.; Lawton, M.T.; El-Sherbini, A.H.; Prevedello, D.M.; Glicksberg, B.S.; et al. Artificial Intelligence in Neurosurgery: A State-of-the-Art Review from Past to Future. Diagnostics 2023, 13, 2429. [Google Scholar] [CrossRef] [PubMed]

- Cervera, M.A.; Soekadar, S.R.; Ushiba, J.; Millán, J.d.R.; Liu, M.; Birbaumer, N.; Garipelli, G. Brain–Computer Interfaces for Post-Stroke Motor Rehabilitation: A Meta-Analysis. Ann. Clin. Transl. Neurol. 2018, 5, 651–663. [Google Scholar] [CrossRef]

- Caiado, F.; Ukolov, A. The History, Current State and Future Possibilities of the Non-Invasive Brain Computer Interfaces. Med. Nov. Technol. Devices 2025, 25, 100353. [Google Scholar] [CrossRef]

- Brookes, M.J.; Leggett, J.; Rea, M.; Hill, R.M.; Holmes, N.; Boto, E.; Bowtell, R. Magnetoencephalography with Optically Pumped Magnetometers (OPM-MEG): The Next Generation of Functional Neuroimaging. Trends Neurosci. 2022, 45, 621–634. [Google Scholar] [CrossRef]

- Acuña, K.; Sapahia, R.; Jiménez, I.N.; Antonietti, M.; Anzola, I.; Cruz, M.; García, M.T.; Krishnan, V.; Leveille, L.A.; Resch, M.D.; et al. Functional Near-Infrared Spectrometry as a Useful Diagnostic Tool for Understanding the Visual System: A Review. J. Clin. Med. 2024, 13, 282. [Google Scholar] [CrossRef]

- Coles, L.; Ventrella, D.; Carnicer-Lombarte, A.; Elmi, A.; Troughton, J.G.; Mariello, M.; El Hadwe, S.; Woodington, B.J.; Bacci, M.L.; Malliaras, G.G.; et al. Origami-Inspired Soft Fluidic Actuation for Minimally Invasive Large-Area Electrocorticography. Nat. Commun. 2024, 15, 6290. [Google Scholar] [CrossRef]

- Hong, J.W.; Yoon, C.; Jo, K.; Won, J.H.; Park, S. Recent Advances in Recording and Modulation Technologies for Next-Generation Neural Interfaces. iScience 2021, 24, 103550. [Google Scholar] [CrossRef]

- Islam, M.K.; Rastegarnia, A.; Sanei, S. Signal Artifacts and Techniques for Artifacts and Noise Removal. Intell. Syst. Ref. Libr. 2021, 192, 23–79. [Google Scholar] [CrossRef]

- Barnova, K.; Mikolasova, M.; Kahankova, R.V.; Jaros, R.; Kawala-Sterniuk, A.; Snasel, V.; Mirjalili, S.; Pelc, M.; Martinek, R. Implementation of Artificial Intelligence and Machine Learning-Based Methods in Brain-Computer Interaction. Comput. Biol. Med. 2023, 163, 107135. [Google Scholar] [CrossRef]

- Xu, Y.; Zhou, Y.; Sekula, P.; Ding, L. Machine Learning in Construction: From Shallow to Deep Learning. Dev. Built Environ. 2021, 6, 100045. [Google Scholar] [CrossRef]

- Chaudhary, U. Machine Learning with Brain Data. In Expanding Senses Using Neurotechnology; Springer: Berlin/Heidelberg, Germany, 2025; pp. 179–223. [Google Scholar] [CrossRef]

- Si-Mohammed, H.; Petit, J.; Jeunet, C.; Argelaguet, F.; Spindler, F.; Evain, A.; Roussel, N.; Casiez, G.; Lecuyer, A. Towards BCI-Based Interfaces for Augmented Reality: Feasibility, Design and Evaluation. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1608–1621. [Google Scholar] [CrossRef]

- Kim, S.; Lee, S.; Kang, H.; Kim, S.; Ahn, M. P300 Brain-Computer Interface-Based Drone Control in Virtual and Augmented Reality. Sensors 2021, 21, 5765. [Google Scholar] [CrossRef]

- Farwell, L.A.; Donchin, E. Talking off the Top of Your Head: Toward a Mental Prosthesis Utilizing Event-Related Brain Potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523. [Google Scholar] [CrossRef]

- McFarland, D.J.; Wolpaw, J.R. EEG-Based Brain-Computer Interfaces. Curr. Opin. Biomed. Eng. 2017, 4, 194–200. [Google Scholar] [CrossRef]

- Awuah, W.A.; Ahluwalia, A.; Darko, K.; Sanker, V.; Tan, J.K.; Tenkorang, P.O.; Ben-Jaafar, A.; Ranganathan, S.; Aderinto, N.; Mehta, A.; et al. Bridging Minds and Machines: The Recent Advances of Brain-Computer Interfaces in Neurological and Neurosurgical Applications. World Neurosurg. 2024, 189, 138–153. [Google Scholar] [CrossRef]

- Pfurtscheller, G.; Neuper, C. Motor Imagery Activates Primary Sensorimotor Area in Humans. Neurosci. Lett. 1997, 239, 65–68. [Google Scholar] [CrossRef]

- Saibene, A.; Caglioni, M.; Corchs, S.; Gasparini, F. EEG-Based BCIs on Motor Imagery Paradigm Using Wearable Technologies: A Systematic Review. Sensors 2023, 23, 2798. [Google Scholar] [CrossRef]

- Pan, J.; Chen, X.N.; Ban, N.; He, J.S.; Chen, J.; Huang, H. Advances in P300 Brain-Computer Interface Spellers: Toward Paradigm Design and Performance Evaluation. Front. Hum. Neurosci. 2022, 16, 1077717. [Google Scholar] [CrossRef]

- Norcia, A.M.; Appelbaum, L.G.; Ales, J.M.; Cottereau, B.R.; Rossion, B. The Steady-State Visual Evoked Potential in Vision Research: A Review. J. Vis. 2015, 15, 4. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- Haslacher, D.; Akmazoglu, T.B.; van Beinum, A.; Starke, G.; Buthut, M.; Soekadar, S.R. AI for Brain-Computer Interfaces. Dev. Neuroethics Bioeth. 2024, 7, 3–28. [Google Scholar] [CrossRef]

- Siribunyaphat, N.; Punsawad, Y. Steady-State Visual Evoked Potential-Based Brain–Computer Interface Using a Novel Visual Stimulus with Quick Response (QR) Code Pattern. Sensors 2022, 22, 1439. [Google Scholar] [CrossRef]

- Neuper, C.; Müller-Putz, G.R.; Scherer, R.; Pfurtscheller, G. Motor Imagery and EEG-Based Control of Spelling Devices and Neuroprostheses. Prog. Brain Res. 2006, 159, 393–409. [Google Scholar] [CrossRef]

- Branco, M.P.; Pels, E.G.M.; Sars, R.H.; Aarnoutse, E.J.; Ramsey, N.F.; Vansteensel, M.J.; Nijboer, F. Brain-Computer Interfaces for Communication: Preferences of Individuals With Locked-in Syndrome. Neurorehabil. Neural Repair 2021, 35, 267–279. [Google Scholar] [CrossRef]

- Adewole, D.O.; Serruya, M.D.; Harris, J.P.; Burrell, J.C.; Petrov, D.; Chen, H.I.; Wolf, J.A.; Cullen, D.K. The Evolution of Neuroprosthetic Interfaces. Crit. Rev. Biomed. Eng. 2016, 44, 123. [Google Scholar] [CrossRef]

- Collinger, J.L.; Gaunt, R.A.; Schwartz, A.B. Progress towards Restoring Upper Limb Movement and Sensation through Intracortical Brain-Computer Interfaces. Curr. Opin. Biomed. Eng. 2018, 8, 84–92. [Google Scholar] [CrossRef]

- Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.C.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-Performance Neuroprosthetic Control by an Individual with Tetraplegia. Lancet 2013, 381, 557–564. [Google Scholar] [CrossRef] [PubMed]

- Hu, X.; Assaad, R.H. The Use of Unmanned Ground Vehicles (Mobile Robots) and Unmanned Aerial Vehicles (Drones) in the Civil Infrastructure Asset Management Sector: Applications, Robotic Platforms, Sensors, and Algorithms. Expert Syst. Appl. 2023, 232, 120897. [Google Scholar] [CrossRef]

- Flesher, S.N.; Downey, J.E.; Weiss, J.M.; Hughes, C.L.; Herrera, A.J.; Tyler-Kabara, E.C.; Boninger, M.L.; Collinger, J.L.; Gaunt, R.A. A Brain-Computer Interface That Evokes Tactile Sensations Improves Robotic Arm Control. Science 2021, 372, 831–836. [Google Scholar] [CrossRef] [PubMed]

- Karmakar, S.; Kamilya, S.; Dey, P.; Guhathakurta, P.K.; Dalui, M.; Bera, T.K.; Halder, S.; Koley, C.; Pal, T.; Basu, A. Real Time Detection of Cognitive Load Using fNIRS: A Deep Learning Approach. Biomed. Signal Process. Control 2023, 80, 104227. [Google Scholar] [CrossRef]

- Mughal, N.E.; Khan, M.J.; Khalil, K.; Javed, K.; Sajid, H.; Naseer, N.; Ghafoor, U.; Hong, K.S. EEG-fNIRS-Based Hybrid Image Construction and Classification Using CNN-LSTM. Front. Neurorobot. 2022, 16, 873239. [Google Scholar] [CrossRef]

- Murphy, E.; Poudel, G.; Ganesan, S.; Suo, C.; Manning, V.; Beyer, E.; Clemente, A.; Moffat, B.A.; Zalesky, A.; Lorenzetti, V. Real-Time fMRI-Based Neurofeedback to Restore Brain Function in Substance Use Disorders: A Systematic Review of the Literature. Neurosci. Biobehav. Rev. 2024, 165, 105865. [Google Scholar] [CrossRef]

- Van Der Lande, G.J.M.; Casas-Torremocha, D.; Manasanch, A.; Dalla Porta, L.; Gosseries, O.; Alnagger, N.; Barra, A.; Mejías, J.F.; Panda, R.; Riefolo, F.; et al. Brain State Identification and Neuromodulation to Promote Recovery of Consciousness. Brain Commun. 2024, 6, fcae362. [Google Scholar] [CrossRef]

- Papadopoulos, S.; Bonaiuto, J.; Mattout, J. An Impending Paradigm Shift in Motor Imagery Based Brain-Computer Interfaces. Front. Neurosci. 2022, 15, 824759. [Google Scholar] [CrossRef]

- Zhang, Y.; Yagi, K.; Shibahara, Y.; Tate, L.; Tamura, H. A Study on Analysis Method for a Real-Time Neurofeedback System Using Non-Invasive Magnetoencephalography. Electronics 2022, 11, 2473. [Google Scholar] [CrossRef]

- Aghajani, H.; Omurtag, A. Assessment of Mental Workload by EEG+fNIRS. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2016, 2016, 3773–3776. [Google Scholar] [CrossRef]

- Warbrick, T. Simultaneous EEG-fMRI: What Have We Learned and What Does the Future Hold? Sensors 2022, 22, 2262. [Google Scholar] [CrossRef] [PubMed]

- Padmanabhan, P.; Nedumaran, A.M.; Mishra, S.; Pandarinathan, G.; Archunan, G.; Gulyás, B. The Advents of Hybrid Imaging Modalities: A New Era in Neuroimaging Applications. Adv. Biosyst. 2017, 1, 1700019. [Google Scholar] [CrossRef] [PubMed]

- Freudenburg, Z.V.; Branco, M.P.; Leinders, S.; van der Vijgh, B.H.; Pels, E.G.M.; Denison, T.; van den Berg, L.H.; Miller, K.J.; Aarnoutse, E.J.; Ramsey, N.F.; et al. Sensorimotor ECoG Signal Features for BCI Control: A Comparison Between People With Locked-In Syndrome and Able-Bodied Controls. Front. Neurosci. 2019, 13, 457334. [Google Scholar] [CrossRef]

- Zhao, Z.P.; Nie, C.; Jiang, C.T.; Cao, S.H.; Tian, K.X.; Yu, S.; Gu, J.W. Modulating Brain Activity with Invasive Brain–Computer Interface: A Narrative Review. Brain Sci. 2023, 13, 134. [Google Scholar] [CrossRef]

- Alahi, M.E.E.; Liu, Y.; Xu, Z.; Wang, H.; Wu, T.; Mukhopadhyay, S.C. Recent Advancement of Electrocorticography (ECoG) Electrodes for Chronic Neural Recording/Stimulation. Mater. Today Commun. 2021, 29, 102853. [Google Scholar] [CrossRef]

- Merk, T.; Peterson, V.; Köhler, R.; Haufe, S.; Richardson, R.M.; Neumann, W.J. Machine Learning Based Brain Signal Decoding for Intelligent Adaptive Deep Brain Stimulation. Exp. Neurol. 2022, 351, 113993. [Google Scholar] [CrossRef]

- Rudroff, T. Decoding Thoughts, Encoding Ethics: A Narrative Review of the BCI-AI Revolution. Brain Res. 2025, 1850, 149423. [Google Scholar] [CrossRef]

- Saha, S.; Mamun, K.A.; Ahmed, K.; Mostafa, R.; Naik, G.R.; Darvishi, S.; Khandoker, A.H.; Baumert, M. Progress in Brain Computer Interface: Challenges and Opportunities. Front. Syst. Neurosci. 2021, 15, 578875. [Google Scholar] [CrossRef]

- Zhang, H.; Jiao, L.; Yang, S.; Li, H.; Jiang, X.; Feng, J.; Zou, S.; Xu, Q.; Gu, J.; Wang, X.; et al. Brain-Computer Interfaces: The Innovative Key to Unlocking Neurological Conditions. Int. J. Surg. 2024, 110, 5745. [Google Scholar] [CrossRef]

- Merk, T.; Peterson, V.; Lipski, W.J.; Blankertz, B.; Turner, R.S.; Li, N.; Horn, A.; Richardson, R.M.; Neumann, W.J. Electrocorticography Is Superior to Subthalamic Local Field Potentials for Movement Decoding in Parkinson’s Disease. eLife 2022, 11, e75126. [Google Scholar] [CrossRef]

- Cao, T.D.; Truong-Huu, T.; Tran, H.; Tran, K. A Federated Deep Learning Framework for Privacy Preservation and Communication Efficiency. J. Syst. Archit. 2022, 124, 102413. [Google Scholar] [CrossRef]

- Lebedev, M.A.; Nicolelis, M.A.L. Brain-Machine Interfaces: From Basic Science to Neuroprostheses and Neurorehabilitation. Physiol. Rev. 2017, 97, 767–837. [Google Scholar] [CrossRef]

- Fick, T.; Van Doormaal, J.A.M.; Tosic, L.; Van Zoest, R.J.; Meulstee, J.W.; Hoving, E.W.; Van Doormaal, T.P.C. Fully Automatic Brain Tumor Segmentation for 3D Evaluation in Augmented Reality. Neurosurg. Focus 2021, 51, E14. [Google Scholar] [CrossRef]

- Kazemzadeh, K.; Akhlaghdoust, M.; Zali, A. Advances in Artificial Intelligence, Robotics, Augmented and Virtual Reality in Neurosurgery. Front. Surg. 2023, 10, 1241923. [Google Scholar] [CrossRef]

- Zhou, T.; Yu, T.; Li, Z.; Zhou, X.; Wen, J.; Li, X. Functional Mapping of Language-Related Areas from Natural, Narrative Speech during Awake Craniotomy Surgery. Neuroimage 2021, 245, 118720. [Google Scholar] [CrossRef]

- Sarubbo, S.; Annicchiarico, L.; Corsini, F.; Zigiotto, L.; Herbet, G.; Moritz-Gasser, S.; Dalpiaz, C.; Vitali, L.; Tate, M.; De Benedictis, A.; et al. Planning Brain Tumor Resection Using a Probabilistic Atlas of Cortical and Subcortical Structures Critical for Functional Processing: A Proof of Concept. Oper. Neurosurg. 2021, 20, E175–E183. [Google Scholar] [CrossRef]

- Lachance, B.; Wang, Z.; Badjatia, N.; Jia, X. Somatosensory Evoked Potentials (SSEP) and Neuroprognostication after Cardiac Arrest. Neurocrit. Care 2020, 32, 847. [Google Scholar] [CrossRef]

- Nikolov, P.; Heil, V.; Hartmann, C.J.; Ivanov, N.; Slotty, P.J.; Vesper, J.; Schnitzler, A.; Groiss, S.J. Motor Evoked Potentials Improve Targeting in Deep Brain Stimulation Surgery. Neuromodulation 2022, 25, 888–894. [Google Scholar] [CrossRef]

- Esfandiari, H.; Troxler, P.; Hodel, S.; Suter, D.; Farshad, M.; Cavalcanti, N.; Wetzel, O.; Mania, S.; Cornaz, F.; Selman, F.; et al. Introducing a Brain-Computer Interface to Facilitate Intraoperative Medical Imaging Control – A Feasibility Study. BMC Musculoskelet. Disord. 2022, 23, 701. [Google Scholar] [CrossRef]

- Mridha, M.F.; Das, S.C.; Kabir, M.M.; Lima, A.A.; Islam, M.R.; Watanobe, Y. Brain-Computer Interface: Advancement and Challenges. Sensors 2021, 21, 5746. [Google Scholar] [CrossRef]

- Kim, M.S.; Park, H.; Kwon, I.; An, K.O.; Kim, H.; Park, G.; Hyung, W.; Im, C.H.; Shin, J.H. Efficacy of Brain-Computer Interface Training with Motor Imagery-Contingent Feedback in Improving Upper Limb Function and Neuroplasticity among Persons with Chronic Stroke: A Double-Blinded, Parallel-Group, Randomized Controlled Trial. J. Neuroeng. Rehabil. 2025, 22, 1. [Google Scholar] [CrossRef]

- Pignolo, L.; Servidio, R.; Basta, G.; Carozzo, S.; Tonin, P.; Calabrò, R.S.; Cerasa, A. The Route of Motor Recovery in Stroke Patients Driven by Exoskeleton-Robot-Assisted Therapy: A Path-Analysis. Med. Sci. 2021, 9, 64. [Google Scholar] [CrossRef]

- Yang, S.; Li, R.; Li, H.; Xu, K.; Shi, Y.; Wang, Q.; Yang, T.; Sun, X. Exploring the Use of Brain-Computer Interfaces in Stroke Neurorehabilitation. Biomed. Res. Int. 2021, 2021, 9967348. [Google Scholar] [CrossRef]

- Jin, W.; Zhu, X.X.; Qian, L.; Wu, C.; Yang, F.; Zhan, D.; Kang, Z.; Luo, K.; Meng, D.; Xu, G. Electroencephalogram-Based Adaptive Closed-Loop Brain-Computer Interface in Neurorehabilitation: A Review. Front. Comput. Neurosci. 2024, 18, 1431815. [Google Scholar] [CrossRef]

- Mane, R.; Wu, Z.; Wang, D. Poststroke Motor, Cognitive and Speech Rehabilitation with Brain-Computer Interface: A Perspective Review. Stroke Vasc. Neurol. 2022, 7, 541–549. [Google Scholar] [CrossRef]

- Zhang, X.; Ma, Z.; Zheng, H.; Li, T.; Chen, K.; Wang, X.; Liu, C.; Xu, L.; Wu, X.; Lin, D.; et al. The Combination of Brain-Computer Interfaces and Artificial Intelligence: Applications and Challenges. Ann. Transl. Med. 2020, 8, 712. [Google Scholar] [CrossRef]

- Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans. Med. Imaging 2015, 34, 1993–2024. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2015, 9351, 234–241. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021. [Google Scholar] [CrossRef]

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2022; Volume 12962, pp. 272–284. [Google Scholar] [CrossRef]

- Paulmurugan, K.; Vijayaragavan, V.; Ghosh, S.; Padmanabhan, P.; Gulyás, B. Brain-Computer Interfacing Using Functional Near-Infrared Spectroscopy (fNIRS). Biosensors 2021, 11, 389. [Google Scholar] [CrossRef]

- Peng, C.J.; Chen, Y.C.; Chen, C.C.; Chen, S.J.; Cagneau, B.; Chassagne, L. An EEG-Based Attentiveness Recognition System Using Hilbert-Huang Transform and Support Vector Machine. J. Med. Biol. Eng. 2020, 40, 230–238. [Google Scholar] [CrossRef]

- Rakhmatulin, I.; Dao, M.S.; Nassibi, A.; Mandic, D. Exploring Convolutional Neural Network Architectures for EEG Feature Extraction. Sensors 2024, 24, 877. [Google Scholar] [CrossRef]

- Peksa, J.; Mamchur, D. State-of-the-Art on Brain-Computer Interface Technology. Sensors 2023, 23, 6001. [Google Scholar] [CrossRef]

- Mungoli, N. Scalable, Distributed AI Frameworks: Leveraging Cloud Computing for Enhanced Deep Learning Performance and Efficiency. arXiv 2023, arXiv:2304.13738. [Google Scholar]

- Subasi, A. Practical Guide for Biomedical Signals Analysis Using Machine Learning Techniques: A MATLAB Based Approach; Academic Press: Cambridge, MA, USA, 2019; pp. 1–443. [Google Scholar] [CrossRef]

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. J. Neurosci. Methods 2004, 134, 9–21. [Google Scholar] [CrossRef] [PubMed]

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep Learning with Convolutional Neural Networks for EEG Decoding and Visualization. Hum. Brain Mapp. 2017, 38, 5391–5420. [Google Scholar] [CrossRef]

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep Learning for Sensor-Based Activity Recognition: A Survey. Pattern Recognit. Lett. 2019, 119, 3–11. [Google Scholar] [CrossRef]

- Hatamizadeh, A.; Tang, Y.; Yang, D.; Myronenko, A.; Xu, D. Swin UNETR++: Towards More Efficient and Accurate Medical Image Segmentation. In MICCAI BrainLes 2023, Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14386, pp. 113–124. [Google Scholar] [CrossRef]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar]

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9726–9735. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19. [Google Scholar] [CrossRef]

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y.; Gan, C.; Li, K.; Ho, J. Secure Federated Transfer Learning. arXiv 2018, arXiv:1812.03387. [Google Scholar] [CrossRef]

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017—Conference Track Proceedings, Toulon, France, 24–26 April 2017. [Google Scholar]

- Chen, Z.; Jing, L.; Li, Y.; Li, B. Bridging the Domain Gap: Self-Supervised 3D Scene Understanding with Foundation Models. Adv. Neural Inf. Process. Syst. 2023, 36, 79226–79239. [Google Scholar]

- Edelman, B.J.; Zhang, S.; Schalk, G.; Brunner, P.; Muller-Putz, G.; Guan, C.; He, B. Non-Invasive Brain-Computer Interfaces: State of the Art and Trends. IEEE Rev. Biomed. Eng. 2025, 18, 26–49. [Google Scholar] [CrossRef] [PubMed]

- Simon, C.; Bolton, D.A.E.; Kennedy, N.C.; Soekadar, S.R.; Ruddy, K.L. Challenges and Opportunities for the Future of Brain-Computer Interface in Neurorehabilitation. Front. Neurosci. 2021, 15, 699428. [Google Scholar] [CrossRef]

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief Review of Image Denoising Techniques. Vis. Comput. Ind. Biomed. Art. 2019, 2, 7. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Wang, F.; Li, T.; Zhao, L.; Gong, A.; Nan, W.; Ding, P.; Fu, Y. Considerations and Discussions on the Clear Definition and Definite Scope of Brain-Computer Interfaces. Front. Neurosci. 2024, 18, 1449208. [Google Scholar] [CrossRef] [PubMed]

- Peng, W.; Wang, Y.; Liu, Z.; Zhong, L.; Wen, X.; Wang, P.; Gong, X.; Liu, H. The Application of Brain–Computer Interface in Upper Limb Dysfunction after Stroke: A Systematic Review and Meta-Analysis of Randomized Controlled Trials. Front. Hum. Neurosci. 2024, 18, 1438095. [Google Scholar] [CrossRef]

- Rajpura, P.; Cecotti, H.; Meena, Y.K. Explainable Artificial Intelligence Approaches for Brain-Computer Interfaces: A Review and Design Space. J. Neural Eng. 2023, 21, 4. [Google Scholar] [CrossRef]

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A Comprehensive Review of EEG-Based Brain–Computer Interface Paradigms. J. Neural Eng. 2019, 16, 011001. [Google Scholar] [CrossRef]

- Salles, A.; Farisco, M. Neuroethics and AI Ethics: A Proposal for Collaboration. BMC Neurosci. 2024, 25, 41. [Google Scholar] [CrossRef]

- Chaudhary, U.; Birbaumer, N.; Ramos-Murguialday, A. Brain-Computer Interfaces for Communication and Rehabilitation. Nat. Rev. Neurol. 2016, 12, 513–525. [Google Scholar] [CrossRef]

- Sun, X.-Y.; Ye, B. The Functional Differentiation of Brain-Computer Interfaces (BCIs) and Its Ethical Implications. Humanit. Soc. Sci. Commun. 2023, 10, 878. [Google Scholar] [CrossRef]

- Keskinbora, K.H.; Keskinbora, K. Ethical Considerations on Novel Neuronal Interfaces. Neurol. Sci. 2018, 39, 607–613. [Google Scholar] [CrossRef]

- Vlek, R.J.; Steines, D.; Szibbo, D.; Kübler, A.; Schneider, M.J.; Haselager, P.; Nijboer, F. Ethical Issues in Brain-Computer Interface Research, Development, and Dissemination. J. Neurol. Phys. Ther. 2012, 36, 94–99. [Google Scholar] [CrossRef] [PubMed]

- McIntyre, C.C.; Hahn, P.J. Network Perspectives on the Mechanisms of Deep Brain Stimulation. Neurobiol. Dis. 2010, 38, 329–337. [Google Scholar] [CrossRef]

- Borton, D.A.; Yin, M.; Aceros, J.; Nurmikko, A. An Implantable Wireless Neural Interface for Recording Cortical Circuit Dynamics in Moving Primates. J. Neural Eng. 2013, 10, 026010. [Google Scholar] [CrossRef] [PubMed]

- Fernandez-Leon, J.A.; Parajuli, A.; Franklin, R.; Sorenson, M.; Felleman, D.J.; Hansen, B.J.; Hu, M.; Dragoi, V. A Wireless Transmission Neural Interface System for Unconstrained Non-Human Primates. J. Neural Eng. 2015, 12, 056005. [Google Scholar] [CrossRef]

- Yin, M.; Borton, D.A.; Aceros, J.; Patterson, W.R.; Nurmikko, A.V. A 100-Channel Hermetically Sealed Implantable Device for Chronic Wireless Neurosensing Applications. IEEE Trans. Biomed. Circuits Syst. 2013, 7, 115–128. [Google Scholar] [CrossRef]

- Gao, Y.; Jiang, Y.; Peng, Y.; Yuan, F.; Zhang, X.; Wang, J. Medical Image Segmentation: A Comprehensive Review of Deep Learning-Based Methods. Tomography 2025, 11, 52. [Google Scholar] [CrossRef]

- Ienca, M.; Andorno, R. Towards New Human Rights in the Age of Neuroscience and Neurotechnology. Life Sci. Soc. Policy 2017, 13, 5. [Google Scholar] [CrossRef]

- Yao, D.; Koivu, A.; Simonyan, K. Applications of Artificial Intelligence in Neurological Voice Disorders. World J. Otorhinolaryngol. Head Neck Surg. 2025, 9, 100017. [Google Scholar] [CrossRef]

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training Data-Efficient Image Transformers & Distillation Through Attention. In Proceedings of the 38th International Conference on Machine Learning (ICML), Online, 18–24 July 2021; Volume 139, pp. 10347–10357. [Google Scholar]

- Wodlinger, B.; Downey, J.E.; Tyler-Kabara, E.C.; Schwartz, A.B.; Boninger, M.L.; Collinger, J.L. Ten-Dimensional Anthropomorphic Arm Control in a Human Brain–Machine Interface: Difficulties, Solutions, and Limitations. J. Neural Eng. 2015, 12, 016011. [Google Scholar] [CrossRef] [PubMed]

- Andrews, M.; Di Ieva, A. Artificial intelligence for brain neuroanatomical segmentation in MRI: A literature review. J. Clin. Neurosci. 2025, 134, 111073. [Google Scholar] [CrossRef]

- Lee, M.; Kim, J.H.; Choi, W.; Lee, K.H. AI-assisted Segmentation Tool for Brain Tumor MR Image Analysis. J. Imaging Inform. Med. 2024, 38, 74–83. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.; Monroe, J.I.; Lo, S.; Yao, M.; Harari, P.M.; Machtay, M.; Sohn, J.W. Quantitative evaluation of image segmentation incorporating medical consideration functions. Med. Phys. 2015, 42, 3013–3023. [Google Scholar] [CrossRef] [PubMed]

- Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248. [Google Scholar] [CrossRef]

- Yousef, R.; Fahmy, H.; Abdelsamea, M.M.; Hamed, M.; Kim, J. U-Net-Based Models for Optimal MR Brain Image Segmentation. Diagnostics 2023, 13, 1624. [Google Scholar] [CrossRef]

- Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. TransUNet: Rethinking the U-Net Architecture Design for Medical Image Segmentation Through the Lens of Transformers. Med. Image Anal. 2024, 97, 103280. [Google Scholar] [CrossRef]

- Pang, H.; Guo, W.; Ye, C. Multi-Modal Brain MRI Synthesis Based on SwinUNETR. arXiv 2025, arXiv:2506.02467. [Google Scholar] [CrossRef]

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211. [Google Scholar] [CrossRef]

- Zhang, W.; Wu, Y.; Yang, B.; Hu, S.; Wu, L.; Dhelim, S. Overview of Multi-Modal Brain Tumor MR Image Segmentation. Healthcare 2021, 9, 1051. [Google Scholar] [CrossRef]

- Yousef, R.; Khan, S.; Gupta, G.; Siddiqui, T.; Albahlal, B.M.; Alajlan, S.A.; Haq, M.A.; Ali, A. Bridged-U-Net-ASPP-EVO and Deep Learning Optimization for Brain Tumor Segmentation. Diagnostics 2023, 13, 2633. [Google Scholar] [CrossRef] [PubMed]

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the MICCAI 2016, Athens, Greece, 17–21 October 2016; pp. 424–432. [Google Scholar] [CrossRef]

- Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. 3D TransUNet: Advancing Medical Image Segmentation through Vision Transformers. arXiv 2023, arXiv:2310.07781. [Google Scholar] [CrossRef]

- Tang, D.; Chen, J.; Ren, L.; Wang, X.; Li, D.; Zhang, H. Reviewing CAM-Based Deep Explainable Methods in Healthcare. Appl. Sci. 2024, 14, 4124. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 24–25. [Google Scholar] [CrossRef]

- Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention Gated Networks: Learning to Leverage Salient Regions in Medical Images. Med. Image Anal. 2019, 53, 197–207. [Google Scholar] [CrossRef]

- Larrazabal, A.J.; Nieto, N.; Peterson, V.; Milone, D.H.; Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl. Acad. Sci. USA 2020, 117, 12592–12594. [Google Scholar] [CrossRef]

- Samek, W.; Wiegand, T.; Müller, K.R. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv 2017, arXiv:1708.08296. [Google Scholar] [CrossRef]

- European Commission. Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act). COM/2021/206 final. Brussels. 21 April 2021. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206 (accessed on 10 March 2025).

- Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

| Modality | Spatial Resolution | Temporal Resolution | Invasiveness | Clinical Utility |
|---|---|---|---|---|
| EEG | Low (~10–30 mm) | High (~1 ms) | Non-invasive | Real-time monitoring, BCI |
| fNIRS | Moderate (~10 mm) | Moderate (~100 ms) | Non-invasive | Hemodynamic response analysis |
| fMRI | High (~1–2 mm³) | Low (~2–3 s) | Non-invasive | Functional and anatomical mapping |
| CT | Very high (~0.5–1 mm) | None (static) | Non-invasive | Structural imaging, intraoperative guidance |
| PET | Low (~4–6 mm) | Very low (~minutes) | Semi-invasive | Metabolic imaging, tumor detection |

| Paradigm/Modality | Signal Source | Type | Temporal Resolution | Spatial Resolution | Invasiveness | Training Required | Clinical Applications | Notable Limitations |
|---|---|---|---|---|---|---|---|---|
| Motor Imagery (MI) | EEG | Endogenous | ~300 ms–1 s | Low (cm-level) | Non-invasive | High (weeks) | Neuroprosthetics, robotic control | Long training, high variability |
| P300 ERP | EEG | Exogenous (event-based) | ~300 ms | Low-moderate | Non-invasive | Low | Communication interfaces (e.g., spellers) | Slower ITR, stimulus dependency |
| SSVEP | EEG | Exogenous (frequency-coded) | ~100–200 ms | Low | Non-invasive | Very low | High-speed selection (spellers, AR) | Requires sustained gaze, limited in visually impaired |
| fNIRS | Hemodynamic | Exogenous (oxy-Hb response) | ~2–5 s | Moderate (1–3 cm) | Non-invasive | Low-moderate | Cognitive load detection, BCI-fNIRS hybrids | Poor temporal resolution |
| ECoG | Cortical surface | Endogenous | ~50–100 ms | High (mm-level) | Minimally invasive | Moderate | Seizure mapping, high-resolution BCIs | Surgical access required |
| LFP | Deep brain regions | Endogenous | ~10–50 ms | Very high (sub-mm) | Invasive | Moderate | Parkinson’s, closed-loop DBS systems | Deep implantation risk |
| EEG-fNIRS Hybrid | EEG + fNIRS | Multimodal | ~200 ms–5 s | Improved over single-modality | Non-invasive | Moderate | Enhanced classification, error detection | Signal fusion complexity |
| EEG-fMRI Hybrid | EEG + fMRI | Multimodal | EEG: ~ms, fMRI: ~2 s | Very high (fMRI) | Non-invasive | High | Cognitive neuroscience, task mapping | Infrastructure, synchronization issues |
| Invasive Hybrid (e.g., ECoG + LFP) | Cortical + subcortical | Multimodal | ~10–100 ms | Ultra-high | Highly invasive | Moderate | Precision neuroprosthetics | Ethical and surgical constraints |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ghosh, S.; Sindhujaa, P.; Kesavan, D.K.; Gulyás, B.; Máthé, D. Brain-Computer Interfaces and AI Segmentation in Neurosurgery: A Systematic Review of Integrated Precision Approaches. Surgeries 2025, 6, 50. https://doi.org/10.3390/surgeries6030050
