Abstract

This research presents a study on enhancing the localization and orientation accuracy of indoor Autonomous Guided Vehicles (AGVs) operating under a centralized, camera-based control system. We investigate and compare the performance of two Extended Kalman Filter (EKF) configurations: a standard EKF and a novel Blended EKF. The research methodology comprises four primary stages: (1) Sensor bias correction for the camera (CAM), Dead Reckoning, and Inertial Measurement Unit (IMU) to improve raw data quality; (2) Calculation of sensor weights using the Inverse-Variance Weighting principle, which assigns higher confidence to sensors with lower variance; (3) Multi-sensor data fusion to generate a stable state estimation that closely approximates the ground truth (GT); and (4) A comparative performance evaluation between the standard EKF, which processes sensor updates independently, and the Blended EKF, which fuses CAM and DR (Dead Reckoning) measurements prior to the filter’s update step. Experimental results demonstrate that the implementation of bias correction and inverse-variance weighting significantly reduces the Root Mean Square Error (RMSE) across all sensors. Furthermore, the Blended EKF not only achieved a lower RMSE in certain scenarios but also produced smooth trajectories similar to or less than the standard EKF in some weightings. These findings indicate the significant potential of the proposed approach in developing more accurate and robust navigation systems for AGVs in complex indoor environments.

1. Introduction

A centralized, vision-based control architecture for Autonomous Guided Vehicles (AGVs) offers a cost-effective alternative to systems reliant on expensive onboard sensors like LIDAR [1]. In industrial environments, positioning accuracy is critical [2]. This approach, often termed “infrastructure-based control”, transfers complex navigation and fleet management tasks from individual vehicles to a powerful central server, enabling sophisticated coordination while reducing per-vehicle hardware costs [3]. The system’s core components include a network of CCTV cameras, a central processing server, a wireless communication network, and the vehicles themselves. The cameras provide a global “God’s-eye view” of the operational area, tracking vehicles and obstacles in real-time. This centralized vision approach leverages advances in computer vision and deep learning techniques for robust localization, as demonstrated by recent work combining imaging sensors with deep convolutional neural networks (DCNNs) for AGV pose estimation [1]. The central server processes this visual data through fleet management software that handles localization, path planning, and traffic management using AI [4] and computer vision techniques [5]. The server then transmits simple motion commands, such as “move forward 5 m”, to the AGVs via wireless communication networks. Recent infrastructure-based vision studies demonstrate that overhead or roadside cameras can detect and track mobile robots reliably in real time, underscoring the practicality of centralized, vision-first approaches [6,7]. Yet long-term localization remains challenging due to illumination changes, occlusions, and drift. A systematic review highlights the need for robust filtering, cross-sensor redundancy, and adaptive models to sustain performance over extended periods [8]. The vehicles execute these commands precisely by utilizing onboard sensor fusion techniques, integrating data from multiple sensors including encoders for distance measurement and Inertial Measurement Units (IMUs) for orientation tracking [9]. Recent advances in sensor fusion have shown that passive wheel odometry can outperform traditional drive-wheel odometry in many scenarios, while IMU/AHRS integration significantly improves accuracy during angular motion [5]. Advanced filtering techniques, including hybrid Kalman-particle filters and trainable Extended Kalman Filters with adaptive noise models, have demonstrated improved localization accuracy and robustness in indoor AGV environments [3,9]. Ultrasonic sensors act as a final safety measure, preventing collisions with unforeseen obstacles that may be in the camera’s blind spots. This hybrid model significantly reduces the cost per vehicle by replacing expensive onboard LIDAR systems with lower-cost sensor combinations and allows for sophisticated, system-wide traffic optimization through centralized path planning algorithms [10]. Modern optimization approaches, such as hybrid Grey Wolf Optimization combined with Kalman corrections, have shown improved path predictability and collision avoidance capabilities for dense warehouse AGV operations [10,11]. However, this centralized approach relies heavily on the central infrastructure, making it vulnerable to single-point failures and potentially less flexible in dynamic environments compared to fully autonomous, SLAM-based robots that maintain complete onboard sensing and processing capabilities. Although the Extended Kalman Filter (EKF) has been widely used for AGV positioning [12], its performance may degrade when raw sensor data have different characteristics, such as camera (CAM) data with high noise and low update rate, compared to Dead Reckoning data with high update rate but prone to accumulated errors (drift). The normal EKF implementations often treat each sensor measurement update independently, which can lead to inconsistencies and non-smooth trajectory estimates, particularly when one sensor is prone to high noise or sudden disturbances. This highlights the research gap in developing an efficient data fusion strategy that can blend data from different sensors before feeding it to the filter update stage. Therefore, the contribution of this paper is to propose and evaluate a novel Blended EKF, which pre-fuses CAM and DR data using a weighted blending strategy, in which the blending parameters are optimized by Optuna before being supplied to the EKF. It is hypothesized that this approach can further reduce localization error compared to the Normal EKF in indoor environments.

2. Materials and Methods

This research presents an integrated approach for indoor localization of automated guided vehicles (AGVs) using multiple low-cost sensors, including cameras (CAMs), encoders, inertial measurement units (IMUs), and approximate positioning (Dead Reckoning—DR) [13].

2.1. Preparation and Equipment Used in the Experiment

2.1.1. Preparation Used in the Experiment

The BALTY AGV robot used in the experiment were equipped with specified sensors. Data from the encoder and IMU were recorded at a sampling rate of 10 Hz (every 0.1 s), while data from the camera (CAM) for absolute positioning were recorded at a rate of 5 Hz (every 0.2 s) [14]. To ensure the accuracy and consistency of the data used in the data fusion process, two data sampling scenarios were defined: (1) using the original sampling rate, with the IMU and Wheel Encoder set at 10 Hz and the CAM at 5 Hz, and (2) adjusting the sampling rate of all sensors to 5 Hz to create temporal consistency and facilitate efficient joint processing. Since the data from each sensor is collected asynchronously, a temporal alignment using an event-based synchronization method was performed in the post-processing step, with the “AGV starting point” set as the reference marker for synchronizing the entire dataset. For data communication between sensors, the MQTT protocol is used, which is set to a basic Quality of Service (QoS) level, prioritizing data transmission speed. However, this approach may result in some data loss in cases of unstable Wi-Fi network signals. The Ground Truth (GT) data is obtained by recording the AGV movement video using a top-view camera. Then, the image is processed frame by frame to extract the AGV position using Camera Calibration and Coordinate Transformation [15]. In this study, the Ground Truth (GT) data were obtained using a dedicated reference camera mounted above the experimental area. This camera recorded the complete AGV motion throughout each trial, providing a high-accuracy baseline for evaluating the performance of CAM, DR, and EKF estimates. The reference camera video was processed frame-by-frame to extract the AGV position. Each frame was timestamped and associated with the detected image features of the AGV, allowing consistent temporal alignment with the sensor data streams. A camera calibration procedure was performed using a checkerboard pattern placed on the floor. The calibration provided intrinsic and extrinsic camera parameters, from which a planar homography matrix was derived to convert pixel coordinates into world coordinates . This ensured that the GT trajectory was mapped accurately to the real experimental environment. To ensure temporal consistency, the GT data were synchronized with the IMU, encoder, and CAM measurements using event-based timestamp alignment. The initial movement of the AGV (“start frame”) was used as a reference marker. All sensor streams were then interpolated to the GT timestamps, enabling comparison across DR, CAM, and EKF estimates. Reference positions on the experimental field are established as ground truth coordinates, which serve as a reference data set to assess the accuracy and errors of the sensors, as well as to perform error correction and evaluate the overall system performance [2].

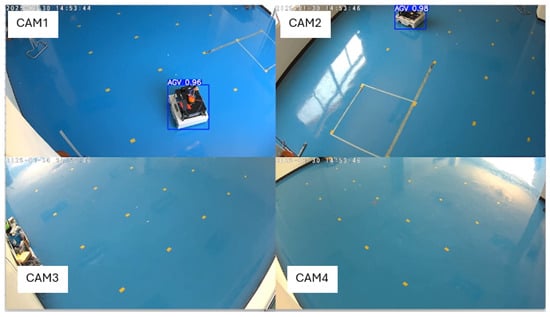

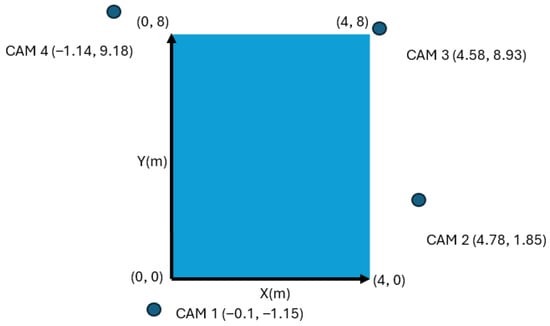

To support camera-based localization, four CCTV cameras (CAM1–CAM4) were installed around the m experimental area to provide full visual coverage of the AGV motion. Each camera observes a specific region of the testbed, and their combined views enable continuous 2D position tracking of the AGV. It is important to note that these four cameras are used as measurement sensors (CAM data) and are not used as ground-truth (GT) sources (Figure 1).

Figure 1.

Overview of the four CCTV cameras (CAM1–CAM4) positioned around the m indoor test area. These cameras serve as the primary vision sensors for CAM-based localization and provide 2D positional measurements of the AGV. Note that these cameras are not used as ground-truth (GT); GT is recorded separately using a dedicated reference camera.

All sensor data used in this study were collected offline. The AGV motion was recorded first, and EKF-based fusion was subsequently applied offline. Because the EKF operates at 10 Hz while the camera provides measurements at 5 Hz, only every second EKF cycle contains a camera observation. When no camera data is available at time , the EKF performs a prediction-only update using the motion model and Dead Reckoning (DR). A full measurement update is executed only when a new camera arrives. To ensure temporal consistency, all MQTT messages include a unified timestamp. Before the EKF update, a timestamp-alignment procedure selects the camera closest to the EKF cycle. If a camera arrives between two EKF cycles, linear interpolation is used to estimate the camera position at the exact EKF timestamp. This prevents time mismatch and reduces trajectory discontinuity.

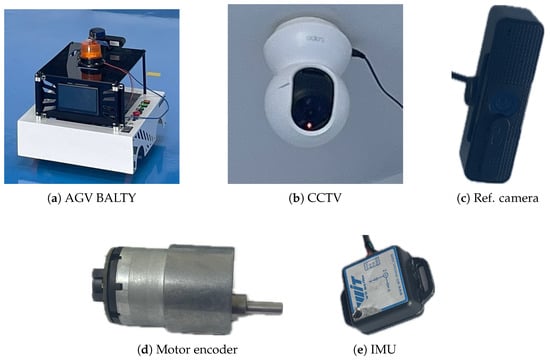

2.1.2. Equipment Used in the Experiment

In this experiment, various sensors were installed to determine the grid position at (Table 1). An encoder coupled to a DC motor (JGB37-520) provides an AB-phase quadrature signal of 90 pulses per revolution, which is used to calculate the distance and direction of rotation. The WitMotion WT901C Inertial Measurement Unit (IMU) is a 9-DOF sensor that can measure linear acceleration (±16 g) and rotation rate (up to ±2000°/s) and is set to output data at a frequency of 10 Hz. For image sensing, a TP-Link Tapo C200 camera was installed in a 4 × 8 m the experimental area (Figure 2), which supports Full HD (1080p) recording at 30 frames per second. The data from these three sensors is used to integrate the data, which helps the system determine the position of the AGV.

Table 1.

Summary of sensor specifications used in the AGV localization system.

Figure 2.

Illustration of the experimental area showing the four CCTV camera placements around the m testbed. Each camera covers a specific quadrant to provide full-area visual tracking of the AGV.

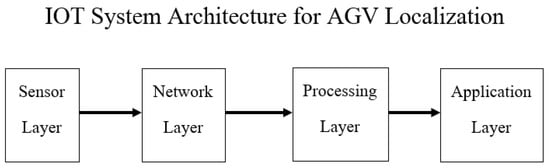

2.1.3. IoT System Architecture Concepts

The conceptual framework for the IoT system architecture for AGV localization (Figure 3) is designed to collect, synchronize, and integrate data between multiple sensors and a central processing unit. This architecture consists of four main layers:

Figure 3.

IoT system architecture for AGV localization. The framework consists of three layers: the perception layer (sensors such as UWB, IMU, and encoders), the network layer (wireless data transmission via Wi-Fi or MQTT), and the application layer (central server performing sensor fusion and control via the EKF).

- Sensor (Sensor) Layer: Consists of the encoder + IMU and a CCTV camera that records data from the experimental area.

- Network (Communication) Layer: Uses Wi-Fi wireless communication with the MQTT protocol for lightweight messaging to exchange sensor data with low latency.

- Processing Layer (Central Server): The central server collects sensor data streams, performs synchronization, integrates the data, and stores the records for analysis.

- Application Layer: Provides visualization and location identification for the experimental area.

2.2. Localization Methods

Multi-sensor fusion works to determine the position and orientation of the AGV by combining data from internal sensors (e.g., encoder, IMU) with external sensors (e.g., camera) using EKF [16] with encoder, IMU, and camera.

2.2.1. Dead Reckoning Localization

Dead Reckoning estimation, which estimates the position of an AGV based on its previous state and movement data, is divided into two parts: measuring the distance from the wheels (encoder)and the direction from the IMU. This method is widely used in positioning research [17,18,19]. The state vector for Dead Reckoning is defined as

which represents the Cartesian coordinates and heading angle of the AGV. The AGV’s motion can be estimated using a kinematic motion model using the linear velocity v and angular velocity data from the sensors, as shown in the equation:

When integrating this equation with respect to time , the state update is obtained as follows:

where k is the current time index and is the previous time index. The data and are obtained by fusing data from:

- Distance measurement from encoder: An encoder mounted to the drive motor provides wheel rotation data that is converted to linear velocity and angular velocity using the vehicle kinematics model. However, this method is limited by accumulated errors caused by wheel slip, calibration inaccuracies, and surface irregularities [18,19].

- Direction from IMU: A 9-DOF IMU provides angular velocity and linear acceleration . The gyroscope output is crucial for direction calculations, as accelerometer data is highly sensitive to noise and bias errors that increase with time [20].

A limitation of the DR method is the continuous error introduced by the time integral, which leads to an infinitely increasing error [16,21]. Therefore, periodic corrections from external sensors are required.

2.2.2. Camera Localization

To address the accumulated drift of Dead Reckoning (DR) that cannot be corrected with internal sensor data, external sensors, such as cameras, LiDAR, or CCTV, are used to measure the position based on world coordinates, thus correcting the position estimate [17]. The process is as follows:

- Feature Detection: The system detects the features of the AGV to be located using standard computer vision algorithms.

- Coordinate Transformation: The AGV’s position in the image plane is converted to its real-world coordinates (world frame) via perspective transformation or a pre-calibrated homography matrix.

The measurement values obtained from this camera, , are crucial for making the system observable, meaning that important system states (such as position and velocity) can be estimated [20]. A computer vision pipeline was implemented to extract the AGV position from the overhead CCTV system. The AGV is first detected in each frame using feature-based bounding-box extraction. The centroid of the bounding box is computed in pixel coordinates, and a calibrated homography mapping is applied to convert pixel coordinates into world coordinates. Therefore, the camera system provides only the 2-D positional measurements and does not supply heading or velocity information [22].

The EKF state vector is defined as

where the heading is observable only from the Dead-Reckoning (DR) and IMU sensors. The camera (CAM) provides only 2D positional measurements and cannot observe the heading. Consequently, the CAM observation matrix affects only the positional states and does not update . Measurement model for the camera: The relationship between the camera observation and the EKF state is expressed as

and is the camera measurement noise. This explicitly defines how the camera-derived measurement corresponds to the observable components of the state vector.

The measurement values, , are fed into a filter for interpolation and prediction of through a nonlinear measurement model, h, noise, as shown in the equation:

These measurement values are then used in a Kalman Filter to calculate the innovation or error of the measurement, :

The accuracy of coordinate transformation and feature detection is crucial because they affect the accuracy of , which is a key factor in reducing the cumulative error of the DR.

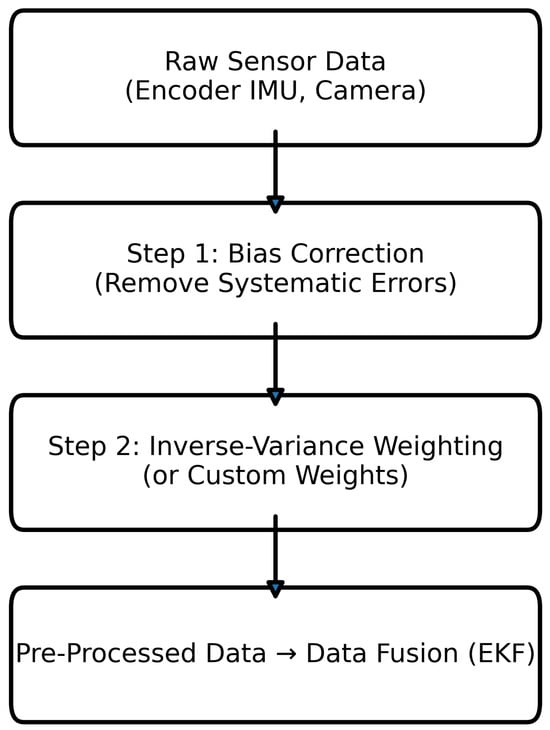

2.3. Pre-Processing Data Analysis

Before data from different sensors can be fed through the data fusion process, it is essential to perform a pre-processing step to reduce bias and increase the reliability of the input data. This step consists of two main steps designed to apply bias correction and appropriately weight each sensor based on its statistical variance. An overview of all these pre-processing steps is shown in Figure 4. The next subsections describe each step in detail.

2.3.1. Bias Correction

Before using the raw sensor data in the fusion process, the systematic bias of each sensor is assessed and corrected [23]. This is done by calculating the average deviation between the measured sensor value S and the reference value from the Ground Truth (GT) according to the equation:

where is the measured value from the sensor at time t and is the reference value from the true path GT at the same time. The corrected value is then given by:

This process reduces the Root Mean Squared Error (RMSE) of the raw data and improves the reliability of the data [24].

Figure 4.

Data pre-processing workflow for multi-sensor fusion. The process includes noise filtering, time synchronization, bias correction, and resampling to align data from Camera, IMU, and encoder sensors prior to fusion using the Extended Kalman Filter (EKF).

2.3.2. Inverse-Variance Weighting

Data from the error-corrected sensors are weighted statistically using the Inverse-Variance Weighting [25] method. This method assumes that sensors with low error variance are given higher weights during data fusion. The weight for each sensor i is calculated from the error variance as follows:

The weights are then normalized to Normalized Weights so that the sum of all weights is 1, defined as:

where M is the total number of sensors.

2.4. Extended Kalman Filter (EKF)

This research developed and compared the performance of two Extended Kalman Filter (EKF) models to estimate the state of an AGV, consisting of its position (X, Y axes) and heading. The state equations and measurement equations used in the EKF system can be written in the following general form: [3]:

where: is the AGV state vector (position and direction), is the state transition function, is the control data from the IMU and encoder, is the process noise, is the measured value from the sensor, is the measurement function, is the measurement noise.

2.4.1. Normal Series Fusion EKF (Normal EKF)

A typical EKF operates using an iterative process consisting of two main steps: Prediction Step and Update Step [26]

Prediction Step:

In this step, the EKF uses the AGV’s motion model, combined with data from the IMU and DR (encoder) sensors to predict the AGV’s next state (position and direction) based on the predicted state values. and the variance of the estimate (covariance) According to the equation:

where and is the predicted state and covariance, is the Jacobian matrix of the state transition function f, and is the process noise covariance of the system.

Update Step:

When new measurements from a sensor (e.g., a CAM) are received, the EKF uses them to update the predictions.

The Kalman Gain is calculated to determine the appropriate weight between the predicted and actual measured values. Then, the state and covariance are updated according to the equation:

where is the Jacobian matrix of the measurement function h, and is the measurement noise covariance.

A conventional EKF updates its values using measurements from each sensor separately in sequential updating.

2.4.2. Blended EKF

The purpose of Blended EKF is to correct for the characteristics of Camera-Based Reckoning (CAM) and Dead Reckoning (DR) measurements. While CAM provides drift-free absolute position data, it suffers from high noise, inconsistent update rates, and occasional occlusions. DR, on the other hand, provides relative motion estimates, but inevitably accumulates drift over time. The raw data causes EKF to be unsmooth, as it alternates between noisy camera updates and drifting DR estimates. To correct for this, CAM and DR measurements are pre-blended prior to the update step. This blending produces a more stable and consistent measurement input, which reduces oscillations and produces a smoother trajectory.

The Blended EKF method fuses measurements from the Camera (CAM) and Dead Reckoning (DR) before the update process. User-defined weights are used to control the proportion of influence of each measurement source. This can be written as the following equation:

where:

- is the weight assigned to the Camera (CAM) measurements;

- is the weight assigned to the Dead Reckoning (DR) measurements.

The blended measurement is designed as a statistical fusion of CAM and DR observations. The blending process is motivated by the principle of inverse-variance weighting, where sensors with lower measurement variance (higher reliability) contribute more strongly to the fused value. This allows the blended observation to reduce high-frequency camera noise while compensating for long-term drift in the DR subsystem. In the Blended EKF, the blended measurement vector is defined only for the positional components . The blended observation vector used in the EKF update step is expressed as:

where and control the relative contributions of CAM and DR for each axis.

The heading is not blended because the camera does not provide orientation information. Instead, is updated through the motion model in the prediction step and through DR/IMU measurements in the update step, which are the only sensors capable of providing heading observations.

In the Blended EKF, the blended measurement vector (Equation (21)) is defined only for the positional components are closely related to the principle of inverse-variance weighting used in statistical sensor fusion. Sensors with lower measurement variance (higher reliability) receive higher blending weight, while sensors with higher variance receive lower weight. This ensures that the blended measurement emphasizes the more reliable sensor at each axis.

In the EKF update step, the blended measurement replaces the individual camera or DR measurements. This ensures that the EKF receives a single, statistically optimized observation at each update cycle.

To achieve balance in the aggregation, the following conditions are defined. Normalization:

The uncertainty of the mixed measurement covariance, or , can be estimated as:

The selection of the values of and may be based on experimental testing or tuning to suit the actual application environment, as discussed in the next section. By supplying the EKF with a statistically blended observation rather than two independent updates, the filter receives a smoother and more reliable measurement input. This reduces oscillations caused by noisy camera updates and compensates for long-term drift from DR, which ultimately improves the robustness and stability of the estimated trajectory.

2.4.3. Parameter Optimization Using Optuna and TPE (Tree-Structured Parzen Estimator) Sampler for the Blended EKF

The selection of the blending weights in the Blended Extended Kalman Filter (Blended EKF) plays a crucial role in determining the relative confidence assigned to Camera (CAM) and Dead Reckoning (DR) measurements. Manual tuning of these parameters is time-consuming and does not guarantee optimal performance. To address this issue, this study employs Optuna, a next-generation hyperparameter optimization framework based on a define-by-run architecture [27]. Optuna uses the Tree-structured Parzen Estimator (TPE) sampler, a Bayesian optimization algorithm originally proposed by Bergstra et al. [28], to efficiently search for optimal blending parameters.

Search Space

The blending weights and were defined over the discrete domain:

covering configurations ranging from DR-dominant (values near 0) to CAM-dominant (values near 1).

Objective Function

Each candidate parameter pair was evaluated using the Root Mean Square Error (RMSE) between the estimated trajectory and the ground truth:

Thus, the optimization target is

TPE Mechanism

The TPE sampler models the probability density of “good (l(x))” and “bad (g(x))” parameter configurations using kernel density estimation. It separates prior trial results into two groups based on a performance threshold:

and proposes new candidate parameters by maximizing the ratio

which increases the likelihood of selecting parameter values that improve RMSE in subsequent evaluations [29].

Optimization Procedure

The optimization process proceeds as follows:

- The TPE sampler proposes candidate values for ;

- The Blended EKF is executed using these parameters;

- The RMSE is computed and stored;

- TPE updates its probability density models (, );

- A new candidate is selected by maximizing ;

- The process repeats until the maximum number of trials is reached.

The use of Bayesian optimization enables Optuna to significantly reduce the number of required evaluations compared to exhaustive approaches such as grid search [27]. Experimental results presented in Section 4 demonstrate that Optuna consistently identifies blending weights that yield lower RMSE and smoother trajectories compared to manually tuned configurations.

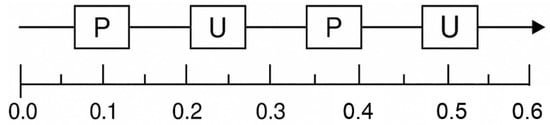

2.5. Sensor Synchronization Between EKF and Camera

The Extended Kalman Filter (EKF) operates at 10 Hz (0.1 s), whereas the camera provides measurements at 5 Hz (0.2 s). Therefore, the EKF receives a new camera observation only every two prediction cycles.

When a camera measurement is not available, the EKF performs a prediction-only update using Dead Reckoning (DR) and the motion model:

Both DR and camera data are transmitted via MQTT with unified timestamps from the publisher. Before performing the measurement update, the EKF aligns timestamps by selecting the camera measurement whose timestamp is closest to the EKF cycle time. If the camera arrives between EKF cycles, linear interpolation is applied to estimate the camera pose at the exact EKF timestamp.

This synchronization prevents temporal mismatch between the two measurements and ensures that the EKF update uses time-consistent data.

Figure 5 illustrates the synchronization mechanism between the EKF operating at 10 Hz and the camera providing measurements at 5 Hz. Because the camera provides a new observation every 0.2 s, the EKF receives a camera update only every second cycle. Cycles marked “P” represent prediction-only updates using Dead Reckoning (DR), while cycles marked “U” correspond to full update steps that include both DR and camera updates.

Figure 5.

Synchronization timeline between EKF (10 Hz) and camera (5 Hz). P = Prediction + DR-only update; U = Prediction + DR update + Camera update.

This timeline also reflects the timestamp-alignment, where unified MQTT timestamps and used to match EKF cycles with the closest camera. When camera arrive between EKF cycles, linear interpolation is used to estimate the camera pose at the exact EKF timestamp. This ensures that the EKF operates on time-consistent measurements despite the 10–5 Hz mismatch.

3. Experimental Design

This experiment aimed to evaluate the performance of AGV location identification using fusion of data from multiple sensors.The main equipment used in this experiment is the AGV BALTY and various sensors, shown in Figure 6. The experimental design was structured and controlled to generate reliable data for analysis. The experimental data processing steps consisted of the following:

Figure 6.

Experimental equipment used for AGV localization: (a) AGV BALTY; (b) CCTV; (c) reference camera; (d) motor encoder; and (e) IMU.

- Bias Correction Analysis: The raw data obtained from each sensor type were analyzed against Ground Truth (GT) data, which is akin to the “true path”, to determine the systematic bias and variance.This step is crucial in reducing the Root Mean Squared Error (RMSE) of the raw data and enhancing the reliability of the data before further use.

- Weighting with Inverse-Variance Weighting: The error-corrected data were then statistically weighted. The principle is that sensors with lower bias variance (which means higher reliability) will receive higher weight in data fusion.

- Data Fusion with EKF: The pre-processed data is fed into the EKF system to estimate the state (position and orientation). Two EKF models have been developed and compared: (1) a conventional EKF and (2) a blended EKF, which fuses measurements from the CAM and DR sensors before the update process.

Moreover, the blending weights of the Blended EKF were optimized using Optuna with the Tree-structured Parzen Estimator (TPE) sampler, as described in Section 2.4.3.

To ensure that the performance evaluation covers real-world application scenarios, the AGV was tested to move along a specially designed route, which consists of two main parts:

- Straight Section: This movement involves constant speed and no sudden changes of direction to assess the ability to maintain a precise position under normal conditions.

- Curved Section: It is a dynamic movement, which easily causes accumulated drift in the dead reckoning system to assess the system capability and stability under the condition of changing direction of movement.

The experiment starts at x = 0, y = 7, moves in a straight line, stops at position x = 1, y = 7, then rotates and moves in a straight line, then rotates and moves in a straight line again, then stops the movement, which is a comprehensive experiment to test the accuracy and stability under the conditions of movement.

4. Results and Discussion

This section presents and discusses experimental results obtained from sensor data analysis and data fusion, focusing on evaluating the performance of the designed algorithmic location system.

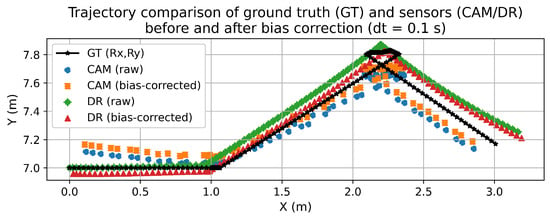

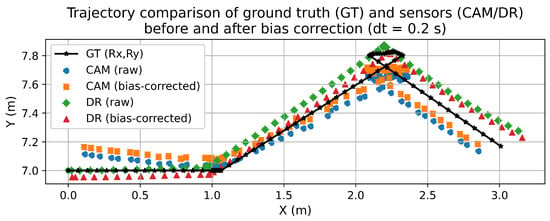

4.1. Analysis of Bias Correction (BC) Results

Bias correction improves the accuracy of the sensor, particularly its positioning. The analysis of the average bias errors for each sensor reveals its inherent limitations and baseline accuracy prior to applying any correction. Table 2 shows the average sensor error values at two sampling rates (dt), 0.1 s and 0.2 s, the sensor’s data acquisition frequency.

Table 2.

Average bias errors of each sensor at different sampling intervals.

- Camera Sensor (CAM): The bias error values in the X-axis (Mean Error X) were positive, while the Y-axis (Mean Error Y) were found to be negative in both cases ( = 0.1 and 0.2). However, the camera sensor did not measure the rotation angle, so there was no error for the Mean Error .

- Dead Reckoning (DR) Sensor: For DR, the error values in the X-axis (Mean Error X) were negative, while the Y-axis (Mean Error Y) were positive, indicating that there was error in both directions.

- Inertial Measurement Unit (IMU) Sensors: IMU sensors are used to measure changes in angle (). The only error is the Mean Error ().

Different types of sensors have different bias tolerances, both in direction (positive/negative) and magnitude. This information is used to develop bias correction algorithms, and the analysis, using Root Mean Square Error (RMSE) values, was calculated for two sampling intervals: dt = 0.1 s and dt = 0.2 s (Table 3).

Table 3.

Root mean square error (RMSE) values of the sensors before (raw) and after bias correction (BC) at two sampling intervals ( s and s).

- Camera Sensor (CAM):

- –

- Before Correction (Raw): CAM had RMSE X values of 0.0665 m and 0.0601 m, and RMSE Y values of 0.1002 m and 0.0993 m for s and s, respectively.

- –

- After Correction (Corrected): RMSE values significantly decreased, with RMSE X decreasing to 0.0660 m and 0.0598 m, and RMSE Y decreasing to 0.0859 m and 0.0858 m, respectively.

- Dead Reckoning (DR) Sensor:

- –

- Before Correction (Raw): DR had RMSE X values of 0.0934 m and 0.0898 m, and RMSE Y values of 0.0503 m and 0.0561 m.

- –

- After Correction (Corrected): Error correction significantly reduced the RMSE of DR. RMSE X decreased to 0.0897 m and 0.0858 m, while RMSE Y decreased to 0.0272 m and 0.0317 m, respectively.

- Inertial Measurement Unit (IMU) Sensor:

- –

- Before Correction (Raw): The IMU is used to measure the rotation angle (). The RMSE values before correction were approximately 1.1959 rad and 1.2039 rad.

- –

- After Correction (Corrected): The RMSE values decreased to approximately 1.1524 rad and 1.1497 rad.

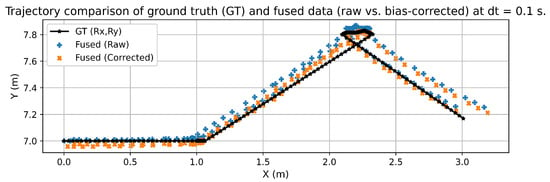

The trajectories of the CAM and DR sensors before and after the aberration correction, compared to the ground truth (GT) trajectories at dt = 0.1 s and dt = 0.2 s, are shown Figure 7 and Figure 8 respectively.

Figure 7.

Trajectory comparison of ground truth (GT) and sensor data (CAM and DR): Raw vs. Bias-Corrected (BC) at s.

Figure 8.

Trajectory comparison of ground truth (GT) and sensor data (CAM and DR): Raw vs. Bias-Corrected (BC) at s.

- True Trajectory (GT): The black line represents the actual trajectory of the object, which is the reference line.

- CAM (Raw): The dark blue dots represent the CAM path before correction.

- CAM (Bias-Corrected): The orange dots represent the CAM path after correction.

- DR (Raw): The green dots represent the DR path before correction.

- DR (Bias-Corrected): The red dots represent the DR path after correction.

Bias correction reduces sensor errors. The apparent decrease in RMSE values corresponds to a closer match of the corrected trajectory to the ground truth, demonstrating the importance of performing bias correction before data acquisition. The variances of the raw and bias-corrected data remain identical because bias correction only subtracts a constant offset from the original signal. This operation affects the mean but does not change the statistical spread (variance) of the data. According to the fundamental identity of statistics, subtracting a constant does not influence the variability of the data samples. Therefore, although the mean error decreases after bias correction, the variance values reported in Table 4 and Table 5 remain unchanged, which is theoretically expected. In addition to bias correction, synchronization between the EKF (10 Hz) and camera measurements (5 Hz) plays a significant role in improving the consistency and stability of the estimated trajectories. When camera data are not available in a given cycle, the EKF performs a prediction-only update using Dead Reckoning (DR), which prevents discontinuities caused by missing measurements. When a camera becomes available, a full update step is executed, incorporating both DR and camera observations.

Table 4.

Variance and corresponding weights of CAM, DR, and IMU sensors at s.

Table 5.

Variance and corresponding weights of CAM, DR, and IMU sensors at s.

4.2. Analysis of the Results of Sensor Data Fusion Using Inverse-Variance Weighting

Data from multiple sensors is combined using Inverse-Variance Weighting, a method that prioritizes low variance (i.e., high accuracy) data. Data from cameras (CAM), dead reckoning (DR), and inertial measurement units (IMU) are compared between raw data and bias corrected at s and s.

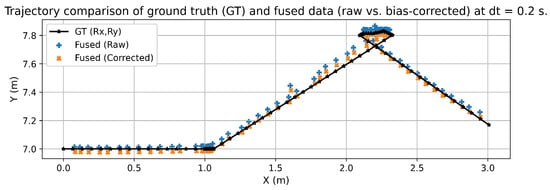

From Table 4 and Table 5, the variance values were used to determine the sensor weights via inverse-variance weighting, which assigns larger weights to measurements with lower variance. In particular, the dead reckoning (DR) sensor, having a very low Y-axis variance (), receives a high Y-axis weight (). Conversely, the camera (CAM) has a higher Y-axis variance, resulting in a lower weight (), while along the X-axis the similar variances lead to more balanced weights. For the rotation angle (), the inertial measurement unit (IMU)—as the sole information source—receives a weight of 1. These findings are consistent with the trajectory comparison in Figure 9 and Figure 10, where the bias-corrected trajectories lie closer to the ground truth.

Figure 9.

Trajectory comparison between the ground truth (GT) and fused data (raw and bias-corrected) at s.

Figure 10.

Trajectory comparison between the ground truth (GT) and fused data (raw and bias-corrected) at s.

Table 6, which shows the root mean square error (RMSE) values of the sensor data fusion, shows that bias-corrected data were obtained before fusion. Comparing the raw data and the fused data after bias correction, the RMSE of the bias-corrected data decreased on both the X and Y axes.

Table 6.

Summary of RMSE values for fused data before and after bias correction at two different sampling intervals.

4.3. Analysis of Data Integration Results Using EKF

Indoor localization of an Automated Guided Vehicle (AGV) involves the fusion of data from multiple sensor sources to improve positional accuracy. The Extended Kalman Filter (EKF) is widely adopted due to its capability of handling nonlinear system behavior and multi-sensor integration. In this study, two EKF models were evaluated:

- EKF Normal: the standard EKF model using independent CAM and DR observations (tested on both raw and bias-corrected data).

- EKF Blended: an improved EKF model that uses pre-blended CAM–DR measurements based on the user-defined weighting coefficients .

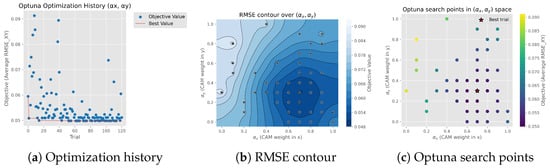

In addition, the blending coefficients were not manually selected. Instead, a hyperparameter optimization framework, Optuna, employing the Tree-structured Parzen Estimator (TPE) sampler, was used to automatically search for the optimal blending weights that minimize the trajectory error, as described earlier in Section 2.4.3. The results of this optimization process are visualized in Figure 11.

Figure 11.

Overview of Optuna blending-weight optimization: (a) optimization history, (b) RMSE contour, (c) search points in the space.

4.3.1. RMSE Comparison of EKF Normal and EKF Blended

From Table 7, it is clear that applying bias correction significantly improves the performance of the EKF Normal model. For both sampling intervals ( s and s), the Normal (Bias Corrected) configuration yields lower RMSE values than the Normal (RAW) model, demonstrating that removing systematic sensor offsets stabilizes the EKF and reduces overall position error.

Table 7.

Summary of root mean square error (RMSE) values for Extended Kalman Filter (EKF) models at two sampling intervals ( s and s).

Furthermore, the EKF Blended model provides additional performance gains. In most configurations, the blended models show lower RMSEXY values than both Normal models. This improvement results from supplying the EKF with smoother and more statistically consistent measurements obtained from the blended CAM–DR observation vector before the update step.

4.3.2. Contribution of Optuna to Blending-Weight Selection

Manual selection of blending weights can be time-consuming and may not yield optimal values. Optuna automates this process through Bayesian optimization: sampling candidate values, running the EKF Blended model, computing RMSEXY, updating probability models, and selecting new candidate values that are more likely to reduce the error. Figure 11 illustrates:

- optimization history,

- RMSE contour over the domain,

- distribution of Optuna search points.

The optimal solutions discovered by Optuna tend to assign:

- higher (greater CAM contribution in the X-axis), and

- lower (greater DR contribution in the Y-axis).

This behavior matches the statistical reliability of each sensor. Table 4 and Table 5 show that the DR sensor has very low variance in the Y-axis, while the CAM sensor performs better along the X-axis. Thus, Optuna automatically learns the statistically appropriate weighting strategy based on variance characteristics.

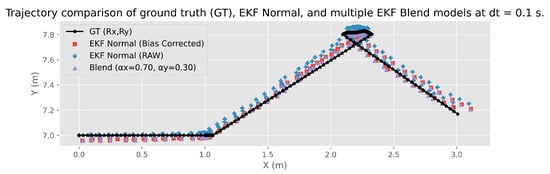

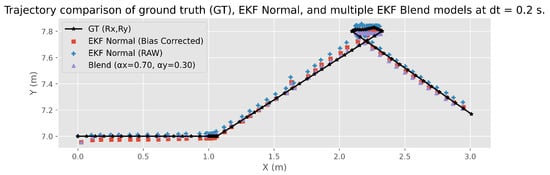

4.3.3. Trajectory Comparison

Figure 12 and Figure 13 compare the trajectories obtained from the EKF Normal (RAW and Bias Corrected) models and the EKF Blended model. The Normal (RAW) model deviates the most from the ground truth (GT), particularly at s, where the higher sampling rate also amplifies noise. When bias correction is applied, the trajectory aligns more closely with the GT. The Blended EKF demonstrates the best performance overall, producing a smoother and more accurate trajectory.

Figure 12.

Trajectory comparison between the normal and blended Extended Kalman Filter (EKF) models at s.

Figure 13.

Trajectory comparison between the normal and blended Extended Kalman Filter (EKF) models at s.

At s, the estimated trajectories contain more detail but also exhibit more oscillation. At s, trajectories are smoother due to the reduced update frequency of the camera measurements.

4.3.4. Interpretation and Summary

The RMSE results in Table 7, combined with the variance-based weights in Table 4 and Table 5, confirm that certain weighting configurations yield significant improvements. Configurations with higher and lower typically provide the lowest RMSE values, validating the inverse-variance weighting principle.

It is also important to note that all EKF and Blended EKF evaluations were performed using offline processing. The filters were applied to recorded sensor logs, allowing controlled examination of noise behavior, bias correction effects, and fusion performance. This offline processing ensures that the analysis remains independent of communication latency or computational delays that could occur in real-time systems.

4.4. Trajectory Roughness Index (TRI)

In addition to point-wise accuracy metrics such as RMSE, evaluating the smoothness of the estimated trajectory is essential for understanding the dynamic stability of AGV motion and the quality of sensor fusion. Prior literature has proposed smoothness measures based on first-order differences of trajectory signals [30], where small consecutive differences indicate smooth motion and large variations indicate abrupt changes or oscillations. Similarly, angle-based smoothness metrics have also been used to quantify abrupt changes in directional gradients of trajectory signals [31].

Building upon these concepts, this study introduces the Trajectory Roughness Index (TRI) for evaluating smoothness in 2D AGV trajectories. The TRI is derived from the first-order discrete derivative of the estimated position sequence. Let denote the estimated AGV position at time step k, and let N be the total number of samples. The TRI is defined as:

Alternatively, the TRI can be expressed in expanded form:

A lower TRI value indicates a smoother trajectory, whereas larger TRI reflects oscillatory or jittery motion. This metric is used to compare trajectory quality across CAM, DR, EKF-Normal, and the proposed EKF-Blend.

The results in Table 8 show that the Blended EKF improves trajectory smoothness across both sampling intervals. In particular, the configuration yields the lowest TRI values for both s and s, indicating the smoothest estimated trajectories. These findings complement the RMSE analysis and confirm that appropriate blending weights enhance not only point-wise accuracy but also dynamic smoothness of AGV motion.

Table 8.

Trajectory Roughness Index (TRI) for EKF models at sampling intervals s and s. Lower TRI indicates smoother estimated trajectories.

4.5. Discussion and Suggestions

The experiments demonstrate the advantages of Blended EKF, but there are some limitations.

First, the experiments were performed indoors under controlled environmental and camera configurations. Such conditions may not fully represent more complex real-world environments where illumination, occlusion, or camera-viewpoint variations can influence measurement quality. Second, the analysis assumes that the statistical properties of each sensor (bias, variance, drift) remain constant throughout the experiment. In practice, sensor characteristics may vary over time due to temperature changes, mechanical wear, or electromagnetic disturbance, which can affect the performance of bias correction and sensor fusion. Third, the blending coefficients used in the Blended EKF were tuned based on offline optimization using Optuna and the TPE sampler, as presented in Section 2.4.3. Although this method successfully identified weight combinations that minimize RMSE and TRI, the resulting blending coefficients remain fixed during operation.

Beyond the fixed-weight blending approach used in this study, an additional consideration is the use of Adaptive Blending, where the blending weights are automatically adjusted according to the instantaneous noise characteristics of each sensor. Machine-learning-based techniques such as Gaussian Process Regression (GPR), Reinforcement Learning (RL), or other online learning frameworks could be applied to estimate time-varying measurement variance for both CAM and DR in real time, allowing the EKF to update blending weights dynamically at each cycle. This approach is particularly beneficial when the CAM suffers from fluctuating noise or when DR drift becomes significant, potentially improving stability and overall positioning accuracy beyond the fixed-weight method.

This limitation provides a useful guideline for future research. Future studies could expand the experiments to larger or more complex environments and incorporate dynamic simulations, such as moving obstacles or the interaction of multiple automated guided vehicles (AGVs). Developing methods and compensating for time-varying biases is also important. Furthermore, adopting adaptive or machine-learning mechanisms to automatically adjust blending weights may contribute to the practical application of the proposed Blended EKF in industrial and logistics contexts. Future research may further extend this direction by implementing a complete Adaptive Blending framework based on RL or GPR models. Such models can learn sensor noise behavior online and adjust the blending weights in real time during EKF execution, enabling more robust fusion under dynamic uncertainty conditions.

5. Conclusions

This research has demonstrated an effective data fusion framework for the localization of Automated Guided Vehicles (AGVs) in indoor environments. The core of this framework, which incorporates bias correction and inverse-variance weighting, yielded a significant reduction in the Root Mean Square Error (RMSE) across all sensors, causing the corrected trajectories to align more closely with the ground truth (GT). This weighting method strategically assigns greater influence to more reliable sensor data, such as the high weight given to the Dead Reckoning (DR) sensor’s stable Y-axis measurements.

The proposed Blended EKF methodology proved superior to the standard EKF. Not only did it achieve a lower RMSE in key scenarios, but it also produced a markedly smoother trajectory that demonstrated the highest fidelity to the ground truth. This improvement is further supported by the Trajectory Roughness Index (TRI), where the Blended EKF consistently achieved the lowest TRI values, confirming superior smoothness in the estimated trajectory. Furthermore, this study confirmed that fine-tuning the blending coefficients to reflect the statistical properties of each sensor enhances both accuracy and stability. Specifically, assigning greater weight to the DR sensor for the Y-axis and the Camera (CAM) for the X-axis minimized the overall RMSE. In particular, the axis-dependent weighting strategy—assigning greater weight to the DR sensor for the Y-axis and the Camera (CAM) for the X-axis—was validated through experimental results. Additionally, the Optuna-based blending-weight optimization effectively identified the optimal coefficient pairs that minimized the EKF error, reinforcing the importance of data-driven blending selection.

In conclusion, the integration of bias correction, inverse-variance weighting, TRI-based smoothness evaluation, and optimized blending establishes the proposed Blended EKF as an efficient and robust localization framework. the experimental results indicate that the proposed approach provides a low-cost, accurate, and robust localization solution, making it highly suitable for practical AGV applications in indoor settings.

Author Contributions

Conceptualization: N.K. and J.S.; Methodology: N.K., S.K. and J.S.; Software: N.K. and S.P.; Validation: N.K. and J.S.; Formal analysis: N.K. and S.S.; Investigation: N.K. and J.S.; Resources: N.K., S.K. and S.P.; Data curation: N.K. and S.S.; Writing—original draft presentation: N.K. and J.S.; Writing—review and editing: J.S., N.K. and S.P.; Visualization: N.K.; Supervision: S.P., S.K. and J.S.; Project administration: J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Suranaree University of Technology. This research was funded by the Suranaree University of Technology (SUT), grant OROG No.30/2566.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Acknowledgments

This work was supported by Suranaree University of Technology and Department of Mechatronics and Robotics Engineering, School of Engineering and Innovation, Rajamangala University of Technology Tawan-ok.

Conflicts of Interest

The authors declare no potential conflicts of interests.

References

- Ito, S.; Hiratsuka, S.; Ohta, M.; Matsubara, H.; Ogawa, M. Small Imaging Depth LIDAR and DCNN-Based Localization for Automated Guided Vehicle. Sensors 2018, 18, 177. [Google Scholar] [CrossRef]

- Wang, Q.; Wu, J.; Liao, Y.; Huang, B.; Li, H.; Zhou, J. Research on Multi-Sensor Fusion Localization for Forklift AGV Based on Adaptive Weight Extended Kalman Filter. Sensors 2025, 25, 5670. [Google Scholar] [CrossRef]

- Milam, G.; Xie, B.; Liu, R.; Zhu, X.; Park, J.; Kim, G.; Park, C.H. Trainable Quaternion Extended Kalman Filter with Multi-Head Attention for Dead Reckoning in Autonomous Ground Vehicles. Sensors 2022, 22, 7701. [Google Scholar] [CrossRef]

- Fragapane, G.; de Koster, R.; Sgarbossa, F.; Strandhagen, J.O. Planning and Control of Autonomous Mobile Robots for Intralogistics: Literature Review and Research Agenda. Eur. J. Oper. Res. 2021, 294, 405–426. [Google Scholar] [CrossRef]

- Bereszyński, K.; Pelic, M.; Paszkowiak, W.; Pabiszczak, S.; Myszkowski, A.; Walas, K.; Czechmanowski, G.; Węgrzynowski, J.; Bartkowiak, T. Passive Wheels—A New Localization System for Automated Guided Vehicles. Heliyon 2024, 10, e34967. [Google Scholar] [CrossRef]

- Vignarca, D.; Vignati, M.; Arrigoni, S.; Sabbioni, E. Infrastructure-Based Vehicle Localization through Camera Calibration for I2V Communication Warning. Sensors 2023, 23, 7136. [Google Scholar] [CrossRef]

- Zheng, Z.; Lu, Y. Research on AGV Trackless Guidance Technology Based on the Global Vision. Sci. Prog. 2022, 105, 00368504221103766. [Google Scholar] [CrossRef] [PubMed]

- Sousa, R.B.; Sobreira, H.M.; Moreira, A.P. A Systematic Literature Review on Long-Term Localization and Mapping for Mobile Robots. J. Field Robot. 2023, 40, 1245–1322. [Google Scholar] [CrossRef]

- Zeghmi, L.; Amamou, A.; Kelouwani, S.; Boisclair, J.; Agbossou, K. A Kalman–Particle Hybrid Filter for Improved Localization of AGV in Indoor Environment. In Proceedings of the 2nd International Conference on Robotics, Automation and Artificial Intelligence (RAAI), Singapore, 9–11 December 2022; pp. 141–147. [Google Scholar] [CrossRef]

- Xu, H.; Li, Y.; Lu, Y. Research on Indoor AGV Fusion Localization Based on Adaptive Weight EKF Using Multi-Sensor. J. Phys. Conf. Ser. 2023, 2428, 012028. [Google Scholar] [CrossRef]

- Sousa, L.C.; Silva, Y.M.R.; Schettino, V.B.; Santos, T.M.B.; Zachi, A.R.L.; Gouvea, J.A.; Pinto, M.F. Obstacle Avoidance Technique for Mobile Robots at Autonomous Human–Robot Collaborative Warehouse Environments. Sensors 2025, 25, 2387. [Google Scholar] [CrossRef]

- Urrea, C.; Agramonte, R. Kalman Filter: Historical Overview and Review of Its Use in Robotics 60 Years after Its Creation. J. Sens. 2021, 2021, 9674015. [Google Scholar] [CrossRef]

- Grzechca, D.; Ziebinski, A.; Paszek, K.; Hanzel, K.; Giel, A.; Czerny, M.; Becker, A. How Accurate Can UWB and Dead Reckoning Positioning Systems Be? Comparison to SLAM Using the RPLidar System. Sensors 2020, 20, 3761. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, S.; Xie, Y.; Xiong, T.; Wu, M. A Review of Sensing Technologies for Indoor Autonomous Mobile Robots. Sensors 2024, 24, 1222. [Google Scholar] [CrossRef] [PubMed]

- Brooks, A.; Makarenko, A.; Upcroft, B. Gaussian Process Models for Sensor-Centric Robot Localisation. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA 2006), Orlando, FL, USA, 15–19 May 2006; pp. 56–61. [Google Scholar] [CrossRef]

- Li, M.; Mourikis, A.I. High-Precision, Consistent EKF-Based Visual–Inertial Odometry. Int. J. Robot. Res. 2013, 32, 690–711. [Google Scholar] [CrossRef]

- Fan, Z.; Zhang, L.; Wang, X.; Shen, Y.; Deng, F. LiDAR, IMU, and Camera Fusion for Simultaneous Localization and Mapping: A Systematic Review. Artif. Intell. Rev. 2025, 58, 174. [Google Scholar] [CrossRef]

- Teng, X.; Shen, Z.; Huang, L.; Li, H.; Li, W. Multi-Sensor Fusion Based Wheeled Robot Research on Indoor Positioning Method. Results Eng. 2024, 22, 102268. [Google Scholar] [CrossRef]

- Yan, Y.; Zhang, B.; Zhou, J.; Zhang, Y.; Liu, X. Real-Time Localization and Mapping Utilizing Multi-Sensor Fusion and Visual–IMU–Wheel Odometry for Agricultural Robots. Agronomy 2022, 12, 1740. [Google Scholar] [CrossRef]

- Hesch, J.A.; Kottas, D.G.; Bowman, S.L.; Roumeliotis, S.I. Camera–IMU-Based Localization: Observability Analysis and Consistency Improvement. Int. J. Robot. Res. 2013, 33, 182–201. [Google Scholar] [CrossRef]

- Niu, X.; Wu, Y.; Kuang, J. Wheel-INS: A Wheel-Mounted MEMS IMU-Based Dead Reckoning System. IEEE Trans. Veh. Technol. 2021, 70, 9814–9825. [Google Scholar] [CrossRef]

- Pawako, S.; Khaewnak, N.; Kosiyanurak, A.; Wanglomklang, T.; Srisertpol, J. Optimizing Multi-Camera PnP Localization via Gaussian Process Regression for Intelligent AGV Navigation. Int. J. Intell. Eng. Syst. 2025, 18, 673–686. [Google Scholar] [CrossRef]

- Perera, L.D.L.; Wijesoma, W.S.; Adams, M.D. The Estimation Theoretic Sensor Bias Correction Problem in Map Aided Localization. Int. J. Robot. Res. 2006, 25, 645–667. [Google Scholar] [CrossRef]

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor Data Fusion: A Review of the State-of-the-Art. Inf. Fusion 2013, 14, 28–44. [Google Scholar] [CrossRef]

- Chen, Y.; Cui, Q.; Wang, S. Fusion Ranging Method of Monocular Camera and Millimeter-Wave Radar Based on Improved Extended Kalman Filtering. Sensors 2025, 25, 3045. [Google Scholar] [CrossRef] [PubMed]

- Akhlaghi, S.; Zhou, N.; Huang, Z. Adaptive Adjustment of Noise Covariance in Kalman Filter for Dynamic State Estimation. In Proceedings of the 2017 IEEE Power & Energy Society General Meeting, Chicago, IL, USA, 16–20 July 2017; pp. 1–5. [Google Scholar] [CrossRef]

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-Generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; ACM: New York, NY, USA, 2019; pp. 2623–2631. [Google Scholar] [CrossRef]

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. In Advances in Neural Information Processing Systems; Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2011; Volume 24, pp. 1–9. Available online: https://proceedings.neurips.cc/paper_files/paper/2011/file/86e8f7ab32cfd12577bc2619bc635690-Paper.pdf (accessed on 23 November 2025).

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian Optimization of Machine Learning Algorithms. In Advances in Neural Information Processing Systems; Pereira, F., Burges, C.J., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25, pp. 1–9. Available online: https://proceedings.neurips.cc/paper_files/paper/2012/file/05311655a15b75fab86956663e1819cd-Paper.pdf (accessed on 23 November 2025).

- Roy, S.; Petrizze, D.; Dihel, L.; Xue, M.; Dolph, C.; Holbrook, H. Using Trajectory Smoothness Metrics to Identify Drones in Radar Track Data. In Proceedings of the AIAA Aviation 2022 Forum. NASA Technical Report 20220006977, Chicago, IL, USA, 27 June–1 July 2022; Available online: https://ntrs.nasa.gov/citations/20220006977 (accessed on 23 November 2025).

- Desai, S.; Shrivastava, A.; D’Elia, M.; Najm, H.N.; Dingreville, R. Trade-Offs in the Latent Representation of Microstructure Evolution. Acta Mater. 2024, 263, 119514. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.