Evaluation of Pavement Marking Damage Degree Based on Rotating Target Detection in Real Scenarios

Abstract

1. Introduction

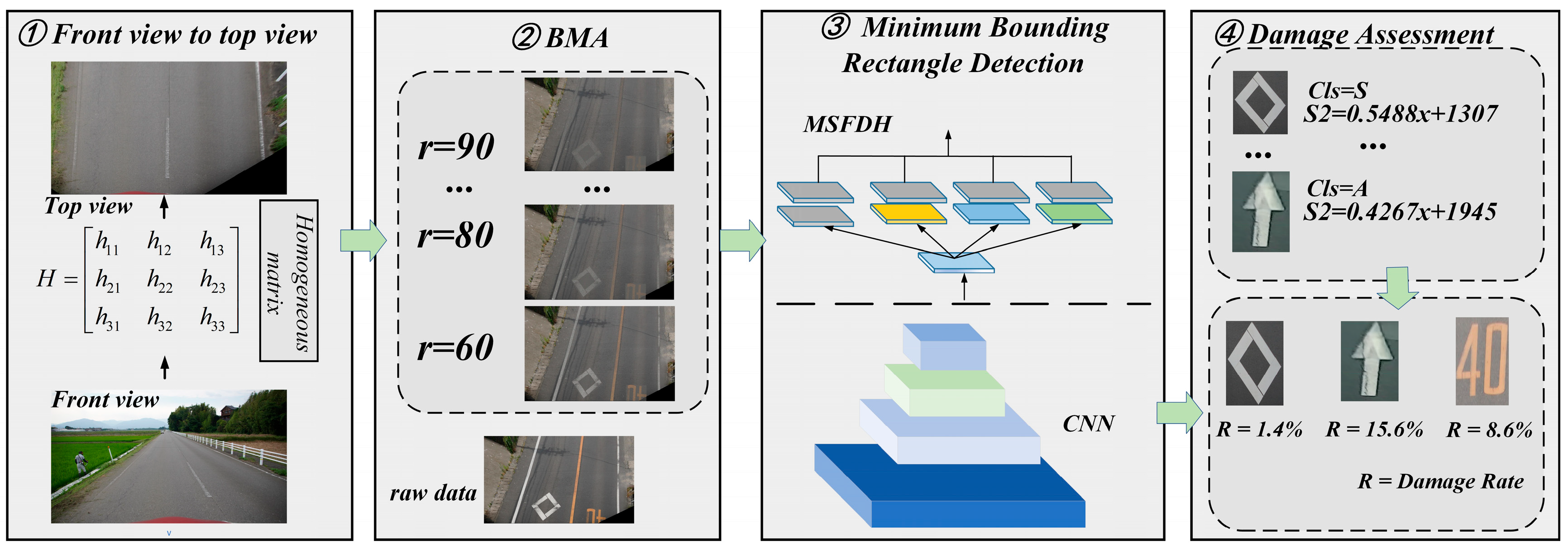

- (1)

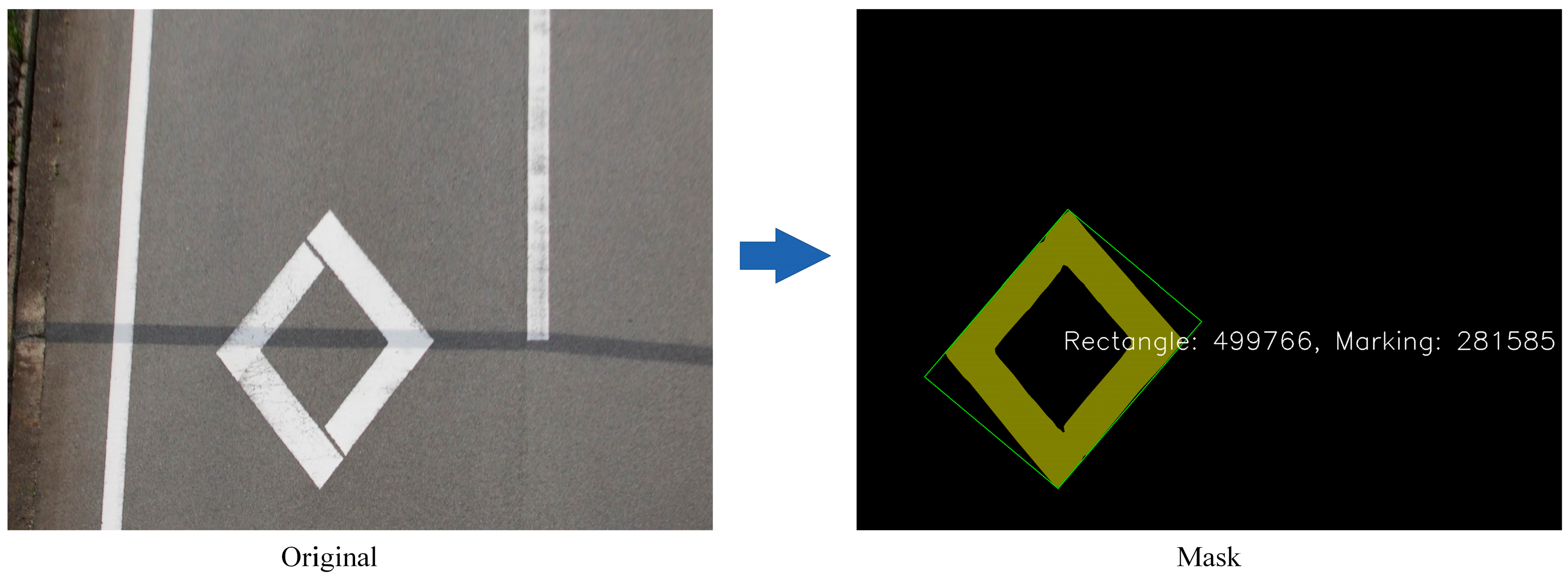

- A novel method for pavement marking damage detection is proposed. The method estimates the theoretical intact area of a marking from its minimum bounding rectangle and, by comparing it with the actual damaged area under a predefined threshold, quantitatively assesses the degree of damage.

- (2) A mathematical model is developed for different types of pavement markings to describe the relationship between the marking area and its minimum bounding rectangle, providing a theoretical basis for quantitative damage assessment.

- (3)

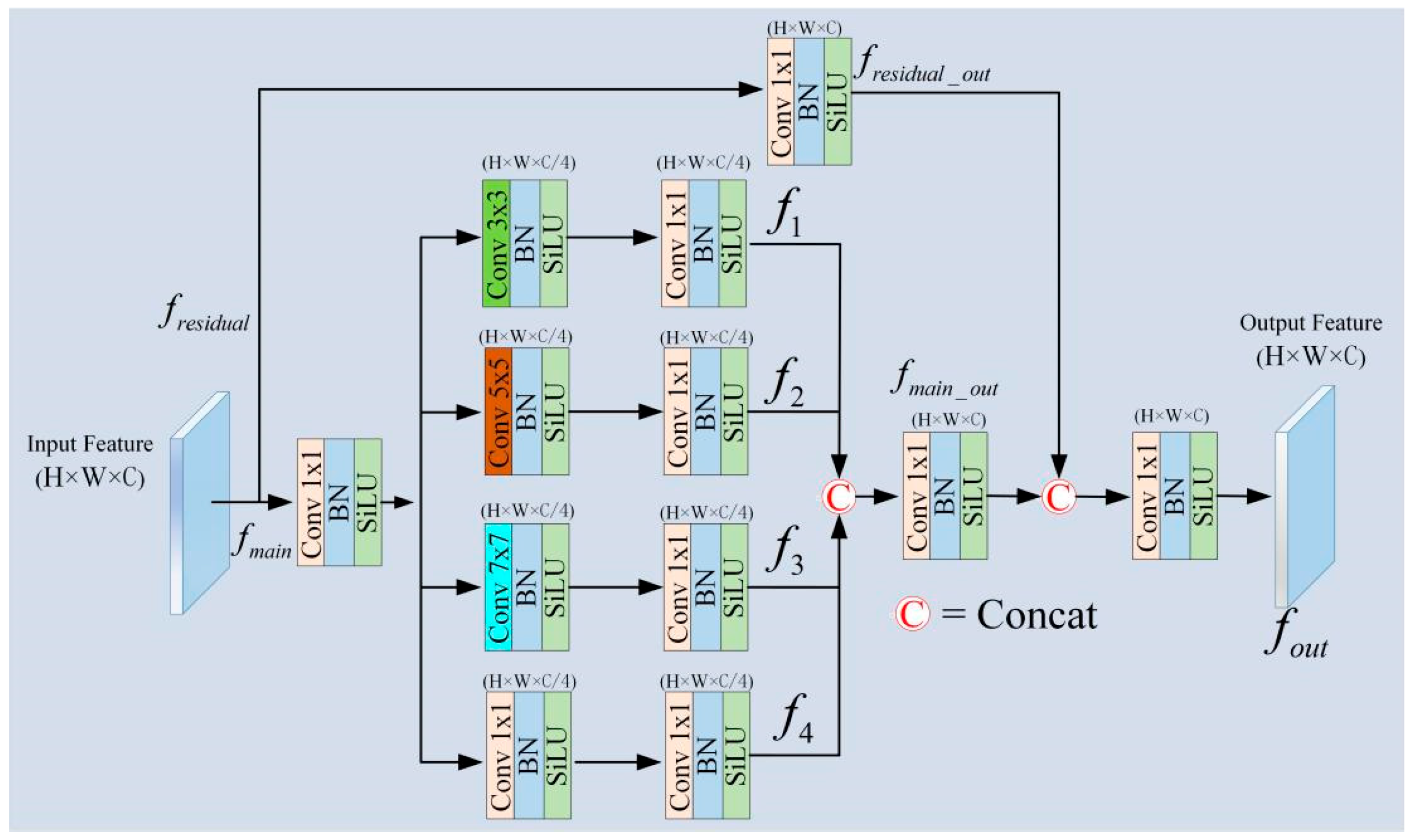

- To handle the elongated and diverse shapes of pavement markings and improve minimum bounding rectangle detection, this study proposes a Multi-Scale Feature Fusion-based Detector Head (MSFDH). By fusing multi-scale features, the proposed head enhances boundary perception and significantly boosts detection accuracy and robustness.

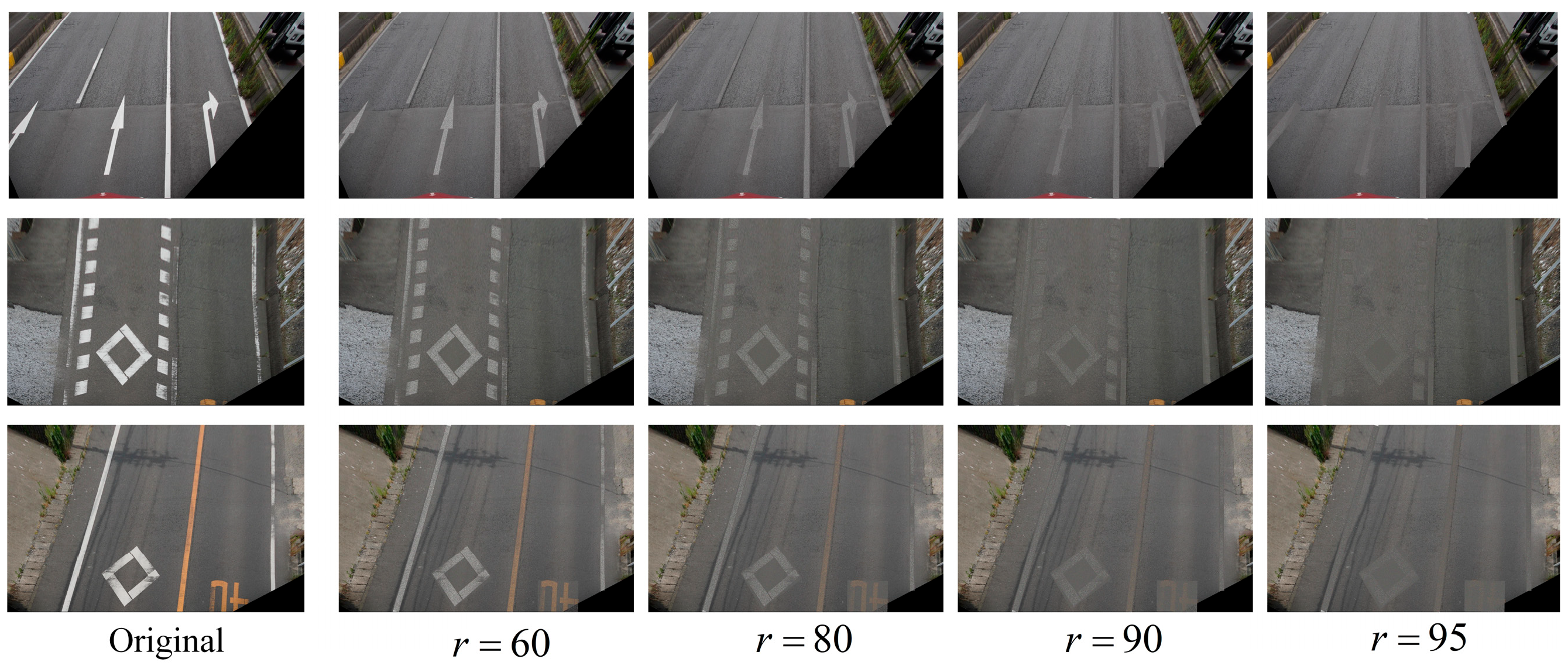

- (4) A Broken Marker Augmentation (BMA) method is proposed to simulate road markings under varying degrees of damage, thereby enhancing data diversity and improving the model’s accuracy and robustness in detecting severely damaged markings.
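Contributions (1) and (2) amount to an area comparison. The sketch below is illustrative only and rests on assumptions not stated in this section: the mathematical model is reduced to a per-class fill ratio k (intact marking area divided by the area of its minimum bounding rectangle), the theoretical intact area is k·w·h, and the damage degree is the missing fraction of that area. The class names, the k values in `FILL_RATIO`, the function `assess_damage`, and the 0.3 threshold are hypothetical placeholders, not values from the paper.

```python
from dataclasses import dataclass

# Hypothetical per-class fill ratios k = A_intact / A_rect (placeholders, not fitted values)
FILL_RATIO = {"solid_line": 1.0, "arrow": 0.45}


@dataclass
class Assessment:
    theoretical_area: float  # expected area of the intact marking
    damage_degree: float     # missing fraction of the expected area, in [0, 1]
    is_damaged: bool         # True when the degree exceeds the threshold


def assess_damage(cls, rect_w, rect_h, actual_area, threshold=0.3):
    """Compare the measured marking area with the area expected for an intact marking.

    Hypothetical helper: assumes rect_w, rect_h (minimum bounding rectangle) and
    actual_area are all measured in pixels of the same image.
    """
    theoretical = FILL_RATIO[cls] * rect_w * rect_h
    # Fraction of paint that is missing, clipped so noise cannot push it outside [0, 1]
    degree = min(max(1.0 - actual_area / theoretical, 0.0), 1.0)
    return Assessment(theoretical, degree, degree > threshold)


# Usage: a 100 x 10 px solid line where only 600 px of paint remain
result = assess_damage("solid_line", 100, 10, 600)
print(result)
```

Areas are assumed to be in pixels of the same (top-view) image, so that the rectangle and the measured marking are in consistent units; the threshold then acts as the cut-off between "worn but acceptable" and "damaged".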

2. Related Work

2.1. Deep Learning-Based Road Marking Detection

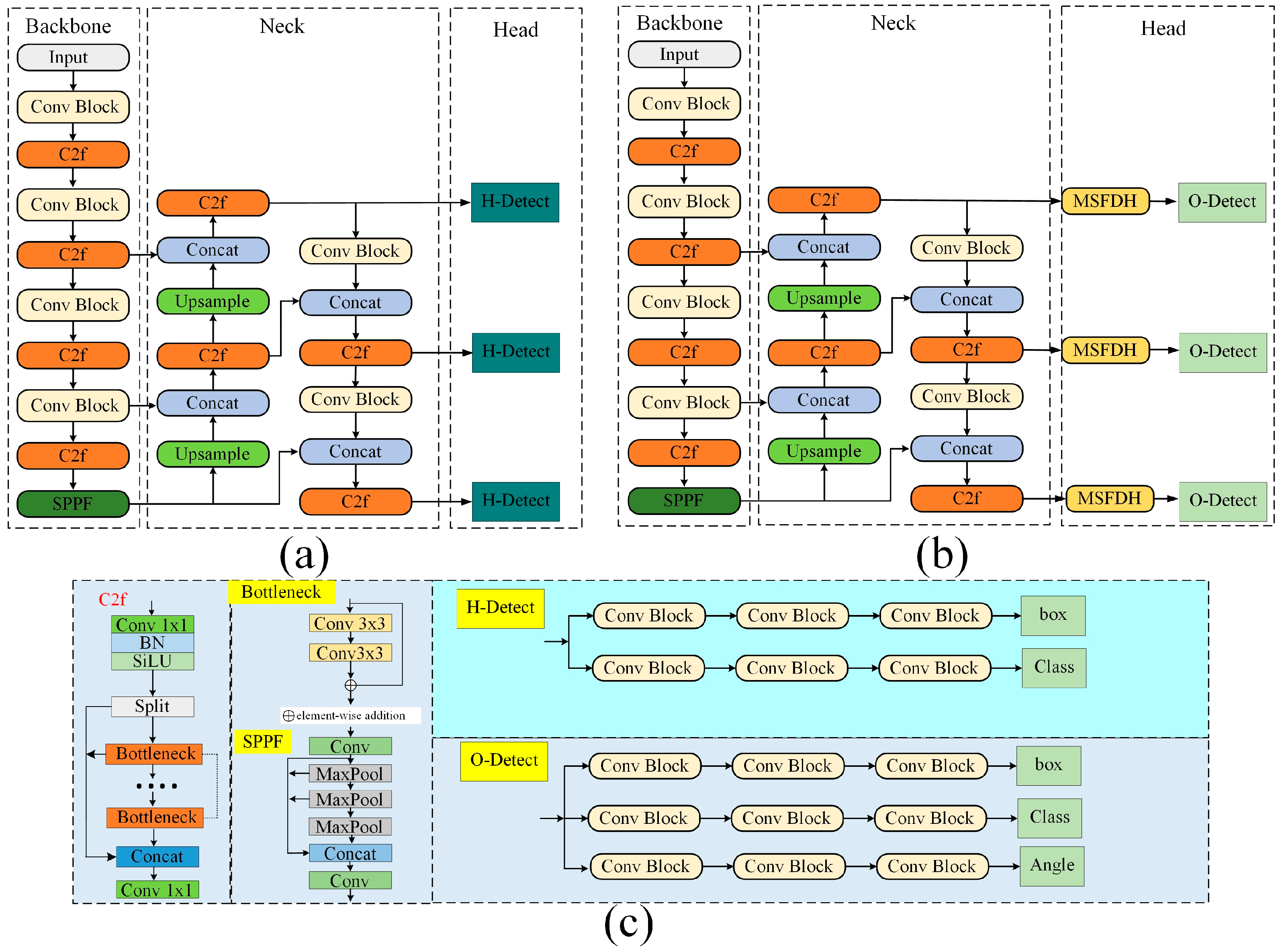

2.2. YOLOv8 Object Detection

2.3. Oriented Object Detection (OOD)

2.4. Data Augmentation

2.5. Feature Fusion

3. Proposed Approach

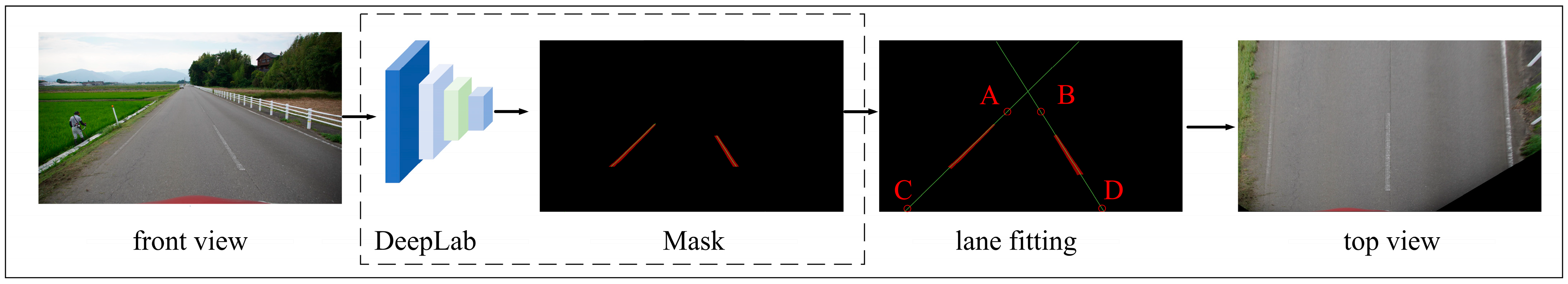

3.1. Transformation of Front View to Top View

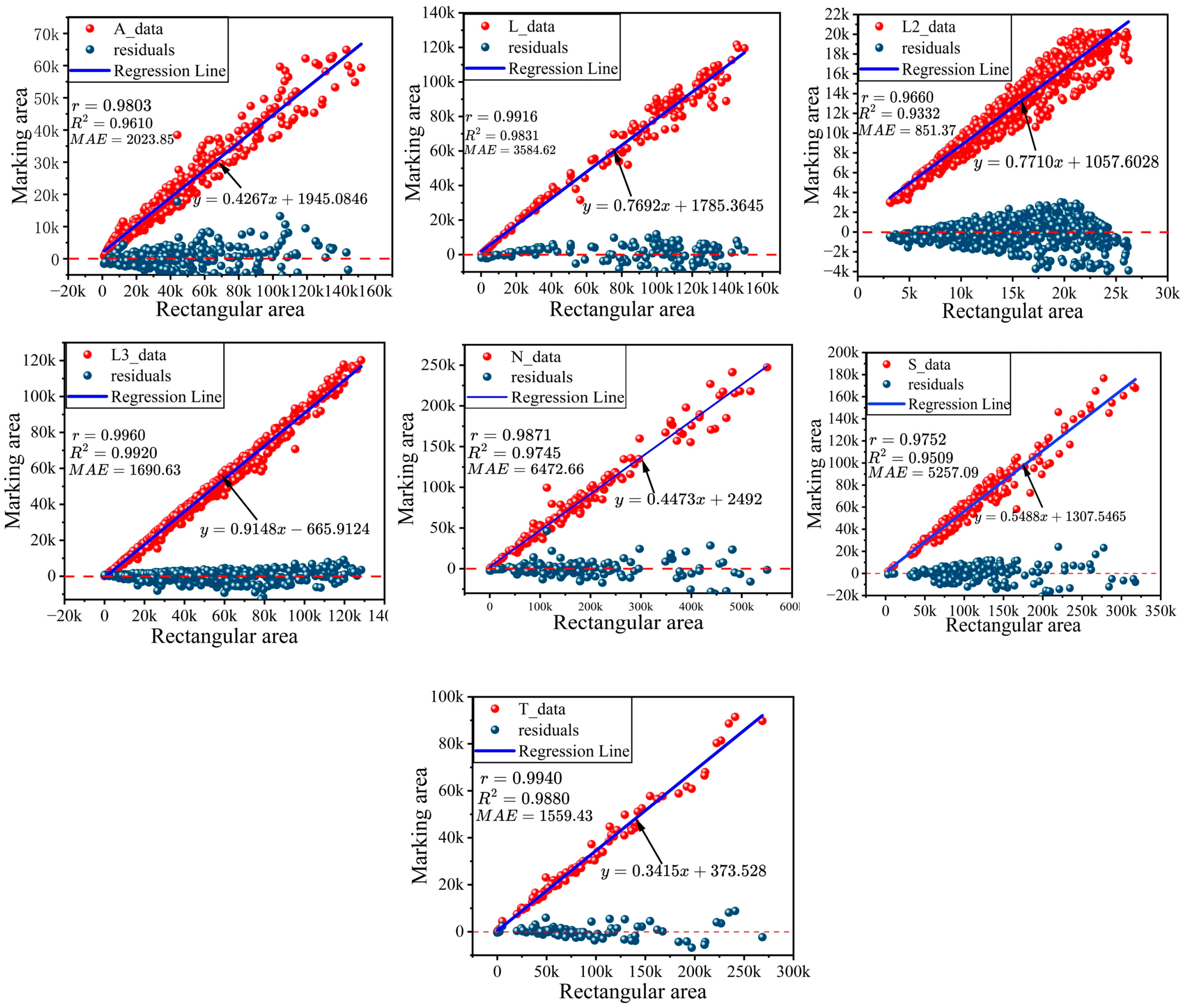
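Section 3.1 converts the camera's front view into a top view. A common way to do this, assumed here rather than taken from the paper, is a planar homography estimated from four reference points on the road surface. The NumPy sketch below solves for the 3×3 matrix with the direct linear transform (DLT); the point coordinates and the helper names `estimate_homography` and `apply_homography` are hypothetical. On full images, OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` perform the equivalent estimation and resampling.

```python
import numpy as np


def estimate_homography(src, dst):
    """Direct linear transform: find H with dst ~ H @ [x, y, 1] from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The flattened H is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map 2-D points through H, including the perspective division."""
    pts = np.asarray(pts, float)
    mapped = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical calibration: a lane trapezoid in the front view maps to a rectangle in the top view
src = [(40, 60), (60, 60), (90, 100), (10, 100)]
dst = [(0, 0), (20, 0), (20, 40), (0, 40)]
H = estimate_homography(src, dst)
print(np.round(apply_homography(H, src), 6))
```

Working in the top view matters for the later area comparison: in the front view, perspective makes distant markings appear smaller and non-rectangular, which would distort the ratio between a marking and its bounding rectangle.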

3.2. Establishing a Mathematical Model Between a Rectangular Box and a Marked Line

3.3. Broken Marker Data Augmentation Method (BMA)

| Algorithm 1. Pseudo-code of the proposed BMA method for damaged road marking data augmentation |
|---|
| Input: an image and its corresponding rotated-box label file. |
| Output: the BMA-processed image. |
| 1: Count the most frequently occurring pixel value in the image (Equation (7)). |
| 2: Calculate the number of pixels to be filled (Equation (8)). |
| 3: Randomly select the positions of the pixels to be filled (Equation (9)). |
| 4: Fill the selected positions with the most frequent pixel value (Equation (10)). |
| 5: End |
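Algorithm 1 can be sketched in NumPy as follows. Equations (7) to (10) are not reproduced in this section, so the details here are assumptions: each label is a rotated box (cx, cy, w, h, θ) in pixels with θ in radians, the number of filled pixels is a fixed fraction `damage_ratio` of the pixels inside the box, and fill positions are drawn uniformly at random within the box. The function names are hypothetical.

```python
import numpy as np


def most_frequent_pixel(img):
    """Step 1: the most common pixel value (a channel vector for colour images)."""
    flat = img.reshape(-1, img.shape[2]) if img.ndim == 3 else img.reshape(-1, 1)
    values, counts = np.unique(flat, axis=0, return_counts=True)
    return values[np.argmax(counts)]


def rotated_box_mask(shape, cx, cy, w, h, theta):
    """Boolean mask of pixels whose centres lie inside a rotated box (theta in radians)."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    dx, dy = xs + 0.5 - cx, ys + 0.5 - cy
    c, s = np.cos(theta), np.sin(theta)
    u = dx * c + dy * s   # coordinate along the box width
    v = -dx * s + dy * c  # coordinate along the box height
    return (np.abs(u) <= w / 2) & (np.abs(v) <= h / 2)


def bma(img, boxes, damage_ratio, seed=None):
    """Hypothetical BMA sketch: overwrite a random subset of each marking's pixels."""
    rng = np.random.default_rng(seed)
    out = img.copy()
    fill = most_frequent_pixel(img)
    for cx, cy, w, h, theta in boxes:
        ys, xs = np.nonzero(rotated_box_mask(img.shape, cx, cy, w, h, theta))
        n_fill = int(round(damage_ratio * len(ys)))  # step 2 (assumed: fixed ratio)
        if n_fill == 0:
            continue
        idx = rng.choice(len(ys), size=n_fill, replace=False)  # step 3
        out[ys[idx], xs[idx]] = fill  # step 4
    return out


# Usage: a 10 x 4 px white marking on a dark 20 x 20 road patch, half of it erased
img = np.zeros((20, 20), np.uint8)
img[8:12, 5:15] = 255
augmented = bma(img, [(10, 10, 10, 4, 0.0)], damage_ratio=0.5, seed=0)
```

Filling with the image's most frequent value, presumably the road surface in most frames, makes the erased pixels blend into the background, so the augmented marking resembles worn paint while its label (the rotated box) stays unchanged.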

3.4. Improved Rotating Object Detection Based on YOLOv8

4. Experimental Verification and Analysis

4.1. Model Evaluation Indicators and Experimental Setting

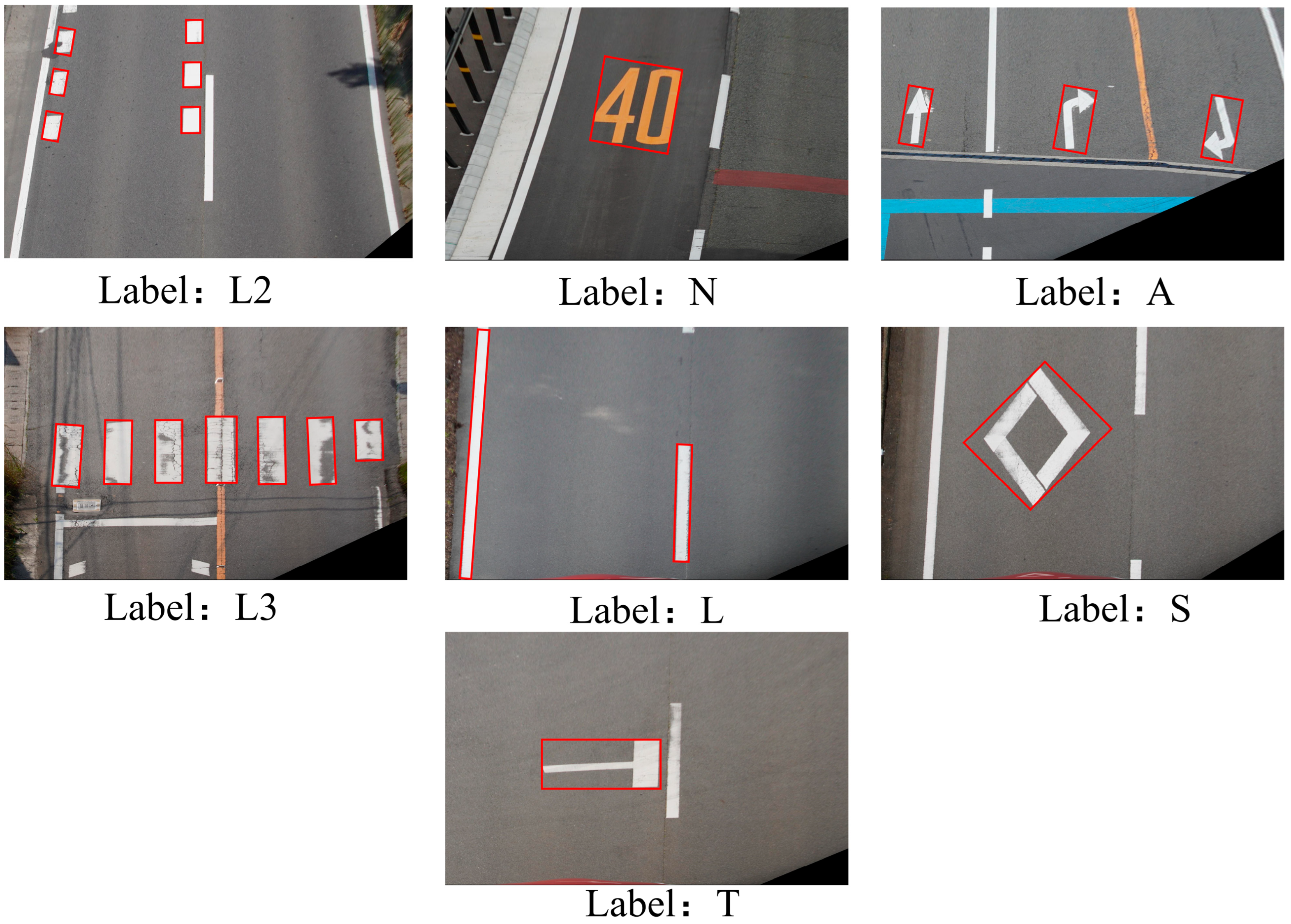

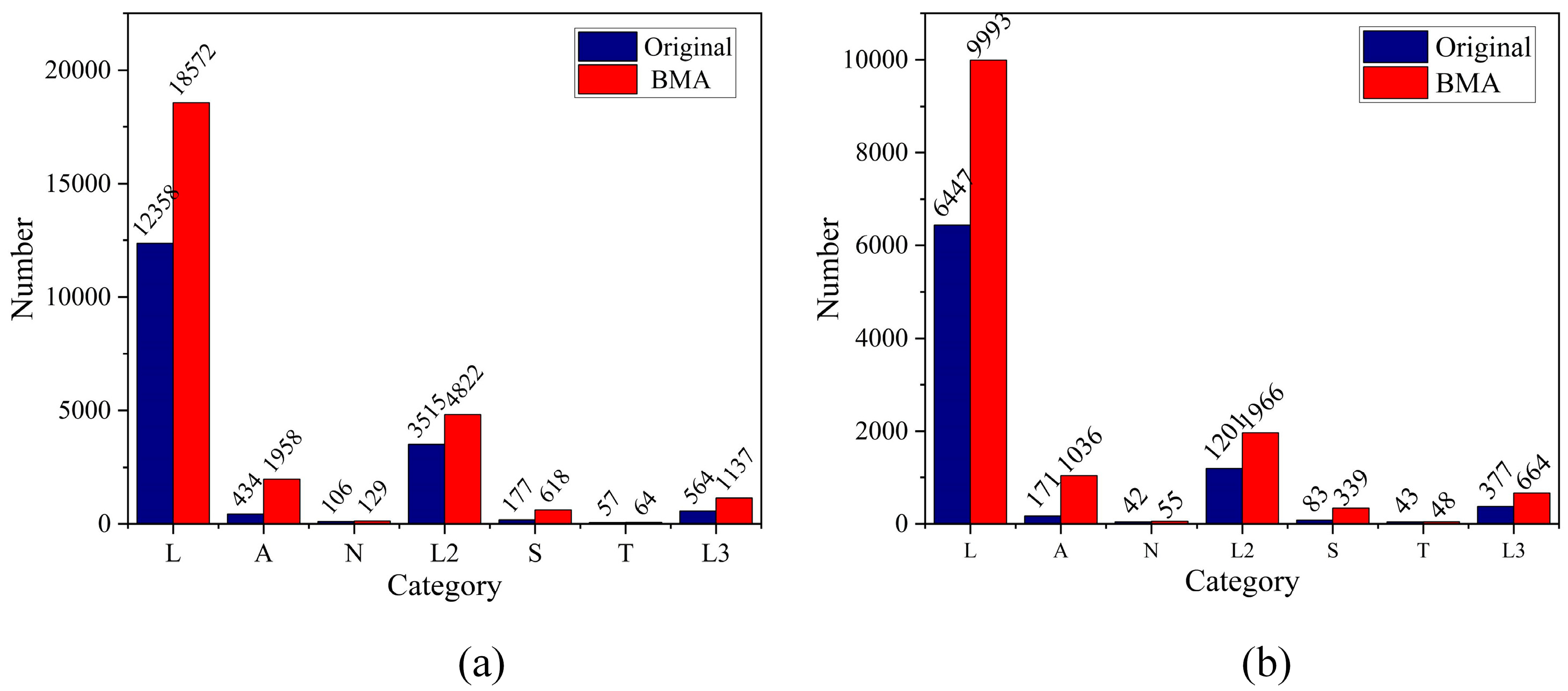

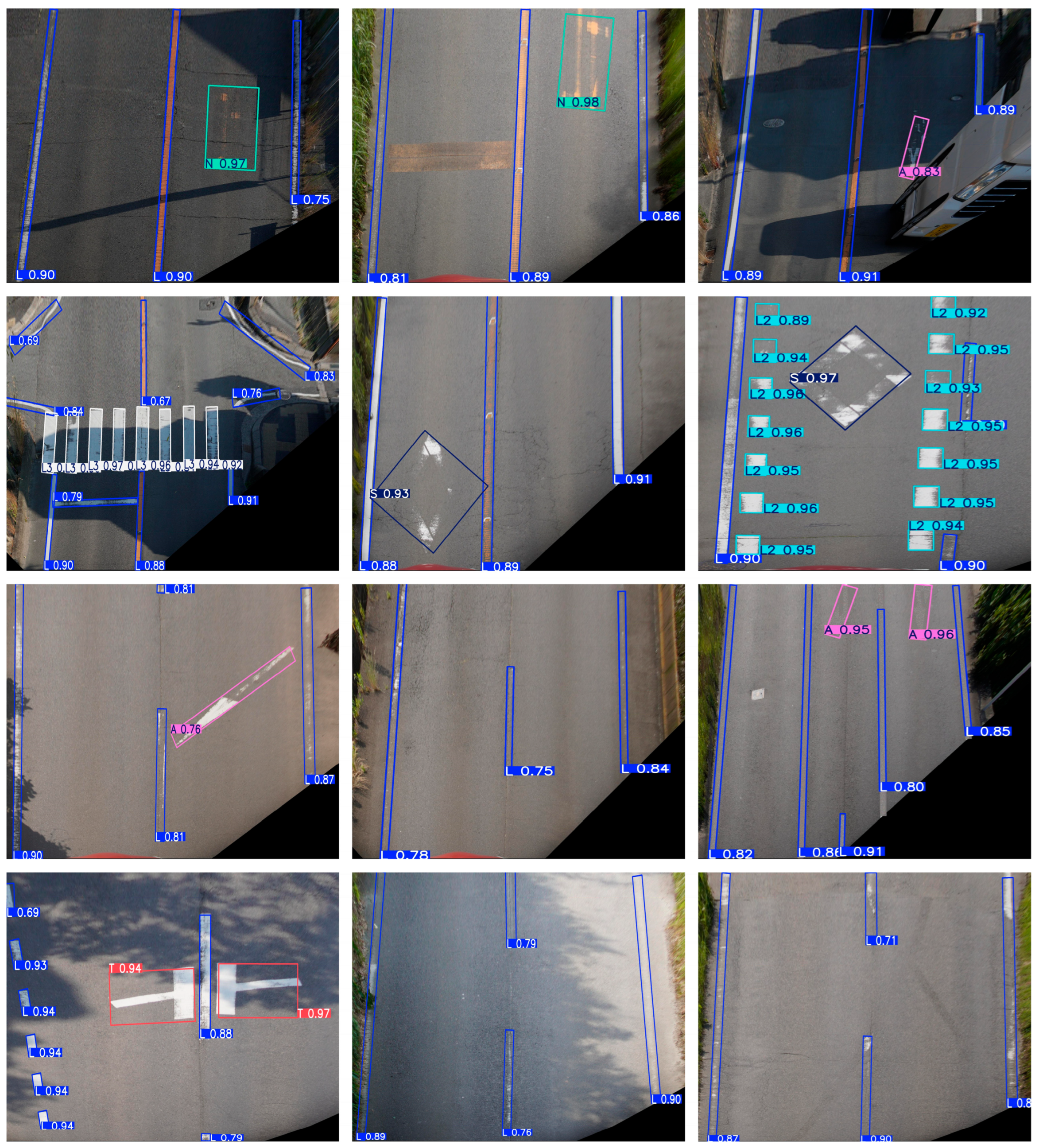

4.2. Dataset

4.3. Data Labeling

4.4. Experimental Verification

4.4.1. Mathematical Relationship Between a Labeled Line and Its Minimum Outer Rectangle

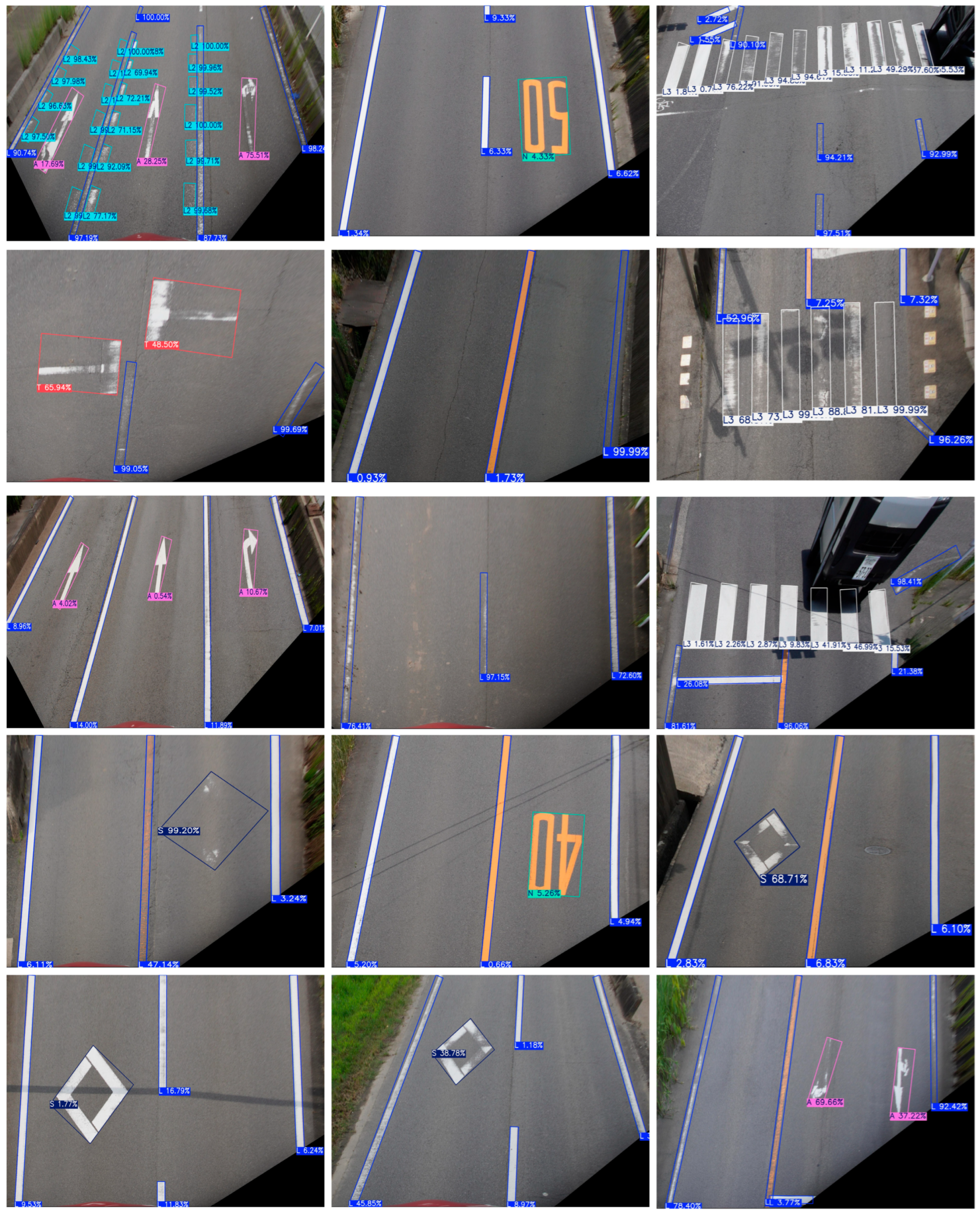
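The relationship examined in Section 4.4.1 needs two quantities per labeled marking: its area and the area of its minimum outer rectangle. A minimal NumPy sketch, assuming each marking is available as a polygon in top-view pixel coordinates, finds the minimum-area rectangle by testing each convex-hull edge direction (an optimal rectangle always has one side collinear with a hull edge) and computes the fill ratio with the shoelace formula. All function names are hypothetical; OpenCV's `cv2.minAreaRect` returns an equivalent rectangle for a point set.

```python
import numpy as np


def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices in order."""
    pts = sorted(set(map(tuple, np.asarray(points, float))))
    if len(pts) < 3:
        return np.array(pts)

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return np.array(lower[:-1] + upper[:-1])


def min_area_rect(points):
    """Minimum-area enclosing rectangle as (area, width, height, angle in radians)."""
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        edge = hull[(i + 1) % len(hull)] - hull[i]
        theta = np.arctan2(edge[1], edge[0])
        c, s = np.cos(theta), np.sin(theta)
        # Rotate by -theta so the current hull edge lies along the x axis
        rot = hull @ np.array([[c, -s], [s, c]])
        w = rot[:, 0].max() - rot[:, 0].min()
        h = rot[:, 1].max() - rot[:, 1].min()
        if best is None or w * h < best[0]:
            best = (w * h, w, h, theta)
    return best


def polygon_area(poly):
    """Shoelace formula for a simple polygon."""
    x, y = np.asarray(poly, float).T
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def fill_ratio(poly):
    """Marking area divided by the area of its minimum bounding rectangle."""
    return polygon_area(poly) / min_area_rect(poly)[0]
```

For a solid rectangular line the ratio is close to 1, while an L-shaped polygon such as [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)] covers only half of its 4 × 3 bounding rectangle, giving 0.5.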

4.4.2. Minimum Outer Rectangular Box Detection

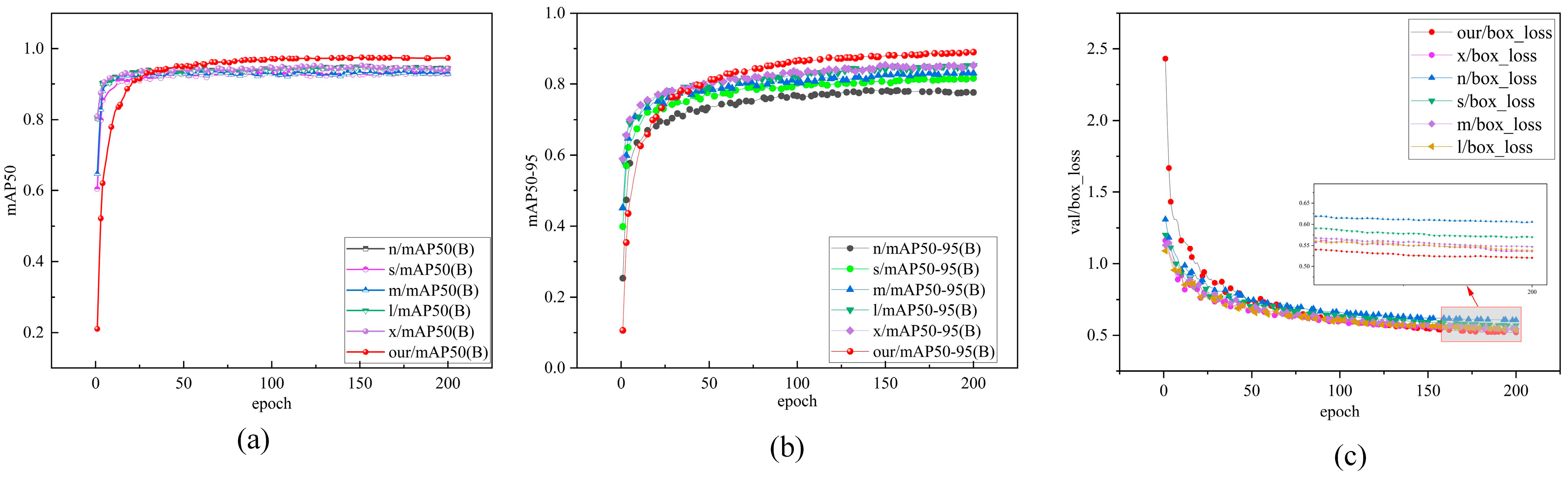

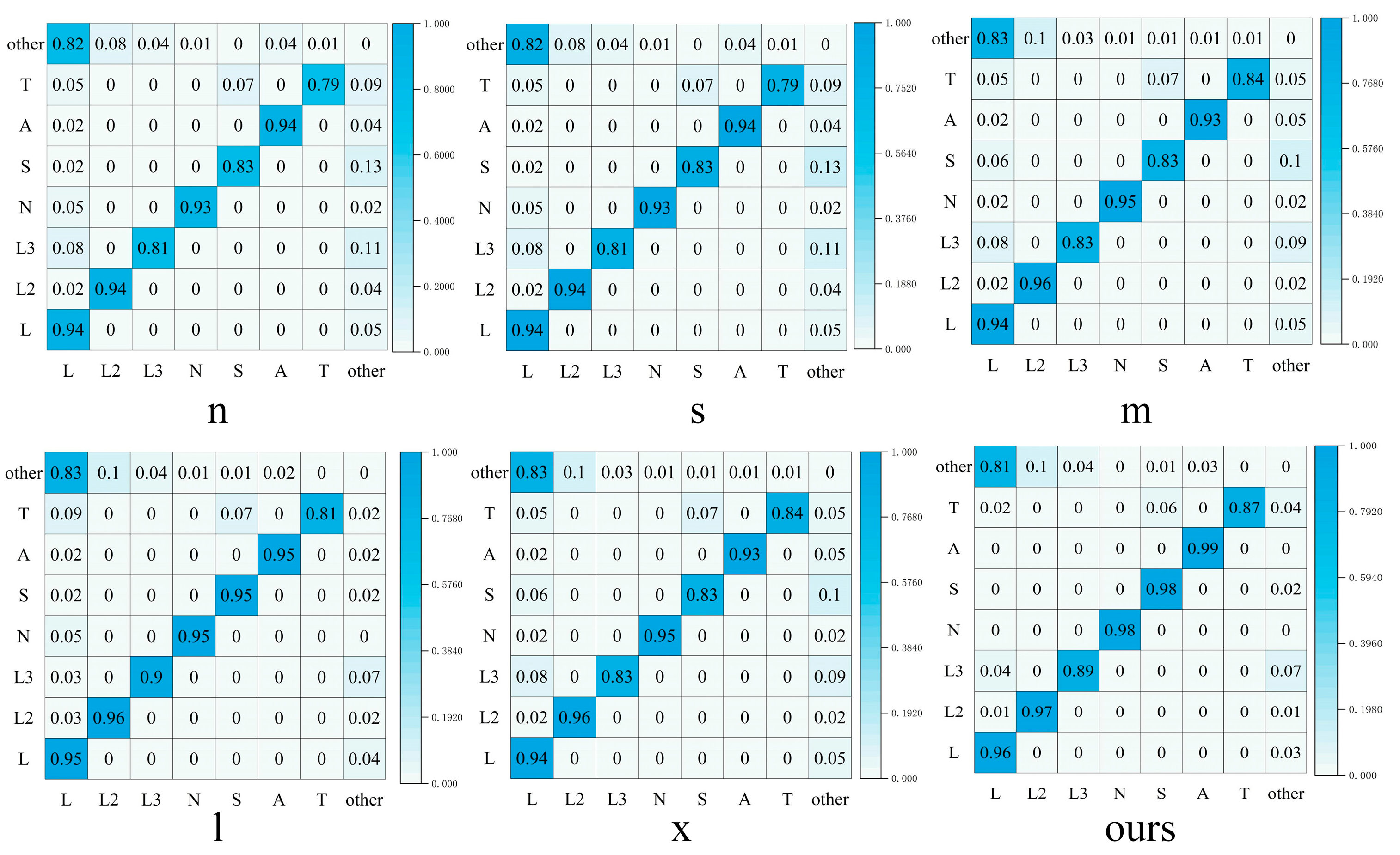

- (1) Analysis of experimental results

- (2)

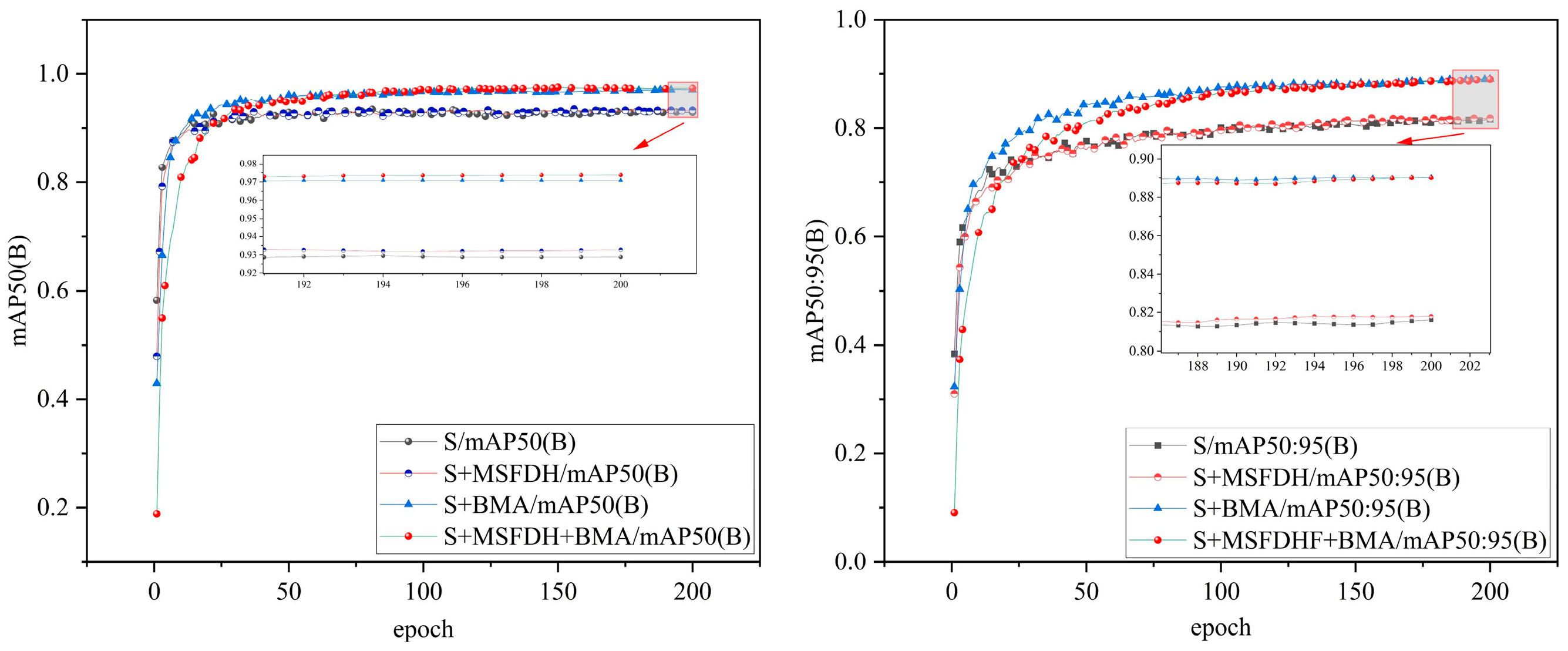

- Analysis of ablation experiments

- (3) Experimental detection of the degree of damage

5. Methodological Limitations and Future Research Directions

6. Summary and Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Experimental Environment | Specification | Model Hyperparameter | Setting |
|---|---|---|---|
| GPU | NVIDIA GeForce RTX 4090 (24 GB) | Image size | 640 × 640 |
| CPU | Intel Core i9-14900K, 3.20 GHz | Epochs | 200 |
| Python | 3.8.18 | Batch size | 4 |
| PyTorch | 2.1.1 | Momentum | 0.937 |
| CUDA | 11.8 | Initial learning rate | 0.01 |
| Operating system | Windows 11 | Final learning rate | 0.01 |

| Methods | AP50 (L) | AP50 (L2) | AP50 (L3) | AP50 (N) | AP50 (S) | AP50 (A) | AP50 (T) | mAP50 |
|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 0.955 | 0.972 | 0.874 | 0.977 | 0.889 | 0.931 | 0.887 | 0.927 |
| YOLOv8s | 0.96 | 0.974 | 0.872 | 0.975 | 0.914 | 0.924 | 0.881 | 0.929 |
| YOLOv8m | 0.962 | 0.975 | 0.879 | 0.968 | 0.920 | 0.939 | 0.871 | 0.931 |
| YOLOv8l | 0.963 | 0.977 | 0.916 | 0.974 | 0.946 | 0.951 | 0.886 | 0.945 |
| YOLOv8x | 0.963 | 0.979 | 0.924 | 0.978 | 0.948 | 0.969 | 0.907 | 0.953 |
| Ours | 0.976 | 0.985 | 0.935 | 0.992 | 0.993 | 0.995 | 0.941 | 0.974 |
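Assuming, as is standard, that mAP50 is the unweighted mean of the per-class AP50 values, the last column can be checked from the seven class columns; for the proposed model:

```python
# Per-class AP50 of the proposed model, copied from its row in the AP50 table
ap50_ours = {"L": 0.976, "L2": 0.985, "L3": 0.935, "N": 0.992,
             "S": 0.993, "A": 0.995, "T": 0.941}

# mAP50 taken as the unweighted mean over classes (assumption)
map50 = sum(ap50_ours.values()) / len(ap50_ours)
print(f"mAP50 = {map50:.3f}")  # mAP50 = 0.974
```

Small last-digit differences in other rows are expected, because the per-class values shown are themselves rounded to three decimals.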

| Methods | AP50:95 (L) | AP50:95 (L2) | AP50:95 (L3) | AP50:95 (N) | AP50:95 (S) | AP50:95 (A) | AP50:95 (T) | mAP50:95 |
|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 0.821 | 0.869 | 0.788 | 0.821 | 0.722 | 0.767 | 0.707 | 0.784 |
| YOLOv8s | 0.854 | 0.889 | 0.803 | 0.843 | 0.755 | 0.797 | 0.761 | 0.816 |
| YOLOv8m | 0.866 | 0.910 | 0.814 | 0.849 | 0.791 | 0.838 | 0.751 | 0.831 |
| YOLOv8l | 0.869 | 0.912 | 0.852 | 0.868 | 0.843 | 0.848 | 0.781 | 0.853 |
| YOLOv8x | 0.86 | 0.907 | 0.849 | 0.873 | 0.839 | 0.864 | 0.907 | 0.855 |
| Ours | 0.862 | 0.903 | 0.878 | 0.879 | 0.957 | 0.960 | 0.799 | 0.891 |

| Methods | Variant | Size (M) | FLOPs | Processing Time |
|---|---|---|---|---|
| YOLOv8 | n | 5.8 | 7.1G | 3.3 ms |
| YOLOv8 | s | 20.5 | 24.3G | 3.3 ms |
| YOLOv8 | m | 47.9 | 69.6G | 3.4 ms |
| YOLOv8 | l | 81.8 | 148.9G | 3.8 ms |
| YOLOv8 | x | 126.4 | 232.5G | 4.5 ms |
| Ours | - | 27.8 | 29.1G | 3.5 ms |

| Methods | AP50 (L) | AP50 (L2) | AP50 (L3) | AP50 (N) | AP50 (S) | AP50 (A) | AP50 (T) | mAP50 |
|---|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 0.913 | 0.94 | 0.824 | 0.986 | 0.904 | 0.926 | 0.896 | 0.913 |
| w/ | 0.932 | 0.955 | 0.902 | 0.989 | 0.989 | 0.986 | 0.915 | 0.952 |
| YOLOv5n | 0.953 | 0.972 | 0.844 | 0.991 | 0.921 | 0.939 | 0.836 | 0.922 |
| w/ | 0.905 | 0.965 | 0.878 | 0.954 | 0.987 | 0.99 | 0.936 | 0.945 |
| YOLOv5s | 0.959 | 0.982 | 0.874 | 0.973 | 0.902 | 0.942 | 0.89 | 0.932 |
| w/ | 0.973 | 0.985 | 0.921 | 0.987 | 0.991 | 0.995 | 0.914 | 0.966 |
| YOLOv6n | 0.947 | 0.969 | 0.826 | 0.974 | 0.868 | 0.909 | 0.854 | 0.907 |
| w/ | 0.876 | 0.976 | 0.859 | 0.956 | 0.964 | 0.976 | 0.815 | 0.917 |
| YOLOv6s | 0.951 | 0.969 | 0.856 | 0.993 | 0.901 | 0.917 | 0.884 | 0.924 |
| w/ | 0.873 | 0.974 | 0.885 | 0.98 | 0.972 | 0.991 | 0.876 | 0.936 |
| YOLOv9-tiny | 0.96 | 0.977 | 0.87 | 0.989 | 0.902 | 0.936 | 0.849 | 0.926 |
| w/ | 0.893 | 0.984 | 0.921 | 0.98 | 0.992 | 0.991 | 0.913 | 0.953 |
| YOLOv9s | 0.963 | 0.98 | 0.87 | 0.983 | 0.93 | 0.934 | 0.899 | 0.937 |
| w/ | 0.976 | 0.985 | 0.948 | 0.985 | 0.987 | 0.995 | 0.935 | 0.973 |
| Mobilev3 | 0.923 | 0.945 | 0.836 | 0.983 | 0.924 | 0.937 | 0.894 | 0.921 |
| w/ | 0.937 | 0.956 | 0.902 | 0.989 | 0.989 | 0.983 | 0.914 | 0.953 |
| Ours | 0.976 | 0.985 | 0.935 | 0.992 | 0.993 | 0.995 | 0.941 | 0.974 |

| Methods | Mosaic | Mixup | BMA | mAP50 (%) | mAP50:95 (%) |
|---|---|---|---|---|---|
| YOLOv8n | - | - | - | 92.7 | 78.3 |
| YOLOv8n | √ | - | - | 93.5 | 79.2 |
| YOLOv8n | - | √ | - | 93.0 | 79.1 |
| YOLOv8n | - | - | √ | 95.7 | 86.1 |
| YOLOv8s | - | - | - | 92.9 | 81.6 |
| YOLOv8s | √ | - | - | 94.4 | 82.3 |
| YOLOv8s | - | √ | - | 93.6 | 81.8 |
| YOLOv8s | - | - | √ | 97.1 | 89.0 |
| YOLOv8m | - | - | - | 93.1 | 83.2 |
| YOLOv8m | √ | - | - | 94.1 | 83.6 |
| YOLOv8m | - | √ | - | 94.0 | 84.4 |
| YOLOv8m | - | - | √ | 96.8 | 90.1 |

| Method | SE | CA | CBAM | MSFDH | mAP50 (%) | mAP50:95 (%) | R (%) |
|---|---|---|---|---|---|---|---|
| YOLOv8s | - | - | - | - | 92.9 | 81.6 | 89.5 |
| YOLOv8s | √ | - | - | - | 93.1 | 81.7 | 90.4 |
| YOLOv8s | - | √ | - | - | 92.9 | 81.5 | 89.7 |
| YOLOv8s | - | - | √ | - | 92.5 | 80.9 | 86.9 |
| YOLOv8s | - | - | - | √ | 93.5 | 81.9 | 90.1 |

| Method | BMA | MSFDH | mAP50 (%) | mAP50:95 (%) | R (%) |
|---|---|---|---|---|---|
| YOLOv8s | - | - | 92.9 | 81.6 | 89.5 |
| YOLOv8s | √ | - | 97.1 | 89.0 | 95.6 |
| YOLOv8s | - | √ | 93.5 | 81.9 | 90.1 |
| YOLOv8s | √ | √ | 97.4 | 89.1 | 94.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Ikeura, R.; Hayakawa, S.; Zhang, Z. Evaluation of Pavement Marking Damage Degree Based on Rotating Target Detection in Real Scenarios. Automation 2025, 6, 70. https://doi.org/10.3390/automation6040070
