Human Middle Ear Anatomy Based on Micro-Computed Tomography and Reconstruction: An Immersive Virtual Reality Development

Abstract

1. Introduction

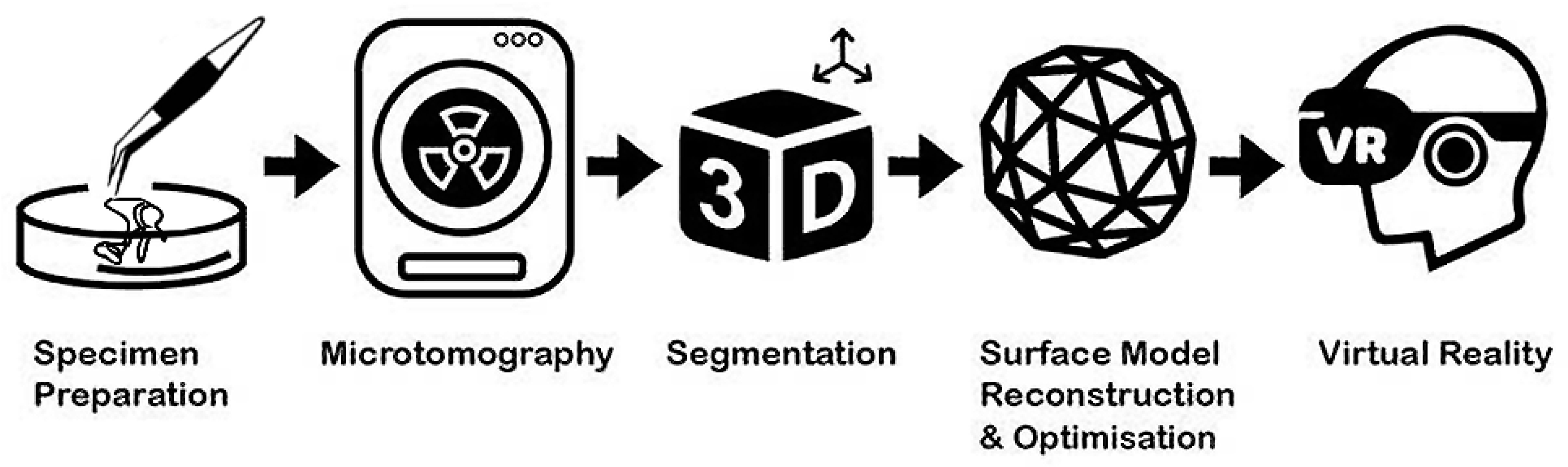

2. Methods

2.1. Specimen Processing and Scanning

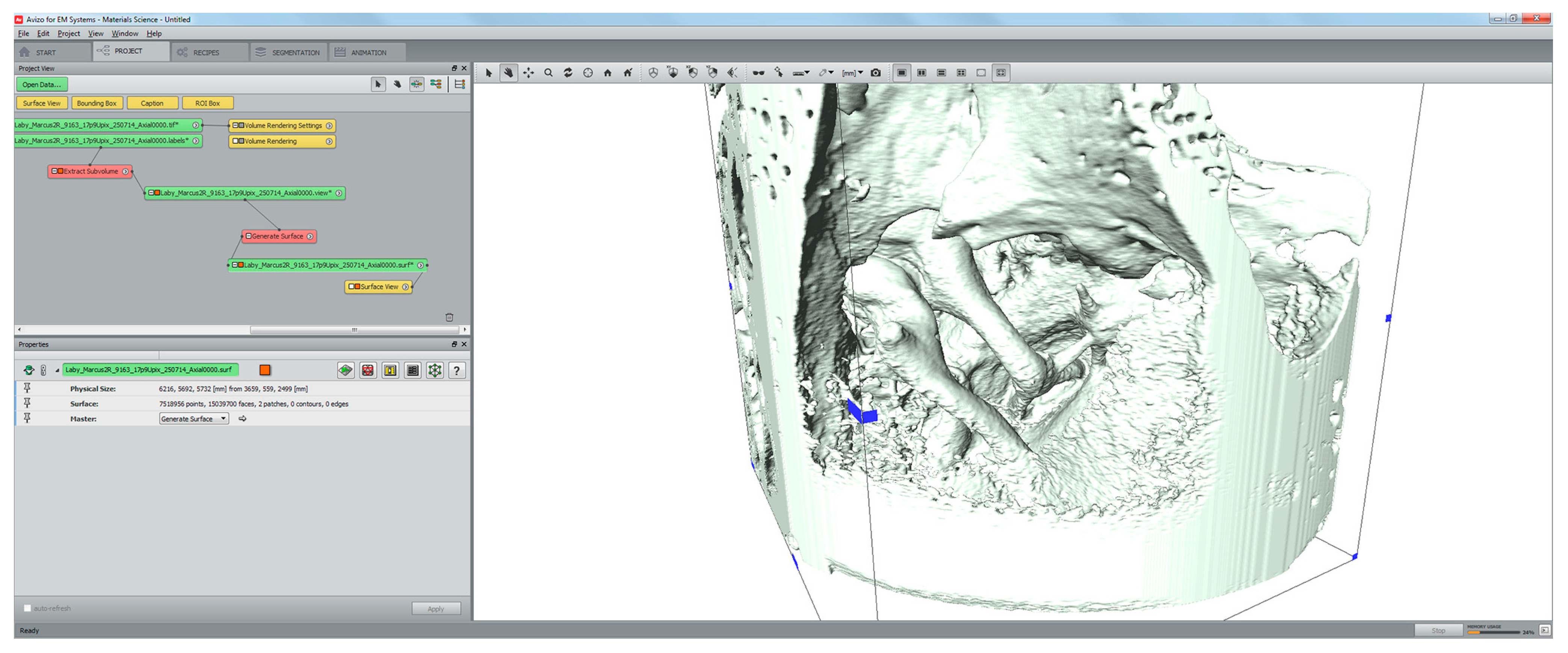

2.2. Segmentation and Surface Model Reconstruction

2.3. Adaptive Polygon Optimisation

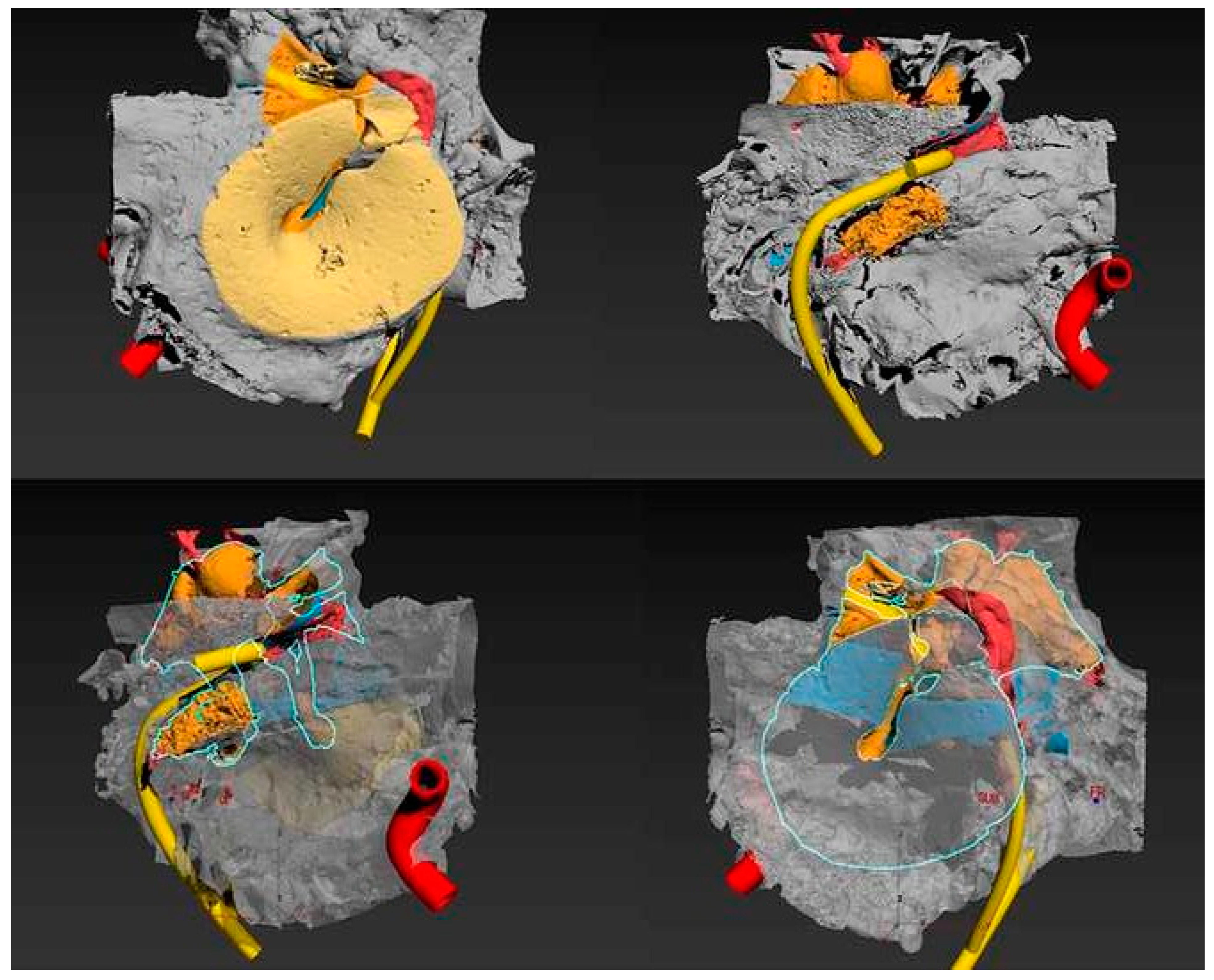

3. Results

4. Discussion

4.1. Strengths of the Reported Method

4.2. Rendering, Image Optimisation and Visualisation Techniques

4.3. Limitations of the Reported Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Polygons | Model Size (MB) | VR Loading Time | Frame Rate (FPS) | Optimisation Ratio | Surface Details |
|---|---|---|---|---|---|
| 8.5 million | 128 | 12 min and 20 s | 35 | 59.5% | ☑ |
| 6.5 million | 96 | 9 min and 45 s | 51 | 69% | ☑ |
| 4.3 million | 64 | 5 min and 10 s | 63 | 79.5% | ☑ |
| 2.5 million | 32 | 2 min and 55 s | 82 | 88% | ☑ |
| 2 million | 25 | 2 min and 30 s | 90 | 90% | ☑ |
| 1.5 million | 19 | 1 min and 55 s | 90 | 93% | ⊠ |
| 1 million | 12 | 1 min and 20 s | 90 | 95.2% | ⊠ |
| 600 k | 5.5 | 30 s | 90 | 97.1% | ⊠ |
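
The table does not define the optimisation ratio. One reading consistent with the reported values is that it is the percentage of polygons removed relative to the unoptimised mesh. The sketch below checks that reading against the table rows. The baseline of about 21 million polygons is an assumption inferred from the table itself, not a figure stated here, and the names `ASSUMED_ORIGINAL_POLYGONS`, `optimisation_ratio`, and `compare` are hypothetical.

```python
# Polygon counts and reported optimisation ratios, copied from the table rows.
POLYGON_COUNTS = [8_500_000, 6_500_000, 4_300_000, 2_500_000,
                  2_000_000, 1_500_000, 1_000_000, 600_000]
REPORTED_RATIOS = [59.5, 69.0, 79.5, 88.0, 90.0, 93.0, 95.2, 97.1]

# ASSUMPTION: size of the unoptimised mesh. This value is inferred from the
# table (it reproduces the reported ratios) and is not stated in the text.
ASSUMED_ORIGINAL_POLYGONS = 21_000_000


def optimisation_ratio(polygons, original=ASSUMED_ORIGINAL_POLYGONS):
    """Percentage of polygons removed relative to the original mesh."""
    return 100.0 * (1.0 - polygons / original)


def compare():
    """Return (polygons, reported %, computed %) for every table row."""
    rows = []
    for polygons, reported in zip(POLYGON_COUNTS, REPORTED_RATIOS):
        computed = round(optimisation_ratio(polygons), 1)
        rows.append((polygons, reported, computed))
    return rows


if __name__ == "__main__":
    for polygons, reported, computed in compare():
        print(f"{polygons:>10,d}  reported {reported:5.1f}%  computed {computed:5.1f}%")
```

Under this assumed baseline, every computed value lands within about half a percentage point of the reported one, which suggests (but does not confirm) that the ratio is a simple polygon reduction percentage.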

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, K.; Curthoys, I.; MacDougall, H.; Clark, J.R.; Mukherjee, P. Human Middle Ear Anatomy Based on Micro-Computed Tomography and Reconstruction: An Immersive Virtual Reality Development. Osteology 2023, 3, 61-70. https://doi.org/10.3390/osteology3020007
