Abstract

This paper presents a novel approach to synthesizing phased antenna arrays (PAAs) by combining Taylor-series expansion with neural networks (NNs), enhancing the PAA synthesis process for modern communication and radar systems. Synthesizing PAAs is crucial for these systems, offering versatile beamforming capabilities. Traditional methods often rely on complex analytical formulations or numerical optimizations, leading to suboptimal solutions or high computational costs. The proposed method uses Taylor-series expansion to derive analytical expressions for PAA radiation patterns and beamforming characteristics, simplifying the optimization process. Additionally, neural networks are employed to model the intricate relationships between PAA parameters and desired performance metrics, providing adaptive learning and real-time adjustments. The proposed method is validated on a dual-band 5G antenna, which exhibits marked resonances at 28.14 GHz and 37.88 GHz, with reflection coefficients of S11 = −19 dB and S11 = −19.33 dB, respectively. The integration of Taylor expansion with NNs offers improved efficiency, reduced computational complexity, and the ability to explore a broader design space. Simulation results and case studies demonstrate the effectiveness and applicability of the approach in practical scenarios. This work represents a significant advancement in PAA synthesis, showcasing the synergistic integration of mathematical modeling and artificial intelligence for optimized antenna design in modern communication and radar systems.

1. Introduction

The integration of the Taylor method with neural networks in antenna array design signifies a sophisticated synergy between mathematical modeling and computational learning, yielding unprecedented levels of precision and adaptability. Unlike Chebyshev- or Fourier-based designs, Taylor excitation offers a balance between analytical simplicity and control over sidelobe levels, making it well suited to integration with learning-based optimization [1].

At its core, the Taylor method, founded on mathematical principles, provides engineers with a robust framework for approximating complex functions through series expansions. Within the context of antenna arrays, this method enables the manipulation of radiation patterns by fine-tuning polynomial coefficients, affording precise control over crucial parameters like beamwidth, sidelobe levels, and null steering [2,3]. Complementing this, neural networks use their learning capabilities to optimize these coefficients based on desired radiation patterns [4,5]. During training, the network iteratively adjusts the Taylor-series coefficients to minimize the discrepancy between the predicted and desired radiation patterns. This collaborative optimization process enables antenna arrays to achieve high performance across many applications. For instance, in beamforming, this integrated approach facilitates the precise steering of radiation beams towards specific directions while mitigating interference from undesired sources, enhancing signal quality and coverage. Furthermore, it enables the customization of radiation patterns to suit diverse application requirements, such as minimizing sidelobes for radar systems or optimizing coverage patterns for wireless communication networks [6,7]. Unlike previous methods that treat analytical and machine learning techniques separately, this work introduces a hybrid synthesis framework where Taylor series-based excitation is used to guide and initialize a neural network model, resulting in enhanced optimization and interpretability. Additionally, in environments prone to interference, adaptive antenna arrays equipped with neural networks can dynamically adjust radiation patterns in real time to mitigate interference sources, ensuring robust performance even in challenging operating conditions. Overall, the integration of the Taylor method with neural networks improves the performance, adaptability, and versatility of antenna arrays in wireless communication, radar systems, and beyond.

The applications of integrating the Taylor method with neural networks in antenna design are extensive and span various domains:

- Wireless communication and mobile networks: In cellular networks and wireless communication systems, optimizing antenna radiation patterns is crucial to maximizing coverage, capacity, and signal quality. Integrating the Taylor method with neural networks enables the dynamic adaptation of radiation patterns to varying channel conditions, ensuring optimal network performance [8,9].

- Radars: In radar systems, the precision and reliability of radar data largely depend on the antenna’s ability to form directional beams and suppress unwanted echoes [10,11,12]. The joint use of the Taylor method and neural networks optimizes radiation patterns for precise target detection and localization, even in noisy environments.

- Remote sensing and surveillance: In applications such as environmental monitoring, natural resource management, and traffic surveillance, antennas must detect and track objects accurately over large areas [13,14]. Integrating the Taylor method with neural networks enables the design of adaptive antennas capable of meeting the specific needs of each surveillance application.

- Smart antenna systems: Smart antenna systems, which use adaptive algorithms to optimize antenna performance based on channel conditions, greatly benefit from integrating the Taylor method with neural networks. These systems can dynamically adapt to changes in the RF environment, ensuring reliable connectivity and high-quality service delivery in various scenarios [15,16,17].

The integration of the Taylor method with neural networks opens up new perspectives in antenna design, offering optimized performance and increased adaptability across a wide range of applications, from wireless communication networks to radar systems to environmental surveillance.

Table 1 compares different beamforming methods for antenna arrays, each with specific advantages and disadvantages. The Taylor method adjusts the element excitation amplitudes using Taylor coefficients, offering easy implementation but requiring prior knowledge of the spatial signal distribution [18]. The Dolph–Chebyshev method [19] minimizes amplitude error through Chebyshev polynomials, achieving high sidelobe attenuation, though its complexity increases in certain antenna configurations. Particle swarm optimization (PSO) [20] adjusts parameters by simulating the collective behavior of animals, ensuring quick convergence, but its effectiveness depends on the initial setup. Genetic algorithms (GAs) [21] optimize parameters by simulating natural selection mechanisms, making them well suited to complex designs, although they require significant computation times. Therefore, the choice of method depends on performance goals and implementation constraints. The hybrid integration strategy proposed in this work provides a structured design pipeline that not only accelerates convergence but also enhances the physical interpretability of the learned model, distinguishing it from purely data-driven or purely analytical approaches.

Table 1.

Beamforming methods comparison.

2. Modern Radar and Phased Antenna Arrays

Phased-array radars use antenna arrays that can be electronically controlled to steer the radar beam without mechanical movement, enabling fast scanning and efficient target detection and tracking. The array factor ($AF$) plays a crucial role in beamforming and is defined as the sum of contributions from each antenna element in the array, where each element is fed with a phase-adjusted signal [22,23]. For a linear array of $N$ elements with spacing $d$, the array factor is given by the following:

$$AF(\theta) = \sum_{n=0}^{N-1} e^{j\left(n k d \cos\theta + \beta_n\right)}$$

where $k = 2\pi/\lambda$ is the wave number, $\lambda$ is the wavelength of the radar signal, $\theta$ is the observation angle (measured from the array axis), and $\beta_n$ is the phase shift applied to each antenna element. In the case of a linear phase shift with an angle, $\theta_0$, of the main beam direction, each element receives a phase, $\beta_n = -n k d \cos\theta_0$, where $\theta_0$ is the direction of the main beam. This allows the beam to be steered towards $\theta_0$ without requiring the physical movement of the antennas [24,25].

The array factor is often expressed in a closed form as follows:

$$AF(\theta) = \frac{1 - e^{jN\psi}}{1 - e^{j\psi}}$$

where $\psi = k d\,(\cos\theta - \cos\theta_0)$. By manipulating this equation, the array factor can also be expressed in a trigonometric form:

$$AF(\theta) = e^{j(N-1)\psi/2}\,\frac{\sin\left(N\psi/2\right)}{\sin\left(\psi/2\right)}$$

This formulation shows that the main beam is directed toward $\theta_0$, and the radiation pattern, including the main lobe width and side lobes, depends on the number of elements, $N$, the element spacing, $d$, and the phase shift, $\beta_n$. The angular resolution of the radar, which defines the ability to distinguish closely spaced targets, is determined by the width of the main lobe at −3 dB, calculated as follows:

$$\Delta\theta_{3\,\mathrm{dB}} \approx \frac{0.886\,\lambda}{N d \sin\theta_0}$$

Thus, increasing the number of elements in the array results in a narrower beam and better angular resolution, but it also requires optimal element spacing to avoid the formation of grating lobes. If the element spacing, $d$, exceeds $\lambda/2$, unwanted side lobes, or grating lobes, may appear, leading to detection errors. To mitigate these effects, windowing functions such as Taylor, Chebyshev, or Hamming windows are applied to reduce side lobes, optimizing radar performance without significantly increasing the main beam width [26,27]. Among these, Taylor-series expansion is favored in this work due to its analytical tractability and ability to produce physically interpretable excitation profiles with controlled sidelobe behavior. Unlike Chebyshev- or Fourier-based designs, Taylor excitation enables the smooth tapering of the amplitude distribution, which integrates naturally with neural network optimization and facilitates convergence. This balance between simplicity, control, and compatibility with data-driven tuning makes Taylor series a strong candidate for hybrid synthesis frameworks.
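The array-factor expressions in this section can be checked numerically. The following is a minimal Python sketch, not code from this work: it assumes the observation angle is measured from the array axis (so 90° is broadside), unit-amplitude elements, and illustrative values ($N = 16$, $d = \lambda/2$, steering to 60°). The function names are hypothetical.

```python
import numpy as np

def array_factor(theta, n_elements, d_over_lambda, theta0):
    """Direct-sum array factor of a uniform linear array.

    theta and theta0 are in radians, measured from the array axis
    (an assumed convention for this sketch).
    """
    psi = 2 * np.pi * d_over_lambda * (np.cos(theta) - np.cos(theta0))
    n = np.arange(n_elements)
    # Sum exp(j*n*psi) over the elements for every observation angle.
    return np.exp(1j * np.outer(psi, n)).sum(axis=1)

def array_factor_closed(theta, n_elements, d_over_lambda, theta0):
    """Closed-form magnitude |sin(N psi/2) / sin(psi/2)|, with the psi -> 0 limit set to N."""
    psi = 2 * np.pi * d_over_lambda * (np.cos(theta) - np.cos(theta0))
    num = np.sin(n_elements * psi / 2)
    den = np.sin(psi / 2)
    safe = np.abs(den) > 1e-12
    out = np.full(psi.shape, float(n_elements))
    out[safe] = np.abs(num[safe] / den[safe])
    return out

# Illustrative values (assumptions, not taken from the paper's simulations).
theta = np.radians(np.linspace(0.0, 180.0, 721))
N, d, steer = 16, 0.5, np.radians(60.0)

af = np.abs(array_factor(theta, N, d, steer))
af_closed = array_factor_closed(theta, N, d, steer)
peak_deg = np.degrees(theta[np.argmax(af)])
print(f"peak at {peak_deg:.2f} deg, max |AF| = {af.max():.2f}")
```

The direct sum and the closed form agree to numerical precision, and the peak sits at the steering angle, which is the behavior the phase progression is meant to produce.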

Phased-array radars enable electronic beam steering, where the beam can be directed in any direction by electronically adjusting the phase of each array element. This allows for faster scanning compared to traditional mechanical antenna radars, as well as real-time adaptability to track multiple targets simultaneously, which is essential for modern radar systems used in defense, airborne surveillance, weather radars, and autonomous vehicles. By adjusting the phases of the array elements, the radar can track multiple targets, perform frequency hopping across different angles, and provide real-time information on the position and velocity of targets [28].

Phased array radars are used in critical applications where speed and accuracy are essential [29,30,31]. For example, in missile defense, phased array radars such as the AN/SPY-1 enable the real-time detection and tracking of missiles. Similarly, in airborne radar systems, AESA (active electronically scanned array) radars provide comprehensive surveillance of airspace, enabling precise flight path control and the better detection of stealth targets. In meteorology, these radars allow for the detailed monitoring of weather phenomena, such as storms and precipitation, with high spatial resolution and the ability to scan large areas quickly.

Phased-array radars offer advanced capabilities in electronic beam steering and multi-target detection, enabling them to be used in a wide range of complex applications. The precise control of the beam, enabled by the array factor, and the ability to adapt to dynamic tracking needs make phased array radars an essential technology in modern defense, airborne surveillance, and intelligent transportation systems.

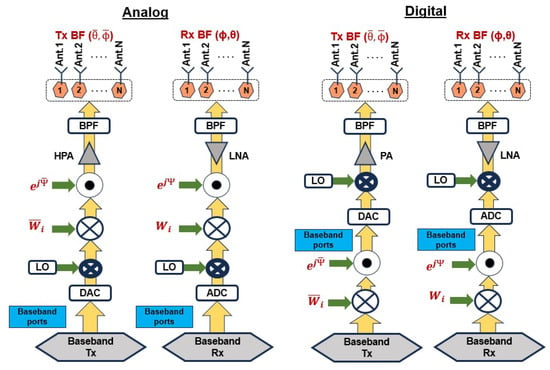

Figure 1 illustrates the structure of a smart antenna, which consists of an array of antennas, including both analog and digital low noise amplifiers (LNAs), a high-power amplifier (HPA), a power amplifier (PA), an analog-to-digital converter (ADC), a digital-to-analog converter (DAC), a local oscillator (LO) for baseband transmission (Tx) and reception (Rx), and the weights for antenna array synthesis. The antenna array synthesis involves adjusting the weights applied to each antenna element to optimize the overall radiation pattern and performance of the system for various communication or sensing applications. The structure is based on the architecture proposed by Prof. A. Manikas [32].

Figure 1.

General arrangement of a linear antenna array [32].

3. The Taylor Method

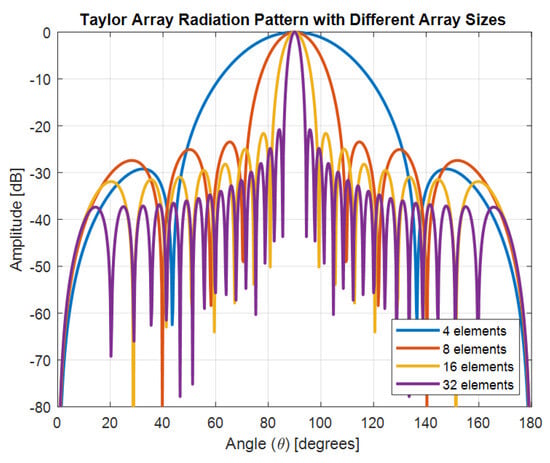

The simulated antenna consists of 16 identical microstrip patch elements arranged in a uniform linear array with inter-element spacing $d$. This structure serves as the physical basis for the optimization framework and simulation results presented in Figures 2–5. The Taylor method is a classical technique used in antenna array design to control how energy is distributed across the radiation pattern. It enables designers to fine-tune parameters such as beamwidth and sidelobe level by adjusting excitation amplitudes across the array elements using a mathematical series expansion. When a precise expression of the electromagnetic field is not easily available, Taylor expansion allows engineers to locally approximate the field distribution and apply optimized amplitude weights to shape the desired beam [25,33,34,35].

Figure 2.

Synthesis of antenna weights for different lobe widths and array sizes.

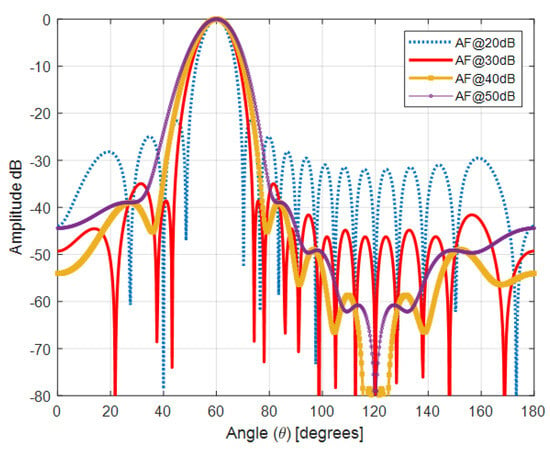

Figure 3.

Taylor antenna array with Kaiser window at 60°.

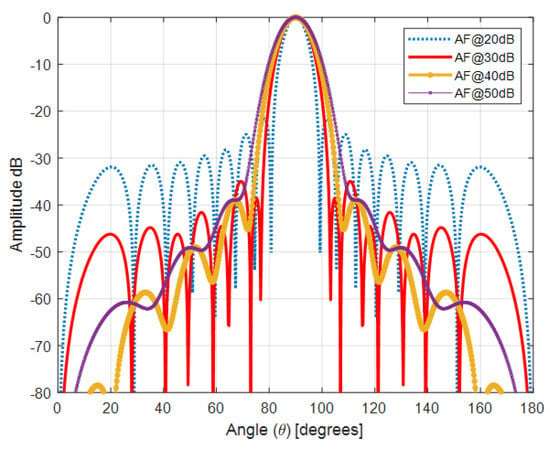

Figure 4.

Taylor antenna array with Kaiser window at 90°.

Figure 5.

Taylor antenna array with Kaiser window at 120°.

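Using spherical coordinates $(r, \theta, \phi)$, where $r$ represents the radial distance from the observation point, $\theta$ represents the azimuthal angle, and $\phi$ represents the elevation angle, the Taylor method can be extended to approximate the spatial distribution of the electromagnetic field as follows [36,37]:

$$E(r,\theta,\phi) \approx E(r_0,\theta_0,\phi_0) + \frac{\partial E}{\partial r}\,(r - r_0) + \frac{\partial E}{\partial \theta}\,(\theta - \theta_0) + \frac{\partial E}{\partial \phi}\,(\phi - \phi_0) + \cdots$$

In this expression, the following applies:

- $E(r,\theta,\phi)$ represents the spatial distribution of the electromagnetic field of the antenna array.

- $(r_0,\theta_0,\phi_0)$ is the reference point around which the Taylor series is developed.

- $\partial E/\partial r$, $\partial E/\partial \theta$, and $\partial E/\partial \phi$ are the partial derivatives of $E$ with respect to $r$, $\theta$, and $\phi$, respectively, evaluated at the reference point $(r_0,\theta_0,\phi_0)$.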

In antenna design, the Taylor method sets the amplitude taper of the elements within an antenna array, often characterized by a single parameter such as the main lobe width. This taper, when applied to the array elements, directly shapes the array factor, the function describing each element’s contribution to the overall radiation pattern, and therefore the complete radiation pattern of the array [38,39,40]. By adjusting the parameters of the Taylor method, for example the main lobe width, one can control and optimize the radiation pattern to meet specific requirements. Algorithm 1 outlines the steps to synthesize a Taylor–Kaiser antenna array. It starts by choosing the appropriate window and calculating coefficients based on the given specifications. Then, for each antenna element, it calculates the weight and position. If the performance is satisfactory, it finalizes the design; otherwise, it adjusts the parameters to improve performance.

Algorithm 1. Synthesize Taylor–Kaiser antenna array.
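The loop that Algorithm 1 describes (choose a window, compute its coefficients, assign a weight and position to each element, check the performance, adjust) can be sketched in Python as follows. This is only an illustrative sketch under stated assumptions, not the authors' implementation: it uses NumPy's `numpy.kaiser` window, takes the peak sidelobe level as the only performance criterion, and adjusts the Kaiser parameter with a simple fixed-step search. The function names, step size, and the 30 dB target are hypothetical.

```python
import numpy as np

def peak_sidelobe_db(weights, n_points=4096):
    """Peak sidelobe level (dB relative to the main-lobe peak) of a real taper."""
    psi = np.linspace(0.0, np.pi, n_points)       # electrical angle; |AF| is even in psi
    n = np.arange(len(weights))
    af = np.abs(np.exp(1j * np.outer(psi, n)) @ weights)
    gain_db = 20 * np.log10(np.maximum(af / af.max(), 1e-12))
    rising = np.where(np.diff(gain_db) > 0)[0]    # first rise marks the first null
    if rising.size == 0:
        return -np.inf                            # no sidelobe inside the sampled range
    return gain_db[rising[0]:].max()

def synthesize_kaiser_array(n_elements, sll_target_db, d_over_lambda=0.5,
                            beta_step=0.05, beta_max=20.0):
    """Increase the Kaiser parameter until the sidelobe target is met."""
    beta = 0.0                                    # beta = 0 is the uniform (rectangular) taper
    while beta <= beta_max:
        weights = np.kaiser(n_elements, beta)     # step 1: window coefficients
        weights = weights / weights.sum()         # normalise so the broadside sum is 1
        sll = peak_sidelobe_db(weights)           # step 3: evaluate performance
        if sll <= -abs(sll_target_db):
            # step 2: element positions in wavelengths, centred on the array midpoint
            positions = (np.arange(n_elements) - (n_elements - 1) / 2) * d_over_lambda
            return weights, positions, beta, sll
        beta += beta_step                         # step 4: adjust and try again
    raise ValueError("sidelobe target not reached within beta_max")

weights, positions, beta, sll = synthesize_kaiser_array(16, 30.0)
print(f"beta = {beta:.2f}, peak sidelobe = {sll:.1f} dB")
```

A fixed-step search is used here only because it mirrors the "adjust and re-check" structure of the algorithm; a closed-form estimate of the Kaiser parameter or a bisection search would reach the same point faster.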

This Taylor series can be truncated after the first order if a linear approximation is sufficient for the spatial distribution of the electromagnetic field around the reference point.

The amplitude distribution for the Taylor one-parameter distribution is given by the following:

where the following applies:

- $a_n$ is the amplitude weighting coefficient for the n-th element of the array.

- $J_0$ is the zeroth-order Bessel function of the first kind.

- $B$ is the design parameter that controls the characteristics of the radiation pattern.

- $u$ is the normalized distance between array elements, where

  - $d$ is the spacing between elements;

  - $\lambda$ is the wavelength;

  - $\theta$ is the angle of radiation.

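The Bessel function of the first kind of order zero, $J_0(x)$, is defined by the infinite series:

$$J_0(x) = \sum_{m=0}^{\infty} \frac{(-1)^m}{(m!)^2}\left(\frac{x}{2}\right)^{2m}$$

It satisfies the recurrence relation:

$$J_{\nu-1}(x) + J_{\nu+1}(x) = \frac{2\nu}{x}\,J_{\nu}(x)$$

and its asymptotic behavior for large $x$ is approximated by

$$J_0(x) \approx \sqrt{\frac{2}{\pi x}}\,\cos\left(x - \frac{\pi}{4}\right)$$

This function frequently appears in applications such as wave propagation in cylindrical waveguides, heat conduction in cylindrical coordinates, and vibration analysis in cylindrical structures.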
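As a quick numerical check of the series definition and the large-argument approximation of the zeroth-order Bessel function, the sketch below evaluates both in plain Python. The function names and the number of series terms are illustrative choices, not part of the original work.

```python
import math

def bessel_j0_series(x, terms=40):
    """Truncated power series for the zeroth-order Bessel function of the first kind."""
    total = 0.0
    for m in range(terms):
        total += (-1) ** m / math.factorial(m) ** 2 * (x / 2) ** (2 * m)
    return total

def bessel_j0_asymptotic(x):
    """Large-argument approximation sqrt(2 / (pi x)) * cos(x - pi / 4)."""
    return math.sqrt(2 / (math.pi * x)) * math.cos(x - math.pi / 4)

for x in (0.0, 2.404825557695773, 10.0):
    print(f"x = {x:.4f}: series = {bessel_j0_series(x):+.6f}")

# Gap between the series and the asymptotic form at a moderately large argument.
gap = abs(bessel_j0_series(10.0) - bessel_j0_asymptotic(10.0))
print(f"|series - asymptotic| at x = 10: {gap:.1e}")
```

The second test point is the first zero of the function, so the series should return a value very close to zero there; the asymptotic form is only meaningful for large arguments and is not evaluated at the origin.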

3.1. Synthesizing Antenna Arrays Using Taylor One-Parameter Distribution

Synthesizing antenna arrays using the Taylor one-parameter distribution involves defining requirements such as radiation pattern and beamwidth, selecting array geometry and element patterns, applying the Taylor distribution to determine element amplitude tapering, calculating the array factor to describe pattern formation, optimizing parameters through iterative refinement, and validating the design via simulation or testing. This process enables the creation of antenna arrays tailored to specific needs, whether for beamforming, direction finding, or other applications [41,42,43,44].

3.1.1. Define Radiation Pattern Requirements

In the first step of antenna synthesis, it is essential to define how the antenna should radiate. This includes specifying the width of the main beam (which sets the angular coverage and directionality), the acceptable sidelobe level (the radiation in directions other than the main lobe, where lower levels mean better rejection of unwanted radiation), and the placement of nulls (to suppress interference or noise arriving from known directions). These criteria guide the selection of design parameters, such as $B$ in the Taylor one-parameter distribution, ensuring that the final design meets application-specific performance needs, such as high resolution in radar or reduced interference in wireless systems [45,46].

- Determine the desired characteristics of the radiation pattern, such as the side lobe level, the main lobe width, and null placement.

- These requirements will guide the selection of the design parameter $B$.

3.1.2. Select Design Parameter

The design parameter $B$ in antenna array synthesis using the Taylor one-parameter distribution governs the main lobe width (beamwidth), which is crucial for controlling the radiation pattern’s directivity and sidelobe levels.

- The design parameter $B$ determines the overall shape of the amplitude distribution.

- Choose $B$ based on the desired trade-off between the side lobe level and the main lobe width.

- Higher values of $B$ result in lower side lobes but wider main lobes, and vice versa.

3.1.3. Calculate Amplitude Weights

The amplitude weights for an antenna array using the Taylor one-parameter distribution are calculated as follows:

- Use the amplitude distribution formula to calculate the amplitude weighting coefficients $a_n$ for each element of the array.

- The normalized distance depends on the array geometry and the angle of radiation.

3.1.4. Apply Amplitude Weights

The amplitude weights are applied to the elements of an antenna array by multiplying each element’s magnitude by its corresponding weight. The weighted amplitude of the nth element is calculated as follows:

- Apply the calculated amplitude weighting coefficients as the weights for the elements of the antenna array.

- These weights determine the relative strength of each element and thus shape the radiation pattern.
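The four steps above (requirements, design parameter, weights, application) can be illustrated with a short Python sketch. It relies on the textbook form of Taylor's one-parameter line-source taper, $I_0\big(\pi B\sqrt{1-(2x/L)^2}\big)$, where $I_0(x) = J_0(jx)$ is the modified Bessel function, together with the textbook relation $R = 4.603\,\sinh(\pi B)/(\pi B)$ between the design sidelobe ratio $R$ and $B$. Sampling the continuous taper at the element centers, the function names, and the example values are assumptions of this sketch; it is not necessarily the exact expression used in this work.

```python
import numpy as np

def taylor_b_from_sidelobe(sll_db):
    """Solve R = 4.603 * sinh(pi B) / (pi B) for B by bisection (R as a linear voltage ratio)."""
    target = 10 ** (abs(sll_db) / 20)
    if target <= 4.603:
        raise ValueError("design sidelobe level must exceed about 13.26 dB")

    def residual(b):
        return 4.603 * np.sinh(np.pi * b) / (np.pi * b) - target

    lo, hi = 1e-6, 5.0                      # residual(lo) < 0 < residual(hi)
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def taylor_one_parameter_weights(n_elements, sll_db):
    """Sample the one-parameter taper at the element centres of an N-element array."""
    b = taylor_b_from_sidelobe(sll_db)
    # Normalised positions 2x/L for an aperture of length L = N d (assumed sampling).
    x = (2 * np.arange(n_elements) - (n_elements - 1)) / n_elements
    w = np.i0(np.pi * b * np.sqrt(1 - x ** 2))
    return w / w.max(), b

# Steps 1-3: 20 dB design sidelobe level, 16 elements (illustrative values).
weights, b = taylor_one_parameter_weights(16, 20.0)

# Step 4: apply the amplitude weights on top of a steering phase (d = lambda/2, 60 degrees).
steer = np.exp(-1j * np.pi * np.arange(16) * np.cos(np.radians(60.0)))
excitations = weights * steer
print(f"B = {b:.4f}, edge/centre amplitude = {weights[0]:.3f}")
```

Because the continuous taper is sampled at a finite number of points, the sidelobe level actually achieved by a small array can differ from the design value, which is why the synthesis flow includes a verification step.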

3.2. Example 1

Figure 2 illustrates the synthesis of antenna weights for varying lobe widths and array sizes, demonstrating how these factors influence the radiation pattern. By adjusting the antenna weights, it is possible to control the main lobe’s width, affecting both angular resolution and directivity. Additionally, increasing the array size sharpens the beam, reduces side lobes, and enhances gain in the primary direction. This optimization process aims to shape the radiation pattern to minimize interference and maximize system performance.

In Example 1, the radiation patterns of antenna arrays are generated and analyzed using the Taylor method for different array sizes (4, 8, 16, and 32 elements). The taylor1p function is used to calculate the weights of the array, considering a side-lobe level of 20 dB and a half-wavelength element spacing. The gain1d function then computes the array gain at 720 angles. The radiation patterns (gain versus angle) are plotted for each array configuration, with thickened curves for better visibility using the LineWidth property. Additionally, the weights are converted into magnitude and phase values, which are displayed in degrees. The results are presented both numerically (magnitude and phase) and graphically in dB, showcasing how increasing the number of array elements reduces sidelobes and narrows the main lobe. This demonstrates the impact of Taylor’s array synthesis method on antenna performance.
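A rough Python analogue of the workflow in Example 1 is sketched below. It is not a port of the `taylor1p` and `gain1d` functions named in the text: the helper names are hypothetical, the weights come from sampling the textbook one-parameter taper with the tabulated constant $B \approx 0.7386$ for a 20 dB design sidelobe level, and the pattern is simply normalized to its peak. Half-wavelength spacing and 720 angles follow the description above.

```python
import numpy as np

B_20DB = 0.7386  # tabulated one-parameter constant for a 20 dB design sidelobe level

def taylor_weights(n_elements, b=B_20DB):
    """Sampled one-parameter taper (assumed sampling at element centres, L = N d)."""
    x = (2 * np.arange(n_elements) - (n_elements - 1)) / n_elements
    w = np.i0(np.pi * b * np.sqrt(1 - x ** 2))
    return w / w.sum()

def gain_db(weights, n_angles=720, d_over_lambda=0.5):
    """Normalised pattern in dB over angles 0..180 degrees measured from the array axis."""
    phi = np.linspace(0.0, np.pi, n_angles)
    psi = 2 * np.pi * d_over_lambda * np.cos(phi)
    n = np.arange(len(weights)) - (len(weights) - 1) / 2
    af = np.abs(np.exp(1j * np.outer(psi, n)) @ weights)
    return np.degrees(phi), 20 * np.log10(np.maximum(af / af.max(), 1e-12))

def beamwidth_3db(phi_deg, g_db):
    """Width of the main lobe between the first samples at or below -3 dB on either side."""
    left = right = int(np.argmax(g_db))
    while left > 0 and g_db[left] > -3.0:
        left -= 1
    while right < len(g_db) - 1 and g_db[right] > -3.0:
        right += 1
    return phi_deg[right] - phi_deg[left]

widths = {}
for n_elements in (4, 8, 16, 32):
    w = taylor_weights(n_elements)
    phi_deg, g_db = gain_db(w)
    widths[n_elements] = beamwidth_3db(phi_deg, g_db)
    # The weights are real and positive, so their phase is 0 degrees at broadside.
    print(f"N = {n_elements:2d}: -3 dB width ~ {widths[n_elements]:.1f} deg, "
          f"edge/centre = {w[0] / w.max():.3f}")
```

The printed widths shrink as the array grows, which is the main-lobe narrowing that Example 1 describes; plotting `g_db` against `phi_deg` for each size would reproduce the style of comparison shown in Figure 2.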

3.3. Example 2

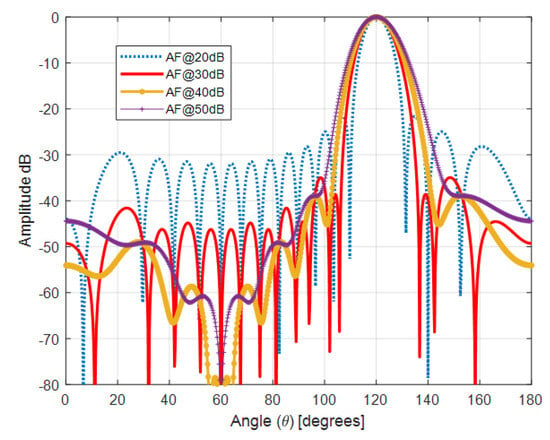

To achieve the specified side lobe levels (−20 dB, −30 dB, −40 dB, and −50 dB), amplitude tapering among the elements is optimized using Taylor distributions. This optimization ensures that the radiation pattern meets the desired sidelobe levels. The main lobe width can be adjusted accordingly during the optimization process to meet any specific requirements. Finally, the array factor representing the radiation pattern is computed, followed by simulation and iterative optimization using MATLAB R2023a until meeting the specified design criteria [47].

Figure 3 shows the Taylor antenna array with a Kaiser window for array size $N$ and inter-element spacing $d$. This configuration operates at an angle of 60°, demonstrating the radiation pattern at this specific angle. Figure 4 presents a similar Taylor antenna array with the same parameters ($N$, $d$), but the array operates at 90°. Finally, Figure 5 illustrates the Taylor antenna array with a Kaiser window under the same conditions, but operating at 120°. These figures highlight how the antenna’s radiation pattern varies with different operating angles while maintaining the same array size and element spacing.

4. Validation Using CST Microwave Studio for 5G Antenna Arrays

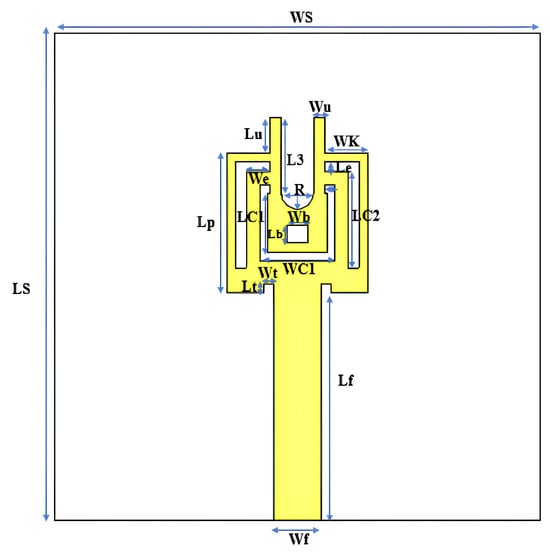

4.1. Antenna Design

The basic structure envisaged for validation is a dual-band rectangular microstrip patch antenna fabricated on a Rogers RT4003C substrate. It exhibits two resonances at 28.14 GHz and 37.88 GHz, tailored for 5G applications. The substrate has a thickness of 0.41 mm, a relative permittivity $\varepsilon_r$, and a low loss tangent of 0.0027. The patch is fed via a 50 Ω microstrip line to ensure proper impedance matching. Dual-band operation is achieved through slot insertion and careful tuning of the patch geometry. All geometric parameters, including length, width, slot configuration, and feed position, were first derived analytically and then refined through full-wave CST simulations.

Millimeter-wave (mm-wave) frequency bands have attracted considerable attention in wireless communications, particularly for 5G networks, due to their wide spectral bandwidth and ability to support exceptionally high data rates. To harness these frequency bands effectively, a meticulous antenna design process is essential, with the selection of substrate material playing a pivotal role. Parameters such as thickness, relative permittivity, and loss tangent directly impact the antenna’s electromagnetic performance, influencing radiation efficiency, impedance matching, and dielectric losses. In this section, we will design and simulate antenna arrays to validate our method using CST Microwave Studio.

We also present the design and optimization of a dual-band antenna operating at the critical 5G frequencies of 28 GHz and 38 GHz, specifically tailored to next-generation communication systems. The proposed antenna is fabricated using the Rogers RT4003C substrate, selected for its excellent electromagnetic properties, which are characterized by the following:

- Thickness: 0.41 mm.

- Relative permittivity: $\varepsilon_r$.

- Loss tangent: $\tan\delta = 0.0027$.

The antenna is fed via a 50 Ω microstrip transmission line to ensure optimal impedance matching with high-frequency circuitry. Its structural design features a rectangular patch geometry with a radiating element of width $W$ and length $L$, carefully determined using classical analytical models for microstrip patch antennas. The slot dimensions were empirically adjusted to support the dual-band response and ensure proper impedance matching.

The geometric parameters were optimized to minimize reflection losses, enhance radiation efficiency, and meet the stringent requirements of mm-wave communications. The final antenna dimensions are summarized in Figure 6 and Table 2, illustrating the necessary design refinements for stable operation at the target frequencies.

Figure 6.

Geometry of the studied antenna.

Table 2.

Geometric parameters of the antenna.

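The patch width $W$ is calculated using the following equation:

$$W = \frac{c}{2 f_r}\sqrt{\frac{2}{\varepsilon_r + 1}}$$

The central frequency, $f_r$, and the effective permittivity, $\varepsilon_{\mathrm{eff}}$, are crucial in determining the microstrip patch antenna dimensions. The effective permittivity, accounting for the dielectric substrate’s influence, is given by the following:

$$\varepsilon_{\mathrm{eff}} = \frac{\varepsilon_r + 1}{2} + \frac{\varepsilon_r - 1}{2}\left(1 + 12\,\frac{h}{W}\right)^{-1/2}$$

where $h$ is the substrate thickness.

The extended incremental length, $\Delta L$, caused by fringing fields, is approximated as follows:

$$\Delta L = 0.412\,h\,\frac{\left(\varepsilon_{\mathrm{eff}} + 0.3\right)\left(\dfrac{W}{h} + 0.264\right)}{\left(\varepsilon_{\mathrm{eff}} - 0.258\right)\left(\dfrac{W}{h} + 0.8\right)}$$

The effective length is calculated as follows:

$$L_{\mathrm{eff}} = \frac{c}{2 f_r \sqrt{\varepsilon_{\mathrm{eff}}}}$$

Finally, the actual physical length $L$, accounting for fringing effects, is determined by the following:

$$L = L_{\mathrm{eff}} - 2\,\Delta L$$

where $c$ is the speed of light in free space ($3 \times 10^{8}$ m/s), and $f_r$ is the resonant frequency.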

This detailed design methodology ensures that the proposed antenna meets the stringent performance requirements of 5G millimeter-wave communications while achieving optimal impedance matching and enhanced radiation efficiency. Equations (10)–(15) present standard design formulas for microstrip patch antennas. They are included here for completeness and clarity and are derived from the classical reference [25].
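The design formulas referred to above are the classical transmission-line-model expressions for a rectangular patch, and they can be evaluated in a few lines of Python. The sketch below is illustrative only: the function name is hypothetical, and the relative permittivity passed in the usage example (3.55) is an assumed placeholder, not a value taken from this work. The resulting dimensions are an analytical starting point that would still be refined by full-wave simulation, and they ignore the slots used for dual-band operation.

```python
import math

C0 = 3.0e8  # speed of light in free space, m/s

def patch_dimensions(f_r_hz, eps_r, h_m):
    """Classical transmission-line-model estimates for a rectangular microstrip patch."""
    width = C0 / (2 * f_r_hz) * math.sqrt(2 / (eps_r + 1))
    eps_eff = (eps_r + 1) / 2 + (eps_r - 1) / 2 * (1 + 12 * h_m / width) ** -0.5
    ratio = width / h_m
    delta_l = 0.412 * h_m * ((eps_eff + 0.3) * (ratio + 0.264)) / (
        (eps_eff - 0.258) * (ratio + 0.8))
    l_eff = C0 / (2 * f_r_hz * math.sqrt(eps_eff))
    length = l_eff - 2 * delta_l          # physical length after removing fringing extension
    return {"W": width, "eps_eff": eps_eff, "delta_L": delta_l,
            "L_eff": l_eff, "L": length}

# Usage: lower resonance and substrate thickness from the text; eps_r = 3.55 is an ASSUMED value.
EPS_R_ASSUMED = 3.55
dims = patch_dimensions(f_r_hz=28.14e9, eps_r=EPS_R_ASSUMED, h_m=0.41e-3)
for name in ("W", "delta_L", "L_eff", "L"):
    print(f"{name} = {dims[name] * 1e3:.3f} mm")
print(f"eps_eff = {dims['eps_eff']:.3f}")
```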

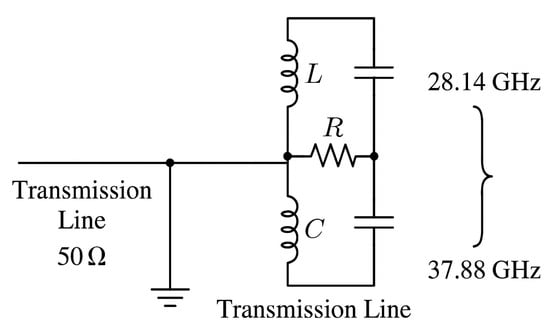

To enhance the analytical understanding of the antenna’s dual-band behavior, an equivalent circuit model (ECM) is proposed, as shown in Figure 7. The ECM consists of two parallel RLC resonators, each representing one of the antenna’s resonant frequencies at 28.14 GHz and 37.88 GHz. These branches are connected to a 50 Ω transmission line that models the microstrip feed. Each RLC cell captures the resonant characteristics, impedance response, and loss mechanisms of its respective frequency band. This circuit-level abstraction provides an analytical perspective on the impedance matching and coupling effects observed in the electromagnetic simulations and can serve as a useful tool for future parametric tuning and rapid prototyping. Although a detailed parameter extraction is outside the scope of this work, the conceptual ECM reinforces the physical interpretation of the antenna design. Such equivalent circuit models have been widely adopted in the literature to interpret the impedance behavior and mode separation in dual-band or multi-band antennas, especially in the millimeter-wave range [48,49].
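To make the equivalent-circuit idea concrete, the following Python sketch builds two parallel RLC tanks tuned to the two resonances and evaluates the resulting reflection coefficient against a 50 Ω reference. Everything beyond the two resonant frequencies is an assumption made for illustration: the tanks are taken to be connected in series with each other, and the resistance (50 Ω) and quality factor (20) are placeholder values, since no parameter extraction is reported. The function names are hypothetical.

```python
import numpy as np

Z0 = 50.0  # reference impedance of the feed line, ohms

def tank_from_resonance(f0_hz, r_ohm, q):
    """Pick L and C of a parallel RLC tank from its resonance, resistance, and Q (Q = w0 R C)."""
    w0 = 2 * np.pi * f0_hz
    c = q / (w0 * r_ohm)
    l = 1.0 / (w0 ** 2 * c)
    return r_ohm, l, c

def tank_impedance(f_hz, r_ohm, l, c):
    """Impedance of a parallel RLC tank at frequency f."""
    w = 2 * np.pi * np.asarray(f_hz, dtype=float)
    y = 1.0 / r_ohm + 1j * w * c + 1.0 / (1j * w * l)
    return 1.0 / y

def s11_db(f_hz, tanks):
    """|S11| in dB for tanks connected in series (assumed topology) and fed from Z0."""
    z_in = sum(tank_impedance(f_hz, *t) for t in tanks)
    gamma = (z_in - Z0) / (z_in + Z0)
    return 20 * np.log10(np.abs(gamma))

# Placeholder element values: R = 50 ohm and Q = 20 are ASSUMED, not extracted from the antenna.
tanks = [tank_from_resonance(28.14e9, 50.0, 20.0),
         tank_from_resonance(37.88e9, 50.0, 20.0)]

for f in (28.14e9, 33.0e9, 37.88e9):
    print(f"{f / 1e9:6.2f} GHz: |S11| = {float(s11_db(f, tanks)):6.1f} dB")
```

With these placeholder values the model shows two matched dips at the tank resonances and a poorly matched region between them, which is the qualitative dual-band behavior the ECM is meant to capture; fitting the element values to the simulated response would be a separate step.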

Figure 7.

Equivalent circuit model of the dual-band antenna.

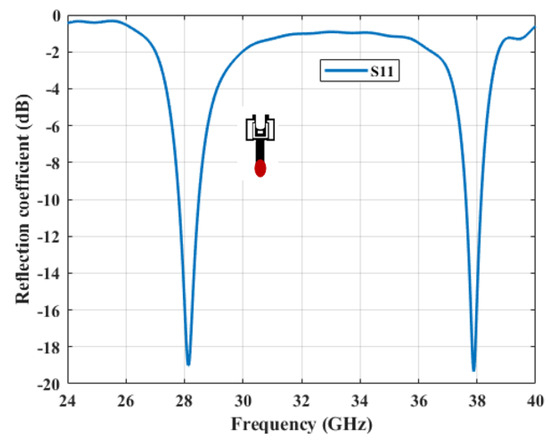

The analysis of the results presented in Figure 8 highlights the dual-band behavior of the proposed antenna. It shows distinct resonances at 28.14 GHz and 37.88 GHz, with respective reflection coefficients of S11 = −19 dB and S11 = −19.33 dB. These values lie well below the commonly used −10 dB threshold and indicate good impedance matching, which limits reflection losses and supports efficient signal transmission in the two targeted frequency bands.
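To put the two reflection coefficients in more familiar terms, the short Python sketch below converts an S11 value in dB into the reflection-coefficient magnitude, the voltage standing wave ratio, the fraction of incident power reflected, and the mismatch loss. These are standard conversions; the function name is illustrative.

```python
import math

def reflection_metrics(s11_db):
    """Convert |S11| in dB to |Gamma|, VSWR, reflected power fraction, and mismatch loss."""
    gamma = 10 ** (s11_db / 20)
    return {
        "gamma": gamma,
        "vswr": (1 + gamma) / (1 - gamma),
        "reflected_power": gamma ** 2,
        "mismatch_loss_db": -10 * math.log10(1 - gamma ** 2),
    }

for freq_ghz, s11 in ((28.14, -19.0), (37.88, -19.33)):
    m = reflection_metrics(s11)
    print(f"{freq_ghz} GHz: |Gamma| = {m['gamma']:.3f}, VSWR = {m['vswr']:.2f}, "
          f"reflected power = {100 * m['reflected_power']:.2f} %, "
          f"mismatch loss = {m['mismatch_loss_db']:.3f} dB")
```

At both resonances a little over 1% of the incident power is reflected, so the mismatch loss is only a few hundredths of a dB.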

Figure 8.

Simulated reflection coefficient with CST MICROWAVE STUDIO.

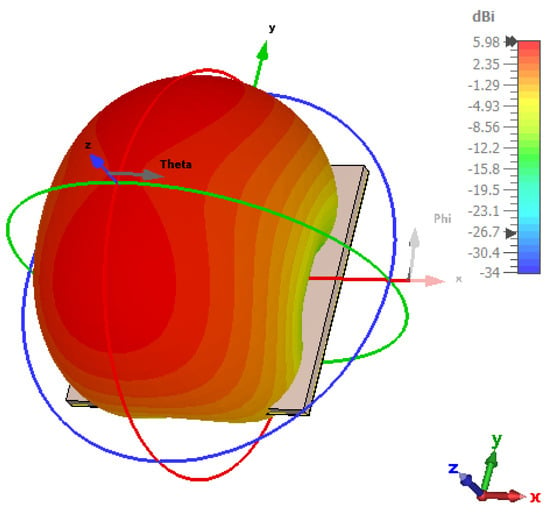

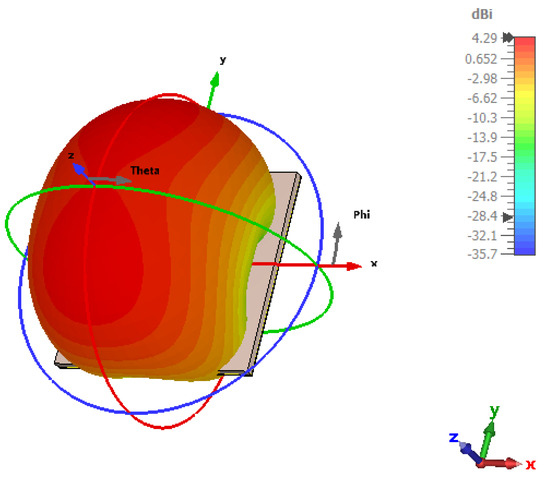

Figure 9, which represents the antenna’s directivity, highlights the antenna’s ability to focus its radiation in a specific direction. The directivity of the antenna is 5.98 dBi, a moderate value typical of a single patch element: the energy is concentrated in a broad beam around the main direction rather than radiated uniformly. Higher directivity, as required for point-to-point communications and radar systems, is obtained by combining such elements in an array; conversely, lower directivity would indicate broader coverage but less targeted radiation. Figure 10 shows the antenna’s gain, which measures the antenna’s efficiency in transmitting or receiving signals in a given direction compared to an isotropic antenna and, unlike directivity, accounts for losses. The gain of the antenna is 4.29 dBi. A high gain enhances the range and performance in applications for which signal focus is crucial, such as long-distance communication systems, satellites, and radar. Together, these two parameters (directivity of 5.98 dBi and gain of 4.29 dBi) characterize the antenna’s efficiency and performance in various use cases.
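The two figures quoted above can be combined into an efficiency estimate. The worked step below assumes that the gain and directivity refer to the same frequency and the same direction, and that the quoted gain excludes mismatch loss; under those assumptions the ratio of gain to directivity is the radiation efficiency $\eta$.

```latex
\eta_{\mathrm{dB}} = G_{\mathrm{dBi}} - D_{\mathrm{dBi}} = 4.29 - 5.98 = -1.69~\mathrm{dB},
\qquad
\eta = 10^{-1.69/10} \approx 0.68
```

Under these assumptions, roughly two thirds of the accepted power is radiated, with the remainder lost in the conductor and dielectric.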

Figure 9.

Directivity of the antenna.

Figure 10.

Gain of the antenna.

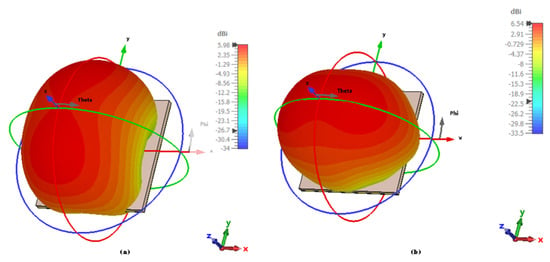

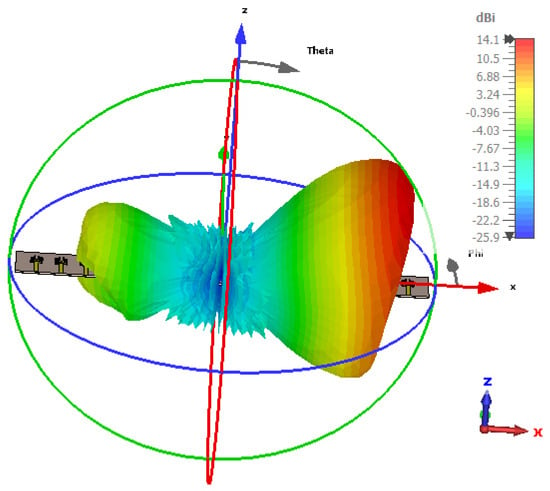

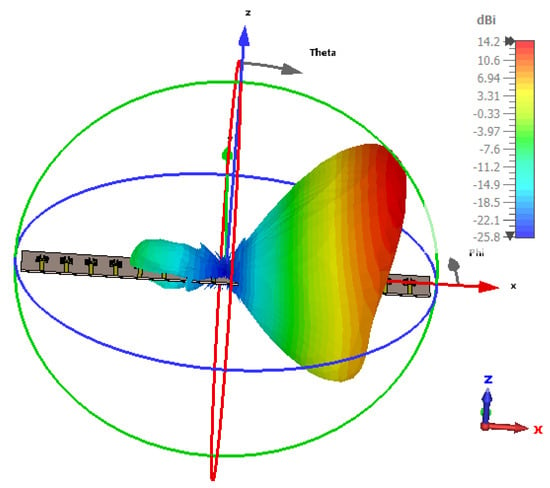

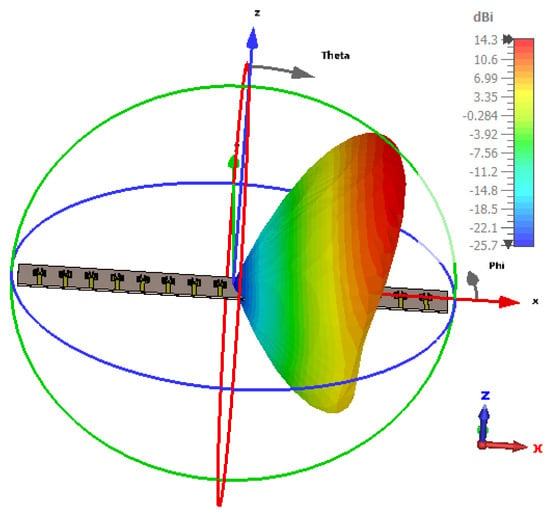

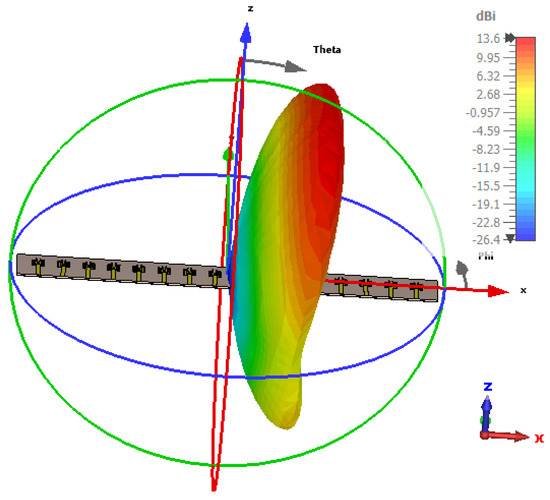

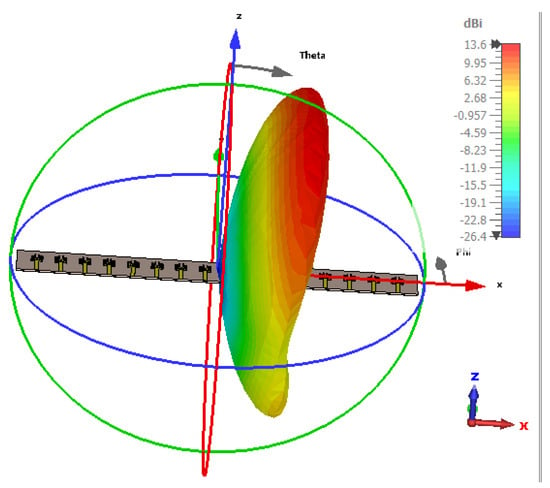

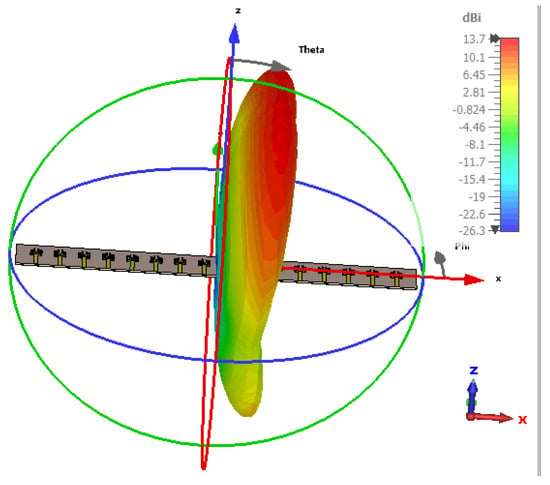

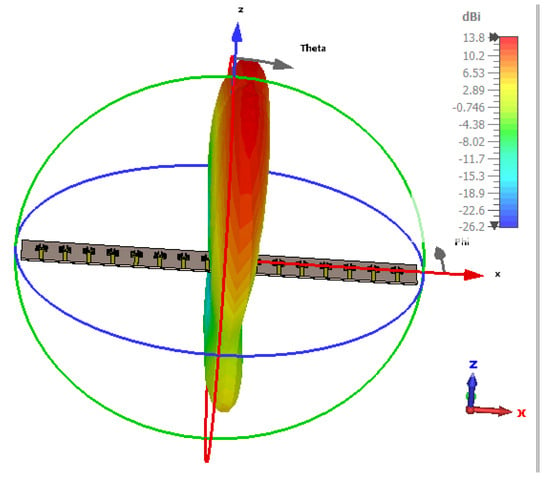

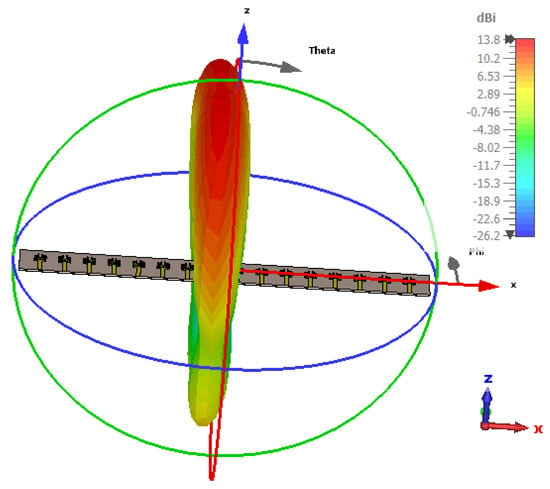

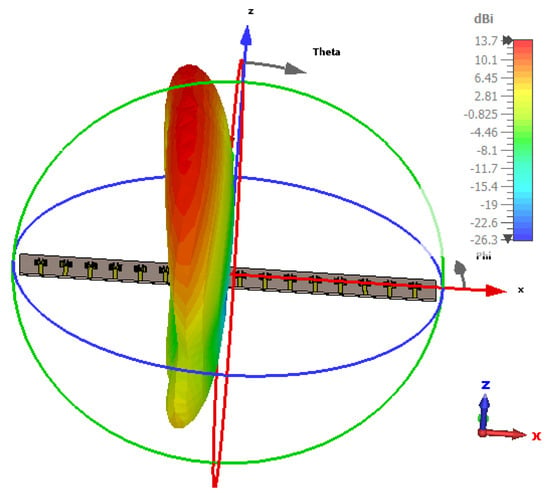

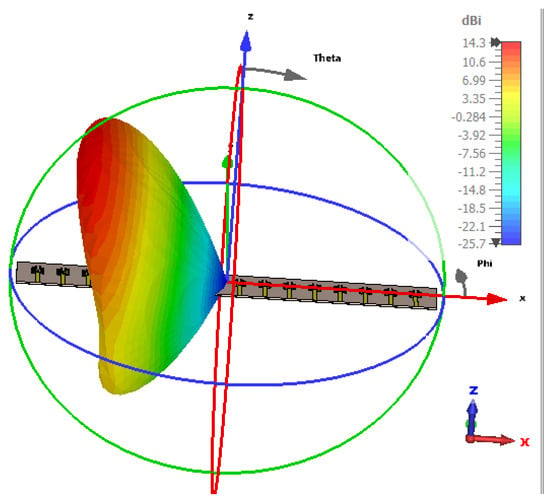

The 3D radiation pattern of the antenna, presented in Figure 11, provides valuable insights into the antenna’s performance at two distinct frequencies: (a) at 28.14 GHz and (b) at 37.88 GHz.

Figure 11.

Three-dimensional radiation pattern of the antenna. (a) At 28.14 GHz; (b) at 37.88 GHz.

(a) At 28.14 GHz: The radiation pattern at this frequency shows a specific distribution of the radiated energy, with the main lobe directed at a particular angle, indicating the primary direction of maximum radiation. The pattern might exhibit a relatively narrow beamwidth, which suggests that the antenna is highly directional at this frequency, focusing its energy towards a specific region in space. Side lobes and back lobes might also appear, indicating minor radiation in directions other than the main beam. The low sidelobe levels typically indicate minimal unwanted radiation, contributing to higher efficiency in the desired direction.

(b) At 37.88 GHz: At this higher frequency, the antenna’s radiation pattern could differ significantly, reflecting a change in its directional properties. The main lobe might shift in angle or broaden, depending on the antenna design and its impedance matching at this frequency. Higher frequencies typically result in a narrower beamwidth, but the antenna might also demonstrate a more complex radiation pattern due to factors like frequency-dependent impedance mismatch or interference effects. The side lobes may also appear differently, and the overall pattern might show reduced efficiency in certain directions due to different propagation characteristics at this higher frequency.

In both cases, the patterns highlight the dual-band performance of the antenna, which is crucial for applications requiring high efficiency in multiple frequency bands. The optimal radiation patterns at 28.14 GHz and 37.88 GHz ensure that the antenna can be effectively used in these bands, minimizing reflection losses and maximizing signal transmission in their respective regions.

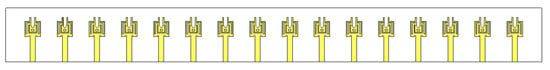

4.2. 16-Element MIMO Antenna Array

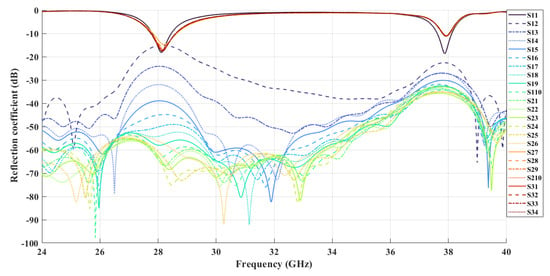

The MIMO system under investigation comprises 16 antennas aligned in the same direction and arranged parallel to each other, with an inter-element spacing of , as shown in Figure 12. This configuration has been carefully designed to maximize network directivity while minimizing mutual coupling between radiating elements, ensuring efficient signal transmission in millimeter-wave frequency bands. Each patch element has a width of mm and a length of mm and is designed on a substrate with a thickness of mm. The elements are uniformly spaced by , resulting in a total array length of approximately 89.4 mm. The results presented in Figure 13 highlight the stability of mutual coupling across the entire operational frequency band. Specifically, the electromagnetic performance assessment shows that the maximum mutual coupling between antennas remains below −15 dB, indicating excellent isolation among the array elements. Such a high degree of isolation is essential for preserving radiation efficiency, as it reduces interference and mitigates losses caused by unwanted electromagnetic interactions. This characteristic is crucial for optimizing MIMO system performance, enabling enhanced spatial multiplexing and higher data transmission rates, particularly in millimeter-wave communication systems.

Figure 12.

Design of a 16-element MIMO antenna array.

Figure 13.

S-parameters of the antenna.

Figure 13 shows the S-parameters of the antenna, which are crucial for assessing its reflection and coupling characteristics. S11 is the reflection coefficient at the first port, indicating how much of the incident signal is reflected back toward the source; values below −10 dB indicate good impedance matching and low reflection losses. S22 is the corresponding reflection coefficient at the second port. S21 and S12 are the transmission coefficients between the two ports; in an array they quantify the mutual coupling between elements and should therefore be low. Overall, low reflection coefficients (S11 and S22) together with low inter-element coupling (S21 and S12) indicate that most of the input power is radiated rather than reflected or absorbed by neighboring elements across the operating frequencies.
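To make the decibel values quoted for this design more tangible, the following minimal sketch converts an S-parameter level in dB into a linear magnitude, a reflected (or coupled) power fraction, and a VSWR. The function names are illustrative and not part of the paper; the levels used (−19 dB, −19.33 dB, and the −15 dB coupling bound) are the ones reported in the text.

```python
def db_to_magnitude(s_db: float) -> float:
    """Convert an S-parameter level in dB (20*log10|S|) to a linear magnitude."""
    return 10.0 ** (s_db / 20.0)


def power_fraction(s_db: float) -> float:
    """Fraction of incident power reflected (S11) or coupled (S21): |S|^2."""
    return db_to_magnitude(s_db) ** 2


def vswr(s11_db: float) -> float:
    """Voltage standing-wave ratio implied by a reflection coefficient in dB."""
    gamma = db_to_magnitude(s11_db)
    return (1.0 + gamma) / (1.0 - gamma)


if __name__ == "__main__":
    levels = [
        ("S11 at 28.14 GHz", -19.0),
        ("S11 at 37.88 GHz", -19.33),
        ("mutual coupling bound", -15.0),
    ]
    for label, level_db in levels:
        pct = 100.0 * power_fraction(level_db)
        print(f"{label}: {level_db} dB -> {pct:.2f}% of incident power")
    print(f"VSWR at 28.14 GHz: {vswr(-19.0):.3f}")
```

Read this way, a −15 dB coupling bound means that at most about 3% of the power fed to one element appears at a neighboring port.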

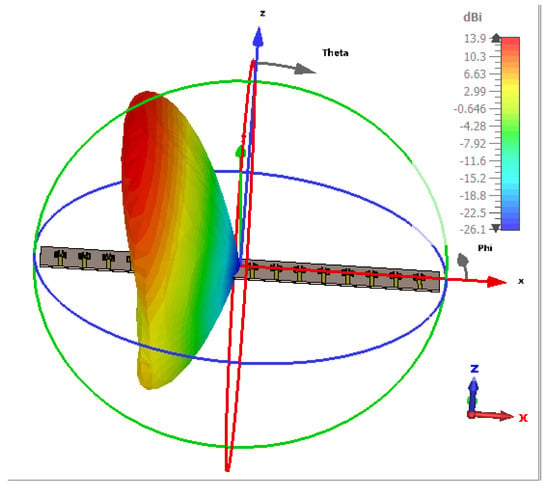

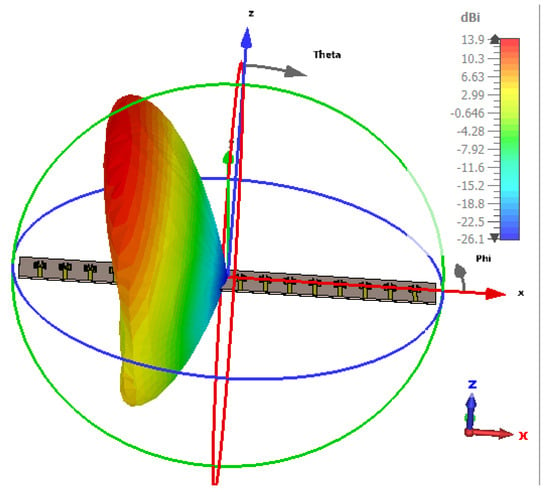

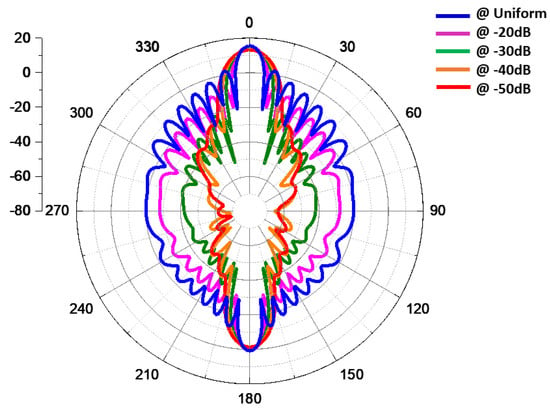

Figures 14–25 show the radiation patterns of the antenna at various angles, ranging from 25° to 135°. At lower angles such as 25° and 35°, the radiation pattern is more concentrated, with the main lobe pointing in a specific direction, indicating a narrower beamwidth. As the angle increases to 45° and 55°, the main lobe begins to widen, indicating an expansion of the radiation coverage. This trend continues through angles of 65°, 75°, and 85°, where the antenna starts to radiate more broadly, providing wider coverage. At higher angles, from 95° to 135°, the radiation pattern continues to spread, and the energy is directed over a larger area. The main lobe shifts progressively, and the side lobes also change in intensity, reflecting how the antenna adapts its radiation characteristics across different angular orientations. This behavior illustrates the antenna’s ability to provide directional or omnidirectional radiation, depending on the required application, and it emphasizes the flexibility of the antenna design in accommodating a wide range of angular orientations for optimal signal distribution.

Figure 26 presents the radiation pattern of the 16-element array synthesized using the Taylor method with different amplitude synthesis levels of −20 dB, −30 dB, −40 dB, and −50 dB, along with uniform excitation.

Figure 14.

Radiation pattern at 25°.

Figure 15.

Radiation pattern at 35°.

Figure 16.

Radiation pattern at 45°.

Figure 17.

Radiation pattern at 55°.

Figure 18.

Radiation pattern at 65°.

Figure 19.

Radiation pattern at 75°.

Figure 20.

Radiation pattern at 85°.

Figure 21.

Radiation pattern at 95°.

Figure 22.

Radiation pattern at 105°.

Figure 23.

Radiation pattern at 115°.

Figure 24.

Radiation pattern at 125°.

Figure 25.

Radiation pattern at 135°.

Figure 26.

Radiation pattern of 16 antennas synthesized using the Taylor method with different amplitude synthesis levels.
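The influence of the sidelobe design level compared in Figure 26 can be illustrated numerically. The sketch below assumes the standard Taylor n-bar amplitude taper sampled at 16 element positions with half-wavelength spacing. The function names, the choice nbar = 4, and the grid resolution are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def taylor_taper(n_elem: int, sll_db: float = 30.0, nbar: int = 4) -> np.ndarray:
    """Taylor n-bar amplitude weights; sll_db is the design sidelobe suppression in dB (> 0)."""
    a = np.arccosh(10.0 ** (sll_db / 20.0)) / np.pi
    sigma2 = nbar**2 / (a**2 + (nbar - 0.5) ** 2)
    m = np.arange(1, nbar)
    coeffs = np.empty(nbar - 1)
    for i, mi in enumerate(m):
        numer = (-1.0) ** (mi + 1) * np.prod(
            1.0 - mi**2 / (sigma2 * (a**2 + (m - 0.5) ** 2))
        )
        others = m[m != mi]
        denom = 2.0 * np.prod(1.0 - mi**2 / others**2)
        coeffs[i] = numer / denom
    # Element positions normalised to the aperture, centred on zero.
    x = (np.arange(n_elem) - (n_elem - 1) / 2.0) / n_elem
    w = 1.0 + 2.0 * (coeffs[:, None] * np.cos(2.0 * np.pi * m[:, None] * x[None, :])).sum(axis=0)
    return w / w.max()


def peak_sidelobe_db(w: np.ndarray, n_grid: int = 4001) -> float:
    """Peak sidelobe level (dB relative to the main-lobe peak) of the array factor."""
    psi = np.linspace(0.0, np.pi, n_grid)  # psi = k d (cos(theta) - cos(theta0))
    af = np.abs(np.exp(1j * np.outer(psi, np.arange(w.size))) @ w) / w.sum()
    af_db = 20.0 * np.log10(af + 1e-12)
    first_null = np.argmax(np.diff(af_db) > 0.0)  # first point where the pattern rises again
    return float(af_db[first_null:].max())


if __name__ == "__main__":
    print(f"uniform: {peak_sidelobe_db(np.ones(16)):.1f} dB")
    for sll in (20.0, 30.0):
        w = taylor_taper(16, sll_db=sll, nbar=4)
        print(f"Taylor design {sll:.0f} dB: {peak_sidelobe_db(w):.1f} dB")
```

Deeper design levels such as −40 dB or −50 dB generally call for a larger nbar than the value assumed here, at the cost of a wider main lobe.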

5. Neural Networks for Synthesis and Optimization of Antenna Arrays

Artificial neural networks (ANNs) provide a flexible and powerful framework for improving antenna array design. These models are capable of learning complex, nonlinear relationships between the excitation parameters of antenna elements and the resulting radiation pattern. Once trained, ANNs can quickly predict optimal excitation weights to achieve desired performance metrics such as minimized sidelobe levels or enhanced beam directivity. This is particularly useful in adaptive antenna systems, where fast real-time adjustments are needed to respond to changing environments, such as in mobile communication, radar, or satellite applications. By training on large datasets, ANNs can optimize parameters such as directivity, sidelobe levels, and the overall adaptability of antenna arrays. This capability enables them to deliver superior results compared to traditional optimization techniques.

In particular, neural networks can significantly improve the performance of antenna arrays by reducing sidelobes, enhancing the main lobe, and optimizing array configurations to achieve specific radiation patterns. They are capable of managing multiple constraints simultaneously, such as power distribution, array size, and performance under various environmental conditions. This makes ANNs particularly useful in designing adaptive and reconfigurable antenna systems for advanced communication systems, radar, and smart antennas.

In comparison to conventional numerical methods like Taguchi, Sequential Quadratic Programming (SQP), Dolph–Chebyshev, and Particle Swarm Optimization (PSO), neural networks offer faster convergence and are more effective at dealing with the complexity of large parameter spaces. These traditional methods often require iterative processes that can be computationally expensive and time-consuming. However, ANNs, once trained, can generate solutions quickly and with high accuracy.

The dataset used in this context is based on numerical algorithms and synthesis techniques, including Taguchi, SQP, Dolph-Chebyshev, and PSO. These methods are well established in antenna array design for optimizing radiation patterns, but in this paper, the focus is specifically on the synthesis of antenna arrays using the Taylor method. The Taylor method allows for the generation of optimal phase distributions and array element positions, leading to highly efficient antenna designs. By integrating this method with the power of neural networks, this approach aims to further enhance the synthesis process and improve overall array performance.

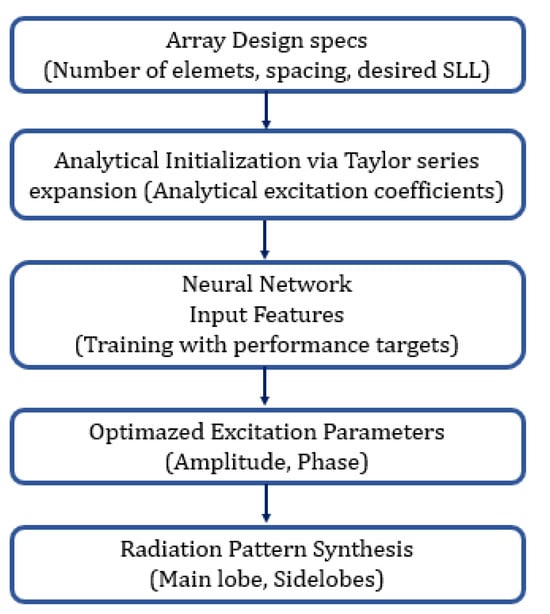

To bridge the analytical synthesis and neural optimization components of our approach, a visual representation of the workflow is introduced below.

To facilitate a clearer understanding of the proposed hybrid approach, Figure 27 presents a flowchart illustrating the interaction between the Taylor series expansion and the neural network. This schematic outlines the sequential process beginning with array design specifications, followed by the analytical initialization of excitation coefficients via the Taylor method. These coefficients are then used as input features for the neural network, which optimizes the excitation parameters based on predefined performance targets such as sidelobe level and beam direction. The result is an enhanced radiation pattern synthesis that integrates both analytical modeling and learning-based refinement. This visual representation helps clarify the modular structure of the framework and emphasizes the complementarity between deterministic and data-driven components.

Figure 27.

Flowchart of the proposed hybrid synthesis process combining Taylor series initialization with neural network refinement.

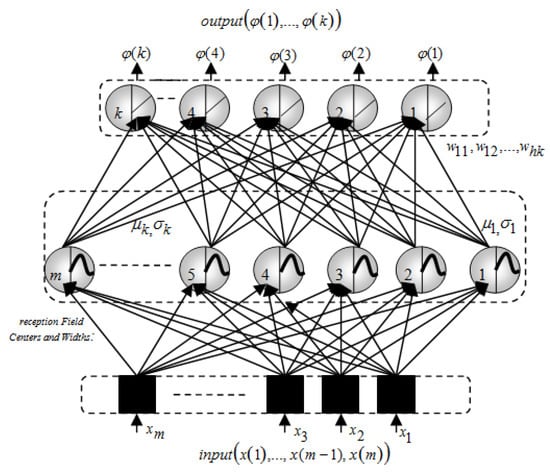

5.1. Taylor–Neural Network Architectures

Taylor–Neural Network architectures combine the advantages of the Taylor method with the learning capabilities of neural networks to optimize antenna array designs. This hybrid approach allows for a more efficient synthesis of radiation patterns by leveraging the strengths of both techniques. Below is a detailed exploration of their interaction.

Figure 28 illustrates the architecture of the Taylor-neural network. This figure presents a schematic representation of how the Taylor series expansion is integrated with the neural network model. It likely shows the layers, nodes, and activation functions used within the network, as well as how the Taylor series is applied to enhance the network’s ability to model complex patterns or solve specific problems. This architecture may be used for applications such as pattern recognition, signal processing, or optimization tasks, where the combination of Taylor series and neural networks provides an efficient method for approximation and learning. The Taylor method is a widely used technique for antenna array synthesis, particularly in phased arrays, where it aims to control the radiation pattern by optimizing the phase distribution across the antenna elements. The method involves using Taylor series expansion to define the phase distribution , which dictates the desired radiation pattern in a specific direction.

Figure 28.

Taylor-neural network architecture.

The phase distribution for a uniformly spaced linear array with N elements and a desired radiation pattern can be expressed as follows:

$$\phi(\theta) = \sum_{m=0}^{M} a_m \left(\theta - \theta_0\right)^m$$

where

- $\theta$ is the angle of observation;

- $a_m$ are the Taylor-series coefficients;

- M is the order of the Taylor expansion;

- $\theta_0$ is the central angle where the main lobe is located.

The Taylor method allows for controlling the main lobe width and sidelobe levels by adjusting the values of the coefficients $a_m$. For instance, the coefficients can be adjusted to minimize sidelobes or create a specific shape for the main lobe, depending on the application.

Although the Taylor method is effective in many cases, it involves limitations, especially when dealing with complex antenna array designs that involve multiple constraints such as non-uniform spacing, irregular element distributions, or specific performance goals. These constraints make it difficult to achieve optimal results using the Taylor method alone.

Furthermore, the Taylor method does not account for nonlinearities in real-world systems or the interactions between different antenna elements, which could lead to suboptimal performance in practical applications.

Neural networks can address these limitations by learning complex, nonlinear relationships between the input parameters (such as element positions, excitation amplitudes, etc.) and the desired output (such as radiation patterns). By using a training dataset of antenna configurations and their corresponding radiation patterns, the neural network can learn the optimal configuration for the antenna array.

A feed-forward neural network for antenna array optimization can be formulated as follows:

where

- y is the output (the optimized radiation pattern or phase distribution);

- is the input vector containing parameters such as element positions, excitation amplitudes, and other design factors;

- represents the weights of the network, and

- b is the bias term.

The network is trained to minimize a loss function that measures the difference between the desired radiation pattern and the output produced via the network. A feedforward, fully connected neural network was used, consisting of three hidden layers with 64, 32, and 16 neurons, respectively. ReLU activation was applied to all hidden layers, while the output layer used a linear activation function. The network inputs include initial Taylor-based excitation values and a steering angle, and the outputs correspond to optimized excitation weights. The model was trained using the Adam optimizer with a learning rate of and mean squared error loss. A dataset of 1000 samples was synthetically generated by varying array parameters and desired pattern characteristics. A validation set of 200 samples was used, and training was stopped after 74 epochs based on the convergence of validation loss.
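The architecture described above can be sketched in a few lines of NumPy. This is a minimal illustration and not the authors' implementation: the learning rate of 1e-3, the input layout (16 Taylor-based excitation values followed by one steering angle), full-batch training, and the synthetic placeholder data are all assumptions made here. The layer sizes (64, 32, 16), the ReLU and linear activations, the Adam optimizer, the mean squared error loss, and the sample counts follow the text.

```python
import numpy as np

rng = np.random.default_rng(0)
N_ELEM = 16
LAYERS = [N_ELEM + 1, 64, 32, 16, N_ELEM]  # assumed input: 16 Taylor values + steering angle
LEARNING_RATE = 1e-3                       # assumption: the value is not given in the text


def init_params(layers):
    """He-initialised weights and zero biases, stored as [w0, b0, w1, b1, ...]."""
    params = []
    for n_in, n_out in zip(layers[:-1], layers[1:]):
        params += [rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_out)), np.zeros(n_out)]
    return params


def forward(params, x):
    """Return the linear output and the list of layer activations."""
    acts = [x]
    n_layers = len(params) // 2
    for i in range(n_layers):
        z = acts[-1] @ params[2 * i] + params[2 * i + 1]
        acts.append(z if i == n_layers - 1 else np.maximum(z, 0.0))  # ReLU on hidden layers
    return acts[-1], acts


def mse_and_grads(params, x, y):
    """Mean squared error and its gradients obtained by backpropagation."""
    out, acts = forward(params, x)
    err = out - y
    delta = 2.0 * err / err.size
    grads = [None] * len(params)
    for i in reversed(range(len(params) // 2)):
        grads[2 * i] = acts[i].T @ delta
        grads[2 * i + 1] = delta.sum(axis=0)
        if i > 0:
            delta = (delta @ params[2 * i].T) * (acts[i] > 0.0)  # ReLU derivative
    return float(np.mean(err ** 2)), grads


def adam_train(params, x, y, epochs=74, lr=LEARNING_RATE, b1=0.9, b2=0.999, eps=1e-8):
    """Full-batch Adam; returns the trained parameters and the loss history."""
    params = [p.copy() for p in params]
    m = [np.zeros_like(p) for p in params]
    v = [np.zeros_like(p) for p in params]
    history = []
    for t in range(1, epochs + 1):
        loss, grads = mse_and_grads(params, x, y)
        history.append(loss)
        for k, g in enumerate(grads):
            m[k] = b1 * m[k] + (1.0 - b1) * g
            v[k] = b2 * v[k] + (1.0 - b2) * g * g
            m_hat = m[k] / (1.0 - b1 ** t)
            v_hat = v[k] / (1.0 - b2 ** t)
            params[k] = params[k] - lr * m_hat / (np.sqrt(v_hat) + eps)
    return params, history


if __name__ == "__main__":
    # Placeholder data, not the authors' dataset: 1000 training and 200 validation samples.
    x_all = rng.uniform(0.0, 1.0, (1200, N_ELEM + 1))
    y_all = x_all[:, :N_ELEM] * (0.5 + 0.5 * x_all[:, N_ELEM:])
    trained, history = adam_train(init_params(LAYERS), x_all[:1000], y_all[:1000])
    val_pred, _ = forward(trained, x_all[1000:])
    print("training MSE:", history[0], "->", history[-1])
    print("validation MSE:", float(np.mean((val_pred - y_all[1000:]) ** 2)))
```

The fixed count of 74 epochs simply mirrors the stopping point reported in the text; in practice the stopping epoch would be chosen by monitoring the validation loss.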

In the hybrid Taylor–neural network architecture, the neural network is integrated with the phase distribution generated via the Taylor method. The Taylor method provides an initial phase distribution, , which is then refined and optimized by the neural network.

Let the phase distribution generated via the Taylor method be denoted as , which serves as an input to the neural network:

The neural network adjusts the phase distribution by fine-tuning the excitation parameters or array configurations to achieve the desired radiation pattern while satisfying multiple constraints. This could include minimizing sidelobes, improving directivity, or optimizing element positions.

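The training process involves optimizing the weights $\mathbf{W}$ and bias b to minimize the loss function, defined as the mean squared error between the desired output $y_i^{\text{desired}}$ and the network’s output $y_i$:

$$L = \frac{1}{N} \sum_{i=1}^{N} \left( y_i^{\text{desired}} - y_i \right)^2$$

where N is the number of training samples.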

5.2. Benefits of the Taylor-Neural Network Architecture

The integration of Taylor-series phase synthesis with neural network optimization offers a powerful solution to improve antenna array performance. This hybrid approach effectively reduces sidelobe levels, shapes the main beam, and enhances overall directivity. While the Taylor method provides a mathematically grounded initial phase distribution, it faces limitations in handling practical constraints such as irregular element spacing or varying antenna geometries. Neural networks complement this by learning from data and adapting to complex design conditions, including those affected by environmental factors or hardware imperfections. Compared to traditional methods like genetic algorithms (GAs) or particle swarm optimization (PSO), neural networks require less computational time once trained and can rapidly adjust excitation parameters to meet performance targets. Moreover, their ability to generalize allows them to be used across different array configurations, including MIMO antennas, radar systems, and satellite platforms, making the approach versatile and scalable.

The hybrid Taylor–neural network approach can be summarized as follows:

where

- is the optimized phase distribution;

- f represents the neural network optimization function;

- and b are the weights and biases of the neural network;

- are the Taylor-series coefficients for phase distribution.

The Taylor–neural network architecture provides a robust framework for optimizing antenna array designs, combining the mathematical precision of the Taylor method with the adaptability and learning power of neural networks. This hybrid approach enables efficient, high-performance antenna array synthesis capable of meeting the stringent demands of modern communication systems.

The Taylor method for antenna array synthesis is a well-established approach used to optimize the distribution of the array’s element amplitudes in order to achieve a desired radiation pattern, such as minimizing side lobes while maintaining the main lobe characteristics. This method involves solving an optimization problem to determine the appropriate excitation levels for each element in the array, ensuring that the synthesized radiation pattern meets specific requirements. The Taylor method is widely used for controlling side lobe levels in antenna arrays, providing a smooth, continuous pattern that reduces interference from unwanted directions.

When applying neural networks to this problem, the Taylor method can be combined with machine learning techniques to enhance the process of optimizing the antenna array design. In this context, neural networks can learn from a dataset that includes different configurations of antenna arrays and their corresponding radiation patterns. The neural network is trained to recognize the relationship between the array’s excitation parameters and the resulting radiation pattern, improving its ability to predict and optimize array designs. The dataset used for training typically contains both the array configurations (as input features) and their associated radiation patterns (as target outputs). Through this approach, the neural network can generalize and efficiently identify optimal solutions for antenna array synthesis, reducing the computational cost and time required compared to traditional methods.

In essence, by training on designs synthesized with the Taylor method, neural networks can become an effective tool for synthesizing antenna arrays, automating the design process, and optimizing performance in terms of radiation pattern control and interference management.

Table 3 compares various neural network training algorithms and their performance characteristics. The Levenberg–Marquardt (LM) algorithm is known for its high convergence rate, making it efficient in reaching an optimal solution quickly. The BFGS Quasi-Newton (BFG) algorithm also offers fast convergence, while resilient backpropagation (RP) is robust to noise, performing well even with inconsistent data. Bayesian regularization (BR) is particularly effective for small datasets, preventing overfitting. The Scaled Conjugate Gradient (SCG) algorithm is memory-efficient, making it suitable for large-scale problems. Conjugate Gradient with Powell/Beale Restarts (CGB) offers balanced performance, providing a trade-off between speed and accuracy, while Fletcher–Powell Conjugate Gradient (CGF) ensures stable convergence. Polak–Ribière Conjugate Gradient (CGP) excels with sparse data, handling many zero values efficiently. The One-Step Secant (OSS) algorithm is known for its fast convergence, while Variable Learning Rate Backpropagation (GDX) adapts its learning rate during training for improved performance. Basic gradient descent (GD) is simple and easy to implement but may not converge as efficiently, whereas gradient descent with momentum (GDM) accelerates convergence by incorporating momentum, helping avoid local minima. Each algorithm offers specific advantages, and the choice depends on factors like data size, speed, and memory constraints.

Table 3.

Comparison of neural network-training algorithms.
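As a small illustration of the difference between two of the listed algorithms, the sketch below compares plain gradient descent (GD) with gradient descent with momentum (GDM) on an ill-conditioned quadratic. The test function, step size, and momentum value are illustrative assumptions and are unrelated to the paper's dataset or training setup.

```python
import numpy as np

CURVATURE = np.array([1.0, 100.0])  # illustrative ill-conditioned quadratic bowl


def loss(x):
    """Quadratic loss 0.5 * sum(c_i * x_i^2)."""
    return 0.5 * float(np.sum(CURVATURE * np.asarray(x) ** 2))


def grad(x):
    """Gradient of the quadratic loss."""
    return CURVATURE * x


def minimise(grad_fn, x0, lr, steps, momentum=0.0):
    """Gradient descent with optional heavy-ball momentum; returns the final point."""
    x = np.asarray(x0, dtype=float).copy()
    v = np.zeros_like(x)
    for _ in range(steps):
        v = momentum * v - lr * grad_fn(x)
        x = x + v
    return x


if __name__ == "__main__":
    start = [1.0, 1.0]
    gd = minimise(grad, start, lr=0.009, steps=100)
    gdm = minimise(grad, start, lr=0.009, steps=100, momentum=0.9)
    print(f"GD  loss after 100 steps: {loss(gd):.3e}")
    print(f"GDM loss after 100 steps: {loss(gdm):.3e}")
```

With the same step size, the momentum variant makes faster progress along the shallow direction of the bowl, which is the behavior the comparison attributes to GDM.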

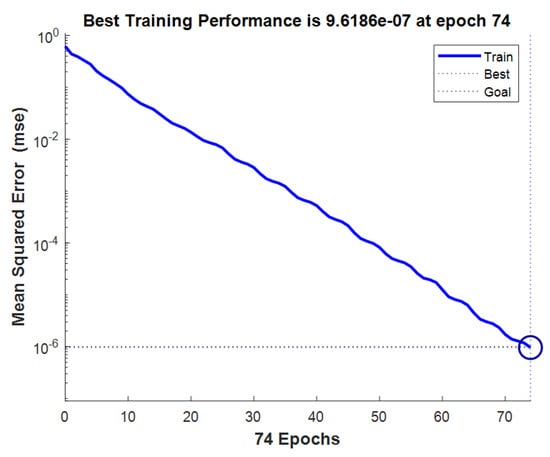

Figure 29 shows the progress of the Taylor neural network training at epoch 74. At this stage, the performance goal has been achieved, meaning the model has converged to an optimal solution according to the specified performance criteria. This suggests that the training process has effectively adjusted the model’s parameters to meet the desired objectives, with a significant reduction in error over the iterations. This figure could also represent the convergence curve, showing the decrease in the cost function or error during the training iterations.

Figure 29.

Taylor–neural network training (epoch 74, performance goal met).

Figure 30 illustrates the evolution of the mean squared error (MSE) for the Taylor neural network. MSE is a key indicator of model quality, measuring the difference between predicted values and actual values. A low MSE indicates good performance, as it shows that the model’s predictions are close to the expected results. This figure likely shows the progressive reduction in the MSE as the neural network learns and adjusts its parameters. A steadily decreasing MSE curve would suggest that the network is effectively converging to a solution with minimal prediction error.

Figure 30.

Taylor–neural network mean squared error (MSE).

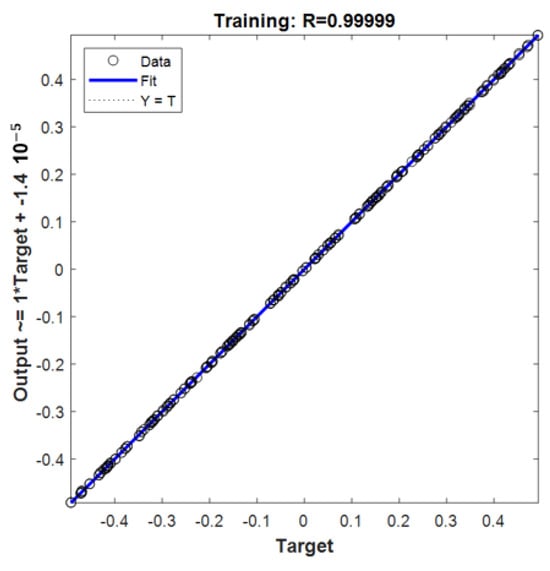

Figure 31 presents the correlation coefficient (R) achieved with the Taylor neural network, which is 0.99999. Such a high correlation coefficient indicates an extremely strong linear relationship between the predicted values and the target values; in other words, the model’s predictions are almost identical to the expected results. This suggests that the model is very well fitted to the training data. Because this value is computed on the training data, it reflects the quality of the fit rather than generalization, which is assessed separately through the validation loss.

Figure 31.

Taylor–neural network training; R = 0.99999.
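The two quality metrics discussed for Figures 30 and 31 can be computed as follows. This is a minimal sketch using NumPy; the function names and the four-point example are illustrative and do not come from the paper's data.

```python
import numpy as np


def mse(y_true, y_pred) -> float:
    """Mean squared error between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def pearson_r(y_true, y_pred) -> float:
    """Pearson correlation coefficient R between flattened targets and predictions."""
    y_true = np.ravel(y_true).astype(float)
    y_pred = np.ravel(y_pred).astype(float)
    return float(np.corrcoef(y_true, y_pred)[0, 1])


if __name__ == "__main__":
    targets = [1.0, 2.0, 3.0, 4.0]
    outputs = [1.1, 1.9, 3.2, 3.8]
    print("MSE:", mse(targets, outputs))
    print("R:  ", pearson_r(targets, outputs))
```

Note that R measures linear agreement only: predictions that are a scaled or shifted copy of the targets still give R close to 1, so R is best read alongside the MSE rather than in isolation.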

The training process was stopped at epoch 74, where the network reached convergence with minimal loss variation and no further improvement in validation performance, ensuring both efficiency and generalization.

Compared to conventional synthesis approaches, the proposed Taylor–NN method offers a favorable balance between accuracy and computational cost. While methods like PSO and GA require iterative population-based optimization and may take several seconds to converge, the trained neural network provides near-instantaneous inference with high fidelity to the target pattern. Table 4 summarizes these aspects across several popular techniques.

Table 4.

Performance comparison of PAA synthesis methods.

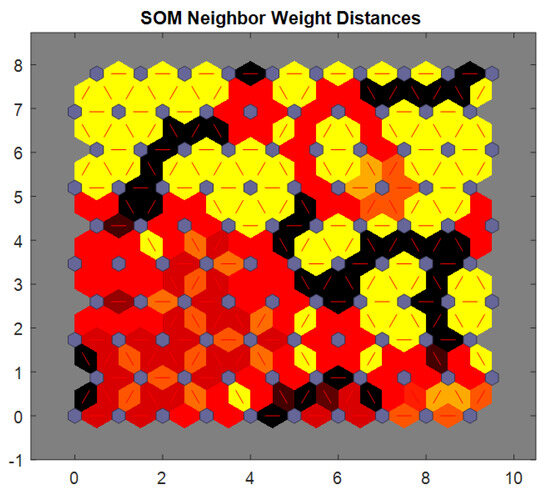

Figure 32 illustrates the SOM (self-organizing map) neighbor weight distances. The SOM is a type of unsupervised neural network used for clustering and dimensionality reduction. In this figure, the distances between the weight vectors of neighboring neurons in the SOM are represented. Each neuron in the SOM has an associated weight vector that represents the characteristics of the data points it maps to. The distances between these weight vectors indicate how similar or different the neurons are in terms of the data they represent. A smaller distance between two neurons suggests that the data points associated with those neurons are similar to each other, while a larger distance indicates greater dissimilarity. This figure likely shows a heatmap or a color-coded representation of the weight distances, where the colors represent the magnitude of the distances. A well-organized SOM would show smaller distances between neurons that are closer to each other on the map, indicating good clustering of similar data points. This figure is crucial for evaluating how the SOM algorithm has grouped the data and how well the structure of the dataset has been captured.

Figure 32.

SOM-neighbor weight distances.

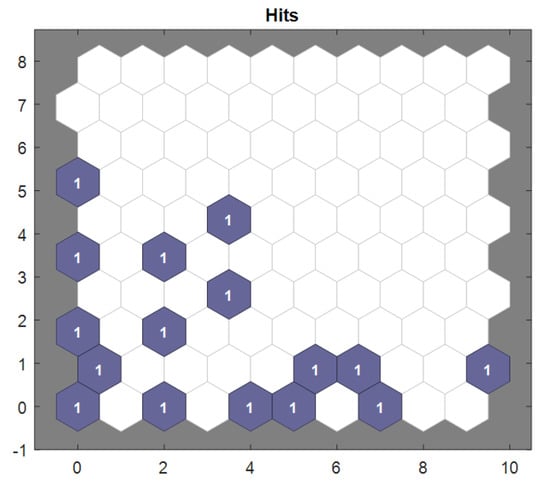

Figure 33 presents the sample hits for the Taylor method dataset. Sample hits refer to the number of data points that have been mapped to each individual neuron in the SOM. In this context, the figure shows how frequently each neuron has been activated by the input samples. A high number of hits for a particular neuron indicates that the neuron has been frequently activated by similar data points, suggesting that the neuron represents a dense region or cluster of the dataset. Conversely, a neuron with fewer hits may represent a region in the dataset that is sparsely populated or less representative of the overall data distribution. This figure helps assess the distribution of data across the SOM grid, with areas of the map that have a high number of hits corresponding to well-represented regions of the data. The distribution of hits across the map also reveals how well the SOM has managed to generalize the data into different regions, providing insights into the quality of the clustering. A uniform distribution of hits would indicate a balanced representation of the dataset, whereas a skewed distribution could suggest that some regions of the data are underrepresented or overrepresented in the map. Please refer to Appendix A for detailed data.

Figure 33.

Sample hits.
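The two SOM diagnostics described for Figures 32 and 33 can be reproduced on a toy example. The sketch below is a minimal NumPy self-organizing map under stated assumptions: a 4 × 4 rectangular grid, a Gaussian neighborhood with linearly decaying learning rate and radius, and random placeholder data. None of these settings are taken from the paper, and toolboxes often use a hexagonal grid instead.

```python
import numpy as np


def best_matching_unit(weights, sample):
    """Grid index (row, col) of the neuron whose weight vector is closest to the sample."""
    d = np.linalg.norm(weights - sample, axis=-1)
    return np.unravel_index(np.argmin(d), d.shape)


def train_som(data, grid=(4, 4), epochs=20, lr0=0.5, sigma0=1.5, seed=0):
    """Train a small rectangular SOM; returns weights of shape (rows, cols, dim)."""
    rng = np.random.default_rng(seed)
    rows, cols = grid
    weights = rng.uniform(data.min(), data.max(), (rows, cols, data.shape[1]))
    coords = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    n_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for sample in rng.permutation(data):
            frac = step / n_steps
            lr = lr0 * (1.0 - frac)                 # learning rate decays to zero
            sigma = sigma0 * (1.0 - frac) + 0.3     # neighbourhood radius shrinks
            bmu = np.array(best_matching_unit(weights, sample))
            grid_dist2 = np.sum((coords - bmu) ** 2, axis=-1)
            influence = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
            weights += lr * influence[..., None] * (sample - weights)
            step += 1
    return weights


def sample_hits(weights, data):
    """Number of samples mapped to each neuron (the quantity shown in a hits plot)."""
    hits = np.zeros(weights.shape[:2], dtype=int)
    for sample in data:
        hits[best_matching_unit(weights, sample)] += 1
    return hits


def neighbour_distances(weights):
    """Distances between weight vectors of horizontally and vertically adjacent neurons."""
    horiz = np.linalg.norm(weights[:, 1:] - weights[:, :-1], axis=-1)
    vert = np.linalg.norm(weights[1:] - weights[:-1], axis=-1)
    return horiz, vert


if __name__ == "__main__":
    data = np.random.default_rng(1).normal(size=(200, 3))  # placeholder samples
    som = train_som(data)
    print(sample_hits(som, data))
    horiz, vert = neighbour_distances(som)
    print(horiz.round(2))
    print(vert.round(2))
```

Large entries in the neighbor-distance arrays mark boundaries between clusters, while the hits matrix shows how the samples are spread over the map.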

In addition to the benefits discussed above, scalability and future application domains must also be considered. While the current study validates the proposed framework on a 16-element linear array operating at mmWave frequencies (28.14 GHz and 37.88 GHz), the architecture is designed to be scalable and adaptable to more complex configurations, including arrays with 64 elements or more. At such scales, mutual coupling, nonlinear interactions, and hardware-induced uncertainties become more pronounced. These effects can be addressed in future work by incorporating domain-specific mitigation strategies—such as inter-element spacing adjustments, electromagnetic decoupling techniques, or the use of engineered metasurfaces—into the neural network’s training dataset. Furthermore, we aim to extend the applicability of this approach to THz-band antenna arrays, where fabrication tolerances, material dispersion, and surface-wave effects require more intricate modeling. By retraining the network with simulation or measurement-based data that reflect these challenges, the proposed method has the potential to remain robust across a wide range of real-world deployment scenarios.

Beyond scalability, we also recognize that the current focus on uniform linear arrays may limit the generalization of the method to more complex array geometries. Many practical applications involve non-linear configurations, such as conformal, sparse, or irregular arrays, especially in aerospace, vehicular, and satellite systems. While the linear assumption facilitates analytical modeling and initial validation, the proposed Taylor–neural network framework is not intrinsically constrained by geometry. Future developments will extend the approach by incorporating geometric position encodings and training on synthetic datasets representing diverse topologies. These adaptations will enable the model to handle non-uniform or platform-integrated arrays with varying curvature and element spacing. This limitation is a key direction for future research.

6. Conclusions

In conclusion, this paper has presented a novel methodology for the synthesis of phased antenna arrays (PAAs) by combining Taylor-series expansion with neural networks (NNs), achieving notable improvements over traditional techniques. Taylor expansion offers an analytical foundation that simplifies the optimization process by approximating complex radiation behaviors using polynomial expressions. This enables the derivation of more efficient and accurate formulations for beamforming and radiation characteristics. The neural network component complements this by learning the nonlinear relationships between design parameters and target performance metrics, allowing for adaptive tuning and real-time adjustment. The main contributions of this work include the following: (i) the integration of Taylor-series modeling with neural network refinement, (ii) simulation-based validation on a realistic millimeter-wave array, and (iii) performance improvements in sidelobe control and computational efficiency compared to traditional techniques. The proposed method was validated through simulations and an electromagnetic analysis of a 16-element linear array operating at millimeter-wave frequencies (28.14 GHz and 37.88 GHz), ensuring relevance to 5G and 6G use cases. The simulation results demonstrate that the hybrid Taylor–NN framework reduces computational complexity, enhances adaptability, and enables broader design-space exploration. The validation confirms the method’s practical potential for the design of efficient antenna arrays in advanced communication and radar systems. It is worth noting that, although experimental measurements were not included in the current study, the simulation framework was built upon detailed electromagnetic modeling using realistic substrate and layout parameters. Future investigations may involve hardware prototyping and empirical validation as part of an extended deployment-oriented study.

While the current work focused on uniform linear arrays, we acknowledge its present limitations in addressing more complex configurations such as conformal or sparse geometries, which are prevalent in aerospace and vehicular applications. Future extensions will target these scenarios by incorporating non-linear geometries, generalized position encoding, and data-driven training strategies. This work marks a significant advancement in phased antenna array synthesis, highlighting the benefits of integrating analytical modeling with artificial intelligence to enable robust and flexible antenna design. By integrating scalability, adaptability, and extensibility into the design pipeline, the proposed approach provides a promising solution to modern challenges in wireless communication and radar engineering.

Author Contributions

Conceptualization, A.K., R.K. and R.G.; methodology, J.F. and I.E.G.; software, A.K., R.G. and W.A.; validation, I.E.G. and J.F.; formal analysis, L.B.A.; investigation, L.L.; resources, I.E.G.; data curation, A.K. and R.G.; writing (original draft preparation), A.K. and R.G.; writing (review and editing), L.B.A. and I.E.G.; visualization, R.G. and R.K.; supervision, L.B.A.; project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Umm Al-Qura University, Saudi Arabia, Grant Number 25UQU4361156GSSR03. The APC was funded by Umm Al-Qura University.

Data Availability Statement

The data supporting the findings of this study are available within the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SOM | Self-Organizing Map |
| PSO | Particle Swarm Optimization |
| RF | Radio Frequency |
| BMU | Best Matching Unit |
| IoT | Internet of Things |
| DACs | Digital-to-Analog Converters |
| ADCs | Analog-to-Digital Converters |
| SNR | Signal-to-Noise Ratio |
| MSE | Mean Squared Error |
| OA | Orthogonal Array |
| PAA | Phased Antenna Array |
| NN | Neural Network |
| 5G | Fifth Generation (referring to the fifth generation of wireless technology) |
| GHz | Gigahertz |
| LNA | Low-Noise Amplifier |
| HPA | High-Power Amplifier |
| PA | Power Amplifier |
| ADC | Analog-to-Digital Converter |
| DAC | Digital-to-Analog Converter |
| LO | Local Oscillator |
| Tx | Transmission |
| Rx | Reception |
| dB | Decibel |

Appendix A

Appendix A.1

Table A1 presents the phase excitations for each antenna element at observation angles ranging from 165° to 15°, synthesized using the Taylor method. This method focuses on controlling the sidelobe levels by adjusting the phase values of the antenna elements. The Taylor method optimizes the phase distribution to create a desired beam pattern while maintaining low sidelobe levels. The phase values in the table are determined to minimize the sidelobe amplitudes while ensuring that the main beam is directed toward the desired angle, enhancing directivity and performance.
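As a cross-check on the tabulated values, the entries of Table A1 appear numerically consistent with a linear progressive phase across a 16-element array with half-wavelength spacing, referenced to the array center. The sketch below encodes that observation; the half-wavelength spacing, the sign convention, and the function name are assumptions inferred from the numbers, not a description of the authors' procedure.

```python
import numpy as np


def steering_phases_deg(theta0_deg: float, n_elem: int = 16, spacing_wl: float = 0.5) -> np.ndarray:
    """Progressive element phases in degrees, wrapped to [-180, 180).

    theta0_deg is measured from the array axis, so 90 degrees is broadside.
    spacing_wl is the element spacing in wavelengths (assumed 0.5 here).
    """
    n = np.arange(n_elem) - (n_elem - 1) / 2.0  # element index centred on the array
    step = 360.0 * spacing_wl * np.cos(np.radians(theta0_deg))
    phases = -n * step
    return (phases + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    for angle in (165.0, 95.0, 85.0):
        print(angle, np.round(steering_phases_deg(angle)[:4], 3))
```

For example, the first three values computed for 165° agree with the tabulated 136.000, −50.133, and 123.733 to within rounding.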

Table A1.

Optimization of linear antenna array radiation patterns using Taylor method (16 elements).


Angles (degrees):

| 165 | 155 | 145 | 135 | 125 | 115 | 105 | 95 |
|---|---|---|---|---|---|---|---|
| 136.000 | −143.515 | −25.855 | 125.405 | −54.328 | 149.465 | 10.594 | −117.660 |
| −50.133 | 19.619 | 121.592 | −107.314 | 48.915 | −134.463 | 57.181 | −101.972 |
| 123.733 | −177.244 | −90.960 | 19.964 | 152.159 | −58.392 | 103.769 | −86.284 |
| −62.399 | −14.109 | 56.486 | 147.243 | −104.596 | 17.679 | 150.356 | −70.596 |
| 111.466 | 149.026 | −156.065 | −85.477 | −1.353 | 93.750 | −163.055 | −54.908 |
| −74.666 | −47.838 | −8.618 | 41.801 | 101.890 | 169.821 | −116.468 | −39.220 |
| 99.200 | 115.296 | 138.828 | 169.081 | −154.865 | −114.106 | −69.881 | −23.532 |
| −86.933 | −81.567 | −73.723 | −63.639 | −51.621 | −38.035 | −23.293 | −7.844 |
| 86.933 | 81.567 | 73.723 | 63.639 | 51.621 | 38.035 | 23.293 | 7.844 |
| −99.200 | −115.296 | −138.828 | −169.081 | 154.865 | 114.106 | 69.881 | 23.532 |
| 74.666 | 47.838 | 8.618 | −41.801 | −101.890 | −169.821 | 116.468 | 39.220 |
| −111.466 | −149.026 | 156.065 | 85.477 | 1.353 | −93.750 | 163.055 | 54.908 |
| 62.399 | 14.109 | −56.486 | −147.243 | 104.596 | −17.679 | −150.356 | 70.596 |
| −123.733 | 177.244 | 90.960 | −19.964 | −152.159 | 58.392 | −103.769 | 86.284 |
| 50.133 | −19.619 | −121.592 | 107.314 | −48.915 | 134.463 | −57.181 | 101.972 |
| −136.000 | 143.515 | 25.855 | −125.405 | 54.328 | −149.465 | −10.594 | 117.660 |

| Angles (Degrees) | |||||||

| 85 | 75 | 65 | 55 | 45 | 35 | 25 | 15 |

| 117.660 | −10.594 | −149.465 | 54.328 | −125.405 | 25.855 | 143.515 | −136.000 |

| 101.972 | −57.181 | 134.463 | −48.915 | 107.314 | −121.592 | −19.619 | 50.133 |

| 86.284 | −103.769 | 58.392 | −152.159 | −19.964 | 90.960 | 177.244 | −123.733 |

| 70.596 | −150.356 | −17.679 | 1.353 | −147.243 | −56.486 | 14.109 | 62.399 |

| 54.908 | 163.055 | −93.750 | −101.890 | 85.477 | 156.065 | −149.026 | −111.466 |

| 39.220 | 116.468 | −169.821 | 154.865 | −41.801 | 8.618 | 47.838 | 74.666 |

| 23.532 | 69.881 | 114.106 | 51.621 | −169.081 | −138.828 | −115.296 | −99.200 |

| 7.844 | 23.293 | 38.035 | −51.621 | 63.639 | 73.723 | 81.567 | 86.93 |

| −7.844 | −23.293 | −38.035 | 51.621 | −63.639 | −73.723 | −81.567 | −86.93 |

| −23.532 | −69.881 | −114.106 | −51.621 | 169.081 | 138.828 | 115.296 | 99.200 |

| −39.220 | −116.468 | 169.821 | −154.865 | 41.801 | −8.618 | −47.838 | −74.666 |

| −54.908 | −163.055 | 93.750 | 101.890 | −85.477 | −156.065 | 149.026 | 111.466 |

| −70.596 | 150.356 | 17.679 | −1.353 | 147.243 | 56.486 | −14.109 | −62.399 |

| −86.284 | 103.769 | −58.392 | 152.159 | 19.964 | −90.960 | −177.244 | 123.733 |

| −101.972 | 57.181 | −134.463 | 48.915 | −107.314 | 121.592 | 19.619 | −50.133 |

| −117.660 | 10.594 | 149.465 | −54.328 | 125.405 | −25.855 | −143.515 | 136.000 |
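To illustrate how a phase table of this kind can be generated, the following Python sketch computes a progressive steering phase for a 16-element uniform linear array. The half-wavelength spacing, the centered element index, the sign convention, and the function names `wrap_deg` and `steering_phases` are assumptions made for this illustration and are not stated in the appendix. The entries of Table A1 that were spot-checked (for example, the 165° and 95° columns) appear consistent with this simple model, but the sketch should not be read as the authors' synthesis procedure.

```python
import math


def wrap_deg(angle):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def steering_phases(theta0_deg, n_elements=16, spacing_wavelengths=0.5):
    """Progressive phase (degrees) per element for a beam steered to theta0_deg.

    Assumptions (not taken from the paper): uniform linear array, angle
    measured from the array axis, element index centered on the array midpoint.
    """
    kd_deg = 360.0 * spacing_wavelengths          # k*d expressed in degrees
    center = (n_elements + 1) / 2.0               # 8.5 for 16 elements
    step = -kd_deg * math.cos(math.radians(theta0_deg))
    return [wrap_deg((n - center) * step) for n in range(1, n_elements + 1)]


if __name__ == "__main__":
    for theta0 in (165, 155, 95):
        phases = steering_phases(theta0)
        # Print the first three element phases for a quick comparison.
        print(theta0, [round(p, 3) for p in phases[:3]])
```

Because the element index is centered, the phases are antisymmetric about the array midpoint, which matches the sign pattern visible between the upper and lower halves of each column.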

Appendix A.2

Synthesized excitations (weights).

| Elements | @−20 dB | @−30 dB | @−40 dB | @−50 dB |
|---|---|---|---|---|
| 1 | 0.116 | 0.035 | 0.010 | 0.003 |
| 2 | 0.156 | 0.076 | 0.039 | 0.020 |
| 3 | 0.196 | 0.129 | 0.086 | 0.057 |
| 4 | 0.233 | 0.190 | 0.151 | 0.119 |
| 5 | 0.267 | 0.252 | 0.228 | 0.203 |
| 6 | 0.295 | 0.310 | 0.307 | 0.298 |
| 7 | 0.317 | 0.358 | 0.376 | 0.386 |
| 8 | 0.330 | 0.388 | 0.423 | 0.449 |

This second table lists the synthesized excitation weights for each element at four sidelobe levels (−20 dB, −30 dB, −40 dB, and −50 dB), where the Taylor method tailors the amplitude distribution across the array. As the target sidelobe level is lowered, the weights become more concentrated toward the central elements, which improves the array's ability to focus energy and reduce interference. This optimizes the radiation pattern for applications such as radar, communication, and satellite systems.
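The trend described above can be checked directly from the tabulated values. The following Python sketch copies the weights for elements 1 to 8, mirrors them onto elements 9 to 16, and reports the total energy and the edge-to-center ratio for each sidelobe level. The mirroring (a symmetric taper) and the helper names `mirror`, `energy`, and `edge_to_center` are assumptions made for illustration; the appendix lists only eight elements and does not state a normalization.

```python
# Tabulated weights for elements 1-8, keyed by design sidelobe level in dB.
HALF_WEIGHTS = {
    -20: [0.116, 0.156, 0.196, 0.233, 0.267, 0.295, 0.317, 0.330],
    -30: [0.035, 0.076, 0.129, 0.190, 0.252, 0.310, 0.358, 0.388],
    -40: [0.010, 0.039, 0.086, 0.151, 0.228, 0.307, 0.376, 0.423],
    -50: [0.003, 0.020, 0.057, 0.119, 0.203, 0.298, 0.386, 0.449],
}


def mirror(half):
    """Assumption: elements 9-16 mirror elements 8-1 (symmetric taper)."""
    return half + half[::-1]


def energy(weights):
    """Sum of squared weights across the array."""
    return sum(w * w for w in weights)


def edge_to_center(half):
    """Ratio of the outermost weight to the innermost listed weight."""
    return half[0] / half[-1]


if __name__ == "__main__":
    for sll, half in HALF_WEIGHTS.items():
        full = mirror(half)
        print(f"{sll} dB: energy={energy(full):.4f}, "
              f"edge/center={edge_to_center(half):.4f}")
```

Under the mirroring assumption, the sum of squared weights comes out close to one for every column, which suggests a unit-energy normalization, and the edge-to-center ratio shrinks steadily as the sidelobe level is lowered.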

References

- Hammami, A.; Ghayoula, R.; Gharsallah, A. Antenna array synthesis with Chebyshev-Genetic Algorithm method. In Proceedings of the 2011 International Conference on Communications, Computing and Control Applications (CCCA), Hammamet, Tunisia, 3–5 March 2011.

- Mailloux, R.J. Phased Array Antenna Handbook, 2nd ed.; Artech House: Norwood, MA, USA, 2005.

- Ruze, J. Antenna tolerance theory—A review. Proc. IEEE 1966, 54, 633–640.

- Anselmi, N.; Manica, L.; Rocca, P.; Massa, A. Tolerance analysis of antenna arrays through interval arithmetic. IEEE Trans. Antennas Propag. 2013, 61, 5496–5507.

- Poli, L.; Rocca, P.; Anselmi, N.; Massa, A. Dealing with uncertainties on phase weighting of linear antenna arrays by means of interval-based tolerance analysis. IEEE Trans. Antennas Propag. 2015, 63, 3229–3234.

- Li, Z.; Zhang, H.; Chen, W.; Zhang, Y. A Generalizing Radiation Pattern Synthesis Method for Conformal Antenna Arrays Based on Convolutional Neural Network. IEEE Trans. Antennas Propag. 2022, 70, 1234–1244. Available online: https://ieeexplore.ieee.org/document/9935193 (accessed on 4 November 2022).

- Lovato, R.; Gong, X. Phased Antenna Array Beamforming using Convolutional Neural Networks. In Proceedings of the 2019 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting, Atlanta, GA, USA, 7–12 July 2019; Available online: https://ieeexplore.ieee.org/document/8888573 (accessed on 31 October 2019).

- Tang, X.; Wang, L. A Fast Pattern Synthesis Method for Conformal Antenna Array Based on Combined Convex Optimization and Neural Network. In Proceedings of the 2024 International Applied Computational Electromagnetics Society Symposium (ACES-China), Xi’an, China, 16–19 August 2024; Available online: https://ieeexplore.ieee.org/document/10699598 (accessed on 4 October 2024).

- Haupt, R.L.; Rahmat-Samii, Y. Antenna Array Developments: A Perspective on the Past, Present and Future. IEEE Antennas Propag. Mag. 2015, 57, 86–96. Available online: https://ieeexplore.ieee.org/document/7050249 (accessed on 26 February 2015).

- Fowler, C.A. Old radar types never die, they just phased array or 55 years of trying to avoid mechanical scan. IEEE Aerosp. Electron. Syst. Mag. 1998, 13, 24A–24L. Available online: https://ieeexplore.ieee.org/document/715527 (accessed on 6 August 2002).

- Fenn, A.J.; Temme, D.H.; Delaney, W.P.; Courtney, W.E. The Development of Phased Array Radar Technology. Linc. Lab. J. 2000, 12, 321–340.

- Hammami, A.; Ghayoula, R.; Gharsallah, A. Design of Concentric Ring Arrays for Low Side Lobe Level Using SQP Algorithm. J. Electromagn. Waves Appl. 2013, 27, 858–867.

- Parker, D.; Zimmermann, D.C. Phased Arrays, Part I: Theory and Architectures. IEEE Trans. Microw. Theory Tech. 2002, 53, 678–687.

- Parker, D.; Zimmermann, D.C. Phased Arrays, Part II: Implementations, Applications, and Future Trends. IEEE Trans. Microw. Theory Tech. 2002, 53, 688–698.

- Poon, A.S.Y.; Taghivand, M. Supporting and Enabling Circuits for Antenna Arrays in Wireless Communications. Proc. IEEE 2012, 100, 2207–2218.

- Brookner, E. Developments and Breakthroughs in Radars and Phased-Arrays. In Proceedings of the 2016 IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

- He, G.; Gao, X.; Zhang, R. Impact Analysis and Calibration Methods of Excitation Errors for Phased Array Antennas. IEEE Access 2021, 9, 59010–59026. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9404182 (accessed on 14 April 2021).

- Li, P.; Wang, C.; Xu, W.; Song, L. Taylor Expansion and Matrix-Based Interval Analysis of Linear Arrays With Patch Element Pattern Tolerance. IEEE Access 2021, 9, 24059–24068.

- Jacob, M.J.; Lee, P.C. Synthesis of Antenna Arrays with Chebyshev Beam Patterns. IEEE Trans. Antennas Propag. 1994, 42, 1067–1071.

- Kennedy, J.; Eberhart, R.C. Particle Swarm Optimization. Proc. IEEE Int. Conf. Neural Netw. 1995, 4, 1942–1948.

- Goldberg, D.E. Genetic Algorithms in Search, Optimization, and Machine Learning; Addison-Wesley: Reading, MA, USA, 1989.

- Brown, A.D. Active Electronically Scanned Arrays: Fundamentals and Applications; Wiley-IEEE Press: Hoboken, NJ, USA, 2021; ISBN 978-1-119-74905-9.

- Brown, A.D. (Ed.) Electronically Scanned Arrays: MATLAB Modeling and Simulation, 1st ed.; CRC Press: Boca Raton, FL, USA, 2012; ISBN 9781315217130.

- Milligan, T.A. Modern Antenna Design, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2005; ISBN 0471720607.

- Balanis, C.A. Antenna Theory: Analysis and Design, 4th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2015; pp. 302–303. ISBN 978-1119178989.

- Stutzman, W.L.; Thiele, G.A. Antenna Theory and Design; John Wiley & Sons: Hoboken, NJ, USA, 2012; p. 315. ISBN 978-0470576649.

- Lida, T. Satellite Communications: System and Its Design Technology; IOS Press: Amsterdam, The Netherlands, 2000; ISBN 4274903796.

- Laplante, P.A. Comprehensive Dictionary of Electrical Engineering; Springer Science and Business Media: Berlin/Heidelberg, Germany, 1999; ISBN 3540648356.

- Gargouri, L.; Ghayoula, R.; Fadlallah, N.; Gharsallah, A.; Rammal, M. Steering an adaptive antenna array by LMS algorithm. In Proceedings of the 2009 16th IEEE International Conference on Electronics, Circuits and Systems (ICECS 2009), Hammamet, Tunisia, 13–16 December 2009.

- Hammami, A.; Ghayoula, R.; Gharsallah, A. Planar array antenna pattern nulling based on sequential quadratic programming (SQP) algorithm. In Proceedings of the Eighth International Multi-Conference on Systems, Signals & Devices, Sousse, Tunisia, 21–24 March 2011.

- Kheder, R.; Ghayoula, R.; Smida, A.; El Gmati, I.; Latrach, L.; Amara, W.; Hammami, A.; Fattahi, J.; Waly, M.I. Enhancing Beamforming Efficiency Utilizing Taguchi Optimization and Neural Network Acceleration. Telecom 2024, 5, 451–475.

- Manikas, A. EE3-27: Principles of Classical and Modern Radar Phased-Array Radar; Imperial College London: London, UK, 2020.

- Sun, J. A Novel Design of 45° Linearly Polarized Array Antenna with Taylor Distribution. Prog. Electromagn. Res. Lett. 2022, 106, 151–155.

- Taylor, T.T. Design of line source antennas for narrow beamwidth and low sidelobes. IRE Trans. Antennas Propag. 1955, AP-7, 16–28.

- Kavya, K.C.S.; Devi, Y.N.S.; Kumar, G.S.; Neupane, N. Performance evaluation of array antennas. Int. J. Mod. Eng. Res. (IJMER) 2012, 1, 510–515.

- Ghayoula, R. Contribution à l’Optimisation de la Synthèse des Antennes Intelligentes par les Réseaux de Neurones. Ph.D. Thesis, Université Tunis El Manar, Tunis, Tunisia, 2008.

- Nemri, N.; Smida, A.; Ghayoula, R.; Trabelsi, H.; Gharsallah, A. Phase-only array beam control using a Taguchi optimization method. In Proceedings of the 2011 11th Mediterranean Microwave Symposium (MMS), Hammamet, Tunisia, 6–8 November 2011; Available online: https://ieeexplore.ieee.org/abstract/document/6068537 (accessed on 3 November 2011).

- Cui, Y.; Li, R.; Fu, H. A broadband dual-polarized planar antenna for 2G/3G/LTE base stations. IEEE Trans. Antennas Propag. 2014, 62, 4836–4840. Available online: https://ieeexplore.ieee.org/document/6832541 (accessed on 12 June 2014).

- Wen, L.-H.; Gao, S.; Luo, Q.; Mao, C.-X.; Hu, W.; Yin, Y. Compact dual-polarized shared-dipole antennas for base station applications. IEEE Trans. Antennas Propag. 2018, 66, 6826–6834. Available online: https://ieeexplore.ieee.org/document/8470132 (accessed on 23 September 2018).

- Ali, M.M.M.; Afifi, I.; Sebak, A.-R. A dual-polarized magneto-electric dipole antenna based on printed ridge gap waveguide technology. IEEE Trans. Antennas Propag. 2020, 68, 7589–7594. Available online: https://ieeexplore.ieee.org/abstract/document/9040868 (accessed on 18 March 2020).

- Sun, L.; Li, Y.; Zhang, Z.; Wang, H. Self-decoupled MIMO antenna pair with shared radiator for 5G smartphones. IEEE Trans. Antennas Propag. 2020, 68, 3423–3432.

- Li, Y.; Zhao, Z.; Tang, Z.; Yin, Y. Differentially fed dual-band dual-polarized filtering antenna with high selectivity for 5G sub-6 GHz base station applications. IEEE Trans. Antennas Propag. 2020, 68, 3231–3236.

- Costantine, J.; Tawk, Y.; Barbin, S.E.; Christodoulou, C.G. Reconfigurable antennas: Design and applications. Proc. IEEE 2015, 103, 424–437.

- Tang, M.-C.; Duan, Y.; Wu, Z.; Chen, X.; Li, M.; Ziolkowski, R.W. Pattern reconfigurable vertically polarized low-profile compact near-field resonant parasitic antenna. IEEE Trans. Antennas Propag. 2019, 67, 1467–1475.

- Qin, P.; Song, L.; Guo, Y.J. Beam steering conformal transmitarray employing ultra-thin triple-layer slot elements. IEEE Trans. Antennas Propag. 2019, 67, 5390–5398.

- Peng, J.-J.; Qu, S.-W.; Xia, M.; Yang, S. Conformal phased array antenna for unmanned aerial vehicle with ±70° scanning range. IEEE Trans. Antennas Propag. 2021, 69, 4580–4587.

- Ouerghi, K.; Smida, A.; Ghayoula, R.; Boulejfen, N. Design and analysis of a microstrip antenna array for biomedical applications. In Proceedings of the 2017 IEEE International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Fez, Morocco, 22–24 May 2017.

- Ez-zaki, F.; Belaid, K.A.; Ahmad, S.; Belahrach, H.; Ghammaz, A.; Al-Gburi, A.J.A.; Parchin, N.O. Circuit Modelling of Broadband Antenna Using Vector Fitting and Foster Form Approaches for IoT Applications. Electronics 2022, 11, 3724.

- Alamro, W.; Seet, B.-C.; Wang, L.; Parthiban, P. Design and Equivalent Circuit Model Extraction of a Fractal Slot-Loaded 3–40 GHz Super Wideband Antenna. Electronics 2024, 13, 4380.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).