RA-CottNet: A Real-Time High-Precision Deep Learning Model for Cotton Boll and Flower Recognition

Abstract

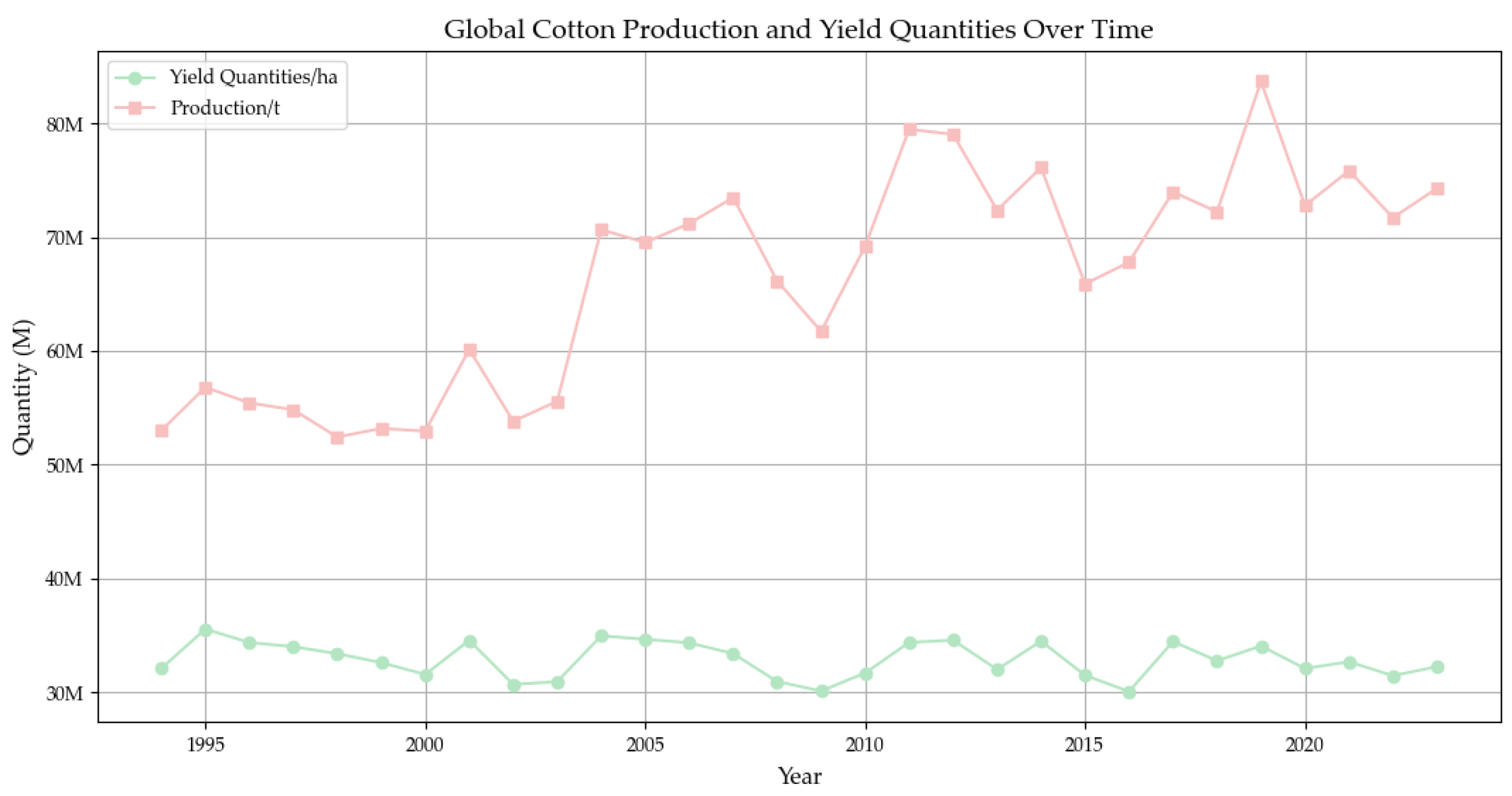

1. Introduction

- Open data resources to support follow-up research: To address the scarcity of high-quality cotton boll imagery, we curated a dataset from existing acquisitions and annotations. Both the dataset and the training configuration files are openly available to the research community.

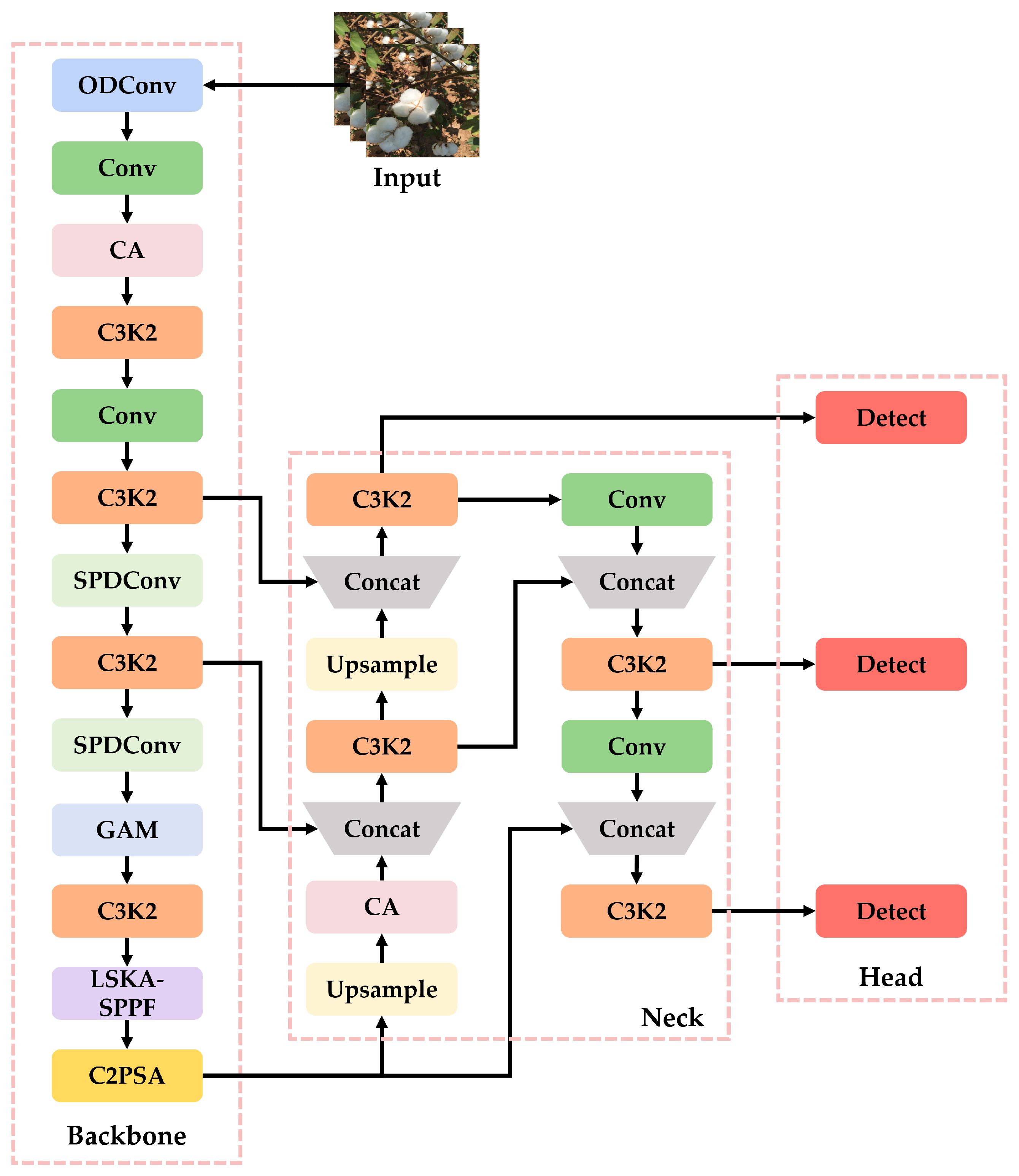

- Proposed ODConv–SPDConv detection framework: To address the rotated, small-scale characteristics of cotton bolls in field scenes, we integrated Omni-Dimensional Dynamic Convolution (ODConv) and Space-to-Depth Convolution (SPDConv) into the architecture for the first time, enhancing multi-angle and multi-scale feature representation and improving detection accuracy in complex environments.

- Multi-attention mechanisms for stronger aggregation and representation: We incorporated a Global Attention Mechanism (GAM), Coordinate Attention (CA), and Large Separable Kernel Attention (LSKA) to strengthen global context capture and fine-grained spatial feature extraction, mitigating the weak visual features of cotton boll targets.

- Empirical validation with significant performance gains: RA-CottNet performed strongly on cotton boll detection, achieving a Precision, Recall, mAP50, mAP95, and F1-Score of 93.683%, 86.040%, 93.496%, 72.857%, and 89.692%, respectively, while maintaining stable detection under multi-scale and rotational disturbances.
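The space-to-depth step behind SPDConv can be illustrated with a minimal sketch. It assumes a single-channel feature map stored as nested Python lists and a downsampling factor of 2. The function name `space_to_depth` and the toy `grid` are hypothetical and are not taken from the RA-CottNet code. In the published SPD-Conv design the rearranged sub-maps are stacked along the channel axis and passed to a non-strided convolution, which is omitted here.

```python
def space_to_depth(feature_map, scale=2):
    """Split an H x W map into scale*scale sub-maps of size (H/scale) x (W/scale).

    No value is discarded: spatial resolution is traded for channel depth,
    which is why the operation suits small objects better than strided pooling.
    """
    height = len(feature_map)
    width = len(feature_map[0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")
    sub_maps = []
    for dy in range(scale):          # row offset inside each scale x scale cell
        for dx in range(scale):      # column offset inside each cell
            sub_maps.append([row[dx::scale] for row in feature_map[dy::scale]])
    return sub_maps


# Hypothetical 4 x 4 single-channel feature map.
grid = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
sub_maps = space_to_depth(grid)
for index, sub_map in enumerate(sub_maps):
    print(index, sub_map)
```

Each of the four 2 × 2 sub-maps keeps one pixel from every 2 × 2 cell, so all 16 input values survive the downsampling.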

2. Materials and Methods

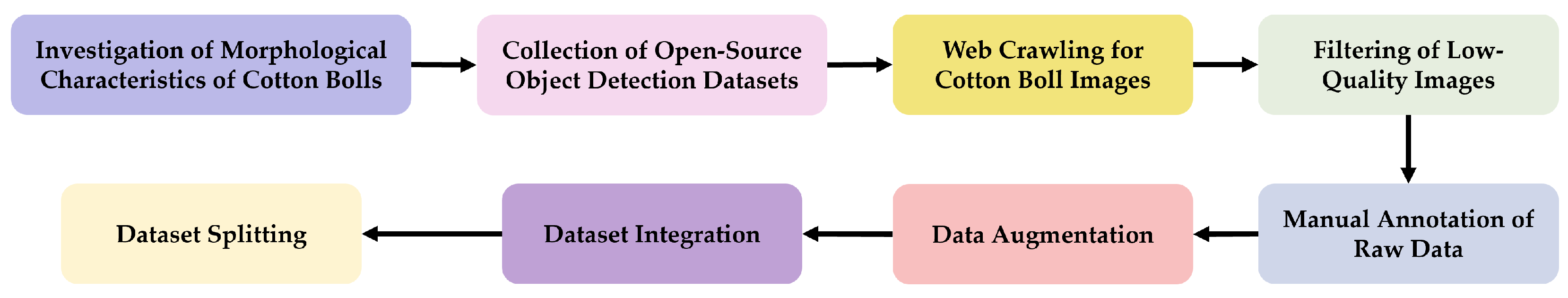

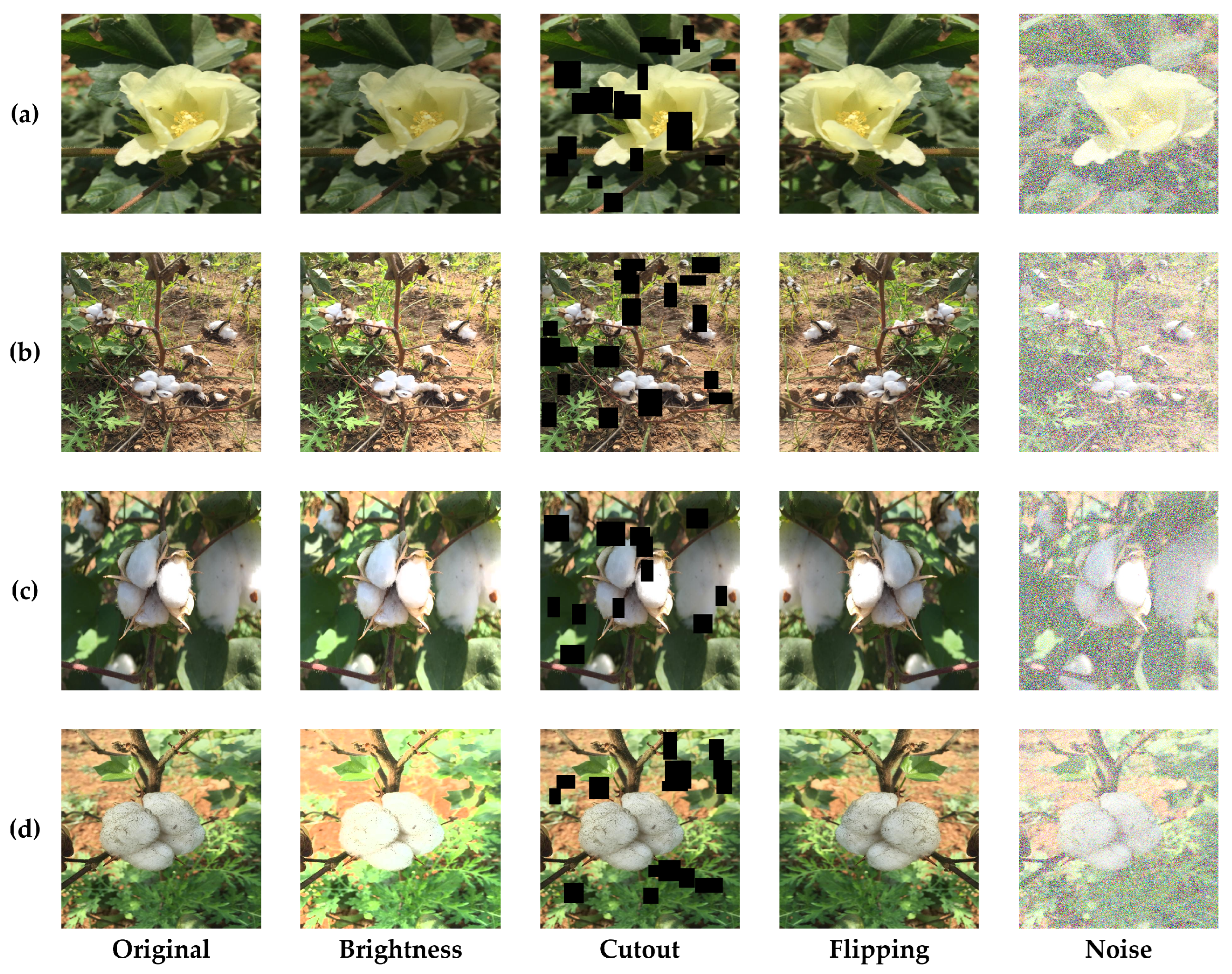

2.1. Dataset

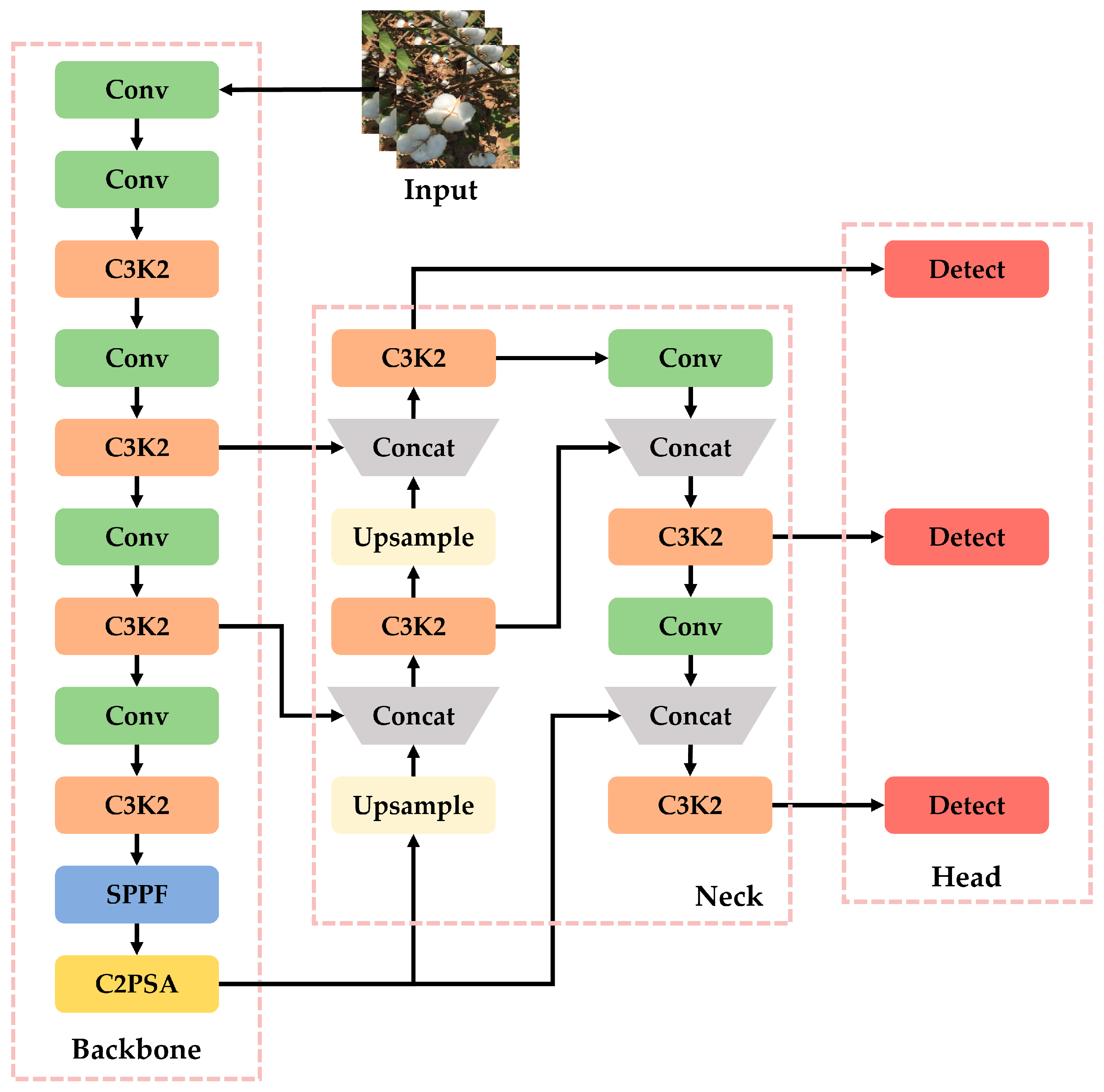

2.2. YOLOv11n

2.3. Convolutions

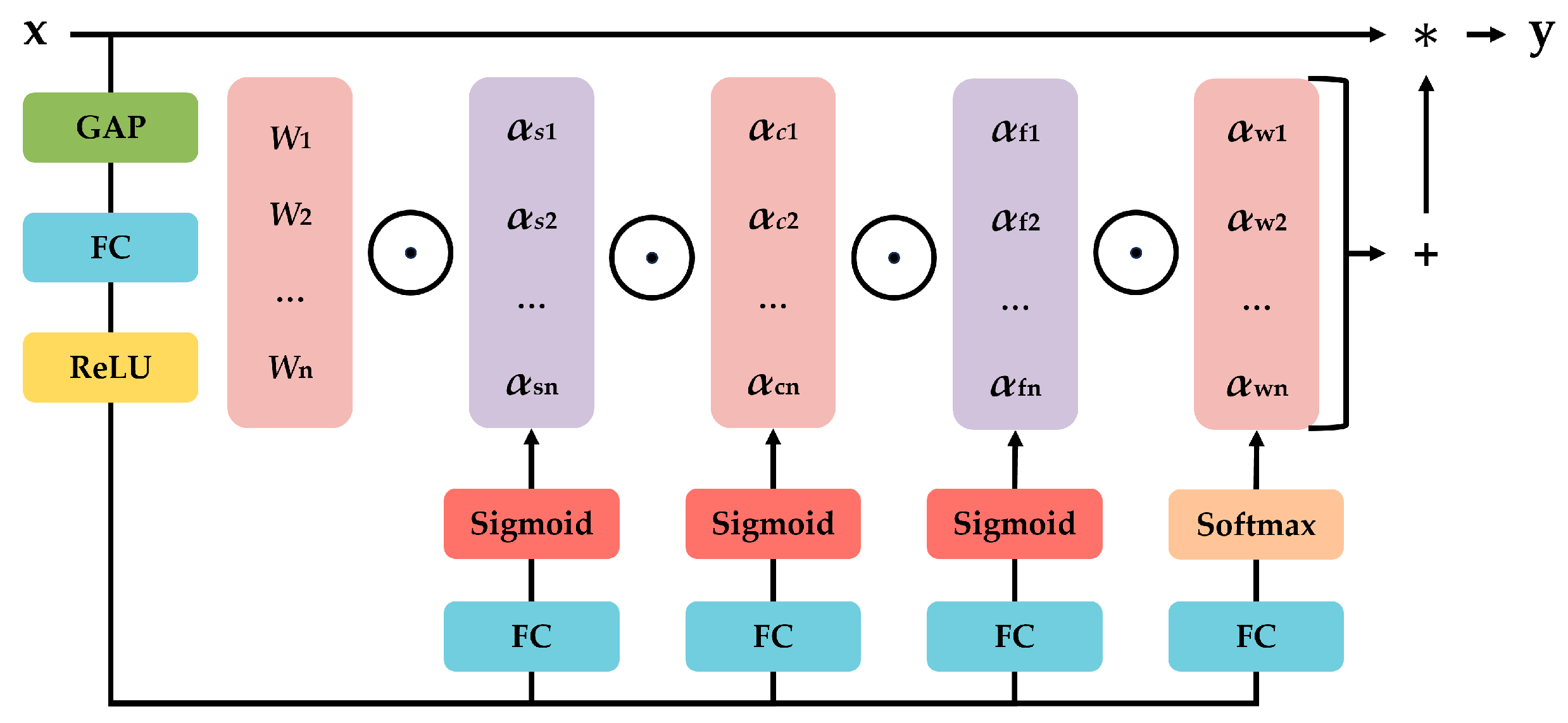

2.3.1. Omni-Dimensional Dynamic Convolution

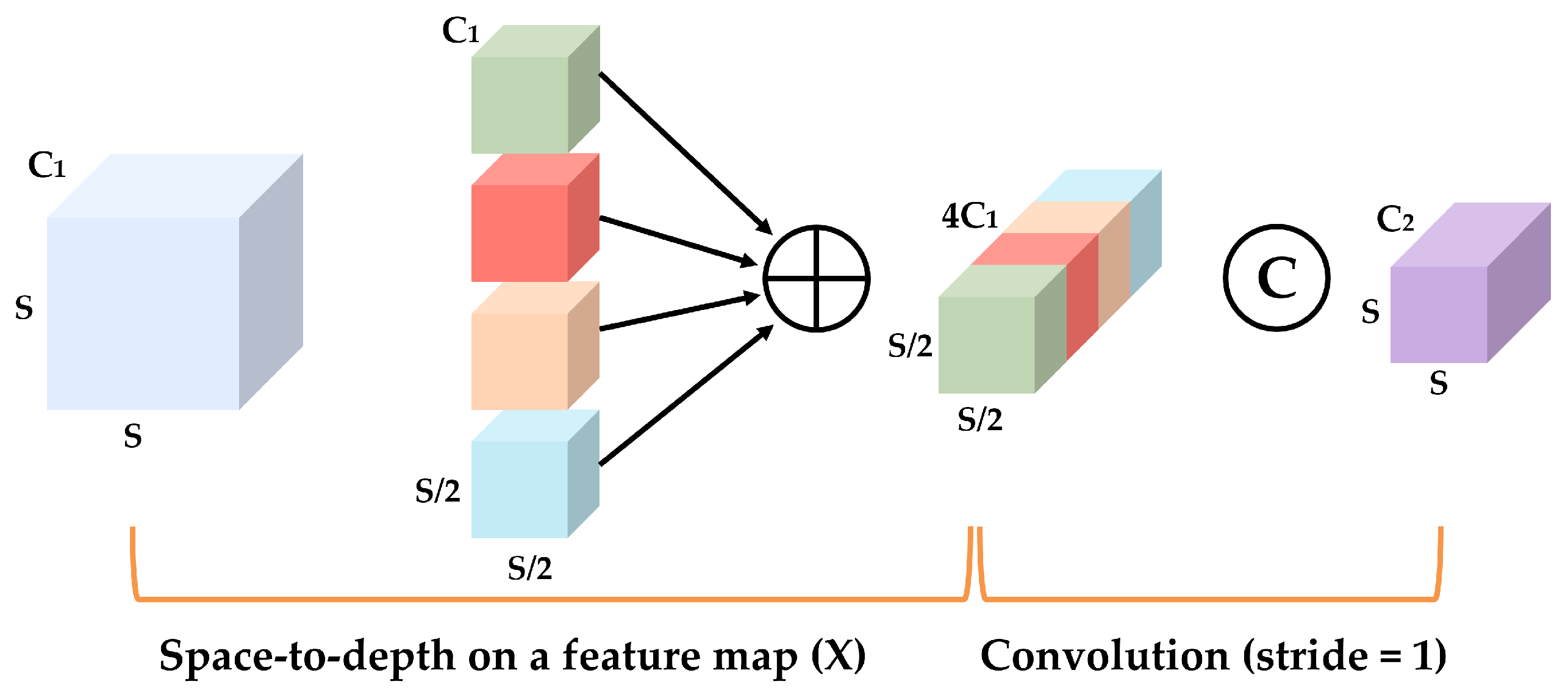

2.3.2. Space-to-Depth Convolution

2.4. Attention Mechanisms

2.4.1. Coordinate Attention Mechanism

2.4.2. Optimized Global Attention Mechanism

2.4.3. Large Separable Kernel Attention

2.5. Proposed Model (RA-CottNet)

3. Experiments and Evaluation Metrics

3.1. Model Training Device and Parameter Setup

3.2. Model Evaluation Experiment

3.2.1. Baseline Models for Comparative Evaluation

3.2.2. Evaluation Metrics

3.3. Ablation Experiment

4. Results

4.1. Results of Model Evaluation Experiment

4.2. Results of Ablation Experiment

5. Discussion

5.1. Advantages

5.2. Challenges

5.3. Future Perspectives

- Boost Recall and performance in complex scenes: While maintaining high Precision, introduce lightweight attention or improved feature fusion strategies [27] to raise Recall and reduce missed detections, especially under weak textures and heavy occlusion.

- Enhance robustness via multimodal fusion: Integrate RGB, near-infrared (NIR), and hyperspectral imagery to handle challenging illumination and background variation, thereby improving adaptability and stability across diverse cotton field conditions.

- Deployment and lightweight optimization: Apply pruning, quantization, and knowledge distillation at deployment to cut computational cost and meet real-time inference needs on resource-limited edge devices such as UAVs and handheld terminals.

- Cross-domain generalization and large-scale testing: Conduct extensive field trials across regions, climates, and cotton varieties to systematically assess cross-domain generalization and ensure stable performance of RA-CottNet in varied agricultural production scenarios.
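As a rough illustration of the quantization step mentioned among the deployment options above, the sketch below applies symmetric per-tensor int8 quantization to a short list of weights. It is a generic textbook scheme under stated assumptions, not the authors' deployment pipeline. The names `quantize_int8` and `dequantize` and the sample `weights` are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.4, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Storing each weight in 8 bits instead of 32 reduces memory roughly fourfold, at the cost of a bounded rounding error; whether accuracy holds for a given detector has to be checked empirically.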

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Scarpin, G.J.; Bhattarai, A.; Hand, L.C.; Snider, J.L.; Roberts, P.M.; Bastos, L.M. Cotton lint yield and quality variability in Georgia, USA: Understanding genotypic and environmental interactions. Field Crops Res. 2025, 325, 109822.

- Yang, Z.Y.; Xia, W.K.; Chu, H.Q.; Su, W.H.; Wang, R.F.; Wang, H. A comprehensive review of deep learning applications in cotton industry: From field monitoring to smart processing. Plants 2025, 14, 1481.

- Smith, C.W.; Cothren, J.T. Cotton: Origin, History, Technology, and Production; John Wiley & Sons: Hoboken, NJ, USA, 1999.

- Huang, G.; Huang, J.Q.; Chen, X.Y.; Zhu, Y.X. Recent advances and future perspectives in cotton research. Annu. Rev. Plant Biol. 2021, 72, 437–462.

- Fortucci, P. The contribution of cotton to economy and food security in developing countries. In Proceedings of the Cotton and Global Trade Negotiations Sponsored by the World Bank and ICAC Conference, Washington, DC, USA, 8–9 July 2002; Volume 8, pp. 8–9.

- Mathangadeera, R.W.; Hequet, E.F.; Kelly, B.; Dever, J.K.; Kelly, C.M. Importance of cotton fiber elongation in fiber processing. Ind. Crops Prod. 2020, 147, 112217.

- Krifa, M.; Stevens, S.S. Cotton utilization in conventional and non-conventional textiles—A statistical review. Agric. Sci. 2016, 7, 747–758.

- Shahriari Khalaji, M.; Lugoloobi, I. Biomedical application of cotton and its derivatives. In Cotton Science and Processing Technology: Gene, Ginning, Garment and Green Recycling; Springer: Singapore, 2020; pp. 393–416.

- Gu, S.; Sun, S.; Wang, X.; Wang, S.; Yang, M.; Li, J.; Maimaiti, P.; van der Werf, W.; Evers, J.B.; Zhang, L. Optimizing radiation capture in machine-harvested cotton: A functional-structural plant modelling approach to chemical vs. manual topping strategies. Field Crops Res. 2024, 317, 109553.

- Ibrahim, A.A.; Hamoda, S. Effect of planting and harvesting dates on the physiological characteristics of cotton-seed quality. J. Plant Prod. 2021, 12, 1295–1299.

- Sanjay, N.A.; Venkatramani, N.; Harinee, V.; Dinesh, V. Cotton harvester through the application of machine learning and image processing techniques. Mater. Today Proc. 2021, 47, 2200–2205.

- Pabuayon, I.L.B.; Sun, Y.; Guo, W.; Ritchie, G.L. High-throughput phenotyping in cotton: A review. J. Cotton Res. 2019, 2, 18.

- Zhang, Z.; Janvekar, N.A.S.; Feng, P.; Bhaskar, N. Graph-Based Detection of Abusive Computational Nodes. U.S. Patent 12,223,056, 11 February 2025.

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.; Arvanitis, K.; Sygrimis, N. Sustainable energy management of solar greenhouses using open weather data on MACQU platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82.

- Qin, Y.M.; Tu, Y.H.; Li, T.; Ni, Y.; Wang, R.F.; Wang, H. Deep Learning for sustainable agriculture: A systematic review on applications in lettuce cultivation. Sustainability 2025, 17, 3190.

- Zhou, Y.; Xia, H.; Yu, D.; Cheng, J.; Li, J. Outlier detection method based on high-density iteration. Inf. Sci. 2024, 662, 120286.

- Wang, J.X.; Fan, L.F.; Wang, H.H.; Zhao, P.F.; Li, H.; Wang, Z.Y.; Huang, L. Determination of the moisture content of fresh meat using visible and near-infrared spatially resolved reflectance spectroscopy. Biosyst. Eng. 2017, 162, 40–56.

- Tan, L.; Lu, J.; Jiang, H. Tomato leaf diseases classification based on leaf images: A comparison between classical machine learning and deep learning methods. AgriEngineering 2021, 3, 542–558.

- Cui, K.; Tang, W.; Zhu, R.; Wang, M.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Fine, P.; et al. Efficient Localization and Spatial Distribution Modeling of Canopy Palms Using UAV Imagery. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4413815.

- Wu, A.Q.; Li, K.L.; Song, Z.Y.; Lou, X.; Hu, P.; Yang, W.; Wang, R.F. Deep Learning for Sustainable Aquaculture: Opportunities and Challenges. Sustainability 2025, 17, 5084.

- Nagano, S.; Moriyuki, S.; Wakamori, K.; Mineno, H.; Fukuda, H. Leaf-movement-based growth prediction model using optical flow analysis and machine learning in plant factory. Front. Plant Sci. 2019, 10, 227.

- Sliwa, B.; Piatkowski, N.; Wietfeld, C. LIMITS: Lightweight machine learning for IoT systems with resource limitations. In Proceedings of the ICC 2020–2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–7.

- Zhang, S.; Zhao, K.; Huo, Y.; Yao, M.; Xue, L.; Wang, H. Mushroom image classification and recognition based on improved ConvNeXt V2. J. Food Sci. 2025, 90, e70133.

- Lu, W.; Wang, J.; Wang, T.; Zhang, K.; Jiang, X.; Zhao, H. Visual style prompt learning using diffusion models for blind face restoration. Pattern Recognit. 2025, 161, 111312.

- Zhou, Y.; Liu, C.; Urgaonkar, B.; Wang, Z.; Mueller, M.; Zhang, C.; Zhang, S.; Pfeil, P.; Horn, D.; Liu, Z.; et al. PBench: Workload Synthesizer with Real Statistics for Cloud Analytics Benchmarking. arXiv 2025, arXiv:2506.16379.

- Yao, S.; Guan, R.; Wu, Z.; Ni, Y.; Huang, Z.; Liu, R.W.; Yue, Y.; Ding, W.; Lim, E.G.; Seo, H.; et al. Waterscenes: A multi-task 4d radar-camera fusion dataset and benchmarks for autonomous driving on water surfaces. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16584–16598.

- Wang, Z.; Zhang, H.W.; Dai, Y.Q.; Cui, K.; Wang, H.; Chee, P.W.; Wang, R.F. Resource-Efficient Cotton Network: A Lightweight Deep Learning Framework for Cotton Disease and Pest Classification. Plants 2025, 14, 2082.

- Cynthia, E.P.; Ismanto, E.; Arifandy, M.I.; Sarbaini, S.; Nazaruddin, N.; Manuhutu, M.A.; Akbar, M.A.; Abdiyanto. Convolutional Neural Network and Deep Learning Approach for Image Detection and Identification. J. Phys. Conf. Ser. 2022, 2394, 012019.

- Cui, K.; Zhu, R.; Wang, M.; Tang, W.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Lutz, D.; et al. Detection and geographic localization of natural objects in the wild: A case study on palms. arXiv 2025, arXiv:2502.13023.

- Wang, R.F.; Su, W.H. The application of deep learning in the whole potato production Chain: A Comprehensive review. Agriculture 2024, 14, 1225.

- Sun, L.; Cui, X.; Fan, X.; Suo, X.; Fan, B.; Zhang, X. Automatic detection of pesticide residues on the surface of lettuce leaves using images of feature wavelengths spectrum. Front. Plant Sci. 2023, 13, 929999.

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; de Souza Belete, N.A.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

- Machefer, M.; Lemarchand, F.; Bonnefond, V.; Hitchins, A.; Sidiropoulos, P. Mask R-CNN refitting strategy for plant counting and sizing in UAV imagery. Remote Sens. 2020, 12, 3015.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

- Darwin, B.; Dharmaraj, P.; Prince, S.; Popescu, D.E.; Hemanth, D.J. Recognition of bloom/yield in crop images using deep learning models for smart agriculture: A review. Agronomy 2021, 11, 646.

- Di, X.; Cui, K.; Wang, R.F. Toward Efficient UAV-Based Small Object Detection: A Lightweight Network with Enhanced Feature Fusion. Remote Sens. 2025, 17, 2235.

- Huo, Y.; Wang, R.F.; Zhao, C.T.; Hu, P.; Wang, H. Research on Obtaining Pepper Phenotypic Parameters Based on Improved YOLOX Algorithm. AgriEngineering 2025, 7, 209.

- Cardellicchio, A.; Renò, V.; Cellini, F.; Summerer, S.; Petrozza, A.; Milella, A. Incremental Learning with Domain Adaption for Tomato Plant Phenotyping. Smart Agric. Technol. 2025, 12, 101324.

- Wang, R.; Chen, Y.; Zhang, G.; Yang, C.; Teng, X.; Zhao, C. YOLO11-PGM: High-Precision Lightweight Pomegranate Growth Monitoring Model for Smart Agriculture. Agronomy 2025, 15, 1123.

- Liu, Q.; Zhang, Y.; Yang, G. Small unopened cotton boll counting by detection with MRF-YOLO in the wild. Comput. Electron. Agric. 2023, 204, 107576.

- Zhang, M.; Chen, W.; Gao, P.; Li, Y.; Tan, F.; Zhang, Y.; Ruan, S.; Xing, P.; Guo, L. YOLO SSPD: A small target cotton boll detection model during the boll-spitting period based on space-to-depth convolution. Front. Plant Sci. 2024, 15, 1409194.

- Xiang, L.; Ruoxue, X.; Chenglong, B.; Min, T.; Mingtian, T.; Kaiwen, H. Lightweight Cotton Boll Detection Model and Yield Prediction Method Based on Improved YOLO v8. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2025, 56, 130–140.

- Yu, G.; Ma, B.; Zhang, R.; Xu, Y.; Lian, Y.; Dong, F. CPD-YOLO: A cross-platform detection method for cotton pests and diseases using UAV and smartphone imaging. Ind. Crops Prod. 2025, 234, 121515.

- Khan, M.A.; Wahid, A.; Ahmad, M.; Tahir, M.T.; Ahmed, M.; Ahmad, S.; Hasanuzzaman, M. World cotton production and consumption: An overview. In Cotton Production and Uses: Agronomy, Crop Protection, and Postharvest Technologies; Springer: Cham, Switzerland, 2020; pp. 1–7.

- Hidayatullah, P.; Syakrani, N.; Sholahuddin, M.R.; Gelar, T.; Tubagus, R. YOLOv8 to YOLO11: A comprehensive architecture in-depth comparative review. arXiv 2025, arXiv:2501.13400.

- Alkhammash, E.H. Multi-classification using YOLOv11 and hybrid YOLO11n-MobileNet models: A fire classes case study. Fire 2025, 8, 17.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Wang, R.F.; Tu, Y.H.; Li, X.C.; Chen, Z.Q.; Zhao, C.T.; Yang, C.; Su, W.H. An Intelligent Robot Based on Optimized YOLOv11l for Weed Control in Lettuce. In Proceedings of the 2025 ASABE Annual International Meeting. American Society of Agricultural and Biological Engineers, Toronto, ON, Canada, 13–16 July 2025; p. 1.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Li, Y.; Ren, F. Light-weight retinanet for object detection. arXiv 2019, arXiv:1905.10011.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Zhao, Z.; Chen, S.; Ge, Y.; Yang, P.; Wang, Y.; Song, Y. Rt-detr-tomato: Tomato target detection algorithm based on improved rt-detr for agricultural safety production. Appl. Sci. 2024, 14, 6287.

- Xie, W.; Zhao, M.; Liu, Y.; Yang, D.; Huang, K.; Fan, C.; Wang, Z. Recent advances in Transformer technology for agriculture: A comprehensive survey. Eng. Appl. Artif. Intell. 2024, 138, 109412.

- Li, C.; Zhou, A.; Yao, A. Omni-dimensional dynamic convolution. arXiv 2022, arXiv:2209.07947.

- Qian, J.; Lin, J.; Bai, D.; Xu, R.; Lin, H. Omni-dimensional dynamic convolution meets bottleneck transformer: A novel improved high accuracy forest fire smoke detection model. Forests 2023, 14, 838.

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Grenoble, France, 19–23 September 2022; Springer: Cham, Switzerland, 2022; pp. 443–459.

- Sun, R.; Fan, H.; Tang, Y.; He, Z.; Xu, Y.; Wu, E. Research on small target detection algorithm for UAV inspection scene based on SPD-conv. In Proceedings of the Fourth International Conference on Computer Vision and Data Mining (ICCVDM 2023), Changchun, China, 20–22 October 2023; SPIE: Bellingham, WA, USA, 2024; Volume 13063, pp. 686–691.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

- Agarap, A.F. Deep learning using rectified linear units (relu). arXiv 2018, arXiv:1803.08375.

- Lau, K.W.; Po, L.M.; Rehman, Y.A.U. Large separable kernel attention: Rethinking the large kernel attention design in cnn. Expert Syst. Appl. 2024, 236, 121352.

- Tie, J.; Zhu, C.; Zheng, L.; Wang, H.; Ruan, C.; Wu, M.; Xu, K.; Liu, J. LSKA-YOLOv8: A lightweight steel surface defect detection algorithm based on YOLOv8 improvement. Alex. Eng. J. 2024, 109, 201–212.

- Deng, L.; Wu, S.; Zhou, J.; Zou, S.; Liu, Q. LSKA-YOLOv8n-WIoU: An Enhanced YOLOv8n Method for Early Fire Detection in Airplane Hangars. Fire 2025, 8, 67.

- Wu, X.X.; Liu, J.G. A new early stopping algorithm for improving neural network generalization. In Proceedings of the 2009 Second International Conference on Intelligent Computation Technology and Automation, Washington, DC, USA, 10–11 October 2009; Volume 1, pp. 15–18.

- He, L.h.; Zhou, Y.z.; Liu, L.; Cao, W.; Ma, J.h. Research on object detection and recognition in remote sensing images based on YOLOv11. Sci. Rep. 2025, 15, 14032.

- Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Tian, Y.; Ye, Q.; Doermann, D. Yolov12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

- Zhang, Y.; Wang, X.; Hu, J.; Zhang, J.; Zhu, P.; Lu, W. Research on small target detection in complex substation environments based on an end-to-end improved RT-DETR model. In Proceedings of the International Conference on Mechatronic Engineering and Artificial Intelligence (MEAI 2024), Shenyang, China, 13–15 December 2024; SPIE: Bellingham, WA, USA, 2025; Volume 13555, pp. 813–821.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Kong, Y.; Shang, X.; Jia, S. Drone-DETR: Efficient small object detection for remote sensing image using enhanced RT-DETR model. Sensors 2024, 24, 5496.

- Wu, M.; Qiu, Y.; Wang, W.; Su, X.; Cao, Y.; Bai, Y. Improved RT-DETR and its application to fruit ripeness detection. Front. Plant Sci. 2025, 16, 1423682.

| Model | P (%) | R (%) | F1 (%) | mAP50 (%) | mAP95 (%) | Time (s) |
|---|---|---|---|---|---|---|
| YOLOv11n | 89.183 | 88.144 | 88.661 | 92.949 | 73.790 | 5019.29 |
| YOLOv11s | 89.227 | 90.419 | 89.818 | 93.442 | 73.550 | 2995.95 |
| YOLOv11m | 91.923 | 89.550 | 90.723 | 93.097 | 73.204 | 5713.89 |
| YOLOv11l | 89.985 | 89.515 | 89.749 | 93.471 | 73.310 | 7525.64 |
| YOLOv11x | 89.650 | 90.513 | 90.079 | 93.833 | 74.665 | 11916.40 |
| YOLOv8n | 93.577 | 86.693 | 90.022 | 92.308 | 71.674 | 2205.33 |
| YOLOv8s | 90.271 | 89.516 | 89.892 | 92.053 | 72.214 | 4216.21 |
| YOLOv8m | 91.246 | 87.544 | 89.358 | 92.708 | 72.757 | 3136.89 |
| YOLOv8l | 91.056 | 90.139 | 90.595 | 92.746 | 72.046 | 4241.90 |
| YOLOv8x | 92.053 | 87.980 | 89.968 | 92.807 | 72.914 | 7865.91 |
| YOLOv12n | 87.911 | 89.897 | 88.991 | 92.406 | 72.004 | 2373.79 |
| YOLOv12s | 89.671 | 89.100 | 89.384 | 92.990 | 73.434 | 6616.87 |
| YOLOv12m | 90.746 | 89.172 | 89.952 | 93.104 | 73.464 | 9010.27 |
| YOLOv12x | 89.694 | 86.045 | 87.829 | 92.649 | 72.552 | 6459.60 |
| RT-DETR-50 | 91.166 | 88.333 | 89.727 | 91.672 | 71.805 | 10054.10 |
| RT-DETR-101 | 89.041 | 87.907 | 88.471 | 91.500 | 71.547 | 8969.67 |
| RA-CottNet | 93.683 | 86.040 | 89.692 | 93.496 | 72.857 | 2565.07 |
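The F1 column can be related to the Precision and Recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The sketch below recomputes F1 for three rows copied from the table above. It assumes the reported F1 follows this definition; the function name `f1_score` and the `rows` dictionary are hypothetical helpers for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (inputs in percent or fraction)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F1) in percent, copied from the comparison table.
rows = {
    "YOLOv11n": (89.183, 88.144, 88.661),
    "YOLOv8n": (93.577, 86.693, 90.022),
    "RA-CottNet": (93.683, 86.040, 89.692),
}

gaps = {}
for name, (p, r, reported) in rows.items():
    computed = f1_score(p, r)
    gaps[name] = abs(computed - reported)
    print(f"{name}: computed {computed:.3f}, reported {reported:.3f}")
```

For these rows the recomputed values agree with the reported ones to within a few hundredths of a percentage point. The small residual gaps may come from rounding of P and R or from how F1 was evaluated, which the table alone does not settle.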

| Model | P (%) | R (%) | F1 (%) | mAP50 (%) | mAP95 (%) | Time (s) |
|---|---|---|---|---|---|---|
| Without_CA | 88.092 | 90.523 | 89.290 | 93.113 | 72.211 | 5823.26 |
| Without_GAM | 89.983 | 86.799 | 88.360 | 92.717 | 71.472 | 2282.23 |
| Without_LSKA | 92.697 | 86.998 | 89.754 | 92.976 | 72.021 | 2727.82 |
| Without_All_Attn | 89.411 | 87.302 | 88.342 | 92.049 | 70.820 | 1209.43 |
| Without_ODConv | 92.164 | 85.896 | 88.918 | 92.422 | 71.951 | 3816.51 |
| Without_SPDConv | 89.812 | 86.845 | 88.301 | 92.642 | 71.360 | 2153.55 |
| RA-CottNet | 93.683 | 86.040 | 89.692 | 93.496 | 72.857 | 2565.07 |
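One way to read the ablation table is to compute how much mAP50 each variant loses relative to the full model. The sketch below does this with values copied from the table. The variable names are hypothetical, and the ranking reflects single training runs as reported, so small differences should be interpreted with caution.

```python
# mAP50 (%) copied from the ablation table.
full_model_map50 = 93.496
ablation_map50 = {
    "Without_CA": 93.113,
    "Without_GAM": 92.717,
    "Without_LSKA": 92.976,
    "Without_All_Attn": 92.049,
    "Without_ODConv": 92.422,
    "Without_SPDConv": 92.642,
}

# Drop in percentage points when a component is removed.
drops = {
    name: round(full_model_map50 - value, 3)
    for name, value in ablation_map50.items()
}

# Rank variants from the largest to the smallest mAP50 drop.
ranked = sorted(drops.items(), key=lambda item: item[1], reverse=True)
for name, drop in ranked:
    print(f"{name}: -{drop:.3f} pp")
```

Under this reading, removing all attention modules together costs the most mAP50, followed by removing ODConv, while removing CA alone costs the least.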

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, R.-F.; Qin, Y.-M.; Zhao, Y.-Y.; Xu, M.; Schardong, I.B.; Cui, K. RA-CottNet: A Real-Time High-Precision Deep Learning Model for Cotton Boll and Flower Recognition. AI 2025, 6, 235. https://doi.org/10.3390/ai6090235
