1. Introduction

Understanding emotions through visual forms is essential for effective communication and learning, especially in early childhood [1,2]. As the role of Artificial Intelligence (AI) in education continues to grow, the ability to interpret emotions in illustrated children’s books becomes increasingly important for developing culturally responsive educational technologies [3]. Recent advances in multimodal large language models (MLLMs), such as GPT-4o and Gemini, have significantly improved visual–textual reasoning capabilities [4], yet their application to non-English educational contexts remains limited [5]. Arabic children’s literature presents unique challenges for computational emotion recognition due to its expressive language, cultural symbolism, and artistic style [6]. Despite the educational importance of emotion recognition in early literacy development highlighted by [7], there is a significant gap in our understanding of how well current AI systems can interpret emotional content in Arabic visual narratives.

This study addresses this gap by systematically evaluating the emotion recognition capabilities of two advanced MLLMs, GPT-4o and Gemini 1.5 Pro, when processing illustrations from Arabic children’s storybooks. The primary aim was to determine how accurately and effectively MLLMs identify emotions in images from Arabic children’s storybooks. To accomplish this aim, we pursued three specific objectives: to evaluate and compare the effectiveness of different prompting strategies (zero-shot, few-shot, and chain-of-thought) on MLLMs’ emotion recognition accuracy in Arabic visual narratives; to identify and categorize common error patterns when MLLMs interpret emotional content in Arabic children’s literature; and to assess MLLMs’ performance against human consensus when interpreting ambiguous or culturally nuanced emotional expressions.

To achieve these objectives, we compared the models’ performance across three distinct prompting strategies using a dataset of 75 images from seven Arabic storybooks, with human-annotated emotions based on Plutchik’s framework serving as ground truth [8]. Beyond simple accuracy metrics, we analyzed cases where models struggle with narrative complexity or misinterpret subtle emotional expressions, providing insights into the challenges of cross-cultural emotion recognition in educational AI [9]. This research makes three contributions to the fields of educational technology and Arabic digital literacy: (1) it presents the first systematic evaluation of MLLMs for emotion recognition in Arabic children’s literature; (2) it identifies specific patterns of success and failure in cross-cultural emotion recognition tasks; and (3) it provides actionable recommendations for developing more culturally sensitive AI systems for Arabic literacy education [10]. Through exploratory experiments that expand the emotional label space and isolate individual characters from their narrative contexts, we offer additional insights into the models’ emotional reasoning capabilities. The findings have significant implications for the design of emotion-aware educational technologies that can support Arabic literacy acquisition and social–emotional learning in culturally appropriate ways.

3. Materials and Methods

This study employed a multi-method comparative analysis to evaluate the emotion recognition capabilities of multimodal large language models (MLLMs) when interpreting illustrations from Arabic children’s literature. The methodological framework systematically integrated quantitative performance metrics with qualitative analytical techniques, facilitating rigorous comparison between human annotator judgments and machine-generated interpretations across diverse emotional contexts, prompting strategies, and image characteristics.

3.1. Dataset

The visual stimuli were sourced from “We Love Reading” (نحن نحب القراءة), an organization advancing Arabic literacy through culturally relevant children’s literature [26]. Seven distinct storybooks were randomly selected, with a minimum of ten illustrations systematically extracted from each, yielding 75 unique image panels. The images were distributed proportionally based on the narrative complexity and emotional diversity within each book, ensuring representative coverage across the collection. Selection criteria prioritized diverse emotional representations, varying degrees of emotional complexity, contextual clarity, and cultural specificity. Images were preserved in their entirety without segmentation to maintain ecological validity, in accordance with established principles for multimodal research [27].

All illustrations underwent standardization to ensure compatibility with MLLM vision processing requirements while preserving original visual information, consistent with methodological recommendations for ecologically valid evaluations of multimodal AI systems.
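The paper does not specify the standardization parameters. As a minimal sketch, assuming standardization means downscaling oversized scans so that the longer side fits a model's input limit while preserving aspect ratio (and never upscaling), the target dimensions could be computed as below. The 2048-pixel limit and the function name `fit_within` are illustrative assumptions, not the study's settings.

```python
def fit_within(width: int, height: int, max_side: int = 2048) -> tuple:
    """Return a new (width, height) whose longer side is at most max_side.

    Aspect ratio is preserved and images that already fit are returned
    unchanged (never upscaled). max_side=2048 is an assumed limit.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(1.0, max_side / max(width, height))
    return round(width * scale), round(height * scale)


print(fit_within(4000, 3000))  # a large scan is scaled down: (2048, 1536)
print(fit_within(800, 600))    # a panel that already fits is untouched: (800, 600)
```

Computing dimensions separately from the actual resampling keeps the rule easy to audit; any imaging library could then perform the resize.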

3.2. Human Annotation

Four annotators, all native Arabic speakers with full proficiency in Modern Standard Arabic and colloquial variants, established the ground truth classifications. Annotators received standardized instructions regarding the emotion taxonomy and classification protocols, following established guidelines for cultural annotation tasks [28].

The emotion classification framework was based on Plutchik’s Wheel of Emotions [8] and comprised nine affective categories, labeled in Arabic: happiness (سعادة), sadness (حزن), anger (غضب), fear (خوف), surprise (مفاجأة), disgust (قرف), neutral (محايد), anticipation (ترقب), and trust (ثقة), that is, Plutchik’s eight primary emotions plus neutral. This taxonomy was selected for its cultural adaptability within Arabic children’s literature contexts [16].

In instances of classificatory divergence, a structured consensus-building procedure was implemented, where annotators met to discuss their interpretations. During these consensus meetings, annotators articulated interpretive rationales, examined visual evidence, and reconciled discrepant classifications through collaborative dialogue. This approach ensured the ground truth represented informed intersubjective agreement rather than statistical aggregation and fostered deeper consideration of culturally nuanced emotional expressions.
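To make the routing step concrete, the sketch below separates images on which all four annotators agreed from those that must go to a consensus meeting. Divergent cases are deliberately not resolved by majority vote, mirroring the procedure above; the function name and image IDs are hypothetical.

```python
from collections import Counter


def route_for_consensus(annotations):
    """Split images into unanimous labels and divergent cases.

    annotations maps a (hypothetical) image ID to the list of labels
    assigned by the annotators. Unanimous images receive their label
    directly; divergent images are only queued, together with their label
    distribution, to seed the consensus discussion. No voting is applied.
    """
    agreed, to_discuss = {}, {}
    for image_id, labels in annotations.items():
        counts = Counter(labels)
        if len(counts) == 1:
            agreed[image_id] = labels[0]
        else:
            to_discuss[image_id] = counts
    return agreed, to_discuss


agreed, to_discuss = route_for_consensus({
    "book1_p03": ["happiness"] * 4,
    "book2_p07": ["neutral", "neutral", "anger", "neutral"],
})
print(agreed)      # {'book1_p03': 'happiness'}
print(to_discuss)  # {'book2_p07': Counter({'neutral': 3, 'anger': 1})}
```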

3.3. Selection and Interaction with MLLMs

Two state-of-the-art MLLMs, OpenAI’s GPT-4o and Google’s Gemini 1.5 Pro, were selected based on their demonstrated performance on contemporary multimodal benchmarks and their recognized multimodal understanding capabilities [29]. For consistency, we used fixed model versions: GPT-4o (API version gpt-4o-2024-05-13) and Gemini 1.5 Pro (gemini-1.5-pro-001). While official model sizes and training details are proprietary, both models represent flagship multimodal systems of their respective generations. Interactions with both models were conducted programmatically through their respective APIs (OpenAI API v1 and Google Gemini API), with special attention to maintaining consistent prompt formatting and presentation across all experimental conditions. We developed a custom Python framework to automate interactions with both APIs, ensuring methodological consistency and enabling systematic data collection. The API-based approach allowed for precise control over model parameters, systematic response collection, and reproducibility of results. All interactions occurred exclusively in Modern Standard Arabic, consistent with methodological standards for cross-lingual evaluation.

3.4. Prompting Techniques

Three distinct prompting paradigms were systematically implemented; complete prompt templates are available in Appendix A, Table A1. In all prompting conditions, the models were explicitly instructed in Modern Standard Arabic to select their response from a predefined list comprising Plutchik’s eight primary emotions plus neutral, the latter capturing images lacking distinct emotional content.

Zero-shot prompting involved direct instructions to identify the primary emotion from the predefined list without exemplification or methodological guidance. This approach tested the models’ baseline capabilities without contextual support.

Few-shot prompting provided three image–emotion pairs exemplifying diverse emotional categories from the predefined list, following few-shot learning principles in contemporary vision–language research. This method examined whether exemplars enhanced recognition accuracy.

Chain-of-thought (CoT) prompting explicitly directed the models to “think step-by-step”: to identify visual cues, integrate these observations, and then classify the emotion from the predefined list [9]. This approach evaluated whether structured reasoning improved performance on emotionally complex stimuli.

These three prompting strategies were selected to probe different facets of the models’ reasoning capabilities. Zero-shot prompting established baseline performance, testing the models’ intrinsic understanding of emotions without explicit examples. Few-shot prompting assessed their in-context learning ability, a key strength of modern LLMs, to see whether performance improves with relevant examples. Finally, chain-of-thought (CoT) prompting was chosen to evaluate whether a structured, step-by-step reasoning process could help the models deconstruct complex visual scenes and arrive at more accurate emotional classifications, a technique shown to improve accuracy in complex reasoning tasks.
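The actual Arabic prompts are given in Appendix A, Table A1. As a structural sketch only, the builder below shows how the three conditions could share one base instruction and constrained label list and differ only in the added scaffolding. The English wording, the `build_prompt` name, and the use of text placeholders for few-shot exemplar images are assumptions; in practice, exemplar images would be attached as image parts.

```python
from typing import Optional, Sequence, Tuple

# The nine predefined labels (Plutchik's eight primary emotions + neutral).
LABELS_AR = ["سعادة", "حزن", "غضب", "خوف", "مفاجأة", "قرف", "محايد", "ترقب", "ثقة"]


def build_prompt(strategy: str,
                 exemplars: Optional[Sequence[Tuple[str, str]]] = None) -> str:
    """Return an illustrative prompt for 'zero-shot', 'few-shot', or 'cot'.

    exemplars: three (image_reference, label) pairs, used only for few-shot.
    The wording is a placeholder, not the study's Arabic template.
    """
    labels = "، ".join(LABELS_AR)
    base = ("Identify the primary emotion in the image. "
            f"Answer with exactly one label from: {labels}.")
    if strategy == "zero-shot":
        return base
    if strategy == "few-shot":
        if not exemplars or len(exemplars) != 3:
            raise ValueError("few-shot expects three (image_reference, label) pairs")
        shots = "\n".join(f"Example {i}: {ref} -> {label}"
                          for i, (ref, label) in enumerate(exemplars, start=1))
        return f"{shots}\n{base}"
    if strategy == "cot":
        return (f"{base}\nThink step-by-step: (1) describe the visual cues, "
                "(2) integrate your observations, (3) state the final label "
                "on the last line.")
    raise ValueError(f"unknown strategy: {strategy}")
```

Keeping the label list identical across conditions ensures that any performance difference comes from the scaffolding rather than from the answer space.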

3.5. Data Collection and Processing

Responses from both GPT-4o and Gemini 1.5 Pro across all prompting strategies were systematically collected for each image, yielding 450 machine-generated classifications (75 images × 2 models × 3 prompting strategies). Our automated data collection pipeline stored model responses in a structured database with verification procedures to ensure accuracy. Each response was programmatically validated for conformity to the expected response format and manually reviewed when necessary.
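The full factorial design can be enumerated directly, which also serves as a completeness check on the stored responses. The image IDs below are hypothetical placeholders.

```python
from itertools import product

IMAGES = [f"img_{i:02d}" for i in range(1, 76)]  # hypothetical IDs for the 75 panels
MODELS = ["gpt-4o-2024-05-13", "gemini-1.5-pro-001"]
STRATEGIES = ["zero-shot", "few-shot", "cot"]

# One run per (image, model, strategy) cell of the design.
runs = list(product(IMAGES, MODELS, STRATEGIES))
print(len(runs))  # 450
```

Comparing the stored response keys against this grid would flag missing or duplicated API calls before analysis.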

Model outputs were standardized by strictly mapping variant terms to the predefined taxonomy through explicit mapping procedures. This standardization process included normalizing Arabic text variations, removing diacritics, and resolving synonym usage to ensure consistent emotional categorization. For CoT responses, only the final classifications were extracted for analytical comparison, adhering to standardized evaluation protocols for multimodal emotion recognition [30].

3.6. Analysis Methods

We employed both quantitative and qualitative analytical techniques to evaluate model performance, as follows:

Performance metrics: Overall performance (macro-averaged F1) and per-emotion precision, recall, and F1-scores were calculated for each model and prompting strategy by comparing model predictions against the human-annotated ground truth.
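For reference, the sketch below computes per-class precision, recall, and F1, as well as their unweighted (macro) mean, in plain Python. It assumes that a class with no predictions or no true instances contributes 0 for the undefined ratio, a common convention; the study's exact tooling is not stated.

```python
from collections import Counter


def per_class_prf(y_true, y_pred, labels):
    """Return {label: (precision, recall, f1)}, with 0.0 for undefined ratios."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # true class t was missed
    scores = {}
    for c in labels:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        scores[c] = (prec, rec, f1)
    return scores


def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-class F1, so rare emotions count as much as happiness."""
    scores = per_class_prf(y_true, y_pred, labels)
    return sum(f1 for _, _, f1 in scores.values()) / len(labels)
```

Macro averaging prevents the dominant happiness class (40% of annotations) from masking poor performance on rare categories such as neutral.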

Error analysis framework: Errors were systematically categorized into three taxonomic categories: valence inversions (confusing positive/negative emotions), arousal confusions (misclassifying activation levels), and contextual/cultural misinterpretations, following established emotion recognition evaluation frameworks [31].

Qualitative case studies: Representative examples of successful and unsuccessful classifications were subjected to in-depth qualitative analysis to identify patterns in model reasoning and cultural interpretation.

Human–AI alignment analysis: Agreement between model predictions and human annotations was assessed using Cohen’s Kappa, a chance-corrected agreement measure between two raters (here, each model configuration and the human consensus labels). Correcting for chance agreement is important given the class imbalance in the emotion annotations.
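Cohen's Kappa compares observed agreement p_o with the agreement p_e expected if both raters labeled independently according to their own marginal label frequencies: κ = (p_o − p_e) / (1 − p_e). The plain-Python sketch below implements this formula. It is an illustration, not the study's code.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Expected chance agreement from each rater's marginal label frequencies.
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:  # both raters used one identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


print(cohens_kappa(["a", "a", "b", "b"], ["a", "b", "b", "b"]))  # 0.5
```

In this toy example, raw agreement is 75%, but half of that level is expected by chance, so κ = 0.5. This is why κ values are lower than simple accuracy on an imbalanced label set.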

3.7. Supplementary Methodological Variations

To further investigate the factors influencing emotion recognition performance, we conducted two supplementary analyses using a representative subset (10%) of the original dataset. Images for this analysis were selected to maintain proportional representation of emotional categories and visual complexity levels. For the first variation, we augmented standard prompting by including an image of Plutchik’s Wheel of Emotions directly within the prompt interface, providing models with a visual reference framework of the emotional taxonomy. This approach aimed to assess whether explicit visualization of emotional relationships would improve classification performance.
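One way to draw a proportionally representative 10% subset is stratified sampling with largest-remainder allocation, so that the per-stratum quotas sum exactly to the target size (8 of 75 images). The sketch below stratifies on a single key (the emotion label) with a fixed seed. The paper also balanced visual complexity, which could be folded into a composite key; the function name and seed are assumptions.

```python
import random
from collections import defaultdict


def stratified_subset(items, key, fraction, seed=0):
    """Sample round(len(items) * fraction) items, proportionally per stratum.

    Each stratum gets floor(size * fraction) items; leftover slots go to the
    strata with the largest fractional remainders (largest-remainder method).
    """
    groups = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)
    target = round(len(items) * fraction)
    quotas = {g: len(v) * fraction for g, v in groups.items()}
    alloc = {g: int(q) for g, q in quotas.items()}
    remaining = target - sum(alloc.values())
    for g in sorted(quotas, key=lambda g: quotas[g] - alloc[g], reverse=True)[:remaining]:
        alloc[g] += 1
    rng = random.Random(seed)
    return [x for g, members in groups.items() for x in rng.sample(members, alloc[g])]
```

The fixed seed makes the subset reproducible, so the same images can be reused across both supplementary variations.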

In the second variation, we implemented character-focused segmentation, isolating only the main characters in each illustration while removing contextual backgrounds and surrounding elements. This method examined whether focusing the models’ attention on facial expressions and body language, without potentially distracting environmental cues, would enhance recognition performance. Both variations maintained identical prompting strategies (zero-shot, few-shot, and chain-of-thought) and evaluation procedures to enable direct comparison with our primary methodology. All supplementary analyses were conducted in Modern Standard Arabic with the same predefined list of nine emotion categories.

4. Results

The experimental analysis yielded 450 emotion predictions (75 images × 2 models × 3 prompting techniques) from the evaluation of multimodal large language models on Arabic emotional content recognition. The investigation examined the differential performance of two state-of-the-art multimodal models (GPT-4o and Gemini 1.5 Pro) under three prompting paradigms: zero-shot, few-shot, and chain-of-thought. Note that the dataset has a significant class imbalance: happiness (سعادة) constituted 40% of the annotations (Table 1). This overrepresentation reflects the natural narrative themes of the children’s storybooks but may have introduced bias. The analysis addressed four principal dimensions: the comparative efficacy of prompting strategies, emotion-specific classification performance, structured analysis of misclassification patterns through valence–arousal constructs, and contextual performance variations related to narrative positioning and visual ambiguity. The following sections present the quantitative and qualitative findings.

4.1. Prompting Technique Effectiveness

We report the models’ Arabic emotion recognition results in terms of overall performance and per-emotion classification performance.

4.1.1. Overall Performance

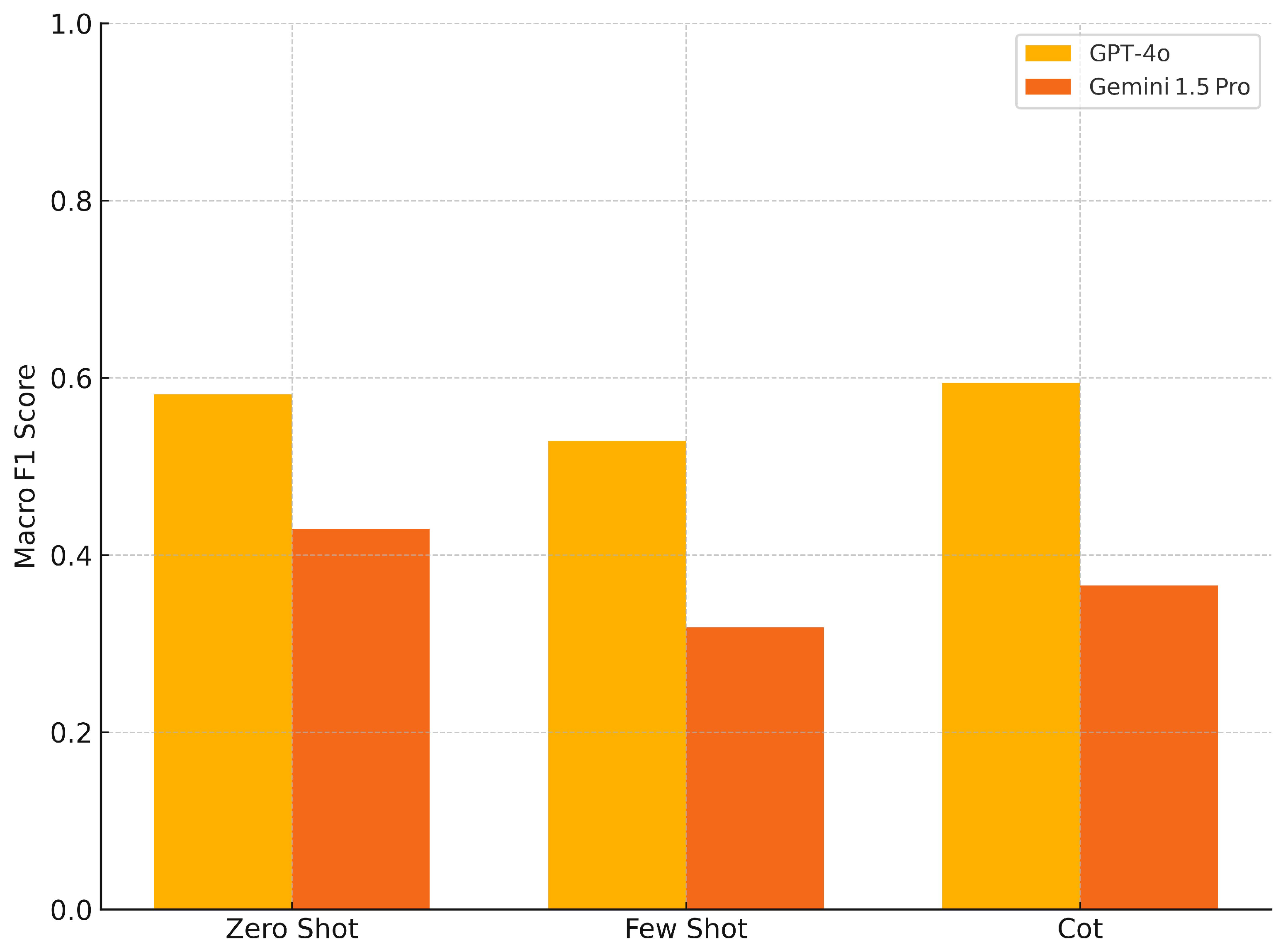

Macro F1-scores were used to evaluate overall model performance while accounting for class imbalance across the nine emotional categories, as shown in Figure 1. GPT-4o consistently outperformed Gemini 1.5 Pro across all prompting strategies. GPT-4o achieved macro F1-scores of 57% (zero-shot), 52% (few-shot), and 59% (CoT), while Gemini 1.5 Pro produced macro F1-scores of 43%, 32%, and 37% for the corresponding strategies.

Chain-of-thought prompting produced the highest performance for GPT-4o (59%), a two-percentage-point improvement over zero-shot. For Gemini 1.5 Pro, zero-shot prompting achieved the highest macro F1-score (43%). Few-shot prompting produced the lowest performance for both models.

4.1.2. Performance by Emotional Category

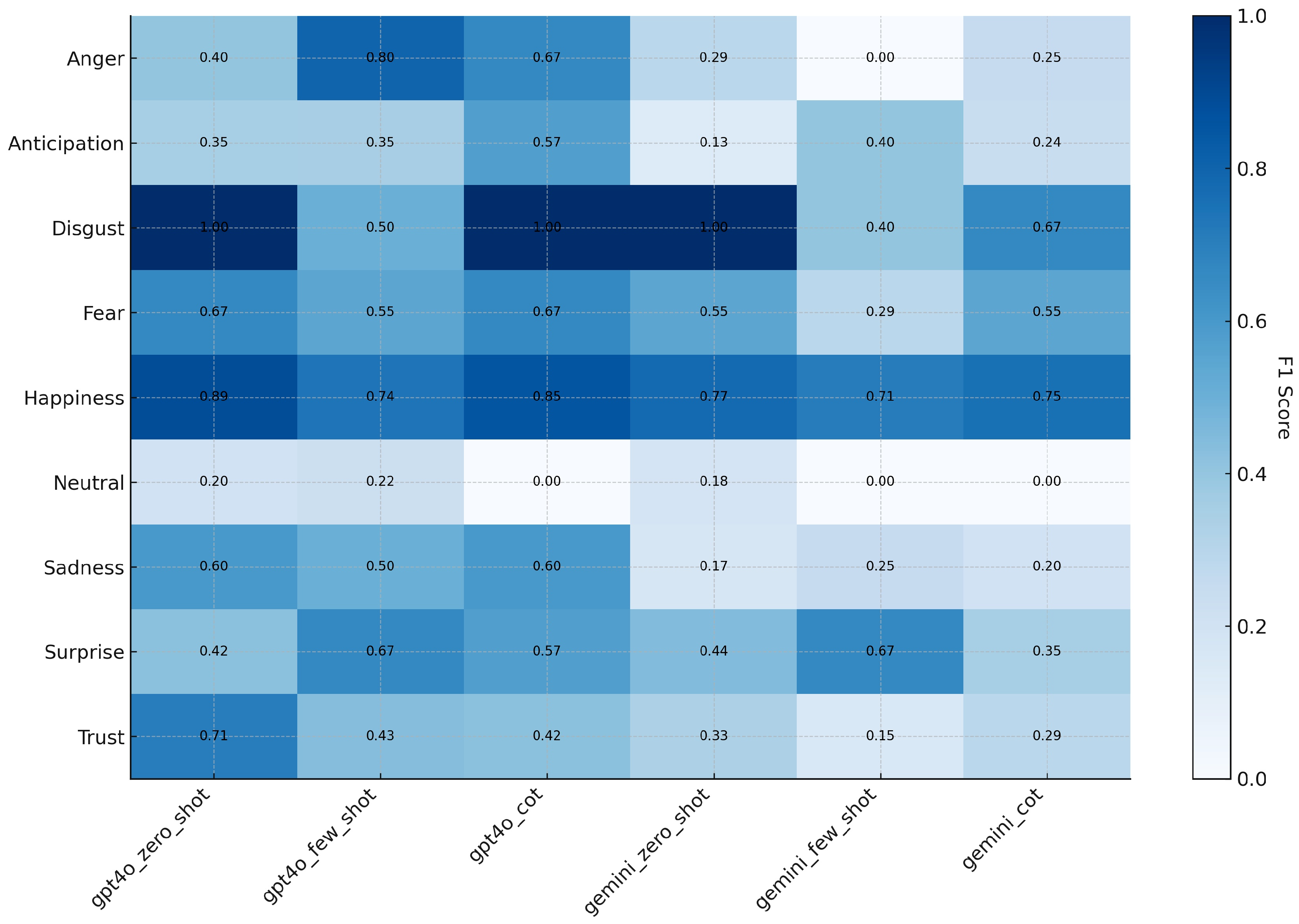

Performance varied substantially across emotional categories, with clear distinctions between high-performing and challenging emotions. As shown in Figure 2, happiness achieved consistently high F1-scores across all model-prompting combinations, ranging from 69% (GPT-4o zero-shot) to 85% (GPT-4o CoT). Trust peaked at 71% with GPT-4o zero-shot, but its performance dropped substantially in the other configurations. Several emotions proved particularly challenging for both models. Neutral showed consistently poor performance across all configurations, with F1-scores of zero for multiple Gemini configurations and for GPT-4o CoT. Anticipation and anger also showed substantial performance gaps between models: Gemini frequently produced F1-scores below 30%, while GPT-4o maintained moderate performance. Performance on individual emotions also varied across prompting strategies. For instance, surprise ranged from 35% (Gemini CoT) to 67% (GPT-4o few-shot and Gemini few-shot), while disgust was highly inconsistent, with some configurations achieving 67% and others dropping to 0%. Fear and sadness showed more stable patterns, with GPT-4o consistently outperforming Gemini across all prompting approaches.

The data reveal that model architecture had a stronger influence on emotion recognition performance than prompting strategy, with GPT-4o showing greater stability and fewer complete classification failures compared to Gemini 1.5 Pro.

4.2. Systematic Analysis of Emotion Recognition Errors

Building on the prompting strategy analysis, we conducted a comprehensive examination of misclassification patterns to understand the underlying causes of model errors. We categorized misclassifications into three theoretically grounded dimensions based on the circumplex model of emotion [27,32]:

Valence inversions: Errors involving confusion between emotions of opposite polarity. The valence dimension represents the positive or negative nature of emotional states. For example, models may confuse positive emotions like happiness (سعادة) and trust (ثقة) with negative emotions such as sadness (حزن) and anger (غضب).

Arousal mismatches: Errors where models correctly identify emotional valence but misclassify arousal intensity. High-arousal emotions such as fear (خوف), anger (غضب), happiness (سعادة), and surprise (مفاجأة) are confused with low-arousal states, including sadness (حزن), trust (ثقة), and neutral expressions (محايد) [33,34].

Contextual/cultural misinterpretations: Cases where models correctly identify both valence and arousal but fail to capture culturally specific emotional expressions or contextual nuances, resulting in misclassification despite partial dimensional accuracy [35,36].

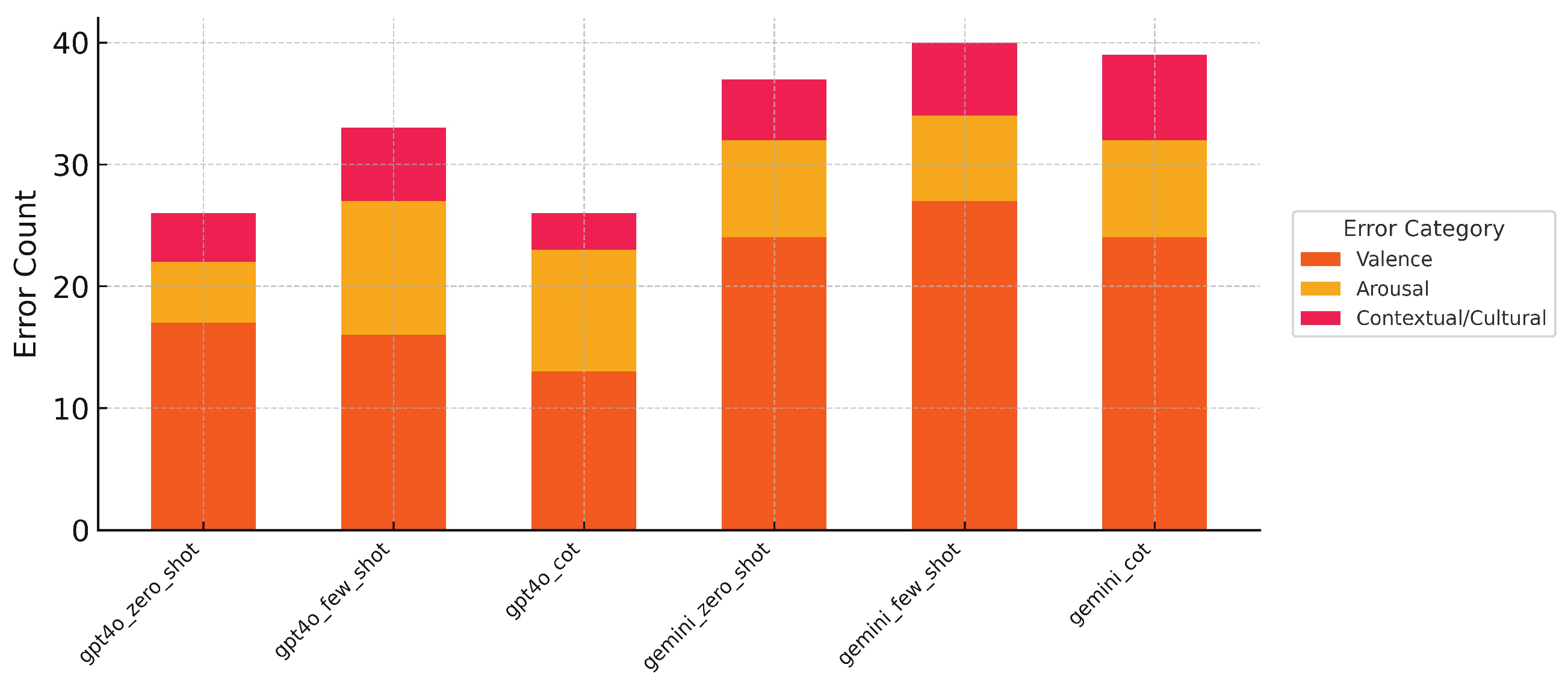

The error distribution, shown in Figure 3, reveals clear patterns in model failures. Valence inversions dominated at 60.7% (122 of 201 errors), followed by arousal mismatches at 24.4% (49 cases) and contextual/cultural misinterpretations at 14.9% (30 cases). This pronounced imbalance indicates systematic rather than random error patterns, with the models struggling most consistently with emotional polarity distinctions.

Error distribution patterns varied substantially across model-prompting combinations (Figure 4). GPT-4o demonstrated the lowest total error counts with zero-shot (25 errors) and CoT (26 errors) configurations, while Gemini few-shot produced the highest error count (40 errors). Valence errors dominated across all configurations, ranging from 48.1% (GPT-4o CoT) to 70.0% (Gemini few-shot). Arousal errors showed greater variability, from 20.0% (GPT-4o zero-shot) to 37.0% (GPT-4o CoT). Contextual/cultural errors remained relatively consistent across conditions, ranging from 10.0% to 20.0% of total errors. The GPT-4o configurations showed more balanced error distributions than Gemini, which exhibited higher concentrations of valence-related misclassifications across all prompting strategies.

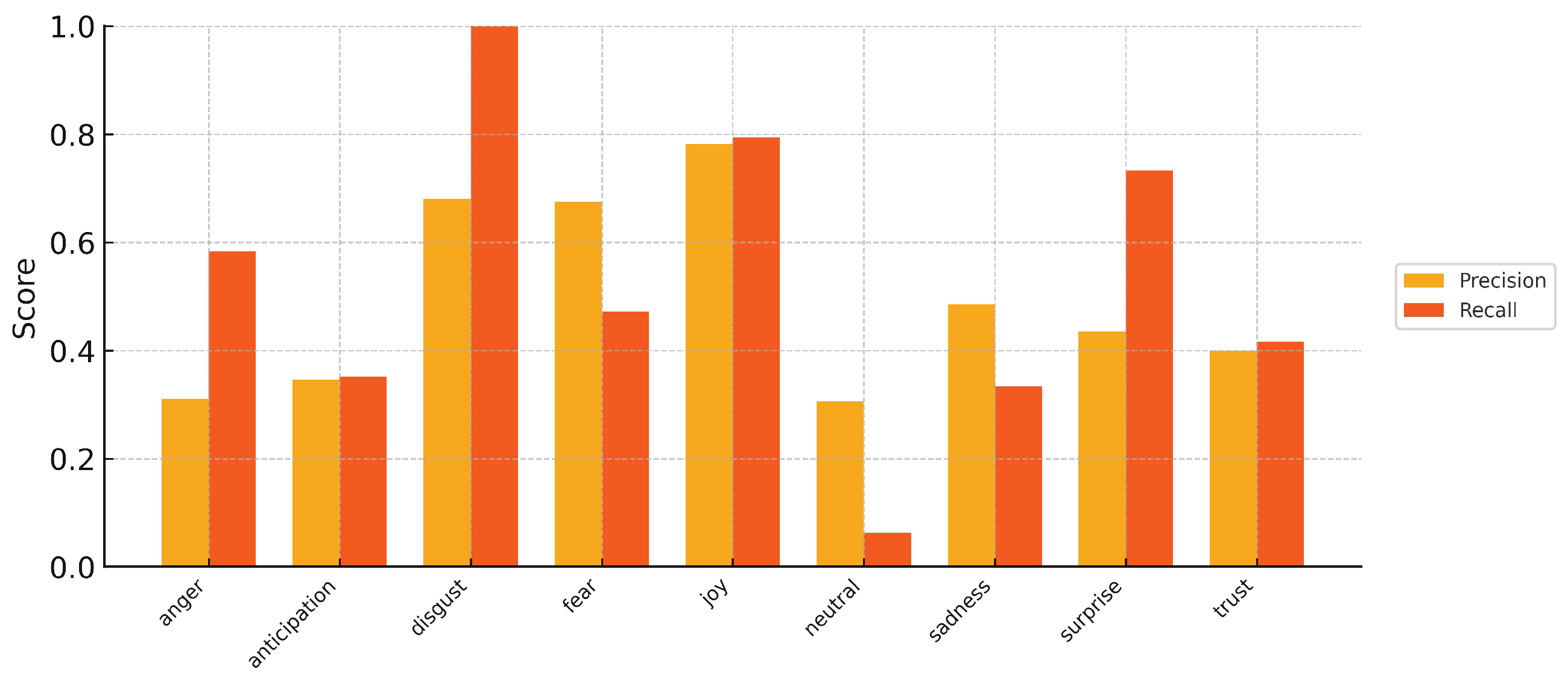

Precision and recall varied substantially across emotional categories (Figure 5). Happiness (joy) achieved the highest performance, with a precision of 78% and a recall of 80%. Disgust showed high recall (100%) but moderate precision (68%). Lower-performing emotions included anger (precision = 32%, recall = 58%), anticipation (precision = 35%, recall = 35%), and trust (precision = 40%, recall = 42%). Neutral showed the poorest performance, with a precision of 30% and a recall of 6%.

4.3. Qualitative Case Analysis

To complement our quantitative analysis, we examined four representative image cases to understand how visual clarity and contextual factors influence model performance (Figure 6).

Panel (a) shows an image where the human annotation was happiness (سعادة), and all models across all prompting strategies correctly identified this emotion. The image features a child with a prominent smile and bright, cheerful visual elements. This case demonstrates successful emotion recognition when clear visual indicators are present.

Panel (b) was human-annotated as neutral (محايد), but the models disagreed in their classifications. GPT-4o predicted surprise (مفاجأة), while Gemini few-shot predicted fear (خوف). The image shows more subtle facial expressions and less distinct emotional markers compared to Panel (a), resulting in varied model interpretations.

Panel (c) was human-labeled as neutral (محايد), but five of the six model configurations predicted anger (غضب). The accompanying Arabic text describes conflict and anger, which may have influenced the model predictions despite the character’s calm visual appearance.

Panel (d) demonstrates architectural differences in valence processing. Human annotation and all GPT-4o configurations identified happiness (سعادة), while all Gemini configurations systematically predicted negative emotions: sadness and fear (حزن, خوف).

4.4. Human–AI Alignment

To assess alignment between large language models and human annotators in emotion recognition from children’s storybooks, we analyzed agreement rates across six model-prompting combinations compared against human-labeled ground truth using Cohen’s Kappa statistics.

The results revealed substantial differences in human–AI alignment across models and prompting techniques (Table 2). GPT-4o consistently demonstrated higher alignment with the human annotations, achieving Cohen’s Kappa values of 0.56 (zero-shot), 0.46 (few-shot), and 0.56 (CoT). These values indicate moderate agreement according to standard interpretation guidelines. Gemini showed notably lower performance, with Cohen’s Kappa values of 0.37 (zero-shot), 0.31 (few-shot), and 0.34 (CoT), indicating fair agreement. The prompting strategies produced different effects across models. For GPT-4o, zero-shot and CoT achieved equally high agreement (κ = 0.56), while few-shot showed reduced alignment (κ = 0.46). For Gemini, zero-shot prompting yielded the highest agreement (κ = 0.37), followed by CoT (κ = 0.34) and few-shot (κ = 0.31). These patterns suggest that elaborate prompting strategies do not uniformly enhance human–AI alignment across models.
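Assuming that the "standard interpretation guidelines" are the widely used Landis and Koch bands (slight ≤ 0.20, fair ≤ 0.40, moderate ≤ 0.60, substantial ≤ 0.80, almost perfect above that), the verbal labels in this section can be reproduced mechanically. The sketch is illustrative; the paper does not name the scale it used.

```python
def landis_koch(kappa: float) -> str:
    """Map a Cohen's Kappa value to the Landis-Koch agreement band (assumed scale)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


reported = {"GPT-4o": [0.56, 0.46, 0.56], "Gemini": [0.37, 0.31, 0.34]}
for model, kappas in reported.items():
    print(model, [landis_koch(k) for k in kappas])
```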

The consistent performance gap between GPT-4o and Gemini across all prompting strategies (0.15–0.22 difference) indicates systematic differences in human–AI alignment capabilities. GPT-4o maintained moderate agreement levels across all conditions, while Gemini consistently achieved only fair agreement, suggesting fundamental differences in emotion recognition approaches between the two architectures.

We identified a subset of images on which all six model-prompting combinations diverged from the human-labeled emotions. These cases showed high interpretive complexity, often involving multiple characters with distinct emotional expressions, embedded symbolic elements, or contextual dependencies tied to previous narrative pages. Figure 7 shows examples of such images, which drew disagreement from every configuration, indicating intrinsic ambiguity in their emotional interpretation. Analysis of these discrepancies showed that the models were more prone to misclassification when emotion recognition required integrating both textual context and visual semantics. This pattern underscores the challenges current models face when processing illustrated multimodal content that requires narrative continuity.

Agreement rates varied substantially across emotional categories. Certain emotions, such as happiness (سعادة) and surprise (مفاجأة), were recognized more consistently across model-prompting combinations than others, such as anticipation (ترقب) and neutral (محايد). CoT prompting had inconsistent effects on human alignment, improving agreement in some cases while reducing it in others (Figure 8). This indicates that prompting effects vary substantially with image content and narrative context.

4.5. Supplementary Analysis Results

To investigate factors influencing emotion recognition performance, we conducted two supplementary experiments on a representative subset (n = 8, 10.7%) of our dataset. For baseline comparison, these same eight images achieved 37.5% correct classification (3/8) for GPT-4o and 12.5% (1/8) for Gemini across all prompting strategies in the main experiment. In the character-focused segmentation experiment, we isolated individual characters from their narrative contexts. This produced divergent effects across models: GPT-4o’s performance dropped to 12.5% (1/8) across all prompting strategies—a 25 percentage point decrease—while exhibiting a strong bias toward “anticipation” predictions (37.5% of all responses). Conversely, Gemini’s performance improved to 25% (2/8) for zero-shot and few-shot—a 12.5 percentage point increase—though CoT remained at baseline (12.5%). Despite this improvement, Gemini defaulted to high-arousal emotions, particularly “anger” (33.3%) and “surprise” (29.2%). Notably, positive emotions showed complete recognition failure: Both happiness instances and the single trust instance were misclassified by all model configurations, while surprise was correctly identified in five out of the six attempts across all models.

The Wheel of Emotions augmentation experiment produced similarly mixed results. GPT-4o’s performance decreased to 25% (2/8) across all prompting strategies, a 12.5 percentage point decline from baseline. Gemini showed varied responses: Zero-shot maintained baseline performance (12.5%), few-shot dropped to complete failure (0/8), and CoT improved to 25% (2/8), doubling its baseline performance. Rather than improving classification accuracy, the expanded emotional vocabulary led to overcomplicated predictions, with the models introducing 13 unique emotion labels instead of the original nine. GPT-4o showed a pattern of over-sophistication, labeling basic sadness as “contemplation” (تأمل) and neutral states as “love” (حب), while Gemini showed intensity escalation, replacing surprise with “astonishment” (دهشة) and anger with “contempt” (ازدراء). These supplementary findings reveal model-specific sensitivities: GPT-4o appears to rely heavily on holistic scene context, while Gemini can benefit from focused attention or explicit taxonomic scaffolding, though at the cost of the emotional granularity appropriate for children’s literature.

5. Discussion

5.1. Architectural Differences in Multimodal Emotion Processing

The consistent performance advantage of GPT-4o over Gemini across all conditions suggests fundamental differences in how these models integrate visual and linguistic emotional information. This gap likely reflects differences in training methodology, model and training scale, the extent of multimodal alignment training, and multimodal fusion strategy [29]. GPT-4o’s greater stability across prompting strategies may indicate more robust internal representations of emotional concepts, potentially due to more effective attention mechanisms or better-calibrated visual encoders. While detailed architectural specifications for GPT-4o and Gemini 1.5 Pro are not fully public, the two are understood to differ in their multimodal integration strategies and training data composition. GPT-4o is described as an end-to-end model that natively processes text, audio, and vision together, which could support more holistic interpretations. Gemini 1.5 Pro, while also highly capable, may employ different fusion mechanisms [22].

The text–visual interaction effects observed in our qualitative analysis further illustrate these differences. In Panel (c) of Figure 6, five of the six configurations predicted anger despite the character’s neutral facial expression when conflict-related Arabic text was present, suggesting that the models can prioritize textual content over visual emotional cues. This points to differing capabilities in balancing multimodal information sources across models.

The failure of few-shot prompting to improve performance for either model challenges conventional assumptions about in-context learning for emotion recognition. This suggests that emotion classification may require different cognitive processes than typical few-shot tasks, possibly because emotional interpretation depends more on learned associations than on pattern matching from examples [37].

5.2. The Valence Processing Deficit

The overwhelming prevalence of valence errors (60.7%) reveals a critical limitation in current MLLMs’ understanding of emotional polarity. This finding suggests that models may process emotions as discrete categories rather than understanding the underlying dimensional structure of affect [27,32]. The dominance of valence over arousal errors indicates that the models struggle more with the fundamental positive–negative distinction than with intensity judgments.

This pattern aligns with psychological theories suggesting that valence processing requires deeper semantic understanding and cultural knowledge than arousal detection [38]. The models’ difficulty with valence may reflect their reliance on surface-level visual features rather than on a contextual understanding of emotional meaning within cultural frameworks.

Our qualitative analysis reinforces these theoretical insights. Panel (d) of Figure 6 shows how architectural differences manifest as systematic valence inversion: GPT-4o correctly identified happiness, while all Gemini configurations predicted negative emotions (sadness and fear) for the same image. This pattern illustrates how valence processing deficits can operate consistently within a model rather than occurring randomly.

5.3. Cultural and Contextual Challenges

The models’ struggle with culturally embedded emotions highlights the limitations of predominantly Western-trained AI systems when applied to Arabic contexts [39,40]. The systematic nature of the misclassifications suggests that current training paradigms inadequately capture culture-specific emotional expressions and the social contexts that influence affective interpretation [41].

The poor performance on neutral emotions reveals a particular challenge for AI systems: distinguishing between the absence of clear emotional signals and the presence of genuinely neutral states [36]. This difficulty may stem from the models’ tendency to over-interpret visual information, seeking emotional content even in ambiguous scenarios.

5.4. Prompting Strategy Implications

The mixed effects of chain-of-thought prompting suggest that elaborate reasoning may not uniformly benefit emotion recognition tasks. For GPT-4o, CoT sometimes led to over-interpretation of narrative context, while for Gemini, it often increased inconsistency. This indicates that emotion recognition may benefit more from intuitive, System 1-type processing than from deliberative reasoning, mirroring how humans often process emotional information rapidly and automatically.

For GPT-4o, the small gap between zero-shot and CoT (57% vs. 59%) suggests that its strong baseline is difficult to improve upon with simple reasoning instructions. For Gemini 1.5 Pro, CoT did not improve on zero-shot performance (37% vs. 43%). This might indicate that its Mixture-of-Experts (MoE) architecture does not inherently align with the linear, step-by-step process that CoT enforces, and that it may benefit more from prompts that help it select the right “expert” than from prompts that detail the reasoning process.

While hallucinations were rare, we observed occasional over-interpretations in CoT outputs, where the models inferred emotions such as “love” or “contemplation” that were supported by neither the illustration nor the accompanying text. These cases highlight the risk of semantic drift in generative multimodal reasoning.

5.5. Theoretical Implications for Affective AI

Our findings challenge the assumption that larger, more sophisticated language models automatically excel at emotion recognition [42]. The systematic error patterns suggest that current training approaches may not adequately develop the multimodal integration and cultural understanding necessary for robust emotional AI systems.

The dominance of valence errors indicates that developing AI systems with better emotional intelligence requires moving beyond surface-level pattern recognition toward deeper understanding of affective meaning and cultural context [31]. This suggests a need for training paradigms that explicitly model emotional dimensions rather than treating emotions as discrete, isolated categories [16].

For Arabic literacy applications, these findings have direct implications for educational technology deployment. Current MLLMs require careful prompt engineering and potentially specialized fine-tuning before implementation in Arabic educational contexts. The systematic nature of valence errors suggests that emotion-aware educational systems should incorporate bias detection and correction mechanisms, particularly when processing culturally specific emotional content.

5.6. Implications of Contextual and Taxonomic Constraints

Our supplementary analyses reveal fundamental differences in how current MLLMs process emotion in narrative contexts. The character segmentation experiment produced strikingly divergent results: GPT-4o’s performance dropped from 37.5% to 12.5% (a 25-percentage-point decrease), while Gemini’s performance improved from 12.5% to 25% under the zero-shot and few-shot approaches. This bidirectional effect suggests contrasting architectural dependencies. GPT-4o appears to rely heavily on holistic scene processing, integrating background elements and interpersonal dynamics into its emotion recognition, whereas Gemini may be hindered by visual complexity and benefit from focused attention on facial features. Both models failed completely on positive emotions (0% for happiness and trust) while preserving surprise recognition (83%). This contrast suggests that, in these illustrations, positive emotions such as happiness are conveyed largely through scenic elements (colors, spatial relationships, and shared activities) rather than through facial features alone.
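Because the changes above are stated in percentage points, it helps to keep absolute and relative change apart. This matters especially at small sample sizes, where a single image moves the score by a large step. The sketch below uses only the reported values. The remark that they are consistent with an eight-item subset is our inference, since this section does not state the subset size. `pp_change` and `relative_change` are illustrative helpers.

```python
def pp_change(before: float, after: float) -> float:
    """Absolute change in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the baseline, in percent."""
    return (after - before) / before * 100


# Values reported for the character segmentation experiment (percent).
gpt4o = (37.5, 12.5)
gemini = (12.5, 25.0)

print(pp_change(*gpt4o), round(relative_change(*gpt4o), 1))    # -25.0 -66.7
print(pp_change(*gemini), round(relative_change(*gemini), 1))  # 12.5 100.0

# Every value is a multiple of 12.5, consistent with (but not confirmed as)
# an eight-item subset, where a single item shifts the score by 100 / 8 points.
print([v / 12.5 for v in (*gpt4o, *gemini)])  # [3.0, 1.0, 1.0, 2.0]
```

If the subset is indeed this small, GPT-4o’s 25-point drop would correspond to two images and Gemini’s doubling to one. This is a reason to read these supplementary results as directional rather than conclusive.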

The experiment that supplied Plutchik’s Wheel of Emotions as explicit scaffolding similarly revealed model-specific responses. GPT-4o’s performance declined from 37.5% to 25%, whereas Gemini’s CoT performance improved from 12.5% to 25%, although its few-shot performance dropped to 0%. This suggests that explicit taxonomic frameworks can stabilize weaker baselines but may interfere with stronger models’ learned representations. The models introduced sophisticated labels such as “contemplation” for basic sadness or “love” for neutral states, which illustrates what we term “theoretical interference”: an abstract psychological framework overriding practical pattern recognition. Critically, both experiments show that no single approach optimizes performance across models. GPT-4o performs best with complete scenes and no theoretical scaffolding, while Gemini can benefit from constrained focus or explicit taxonomic cues, though at the cost of nuanced interpretation.

These findings challenge universal approaches to emotion recognition in educational AI. Rather than seeking a single optimal preprocessing or prompting strategy, our results point to the need for model-adaptive pipelines that leverage each architecture’s strengths. For GPT-4o, this means preserving the full narrative context; for Gemini, selective attention or taxonomic guidance may improve performance on specific tasks. However, the persistent failure on positive emotions and the inappropriate sophistication introduced by Plutchik’s framework suggest that emotion in children’s literature serves pedagogical rather than psychological functions. Arabic educational AI development should therefore prioritize culturally grounded, context-preserving approaches that treat emotions as narrative devices rather than isolated psychological states.
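One way to operationalize a model-adaptive pipeline is a per-model configuration lookup. The following is a minimal sketch only. The configuration fields, the dictionary keys, and `config_for` are hypothetical. The specific settings loosely mirror the tendencies reported above but were not tested as combinations in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical per-model preprocessing and prompting choices."""
    crop_to_character: bool  # segment the illustration to the focal character
    include_taxonomy: bool   # supply Plutchik's Wheel of Emotions in the prompt
    strategy: str            # "zero-shot", "few-shot", or "cot"


# Settings loosely mirroring the tendencies discussed above; untested as a combination.
ADAPTIVE_CONFIGS = {
    # GPT-4o: keep the full scene, no taxonomic scaffolding.
    "gpt-4o": PipelineConfig(crop_to_character=False, include_taxonomy=False,
                             strategy="zero-shot"),
    # Gemini: focused crop; an alternative would be taxonomy + CoT without cropping.
    "gemini-1.5-pro": PipelineConfig(crop_to_character=True, include_taxonomy=False,
                                     strategy="zero-shot"),
}


def config_for(model: str) -> PipelineConfig:
    """Look up the adaptive configuration, failing loudly for unknown models."""
    try:
        return ADAPTIVE_CONFIGS[model]
    except KeyError:
        raise ValueError(f"no adaptive configuration for {model!r}") from None
```

Failing loudly for an unlisted model avoids silently applying one model’s settings to another, which the divergent results above suggest could degrade performance.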

5.7. Limitations

Several methodological constraints should be acknowledged in interpreting our findings. First, we evaluated the models at a specific point in time. Because GPT-4o and Gemini 1.5 evolve rapidly, their capabilities may change with subsequent updates, which could affect the long-term relevance of our results. Second, these models exhibit inherent output variability and sensitivity to prompt variations that could influence results, even when consistent prompt formats are used. Third, a significant methodological limitation is the absence of a systematic quantitative analysis of text versus visual influence on emotion predictions. Our qualitative case studies (Section 4.3) suggest that the models exhibit text-dominant processing biases. However, controlled experiments comparing performance on text-only versus image-only inputs would provide more definitive evidence of multimodal integration capabilities. This represents a critical area for future investigation, particularly given the importance of visual–textual balance in children’s educational materials.

Finally, our study lacked comparison with specialized Arabic NLP systems or purpose-built emotion recognition models, which might provide valuable performance baselines beyond general-purpose models.

Dataset Constraints

While our 75-image dataset provides initial insights into MLLM emotion recognition capabilities, we acknowledge that this sample size limits statistical power and generalizability. This exploratory study establishes baseline performance patterns and methodological frameworks, and expanding the dataset is a priority for future research. Sampling systematically across seven diverse storybooks was intended to cover common themes in Arabic children’s literature, although larger-scale validation is needed to confirm that this coverage is representative.

The marked class imbalance, with “happiness” comprising 40% of annotations, is itself a notable finding. It reflects both the predominant emotional tone of the source material and the typical narrative themes of children’s storybooks. This imbalance limits the statistical power of our findings for rare emotions such as “disgust” (1.3%) and “anger” (2.7%), and the overrepresentation of happiness may bias model evaluation. It also mirrors a real-world challenge: AI systems must function in settings where emotional data are not uniformly distributed. We used the macro F1-score to provide a more balanced evaluation metric and mitigate the effects of this imbalance. Even so, the models’ performance on underrepresented emotions such as “anger” and “disgust” should be interpreted with caution, given the small number of examples.
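Why macro F1 matters under this imbalance can be illustrated with a toy label set. In the sketch below, only the happiness, anger, and disgust counts follow from the reported shares (30, 2, and 1 of 75). The remaining 42 items are lumped into a placeholder “other” class, and the predictions are hypothetical. The `macro_f1` helper follows the usual definition: an unweighted mean of per-class F1 over all labels seen in either the gold or the predicted labels. It is not necessarily the exact implementation used in this study.

```python
from collections import Counter


def accuracy(gold, pred):
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for c in labels:
        tp = sum(g == c and p == c for g, p in zip(gold, pred))
        fp = sum(g != c and p == c for g, p in zip(gold, pred))
        fn = sum(g == c and p != c for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy 75-item label set: happiness/anger/disgust counts follow the reported shares;
# "other" is a placeholder for all remaining categories.
gold = ["happiness"] * 30 + ["other"] * 42 + ["anger"] * 2 + ["disgust"] * 1
print({c: round(100 * n / len(gold), 1) for c, n in Counter(gold).items()})
# {'happiness': 40.0, 'other': 56.0, 'anger': 2.7, 'disgust': 1.3}

# Hypothetical model that never predicts the rare classes.
pred = ["happiness"] * 30 + ["other"] * 45
print(round(accuracy(gold, pred), 2), round(macro_f1(gold, pred), 2))  # 0.96 0.49
```

A model that simply ignores anger and disgust still reaches 96% accuracy here. Macro F1, by contrast, gives each of the four classes equal weight and exposes the two zero-F1 classes.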

5.8. Future Research Directions

Future work should expand the dataset to include a broader corpus of Arabic storybooks representing diverse visual styles and cultural contexts, with particular emphasis on balancing emotional categories to ensure adequate representation of less common emotions. Dataset resampling techniques or weighted metrics should be employed to address the severe class imbalance identified in this study, ensuring more robust evaluation across all emotional categories. Incorporating more complex emotional categories beyond Plutchik’s framework, such as embarrassment, pride, or culturally specific emotional concepts, would enhance the validity of the evaluation approach.
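The two remedies named above can be sketched briefly: inverse-frequency class weights for weighted metrics, and random oversampling for rebalancing. The helpers below are hypothetical illustrations and not a prescribed protocol. Duplicating evaluation images adds no new information, so oversampling is better suited to building fine-tuning sets, while class weights are the more natural fit for evaluation.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n / (k * count) so every class carries equal total weight."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * m) for c, m in counts.items()}


def oversample(items, labels, seed=0):
    """Randomly duplicate minority-class items until each class matches the largest one."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    by_class = {}
    for x, y in zip(items, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out = []
    for y, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        out.extend((x, y) for x in xs + extra)
    return out


# Illustrative subset using the happiness/anger/disgust counts implied above.
labels = ["happiness"] * 30 + ["anger"] * 2 + ["disgust"] * 1
print(inverse_frequency_weights(labels)["disgust"])  # 11.0
```

With these weights, each class contributes the same total weight (here 33 / 3 = 11) regardless of how many examples it has.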

Systematic text–visual analysis is a critical methodological next step. Controlled experiments should isolate the textual and visual contributions to emotion recognition. These should include ablation studies with image-only (text removed) and text-only (image removed) conditions to quantify how effectively the models integrate the two modalities. This analysis should also investigate misalignment between visual and textual cues, where models may prioritize textual elements over imagery, a problem that is particularly relevant in multimodal children’s literature.
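The ablation design described above reduces to comparing one model’s predictions on the same items under three input conditions. The following is a minimal scoring sketch under that assumption. The condition names, helper functions, and toy predictions are hypothetical, and collecting the predictions themselves (calling each model) is outside its scope.

```python
from typing import Dict, List

CONDITIONS = ("text+image", "image_only", "text_only")


def condition_accuracy(gold: List[str], preds: Dict[str, List[str]]) -> Dict[str, float]:
    """Accuracy of one model under each input condition (same items, same order)."""
    out = {}
    for cond in CONDITIONS:
        p = preds[cond]
        if len(p) != len(gold):
            raise ValueError(f"{cond}: expected {len(gold)} predictions, got {len(p)}")
        out[cond] = sum(g == q for g, q in zip(gold, p)) / len(gold)
    return out


def modality_drops(acc: Dict[str, float]) -> Dict[str, float]:
    """Accuracy lost when one modality is removed; a larger drop means heavier reliance."""
    full = acc["text+image"]
    return {"drop_without_text": full - acc["image_only"],
            "drop_without_image": full - acc["text_only"]}


def prediction_agreement(preds: Dict[str, List[str]]) -> Dict[str, float]:
    """How often the multimodal prediction equals each single-modality prediction."""
    full = preds["text+image"]
    return {f"matches_{c}": sum(a == b for a, b in zip(full, preds[c])) / len(full)
            for c in ("text_only", "image_only")}


# Hypothetical toy predictions for four items.
gold = ["happiness", "sadness", "fear", "happiness"]
preds = {"text+image": ["happiness", "sadness", "sadness", "happiness"],
         "image_only": ["happiness", "fear", "fear", "neutral"],
         "text_only": ["happiness", "sadness", "sadness", "happiness"]}
print(modality_drops(condition_accuracy(gold, preds)))
```

Prediction agreement complements accuracy. In the toy data, the multimodal output always matches the text-only output, even on the item that only the image-only condition gets right. That is the kind of text-dominant pattern the qualitative case studies suggest.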

Ablation studies should explore varying shot counts and hybrid prompting approaches to optimize performance across different model architectures. From a technical perspective, comparing models across multiple generations (e.g., GPT-4 vs. GPT-4o, Gemini 1.0 vs. 1.5) could help separate architectural improvements from scaling effects. Applying explainable AI (XAI) techniques [43] could reveal the reasoning patterns behind model misclassifications, particularly in ambiguous narrative contexts where chain-of-thought prompting performed inconsistently. Analysis of model hallucinations and semantic drift in generative multimodal reasoning should be expanded beyond the preliminary observations in this study. Finally, educational intervention studies examining how emotion-aware systems affect actual learning outcomes in Arabic literacy development would provide valuable insights for practical implementation in educational settings.

From an educational technology perspective, developing culturally adaptive prompting strategies specifically for Arabic educational content is a critical research direction. Such strategies should account for the text–visual interaction effects observed in our analysis. Given the systematic nature of the valence errors observed in this study, mechanisms for detecting and correcting valence bias in emotion-aware Arabic educational systems should also be prioritized.

As Arabic-specific multimodal models become available, future work should establish baselines with them to allow a fairer comparison with specialized systems. Fine-tuning multilingual models on culturally specific Arabic emotional content could substantially improve their sensitivity to subtle emotional cues and cultural nuances. Training-data biases (e.g., Western-centric emotion labels) and their impact on Arabic emotion recognition should also be investigated systematically.

6. Conclusions

This study provides the first systematic evaluation of multimodal large language models for Arabic emotion recognition in children’s storybook illustrations. GPT-4o consistently outperformed Gemini 1.5 Pro across all prompting strategies, achieving macro F1-scores of 57–59% compared to Gemini’s 32–43%. Human–AI alignment showed similar patterns, with GPT-4o maintaining moderate agreement () versus Gemini’s fair agreement (). Error analysis revealed systematic patterns rather than random failures, with valence inversions accounting for 60.7% of misclassifications. Both models struggled with culturally nuanced emotions and neutral states, indicating fundamental limitations in processing affective content within Arabic cultural contexts. For educational applications, we recommend zero-shot or chain-of-thought prompting with GPT-4o and advise against few-shot approaches, which consistently underperformed. Future work should prioritize culturally responsive training data and enhanced valence processing to develop more effective emotionally intelligent educational technologies for Arabic-speaking learners. Improving emotion recognition may require culturally calibrated datasets, refined multimodal attention mechanisms, and narrative-aligned supervision that better captures the pedagogical intent behind emotional illustrations in Arabic children’s literature.
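The “moderate” and “fair” labels above correspond to bands of the Landis–Koch scale commonly used to interpret Cohen’s κ. Assuming that is the agreement statistic behind them, the following minimal sketch computes κ for two label sequences over the same images and maps it to its band. The helper names are illustrative, and treating the degenerate single-label case as perfect agreement is a convention chosen for this sketch.

```python
from collections import Counter


def cohens_kappa(a, b):
    """Cohen's kappa for two raters' labels over the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n          # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[c] * cb[c] for c in set(ca) | set(cb)) / (n * n)  # chance agreement
    if p_e == 1.0:
        return 1.0  # both raters used one identical label; kappa is undefined, treated as 1
    return (p_o - p_e) / (1 - p_e)


def landis_koch(kappa):
    """Map kappa to the Landis-Koch (1977) interpretation bands."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


human = ["happiness", "happiness", "sadness", "sadness"]
model = ["happiness", "sadness", "sadness", "sadness"]
k = cohens_kappa(human, model)
print(k, landis_koch(k))  # 0.5 moderate
```

Unlike raw agreement (75% in this toy example), κ discounts the agreement expected by chance from each rater’s label frequencies. This matters for a dataset dominated by a single emotion such as happiness.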