In this section, we present an open-vocabulary detection method incorporating attribute decomposition and aggregation. The learned attributes mitigate semantic misinterpretation caused by low-quality or non-standardized textual inputs for novel categories. This overview outlines the core elements, implementation workflow, and notable characteristics of the proposed method.

2.1. Overall Framework

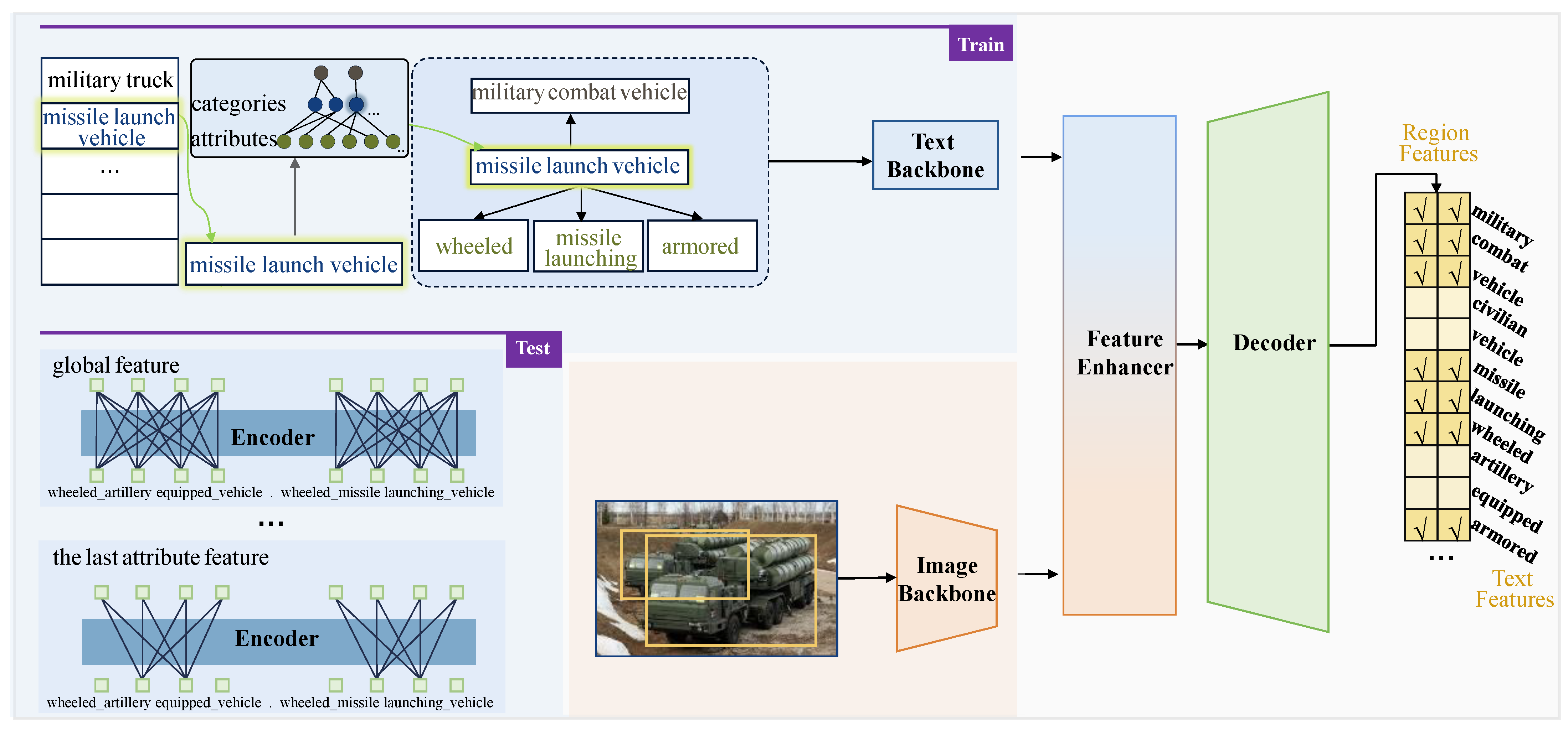

The overall framework is illustrated in Figure 2. We adopt Grounding DINO [29], which uses a dual-encoder, single-decoder architecture, as the baseline in all subsequent experiments. Swin Transformer [30] serves as the image backbone and benefits from the strong generalization gained by pre-training on the Objects365 [31] and GoldG [28] datasets. BERT [32] is used for text feature extraction. The extracted image and text features are fed into a feature-fusion module for multi-modal integration. In the decoder, a language-guided query-selection module initializes the decoder queries, and a cross-modal decoder then refines them to predict the position and category of each query.

To address the core issue of OVOD performance degradation in domain-specific, fine-grained scenarios, which is primarily caused by the unstable quality of class-level textual descriptions, we propose a novel detection framework based on attribute decomposition and aggregation. The framework comprises three key components.

Attribute Text-Decomposition Mechanism: Before text encoding during training, prior knowledge of the semantic structure of category names is used to decompose each class name into multiple shareable fine-grained attributes. This turns the conventional single-label classification paradigm into a multi-label attribute-recognition task. It reduces the model's dependence on exact full category names and makes it more robust to novel terminology and subjective variations in description.

Multi-Label Attribute Learning: A multi-positive contrastive learning strategy aligns region-level visual features with multiple fine-grained textual attributes. This improves the model's ability to discriminate between visually and semantically similar categories.

Attribute Text Feature-Aggregation Mechanism: During inference, we introduce a multi-category, multi-attribute text-aggregation method. It integrates both category names and decomposed attribute features within the text branch, which encourages the model to focus on part-level attribute characteristics and mitigates the adverse impact of low-quality textual inputs.

Through fine-grained semantic disentanglement and recomposition, the proposed framework preserves the zero-shot generalization capability inherent in open-vocabulary detection. It also significantly improves detection reliability and adaptability for hard-to-name or ambiguously described novel objects in specialized domains such as military reconnaissance.

2.2. Attribute Text-Decomposition Mechanism

To achieve alignment between region-level image features and multiple attribute-based textual features, it is essential to decompose each category into multiple attribute representations based on prior knowledge. In fine-grained object-detection tasks, particularly for recognizing diverse vehicle categories (including novel classes) in reconnaissance scenarios, the semantic similarity between fine-grained category names typically indicates shared attributes inherited from their common parent class. For instance, tanks and infantry fighting vehicles, both belonging to the military combat vehicle category, exhibit the shared “artillery-equipped” attribute.

This paper proposes a component-based attribute-decomposition framework for military vehicles, grounded in two foundational principles: (1) alignment with domain-specific understanding of essential vehicle characteristics, and (2) compatibility with the practical requirements of dataset construction for fine-grained visual recognition. Together, these principles ensure that the resulting attribute system is both semantically meaningful in the military context and operationally viable for annotation and downstream analysis. By drive mechanism, vehicles are divided into wheeled and tracked types. By function, they are divided into artillery-equipped and missile-launching types. By appearance, they are divided into armored, monocoque, and cargo-style types. Figure 2 shows an example of decomposing a fine-grained category into its parent-class text and attribute texts. During text encoding, the parent-class names and attributes from all training samples are combined, deduplicated, joined with the separator “.”, and fed into the text encoder.
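A minimal sketch of the prompt construction described above follows. It assumes that each training sample provides a parent-class name and a list of attribute texts. The sample data, the parent-class names, the helper name, and the " . " separator spacing are all hypothetical. Only the deduplicate-and-join-with-“.” step comes from the text.

```python
def build_training_prompt(samples):
    """Collect parent-class names and attribute texts from all samples,
    deduplicate them (keeping first-seen order), and join them with '.'."""
    unique_texts = dict.fromkeys(
        text for parent, attributes in samples for text in (parent, *attributes)
    )
    return " . ".join(unique_texts) + " ."


# Hypothetical samples: (parent class name, attribute texts).
samples = [
    ("combat vehicle", ["tracked", "artillery equipped", "armored"]),
    ("combat vehicle", ["wheeled", "missile launching", "armored"]),
    ("non-combat vehicle", ["wheeled", "cargo style"]),
]
prompt = build_training_prompt(samples)
print(prompt)
# combat vehicle . tracked . artillery equipped . armored . wheeled . missile launching . non-combat vehicle . cargo style .
```

`dict.fromkeys` is used here because it removes duplicates while preserving insertion order. As a result, the prompt is deterministic for a given sample order.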

To satisfy the first principle, alignment with domain expertise, the framework adopts a component-based attribute-decomposition scheme. The drive mechanism reflects the chassis configuration (wheeled or tracked). The functional role captures category-discriminative auxiliary systems (artillery equipped or missile launching). The appearance type characterizes the structural integration between the cab and hull and covers armored, monocoque, and cargo-style designs. This tripartite schema supports a preliminary yet effective fine-grained distinction among diverse military vehicle types, based on physically interpretable and functionally relevant components. The second principle, task-oriented viability, prioritizes attributes that are both visually annotatable and discriminative at the fine-grained level. Camouflage patterns, although prevalent on military vehicles, have limited discriminative power across categories and are therefore excluded from the system. Likewise, attributes such as crew size are not visually observable on armored platforms such as tanks, whose design prioritizes protection and concealment. As a result, only attributes that satisfy both semantic relevance and annotation feasibility are retained in the final attribute ontology.
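The retained ontology can be written down as a small lookup structure that validates each category's decomposition. The sketch below makes two assumptions: attribute values are plain strings, and a category may leave a dimension unspecified. The dictionary name and the `decompose` helper are hypothetical. The tank and truck assignments follow the examples given in this section.

```python
# Hypothetical encoding of the three retained attribute dimensions.
MILITARY_ATTRIBUTES = {
    "drive mechanism": ("wheeled", "tracked"),
    "functional role": ("artillery equipped", "missile launching"),
    "appearance type": ("armored", "monocoque", "cargo style"),
}


def decompose(category, assignment):
    """Check a category's attribute assignment against the ontology and
    return its attribute texts in a fixed dimension order."""
    unknown = set(assignment) - set(MILITARY_ATTRIBUTES)
    if unknown:
        raise ValueError(f"{category}: unknown dimensions {sorted(unknown)}")
    texts = []
    for dimension, allowed in MILITARY_ATTRIBUTES.items():
        value = assignment.get(dimension)
        if value is None:
            continue  # the category leaves this dimension unspecified
        if value not in allowed:
            raise ValueError(f"{category}: {value!r} is not a valid {dimension}")
        texts.append(value)
    return texts


tank = decompose("tank", {
    "drive mechanism": "tracked",
    "functional role": "artillery equipped",
    "appearance type": "armored",
})
truck = decompose("truck", {"drive mechanism": "wheeled", "appearance type": "cargo style"})
print(tank)   # ['tracked', 'artillery equipped', 'armored']
print(truck)  # ['wheeled', 'cargo style']
```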

Following these attribute-decomposition rules, the subsequent experiments use two datasets: a custom military dataset and the LAD dataset [33]. Both adopt attribute-based annotation schemes to enable fine-grained open-vocabulary detection. The military dataset focuses on military vehicles, whereas LAD covers civilian transportation. Both employ structured attribute decomposition so that novel categories can be recognized through attribute fusion.

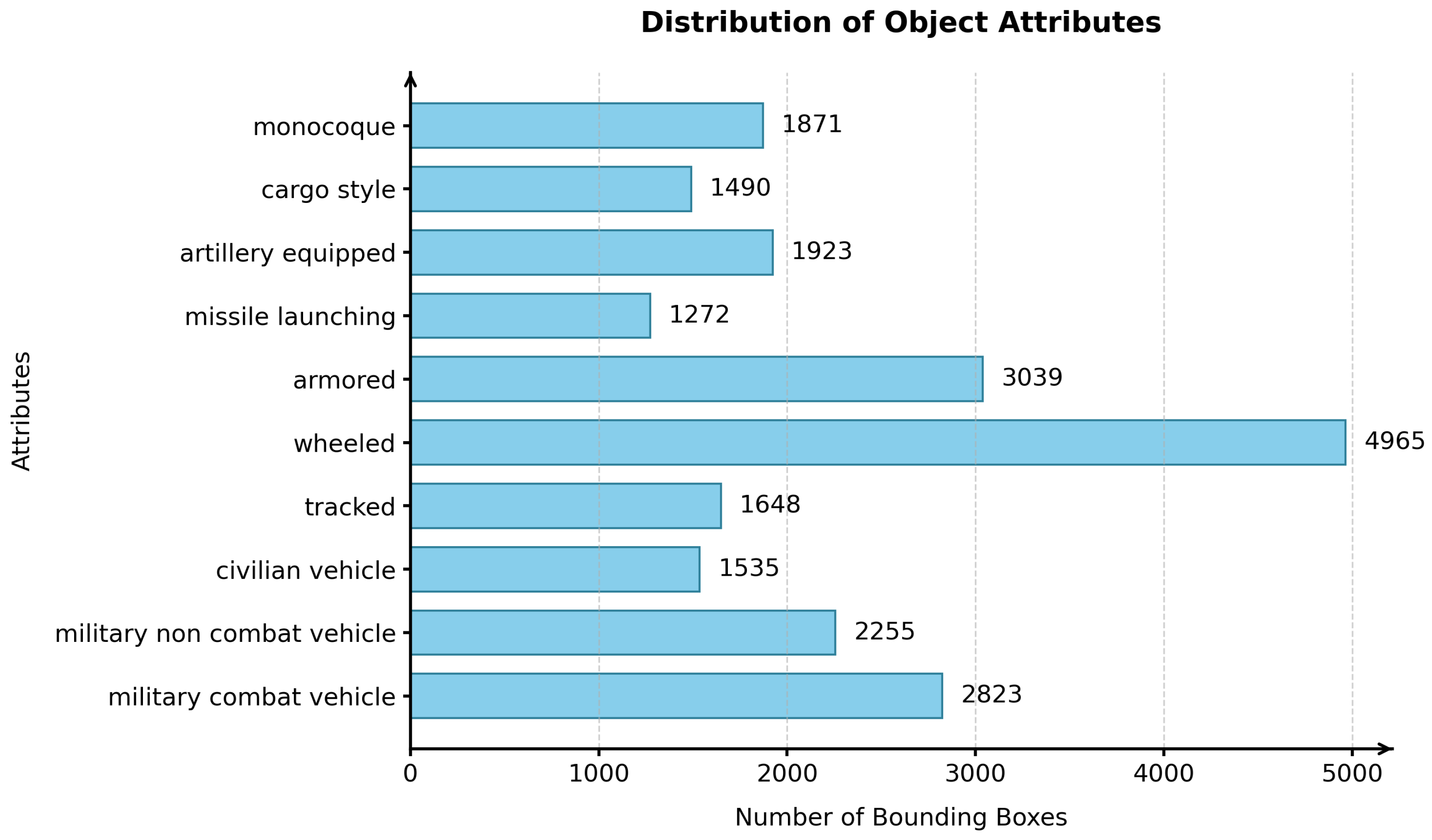

Custom Military Dataset. Existing military datasets offer limited scene diversity and lack detailed attributes. To address these limitations, we curated a new dataset of 15,438 high-quality annotated images. Its primary categories are personnel, vehicles, and buildings, which are further divided into nine secondary subcategories (e.g., combat and non-combat vehicles). The annotation strategy emphasizes attribute decomposition, particularly for vehicles, because they are clearly decomposable entities. Each vehicle is characterized along three attribute dimensions: drive mechanism (wheeled or tracked), functional role (artillery equipped or missile launching), and appearance type (armored, monocoque, or cargo style). This structured annotation enables our model to learn compositional relationships between attributes and objects. Figure 3 shows the coarse-grained categories and the number of instances per attribute in the final training set after offline data augmentation. Figure 4 presents the overall structure of the self-constructed dataset.

LAD Dataset [33]. For cross-domain validation, we employ the LAD dataset, which contains 5 supercategories and 230 fine-grained classes. From its transportation supercategory (encompassing cars, ships, and aircraft), we selected 8,599 images across 24 vehicle classes. Our annotation scheme categorizes these vehicles along three primary dimensions: drive mechanism, body type, and roof characteristics. By drive mechanism, vehicles are classified as three-wheel, multi-wheel (≥4 wheels), or tracked. Body types fall into six categories: compact, full-size, flatbed, enclosed, tanker, and crane. Roof characteristics are divided into convertible and hardtop designs. This scheme describes every selected vehicle class with a compact, shared set of attributes suited to detection.
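The size of the LAD attribute space follows directly from the three dimensions listed above. The sketch below counts the distinct attribute texts and the possible attribute combinations; the dictionary layout is an illustrative assumption. Not every combination corresponds to a real vehicle class.

```python
from itertools import product

# Attribute dimensions of the selected LAD vehicle classes, as listed in the text.
LAD_ATTRIBUTES = {
    "drive mechanism": ["three-wheel", "multi-wheel", "tracked"],
    "body type": ["compact", "full-size", "flatbed", "enclosed", "tanker", "crane"],
    "roof": ["convertible", "hardtop"],
}

vocabulary_size = sum(len(values) for values in LAD_ATTRIBUTES.values())
combinations = list(product(*LAD_ATTRIBUTES.values()))

print(vocabulary_size)    # 11 attribute texts
print(len(combinations))  # 36 = 3 * 6 * 2 attribute combinations
```

Eleven shared attribute texts therefore span 36 combinations, which is more than the 24 selected classes. This illustrates how a class outside the training set could still be described using attribute texts that were seen during training.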

2.3. Attribute Text Feature-Aggregation Mechanism

During inference, the model receives an input image and a human-provided textual description composed of multiple attributes (e.g., “wheeled artillery equipped vehicle, tracked armored artillery equipped tank”). Conventional text encoders in OVOD have limited capacity to model such composite attribute structures. Although self-attention implicitly encodes inter-attribute relationships, the resulting diffuse feature representations often fail to disambiguate semantically similar categories. To address this limitation, we propose aggregating multi-attribute descriptions (e.g., “wheeled_artillery equipped_vehicle”) into a unified, discriminative textual embedding within the text-encoding module. This aggregation explicitly strengthens the representation of the constituent attributes and thereby improves fine-grained category discrimination.

To this end, we propose an attribute text feature-aggregation mechanism. The core idea is to explicitly model attribute-level features of fine-grained categories through structured text inputs and a constrained attention masking strategy, thereby enhancing discriminative capability across visually similar classes.

We introduce multi-category joint attribute encoding, a structured text-encoding framework for fine-grained category representation. To enable explicit attribute-level modeling, we first construct structured textual inputs with explicit syntactic delimiters: attributes within a single category are concatenated using underscores “_”, while distinct categories are separated by periods “.”. This formatting allows precise identification of attribute boundaries and category scope during tokenization. For example, the input is formatted as: “wheeled_artillery equipped_vehicle. tracked_armored_artillery equipped_tank”.
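The delimiter convention can be checked with a round trip: formatting a list of categories and parsing it back should recover the same attribute boundaries. This is a string-level sketch only, and the helper names are hypothetical. A real pipeline would locate boundaries on the tokenizer output rather than on raw strings.

```python
def format_categories(categories):
    """Join the words of one category with '_' and separate categories with '. '."""
    return ". ".join("_".join(words) for words in categories)


def parse_categories(text):
    """Recover the per-category word lists from the structured string."""
    return [segment.split("_") for segment in text.split(". ")]


categories = [
    ["wheeled", "artillery equipped", "vehicle"],
    ["tracked", "armored", "artillery equipped", "tank"],
]
text = format_categories(categories)
print(text)
# wheeled_artillery equipped_vehicle. tracked_armored_artillery equipped_tank
print(parse_categories(text) == categories)  # True
```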

For the trained model, given an image and an attribute description, our objective is to integrate the multiple attributes and class names of all categories into a global feature representation. This global feature should emphasize attribute semantics so that each category can be distinguished from other fine-grained categories. For multi-category, multi-attribute detection scenarios, this paper proposes an efficient parallel encoding scheme. A hierarchical encoding framework processes multiple category texts in batches. The global features of all category texts are computed simultaneously, and the attribute features at the same positional index within each global text are likewise processed concurrently.
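The parallel scheme saves encoder passes. It needs one pass for all global texts plus one pass per attribute position, instead of one pass per category and per attribute. The planner below is a hypothetical sketch. It assumes that the last word of each category is the class name and that every preceding word is an attribute. It also flags categories that lack an attribute at a given position.

```python
def plan_parallel_passes(categories):
    """Return the encoder passes for a batch of categories.

    Each category is a list of words whose last element is the class name
    (assumption). The first pass encodes all global texts; each later pass
    encodes the k-th attribute of every category at once, with a validity
    flag for categories that have fewer attributes.
    """
    global_texts = ["_".join(words) for words in categories]
    attr_counts = [len(words) - 1 for words in categories]
    passes = [("global", global_texts, [True] * len(categories))]
    for k in range(max(attr_counts, default=0)):
        valid = [k < count for count in attr_counts]
        passes.append((f"attribute {k}", global_texts, valid))
    return passes


categories = [
    ["wheeled", "artillery equipped", "vehicle"],
    ["tracked", "armored", "artillery equipped", "tank"],
]
passes = plan_parallel_passes(categories)
# Sequential baseline: one global pass plus one pass per attribute, per category.
sequential = sum(1 + (len(words) - 1) for words in categories)
print(len(passes), "parallel passes vs", sequential, "sequential passes")
# 4 parallel passes vs 7 sequential passes
```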

For each category text, our approach comprises two stages: global encoding and attribute-level encoding. First, a global text containing the complete attribute and category information is encoded, which allows thorough interaction among its textual components through self-attention. Global text encoding is formulated as

$$F_g = \mathrm{TextEncoder}(T, M_g),$$

where $T$ denotes the text to be encoded, $M_g$ is the mask used for global encoding, and $F_g$ is the global text feature.

Building on this, we devise a context-dependent attribute-encoding method to enhance the semantic representation of attribute words. It employs a masking mechanism that preserves interactions between the current attribute word and the global text while masking out attention from other, irrelevant tokens. Attribute text encoding is formulated as

$$F_{a_i} = \mathrm{TextEncoder}(T, M_{a_i}),$$

where $T$ denotes the text to be encoded, $M_{a_i}$ is the mask used for attribute encoding, and $F_{a_i}$ denotes the feature of the $i$-th attribute text.

Attribute encoding requires the positions of the tokens that correspond to each attribute text within the global text. We identify these positions as follows. For a text string composed of multiple attributes and categories, such as “wheeled_artillery equipped_vehicle . tracked_armored_artillery equipped_tank”, the symbol “.” separates different categories, and an underscore “_” is appended after each attribute text to mark the positions of attribute words. The resulting masks are applied in the self-attention computation of the text encoder:

$$\mathcal{F} = \mathrm{TextEncoder}(T, \mathcal{M}),$$

where $\mathcal{M} = \{M_g, M_{a_1}, \dots, M_{a_n}\}$ comprises the global mask $M_g$ and the attribute masks $M_{a_i}$, and $\mathcal{F} = \{F_g, F_{a_1}, \dots, F_{a_n}\}$ comprises the global text feature $F_g$ and the attribute text features $F_{a_i}$. The global mask governs the computation of the global text feature, while each attribute mask controls the derivation of its corresponding attribute text feature.
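A minimal sketch of the position lookup is shown below. It uses a simplified tokenizer that emits each word and each punctuation character as a separate token. BERT's basic tokenizer also splits “_” and “.” off as punctuation, but its WordPiece step may further split words, which this sketch ignores. The helper names are hypothetical. The returned index lists are the input that a mask builder would consume.

```python
import re


def tokenize(text):
    """Simplified stand-in tokenizer: words and single punctuation marks."""
    return re.findall(r"[A-Za-z0-9]+|[^A-Za-z0-9\s]", text)


def locate_attributes(tokens):
    """Per category, return token indices of each attribute (segments closed
    by '_') and of the class name (segment closed by '.' or end of text)."""
    categories, attributes, current = [], [], []
    for index, token in enumerate(tokens):
        if token == "_":
            attributes.append(current)
            current = []
        elif token == ".":
            categories.append({"attributes": attributes, "name": current})
            attributes, current = [], []
        else:
            current.append(index)
    if attributes or current:
        categories.append({"attributes": attributes, "name": current})
    return categories


text = "wheeled_artillery equipped_vehicle . tracked_armored_artillery equipped_tank"
tokens = tokenize(text)
spans = locate_attributes(tokens)
for category in spans:
    print([[tokens[i] for i in attr] for attr in category["attributes"]],
          [tokens[i] for i in category["name"]])
# [['wheeled'], ['artillery', 'equipped']] ['vehicle']
# [['tracked'], ['armored'], ['artillery', 'equipped']] ['tank']
```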

Each mask $M_x$ is either the global mask $M_g$, associated with the global text, or an attribute mask $M_{a_i}$, associated with the $i$-th attribute text. In $M_g$, the positions corresponding to the tokens of each global text are set to false (i.e., left unmasked). In $M_{a_i}$, only the positions corresponding to the tokens of the $i$-th attribute within each global text are set to false.
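The sketch below builds boolean masks following the text's convention that positions set to false are left open for attention (True means blocked). It applies them in a single-head attention with no learned projections. Two choices in it are assumptions rather than the exact masks used in this work, and all names are hypothetical. First, the global mask confines each token to its own category text. Second, the attribute mask follows one possible reading of the column-wise variant: each token of a category may attend only to the key columns of that category's selected attribute. Rows with no permitted key are returned as zeros to avoid NaNs.

```python
import numpy as np


def global_mask(category_of_token):
    """True where attention is blocked: tokens see only their own category."""
    c = np.asarray(category_of_token)
    return c[:, None] != c[None, :]


def attribute_mask(category_of_token, attribute_tokens):
    """Column-wise variant (assumption): within a category, queries may attend
    only to the key columns of the selected attribute's tokens."""
    allowed_cols = np.zeros(len(category_of_token), dtype=bool)
    allowed_cols[list(attribute_tokens)] = True
    return global_mask(category_of_token) | ~allowed_cols[None, :]


def masked_attention(x, blocked):
    """Single-head attention with Q = K = V = x (no projections)."""
    scores = x @ x.T / np.sqrt(x.shape[1])
    scores = np.where(blocked, -np.inf, scores)
    empty = blocked.all(axis=1)          # rows that may attend to nothing
    scores[empty] = 0.0                  # placeholder values, zeroed below
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    out = weights @ x
    out[empty] = 0.0
    return out


rng = np.random.default_rng(0)
category_of_token = [0, 0, 0, 1, 1]      # two category texts: 3 + 2 tokens
x = rng.normal(size=(5, 4))
m_g = global_mask(category_of_token)
m_a = attribute_mask(category_of_token, {0, 3})  # first token of each category
f_g = masked_attention(x, m_g)
f_a = masked_attention(x, m_a)
print(np.allclose(f_a[1], x[0]), np.allclose(f_a[4], x[3]))  # True True
```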

Furthermore, different categories have different numbers of attributes. To keep attribute positions synchronized across categories, we apply a zero-padding strategy during attribute text encoding. For categories with missing attributes, the corresponding feature representations are set to zero vectors after encoding. This prevents the text features from being contaminated by the encoding of irrelevant categories.
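A minimal sketch of the zero-padding step follows. It assumes that each attribute has already been encoded into a pooled vector of size `dim`. This is a simplification, because token-level features would add a sequence axis. The helper name is hypothetical. Padded slots hold zero vectors, so any later sum over the attribute axis is unaffected by them.

```python
import numpy as np


def pad_attribute_features(per_category, dim):
    """Stack ragged per-category attribute features into a dense
    (categories, max_attributes, dim) array, zero-filling missing slots."""
    max_attrs = max((len(feats) for feats in per_category), default=0)
    padded = np.zeros((len(per_category), max_attrs, dim))
    valid = np.zeros((len(per_category), max_attrs), dtype=bool)
    for c, feats in enumerate(per_category):
        for k, feat in enumerate(feats):
            padded[c, k] = feat
            valid[c, k] = True
    return padded, valid


dim = 3
per_category = [
    [np.ones(dim), 2 * np.ones(dim)],                 # two attributes
    [np.ones(dim), np.ones(dim), np.ones(dim)],       # three attributes
]
padded, valid = pad_attribute_features(per_category, dim)
print(padded.shape)  # (2, 3, 3)
print(valid[0])      # [ True  True False]
```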

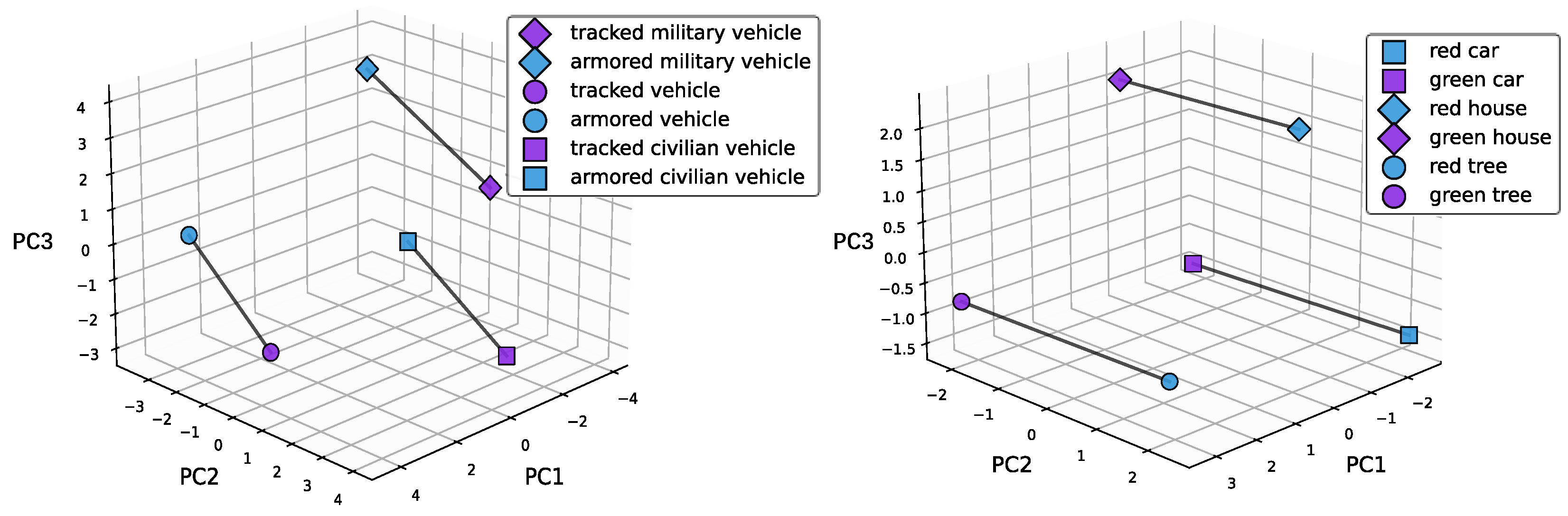

Prior work [34] has found that embeddings of compositional concepts in CLIP [10] can typically be approximated as the sum of vectors corresponding to the individual factors. We add the global text features and the attribute text features because text encoding is approximately linear in the same sense. Figure 5 visualizes features encoded by BERT [32] in 3D after principal component analysis (PCA). PCA is a widely used linear transformation for feature dimensionality reduction.
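The following synthetic experiment illustrates why additive embeddings produce approximately linear structure after PCA. It does not use BERT. The hypothetical “embeddings” are sums of random per-attribute vectors plus small noise. PCA is a linear projection, so a shift that changes only the drive mechanism stays nearly the same vector in the 3D projection, whatever the other attributes are.

```python
from itertools import product

import numpy as np

rng = np.random.default_rng(0)
dim = 64
# Hypothetical per-attribute vectors; each "embedding" is their sum plus noise.
drive = {name: rng.normal(size=dim) for name in ("wheeled", "tracked")}
role = {name: rng.normal(size=dim) for name in ("artillery equipped", "missile launching")}
look = {name: rng.normal(size=dim) for name in ("armored", "cargo style")}

names = list(product(drive, role, look))
X = np.stack([drive[d] + role[r] + look[a] + 0.05 * rng.normal(size=dim)
              for d, r, a in names])

# PCA to 3D: project the centred data onto the top-3 right singular vectors.
Xc = X - X.mean(axis=0)
_, _, vt = np.linalg.svd(Xc, full_matrices=False)
Z = Xc @ vt[:3].T

# Shift caused by changing only the drive mechanism, for each (role, look) pair.
row = {name: i for i, name in enumerate(names)}
shifts = np.stack([Z[row[("tracked", r, a)]] - Z[row[("wheeled", r, a)]]
                   for r in role for a in look])
unit = shifts / np.linalg.norm(shifts, axis=1, keepdims=True)
cosines = unit @ unit[0]
print(cosines.round(3))  # all close to 1: the shifts are nearly parallel
```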

The encoded representations of attribute texts differing by a single attribute exhibit approximately linear relationships in the feature space. Based on this observation, this paper employs a linear combination approach to effectively integrate attribute features, thereby enhancing detection performance. As shown in

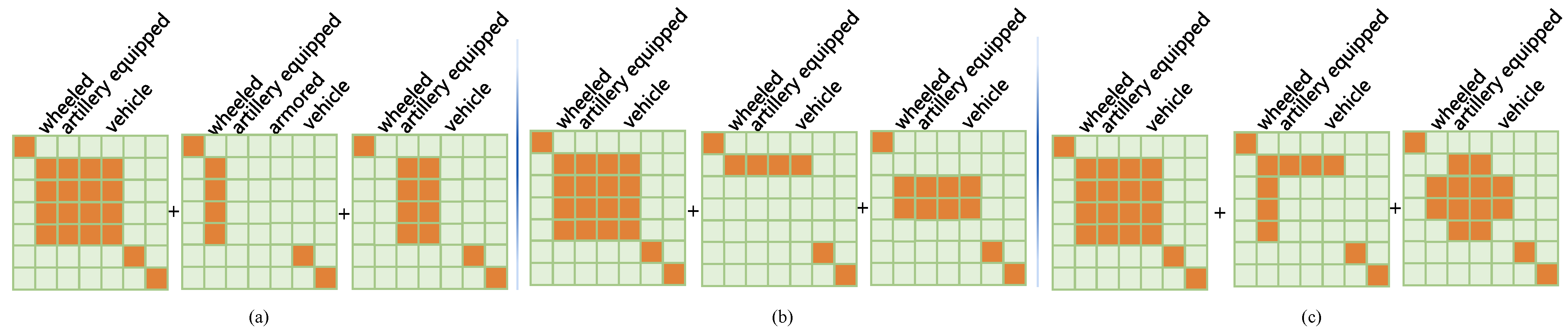

Figure 6, different masking strategies are applied in the attribute-encoding module. Experimental results demonstrate that column-wise interaction approach yields the best performance.

Finally, the global features and individual attribute features are summed to form the fused text representation.
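Write the global feature of a category as F_g and its i-th attribute feature as F_{a_i}; this notation is assumed here. The fusion step is then F = F_g + Σ_i F_{a_i}. The sketch below assumes pooled per-category vectors and zero-padded attribute features with a validity mask. The function name is hypothetical.

```python
import numpy as np


def fuse_text_features(global_feats, attr_feats, valid):
    """global_feats: (C, D); attr_feats: (C, K, D) padded; valid: (C, K) bool.
    Returns F_g + sum_i F_{a_i} per category, ignoring padded slots."""
    attr_feats = np.where(valid[..., None], attr_feats, 0.0)
    return global_feats + attr_feats.sum(axis=1)


global_feats = np.ones((2, 3))
attr_feats = np.array([
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],   # category 0: two attributes
    [[0.0, 0.0, 1.0], [9.0, 9.0, 9.0]],   # category 1: second slot is padding
])
valid = np.array([[True, True], [True, False]])
fused = fuse_text_features(global_feats, attr_feats, valid)
print(fused)
# [[2. 2. 1.]
#  [1. 1. 2.]]
```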

This encoding strategy strengthens the distinction between fine-grained categories while keeping the computational cost low.

Because the text inputs are fixed during testing, all text features need to be computed only once in advance. Attribute-level aggregation therefore adds no per-image computational cost at inference.
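Because the prompt does not change across test images, the text branch can be memoized. The sketch below wraps a hypothetical placeholder encoder in Python's `functools.lru_cache`. The placeholder returns dummy numbers, not real features. The sketch shows that the text side is computed once, however many images are processed.

```python
from functools import lru_cache

encoder_calls = 0


@lru_cache(maxsize=None)
def fused_text_features(prompt: str) -> tuple:
    """Hypothetical stand-in for global + attribute encoding and summation.
    Cached, so each distinct prompt is encoded only once."""
    global encoder_calls
    encoder_calls += 1
    return tuple(float(len(category)) for category in prompt.split(". "))


prompt = "wheeled_artillery equipped_vehicle. tracked_armored_artillery equipped_tank"
for _ in range(1000):                     # e.g. 1000 test images, same prompt
    features = fused_text_features(prompt)
print(encoder_calls)                      # 1
```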

2.4. Multi-Positive Contrastive Learning

Existing methods typically employ image–text contrastive learning to align visual and textual features. When training with detection-format datasets, each image region is associated with a single textual label. The similarity between image-region features and individual text features is computed as follows. First, following the formulation in Equation (1), textual descriptions or attributes are encoded into textual feature representations $F$. Next, an image encoder processes the input image to produce the visual feature representations

$$I = \mathrm{ImageEncoder}(\text{image}).$$

The similarity between the image-region features $I$ and the text features $F$ is then computed by dot products:

$$S = I F^{\top},$$

where $I$ and $F$ denote the encoded features of the image and the text prompt, respectively, and $S$ is their similarity matrix. Its entry $S_{ij}$ is the similarity between the $i$-th image region and the $j$-th text.
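The following is a shape-level sketch of the similarity computation. It assumes one pooled feature vector per region and per text. Grounding DINO itself scores region-token pairs, and this sketch ignores that. The sizes and random features are placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)
num_regions, num_texts, dim = 5, 7, 16     # placeholder sizes
I = rng.normal(size=(num_regions, dim))    # image-region features
F = rng.normal(size=(num_texts, dim))      # text features
S = I @ F.T                                # S[i, j] = <I_i, F_j>
print(S.shape)                             # (5, 7)
```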

However, in multi-label classification scenarios, a single object may correspond to multiple text labels. For instance, a truck region may relate to both the “wheeled” and the “cargo style” labels at the same time. Text obtained from an offline attribute correspondence table includes parent class names and their associated subclass attributes, and we use multi-label training for such text. During training, both the parent category and the related attributes of the same object are treated as positive samples. This establishes a many-to-many relationship between fine-grained category texts and attribute texts, and the loss function is defined to accommodate it. We formally define the set of positive samples as

$$\mathcal{P} = \{(i, j) \mid \text{the } j\text{-th text is a valid label for the } i\text{-th image region}\}.$$

The similarity score between the $i$-th image region and the $j$-th text is normalized via a sigmoid function:

$$p_{ij} = \sigma(S_{ij}) = \frac{1}{1 + e^{-S_{ij}}}.$$

The contrastive loss is then computed to maximize the similarity between the image and its associated text labels while minimizing similarity with irrelevant texts:

where $i$ indexes the current image region, $j$ denotes a positive text label associated with the $i$-th image region (i.e., $(i, j)$ belongs to the set of positive samples), and $k$ indexes any text label in the vocabulary.
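The positive set can be built directly from an offline attribute correspondence table. In the sketch below, the table entries, the vocabulary order, and the helper names are hypothetical; the truck entry follows the example above. The sigmoid maps each similarity S_ij to a score in (0, 1).

```python
import numpy as np

# Hypothetical offline attribute correspondence table:
# fine-grained label -> (parent class name, attribute texts...).
ATTRIBUTE_TABLE = {
    "tank": ("combat vehicle", "tracked", "artillery equipped", "armored"),
    "truck": ("non-combat vehicle", "wheeled", "cargo style"),
}
VOCAB = list(dict.fromkeys(t for texts in ATTRIBUTE_TABLE.values() for t in texts))


def positive_set(region_labels):
    """All (i, j) such that the j-th vocabulary text is a valid label for region i."""
    index = {text: j for j, text in enumerate(VOCAB)}
    return {(i, index[text])
            for i, label in enumerate(region_labels)
            for text in ATTRIBUTE_TABLE[label]}


def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))


P = positive_set(["truck", "tank"])
S = np.random.default_rng(0).normal(size=(2, len(VOCAB)))
p = sigmoid(S)                 # p[i, j] lies in (0, 1)
print(sorted(P))
# [(0, 4), (0, 5), (0, 6), (1, 0), (1, 1), (1, 2), (1, 3)]
```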

This approach allows the model to learn from multiple relevant labels for each object, improving its ability to distinguish and classify objects in fine-grained categories under data-limited conditions.
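The sketch below shows one common way to realize a multi-positive loss on sigmoid scores. It computes binary cross-entropy over every region-text pair. Positive pairs (i, j) are pushed toward 1, all other texts k are pushed toward 0, and the total is normalized by the number of positives. This formulation, including the normalization, is an assumption and may differ from the loss used in this work.

```python
import numpy as np


def multi_positive_sigmoid_loss(S, positives):
    """S: (N, V) region-text logits; positives: set of (i, j) pairs.
    Binary cross-entropy on sigmoid(S) over all pairs (assumed form)."""
    Y = np.zeros_like(S)
    for i, j in positives:
        Y[i, j] = 1.0
    log_p = -np.logaddexp(0.0, -S)        # log(sigmoid(S)), numerically stable
    log_not_p = -np.logaddexp(0.0, S)     # log(1 - sigmoid(S))
    pair_losses = -(Y * log_p + (1.0 - Y) * log_not_p)
    return pair_losses.sum() / max(len(positives), 1)


# Region 0 has three positive texts and region 1 has four (hypothetical indices).
positives = {(0, 4), (0, 5), (0, 6), (1, 0), (1, 1), (1, 2), (1, 3)}
Y = np.zeros((2, 7))
for i, j in positives:
    Y[i, j] = 1.0
good = multi_positive_sigmoid_loss(np.where(Y == 1, 10.0, -10.0), positives)
bad = multi_positive_sigmoid_loss(np.where(Y == 1, -10.0, 10.0), positives)
print(good < bad)  # True
```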