Artificial Intelligence in Healthcare: How to Develop and Implement Safe, Ethical and Trustworthy AI Systems

Abstract

1. Introduction

2. Research Hypotheses

3. AI Ethics Concepts in Healthcare

3.1. Types of AI

3.2. AI Ethics Challenges

3.3. Accountability of AI-Driven Healthcare Decisions

3.4. Balancing Accountability with Innovation

3.5. Balancing Innovation and Patient Safety

3.6. Product Liability of AI Systems

4. Materials and Methods

The following inclusion criteria were applied:

- Studies that focused on the application of AI systems in health;

- Studies that focused on the development of AI systems in health;

- Studies that discussed the benefits and challenges of AI systems in health;

- Studies that provided a comprehensive review of regulatory compliance of AI systems in health.

The following exclusion criteria were applied:

- Studies that did not focus on AI systems;

- Studies that were not published in English;

- Studies that were not peer-reviewed;

- Studies that were published before 2010.

The selected studies were analyzed using the following approaches:

- Thematic analysis to identify the benefits and challenges of AI systems in health;

- Content analysis to examine the regulatory compliance of AI systems;

- Network analysis to visualize the relationships between different concepts related to AI in health systems.
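As an illustration of the network analysis step, a concept network can be built from co-occurrence counts: two concepts are linked whenever they are coded in the same study, and the edge weight is the number of such studies. The Python sketch below assumes that each study has already been coded with a set of concept labels; the labels and the `co_occurrence_edges` helper are hypothetical and do not reproduce our coding scheme or tooling.

```python
from collections import Counter
from itertools import combinations


def co_occurrence_edges(coded_studies: list[set[str]]) -> Counter:
    """Count how often each pair of concepts is coded in the same study.

    Pairs are stored in sorted order so that ("a", "b") and ("b", "a")
    map to the same undirected edge.
    """
    edges: Counter = Counter()
    for concepts in coded_studies:
        for pair in combinations(sorted(concepts), 2):
            edges[pair] += 1
    return edges


# Hypothetical coding of three studies (illustrative labels only).
studies = [
    {"privacy", "liability", "transparency"},
    {"privacy", "transparency"},
    {"liability", "accountability"},
]

for (a, b), weight in co_occurrence_edges(studies).most_common():
    print(f"{a} -- {b}: {weight}")
```

The resulting weighted edge list can then be passed to a graph library or visualization tool for layout and clustering; the choice of tool is left open here.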

- Fill information gaps and verify the best practices identified during the review of publicly available information;

- Capture perceptions regarding challenges and trends.

- What are the technical implications and lessons learned?

- How are ethical considerations (e.g., data privacy) taken into account?

- What are the current/envisaged AI governance frameworks?

- Is the implementation of AI in healthcare regulated at the state and federal levels, and if so, how (e.g., data privacy, liability, procurement)?

- What kind of guidelines/checklists exist and how are they enforced?

- Literature Review Foundation: Initial questions were informed by our preliminary review of existing literature and competency frameworks, which revealed a fragmented understanding of the optimal AI-related ethical, legal, and regulatory knowledge and competencies required in healthcare education and medical practice settings.

- Research Team Expertise: Questions were collaboratively developed by the multidisciplinary research team, drawing on the following:

  - Clinical and medical education expertise (senior academics, medical educators, and practicing physicians)

  - Healthcare professional and research methodology experience (healthcare professional with extensive realist research experience)

  - Educational technology expertise (education adviser with technology focus)

  - Information technology professional perspective (senior IT professional)

- Stakeholder-Specific Adaptation: The core question framework was adapted for each stakeholder group (students, medical educators, digital sector experts) to ensure relevance while maintaining consistency across groups for comparative analysis.

- Exploratory Design Principles: Consistent with our qualitative, interpretivist, and inductive research design, questions were deliberately broad and open-ended to encourage brainstorming and discussion, allowing themes to emerge naturally from participants rather than being constrained by predetermined categories.

Relevance and Rationale

5. Results

5.1. Validation of Research Hypotheses

5.2. Regulatory Compliance of AI Systems in Healthcare

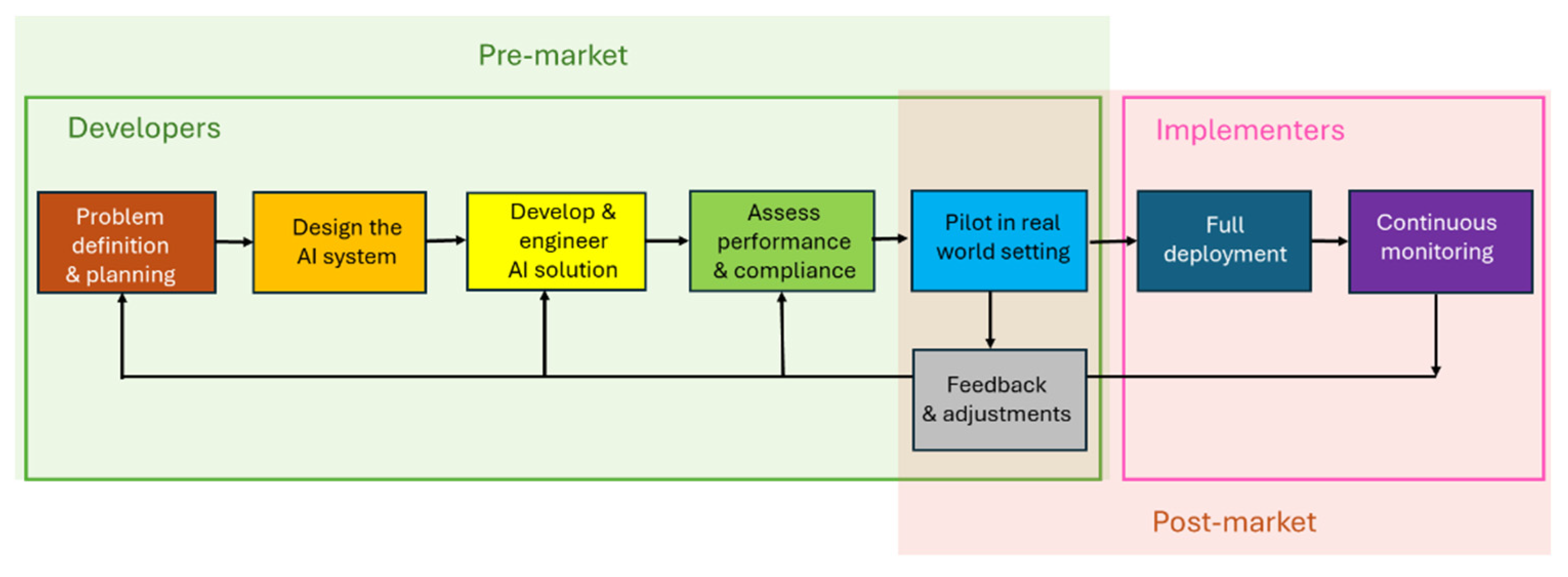

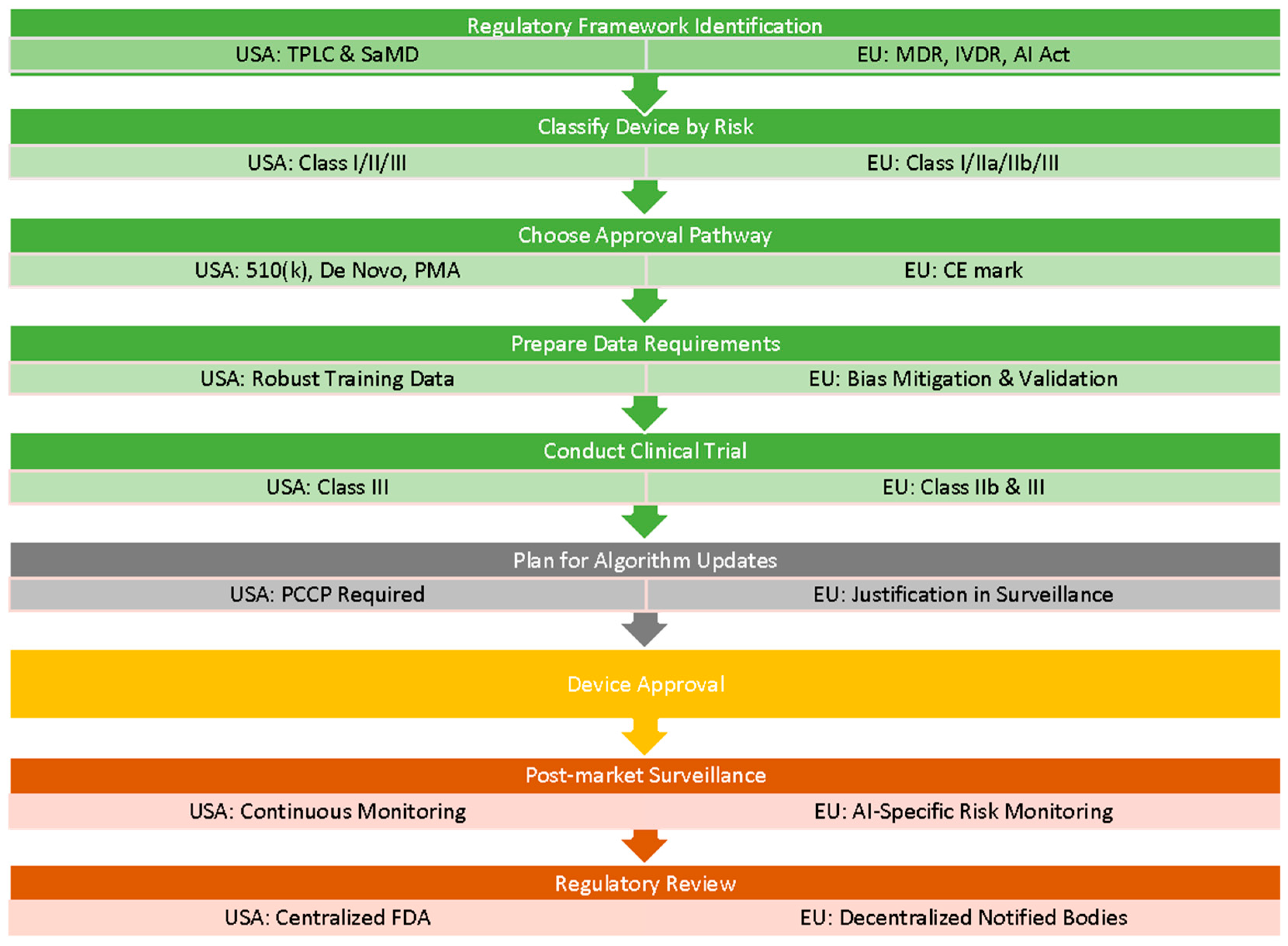

Regulatory Compliance Processes

5.3. Requirements for Developers

| Legislation Name | What Does It Do? | How Is It Useful for Developers? |
|---|---|---|
| EU Legislation and Guidance | | |
| European AI Act (AIA) [23] | Classifies AI systems by risk and regulates high-risk applications to ensure safety, transparency, and the protection of fundamental rights. | Establishes clear compliance pathways, requiring risk assessments, data governance, transparency, and conformity assessments. |
| Medical Device Regulation (MDR) [38] | Regulates medical devices in the EU, with stricter requirements for clinical evaluation, safety, and traceability. | Requires rigorous evidence and post-market surveillance for medical software that qualifies as a device, supporting patient safety. |
| In Vitro Diagnostic Medical Devices Regulation (IVDR) [39] | Regulates in vitro diagnostics such as laboratory tests and reagents, with a strong emphasis on risk-based classification and performance. | Mandates clinical evidence, labeling, traceability, and post-market monitoring, which are important for developers of diagnostic tools. |
| EU Product Liability Directive [40] | Establishes strict liability for producers of defective products, including software and AI-driven products, so that injured persons can obtain compensation without having to prove negligence. | Developers must ensure proper deployment, monitoring, and documentation of AI tools to avoid liability and to demonstrate due diligence in mitigating product risk. |
| EU Clinical Trials Regulation (Regulation (EU) No 536/2014) [41] | Harmonizes clinical trial approval and oversight processes across the EU. | Facilitates multi-country trials and ensures participant safety, transparency, and data integrity. |
| Health Technology Assessment Regulation (HTAR) [42] | Coordinates clinical effectiveness assessments of health technologies across EU countries. | Reduces duplication, speeds up market access, and supports evidence-based reimbursement decisions. |
| Commission Nationale de l’Informatique et des Libertés (CNIL) ‘Self-assessment guide for artificial intelligence systems’ [43] | Offers practical steps for complying with the GDPR when using AI systems. | Helps identify risks and ethical issues early in AI design and development. |
| Reform of the EU Pharmaceutical Legislation [44] | Aims to ensure timely access to safe and affordable medicines, foster innovation, and address shortages in the EU pharmaceutical sector. | Encourages the development of innovative medical solutions and streamlines regulatory processes, facilitating faster time-to-market for new therapies. |
| European Health Data Space Regulation (EHDS) [45] | Enables secure sharing of and access to health data across EU Member States. | Supports interoperability, innovation, and cross-border health services while ensuring data privacy. |
| General Data Protection Regulation (GDPR) [46] | Standardizes data privacy laws across the EU, enhancing individuals’ data rights and mandating secure, lawful, and transparent data processing. | Developers must implement privacy by design, conduct data protection impact assessments (DPIAs) for high-risk processing, establish a lawful basis for processing (such as explicit consent for health data, where applicable), and ensure secure data handling. |
| US Legislation and Guidance | | |
| The Health Insurance Portability and Accountability Act (HIPAA) [47] | Protects the privacy and security of health information and sets standards for electronic health data. | Developers acting as covered entities or business associates must comply with the Privacy and Security Rules, especially for healthcare apps and platforms. |
| FDA Regulations Relating to Good Clinical Practice and Clinical Trials [48] | Ensures ethical, legal, and scientific standards for clinical trials involving human subjects. | Guides trial conduct, data integrity, informed consent, and compliance with ethical requirements. |
| FDA guidance for developers of AI-enabled medical devices [49] | Provides a regulatory framework for AI/ML-based Software as a Medical Device (SaMD), covering design, updates, and oversight. | Enables continuous improvement through Predetermined Change Control Plans (PCCPs) while supporting safety through Good Machine Learning Practice (GMLP) and real-world performance monitoring. |
| State-level product liability laws [50] | Hold manufacturers liable for defective products under state-specific rules. | Developers of AI systems must assess their exposure to liability for defects, especially for consumer- or health-facing products. |

5.4. Requirements for Implementers

| Legislation Name | What Does It Do? | How Is It Useful for Implementers? |
|---|---|---|
| EU Legislation and Guidance | | |
| General Data Protection Regulation (GDPR) [46] | Standardizes data privacy laws across the EU, enhancing individuals’ data rights and mandating secure, lawful, and transparent data processing. | Implementers must ensure that systems handle personal data securely, rely on a valid lawful basis for processing (such as consent), and meet obligations relating to data subject rights. |
| European AI Act (AIA) [23] | Classifies AI systems by risk and regulates high-risk applications to ensure safety, transparency, and the protection of fundamental rights. | Implementers must monitor deployment for conformity, ensure transparency, and maintain human oversight in high-risk applications. |
| Health Technology Assessment Regulation (HTAR) [42] | Coordinates clinical effectiveness assessments of health technologies across EU countries. | Implementers must align deployments with evidence expectations for reimbursement and decision-making across Member States. |
| EU Clinical Trials Regulation (Regulation (EU) No 536/2014) [41] | Harmonizes clinical trial approval and oversight processes across the EU. | Implementers must ensure that AI tools used in trials meet centralized authorization, safety reporting, and transparency requirements. |
| EU Product Liability Directive [40] | Establishes strict liability for producers of defective products, including software and AI-driven products, so that injured persons can obtain compensation without having to prove negligence. | Implementers can still bear responsibility if they modify an AI system beyond its intended use, if their integration or deployment of the system introduces a defect, or if they fail to monitor the system or properly train users, leading to misuse. |
| European Health Data Space Regulation (EHDS) [45] | Enables secure sharing of and access to health data across EU Member States. | Implementers must ensure that systems are compatible with cross-border data-sharing standards, support secure data access for healthcare delivery and research, and comply with governance rules for ethical secondary data use. |
| US Legislation and Guidance | | |
| The Health Insurance Portability and Accountability Act (HIPAA) [47] | Protects the privacy and security of health information and sets standards for electronic health data. | Implementers must ensure that systems enforce access controls, audit logging, encryption, and incident response mechanisms. |
| FDA Regulations Relating to Good Clinical Practice and Clinical Trials [48] | Ensures ethical, legal, and scientific standards for clinical trials involving human subjects. | Implementers must use validated AI systems in trials and follow oversight, informed consent, and reporting rules. |
| 21st Century Cures Act (2016) [58] | Modernizes healthcare regulation and excludes certain clinical decision support (CDS) software functions from the definition of a medical device. | Implementers must determine the regulatory status of AI tools and ensure appropriate transparency for CDS tools that fall outside device regulation. |
| Blueprint for an AI Bill of Rights (2022) [59] | Outlines non-binding principles for responsible AI use, including safety, privacy, fairness, and transparency. | Implementers should evaluate deployed AI for potential harms and bias and ensure informed user engagement. |
| Algorithmic Accountability Act [60] | Proposes mandatory risk assessments and impact audits for automated systems. | Implementers may be required to assess system risks, document mitigation strategies, and report on algorithmic impact. |
| Equal Credit Opportunity Act (ECOA) and Fair Housing Act [61] | Prohibit discrimination in credit and housing decisions, including discrimination resulting from algorithmic systems; relevant where healthcare AI influences access or coverage. | Implementers must avoid biased AI outputs affecting vulnerable groups and comply with anti-discrimination laws. |
| State-Level AI and Data Privacy Laws (e.g., California Consumer Privacy Act, CCPA) [62] | Regulate personal data use, AI profiling, and user rights at the state level. | Implementers must ensure AI system compliance with consent, explainability, and opt-out requirements. |
| NIST AI Risk Management Framework (2023) [63] | Provides voluntary best practices for trustworthy AI development and deployment. | Implementers can use it to assess and reduce system risks while promoting safe and ethical operations. |
| Federal Trade Commission (FTC) Guidelines on AI and Healthcare [64] | Advises against deceptive AI claims and biased systems in consumer health. | Implementers must ensure that systems are transparent, non-misleading, and aligned with consumer protection laws. |
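Several implementer obligations above, such as audit logging under HIPAA, concern the traceability of AI-assisted decisions. The HIPAA Security Rule requires audit controls (45 CFR 164.312(b)) but does not mandate a particular technique. One common way to make an audit trail tamper-evident is hash chaining, in which each entry stores a SHA-256 hash computed over its content together with the previous entry’s hash. The Python sketch below illustrates the idea under simplifying assumptions; the `AuditLog` class and the record fields are hypothetical, and this is not a compliance-ready implementation.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes identically.
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI-assisted decisions (illustrative)."""

    entries: list[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(record, prev_hash)
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or _digest(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"user": "clinician-42", "model": "triage-v1", "output": "high risk", "overridden": False})
log.append({"user": "clinician-7", "model": "triage-v1", "output": "low risk", "overridden": True})
print(log.verify())  # True
log.entries[0]["record"]["output"] = "low risk"  # simulate tampering with a stored entry
print(log.verify())  # False
```

Hash chaining only detects alteration. Anyone able to rewrite the entire log could recompute every hash, so in practice the latest hash would typically be stored or signed outside the system that writes the log.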

6. Discussion

6.1. Product and Medical Liability and the Use of AI Systems

6.2. Accrediting AI Technologies for Hospitals

6.3. Requirements for AI Agents in Healthcare

6.4. Ensuring Trustworthiness in Complex “Black Box” AI Models

- Developing explainable AI frameworks: Creating frameworks that provide insights into the decision-making processes of complex AI models can help build trust among stakeholders.

- Implementing model-agnostic interpretability methods: Techniques that can be applied to various AI models, regardless of their architecture, can facilitate the interpretation of complex models.

- Establishing standards for transparency and explainability: Developing standardized guidelines for transparency and explainability can ensure consistency across different AI models and applications.

- Fostering collaboration between AI developers and healthcare professionals: Involving healthcare professionals throughout development can help ensure that AI models are designed with transparency, explainability, and trustworthiness in mind.
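Permutation feature importance is one widely used model-agnostic interpretability method: it treats the model purely as a prediction function and measures how much a performance metric drops when the values of a single input feature are shuffled across cases. The pure-Python sketch below assumes a toy decision rule standing in for a trained model, synthetic data, and accuracy as the metric; the function names are illustrative.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], X: list[Row], y: list[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Callable[[Row], int], X: list[Row], y: list[int], n_repeats: int = 20, seed: int = 0
) -> list[float]:
    """Mean drop in accuracy when one feature column is randomly shuffled.

    Only the model's predictions are used, so the method is model-agnostic.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the outcome
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": flags risk when feature 0 exceeds 0.5 and ignores feature 1.
def black_box(row: Row) -> int:
    return int(row[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))
```

Because the toy model ignores the second feature, that feature’s importance is exactly zero, while shuffling the first feature degrades accuracy substantially. For real models, scikit-learn provides an equivalent routine, `sklearn.inspection.permutation_importance`. Permutation importance indicates which inputs a model relies on overall; it does not explain why a particular prediction was made for an individual patient.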

6.5. Case Study—Implementing AI Locally: Best Practice Example of University of Washington (UW) Medicine

7. Concluding Remarks

8. Potential Future Research Directions

- Pilot Studies for Questionnaire Validation: Our results produced comprehensive questionnaires (Appendix A and Appendix B), but these instruments require empirical validation. Future studies should test these questionnaires with actual AI developers and implementers to assess their practicality, completeness, and effectiveness. Mixed-method approaches combining quantitative usability metrics with qualitative feedback through interviews will be crucial to refine these tools and ensure they adequately capture all relevant regulatory and ethical considerations.

- Addressing Regulatory Gaps Identified: Our analysis revealed significant gaps in AI-specific hospital accreditation standards (Section 6.2) and uncertainties in liability allocation (Section 6.1). Future research should develop and test frameworks for AI-specific accreditation criteria and conduct comparative legal analyses across jurisdictions to propose clearer liability models for AI-related medical errors.

- Longitudinal Analysis of AI System Performance: While our study provides a snapshot of current regulatory requirements, it cannot assess long-term compliance or system evolution. Future research should track AI systems throughout their lifecycle, from initial deployment through multiple update cycles, to understand how regulatory compliance evolves and identify patterns of model drift or performance degradation that current frameworks may not adequately address.

- Comparative Effectiveness of Different Governance Models: Our case study (Section 6.5) presented one institutional approach, but broader comparative research is needed. Future studies should examine multiple healthcare institutions’ AI governance models to identify best practices and develop evidence-based recommendations for organizational structures that effectively balance innovation with safety.

- Quantitative Assessment of Regulatory Impact: Our discussion of GDPR’s impact (Section 4) was largely qualitative. Future research should quantitatively measure how comprehensive AI regulations affect healthcare organizations through metrics such as implementation costs, time to deployment, patient trust scores, and clinical outcomes. This empirical data will be crucial for policymakers to refine regulatory frameworks.

- Development of AI Agent Certification Frameworks: Our results highlighted the unique challenges posed by AI agents (Section 6.3) but could not propose detailed certification processes. Future research should develop and pilot certification frameworks for AI agents that mirror medical professional certification, including competency assessments, continuous monitoring, and recertification requirements.

- Longitudinal Analysis of AI Model Drift: Future research could focus specifically on model drift in healthcare applications, tracking performance indicators over time and using continuous monitoring to evaluate the effectiveness of re-validation protocols. The findings would inform best practices for AI system maintenance and risk mitigation.
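A re-validation trigger of the kind described above can be sketched as a simple rule over successive monitoring windows: re-validation is flagged when a clinical performance metric stays more than a tolerated margin below its validated baseline for several consecutive windows. The baseline, tolerance, window count, and the `PerformanceMonitor` class below are illustrative assumptions, not values or tooling recommended by any regulator.

```python
from collections import deque


class PerformanceMonitor:
    """Flags re-validation when a monitored metric stays below tolerance.

    baseline:  metric value accepted at validation (e.g., sensitivity).
    tolerance: largest acceptable absolute drop before a window counts as degraded.
    patience:  number of consecutive degraded windows that trigger re-validation.
    """

    def __init__(self, baseline: float, tolerance: float, patience: int) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent: deque[bool] = deque(maxlen=patience)  # keeps only the last `patience` flags

    def update(self, window_metric: float) -> bool:
        """Record one monitoring window; return True if re-validation is due."""
        self.recent.append(window_metric < self.baseline - self.tolerance)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


# Hypothetical monthly sensitivity values for a deployed model.
monitor = PerformanceMonitor(baseline=0.90, tolerance=0.03, patience=3)
monthly_sensitivity = [0.91, 0.89, 0.86, 0.85, 0.84, 0.88]
for month, value in enumerate(monthly_sensitivity, start=1):
    if monitor.update(value):
        print(f"Month {month}: sustained degradation, trigger re-validation")
```

Requiring several consecutive degraded windows reduces false alarms caused by month-to-month noise, at the cost of slower detection. In this example, the rule fires in month 5, after three consecutive months below 0.87.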

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Appendix A

1. As a manufacturer, do you identify all roles involved in AI development and their required competencies?

2. Have you tested the accuracy of your system under varying conditions?

3. Does the technical documentation of your AI system include detailed descriptions of design specifications, training methodologies, validation procedures, and performance?

4. Who owns the data you will be using? What data-sharing agreements are in place? How are the data owners involved in this process?

5. Who are the target end users of your system? What clinical or medical training do they have that may affect their use of your system? How are they involved in design and requirements gathering?

6. What is the intended medical purpose of the AI-based device (e.g., diagnosis, therapy, monitoring)? How will your system be integrated into existing workflows?

7. Does the device documentation characterize the intended patient population, including indications, contraindications, and relevant demographics?

8. Are stakeholder requirements translated into performance specifications?

9. Will stakeholders be aware of the AI component of the system, or will it be hidden within the technology? How might this decision affect its use?

10. Do you collect, label, and process training, validation, and test data?

11. Have you ensured that your training, validation, and test datasets meet quality, diversity, and representativeness requirements?

12. How are data storage and retention managed, especially for patient data?

13. Is there a justification for the selected model parameters and architecture?

14. Are procedures established to handle changes in model parameters before and after market deployment?

15. Is the model designed to be interpretable and explainable to users?

16. Do you ensure that the system maintains accuracy, robustness, and cybersecurity resilience throughout its lifecycle?

17. What quantitative quality criteria (e.g., accuracy, precision) are defined for the model?

18. Can you provide evidence of testing against foreseeable circumstances that might affect the system’s expected performance?

19. What mechanisms are in place for users to understand, oversee, and intervene in the AI system’s decisions?

20. Are there mechanisms to notify users that they are interacting with an AI system?

21. Is there documentation for specific user training and for user interface behavior under abnormal conditions?

22. Can you provide documentation of your risk assessment and mitigation measures?

23. Do you identify and address potential risks to health, safety, and fundamental rights during development?

24. Are systems in place to generate and store automated logs to ensure traceability and facilitate the investigation of adverse events?

25. Does the clinical evaluation demonstrate the AI system’s safety, performance, and benefit relative to the state of the art?

26. Are clinical outcome parameters defined and justified?

27. Are post-market clinical follow-ups (PMCF) planned and implemented as needed?

28. Is there a post-market surveillance (PMS) plan specifying data collection, quality criteria, and thresholds for action?

29. Are field data and real-world performance monitored for consistency with training data?

30. Are processes in place to ensure timely reporting of adverse effects and behavioral changes?

31. Are all development, validation, and monitoring activities comprehensively documented and version-controlled?

32. Do you address regulatory requirements for instructions for use (IFU), including intended use, limitations, and updates?

33. Do you ensure compliance with relevant data protection and non-discrimination laws?

34. If you are based outside the EU, have you appointed an authorized representative established in the EU? How do they ensure compliance with Art. 22 of the EU AI Act?

35. Are you coordinating with importers, distributors, and deployers to ensure adherence to their obligations under Arts. 23–27 of the EU AI Act?

36. What steps have you taken to ensure that the AI system aligns with the EU Ethics Guidelines for Trustworthy AI?

37. Do you ensure compliance with ethical standards, especially regarding patient privacy and data protection (in alignment with the GDPR)?

38. Have you assessed and documented the impact of your system on fundamental rights, as required for high-risk AI systems?

39. Have you addressed challenges in determining the system’s intended use under both the MDR and the AI Act?

40. Do you validate and control the outputs of general-purpose AI when it is incorporated into high-risk applications?

41. Do you verify that AI systems demonstrate comprehensive medical knowledge before deployment?

42. Are there independent validation processes to test AI models on data outside their original training data?

43. Do you ensure that the AI handles complex patient case analysis and scenario-based decision-making in line with the latest medical standards and guidelines?

44. Do you track deviations from clinical guidelines, and what error threshold is considered acceptable?

45. What metrics are used to measure the AI’s performance on specialty medical tasks?

46. Are there human oversight mechanisms in place when AI assists clinicians?

47. Does the AI identify its limitations and defer to human experts when needed?

48. Do healthcare professionals provide feedback to improve AI performance, and how is this feedback incorporated?

49. What are the criteria for progressing AI from supervised use to conditional autonomy?

50. Is the AI regularly recertified, and what happens if it fails to meet performance standards?

51. Are there safeguards against the AI drifting away from best practices over time?

52. Is liability clearly assigned if an AI-driven diagnosis leads to patient harm?

53. Are measures in place to prevent AI from being used beyond its certified competencies?

54. What strategies are being developed to help AI learn from real-world feedback in a reliable and ethical way?

55. Do you plan to update AI models to keep pace with medical advances and new research?
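Questions 28, 29, and 51 concern checking whether field data remain consistent with training data. One commonly used statistic for this is the Population Stability Index (PSI), which compares the binned distribution of a feature or model score in field data with a reference sample: PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the reference and field proportions in bin i. The Python sketch below assumes fixed bin edges and uses the 0.1 and 0.25 cut-offs often quoted as rules of thumb; these thresholds are conventions from practice, not regulatory requirements, and the data are synthetic.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin; `edges` are sorted interior bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference: list[float], field: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index of field data against a reference sample."""
    total = 0.0
    for e, a in zip(bin_proportions(reference, edges), bin_proportions(field, edges)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_level(value: float, moderate: float = 0.1, major: float = 0.25) -> str:
    """Map a PSI value to a rule-of-thumb drift category (assumed cut-offs)."""
    if value >= major:
        return "major"
    if value >= moderate:
        return "moderate"
    return "stable"


EDGES = [0.2, 0.4, 0.6, 0.8]  # hypothetical bins for a model risk score in [0, 1)
reference = [i / 100 for i in range(100)]        # stand-in for training-time scores
field = [min(v + 0.3, 0.99) for v in reference]  # stand-in for shifted field scores

print(drift_level(psi(reference, reference, EDGES)))  # stable
print(drift_level(psi(reference, field, EDGES)))      # major
```

Because empty bins are floored at a small epsilon, a bin that is empty in one sample can dominate the index, as it does in this example; in practice, bins are often derived from reference-data quantiles so that each contains enough observations.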

Appendix B

1. Are there documented policies and guidelines outlining the ethical use of AI in clinical decision-making?

2. Has the hospital developed a formal accountability structure for AI-related risks and errors?

3. Is there a publicly accessible transparency report on AI systems used in the hospital?

4. Do AI-driven clinical tools allow for human oversight and intervention, including decision overrides when necessary?

5. Have patient safety risks related to AI use been formally assessed and documented?

6. Does the hospital have a process for tracking AI-generated clinical decisions and their impact on patient outcomes?

7. Are AI systems used in the hospital compliant with privacy and security standards?

8. If AI is used for diagnostic or treatment purposes, does it have regulatory clearance or approval?

9. Does the hospital document data governance and patient consent when AI interacts with Electronic Health Records (EHRs)?

10. Does the hospital track Key Performance Indicators (KPIs) for AI effectiveness, such as the following:

    - AI diagnostic accuracy compared to human clinicians;

    - Reduction in hospital readmission rates;

    - Impact on clinical workflow efficiency.

11. Are AI models regularly updated and retrained based on real-world performance data?

12. Does the hospital conduct independent external audits on AI system performance?

13. Has the hospital implemented mandatory AI training for doctors, nurses, and administrative staff?

14. Does the hospital provide continuous AI education programs for staff?

15. Do employees understand how AI interacts with Electronic Health Records (EHRs) and patient data?

16. Are there structured processes in place for recording and reporting AI-related incidents?

17. Are AI-driven processes integrated into the hospital’s existing quality improvement initiatives?

18. Does the hospital ensure transparency in AI use for patients and stakeholders?
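Question 10 above lists KPIs such as AI diagnostic accuracy compared with human clinicians. Assuming the hospital logs, for each case, the AI suggestion, the clinician’s diagnosis, a reference-standard diagnosis (for example, one confirmed at follow-up), and whether the clinician overrode the AI, these indicators reduce to simple proportions. The `Case` record and `kpi_summary` function below are hypothetical; readmission and workflow-efficiency KPIs require other data sources and are not covered.

```python
from dataclasses import dataclass


@dataclass
class Case:
    ai_label: str         # diagnosis suggested by the AI system
    clinician_label: str  # clinician's final diagnosis
    reference_label: str  # reference standard, e.g., diagnosis confirmed at follow-up
    overridden: bool      # whether the clinician overrode the AI suggestion


def kpi_summary(cases: list[Case]) -> dict[str, float]:
    """Accuracy of AI and clinicians against the reference standard, plus override rate."""
    n = len(cases)
    return {
        "ai_accuracy": sum(c.ai_label == c.reference_label for c in cases) / n,
        "clinician_accuracy": sum(c.clinician_label == c.reference_label for c in cases) / n,
        "override_rate": sum(c.overridden for c in cases) / n,
    }


# Hypothetical log of four cases.
cases = [
    Case("pneumonia", "pneumonia", "pneumonia", False),
    Case("normal", "pneumonia", "pneumonia", True),
    Case("pneumonia", "normal", "normal", True),
    Case("normal", "normal", "normal", False),
]
print(kpi_summary(cases))
```

In this toy sample, both overrides corrected an AI error, a pattern that the override rate alone cannot reveal; pairing it with accuracy against the reference standard helps distinguish overrides that correct the AI from overrides that introduce errors.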

References

1. Sanford, S.T.; Showalter, J.S. The Law of Healthcare Administration, 10th ed.; Association of University Programs in Health Administration/Health Administration Press: Washington, DC, USA, 2023.

2. World Health Organization: Regional Office for Europe. Everything You Always Wanted to Know About European Union Health Policies but Were Afraid to Ask, 2nd ed.; Greer, S.L., Ed.; WHO Regional Office for Europe: Copenhagen, Denmark, 2019.

3. History of Medical Device Regulatory Framework in the EU. Available online: https://learning.eupati.eu/mod/page/view.php?id=928 (accessed on 14 April 2025).

4. Susskind, D. A Model of Technological Unemployment; Economics Series Working Papers; University of Oxford: Oxford, UK, 2018; Available online: https://www.danielsusskind.com/s/SUSSKIND-Technological-Unemployment-2018.pdf (accessed on 14 April 2025).

5. Seyyed-Kalantari, L.; Zhang, H.; McDermott, M.B.A.; Chen, I.Y.; Ghassemi, M. Underdiagnosis bias of artificial intelligence algorithms applied to chest radiographs in under-served patient populations. Nat. Med. 2021, 27, 2176–2182.

6. Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus 2023, 15, e46454.

7. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

8. Rajpurkar, P.; Chen, E.; Banerjee, O.; Topol, E.J. AI in health and medicine. Nat. Med. 2022, 28, 31–38.

9. AI Power Consumption and Share of Total Data Center Consumption Worldwide in 2023 with Forecasts to 2028 in Statista and Environmental Impact of AI in Statista. Available online: https://www.statista.com/statistics/1536969/ai-electricity-consumption-worldwide/ (accessed on 14 April 2025).

10. Artificial Intelligence (AI) in Health Care. Available online: https://www.congress.gov/crs-product/R48319 (accessed on 14 April 2025).

11. Khan, S.D.; Hoodbhoy, Z.; Raja, M.H.R.; Kim, J.Y.; Hogg, H.D.J.; Manji, A.A.A.; Gulamali, F.; Hasan, A.; Shaikh, A.; Tajuddin, S.; et al. Frameworks for procurement, integration, monitoring, and evaluation of artificial intelligence tools in clinical settings: A systematic review. PLoS Digit. Health 2024, 3, e0000514.

12. DeGusta, M. Are Smart Phones Spreading Faster than Any Technology in Human History? MIT Technology Review. Available online: https://www.technologyreview.com/2012/05/09/186160/are-smart-phones-spreading-faster-than-any-technology-in-human-history/ (accessed on 2 April 2025).

13. Shevtsova, D.; Ahmed, A.; A Boot, I.W.; Sanges, C.; Hudecek, M.; Jacobs, J.J.L.; Hort, S.; Vrijhoef, H.J.M. Trust in and acceptance of artificial intelligence applications in medicine: Mixed methods study. JMIR Hum. Factors 2024, 11, e47031.

14. Gangwal, A.; Lavecchia, A. Unleashing the power of generative AI in drug discovery. Drug Discov. Today 2024, 29, 103992.

15. Generative AI Agents. Available online: https://www.oracle.com/artificial-intelligence/generative-ai/agents/ (accessed on 2 April 2025).

16. OECD Principles on Artificial Intelligence. Available online: https://www.oecd.org/en/topics/policy-issues/artificial-intelligence.html (accessed on 26 May 2025).

17. World Health Organization. Global Strategy on Digital Health 2020–2025; World Health Organization: Geneva, Switzerland, 2021. Available online: https://www.who.int/health-topics/digital-health#tab=tab_1 (accessed on 26 May 2025).

18. Papadopoulou, E.; Gerogiannis, D.; Namorado, J.; Exarchos, T. An “algorithmic ethics” effectiveness impact assessment framework for developers of artificial intelligence (AI) systems in healthcare. Med. Case Rep. 2024, 10, 382.

19. Habli, I.; Lawton, T.; Porter, Z. Artificial intelligence in health care: Accountability and safety. Bull. World Health Organ. 2020, 98, 251–256.

- Regulation—EU—2024/1689—EN-EUR-Lex. Available online: https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng (accessed on 2 April 2025).

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare; Elsevier: Amsterdam, The Netherlands, 2020; pp. 295–336. [Google Scholar]

- Warraich, H.J.; Tazbaz, T.; Califf, R.M. FDA perspective on the regulation of artificial intelligence in health care and biomedicine. JAMA 2025, 333, 241–247. [Google Scholar] [CrossRef]

- EUR-Lex. Regulation (EU) 2024/1689 of the European Parliament and of the Council; European Union: Brussels, Belgium, 2024; Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L_202401689&qid=1732305030769 (accessed on 14 April 2025).

- Alami, H.; Lehoux, P.; Auclair, Y.; de Guise, M.; Gagnon, M.-P.; Shaw, J.; Roy, D.; Fleet, R.; Ahmed, M.A.A.; Fortin, J.-P. Artificial intelligence and health technology assessment: Anticipating a new level of complexity. J. Med. Internet Res. 2020, 22, e17707. [Google Scholar] [CrossRef] [PubMed]

- Kristensen, F.B.; Lampe, K.; Wild, C.; Cerbo, M.; Goettsch, W.; Becla, L. The HTA Core Model®—10 years of developing an international framework to share multidimensional value assessment. Value Health 2017, 20, 244–250. [Google Scholar] [CrossRef]

- Aboy, M.; Minssen, T.; Vayena, E. Navigating the EU AI Act: Implications for regulated digital medical products. NPJ Digit. Med. 2024, 7, 237. [Google Scholar] [CrossRef]

- GDPR in Healthcare: Compliance Guide. GDPR Register, 30 October 2024. Available online: https://www.gdprregister.eu/gdpr/healthcare-sector-gdpr/ (accessed on 2 April 2025).

- Yuan, B.; Li, J. The policy effect of the general data protection regulation (GDPR) on the digital public health sector in the European Union: An empirical investigation. Int. J. Environ. Res. Public Health 2019, 16, 1070. [Google Scholar] [CrossRef] [PubMed]

- Ebers, M. Truly risk-based regulation of artificial intelligence how to implement the EU’s AI Act. Eur. J. Risk Regul. 2024, 1–20. [Google Scholar] [CrossRef]

- Wachter, S. Limitations and loopholes in the EU AI act and AI liability directives: What this means for the European union, the United States, and beyond. Yale J. Law Technol. 2024, 26, 671. [Google Scholar] [CrossRef]

- Holland, J.H. Complex adaptive systems. Daedalus 1992, 121, 17–30. [Google Scholar]

- European Commission Directorate-General for Health and Food Safety. Briefing Document Template for Parallel HTA Coordination Group (HTACG)/European Medicines Agency (EMA) Joint Scientific Consultation (JSC) for Medicinal Products (MP); Directorate-General for Health and Food Safety: Brussels, Belgium, 2024. Available online: https://health.ec.europa.eu/publications/briefing-document-template-parallel-hta-coordination-group-htacgeuropean-medicines-agency-ema-joint_en (accessed on 14 April 2025).

- European Commission Directorate-General for Health and Food Safety. Guidance on Filling in the Joint Clinical Assessment (JCA) Dossier Template—Medicinal Products; Directorate-General for Health and Food Safety: Brussels, Belgium, 2024. Available online: https://health.ec.europa.eu/publications/guidance-filling-joint-clinical-assessment-jca-dossier-template-medicinal-products_en (accessed on 8 April 2025).

- Haverinen, J.; Turpeinen, M.; Falkenbach, P.; Reponen, J. Implementation of a new Digi-HTA process for digital health technologies in Finland. Int. J. Technol. Assess. Health Care 2022, 38, e68.

- Moshi, M.R.; Tooher, R.; Merlin, T. Development of a health technology assessment module for evaluating mobile medical applications. Int. J. Technol. Assess. Health Care 2020, 36, 252–261.

- Farah, L.; Borget, I.; Martelli, N.; Vallee, A. Suitability of the current health technology assessment of innovative artificial intelligence-based medical devices: Scoping literature review. J. Med. Internet Res. 2024, 26, e51514.

- Coalition for Health AI. Responsible AI Guide (CHAI); Coalition for Health AI: Boston, MA, USA, 2024. Available online: https://assets.ctfassets.net/7s4afyr9pmov/6e7PrdrsNTQ5FjZ4uyRjTW/c4070131c523d4e1db26105aa51f087d/CHAI_Responsible-AI-Guide.pdf (accessed on 14 April 2025).

- EUR-Lex. Regulation (EU) 2017/745 of the European Parliament and of the Council; European Union: Brussels, Belgium, 2017. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32017R0745 (accessed on 14 April 2025).

- EUR-Lex. Regulation (EU) 2017/746 of the European Parliament and of the Council; European Union: Brussels, Belgium, 2017. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32017R0746 (accessed on 14 April 2025).

- Directive (EU) 2024/2853 of the European Parliament and of the Council of 23 October 2024 on Liability for Defective Products and Repealing Council Directive 85/374/EEC (Text with EEA Relevance); European Union: Brussels, Belgium, 2024. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A32024L2853 (accessed on 14 April 2025).

- EUR-Lex. Regulation (EU) No 536/2014 of the European Parliament and of the Council; European Union: Brussels, Belgium, 2014. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32014R0536 (accessed on 14 April 2025).

- EUR-Lex. Commission Implementing Regulation (EU) 2024/1381. 2024. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L_202401381 (accessed on 14 April 2025).

- Commission Nationale de l’Informatique et des Libertés. Self-Assessment Guide for Artificial Intelligence (AI) Systems. Available online: https://www.cnil.fr/en/self-assessment-guide-artificial-intelligence-ai-systems (accessed on 7 April 2025).

- European Commission. Reform of the EU Pharmaceutical Legislation. 2023. Available online: https://health.ec.europa.eu/medicinal-products/legal-framework-governing-medicinal-products-human-use-eu/reform-eu-pharmaceutical-legislation_en (accessed on 7 April 2025).

- European Commission. Regulation (EU) 2025/327 of the European Parliament and of the Council on the European Health Data Space and Amending Directive 2011/24/EU and Regulation (EU) 2024/2847; European Union: Brussels, Belgium, 2025. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L_202500327 (accessed on 14 April 2025).

- EUR-Lex. Regulation (EU) 2016/679 of the European Parliament and of the Council; European Union: Brussels, Belgium, 2016; Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 (accessed on 14 April 2025).

- U.S. Department of Health and Human Services. Health Insurance Portability and Accountability Act of 1996; ASPE: Washington, DC, USA, 1996. Available online: http://aspe.hhs.gov/reports/health-insurance-portability-accountability-act-1996 (accessed on 14 April 2025).

- Office of the Commissioner. Regulations: Good Clinical Practice and Clinical Trials, 14 January 2021. Available online: https://www.fda.gov/science-research/clinical-trials-and-human-subject-protection/regulations-good-clinical-practice-and-clinical-trials (accessed on 9 April 2025).

- Office of the Commissioner. FDA Issues Comprehensive Draft Guidance for Developers of Artificial Intelligence-Enabled Medical Devices, 6 January 2025. Available online: https://www.fda.gov/news-events/press-announcements/fda-issues-comprehensive-draft-guidance-developers-artificial-intelligence-enabled-medical-devices (accessed on 9 April 2025).

- American Legislative Exchange Council. Product Liability Act, 1 January 2012. Available online: https://alec.org/model-policy/product-liability-act/ (accessed on 9 April 2025).

- European Commission. Notified Bodies. Available online: https://single-market-economy.ec.europa.eu/single-market/goods/building-blocks/notified-bodies_en (accessed on 14 April 2025).

- The European Association of Medical Devices Notified Bodies. Artificial Intelligence in Medical Devices Questionnaire; Team-NB: Sprimont, Belgium, 2024. Available online: https://www.team-nb.org/wp-content/uploads/2024/12/Joint-Team-NB-IG-NB-PositionPaper-AI-in-MD-Questionnaire-V1.1.pdf (accessed on 14 April 2025).

- U.S. Food and Drug Administration. Center for Devices and Radiological Health. Premarket Notification 510(k), 22 August 2024. Available online: https://www.fda.gov/medical-devices/premarket-submissions-selecting-and-preparing-correct-submission/premarket-notification-510k (accessed on 14 April 2025).

- U.S. Food and Drug Administration. Center for Devices and Radiological Health. De Novo Classification Request, 9 August 2024. Available online: https://www.fda.gov/medical-devices/premarket-submissions-selecting-and-preparing-correct-submission/de-novo-classification-request (accessed on 14 April 2025).

- U.S. Food and Drug Administration. Center for Devices and Radiological Health. Premarket Approval (PMA), 15 August 2023. Available online: https://www.fda.gov/medical-devices/premarket-submissions-selecting-and-preparing-correct-submission/premarket-approval-pma (accessed on 14 April 2025).

- U.S. Food and Drug Administration. Center for Devices and Radiological Health. Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations, 6 January 2025. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/artificial-intelligence-enabled-device-software-functions-lifecycle-management-and-marketing (accessed on 14 April 2025).

- Gottlieb, S. New FDA policies could limit the full value of AI in medicine. JAMA Health Forum 2025, 6, e250289.

- United States Congress. 21st Century Cures Act; Public Law 114–255; U.S. Government Publishing Office: Washington, DC, USA, 2016. Available online: https://www.congress.gov/114/plaws/publ255/PLAW-114publ255.pdf (accessed on 10 January 2025).

- The White House. Blueprint for an AI Bill of Rights, 4 October 2022. Available online: https://bidenwhitehouse.archives.gov/ostp/ai-bill-of-rights/ (accessed on 10 January 2025).

- Wyden, R. Algorithmic Accountability Act of 2023; S. 2892, 21 September 2023. Available online: https://www.congress.gov/bill/118th-congress/senate-bill/2892 (accessed on 14 April 2025).

- The Equal Credit Opportunity Act, 6 August 2015. Available online: https://www.justice.gov/crt/equal-credit-opportunity-act-3 (accessed on 14 April 2025).

- State of California—Department of Justice—Office of the Attorney General. California Consumer Privacy Act (CCPA), 15 October 2018. Available online: https://oag.ca.gov/privacy/ccpa (accessed on 14 April 2025).

- Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile; National Institute of Standards and Technology (U.S.): Gaithersburg, MD, USA, 2024.

- Federal Trade Commission. Compliance Plan for OMB Memoranda M-24-10: On Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence, September 2024; Federal Trade Commission: Washington, DC, USA. Available online: https://www.ftc.gov/system/files/ftc_gov/pdf/FTC-AI-Use-Policy.pdf (accessed on 14 April 2025).

- Tobia, K.; Nielsen, A.; Stremitzer, A. When does physician use of AI increase liability? J. Nucl. Med. 2021, 62, 17–21.

- Cestonaro, C.; Delicati, A.; Marcante, B.; Caenazzo, L.; Tozzo, P. Defining medical liability when artificial intelligence is applied on diagnostic algorithms: A systematic review. Front. Med. 2023, 10, 1305756.

- Shumway, D.O.; Hartman, H.J. Medical malpractice liability in large language model artificial intelligence: Legal review and policy recommendations. J. Osteopath. Med. 2024, 124, 287–290.

- Sullivan, H.R.; Schweikart, S.J. Are current tort liability doctrines adequate for addressing injury caused by AI? AMA J. Ethics 2019, 21, E160–E166.

- O’Sullivan, S.; Nevejans, N.; Allen, C.; Blyth, A.; Leonard, S.; Pagallo, U.; Holzinger, K.; Holzinger, A.; Sajid, M.I.; Ashrafian, H. Legal, regulatory, and ethical frameworks for development of standards in artificial intelligence (AI) and autonomous robotic surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2019, 15, e1968.

- The Joint Commission. The Joint Commission Announces Responsible Use of Health Data Certification for U.S. Hospitals, 2 February 2024. Available online: https://www.jointcommission.org/resources/news-and-multimedia/news/2023/12/responsible-use-of-health-data-certification-for-hospitals/ (accessed on 14 April 2025).

- DNV. ISO/IEC 42001 Certification: AI Management System. Available online: https://www.dnv.com/services/iso-iec-42001-artificial-intelligence-ai--250876/ (accessed on 14 April 2025).

- The Joint Commission. Hospital Accreditation. Available online: https://www.jointcommission.org/what-we-offer/accreditation/health-care-settings/hospital/ (accessed on 14 April 2025).

- Accreditation Commission for Health Care. About Accreditation, 16 August 2021. Available online: https://www.achc.org/about-accreditation/ (accessed on 14 April 2025).

- NCQA. Health Care Accreditation, Health Plan Accreditation Organization—NCQA, 18 December 2017. Available online: https://www.ncqa.org/ (accessed on 14 April 2025).

- World Health Organization. Health Care Accreditation and Quality of Care: Exploring the Role of Accreditation and External Evaluation of Health Care Facilities and Organizations, 14 October 2022. Available online: https://www.who.int/publications/i/item/9789240055230 (accessed on 14 April 2025).

- Peeters, G.; Vinck, I.; Vermeyen, K.; de Walcque, C.; Seuntjens, B. Comparative Study of Hospital Accreditation Programs in Europe; Federaal Kenniscentrum voor de Gezondheidszorg: Brussels, Belgium, 2008.

- International Society for Quality in Health Care External Evaluation Association. International Accreditation Programme. Available online: https://ieea.ch/ (accessed on 14 April 2025).

- Bond, R.R.; Mulvenna, M.D.; Potts, C.; O’Neill, S.; Ennis, E.; Torous, J. Digital transformation of mental health services. npj Ment. Health Res. 2023, 2, 13.

- Rajpurkar, P.; Topol, E.J. A clinical certification pathway for generalist medical AI systems. Lancet 2025, 405, 20.

- American Board of Medical Specialties. What Is ABMS Board Certification?, 10 February 2021. Available online: https://www.abms.org/board-certification/ (accessed on 14 April 2025).

- Washington Medical Commission. Physician and Surgeon. Available online: https://wmc.wa.gov/licensing/licensing-requirements/physician-and-surgeon (accessed on 14 April 2025).

- American Board of Family Medicine. ABFM Family Medicine Board Review. 2025. Available online: https://www.boardvitals.com/family-medicine-board-review (accessed on 14 April 2025).

- ACCME. Accreditation Council for Continuing Medical Education, 30 April 2024. Available online: https://accme.org/ (accessed on 14 April 2025).

- EU AI Act Compliance Checker. Available online: https://artificialintelligenceact.eu/assessment/eu-ai-act-compliance-checker/ (accessed on 14 April 2025).

- University of Washington Medicine Huddle. Generative AI at UW Medicine, 26 August 2024. Available online: https://huddle.uwmedicine.org/genai/ (accessed on 14 April 2025).

- Hugging Face. The AI Community Building the Future. Available online: https://huggingface.co (accessed on 14 April 2025).

- Committee for Medicinal Products for Human Use (CHMP) Methodology Working Party. Reflection Paper on the Use of Artificial Intelligence (AI) in the Medicinal Product Lifecycle, 9 September 2024. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline/reflection-paper-use-artificial-intelligence-ai-medicinal-product-lifecycle_en.pdf (accessed on 14 April 2025).

- Dospinescu, N.; Dospinescu, O. A Managerial Approach on Organisational Culture’s Influence over the Authenticity at Work; Editura Universităţii “Alexandru Ioan Cuza” din Iaşi: Iași, Romania, 2022. Available online: https://www.ceeol.com/search/chapter-detail?id=1176431 (accessed on 27 May 2025).

- EU Grants. How to Complete Your Ethics Self-Assessment, 13 July 2021. Available online: https://ec.europa.eu/info/funding-tenders/opportunities/docs/2021-2027/common/guidance/how-to-complete-your-ethics-self-assessment_en.pdf (accessed on 14 April 2025).

- Schmidt, J.; Schutte, N.M.; Buttigieg, S.; Novillo-Ortiz, D.; Sutherland, E.; Anderson, M.; de Witte, B.; Peolsson, M.; Unim, B.; Pavlova, M.; et al. Mapping the regulatory landscape for artificial intelligence in health within the European Union. npj Digit. Med. 2024, 7, 229.

- Joint Commission International. Joint Commission International Accreditation Standards for Hospitals Including Standards for Academic Medical Center Hospitals; Joint Commission International: Oakbrook Terrace, IL, USA, 2025. Available online: https://www.jointcommissioninternational.org/-/media/jci/jci-documents/accreditation/hospital-and-amc/jcih24_standards-only.pdf (accessed on 14 April 2025).

- Association of American Medical Colleges. Guide to Evaluating Vendors on AI Capabilities and Offerings and Guide to Assessing Your Institution’s Readiness for Implementing AI in Selection. Available online: https://www.aamc.org/media/81196/download (accessed on 14 April 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jenko, S.; Papadopoulou, E.; Kumar, V.; Overman, S.S.; Krepelkova, K.; Wilson, J.; Dunbar, E.L.; Spice, C.; Exarchos, T. Artificial Intelligence in Healthcare: How to Develop and Implement Safe, Ethical and Trustworthy AI Systems. AI 2025, 6, 116. https://doi.org/10.3390/ai6060116
