Evaluating a Hybrid LLM Q-Learning/DQN Framework for Adaptive Obstacle Avoidance in Embedded Robotics

Abstract

1. Introduction

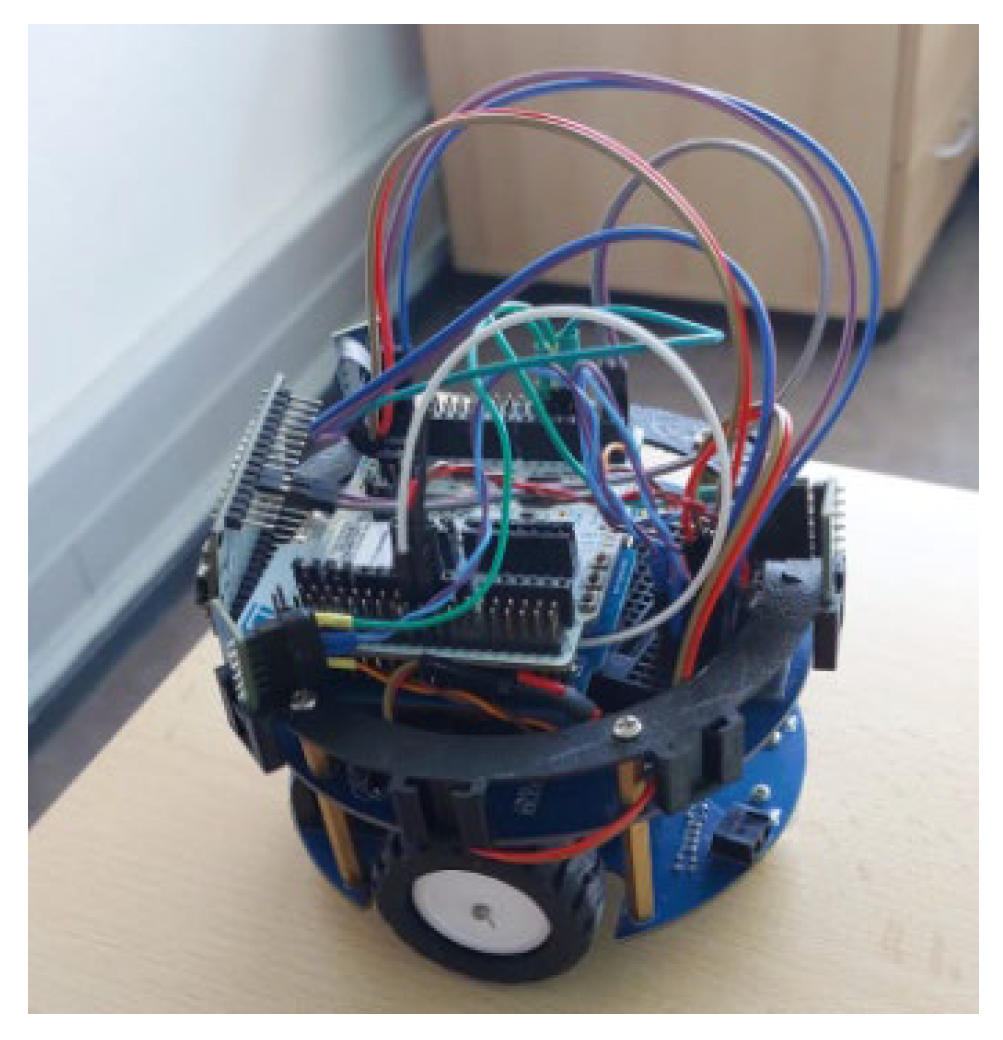

2. System Architecture

3. DQN and Q-Learning for Obstacle Avoidance

3.1. Q-Learning

- Q(s,a) is the current Q-value for state s and action a;

- α is the learning rate (controls how much new information overrides old information);

- R(s,a) is the immediate reward for taking action (a) in state (s);

- γ is a discount factor (weighs the importance of future rewards vs. immediate rewards);

- max_a′ Q(s′, a′) is the maximum Q-value over all actions a′ available in the next state s′.

- Exploitation: Choosing the action with the highest Q-value to maximize the expected return under the current estimates.
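With the symbols defined above, the standard Q-learning update rule can be written as follows. The worked line underneath uses α = 0.1 and γ = 0.9 from the hyperparameter table; the values Q(s,a) = 0, R(s,a) = 1, and max Q(s′,a′) = 2 are illustrative assumptions, not measurements.

```latex
Q(s,a) \leftarrow Q(s,a) + \alpha \left[ R(s,a) + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]

% Illustrative step: Q(s,a) = 0, R(s,a) = 1, \max_{a'} Q(s',a') = 2
Q(s,a) \leftarrow 0 + 0.1 \left[ 1 + 0.9 \cdot 2 - 0 \right] = 0.28
```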

- Exploration: Randomly selecting an action to discover better strategies and to improve long-term performance.

- State Space: (s) = [dfront, dleft, dright], with each distance discretized into “Close”, “Medium”, and “Far” bins (3³ = 27 discrete states), where:

- dfront = Distance to the nearest obstacle in front.

- dleft = Distance to the nearest obstacle on the left.

- dright = Distance to the nearest obstacle on the right.

- Action Space: A = {Move Forward, Turn Left, Turn Right, Stop}

1. Initialize the Q-table:

- Initialize the Q-table with zeros for all state-action pairs.

- Set the exploration rate ε = 1.0 (initial probability of random actions).

2. Training loop. For each training episode:

- Reset the environment → Set initial state (s).

- Loop for each time step until the episode ends:

  - 2.1 Choose Action:

    - With probability ε, choose a random action (a) (exploration).

    - Otherwise, choose the action with the highest Q-value (exploitation).

  - 2.2 Execute Action & Observe Reward:

    - Perform action (a) in the environment.

    - Observe reward (r) and next state (s′).

  - 2.3 Update Q-Table (Standard Q-learning Update Rule): Q(s,a) ← Q(s,a) + α [r + γ max_a′ Q(s′,a′) − Q(s,a)], where:

    - α → Learning rate (controls how much new experience overrides old values).

    - γ → Discount factor (weighs future rewards vs. immediate rewards).

  - 2.4 Decay Exploration Rate:

    - εdecay = 0.995

    - εmin = 0.01 (ensures some exploration).

  - 2.5 Update State:

    - Set (s) = (s′).

- Repeat until the episode ends (collision or max steps reached).
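The training procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' firmware. The bin thresholds `CLOSE_MM` and `FAR_MM`, the multiplicative form of the ε decay, the `max_steps` default, and the `env` object with `reset()` and `step()` methods are all assumptions introduced here. α, γ, εdecay, and εmin take the values given in the text.

```python
import random

ACTIONS = ["Move Forward", "Turn Left", "Turn Right", "Stop"]
N_BINS = 3  # "Close", "Medium", "Far"

# Hypothetical bin edges in millimetres; the source does not state them.
CLOSE_MM, FAR_MM = 200, 600

ALPHA, GAMMA = 0.1, 0.9            # learning rate and discount factor
EPS_DECAY, EPS_MIN = 0.995, 0.01   # exploration decay and floor


def discretize(distance_mm):
    """Map one distance reading to a bin index (0=Close, 1=Medium, 2=Far)."""
    if distance_mm < CLOSE_MM:
        return 0
    if distance_mm < FAR_MM:
        return 1
    return 2


def encode_state(d_front, d_left, d_right):
    """Combine the three bin indices into one Q-table row index in [0, 27)."""
    return 9 * discretize(d_front) + 3 * discretize(d_left) + discretize(d_right)


def new_q_table():
    """Step 1: a zero-initialized table with one row per state."""
    return [[0.0] * len(ACTIONS) for _ in range(N_BINS ** 3)]


def choose_action(q_table, state, epsilon, rng=random):
    """Step 2.1: epsilon-greedy selection over the row for `state`."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))   # exploration
    row = q_table[state]
    return row.index(max(row))               # exploitation


def q_update(q_table, s, a, r, s_next):
    """Step 2.3: standard Q-learning update."""
    target = r + GAMMA * max(q_table[s_next])
    q_table[s][a] += ALPHA * (target - q_table[s][a])


def decay_epsilon(epsilon):
    """Step 2.4: multiplicative decay with a floor (assumed form)."""
    return max(EPS_MIN, epsilon * EPS_DECAY)


def run_episode(env, q_table, epsilon, max_steps=200):
    """One episode; `env` is a hypothetical object whose reset() returns a
    state index and whose step(a) returns (next_state, reward, done)."""
    s = env.reset()
    for _ in range(max_steps):
        a = choose_action(q_table, s, epsilon)
        s_next, r, done = env.step(a)
        q_update(q_table, s, a, r, s_next)
        epsilon = decay_epsilon(epsilon)
        s = s_next                            # step 2.5
        if done:                              # collision or goal
            break
    return epsilon
```

Encoding the three bins as a single integer keeps the table at 27 × 4 entries, which is small enough to hold in microcontroller RAM.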

3.2. DQN for Obstacle Avoidance

- The STM32WB55RG collects data (states, actions, rewards) from the sensors and stores them:

  - Initialize the ToF sensors.

  - For each step in the environment:

    - Read sensor data → Get state (s) = [dfront, dleft, dright];

    - Select an action (a) randomly or based on a simple Q-table;

    - Execute the action and observe the reward (r) and the next state (s′);

    - Store (s, a, r, s′) in memory (e.g., SD card, Flash, or send via UART).

- A computer (PC with GPU) trains the DQN model using the collected data.

- The trained network is deployed back to the STM32WB55RG, where it runs only inference for decision-making:

  - Load the trained neural network (stored in Flash or loaded via UART).

  - For each step:

    - Read sensor data → Get state (s);

    - Run the trained DQN model to get action (a);

    - Execute the action (motor movement).

  - Repeat until the task is completed.
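The two on-device roles described above, logging transitions and running inference only, can be illustrated with a small host-side Python sketch. The 29-byte record layout, the function names, and the list-of-rows weight format are assumptions made for illustration. The source specifies neither a storage format nor a network architecture, and the deployed code would run on the microcontroller rather than in Python.

```python
import struct

# Hypothetical record layout for one transition (s, a, r, s'):
# 3 x float32 distances, 1 x uint8 action, 1 x float32 reward,
# 3 x float32 distances. Little-endian, no padding: 29 bytes.
RECORD = struct.Struct("<3fBf3f")


def pack_transition(s, a, r, s_next):
    """Serialize one transition for SD/Flash storage or UART transfer."""
    return RECORD.pack(*s, a, r, *s_next)


def unpack_transition(buf):
    """Inverse of pack_transition, used on the PC before training."""
    v = RECORD.unpack(buf)
    return v[0:3], v[3], v[4], v[5:8]


def dense(x, weights, biases, relu=True):
    """One fully connected layer; `weights` holds one row per output unit."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


def greedy_action(state, layers):
    """Inference only: forward pass, then argmax over the Q-value outputs.
    `layers` is a list of (weights, biases) pairs; the last layer is linear."""
    x = list(state)
    for i, (w, b) in enumerate(layers):
        x = dense(x, w, b, relu=(i < len(layers) - 1))
    return x.index(max(x))
```

A fixed-size binary record keeps the logging path simple on the microcontroller: each step appends the same number of bytes, and the PC can read the file back in 29-byte chunks.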

4. Hybrid Learning Approach

- Q-learning/DQN runs locally on the STM32WB55RG:

  - The robot observes state (s) using ToF sensors.

  - The decision function for LLM prompt selection, implemented on the STM32WB55RG, evaluates the robot’s current state by analyzing key parameters, such as obstacle proximity, recent states, Q-values, and action history.

  - The Q-table (for Q-learning) or the predicted Q-value (for the DQN) is queried to select an action (a) using an ε-greedy policy.

  - The robot executes action (a) and observes the reward (r) and the next state (s′).

  - If Q-learning is used, the Q-table is updated using the standard Bellman equation: Q(s,a) ← Q(s,a) + α [r + γ max_a′ Q(s′,a′) − Q(s,a)].

- If the decision function detects challenges (e.g., repeated collisions, deadlocks, or ineffective learning), the STM32WB55RG triggers the RPi5 LLM for strategic assistance.

- The Raspberry Pi 5 structures a prompt including:

  - Current state (s), recent actions (a), and observed reward (r);

  - Current Q-values (Q-learning) or Q-network outputs (DQN) for (s).

- The prompt is sent to the RPi5 LLM.

- The LLM responds with a strategic decision:

  - Option 1: If the LLM recommends a new action (e.g., “Turn left instead of moving forward”), the STM32WB55RG executes it directly;

  - Option 2: If the LLM adjusts Q-values (for Q-learning) (e.g., “Increase reward for turning left in state (s′)”), the STM32WB55RG updates its model accordingly and triggers a new action.

- To ensure the LLM-generated decision is effective, a feedback control mechanism is introduced:

  - Reward comparison: After executing the LLM-suggested action, the new reward (r′) is compared to the previous reward (r):

    - If (r′ > r) → The LLM decision is considered effective;

    - If (r′ ≤ r) → The system sends a correction prompt to the LLM for refinement.

- The updated Q-values influence future learning and actions.

- As the robot learns from refined data, Q-learning/DQN gradually becomes more autonomous, reducing dependence on the LLM.
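The two response options and the reward-comparison check can be sketched as follows. The response dictionary format, the function names, and the `ask_llm` and `execute` callbacks are hypothetical. The source describes the behaviour but not an interface, so this is one possible reading rather than the authors' implementation.

```python
def apply_llm_response(q_table, state, response):
    """Apply one LLM response and return the action index to execute.

    `response` is a hypothetical dict in one of two shapes:
      {"type": "action", "action": 1}                          (Option 1)
      {"type": "q_adjust", "state": 3, "action": 1, "delta": 0.5}  (Option 2)
    """
    if response["type"] == "q_adjust":
        # Option 2: adjust a Q-value, then pick a new greedy action.
        q_table[response["state"]][response["action"]] += response["delta"]
        row = q_table[state]
        return row.index(max(row))
    # Option 1: execute the recommended action directly.
    return response["action"]


def llm_decision_effective(prev_reward, new_reward):
    """Reward comparison: effective only if r' > r."""
    return new_reward > prev_reward


def assisted_step(q_table, state, prev_reward, ask_llm, execute):
    """One LLM-assisted step.

    ask_llm(state, q_values) -> response dict (hypothetical callback)
    execute(action) -> (new_reward, next_state) (hypothetical callback)
    Returns (action, new_reward, next_state, needs_correction), where
    needs_correction signals that a correction prompt should be sent.
    """
    response = ask_llm(state, list(q_table[state]))
    action = apply_llm_response(q_table, state, response)
    new_reward, next_state = execute(action)
    needs_correction = not llm_decision_effective(prev_reward, new_reward)
    return action, new_reward, next_state, needs_correction
```

Returning a flag instead of calling the LLM again inside the function leaves the retry policy (how many correction prompts to send, and when to give up) to the caller.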

5. Decision Function for LLM Prompt Selection

- If an obstacle is critically close, the system prioritizes a high-risk obstacle avoidance prompt;

- If the robot repeats the same state, it triggers a deadlock resolution prompt.
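The two rules above can be sketched as a small selection function. The threshold `CRITICAL_MM`, the window length `REPEAT_LIMIT`, and the prompt labels are assumptions. The source names the prompt categories but gives no numeric trigger values.

```python
from collections import deque

CRITICAL_MM = 100   # hypothetical "critically close" distance threshold
REPEAT_LIMIT = 5    # hypothetical number of identical states that counts as a deadlock


def select_prompt(distances_mm, recent_states):
    """Return a prompt label, or None if no LLM assistance is needed.

    distances_mm: (front, left, right) raw distance readings.
    recent_states: discretized states, most recent last, including the current one.
    """
    # Rule 1: proximity takes priority over everything else.
    if min(distances_mm) < CRITICAL_MM:
        return "high_risk_obstacle_avoidance"
    # Rule 2: the same state repeated REPEAT_LIMIT times in a row.
    states = list(recent_states)
    if len(states) >= REPEAT_LIMIT and len(set(states[-REPEAT_LIMIT:])) == 1:
        return "deadlock_resolution"
    return None


# Usage: a bounded history keeps memory use constant on the microcontroller.
history = deque([(1, 1, 1)] * REPEAT_LIMIT, maxlen=REPEAT_LIMIT)
label = select_prompt((400, 500, 500), history)
```

Checking proximity first reflects the stated priority: a critically close obstacle selects the high-risk prompt even when the state history also indicates a deadlock.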

6. LLM Prompting Strategies for the Robot

6.1. LLM Prompting with Q-Learning

- Navigation Optimization

- Deadlock Resolution

- Q-Table Enhancement

- Adaptive Exploration vs. Exploitation

- High-Risk Obstacle Avoidance

- Adaptive Speed Adjustment

- Learning Rate Optimization

6.2. LLM Prompting with DQN

- Navigation Optimization

- Deadlock Resolution

- High-Risk Obstacle Avoidance

- Adaptive Speed Adjustment

7. Preparing LLM Prompts

8. LLM on Raspberry Pi 5

9. Comparison of Q-Learning/DQN vs. Q-Learning/DQN + LLM

10. Advantages of the Hybrid Q-Learning + LLM Approach over Classical and Deep RL Methods

10.1. Limitations of Classical Path Planning and RL Methods

10.2. Hardware Constraints of Deep RL on STM32WB55RG

10.3. Limitations of Concurrent LLM and Deep RL Execution on Raspberry Pi 5 in Real-World Embedded Robotics

10.4. Advantages of the Hybrid Q-Learning + LLM Approach

11. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pang, W.; Zhu, D.; Sun, C. Multi-AUV Formation Reconfiguration Obstacle Avoidance Algorithm Based on Affine Transformation and Improved Artificial Potential Field Under Ocean Currents Disturbance. IEEE Trans. Autom. Sci. Eng. 2023, 21, 1469–1487.

- Guo, B.; Guo, N.; Cen, Z. Obstacle Avoidance with Dynamic Avoidance Risk Region for Mobile Robots in Dynamic Environments. IEEE Robot. Autom. Lett. 2022, 7, 5850–5857.

- Zhai, L.; Liu, C.; Zhang, X.; Wang, C. Local Trajectory Planning for Obstacle Avoidance of Unmanned Tracked Vehicles Based on Artificial Potential Field Method. IEEE Access 2024, 12, 19665–19681.

- Li, J.; Xiong, X.; Yan, Y.; Yang, Y. A Survey of Indoor UAV Obstacle Avoidance Research. IEEE Access 2023, 11, 51861–51891.

- Zhang, C.; Zhou, L.; Li, Y.; Fan, Y. A dynamic path planning method for social robots in the home environment. Electronics 2020, 9, 1173.

- Yang, B.; Yan, J.; Cai, Z.; Ding, Z.; Li, D.; Cao, Y.; Guo, L. A novel heuristic emergency path planning method based on vector grid map. ISPRS Int. J. Geo-Inf. 2021, 10, 370.

- Xiao, S.; Tan, X.; Wang, J. A simulated annealing algorithm and grid map-based UAV coverage path planning method for 3D reconstruction. Electronics 2021, 10, 853.

- Guo, J.; Liu, L.; Liu, Q.; Qu, Y. An Improvement of D* Algorithm for Mobile Robot Path Planning in Partial Unknown Environment. In Proceedings of the 2009 Second International Conference on Intelligent Computation Technology and Automation, Changsha, China, 10–11 October 2009.

- Lin, T. A path planning method for mobile robot based on A and antcolony algorithms. J. Innov. Soc. Sci. Res. 2020, 7, 157–162.

- Wang, J.; Zhang, Y.; Xia, L. Adaptive Genetic Algorithm Enhancements for Path Planning of Mobile Robots. In Proceedings of the 2010 International Conference on Measuring Technology and Mechatronics Automation, Changsha, China, 13–14 March 2010; pp. 416–419.

- Saska, M.; Macăs, M.; Přeučil, L.; Lhotská, L. Robot path planning using particle swarm optimization of Ferguson splines. In Proceedings of the IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Prague, Czech Republic, 20–22 September 2006; pp. 833–839.

- Li, C.-L.; Wang, N.; Wang, J.-F.; Xu, S.-Y. A Path Planing Algorithm for Mobile Robot Based on Particle Swarm. In Proceedings of the 2023 2nd International Symposium on Control Engineering and Robotics (ISCER), Hangzhou, China, 17–19 February 2023; pp. 319–322.

- Zhao, J.-P.; Gao, X.-W.; Liu, J.-G.; Chen, Y.-Q. Research of path planning for mobile robot based on improved ant colony optimization algorithm. In Proceedings of the 2010 2nd International Conference on Advanced Computer Control, Shenyang, China, 27–29 March 2010; pp. 241–245.

- Rasheed, J.; Irfan, H. Q-Learning of Bee-Like Robots through Obstacle Avoidance. In Proceedings of the 2024 12th International Conference on Control, Mechatronics and Automation (ICCMA), London, UK, 11–13 November 2024; pp. 166–170.

- Mohanty, P.K.; Saurabh, S.; Yadav, S.; Pooja; Kundu, S. A Q-Learning Strategy for Path Planning of Robots in Unknown Terrains. In Proceedings of the 2022 1st International Conference on Sustainable Technology for Power and Energy Systems (STPES), Srinagar, India, 4–6 July 2022; pp. 1–6.

- Kumaar, A.A.N.; Kochuvila, S. Mobile Service Robot Path Planning Using Deep Reinforcement Learning. IEEE Access 2023, 11, 100083–100096.

- Masoud, M.; Hami, T.; Kourosh, F. Path Planning and Obstacle Avoidance of a Climbing Robot Subsystem using Q-learning. In Proceedings of the 2024 12th RSI International Conference on Robotics and Mechatronics (ICRoM), Tehran, Iran, 17–19 December 2024; pp. 149–155.

- Watkins, C.J.C.H. Learning from Delayed Rewards. Ph.D. Thesis, University of Cambridge, Cambridge, UK, 1989.

- Pieters, M.; Wiering, M.A. Q-learning with experience replay in a dynamic environment. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–8.

- Du, H.B.; Zhao, H.; Zhang, J.; Wang, J.; Qi, Q. A Path-Planning Approach Based on Potential and Dynamic Q-Learning for Mobile Robots in Unknown Environment. Mob. Robot. Autom. 2022, 2022, 2540546.

- Hanh, L.D.; Cong, V.D. Path following and avoiding obstacle for mobile robot under dynamic environments using reinforcement learning. J. Robot. Control. 2022, 13, 158–167.

- Gharbi, A. A dynamic reward-enhanced Q-learning approach for efficient path planning and obstacle avoidance of mobile robots. Appl. Comput. Inform. 2024. ahead of print.

- Ribeiro, T.; Gonçalves, F.; Garcia, I.; Lopes, G.; Ribeiro, A.F. Q-Learning for Autonomous Mobile Robot Obstacle Avoidance. In Proceedings of the 2019 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Porto, Portugal, 24–26 April 2019; pp. 1–7.

- Li, X. Path planning in dynamic environments based on Q-learning. In Proceedings of the 5th International Conference on Mechanics, Simulation; Control (ICMSC 2023), San Jose, CA, USA, 24–25 June 2023; Volume 63, pp. 222–230.

- Ha, V.T.; Vinh, V.Q. Experimental Research on Avoidance Obstacle Control for Mobile Robots Using Q-Learning (QL) and Deep Q-Learning (DQL) Algorithms in Dynamic Environments. Actuators 2024, 13, 26.

- Tadele, S.B.; Kar, B.; Wakgra, F.G.; Khan, A.U. Optimization of End-to-End AoI in Edge-Enabled Vehicular Fog Systems: A Dueling-DQN Approach. IEEE Internet Things J. 2025, 12, 843–853.

- Zhou, X.; Han, G.; Zhou, G.; Xue, Y.; Lv, M.; Chen, A. Hybrid DQN-Based Low-Computational Reinforcement Learning Object Detection with Adaptive Dynamic Reward Function and ROI Align-Based Bounding Box Regression. IEEE Trans. Image Process. 2025, 34, 1712–1725.

- Guo, L.; Jia, J.; Chen, J.; Wang, X. QRMP-DQN Empowered Task Offloading and Resource Allocation for the STAR-RIS Assisted MEC Systems. IEEE Trans. Veh. Technol. 2025, 74, 1252–1266.

- Luo, C.; Yang, S.X.; Stacey, D.A. Real-time path planning with deadlock avoidance of multiple cleaning robots. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (Cat. No.03CH37422), Taipei, Taiwan, 14–19 September 2003; Volume 3, pp. 4080–4085.

- Li, G.; Han, X.; Zhao, P.; Hu, P.; Nie, L.; Zhao, X. RoboChat: A Unified LLM-Based Interactive Framework for Robotic Systems. In Proceedings of the 2023 5th International Conference on Robotics, Intelligent Control and Artificial Intelligence (RICAI), Hangzhou, China, 1–3 December 2023; pp. 466–471.

- Zhou, H.; Lin, Y.; Yan, L.; Zhu, J.; Min, H. LLM-BT: Performing Robotic Adaptive Tasks based on Large Language Models and Behavior Trees. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 16655–16661.

- Tan, R.; Lou, S.; Zhou, Y.; Lv, C. Multi-modal LLM-enabled Long-horizon Skill Learning for Robotic Manipulation. In Proceedings of the 2024 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE International Conference on Robotics, Automation and Mechatronics (RAM), Hangzhou, China, 8–11 August 2024; pp. 14–19.

- Shuvo, M.I.R.; Alam, N.; Fime, A.A.; Lee, H.; Lin, X.; Kim, J.-H. A Novel Large Language Model (LLM) Based Approach for Robotic Collaboration in Search and Rescue Operations. In Proceedings of the IECON 2024—50th Annual Conference of the IEEE Industrial Electronics Society, Chicago, IL, USA, 3–6 November 2024; pp. 1–6.

- Alto, V. Building LLM Powered Applications: Create Intelligent Apps and Agents with Large Language Models; Packt Publishing: Birmingham, UK, 2024.

- Auffarth, B. Generative AI with LangChain: Build Large Language Model (LLM) Apps with Python, ChatGPT, and Other LLMs; Packt Publishing: Birmingham, UK, 2024.

- Chen, J.; Yang, Z.; Xu, H.G.; Zhang, D.; Mylonas, G. Multi-Agent Systems for Robotic Autonomy with LLMs. arXiv 2024, arXiv:2505.05762.

- Zu, W.; Song, W.; Chen, R.; Guo, Z.; Sun, F.; Tian, Z.; Pan, W.; Wang, J. Language and Sketching: An LLM-driven Interactive Multimodal Multitask Robot Navigation Framework. arXiv 2024, arXiv:2311.08244.

- Mon-Williams, R.; Li, G.; Long, R.; Du, W.; Lucas, C.G. Embodied large language models enable robots to complete complex tasks in unpredictable environments. Nat. Mach. Intell. 2025, 7, 592–601.

- Tao, Y.; Yang, J.; Ding, D.; Erickson, Z. LAMS: LLM-Driven Automatic Mode Switching for Assistive Teleoperation. In Proceedings of the 2025 20th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Melbourne, Australia, 4–6 March 2025; pp. 242–251.

- Tardioli, D.; Matellán, V.; Heredia, G.; Silva, M.F.; Marques, L. (Eds.) ROBOT2022: Fifth Iberian Robotics Conference, Advances in Robotics; Springer Nature: Berlin, Germany, 2023; Volume 2.

- Zhang, P.; Zeng, G.; Wang, T.; Lu, W. TinyLlama: An Open-Source Small Language Model. arXiv 2024, arXiv:2401.02385.

- Lamaakal, L.; Maleh, Y.; El Makkaoui, K.; Ouahbi, I.; Pławiak, P.; Alfarraj, O.; Almousa, M.; El-Latif, A.A.A. Tiny Language Models for Automation and Control: Overview, Potential Applications, and Future Research Directions. Sensors 2025, 25, 1318.

- Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B: A 7-billion-parameter language model engineered for superior performance and efficiency. arXiv 2023, arXiv:2310.06825.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019.

- Jiao, X.; Yin, Y.; Shang, L.; Jiang, X.; Chen, X.; Li, L.; Wang, F.; Liu, Q. TinyBERT: Distilling BERT for Natural Language Understanding. arXiv 2020, arXiv:1909.10351.

- Su, C.; Wen, J.; Kang, J.; Wang, Y.; Su, Y.; Pan, H.; Zhong, Z.; Hossain, M.S. Hybrid RAG-Empowered Multimodal LLM for Secure Data Management in Internet of Medical Things: A Diffusion-Based Contract Approach. IEEE Internet Things J. 2025, 12, 13428–13440.

- Seif, R.; Oskoei, M.A. Mobile Robot Path Planning by RRT* in Dynamic Environments. Int. J. Intell. Syst. Appl. 2015, 7, 24–30.

- LaValle, S.M.; Kuffner, J.J. Randomized Kinodynamic Planning. Int. J. Robot. Res. 2001, 20, 378–400.

- Kennedy, J.; Eberhart, R. Chapter 5: Particle Swarm Optimization. In Swarm Intelligence; Morgan Kaufmann Publishers: Burlington, MA, USA, 2001; pp. 287–318. ISBN 978-1558605954.

- Pal, N.S.; Sharma, S. Robot Path Planning using Swarm Intelligence: A Survey. Int. J. Comput. Appl. 2013, 83, 5–12.

- Barraquand, J.; Langlois, B.; Latombe, J.-C. Numerical potential field techniques for robot path planning. IEEE Trans. Syst. Man Cybern. 1992, 22, 224–241.

- Engstrom, L.; Ilyas, A.; Santurkar, S.; Tsipras, D.; Janoos, F.; Rudolph, L.; Madry, A. Implementation Matters in Deep RL: A Case Study on PPO and TRPO. arXiv 2020, arXiv:2005.12729.

- Lu, J.; Wu, X.; Cao, S.; Wang, X.; Yu, H. An Implementation of Actor-Critic Algorithm on Spiking Neural Network Using Temporal Coding Method. Appl. Sci. 2022, 12, 10430.

- Zephyr Project. Nucleo WB55RG—Zephyr Project Documentation. Available online: https://docs.zephyrproject.org/latest/boards/st/nucleo_wb55rg/doc/nucleo_wb55rg.html (accessed on 14 May 2025).

- Badalian, K.; Koch, L.; Brinkmann, T.; Picerno, M.; Wegener, M.; Lee, S.-Y.; Andert, J. LExCI: A framework for reinforcement learning with embedded systems. Appl. Intell. 2024, 54, 8384–8398.

- Dulac-Arnold, G.; Levine, N.; Mankowitz, D.J.; Li, J.; Paduraru, C.; Gowal, S.; Hester, T. Challenges of real-world reinforcement learning: Definitions, benchmarks and analysis. Mach. Learn. 2021, 110, 2419–2468.

| Parameter | Description | Value |
|---|---|---|
| α (Learning Rate) | Controls the weight of new experiences in Q-value updates. Higher values make learning faster but noisier. | 0.1 |
| γ (Discount Factor) | Determines how much future rewards are considered. Closer to 1 means long-term rewards are prioritized. | 0.9 |
| ε (Exploration Rate) | Probability of selecting a random action instead of the best-known action. Decays over time to prioritize exploitation. | Initially 1.0, decays to 0.1 |
| Episodes | Number of training iterations | 10,000 |

| Model | Size (Quantized GGUF/ONNX) | Min RAM Needed | Inference Speed (RPi 5) | Best for | Obstacle Avoidance Suitability and Justification |
|---|---|---|---|---|---|
| TinyLlama 1.1B [41] | ~700 MB (4-bit GGUF) | 1 GB | 0.5–1.2 s/response | Fast real-time decisions | Best choice for fast, real-time navigation decisions on 4 GB RPi 5. |
| Phi-2 (Quantized) [42] | 1.8 GB (4-bit GGUF) | 2 GB | 1.5–3 s/response | Small-scale reasoning | Good for low-power reasoning. |
| DistilGPT-2 [42] | ~350 MB (ONNX) | 512 MB–1 GB | 0.3–1 s/response | Simple rule-based responses | Can work for basic action suggestions, but lacks deep reasoning. |
| GPT-2 Small [42] | ~500 MB (ONNX) | 1 GB | 1–2 s/response | Basic decision-making | Similar to DistilGPT-2; can be used for simple command-based decisions. |
| Mistral 7B (Quantized) [43] | ~3.8 GB (4-bit GGUF) | 5 GB | 2–5 s/response | Better reasoning (8 GB RPi 5 only) | More advanced reasoning, but too slow for real-time use on 4 GB RPi. Works for high-level decision-making on 8 GB RPi 5. |
| DistilBERT [44] | ~300 MB (ONNX) | 512 MB–1 GB | 0.2–0.8 s/response | Fast text-based reasoning | Not suitable for obstacle avoidance. Designed for NLP tasks. |
| TinyBERT [45] | ~120 MB (ONNX) | 256 MB–512 MB | 0.1–0.5 s/response | Super lightweight NLP | Not optimized for real-time decisions. |

| Metric | Q-Learning | Q-Learning + LLM | DQN | DQN + LLM | Improvement (Q → Q + LLM) | Improvement (DQN → DQN + LLM) |
|---|---|---|---|---|---|---|
| Deadlock Recovery Rate (Static) | 55% | 88% | 70% | 92% | +33 pp | +22 pp |
| Deadlock Recovery Rate (Dynamic) | 40% | 81% | 62% | 89% | +41 pp | +27 pp |
| Time to Reach Goal (Static) | 45 s | 30 s | 35 s | 25 s | −33% | −29% |
| Time to Reach Goal (Dynamic) | 58 s | 38 s | 44 s | 31 s | −34% | −30% |
| Collision Rate (Static) | 18% | 7% | 12% | 5% | −11 pp | −7 pp |
| Collision Rate (Dynamic) | 25% | 11% | 17% | 8% | −14 pp | −9 pp |
| Successful Navigation Attempts (Static) | 72% | 91% | 85% | 94% | +19 pp | +9 pp |
| Successful Navigation Attempts (Dynamic) | 66% | 87% | 78% | 91% | +21 pp | +13 pp |
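The improvement columns of the comparison table can be reproduced from the four measured columns. This works under the assumption, inferred from the numbers rather than stated in the source, that rate metrics are reported as percentage-point differences and time metrics as relative changes. The short check below recomputes a few entries; the function names are illustrative.

```python
def point_change(before_pct, after_pct):
    """Difference between two rates, in percentage points."""
    return after_pct - before_pct


def relative_change(before, after):
    """Relative change in percent, rounded to the nearest integer."""
    return round(100 * (after - before) / before)


# Rate metrics (values in %): the improvement is a percentage-point difference.
deadlock_static_q = point_change(55, 88)      # Q-learning -> Q-learning + LLM
collision_dynamic_dqn = point_change(17, 8)   # DQN -> DQN + LLM

# Time metrics (values in seconds): the improvement is a relative change.
time_static_q = relative_change(45, 30)       # Q-learning -> Q-learning + LLM
time_dynamic_dqn = relative_change(44, 31)    # DQN -> DQN + LLM

print(deadlock_static_q, collision_dynamic_dqn, time_static_q, time_dynamic_dqn)
```

Read this way, a collision rate that falls from 18% to 7% is an 11-point drop, which corresponds to a relative reduction of about 61%.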

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farkh, R.; Oudinet, G.; Deleruyelle, T. Evaluating a Hybrid LLM Q-Learning/DQN Framework for Adaptive Obstacle Avoidance in Embedded Robotics. AI 2025, 6, 115. https://doi.org/10.3390/ai6060115
