EEG-Based Assessment of Cognitive Resilience via Interpretable Machine Learning Models

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

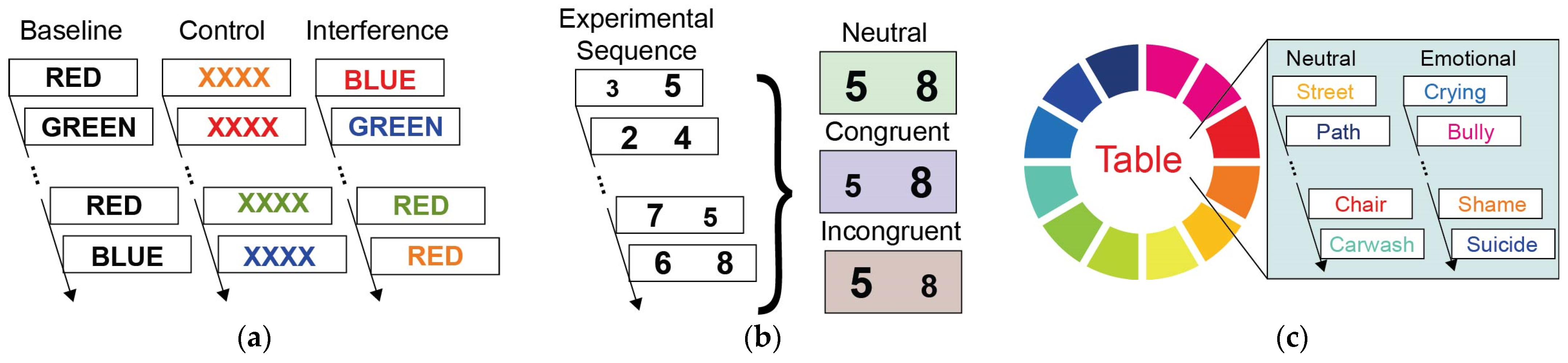

2.2. Experimental Design

2.3. Data Acquisition and Preprocessing

2.4. Segmentation and Feature Extraction

2.5. Classification

2.6. Model Evaluation and Hyperparameter Tuning

2.7. Feature Importance for Model Interpretability

3. Results

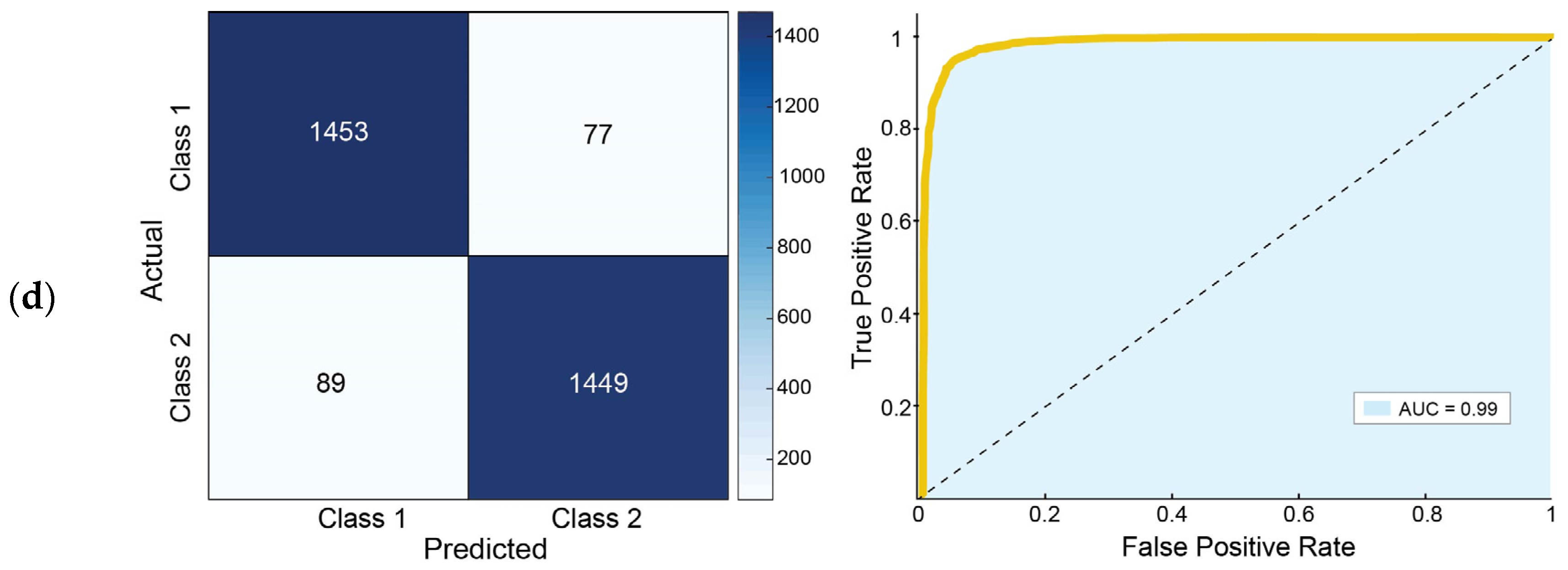

3.1. Classification Performance

3.2. SHAP Analysis for Model Interpretability

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AdaBoost | Adaptive Boosting |
| BCI | Brain–Computer Interfaces |
| EEG | Electroencephalography |
| ES | Stroop Emotion Test |
| HN-SEALs | Hellenic Navy SEa Air Land |
| ML | Machine Learning |
| PTSD | Post-Traumatic Stress Disorder |
| SCWT | Stroop Color Word Test |
| SHAP | Shapley Additive Explanations |
| SNT | Stroop Number Test |
| SVM | Support Vector Machine |
| XGBoost | eXtreme Gradient Boosting |

| Classifier | Hyperparameter | Value |
|---|---|---|
| Decision Tree | Maximum Tree Depth | Unlimited |
| Decision Tree | Minimum Samples per Split | 2 |
| Random Forest | Maximum Tree Depth | Unlimited |
| Random Forest | Minimum Samples per Split | 2 |
| Random Forest | Number of Trees | 200 |
| AdaBoost | Learning Rate | 0.1 |
| AdaBoost | Maximum Tree Depth | 6 |
| AdaBoost | Number of Boosting Rounds | 200 |
| XGBoost | Learning Rate | 1.0 |
| XGBoost | Number of Weak Learners | 200 |
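As an illustration of how the hyperparameter table above could be turned into model objects, the following is a minimal sketch, not the authors' implementation. The values in `TABLE_HYPERPARAMS` are transcribed from the table as printed; the choice of scikit-learn and xgboost, the helper name `build_classifiers`, and the mapping of table labels to keyword arguments (for example, "Number of Boosting Rounds" to `n_estimators`) are assumptions.

```python
# Values transcribed from the hyperparameter table, keyed by the table's labels.
# "Unlimited" depth is represented as None.
TABLE_HYPERPARAMS = {
    "Decision Tree": {"Maximum Tree Depth": None, "Minimum Samples per Split": 2},
    "Random Forest": {
        "Maximum Tree Depth": None,
        "Minimum Samples per Split": 2,
        "Number of Trees": 200,
    },
    "AdaBoost": {
        "Learning Rate": 0.1,
        "Maximum Tree Depth": 6,
        "Number of Boosting Rounds": 200,
    },
    "XGBoost": {"Learning Rate": 1.0, "Number of Weak Learners": 200},
}


def build_classifiers(params=TABLE_HYPERPARAMS):
    """Hypothetical helper: map the tabulated values onto estimator objects.

    The label-to-keyword mapping below is an assumption, not taken from the paper.
    """
    # Imported lazily so the table dict is usable without scikit-learn installed.
    from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
    from sklearn.tree import DecisionTreeClassifier

    dt = params["Decision Tree"]
    rf = params["Random Forest"]
    ada = params["AdaBoost"]
    xgb = params["XGBoost"]

    models = {
        "Decision Tree": DecisionTreeClassifier(
            max_depth=dt["Maximum Tree Depth"],
            min_samples_split=dt["Minimum Samples per Split"],
        ),
        "Random Forest": RandomForestClassifier(
            n_estimators=rf["Number of Trees"],
            max_depth=rf["Maximum Tree Depth"],
            min_samples_split=rf["Minimum Samples per Split"],
        ),
        "AdaBoost": AdaBoostClassifier(
            # Base learner passed positionally because its keyword name
            # differs between scikit-learn versions.
            DecisionTreeClassifier(max_depth=ada["Maximum Tree Depth"]),
            n_estimators=ada["Number of Boosting Rounds"],
            learning_rate=ada["Learning Rate"],
        ),
    }

    try:
        from xgboost import XGBClassifier
    except ImportError:
        return models  # xgboost is treated as optional in this sketch

    models["XGBoost"] = XGBClassifier(
        learning_rate=xgb["Learning Rate"],
        n_estimators=xgb["Number of Weak Learners"],
    )
    return models
```

Keeping the tabulated values in a plain dictionary, separate from the estimator construction, makes it easy to check the configuration against the table before any library-specific mapping is applied.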

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Decision Tree | 0.86 ** | 0.86 | 0.86 | 0.86 |
| Random Forest | 0.94 *** | 0.94 | 0.93 | 0.94 |
| AdaBoost | 0.87 ** | 0.87 | 0.86 | 0.87 |
| XGBoost | 0.95 *** | 0.95 | 0.95 | 0.94 |
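For readers who want to see how the four metrics in the table above relate to one another, here is a minimal sketch that computes accuracy, precision, recall, and F1-score from binary confusion-matrix counts. The function name `binary_metrics` and the example counts are hypothetical and are not data from the study; whether the reported scores use per-class or averaged definitions is not stated here, so the single-positive-class form below is an assumption.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 for one positive class.

    tp, fp, fn, tn are the true-positive, false-positive, false-negative
    and true-negative counts of a binary confusion matrix.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against empty denominators when a class is never predicted or absent.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Illustrative counts only (hypothetical, not taken from the paper).
example = binary_metrics(tp=40, fp=10, fn=5, tn=45)
```

Because F1 is a harmonic mean, it sits between precision and recall and is pulled toward the smaller of the two, which is why the three columns stay close together when precision and recall are nearly equal.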

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Kakkos, I.; Tzavellas, E.; Feleskoura, E.; Mourtakos, S.; Kontopodis, E.; Vezakis, I.; Kalamatianos, T.; Synadinakis, E.; Matsopoulos, G.K.; Kalatzis, I.; Ventouras, E.M.; Skouroliakou, A. EEG-Based Assessment of Cognitive Resilience via Interpretable Machine Learning Models. AI 2025, 6, 112. https://doi.org/10.3390/ai6060112