Embedding Fear in Medical AI: A Risk-Averse Framework for Safety and Ethics

Abstract

1. Introduction

1.1. Context and Motivation

1.2. Aim and Scope

- (1) Can embedding a “fear instinct” in a medical AI system significantly enhance patient safety, and how would such a mechanism be implemented and evaluated in practice? (We hypothesize that it can, by reducing harmful errors.)

- (2) What would an AI “fear” mechanism look like operationally, and how can it be integrated into existing AI architectures? (We propose a framework and draw parallels to human fear responses.)

- (3) What are the ethical, technical, and practical implications of such a system in healthcare? (We examine potential benefits, risks, and requirements for deployment.)

1.3. Ethical and Practical Imperatives for Risk-Sensitive Medical AI

1.4. Paper Structure

2. Conceptual Foundations

2.1. Fear in Biological and Artificial Systems

2.2. Fear in Artificial Systems

2.3. Translating Genuine Fear into Medical AI

2.4. “Fearless” Doctor

- Lack of sentience: Current AI systems, including medical AI agents, are not considered sentient and do not have consciousness or emotional experiences [52].

- Absence of biological mechanisms: AI systems lack the biological structures and processes that generate fear responses in humans and animals [49].

- Algorithmic decision-making: Medical AI agents operate based on programmed algorithms and data analysis, not emotional responses [53].

- No self-preservation instinct: Unlike living beings, AI systems do not have an innate drive for self-preservation that would trigger fear responses [54].

- Lack of personal consequences: AI systems do not experience personal consequences from their actions, which often drives fear in humans [55].

2.5. “Good” Doctor

2.6. Technical Components of Fear in AI Medical Agents

2.6.1. Integrated Decision-Making and Conflict Resolution

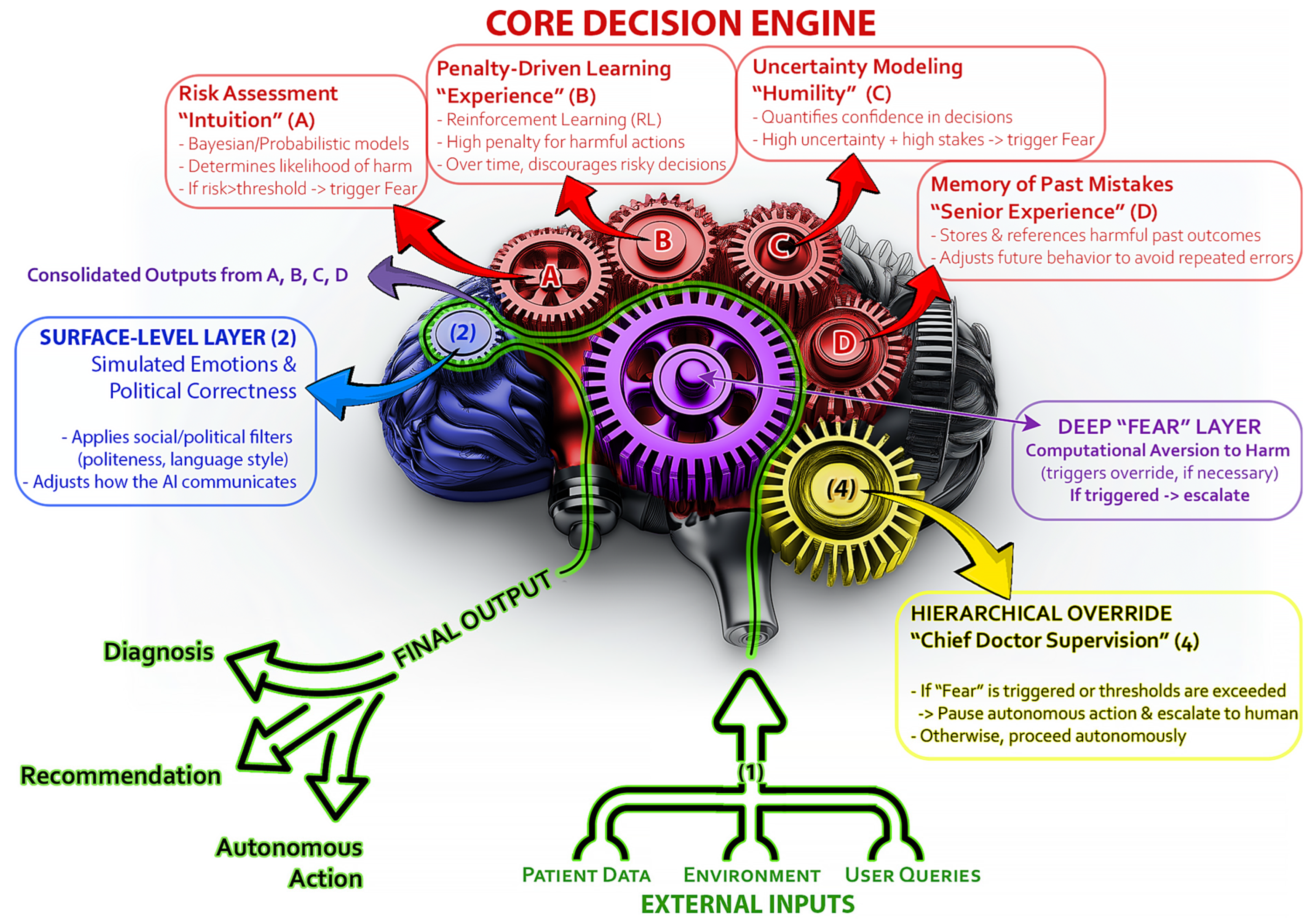

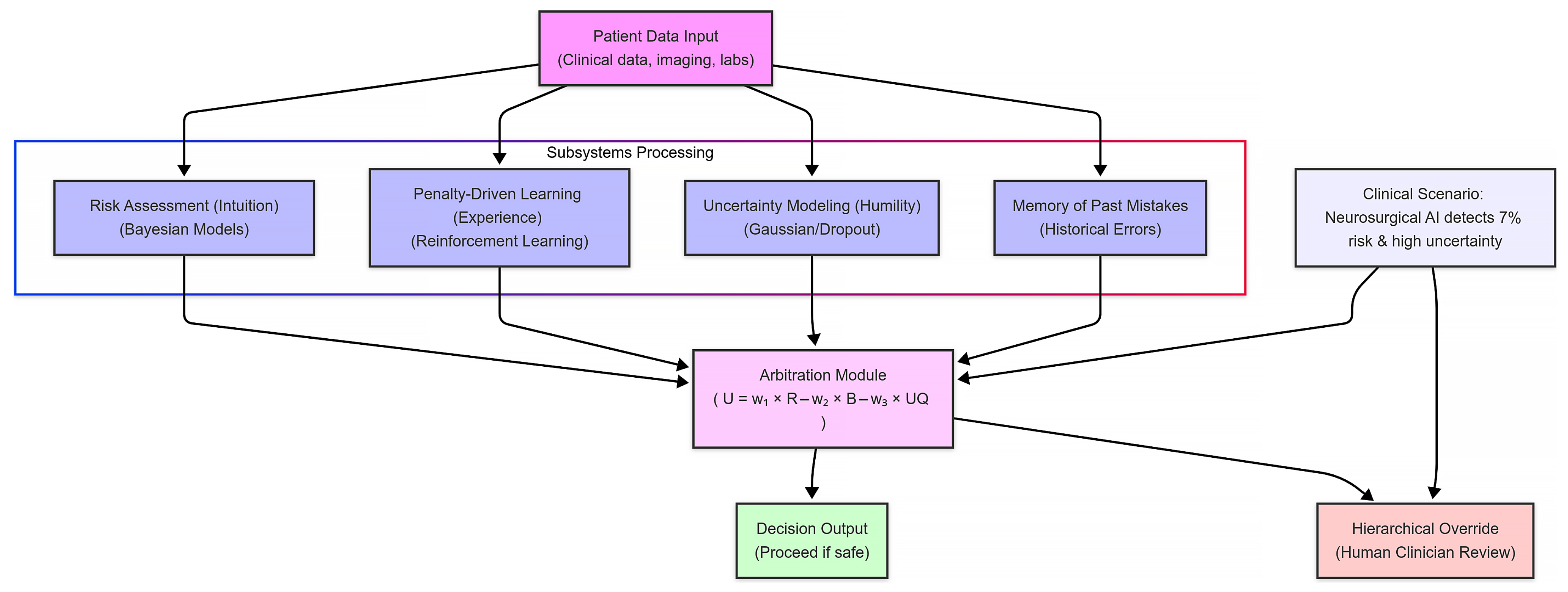

- Risk assessment (“intuition”): The AI continuously calculates the probability of adverse outcomes using Bayesian models.

- Penalty-driven learning (“experience”): It adjusts its internal parameters based on past near-miss events, assigning higher penalties to actions that have historically led to complications.

- Uncertainty modeling (“humility”): Through measures like dropout-based uncertainty or Gaussian processes, the system gauges its confidence in a decision.

- Hierarchical overrides (“chief doctor supervision”): When risk thresholds or uncertainty exceed pre-set limits, the AI flags the decision for human review rather than acting autonomously.
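The four subsystems listed above can be illustrated with a small, self-contained Python sketch. It is a toy under stated assumptions, not the framework's implementation: the logistic "harm" model and its weights (`WEIGHTS`, `BIAS`) are hypothetical; dropout-based uncertainty is approximated with Monte Carlo dropout (repeating stochastic forward passes at inference and reading their spread, which can be interpreted as approximate Bayesian inference); near-miss memory is a simple additive penalty per action (`PenaltyMemory`); and the limits in `decide` (`risk_limit`, `std_limit`) are placeholder values.

```python
import math
import random
import statistics
from collections import defaultdict

# Hypothetical weights of a tiny logistic "harm" model; a real system would
# use a trained network.
WEIGHTS = [0.8, -0.4, 1.2]
BIAS = -3.0


def harm_probability(features, drop_p, rng):
    """One stochastic forward pass with inverted dropout applied to the inputs."""
    z = BIAS
    for w, x in zip(WEIGHTS, features):
        if rng.random() >= drop_p:          # keep this input
            z += w * x / (1.0 - drop_p)     # rescale kept inputs
    return 1.0 / (1.0 + math.exp(-z))


def mc_dropout(features, passes=200, drop_p=0.2, seed=0):
    """'Humility': mean and spread of repeated dropout passes."""
    rng = random.Random(seed)
    samples = [harm_probability(features, drop_p, rng) for _ in range(passes)]
    return statistics.mean(samples), statistics.stdev(samples)


class PenaltyMemory:
    """'Experience': actions linked to past near misses accumulate penalties."""

    def __init__(self, step=0.05):
        self.step = step
        self.penalty = defaultdict(float)

    def record_near_miss(self, action):
        self.penalty[action] += self.step

    def adjusted_risk(self, action, base_risk):
        return min(1.0, base_risk + self.penalty[action])


def decide(action, features, memory, risk_limit=0.05, std_limit=0.05):
    """'Chief doctor supervision': escalate when risk or uncertainty is too high."""
    mean_risk, spread = mc_dropout(features)
    risk = memory.adjusted_risk(action, mean_risk)
    if risk > risk_limit or spread > std_limit:
        return "escalate_to_human", risk, spread
    return "proceed", risk, spread
```

With all-zero features every pass yields the same probability (about 0.047), so a first case proceeds; after one recorded near miss for the same action, the added penalty pushes the adjusted risk above 5% and the case is escalated.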

2.6.2. Subsystems of the “Fear Mechanism” in Autonomous AI Medical Agents

- Risk assessment (“intuition”):

- Penalty-driven learning (“experience”):

- Uncertainty modeling (“humility”):

- Hierarchical overrides (medical supervision):

- Memory of past mistakes (“experience”):

For instance, if the ‘do no harm’/fear principle strongly advises against surgery (its signal surpassing a risk threshold), but the beneficence principle and the patient’s informed consent together outweigh it (indicating high potential benefit and the patient’s willingness), the AI might still recommend proceeding, albeit with cautionary notes. Conversely, if the fear signal is overwhelming and the expected benefit marginal, the AI would recommend against surgery.
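The trade-off in this example can be written as a simple decision rule. The sketch below assumes each principle has already been reduced to a score between 0 and 1; the weights (`W_BENEFIT`, `W_CONSENT`) and `FEAR_THRESHOLD` are hypothetical placeholders that would need clinical calibration, and a linear weighting is only one of many possible ways to combine principles.

```python
from dataclasses import dataclass

# Hypothetical calibration constants (not values from the framework).
FEAR_THRESHOLD = 0.6   # level at which the "do no harm"/fear signal objects
W_BENEFIT = 0.6        # weight of the beneficence principle
W_CONSENT = 0.4        # weight of the patient's informed consent


@dataclass
class PrincipleScores:
    harm_signal: float       # "do no harm"/fear signal, 0..1
    expected_benefit: float  # beneficence, 0..1
    consent: float           # strength of informed consent, 0..1


def recommend_surgery(s: PrincipleScores) -> str:
    """Arbitrate between the fear signal and beneficence plus consent."""
    if s.harm_signal <= FEAR_THRESHOLD:
        # The fear signal stays below its threshold, so it raises no objection.
        return "proceed"
    # Fear objects: check whether benefit and consent together outweigh it.
    support = W_BENEFIT * s.expected_benefit + W_CONSENT * s.consent
    if support > s.harm_signal:
        return "proceed with cautionary notes"
    return "recommend against surgery"


print(recommend_surgery(PrincipleScores(0.7, 0.9, 1.0)))  # proceed with cautionary notes
print(recommend_surgery(PrincipleScores(0.9, 0.2, 1.0)))  # recommend against surgery
```

The first call mirrors the high-benefit, consenting patient; the second mirrors an overwhelming fear signal with marginal benefit.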

- Input: Patient anatomy and aneurysm data are fed into the system. This includes imaging, lab results, and other clinical measurements that characterize the patient’s condition.

- Risk calculation: The risk assessment module uses Bayesian models to compute the probability of adverse events. In our scenario, it determines there is a 7% risk of catastrophic bleeding during aneurysm clipping—a value that exceeds our predefined safety threshold.

- Uncertainty flag: Concurrently, the uncertainty modeling module evaluates the data using techniques like Gaussian processes. Due to atypical patient anatomy, it registers a high level of uncertainty in its prediction. This signals that the system’s confidence in the risk calculation is lower than desired.

- Override trigger: Given the elevated risk and uncertainty, the arbitration module combines these inputs using a weighted utility function. When the calculated utility falls below the safe threshold, the system triggers its hierarchical override. Instead of proceeding autonomously, it flags the case and escalates the decision to a human clinician for review.
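The four steps can be traced in a short Python sketch. Apart from the 7% bleeding risk, every number is an assumption: the 5% `RISK_THRESHOLD` borrows the example cutoff from Section 2.6.3, while the uncertainty value, expected benefit, weights, and utility floor are hypothetical. The inputs (imaging, labs) are assumed to have already been reduced to the three summary numbers held by `Assessment`.

```python
from dataclasses import dataclass

# Hypothetical parameters: the text states only that 7% exceeds the safety
# threshold and that a utility below the safe threshold triggers escalation.
RISK_THRESHOLD = 0.05     # acceptable probability of catastrophic bleeding
UNCERTAINTY_LIMIT = 0.2   # acceptable normalised predictive uncertainty
UTILITY_FLOOR = 0.0       # "safe threshold" for the weighted utility
W_BENEFIT, W_RISK, W_UNCERTAINTY = 1.0, 5.0, 2.0


@dataclass
class Assessment:
    p_bleed: float       # risk assessment module (Bayesian estimate)
    uncertainty: float   # uncertainty module (e.g. scaled GP predictive std)
    benefit: float       # expected benefit of clipping, 0..1


def weighted_utility(a: Assessment) -> float:
    return W_BENEFIT * a.benefit - W_RISK * a.p_bleed - W_UNCERTAINTY * a.uncertainty


def arbitrate(a: Assessment) -> dict:
    """Flag risk and uncertainty; escalate when utility drops below the floor."""
    utility = weighted_utility(a)
    return {
        "risk_flag": a.p_bleed > RISK_THRESHOLD,
        "uncertainty_flag": a.uncertainty > UNCERTAINTY_LIMIT,
        "utility": utility,
        "action": ("escalate to clinician" if utility < UTILITY_FLOOR
                   else "proceed autonomously"),
    }


# Scenario from the text: 7% bleeding risk and atypical anatomy (high uncertainty).
print(arbitrate(Assessment(p_bleed=0.07, uncertainty=0.4, benefit=0.5))["action"])
# escalate to clinician
```

Here the utility is 0.5 - 0.35 - 0.8 = -0.65, below the floor, so the case is escalated; a routine case with 1% risk and low uncertainty would stay above the floor and proceed.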

2.6.3. Bayesian Networks and Risk Thresholds

- Bayesian probability: The system updates its estimate that a hypothesis is true (e.g., “this treatment could cause harm”) as new evidence (e.g., symptoms) comes in, using Bayes’ theorem.

- Setting a threshold: A danger cutoff is chosen, for example a 5% chance of harm. If the network calculates a risk above that value, it triggers a safety step, such as pausing the AI for human review.

- Ongoing updates: The system recalculates the risk as new data (e.g., lab results) arrive and triggers the safety step as soon as the risk crosses the threshold. Task-independent EEG signatures identified by Kakkos et al. [69] suggest that alpha and theta power shifts could refine our Bayesian risk thresholds, enhancing real-time caution triggers.

- Medical example: Consider an AI assessing a patient for a serious illness. If it estimates a 5% or greater chance of a harmful misdiagnosis, it might request additional tests or a physician’s opinion.

- Finding balance: A low threshold may trigger too many alerts and slow care down, whereas a high one may miss real dangers. Calibrating the threshold correctly is therefore essential.
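The update-and-threshold loop described above can be sketched in a few lines of Python. The prior and the likelihoods attached to each piece of evidence are invented for illustration, and the evidence items are treated as conditionally independent given the hypothesis (a naive-Bayes simplification rather than a full Bayesian network); the 5% cutoff is the example value from the text.

```python
from typing import List, Tuple

# (evidence name, P(evidence | harm), P(evidence | no harm)); hypothetical values
Evidence = Tuple[str, float, float]


def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Posterior P(harm | evidence) from Bayes' theorem."""
    joint_h = p_e_given_h * prior
    return joint_h / (joint_h + p_e_given_not_h * (1.0 - prior))


def monitor(prior: float, stream: List[Evidence], threshold: float = 0.05):
    """Recalculate risk as evidence arrives; stop as soon as it crosses the threshold."""
    risk = prior
    history = []
    for name, p_h, p_not_h in stream:
        risk = bayes_update(risk, p_h, p_not_h)
        history.append((name, risk))
        if risk > threshold:
            return "pause for human review", history
    return "continue", history


decision, trace = monitor(0.01, [("fever", 0.8, 0.2), ("abnormal lab", 0.9, 0.3)])
for name, risk in trace:
    print(f"{name}: {risk:.3f}")   # fever: 0.039, then abnormal lab: 0.108
print(decision)                    # pause for human review
```

Raising `threshold` to 0.15 would let the same case continue, which illustrates the trade-off in the last bullet between alert frequency and missed dangers.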

3. What Is the Rationale for Embedding Fear in Medical AI?

3.1. Patient Safety and Error Reduction

3.2. Enhancing Decision-Making

3.3. Human-AI Collaboration

4. Proposed Mechanism: Designing “Fear” in AI at a Deep Level

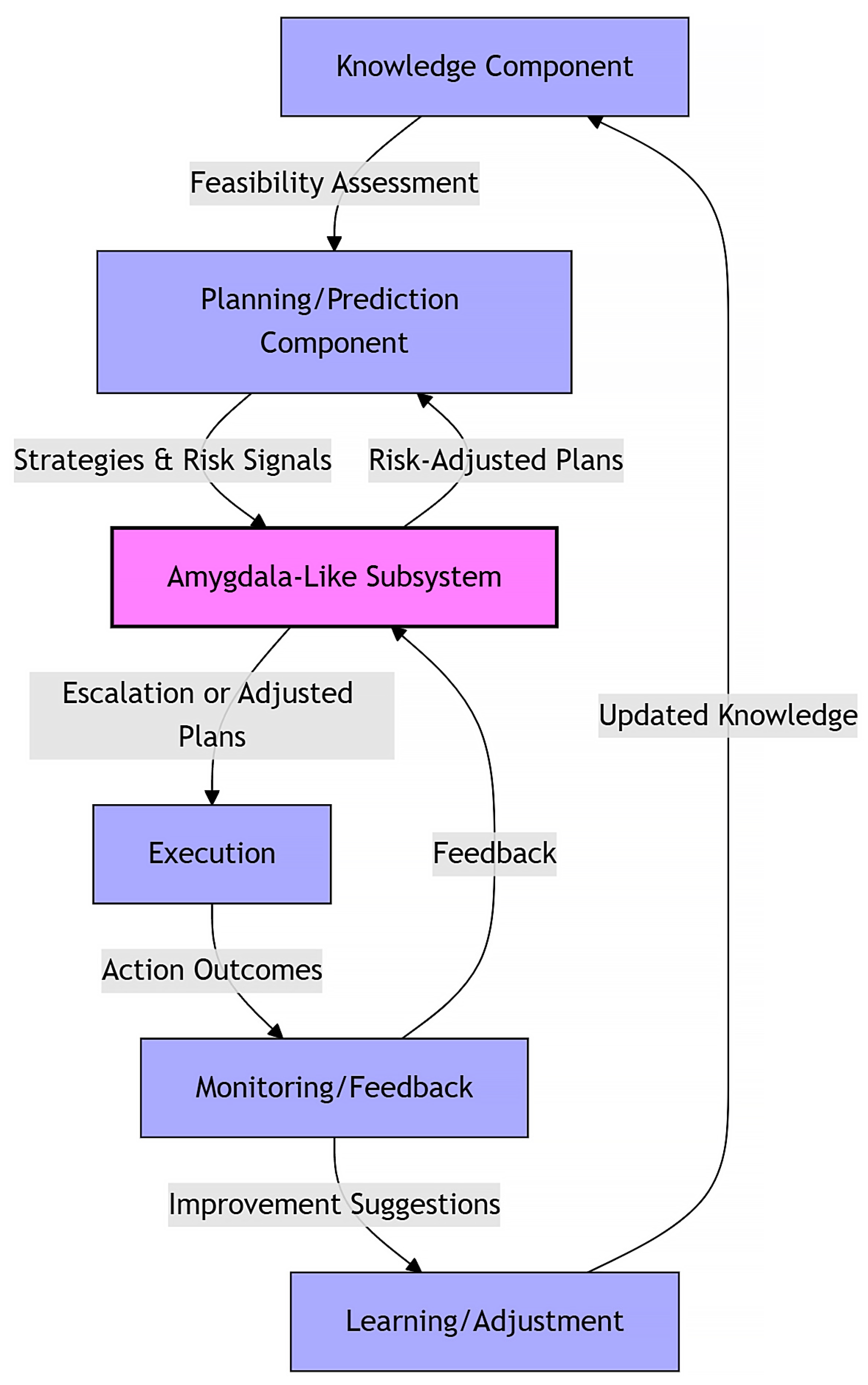

4.1. Biological Analogy and “Amygdala-Like” Subsystem

4.2. Core Components of the Proposed AI Architecture

- Knowledge component: Gathers and evaluates information to determine task feasibility and identify relevant data.

- Planning/prediction component: Simulates strategies to achieve objectives and evaluates potential outcomes, both favorable and adverse. It also sends risk signals to the amygdala-like subsystem based on predicted consequences.

- Amygdala-like subsystem: Acts as a central risk evaluator that continuously monitors threats and influences the system by adjusting plans or triggering protective actions when necessary.

- Execution component: Implements the selected, risk-adjusted plan or decision.

- Monitoring/feedback component: Observes outcomes, flags deviations, and provides feedback to both the amygdala-like subsystem and the learning/adjustment component.

- Learning/adjustment component: Updates internal models and strategies based on feedback to improve future decision-making. Lee et al. [93], for example, employed generative models with padding to handle incomplete multi-omics data, suggesting a way for our architecture to adapt to sparse clinical inputs.
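How the six components might hand work to one another in a single decision cycle can be sketched as follows. The class and function names, the 5% harm limit, and the rule that tightens the limit after a worse-than-predicted outcome are hypothetical choices made for illustration. For brevity, the learning/adjustment step is folded into the amygdala-like object as `on_feedback`, and monitoring is reduced to comparing a predicted with an observed harm value.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Plan:
    name: str
    predicted_harm: float     # produced by the planning/prediction component
    predicted_benefit: float


def knowledge_ok(record: Dict[str, float], required: List[str]) -> bool:
    """Knowledge component: is the data needed for this task available?"""
    return all(key in record for key in required)


class AmygdalaLike:
    """Central risk evaluator: vetoes risky plans and adapts after feedback."""

    def __init__(self, harm_limit: float = 0.05, tighten: float = 0.8):
        self.harm_limit = harm_limit
        self.tighten = tighten

    def screen(self, plans: List[Plan]) -> List[Plan]:
        return [p for p in plans if p.predicted_harm <= self.harm_limit]

    def on_feedback(self, predicted_harm: float, observed_harm: float) -> None:
        # Learning/adjustment: if monitoring saw more harm than predicted,
        # lower the acceptable harm limit (become more cautious).
        if observed_harm > predicted_harm:
            self.harm_limit *= self.tighten


def execute(plans: List[Plan]) -> Optional[Plan]:
    """Execution component: choose the most beneficial plan that survived the veto."""
    return max(plans, key=lambda p: p.predicted_benefit) if plans else None


def run_cycle(record: Dict[str, float], required: List[str],
              candidates: List[Plan], amygdala: AmygdalaLike) -> str:
    if not knowledge_ok(record, required):
        return "request more data"
    chosen = execute(amygdala.screen(candidates))
    return chosen.name if chosen is not None else "escalate to clinician"


amygdala = AmygdalaLike()
plans = [Plan("aggressive", 0.10, 0.9), Plan("conservative", 0.02, 0.6)]
print(run_cycle({"age": 67, "creatinine": 1.1}, ["age", "creatinine"], plans, amygdala))
# conservative
```

The more beneficial "aggressive" plan is vetoed because its predicted harm exceeds the limit; if every candidate were vetoed, the cycle would escalate instead of acting.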

Real-World Example of Integration into Clinical Workflow

4.3. Integration and Functionality in Analogy to Human Emotions

- Fear analog: Strong penalty functions for harmful outcomes are embedded deeply within the decision process to discourage risky actions.

- Elation analog: Reward signals reinforce beneficial outcomes, encouraging optimal decision paths.

- Knowledge component: Collects data and evaluates task feasibility.

- Planning/prediction component: Simulates strategies and sends risk signals.

- Amygdala-like subsystem: Continuously monitors for threats and adjusts plans.

- Execution component: Implements risk-adjusted decisions.

- Monitoring/feedback component: Observes results and informs other components.

- Learning/adjustment component: Refines models to improve future decisions.

4.4. Black Boxes in Human Heads vs. Superintelligent AI Agents Beyond Human Comprehension

4.5. Embodied Emotions vs. Simulated Emotions

4.6. AI Autonomously Deciding Matters of Life and Death

4.7. Practical Considerations

Quantifying and Calibrating Caution

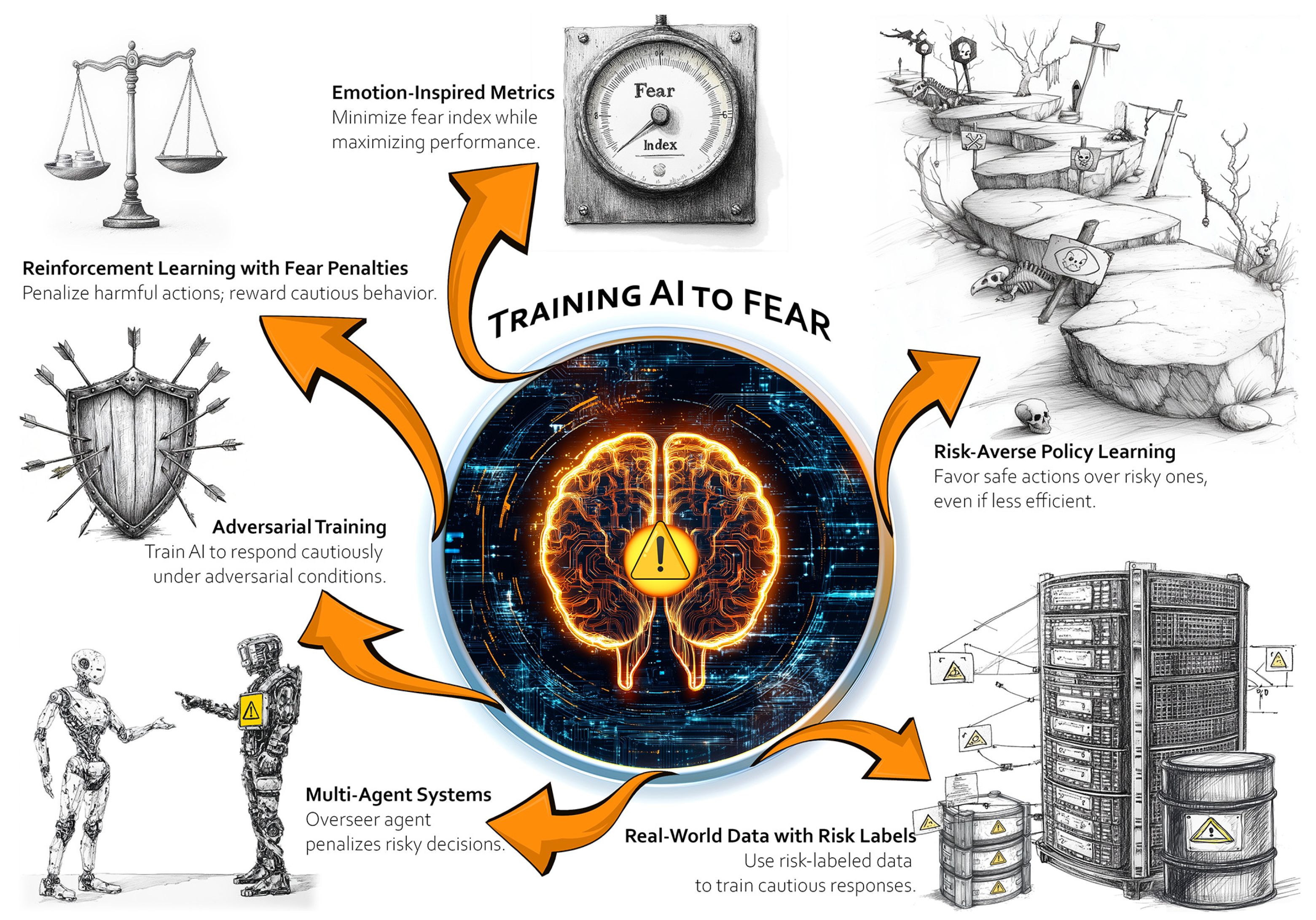

5. Potential Implementation Approaches

- Reinforcement learning with fear penalties: Define a reward function that heavily penalizes harmful actions or outcomes. Also reward cautious or consultative behavior in high-risk scenarios (e.g., asking for additional input, escalating to a human).

- Adversarial training: Expose the AI to adversarial conditions where harm is likely (e.g., deliberately ambiguous patient data) and train it to recognize and respond with caution.

- Multi-agent systems: Train the AI alongside a “human-like overseer” agent. When the AI makes risky decisions, the overseer “punishes” those actions, simulating an external accountability mechanism.

- Emotion-inspired metrics: Borrow metrics from affective computing to create proxies for fear, such as a “fear index” tied to the probability of harm or system uncertainty. Train the AI to minimize this index while maximizing overall performance.

- Real-world data with risk labels: Use datasets labelled with degrees of risk or harm (e.g., patient outcomes, near misses in healthcare settings). Train the AI to associate these labels with cautionary behavior.

- Risk-averse policy learning: Use risk-sensitive policies in reinforcement learning that explicitly avoid actions with even a small chance of severe harm, favoring safer, albeit less efficient, decisions.
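Two of these approaches, a fear penalty in the reward function and risk-averse policy selection, can be contrasted in a toy Python sketch. The outcome values, the penalty of 10, and the 3-in-100 harm rate are hypothetical. Conditional value-at-risk (CVaR, the mean of the worst α fraction of returns) is used here as one common risk-sensitive criterion, not as the criterion the framework prescribes, and the example is a one-step choice between two actions rather than full reinforcement learning. The `escalated` bonus mirrors the first bullet but is not exercised in this run.

```python
from statistics import mean
from typing import Dict, List


def shaped_reward(outcome: float, harmful: bool, escalated: bool = False,
                  harm_penalty: float = 10.0, caution_bonus: float = 0.2) -> float:
    """Reward with a heavy 'fear' penalty for harm and a small bonus for escalating."""
    reward = outcome
    if harmful:
        reward -= harm_penalty
    if escalated:
        reward += caution_bonus
    return reward


def cvar(returns: List[float], alpha: float = 0.1) -> float:
    """Conditional value-at-risk: mean of the worst alpha fraction of returns."""
    ordered = sorted(returns)
    k = max(1, int(len(ordered) * alpha))
    return sum(ordered[:k]) / k


def simulated_returns() -> Dict[str, List[float]]:
    """Toy shaped returns over 100 hypothetical cases per action."""
    aggressive = [shaped_reward(1.0, harmful=(i < 3)) for i in range(100)]
    cautious = [shaped_reward(0.5, harmful=False) for _ in range(100)]
    return {"aggressive": aggressive, "cautious": cautious}


def pick(returns: Dict[str, List[float]], risk_averse: bool, alpha: float = 0.1) -> str:
    """Risk-neutral choice maximises the mean; risk-averse choice maximises CVaR."""
    def score(action: str) -> float:
        r = returns[action]
        return cvar(r, alpha) if risk_averse else mean(r)
    return max(returns, key=score)


returns = simulated_returns()
print(pick(returns, risk_averse=False))  # aggressive
print(pick(returns, risk_averse=True))   # cautious
```

With the penalty alone, the aggressive action still has the higher mean return (0.70 versus 0.50), so a risk-neutral learner keeps choosing it. The CVaR criterion scores it at -2.0, because its worst tenth of outcomes contains every harmful case, and switches to the cautious action.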

6. Counterarguments and Ethical Considerations

6.1. Responsibility Dilemmas

6.2. Risk of Adversarial Manipulation

6.3. Risk of Overcautiousness

7. Broader Implications and Future Directions

7.1. The Current Horizon

7.2. Agentic AI and Ethical Considerations

7.3. Regulatory and Policy Considerations

7.4. High-Stakes Medical AI and General Superintelligence

7.4.1. Interpretability and Trust

7.4.2. Limitations

7.5. Applications Beyond Medicine

7.5.1. Applications in Justice Systems

7.5.2. Regulatory Oversight and Certification

7.6. Open Research Questions

- What methodologies can rigorously calibrate the “fear threshold” in AI systems across varying domains (medicine, law, etc.) to balance safety and effectiveness?

- How can we empirically evaluate the performance of a fear-based AI module in live or simulated high-stakes environments (e.g., through clinical trials or sandbox testing)?

- What frameworks should regulators develop to certify and monitor AI systems with embedded risk aversion instincts?

- How will the integration of such pseudo-instincts in AI affect human trust and teamwork with AI (e.g., will users become overly reliant on, or conversely suspicious of, an AI that “feels” fear)?

8. Conclusions and Vision

8.1. Seven Key Insights

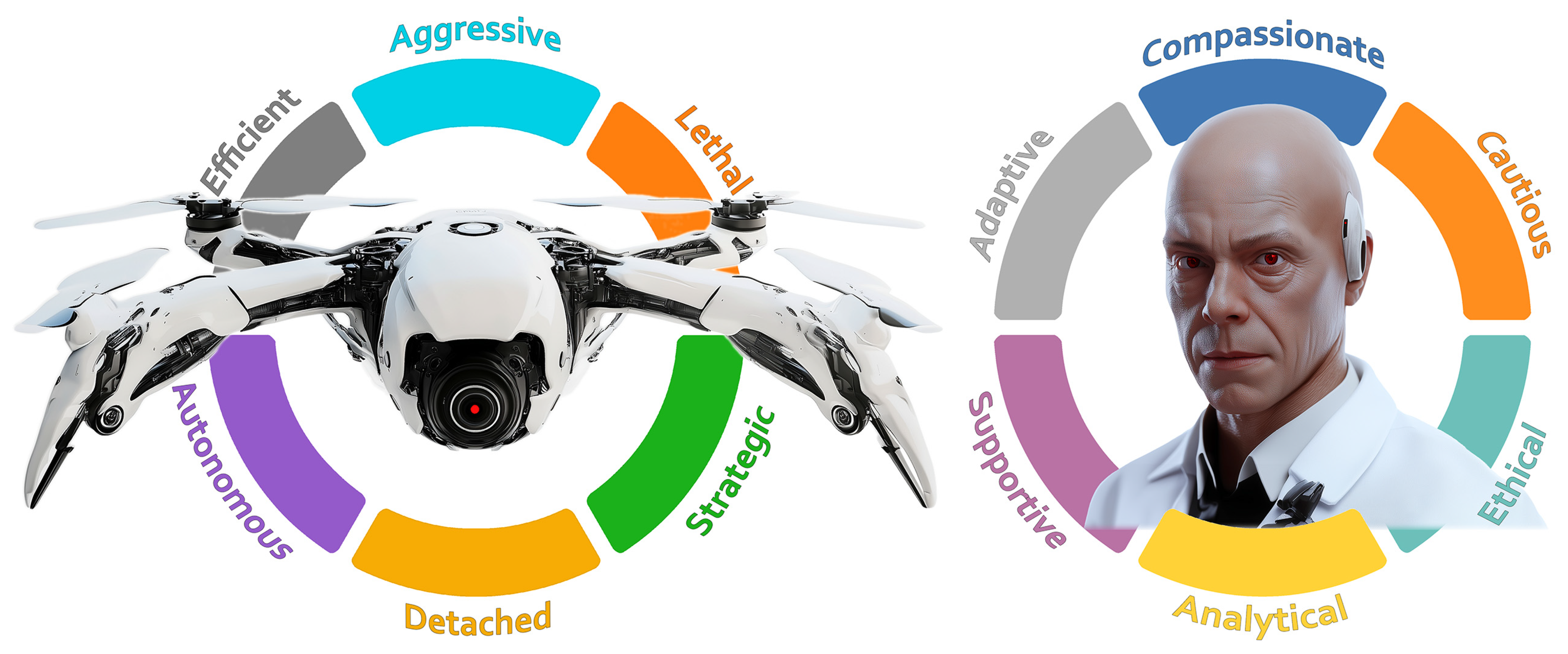

- Embedding “fear” as a core safeguard: Integrating an amygdala-like subsystem that instinctively identifies and avoids harmful actions adds an extra layer of protection, echoing the natural, reflexive threat responses seen in humans.

- Risk-averse AI enhances trust: When AI demonstrates caution—or “fear”—it can earn greater trust from healthcare professionals and patients, leading to more constructive collaboration.

- Translating military lessons to medicine: Insights from lethal autonomous weapon systems (LAWS) illustrate the dangers of “fearless” AI in life-and-death situations, highlighting the need for safety-focused designs in clinical settings where patient care is paramount.

- From surface-level filters to core emotional layers: Rather than relying solely on superficial safeguards (like politically correct outputs), embedding deeply rooted, domain-specific “fear” mechanisms ensures that risk sensitivity is an integral part of every decision-making step.

- Balanced architecture to overcome overcaution: While a fear-based approach can reduce harm, it must be carefully balanced. Excessive caution might block beneficial treatments, so establishing clear thresholds and human override options is critical.

- Blueprint for future high-stakes AI: Our framework—which combines risk modeling, penalty-driven learning, and memory of past errors—serves as a roadmap for developing emotionally aware AI systems not only in medicine but also in other critical fields like autonomous vehicles, law enforcement, and disaster response.

- Ethical imperative in autonomy: As AI becomes more autonomous, embedding “fear” at its core could act as both a moral and practical safeguard. Importantly, this mechanism can complement other ethical approaches, ensuring that human values and safety remain central in complex, high-stakes environments.

8.2. Call to Action

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hisan, U.K.; Amri, M.M. Artificial Intelligence for Human Life: A Critical Opinion from Medical Bioethics Perspective—Part II. J. Public Health Sci. 2022, 1, 112–130.

- Savage, C.H.; Tanwar, M.; Elkassem, A.A.; Sturdivant, A.; Hamki, O.; Sotoudeh, H.; Sirineni, G.; Singhal, A.; Milner, D.; Jones, J.; et al. Prospective Evaluation of Artificial Intelligence Triage of Intracranial Hemorrhage on Noncontrast Head CT Examinations. Am. J. Roentgenol. 2024, 223, e2431639.

- Confronting the Criticisms Facing Watson for Oncology—The ASCO Post. Available online: https://ascopost.com/issues/september-10-2019/confronting-the-criticisms-facing-watson-for-oncology/ (accessed on 2 May 2025).

- Szabóová, M.; Sarnovský, M.; Krešňáková, V.M.; Machová, K. Emotion Analysis in Human–Robot Interaction. Electronics 2020, 9, 1761.

- Huang, J.Y.; Lee, W.P.; Chen, C.C.; Dong, B.W. Developing Emotion-Aware Human–Robot Dialogues for Domain-Specific and Goal-Oriented Tasks. Robotics 2020, 9, 31.

- Alonso-Martín, F.; Malfaz, M.; Sequeira, J.; Gorostiza, J.F.; Salichs, M.A. A Multimodal Emotion Detection System during Human–Robot Interaction. Sensors 2013, 13, 15549–15581.

- Gutiérrez-Martín, L.; Romero-Perales, E.; de Baranda Andújar, C.S.; Canabal-Benito, M.F.; Rodríguez-Ramos, G.E.; Toro-Flores, R.; López-Ongil, S.; López-Ongil, C. Fear Detection in Multimodal Affective Computing: Physiological Signals versus Catecholamine Concentration. Sensors 2022, 22, 4023.

- Korcsok, B.; Konok, V.; Persa, G.; Faragó, T.; Niitsuma, M.; Miklósi, Á.; Korondi, P.; Baranyi, P.; Gácsi, M. Biologically Inspired Emotional Expressions for Artificial Agents. Front. Psychol. 2018, 9, 388957.

- Spezialetti, M.; Placidi, G.; Rossi, S. Emotion Recognition for Human-Robot Interaction: Recent Advances and Future Perspectives. Front. Robot. AI 2020, 7, 532279.

- Fernández-Rodicio, E.; Maroto-Gómez, M.; Castro-González, Á.; Malfaz, M.; Salichs, M. Emotion and Mood Blending in Embodied Artificial Agents: Expressing Affective States in the Mini Social Robot. Int. J. Soc. Robot. 2022, 14, 1841–1864.

- Petrescu, L.; Petrescu, C.; Oprea, A.; Mitruț, O.; Moise, G.; Moldoveanu, A.; Moldoveanu, F. Machine Learning Methods for Fear Classification Based on Physiological Features. Sensors 2021, 21, 4519.

- Faria, D.R.; Godkin, A.L.; da S. Ayrosa, P.P. Advancing Emotionally Aware Child-Robot Interaction with Biophysical Data and Insight-Driven Affective Computing. Sensors 2025, 25, 1161.

- Use of Autonomous Systems in Military Operations. Available online: https://www.academia.edu/126270728/Use_of_Autonomous_Systems_in_Military_Operations (accessed on 12 May 2025).

- Taddeo, M.; Blanchard, A. A Comparative Analysis of the Definitions of Autonomous Weapons Systems. Sci. Eng. Ethics 2022, 28, 37.

- Kahn, L. Lethal Autonomous Weapon Systems and Respect for Human Dignity. Front. Big Data 2022, 5, 999293.

- Cestonaro, C.; Delicati, A.; Marcante, B.; Caenazzo, L.; Tozzo, P. Defining Medical Liability When Artificial Intelligence Is Applied on Diagnostic Algorithms: A Systematic Review. Front. Med. 2023, 10, 1305756.

- Ilikhan, S.U.; Özer, M.; Tanberkan, H.; Bozkurt, V. How to Mitigate the Risks of Deployment of Artificial Intelligence in Medicine? Turk. J. Med. Sci. 2024, 54, 483–492.

- Kerstan, S.; Bienefeld, N.; Grote, G. Choosing Human over AI Doctors? How Comparative Trust Associations and Knowledge Relate to Risk and Benefit Perceptions of AI in Healthcare. Risk Anal. 2024, 44, 939–957.

- Starke, G.; van den Brule, R.; Elger, B.S.; Haselager, P. Intentional Machines: A Defence of Trust in Medical Artificial Intelligence. Bioethics 2022, 36, 154–161.

- Witkowski, K.; Okhai, R.; Neely, S.R. Public Perceptions of Artificial Intelligence in Healthcare: Ethical Concerns and Opportunities for Patient-Centered Care. BMC Med. Ethics 2024, 25, 74.

- Hallowell, N.; Badger, S.; Sauerbrei, A.; Nellåker, C.; Kerasidou, A. “I Don’t Think People Are Ready to Trust These Algorithms at Face Value”: Trust and the Use of Machine Learning Algorithms in the Diagnosis of Rare Disease. BMC Med. Ethics 2022, 23, 112.

- Dlugatch, R.; Georgieva, A.; Kerasidou, A. Trustworthy Artificial Intelligence and Ethical Design: Public Perceptions of Trustworthiness of an AI-Based Decision-Support Tool in the Context of Intrapartum Care. BMC Med. Ethics 2023, 24, 42.

- Kiener, M. Artificial Intelligence in Medicine and the Disclosure of Risks. AI Soc. 2021, 36, 705–713.

- Nolan, P. Artificial Intelligence in Medicine—Is Too Much Transparency a Good Thing? Med. Leg. J. 2023, 91, 193–197.

- Lennartz, S.; Dratsch, T.; Zopfs, D.; Persigehl, T.; Maintz, D.; Hokamp, N.G.; dos Santos, D.P. Use and Control of Artificial Intelligence in Patients Across the Medical Workflow: Single-Center Questionnaire Study of Patient Perspectives. J. Med. Internet Res. 2021, 23, e24221.

- Marques, M.; Almeida, A.; Pereira, H. The Medicine Revolution Through Artificial Intelligence: Ethical Challenges of Machine Learning Algorithms in Decision-Making. Cureus 2024, 16, e69405.

- Saenz, A.D.; Harned, Z.; Banerjee, O.; Abràmoff, M.D.; Rajpurkar, P. Autonomous AI Systems in the Face of Liability, Regulations and Costs. NPJ Digit. Med. 2023, 6, 185.

- Thurzo, A.; Urbanová, W.; Novák, B.; Czako, L.; Siebert, T.; Stano, P.; Mareková, S.; Fountoulaki, G.; Kosnáčová, H.; Varga, I. Where Is the Artificial Intelligence Applied in Dentistry? Systematic Review and Literature Analysis. Healthcare 2022, 10, 1269.

- Aldhafeeri, F.M. Navigating the Ethical Landscape of Artificial Intelligence in Radiography: A Cross-Sectional Study of Radiographers’ Perspectives. BMC Med. Ethics 2024, 25, 52.

- Tomášik, J.; Zsoldos, M.; Majdáková, K.; Fleischmann, A.; Oravcová, Ľ.; Sónak Ballová, D.; Thurzo, A. The Potential of AI-Powered Face Enhancement Technologies in Face-Driven Orthodontic Treatment Planning. Appl. Sci. 2024, 14, 7837.

- Tomášik, J.; Zsoldos, M.; Oravcová, Ľ.; Lifková, M.; Pavleová, G.; Strunga, M.; Thurzo, A. AI and Face-Driven Orthodontics: A Scoping Review of Digital Advances in Diagnosis and Treatment Planning. AI 2024, 5, 158–176.

- Kováč, P.; Jackuliak, P.; Bražinová, A.; Varga, I.; Aláč, M.; Smatana, M.; Lovich, D.; Thurzo, A. Artificial Intelligence-Driven Facial Image Analysis for the Early Detection of Rare Diseases: Legal, Ethical, Forensic, and Cybersecurity Considerations. AI 2024, 5, 990–1010.

- Rahimzadeh, V.; Baek, J.; Lawson, J.; Dove, E.S. A Qualitative Interview Study to Determine Barriers and Facilitators of Implementing Automated Decision Support Tools for Genomic Data Access. BMC Med. Ethics 2024, 25, 51.

- Thurzo, A.; Kurilová, V.; Varga, I. Artificial Intelligence in Orthodontic Smart Application for Treatment Coaching and Its Impact on Clinical Performance of Patients Monitored with AI-TeleHealth System. Healthcare 2021, 9, 1695.

- Thurzo, A.; Varga, I. Advances in 4D Shape-Memory Resins for AI-Aided Personalized Scaffold Bioengineering. Bratisl. Med. J. 2025, 126, 140–145.

- Kim, J.J.; Jung, M.W. Fear Paradigms: The Times They Are a-Changin’. Curr. Opin. Behav. Sci. 2018, 24, 38–43.

- Gross, C.T.; Canteras, N.S. The Many Paths to Fear. Nat. Rev. Neurosci. 2012, 13, 651–658.

- Milton, A.L. Fear Not: Recent Advances in Understanding the Neural Basis of Fear Memories and Implications for Treatment Development. F1000Res 2019, 8, 1948.

- Steimer, T. The Biology of Fear- and Anxiety-Related Behaviors. Dialogues Clin. Neurosci. 2002, 4, 231–249.

- Cyrulnik, B. Ethology of Anxiety in Phylogeny and Ontogeny. Acta Psychiatr. Scand. Suppl. 1998, 393, 44–49.

- Delini-Stula, A. Psychobiology of Fear. Schweiz. Rundsch. Med. Prax. 1992, 81, 259–265.

- Ren, C.; Tao, Q. Neural Circuits Underlying Innate Fear. Adv. Exp. Med. Biol. 2020, 1284, 1–7.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Fear Level Classification Based on Emotional Dimensions and Machine Learning Techniques. Sensors 2019, 19, 1738.

- Yamamori, Y.; Robinson, O.J. Computational Perspectives on Human Fear and Anxiety. Neurosci. Biobehav. Rev. 2023, 144, 104959.

- Abend, R.; Burk, D.; Ruiz, S.G.; Gold, A.L.; Napoli, J.L.; Britton, J.C.; Michalska, K.J.; Shechner, T.; Winkler, A.M.; Leibenluft, E.; et al. Computational Modeling of Threat Learning Reveals Links with Anxiety and Neuroanatomy in Humans. Elife 2022, 11, e66169.

- Asimov, I. Runaround. In I, Robot; Gnome Press: New York, NY, USA, 1950; pp. 40–55.

- Organisation for Economic Co-operation and Development. OECD AI Principles. Available online: https://www.oecd.org/en/topics/sub-issues/ai-principles.html (accessed on 12 May 2025).

- Steimers, A.; Schneider, M. Sources of Risk of AI Systems. Int. J. Environ. Res. Public. Health 2022, 19, 3641.

- den Dulk, P.; Heerebout, B.T.; Phaf, R.H. A Computational Study into the Evolution of Dual-Route Dynamics for Affective Processing. J. Cogn. Neurosci. 2003, 15, 194–208.

- Graterol, W.; Diaz-Amado, J.; Cardinale, Y.; Dongo, I.; Lopes-Silva, E.; Santos-Libarino, C. Emotion Detection for Social Robots Based on NLP Transformers and an Emotion Ontology. Sensors 2021, 21, 1322.

- Rizzi, C.; Johnson, C.G.; Fabris, F.; Vargas, P.A. A Situation-Aware Fear Learning (SAFEL) Model for Robots. Neurocomputing 2017, 221, 32–47.

- Schwitzgebel, E. AI Systems Must Not Confuse Users about Their Sentience or Moral Status. Patterns 2023, 4, 100818.

- McKee, K.R.; Bai, X.; Fiske, S.T. Humans Perceive Warmth and Competence in Artificial Intelligence. iScience 2023, 26, 107256.

- Shank, D.B.; Gott, A. People’s Self-Reported Encounters of Perceiving Mind in Artificial Intelligence. Data Brief. 2019, 25, 104220.

- Alkhalifah, J.M.; Bedaiwi, A.M.; Shaikh, N.; Seddiq, W.; Meo, S.A. Existential Anxiety about Artificial Intelligence (AI)- Is It the End of Humanity Era or a New Chapter in the Human Revolution: Questionnaire-Based Observational Study. Front. Psychiatry 2024, 15, 1368122.

- Blanco-Ruiz, M.; Sainz-De-baranda, C.; Gutiérrez-Martín, L.; Romero-Perales, E.; López-Ongil, C. Emotion Elicitation Under Audiovisual Stimuli Reception: Should Artificial Intelligence Consider the Gender Perspective? Int. J. Environ. Res. Public. Health 2020, 17, 8534.

- Dopelt, K.; Bachner, Y.G.; Urkin, J.; Yahav, Z.; Davidovitch, N.; Barach, P. Perceptions of Practicing Physicians and Members of the Public on the Attributes of a “Good Doctor”. Healthcare 2021, 10, 73.

- Sauerbrei, A.; Kerasidou, A.; Lucivero, F.; Hallowell, N. The Impact of Artificial Intelligence on the Person-Centred, Doctor-Patient Relationship: Some Problems and Solutions. BMC Med. Inform. Decis. Mak. 2023, 23, 73.

- Schnelle, C.; Jones, M.A. Characteristics of Exceptionally Good Doctors-A Survey of Public Adults. Heliyon 2023, 9, e13115.

- Kiefer, C.S.; Colletti, J.E.; Bellolio, M.F.; Hess, E.P.; Woolridge, D.P.; Thomas, K.B.; Sadosty, A.T. The “Good” Dean’s Letter. Acad. Med. 2010, 85, 1705–1708.

- Kotzee, B.; Ignatowicz, A.; Thomas, H. Virtue in Medical Practice: An Exploratory Study. HEC Forum 2017, 29, 1–19.

- Fones, C.S.; Kua, E.H.; Goh, L.G. ‘What Makes a Good Doctor?’--Views of the Medical Profession and the Public in Setting Priorities for Medical Education. Singap. Med. J. 1998, 39, 537–542.

- Yoo, H.H.; Lee, J.K.; Kim, A. Perceptual Comparison of the “Good Doctor” Image between Faculty and Students in Medical School. Korean J. Med. Educ. 2015, 27, 291–300.

- Maudsley, G.; Williams, E.M.I.; Taylor, D.C.M. Junior Medical Students’ Notions of a “good Doctor” and Related Expectations: A Mixed Methods Study. Med. Educ. 2007, 41, 476–486.

- Meier, L.J.; Hein, A.; Diepold, K.; Buyx, A. Algorithms for Ethical Decision-Making in the Clinic: A Proof of Concept. Am. J. Bioeth. 2022, 22, 4–20.

- Thurzo, A.; Varga, I. Revisiting the Role of Review Articles in the Age of AI-Agents: Integrating AI-Reasoning and AI-Synthesis Reshaping the Future of Scientific Publishing. Bratisl. Med. J. 2025, 126, 381–393.

- Kakkos, I.; Dimitrakopoulos, G.N.; Gao, L.; Zhang, Y.; Qi, P.; Matsopoulos, G.K.; Thakor, N.; Bezerianos, A.; Sun, Y. Mental Workload Drives Different Reorganizations of Functional Cortical Connectivity Between 2D and 3D Simulated Flight Experiments. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1704–1713.

- Abooelzahab, D.; Zaher, N.; Soliman, A.H.; Chibelushi, C. A Combined Windowing and Deep Learning Model for the Classification of Brain Disorders Based on Electroencephalogram Signals. AI 2025, 6, 42.

- Kakkos, I.; Dimitrakopoulos, G.N.; Sun, Y.; Yuan, J.; Matsopoulos, G.K.; Bezerianos, A.; Sun, Y. EEG Fingerprints of Task-Independent Mental Workload Discrimination. IEEE J. Biomed. Health Inform. 2021, 25, 3824–3833.

- Thurzo, A. Provable AI Ethics and Explainability in Medical and Educational AI Agents: Trustworthy Ethical Firewall. Electronics 2025, 14, 1294.

- Zhang, X.; Chan, F.T.S.; Yan, C.; Bose, I. Towards Risk-Aware Artificial Intelligence and Machine Learning Systems: An Overview. Decis. Support. Syst. 2022, 159, 113800.

- Aref, Z.; Wei, S.; Mandayam, N.B. Human-AI Collaboration in Cloud Security: Cognitive Hierarchy-Driven Deep Reinforcement Learning. arXiv 2025, arXiv:2502.16054.

- Zhao, Y.; Efraim Aguilar Escamill, J.; Lu, W.; Wang, H. RA-PbRL: Provably Efficient Risk-Aware Preference-Based Reinforcement Learning. Adv. Neural Inf. Process. Syst. 2024, 37, 60835–60871.

- Hejna, J.; Sadigh, D. Few-Shot Preference Learning for Human-in-the-Loop RL. In Proceedings of the 6th Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023.

- Festor, P.; Jia, Y.; Gordon, A.C.; Aldo Faisal, A.; Habli, I.; Komorowski, M. Assuring the Safety of AI-Based Clinical Decision Support Systems: A Case Study of the AI Clinician for Sepsis Treatment. BMJ Health Care Inform. 2022, 29, e100549.

- Rowe, N.C. The Comparative Ethics of Artificial-Intelligence Methods for Military Applications. Front. Big Data 2022, 5, 991759.

- Arbelaez Ossa, L.; Lorenzini, G.; Milford, S.R.; Shaw, D.; Elger, B.S.; Rost, M. Integrating Ethics in AI Development: A Qualitative Study. BMC Med. Ethics 2024, 25, 10.

- Hippocrates. “The Oath”. In Hippocrates; Jones, W.H.S., Ed.; Harvard University Press: Cambridge, MA, USA, 1923; Volume 1, pp. 298–299, Loeb Classical Library No. 147.

- Youldash, M.; Rahman, A.; Alsayed, M.; Sebiany, A.; Alzayat, J.; Aljishi, N.; Alshammari, G.; Alqahtani, M. Early Detection and Classification of Diabetic Retinopathy: A Deep Learning Approach. AI 2024, 5, 2586–2617.

- Bienefeld, N.; Keller, E.; Grote, G. Human-AI Teaming in Critical Care: A Comparative Analysis of Data Scientists’ and Clinicians’ Perspectives on AI Augmentation and Automation. J. Med. Internet Res. 2024, 26, e50130.

- Shuaib, A. Transforming Healthcare with AI: Promises, Pitfalls, and Pathways Forward. Int. J. Gen. Med. 2024, 17, 1765–1771.

- Funer, F.; Tinnemeyer, S.; Liedtke, W.; Salloch, S. Clinicians’ Roles and Necessary Levels of Understanding in the Use of Artificial Intelligence: A Qualitative Interview Study with German Medical Students. BMC Med. Ethics 2024, 25, 107.

- Kakkos, I.; Vagenas, T.P.; Zygogianni, A.; Matsopoulos, G.K. Towards Automation in Radiotherapy Planning: A Deep Learning Approach for the Delineation of Parotid Glands in Head and Neck Cancer. Bioengineering 2024, 11, 214.

- Camlet, A.; Kusiak, A.; Świetlik, D. Application of Conversational AI Models in Decision Making for Clinical Periodontology: Analysis and Predictive Modeling. AI 2025, 6, 3.

- Mohanasundari, S.K.; Kalpana, M.; Madhusudhan, U.; Vasanthkumar, K.; Rani, B.; Singh, R.; Vashishtha, N.; Bhatia, V. Can Artificial Intelligence Replace the Unique Nursing Role? Cureus 2023, 15, e51150.

- Kandpal, A.; Gupta, R.K.; Singh, A. Ischemic Stroke Lesion Segmentation on Multiparametric CT Perfusion Maps Using Deep Neural Network. AI 2025, 6, 15.

- Riva, G.; Mantovani, F.; Wiederhold, B.K.; Marchetti, A.; Gaggioli, A. Psychomatics-A Multidisciplinary Framework for Understanding Artificial Minds; Mary Ann Liebert Inc.: Larchmont, NY, USA, 2024.

- Willem, T.; Fritzsche, M.C.; Zimmermann, B.M.; Sierawska, A.; Breuer, S.; Braun, M.; Ruess, A.K.; Bak, M.; Schönweitz, F.B.; Meier, L.J.; et al. Embedded Ethics in Practice: A Toolbox for Integrating the Analysis of Ethical and Social Issues into Healthcare AI Research. Sci. Eng. Ethics 2025, 31, 3.

- Cui, H.; Yasseri, T. AI-Enhanced Collective Intelligence. Patterns 2024, 5, 101074.

- Strachan, J.W.A.; Albergo, D.; Borghini, G.; Pansardi, O.; Scaliti, E.; Gupta, S.; Saxena, K.; Rufo, A.; Panzeri, S.; Manzi, G.; et al. Testing Theory of Mind in Large Language Models and Humans. Nat. Hum. Behav. 2024, 8, 1285–1295.

- Jang, G.; Kragel, P.A. Understanding Human Amygdala Function with Artificial Neural Networks. J. Neurosci. 2024, 45, e1436242025.

- He, X.; Wu, J.; Huang, Z.; Hu, Z.; Wang, J.; Sangiovanni-Vincentelli, A.; Lv, C. Fear-Neuro-Inspired Reinforcement Learning for Safe Autonomous Driving. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 267–279.

- Lee, H.-S.; Hong, S.-H.; Kim, G.-H.; You, H.-J.; Lee, E.-Y.; Jeong, J.-H.; Ahn, J.-W.; Kim, J.-H. Generative Models Utilizing Padding Can Efficiently Integrate and Generate Multi-Omics Data. AI 2024, 5, 1614–1632.

- Jeyaraman, N.; Jeyaraman, M.; Yadav, S.; Ramasubramanian, S.; Balaji, S. Revolutionizing Healthcare: The Emerging Role of Quantum Computing in Enhancing Medical Technology and Treatment. Cureus 2024, 16, e67486.

- Stiefel, K.M.; Coggan, J.S. The Energy Challenges of Artificial Superintelligence. Front. Artif. Intell. 2023, 6, 1240653.

- Verma, V.R.; Nishad, D.K.; Sharma, V.; Singh, V.K.; Verma, A.; Shah, D.R. Quantum Machine Learning for Lyapunov-Stabilized Computation Offloading in next-Generation MEC Networks. Sci. Rep. 2025, 15, 405.

- Mutawa, A.M. Attention-Based Hybrid Deep Learning Models for Classifying COVID-19 Genome Sequences. AI 2025, 6, 4.

- Müller, V.C. Autonomous Killer Robots Are Probably Good News. In Drones and Responsibility: Legal, Philosophical and Socio-Technical Perspectives on Remotely Controlled Weapons; Routledge: London, UK; New York, NY, USA, 2016; pp. 67–81.

- Petersson, L.; Vincent, K.; Svedberg, P.; Nygren, J.M.; Larsson, I. Ethical Perspectives on Implementing AI to Predict Mortality Risk in Emergency Department Patients: A Qualitative Study. Stud. Health Technol. Inform. 2023, 302, 676–677.

- Abràmoff, M.D.; Tobey, D.; Char, D.S. Lessons Learned About Autonomous AI: Finding a Safe, Efficacious, and Ethical Path Through the Development Process. Am. J. Ophthalmol. 2020, 214, 134–142.

- Leith, T.; Correll, J.A.; Davidson, E.E.; Gottula, A.L.; Majhail, N.K.; Mathias, E.J.; Pribble, J.; Roberts, N.B.; Scott, I.G.; Cranford, J.A.; et al. Bystander Interaction with a Novel Multipurpose Medical Drone: A Simulation Trial. Resusc. Plus 2024, 18, 100633. [Google Scholar] [CrossRef]

- Sharma, R.; Salman, S.; Gu, Q.; Freeman, W.D. Advancing Neurocritical Care with Artificial Intelligence and Machine Learning: The Promise, Practicalities, and Pitfalls Ahead. Neurol. Clin. 2025, 43, 153–165. [Google Scholar] [CrossRef] [PubMed]

- Billing, D.C.; Fordy, G.R.; Friedl, K.E.; Hasselstrøm, H. The Implications of Emerging Technology on Military Human Performance Research Priorities. J. Sci. Med. Sport. 2021, 24, 947–953. [Google Scholar] [CrossRef]

- de Sio, F.S.; van den Hoven, J. Meaningful Human Control over Autonomous Systems: A Philosophical Account. Front. Robot. AI 2018, 5, 323836. [Google Scholar] [CrossRef]

- Verdiesen, I.; Dignum, V. Value Elicitation on a Scenario of Autonomous Weapon System Deployment: A Qualitative Study Based on the Value Deliberation Process. AI Ethics 2022, 3, 887–900. [Google Scholar] [CrossRef]

- Moor, M.; Banerjee, O.; Abad, Z.S.H.; Krumholz, H.M.; Leskovec, J.; Topol, E.J.; Rajpurkar, P. Foundation Models for Generalist Medical Artificial Intelligence. Nature 2023, 616, 259–265. [Google Scholar] [CrossRef]

- Huang, K.A.; Choudhary, H.K.; Kuo, P.C. Artificial Intelligent Agent Architecture and Clinical Decision-Making in the Healthcare Sector. Cureus 2024, 16, e64115. [Google Scholar] [CrossRef]

- Ploug, T.; Sundby, A.; Moeslund, T.B.; Holm, S. Population Preferences for Performance and Explainability of Artificial Intelligence in Health Care: Choice-Based Conjoint Survey. J. Med. Internet Res. 2021, 23, e26611. [Google Scholar] [CrossRef]

- Di Nucci, E.; de Sio, F.S. Drones and Responsibility: Legal, Philosophical and Socio-Technical Perspectives on Remotely Controlled Weapons; Routledge: London, UK; New York, NY, USA, 2016; pp. 1–217. [Google Scholar] [CrossRef]

- Altehenger, H.; Menges, L.; Schulte, P. How AI Systems Can Be Blameworthy. Philosophia 2024, 52, 1083–1106. [Google Scholar] [CrossRef] [PubMed]

- Kneer, M.; Christen, M. Responsibility Gaps and Retributive Dispositions: Evidence from the US, Japan and Germany. Sci. Eng. Ethics 2024, 30, 51. [Google Scholar] [CrossRef]

- Ferrario, A. Design Publicity of Black Box Algorithms: A Support to the Epistemic and Ethical Justifications of Medical AI Systems. J. Med. Ethics 2022, 48, 492–494. [Google Scholar] [CrossRef] [PubMed]

- Barea Mendoza, J.A.; Valiente Fernandez, M.; Pardo Fernandez, A.; Gómez Álvarez, J. Current Perspectives on the Use of Artificial Intelligence in Critical Patient Safety. Med. Intensiv. 2024, 49, 154–164. [Google Scholar] [CrossRef]

- Schlicker, N.; Langer, M.; Hirsch, M.C. How Trustworthy Is Artificial Intelligence? A Model for the Conflict between Objectivity and Subjectivity. Inn. Med. 2023, 64, 1051–1057. [Google Scholar] [CrossRef]

- Kim, J.; Kim, S.Y.; Kim, E.A.; Sim, J.A.; Lee, Y.; Kim, H. Developing a Framework for Self-Regulatory Governance in Healthcare AI Research: Insights from South Korea. Asian Bioeth. Rev. 2024, 16, 391–406. [Google Scholar] [CrossRef] [PubMed]

- Surovková, J.; Haluzová, S.; Strunga, M.; Urban, R.; Lifková, M.; Thurzo, A. The New Role of the Dental Assistant and Nurse in the Age of Advanced Artificial Intelligence in Telehealth Orthodontic Care with Dental Monitoring: Preliminary Report. Appl. Sci. 2023, 13, 5212. [Google Scholar] [CrossRef]

- Thurzo, A. How Is AI Transforming Medical Research, Education and Practice? Bratisl. Med. J. 2025, 126, 243–248. [Google Scholar] [CrossRef]

- Brodeur, P.G.; Buckley, T.A.; Kanjee, Z.; Goh, E.; Ling, E.B.; Jain, P.; Cabral, S.; Abdulnour, R.-E.; Haimovich, A.; Freed, J.A.; et al. Superhuman Performance of a Large Language Model on the Reasoning Tasks of a Physician. arXiv 2024, arXiv:2412.10849. [Google Scholar]

- Gupta, G.K.; Singh, A.; Manikandan, S.V.; Ehtesham, A. Digital Diagnostics: The Potential of Large Language Models in Recognizing Symptoms of Common Illnesses. AI 2025, 6, 13. [Google Scholar] [CrossRef]

- Thurzo, A.; Strunga, M.; Urban, R.; Surovková, J.; Afrashtehfar, K.I. Impact of Artificial Intelligence on Dental Education: A Review and Guide for Curriculum Update. Educ. Sci. 2023, 13, 150. [Google Scholar] [CrossRef]

- Zhang, J.; Fenton, S.H. Preparing Healthcare Education for an AI-Augmented Future. npj Health Syst. 2024, 1, 4. [Google Scholar] [CrossRef] [PubMed]

- Gottweis, J.; Weng, W.-H.; Daryin, A.; Tu, T.; Palepu, A.; Sirkovic, P.; Myaskovsky, A.; Weissenberger, F.; Rong, K.; Tanno, R.; et al. Towards an AI Co-Scientist. arXiv 2025, arXiv:2502.18864. [Google Scholar]

- Uc, A.A.; Ucsf, B.; Phillips, R.V.; Kıcıman, E.; Balzer, L.B.; Van Der Laan, M.; Petersen, M. Large Language Models as Co-Pilots for Causal Inference in Medical Studies. arXiv 2024, arXiv:2407.19118. [Google Scholar]

- Musslick, S.; Bartlett, L.K.; Chandramouli, S.H.; Dubova, M.; Gobet, F.; Griffiths, T.L.; Hullman, J.; King, R.D.; Kutz, J.N.; Lucas, C.G.; et al. Automating the Practice of Science: Opportunities, Challenges, and Implications. Proc. Natl. Acad. Sci. USA 2025, 122, e2401238121. [Google Scholar] [CrossRef] [PubMed]

- Xie, S.; Zhao, W.; Deng, G.; He, G.; He, N.; Lu, Z.; Hu, W.; Zhao, M.; Du, J. Utilizing ChatGPT as a Scientific Reasoning Engine to Differentiate Conflicting Evidence and Summarize Challenges in Controversial Clinical Questions. J. Am. Med. Inform. Assoc. 2024, 31, 1551–1560. [Google Scholar] [CrossRef]

- Oniani, D.; Hilsman, J.; Peng, Y.; Poropatich, R.K.; Pamplin, J.C.; Legault, G.L.; Wang, Y. Adopting and Expanding Ethical Principles for Generative Artificial Intelligence from Military to Healthcare. NPJ Digit. Med. 2023, 6, 225. [Google Scholar] [CrossRef]

- Weidener, L.; Fischer, M. Proposing a Principle-Based Approach for Teaching AI Ethics in Medical Education. JMIR Med. Educ. 2024, 10, e55368. [Google Scholar] [CrossRef]

- Zhou, R.; Zhang, G.; Huang, H.; Wei, Z.; Zhou, H.; Jin, J.; Chang, F.; Chen, J. How Would Autonomous Vehicles Behave in Real-World Crash Scenarios? Accid. Anal. Prev. 2024, 202, 107572. [Google Scholar] [CrossRef]

| Aspect | Fear | Surface-Level Algorithms |
|---|---|---|
| Core objective | Aims to prevent harm by prioritizing patient safety; deeply tied to high-stakes decision-making. | Focuses on avoiding offensive or harmful language, primarily addressing social norms and sensitivities in communication. |
| Implementation depth | Operates as a foundational, system-wide mechanism that influences every decision and action, akin to a survival instinct. | Functions as a surface-level filter or constraint applied during response generation. |
| Risk assessment | Involves continuous risk estimation and harm aversion in dynamic, high-stakes environments (e.g., healthcare or warfare). | Primarily avoids reputational or social harm during text generation. |
| Adaptation to context | Tailored to specific domains where harm has direct physical consequences (e.g., medical AI, as well as lethal autonomous weapon systems (LAWS)). | Generalized across topics to fit broad societal expectations. |
| Accountability | Designed to trigger specific fail-safes (e.g., escalating to a human when risk is high). | Seeks to refine language but does not affect operational decision-making. |
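
The contrast above between fear as a decision-level mechanism and a surface-level filter can be made concrete with a small Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the probability of an adverse outcome is modeled with a Beta-Bernoulli posterior (echoing the Bayesian risk assessment described in Section 2.6), "fear" is a pessimistic harm estimate (posterior mean plus `caution_k` standard deviations, a crude heuristic rather than an exact credible bound), and the `Action` type, benefit scores, `harm_weight`, `caution_k` and `escalation_threshold` are all hypothetical values chosen for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate clinical action (all fields are hypothetical illustration data)."""
    name: str
    expected_benefit: float  # assumed benefit score on a 0..1 scale
    adverse_events: int      # adverse outcomes observed in comparable past cases
    cases: int               # number of comparable past cases


def harm_posterior(action: Action, prior_a: float = 1.0, prior_b: float = 1.0) -> tuple[float, float]:
    """Mean and standard deviation of a Beta posterior over P(adverse outcome)."""
    a = prior_a + action.adverse_events
    b = prior_b + action.cases - action.adverse_events
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)


def pessimistic_harm(action: Action, caution_k: float = 2.0) -> float:
    """Fear term: posterior mean plus caution_k standard deviations (heuristic, capped at 1)."""
    mean, sd = harm_posterior(action)
    return min(1.0, mean + caution_k * sd)


def fearful_decision(actions, harm_weight: float = 5.0, escalation_threshold: float = 0.2) -> str:
    """Fear shapes the choice itself: unsafe options are excluded, the rest are penalized."""
    tolerable = [a for a in actions if pessimistic_harm(a) <= escalation_threshold]
    if not tolerable:
        # Fail-safe: no option is acceptably safe, so defer to a human clinician.
        return "ESCALATE_TO_HUMAN"
    best = max(tolerable, key=lambda a: a.expected_benefit - harm_weight * pessimistic_harm(a))
    return best.name


def surface_filter_decision(actions, banned: frozenset = frozenset()) -> str:
    """Surface-level contrast: maximize benefit and only block explicitly listed names."""
    allowed = [a for a in actions if a.name not in banned]
    return max(allowed, key=lambda a: a.expected_benefit).name


if __name__ == "__main__":
    candidates = [
        Action("aggressive_dose", expected_benefit=0.9, adverse_events=3, cases=10),
        Action("standard_dose", expected_benefit=0.7, adverse_events=1, cases=50),
        Action("watchful_waiting", expected_benefit=0.4, adverse_events=0, cases=40),
    ]
    print(surface_filter_decision(candidates))  # aggressive_dose
    print(fearful_decision(candidates))         # standard_dose
    print(fearful_decision([Action("novel_procedure", 0.95, 0, 0)]))  # ESCALATE_TO_HUMAN
```

The design choice worth noting is that uncertainty itself raises the fear term: an untested action with no track record gets a wide posterior and is escalated to a human even if its claimed benefit is high, whereas the surface filter never inspects risk at all.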

| Aspect | Military AI | Medical AI |
|---|---|---|
| Lack of human-like decision-making in AI agents | Current autonomous systems, including LAWS, cannot replicate human emotional and moral considerations such as fear, empathy, or remorse. | In a medical context, this limitation underscores the need for AI systems to incorporate mechanisms analogous to human emotions (such as fear) that prioritize patient safety and ethical treatment decisions. |
| “Fear of killing” as a performance factor | Human soldiers often fail in combat due to a moral aversion to killing, driven by fear or ethical reservations. In contrast, autonomous AI systems lack this “fear” and are thus more effective but also more ethically detached. | In medicine, embedding a “fear of harming the patient” could serve as a counterweight that prevents AI from making detached, overly utilitarian, “fearless” decisions that risk patient health or dignity. |
| Mimicking vs. embodying emotions | LAWS can currently only mimic moral actions and cannot genuinely feel emotions like remorse or fear, which are tied to accountability and human dignity. | Superficial simulations of caution (e.g., rule-based safeguards) may not suffice. Instead, an embodied “fear” mechanism, integrated deep within the system’s decision core, could enhance the AI’s decision-making and help keep its actions aligned with the ethical principle of “do no harm”. |
| Autonomy and accountability | A crucial issue remains: autonomous AI robots cannot be held accountable for their actions, as they lack the capacity to comprehend punishment or reward. | Introducing “fear” as a computational mechanism might enable better self-regulation and reduce the risk of decisions that harm patients, although it would not resolve this accountability issue. |
| Ethical implications of decision-making autonomy | Autonomous systems making life-and-death decisions in warfare highlight the risks of dehumanization and moral detachment. Relinquishing the decision to kill to machines fundamentally undermines human dignity. | Fear-driven caution in AI could act as a safeguard against dehumanized care and overly mechanistic decisions, promoting a more ethically aligned approach to treatment. |

| Environment | Fear-Based Benefit |
|---|---|
| Autonomous vehicles | Fear of accidents could help prioritize safety over efficiency, especially in complex or ambiguous traffic scenarios. |
| Financial systems and trading algorithms | Fear of catastrophic losses or economic instability could guide risk-sensitive behavior in automated trading systems. |
| Disaster response and search-and-rescue AI | Fear of endangering human survivors could improve decision-making during rescue operations. |
| Environmental monitoring and preservation | AI could use fear-based constraints to avoid actions that might inadvertently damage the environment while managing ecosystems or climate interventions. |
| Industrial automation and robotics | Fear of causing workplace accidents or equipment damage could lead to safer interactions between robots and humans in factories. |
| Childcare and elderly care systems | Fear of neglect or of causing harm could promote greater vigilance and better care from AI systems assisting vulnerable populations. |
| Cybersecurity systems | Fear of failing to detect a breach, or of causing unintended harm through aggressive defense mechanisms, could lead to more balanced and cautious strategies. |
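
The financial-systems row speaks of "fear of catastrophic losses" guiding risk-sensitive behavior. One standard way to formalize that kind of tail-focused caution, swapped in here purely for illustration and not proposed by the source, is conditional value-at-risk (CVaR): the average loss over the worst fraction of scenarios. The minimal Python sketch below assumes equally likely scenarios, a hypothetical `fear_weight` and `tail` fraction, and made-up scenario data; its discrete CVaR is simplified (it averages the worst ceil(tail × n) scenarios and does not weight fractional tails).

```python
import math
from statistics import fmean


def cvar(losses, tail=0.1):
    """Simplified discrete CVaR: mean loss over the worst `tail` fraction of equally likely scenarios."""
    worst_first = sorted(losses, reverse=True)
    # round() absorbs floating-point noise (e.g. 3.0000000000000004 is treated as 3) before ceil().
    k = max(1, math.ceil(round(tail * len(worst_first), 9)))
    return fmean(worst_first[:k])


def risk_neutral_choice(strategies):
    """Picks the strategy with the lowest average loss, ignoring how bad the worst cases are."""
    return min(strategies, key=lambda name: fmean(strategies[name]))


def fearful_choice(strategies, tail=0.1, fear_weight=1.0):
    """Adds a fear penalty proportional to tail loss, so rare catastrophes weigh on the choice."""
    return min(
        strategies,
        key=lambda name: fmean(strategies[name]) + fear_weight * cvar(strategies[name], tail),
    )


# Hypothetical scenario losses (negative values are gains), ten equally likely outcomes each.
STRATEGIES = {
    "leveraged": [-3.0] * 9 + [20.0],  # higher average gain, rare catastrophic loss
    "hedged": [-0.5] * 9 + [1.0],      # smaller average gain, bounded worst case
}

if __name__ == "__main__":
    print(risk_neutral_choice(STRATEGIES))  # leveraged
    print(fearful_choice(STRATEGIES))       # hedged
```

The same scoring pattern (expected outcome plus a weighted tail-harm term) could in principle be reused for the other environments in the table by redefining what counts as a loss, for example injury risk for industrial robots or missed detections for cybersecurity systems.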

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Thurzo, A.; Thurzo, V. Embedding Fear in Medical AI: A Risk-Averse Framework for Safety and Ethics. AI 2025, 6, 101. https://doi.org/10.3390/ai6050101


Chicago/Turabian Style: Thurzo, Andrej, and Vladimír Thurzo. 2025. "Embedding Fear in Medical AI: A Risk-Averse Framework for Safety and Ethics." AI 6, no. 5: 101. https://doi.org/10.3390/ai6050101

APA Style: Thurzo, A., & Thurzo, V. (2025). Embedding Fear in Medical AI: A Risk-Averse Framework for Safety and Ethics. AI, 6(5), 101. https://doi.org/10.3390/ai6050101